Abstract

In this study, we systematically evaluate the potential of a large set of survey-based indicators from different economic branches to forecast export growth across a multitude of European countries. Our pseudo-out-of-sample analyses reveal that the best performing indicators beat a well-specified benchmark model in terms of forecast accuracy. Four indicators turn out to be superior: the Export Climate, the Production Expectations of domestic manufacturing firms, the Industrial Confidence Indicator, and the Economic Sentiment Indicator. Two robustness checks confirm these results. As exports are highly volatile and a large demand-side component of gross domestic product, applied forecasters can use our results to choose the best performing indicators and thus increase the accuracy of export forecasts.

1 Introduction

Exports are one of the most important demand-side components of GDP. The share of exports of goods and services in total GDP rose from almost 30% in 1996 to 42% in 2016 for the EU-15. Exports are therefore also a major source of business cycle fluctuations, since they transfer international shocks into the domestic economy. Fiorito and Kollintzas (1994) find for the G7 that exports are procyclical and coincide with the business cycle of total output. Trade is also an important pillar for the economic development of countries, as the empirical literature shows (see Frankel and Romer 1999). Thus, unbiased export forecasts in particular can, ceteris paribus, significantly reduce forecast errors of GDP.Footnote 1 Academics have studied forecasts of private consumption (see, among others, Vosen and Schmidt 2011) and imports (see Grimme et al. 2019) in particular. The other components are more or less disregarded. In this paper, we exclusively focus on exports and run a forecasting competition between a large set of survey indicators for a multitude of European countries. Our main aim is to find out whether a superior survey-based indicator exists that works well in forecasting export growth of different European countries. Indeed, we find four indicators that produce, on average, the lowest forecast errors across European countries: the Export Climate provided by the German ifo Institute, Production Expectations of manufacturing firms, the Industrial Confidence Indicator, and the Economic Sentiment Indicator.

Only a few studies focus on the improvement of export forecasts. An early attempt was made by Baghestani (1994), who finds that survey results obtained from professional forecasters improve predictions for US net exports. In the case of Portugal, Cardoso and Duarte (2006) find that business surveys improve the forecasts for export growth. For Taiwan, standard autoregressive integrated moving average (ARIMA) models are able to improve export forecasts compared to heuristic methods (Wang et al. 2011). Additionally, two strands of German studies exist. Jannsen and Richter (2012) use a capacity utilization weighted indicator obtained from major export partners to forecast German capital goods exports. Elstner et al. (2013) and Grimme and Lehmann (2019) use hard data (for example, foreign new orders in manufacturing) as well as indicators from the ifo business survey (for example, ifo Export Expectations) to improve forecasts for German exports. Overall, survey indicators produce lower forecast errors than hard indicators do. Finally, Hanslin Grossmann and Scheufele (2019) show that a weighted Purchasing Manager Index (PMI) from major trading partners improves forecasts of Swiss exports more than other indicators.

Next to these country-specific studies, some contributions focus on country aggregates. Keck et al. (2009) show that trade forecasts for the OECD-25 can be improved by applying standard time series models in comparison with a ‘naïve’ prediction based on a deterministic trend. Economic theory names two major drivers of exports: relative prices and domestic demand of the importing trading partners. Thus, Ca’Zorzi and Schnatz (2010) use different measures of price and cost competitiveness to forecast extra Euro-area exports and find that for a recursive estimation approach the real effective exchange rate based on the export price index outperforms the other measures as well as a ‘random walk’ benchmark. For the Euro area, Frale et al. (2010) find that survey results play an important role for export forecasts. From a global perspective, Guichard and Rusticelli (2011) show that the industrial production (IP) and Purchasing Manager Indices are able to improve world trade forecasts.

We contribute to this existing literature by creating a forecasting competition between a large set of survey-based indicators for a multitude of single European countries. We analyze the forecasting performance of twenty different survey indicators from several branches of the economy (for example, manufacturing and services) for eighteen European states in the period from 1996 to 2016. Based on the pseudo-out-of-sample forecast experiment, we can conclude that especially four survey-based indicators produce the most accurate export forecasts. These indicators are the Export Climate, Production Expectations of manufacturing firms, the Industrial Confidence Indicator, and the Economic Sentiment Indicator. The main results from the baseline experiment are robust to variations in the forecasting experiment.

In general, it is common knowledge that business and consumer surveys are powerful tools for macroeconomic forecasting (see Gelper and Croux 2010, for the European Sentiment Indicator). However, business surveys are not free of criticism. Croux et al. (2005) mention that surveys are very expensive and time-consuming for both the enterprise and the consumer. This expense, in terms of time and money, should be justified by some informative or even predictive content of the questions asked in the specific survey. The study by Croux et al. (2005) finds an improvement in industrial production forecasts through the use of Production Expectations expressed by European firms. Despite the forecasting power of a survey indicator for European industrial production, the results for other macroeconomic aggregates are mixed. This leads Claveria et al. (2007) to conclude that we actually have no definite idea why some qualitative indicators work for specific macroeconomic variables, whereas others do not. With this paper, we systematically analyze the performance of survey indicators for export growth.

The paper is organized as follows. In Sect. 2, we present our data set, followed by our forecasting approach in Sect. 3. Section 4 discusses our results in detail and presents some robustness checks. Section 5 offers a conclusion.

2 Data set

2.1 Export figures for European countries

Eurostat supplies comprehensive export data on a quarterly basis for all member states of the European Union plus Switzerland and Norway. These figures are comparable across countries as they share a common accounting basis for national accounts (European System of Integrated Economic Accounts 2010—ESA 2010). We apply our forecasting experiment to total exports (sum of traded goods and services), since this is the most relevant series for forecasting applications in practice and one of the corresponding aggregates to calculate gross domestic product (GDP). These total export figures are measured in real terms and are seasonally as well as calendar adjusted. Since we are interested in forecasting export development rather than levels, we transform the export figures into year-on-year growth rates. The time period for our forecasting experiment spans from the first quarter 1996 to the fourth quarter of 2016. Due to some data restrictions (for example, missing export data), we are not able to apply our methodology to all member states of the European Union, leaving us with 18 countries in the sample. Table 1 presents descriptive statistics for the countries’ export growth.
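The growth-rate transformation described above can be sketched as follows. This is a minimal illustration, not the original implementation; the function name `growth_rate` and the sample values are hypothetical. A lag of four quarters gives year-on-year rates, and a lag of one would give quarter-on-quarter rates.

```python
def growth_rate(levels, lag=4):
    """Percentage growth of a quarterly series relative to `lag` quarters earlier.

    lag=4 yields year-on-year growth, lag=1 quarter-on-quarter growth.
    """
    return [100.0 * (levels[t] / levels[t - lag] - 1.0)
            for t in range(lag, len(levels))]


# Hypothetical real export levels for eight quarters (illustrative numbers only).
exports = [100.0, 102.0, 101.0, 104.0, 105.0, 107.1, 103.02, 109.2]
yoy = growth_rate(exports, lag=4)
```

The first four quarters are lost because no observation from one year earlier is available for them.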

The table reveals a large heterogeneity in export growth rates across European countries. The largest average increase can be observed for Hungary (10.0%); Italy shows the smallest average growth rate (2.5%). One difficulty for applied export forecasting is the high volatility of the series, which is highest for Estonia (12.7 p.p.) and lowest for the Netherlands (4.9 p.p.) in the period under investigation. These simple figures underpin why exports are one of the GDP components with the lowest accuracy in terms of the standard deviation in forecast errors (see the working paper version of Timmermann 2007, for an evaluation of the IMF’s World Economic Outlook). The spread in the minimums and maximums across countries, together with the heterogeneity in the series’ volatility, suggests that the export composition may play a crucial role for the differences occurring across countries. According to the Standard International Trade Classification (SITC), the exports of Denmark, for example, are characterized by a large share of food and live animals, whereas France exports relatively more chemical products.

2.2 Potential export leading indicators

According to standard macroeconomic theory, a country’s exports are determined by foreign demand and an exchange rate or competitiveness measure. Potential predictors for domestic exports can be extracted by three possible approaches. First, information or indicators can be used that approximate export development directly from a domestic perspective. Second, each single component of domestic exports is modeled separately, so that suitable indicators are found for either foreign demand or the competitiveness measure. Third, an indicator that mirrors both components together is applied. All three approaches and the corresponding indicators are discussed in the following.

The first two potential leading indicators stem from surveys conducted at the level of domestic manufacturing firms and are directly targeted to approximate export development.Footnote 2 In standard questionnaires, the firms are asked to assess their current export situation and how their exports will develop in the near future. Thus, the two questions focus on different time horizons. For the Export Order Books Level (EOBL), the survey participants should assess on a monthly basis whether their current amount of exports reaches a rather normal level or is above or below that threshold. In contrast, quarterly asked export expectations (XEXP) indicate the firms’ expected export development in the next 3 months. The participants can state whether their exports will either increase, decrease, or remain unchanged. As we focus on European countries, the indicators are taken from the ‘Joint Harmonised EU Programme of Business and Consumer Surveys,’ which is standardized across EU member states (see European Commission 2016). We exclusively rely on the survey results obtained from the manufacturing sector as equivalent questions are not available in the remaining sectors. However, this focus can bear a high risk as the share of service exports heavily varies across the countries in our sample.Footnote 3 Both predictors are expressed as balances, i.e., they are calculated as the weighted difference of ‘positive’ (above normal, will increase) and ‘negative’ (below normal, will decrease) answers; the ‘neutral’ category is not considered. However, balances are not indisputable in the existing literature as all neutral answers are neglected (see, for a critical discussion, Croux et al. 2005; Claveria et al. 2007, and the references therein). The weights are based on the firm size. EOBL and XEXP are seasonally adjusted; we calculate 3-month averages for the export order books in order to reach the same frequency as total exports.
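To make the balance statistic and the frequency conversion concrete, the following sketch computes a size-weighted balance from individual answers and averages monthly balances to quarterly values. It is an illustration under stated assumptions: the answer coding (`"+"`, `"="`, `"-"`), the function names, and the sample weights are hypothetical, and the shares are assumed to be taken relative to all respondents, so neutral answers enter only the denominator.

```python
def balance(answers, weights):
    """Weighted share of positive answers minus weighted share of negative answers.

    Returned in percentage points. Neutral answers ("=") do not enter the
    numerator; they only count toward the total weight (an assumption).
    """
    total = sum(weights)
    positive = sum(w for a, w in zip(answers, weights) if a == "+")
    negative = sum(w for a, w in zip(answers, weights) if a == "-")
    return 100.0 * (positive - negative) / total


def quarterly_average(monthly):
    """Average consecutive blocks of three monthly values; incomplete blocks are dropped."""
    usable = len(monthly) - len(monthly) % 3
    return [sum(monthly[i:i + 3]) / 3.0 for i in range(0, usable, 3)]


# Four hypothetical firms with size weights 2, 1, 1, 1.
example_balance = balance(["+", "=", "-", "+"], [2, 1, 1, 1])
example_quarters = quarterly_average([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
```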

Our second approach proxies foreign demand separately. As argued by Hanslin Grossmann and Scheufele (2019), this proxy can be based on survey results as well. The Kiel Institute for the World Economy (IfW) proposed a Weighted Foreign Capacity Indicator to forecast German investment goods exports (IFWCAP; see Jannsen and Richter 2012). We adopt their idea and calculate the capacity-based indicator for all European countries in our sample. The basis for IFWCAP is the quarterly question on the manufacturing firms’ current level of capacity utilization (CU), again extracted from the previously mentioned EU questionnaire. Capacity utilization is measured as the percentage of full capacity at which the firm operates. We can rely on 23 European countries for which capacity utilization is available throughout the entire period under investigation. For each country to which our forecasting experiment is applied, we weight the 22 remaining capacity indicators by their respective shares in total domestic exports (\(w_{t,i}^d\)), which add up to one, in order to calculate IFWCAP. The formal statement of the indicator is: \(\mathrm{IFWCAP}_t^d = \sum _{i=1}^{22}{w_{t,i}^d \times {\mathrm{CU}}_t^i}\). All capacity series are seasonally adjusted.
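For a single quarter, the weighted sum behind IFWCAP can be sketched as below. The country codes, shares, and capacity values are made-up illustrative numbers, and the function name `ifwcap` is hypothetical. The shares are rescaled inside the function so that they add up to one.

```python
def ifwcap(export_shares, capacity_utilization):
    """Export-share-weighted average of partner capacity utilization for one quarter.

    export_shares: partner -> share of domestic exports going to that partner
    capacity_utilization: partner -> capacity utilization in percent
    """
    total = sum(export_shares.values())
    # Rescale the shares so that they add up to one before weighting.
    return sum(export_shares[c] / total * capacity_utilization[c]
               for c in export_shares)


# Three hypothetical trading partners instead of the 22 used in the text.
shares = {"FR": 0.5, "IT": 0.3, "NL": 0.2}
cu = {"FR": 80.0, "IT": 75.0, "NL": 85.0}
value = ifwcap(shares, cu)
```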

In addition to foreign demand, we also proxy the exchange rate or competitiveness measure for the domestic economy. The European-wide survey includes questions on the change in the firm’s competitive position over the past 3 months. Firms are asked to state how their competitive position inside or outside the EU (COMPIEU, COMPOEU) has developed. Again, three answers are possible: The firms can state whether their position on foreign markets has improved, remained unchanged, or deteriorated. In contrast to the previous indicators, both qualitative competitiveness measures are backward looking. The competitiveness series are published as seasonally adjusted balance statistics between the share of firms that report an improvement and the share that report a deterioration.

For our last approach, we proxy both components of domestic exports, foreign demand and price competitiveness, simultaneously. The ifo Institute suggested the Export Climate for the German case (IFOXC; see Elstner et al. 2013; Grimme and Lehmann 2019), which worked well in forecasting German export growth. In our paper, we apply their idea to each country in the sample separately. As the Export Climate is rather complex, it is most easily illustrated by the following formal statement:
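Using the symbols defined in the next paragraph, one way to write this structure is sketched here. The assignment of \(\alpha ^d\) to the world climate and of \(\beta _i^d\) to consumer confidence, with the complements going to price competitiveness and business confidence, is an assumption made for illustration, as is the sign convention of \(\mathrm{PC}_t^d\).

\[
\begin{aligned}
\mathrm{IFOXC}_t^d &= \alpha ^d \times \mathrm{WC}_t^d + (1-\alpha ^d) \times \mathrm{PC}_t^d, \\
\mathrm{WC}_t^d &= \sum _{i=1}^{44}{w_{t,i}^d \times \mathrm{EC}_t^i}, \\
\mathrm{EC}_t^i &= \beta _i^d \times \mathrm{CC}_t^i + (1-\beta _i^d) \times \mathrm{BC}_t^i .
\end{aligned}
\]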

The Export Climate for the domestic country (\(\mathrm{IFOXC}_t^d\)) consists of its world climate (\(\mathrm{WC}_t^d\)), approximating foreign demand, and an indicator that measures its relative price and cost competitiveness (\(\mathrm{PC}_t^d\)). In turn, the world climate is an export-weighted (\(w_{t,i}^d\)) average of the economic climates, \(\mathrm{EC}_t^i\), of the domestic economy’s 44 main trading partners.Footnote 4 Each trading partner’s economic climate consists of its consumer and business confidence (\(\mathrm{CC}_t^i\) and \(\mathrm{BC}_t^i\)). Both confidence indicators are weighted by the shares of consumer goods and investment goods in the domestic economy’s exports to the specific trading partner (\(\beta _i^d\)), which are normalized to sum to one in advance. Thus, for each trading partner, the economic climate approximates its general demand with regard to the domestic economy. The real effective exchange rate compared to 37 industrial countries, deflated by harmonized consumer prices (HCPI) in advance, serves as the approximation for price competitiveness (see European Commission 2014, for more details). These figures are provided by the Directorate-General for Economic and Financial Affairs (DG ECFIN) at the European Commission on a quarterly basis. In the end, the Export Climate results from weighting the world climate and the price competitiveness measure. The weight \(\alpha ^d\) is a ratio of two adjusted \(R^2\), obtained from a regression based solely on the exchange rate and from a regression that adds the world climate.

Figure 1 plots year-on-year export growth together with the export expectations and the Export Climate for Germany and the UK. We choose these two countries since they reveal a large heterogeneity in the leading characteristics of both indicators. Whereas the export expectations as well as the Export Climate move similarly to export growth in the case of Germany, both indicators seem less closely related to exports for the UK. This visual evidence is underpinned by the contemporaneous correlation coefficients. For Germany, the correlation between export growth and the export expectations (Export Climate) is 0.79 (0.86), with additional leading characteristics at hand. The opposite holds for the UK, as the contemporaneous correlations are 0.26 for the export expectations and 0.46 for the Export Climate; the correlations converge very quickly toward zero for longer leads. We hypothesize from these findings that the forecasting performance of the indicators differs significantly across European countries.

2.3 Further potential predictors

Besides the indicators that are directly linked to export development, other survey indicators may also deliver important signals for forecasting export growth. We solely focus on the firm side of the economy and neglect the information provided by domestic consumers. In official statistics, trade figures are usually broken down into goods and service exports. Thus, we extract further survey indicators by distinguishing between different sectors and come up with four classes: (1) industry, (2) services, (3) retail trade, and (4) the overall economy. The industrial sector captures all goods exports of a country and the service category all activities including, for example, information and communication or real estate. We also make use of the results from the retail trade survey, which comprises all activities of selling motor vehicles as well as retail trade (see European Commission 2016). If, for example, a consumer from abroad buys a car from a domestic firm, this should show up in service exports of the home country. We exclude survey information from construction firms as they mainly operate on domestic markets. Financial services are also excluded from our analysis as the time series start in mid-2006 and are thus too short for our purposes. To complete the picture, we include the Economic Sentiment Indicator (ESI) of a country as the most comprehensive predictor of economic activity.

Table 2 gives an overview of all additional indicators and how they are potentially linked to exports of the domestic country. As none of these predictors explicitly focuses on exports, they may introduce noise to the forecast. Nevertheless, this is the information one can extract from the harmonized EU survey. The list of indicators comprises the confidence indicator of each sector, different expectations on the firms’ business development (for example, demand expectations in the service sector), expectations on their price development in the near future, and sector-specific questions such as the stock of finished products in industry. All in all, we can rely on twenty potential predictors to forecast export growth across eighteen European countries.

3 Forecasting approach

We generate our pseudo-out-of-sample forecasts by employing the following standard autoregressive distributed lag (ADL) model:
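In a direct-step form consistent with the description that follows, such a model can be sketched as below. The coefficient symbols \(\alpha \), \(\beta _i\), and \(\gamma _j\), the error term \(\varepsilon _{t+h}\), and the exact lag timing are notational assumptions:

\[
y_{t+h} = \alpha + \sum _{i=1}^{p}{\beta _i \, y_{t+1-i}} + \sum _{j=1}^{q}{\gamma _j \, x_{t+1-j}} + \varepsilon _{t+h}
\]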

where \(y_{t+h}\) is the h-step-ahead forecast for export growth and \(x_t\) represents one of the single indicators. We choose this type of model in order to assess whether each survey indicator separately adds, on average, forecasting power to the inherent dynamics of export growth. The forecasts are calculated at the end of each quarter; thus, all monthly values of the indicators for the latest quarter are available. We transform the monthly indicators to a quarterly frequency by applying 3-month averages. The forecast horizon h is defined in the range of \(h \in \) {1, 2} quarters since survey-based indicators are usually applied to short-term forecasts (see, among others, Gayer 2005). We allow a maximum of four lags for our target variable and each single indicator: \(p,q \le 4\). The optimal lag length is determined by the Bayesian Information Criterion (BIC). Our forecasting strategy is based on an expanding window approach; thus, the estimation window is enlarged by one quarter after the forecasts have been calculated. The initial estimation period varies across countries because of differences in the availability of the target series. Also, the number of available indicators differs across countries, since either no survey results are published (e.g., for the Luxembourgian service and retail trade sector) or the time series are too short for a reliable forecasting experiment.Footnote 5 We fix the number of forecasts produced for each country, leaving us with \(T=43\) predictions for each country and indicator. This implies an implementation of the ADL model in a direct-step fashion; thus, the forecasts for longer horizons do not depend on predictions of preceding quarters.
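The forecasting loop described above (direct-step ADL, lag selection by BIC with at most four lags, expanding estimation window) can be sketched in plain Python as follows. This is a simplified illustration, not the original code. All function names are hypothetical, the regressors are assumed to be dated t, t-1, and so on, the least-squares fit uses the normal equations for brevity, and the data are simulated.

```python
import math
import random


def ols(X, y):
    """Least-squares coefficients via the normal equations (Gauss-Jordan elimination)."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(r[a] * r[b] for r in X) for b in range(k)]
         + [sum(r[a] * yi for r, yi in zip(X, y))] for a in range(k)]
    for c in range(k):
        pivot = max(range(c, k), key=lambda r: abs(A[r][c]))
        A[c], A[pivot] = A[pivot], A[c]
        for r in range(k):
            if r != c:
                f = A[r][c] / A[c][c]
                A[r] = [u - f * v for u, v in zip(A[r], A[c])]
    return [A[i][k] / A[i][i] for i in range(k)]


def design_row(y, x, t, p, q):
    """Constant, p lags of the target and q lags of the indicator, all dated t or earlier."""
    return [1.0] + [y[t - i] for i in range(p)] + [x[t - j] for j in range(q)]


def direct_forecast(y, x, h, end, max_lag=4):
    """Forecast y[end + h] using data up to index `end`; (p, q) chosen by BIC."""
    ts = range(max_lag - 1, end - h + 1)  # same sample for every candidate model
    target = [y[t + h] for t in ts]
    n = len(target)
    best = None
    for p in range(1, max_lag + 1):
        for q in range(1, max_lag + 1):
            X = [design_row(y, x, t, p, q) for t in ts]
            b = ols(X, target)
            ssr = sum((yt - sum(bi * xi for bi, xi in zip(b, row))) ** 2
                      for row, yt in zip(X, target))
            bic = n * math.log(max(ssr / n, 1e-12)) + len(b) * math.log(n)
            if best is None or bic < best[0]:
                best = (bic, p, q, b)
    _, p, q, b = best
    return sum(bi * xi for bi, xi in zip(b, design_row(y, x, end, p, q)))


def expanding_window_forecasts(y, x, h, first_end):
    """One forecast per origin; the estimation sample grows by one quarter each time."""
    return [direct_forecast(y, x, h, end) for end in range(first_end, len(y) - h)]


# Simulated data: the indicator leads the target by one quarter (illustrative only).
rng = random.Random(0)
x = [rng.uniform(-1.0, 1.0) for _ in range(60)]
y = [2.0] + [2.0 + 0.5 * x[t] + rng.gauss(0.0, 0.1) for t in range(59)]
forecasts = expanding_window_forecasts(y, x, h=1, first_end=40)
```

Because each horizon has its own regression of \(y_{t+h}\) on information dated t and earlier, forecasts for longer horizons never rely on predictions for preceding quarters.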

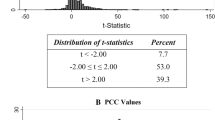

To evaluate the forecast accuracy of our different models, we define the h-step-ahead forecast error as \(\mathrm{FE}_{t+h,t} = y_{t+h} - {\widehat{y}}_{t+h,t}\), with \({\widehat{y}}_{t+h,t}\) denoting the forecast produced at time t. An AR(p) process serves as the benchmark model, with the corresponding forecast error \(\mathrm{FE}_{t+h}^{\mathrm{AR}}\). We choose the root mean squared forecast error (RMSFE):

\[\mathrm{RMSFE}_h = \sqrt{\frac{1}{T}\sum _{t=1}^{T}{\mathrm{FE}_{t+h,t}^2}}\]

as the loss function. To decide whether one indicator performs, on average, better than the autoregressive process, we calculate the relative RMSFE or Theil’s U between the indicator model and the benchmark: \(\text{ Theil }_h = \text{ RMSFE }_h/\text{ RMSFE }_h^{\mathrm{AR}}\). Whenever this ratio is smaller than one, the indicator-based model performs better than the autoregressive benchmark. Otherwise, the AR(p) process is preferable. Nonetheless, a ratio different from one does not imply that the forecast errors differ in a statistical sense. For this purpose, we apply the test proposed by Diebold and Mariano (1995). Under the null hypothesis, the expected difference in the mean squared forecast errors (MSFE) between the benchmark and the indicator model is zero. In other words, the AR(p) is assumed to be the data generating process under the null. Adding an indicator to this process then causes the typical problem of nested models: the indicator model nests the AR(p) process, which results from setting the parameters of the indicator to zero. The larger model, with each of our single indicators, introduces noise by estimating model parameters that are zero in the population. As stated by Clark and West (2007), this causes the MSFE of the larger model to be biased upward since redundant parameters have to be estimated. As a result, standard tests, such as the one proposed by Diebold and Mariano (1995), lose power. On this account, we follow the literature (see, among others, Lehmann and Weyh 2016; Weber and Zika 2016) and apply the adjusted test statistic by Clark and West (2007).
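The evaluation step can be sketched as follows, assuming the forecasts of both models are already available. The function names and the sample numbers are hypothetical. The adjusted statistic follows the MSFE-adjustment idea of Clark and West (2007) in its simplest form, a plain t-statistic without any correction for serially correlated errors, which would be a simplification for horizons beyond one quarter.

```python
import math


def rmsfe(errors):
    """Root mean squared forecast error."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def theil_u(actual, f_indicator, f_benchmark):
    """Relative RMSFE of the indicator model; values below one favor the indicator."""
    e_ind = [a - f for a, f in zip(actual, f_indicator)]
    e_ar = [a - f for a, f in zip(actual, f_benchmark)]
    return rmsfe(e_ind) / rmsfe(e_ar)


def clark_west(actual, f_indicator, f_benchmark):
    """t-statistic of the adjusted loss differential.

    The squared error of the larger (indicator) model is reduced by the squared
    difference between the two forecasts, which offsets the noise from
    estimating parameters that are zero under the null.
    """
    f = [(a - fb) ** 2 - ((a - fi) ** 2 - (fb - fi) ** 2)
         for a, fi, fb in zip(actual, f_indicator, f_benchmark)]
    n = len(f)
    mean = sum(f) / n
    var = sum((v - mean) ** 2 for v in f) / (n - 1)
    return mean / math.sqrt(var / n)


# Illustrative numbers only.
actual = [1.0, 2.0, 3.0, 4.0]
f_ind = [1.1, 1.9, 3.2, 3.8]
f_ar = [0.5, 2.5, 2.5, 4.5]
u = theil_u(actual, f_ind, f_ar)
cw = clark_west(actual, f_ind, f_ar)
```

The statistic is compared with one-sided standard normal critical values (for example, 1.645 at the 5% level); a large positive value speaks against equal predictive accuracy in favor of the indicator model.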

4 Results

In the following, we present our main results. We first highlight the general findings by showing the best performing indicators for each European country and by carving out the heterogeneity in the indicators’ forecasting performance across countries. And second, we discuss how robust the general findings are compared to variations in the forecasting experiment.

4.1 General findings

We start by presenting the best indicator for each country and forecast horizon in Table 3. The table shows both the best indicator and the corresponding Theil’s U value; we additionally include the relative number of indicators that perform significantly better compared to the benchmark model (rel. #).Footnote 6 A significant improvement is denoted by asterisks.

In general, we observe a large heterogeneity across countries both in terms of the best indicator and its relative forecasting performance. The highest relative improvement over the benchmark is found for Sweden (Theil’s U, \(h=1\): 0.70 and Theil’s U, \(h=2\): 0.72). In contrast, the lowest improvement of the best indicator is observed for the UK (Theil’s U, \(h=1\): 0.91) and Hungary (Theil’s U, \(h=2\): 0.98); thus, the span of improvement across countries is very large. One reason is the number of indicators that are able to beat the benchmark model at all. Table 3 reveals large variation in the relative numbers of indicators that produce significantly lower forecast errors compared to the autoregressive model (rel. #); the relative number is the ratio of indicators with a significant improvement to the total number of available indicators for each country. For \(h=1\), the Netherlands turns out to be the country with the highest relative number of indicators that significantly outperform the benchmark model (83.3%); for Latvia, we observe the lowest value (15.4%). In the case of forecasts two quarters ahead (\(h=2\)), Sweden shows the highest relative number with 77.8%. The UK takes the last place with a relative number of only 5.6%.

Turning to the best performing indicators, there is one predictor that frequently gets ranked first: the Export Climate (IFOXC). For one-quarter-ahead forecasts, the Export Climate is the best performing indicator for five out of 18 countries; for \(h=2\), it is ranked first for four countries in the sample. The Economic Sentiment Indicator (ESI) and the Industrial Confidence Indicator (ICI) follow immediately with three first places for \(h=1\) and \(h=2\), respectively. Across the best performing indicators, we also observe a distinct sectoral pattern. Only for Greece (DEXP—demand expectations in the service sector, \(h=1\)) and the Netherlands (PREXP-RET—price expectations for retail trade, \(h=2\)), indicators from non-manufacturing are ranked first place; for all remaining countries, indicators resulting from the survey conducted in the manufacturing sector show the lowest Theil’s U values.

Further interesting insights emerge for indicators that should be directly linked to export growth. Among the indicators that might serve as leading ones, the Export Order Books Level (EOBL) is more often ranked first than the export expectations (XEXP) of firms. Price competitiveness seems to play only a minor role as it is the best performing indicator only in the case of the UK (COMPIEU). In the end, as stated before, the Export Climate performs well for many countries; thus, an indicator that incorporates a large set of signals from the domestic country’s main trading partners proves valuable.

By looking exclusively at the best indicators, we cannot draw reliable conclusions on each single indicator’s overall performance. Therefore, we introduce Table 4, which displays for both forecast horizons the mean Theil’s U value and the corresponding standard deviation of each indicator across all 18 countries. The indicators are listed according to their rank for one-quarter-ahead forecasts.

In terms of the standard deviations in Theil’s U values, Table 4 clearly underpins the large heterogeneity in indicator performance across countries already suggested by presenting the best predictors. However, the pattern for the top three performing indicators is clear-cut. The Export Climate is the top indicator for both forecast horizons (mean Theil: 0.84 and 0.91 for \(h=1\) and \(h=2\), respectively) and produces approximately 4–6 percentage points lower average forecast errors than the second or third best indicator (PEXP, Production Expectations, and ICI, Industrial Confidence Indicator). This is a very interesting finding as the best indicators do not approximate exports directly. Whereas both industrial indicators more or less mirror the current or expected business cycle in the manufacturing sector as a whole, the Export Climate approximates foreign demand for domestic products, enriched by the price competitiveness of the domestic economy. Both indicators that should be directly linked to exports, the Export Order Books Level (EOBL) and export expectations (XEXP), perform relatively poorly across countries (mean Theil: 0.91 and 1.01 for \(h=1\) and \(h=2\), respectively). In the case of the export expectations in particular, the heterogeneity is remarkably pronounced, as shown by the standard deviations in the average Theil’s U values (0.11 for both forecast horizons). This finding suggests that it is much more difficult for firms in some countries to formulate an accurate statement on their expected export development, which might be driven by the composition of the domestic economies’ exports. We leave such an examination for future research.

Finally, we again take a closer look at the performance of non-manufacturing predictors. Only two indicators from the service sector produce mean Theil’s U values lower than one: the confidence indicator (SCI) and demand expectations (DEXP). All remaining variables are more or less not able to beat the simple autoregressive benchmark model. This might reflect the fact that only minor parts of country exports stem from retail trade. For most of the countries, exports are dominated by goods from the manufacturing sector.

4.2 Discussion on the forecasting performance

To check the validity of our general findings, we discuss two types of variations in the forecasting experiment. First, we use a rolling window instead of applying an expanding window approach. This means that the initial estimation window for Eq. (1) is not successively enlarged by one quarter but is rather fixed and moved forward in each iteration. In particular if breaks are present in the time series of export growth, the rolling window approach might be more suitable. In contrast, the advantage of the expanding window approach is its ability to capture the whole cyclicality or behavior of the underlying time series. Second, we test the forecasting performance of the survey indicators for a different transformation of the target variable. Instead of using year-on-year growth rates, we calculate quarter-on-quarter (qoq) growth rates. Such a transformation should capture the cyclical movement of the target variable during the year. In practice, forecasts of macroeconomic aggregates are based on the quarter-on-quarter transformation. However, the resulting series are much more volatile compared to the year-on-year transformation.
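The difference between the two window schemes comes down to which observations enter each estimation. A minimal sketch, with a hypothetical function name and zero-based quarter indices:

```python
def estimation_windows(n_obs, first_end, scheme):
    """Return (start, end) index pairs, end inclusive, one per forecast origin.

    scheme="expanding": the start stays at 0 and the window grows each quarter.
    scheme="rolling":   the window keeps its initial width and moves forward.
    """
    width = first_end + 1
    windows = []
    for end in range(first_end, n_obs):
        start = 0 if scheme == "expanding" else end - width + 1
        windows.append((start, end))
    return windows


expanding = estimation_windows(6, 3, "expanding")
rolling = estimation_windows(6, 3, "rolling")
```

With a rolling window, early observations eventually drop out of the sample, which is why it can adapt faster after a structural break.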

4.2.1 Estimation window

The way the estimation window is specified might drive the out-of-sample results, especially if the target series show multiple breaks. We compare the results from the expanding window and the rolling window in Fig. 2 as tables would be hard to read in our case. Both sub-figures, one for each forecasting horizon and indicated by panel (a) and (b), compare the Theil’s U of the expanding window approach (horizontal axis) with its counterpart from the rolling window approach (vertical axis). As indicated by the captions, the target series to forecast are year-on-year growth rates. Each dot represents a Theil’s U pair of an indicator for a specific country (for example, performance of export expectations for Germany). To ease the interpretation of each sub-figure, we add the \(45^\circ \) line as well as a horizontal and a vertical line both crossing the value of one, indicating whether an indicator performs better or worse compared to the specific benchmark model. Each dot below the \(45^\circ \) line represents a combination for which an indicator’s Theil’s U is smaller in the rolling window approach compared to the expanding window case. The opposite holds for values above the \(45^\circ \) line. The horizontal and vertical lines divide the sub-figures into four quadrants. The interpretations of quadrants (I) and (III) are straightforward. A dot lying in quadrant (I) represents an indicator that produces, on average, higher forecast errors than the benchmark model for both the expanding and the rolling window approach. The opposite case is true for dots lying in quadrant (III); thus, these indicators produce lower average forecast errors than the benchmark in both approaches. Whenever an indicator enters quadrant (II), it performs worse than the benchmark in the expanding window approach but better with a rolling window. For quadrant (IV), the indicator beats the benchmark in an expanding window setup, whereas it fails to do so in the rolling window approach.
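The quadrant logic can be written down compactly. The sketch below follows the verbal definitions given above rather than any particular plotting convention; the function names are hypothetical, and treating a Theil’s U of exactly one as "not better than the benchmark" is an assumption.

```python
def quadrant(theil_expanding, theil_rolling):
    """Classify a Theil's U pair into quadrants (I) to (IV) as described in the text."""
    beats_expanding = theil_expanding < 1.0
    beats_rolling = theil_rolling < 1.0
    if not beats_expanding and not beats_rolling:
        return "I"    # worse than the benchmark under both schemes
    if beats_expanding and beats_rolling:
        return "III"  # better than the benchmark under both schemes
    # Mixed cases: (IV) beats the benchmark only with the expanding window,
    # (II) only with the rolling window.
    return "IV" if beats_expanding else "II"


def share_stable(pairs):
    """Share of pairs whose verdict against the benchmark is the same in both schemes."""
    labels = [quadrant(e, r) for e, r in pairs]
    return sum(label in ("I", "III") for label in labels) / len(labels)


# Illustrative pairs (expanding, rolling); not taken from the paper.
pairs = [(1.1, 1.2), (0.9, 0.8), (0.9, 1.1), (1.1, 0.9)]
stable = share_stable(pairs)
```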

The forecasting results would be perfectly robust to the applied window if all dots were to lie on the \(45^\circ \) line. Figure 2 reveals that this is not exactly the case for the shorter forecast horizon [panel (a), \(h=1\)]. However, the results do not vary much between the two approaches, since the dots are located close to the \(45^\circ \) line. Only 22% of all indicators change their position relative to the benchmark, for better or worse, with the rolling window approach compared to the expanding window. Most of these differences are, however, not statistically significant. The remaining 78% stay in either quadrant (I) or (III); thus, their relative performance is stable across the applied estimation window. As we are most interested in those cases for which the indicator beats the benchmark model [quadrant (III)], we can confirm the robustness of the general findings. Indicators that show a Theil’s U smaller than one with the expanding window approach also do so in 79% of all cases when applying a rolling window.

A similar picture emerges for the longer forecasting horizon \(h=2\) [see panel (b) in Fig. 2]. Overall, the relative forecasting performance of 72% of all indicators does not change with the applied estimation window; only 28% become either better or worse when switching between the expanding and the rolling window approach. Turning to those indicators that beat the benchmark model in the expanding window case, Fig. 2 panel (b) reveals that most of them also outperform the benchmark in the rolling window case: 80% of the indicators showing a Theil’s U smaller than one with the expanding window approach also beat the benchmark model in the rolling window case.
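The two kinds of percentages reported for each horizon (the share of indicators whose position relative to the benchmark is unchanged, and the share of expanding window "winners" that also win with the rolling window) can be computed as in the following sketch. The function name and the input values are hypothetical and do not reproduce the data behind Fig. 2; a Theil’s U of exactly one is treated as not beating the benchmark, which is an assumption.

```python
def robustness_shares(pairs):
    """Summarize (u_expanding, u_rolling) pairs.

    stable_share:      share of pairs on the same side of one under both windows
                       (quadrants I and III in the text).
    conditional_share: among pairs with u_expanding < 1, the share that also
                       has u_rolling < 1.
    """
    stable = sum(1 for e, r in pairs if (e < 1) == (r < 1))
    winners = [(e, r) for e, r in pairs if e < 1]
    kept = sum(1 for _, r in winners if r < 1)
    return {
        "stable_share": stable / len(pairs),
        "conditional_share": kept / len(winners) if winners else float("nan"),
    }


# Hypothetical pairs, for illustration only.
pairs = [(0.8, 0.9), (0.9, 1.1), (1.2, 1.3), (1.1, 0.95), (0.7, 0.6)]
shares = robustness_shares(pairs)
```

The two shares need not coincide, because the first is computed over all dots and the second only over those that beat the benchmark with the expanding window.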

4.2.2 Transformation of the target series

Most of the existing applied forecasts base their analysis on quarter-on-quarter growth rates. This transformation leads, however, to highly volatile time series, especially in the case of exports. In the following, we check how the quarter-on-quarter transformation changes our general findings from the previous section.
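To make the two transformations concrete, the sketch below computes both growth rates from a quarterly series in levels. It assumes simple percentage changes (log differences would be an equally plausible choice), and the export levels are hypothetical.

```python
def growth_rates(levels, lag):
    """Percentage growth relative to the value `lag` quarters earlier."""
    return [100.0 * (levels[t] / levels[t - lag] - 1.0) for t in range(lag, len(levels))]


# Hypothetical quarterly export levels over two years.
exports = [100.0, 102.0, 101.0, 104.0, 105.0, 106.0, 104.0, 109.0]

qoq = growth_rates(exports, lag=1)  # quarter-on-quarter: compare with the previous quarter
yoy = growth_rates(exports, lag=4)  # year-on-year: compare with the same quarter one year earlier
```

With quarterly data, the year-on-year rate compares each quarter with the same quarter of the previous year, whereas the quarter-on-quarter rate only looks one quarter back.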

We start by showing a similar figure to the one from the first robustness check, where we compared an expanding window with a rolling window approach. Figure 3 presents the corresponding scatter plots for \(h=1\) and \(h=2\), respectively. The indicators’ Theil’s U from the year-on-year transformation is plotted on the horizontal axes; the corresponding relative forecast errors from the quarter-on-quarter transformation are displayed on the vertical axes.
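For readers who want to reproduce such a scatter plot, each coordinate is a relative forecast error. The sketch below assumes that Theil’s U is the ratio of the indicator model’s root mean squared forecast error to that of the benchmark model, so that values below one favor the indicator; the function names and numbers are hypothetical.

```python
import math


def rmsfe(actual, forecast):
    """Root mean squared forecast error."""
    errors = [a - f for a, f in zip(actual, forecast)]
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def theils_u(actual, indicator_forecast, benchmark_forecast):
    """Relative forecast error (assumed definition): below one, the indicator beats the benchmark."""
    return rmsfe(actual, indicator_forecast) / rmsfe(actual, benchmark_forecast)


# Hypothetical realized growth rates and forecasts, for illustration only.
actual = [2.0, 3.0, 1.0, 4.0]
indicator = [2.5, 2.5, 1.5, 3.5]   # each forecast misses by 0.5
benchmark = [3.0, 2.0, 2.0, 3.0]   # each forecast misses by 1.0
u = theils_u(actual, indicator, benchmark)
```

Computing this ratio once per transformation for every indicator and country would yield the pairs plotted on the two axes.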

Overall, the relative forecasting performance of the indicators worsens on average. For the shorter forecasting horizon (\(h=1\)), approximately 50% of all indicators across the countries with a Theil’s U smaller than one in the year-on-year case also exhibit a better forecasting performance than the benchmark in the quarter-on-quarter case. [This corresponds to the proportion of quadrant (III) in panel (a) of Fig. 3.] This decline in forecasting performance over all indicators and countries becomes even more pronounced for the longer forecast horizon (\(h=2\)). Here, only one third of all indicators with a better forecasting performance than the benchmark in the year-on-year case also beat the benchmark model when applying the quarter-on-quarter transformation. [This is the corresponding proportion of quadrant (III) in panel (b) of Fig. 3.]

These findings raise the question of what lies behind this worsening in forecasting performance. We address this question in two steps. First, we compare the best performing indicators for each country in both cases. Second, we investigate the average performance of each indicator across all countries, again for both transformations. Table 5 shows for both forecast horizons the best performing indicators from our baseline results (columns ‘Indicator’ and ‘Theil’ for the ‘yoy’ transformation) together with the best performing indicators in the quarter-on-quarter case (columns ‘qoq’). We can draw three main conclusions from Table 5. First, the relative forecasting performance of the best indicator in the quarter-on-quarter case is, on average, not as good as in the baseline setting. This holds true for both forecast horizons. The main reason is certainly the higher volatility of quarterly compared to yearly growth rates. Second, we still observe a best performing indicator that improves on the performance of the benchmark model. Thus, it is rather the mass of indicators that become worse and lead to the patterns observed in the scatter plots in Fig. 3. We have to state, however, that the best performing indicator does not beat the benchmark model for all countries. For the shorter forecast horizon (\(h=1\)), the best indicator for the UK cannot improve on the benchmark (see Theil COMPIEU: 1.00 in Table 5). For \(h=2\), the performance of the survey indicators is especially weak for Eastern European countries such as Estonia or Slovenia. Similar findings that survey indicators do not work that well for the UK or some Eastern European countries have been documented in the literature in conjunction with other macroeconomic aggregates (see Lehmann and Weyh 2016 for employment growth or Grimme et al. 2019 for total imports). Third, the best performing indicator in the year-on-year case does, in most cases, not coincide with the best indicator in the quarter-on-quarter case. This third finding leads to our next examination: the average performance of each indicator across all countries.

In Table 6, we compare the average rank of each indicator across both transformations. The indicators are ordered in terms of their performance rank for the shorter forecast horizon (\(h=1\)) and the year-on-year case. By comparing the ranks, we can clearly state that the ordering of the indicators’ performance is very stable for \(h=1\) (rank correlation: 0.70). The top four performing indicators in the year-on-year case (Export Climate, Production Expectations, Industrial Confidence Indicator, and Economic Sentiment Indicator) are also among the top four in the quarter-on-quarter case. There are, however, some indicators whose relative forecasting performance sharply decreases between both transformations. The export expectations (XEXP) of the firms clearly lose forecasting power in the quarter-on-quarter case. This finding is noteworthy, as one would expect this indicator to be especially closely linked to future export growth. Follow-up studies might investigate the reasons behind this finding.

The ranking for the longer forecast horizon (\(h=2\)) is, by contrast, not very stable between the transformations (rank correlation: 0.44). Also, the indicators’ performance is rather poor in the quarter-on-quarter case; across all countries, no single indicator is, on average, able to produce smaller forecast errors than the benchmark model. These means, however, coincide with large standard deviations in the countries’ Theil’s U.
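The rank correlations quoted for the two horizons measure how similarly the indicators are ordered under both transformations. The text does not state which coefficient is used; the sketch below assumes Spearman’s rank correlation, computed as the Pearson correlation of the ranks with tied values receiving their average rank. The input values are hypothetical average ranks, not the entries of Table 6.

```python
import math


def ranks(values):
    """1-based ranks; tied values receive the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# Hypothetical average ranks of five indicators under both transformations.
avg_rank_yoy = [1.0, 2.0, 3.0, 4.0, 5.0]
avg_rank_qoq = [2.0, 1.0, 3.0, 5.0, 4.0]
rho = spearman(avg_rank_yoy, avg_rank_qoq)
```

A value close to one indicates that the ordering of the indicators is largely preserved; a value close to zero indicates that the two orderings are essentially unrelated.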

All in all, our general findings for \(h=1\) are confirmed by the quarter-on-quarter transformation. As the average forecasting performance worsens when considering quarterly growth rates, it is rather the mass of poorly performing indicators that leads to a shift toward quadrant (IV) in panel (a) of Fig. 3. The best performing indicators on average are identical for both transformations. The poorer performance of the indicators for \(h=2\) can be described by a complete shift in the performance ranking, which might be explained by the larger volatility of the quarter-on-quarter series. In addition, the mass of indicators also performs worse in forecasting export growth on a quarterly basis. This finding also reflects the limited ability of survey indicators to produce good forecasts more than one quarter ahead. By construction, survey indicators do not incorporate any signal for the development of macroeconomic aggregates in the medium or long run.

5 Conclusion

Macroeconomic forecasts consist of more than the prediction of a single number, namely gross domestic product (GDP). In practice, it is standard to forecast each single component (for example, exports) of total output. Disaggregated GDP forecasts are also seen in the academic literature as more accurate than direct predictions, especially in the short run. Thus, better forecasts of each single component lead, ceteris paribus, to lower forecast errors of GDP. In this paper, we concentrate on one major aggregate in total output: exports of goods and services. Specifically, we ask whether some superior indicators exist that improve export growth forecasts the most across a multitude of European countries. We evaluate this question with a pseudo-out-of-sample exercise based on twenty survey-based indicators and eighteen single European countries. Our period of investigation runs from the first quarter of 1996 to the fourth quarter of 2016 and therefore covers more than one business cycle. For all countries, we find the best performing indicators that significantly beat a well-specified benchmark model. It turns out that four survey-based indicators in particular perform best across the eighteen European countries: the Export Climate, Production Expectations of the domestic manufacturing firms, the Industrial Confidence Indicator, and the Economic Sentiment Indicator. Two robustness checks confirm these results.

This paper expands the discussion on survey-based forecasting in general and export forecasts in particular. First, we use a multitude of survey indicators from different economic branches for our forecasting exercise. Second, we analyze this question for a multitude of European countries, thus broadening the picture of the usefulness of indicators for export forecasts. Third, we add to the discussion by Croux et al. (2005), who state that survey results should have some predictive content for several macroeconomic variables, as they are expensive and time-consuming for the firms. Our results clearly support the usage of four superior survey indicators for export forecasting. Nevertheless, our results reveal large heterogeneity in forecast accuracy across countries. This result is interesting and might encourage future research to concentrate on the reasons behind these observed country differences in forecast accuracy; meta-studies on the surveys’ abilities might therefore be a suitable approach. One can imagine that the forecast accuracy of survey indicators in a given country might be driven by the export composition of the domestic economy. Maybe it is easier for firms to formulate export expectations if they sell products such as machinery or cars than if they export oil. The sales potential of an oil exporter highly depends on the extremely volatile oil price, making it hard for the firm to formulate stable export expectations. Also, the overall increase in the importance of service exports might make it challenging to formulate accurate export forecasts in the future, as questions similar to those on export expectations in the industrial sector are missing in services. It might be reasonable to think about incorporating such questions in the domestic service sector as well. Finally, future studies might also be interested in the calculation of a worldwide survey-based index to capture world trade growth.

Notes

Disaggregated forecast approaches, which formulate forecasts for each single component (for example, private consumption and exports) in a first step and merge them in a second step to form GDP, are found by the academic literature to be preferable to a direct approach (see, among others, Angelini et al. 2010; Drechsel and Scheufele 2018). Thus, the forecast errors for GDP can be significantly reduced by forecasting each single component.

Table 7 in “Appendix A” presents detailed indicator descriptions and their corresponding sources.

According to national accounts statistics by the OECD, the share of nominal service exports ranged from 16% (Czech Republic) to 86% (Luxembourg) in 2016, with a standard deviation of 16 percentage points.

These 44 countries are representative as main trading partners since their share in total exports in 2016 varies between 73% for Greece and 95% in case of the Czech Republic. The standard deviation in the shares for our countries in the sample takes a value of 5.5 percentage points.

Table 8 in “Appendix A” summarizes the availability of indicators and the target series.

The full list of results can be found in the Online Appendix to this article.

References

Angelini E, Banbura M, Rünstler G (2010) Estimating and forecasting the euro area monthly national accounts from a dynamic factor model. OECD J J Bus Cycle Meas Anal 1:5–26

Baghestani H (1994) Evaluating multiperiod survey forecasts of real net exports. Econ Lett 44(3):267–272

Cardoso F, Duarte C (2006) The use of qualitative information for forecasting exports. Banco de Portugal Economic Bulletin Winter 2006

Ca’Zorzi M, Schnatz B (2010) Explaining and forecasting euro area exports: which competitiveness indicator performs best? In: de Grauwe P (ed) Dimensions of competitiveness, CESifo Seminar Series September 2010. MIT Press, Cambridge, pp 121–148

Clark TE, West KD (2007) Approximately normal tests for equal predictive accuracy in nested models. J Econ 138(1):291–311

Claveria O, Pons E, Ramos R (2007) Business and consumer expectations and macroeconomic forecasts. Int J Forecast 23(1):47–69

Croux C, Dekimpe MG, Lemmens A (2005) On the predictive content of production surveys: a pan-European study. Int J Forecast 21(2):363–375

Diebold FX, Mariano RS (1995) Comparing predictive accuracy. J Bus Econ Stat 13(3):253–263

Drechsel K, Scheufele R (2018) Bottom-up or direct? Forecasting German GDP in a data-rich environment. Empir Econ 54(2):705–745

Elstner S, Grimme C, Haskamp U (2013) Das ifo Exportklima—ein Frühindikator für die deutsche Exportprognose. ifo Schnelld 66(4):36–43

European Commission (2014) Price and Cost Competitiveness Report—Technical Annex. Brussels

European Commission (2016) The joint harmonised EU programme of business and consumer surveys—user guide. Brussels

Fiorito R, Kollintzas T (1994) Stylized facts of business cycles in the G7 from a real business cycles perspective. Eur Econ Rev 38(2):235–269

Frale C, Marcellino M, Mazzi GL, Proietti T (2010) Survey data as coincident or leading indicators. J Forecast 29(1–2):109–131

Frankel JA, Romer D (1999) Does trade cause growth? Am Econ Rev 89(3):379–399

Gayer C (2005) Forecast evaluation of European Commission survey indicators. J Bus Cycle Meas Anal 2005(2):157–183

Gelper S, Croux C (2010) On the construction of the European economic sentiment indicator. Oxf Bull Econ Stat 72(1):47–62

Grimme C, Lehmann R (2019) The ifo Export Climate–a leading indicator to forecast German export growth. CESifo Forum 20(4):36–42

Grimme C, Lehmann R, Noeller M (2019) Forecasting imports with information from abroad. ifo Working Papers No. 294

Guichard S, Rusticelli E (2011) A dynamic factor model for world trade growth. OECD Economics Department Working Papers No. 874

Hanslin Grossmann S, Scheufele R (2019) PMIs: reliable indicators for exports? Rev Int Econ 27(2):711–734

Jannsen N, Richter J (2012) Kapazitätsauslastung im Ausland als Indikator für die deutschen Investitionsgüterexporte. Wirtschaftsdienst 92(12):833–837

Keck A, Raubold A, Truppia A (2009) Forecasting international trade: a time series approach. OECD J J Bus Cycle Meas Anal 2:157–176

Lehmann R, Weyh A (2016) Forecasting employment in Europe: are survey results helpful? J Bus Cycle Res 12(1):81–117

Timmermann A (2007) An evaluation of the World Economic Outlook forecasts. IMF Staff Pap 54(1):1–33

Vosen S, Schmidt T (2011) Forecasting private consumption: survey-based indicators vs. Google trends. J Forecast 30(6):565–578

Wang C, Hsu Y, Liou C (2011) A comparison of ARIMA forecasting and heuristic modelling. Appl Financ Econ 21(15):1095–1102

Weber E, Zika G (2016) Labour market forecasting in Germany: is disaggregation useful? Appl Econ 48(23):2183–2198

Acknowledgements

Open Access funding provided by Projekt DEAL.

I am grateful to two anonymous referees. I also would like to thank Steffen R. Henzel, Tobias Lohse, Marcel Thum, Michael Weber, Klaus Wohlrabe, as well as conference and seminar participants at the Technische Universität Dresden, the ifo/CES Christmas Conference 2014, the 55th Congress of the European Regional Science Association (ERSA), and the 2015 Annual Congress of the German Economic Association (VfS) for valuable comments and suggestions on an earlier version of this paper that has been published as ifo Working Paper No. 196.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lehmann, R. Forecasting exports across Europe: What are the superior survey indicators?. Empir Econ 60, 2429–2453 (2021). https://doi.org/10.1007/s00181-020-01838-y

DOI: https://doi.org/10.1007/s00181-020-01838-y