Abstract

Without a single line of code being written by a human, an example Monte Carlo simulation-based application for stochastic dependence modeling with copulas is developed through pair programming involving a human partner and a large language model (LLM) fine-tuned for conversations. This process encompasses interacting with ChatGPT using both natural language and mathematical formalism. Under the careful supervision of a human expert, this interaction facilitated the creation of functioning code in MATLAB, Python, and R. The code performs a variety of tasks including sampling from a given copula model, evaluating the model’s density, conducting maximum likelihood estimation, optimizing for parallel computing on CPUs and GPUs, and visualizing the computed results. In contrast to other emerging studies that assess the accuracy of LLMs like ChatGPT on tasks from a selected area, this work investigates how to achieve a successful solution of a standard statistical task through collaboration between a human expert and artificial intelligence (AI). In particular, through careful prompt engineering, we separate successful solutions generated by ChatGPT from unsuccessful ones, resulting in a comprehensive list of related pros and cons. It is demonstrated that if the typical pitfalls are avoided, we can substantially benefit from collaborating with an AI partner. For example, we show that if ChatGPT is not able to provide a correct solution due to missing or incorrect knowledge, the human expert can feed it with the correct knowledge, e.g., in the form of mathematical theorems and formulas, and make it apply the gained knowledge in order to provide a correct solution. Such an ability presents an attractive opportunity to achieve a programmed solution even for users with rather limited knowledge of programming techniques.

1 Introduction

The recent progress in solving natural language processing (NLP) tasks using large language models (LLMs) has resulted in models with previously unseen quality of text generation and contextual understanding. These models, such as BERT (Devlin et al. 2018), RoBERTa (Liu et al. 2019), and GPT-3 (Brown et al. 2020), are capable of performing a wide range of NLP tasks, including text classification, question answering, text summarization, and more. With more than 100 million users registered within two months of its release for public testing through a web portal (Ruby 2023), ChatGPT (OpenAI 2022a) is the LLM that currently resonates most in the artificial intelligence (AI) community. This conversational AI is fine-tuned from the GPT-3.5 series with reinforcement learning from human feedback (Christiano et al. 2017; Stiennon et al. 2020), using nearly the same methods as InstructGPT (Ouyang et al. 2022), but with slight differences in the data collection setup. In March 2023, ChatGPT’s developer released GPT-4 (OpenAI 2023b), the successor of GPT-3.5. At the time of writing this paper, GPT-4 was not freely available, so our results do not include its outputs. However, a technical report on some of the model’s properties is available, so we have added the relevant information where appropriate.

One result of ChatGPT’s fine-tuning is that it can generate code in many programming languages from a task description in natural language. This can be exploited in pair programming (Williams 2001), a software development technique in which two programmers work together at one workstation. One, the driver, writes code, while the other, the navigator, reviews each line of code as it is typed in and considers the “strategic” direction of the work. This work explores an approach where we, the human part of the pair, take the role of the navigator, giving specific tasks to the driver, ChatGPT, which represents the AI part of the pair. This approach offers several benefits, including:

- Enhanced productivity: ChatGPT can help automate repetitive and time-consuming programming tasks, freeing up time for developers to focus on higher-level problem-solving and creative work. In a study conducted by the GitHub Next team (Kalliamvakou 2022) for GitHub Copilot (GitHub 2021), an average time saving of 55% was reported for the task of writing an HTTP server in JavaScript. GitHub Copilot is another code-suggestion tool that generates code snippets from natural language descriptions; it is powered by Codex (Chen et al. 2021), an LLM similar to ChatGPT.

- Improved code quality: Pair programming with ChatGPT can help identify errors and bugs in the code before they become bigger problems. ChatGPT can also suggest improvements to code architecture and design.

- Knowledge sharing: ChatGPT can help less experienced developers learn from more experienced team members by providing suggestions and guidance.

- Better code documentation: ChatGPT can help create more detailed and accurate code documentation by generating comments and annotations based on the code.

- Accessibility: ChatGPT can make programming more accessible to people who may not have a programming background, allowing them to collaborate with developers and contribute to projects in a meaningful way.

For example, for researchers who have developed a new theory requiring computations, using tools like ChatGPT to implement the solution might be appealing and time-effective, eliminating the need to engage typically expensive software engineering manpower.

Several recent studies assess the accuracy of LLMs like ChatGPT on a set of tasks from a particular area. For example, multiple aspects of the mathematical skills of ChatGPT are evaluated in Frieder et al. (2023), with the main observation that it is not yet ready to deliver high-quality proofs or calculations consistently. In Katz et al. (2023), a preliminary version of GPT-4 was experimentally evaluated against prior generations of GPT on the entire Uniform Bar Examination, and it is reported that GPT-4 significantly outperforms both human test-takers and prior models, demonstrating a 26% increase over the GPT-3.5-based model and beating humans in five of seven subject areas. In Bang et al. (2023), an extensive evaluation of ChatGPT using 21 data sets covering 8 different NLP tasks, such as summarization, sentiment analysis and question answering, is presented. The authors found that, on the one hand, ChatGPT outperforms LLMs with so-called zero-shot learning (Brown et al. 2020) on most tasks and even outperforms fine-tuned models on some tasks. On the other hand, they conclude that ChatGPT suffers from hallucination problems like other LLMs and that it generates more extrinsic hallucinations from its parametric memory, as it does not have access to an external knowledge base. Interestingly, the authors observed in several tasks that the possibility of interacting with ChatGPT enables human collaboration with the underlying LLM to improve its performance.

The latter observation is the main focus of this work. Rather than evaluating the accuracy of LLMs, we investigate ways to benefit from pair programming with an AI partner in order to achieve a successful solution for a task requiring intensive computations. Despite many impressive recent achievements of state-of-the-art LLMs, obtaining functional code is far from straightforward; one of many unsuccessful attempts is reported in Clinton (2022). Importantly, successful attempts are also emerging. In Maddigan and Susnjak (2023), the authors report that LLMs together with the proposed prompts can offer a reliable approach to rendering visualisations from natural language queries, even when queries are highly misspecified and underspecified. However, in many areas, including computationally intensive solutions of analytically intractable statistical problems, a study demonstrating the benefits of pair programming with an AI partner is missing.

This work fills this gap and considers applications involving copulas (Nelsen 2006; Joe 2014) as models for stochastic dependence between random variables. These applications are known for their analytical intractability; hence, the Monte Carlo (MC) approach is most widely used to compute the involved quantities of interest. As the MC approach often involves substantial computational effort, conducting an MC study requires one to implement all underlying concepts. We demonstrate how to make ChatGPT produce a working implementation for such an application by interacting with it in natural language and using mathematical formalism. To fully illustrate the coding abilities of ChatGPT, the human role is pushed to an extreme, and all the mentioned tasks are implemented without a single line of code written by the human and without any tweaking of the generated code. It is important to emphasize that even though the application under consideration relates to a specific area of probability and statistics, our observations apply in a wider scope, as the tasks we consider (sampling from a given (copula) model, evaluating the model’s density, performing maximum likelihood estimation, optimizing the code for parallel computing and visualizing the computed results) commonly appear in many statistical applications. Also, we do not present just one way to achieve a successful solution for a given task. Most of the successful solutions are complemented with examples demonstrating which adjustments of our prompts for ChatGPT turn unsuccessful solutions into successful ones. This results in a comprehensive list of related pros and cons, suggesting that if the typical pitfalls are avoided, we can substantially benefit from collaborating with LLMs like ChatGPT.
In particular, we demonstrate that if ChatGPT is not able to provide a correct solution due to limitations in its knowledge, it is possible to feed it with the necessary knowledge and make ChatGPT apply this knowledge to provide a correct solution. Having all the sub-tasks of the main task successfully coded in a particular programming language, we also demonstrate how to fully exploit several impressive abilities of ChatGPT. For example, given a simple high-level prompt like “Now code it in Python.”, ChatGPT correctly transpiles the code from one programming language to another in a few seconds. Additionally, if an error is encountered during the execution of code produced by ChatGPT, it can not only identify the error but also immediately produce a corrected version once the error message is copy-pasted into ChatGPT’s web interface.

The paper is organized as follows. Section 2 presents the tasks we consider and sets up the way we interact with ChatGPT. Section 3 presents the development of the task via pair programming with ChatGPT. Section 4 summarizes the pros and cons observed during the task development, including a discussion on how to mitigate the latter, and Sect. 5 concludes.

2 Methodology

2.1 The task

Let \((x_{ij}) \in {\mathbb {R}}^{n\times d}\) be a sample of size n from a random vector \((X_1, \dots , X_d) \sim F\), where F is a joint distribution function with the continuous univariate margins \(F_1, \dots , F_d\) and copula (Sklar 1959) C implicitly given by \(F(x_1,\dots , x_d)=C(F_1(x_1),\dots , F_d(x_d))\) for \(x_1,\dots , x_d\in {\mathbb {R}}\). An explicit formula for C is \(C(u_1, \dots , u_d) = F(F_1^{-1}(u_1), \dots , F_d^{-1}(u_d)), ~u_1, \dots , u_d \in [0,1]\). A typical application involving the MC approach and copulas assumes that C is unknown but belongs to a parametric family of copula models \(\{C_{\theta }: \theta \in \Theta \}\), where \(\Theta \) is an open subset of \({\mathbb {R}}^p\) for some integer \(p \ge 1\). The following steps are then considered:

1. Estimate the true but unknown parameter \(\theta _0 \in \Theta \) of \(C_{\theta _0} = C\), e.g., using the pseudo maximum likelihood (ML) estimator

$$\begin{aligned} {\hat{\theta }} = \mathop {\text {argmax}}\limits _{\theta \in \Theta }\sum _{i=1}^n \log c_\theta ({\hat{u}}_{i1}, \dots , {\hat{u}}_{id}), \end{aligned}$$ (1)

where \(c_\theta \) is the density of \(C_\theta , ~\theta \in \Theta \), \({\hat{u}}_{ij} = {\hat{F}}_j(x_{ij})\) and \({\hat{F}}_j\) is an estimate of \(F_j\) for \(i \in \{1,\dots , n\}, ~j \in \{1, \dots , d\}\). For some copula families, e.g., Archimedean ones, evaluating \(c_\theta \) for large d is already a challenge; see Hofert et al. (2013). For pair-copula constructions, the main challenge lies in computing (1), which is typically done using numerical methods like gradient descent; see Haff (2013) or Schellhase and Spanhel (2018).

2. Generate a sample \((v_{ij}) \in [0,1]^{N\times d}\) from \(C_{{\hat{\theta }}}\), typically with \(N \gg n\). For several popular copula families, this task is also challenging and involves different techniques for efficiently sampling from \(C_{{\hat{\theta }}}\); see, e.g., Hofert (2010) and Hofert et al. (2018) for sampling techniques related to Archimedean and Archimax copulas and their hierarchical extensions.

3. Compute a sample from an analytically intractable distribution, e.g., from the distribution of \({\bar{X}} = \frac{1}{d}\sum _{j=1}^{d}X_j\). Compared to the previous two steps, this is a trivial task: we just need to evaluate \({\bar{x}}_i = \frac{1}{d}\sum _{j=1}^{d}{\hat{F}}_j^{-1}(v_{ij}), ~i \in \{1,\dots , N\}\).

4. Compute the desired quantity based on \({\bar{x}}_1,\dots ,{\bar{x}}_{N}\). For example, if \(X_1, \dots , X_d\) represent risk factor changes, a quantile of the distribution function of \({\bar{X}}\) represents the Value-at-Risk VaR\(_\alpha \), commonly used in quantitative risk management; see McNeil et al. (2015). Approximating VaR\(_\alpha ({\bar{X}})\) is also trivial, as it just involves computing the order statistics \({\bar{x}}_{(1)} \le \dots \le {\bar{x}}_{(N)}\) and then picking out \({\bar{x}}_{(\lceil \alpha N \rceil )}\), where \(\alpha \in (0, 1)\) is a desired confidence level.

In the same realm, the quantity known as expected shortfall involves computing the average of the values \({\bar{x}}_i\) that are larger than VaR\(_\alpha \), so again a computationally trivial task.
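Steps 1, 3 and 4 above involve only elementary operations, which the following minimal Python sketch (standard library only) illustrates. It assumes rank-based pseudo-observations \(R_{ij}/(n+1)\), one common choice of \({\hat{F}}_j\) in (1), and takes the marginal quantile functions \({\hat{F}}_j^{-1}\) as user-supplied callables; all function names are hypothetical and not taken from the paper's code.

```python
import math


def pseudo_observations(x):
    """Rank-based pseudo-observations u_ij = R_ij / (n + 1) for an n x d sample.

    R_ij is the rank of x_ij within column j; dividing by n + 1 keeps all
    values strictly inside (0, 1). Ties are not treated specially, since
    continuous margins are assumed.
    """
    n, d = len(x), len(x[0])
    u = [[0.0] * d for _ in range(n)]
    for j in range(d):
        order = sorted(range(n), key=lambda i: x[i][j])  # row indices sorted by column j
        for rank, i in enumerate(order, start=1):
            u[i][j] = rank / (n + 1)
    return u


def simulated_means(v, quantiles):
    """Step 3: xbar_i = (1/d) * sum_j F_j^{-1}(v_ij) for each row of v."""
    d = len(quantiles)
    return [sum(q(p) for q, p in zip(quantiles, row)) / d for row in v]


def var_es(xbar, alpha):
    """Step 4: empirical VaR_alpha and expected shortfall of xbar_1, ..., xbar_N.

    VaR_alpha is the order statistic xbar_(ceil(alpha * N)); the expected
    shortfall is the average of the values larger than VaR_alpha.
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie in (0, 1)")
    ordered = sorted(xbar)
    k = math.ceil(alpha * len(ordered))  # 1-based index of the order statistic
    var = ordered[k - 1]
    tail = [x for x in ordered if x > var]
    es = sum(tail) / len(tail) if tail else var
    return var, es
```

For example, with standard exponential margins one could pass [lambda p: -math.log1p(-p)] * d as the quantiles argument of simulated_means.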

In order to clearly see that the code generated by ChatGPT indeed works as expected without the need for an experienced programmer, we deviate a bit from the above outline, while keeping the non-trivial tasks, i.e., the sampling and estimation. We thus prompt ChatGPT to generate code that does the following:

1. Generate a sample from \(C_{\theta _0}\), where \(\theta _0 \in {\mathbb {R}}\).

2. Based on this sample, compute the ML estimator \({\hat{\theta }}\) of the true parameter \(\theta _0\) using (1).

Then, we repeat these two steps for several values of \(\theta _0\), e.g., linearly spaced on some convenient interval of \({\mathbb {R}}\). If the plotted pairs \((\theta _0, {\hat{\theta }})\) lie close to the identity line \({\hat{\theta }} = \theta _0\), then one has strong evidence of a correct statistical sampling and estimation procedure. Finally, to allow for scaling, we ask ChatGPT to optimize the generated code for parallel computing on CPUs as well as on GPUs.

2.2 The communication protocol

When interacting with ChatGPT, we use the web portal provided by its development team. We set up and follow this communication protocol:

1. We prompt ChatGPT to generate code for solving a selected task in natural language and using mathematical formalism, that is, we specify the task in plain text and do not use any specific formal language. For formulas, we use plain text like psi(t) = (1 + t)^(-1/theta).

2. If the solution generated by ChatGPT is wrong, that is, it does not solve the given task, we communicate the problem to ChatGPT and ask it to provide us with a corrected solution.

3. If this corrected solution is still wrong, we feed ChatGPT with the knowledge necessary to complete the task successfully, e.g., we provide it with theorems and formulas in plain text. For an example, see the third prompt in Sect. 3.4.

In this way, we simulate an interaction between two humans, e.g., a client who emails a task to a software engineer; we play the role of the client and ChatGPT plays the role of the software engineer. As the client is typically not aware of all details required to solve the task at the beginning of the interaction, such a communication protocol may frequently be observed in practice. The client starts by providing the (subjectively) most important features of the problem in order to minimize her/his initial effort, and then, if necessary, adds more details to get a more precise solution. Importantly, this communication protocol led to the successful completion of the aforementioned tasks, as reported in Sect. 3.

With regard to passing ChatGPT the required knowledge, it is important to realize that ChatGPT does not have any memory of previous conversations with a user. Instead, the trick that makes ChatGPT appear to remember previous conversations is to feed it the entire conversation history as a single prompt. This means that when a user sends a message, the previous conversation history is appended to the prompt, which is then fed to ChatGPT. This prompt engineering technique is widely used in conversational AI systems to improve the model’s ability to generate coherent and contextually appropriate responses. However, it is just a trick used to create the illusion of memory in ChatGPT.

If the previous conversation is too long (more than 4096 tokens (OpenAI 2023c), where a token is roughly 3/4 of an English word), it may not fit entirely within the context window that ChatGPT uses to generate responses. In such cases, the model may only have access to a partial view of the conversation history, which can make the model seem as if it has forgotten some parts of the conversation. To mitigate this issue, conversational AI designers often use techniques like truncating or summarizing the conversation history to ensure that it fits within the context window. In our example task, we solve this problem by re-introducing the relevant earlier parts of the conversation to ChatGPT. For example, when transpiling the code from Python (“Appendix A”) to R (“Appendix B”), we first copy-paste the Python code into ChatGPT’s web interface and then ask it to transpile it to R. If we ignore this technical limitation and refer to a part of the conversation that no longer fits within the context window, we are unlikely to get a correct answer or solution. Finally, note that according to its technical report, GPT-4 uses a context window that is 8 times larger than that of ChatGPT, so it can contain roughly 25,000 words. This suggests that the limitation imposed by the context window length will become less and less of a concern.
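The history mechanism and the truncation technique described above can be illustrated with a small, hypothetical Python sketch. It is not OpenAI's implementation: it simply flattens a list of (role, text) turns into one prompt and drops the oldest turns once an estimated token count exceeds a 4096-token budget, using the rule of thumb that a token is roughly 3/4 of a word (so 4096 tokens correspond to roughly 3,000 words).

```python
import math


def estimate_tokens(text):
    """Rough token count: about 4/3 tokens per whitespace-separated word.

    This is only the rule of thumb from the text; a real tokenizer differs.
    """
    return math.ceil(len(text.split()) * 4 / 3)


def build_prompt(history, new_message, max_tokens=4096):
    """Flatten a chat history into a single prompt string.

    history is a list of (role, text) pairs, oldest first. The oldest turns
    are dropped until the estimated prompt size fits max_tokens, which is why
    early parts of a long conversation appear to be "forgotten".
    """
    lines = [f"{role}: {text}" for role, text in history]
    lines.append(f"user: {new_message}")
    # Always keep the newest message, even if it alone exceeds the budget.
    while len(lines) > 1 and sum(estimate_tokens(line) for line in lines) > max_tokens:
        lines.pop(0)  # forget the oldest turn first
    return "\n".join(lines)
```

In this simplified model, re-introducing earlier material (such as the Python code before asking for the R version) helps because the re-introduced text becomes the newest turn and is therefore the last to be dropped.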

2.3 The copula family

In order to make the example task specified in Sect. 2.1 precise, we choose the parametric family \(\{C_{\theta }: \theta \in \Theta \}\) to be the popular family of Clayton copulas (Clayton 1978), given by

$$\begin{aligned} C_\theta (u_1,u_2) = \left[ \max \left\{ u_1^{-\theta } + u_2^{-\theta } - 1, 0\right\} \right] ^{-1/\theta }, \end{aligned}$$ (2)

where \(u_1,u_2\in [0,1]\) and \(-1\le \theta <\infty ,\,\theta \ne 0\). This family of copulas is used in a wide variety of applications. To mention several recent ones, Huang et al. (2022) use it to analyse the correlation between the residual series of a long short-term memory neural network and a wind-speed series; in particular, they use maximum likelihood estimation of the copula parameter, i.e., the procedure that ChatGPT implements here in Sect. 3.3. In the simulation study by Michimae and Emura (2022), where copula-based competing risks models for latent failure times are proposed, the authors utilize sampling from Clayton copulas, i.e., the procedure that ChatGPT implements here in Sect. 3.4.

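For simplicity, and because models with \(\theta < 0\), that is, those with negative dependence, are rarely used in practice, we restrict ourselves to \(\theta \in (0, \infty )\), which allows us to rewrite (2) as

$$\begin{aligned} C_\theta (u_1,u_2) = \left( u_1^{-\theta } + u_2^{-\theta } - 1\right) ^{-1/\theta }. \end{aligned}$$ (3)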

The technical reason for choosing this family is its simple analytical form, which makes it easier for the reader to track all the formulas we ask for and get from ChatGPT, e.g., the probability density function (PDF). Another reason is ChatGPT’s relatively limited knowledge of this family. By contrast, e.g., for the most popular family of Gaussian copulas, ChatGPT was able to generate a sampling algorithm without being fed with the necessary theory. Its limited knowledge of the Clayton family simulates a realistic situation in which ChatGPT faces a new theory or concept, e.g., one recently developed by the user. However, we would like to encourage the reader to experiment with any family of interest or even with a task that differs from our example.
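As a quick numerical cross-check of (2), the following minimal Python sketch evaluates the Clayton copula directly and, for \(\theta > 0\), via the Archimedean representation \(C_\theta (u_1,u_2) = \psi (\psi ^{-1}(u_1) + \psi ^{-1}(u_2))\) with the generator \(\psi (t) = (1+t)^{-1/\theta }\) used as the plain-text formula example in Sect. 2.2. The function names are hypothetical, and only the standard library is used.

```python
def clayton_generator(t, theta):
    """Clayton generator psi(t) = (1 + t)^(-1/theta), valid for theta > 0."""
    return (1.0 + t) ** (-1.0 / theta)


def clayton_inverse_generator(u, theta):
    """Inverse generator psi^{-1}(u) = u^(-theta) - 1, valid for theta > 0."""
    return u ** (-theta) - 1.0


def clayton_cdf(u1, u2, theta):
    """Clayton copula C_theta(u1, u2) from (2), for -1 <= theta < inf, theta != 0."""
    if theta < -1.0 or theta == 0.0:
        raise ValueError("theta must satisfy -1 <= theta < inf and theta != 0")
    if u1 == 0.0 or u2 == 0.0:
        return 0.0  # every copula vanishes when one argument is 0
    s = u1 ** (-theta) + u2 ** (-theta) - 1.0
    if s <= 0.0:
        return 0.0  # the max{., 0} branch of (2); reachable only for theta < 0
    return s ** (-1.0 / theta)
```

Two sanity checks follow directly from (2): \(C_\theta (u, 1) = u\) (uniform margins), and for \(\theta = -1\) the formula reduces to \(\max \{u_1 + u_2 - 1, 0\}\), the lower Fréchet bound.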

3 Pair programming with ChatGPT

We begin by prompting ChatGPT with tasks that require relatively few lines of code in order not to overwhelm the reader with the amount of results, while still demonstrating as many abilities of ChatGPT as possible. Then, we gradually move to larger tasks until all of them are implemented. Our prompts are in sans serif font style. Responses from ChatGPT are in verbatim font style if the response is code, and in italic font style otherwise. At their first occurrence, we indicate this explicitly. Note that as ChatGPT is quite a loquacious LLM, we mostly limit the length of its responses; otherwise we would be overwhelmed with answers of unnecessary detail. For the same reason, when asking ChatGPT for code, we mostly omit its comments on the produced code. Also note that when commenting on ChatGPT’s responses, we speak of it as if it were a human, e.g., it “understands”, “knows” or “is aware of” something, which should be interpreted by the reader in the sense that ChatGPT produced a response that (typically very well) mimics a corresponding human reaction. As ChatGPT’s responses are non-deterministic by default, i.e., if it is given the same prompt again, the response might slightly differ, we re-generate the response to each of our prompts three times, and if these responses are factually different from each other, we indicate it accordingly. Finally, note that the whole interaction is conducted in one chat window. Once we observe that ChatGPT starts to forget the previous context for the reasons described in Sect. 2.2, we re-introduce it as described in the same section.

In the rest of this section, we first investigate the knowledge of ChatGPT on the topic under consideration, and then we prompt it to generate code for evaluation of the density of the Clayton copula, for ML estimation of its parameter, for sampling from the copula, for creating a visualization of the example Monte Carlo approach, and for optimizing the code for parallel computations.

3.1 Warm up

We see that ChatGPT can save us time by quickly and concisely summarizing basic facts about the topic of our interest. We can also limit the length of the answer, a limit that this 91-word answer satisfies. The information about the positive dependence probably follows from what we have already stated: negatively dependent models are rarely used in practice, which is probably reflected in ChatGPT’s training data. However, several details of the answer can be discussed. In lines 3 and 4, “random variables” instead of just “variables” would be more precise. From the last sentence, it follows that an Archimedean copula can be expressed as the generator function for some symmetric distributions. This is confusing at best, as Archimedean copulas are rather a particular class of copulas admitting a certain functional form based on so-called generator functions. Finally, symmetric distributions have a precise meaning: such a distribution is unchanged when, in the continuous case, its probability density function is reflected around a vertical line at some value of the random variable represented by the distribution. Whereas Archimedean copulas possess a kind of symmetry following from their exchangeability, they are not symmetric distributions in this sense.

To investigate the limits of ChatGPT’s knowledge, let us prompt it with two further questions. According to the previous response, we can speculate that it has limited knowledge regarding Clayton models with negative dependence.

We prompted ChatGPT to answer the same question three times and got contradicting answers. The first answer is correct; however, after asking again, ChatGPT changed its mind. Before commenting on that, let us try once again, with a slightly more complex concept.

If \((U_1, U_2)\sim C\), then the survival copula of C is the distribution of \((1-U_1, 1-U_2)\), and thus the properties of the lower tail of C are the properties of the upper tail of the survival copula. Hence, we got an incorrect answer. After asking again, we got this response.

Again, we got contradicting answers. Based on this observation, the reader could raise the following question.

This response confirms what we have seen so far; hence, any user should take these limitations into account with the utmost seriousness and be extremely careful when asking ChatGPT for some reasoning. The examples above also illustrate well that the current version of ChatGPT is definitely not an appropriate tool for reasoning, as also observed by Frieder et al. (2023) and Bang et al. (2023). However, this by no means implies that it cannot serve as a helpful AI partner for pair programming.
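For reference, the facts behind the survival-copula question above can be stated compactly, using the standard tail-dependence coefficients \(\lambda _L(C) = \lim _{v \rightarrow 0^+} C(v,v)/v\) and \(\lambda _U(C) = \lim _{u \rightarrow 1^-} (1 - 2u + C(u,u))/(1-u)\) (standard definitions assumed here, not introduced in the text). If \((U_1,U_2)\sim C\), the survival copula \({\hat{C}}\), i.e., the distribution function of \((1-U_1, 1-U_2)\), satisfies

$$\begin{aligned} {\hat{C}}(u_1,u_2)&= u_1 + u_2 - 1 + C(1-u_1, 1-u_2),\\ \lambda _U({\hat{C}})&= \lim _{u \rightarrow 1^-}\frac{1 - 2u + {\hat{C}}(u,u)}{1-u} = \lim _{v \rightarrow 0^+}\frac{C(v,v)}{v} = \lambda _L(C). \end{aligned}$$

For the Clayton copula (3), \(C_\theta (v,v)/v = (2 - v^{\theta })^{-1/\theta } \rightarrow 2^{-1/\theta }\) as \(v \rightarrow 0^+\), while \(\lambda _U(C_\theta ) = 0\); hence the survival Clayton copula has upper tail dependence \(2^{-1/\theta }\) and no lower tail dependence.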

3.2 The density

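It can be easily shown that the density \(c_{\theta }\) of \(C_{\theta }\) is

$$\begin{aligned} c_\theta (u_1,u_2) = (\theta + 1)(u_1u_2)^{-\theta -1}\left( u_1^{-\theta } + u_2^{-\theta } - 1\right) ^{-1/\theta -2} \end{aligned}$$ (4)

for \(\theta > 0\). Before we ask ChatGPT to generate code evaluating the Clayton copula density, it is worth asking for a plain formula.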

Out of many factually distinct responses, we present this one to illustrate how dangerous it could be to believe that ChatGPT knows or can derive the right formula. Even if it looks quite similar to (4), this is not the density of \(C_{\theta }\).

This is another formula that is quite similar to (4), but it is also incorrect. As already mentioned, ChatGPT is not a good option when it comes to reasoning. So, to get the right formula, a symbolic computation tool is definitely preferable. However, note that ChatGPT plugins (OpenAI 2023a) have recently been announced, in particular the Code interpreter, an experimental ChatGPT model that can use Python, handle uploads and downloads, and allow for symbolic computations. Even though it is not freely available yet, this might also be a way to mitigate the problem directly in the ChatGPT environment.

Following our communication protocol, let us feed ChatGPT with the right formula and ask for a corresponding function in three programming languages: (1) MATLAB (we used version R2020a), which represents proprietary software, (2) Python (version 3.9), open-source software popular in the AI community, and (3) R (version 4.2.2), open-source software popular in the statistical community. Note that in cases when the output is too wide, we adjust it to fit on the page; otherwise we do not adjust it in any way.

After being fed the right formula, ChatGPT immediately generated functional code. Notice that we used quite a natural and relaxed form of conversation, e.g., like in an email.

As ChatGPT takes into account the previous conversation, we could afford to be extremely concise with our prompts and still get correct solutions. In what follows, we ask for code only in MATLAB to save space. However, the equivalent code in Python and R is shown in the appendices, where all the functions can be easily identified by their names.
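A Python counterpart of the density (4) takes only a few lines. The following sketch is ours, not the ChatGPT-generated code in the appendices, and its function names are hypothetical. It also includes a central finite-difference approximation of \(\partial ^2 C_\theta /\partial u_1 \partial u_2\) based on (3), an independent check that could have exposed the incorrect density formulas discussed above.

```python
def clayton_density(u1, u2, theta):
    """Clayton copula density c_theta(u1, u2) from (4), for theta > 0 and u1, u2 in (0, 1)."""
    if theta <= 0:
        raise ValueError("this sketch assumes theta > 0")
    s = u1 ** (-theta) + u2 ** (-theta) - 1.0
    return (theta + 1.0) * (u1 * u2) ** (-theta - 1.0) * s ** (-1.0 / theta - 2.0)


def clayton_cdf(u1, u2, theta):
    """Clayton copula (3), for theta > 0."""
    return (u1 ** (-theta) + u2 ** (-theta) - 1.0) ** (-1.0 / theta)


def mixed_difference(u1, u2, theta, h=1e-4):
    """Central finite-difference approximation of d^2 C / (du1 du2)."""
    return (clayton_cdf(u1 + h, u2 + h, theta) - clayton_cdf(u1 + h, u2 - h, theta)
            - clayton_cdf(u1 - h, u2 + h, theta) + clayton_cdf(u1 - h, u2 - h, theta)) / (4.0 * h * h)
```

For instance, for \(\theta = 1\) and \(u_1 = u_2 = 0.5\), (4) gives \(2 \cdot 16 \cdot 3^{-3} = 32/27 \approx 1.185\), and mixed_difference should agree with clayton_density to several significant digits at interior points.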

3.3 The estimation

In contrast to our struggles with the PDF, we immediately got a correct solution. This may be due to the fact that code snippets computing ML estimators occur more frequently in ChatGPT’s training data. This pattern (the more general the task, the more frequently we receive a working solution on the first attempt) is also observed in other examples throughout this work.
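For readers who want to reproduce the estimation step without MATLAB, the following minimal Python sketch maximizes the objective in (1) for the Clayton family. It is not the code ChatGPT produced, and its names are hypothetical. To stay within the standard library, it uses golden-section search on a bounded interval (which assumes the log-likelihood is unimodal there) in place of a general-purpose optimizer, and it evaluates \(\log c_\theta \) from (4) in log space so that \(u^{-\theta }\) never overflows for small u and large \(\theta \).

```python
import math


def clayton_log_density(u1, u2, theta):
    """log c_theta(u1, u2) from (4) for theta > 0 and u1, u2 in (0, 1].

    log(s) with s = u1^-theta + u2^-theta - 1 is computed as
    m + log(exp(a - m) + exp(b - m) - exp(-m)), where a = log(u1^-theta),
    b = log(u2^-theta) and m = max(a, b), so that u^-theta never overflows.
    """
    a, b = -theta * math.log(u1), -theta * math.log(u2)
    m = max(a, b)
    log_s = m + math.log(math.exp(a - m) + math.exp(b - m) - math.exp(-m))
    return (math.log1p(theta) - (theta + 1.0) * (math.log(u1) + math.log(u2))
            - (1.0 / theta + 2.0) * log_s)


def log_likelihood(theta, u):
    """The objective in (1): sum of log-densities over the pairs in u."""
    return sum(clayton_log_density(u1, u2, theta) for u1, u2 in u)


def clayton_mle(u, lo=1e-3, hi=50.0, tol=1e-6):
    """Maximize log_likelihood over [lo, hi] by golden-section search.

    Golden-section search only finds the global maximum if the
    log-likelihood is unimodal on [lo, hi]; this is an assumption here.
    """
    g = (math.sqrt(5.0) - 1.0) / 2.0  # inverse golden ratio, about 0.618
    a, b = lo, hi
    c, d = b - g * (b - a), a + g * (b - a)
    fc, fd = log_likelihood(c, u), log_likelihood(d, u)
    while b - a > tol:
        if fc > fd:  # the maximum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - g * (b - a)
            fc = log_likelihood(c, u)
        else:        # the maximum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + g * (b - a)
            fd = log_likelihood(d, u)
    return (a + b) / 2.0
```

In practice, a library optimizer (e.g., a bounded scalar minimizer applied to the negative log-likelihood) would typically replace the hand-written search; the golden-section version is shown only because it is short and dependency-free.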

3.4 The sampling

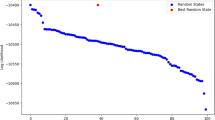

We see that u1 and u2 are drawn from the standard uniform distribution, which is typical for such sampling algorithms. However, these are then just used as arguments of (3), resulting in two identical one-dimensional samples from the distribution of \(C(U_1,U_2)\), where \(U_1\) and \(U_2\) are two independent random variables with the standard uniform distribution. Note that if the random vector \((U_1,U_2)\) were distributed according to C, then the distribution function of \(C(U_1,U_2)\) would be the so-called Kendall function; see Joe (2014, pp. 419–422). So we are witnessing a mixture of approaches related to copula modelling, which, however, do not lead to a correct solution. A sample generated with this code for \(\theta = 2\) is shown on the left-hand side of Fig. 1, and it is clearly not a sample from the Clayton copula \(C_{\theta }\) with parameter \(\theta = 2\).

In the second try, ChatGPT also failed to produce a correct solution; see the sample generated by this code in the center of Fig. 1. Here, the reasoning behind the code is even less clear. These two trivial examples again illustrate that we must be extremely careful about results produced by ChatGPT. On the other hand, this gives us a chance to show that ChatGPT can interactively learn new concepts when fed with the necessary theory, as we demonstrate next.

A standard approach for efficient sampling from Archimedean copulas was introduced in Marshall and Olkin (1988). Let us feed ChatGPT with the related theory and ask it for a correct solution.

Apart from producing a correct solution, which generated the sample at the right-hand side of Fig. 1, this is a clear demonstration of the ability of ChatGPT to learn during the interaction with the user (having in mind that this capacity is just a quite convincing illusion enabled by the prompt engineering technique applied to ChatGPT, as discussed in Sect. 2.2). In contrast to the previous example, where it “only” translated the fed formula for the PDF to a more-or-less similarly looking code, this example shows that ChatGPT is able to understand even relatively complex concepts.

Such an ability makes ChatGPT a feasible tool, even in cases when it faces unknown concepts. This is essential, e.g., when a new theory developed by a researcher is about to be coded. Also, notice that ChatGPT saves us time by mapping our concepts, e.g., the standard exponential and gamma distributions, to existing functions in the considered programming language. In particular, without being explicitly asked, ChatGPT avoided a loop iterating from 1 to n that would generate one sample from the copula per iteration, which is typically slow, and instead directly generated n samples of E1 and E2 from the standard exponential distribution (exprnd(1, 2, n), where 1 denotes the parameter, i.e., the mean, of the exponential distribution). We can thus skip what is probably the most tedious part of coding: browsing the documentation for a particular function in the available libraries.
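The Marshall and Olkin (1988) approach referred to above can be sketched in Python as follows. For the Clayton generator \(\psi (t) = (1+t)^{-1/\theta }\), the frailty V is gamma distributed with shape \(1/\theta \) and scale 1, because \(E[e^{-tV}] = (1+t)^{-1/\theta }\). This sketch, with hypothetical function names, uses only the standard library and therefore loops over the n samples; a vectorized NumPy version would instead draw all variates at once, e.g., with numpy.random.Generator.exponential(size=(n, 2)) and Generator.gamma(1 / theta, size=n), mirroring the exprnd(1, 2, n) call mentioned above. As a sanity check, the sketch also computes the sample Kendall's tau, which for the Clayton copula should be close to \(\theta /(\theta + 2)\).

```python
import random


def clayton_sample(n, theta, rng=None):
    """Draw n pairs from the Clayton copula (3), theta > 0, following the
    Marshall and Olkin (1988) algorithm:
      1. draw the frailty V ~ Gamma(shape=1/theta, scale=1), whose Laplace
         transform is the generator psi(t) = (1 + t)^(-1/theta);
      2. draw E_1, E_2 ~ Exp(1) independently of V;
      3. return U_j = psi(E_j / V), j = 1, 2.
    """
    if rng is None:
        rng = random.Random()
    pairs = []
    for _ in range(n):
        v = rng.gammavariate(1.0 / theta, 1.0)
        e1, e2 = rng.expovariate(1.0), rng.expovariate(1.0)
        pairs.append(((1.0 + e1 / v) ** (-1.0 / theta),
                      (1.0 + e2 / v) ** (-1.0 / theta)))
    return pairs


def kendall_tau(pairs):
    """Sample Kendall's tau in O(n^2) time, without a correction for ties."""
    n, s = len(pairs), 0
    for i in range(n):
        xi, yi = pairs[i]
        for j in range(i + 1, n):
            p = (xi - pairs[j][0]) * (yi - pairs[j][1])
            s += (p > 0) - (p < 0)  # +1 concordant, -1 discordant
    return 2.0 * s / (n * (n - 1))
```

For \(\theta = 2\), the theoretical Kendall's tau is 0.5, so kendall_tau(clayton_sample(1000, 2.0)) should typically fall within a few hundredths of 0.5.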

Finally, let us perform a simple check of all the previously generated functions.

As the previous conversation was within its context, ChatGPT reused the ClaytonCopulaMLE function generated in Sect. 3.3. After executing the simple check code, we got the following error.

We copy-pasted the error message to ChatGPT, i.e., gave it the prompt

where *** was the error message, and got the following response.

ChatGPT not only detected what was wrong, but also provided a corrected solution together with an explanation of the problem. Given that such trivial mistakes occur quite often in daily practice, help that points out the problem or even solves it can save significant time. After executing the simple check with the corrected version of ClaytonCopulaDensity, we got theta_hat = 2.12. So far so good.

3.5 The visualization

The plot generated by the response is depicted on the left-hand side of Fig. 2.

As the \((\theta , {\hat{\theta }})\) pairs lie close to the identity line, this gives evidence that all previously generated code works properly. We would like to highlight that, although ChatGPT was not instructed to do so, the visualization has the following features:

1. The parameters \(\theta \) are linearly spaced in the desired interval. This is a typical choice for many visualizations.

2. It shows the identity as a reference line, the ideal benchmark in which every estimate equals its true value. This suggests that ChatGPT at least partially understands the underlying concepts, i.e., that we are estimating the true value of some parameter.

3. Time-consuming trivia such as axis limits, labels, a title and a legend are also included.

All in all, this is the type of task where the user can substantially benefit from collaborating with tools like ChatGPT.
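The structure of such a visualization can be sketched in Python as follows. This is an illustrative sketch, not the generated code: the sampler and estimator are passed in by the caller with hypothetical signatures, the interval from 1 to 10 is an arbitrary default, num=20 matches the 20 theta values mentioned in Sect. 3.6.1, and matplotlib is assumed to be installed for the plotting part.

```python
import numpy as np


def theta_sweep(sample, estimate, theta_min=1.0, theta_max=10.0, num=20, n=1000):
    """Pair each true theta with its estimate, as in the sample-estimate check.

    sample(theta, n) must return an n x 2 array and estimate(U) a float;
    both are supplied by the caller (hypothetical signatures).
    """
    thetas = np.linspace(theta_min, theta_max, num)  # linearly spaced truths
    theta_hats = np.array([estimate(sample(theta, n)) for theta in thetas])
    return thetas, theta_hats


def plot_sweep(thetas, theta_hats):
    """Scatter the (theta, theta_hat) pairs against the identity line."""
    import matplotlib.pyplot as plt  # only needed when plotting

    fig, ax = plt.subplots()
    ax.plot(thetas, theta_hats, "o", label="estimates")
    ax.plot(thetas, thetas, "k--", label="identity")  # ideal benchmark
    ax.set_xlim(thetas.min(), thetas.max())
    ax.set_xlabel("true theta")
    ax.set_ylabel("estimated theta")
    ax.set_title("Sample-estimate check")
    ax.legend()
    return fig
```

Given any sampler and estimator with these signatures, plot_sweep(*theta_sweep(sampler, estimator)) produces the kind of plot described above: points close to the dashed identity line indicate that sampling and estimation agree.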

However, we note that two earlier versions of our last prompt preceded the one presented. In the first, we omitted the content of the two parentheses with the function names. In that case, the output of ClaytonSample did not match the dimensions of the input of ClaytonCopulaMLE. In the second, we added those parentheses to the prompt, but without “U = ”, and encountered a very similar error. We copy-pasted the error message to ChatGPT, but this time it failed to provide a correct version. Finally, we added “U = ” to the prompt, suspecting that ChatGPT was not aware of the right dimensions of the output, and this way we got a working solution. The main take-away from this example is that obtaining a correct solution is an iterative process requiring careful prompt engineering. The parallel to human-human collaboration is clear: until the software engineer understands exactly what the researcher wants, she/he will probably deliver unsatisfactory solutions.
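One way to guard against this kind of mismatch in code, analogous to spelling out “U = ” in the prompt, is to make the expected layout explicit. The Python sketch below assumes, as a hypothetical contract, that the estimator takes an n × 2 array with one observation \((u_i, v_i)\) per row; the helper names are illustrative and not part of the generated code.

```python
import numpy as np


def as_copula_sample(u, v):
    """Stack two length-n vectors into the n x 2 layout an estimator expects."""
    u = np.asarray(u, dtype=float).ravel()
    v = np.asarray(v, dtype=float).ravel()
    if u.shape != v.shape:
        raise ValueError(f"u has {u.size} values but v has {v.size}")
    return np.column_stack((u, v))  # row i holds the pair (u_i, v_i)


def check_copula_sample(U):
    """Fail early, with a readable message, if U is not an n x 2 array."""
    U = np.asarray(U)
    if U.ndim != 2 or U.shape[1] != 2:
        raise ValueError(f"expected shape (n, 2), got {U.shape}")
    return U
```

For instance, a 2 × n array, the layout that exprnd(1, 2, n) produces in MATLAB, has to be transposed first, as in check_copula_sample(samples.T); passing it untransposed raises an error that names both shapes instead of failing somewhere inside the estimator.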

The response is shown in “Appendix A” and the corresponding plot is depicted on the right-hand side of Fig. 2. When a project is migrated from one programming language to another, this ability of ChatGPT could dramatically reduce the related effort and expense.

In this case, the transpilation to R was not successful, as we encountered a mismatch between the dimensions of the output of ClaytonSample and the input of ClaytonCopulaMLE. Copy-pasting the error message to ChatGPT did not help either. Clearly, we could have been more precise by specifying these dimensions. However, we deliberately underspecified the task in order to illustrate what separates successful solutions from unsuccessful ones.

To resolve the task, we finally used a rather “brute-force” approach: we copy-pasted the whole Python code to ChatGPT and asked it for the same code in R. The response is shown in “Appendix B” and the corresponding plot is depicted at the bottom of Fig. 2. We also obtained a working solution by feeding ChatGPT explicit information about the dimensions of the inputs and outputs, i.e., using a similar approach as before by adding “U = ”. On the one hand, this approach is more elegant than the brute-force one; on the other hand, the brute-force approach gave us a working solution in less time. The choice between them thus depends on the user’s priorities.

In principle, we could now obtain an implementation of our example task in any language supported by ChatGPT. Note that an implementation of sampling from the Clayton copula in Python appeared only relatively recently, as a serious effort presented in Boulin (2022). Here, we obtained an equivalent implementation created entirely by an AI. Clearly, with the abilities of ChatGPT, providing a solution in several programming languages will become much less valuable.

3.6 The parallelization

As the Python and R code for the following tasks was rather long, we present only the MATLAB versions.

3.6.1 CPUs

We obtained a working solution on the first attempt, which generated the plot shown on the left-hand side of Fig. 3.

Fig. 3 Two plots demonstrating the improvement in run-time when adding workers, generated by the code produced by ChatGPT. Left: output of the code executed on a local machine. Right: the same when executed on a server with 32 CPUs; for the latter, we adjusted the code with numWorkers = 1:32 and n = 10000.

Let us highlight several points:

- Because ChatGPT keeps the context of the conversation, we could afford to be extremely concise and use just one word (“that” in the first sentence) to refer to the requested functionality, which is natural and time-saving.

- We just prompted ChatGPT with a very high-level request, Create a demonstration, and got exactly what we wanted. Originally, we followed the traditional way of thinking, which involves being very specific when designing a task, e.g., “create an array for time measurements, measure time for this and that, store it in the array and then plot it in the way that...”. Since such tasks probably occur many times in ChatGPT’s training data, over-specifying is unnecessary, which, again, can save a lot of time.

- Notice the 4 workers in numWorkers = 1:4. Four is a common number of CPU cores in an average office PC, so this number is probably not a pure random guess but rather one of the most frequent values in ChatGPT’s training data.
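For comparison, a Python analogue of such a demonstration could time the same batch of independent tasks for an increasing number of workers. This sketch assumes the standard-library concurrent.futures module as a stand-in for MATLAB's parallel pool; the function name and the use_threads switch are hypothetical.

```python
import time
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def time_per_worker_count(task, inputs, worker_counts, use_threads=False):
    """Wall-clock seconds needed to map `task` over `inputs` per worker count.

    Processes give true CPU parallelism for Python code; in that case `task`
    must be a picklable, module-level function and the calling script should
    be guarded by `if __name__ == "__main__":`. Threads (use_threads=True) are
    handy for quick smoke tests or for tasks that release the GIL.
    """
    executor_cls = ThreadPoolExecutor if use_threads else ProcessPoolExecutor
    inputs = list(inputs)
    timings = []
    for k in worker_counts:
        start = time.perf_counter()
        # Pool start-up and shut-down are included in the measurement.
        with executor_cls(max_workers=k) as pool:
            list(pool.map(task, inputs))  # force all tasks to complete
        timings.append(time.perf_counter() - start)
    return timings
```

A call such as time_per_worker_count(estimate_one, thetas, range(1, 5)), where estimate_one is a hypothetical module-level function running one sample-estimate step for a given theta, would mirror numWorkers = 1:4; the returned list can then be plotted against the worker counts.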

Besides our office PC, we also executed the code on a server with 32 CPUs, which generated the plot on the right-hand side of Fig. 3. As only 20 theta values (thetas) are considered, the run-time does not improve from the 21st worker onwards: with only 20 independent tasks, any additional worker has nothing to compute.
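The saturation at 20 workers can be made concrete with a rough, idealized model: if all 20 tasks cost the same and there is no overhead, \(w\) workers need \(\lceil 20/w \rceil\) rounds, which reaches its minimum of one round at \(w = 20\). The Python sketch below is only this back-of-the-envelope model (the function name is hypothetical); real timings also depend on start-up costs, unequal task costs and scheduling, so the model also predicts plateaus, e.g., two rounds for anywhere from 10 to 19 workers, that need not appear exactly in measurements.

```python
def ideal_rounds(num_tasks, num_workers):
    """Rounds needed to finish equal-cost, independent tasks in parallel.

    Idealized model: no start-up, scheduling or communication overhead, and
    each worker processes one task at a time.
    """
    if num_tasks < 0 or num_workers < 1:
        raise ValueError("need num_tasks >= 0 and num_workers >= 1")
    # Integer ceiling of num_tasks / num_workers.
    return (num_tasks + num_workers - 1) // num_workers


# 20 theta values spread over 1 to 32 workers, as in the server experiment.
rounds = {w: ideal_rounds(20, w) for w in range(1, 33)}
print(rounds[4], rounds[10], rounds[19], rounds[20], rounds[32])  # 5 2 2 1 1
```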

3.6.2 GPUs

We got two pieces of code in response. First, ChatGPT provided an optimization of ClaytonSample for GPUs.

Then, code demonstrating it followed.

After executing the latter, the output was:

As can be observed, the optimized version of ClaytonSample, ClaytonSampleGPUs, is based on the addition of gpuArray, which ensures that the sampling from the standard exponential and gamma distributions, as well as the remaining computations, is performed directly on available GPUs. The outputs U and V are then gathered from the GPUs onto the client by gather. As the output of the demonstration code shows, this roughly halved the run-time of the non-optimized version.
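In Python, a comparable pattern can be sketched with CuPy, whose arrays live in GPU memory and largely mirror the NumPy API. This is an assumption-laden sketch rather than a translation of ClaytonSampleGPUs: it assumes CuPy and a CUDA-capable GPU are available (importing CuPy only checks that it is installed; a missing GPU surfaces as an error on first use), falls back to NumPy on the CPU otherwise, uses the legacy global random state for brevity, and applies the gamma-frailty (Marshall–Olkin) construction of the Clayton copula. The function name is hypothetical.

```python
import numpy as np

try:  # use CuPy arrays on an available NVIDIA GPU ...
    import cupy as xp
except ImportError:  # ... otherwise fall back to NumPy on the CPU
    xp = np


def clayton_sample_device(theta, n):
    """Clayton copula sample (theta > 0) computed on the GPU when CuPy is available.

    Mirrors the gpuArray/gather pattern: draw and transform on the device,
    then copy the n x 2 result back to host memory as a NumPy array.
    """
    v = xp.random.gamma(1.0 / theta, 1.0, size=n)  # gamma frailty V
    e = xp.random.standard_exponential(size=(n, 2))  # E1, E2
    U = (1.0 + e / v[:, None]) ** (-1.0 / theta)
    # cupy.asnumpy plays the role of MATLAB's gather; NumPy needs no copy.
    return xp.asnumpy(U) if xp is not np else U
```

Writing the computation against the array module xp keeps a single code path for CPU and GPU, which is roughly what wrapping inputs in gpuArray achieves in MATLAB.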

Apart from providing a more efficient implementation, ChatGPT also saves us time by sparing us from checking whether each function involved is supported on GPUs. We should also not forget the educational aspect of the matter. As these optimization techniques are rather advanced, an inexperienced user can genuinely learn from these outputs (bearing in mind that they might not always be correct). For example, without this being mentioned in our prompt, the values of theta and n are stored in separate variables before they are used in ClaytonSample and ClaytonSampleGPUs, which is good coding practice.

4 Summary and discussion

During the development of the working code solving our example task, we observed a considerable number of advantages from which we can benefit while pair programming with ChatGPT. In particular:

1. ChatGPT can save time by quickly and concisely summarizing basic facts about the topic of our interest, e.g., formulas or application examples, as illustrated in Sect. 3.1.

2. If ChatGPT is not able to provide a correct solution due to a lack of or incorrect knowledge, we can feed it the correct knowledge and make it apply that knowledge to provide a correct solution. In Sect. 3.2, this approach led ChatGPT to produce a function evaluating the PDF of the copula model in three different programming languages. In Sect. 3.4, working code for sampling from the copula model was generated once ChatGPT was fed the related non-trivial theory. The latter example in particular shows that ChatGPT is able to understand even relatively complex concepts, and that it can be applied even when it faces unknown concepts.

3. ChatGPT saves time by mapping simple concepts, e.g., sampling from the standard exponential and gamma distributions, to existing code (libraries, APIs, or functions) available for a given programming language, as illustrated in Sect. 3.4.

4. The more common the task, the more successful ChatGPT is in generating a correct solution. This is illustrated, e.g., in Sect. 3.3, where we immediately obtained code implementing the maximum likelihood estimator with a simple prompt like write code for the maximum likelihood estimator of that parameter. Other examples are the transpilation of the MATLAB solution to Python in Sect. 3.5 and the optimization of existing code for parallel computing on CPUs and GPUs in Sect. 3.6.

5. ChatGPT can help when an error is thrown after executing the generated code. In Sect. 3.4, we have seen that it not only detected what was wrong, but also provided a corrected solution. Apart from saving the time needed to find and fix the error, this can be crucial particularly for less experienced programmers, who might find the error too complex and eventually give up. ChatGPT helped us with roughly one third of the errors we encountered. Even if not perfect, this is substantially better than no help at all.

6. ChatGPT can help with creating visualizations. In Sect. 3.5, it generated a visualization indicating that all previously generated code is correct. Even though we did not ask for it, the visualization included all the typical trivia like labels, benchmarks, limits, legends, etc.

7. ChatGPT at least partially understands the underlying concepts of what we are doing. Without being asked to do so, it added the plot of the identity to the visualization (see Sect. 3.5), suggesting that it is aware that we are trying to estimate the true value of some parameter.

8. ChatGPT can transpile code from one programming language to another even with high-level prompts like Code it in Python and And in R, as demonstrated in Sect. 3.5. The same section also shows that if the transpilation fails (which happened with the transpilation to R), a quick “brute-force” solution can still accomplish the task.

9. ChatGPT can optimize already generated code, e.g., for parallel computations. By prompting optimize that for parallel computing on CPUs, we immediately got the optimized version of the sample-estimate procedure developed in Sect. 3.5; see Sect. 3.6.1. The same section also shows that a high-level prompt like Create a demonstration of this optimization can result in code showing the impact of the optimization, again including the typically tedious but necessary trivia like labels, etc. Similarly, such an optimization together with a simple demonstration was also generated for computations on GPUs; see Sect. 3.6.2.

10. ChatGPT follows proper coding techniques, so the user can genuinely learn them too. We observed that the produced code is properly commented, indented and modularized, avoids code duplication, etc.

11. ChatGPT helps the user become familiar with the produced code faster. When providing code, ChatGPT typically surrounds it with further information explaining its main features. To save space, we mostly omitted this; an example can be found, e.g., in Sect. 3.4 in connection with the error message thrown by the simple check code.

We have also seen that pair programming with ChatGPT brings several disadvantages, which should be carefully considered. Let us summarize them and discuss ways to mitigate them:

1. ChatGPT in its current version (early 2023) is poor at reasoning; see Sect. 3.1. With two examples, we demonstrated how it responds with contradictory answers to the same question. We particularly highlight the case when it first answered yes and then no to the same question. We also demonstrated how dangerous this could be in quantitative reasoning, where it generated incorrect formulas that looked very similar to correct ones; see the PDF derivation in Sect. 3.2. Considerable effort is currently being devoted to mitigating this problem. One of the most promising examples in the direction of quantitative reasoning is Minerva (Lewkowycz et al. 2022), an LLM based on the PaLM general language models (Chowdhery et al. 2022) with up to 540 billion parameters. This model, released in June 2022, attracted attention by scoring 50% on questions in the MATH data set, a notable advance in state-of-the-art performance on STEM evaluation datasets; see Table 3 therein. Other works develop models fine-tuned for understanding mathematical formulas (Peng et al. 2021), or employ deep neural networks in mathematical tasks like symbolic integration or solving differential equations (Lample and Charton 2019). Another way of mitigating the problem is to exploit current LLMs to the maximum by carefully adjusting the prompt in order to get more reliable answers. This increasingly popular technique, called prompt engineering, involves special techniques to improve reliability when the model fails on a task (OpenAI 2022b), which can significantly enhance the results, e.g., for simple math problems, just by adding “Let’s think step by step.” at the end of the prompt. Note that we tried this technique in the example considering the tail dependence of the survival Clayton copula in Sect. 3.1, however, without success, probably because the underlying concepts go beyond simple mathematics.

2. If ChatGPT lacks the necessary knowledge or possesses incorrect knowledge, it may generate an incorrect solution without any indication to the user. As illustrated in Sect. 3.4, when asked for code for sampling from a Clayton copula model, ChatGPT first generated two routines that resembled proper sampling algorithms but were entirely incorrect. Due to the opacity of current state-of-the-art LLMs, which contain tens or even hundreds of billions of parameters, the correctness of the solution can hardly be guaranteed in all cases. While there may be efforts to develop more explainable LLMs, it is unlikely that the fundamental challenges related to the complexity of language and the massive amounts of data required for training will be completely overcome. Therefore, it is essential for a human expert in the field to verify the output generated by the model in all cases.

3. ChatGPT tends to be less successful in producing accurate solutions for less common tasks; that is, the converse of advantage 4 also applies. This is demonstrated by the probability density function (PDF) of the copula model in Sect. 3.2 and by the sampling algorithm in Sect. 3.4. To address these challenges, we provided ChatGPT with the necessary theoretical knowledge, which resulted in correct solutions, as demonstrated in the aforementioned sections (Sects. 3.2 and 3.4).

4. ChatGPT does not have any persistent memory. Conversations that are too long to fit within its context window may cause the model to forget certain parts of the conversation. This limitation, along with potential mitigation strategies, is discussed in detail in Sect. 2.2.

Besides ChatGPT, there are several other language models capable of generating code from natural language inputs. One notable example is AlphaCode (Li et al. 2022), which achieved an average ranking in the top 54.3% in programming competitions with over 5000 participants on the Codeforces platform. AlphaCode has recently been made publicly available (see Note 5), providing example solutions from the mentioned contests. Another example is OpenAI Codex, mentioned earlier in the introduction. Unlike ChatGPT, these models have been specifically developed for code generation. Consequently, they may generate solutions superior to those produced by ChatGPT. An interesting avenue for future research would therefore be to compare the effectiveness of these models in solving the tasks considered in this study.

On the other hand, ChatGPT might be more convenient than these models for many users, as it allows for interaction during the coding process. Unlike AlphaCode and OpenAI Codex, which generate code snippets from natural language inputs without any further interaction, ChatGPT allows users to provide feedback and adjust the generated code in real time. This interaction can be beneficial for several reasons. First, it allows users to clarify their intent and ensure that the generated code aligns with their goals. For example, as we have seen in Sect. 3.4, which considers sampling from a Clayton copula model, if a user requests a specific functionality and the generated code does not quite match what they had in mind, the user can provide feedback to ChatGPT to adjust the code accordingly. Second, the interaction with ChatGPT can help users learn more about programming and improve their coding skills. By engaging in a dialogue with ChatGPT, users can gain insights into the logic and structure of the code they are generating, and learn how to improve their code in the future. For example, in Sect. 3.6.2, we could genuinely learn how to adapt existing code for parallel computing on GPUs. Finally, the interaction with ChatGPT can help users troubleshoot errors and debug their code more effectively. As we have seen in Sect. 3.4, ChatGPT can recognize common programming mistakes and provide feedback that helps users identify and fix errors in their code. These reasons, together with the fact that ChatGPT can be conveniently accessed through a web portal, led us to choose ChatGPT as our AI pair programming partner.

5 Conclusion

In a human-AI collaboration, we developed code that carries out several tasks: sampling from a copula model, estimating its parameter, and providing visualizations to confirm that the tasks were executed correctly. Additionally, the code was adapted to enable parallelization on both CPUs and GPUs. To illustrate the coding abilities of the AI partner, represented by ChatGPT, all the mentioned tasks were implemented without a single line of code written by the human. In addition to presenting successful solutions for the given tasks, we also provided examples demonstrating how we transformed unsuccessful attempts into successful ones by modifying our prompts for ChatGPT. These efforts resulted in a comprehensive list of advantages and disadvantages of collaborating with AI partners like ChatGPT, suggesting that if we can effectively navigate the typical pitfalls associated with AI collaboration, we can derive substantial benefits from it.

Notes

For details on the exam, see https://www.ncbex.org/exams/ube/.

Available at https://chat.openai.com.

The total count of tokens in a piece of text can be precisely measured by https://platform.openai.com/tokenizer.

We also tested that the code produced by ChatGPT for MATLAB works as well in Octave (version 8.2.0) (https://octave.org/), which is an open-source software mimicking MATLAB.

Available at https://github.com/deepmind/code_contests.

References

Bang Y, Cahyawijaya S, Lee N, Dai W, Su D, Wilie B et al (2023) A multitask, multilingual, multimodal evaluation of ChatGPT on reasoning, hallucination, and interactivity. arXiv:2302.04023

Boulin A (2022) Sample from copula: a coppy module. arXiv:2203.17177

Brown T, Mann B, Ryder N, Subbiah M, Kaplan JD, Dhariwal P et al (2020) Language models are few-shot learners. Adv Neural Inf Process Syst 33:1877–1901

Chen M, Tworek J, Jun H, Yuan Q, Pinto HPdO, Kaplan J et al (2021) Evaluating large language models trained on code. arXiv:2107.03374

Chowdhery A, Narang S, Devlin J, Bosma M, Mishra G, Roberts A et al (2022) PaLM: scaling language modeling with pathways. arXiv:2204.02311

Christiano PF, Leike J, Brown T, Martic M, Legg S, Amodei D (2017) Deep reinforcement learning from human preferences. In: Advances in neural information processing systems, vol 30

Clayton DG (1978) A model for association in bivariate life tables and its application in epidemiological studies of familial tendency in chronic disease incidence. Biometrika 65:141–151. https://doi.org/10.1093/biomet/65.1.141

Clinton D (2022) Pair Programming with the ChatGPT AI—Does GPT-3.5 Understand Bash?—freecodecamp.org. https://www.freecodecamp.org/news/pair-programming-with-the-chatgpt-ai-how-well-does-gpt-3-5-understand-bash/. Accessed 04 Apr 2023

Devlin J, Chang M-W, Lee K, Toutanova K (2018) BERT: pre-training of deep bidirectional transformers for language understanding. arXiv:1810.04805

Frieder S, Pinchetti L, Griffiths R-R, Salvatori T, Lukasiewicz T, Petersen PC, Chevalier A, Berner J (2023) Mathematical capabilities of ChatGPT. arXiv:2301.13867

GitHub (2021) GitHub Copilot. Your AI pair programmer—github.com. https://github.com/features/copilot. Accessed 04 Apr 2023

Haff IH (2013) Parameter estimation for pair-copula constructions. Bernoulli 19(2):462–491. https://doi.org/10.3150/12-BEJ413

Hofert M (2010) Sampling nested Archimedean copulas with applications to CDO pricing. Universität Ulm. https://doi.org/10.18725/OPARU-1787

Hofert M, Mächler M, McNeil AJ (2013) Archimedean copulas in high dimensions: estimators and numerical challenges motivated by financial applications. J Soc Fr Stat 154(1):25–63

Hofert M, Huser R, Prasad A (2018) Hierarchical Archimax copulas. J Multivar Anal 167:195–211. https://doi.org/10.1016/j.jmva.2018.05.001

Huang Y, Zhang B, Pang H, Wang B, Lee KY, Xie J, Jin Y (2022) Spatio-temporal wind speed prediction based on Clayton Copula function with deep learning fusion. Renew Energy 192:526–536. https://doi.org/10.1016/j.renene.2022.04.055

Joe H (2014) Dependence modeling with copulas. CRC Press, Boca Raton

Kalliamvakou E (2022) Research: quantifying GitHub Copilot’s impact on developer productivity and happiness—the GitHub Blog. https://github.blog/2022-09-07-research-quantifying-github-copilots-impact-on-developer-productivity-and-happiness/. Accessed 04 Apr 2023

Katz DM, Bommarito MJ, Gao S, Arredondo P (2023) GPT-4 passes the bar exam. SSRN 4389233

Lample G, Charton F (2019) Deep learning for symbolic mathematics. arXiv:1912.01412

Lewkowycz A, Andreassen A, Dohan D, Dyer E, Michalewski H, Ramasesh V et al (2022) Solving quantitative reasoning problems with language models. arXiv:2206.14858

Li Y, Choi D, Chung J, Kushman N, Schrittwieser J, Leblond R et al (2022) Competition-level code generation with AlphaCode. Science 378(6624):1092–1097. https://doi.org/10.1126/science.abq1158

Liu Y, Ott M, Goyal N, Du J, Joshi M, Chen D, Stoyanov V (2019) RoBERTa: a robustly optimized BERT pretraining approach. arXiv:1907.11692

Maddigan P, Susnjak T (2023) Chat2VIS: generating data visualisations via natural language using ChatGPT, Codex and GPT-3 large language models. arXiv:2302.02094

Marshall AW, Olkin I (1988) Families of multivariate distributions. J Am Stat Assoc 83(403):834–841. https://doi.org/10.1080/01621459.1988.10478671

McNeil A, Frey R, Embrechts P (2015) Quantitative risk management: concepts, techniques and tools. Princeton University Press, Princeton

Michimae H, Emura T (2022) Likelihood inference for copula models based on left-truncated and competing risks data from field studies. Mathematics 10(13):2163. https://doi.org/10.3390/math10132163

Nelsen RB (2006) An introduction to copulas, 2nd edn. Springer, Berlin

OpenAI (2022a) Introducing ChatGPT—openai.com. https://openai.com/blog/chatgpt/. Accessed 04 Apr 2023

OpenAI (2022b) Techniques to improve reliability. https://github.com/openai/openai-cookbook/blob/main/techniques_to_improve_reliability.md. Accessed 04 Apr 2023

OpenAI (2023a) ChatGPT plugins—openai.com. https://openai.com/blog/chatgpt-plugins. Accessed 04 Apr 2023

OpenAI (2023b) GPT-4 technical report. arXiv:2303.08774

OpenAI (2023c) OpenAI API—platform.openai.com. https://platform.openai.com/docs/models/gpt-3-5. Accessed 04 Apr 2023

Ouyang L, Wu J, Jiang X, Almeida D, Wainwright CL, Mishkin P et al (2022) Training language models to follow instructions with human feedback. arXiv:2203.02155

Peng S, Yuan K, Gao L, Tang Z (2021) MathBERT: a pretrained model for mathematical formula understanding. arXiv:2105.00377

Ruby D (2023) ChatGPT statistics for 2023 (New Data +GPT-4 facts). https://www.demandsage.com/chatgpt-statistics/

Schellhase C, Spanhel F (2018) Estimating non-simplified vine copulas using penalized splines. Stat Comput 28:387–409. https://doi.org/10.1007/s11222-017-9737-7

Sklar A (1959) Fonctions de répartition à n dimensions et leurs marges. Publ l’Inst Stat l’Univ Paris 8:229–231

Stiennon N, Ouyang L, Wu J, Ziegler D, Lowe R, Voss C, Christiano PF (2020) Learning to summarize with human feedback. Adv Neural Inf Process Syst 33:3008–3021

Williams L (2001) Integrating pair programming into a software development process. In: Proceedings 14th conference on software engineering education and training. ’In search of a software engineering profession’ (Cat. No. PR01059), pp 27–36. https://doi.org/10.1109/CSEE.2001.913816

Acknowledgements

The author thanks the Czech Science Foundation (GAČR) for financial support for this work through Grant 21-03085S. The author also thanks Martin Holeňa and Marius Hofert for constructive comments and recommendations that helped to improve the readability and quality of the paper.

Funding

Open access publishing supported by the National Technical Library in Prague.


Ethics declarations

Conflict of interest

The author declares that they have no conflict of interest.


Appendices

Appendix A: The solution in Python

An example of redundant code is the line n = data.shape[0]: the variable n is never used in ClaytonCopulaMLE.

Appendix B: The solution in R

Interestingly, even though this code is a direct transpilation of the code from “Appendix A”, the redundant code from the Python version of ClaytonCopulaMLE is not present. This hints at ChatGPT's ability to keep only the relevant code.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Górecki, J. Pair programming with ChatGPT for sampling and estimation of copulas. Comput Stat (2023). https://doi.org/10.1007/s00180-023-01437-2


DOI: https://doi.org/10.1007/s00180-023-01437-2