Abstract

In this article we propose a boosting algorithm for regression with functional explanatory variables and scalar responses. The algorithm uses decision trees constructed with multiple projections as the “base-learners”, which we call “functional multi-index trees”. We establish identifiability conditions for these trees and introduce two algorithms to compute them. We use numerical experiments to investigate the performance of our method and compare it with several linear and nonlinear regression estimators, including recently proposed nonparametric and semiparametric functional additive estimators. Simulation studies show that the proposed method is consistently among the top performers, whereas the performance of existing alternatives can vary substantially across different settings. In a real example, we apply our method to predict electricity demand using price curves and show that our estimator provides better predictions than its competitors, especially when one adjusts for seasonality.
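The general idea in the abstract, boosting with tree base-learners built on projections \(\langle x, \beta_k \rangle\) of a functional covariate, can be illustrated with a simplified sketch. This is not the authors' TFBoost algorithm: it assumes curves observed on a common equally spaced grid, approximates inner products by Riemann sums, uses a fixed user-supplied set of directions `B` instead of estimated ones, and uses depth-one trees (stumps) with squared-error loss. The helpers `inner_products`, `fit_stump` and `l2_boost` are hypothetical names.

```python
import numpy as np


def inner_products(X, B, grid):
    """Riemann-sum approximation of <x_i, beta_k> = integral of x_i(t) beta_k(t) dt.

    X: (n, m) curves on an equally spaced grid; B: (K, m) directions on the same grid.
    """
    dt = grid[1] - grid[0]
    return (X @ B.T) * dt  # shape (n, K)


def fit_stump(Z, r):
    """Depth-one regression tree on the projections Z (n, K), fitted to residuals r."""
    n = len(r)
    s = np.arange(1, n)  # candidate sizes of the left node
    best_sse, best = np.inf, None
    for j in range(Z.shape[1]):
        order = np.argsort(Z[:, j])
        z, rs = Z[order, j], r[order]
        cs, cs2 = np.cumsum(rs)[:-1], np.cumsum(rs ** 2)[:-1]
        tot, tot2 = rs.sum(), (rs ** 2).sum()
        # Within-node sums of squares for every split position, via prefix sums.
        sse = (cs2 - cs ** 2 / s) + ((tot2 - cs2) - (tot - cs) ** 2 / (n - s))
        sse[z[1:] == z[:-1]] = np.inf  # no split between tied projection values
        k = int(np.argmin(sse))
        if sse[k] < best_sse:
            best_sse = sse[k]
            best = (j, 0.5 * (z[k] + z[k + 1]), cs[k] / s[k], (tot - cs[k]) / (n - s[k]))
    if best is None:  # every projection is constant: fall back to the mean
        m = r.mean()
        return lambda Znew: np.full(Znew.shape[0], m)
    j, c, left_val, right_val = best
    return lambda Znew: np.where(Znew[:, j] <= c, left_val, right_val)


def l2_boost(X, y, B, grid, n_iter=100, shrinkage=0.1):
    """Gradient boosting with squared-error loss and stump base-learners."""
    Z = inner_products(X, B, grid)
    f0 = y.mean()
    fitted = np.full(len(y), f0)
    learners = []
    for _ in range(n_iter):
        # Residuals are the negative gradient of (1/2)(y - f)^2.
        h = fit_stump(Z, y - fitted)
        fitted = fitted + shrinkage * h(Z)
        learners.append(h)

    def predict(Xnew):
        Znew = inner_products(Xnew, B, grid)
        return f0 + shrinkage * sum(h(Znew) for h in learners)

    return predict


# Usage on simulated curves (illustrative data only).
rng = np.random.default_rng(0)
grid = np.linspace(0, 1, 50)
X = rng.normal(size=(200, 50)).cumsum(axis=1) / 7  # rough random curves
B = rng.normal(size=(5, 50))
B /= np.sqrt((B ** 2).sum(axis=1, keepdims=True) * (grid[1] - grid[0]))  # unit L2 norm
y = np.sin(inner_products(X, B[:1], grid)[:, 0]) + 0.1 * rng.normal(size=200)
predict = l2_boost(X, y, B, grid)
train_mse = np.mean((predict(X) - y) ** 2)
```

Prefix sums keep each stump search at the cost of one sort per direction. The functional multi-index trees described in the article instead estimate the projection directions and allow deeper trees, which this sketch does not attempt.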

References

Ait-Saïdi A, Ferraty F, Kassa R et al (2008) Cross-validated estimations in the single-functional index model. Statistics 42(6):475–494

Amato U, Antoniadis A, De Feis I (2006) Dimension reduction in functional regression with applications. Comput Stat Data Anal 50(9):2422–2446

Amiri A, Crambes C, Thiam B (2014) Recursive estimation of nonparametric regression with functional covariate. Comput Stat Data Anal 69:154–172

Avery M, Wu Y, Zhang HH et al (2014) RKHS-based functional nonparametric regression for sparse and irregular longitudinal data. Can J Stat 42(2):204–216

Baíllo A, Grané A (2009) Local linear regression for functional predictor and scalar response. J Multivar Anal 100(1):102–111

Barrientos-Marin J, Ferraty F, Vieu P (2010) Locally modelled regression and functional data. J Nonparametr Stat 22(5):617–632

Bates D, Mächler M, Bolker B et al (2015) Fitting linear mixed-effects models using lme4. J Stat Softw 67(1):1–48

Berlinet A, Elamine A, Mas A (2011) Local linear regression for functional data. Ann Inst Stat Math 63(5):1047–1075

Blumenson L (1960) A derivation of n-dimensional spherical coordinates. Am Math Mon 67(1):63–66

Boente G, Salibian-Barrera M (2021) Robust functional principal components for sparse longitudinal data. METRON 79(2):1–30

Breiman L, Friedman J, Olshen R et al (1984) Classification and regression trees, 1st edn. Routledge

Burba F, Ferraty F, Vieu P (2009) K-nearest neighbour method in functional nonparametric regression. J Nonparametr Stat 21(4):453–469

Cardot H, Sarda P (2005) Estimation in generalized linear models for functional data via penalized likelihood. J Multivar Anal 92(1):24–41

Cardot H, Ferraty F, Sarda P (1999) Functional linear model. Stat Probabil Lett 45(1):11–22

Cardot H, Ferraty F, Sarda P (2003) Spline estimators for the functional linear model. Stat Sin 13(3):571–591

Carroll C, Gajardo A, Chen Y et al (2021) fdapace: functional data analysis and empirical dynamics. https://CRAN.R-project.org/package=fdapace, R package version 0.5.6

Chen D, Hall P, Müller HG (2011) Single and multiple index functional regression models with nonparametric link. Ann Stat 39(3):1720–1747

Dou WW, Pollard D, Zhou HH (2012) Estimation in functional regression for general exponential families. Ann Stat 40(5):2421–2451

Fan Y, James GM, Radchenko P (2015) Functional additive regression. Ann Stat 43(5):2296–2325

Febrero-Bande M, González-Manteiga W (2013) Generalized additive models for functional data. Test 22(2):278–292

Ferraty F, Vieu P (2002) The functional nonparametric model and application to spectrometric data. Comput Stat 17(4):545–564

Ferraty F, Vieu P (2006) Nonparametric functional data analysis: theory and practice. Springer, New York, NY

Ferraty F, Vieu P (2009) Additive prediction and boosting for functional data. Comput Stat Data Anal 53(4):1400–1413

Ferraty F, Peuch A, Vieu P (2003) Modèle à indice fonctionnel simple. CR Math 336(12):1025–1028

Ferraty F, Hall P, Vieu P (2010) Most-predictive design points for functional data predictors. Biometrika 97(4):807–824

Ferraty F, Park J, Vieu P (2011) Estimation of a functional single index model. In: Ferraty F (ed) Recent advances in functional data analysis and related topics. Physica-Verlag HD, Heidelberg

Ferraty F, Goia A, Salinelli E et al (2013) Functional projection pursuit regression. Test 22(2):293–320

Ferré L, Yao AF (2003) Functional sliced inverse regression analysis. Statistics 37(6):475–488

Friedman JH (2001) Greedy function approximation: a gradient boosting machine. Ann Stat 29(5):1189–1232

Geenens G (2011) Curse of dimensionality and related issues in nonparametric functional regression. Stat Surv 5:30–43

Goia A, Vieu P (2015) A partitioned single functional index model. Comput Stat 30(3):673–692

Goldsmith J, Scheipl F, Huang L et al (2020) refund: regression with functional data. https://CRAN.R-project.org/package=refund, R package version 0.1-23

Gregorutti B (2016) RFgroove: importance measure and selection for groups of variables with random forests. https://CRAN.R-project.org/package=RFgroove, R package version 1.1

Gregorutti B, Michel B, Saint-Pierre P (2015) Grouped variable importance with random forests and application to multiple functional data analysis. Comput Stat Data Anal 90:15–35

Greven S, Scheipl F (2017) A general framework for functional regression modelling. Stat Model 17(1–2):1–35

Hall P, Horowitz JL (2007) Methodology and convergence rates for functional linear regression. Ann Stat 35(1):70–91

Hastie T, Mallows C (1993) A statistical view of some chemometrics regression tools. Technometrics 35(2):140–143

James GM (2002) Generalized linear models with functional predictors. J R Stat Soc Ser B (Stat Methodol) 64(3):411–432

James GM, Silverman BW (2005) Functional adaptive model estimation. J Am Stat Assoc 100(470):565–576

Jiang CR, Wang JL (2011) Functional single index models for longitudinal data. Ann Stat 39(1):362–388

Kara LZ, Laksaci A, Rachdi M et al (2017) Data-driven KNN estimation in nonparametric functional data analysis. J Multivar Anal 153:176–188

Kudraszow NL, Vieu P (2013) Uniform consistency of KNN regressors for functional variables. Stat Probabil Lett 83(8):1863–1870

Li KC (1991) Sliced inverse regression for dimension reduction. J Am Stat Assoc 86(414):316–327

Lian H, Li G (2014) Series expansion for functional sufficient dimension reduction. J Multivar Anal 124:150–165

Liebl D (2013) Modeling and forecasting electricity spot prices: A functional data perspective. Ann Appl Stat 7(3):1562–1592

Ling N, Vieu P (2018) Nonparametric modelling for functional data: selected survey and tracks for future. Statistics 52(4):934–949

Ling N, Vieu P (2020) On semiparametric regression in functional data analysis. Wiley Interdiscip Rev Comput Stat e1538. https://doi.org/10.1002/wics.1538

Mas A (2012) Lower bound in regression for functional data by representation of small ball probabilities. Electron J Stat 6:1745–1778

McLean MW, Hooker G, Staicu AM et al (2014) Functional generalized additive models. J Comput Graph Stat 23(1):249–269

Möller A, Tutz G, Gertheiss J (2016) Random forests for functional covariates. J Chemom 30(12):715–725

Müller HG, Yao F (2008) Functional additive models. J Am Stat Assoc 103(484):1534–1544

Müller HG, Stadtmüller U (2005) Generalized functional linear models. Ann Stat 33(2):774–805

Müller HG, Wu Y, Yao F (2013) Continuously additive models for nonlinear functional regression. Biometrika 100(3):607–622

Preda C (2007) Regression models for functional data by reproducing kernel Hilbert spaces methods. J Stat Plan Inference 137(3):829–840

Reiss PT, Ogden RT (2007) Functional principal component regression and functional partial least squares. J Am Stat Assoc 102(479):984–996

Shang HL (2016) A Bayesian approach for determining the optimal semi-metric and bandwidth in scalar-on-function quantile regression with unknown error density and dependent functional data. J Multivar Anal 146:95–104

Telgarsky M (2013) Margins, shrinkage, and boosting. Int Conf Mach Learn 28(2):307–315

Therneau T, Atkinson B (2019) rpart: recursive partitioning and regression trees. https://CRAN.R-project.org/package=rpart, R package version 4.1-15

Tutz G, Gertheiss J (2010) Feature extraction in signal regression: a boosting technique for functional data regression. J Comput Graph Stat 19(1):154–174

Wang G, Lin N, Zhang B (2014) Functional K-means inverse regression. Comput Stat Data Anal 70:172–182

Wood SN (2017) Generalized additive models: an introduction with R, 2nd edn. Chapman and Hall/CRC, Boca Raton

Yao F, Müller HG, Wang JL (2005) Functional data analysis for sparse longitudinal data. J Am Stat Assoc 100(470):577–590

Zhang T, Yu B (2005) Boosting with early stopping: convergence and consistency. Ann Stat 33(4):1538–1579

Zhao Y, Ogden RT, Reiss PT (2012) Wavelet-based lasso in functional linear regression. J Comput Graph Stat 21(3):600–617

Acknowledgements

The authors would like to thank Professors James and Ferraty for sharing the code used in their papers (James and Silverman 2005; Ferraty et al. 2013). In addition, we would like to thank two anonymous referees and an Associate Editor for their constructive comments on an earlier version of this work that resulted in a notably improved paper.

Funding

This research was supported by the Natural Sciences and Engineering Research Council of Canada [Discovery Grant RGPIN-2016-04288].

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Appendices

Appendix A

Proof of Theorem 1 in Sect. 2.1.1.

Proof

It is clear that if \(\{\beta_1, \ldots, \beta_K\} = \{(-1)^{l_1}\eta_1, \ldots, (-1)^{l_K}\eta_K\}\) for some \(l_1, \ldots, l_K \in \{0,1\}\), then \(g = {\tilde{g}}\). Therefore, it suffices to show that \(\{\beta_1, \ldots, \beta_K\} = \{(-1)^{l_1}\eta_1, \ldots, (-1)^{l_K}\eta_K\}\) for some \(l_1, \ldots, l_K \in \{0,1\}\). We show that if no choice of \(l_1, \ldots, l_K\) makes the two sets equal, then there exists a set of indices for which (12) is a constant function, which contradicts Condition 2.
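The sign flips \((-1)^{l_j}\) reflect the fact that a projection direction and its negative carry the same information for a tree: the split \(\langle \beta, x \rangle \le c\) selects exactly the same curves as \(\langle -\beta, x \rangle \ge -c\), so directions can be identified at most up to sign. The numerical check below illustrates this under assumed choices (simulated discretized curves, \(\beta(t) \propto \sin(2\pi t)\), \(c = 0.05\), Riemann-sum inner products); `split_left` is a hypothetical helper.

```python
import numpy as np

rng = np.random.default_rng(1)
grid = np.linspace(0, 1, 101)
dt = grid[1] - grid[0]
X = rng.normal(size=(500, grid.size))   # simulated discretized curves x_i(t)
beta = np.sin(2 * np.pi * grid)
beta /= np.sqrt((beta ** 2).sum() * dt)  # unit L2 norm (Riemann sum)
c = 0.05


def split_left(X, direction, threshold, flipped=False):
    """Left-node membership of a split on the projection <x, direction>."""
    z = (X @ direction) * dt  # Riemann-sum approximation of <x, direction>
    return z >= threshold if flipped else z <= threshold


# <beta, x> <= c and <-beta, x> >= -c select the same curves.
same = np.array_equal(split_left(X, beta, c), split_left(X, -beta, -c, flipped=True))
print(same)
```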

For simplicity, we write \({\tilde{\eta}}_j = (-1)^{l_j} \eta_j\). Suppose that \(\{\beta_1, \ldots, \beta_K\} \ne \{{\tilde{\eta}}_1, \ldots, {\tilde{\eta}}_K\}\) for every choice of \(l_1, \ldots, l_K\). We relabel the two sets so that their common elements are aligned: let \(S = \{\beta_1, \ldots, \beta_K\} \cap \{{\tilde{\eta}}_1, \ldots, {\tilde{\eta}}_K\}\), with \(\beta_j = {\tilde{\eta}}_j\) for \(j = 1, \ldots, |S|\) and \(\beta_j \notin \{{\tilde{\eta}}_1, \ldots, {\tilde{\eta}}_K\}\) for \(j = |S|+1, \ldots, K\); by assumption, \(|S| < K\). By Condition 2, there exists an \(x_0\) such that, for \(J = \{|S|+1, \ldots, K\}\), (12) is not a constant function.
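The relabelling step (collect the directions shared by the two sets up to sign, and list them first) can be made concrete for directions represented as unit vectors in \(\mathbb{R}^m\), a finite-dimensional stand-in for \(L^2({\mathcal{I}})\) assumed here for illustration. The helper `match_up_to_sign` is hypothetical; it detects \(\beta_j = \pm\eta_k\) through the equality case of the Cauchy–Schwarz inequality, and the returned signs play the role of \((-1)^{l_k}\). Indices in the code are 0-based.

```python
import numpy as np


def match_up_to_sign(betas, etas, tol=1e-9):
    """Pair rows beta_j with rows eta_k whenever beta_j = +/- eta_k.

    betas, etas: (K, m) arrays with unit-norm rows. Returns a list of
    (j, k, sign) with betas[j] == sign * etas[k]; its length plays the role of |S|.
    """
    G = betas @ etas.T  # G[j, k] = <beta_j, eta_k>
    used, pairs = set(), []
    for j in range(G.shape[0]):
        for k in range(G.shape[1]):
            # For unit vectors, |<beta, eta>| = 1 exactly when beta = +/- eta (Cauchy-Schwarz).
            if k not in used and abs(abs(G[j, k]) - 1.0) < tol:
                pairs.append((j, k, 1 if G[j, k] > 0 else -1))
                used.add(k)
                break
    return pairs


etas = np.eye(4)[:3]  # eta_1, eta_2, eta_3
betas = np.vstack([-etas[1], etas[0], (np.eye(4)[2] + np.eye(4)[3]) / np.sqrt(2)])
pairs = match_up_to_sign(betas, etas)
print(pairs)  # [(0, 1, -1), (1, 0, 1)]: |S| = 2 < K = 3
```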

By (13) and Conditions 1 and 2, for any \(t_1, \ldots, t_K \in (-\delta, \delta)\),

and similarly

By the Cauchy–Schwarz inequality and Condition 1, \((\langle \beta_j, \eta_j \rangle)^2 = 1\) for \(j = 1, \ldots, |S|\) and \((\langle \beta_j, \eta_j \rangle)^2 < 1\) for \(j = |S|+1, \ldots, K\). For any \(t_1, \ldots, t_K \in (-\delta, \delta)\),

where \(I_j = 1\) for \(j = 1, \ldots, |S|\) and \(I_j = 0\) for \(j = |S|+1, \ldots, K\).
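The Cauchy–Schwarz step can be written out as follows, assuming, as its appeal to Condition 1 suggests, that all directions have unit norm. The strict inequality for \(j > |S|\) uses that \(\beta_j \ne \pm\eta_j\) for those indices.

```latex
\[
\langle \beta_j, \eta_j \rangle^2 \le \Vert \beta_j \Vert^2 \, \Vert \eta_j \Vert^2 = 1,
\quad \text{with equality if and only if } \beta_j = \pm \eta_j ,
\]
\[
j \le |S|:\ \ \beta_j = (-1)^{l_j} \eta_j \ \Rightarrow\ \langle \beta_j, \eta_j \rangle^2 = \Vert \eta_j \Vert^4 = 1,
\qquad
j > |S|:\ \ \beta_j \ne \pm \eta_j \ \Rightarrow\ \langle \beta_j, \eta_j \rangle^2 < 1 .
\]
```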

Let \(x = x_0 + t e\), where \(e \in L^2({\mathcal{I}})\) is any function with \(\Vert e \Vert = 1\) and \(t \in (-\delta, \delta)\). As \(e\) and \(t\) vary, \(x\) ranges over the whole ball \(B(x_0, \delta)\). For \(j = 1, \ldots, K\), we define

which is a constant function of \(x\); this contradicts Condition 2. \(\square\)
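The claim in the last paragraph of the proof that \(x = x_0 + te\) fills \(B(x_0, \delta)\) rests on two elementary inclusions:

```latex
\[
\Vert (x_0 + t e) - x_0 \Vert = |t| \, \Vert e \Vert = |t| < \delta
\quad \text{for all } t \in (-\delta, \delta) \text{ and } \Vert e \Vert = 1,
\]
\[
x \in B(x_0, \delta),\ x \ne x_0
\ \Rightarrow\
x = x_0 + t e \ \text{ with } \ t = \Vert x - x_0 \Vert \in (0, \delta),\ \
e = \frac{x - x_0}{\Vert x - x_0 \Vert},
\]
\[
\text{and } x = x_0 \text{ corresponds to } t = 0 .
\]
```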

Appendix B

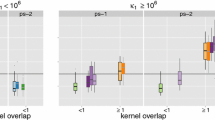

The summary statistics of test MSEs from 100 independent runs of the simulation are provided in Tables 2, 5, 8 and 11, with bold font indicating the two lowest average test errors in each setting. Summary statistics of the tree depths selected by TFBoost are provided in Tables 3, 6, 9 and 12, and those of the early stopping times for TFBoost in Tables 4, 7, 10 and 13.
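Choosing the entries set in bold amounts to ranking the methods by their average test MSE over the runs and keeping the two smallest. A minimal sketch, assuming the per-run MSEs of each method are stored in a dictionary; `two_lowest` and the toy numbers are hypothetical and are not results from the paper.

```python
import numpy as np


def two_lowest(mse_by_method):
    """Names of the two methods with the smallest average test MSE over runs."""
    means = {name: float(np.mean(v)) for name, v in mse_by_method.items()}
    return sorted(means, key=means.get)[:2]


# Made-up test MSEs for three hypothetical methods over three runs.
toy_mse = {"A": [1.0, 1.2, 1.1], "B": [0.9, 0.8, 1.0], "C": [1.5, 1.4, 1.6]}
print(two_lowest(toy_mse))  # ['B', 'A']
```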

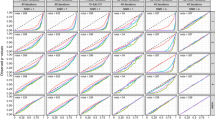

Figures 9, 10, 11 and 12 show the test MSEs of TFBoost, averaged over 100 runs of the experiment, versus the number of iterations. To make these averages well defined, in each run we set the test error at every iteration past the early stopping time equal to the test error at the early stopping time. The figures show that the test errors usually drop quickly within the first 100 iterations.
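The padding rule for averaging test-error curves of different lengths can be sketched as follows, assuming each run's test errors are stored as a list that ends at its early stopping time. `pad_and_average` is a hypothetical helper and the inputs are illustrative numbers only.

```python
import numpy as np


def pad_and_average(curves, n_iter):
    """Average per-run test-error curves that stop at different iterations.

    curves[r] holds the test errors at iterations 1, ..., T_r, where T_r is the
    early stopping time of run r; later iterations reuse the error at T_r.
    """
    padded = np.empty((len(curves), n_iter))
    for r, curve in enumerate(curves):
        curve = np.asarray(curve, dtype=float)[:n_iter]
        padded[r, :len(curve)] = curve
        padded[r, len(curve):] = curve[-1]  # carry the early-stopping error forward
    return padded.mean(axis=0)


# Two toy runs that stop after 3 and 2 iterations.
avg = pad_and_average([[4.0, 2.0, 1.0], [3.0, 2.0]], n_iter=4)
print(avg)  # -> 3.5, 2.0, 1.5, 1.5
```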

Appendix C

We consider another regression function that is linear:

The other specifications of the model remain the same as described in Sect. 3. Table 14 includes the summary statistics of test MSEs from 100 independent runs of the simulation, with bold font indicating the two lowest average test errors in each setting. Tables 15 and 16 include the summary statistics of the tree depths and early stopping times selected by the TFBoost methods.

Appendix D

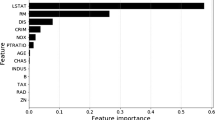

The summary statistics of test MSEs from 100 random partitions of the German electricity data in Sect. 4 are provided in Table 17.
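Summary statistics over repeated random partitions of a data set, like those reported in Table 17, can be produced with a loop of the following kind. This is a sketch under assumptions: the 80/20 train/test proportion, the seed, the helper names `repeated_split_mse` and `fit_mean`, and the toy data are all hypothetical; any fitted model, such as a boosting estimator, can be passed as `fit`.

```python
import numpy as np


def repeated_split_mse(X, y, fit, n_splits=100, train_frac=0.8, seed=0):
    """Test MSEs over n_splits independent random train/test partitions.

    fit(X_train, y_train) must return a function that maps X_test to predictions.
    train_frac = 0.8 is an assumed value, not taken from the paper.
    """
    rng = np.random.default_rng(seed)
    n = len(y)
    n_train = int(round(train_frac * n))
    mses = np.empty(n_splits)
    for s in range(n_splits):
        perm = rng.permutation(n)
        train, test = perm[:n_train], perm[n_train:]
        predict = fit(X[train], y[train])
        mses[s] = np.mean((predict(X[test]) - y[test]) ** 2)
    return mses


def fit_mean(X_train, y_train):
    """Hypothetical baseline: predict the training-set mean of the response."""
    m = y_train.mean()
    return lambda X_test: np.full(len(X_test), m)


rng = np.random.default_rng(42)
X = rng.normal(size=(50, 24))  # toy discretized curves
y = rng.normal(size=50)
mses = repeated_split_mse(X, y, fit_mean)
print(mses.mean(), np.median(mses), mses.std(ddof=1))
```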

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ju, X., Salibián-Barrera, M. Tree-based boosting with functional data. Comput Stat 39, 1587–1620 (2024). https://doi.org/10.1007/s00180-023-01364-2

DOI: https://doi.org/10.1007/s00180-023-01364-2