Abstract

In this paper, we propose an adaptive smoothing spline (AdaSS) estimator for the function-on-function linear regression model, where each value of the response, at any domain point, depends on the full trajectory of the predictor. The AdaSS estimator is obtained by optimizing an objective function with two spatially adaptive penalties, based on initial estimates of the partial derivatives of the regression coefficient function. This allows the proposed estimator to adapt more easily to the true coefficient function over regions of large curvature without being undersmoothed over the remaining part of the domain. A novel evolutionary algorithm is developed ad hoc to select the tuning parameters of the optimization problem. Extensive Monte Carlo simulations have been carried out to compare the AdaSS estimator with competitors that have already appeared in the literature. The results show that our proposal mostly outperforms the competitors in terms of estimation and prediction accuracy. Lastly, these advantages are also illustrated in two real-data benchmark examples. The AdaSS estimator is implemented in the R package adass, openly available online on CRAN.


1 Introduction

Complex datasets are increasingly available due to advancements in technology and computational power and have stimulated significant methodological developments. In this regard, functional data analysis (FDA) addresses the issue of dealing with data that can be modeled as functions defined on a compact domain. FDA is a thriving area of statistics and, for a comprehensive overview, the reader could refer to Ramsay and Silverman (2005); Hsing and Eubank (2015); Horváth and Kokoszka (2012); Kokoszka and Reimherr (2017); Ferraty and Vieu (2006). In particular, the generalization of the classical multivariate regression analysis to the case where the predictor and/or the response have a functional form is referred to as functional regression and is illustrated e.g., in Morris (2015) and Ramsay and Silverman (2005). Most of the functional regression methods have been developed for models with scalar response and functional predictors (scalar-on-function regression) or functional response and scalar predictors (function-on-scalar regression). Some results may be found in Cardot et al. (2003); James (2002); Yao and Müller (2010); Müller and Stadtmüller (2005); Scheipl et al. (2015); Ivanescu et al. (2015); Hullait et al. (2021); Palumbo et al. (2020); Centofanti et al. (2020); Capezza et al. (2022). Models where both the response and the predictor are functions, namely function-on-function (FoF) regression, have been far less studied until now. In this work, we study FoF linear regression models, where the response variable function, at any domain point, depends linearly on the full trajectory of the predictor. That is,

\[ Y_{i}\left( t\right) =\int _{\mathcal {S}}X_{i}\left( s\right) \beta \left( s,t\right) ds+\varepsilon _{i}\left( t\right) ,\quad t\in \mathcal {T}, \tag{1} \]

for \(i=1,\dots ,n\). The pairs \(\left( X_{i}, Y_i\right) \) are independent realizations of the predictor X and the response Y, which are assumed to be smooth random processes with realizations in \(L^2 (\mathcal {S})\) and \(L^2 (\mathcal {T})\), i.e., the Hilbert spaces of square integrable functions defined on the compact sets \(\mathcal {S}\) and \(\mathcal {T}\), respectively. Without loss of generality, X and Y are also assumed to have zero mean functions. The functions \(\varepsilon _{i}\) are zero-mean random errors, independent of \(X_{i}\). The function \(\beta \) is smooth in \(L^2 (\mathcal {S}\times \mathcal {T})\), i.e., the Hilbert space of bivariate square integrable functions defined on the closed rectangle \(\mathcal {S}\times \mathcal {T}\), and is hereinafter referred to as the coefficient function. For each \(t\in \mathcal {T}\), the contribution of \(X_{i}\left( \cdot \right) \) to the conditional value of \(Y_i\left( t\right) \) is generated by \(\beta \left( \cdot ,t\right) \), which works as a continuous set of weights of the predictor evaluations. Different methods to estimate \(\beta \) in (1) have been proposed in the literature. Ramsay and Silverman (2005) assume the estimator of \(\beta \) to lie in a finite-dimensional tensor product space spanned by two basis sets, where regularization is achieved by either truncation or roughness penalties. (The latter is the foundation of the method proposed in this article, as we will see below.) Yao et al. (2005b) assume the estimator of \(\beta \) to be in a tensor product space generated by the eigenfunctions of the covariance functions of the predictor X and the response Y, estimated by using the principal analysis by conditional expectation (PACE) method (Yao et al. 2005a). More recently, Luo and Qi (2017) propose an estimation method for the FoF linear model with multiple functional predictors, based on a finite-dimensional approximation of the mean response obtained by solving a penalized generalized functional eigenvalue problem. Qi and Luo (2018) generalize the method in Luo and Qi (2017) to the high-dimensional case, where the number of covariates is much larger than the sample size (i.e., \(p\gg n\)). In order to improve model flexibility and prediction accuracy, Luo and Qi (2019) consider a FoF regression model with interaction and quadratic effects. A nonlinear FoF additive regression model with multiple functional predictors is proposed by Qi and Luo (2019).

One of the most used estimation methods is the smoothing spline estimator \(\hat{\beta }_{SS}\) introduced by Ramsay and Silverman (2005). It is obtained as the solution of the following optimization problem

\[ \hat{\beta }_{SS}=\mathop {\mathrm {argmin}}\limits _{\alpha \in \mathbb {S}_{k_1,k_2,M_1,M_2}}\Big \{ \sum _{i=1}^{n}\int _{\mathcal {T}}\Big [ Y_{i}\left( t\right) -\int _{\mathcal {S}}X_{i}\left( s\right) \alpha \left( s,t\right) ds\Big ] ^{2}dt+\lambda _{s}\int _{\mathcal {S}}\int _{\mathcal {T}}\left( \mathcal {L}_{s}^{m_{s}}\alpha \left( s,t\right) \right) ^{2}dsdt+\lambda _{t}\int _{\mathcal {S}}\int _{\mathcal {T}}\left( \mathcal {L}_{t}^{m_{t}}\alpha \left( s,t\right) \right) ^{2}dsdt\Big \} , \tag{2} \]

where \(\mathbb {S}_{k_1,k_2,M_1,M_2}\) is the tensor product space generated by the sets of B-splines of orders \(k_1\) and \(k_2\) associated with the non-decreasing sequences of \(M_1+2\) and \(M_2+2\) knots defined on \(\mathcal {S}\) and \(\mathcal {T}\), respectively. The operators \(\mathcal {L}_{s}^{m_{s}}\) and \(\mathcal {L}_{t}^{m_{t}}\), with \(m_s\le k_1-1\) and \(m_t\le k_2-1\), are the \(m_{s}\)th and \(m_{t}\)th order linear differential operators applied to \(\alpha \) with respect to the variables s and t, respectively. The two penalty terms on the right-hand side of (2) measure the roughness of the function \(\alpha \). The positive constants \(\lambda _{s}\) and \(\lambda _{t}\) are generally referred to as roughness parameters and trade off smoothness and goodness of fit of the estimator. The higher their values, the smoother the estimator of the coefficient function.

Note that the roughness parameters \(\lambda _{s}\) and \(\lambda _{t}\) in (2) are constant, that is, they do not depend on s and t. Therefore, the estimator \(\hat{\beta }_{SS}\) may suffer from over- or undersmoothing when, for instance, the true coefficient function \( \beta \) is wiggly or peaked only in some parts of the domain. To solve this problem, we consider two adaptive roughness parameters that are allowed to vary over the domain \(\mathcal {S}\times \mathcal {T}\). In this way, more flexible estimators can be obtained to improve the estimation of the coefficient function.

Methods that use adaptive roughness parameters are very popular and well established in the field of nonparametric regression and are referred to as adaptive methods. In particular, the smoothing spline estimator for nonparametric regression (Wahba 1990; Green and Silverman 1993; Eubank 1999; Gu 2013) has been extended by different authors to take into account the non-uniform smoothness along the domain of the function to be estimated (Ruppert and Carroll 2000; Pintore et al. 2006; Storlie et al. 2010; Wang et al. 2013; Yang and Hong 2017).

In this paper, a spatially adaptive estimator is proposed as the solution of the following minimization problem

\[ \mathop {\mathrm {argmin}}\limits _{\alpha \in \mathbb {S}_{k_1,k_2,M_1,M_2}}\Big \{ \sum _{i=1}^{n}\int _{\mathcal {T}}\Big [ Y_{i}\left( t\right) -\int _{\mathcal {S}}X_{i}\left( s\right) \alpha \left( s,t\right) ds\Big ] ^{2}dt+\int _{\mathcal {S}}\int _{\mathcal {T}}\lambda _{s}\left( s,t\right) \left( \mathcal {L}_{s}^{m_{s}}\alpha \left( s,t\right) \right) ^{2}dsdt+\int _{\mathcal {S}}\int _{\mathcal {T}}\lambda _{t}\left( s,t\right) \left( \mathcal {L}_{t}^{m_{t}}\alpha \left( s,t\right) \right) ^{2}dsdt\Big \} , \tag{3} \]

where the two roughness parameters \(\lambda _{s}\left( s,t\right) \) and \(\lambda _{t}\left( s,t\right) \) are functions that produce different amounts of penalty and thus allow the estimator to spatially adapt, i.e., to accommodate varying degrees of roughness over the domain \(\mathcal {S}\times \mathcal {T}\). Therefore, the model may accommodate the local behavior of \(\beta \) by imposing a heavier penalty in regions where \(\beta \) is smoother and a lighter one in regions where it is rougher. Because \(\lambda _{s}\left( s,t\right) \) and \(\lambda _{t}\left( s,t\right) \) are intrinsically infinite dimensional, their specification could be rather complicated without further assumptions.

The proposed estimator is applied to the FoF linear regression model reported in (1), and is referred to as the adaptive smoothing spline (AdaSS) estimator. It is obtained as the solution of the optimization problem in (3), with \(\lambda _{s}\left( s,t\right) \) and \(\lambda _{t}\left( s,t\right) \) chosen based on an initial estimate of the partial derivatives \(\mathcal {L}_{s}^{m_{s}}\alpha \left( s,t\right) \) and \(\mathcal {L}_{t}^{m_{t}}\alpha \left( s,t\right) \). The rationale behind this choice is to allow the contribution of \(\lambda _{s}\left( s,t\right) \) and \(\lambda _{t}\left( s,t\right) \) to the penalties in (3) to be small over regions where the initial estimate has large \(m_s\)th and \(m_t\)th curvatures (i.e., partial derivatives), respectively. This can be regarded as an extension to the FoF linear regression model of the idea of Storlie et al. (2010) and Abramovich and Steinberg (1996). Moreover, to overcome some limitations of the widely used grid-search method (Bergstra et al. 2011), a new evolutionary algorithm is proposed for the choice of the unknown parameters needed to compute the AdaSS estimator. The method presented in this article is implemented in the R package adass, openly available online on CRAN.

The rest of the paper is organized as follows. In Sect. 2.1, the proposed estimator is presented. Computational issues involved in the AdaSS estimator calculation are discussed in Sects. 2.2 and 2.3. In Sect. 3, by means of a Monte Carlo simulation study, the performance of the proposed estimator is compared with that achieved by competing estimators that have already appeared in the literature. Lastly, two real-data examples are presented in Sect. 4 to illustrate the practical applicability of the proposed estimator. The conclusion is in Sect. 5. Supplementary Material is available online, where the derivation of the approximations of the AdaSS penalty (Supplementary Material 1) and additional simulation studies (Supplementary Material 2) are presented, together with the optimal tuning parameters selected in the simulation study in Sect. 3 (Supplementary Material 3).

2 The adaptive smoothing spline estimator

2.1 The estimator

The AdaSS estimator \(\hat{\beta }_{AdaSS}\) is defined as the solution of the optimization problem in (3) where the two roughness parameters \(\lambda _{s}\left( s,t\right) \) and \(\lambda _{t}\left( s,t\right) \) are as follows

\[ \lambda _{s}\left( s,t\right) =\frac{\lambda ^{AdaSS}_{s}}{\left( |\widehat{\beta _{s}^{m_{s}}}\left( s,t\right) |+\delta _s\right) ^{\gamma _s}},\qquad \lambda _{t}\left( s,t\right) =\frac{\lambda ^{AdaSS}_{t}}{\left( |\widehat{\beta _{t}^{m_{t}}}\left( s,t\right) |+\delta _t\right) ^{\gamma _t}}, \]

that is,

\[ \hat{\beta }_{AdaSS}=\mathop {\mathrm {argmin}}\limits _{\alpha \in \mathbb {S}_{k_1,k_2,M_1,M_2}}\Big \{ \sum _{i=1}^{n}\int _{\mathcal {T}}\Big [ Y_{i}\left( t\right) -\int _{\mathcal {S}}X_{i}\left( s\right) \alpha \left( s,t\right) ds\Big ] ^{2}dt+\lambda ^{AdaSS}_{s}\int _{\mathcal {S}}\int _{\mathcal {T}}\frac{\left( \mathcal {L}_{s}^{m_{s}}\alpha \left( s,t\right) \right) ^{2}}{\left( |\widehat{\beta _{s}^{m_{s}}}\left( s,t\right) |+\delta _s\right) ^{\gamma _s}}dsdt+\lambda ^{AdaSS}_{t}\int _{\mathcal {S}}\int _{\mathcal {T}}\frac{\left( \mathcal {L}_{t}^{m_{t}}\alpha \left( s,t\right) \right) ^{2}}{\left( |\widehat{\beta _{t}^{m_{t}}}\left( s,t\right) |+\delta _t\right) ^{\gamma _t}}dsdt\Big \} , \tag{4} \]

for some tuning parameters \(\lambda ^{AdaSS}_{s},\delta _s,\gamma _s,\lambda ^{AdaSS}_{t},\delta _t,\gamma _t\ge 0\) and \(\widehat{\beta _{s}^{m_{s}}}\) and \(\widehat{\beta _{t}^{m_{t}}}\) initial estimates of \(\mathcal {L}_{s}^{m_{s}}\beta \) and \(\mathcal {L}_{t}^{m_{t}}\beta \), respectively. Note that the two roughness parameters \(\lambda _{s}\) and \(\lambda _{t}\) assume large values over domain regions where \(\widehat{\beta _{s}^{m_{s}}}\) and \(\widehat{\beta _{t}^{m_{t}}}\) are small. Therefore, on the right-hand side of (4), \( (\mathcal {L}_{s}^{m_{s}}\alpha )^2 \) and \(( \mathcal {L}_{t}^{m_{t}}\alpha )^2 \) are weighted through the inverse of \(|\widehat{\beta _{s}^{m_{s}}}|\) and \(|\widehat{\beta _{t}^{m_{t}}}|\). That is, over domain regions where \(\widehat{\beta _{s}^{m_{s}}}\) and \(\widehat{\beta _{t}^{m_{t}}}\) are small, \( (\mathcal {L}_{s}^{m_{s}}\alpha )^2 \) and \(( \mathcal {L}_{t}^{m_{t}}\alpha )^2 \) have larger weights than over those regions where \(\widehat{\beta _{s}^{m_{s}}}\) and \(\widehat{\beta _{t}^{m_{t}}}\) are large. For this reason, the final estimator is able to adapt to the coefficient function over regions of large curvature without undersmoothing it over regions where the \(m_s\)th and \( m_t \)th curvatures are small.

The constants \(\delta _s\) and \(\delta _t\) allow \(\hat{\beta }_{AdaSS}\) not to have \(m_s\)th and \(m_t\)th-order inflection points at the same locations as \(\widehat{\beta _{s}^{m_{s}}}\) and \(\widehat{\beta _{t}^{m_{t}}}\), respectively. Indeed, when \(\delta _s\) and \(\delta _t\) are set to zero, at points where \(\widehat{\beta _{s}^{m_{s}}}=0\) and \(\widehat{\beta _{t}^{m_{t}}}=0\) (\(m_s\)th and \(m_t\)th-order inflection points), the corresponding penalties go to infinity, and, thus, \(\mathcal {L}_{s}^{m_{s}}\alpha \left( s,t\right) \) and \(\mathcal {L}_{t}^{m_{t}}\alpha \left( s,t\right) \) are forced to zero by the minimization problem. Therefore, the presence of \(\delta _s\) and \(\delta _t\) makes \(\hat{\beta }_{AdaSS}\) more robust against the choice of the initial estimate of the linear differential operators applied to \(\beta \) with respect to s and t. Finally, \(\gamma _s\) and \(\gamma _t\) control the amount of weight placed on \(\widehat{\beta _{s}^{m_{s}}}\) and \(\widehat{\beta _{t}^{m_{t}}}\), whereas \(\lambda ^{AdaSS}_{s}\) and \(\lambda ^{AdaSS}_{t}\) are smoothing parameters. The solution of the optimization problem in (4) can be obtained in closed form if the penalty terms are approximated as described in Sect. 2.2. There are several choices for the initial estimates \(\widehat{\beta _{s}^{m_{s}}}\) and \(\widehat{\beta _{t}^{m_{t}}}\). As in Abramovich and Steinberg (1996), we suggest applying the \(m_s\)th and \(m_t\)th order linear differential operators to the smoothing spline estimator \(\hat{\beta }_{SS}\) in (2).
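
The role of the three tuning parameters in each adaptive roughness parameter can be illustrated with a minimal Python sketch. The function name `adaptive_weight` and the three derivative values are hypothetical and chosen only for illustration; the formula is the ratio of the smoothing parameter to the shifted absolute derivative estimate raised to the power \(\gamma \), as described above.

```python
def adaptive_weight(deriv_estimate, lam, delta, gamma):
    """Pointwise roughness parameter: lam / (|deriv_estimate| + delta) ** gamma."""
    return lam / (abs(deriv_estimate) + delta) ** gamma


# Hypothetical initial derivative estimates at three domain points:
# an almost flat region, a moderately curved one, and a sharp peak.
derivs = [0.01, 1.0, 50.0]
weights = [adaptive_weight(d, lam=1.0, delta=0.1, gamma=1.0) for d in derivs]

# The penalty is heaviest where the estimated derivative is small
# and lightest where it is large.
for d, w in zip(derivs, weights):
    print(f"|derivative| = {d:6.2f}  ->  roughness parameter = {w:.4f}")
```

Setting `gamma=0` recovers a constant roughness parameter, that is, the non-adaptive smoothing spline penalty, whereas `delta=0` makes the weight undefined wherever the derivative estimate is exactly zero, which is the degenerate case the constants \(\delta _s\) and \(\delta _t\) are meant to avoid.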

2.2 The derivation of the AdaSS estimator

The minimization in (4) is carried out over \(\alpha \in \mathbb {S}_{k_1,k_2,M_1,M_2}\). This implicitly means that we are approximating \(\beta \) as follows

\[ \beta \left( s,t\right) \approx \varvec{\psi }^{s}\left( s\right) ^{T}\varvec{B}\varvec{\psi }^{t}\left( t\right) =\sum _{i=1}^{M_1+k_1}\sum _{j=1}^{M_2+k_2}b_{ij}\psi ^{s}_{i}\left( s\right) \psi ^{t}_{j}\left( t\right) ,\quad s\in \mathcal {S},t\in \mathcal {T}, \tag{5} \]

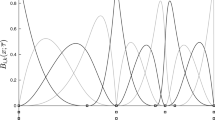

where \(\varvec{B}=\lbrace b_{ij}\rbrace \in \mathbb {R}^{(M_1+k_1)\times (M_2+k_2)}\). The two sets \(\varvec{\psi }^{s}=\left( \psi ^{s}_{1},\dots ,\psi ^{s}_{M_1+k_1}\right) ^{T}\) and \(\varvec{\psi }^{t}=\left( \psi ^{t}_{1},\dots ,\psi ^{t}_{M_2+k_2}\right) ^{T}\) are B-spline functions of orders \(k_1\) and \(k_2\) associated with the non-decreasing knot sequences \(\Delta ^{s}=\lbrace s_{0},s_{1},\dots ,s_{M_1},s_{M_1+1}\rbrace \) and \(\Delta ^{t}=\lbrace t_{0},t_{1},\dots ,t_{M_2},t_{M_2+1}\rbrace \), defined on \(\mathcal {S}=\left[ s_0,s_{M_1+1}\right] \) and \(\mathcal {T}=\left[ t_0,t_{M_2+1}\right] \), respectively, that generate \(\mathbb {S}_{k_1,k_2,M_1,M_2}\). Thus, estimating \(\beta \) in (4) means estimating \(\varvec{B}\). Let \(\alpha \left( s,t\right) =\varvec{\psi }^{s}\left( s\right) ^{T}\varvec{B}_{\alpha }\varvec{\psi }^{t}\left( t\right) \), \(s\in \mathcal {S}, t\in \mathcal {T}\), in \(\mathbb {S}_{k_1,k_2,M_1,M_2}\), where \(\varvec{B}_{\alpha }=\lbrace b_{\alpha ,ij}\rbrace \in \mathbb {R}^{(M_1+k_1)\times (M_2+k_2)}\). Then, the first term on the right-hand side of (4) may be rewritten as (see Ramsay and Silverman (2005), pp. 291-293, for the derivation)

\[ \sum _{i=1}^{n}\int _{\mathcal {T}}\Big [ Y_{i}\left( t\right) -\int _{\mathcal {S}}X_{i}\left( s\right) \alpha \left( s,t\right) ds\Big ] ^{2}dt=\sum _{i=1}^{n}\int _{\mathcal {T}}Y_{i}\left( t\right) ^{2}dt-2{{\,\mathrm{Tr}\,}}\left[ \varvec{X}\varvec{B}_{\alpha }\varvec{Y}^{T}\right] +{{\,\mathrm{Tr}\,}}\left[ \varvec{X}\varvec{B}_{\alpha }\varvec{W}_{t}\varvec{B}_{\alpha }^{T}\varvec{X}^{T}\right] , \tag{6} \]

where \(\varvec{X}=\left( \varvec{X}_{1},\dots ,\varvec{X}_{n}\right) ^{T}\), with \(\varvec{X}_{i}=\int _{\mathcal {S}}X_{i}\left( s\right) \varvec{\psi }^{s}\left( s\right) ds\), \(\varvec{Y}=\left( \varvec{Y}_{1},\dots ,\varvec{Y}_{n}\right) ^{T}\) with \(\varvec{Y}_{i}=\int _{\mathcal {T}}Y_{i}\left( t\right) \varvec{\psi }^{t}\left( t\right) dt\), and \(\varvec{W}_{t}=\int _{\mathcal {T}}\varvec{\psi }^{t}\left( t\right) \varvec{\psi }^{t}\left( t\right) ^{T}dt\). The term \({{\,\mathrm{Tr}\,}}\left[ \varvec{A}\right] \) denotes the trace of a square matrix \(\varvec{A}\).

In order to simplify the integrals in the two penalty terms on the right-hand side of (4), and thus obtain a linear form in \(\varvec{B}_{\alpha }\), we consider, for \( s\in \mathcal {S}\) and \(t\in \mathcal {T}\), the following approximations of \(\widehat{\beta _{s}^{m_{s}}}\) and \(\widehat{\beta _{t}^{m_{t}}}\)

\[ \widehat{\beta _{s}^{m_{s}}}\left( s,t\right) \approx \sum _{i=1}^{L_s+1}\sum _{j=1}^{L_t+1}\widehat{\beta _{s}^{m_{s}}}\left( \tau _{s,i},\tau _{t,j}\right) I_{\left[ \left( \tau _{s,i-1},\tau _{s,i}\right) \times \left( \tau _{t,j-1},\tau _{t,j}\right) \right] }\left( s,t\right) \tag{7} \]

and

\[ \widehat{\beta _{t}^{m_{t}}}\left( s,t\right) \approx \sum _{i=1}^{L_s+1}\sum _{j=1}^{L_t+1}\widehat{\beta _{t}^{m_{t}}}\left( \tau _{s,i},\tau _{t,j}\right) I_{\left[ \left( \tau _{s,i-1},\tau _{s,i}\right) \times \left( \tau _{t,j-1},\tau _{t,j}\right) \right] }\left( s,t\right) , \tag{8} \]

where \(\Theta ^{s}=\lbrace \tau _{s,0},\tau _{s,1},\dots \tau _{s,L_s},\tau _{s,L_s+1}\rbrace \) and \(\Theta ^{t}=\lbrace \tau _{t,0},\tau _{t,1},\dots \tau _{t,L_t},\tau _{t,L_t+1}\rbrace \) are non-decreasing knot sequences with \(\tau _{s,0}=s_0\), \(\tau _{s,L_s+1}=s_{M_1+1}\), \(\tau _{t,0}=t_0\), \(\tau _{t,L_t+1}=t_{M_2+1}\), and \(I_{\left[ a\times b\right] }\left( z_1,z_2\right) =1\) for \(\left( z_1,z_2\right) \in \left[ a\times b\right] \) and zero elsewhere. In (7) and (8), we are assuming that \(\widehat{\beta _{s}^{m_{s}}}\) and \(\widehat{\beta _{t}^{m_{t}}}\) are well approximated by piecewise constant functions, whose values are constant on the rectangles defined by the two knot sequences \(\Theta ^{s}\) and \(\Theta ^{t}\). It can be easily proved, by following Schumaker (2007) (p. 491, Theorem 12.7), that the approximation error in both cases goes to zero as the mesh widths \(\overline{\delta }^{s}=\max _{i}\left( \tau _{s,i+1}-\tau _{s,i}\right) \) and \(\overline{\delta }^{t}=\max _{j}\left( \tau _{t,j+1}-\tau _{t,j}\right) \) go to zero. Therefore, \(\widehat{\beta _{s}^{m_{s}}}\) and \(\widehat{\beta _{t}^{m_{t}}}\) can be approximated to arbitrary accuracy by uniformly increasing the number of knots \(L_s\) and \(L_t\). In this way, the two penalties on the right-hand side of (4) can be rewritten as (Supplementary Material 1)

\[ \lambda ^{AdaSS}_{s}\int _{\mathcal {S}}\int _{\mathcal {T}}\frac{\left( \mathcal {L}_{s}^{m_{s}}\alpha \left( s,t\right) \right) ^{2}}{\left( |\widehat{\beta _{s}^{m_{s}}}\left( s,t\right) |+\delta _s\right) ^{\gamma _s}}dsdt\approx \lambda ^{AdaSS}_{s}\sum _{i=1}^{L_s+1}\sum _{j=1}^{L_t+1}d^{s}_{ij}{{\,\mathrm{Tr}\,}}\left[ \varvec{B}_{\alpha }^{T}\varvec{R}_{s,i}\varvec{B}_{\alpha }\varvec{W}_{t,j}\right] \tag{9} \]

and

\[ \lambda ^{AdaSS}_{t}\int _{\mathcal {S}}\int _{\mathcal {T}}\frac{\left( \mathcal {L}_{t}^{m_{t}}\alpha \left( s,t\right) \right) ^{2}}{\left( |\widehat{\beta _{t}^{m_{t}}}\left( s,t\right) |+\delta _t\right) ^{\gamma _t}}dsdt\approx \lambda ^{AdaSS}_{t}\sum _{i=1}^{L_s+1}\sum _{j=1}^{L_t+1}d^{t}_{ij}{{\,\mathrm{Tr}\,}}\left[ \varvec{B}_{\alpha }^{T}\varvec{W}_{s,i}\varvec{B}_{\alpha }\varvec{R}_{t,j}\right] , \tag{10} \]

where \(\varvec{W}_{s,i}=\int _{\left[ \tau _{s,i-1},\tau _{s,i}\right] }\varvec{\psi }^{s}\left( s\right) \varvec{\psi }^{s}\left( s\right) ^{T}ds\), \(\varvec{W}_{t,j}=\int _{\left[ \tau _{t,j-1},\tau _{t,j}\right] }\varvec{\psi }^{t}\left( t\right) \varvec{\psi }^{t}\left( t\right) ^{T}dt\), \(\varvec{R}_{s,i}=\int _{\left[ \tau _{s,i-1},\tau _{s,i}\right] }\mathcal {L}_{s}^{m_{s}}\left[ \varvec{\psi }^{s}\left( s\right) \right] \mathcal {L}_{s}^{m_{s}}\left[ \varvec{\psi }^{s}\left( s\right) \right] ^{T}ds\) and \(\varvec{R}_{t,j}=\int _{\left[ \tau _{t,j-1},\tau _{t,j}\right] }\mathcal {L}_{t}^{m_{t}}\left[ \varvec{\psi }^{t}\left( t\right) \right] \mathcal {L}_{t}^{m_{t}}\left[ \varvec{\psi }^{t}\left( t\right) \right] ^{T}dt\), and \(d^{s}_{ij}=\Big \{\frac{1}{\left( |\widehat{\beta _{s}^{m_{s}}}\left( \tau _{s,i},\tau _{t,j}\right) |+\delta _s\right) ^{\gamma _s}}\Big \}\) and \(d^{t}_{ij}=\Big \{\frac{1}{\left( |\widehat{\beta _{t}^{m_{t}}}\left( \tau _{s,i},\tau _{t,j}\right) |+\delta _t\right) ^{\gamma _t}}\Big \}\), for \(i=1,\dots ,L_s+1\) and \(j=1,\dots ,L_t+1\).
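
The piecewise constant approximation and the resulting weights \(d_{ij}\) can be sketched in plain Python as follows. This is only an illustration under stated assumptions: the function `g` is a hypothetical stand-in for an initial derivative estimate, the knots are taken equally spaced on the unit square, and the approximation error is checked on a small grid inside each rectangle rather than computed exactly.

```python
import math


def knots(a, b, n_interior):
    """Equally spaced knot sequence tau_0 = a, ..., tau_{L+1} = b."""
    step = (b - a) / (n_interior + 1)
    return [a + i * step for i in range(n_interior + 2)]


def corner_values(g, tau_s, tau_t):
    """Values g(tau_{s,i}, tau_{t,j}) for i = 1..L_s+1 and j = 1..L_t+1."""
    return [[g(si, tj) for tj in tau_t[1:]] for si in tau_s[1:]]


def d_matrix(values, delta, gamma):
    """Weights d_ij = 1 / (|value_ij| + delta) ** gamma."""
    return [[1.0 / (abs(v) + delta) ** gamma for v in row] for row in values]


def max_error(g, tau_s, tau_t, n_check=5):
    """Largest gap between g and its piecewise constant approximation,
    checked on an n_check x n_check grid inside each rectangle."""
    worst = 0.0
    for i in range(1, len(tau_s)):
        for j in range(1, len(tau_t)):
            ref = g(tau_s[i], tau_t[j])
            for a in range(n_check):
                for b in range(n_check):
                    s = tau_s[i - 1] + (tau_s[i] - tau_s[i - 1]) * a / (n_check - 1)
                    t = tau_t[j - 1] + (tau_t[j] - tau_t[j - 1]) * b / (n_check - 1)
                    worst = max(worst, abs(g(s, t) - ref))
    return worst


# Hypothetical derivative estimate: a narrow bump centred at (0.6, 0.6).
def g(s, t):
    return math.exp(-50.0 * ((s - 0.6) ** 2 + (t - 0.6) ** 2))


d = d_matrix(corner_values(g, knots(0.0, 1.0, 4), knots(0.0, 1.0, 4)), delta=0.05, gamma=1.0)
errors = [max_error(g, knots(0.0, 1.0, L), knots(0.0, 1.0, L)) for L in (4, 9, 19, 39)]
print(errors)
```

In this toy setting the checked error shrinks each time the mesh width is halved, in line with the convergence statement above; the matrix `d` has one entry per rectangle, that is, \((L_s+1)\times (L_t+1)\) entries.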

The optimization problem in (4) can be then approximated with the following

\[ \hat{\varvec{B}}_{AdaSS}\approx \mathop {\mathrm {argmin}}\limits _{\varvec{B}_{\alpha }}\Big \{ -2{{\,\mathrm{Tr}\,}}\left[ \varvec{X}\varvec{B}_{\alpha }\varvec{Y}^{T}\right] +{{\,\mathrm{Tr}\,}}\left[ \varvec{X}\varvec{B}_{\alpha }\varvec{W}_{t}\varvec{B}_{\alpha }^{T}\varvec{X}^{T}\right] +\lambda ^{AdaSS}_{s}\sum _{i=1}^{L_s+1}\sum _{j=1}^{L_t+1}d^{s}_{ij}{{\,\mathrm{Tr}\,}}\left[ \varvec{B}_{\alpha }^{T}\varvec{R}_{s,i}\varvec{B}_{\alpha }\varvec{W}_{t,j}\right] +\lambda ^{AdaSS}_{t}\sum _{i=1}^{L_s+1}\sum _{j=1}^{L_t+1}d^{t}_{ij}{{\,\mathrm{Tr}\,}}\left[ \varvec{B}_{\alpha }^{T}\varvec{W}_{s,i}\varvec{B}_{\alpha }\varvec{R}_{t,j}\right] \Big \} , \tag{11} \]

or by vectorization as

\[ \hat{\varvec{b}}_{AdaSS}\approx \mathop {\mathrm {argmin}}\limits _{\varvec{b}_{\alpha }}\Big \{ -2{{\,\mathrm{vec}\,}}\left( \varvec{X}^{T}\varvec{Y}\right) ^{T}\varvec{b}_{\alpha }+\varvec{b}_{\alpha }^{T}\left( \varvec{W}_{t}\otimes \varvec{X}^{T}\varvec{X}\right) \varvec{b}_{\alpha }+\lambda ^{AdaSS}_{s}\varvec{b}_{\alpha }^{T}\Big ( \sum _{i=1}^{L_s+1}\sum _{j=1}^{L_t+1}d^{s}_{ij}\varvec{L}_{wr,ij}\Big ) \varvec{b}_{\alpha }+\lambda ^{AdaSS}_{t}\varvec{b}_{\alpha }^{T}\Big ( \sum _{i=1}^{L_s+1}\sum _{j=1}^{L_t+1}d^{t}_{ij}\varvec{L}_{rw,ij}\Big ) \varvec{b}_{\alpha }\Big \} ,\quad \varvec{b}_{\alpha }={{\,\mathrm{vec}\,}}\left( \varvec{B}_{\alpha }\right) , \tag{12} \]

where \(\hat{\varvec{b}}_{AdaSS}={{\,\mathrm{vec}\,}}\left( \hat{\varvec{B}}_{AdaSS}\right) \), \(\varvec{L}_{rw,ij}=\left( \varvec{R}_{t,j}\otimes \varvec{W}_{s,i}\right) \) and \(\varvec{L}_{wr,ij}=\left( \varvec{W}_{t,j}\otimes \varvec{R}_{s,i}\right) \), for \(i=1,\dots ,L_s+1\) and \(j=1,\dots ,L_t+1\). For a matrix \(\varvec{A}\in \mathbb {R}^{j\times k}\), \({{\,\mathrm{vec}\,}}(\varvec{A})\) indicates the vector of length jk obtained by stacking the columns of \(\varvec{A}\), and \(\otimes \) is the Kronecker product. Then, the minimizer of the optimization problem in (12) has the following expression

\[ \hat{\varvec{b}}_{AdaSS}\approx \varvec{K}^{-1}{{\,\mathrm{vec}\,}}\left( \varvec{X}^{T}\varvec{Y}\right) , \tag{13} \]

where

\[ \varvec{K}=\left( \varvec{W}_{t}\otimes \varvec{X}^{T}\varvec{X}\right) +\lambda ^{AdaSS}_{s}\sum _{i=1}^{L_s+1}\sum _{j=1}^{L_t+1}d^{s}_{ij}\varvec{L}_{wr,ij}+\lambda ^{AdaSS}_{t}\sum _{i=1}^{L_s+1}\sum _{j=1}^{L_t+1}d^{t}_{ij}\varvec{L}_{rw,ij}. \]

The identifiability of \(\beta \), i.e., the uniqueness of \(\hat{\varvec{b}}_{AdaSS}\), comes from the fact that the inverse of \(\varvec{K}\) exists. This is guaranteed with probability tending to one as the sample size increases, under the condition that the covariance operator of X is strictly positive, i.e., its null space is trivial (Prchal and Sarda 2007). In terms of Equation (13), this translates into the condition that \(\varvec{X}^{T}\varvec{X}\) is positive definite. Moreover, Scheipl and Greven (2016) show that identifiability still holds in the case of rank deficiency of \(\left( \varvec{W}_{t}\otimes \varvec{X}^{T}\varvec{X}\right) \) if, and only if, the kernel of the covariate covariance operator does not overlap that of the roughness penalties.
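
The vectorization step relies on the standard identity \({{\,\mathrm{Tr}\,}}[\varvec{B}^{T}\varvec{R}\varvec{B}\varvec{W}]={{\,\mathrm{vec}\,}}(\varvec{B})^{T}(\varvec{W}\otimes \varvec{R}){{\,\mathrm{vec}\,}}(\varvec{B})\) for symmetric \(\varvec{W}\), which is what turns each trace penalty into a quadratic form with matrix \(\varvec{L}_{wr,ij}\). The following Python sketch checks the identity numerically on small integer matrices; the matrices and helper names are hypothetical and serve only as an illustration.

```python
def matmul(A, B):
    """Matrix product of two list-of-lists matrices."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def trace(A):
    return sum(A[i][i] for i in range(len(A)))


def vec(A):
    """Stack the columns of A into a single list (column-wise vectorization)."""
    return [A[i][j] for j in range(len(A[0])) for i in range(len(A))]


def kron(A, B):
    """Kronecker product of A and B."""
    return [[a * b for a in row_a for b in row_b] for row_a in A for row_b in B]


def quad_form(b, M):
    """Quadratic form b^T M b."""
    return sum(b[i] * M[i][j] * b[j] for i in range(len(b)) for j in range(len(b)))


# Small illustrative matrices: B is 3 x 2, R is 3 x 3, W is 2 x 2 (R and W symmetric).
B = [[1, 2], [3, 4], [5, 6]]
R = [[2, 1, 0], [1, 2, 1], [0, 1, 2]]
W = [[3, 1], [1, 2]]

lhs = trace(matmul(matmul(matmul(transpose(B), R), B), W))  # Tr[B^T R B W]
rhs = quad_form(vec(B), kron(W, R))                         # vec(B)^T (W ⊗ R) vec(B)
print(lhs, rhs)
```

Because the objective in (12) is a quadratic function of the vectorized coefficients, setting its gradient to zero yields a linear system in \(\varvec{K}\), which is why a unique solution requires \(\varvec{K}\) to be invertible.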

To obtain \(\hat{\varvec{b}}_{AdaSS}\) in (13), the tuning parameters \(\lambda ^{AdaSS}_{s},\delta _s,\gamma _s,\lambda ^{AdaSS}_{t},\delta _t,\gamma _t\) must be suitably chosen. This issue is discussed in Sect. 2.3.

2.3 The algorithm for the parameter selection

There are some tuning parameters in the optimization problem (12) that must be chosen to obtain the AdaSS estimator. Usually, the tensor product space \(\mathbb {S}_{k_1,k_2,M_1,M_2}\) is chosen with \(k_1=k_2=4\), i.e., cubic B-splines, and equally spaced knot sequences. Although the choice of \(M_1\) and \(M_2\) is not crucial (Cardot et al. 2003), it should allow the final estimator to capture the local behaviour of the coefficient function \(\beta \), that is, \(M_1\) and \(M_2\) should be sufficiently large. The smoothness of the final estimator is controlled by the two penalty terms on the right-hand side of (12).

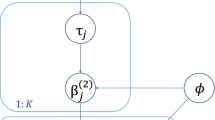

The tuning parameters \(\lambda ^{AdaSS}_{s},\delta _s,\gamma _s,\lambda ^{AdaSS}_{t},\delta _t,\gamma _t\) could be fixed by using the conventional K-fold cross validation (CV) (Hastie et al. 2009), where the combinations of the parameters to be explored are chosen by means of the classic grid search method (Hastie et al. 2009), that is, an exhaustive search through a manually specified subset of the tuning parameter space (Bergstra and Bengio 2012). Although, in our setting, grid search is embarrassingly parallel (Herlihy and Shavit 2011), it is not scalable because it suffers from the curse of dimensionality: the number of combinations to explore grows exponentially with the number of tuning parameters. Although this is beyond the scope of the present work, note that this growth would make the proposed method unsuitable for the FoF linear model with multiple predictors. Then, to facilitate the use of the proposed method by practitioners, in what follows we propose a novel evolutionary algorithm for tuning parameter selection, referred to as the evolutionary algorithm for the adaptive smoothing spline estimator (EAASS), inspired by the population based training (PBT) introduced by Jaderberg et al. (2017). The PBT algorithm was introduced to address the issue of hyperparameter optimization for neural networks. It bridges and extends parallel search methods (e.g., grid search and random search) with sequential optimization methods (e.g., hand tuning and Bayesian optimization). The former run many parallel optimization processes, for different combinations of hyperparameter values, and then choose the combination that shows the best performance. The latter perform several steps of a few parallel optimizations, where, at each step, information coming from the previous step is used to identify the combinations of hyperparameter values to explore. For further details on the PBT algorithm, the reader should refer to Jaderberg et al. (2017), where the authors demonstrate its effectiveness and wide applicability. The pseudo code of the EAASS algorithm is given in Algorithm 1.

The first step is the identification of an initial population \(\mathcal {P}\) of tuning parameter combinations \(p_i\). This can be done, for each combination and each tuning parameter, by randomly selecting a value in a pre-specified range. Then, the set \(\mathcal {V}\) of estimated prediction errors \(v_i\) corresponding to \(\mathcal {P}\) is obtained by means of K-fold CV. We choose a subset \(\mathcal {Q}\) of \(\mathcal {P}\) by following a given exploitation strategy and, thus, the corresponding subset \(\mathcal {Z}\) of \(\mathcal {V}\). A typical exploitation strategy is truncation selection, where the worst \(r\%\), for \(0\le r \le 100\), of \(\mathcal {P}\), in terms of estimated prediction error, is substituted by elements randomly sampled from the remaining \((100-r)\%\) part of the current population (Jaderberg et al. 2017). The following step consists of an exploration strategy, where the tuning parameter combinations in \(\mathcal {Q}\) are substituted by new ones. The simulation study in Sect. 3 and the real-data examples in Sect. 4 are based on a perturbation where each tuning parameter value of the given combination is randomly multiplied by a factor of either 1.2 or 0.8. The exploitation and exploration phases are repeated until a stopping condition is met, e.g., a maximum number of iterations. Other exploration and exploitation strategies can be found in Bäck et al. (1997). At last, the selected tuning parameter combination is obtained as an element of \(\mathcal {P}\) that achieves the lowest estimated prediction error.
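
The exploitation and exploration loop described above can be sketched in Python as follows. This is a simplified illustration and not the implementation in the adass package: the function `eaass_sketch`, the truncation percentage `r=20`, and the toy error function standing in for the K-fold CV error are all assumptions made for the example.

```python
import math
import random


def eaass_sketch(cv_error, ranges, pop_size=24, n_iter=15, r=20, seed=0):
    """Truncation selection plus 1.2/0.8 perturbation on a population of
    tuning parameter combinations; cv_error maps a combination to an error."""
    rng = random.Random(seed)
    # Initial population: each parameter is drawn uniformly in its range.
    population = [{k: rng.uniform(lo, hi) for k, (lo, hi) in ranges.items()}
                  for _ in range(pop_size)]
    errors = [cv_error(p) for p in population]
    n_replace = int(pop_size * r / 100)
    for _ in range(n_iter):
        order = sorted(range(pop_size), key=lambda i: errors[i])
        survivors = order[:pop_size - n_replace]
        worst = order[pop_size - n_replace:]
        for i in worst:
            # Exploitation: replace a poor combination by a random survivor.
            parent = population[rng.choice(survivors)]
            # Exploration: multiply each parameter by 1.2 or 0.8 at random.
            population[i] = {k: v * rng.choice((1.2, 0.8)) for k, v in parent.items()}
            errors[i] = cv_error(population[i])
    best = min(range(pop_size), key=lambda i: errors[i])
    return population[best], errors[best]


# Toy stand-in for the CV error, minimized at lam = 0.01 and gamma = 1.
def toy_cv_error(p):
    return (math.log10(p["lam"]) + 2.0) ** 2 + (p["gamma"] - 1.0) ** 2


ranges = {"lam": (1e-8, 1e3), "gamma": (0.0, 4.0)}
best_params, best_error = eaass_sketch(toy_cv_error, ranges)
print(best_params, best_error)
```

Since the surviving combinations are never modified within an iteration, the best error in the population cannot increase from one iteration to the next. In practice, the calls to `cv_error` within one iteration are independent and could be evaluated in parallel.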

The choice of the initial population \( \mathcal {P} \) may have an impact on the selection of the optimal tuning parameters. Although the perturbation step in the EAASS algorithm may allow escaping from the region covered by \( \mathcal {P} \), it cannot be excluded that, if the optimal tuning parameter combination is too far from this region, the algorithm may terminate before reaching it. This behaviour can be mitigated by either considering an adaptive stopping condition, which depends on the prediction error improvement between two consecutive iterations, or appropriately selecting \( \mathcal {P} \). However, in some cases the former solution may need too many iterations of the EAASS algorithm and, consequently, may turn out to be inefficiently slow. The latter solution is more suitable as a general recommendation and can be implemented by preliminarily running a few iterations of the EAASS algorithm to assess the coherence of the chosen region with the optimal tuning parameter combination. This is in fact the procedure used to select \( \mathcal {P} \) in both the simulation study of Sect. 3 and the real-data examples of Sect. 4. Moreover, as explained in Jaderberg et al. (2017), when the size of \(\mathcal {P}\) is too small, the performance of the PBT algorithm may deteriorate. Being a greedy algorithm, the PBT algorithm may in fact get stuck in local optima for small population sizes. A simple guideline is to select the population size as large as the available computational resources allow, in order to maintain enough diversity and scope for exploration.

The intuition on which the EAASS algorithm is based is quite straightforward. Instead of finding the optimal tuning parameter combination across the whole parameter space, which is clearly infeasible, the combinations in \(\mathcal {P}\) are the only ones to be considered in two phases, i.e., the exploitation and exploration phases. The former decides the combinations in \(\mathcal {P}\) that should be abandoned to focus on more promising ones, whereas the latter proposes new parameter combinations to better explore the tuning parameter space. In this way, tuning parameter combinations with unsatisfactory predictive performance are overlooked. Then, the computational effort is focused on tuning parameter regions that are closer to the tuning parameter combinations with the smallest prediction errors. Moreover, models obtained for each tuning parameter combination in \(\mathcal {P}\) could be estimated in a parallel fashion. In such a way, the EAASS algorithm comprises the features of both the parallel search and sequential methods.

3 Simulation study

In this section, the performance of the AdaSS estimator is assessed on several simulated datasets. In particular, we compare the AdaSS estimator with cubic B-splines and \(m_s=m_t=2\) with five competing methods that represent the state of the art in the estimation of the FoF linear regression model. The first two are those proposed by Ramsay and Silverman (2005). The first one, hereinafter referred to as the SMOOTH estimator, is the smoothing spline estimator described in (2), whereas the second one, hereinafter referred to as the TRU estimator, assumes that the coefficient function is in a finite-dimensional tensor product space generated by two sets of B-splines, with regularization achieved by choosing the space dimension. Then, we also consider the estimators proposed by Yao et al. (2005b) and Canale and Vantini (2016). The former is based on the functional principal component decomposition and is hereinafter referred to as the PCA estimator, while the latter relies on a ridge-type penalization and is hereinafter referred to as the RIDGE estimator. Lastly, as the fifth alternative, we explore the estimator proposed by Luo and Qi (2017), hereinafter referred to as SIGCOMP. For illustrative purposes, we also consider a version of the AdaSS estimator, referred to as AdaSStrue, whose roughness parameters are calculated by assuming that the true coefficient function is known. Obviously, AdaSStrue has no practical use because the true coefficient function is never known. However, it allows one to better understand the influence of the initial estimates of the partial derivatives on the AdaSS performance. All the unknown parameters of the competing methods considered are chosen by means of a 10-fold CV. The tuning parameters of the AdaSS and AdaSStrue estimators are chosen through the EAASS algorithm. The initial population \(\mathcal {P}\) of tuning parameter combinations for the EAASS algorithm is generated by randomly selecting 24 values in pre-specified ranges. Specifically, \(\delta _s\) and \(\delta _t\) are uniformly sampled in \(\left[ 0,0.1\max |\widehat{\beta _{s}^{m_{s}}}\left( s,t\right) |\right] \) and \(\left[ 0,0.1\max |\widehat{\beta _{t}^{m_{t}}}\left( s,t\right) |\right] \), respectively; the constants \(\gamma _s\) and \(\gamma _t\) are uniformly sampled in \(\left[ 0,4\right] \); and the two roughness parameters \(\lambda _{s}\) and \(\lambda _{t}\) are uniformly selected in \(\left[ 10^{-8},10^3\right] \). The set \(\mathcal {V}\) is obtained by using a 10-fold CV, the exploitation and exploration phases are as described in Sect. 2.3, and a maximum number of iterations equal to 15 is set as the stopping condition. For each simulation, a training sample of n observations is generated along with a test set T of size \(N=4000\). They are used to estimate \(\beta \) and to test the predictive performance of the estimated model, respectively. Three different sample sizes are considered, viz., \(n=150,500,1000\). The estimation accuracy of the estimators is assessed by using the integrated squared error (ISE), defined as

\[ \text {ISE}=\frac{1}{A}\int _{\mathcal {S}}\int _{\mathcal {T}}\left( \hat{\beta }\left( s,t\right) -\beta \left( s,t\right) \right) ^{2}dsdt, \tag{14} \]

where A is the measure of \(\mathcal {S}\times \mathcal {T}\). The ISE measures the estimation error of \(\hat{\beta }\) with respect to \(\beta \). The predictive accuracy, in turn, is measured through the prediction mean squared error (PMSE), defined as

\[ \text {PMSE}=\frac{1}{N}\sum _{\left( X,Y\right) \in T}\int _{\mathcal {T}}\Big ( Y\left( t\right) -\int _{\mathcal {S}}X\left( s\right) \hat{\beta }\left( s,t\right) ds\Big ) ^{2}dt. \tag{15} \]

The observations in the test set are centred by subtracting from each observation the corresponding sample mean function estimated in the training set. The observations in the training and test sets are obtained as follows. The covariate \(X_i\) and the errors \(\varepsilon _i\) are generated as linear combinations of cubic B-splines, \(\Psi _{j}^{x}\) and \(\Psi _{j}^{\varepsilon }\), with evenly spaced knots, i.e., \(X_{i}=\sum _{j=1}^{32}x_{ij}\Psi _{j}^{x}\) and \(\varepsilon _{i}=k\sum _{j=1}^{20}e_{ij}\Psi _{j}^{\varepsilon }\). The coefficients \(x_{ij}\), for \(i=1,\dots ,n\) and \(j=1,\dots ,32\), and \(e_{ij}\), for \(i=1,\dots ,n\) and \(j=1,\dots ,20\), are independent realizations of standard normal random variables, and the numbers of basis functions have been randomly chosen between 10 and 50. The constant k is chosen such that the signal-to-noise ratio \(SN\doteq \int _{\mathcal {T}}{{\,\mathrm{Var}\,}}_{X}[{{\,\mathrm{E}\,}}\left( Y_i|X_i\right) ]/\int _{\mathcal {T}}{{\,\mathrm{Var}\,}}\left( \varepsilon _i\right) \) is equal to 4, where \({{\,\mathrm{Var}\,}}_{X}\) is the variance with respect to the random covariate X. Then, given the coefficient function \(\beta \), the response \(Y_i\) is obtained.
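
The choice of the constant k can be illustrated with a short Python sketch. It assumes that the conditional mean curves and the unscaled error curves have already been evaluated on a common equally spaced grid over \(\mathcal {T}\); the curves below are hypothetical Gaussian noise rather than B-spline expansions, and the integrated variances are approximated by Riemann sums of empirical pointwise variances.

```python
import math
import random
from statistics import pvariance


def integrated_variance(curves, grid_step):
    """Riemann approximation of the integral over T of the pointwise variance."""
    n_points = len(curves[0])
    return sum(pvariance([c[j] for c in curves]) for j in range(n_points)) * grid_step


def noise_scale(signal_curves, raw_noise_curves, grid_step, sn=4.0):
    """Constant k such that the signal-to-noise ratio equals sn once the raw
    noise is multiplied by k (the variance of k * e is k ** 2 times that of e)."""
    signal = integrated_variance(signal_curves, grid_step)
    noise = integrated_variance(raw_noise_curves, grid_step)
    return math.sqrt(signal / (sn * noise))


# Hypothetical discretized curves: 200 observations on a grid of 50 points.
rng = random.Random(1)
grid_step = 1.0 / 50
signal = [[rng.gauss(0.0, 2.0) for _ in range(50)] for _ in range(200)]
raw_noise = [[rng.gauss(0.0, 1.0) for _ in range(50)] for _ in range(200)]

k = noise_scale(signal, raw_noise, grid_step)
scaled_noise = [[k * v for v in curve] for curve in raw_noise]
ratio = integrated_variance(signal, grid_step) / integrated_variance(scaled_noise, grid_step)
print(k, ratio)
```

By construction, the empirical signal-to-noise ratio of the scaled errors equals the target value up to floating point error.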

It is worth remarking that the coefficient function \( \beta \) is not identifiable in \(L^2 (\mathcal {S}\times \mathcal {T})\), because \( X_i \) is generated as a finite linear combination of basis functions. This means that the null space of \( K_X \), i.e., the covariance operator of \( X_i \), is nontrivial. In this case, the coefficient function \( \beta \) is identifiable only in the closure of the image of \( K_X \) (Cardot et al. 2003), which is denoted by \( Im(K_X)=\lbrace K_X f :f\in L^2(\mathcal {S})\rbrace \). Thus, to obtain a meaningful measure of the estimation accuracy, the ISE should be computed by considering the projections of the estimates onto \( Im(K_X) \). This allows the performance of the estimation methods to be compared over the identifiable part of the model only. In accordance with James et al. (2009); Zhou et al. (2013); Lin et al. (2017), the space spanned by the 32 cubic B-splines used to generate \( X_i \) is sufficiently rich to reconstruct the true coefficient function \( \beta \) and its estimate \( \hat{\beta } \) for the proposed and competing methods. Hence, the ISE in Equation (14) can still be suitably used.
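
A numerical approximation of the ISE can be sketched in Python as follows. The sketch assumes that the ISE is the integral of the squared difference between estimate and truth divided by the measure A of the domain, that the domain is a rectangle, and that a midpoint rule on a regular grid is accurate enough; the function name `ise` and the two toy surfaces are hypothetical. No projection onto \( Im(K_X) \) is performed.

```python
def ise(beta_hat, beta, s_range=(0.0, 1.0), t_range=(0.0, 1.0), n_grid=200):
    """Midpoint-rule approximation of the integrated squared error,
    normalized by the area of the rectangular domain."""
    (s0, s1), (t0, t1) = s_range, t_range
    hs, ht = (s1 - s0) / n_grid, (t1 - t0) / n_grid
    total = 0.0
    for i in range(n_grid):
        s = s0 + (i + 0.5) * hs
        for j in range(n_grid):
            t = t0 + (j + 0.5) * ht
            total += (beta_hat(s, t) - beta(s, t)) ** 2
    area = (s1 - s0) * (t1 - t0)
    return total * hs * ht / area


# Toy example: the estimate differs from the truth by a constant shift of 0.1.
def beta_true(s, t):
    return s + t


def beta_est(s, t):
    return s + t + 0.1


ise_value = ise(beta_est, beta_true)
print(ise_value)
```

Dividing by the area makes the value comparable across domains of different size, since a constant discrepancy c yields an ISE of \(c^2\) regardless of the measure of \(\mathcal {S}\times \mathcal {T}\).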

In Supplementary Material 2, additional simulation studies are presented to assess the performance of the proposed estimator with respect to different choices of partial derivative estimates (Supplementary Material 2.1) and under repeated application of the AdaSS estimation method (Supplementary Material 2.2).

3.1 Mexican hat function

The Mexican hat function is a linear function with a sharp smoothness variation in the central part of the domain. In this case, the coefficient function \(\beta \) is defined as

where \(\phi \) is the density of a bivariate normal distribution with mean \(\varvec{\mu }=\left( 0.6,0.6\right) ^{T}\) and diagonal covariance matrix \(\varvec{\Sigma }={{\,\mathrm{diag}\,}}\left( 0.001,0.001\right) \). Figure 1 displays the AdaSS and the SMOOTH estimates along with the true coefficient function for a randomly selected simulation run.
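To give a feel for how sharp the peak is, the following sketch evaluates the normal density \(\phi \) with the stated mean and diagonal covariance. It evaluates only \(\phi \), not the full coefficient function, and the function name and default arguments are illustrative assumptions.

```python
import math


def phi(s, t, mean=(0.6, 0.6), variances=(0.001, 0.001)):
    """Bivariate normal density with a diagonal covariance matrix."""
    zs = (s - mean[0]) ** 2 / variances[0]
    zt = (t - mean[1]) ** 2 / variances[1]
    norm = 2.0 * math.pi * math.sqrt(variances[0] * variances[1])
    return math.exp(-0.5 * (zs + zt)) / norm


peak = phi(0.6, 0.6)      # density at the mean, the highest point of the bump
far = phi(0.1, 0.1)       # density far from the mean, numerically negligible
```

With variances of 0.001, the standard deviation in each direction is about 0.032, so the bump is confined to a small neighbourhood of \((0.6,0.6)\) within the unit square.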

The proposed estimator tends to be smoother in the flat region and captures the peak in the coefficient function (at \(t\approx 0.6\)) better than the SMOOTH estimate. The latter, to perform reasonably well along the whole domain, selects tuning parameters that may not be sufficiently small over the peaky region, or not sufficiently large over the flat region. This is also confirmed by the graphical appeal of the AdaSS estimate with respect to those of the competitors. In Fig. 2 and at the top of Table 1, the values of ISE and PMSE achieved by the AdaSS, AdaSStrue, and competitor estimators are shown as functions of the sample size n. Leaving aside the AdaSStrue estimator, the AdaSS estimator yields the lowest ISE for all sample sizes and thus has the lowest estimation error. In terms of PMSE, it is the best one for \(n=150\), whereas for \(n=500,1000\) it performs comparably with the SIGCOMP and PCA estimators. The performance of the AdaSStrue and AdaSS estimators is very similar in terms of ISE, whereas the AdaSStrue estimator shows a lower PMSE. However, as expected, the effect of the knowledge of the true coefficient function tends to disappear as n increases, because the partial derivative estimates become more accurate.

3.2 Dampened harmonic motion function

This simulation scenario considers as coefficient function \(\beta \) the dampened harmonic motion function, also known as the spring function in the engineering literature. It is characterized by a sinusoidal behaviour with exponentially decreasing amplitude, that is

Figure 3 displays the AdaSS and the SMOOTH estimates along with the true coefficient function. Also in this scenario, the AdaSS estimate is smoother than the SMOOTH estimate in regions of small curvature, but it is more flexible where the coefficient function is more wiggly. Intuitively, the SMOOTH estimator trades off its smoothness over the whole domain: it over-smooths at small values of s and t and under-smooths elsewhere.

Fig. 4 (a) The integrated squared error (ISE) and (b) the prediction mean squared error (PMSE) \(\pm \) standard error for the TRU, SMOOTH, PCA, SIGCOMP, AdaSS and AdaSStrue estimators in the case of the dampened harmonic motion function. The RIDGE estimator is not shown because its performance differs too much from that of the other estimators

In Fig. 4 and in the second tier of Table 1, the values of ISE and PMSE for the AdaSS, AdaSStrue, and competitor estimators are shown as functions of the sample size n. Also in this case, leaving aside the AdaSStrue estimator, the AdaSS estimator achieves the lowest ISE for all sample sizes and, thus, the lowest estimation error. In terms of PMSE, the proposed estimator is not always the best choice, but it differs only slightly from the best methods, viz., the PCA and SIGCOMP estimators. In this case, the performance of the AdaSS and AdaSStrue estimators is very similar for \( n=500,1000 \), whereas, for \( n=150 \), the AdaSStrue estimator performs slightly better, especially in terms of PMSE.

3.3 Rapid change function

In this scenario, the true coefficient function \(\beta \) is obtained by the rapid change function, that is

Figure 5 shows the AdaSS and SMOOTH estimates when \(\beta \) is the rapid change function. The SMOOTH estimate is rougher than the AdaSS one in regions that are far from the rapid change point. On the contrary, the AdaSS estimate is smoother in the flat region and as accurate as the SMOOTH estimate near the rapid change point.

In Fig. 6 and the third tier of Table 1, values of the ISE and PMSE for the AdaSS, AdaSStrue, and competitor estimators are shown for sample sizes \(n=150,500,1000\). The AdaSS estimator outperforms the competitors, both in terms of ISE and PMSE. The performance of the AdaSStrue estimator is slightly better than that of the AdaSS one and this difference in performance reduces as n increases.

4 Real-data examples

In this section, two real datasets, namely Swedish mortality and ship CO2 emission datasets, are considered to assess the performance of the AdaSS estimator in real applications.

4.1 Swedish mortality dataset

The Swedish mortality dataset (available from the Human Mortality Database, http://mortality.org) is very well known in the functional literature as a benchmark dataset. It has been analysed by Chiou and Müller (2009) and Ramsay et al. (2009), among others. In this analysis, we consider the log-hazard rate functions of the Swedish female mortality data for year-of-birth cohorts that refer to females born in the years 1751-1935 with ages 0-80. The value of a log-hazard rate function at a given age is the natural logarithm of the ratio of the number of females who died at that age to the number of females alive at the same age. The 184 considered log-hazard rate functions (Chiou and Müller 2009) are shown in Fig. 7. Without loss of generality, they have been normalized to the domain \(\left[ 0,1\right] \).

The functions from 1751 (1752) to 1934 (1935) are considered as observations \(X_i\) (\(Y_i\)) of the predictor (response) in (1), \( i=1,\dots ,184 \). In this way, the relationship between two consecutive log-hazard rate functions becomes the focus of the analysis. To assess the predictive performance of the methods considered in the simulation study (Sect. 3), the following procedure is repeated 100 times: 166 observations out of 184 are randomly chosen as the training set to fit the model, and the 18 remaining ones are used as the test set to calculate the PMSE. The averages and standard deviations of the PMSEs are shown in the first line of Table 2. The AdaSS estimator outperforms all the competitors. Only the RIDGE estimator has comparable predictive performance.
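The repeated random-split scheme described above can be sketched as follows. This is only an illustration of the resampling loop: `repeated_holdout`, `toy_score`, the seed, and the scalar toy data are hypothetical stand-ins, and the score computed here is an ordinary mean squared error rather than the functional PMSE defined in the paper.

```python
import random
import statistics


def repeated_holdout(n_obs, n_train, n_rep, fit_and_score, seed=0):
    """Mean and standard deviation of a test-set score over random splits."""
    rng = random.Random(seed)
    scores = []
    for _ in range(n_rep):
        idx = list(range(n_obs))
        rng.shuffle(idx)                       # random permutation of the observations
        train, test = idx[:n_train], idx[n_train:]
        scores.append(fit_and_score(train, test))
    return statistics.mean(scores), statistics.stdev(scores)


# Toy stand-in for a functional model: scalar responses predicted by the training mean.
y = [float(i) for i in range(184)]


def toy_score(train, test):
    """Fit on the training indices, return the mean squared error on the test indices."""
    centre = statistics.mean(y[i] for i in train)
    return statistics.mean((y[i] - centre) ** 2 for i in test)


# 166 training and 18 test observations, repeated 100 times, as in the text.
mean_pmse, sd_pmse = repeated_holdout(184, 166, 100, toy_score)
```

To mimic the actual analysis, `fit_and_score` would fit a function-on-function estimator on the training curves and return its PMSE on the held-out curves; the same loop with 40 training observations out of 44 covers the second dataset.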

Figure 8 shows the AdaSS estimate along with the RIDGE estimate, which is the best competitor in terms of PMSE. The proposed estimator performs slightly better than this competitor and, at the same time, is much more interpretable. In fact, it is much smoother where the coefficient function seems to be mostly flat and successfully captures the pattern of \(\beta \) in the peak region. On the contrary, the RIDGE estimate is particularly rough over regions of low curvature. The optimal tuning parameters selected for the AdaSS estimate depicted in Fig. 8 are \(\lambda ^{AdaSS}_{s}=10^{2.16},\lambda ^{AdaSS}_{t}=10^{0},\tilde{\delta }_s=0.003,\tilde{\delta }_t=0.052,\gamma _s=2.46,\gamma _t=3.60\), where \( \tilde{\delta }_s=\delta _s/\max |\widehat{\beta _{s}^{m_{s}}}\left( s,t\right) | \) and \( \tilde{\delta }_t=\delta _t/\max |\widehat{\beta _{t}^{m_{t}}}\left( s,t\right) | \).
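The normalization of \(\delta _s\) and \(\delta _t\) can be inverted as in the sketch below, which recovers \(\delta \) from its normalized value and the maximum absolute value of an initial partial derivative estimate evaluated on a grid. The function name and the small grid of derivative values are hypothetical and serve only to illustrate the arithmetic.

```python
def denormalise_delta(delta_tilde, derivative_estimate):
    """Recover delta from its normalised value: delta = delta_tilde * max|derivative|."""
    max_abs = max(abs(v) for row in derivative_estimate for v in row)
    return delta_tilde * max_abs


# Hypothetical 2 x 3 grid of initial partial derivative estimates (not real results).
toy_derivative = [[0.5, -4.0, 1.0],
                  [2.0, 0.0, -1.5]]

delta_s = denormalise_delta(0.003, toy_derivative)   # uses the reported normalised value
lambda_s = 10 ** 2.16                                 # smoothing parameter given on the log10 scale
```

Reporting the normalized quantities makes the thresholds comparable across datasets whose derivative estimates live on different scales.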

4.2 Ship CO2 emission dataset

The ship CO2 emission dataset has been thoroughly studied in recent years (Lepore et al. 2018; Reis et al. 2020; Capezza et al. 2020; Centofanti et al. 2021). It was provided by the shipping company Grimaldi Group to address the issue of monitoring the fuel consumption or CO2 emissions of a Ro-Pax ship that sails along a route in the Mediterranean Sea. In particular, we focus on the relation between the fuel consumption per hour (FCPH), assumed as the response, and the speed over ground (SOG), assumed as the predictor. The observations considered were recorded from 2015 to 2017. Figure 9 shows the 44 available observations of SOG and FCPH (Centofanti et al. 2021).

Similarly to the Swedish mortality dataset, the prediction performance of the methods is assessed by randomly choosing 40 out of 44 observations to fit the model and by using the 4 remaining observations to compute the PMSE. This is repeated 100 times. The averages and standard deviations of the PMSEs are listed in the second line of Table 2. The AdaSS estimator is, in this case, outperformed by the RIDGE estimator, which achieves the lowest PMSE. However, as shown in Fig. 10, the AdaSS estimator is able both to estimate the coefficient function well over peaky regions, as the RIDGE estimator does, and to adapt smoothly over the remaining part of the domain. The PCA estimator also achieves a smaller PMSE than the proposed estimator. However, the PCA estimate is even rougher than the RIDGE one and, thus, is not shown in Fig. 10. The optimal tuning parameters selected for the AdaSS estimate depicted in Fig. 10 are \(\lambda ^{AdaSS}_{s}=10^{1.93},\lambda ^{AdaSS}_{t}=10^{0.49},\tilde{\delta }_s=0.06,\tilde{\delta }_t=0.01,\gamma _s=2.22,\gamma _t=2.22\).

5 Conclusion

In this article, the AdaSS estimator is proposed for the function-on-function linear regression model where each value of the response, at any domain point, depends linearly on the full trajectory of the predictor. The introduction of two adaptive smoothing penalties, based on initial estimates of the partial derivatives of the coefficient function, allows the proposed estimator to better adapt to the coefficient function. In a simulation study, the proposed estimator has shown favourable performance with respect to the five competitors that have already appeared in the literature, in terms of both estimation and prediction error. The adaptive feature of the AdaSS estimator is advantageous for the interpretability of the results with respect to the competitors. Moreover, its performance has been shown to be competitive also with respect to the case where the true coefficient function is known. Finally, the proposed estimator has been applied to the two real-data examples considered, viz., the Swedish mortality and ship CO2 emission datasets. However, some challenges are still open. Even though the proposed evolutionary algorithm has been shown to perform particularly well in both the simulation study and the real-data examples, the choice of the tuning parameters remains a critical issue because of the curse of dimensionality. This could be even more problematic when extending the AdaSS estimator to the FoF regression model with multiple predictors.

Supplementary information The online version contains supplementary material available at https://doi.org/10.1007/s00180-022-01223-6. It contains the derivation of the approximation of the AdaSS penalty terms (Supplementary Material 1), additional simulation studies that assess the performance of the proposed estimator for different choices of partial derivative estimates (Supplementary Material 2.1) and under repeated application of the AdaSS estimation method (Supplementary Material 2.2), and the optimal tuning parameters selected in the simulation study of Section 3 (Supplementary Material 3).

Change history

05 August 2022

Missing Open Access funding information has been added in the Funding Note.

References

Abramovich F, Steinberg DM (1996) Improved inference in nonparametric regression using lk-smoothing splines. J Stat Plann Inference 49(3):327–341

Bäck T, Fogel DB, Michalewicz Z (1997) Handbook of evolutionary computation. Chapman and Hall/CRC, UK

Bergstra J, Bengio Y (2012) Random search for hyper-parameter optimization. J Mach Learn Res 13(10):281–305

Bergstra J, Bardenet R, Bengio Y, Kégl B (2011) Algorithms for hyper-parameter optimization. Adv Neural Inf Process Syst, Curran Assoc Inc 24:871

Canale A, Vantini S (2016) Constrained functional time series: applications to the Italian gas market. Int J Forecast 32(4):1340–1351

Capezza C, Lepore A, Menafoglio A, Palumbo B, Vantini S (2020) Control charts for monitoring ship operating conditions and \(\text{ CO}_{2}\) emissions based on scalar-on-function regression. Appl Stoch Models Business Ind 36(3):477–500

Capezza C, Centofanti F, Lepore A, Menafoglio A, Palumbo B, Vantini S (2022) Functional regression control chart for monitoring ship \(\text{ CO}_{2}\) emissions. Quality Reliability Eng Int 38(3):1519–1537

Cardot H, Ferraty F, Sarda P (2003) Spline estimators for the functional linear model. Stat Sinica 13:571–591

Centofanti F, Fontana M, Lepore A, Vantini S (2020) Smooth lasso estimator for the function-on-function linear regression model. http://arxiv.org/abs/2007.00529

Centofanti F, Lepore A, Menafoglio A, Palumbo B, Vantini S (2021) Functional regression control chart. Technometrics 63(3):281–294

Chiou JM, Müller HG (2009) Modeling hazard rates as functional data for the analysis of cohort lifetables and mortality forecasting. J Am Stat Assoc 104(486):572–585

Eubank RL (1999) Nonparametric regression and spline smoothing. Chapman and Hall/CRC, UK

Ferraty F, Vieu P (2006) Nonparametric functional data analysis: theory and practice. Springer, New York

Green PJ, Silverman BW (1993) Nonparametric regression and generalized linear models: a roughness penalty approach. Chapman and Hall/CRC, UK

Gu C (2013) Smoothing spline ANOVA models. Springer, New York

Hastie T, Tibshirani R, Friedman JH (2009) The elements of statistical learning: data mining, inference, and prediction. Springer, New York

Herlihy M, Shavit N (2011) The art of multiprocessor programming. Morgan Kaufmann, USA

Horváth L, Kokoszka P (2012) Inference for functional data with applications. Springer, New York

Hsing T, Eubank R (2015) Theoretical foundations of functional data analysis, with an introduction to linear operators. Wiley, New Jersey

Hullait H, Leslie DS, Pavlidis NG, King S (2021) Robust function-on-function regression. Technometrics 63(3):396–409

Ivanescu AE, Staicu AM, Scheipl F, Greven S (2015) Penalized function-on-function regression. Comput Stat 30(2):539–568

Jaderberg M, Dalibard V, Osindero S, Czarnecki WM, Donahue J, Razavi A, Vinyals O, Green T, Dunning I, Simonyan K (2017) Population based training of neural networks. http://arxiv.org/abs/1711.09846

James GM (2002) Generalized linear models with functional predictors. J R Stat Soc: Ser B (Stat Methodol) 64(3):411–432

James GM, Wang J, Zhu J et al (2009) Functional linear regression that’s interpretable. Annal Stat 37(5A):2083–2108

Kokoszka P, Reimherr M (2017) Introduction to functional data analysis. Chapman and Hall/CRC, UK

Lepore A, Palumbo B, Capezza C (2018) Analysis of profiles for monitoring of modern ship performance via partial least squares methods. Quality Reliability Eng Int 34(7):1424–1436

Lin Z, Cao J, Wang L, Wang H (2017) Locally sparse estimator for functional linear regression models. J Comput Gr Stat 26(2):306–318

Luo R, Qi X (2017) Function-on-function linear regression by signal compression. J Am Stat Assoc 112(518):690–705

Luo R, Qi X (2019) Interaction model and model selection for function-on-function regression. J Comput Gr Stat 28(2):1–14

Morris JS (2015) Functional regression. Ann Rev Stat Appl 2:321–359

Müller HG, Stadtmüller U (2005) Generalized functional linear models. Annal Stat 33(2):774–805

Palumbo B, Centofanti F, Del Re F (2020) Function-on-function regression for assessing production quality in industrial manufacturing. Quality Reliability Eng Int 36(8):2738–2753

Pintore A, Speckman P, Holmes CC (2006) Spatially adaptive smoothing splines. Biometrika 93(1):113–125

Prchal L, Sarda P (2007) Spline estimator for the functional linear regression with functional response. Preprint

Qi X, Luo R (2018) Function-on-function regression with thousands of predictive curves. J Multiv Anal 163:51–66

Qi X, Luo R (2019) Nonlinear function on function additive model with multiple predictor curves. Stat Sinica 29:719–739

Ramsay J, Silverman B (2005) Functional data analysis. Springer, New York

Ramsay JO, Hooker G, Graves S (2009) Functional data analysis with R and MATLAB. Springer, New York

Reis MS, Rendall R, Palumbo B, Lepore A, Capezza C (2020) Predicting ships’ \(\text{ CO}_{2}\) emissions using feature-oriented methods. Appl Stoch Models Business Ind 36(1):110–123

Ruppert D, Carroll RJ (2000) Theory & methods: spatially-adaptive penalties for spline fitting. Australian & New Zealand J Stat 42(2):205–223

Scheipl F, Greven S (2016) Identifiability in penalized function-on-function regression models. Electron J Stat 10(1):495–526

Scheipl F, Staicu AM, Greven S (2015) Functional additive mixed models. J Comput Gr Stat 24(2):477–501

Schumaker L (2007) Spline functions: basic theory. Cambridge University Press, Cambridge

Storlie CB, Bondell HD, Reich BJ (2010) A locally adaptive penalty for estimation of functions with varying roughness. J Comput Gr Stat 19(3):569–589

Wahba G (1990) Spline models for observational data. Soc Ind Appl Math 2:61

Wang X, Du P, Shen J (2013) Smoothing splines with varying smoothing parameter. Biometrika 100(4):955–970

Yang L, Hong Y (2017) Adaptive penalized splines for data smoothing. Comput Stat Data Anal 108:70–83

Yao F, Müller HG (2010) Functional quadratic regression. Biometrika 97(1):49–64

Yao F, Müller HG, Wang JL (2005) Functional data analysis for sparse longitudinal data. J Am Stat Assoc 100(470):577–590

Yao F, Müller HG, Wang JL (2005) Functional linear regression analysis for longitudinal data. Annal Stat 33(6):2873–2903

Zhou J, Wang NY, Wang N (2013) Functional linear model with zero-value coefficient function at sub-regions. Stat Sinica 23(1):25

Acknowledgements

The authors are deeply grateful to the editor, the associate editor, and two referees for their reviews, which led to significant improvements of the manuscript.

Funding

Open access funding provided by Università degli Studi di Napoli Federico II within the CRUI-CARE Agreement.

Ethics declarations

Data Availability Statement

The code used in the simulation study is included in the supplementary information. The Swedish mortality dataset is available from the Human Mortality Database (http://mortality.org). For confidentiality reasons, the ship CO2 emission dataset is available upon request.

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Centofanti, F., Lepore, A., Menafoglio, A. et al. Adaptive smoothing spline estimator for the function-on-function linear regression model. Comput Stat 38, 191–216 (2023). https://doi.org/10.1007/s00180-022-01223-6

DOI: https://doi.org/10.1007/s00180-022-01223-6

Keywords

- Functional data analysis

- Function-on-function linear regression

- Adaptive smoothing

- Functional regression