Abstract

We consider the problem of constructing a reduced-rank regression model whose coefficient parameter is represented as a singular value decomposition with sparse singular vectors. The traditional estimation procedure for the coefficient parameter often fails when the true rank of the parameter is high. To overcome this issue, we develop an estimation algorithm with rank and variable selection via sparse regularization and manifold optimization, which enables us to obtain an accurate estimation of the coefficient parameter even if the true rank of the coefficient parameter is high. Using sparse regularization, we can also select an optimal value of the rank. We conduct Monte Carlo experiments and a real data analysis to illustrate the effectiveness of our proposed method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Reduced-rank regression (RRR), a useful tool for statistics, is based on a multivariate linear regression model with a low-rank constraint for the coefficient parameter. RRR reduces the number of parameters included in the model and enables us to easily interpret the relationship between response and predictor variables. Therefore, RRR is used in various fields of research, including genomics, signal processing, and econometrics. To date, various extensions for RRR have been proposed: high-dimensional RRR with a rank selection criterion (Bunea et al. 2011), RRR with a nuclear norm penalization (Yuan et al. 2007; Negahban and Wainwright 2011), reduced-rank ridge regression and its kernel extensions (Mukherjee and Zhu 2011), and reduced-rank stochastic regression with sparse singular value decomposition (Chen et al. 2013).

In recent years, the number of response and predictor variables has been increasing in the fields of research such as genomics. This causes difficulty in the estimating of parameters when the sample size is smaller than the number of the parameters included in the model. One approach for overcoming this problem is to apply a regularization method. During previous decades, sparse regularization methods, such as lasso (Tibshirani 1996), have been the focus of attention, because they can estimate parameters and exclude irrelevant variables simultaneously. Various studies have considered a multivariate linear regression model with some sparse regularization (see, e.g., Rothman et al. 2010; Peng et al. 2010; Li et al. 2015). Co-sparse factor regression (SFAR; Mishra et al. 2017) was proposed in one such study. SFAR is based on both RRR and a factor analysis model by assuming that the coefficient parameter can be decomposed by singular value decomposition with both a low-rank constraint and sparsity for the singular vectors. For the estimation of parameters, Mishra et al. (2017) proposed the sequential factor extraction via co-sparse unit-rank estimation (SeCURE) algorithm. The SeCURE algorithm sequentially estimates the parameters with orthogonality and sparsity for each factor. However, the SeCURE algorithm fails to estimate the parameters when the number of latent factors is large, because the algorithm is a greedy estimation method based on the classical Gram–Schmidt orthogonalization algorithm and it is well known that the classical method does not guarantee that the optimal solution will be obtained (Björck 1967).

To overcome this problem, we propose a factor extraction algorithm with rank and variable selection via sparse regularization and manifold optimization (RVSManOpt). Manifold optimization has demonstrated excellent performance over decades of study (Bakır et al. 2004; Mishra et al. 2013; Tan et al. 2019). The minimization problem of the SFAR model can be reformulated in terms of manifold optimization. Manifold optimization enables us to solve the minimization problem by taking the geometric structure of the SFAR model into consideration. By estimating the parameters on the manifold, we simultaneously obtain all latent factors. In addition, in order to select the optimal value of the rank, we introduce a regularizer which induces a hard-thresholding operator.

The remainder of the paper is organized as follows. In Sect. 2, we introduce RRR and derive the SFAR model from the factor regression model. In Sect. 3, we reformulate the minimization problem of the SFAR model based on manifold optimization. In Sect. 4, we provide the estimation algorithm based on manifold optimization and discuss the selection of tuning parameters. In Sect. 5, Monte Carlo experiments and a real data analysis support the efficacy of RVSManOpt. Concluding remarks which summarize our study are presented in Sect. 6. Supplementary materials and source codes of our proposed method are available at https://github.com/yoshikawa-kohei/RVSManOpt.

2 Preliminaries

Suppose that we obtain n independent observations \(\left\{ (\mathbf {y}_i, \mathbf {x}_i); i=1,\dots ,n \right\} \), where \(\mathbf {y}_i = {\left[ y_{i1}, \ldots , y_{iq} \right] }^\mathsf {T} \in \mathbb {R}^{q}\) is a q-dimensional vector of response variables and \(\mathbf {x}_i = {\left[ x_{i1}, \ldots , x_{ip} \right] }^\mathsf {T} \in \mathbb {R}^p\) is a p-dimensional vector of predictor variables. When we set \(\mathbf {Y} = {\left[ \mathbf {y}_1, \ldots , \mathbf {y}_n \right] }^\mathsf {T} \in \mathbb {R}^{n \times q}\) and \(\mathbf {X} = {\left[ \mathbf {x}_1, \ldots , \mathbf {x}_n \right] }^\mathsf {T} \in \mathbb {R}^{n \times p}\), RRR (Anderson 1951; Izenman 1975; Reinsel and Velu 1998) is formulated as

where \(\mathbf {C} \in \mathbb {R}^{p \times q}\) is the coefficient matrix, which has rank at most \(r = \min \left( \mathrm {rank}\left( \mathbf {X}\right) , q \right) \), and \(\mathbf {E} = {\left[ \mathbf {e}_1, \ldots , \mathbf {e}_n \right] }^\mathsf {T} \in \mathbb {R}^{n \times q}\) is the error matrix, which consists of independent random error vectors \(\mathbf {e}_i\) with mean \(\mathrm {E}\left[ \mathbf {e}_i\right] = \mathbf {0}\) and covariance matrix \(\mathrm {Cov}\left[ \mathbf {e}_i \right] = \varvec{\Sigma } \ (i = 1,\dots ,n)\). The estimator of the coefficient matrix \(\mathbf {C}\) can be obtained by solving the minimization problem

where \(\left\Vert \cdot \right\Vert _F\) denotes the Frobenius norm.

Mishra et al. (2017) proposed SFAR by extending RRR in terms of factor analysis. Before introducing SFAR, we describe the relationship between RRR and factor analysis. First, we consider the RRR model with a coefficient matrix \(\mathbf {C}\) that is decomposed as

where \(\mathbf {U} \in \mathbb {R}^{p \times r}\) and \(\tilde{\mathbf {V}} \in \mathbb {R}^{q \times r}\). Then we obtain the RRR model reformulated by

The Eq. (4) is related to a factor analysis model: \(\mathbf {XU}\) can be regarded as a common factor matrix and \(\tilde{\mathbf {V}}\) can be regarded as a loading matrix. Furthermore, if we assume \(\mathrm {E}[\mathbf {x}_i] = \mathbf {0}\) and \(\mathrm {cov}[\mathbf {x}_i] = \varvec{\Gamma } \ (i=1,\dots ,n)\), then \(\mathrm {cov}[{{\mathbf {U}}}^\mathsf {T} \mathbf {x}] = {{\mathbf {U}}}^\mathsf {T} \varvec{\Gamma } \mathbf {U} = \mathbf {I}_r\) is derived. When we decompose \(\tilde{\mathbf {V}} = \mathbf {V} \mathbf {D}\) such that \({{\mathbf {V}}}^\mathsf {T} \mathbf {V} = \mathbf {I}_{r}\) and \(\mathbf {D} = \mathrm {diag}( d_1, \dots , d_r ), (d_1> \dots> d_r > 0)\), we can obtain the following SFAR model:

Here, the coefficient matrix is \(\mathbf {C} = \mathbf {U} \mathbf {D} {{\mathbf {V}}}^\mathsf {T}\).

The estimator of SFAR is obtained by solving the minimization problem

where \(\lambda _1, \lambda _2 > 0\) are regularization parameters and \(h_1 (\cdot ), h_2 (\cdot )\) are the regularization functions which induce the sparsity for the parameters \(\mathbf {U}\) and \(\mathbf {V}\), respectively (Tibshirani 1996). The parameter \(\mathbf {U}\) has the role of constructing latent variables by linear combination of predictor variables. By sparsely estimating the parameter \(\mathbf {U}\), the latent variables can be constructed from predictor variables that contribute to the prediction. In addition, the parameter \(\mathbf {V}\) stands for the coefficient for the latent variable. By estimating \(\mathbf {V}\) sparsely, we can select the latent variables that contribute to the prediction. By solving the minimization problem (6), we obtain the estimator of the coefficient matrix \(\hat{\mathbf {C}} = \hat{\mathbf {U}} \hat{\mathbf {D}} {\hat{{\mathbf {V}}}}^\mathsf {T}\).

The minimization problem is solved under orthogonality and sparsity of the parameters. However, it is difficult to estimate the parameters directly. For this reason, Mishra et al. (2017) proposed the SeCURE algorithm. The SeCURE algorithm sequentially solves the minimization problem for the kth latent factor given by

where \(u_{ij}\) and \(v_{ij}\) are elements of \(\mathbf {U}\) and \(\mathbf {V}\), respectively, \(\mathbf {u}_k\) and \(\mathbf {v}_k\) for \(k=1,\dots ,r\) are the kth column vector of \(\mathbf {U}\) and \(\mathbf {V}\), respectively, and \(w_{ij}\) is the adaptive weight with positive value proposed by Zou (2006). The role of the adaptive weights is to control the strength of the regularization for each parameters. Here, \(\mathbf {Y}_k\) is defined by

in which \(d_j\) is the jth diagonal element of \(\mathbf {D}\) and \(\mathbf {Y}_1 = \mathbf {Y}\). By sequentially solving the minimization problem (7) with respect to each \(d_k, \mathbf {u}_k\) and \(\mathbf {v}_k\), we obtain the solutions \(\hat{d}_k, \hat{\mathbf {u}}_k\), and \(\hat{\mathbf {v}}_k\) which satisfy orthogonality and sparsity: \(\mathbf {u}_k^\mathsf {T} {\mathbf {X}}^\mathsf {T} \mathbf {X} \mathbf {u}_h = 0\) and \(\mathbf {v}_k^\mathsf {T}\mathbf {v}_h = 0\) when \(k \ne h\). When \(\hat{\mathbf {u}}_k = \mathbf {0}\) or \(\hat{\mathbf {v}}_k = \mathbf {0}\), the SeCURE algorithm updates \(d_k = 0\). This means that the updates are terminated. In addition, the index k that terminates the updates is regarded as the optimal value of the rank of the coefficient matrix \(\mathbf {C}\). It should be noted that the estimation method for the minimization problem (7) is the block coordinate descent algorithm proposed by Chen et al. (2012).

3 Minimization problem of co-sparse factor regression via manifold optimization

The SeCURE algorithm fails to estimate the parameters for the kth latent factor when k is large, because the algorithm is based on the classical Gram–Schmidt orthogonalization algorithm. Note that the classical Gram–Schmidt orthogonalization algorithm does not produce an optimal solution, owing to rounding errors (Björck 1967). To overcome this problem, we reconsider this minimization problem in terms of manifold optimization.

3.1 Reformulation of the minimization problem as manifold optimization

To consider the minimization problem (6) in terms of manifold optimization, we use the fundamental geometric structure given by

where \(q \ge r\). Here, \(\mathrm {St}(r, q)\) is called the Stiefel manifold, which is the set of orthogonal matrices of size \(q \times r\). Furthermore, we also use the generalized Stiefel manifold given by

where \(p \ge r\) and \(\mathbf {G} \in \mathbb {R}^{p \times p}\) is a symmetric positive definite matrix. In this paper, we use \(\mathbf {G} = {\mathbf {X}}^\mathsf {T} \mathbf {X} /n\).

By utilizing the geometric structures (9) and (10), the minimization problem (6) can be reformulated as

We introduce the following two penalties \(h_1(\mathbf {U})\) and \(h_2(\mathbf {V})\) for the minimization problem (6):

The minimization problem (11) is an unconstrained optimization problem, and solving it allows us to estimate all the parameters for all the latent factors at once.

3.2 Rank selection with sparse regularization

The reformulation of the minimization problem (6) gives us the unconstrained optimization problem (11). However, we cannot select the optimal value of the rank of the coefficient matrix \(\mathbf {C}\) because of not using a sequential estimating procedure, such as SeCURE. To overcome this drawback, we propose the following minimization problem:

where \(\mathbbm {1} ( \cdot )\) is an indicator function that returns 1 if the condition is true and returns 0 if the condition is false, \(w^{(d)}_{i}\) is an adaptive weight with a positive value proposed by Zou (2006), and \(\alpha \) is a tuning parameter having a value between zero and one. The group selection in the fourth term plays the role of the rank selection of the coefficient matrix \(\mathbf {C}\). The tuning parameter \(\alpha \) adjusts the trade-off between the third term and the fourth term. Introducing \(\alpha \) facilitates the interpretation of the regularizations imposed on the model without losing the generality of the optimization problem. The two terms can be regarded as Sparse Group Lasso (Wu and Lange 2008; Puig et al. 2009; Simon et al. 2013). The fourth term is a regularizer which induces a hard-thresholding operator. By imposing this regularization, we can estimate some column vectors of \(\mathbf {V}\) as zero vectors. As a consequence, the model is constructed with a small number of latent factors. In that sense, the indicator function plays the role of selecting the rank of the coefficient matrix \(\mathbf {C}\).

The reason why we do not apply Group Lasso, which induces a soft-thresholding operator (Yuan and Lin 2006), is to avoid a double shrinking effect for the parameter \(\mathbf {V}\). If we assume that the fourth term corresponds to the Group Lasso, then such a double shrinking effect appears to occur. The double shrinking effect reduces the variance of the model, but it excessively increases the bias. To prevent the double shrinking effect for the parameter \(\mathbf {V}\), we use a regularizer which induces a hard-thresholding operator, since it does not shrink the value of the parameter.

4 Implementation

4.1 Computational algorithm

To estimate the parameters, we employ a manifold optimization method (Edelman et al. 1998; Absil et al. 2008). Manifold optimization can be performed for differentiable functions. However, the minimization problem (13) includes nondifferentiable penalty terms. For this reason, we handle the nondifferentiability by applying the manifold alternating direction method of multipliers (M-ADMM) proposed by Kovnatsky et al. (2016) to the minimization problem (13).

Letting \(\mathbf {U}^* \in \mathbb {R}^{p \times r}\) and \(\mathbf {V}^*\) and \(\mathbf {V}^{**} \in \mathbb {R}^{q \times r}\) denote variables for splitting nondifferentiable penalty terms from the minimization problem (13), we consider a minimization problem with equality constraints as follows:

where \(u_{ij}^*, v_{ij}^*\) are the (i, j)th elements of \(\mathbf {U}^*\) and \(\mathbf {V}^*\), respectively, and \(\mathbf {v}_i^{**}\) is an ith column vector of \(\mathbf {V}^{**}\). When we let \(\varvec{\Omega } \in \mathbb {R}^{p \times r}\) and \(\varvec{\Phi }\) and \(\varvec{\Psi } \in \mathbb {R}^{q \times r}\) denote the dual variables, we obtain a scaled augmented Lagrangian (Boyd et al. 2011) as follows:

where \(\xi _1, \xi _2, \xi _3 > 0 \) are penalty parameters. For this study, we fixed \(\xi _1 = \xi _2 = \xi _3 = 1\). M-ADMM alternately updates each parameter to minimize the augmented Lagrangian. The estimators of elements in \(\mathbf {U}^*\) and \(\mathbf {V}^*\) indicate whether each element of the parameter \(\mathbf {V}\) is zero. The estimators of column vectors in \(\mathbf {V}^{**}\) indicate whether each vector of the parameter \(\mathbf {V}\) is a zero vector. In the M-ADMM procedure, we initialize the parameters by using \(\tilde{\mathbf {U}} \in \mathbb {R}^{p \times r}, \tilde{\mathbf {D}} = \mathrm {diag} (\tilde{d}_1, \dots , \tilde{d}_r), \tilde{\mathbf {V}} \in \mathbb {R}^{q \times r}\). Here, \(\tilde{\mathbf {U}}\) is calculated by \(({\mathbf {X}}^\mathsf {T} \mathbf {X})^{-} {\mathbf {X}}^\mathsf {T} \mathbf {Y} \tilde{\mathbf {V}} \tilde{\mathbf {D}}^{-1}\), where the kth diagonal element of \(\tilde{\mathbf {D}}^2\) is the kth eigenvalue of \((1/n) {\mathbf {Y}}^\mathsf {T} \mathbf {X} ({\mathbf {X}}^\mathsf {T} \mathbf {X})^{-} {\mathbf {X}}^\mathsf {T} \mathbf {Y}\), and the kth column vector of \(\tilde{\mathbf {V}}\) is the kth eigenvector of \((1/n) {\mathbf {Y}}^\mathsf {T} \mathbf {X} ({\mathbf {X}}^\mathsf {T} \mathbf {X})^{-} {\mathbf {X}}^\mathsf {T} \mathbf {Y}\).

We set the adaptive weights \(w_{ij}^{(u)}, w_{ij}^{(v)}, w_{i}^{(d)}\) as

where \(\kappa ^u\), \(\kappa ^v\), \(\kappa ^d > 0\) are tuning parameters.

The parameters \(\mathbf {U}\) and \(\mathbf {V}\) are estimated by a gradient descent algorithm based on manifold optimization. For example, the procedure for estimating \(\mathbf {U}\) can be represented by the following.

-

1.

At a given iteration s, calculate the Euclidean gradient \(\nabla \mathcal {L}_{\mathbf {U}^{(s)}}\).

-

2.

Project \(\nabla \mathcal {L}_{\mathbf {U}^{(s)}}\) onto the tangent space \(\mathcal {T}_{\mathbf {U}^{(s)}} \mathrm {GSt}(p, r)\) using orthogonal projection \(\mathcal {P}_{\mathbf {U}^{(s)}}(\cdot )\) to obtain the gradient \(\mathrm {grad} \mathcal {L}_{\mathbf {U}^{(s)}}\) on the manifold.

-

3.

Update the parameter \(\mathbf {U}^{(s)}\) by retraction \(\mathcal {R}_{\mathbf {U}^{(s)}}(- t\ \mathrm {grad} \mathcal {L}_{\mathbf {U}^{(s)}})\) to obtain the parameter \(\mathbf {U}^{(s+1)}\), where \(t \in \mathbb {R}\) is an Armijo step size described in Absil et al. (2008).

The necessary notation is shown in Table 1. In the same way, we estimate the parameter \(\mathbf {V}\) on the manifold. The detailed calculation of the updates is described in the Appendix. This algorithm is called the factor extraction algorithm with rank and variable selection via sparse regularization and manifold optimization (RVSManOpt). RVSManOpt is summarized as Algorithm 1.

We have analyzed the computational complexity of RVSManOpt. The complexity of this algorithm is \(O(sp^3)\). The high complexity is due to the requirement of calculating the inverse matrix when performing retraction.

4.2 Selection of tuning parameters

We have six tuning parameters: \(\lambda _1, \lambda _2, \alpha , \kappa ^u, \kappa ^v\), and \(\kappa ^d\). To avoid a high computational cost, \(\alpha , \kappa ^u, \kappa ^v\), and \(\kappa ^d\) are fixed in advance. We set the values of these tuning parameters according to the situation. The tuning parameter \(\alpha \) is set to a large value when a sparse regularization is more important than a regularization for selecting the rank of the coefficient matrix \(\mathbf {C}\). Larger values of tuning parameters \(\kappa ^u, \kappa ^v\), and \(\kappa ^d\) correspond to a higher data dependence. To select the remaining two tuning parameters, \(\lambda _1\) and \(\lambda _2\), we use the Bayesian information criterion (BIC) given by

where \(\mathrm {SSE}_{\lambda _1, \lambda _2}\) is the sum of squared errors of prediction defined by

and \(df_{\lambda _1, \lambda _2}\) is the degree of freedom which evaluates the sparsity of the estimates \(\hat{\mathbf {U}}\) and \(\hat{\mathbf {V}}\) defined by

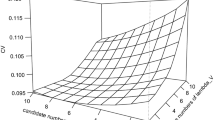

We select the tuning parameters \(\lambda _1\) and \(\lambda _2\) which minimize the BIC. The candidates values of \(\lambda _1, \lambda _2\) are taken from equally spaced values in the interval \([\lambda _{\min }, \lambda _{\max }]\). We set \(\lambda _{\max }=1\), \(\lambda _{\min }=10^{-15}\) and divide the interval into 50 equal parts in our numerical studies.

5 Numerical study

5.1 Monte Carlo simulations

We conducted Monte Carlo simulations to illustrate the efficacy of RVSManOpt. In our simulation study, we generated 50 datasets from the model:

where \(\mathbf {Y} \in \mathbb {R}^{n \times q}\) is a response matrix, \(\mathbf {X} \in \mathbb {R}^{n \times p}\) is a predictor matrix, \(\mathbf {C} \in \mathbb {R}^{p \times q}\) is a coefficient matrix, and \(\mathbf {E} = {[\mathbf {e}_1, \dots \mathbf {e}_n]}^\mathsf {T} \in \mathbb {R}^{n \times q}\) is an error matrix. Each row of \(\mathbf {X}\) followed a multivariate normal distribution \(\mathcal {N}(\mathbf {0}, \varvec{\Gamma })\), where \(\varvec{\Gamma } = [\gamma _{ij}]\) is a \(p \times p\) covariance matrix with \(\gamma _{ij} = 0.5^{|i-j|}\) for \(i,j = 1,\dots ,p\). We generated each row of \(\mathbf {E}\) by \(\mathbf {e}_i \overset{\mathrm{i.i.d.}}{\sim } \mathcal {N}(\mathbf {0}, \sigma ^2 \varvec{\Delta })\), where \(\varvec{\Delta } = [\delta _{ij}]\) is a \(q \times q\) matrix with \(\delta _{ij} = \rho ^{|i-j|}\) and \(\sigma \) is determined according to the signal-to-noise ratio defined by \(\mathrm {SNR} = \left\Vert d_r \mathbf {X} \mathbf {u}_r {\mathbf {v}}^\mathsf {T} \right\Vert _2 / \left\Vert \mathbf {E} \right\Vert _2 = 0.5\). We considered the ranks of the coefficient matrix as follows: \(r \in \{3, 5, 7, 10, 12 \}\). We generated the coefficient matrix \(\mathbf {C} = \mathbf {UD} {\mathbf {V}}^\mathsf {T}\), where \(\mathbf {U} = [\mathbf {u}_1, \dots , \mathbf {u}_r]\), \(\mathbf {D} = \mathrm {diag}(d_1, \dots , d_r)\), \(\mathbf {V} = [\mathbf {v}_1, \dots , \mathbf {v}_r]\). Specifically, we set

where \(\mathrm {rep}(a, b)\) represents the vector of length b with all elements having the value a. We considered four cases. In Cases 1 and 2, we set \(n=400, p=120\), and \(q=60\) in common, and we set the correlation as \(\rho =0.3\) (Case 1) or \(\rho =0.5\) (Case 2). In Cases 3 and 4, we set \(n=400, p=80\), and \(q=50\) in common, and we set the correlation as \(\rho =0.3\) (Case 3) or \(\rho =0.5\) (Case 4).

To demonstrate the efficacy of RVSManOpt, we compared RVSManOpt with RRR (Mukherjee et al. 2015), the SeCURE with an adaptive lasso (SeCURE(AL)), and the SeCURE with an adaptive elastic net (SeCURE(AE)). For 50 datasets, we measured the estimation accuracy of coefficient matrix \(\mathrm {Er}(\mathbf {C})\), the prediction accuracy \(\mathrm {Er}(\mathbf {XC})\) and the selected rank absolute error \(\mathrm {Er}(r)\). These are defined as

where \(\mathbf {C}^{(k)}\) is the true coefficient matrix, \(r^{(k)}\) is the true rank of the coefficient matrix \(\mathbf {C}^{(k)}\), \(\hat{\mathbf {C}}^{(k)}\) is an estimated coefficient matrix, and \(\hat{r}^{(k)}\) is the selected rank of coefficient matrix \(\hat{\mathbf {C}}^{(k)}\) for the kth dataset. In order to evaluate the sparsity, we computed Precision, Recall, and F-measure defined by

where \(\mathrm {Precision}^{(k)}\) and \(\mathrm {Recall}^{(k)}\) are defined by

for which \(\hat{u}_{ij}^{(k)}\) and \(\hat{v}_{ij}^{(k)}\) are respectively elements of the estimated \(\mathbf {U}\) and \(\mathbf {V}\) for the kth dataset and \(|\{\cdot \}|\) is the count of the elements of set \(\{\cdot \}\). All implementations were done in R (ver. 3.6) (R Core Team 2018).

Tables 2, 3, 4, and 5 show summaries of the results for, respectively, Cases 1 to 4 of the Monte Carlo simulations. In Cases 1 and 2, when the rank of the coefficient matrix \(\mathbf {C}\) is high, RVSManOpt outperforms other methods in terms of \(\mathrm {Er}(\mathbf {C})\), \(\mathrm {Er}(\mathbf {XC})\) and \(\mathrm {Er}(r)\). In contrast, when the rank of the coefficient matrix \(\mathbf {C}\) is low, the performances of all algorithms are approximately the same. Moreover, the F-measure gives almost the same value for RVSManOpt, SeCURE(AL) and SeCURE(AE), except for RRR. Since the RRR does not assume the sparsity for the estimation of parameter, the F-measure of RRR is small. In Cases 3 and 4, RRR outperforms other methods in terms of \(\mathrm {Er}(\mathbf {C})\), \(\mathrm {Er}(\mathbf {XC})\) and \(\mathrm {Er}(r)\). However, it has been reported that RRR is inferior to other methods in Cases 1 and 2. This shows that the estimation performance of RRR largely depends on the true structure of a model. Therefore, RVSManOpt achieves performance superior to those of other methods under the various situations.

Figure 1 shows box-plots of \(\mathrm {Er}(\mathbf {XC})\) for Case 1. Since the box-plots for the other cases are essentially same, except for RRR, these are available as the supplementary materials. When the rank of the coefficient matrix \(\mathbf {C}\) is high, we observe many outliers in the box-plots of RRR, SeCURE(AL) and SeCURE(AE). These outliers indicate that RRR, SeCURE(AL) and SeCURE(AE) fail to estimate parameters many times. On the other hand, the number of the outliers produced by RVSManOpt is small, and hence RVSManOpt performs the other methods in terms of stable estimation.

5.2 Application to yeast cell cycle dataset

We applied RVSManOpt to yeast cell cycle data (Spellman et al. 1998). The dataset was available in the secure package (Mishra et al. 2017) in the software R. The analysis of the yeast cell cycle enables us to identify transcription factors (TFs) which regulate ribonucleic acid (RNA) levels within the eukaryotic cell cycle. The dataset contains two components: the chromatin immunoprecipitation (ChIP) data and eukaryotic cell cycle data. The binding information of a subset of 1790 genes and 113 TFs was included in the ChIP data (Lee et al. 2002). The cell cycle data were obtained by measuring the RNA levels every 7 minutes for 119 minutes, thus a total of 18 time points, to cover two cycles. Since the dataset contained missing values, we complemented them by using the imputeMissings package in R. By complementing the dataset, we can use all \(n = 1790\) genes and analyze the relationship between the RNA levels in the \(q = 18\) time points and \(p = 113\) TFs. We compared RVSManOpt with SeCURE(AL) and SeCURE(AE) by computing the number of selected experimentally confirmed TFs among the total number of the selected TFs and the proportion of experimentally confirmed TFs. It is known that there are 21 TFs which have been experimentally confirmed to be involved in the cell cycle regulation (Wang et al. 2007).

Table 6 gives the results of a real data analysis. In RVSManOpt, the proportion of experimentally confirmed TFs is larger than both SeCURE(AL) and SeCURE(AE). RVSManOpt estimated \(\hat{r} = 5\), while SeCURE(AL) and SeCURE(AE) estimated \(\hat{r} = 4\). This result means that RVSManOpt may capture the latent structure of the yeast cell cycle data more precisely by identifying 5 latent factors.

Figure 2 shows estimated transcription levels of three of the experimentally confirmed TFs selected by RVSManOpt. The rest of the 12 experimentally confirmed TFs are available as the supplementary materials. Figure 2 indicates that the estimated transcription levels followed two cycles. It was experimentally confirmed that the transcription levels in the cell cycle did cover a two cycle time period. Thus, RVSManOpt was demonstrated to accurately estimate the cycles of data.

6 Concluding remarks

We proposed a minimization problem of SFAR on a Stiefel manifold and developed the factor extraction algorithm with rank and variable selection via sparse regularization and manifold optimization (RVSManOpt). RVSManOpt surpassed the traditional estimation procedure, which fails when the rank of the coefficient matrix is high. Numerical comparisons including Monte Carlo simulations and a real data analysis supported the usefulness of RVSManOpt.

In general, it is challenging to estimate parameters while preserving both orthogonality and sparsity. Mishra et al. (2017) indicates that enforcing orthogonality collapses sparsity and does not work from the viewpoint of prediction. Therefore, it may be unnecessary to construct a model with perfect orthogonality if we focus on prediction. Also, the recent paper by Absil and Hosseini (2019) discusses a theory of manifold optimization for non-smooth functions. It would be interesting to develop RVSManOpt based on this theory. In addition, convergence analysis needs to be established for a more detailed analysis of RVSManOpt. We leave these as future topics.

Change history

10 September 2022

A Correction to this paper has been published: https://doi.org/10.1007/s00180-022-01274-9

References

Absil PA, Hosseini S (2019) A collection of nonsmooth Riemannian optimization problems. In: Nonsmooth optimization and its applications. Springer, pp 1–15

Absil PA, Mahony R, Sepulchre R (2008) Optimization algorithms on matrix manifolds. Princeton University Press, Princeton

Anderson TW (1951) Estimating linear restrictions on regression coefficients for multivariate normal distributions. Ann Math Stat 22(3):327–351

Bakır GH, Gretton A, Franz M, Schölkopf B (2004) Multivariate regression via Stiefel manifold constraints. In: Joint pattern recognition symposium. Springer, Berlin, pp 262–269

Björck Å (1967) Solving linear least squares problems by Gram–Schmidt orthogonalization. BIT Numer Math 7(1):1–21

Boyd S, Parikh N, Chu E, Peleato B, Eckstein J (2011) Distributed optimization and statistical learning via the alternating direction method of multipliers. Found Trends® Mach Learn 3(1):1–122

Bunea F, She Y, Wegkamp MH (2011) Optimal selection of reduced rank estimators of high-dimensional matrices. Ann Stat 39(2):1282–1309

Chen K, Chan KS, Stenseth NC (2012) Reduced rank stochastic regression with a sparse singular value decomposition. J R Stat Soc Ser B (Stat Methodol) 74(2):203–221

Chen K, Dong H, Chan KS (2013) Reduced rank regression via adaptive nuclear norm penalization. Biometrika 100(4):901–920

Edelman A, Arias TA, Smith ST (1998) The geometry of algorithms with orthogonality constraints. SIAM J Matrix Anal Appl 20(2):303–353

Izenman AJ (1975) Reduced-rank regression for the multivariate linear model. J Multivar Anal 5(2):248–264

Kovnatsky A, Glashoff K, Bronstein MM (2016) MADMM: a generic algorithm for non-smooth optimization on manifolds. In: European conference on computer vision. Springer, Cham, pp 680–696

Lee TI, Rinaldi NJ, Robert F, Odom DT, Bar-Joseph Z, Gerber GK, Hannett NM, Harbison CT, Thompson CM, Simon I (2002) Transcriptional regulatory networks in saccharomyces cerevisiae. Science 298(5594):799–804

Li Y, Nan B, Zhu J (2015) Multivariate sparse group lasso for the multivariate multiple linear regression with an arbitrary group structure. Biometrics 71(2):354–363

Mishra B, Meyer G, Bach F, Sepulchre R (2013) Low-rank optimization with trace norm penalty. SIAM J Optim 23(4):2124–2149

Mishra A, Dey DK, Chen K (2017) Sequential co-sparse factor regression. J Comput Graph Stat 26(4):814–825

Mukherjee A, Zhu J (2011) Reduced rank ridge regression and its kernel extensions. Stat Anal Data Min ASA Data Sci J 4(6):612–622

Mukherjee A, Chen K, Wang N, Zhu J (2015) On the degrees of freedom of reduced-rank estimators in multivariate regression. Biometrika 102(2):457–477

Negahban S, Wainwright MJ (2011) Estimation of (near) low-rank matrices with noise and high-dimensional scaling. Ann Stat 39(2):1069–1097

Peng J, Zhu J, Bergamaschi A, Han W, Noh DY, Pollack JR, Wang P (2010) Regularized multivariate regression for identifying master predictors with application to integrative genomics study of breast cancer. Ann Appl Stat 4(1):53

Puig AT, Wiesel A, Hero AO (2009) A multidimensional shrinkage-thresholding operator. In: 2009 IEEE/SP 15th workshop on statistical signal processing, pp 113–116

R Core Team (2018) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna

Reinsel GC, Velu RP (1998) Multivariate reduced-rank regression: theory and applications. Springer, New York

Rothman AJ, Levina E, Zhu J (2010) Sparse multivariate regression with covariance estimation. J Comput Graph Stat 19(4):947–962

Simon N, Friedman J, Hastie T, Tibshirani R (2013) A sparse-group lasso. J Comput Graph Stat 22(2):231–245

Spellman PT, Sherlock G, Zhang MQ, Iyer VR, Anders K, Eisen MB, Brown PO, Botstein D, Futcher B (1998) Comprehensive identification of cell cycle-regulated genes of the yeast saccharomyces cerevisiae by microarray hybridization. Mol Biol Cell 9(12):3273–3297

Tan M, Hu Z, Yan Y, Cao J, Gong D, Wu Q (2019) Learning sparse PCA with stabilized ADMM method on Stiefel manifold. IEEE Trans Knowl Data Eng 33:1078–1088

Tibshirani R (1996) Regression shrinkage and selection via the lasso. J R Stat Soc Ser B (Methodol) 58(1):267–288

Wang L, Chen G, Li H (2007) Group scad regression analysis for microarray time course gene expression data. Bioinformatics 23(12):1486–1494

Wu TT, Lange K (2008) Coordinate descent algorithms for lasso penalized regression. Ann Appl Stat 2(1):224–244

Yuan M, Lin Y (2006) Model selection and estimation in regression with grouped variables. J R Stat Soc Ser B (Stat Methodol) 68(1):49–67

Yuan M, Ekici A, Lu Z, Monteiro R (2007) Dimension reduction and coefficient estimation in multivariate linear regression. J R Stat Soc Ser B (Stat Methodol) 69(3):329–346

Zou H (2006) The adaptive lasso and its oracle properties. J Am Stat Assoc 101(476):1418–1429

Acknowledgements

The authors thank the reviewers for their helpful comments and constructive suggestions. S. K. was supported by JSPS KAKENHI Grant Nos. JP19K11854 and JP20H02227, and MEXT KAKENHI Grant Nos. JP16H06429, JP16K21723, and JP16H06430. The super-computing resource was provided by Human Genome Center (the Univ. of Tokyo).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this article was revised as there was an error in equation (15).

Appendices

Appendix: Detailed description of update procedures for the parameters

Appendix A: Formulas for updating U and V

The Euclidean gradient \(\nabla \mathcal {L}_{\mathbf {U}}\) can be calculated as follows:

The formula for updating \(\mathbf {U}\) is given by

where \(\mathcal {R}_{\mathbf {U}}\) is the retraction mapping on a generalized Stiefel manifold, \(t_u\) is the Armijo step size, and \(\mathrm {grad} \mathcal {L}_{\mathbf {U}}\) is the gradient on the generalized Stiefel manifold. \(\mathrm {grad} \mathcal {L}_{\mathbf {U}}\) can be obtained by projecting the Euclidean gradient \(\nabla \mathcal {L}_{\mathbf {U}}\) into the tangent space \(\mathcal {T}_\mathbf {U}\mathrm {GSt}\left( p,r \right) \) by using projection operator \(\mathcal {P}_\mathbf {U}(\cdot )\).

In a similar way, the Euclidean gradient \(\nabla \mathcal {L}_{\mathbf {V}}\) can be calculated as follows:

The formula for updating \(\mathbf {V}\) is given by

where \(\mathcal {R}_{\mathbf {V}}\) is the retraction mapping on a Stiefel manifold, \(t_v\) is the Armijo step size, and \(\mathrm {grad} \mathcal {L}_{\mathbf {V}}\) is the gradient on the Stiefel manifold. \(\mathrm {grad} \mathcal {L}_{\mathbf {V}}\) can be obtained by projecting the Euclidean gradient \(\nabla \mathcal {L}_{\mathbf {V}}\) into the tangent space \(\mathcal {T}_\mathbf {V} \mathrm {St} \left( q, r \right) \) by using projection operator \(\mathcal {P}_\mathbf {V}(\cdot )\).

Appendix B: Formula for updating D

The Euclidean gradient \(\nabla \mathcal {L}_{\mathbf {D}}\) is given by

When \(\nabla \mathcal {L}_{\mathbf {D}}= \mathbf {0}\), the optimal solution of \(\mathbf {D}\) is given by

Therefore, the formula for updating \(\mathbf {D}\) is given by

Appendix C: Formulas for updating \(\mathbf {U}^*\) and \(\mathbf {V}^*\)

The augmented Lagrangian with respect to \(\mathbf {U}^*\) is given by

The partial derivative of \(\mathcal {L}(\mathbf {U}^*)\) is calculated as follows:

where \(\partial | \cdot |\) is the subderivative operator defined as

When this partial derivative is equal to 0, the element of \(\mathbf {U}^*\) is represented as

Thus, the formula for updating \(\mathbf {U}^*\) can be obtained as follows:

This formula can be simplified using the soft-thresholding operator \(\mathrm {S}(\cdot , \cdot )\) as follows:

where \(\omega _{ij}\) is the (i, j)th element of \(\varOmega \) and \(\mathrm {S}(\cdot , \cdot )\) is the soft-thresholding operator

In a similar way to the updating of \(\mathbf {U}^*\), the formula for updating \(\mathbf {V}^*\) can be obtained as follows:

where \(\phi _{ij}\) is the (i, j)th element of \(\varPhi \).

Appendix D: Formula for updating \(\mathbf {V}^{**}\)

The augmented Lagrangian with respect to \(\mathbf {V}^{**}\) is given by

Here, we consider the augmented Lagrangian for every column vector \(\mathbf {v}_{i}^{**}\), \(i=1,\dots ,r\), as follows:

This equation can be divided into \(\mathbf {v}_i^{**} = \mathbf {0}\) and \(\mathbf {v}_i^{**} \ne \mathbf {0}\) cases as follows:

When \(\mathbf {v}_i^{**} \ne \mathbf {0}\), the optimal solution \(\mathbf {v}_i^{**}\) can be obtained as follows:

When we substitute \(\mathbf {v}_i + \varvec{\psi }_i\) in for \(\mathbf {v}_i^{**}\) in \(\mathcal {L}(\mathbf {v}_i^{**})\), the value of \(\mathcal {L}(\mathbf {v}_i^{**})\) is \(\sqrt{q} (1-\alpha ) \lambda _2 w_i^{(d)}\). It is necessary to satisfy the following condition

The formula for updating \(\mathbf {v}_i^*\) can be obtained as follows:

This formula is simplified by using the hard-thresholding operator \(\mathrm {H}(\cdot , \cdot )\) as follows:

where \(\psi _{ij}\) is the (i, j)th element of \(\varPsi \) and \(\mathrm {H}(\cdot , \cdot )\) is the hard-thresholding operator

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yoshikawa, K., Kawano, S. Sparse reduced-rank regression for simultaneous rank and variable selection via manifold optimization. Comput Stat 38, 53–75 (2023). https://doi.org/10.1007/s00180-022-01216-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00180-022-01216-5