Abstract

In this paper, we propose a method that estimates the variance of an imputed estimator in a multistage sampling design. The method is based on the rescaling bootstrap for multistage sampling introduced by Preston (Surv Methodol 35(2):227–234, 2009). In his original version, this resampling method requires that the dataset includes only complete cases and no missing values. Thus, we propose two modifications for applying this method to nonresponse and imputation. These modifications are compared to other modifications in a Monte Carlo simulation study. The results of our simulation study show that our two proposed approaches are superior to the other modifications of the rescaling bootstrap and, in many situations, produce valid estimators for the variance of the imputed estimator in multistage sampling designs.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The estimation of standard errors is an important topic for social surveys and official statistics. Standard errors are used to evaluate the quality of point or parameter estimations, for example, in confidence intervals or hypothesis testing. The basis of a valid standard error estimation is an accurate variance estimation. This estimation may be highly challenging to achieve, for example, due to a complex sampling design, such as a multistage sampling, the occurrence of nonresponse, and the use of imputation methods to compensate for nonresponse. In practice, it may be very difficult or even impossible to consider all these influences in the variance estimation. Thus, frequently, not all stages of the sampling design are considered, or the variance estimator for simple random sampling is used even when another sampling design has been applied. Furthermore, imputed values often are treated as observed, and the nonresponse as well as the imputation process are ignored in the variance estimation. Such procedures may lead to a heavily biased variance estimation that results in a biased standard error estimation. Hence, conclusions, e.g., from hypothesis testing and confidence intervals may be wrong. Thus, the aim of this paper is to present an approach to a variance estimation that includes all stages of a sampling design and that considers the imputation process.

1.1 Previous studies and research

There exists some previous studies in the literature that cover the variance estimation under imputation or the variance estimation in a multistage sampling design. Some of the studies also cover both issues.

Frequently, for both issues so called resampling methods (see, for example, Shao and Tu 1995) are applied, particularly, bootstrap procedures (Efron 1979). A good overview of bootstrap procedures is given in Mashreghi et al. (2016), in which procedures for multistage sampling or imputation methods are also described.

The basics for considering the imputation process in the bootstrap procedures are presented in Shao (2002), Shao and Sitter (1996) and Efron (1994). Further proposals of a bootstrap under imputation are, for example, presented in Mashreghi et al. (2014) or Saigo et al. (2001). Additional explanations of the application of bootstrap procedures under imputation are given in Haziza (2009, 2010). Moya et al. (2020) examine a rescaled bootstrap for confidence intervals in the presence of spikes in the distribution that may also be a result of certain imputation procedures. In this paper, we focus on single imputation procedures. In case of multiple imputation, two bayesian bootstrap procedures for multistage sampling designs are, for example, proposed in Zhou et al. (2016).

A bootstrap procedure for multistage sampling designs for complete observations that does not cover imputation is proposed in Rao et al. (1992) and that is an extension of the bootstrap procedure proposed in Rao and Wu (1988). Also, Saigo (2010) compares four different bootstrap procedures for stratified multistage sampling designs and complete data sets in a simulation study. Particularly, he considers the Bernoulli bootstrap for stratified multistage designs of Funaoka et al. (2006) and extensions of the bootstrap procedures described in Rao et al. (1992), Sitter (1992a) and Sitter (1992b). Further proposals of bootstrap procedures for multistage designs are presented in Haziza (2009), Wolter (2007) and Preston (2009). The latter one is the resampling method of interest in this paper.

To estimate the variance of an imputed estimator in a multistage design, we combine the rescaling bootstrap for multistage design of Preston (2009) with procedures that allows for a consideration of the imputation procedure in the resampling method. We choose the rescaling bootstrap of Preston (2009), since it allows for the inclusion of all stages of the sampling design in the variance estimation. Furthermore, the rescaling bootstrap considers finite population corrections in the weight adjustment that needs to be included in the variance estimation for the important case of without replacement random sampling (at the different stages of the sampling design). Thus, in this paper, we propose modifications of the rescaling bootstrap for multistage design of Preston (2009) to obtain a valid variance estimator in a multistage without replacement sampling design and under single imputation.Footnote 1 We present the rescaling bootstrap of Preston (2009) in the next section.

1.2 Rescaling bootstrap for a three-stage sampling design and complete response

In multitstage sampling designs in practice, the variance estimators often consider only the first stage, since the first stage variance estimator covers more than the actual variance of the point estimator at stage one (Särndal et al. 1992; Lohr 1999; Bruch et al. 2011; Bruch 2016). Under some parameter constellations, such as a small sampling fraction at the first stage or a homogeneous composition of sampling elements within the units of the first stage (primary sampling units; PSUs)Footnote 2 it is even possible to cover (almost) the entire variance by using only the variance estimator of the first stage. However, as shown by Särndal et al. (1992), Lohr (1999), Bruch et al. (2011); Bruch (2016), the variance may be heavily underestimated when only the variance estimator of the first stage is applied under parameter constellations, such as an increasing sampling fraction at the first stage or a heterogeneous composition of sampling elements within the PSU. Using such a variance estimate, for example, as a basis to compute a standard error estimate for hypothesis testing or confidence intervals, may lead to false conclusions. Thus, in some situations, it is important that the variance estimator includes all stages of the sampling design. A resampling method that takes all the sampling stages into account in the variance estimation is the multistage rescaling bootstrap proposed by Preston (2009), which is an extension of the rescaling bootstrap of Chipperfield and Preston (2007) for multistage sampling designs.Footnote 3 For this resampling method, subsamples are drawn independently without replacement at each stage of the sampling design. The rescaling bootstrap is applied in the case of a three-stage sampling design as follows [for the following explanations see Preston (2009)].

First, a subsample of size \(l^{*}= \lfloor l/2 \rfloor \) is drawn from the l PSUs in the sample at the first stage. The indicator variable \(\delta _{d}\) takes the value 1 if PSU d is in the subsample, and zero if not. Afterwards, new design weights \(w_{1d}^{*}\) for the original design weights \(w_{1d} = L/l\) (L is the number of PSUs in the population) are computed at the first stage by:

where \(f_1\) is the sampling fraction at the first stage.

A subsample of size \(m_{d}^{*}=\lfloor m_{d}/2 \rfloor \) is drawn from the \(m_{d}\) secondary sampling units (SSUs) within each PSU in the original sample at the second stage. The indicator variable \(\delta _{dq}\) takes the value 1 if SSU q in PSU d is in the subsample, and zero if not. Afterwards, new design weights \(w_{2dq}^{*}\) for the original design weights \(w_{2dq}=M_d/m_d\) (\(M_d\) is the number of SSUs in the PSU d in the population) are computed at the second stage by:

where \(f_{2d}\) is the sampling fraction in a PSU at the second stage.

A subsample of size \(n_{dq}^{*} = \lfloor n_{dq}/2\rfloor \) is drawn from the \(n_{dq}\) ultimate sampling units (USUs) within each SSU in the original sample at the third stage. The indicator variable \(\delta _{dqi}\) takes the value 1 if USU i in SSU q is in the subsample, and zero if not. Afterwards, new design weights \(w_{3dqi}^{*}\) for the original design weights \(w_{3dqi} = N_{dq}/n_{dq}\) (\(N_{dq}\) is the number of USUs in SSU q in PSU d in the population) are computed at the third stage by:

where \(f_{3dq}\) is the sampling fraction in a SSU at the third stage.

After computing the adjusted design weights at each stage, the overall adjusted design weights \(w_{dqi}^{*}\) are computed by

combined to vector \(\mathbf{w} ^{*}\). The point estimator of the rescaling bootstrap in each simulation run b is computed by:

where T is the function to compute the statistic of interest and \(\mathbf{y} _s\) is the vector with sample realisations.

The process beginning with the subsampling is repeated B times and the point estimator of the rescaling bootstrap is computed in each run b. Thus, it results the estimators \({\hat{\theta }}_{1}^{*} \ldots {\hat{\theta }}_{b}^{*} \ldots {\hat{\theta }}_{B}^{*}\), and the variance estimate is obtained by:

The procedure shows that the rescaling bootstrap considers all elements of the original sample in the computation of the rescaling point estimator. This procedure is hugely different from classical resampling methods, in which only elements drawn for the subsample are considered in the computation of the point estimator of the resampling method. However, weight adjustments for the rescaling bootstrap are performed with respect to the underlying subsample. Hence, it can be seen if USUs as well as their superordinate units are drawn for the subsample, the influence of these USUs on the variance estimation increases due to the weight adjustments compared to the elements that are not drawn.

Nonetheless, elements not drawn for the subsample can also have an influence on the variance estimation. This finding is, for example, shown in the simulation study by Bruch (2019) with respect to a single-stage design whereby elements not drawn for the subsample have a larger influence in the scenarios with larger sampling fractions than in the scenarios with smaller sampling fractions.

For the rescaling bootstrap in a multistage sampling design, influences of elements not drawn for the subsample on the variance estimation can also be larger or smaller, depending on the sampling fractions at the several sampling stages. However, this relationship is not as straightforward as for the single stage design. Elements can be drawn or not drawn at several stages and more parameters, such as the sampling fractions at a higher stage, also have an effect on the adjusted weights at certain stages in a multistage design as illustrated in (1), (2), and (3). The influence of elements drawn for the subsample and not drawn for the subsample on the variance estimation using the rescaling bootstrap is an important point. Thus, we examine this influence in more detail in the Appendix A.1 and the simulation study.

Due to subsampling at each stage of the sampling design and adjusting the corresponding weights, the rescaling bootstrap of Preston (2009) considers all stages of the sampling design in the variance estimation. However, the rescaling bootstrap of Preston (2009) is based on complete observations. Thus, the method has to be modified when the dataset consists of missing values, and imputation is applied as compensation. In the next section, we describe the variance decomposition of an imputed estimator and its implication for a multistage design that builds the starting point to include the imputation process in the rescaling bootstrap of Preston (2009).

1.3 Variance under imputation and nonresponse

For reasons of clarity, we first assume a deterministic imputation procedure to illustrate the proposed modifications in their fundamental form.Footnote 4 In general, in the presence of nonresponse and when a deterministic imputation procedure is applied as compensation for the nonresponse, the variance of an imputed estimator \({\hat{\theta }}_I\) can be decomposed as follows [Mashreghi et al. (2014), see also the reversed framework of Fay (1991) and Shao and Steel (1999)]:

The expected value and the variance according to the nonresponse mechanism are indicated by the subscript \(\eta \). The expected value and the variance with respect to the sampling are indicated by the subscript s.

Thus, the variance component \(V_1\) includes \(V_s({\hat{\theta }}_I|\mathbf{z} )\), which is the sampling variance of the imputed estimator \({\hat{\theta }}_I\), given the response vector \(\mathbf{z} \). According to Haziza (2010), calculating \(V_s({\hat{\theta }}_I|\mathbf{z} )\) is enough to estimate the component \(V_1\). Variance component \(V_2\) results from the treatment of the nonresponse mechanism as a random process. The variance component arises in a simulation study when a new response vector is generated in each simulation run (which we call in the following sections a varying response vector) by using a random model to reproduce the nonresponse mechanism. The variance component does not arise when the same response vector is used in each simulation run. Such a response vector may be called fixed (Mashreghi et al. 2014; Bruch 2019).

Referring to Mashreghi et al. (2014), the variance component \(V_2\) is only significant if the overall sampling fraction f is large. If the overall sampling fraction is negligible, the variance component \(V_2\) also is negligible, and it is sufficient to estimate the variance component \(V_1\) to cover the whole variance of the imputed estimator.

When applying a multistage sampling design, the variance component \(V_2\) often may be small or negligible. High survey costs and low survey budgets prevent a large overall sample size in relation to the population size. Multistage sampling designs are often used with respect to population surveys, for example, when the ultimate sampling units such as households or persons cannot drawn directly (i.e. when lists such as central population registers are not available, see Lynn et al. 2007; Häder 2004), when other constraints of the sampling frames such as the assignment of interviewers (European Social Survey 2018) are presented or due to geographic decisions with regard to cost-effectiveness are made (comparatively lower costs of sampling superordinate units, Lohr 1999; European Social Survey 2018). Thus, a comparatively small sample of USUs is faced with a large population. As a result, the overall sample fraction f may be rather small whereas this does not preclude that the sampling fraction is not large at a particular sampling stage. The sampling fractions at the different stages have an important influence on the variance estimation via the rescaling of Preston (2009), as seen in the previous section.

However, since the overall fraction may often be rather small in multistage sampling designs in practice, it may therefore often be enough to derive a valid variance estimator of \(V_1\) to obtain a valid variance estimator for the whole variance \(V({\hat{\theta }}_I)\). As a result, this component is of central interest in this paper. However, in the simulation study in Sect. 4, we also examine to what extent it is sufficient to estimate \(V_1\) to cover the whole variance or whether any parameter constellations exist that additionally require the estimation of \(V_2\).

1.3.1 Reimputation of imputed values

With respect to the explanations of the previous section, deriving a variance estimator for imputed estimator \({\hat{\theta }}_I\) in a multistage sampling design especially requires a valid variance estimator for variance component \(V_1\) and it is enough to estimate \(V_s({\hat{\theta }}_I|\mathbf{z} )\) to cover variance component \(V_1\) (Mashreghi et al. 2014; Haziza 2010). Thus, we intend to find a variance estimator that considers the sampling variability of the imputed estimator, given the response vector \(\mathbf{z} \). By doing so, we receive a valid variance estimator for the first term \(V_1\) in (7) (Mashreghi et al. 2014).

With respect to Mashreghi et al. (2014), one possibility for deriving a valid variance estimator for term \(V_s({\hat{\theta }}_I|\mathbf{z} )\) is to apply bootstrap procedures by taking paired subsamples from the elements in \(\mathbf{y} _I\) and their corresponding response status \(\mathbf{z} \) of the original sample to which the so-called reimputation (Shao 2002; Shao and Sitter 1996; Rao and Shao 1992) is applied. This approach considers the imputation process in a resampling method by reimputing the imputed values that are drawn for the subsample on the basis of the subsample’s observed values using the same imputation procedure—especially the same imputation model—as in the original sample. The reimputation of imputed values is appropriate for deterministic imputation procedures. However, it may lead to an overestimation of the variance if the imputation procedure includes random components, and if the subsample size is not the same size as the original sample (Shao 2002). In such cases, rather the adjustment of imputed values as described in Shao (2002) should be applied but this procedure requires a metric variable to be imputed.Footnote 5 For other imputation methods that do not allow the application of the reimputation or the adjustment of imputed values, other adjustments may be applied. However, in the following, we assume that an imputation method is applied that allows for a consideration of the imputation process in the rescaling bootstrap via reimputation or the adjustment of imputed values.

It is also possible to use the rescaling bootstrap of Chipperfield and Preston (2007) with the extension to multistage sampling designs proposed by Preston (2009) and to make use of subsampling process of the rescaling bootstrap to derive a variance estimator for \(V_s({\hat{\theta }}_I|\mathbf{z} )\). The important concern is how to apply the reimputation for the rescaling bootstrap. Usually, studies using the reimputation of imputed values, such as Shao and Sitter (1996), focus on classical resampling methods, in which only elements drawn for the subsample are considered in the computation of the point estimator of the resampling method. However, as described in the Sect. 1.2, the rescaling bootstrap includes all elements of the original sample in the computation of its point estimators. This is an important point, particularly, when elements not drawn for the subsample affect the variance estimation due to their influence on the rescaling bootstrap point estimators. Thus, all elements of the original sample need to be considered in the reimputation process (Bruch 2016, 2019). In the next section, we describe a modification of the rescaling bootstrap that includes the reimputation process for a single stage design and that can be used as a basis to modify the rescaling bootstrap in a multistage sampling with respect to imputation.

1.3.2 Rescaling bootstrap in a single-stage sampling design and imputed values

A modification of the rescaling bootstrap with respect to imputed values for the single-stage sampling design simple random sample is proposed by Bruch (2016, 2019). To derive this modification, different possibilities to apply the reimputation are evaluated that result in different requirements that have to be met.

One possibility is to conduct the reimputation regarding all the imputed values of the whole sample, without distinguishing between the imputed values drawn for the subsample and the imputed values not drawn for the subsample. By doing so, all the elements of the original sample will be considered in the reimputation process. However, in the case of a deterministic imputation procedure and when the reimputation is applied on the basis of the whole sample, the reimputed values are identical to the imputed values of the original sample. Thus, no variation can occur due to the reimputation process when computing the variance over all rescaling bootstrap point estimators (Bruch 2016). In this case, the estimator matches the variance estimator that treats the imputed values as actually observed. With respect to Shao and Sitter (1996), since this estimator does not consider the effect of missing values and imputation, the variance may be heavily underestimated (Bruch 2019).

Thus, Bruch (2016, 2019) rather proposes to reimpute the imputed values of the original sample drawn for the subsample and the imputed values of the original sample not drawn for the subsample separately. The necessity for such a procedure results from the comparison with the rescaling bootstrap for which the reimputation is done only for the imputed values drawn for the subsample and not for the imputed values not drawn for the subsample. Bruch (2016, 2019) shows that neglecting the reimputation of the imputed values not drawn for the subsample leads to an unintentional variance component, which results in the overestimation of the variance. The unintentional variance only occurs for increasing sampling fractions in a single-stage sampling design, since elements not drawn for the subsample only have a small impact when sampling fractions are small. We give a more detailed explanation for the appearance of this component in Appendix A.2.

However, the procedure of reimputing separately imputed values drawn and not drawn for the subsample only pertains to a single-stage sampling design. The question is how to apply the method in a multistage sampling design, when more stages of the sampling design must be considered. Thus, in the next section, we propose two modifications of the rescaling bootstrap to estimate the variance of an imputetd estimator in a multistage design. We evaluate these modifications in a Monte Carlo simulation study. We describe this study in Sect. 3, and present the results in Sect. 4. We discuss and summarize the results in Sect. 5 and provide an outlook for future research.

2 Rescaling bootstrap under nonresponse and imputation

The fundamentals of these approaches and this research are developed in the PhD thesis of Bruch (2016) with respect to a two-stage sampling design. However, in practice, multistage sampling designs often include more than two stages. Thus, in this paper, we apply the modifications in more realistic situations with more stages. The proposed methods are basically described for three-stage sampling designs, but they can be modified for sampling designs with more than three stages.

In the next section, we describe the sampling design and the estimator to derive the proposed modification and that build the basis for the Monte Carlo simulation study. Afterwards, we derive the proposed modifications as a combination of the rescaling bootstrap of Preston (2009) and variance estimation methods that consider the imputation. A further derivation and explanation of our proposed modifications to be a valid variance estimator, particularly under which conditions, is given in the Appendix A.3. Our modifications aim to estimate the variance component \(V_s({\hat{\theta }}_I|\mathbf{z} )\) and thus aim to cover variance component \(V_1\) in (7). As written in Sect. 1.3, it may often be enough to estimate only variance component \(V_1\) in practice when multistage sampling designs are applied. However, in Sect. 2.3 we discuss shortly the case when \(V_2\) has a strong contribution.

2.1 Imputed estimation for a multistage sampling design

To derive our modifications of the rescaling bootstrap and for their evaluation in the simulation study, we assume that a sample is drawn from the population U using a multistage sampling design with three stages. We use the notation introduced in Sect. 1. The sample sizes at the different stages are described by l, \(m_d\) and \(n_{dq}\). The corresponding population sizes are indicated by L, \(M_d\) and \(N_{dq}\). Overall, the population consists of N elements, and the complete sample S is size n.

The complete design weights \(w_{dqi}\) are computed by:

which are combined to the vector \(\mathbf{w} \).

The sampling fraction \(f_1\) at the first stage, the sampling fraction \(f_{2d}\) in a PSU at the second stage, and the sampling fraction \(f_{3dq}\) in a SSU at the third stage are defined by \(f_1 = l/L\), \(f_{2d} = m_d/M_d\) and \(f_{3dq} = n_{dq}/N_{dq}\). For simplicity, in the first part of the simulation study in Sect. 3.1.1, we assume that the sampling fraction \(f_{2d}\) is equal in each PSU with \(f_{2d}=f_2\), and the sampling fraction \(f_{3dq}\) is equal in each SSU with \(f_{3dq}=f_3\). Thus, we can use \(f_2\) and \(f_3\) to indicate unambiguously the sampling fraction at the corresponding stage and to define unambiguously the scenarios in the first part of the simulation study. However, with respect to the proposed methods, it is not necessary that the sampling fractions \(f_{2d}\) in each PSU and \(f_{3dq}\) in each SSU are all equal. Thus, the proposed methods can be applied in the same way for different sampling fractions \(f_{2d}\) in the PSUs and different sampling fractions \(f_{3dq}\) in the SSUs. In the second part of the simulation study in Sect. 3.2, the sampling fractions \(f_{2d}\) and \(f_{3dq}\) are not equal.

The variable of interest is described by y with sample realisation \(y_i\) for element i with \(i = 1 \ldots n\) and that are combined to vector \(\mathbf{y} _s\).

We assume that this variable consists of missing values that only appear at the last stage with respect to certain USUs. The missing values divide the sample S into a subset of elements with observed values \(i \in R\) and a subset of elements with missing values defined by \(i \in G\) with \(S = R \, {{\dot{\cup }}} \, G\). The size of R is r, and the size of G is g, where \(n=r+g\). We assume that the dataset contains a response indicator \(\mathbf{z} \), which takes the value 1 if an element responds and 0 if not. We use a certain single imputation method to impute missing values, and our imputation model includes two auxiliary variables \(x_1\) and \(x_2\) with realisations \(x_{1i}\) and \(x_{2i}\) for element i. We assume that the auxiliary variables have no missing values. After the imputation of sample nonresponse, it results the vector \(\mathbf{y} _I\) with respect to the variable of interest y that contains imputed as well as observed values. In detail, the values \(y_{I,i}\) of \(\mathbf{y} _I\) have the following manifestations:

where \(\tilde{y}_{i}\) is the imputed value for a missing value \( \forall i \in G\).

The statistic of interest is indicated by \(\theta \). This quantity is estimated by using the estimator \({\hat{\theta }}_I\) on the basis of imputed vector \(\mathbf{y} _I\) and including the design weights \(w_{dqi}\) from (8) with vector \(\mathbf{w} \):

where T is the function for the computation of the statistic of interest.

In this paper, we focus on the total value \(\theta = \tau \). The total value \(\tau \) is estimated on the basis of the imputed data and under the multistage sampling design by:

2.2 Rescaling bootstrap under imputations for a multistage design

When applying the rescaling bootstrap in a multistage design under imputations, three requirements have to be met. The first two requirements are a result of the single stage application described in Sect. 1.3.2, while the third requirement is essential for the adjustment to a multistage design.

-

1.

Not just a subset of the original sample, but all of the imputed values have to be reimputed to avoid the unintentional variance component described in Sect. 1.3.2. This variance component arises when not reimputed imputed values have an influence on the variance estimation.

-

2.

For the reimputation of imputed values, elements drawn and not drawn have to be separated. Otherwise, in case of deterministic imputation procedures, the reimputed values are the same as the imputed values of the original sample. Hence, the imputation process is not considered in the variance estimation.

-

3.

The variance estimator should capture \(V_s({\hat{\theta }}_I|\mathbf{z} )\), the sampling variance of the imputed estimator, given the response vector \(\mathbf{z} \). As described in Sect. 1.2, to capture the variance of an estimator in a multistage sampling design, all stages of the sampling design have to be considered in the variance estimation (at least when the parameter constellations does not allow to consider only the first stage). To ensure the correct estimation of the sampling variance in the multistage design with the rescaling bootstrap, subsampling from the original sample is applied independently at each stage of the sampling design. However, \(V_s({\hat{\theta }}_I|\mathbf{z} )\) concerns the sampling variance of the imputed estimator. Even when the imputations are done only with respect to the missing values at the last stage, the imputations affect the variance of the imputed estimator at all stages of the sampling design. This is a consequence of the imputed values being included in the computation of total values or mean values of the PSUs and the SSUs, which determine the variance at the corresponding stage. Thus, to capture \(V_s({\hat{\theta }}_I|\mathbf{z} )\), the reimputation of imputed values has to consider all stages of the sampling design and has to be conducted with respect to the subsampling process of the rescaling bootstrap.

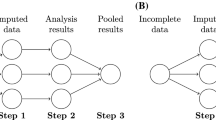

To derive a modification of the rescaling bootstrap that takes the three requirements into account, we present the subsampling process of the rescaling bootstrap that is described in Sect. 1.2 in another form as illustrated in Table 1.

The table shows the possible combinations for categorizing USUs with respect to the subsample process. A value of 1 in the last column indicates that the category includes the USUs that are drawn for the subsample, and a value of 0 means that the category includes the USUs that are not drawn for the subsample. A value of 1 in the third column indicates that the category includes the USUs whose superordinate SSU is drawn for the subsample, and a value of 0 means that the category includes the USUs whose superordinate SSU is not drawn for the subsample. A value of 1 in the second column specifies that the category consists of the USUs whose superordinate PSU is drawn for the subsample, and a value of 0 means that the category consists of the USUs whose superordinate PSU is not drawn for the subsample. The category C2, for example, includes the USUs whose superordinate PSU is drawn for the subsample, but these USUs and their superordinate SSUs are not drawn for the subsample. In contrast, category C8 includes all the USUs that are drawn for the subsample, as well as their corresponding PSU and SSU. By doing so, all the USUs of the original sample can be divided with respect to these eight categories of subsampling process. The categories are mutually exclusive, and each USU can be assigned only to one of the categories.

This division can be used as a basis for the reimputation to take all three requirements into account: The reimputation of all imputed values, the differentiation between elements drawn for the subsample and elements not drawn for the subsample, as well as to consider the different stages of the sampling design and the subsampling process respectively. We propose two different modifications of the rescaling bootstrap that use this division as a basis for the reimputation. In the first modification, the reimputation is done separately in all eight categories (with corresponding estimator Resc_Mod1, see Table 2), whereas the second modification (with corresponding estimator Resc_Mod2, see Table 2) combines categories with the same weight adjustments of the original design weights with respect to the formulas (1), (2), and (3). For example, the categories C3, C5, and C7 lead to same weight adjustmentsFootnote 6 as category C1, since even if the USUs or the SSUs are drawn for the subsample, their corresponding PSU is not drawn for the subsample.Footnote 7 In this case, \(\delta _{d}\) in (1), (2), and (3) takes the value 0 that results in same weight adjustments. Furthermore, the USUs in the categories C2 and C6 have the same weight adjustments, but these weight adjustments are different in comparison to the weight adjustments in the categories C1, C3, C5, and C7.

Both procedures have their advantages. When a reimputation is done separately in all eight categories, they are more equal in size, which may make the variance estimation more stable. Furthermore, this procedure makes more use of the subsampling process of the rescaling bootstrap. The second procedure, in which categories with the same weight adjustment are combined, has the advantage that it makes more use of the weight adjustment process of the rescaling bootstrap.

As a consequence, the two proposed modifications are described as follows, where they differ in Step 2:

-

1.

First, subsamples of size \(l^{*} = \lfloor l/2 \rfloor , m_{d}^{*} = \lfloor m_{d}/2 \rfloor \), and \(n_{dq}^{*} = \lfloor n_{dq}/2 \rfloor \) are drawn independently at each stage of the sampling design in each run b. This procedure corresponds to the rescaling bootstrap of Preston (2009) that is based on complete observations.

-

2.

To conduct the reimputation, all USUs are assigned to categories that are formed in each run b. For modification 1, the eight categories in Table 1 are built to include all possibilities to categorize the USUs with respect to the elements drawn for the subsample and the elements not drawn for the subsample at each stage of the sample design. For modification 2, the categories in Table 1 with the same weight adjustments are combined to one category. As explained, this concerns categories C1, C3, C5, and C7 as well as C2 and C6. Four categories remain—the two combined categories and the categories C4 and C8. For both modifications, the categories are formed again in each run b.

-

3.

The reimputation is conducted separately in each category c. In each run b, the imputed values of the original sample in each category c with \( i \in G_{bc}\) are reimputed on the basis of the observed values in each category c with \(i \in R_{bc}\), which results in the vector \(\mathbf{y} ^{*}_{reimp}\) that contains reimputed imputed values as well as observed values.Footnote 8

-

4.

The design weights \(w_{dqi}^{*}\) are computed in each run b with respect to (4) on the basis of the adjusted weights at each stage regarding (1), (2), (3), and as described by Preston (2009). The adjusted weights are combined to the vector \(\mathbf{w} ^{*}\).

-

5.

The point estimator of the rescaling bootstrap is calculated in each run b by

$$\begin{aligned} {\hat{\theta }}_{I}^{*}=T(\mathbf{y} ^{*}_{reimp}, \mathbf{w} ^{*}). \end{aligned}$$ -

6.

The steps 1 until 5 are repeated B times. Thus, the estimators \({\hat{\theta }}_{I,1}^{*} \ldots {\hat{\theta }}_{I,b}^{*} \ldots {\hat{\theta }}_{I,B}^{*}\) result and the variance is estimated with respect to (6) by

$$\begin{aligned} \hat{\text {V}}_{resc}\left( {\hat{\theta }}_{I}\right) =\dfrac{1}{B - 1} \cdot \sum _{b = 1}^{B}\left( {\hat{\theta }}_{I,b}^{*}-\dfrac{1}{B} \cdot \sum _{v=1}^{B}{\hat{\theta }}_{I,v}^{*}\right) ^2. \end{aligned}$$(10)

We include both modifications in the simulation study because of their different advantages and to evaluate their performance. The described procedure can be extended easily to sampling designs with more than three stages. In this case, the number of categories in Table 1 increases to \(2^{\phi }\), where \(\phi \) is the number of stages of the sampling design. Weight adjustments also can be extended easily to more stages by using the formula in Preston (2009, p. 229).

2.3 Procedures when \(V_2\) has a strong contribution

Assuming a deterministic imputation procedure and a variance decomposition as shown by (7), both modifications cover the variance component \(V_1\) and are valid variance estimators for the overall variance, if component \(V_2\) is negligible. Variance component \(V_2\) cannot be covered because this component includes the variance resulting from the treatment of the response mechanism as a random process and, thus, is based on the variance component related to a varying response vector. However, the rescaling bootstrap treats the response vector as fixed (for treatment of response vectors in resampling methods, see Mashreghi et al. 2014). This issue is due to the fact that the response vector, and thus nonresponse, is not generated in each run b of the rescaling bootstrap. The same response vector is used, and respondents and nonrespondents maintain their response status in each run b (Mashreghi et al. 2014). If the variance component \(V_2\) makes a significant contribution to the overall variance, an estimator is required that additionally covers this variance component. In this case, the two proposed modifications of the rescaling bootstrap may be extended by the procedures described in Mashreghi et al. (2014). For example, a new response vector can be generated for each run b by using a binomial distribution. As for the resampling methods described in Mashreghi et al. (2014), this procedure also may require adjustments of the weighting formulas in (1), (2), and (3) of the Preston’s rescaling bootstrap (Preston 2009). Another procedure for considering the variance component \(V_2\) is the usage of correction factors as described in Mashreghi et al. (2014) but modifications of these correction factors regarding multistage sampling designs may be necessary.

However, as described previously, in many situations, the overall sampling fraction f in multistage sampling designs may not be large enough in order for \(V_2\) to have a strong influence on the overall variance. Thus, the focus in the simulation study in Sect. 4 is more on the examination under which parameter constellations, particularly, the overall sampling fraction, the variance component \(V_2\) makes a significant contribution to the overall variance.

3 Design of the simulation study

We use a Monte Carlo simulation study to evaluate the quality of the proposed modifications of the rescaling bootstrap. According to Enderle et al. (2013), comparisons that are made on the basis of Monte Carlo simulation studies ensures an evaluation of such methods with respect to their actual application. This is a major point of this paper, because we aim to compare the proposed methods with variance estimation methods in which important components of the practical application such as the sampling process, the subsampling process or the imputation process are not considered. The effects of the non-consideration of such important components can be examined directly by using the corresponding distributions of the estimators. Furthermore, the Monte Carlo simulation study ensures an analysis of the effects of different parameter constellations. Within the scope of this paper, this is, for example, important with respect to the examination of possible effects of the variance component \(V_2\) on the variance estimation dependent on the overall sampling fraction.

We implement the simulation study by using the statistical software R (R Core Team 2021), and we describe this study in the following sections.

The simulation study consists of two parts. To evaluate the modifications in their basics, we draw a population from a multivariate normal distribution in the first part. This population is described in Sect. 3.1.1. In the second part of the simulation study in Sect. 3.2, we consider a more realistic dataset, to analyse the modifications under more complex conditions.

3.1 Multivariate normal distribution

3.1.1 Population, sampling design, and scenarios

The population generation process is similar to the population generation process used by Bruch (2016, 2019). In contrast to these studies, the population generation process in the simulation study of this paper is not related to a one or two stage sampling design but to a three-stage random sampling design.

First, we create the structure of the population with a size of \(N=10{,}000{,}000\) by dividing the population in 500 PSUs, 80 SSUs per PSU, and 250 USUs per SSU. This was done by a simple replication of the corresponding IDs of PSUs and SSUs according to their respective sizes.

Given this predefined structure, we draw the variable of interest y and auxiliary variables \(x_1\) and \(x_2\) from a multivariate normal distribution described by

where parameters \({\mu }\) and \({\Sigma }\) are defined by

and

We apply the function rmvnorm from the R package mvtnorm (Genz et al. 2014; Genz and Bretz 2009) to draw the universe from the multivariate normal distribution. As a result of this random drawing process to generate y, \(x_1\) and \(x_2\) given the predefined structure of PSUs and SSUs, these units are heterogeneous in this scenario.

We use the multivariate normal distribution for two reasons. First, the main aim of the paper is to propose the application of variance estimation methods when data is collected using multistage sampling designs and that in this collected data missing values appear that are compensated by using imputation. The proposed resampling method should be examined with regard to this main aim in this part of the simulation study, and the influences resulting, for example, from highly skewed variable distributions should be kept at a minimum. Second, the use of a multivariate normal distribution guarantees that a large proportion of the variance of the estimator appear at the last stage of the sampling design. In Särndal et al. (1992), Lohr (1999), Bruch et al. (2011), Bruch (2016) and in Sect. 1.2 it is described that with variance estimation procedures that only include the first stage of the sampling design, more than the variance of the estimator at the first stage is covered. In case the PSUs and SSUs are homogeneous, it may occur that most of the overall variance could be covered with the first stage’s variance estimator. However, in the simulation study, we aim to analyse, if the proposed modifications are able to estimate the variance correctly when also a large proportion of the variance occurs at the last stage and thus all stages of the sampling design may have to be considered. Thus, the PSUs and SSUs should be heterogeneous in this part of the simulation study. However, the PSU and SSU in the first part of the simulation study may be too heterogeneous for some applications. Thus, in the second part of the simulation study, we also consider a population with a less heterogeneous structure that may be more realistic for practical applications.

The simulation study includes different scenarios that differ with respect to the sampling fractions and the generation process of the response vector. We use the same response vector in each simulation run in the first two scenarios. The variance component \(V_2\) of (7) does not appear in this case. The aim is to show the extent the modifications of the rescaling bootstrap are able to estimate variance component \(V_1\). In Sect. 1.3, we described that this component may determine the whole variance of an imputed estimator in multistage sampling designs in many situations. Thus, it is of particular interest to find a valid variance estimator for this variance component, which is accomplished by comparing the two scenarios in the simulation study with different sampling fractions.

Sampling fractions are small in the first scenario, and 50 PSUs are drawn at the first stage (\(f_1=0.1\)); 8 SSUs in each drawn PSU are selected at the second stage (\(f_2=0.1\)) and 10 USUs in each drawn SSU are selected at the third stage (\(f_3=0.04\)). The overall sample size is \(n = 4000\) \((f=0.0004)\).

We examine the impact of large sampling fractions in the second scenario, particularly, the impact of missing reimputation of certain elements by which the influence increases with increasing sampling fractions. Thus, 300 PSUs are drawn at the first stage (\(f_1=0.6\)), 40 SSUs in each drawn PSU at the second stage (\(f_2=0.5\)), and 125 USUs in each drawn SSU at the third stage (\(f_3=0.5\)). The overall sample size is \(n=1{,}500{,}000\) \((f=0.15)\).

A new response vector is generated in each simulation run in Scenario 3. Thus, variance component \(V_2\) also may occur. In this scenario, the aim is to examine under which conditions this component has to be considered in a multistage sampling design and up to which point the proposed methods may still be a valid instrument to estimate the whole variance. As mentioned previously, we assume that the variance component \(V_2\) may be negligible in multistage sampling designs in many situations. However, the component is not negligible in the case of a large overall sampling fraction f. Thus, in this scenario, we choose sampling fractions that are rather high with \(f_1 = 0.25, f_2 = 0.25\), and \(f_3 \approx 0.25\) to obtain a rather high overall sampling fraction f. Hence, 125 PSUs are drawn at the first stage, 20 SSUs in each drawn PSU are selected at the second stage, and 63 USUs in each drawn SSU are selected at the third stage. The overall sample size is \(n = 157{,}500\). Also, the overall sampling fraction \(f \approx 0.016\) may be rather high compared to population surveys that use multistage sampling designs in practice but may not large enough for variance component \(V_2\) to have a large influence. Hence, to examine from which sampling fraction onwards the variance component \(V_2\) has an influence on the overall variance, we increase the sampling fractions up to \(f_1 = 0.6, f_2 = 0.5\), and \(f_3 = 0.5\) with overall sampling fraction \(f=0.15\).

3.1.2 Nonresponse generation

Nonresponse is generated in the population before sampling (see, for example, Fay 1991 or Shao and Steel 1999) and only for the USUs in our simulation study. The nonresponse is implemented by the procedure described and used in Enderle et al. (2013).Footnote 9 Following this procedure, the response vector is generated by using a logistic model and depends on the auxiliary variables \(x_1\) and \(x_2\). Thus, the missing mechanism is missing at random.

First, the response propensities \(p_{i}\) for each element in the population \(i \in U\) are computed by

where \({\tilde{\alpha }}\), \({\tilde{\beta }}\), and \({\tilde{\gamma }}\) are model parameters that determine the response mechanism. Second, random numbers \(u_i\) are selected from a uniform distribution U(0, 1) for each element i. The response vector \(\mathbf{z} \) for an element i takes the value 1 if \(p_i \ge u_i\), which indicates that element i has an observed value regarding y. The response vector \(\mathbf{z} \) takes the value 0 for an element i if \(p_i < u_i\), which indicates a missing value regarding y.

However, the main aim of this paper is to analyse the modifications of the rescaling bootstrap under nonresponse and imputation. Thus, the nonresponse rate has to be large enough. Also, the correlation of the auxiliary variables to the response vector should not be too small to ensure a missing-at-random mechanism. Thus, we set the parameters to generate the response vector to \({\tilde{\alpha }} = -20\), \({\tilde{\beta }}= 0.3\), and \({\tilde{\gamma }}= 0.07\), which leads to a nonresponse rate of about 43% for each scenario. The correlation of \(x_1\) to the response vector \(\mathbf{z} \) is approximately 0.68, and the correlation of \(x_2\) to the response vector is approximately 0.17.

We apply deterministic regression imputation to compensate for missing values. We apply a regression model in the linear form. The independent variables are the auxiliary variables \(x_1\) and \(x_2\) and the variable to be imputed y is the dependent variable. The regression imputation is conducted unweighted and all observed units are weighted equally when training the regression model. As a result of the population and sampling design, the ultimate sampling units have the same design weights so that it is not necessary to include them. The corresponding R-Code for the regression imputation is based on the R-code used by Davison and Sardy (2004).

3.2 AMELIA

The proposed procedures should also be evaluated under more realistic conditions. Thus, in a second part, we conduct the simulation study on the synthetic dataset AMELIA (Burgard et al. 2017, 2020; Kolb 2012; Alfons et al. 2011) that is based on the EU-SILC dataset from 2005. Also, the previously mentioned studies with respect to the rescaling bootstrap of Bruch (2016, 2019) used this dataset in their simulation studies.

The analyses are done at the person level and the population includes 10,012,600 persons. The variable of interest is the variable INC of the AMELIA dataset, that is the sum of all income components of a person. As a result, the variable is highly skewed and poses further challenges to the variance estimation. From this population, we draw samples with a size of 30,000 persons using a multistage sampling design. As PSUs we use the districts (variable DIS) whereby we assign districts 1 and 2 to district 3 due to their small population size. The total dataset therefore contains 38 PSUs in total. As SSUs, we use the cities and communities respectively (variable CIT). We assign small communities to larger communities when their population size is below 500.

To realize a sample size of 30,000, we select 10 PSUs, 10 SSUs in each PSU drawn, and 300 USUs in each SSU drawn. To generated nonresponse, we apply again the procedure described in Enderle et al. (2013). To model the imputation process, we use the variables BAS that describe the work status and the variable HHS that indicates the household size. We categorize the latter so that both variables consist of four categories. Since both variables are categorical, instead of (11), the propensities are computed by

where the parameters \({\tilde{\beta }}_{BAS, \nu [i]}\) and \({\tilde{\gamma }}_{CIT, o[i]}\) depend on the category \(\nu \) of variable BAS and on the category o of variable CIT to which an element i belongs. \({\tilde{\beta }}_{BAS, \nu [i]}\) results in a value of \(-3\) for \(\nu =1\), 1 for \(\nu =2\), 1 for \(\nu =3\) and 0 for \(\nu =4\). \({\tilde{\gamma }}_{CIT, o[i]}\) has the values \(-3\) for \(o=1\), 1 for \(o=2\), 0 for \(o=3\) and \(-2\) for \(o=4\).The parameter \({\tilde{\alpha }}\) is 2.5 in this scenario. The following steps to generate nonresponse are the same as for the previous scenarios. The nonresponse rate is also comparable with previous scenarios with a value of \(43\%\). In this part of the simulation study, we use a varying response vector.

In practice, often a stochastic imputation procedure is used. Thus, in this scenario, we examine the proposed variance estimation procedures for a stochastic imputation procedure, and we apply hot-deck-random imputation.Footnote 10 First, we construct imputation classes over the cross classification of variable BAS and CIT. Imputation classes that are too small are assigned to larger classes. Afterwards, for each missing value in an imputation class, a donor is drawn randomly from the observed value of the same imputation class. As a result of the stochastic imputation procedure, we apply the adjustment of imputed values as described in Shao (2002) instead of the reimputation. Due to the small overall sampling fraction, we do not consider any of the corrections described in Mashreghi et al. (2014) in this scenario.

3.3 Included estimator and benchmark

As shown in Table 2, we consider the following modifications of the rescaling bootstrap in the simulation study.

The modifications of the rescaling bootstrap Resc_Mod1 and Resc_Mod2 are the proposed methods of this paper. These are the only modifications that consider the three requirements to modify the rescaling bootstrap in the multistage design with respect to imputation that are described in Sect. 2.2. The other modifications of the rescaling bootstrap that are included in the simulation study do neglect at least one of the requirements. The aim of including these modifications of the rescaling bootstraps is to show the effect of important components not being considered or applied adequately, and that it is important to take all three requirements into account when applying the rescaling bootstrap in a multistage sampling design.

As a result, we include the estimator Resc_whole that applies the reimputation on the basis of the whole sample without distinction between the elements drawn and not drawn for the subsample and thus does not consider, for example, the second requirement. In case of a deterministic imputation method, this estimator should lead to the same results as the estimator that treats imputed values as actually observed. This issue is examined by including the estimator Resc_obs. Both estimators do not consider the imputation process adequately.

The estimators Resc_stage1, Resc_stage2, and Resc_stage3 do not consider certain stages of the sampling design and the subsampling process of the rescaling bootstrap in the reimputation process and thus the third requirement. The reimputation of these estimators only considers one stage of the sampling design. For example, the reimputation in the estimator Resc_stage1 is done separately only for the imputed values whose corresponding PSU is drawn for the subsample (\(\delta _{d}=1\)) and for the imputed values whose corresponding PSU is not drawn for the subsample (\(\delta _{d}=0\)). Stages 2 and 3 of the sampling design are neglected. The reimputation for the estimator Resc_stage2 is done separately only for the imputed values whose corresponding SSU is drawn for the subsample (\(\delta _{dq}=1\)) and for the imputed values whose corresponding SSU is not drawn for subsample (\(\delta _{dq}=0\)). Thus, stages 1 and 3 of the sampling design are neglected. The estimator Resc_stage3 does not consider the stages 1 and 2, and the reimputation is done separately only with respect to the imputed values drawn at the third stage (\(\delta _{dqi}=1\)) and the imputed values not drawn at the third stage (\(\delta _{dqi}=0\)). Instead of the eight categories in Table 1, we only have two categories in which the reimputation is applied within each estimator.

Similar to study of Bruch (2019) with respect to a single stage design, we also examine the need to reimpute the imputed values in a multistage design to avoid the unintentional variance component dependent on the sampling fractions, and the influence of not reimputed imputed missing values. This issue is related to the first requirement in Sect. 2.2 and is examined by including the estimator Resc_only_Sub. In contrast to the single stage design, statements regarding the need to reimpute imputed values dependent on the sampling fractions are not straightforward. That is due to more parameters having an impact on the weight adjustment, while the classification of elements being drawn (or not drawn) concerns the different stages.

With respect to the first point, we can only consider a limited number of parameter constellations in the simulation study, particularly, a scenario with small and a scenario with large sampling fractions. Thus, the statements focus mainly on these constellations. Regarding the second point, the classification of elements, we examine the effect of a missing reimputation dependent on the sampling fractions with respect to certain categories of Table 1 rather than with respect to a classification of elements drawn and not drawn for the subsample. Thus, for the estimator Resc_only_Sub, we do not reimpute the imputed values in the categories C1, C3, C5, and C7. We chose these categories because their elements receive same weight adjustments as described in Sect. 2.2. Thus, the effect of smaller and larger sampling fractions should be the same with respect to the influence of the elements of the categories C1, C3, C5, and C7 on the variance estimation. Particularly, the influence of the elements of these categories is very small when sampling fractions are small and larger when sampling fractions are large. This is shown in the Appendix A.1 and in Table 4, in which we compute the relative influence of USUs of a certain category c. The relative influence of categories C1, C3, C5, and C7 is altogether 4% in the scenario with small sampling fractions and 20% in the scenario with large sampling fractions. As a consequence, we expect a larger unintentional variance component and thus overestimation of the variance in the scenario with larger sampling fractions with estimator Resc_only_Sub.

In the first part of the simulation study, all estimators presented in Table 2 are considered. In this part of the simulation study, those estimators should perform well that fulfil the basic requirements for a variance estimation under imputation in a multistage sampling design, particularly, the three requirements defined in Sect. 2.2. As described in Sect. 3.1.1 using a multivariate normal distribution to create the population ensures, that all stages of the sampling design may have to considered and that other influences (such as the skewed variable of interest) that confound the effects that should be analysed are avoided. As a result, this scenario allows to focus on the analysis of the basic requirements of the variance estimation under imputation and is rather simple with respect to other influences. Thus, estimators that do not perform well in this scenario do not fulfil the requirements (as used in this study) to obtain a valid variance estimation under imputation in a multistage sampling design. For that reason, these estimators are not considered any further in the second part of the simulation study.

As a benchmark, we use the Monte Carlo variance, which is computed on the basis of the total value point estimators in (9) of 50,000 samples. This large number of samples for the benchmark is necessary, since the simulation study applied by Bruch (2016) shows that the Monte Carlo variance can be very unstable when the number of samples is not large. However, the variance estimation that applies resampling methods may converge much faster if the number of subsamples is large enough (Bruch 2019). Thus, and as a result of computation reasons, in the scenarios, that are based on the multinormal distributions, we computed the resampling methods on the basis of 125 subsamples and only for 1000 samples. In the scenario in which we use the AMELIA dataset the convergence was much slower due to the highly skewed variable of interest. For that reason, we increase the number of samples to 20,000.

4 Results

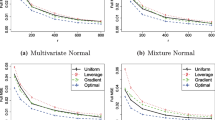

We present the results of the simulation study by using the relative bias. The results are visualized by boxplots that are produced by the R package lattice (Sarkar 2008). The black point in each boxplot indicates the mean value of the estimations of the different samples for a certain estimator. The red line across all boxplots indicates the Monte Carlo variance, whose relative bias is zero due to it being the benchmark.

4.1 Multivariate normal distribution

4.1.1 Scenario 1: Small sampling fractions, fixed response vector

Figure 1 presents the results for the small sampling fractions at all stages of the sampling design and a fixed response vector. As expected, the rescaling bootstrap that conducts the reimputation of the imputed values on the basis of the whole sample without distinction between the elements drawn and not drawn for the subsample (Resc_whole) leads to the same results as the rescaling bootstrap that treats the imputed value as actually observed (Resc_obs). Applying the reimputation of the imputed values on the basis of the whole sample without distinction between the elements drawn and not drawn for the subsample leads to the same reimputed value as the imputed value in the original sample when the imputation procedure is deterministic. In this case, this approach matches the rescaling bootstrap that treats the imputed value of the original sample as actually observed. Both estimators lead to a severe underestimation of the variance because they do not consider the effect of missing values and imputation. Particularly, it shows the need to meet requirement 2 in Sect. 2.2.

Furthermore, the figure shows that the three modifications of the rescaling bootstrap that neglect certain stages of the sampling design in the reimputation process (Resc_stage1, Resc_stage2 and Resc_stage3) underestimate the variance. These results strengthen the importance to meet requirement 3 in Sect. 2.2. However, the underestimation of the rescaling bootstrap that only considers the first stage in the reimputation process is not as large as the underestimation of the other two estimators.

As expected, the estimator that does not reimpute the imputed values in the categories C1, C3, C5, and C7 (Resc_only_Sub) leads only to a small overestimation of the variance. Elements of these categories have a minimal influence if the sampling fractions at the several stages are small as seen in Table 4 in the Appendix A.1. Thus, the unintentional variance component is small that leads to a small overestimation of the variance.

However, our two proposed modifications of the rescaling bootstrap—the one that makes more use of the weight adjustment process of the rescaling bootstrap (Resc_Mod2) and the other that separately does the reimputation in all the categories (Resc_Mod1)—lead to very similar results. On average, the estimations of both methods are close to the benchmark.

4.1.2 Scenario 2: Large sampling fractions, fixed response vector

These results of the previous scenario become more obvious when increasing the sampling fractions at the several stages. The results for large sampling fractions are presented in Fig. 2. Again, the estimators Resc_whole and Resc_obs lead to a huge underestimation of the variance. These results further confirm the need to meet requirement 2 in Sect. 2.2.

The modifications of the rescaling bootstrap that neglect certain stages of the sampling design (Resc_stage1, Resc_stage2 and Resc_stage3) strongly underestimate the variance, which is also the case for the rescaling bootstrap that only considers the first stage in the reimputation process. All estimations are below the benchmark for all three modifications. These results further show the need to meet requirement 3 in Sect. 2.2.

As expected, the estimator that does not reimpute the imputed values in the categories C1, C3, C5, and C7 (Resc_only_Sub) leads to a larger overestimation in this scenario than in the scenario with the small sampling fractions. This larger overestimation is a result of the increasing influence of elements of categories C1, C3, C5, and C7 with larger sampling fractions at the several stages as shown in Table 4 in the Appendix A.1. Thus, the unintentional variance component also increases and the overestimation is much larger. The results emphasise the need to meet requirement 1 in Sect. 2.2 and show the need to reimpute all imputed values of all (combined) categories when elements of the category have an influence on the variance estimation. Otherwise, the unintentional variance component can also appear in a multistage sampling design.

The only estimators that lead to estimations close to benchmark are the two proposed modifications of the rescaling bootstrap Resc_Mod1 and Resc_Mod2. These are the only estimators that meet all requirements in Sect. 2.2. The differences between these two modifications are again rather small.

4.1.3 Scenario 3: Varying response vector

This section presents the results of the simulation study when generating a new response vector in each simulation run. The results for sampling fractions \(f_1 = 0.25, f_2 = 0.25\), and \(f_3 = 0.25\) are displayed by Fig. 3. In this case, the total variance in (7) may also include the variance component \(V_2\). For a large sampling fraction f, the proportion of \(V_2\) may be relevant. Under such conditions, the modifications described in this paper may lead to an underestimation of the overall variance, since they do not cover this variance component.

However, the results for the sampling fractions \(f_1 = 0.25, f_2 = 0.25\), and \(f_3 = 0.25\) are very similar to the results of the previous section. The estimators Resc_whole, Resc_obs, Resc_stage1, Resc_stage2, and Resc_stage3 result in an underestimation of the variance, and the estimator Resc_only_Sub overestimates the variance. On average, the estimations of the modifications Resc_Mod1 and Resc_Mod2 are close to the benchmark. It seems that the variance component \(V_2\) only makes a small contribution to the total variance, even if the sampling fractions are rather large compared to many practical applications. The overall sampling fraction with \(f \approx 0.016\) may be too small for the component \(V_2\) to have an influence on the overall variance.

Thus, to examine this point in more detail, we further increase the sampling fractions in our simulation study. Table 3 presents the results for estimators Resc_Mod1 and Resc_Mod2. As can be seen, only from sampling fractions \(f_1 = 0.4, f_2= 0.4\), \(f_3 = 0.4\) and \(f = 0.064\) onwards, some underestimations arise due to the non-consideration of the variance component \(V_2\). The underestimations for \(f_1 = 0.4, f_2= 0.4\), \(f_3 = 0.4\) and overall sampling fraction \(f=0.064\) are rather small, with relative biases of −0.035 and −0.039. Even for the sampling fractions \(f_1 = 0.6, f_2 = 0.5\), and \(f_3 = 0.5\), with an overall sampling fraction f of 15%, the underestimations are not much larger with relative biases of −0.069 and −0.073. Thus, with respect to the conditions of our simulation study, it seems that the overall sampling fraction must be considerably larger for the variance component \(V_2\) to have an influence on the overall variance, and to be significant. However, as seen at the parameter constellations, such large sampling fractions may not often achieve in practical application. Thus, the modifications of the rescaling bootstrap described in Sect. 2.2 may ensure a valid variance estimation for the overall variance in many situations. Nevertheless, if the variance component \(V_2\) has an influence on the variance, the approaches described in Mashreghi et al. (2014) may be used as described in Sect. 2.3.

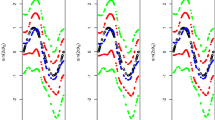

4.2 Simulation study using the AMELIA dataset

The results of the variance estimation using both of the proposed modifications of the rescaling bootstrap for the AMELIA dataset are presented by Fig. 4. Basically, the proposed modifications perform well under these challenging circumstances. The estimator Resc_Mod1 leads to a relative bias of −0.0246 on average and the estimator Resc_Mod2 results in a relative bias of -0.02352 on average, which is small when considering that the variable of interest INC is highly skewed. The overall sampling fraction is very small with 0.3%. However, as can be seen, due to the highly skewed variable of interest, the distributions of the variance estimations of both modifications are highly skewed as well. Particularly, larger outliers make the variance estimation less robust, a problem many variance estimations methods will be confronted with, when applying on such skewed data.

4.3 Computational efficiency

To evaluate the computational efficiency of the proposed modifications and the other included estimator, we evaluate the elapsed time of the different procedures for a certain sample in Scenario 1 (Sect. 4.1.1) with \(n = 4000\) using the R-command system.time of the base-package (R Core Team 2021). The computational times can only be evaluated with some stronger limitations, since it depends also on parameters that are not related to the actual different estimators, such as the computer power or the efficiency of the written R-Code of the different procedures.

Estimator Resc_Mod1 requires approximately 2.8 s per sample, Resc_Mod2 about 2.1 seconds and Resc_only_Sub 1.9 s. The estimators Resc_stage1, Resc_stage2 and Resc_stage3 have an elapsed time of about 1.6 seconds. With 1.4 seconds and 1.3 s, the estimators Resc_whole and Resc_obs are the fastest. The computational times show that the more complex the reimputation process is applied in the different estimator, the longer the computational time. Resc_Mod1 conducts the reimputation by considering the most number of categories. Resc_obs does not apply the reimputation. Hence, this may be one reason for the differences in computational time. The computational time can increase strongly with sample size in Scenario 2 (Sect. 4.1.2, \(n = 1{,}500{,}000\)). For example, Resc_Mod1 needs about 562 s and Resc_obs around 423 s.

5 Discussion and conclusion

In practice, frequently, there will be a trade off between the aim to achieve good variance estimates and the increase in complexity when using variance estimation procedures that include the sampling design and the imputation such as it was done in the proposed modifications. The theoretical part of Sects. 1 and 2 show the complexity to include the sampling design, particularly, the different stages and the imputation process that additionally concerns the implementation. As a result, the data analyst may have to adjust these procedures for their particular application, since they may be too specific for standard statistical software implementations. Moreover, the computational time with the proposed modifications increases since more components of the sampling design, particularly, more stages are taken into account as well as the reimputation has to be conducted again for each subsample.

However, from a theoretical view, the variance of an estimator is derived with respect to a certain sampling design that thus has to be considered in the variance estimation process. The imputation process also has an influence on the variance. Thus, the sampling design as well as the imputation process have to be considered adequately, otherwise, highly biased variance estimates may occur. This is confirmed in our simulation study. Our results show the need to consider the imputation process correctly in the variance estimation since our proposed modifications that include the sampling design and the imputation process adequately outperforms the other included estimators. Particularly, the subsampling process of the rescaling bootstrap and thus the different stages of the sampling design has to be taken into account in the reimputation process. As shown in the simulation study, the variance is heavily underestimated when the imputation process is not included in the variance estimation or the sampling design is not considered in the reimputation correctly. In case certain imputed values are not reimputed, the variance is overestimated. Thus, the results show that the consequences in the practical application may be severe since variance estimates are used, for example, in hypothesis testing and confidence intervals. For the estimators of the simulation study that do not consider the imputation process or the sampling design correctly in the reimputation, hypothesis tests with respect to a certain significance level may lead to falsely significant results since the variance estimate is too low. Confidence intervals and thus the coverage probability may be too small [see also the explanations in Haziza (2009)]. When using these variance estimates in subsequent surveys, the sample size may be chosen too small.

Using the variance estimations of the estimator that do not reimpute certain imputed values leads to opposite problems: hypothesis tests may be wrongly insignificant with respect to a certain significance level, confidence intervals are too large and too large sample sizes may be chosen in subsequent surveys.

The large extent of the bias produced by most of the considered estimators in comparison to the proposed modifications shows the need to include adequately the imputation and sampling design in the variance estimation and may overrule the disadvantage of a complex implementation and higher computational times. The extent of the Monte Carlo bias of most of the variance estimation procedures that do not consider the components correctly in our simulation study was so large that they cannot be applied in practice.

Thus, it is important to receive a more valid variance estimation when applying a complex sampling design, such as a multistage design, and imputation is applied. This can be ensured by using the proposed modifications of the rescaling bootstrap for multistage sampling designs of Preston (2009) that lead in our simulation study to estimations that are on average close to the benchmark when the variance component \(V_2\) is negligible.

Our simulation study did not detect any larger differences between the two proposed modifications. The decision for one of the two proposed modifications may be done based on the simplicity of implementation in a particular application.

However, our proposed modifications are limited to some degree, since they are combinations of different procedure that themselves have some limitations.

In the paper, we assumed that an imputation procedure is applied that allows for an inclusion of the imputation process in the rescaling bootstrap via the reimputation or the adjustment of imputed values. This is especially a consequence of the derivation of the proposed modifications to be an estimator for component \(V_s({\hat{\theta }}_I|\mathbf{z} )\). However, there may be imputation procedures for which both procedures may not be applied and thus the rescaling bootstrap may not be applied for these imputation procedures, at least in the current version. The reimputation may lead to an overestimation of the variance when the imputation procedure includes random components and the subsample size is not of the original sample size. This circumstances is also the case for the rescaling bootstrap. The adjustment of imputed values may be used instead but that would require metric variables to be imputed. Thus, other adjustments may be necessary for other imputation procedures. Such adjustments may also be included in the rescaling bootstrap but an evaluation is necessary in order to examine how to include these adjustments.

Additionally, for stochastic imputation procedures, for large sampling fractions, the joint bootstrap distribution of the sampling and random component may be a poor approximation of the corresponding joint distribution in the original sampling as result of the rescaling [see Mashreghi et al. (2014) for further explanations]. Thus, further corrections may be necessary in such a case. However, this limitation may be again rather irrelevant in survey practice with generally small sampling fractions.

When the component \(V_2\) makes a significant contribution to the overall variance, the proposed methods may be modified with respect to the approaches described in Mashreghi et al. (2014). However, a larger contribution of the variance component \(V_2\) to the overall variance was detected only in our simulation study for high sampling fractions, which are rather unrealistic in practice. As already described, a large overall sampling fraction f, which is the main determinant of the variance component \(V_2\), may be difficult to achieve in multistage sampling designs. Thus, in many situations, the proposed modifications may be sufficient to estimate the variance of an imputed estimator when multistage sampling designs are applied. However, due to the large number of possible parameter constellations, our simulation study could only be conducted for a few parameter constellations. In particular, the influence of the variance component \(V_2\) should be examined in more detail in further simulation studies under other conditions.

Furthermore, the simulation study based on the AMELIA dataset led to large outliers with respect to the variance estimation that can be explained by the highly skewed variable of interest. Thus, our modifications show potentials for improvements with respect to the robustness.

Finally, in our simulation study, we can control all influences, particularly the response mechanism, by setting the corresponding parameters and by considering the variables that are responsible for the nonresponse. This requirement is important to evaluate the proposed modifications. In practice, it is often not possible to receive a lot of information with respect to the nonresponse mechanism, and a biased point estimation cannot be avoided. This biased estimation also may affect the variance estimation. Future research also may examine our proposed modification under such circumstances in more detail. However, this goes beyond of the scope of this paper in which the proposed methods were evaluated if they are basically an appropriate instrument to estimate the variance of an imputed estimator in a multistage sampling design.

Availability of data and material (data transparency)

The data (the population) of the first part of the simulation study was generated by the function rmvnorm from the R-package mvtnorm (Genz et al. 2014; Genz and Bretz 2009). The parameters are presented in detail in the manuscript. The data from the second part of the simulation study is the dataset AMELIA (Burgard et al. 2017, 2020; Kolb 2012; Alfons et al. 2011) that is available at http://amelia.uni-trier.de/?p=287

Code availability

The R-Code of the proposed modifications are available from the corresponding author on reasonable request.

Notes

In the following we use the term imputation for single imputation since multiple imputation procedures are not covered in this study.

When the sampling elements in the PSU are similar with respect to the variable of interest y, a large part of the variance of the point estimator results from the first-stage sampling. In contrast, when sampling elements are very different regarding y in the PSUs, most of the variance results from the sampling at subsequent stages.

For simplification, we use the term rescaling bootstrap for both variance estimators independent of the sampling design.

However, in the second part of the simulation study, the proposed modifications are also evaluated with respect to a stochastic imputation procedure since such imputation methods are often applied in practice.