Abstract

A new clustering method for random intervals that are measured in the same units over the same group of individuals is provided. It takes into account the similarity degree between the expected values of the random intervals that can be analyzed by means of a two-sample similarity bootstrap test. Thus, the expectations of each pair of random intervals are compared through that test and a p-value matrix is finally obtained. The suggested clustering algorithm considers such a matrix where each p-value can be seen at the same time as a kind of similarity between the random intervals. The algorithm is iterative and includes an objective stopping criterion that leads to statistically similar clusters that are different from each other. Some simulations to show the empirical performance of the proposal are developed and the approach is applied to two real-life situations.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Interval-valued observations appear in many real-life situations as those involving fluctuations, subjective perceptions, ranges, censored or grouped data, to mention only a few (Billard and Diday 2003; D’Urso and Giordani 2004; Hudgens 2005; Horowitz and Manski 2006; Kreinovich et al. 2007; Magnac and Maurin 2008; Ramos-Guajardo and Grzegorzewski 2016).

Random intervals (RIs for short) have been shown to model and handle suitably such kind of data in different settings as, for instance, regression analysis, time series, hypothesis testing and clustering (Blanco-Fernández et al. 2013; Cappelli et al. 2013; De Carvalho et al. 2006a; D’Urso et al. 2015; González-Rodríguez et al. 2009; Ramos-Guajardo and Blanco-Fernández 2017; Ramos-Guajardo et al. 2020; Sinova et al. 2012). This work is focused on those situations in which a random process generating interval-valued outcomes is considered, and classical statistics are applied on them. Therefore, the approach proposed here is soundly applicable when the intervals generated by the random process either represent precise objects or they are imprecise descriptions or perceptions provided by an observer. Thus, intervals themselves are considered, without losing any information delivered by the interval structure.

Diverse (crisp) hierarchical and non-hierarchical clustering methods for grouping interval-valued data have been developed in the literature (see, for instance, (Hajjar and Hamdan 2013; Park et al. 2020; Rodriguez and De Carvalho 2019) and the references therein). Most of these methods have been elaborated in the context of symbolic data analysis. Concerning hierarchical methods, agglomerative and divisive methods have been introduced in Chavent (1998), Gowda and Ravi (1995), Xu et al. (2018). Besides, in the partitioning clustering framework several methods have been proposed based on different techniques, including dynamic clustering, city-block distances and self-organizing maps, to name a few (see Chavent and Lechevallier 2002; De Carvalho et al. 2006a, b; Hajjar and Hamdan 2013; Ralambondrainy 1995). Note that all mentioned methods are useful for classifying individuals but not variables.

Most of the approaches to the clustering of real variables found in the literature are of hierarchical type (Nicolau and Bacelar-Nicolau 1998) and vary according to either the nature of the variables under study or to the choice of the similarity measure. The usefulness of a hierarchical clustering method for variables focuses, for example, on being able to replace a set of variables that are closely related by a single representative variable or even by a synthetic variable by means of a principal component analysis approach (El-Shaarawi and Piegorsch 2002). Such a clustering method also allows arranging variables into homogeneous clusters in order to get meaningful structures. Two examples of the clustering of real variables can be found, for instance, in Chavent et al. (2012). The first one entails the classification of 10 sports performed by 41 athletes (according to their performances), and the second one is devoted to the classification of 31 variables describing some properties of 21 French wines.

In the real framework, a frequently used technique to cluster a set of variables consists of calculating similarities between these variables by means of correlation measures. However, the space of intervals is not linear but semilinear due to the lack of symmetric element with respect to the Minkowski addition, and the extension of the concept of covariance (and therefore of correlation) is not straightforward derived. Some alternatives to the employment of correlation measures for clustering real variables have been proposed, for instance, in Kojadinovic (2004), Maharaj (1996). Maharaj (1996) showed the use of the p-values obtained from a two-sample test to apply later a hierarchical clustering in the framework of time series. This method inspired the one developed by González-Rodríguez et al. (2009) that, as far as we know, is the only hierarchical approach in the literature for clustering RIs measured in the same units over the same group of individuals. Specifically, their approach aims to classify fuzzy random variables (FRVs for short), which are those variables taking fuzzy data as responses, although RIs can be seen as a particular case.

The goal of the method developed in González-Rodríguez et al. (2009) in the context of RIs is to group s features when the available data is a matrix of intervals obtained when observing s independent RIs on n independent statistical units. This objective is achieved by taking into account the p-values obtained by applying a hypothesis test for checking the equality of expectations of RIs proposed in González-Rodríguez et al. (2006). Thus, the higher the p-value attained, the more similar are the expected values of two RIs, since each p-value can be seen as a measure of the similarity between two RIs measured in the same group of individuals. However, it should be noted that the multi-sample test in González-Rodríguez et al. (2006) is only valid for simple RIs, i.e, those taking on a finite quantity of values.

In this work, the method in González-Rodríguez et al. (2009) is proposed to be extended for general (not only simple) RIs measured in the same units over the same group of individuals by using a bootstrap test procedure based on a similarity measure that is able to check not only the strict equality between expectations but also a degree of similarity among them ranging from 0 and 1, where 1 means that the intervals are exactly equal [which is analogous to the method in González-Rodríguez et al. (2009)] and 0 means there is not intersection between them. Note that the concept of similarity proposed here is a geometric one in the sense that two intervals are considered more similar whenever the ratio between the part they have in common and the union of both is higher. Thus, if that ratio is equal to 1 then both intervals are completely equal. Another possibility could be to consider a distance between intervals but then the concept of geometric similarity would be lost, and both the analysis and the interpretability will be different. Then, the idea is to relax the concept of strict equality between expected values by using a measure of geometric similarity between intervals that was initially introduced by Jaccard (1901), and which is defined as a ratio of the Lebesgue measures of the intersection and the union intervals (Shawe-Taylor and Cristianini 2004). To do that, a two-sample similarity test for the expected value of random intervals suggested by Ramos-Guajardo and Blanco-Fernández (2017) can be applied to obtain the p-values matrix instead of the usual two-sample test for the equality of expectations of RIs. The main advantages of the new approach are the following: (1) it can be used for comparing all types of RIs and not only simple RIs, (2) two groups of RIs can be linked providing their expected values present a high degree of similarity (according to the Jaccard-based similarity coefficient), not being necessary the strict equality between such expectations.

In this framework a hierarchical clustering method for RIs will be developed allowing specifying the degree of similarity that is intended to exist at least between the expectations of the clusters that are joined together in every step. Thus, once we have prefixed a similarity grade, the idea is to detect clusters of RIs such that the RIs in each group have not necessarily equal but similar population expected values at certain extent.

The paper is organized as follows. Section 2 includes two cases study involving real-life RIs in order to motivate the proposal. In Sect. 3 some preliminaries on RIs are given. Section 4 contains the clustering criterion to be applied based on the two-sample similarity test for RIs. The suggested hierarchical clustering algorithm for RIs is provided in Sect. 5. The results of a simulation study are discussed in Sect. 6 where the empirical performance of the proposal is investigated, also in comparison with the method in González-Rodríguez et al. (2009). The application of the approach to the real-life cases described in Sect. 2 is analyzed in Sect. 7 also in contrast to other methods by taking into account different similarity grades to set up the clusters. Finally, some concluding remarks are provided in Sect. 8.

2 Case-studies

The method to be developed in the next sections will allow us to classify random intervals measured in the same units over the same group of individuals by considering a measure of similarity between those random intervals. Below are presented two real situations in which the procedure outlined in this work can be subsequently applied.

2.1 Mathematics related beliefs questionnaire

As a first real life situation, a questionnaire including 17 statements has been proposed to a group of 117 students attending the second course of the Degree in Primary Education of the University of Cantabria (Spain). The statements are related to their mathematics related beliefs and are described below:

-

Q1 I think what I learn in math class is interesting.

-

Q2 I like what I learn in math class.

-

Q3 I am very interested in mathematics.

-

Q4 I like to do math stuff.

-

Q5 In math, I prefer challenging tasks to learn new things.

-

Q6 I hope to do well on math homework and on math tests.

-

Q7 I prefer mathematics in which I must strive to find the solution than those that do not require much effort.

-

Q8 I have found that if I have patience, I can do difficult math problems.

-

Q9 I am sure I can learn how to solve the most difficult math problem.

-

Q10 I do not have to try too hard to understand math.

-

Q11 Compared to other classmates I think I am good at math.

-

Q12 I think I will do well in math this course.

-

Q13 I understand everything we have done in math this year.

-

Q14 I can usually do math problems that take a long time to solve.

-

Q15 I can understand even the most difficult subject to teach me in mathematics.

-

Q16 By doing my best in math I try to show my teacher that I am better than other classmates.

-

Q17 I put a lot of effort into math to show the teacher and my classmates how good I am.

The survey respondents used interval data in a scale ranging from 0 to 10 for answering the questionnaire, where 0 represents a totally disagree to each statement whereas 10 represents a totally agree to it. Thus, each response in terms of an interval corresponds to the set of values that the student considers compatible with his/her opinion at some extent, i.e., the student considers that his/her opinion cannot be outside of this set. In this context, the interval responses of the 117 students about his/her opinion about each statement i, for \(i\in \{1,\ldots ,17\}\) are given in the same units and can be modeled through an RI \(\mathbf {QI}\). The purpose is, therefore, to classify the 17 RIs based on a measure of similarity between their expected values, as it will be seen in the following sections.

2.2 Daily concentration of PM10 particles in Spanish cities

The second study addresses the analysis of the daily concentration of PM10 particles from June to September 2019 (122 days) in 18 Spanish autonomous cities. The Spanish Ministry of the Environment defines the PM10 particles such as dust particles, ash, soot, metal, cement or pollen, scattered in the atmosphere, whose diameter varies between 2.5 and 10 \(\mu m\). Nowadays, scientists consider such particles are the most severe environmental pollution problem, due to their serious affections to the respiratory tract and the lung.

The hourly data on the concentration of PM10 particles from June to September of 2019 in the different cities have been extracted from the website https://www.miteco.gob.es/es/calidad-y-evaluacion-ambiental/temas/atmosfera-y-calidad-del-aire/calidad-del-aire/evaluacion-datos/datos/Datos_oficiales_2019.aspx. The cities analyzed were Vitoria, Palma de Mallorca, Santiago de Compostela, Logroño, Madrid, Murcia, Pamplona, Oviedo, Las Palmas de Gran Canaria, Santa Cruz de Tenerife, Santander, Sevilla, Toledo, Valencia, Valladolid, Zaragoza, Ceuta and Barcelona. The measurement of the daily concentration of these particles in each city i, for \(i\in \{1,\ldots ,18\}\) is modeled through an RI \(X_i\) whose data \(X_{ij}=[X_{ij}^m,X_{ij}^M]\) are determined by the minimum and maximum concentration of PM10 particles reached during day j (\(X_{ij}^m\) and \(X_{ij}^M\), respectively), for \(i\in \{1,\ldots ,122\}\). Thus, each interval reflects the daily variability of the pollution by PM10 particles in the corresponding city. Moreover, each of the cities can be identified with an RI and the aim is to be able to classify the 18 Spanish cities according to their daily PM10 concentration levels.

3 Preliminaries

Let \({\mathcal {K}}_{c}({\mathbb {R}})\) be the family of non-empty closed and bounded intervals of \({\mathbb {R}}\). An interval \(A\in {\mathcal {K}}_{c}({\mathbb {R}})\) can be characterized by either its \(\text {mid}\,\)/\(\text {spr}\,\) representation or its \(\inf \)/\(\sup \) representation. The first one, which is so that \(A=[\text {mid}\,A\pm \text {spr}\,A]\) with \(\text {mid}\,A\in {\mathbb {R}}\) being the mid-point or center and \(\text {spr}\,A\ge 0\) being the spread or radius of A, has been shown to be more operative than the second one, given by \(A=[\inf A, \sup A]\), and a valuable tool for different statistical purposes (see, for instance, Blanco-Fernández et al. 2011; D’Urso and Giordani 2004; Sinova et al. 2012).

The usual arithmetic between intervals is based on the Minkowski’s addition and the product by a scalar. In terms of the \((\text {mid}\,,\text {spr}\,)\) representation it is given by

where \(A_1,A_2\in {\mathcal {K}}_{c}({\mathbb {R}})\) and \(\lambda \in {\mathbb {R}}\). It should be remarked that the space \(({\mathcal {K}}_{c}({\mathbb {R}}),+,\cdot )\) is not linear but semilinear due to the lack of symmetric element with respect to the Minkowski addition. Thus, in general, \(A + (-1)A \ne {0}\) unless \(A = \{a\}\) is a singleton. Moreover, the Minkowski difference between two intervals \(A + (-1)B\) does not coincide with the natural difference \(A- B\), since it does not fulfill the addition/subtraction simplification, i.e. \((A + (-1)B) + B \ne A\) (see Blanco-Fernández et al. 2013 for more details).

On the other hand, the Lebesgue measure (or length) of \(A\in {\mathcal {K}}_{c}({\mathbb {R}})\) so that \(A\ne \emptyset \) is given by \(\lambda (A)= 2\text {spr}\,A\) whereas the Lebesgue measure of the empty set is clearly \(\lambda (\emptyset ) = 0\). In addition, according to (Shawe-Taylor and Cristianini 2004), if \(A,B\in {\mathcal {K}}_{c}({\mathbb {R}})\) the Lebesgue measure of the intersection between A and B can be expressed as follows:

A random interval (RI for short) is a random variable modelling those situations in which intervals on \({\mathcal {K}}_{c}({\mathbb {R}})\) are provided as outcomes. Mathematically, given a probability space \((\varOmega ,{\mathcal {A}},P)\), an RI is a Borel measurable mapping \(X : \varOmega \rightarrow {\mathcal {K}}_{c}({\mathbb {R}})\) w.r.t. the well-known Hausdorff metric on \({\mathcal {K}}_{c}({\mathbb {R}})\) (Matheron 1975). Equivalently, X is an RI if both \(\text {mid}\,X, \text {spr}\,X:\varOmega \rightarrow {\mathbb {R}}\) are real-valued random variables and \(\text {spr}\,X\ge 0\) a.s.-[P].

The expected value of X introduced by Aumann (1965) is expressed as the interval \(E([\text {mid}\,X\pm \text {spr}\,X])=[E(\text {mid}\,X)\pm E(\text {spr}\,X)]\) whenever \(\text {mid}\,X,\text {spr}\,X\in L^1(\varOmega , {\mathcal {A}},P)\), i.e., whenever \(\text {mid}\,X,\text {spr}\,X\) are integrable functions in the probability space \((\varOmega , {\mathcal {A}},P)\). Given a simple random sample of X, \(\{X_i\}_{i=1}^n\), the corresponding sample expectation of X is defined coherently in terms of the interval arithmetic as \({\overline{X}}=(1/n)\sum _{i=1}^n X_i\), and it fulfils \({\overline{X}}= [\overline{\text {mid}\,X} \pm \overline{\text {spr}\,X}]\). It should be noted that \(\overline{\text {mid}\,X}\) and \(\overline{\text {spr}\,X}\) are the sample mean of the real-valued random variables \(\text {mid}\,X\) and \(\text {spr}\,X\), respectively, i.e., \(\overline{\text {mid}\,X}=(1/n)\sum _{i=1}^n \text {mid}\,X_i=\text {mid}\,{\overline{X}}\) and \(\overline{\text {spr}\,X}=(1/n)\sum _{i=1}^n \text {spr}\,X_i=\text {spr}\,{\overline{X}}\).

On the basis of the measure defined in (1), a measure of the degree of similarity between two intervals \(A,B\in {\mathcal {K}}_{c}({\mathbb {R}})\) can be defined conforming to the Jaccard coefficient (Jaccard 1901) as

where \(\lambda (A\cup B)=\lambda (A)+\lambda (B)-\lambda (A\cap B)\), and both A and B are presumed not to be reduced to a singleton. Undoubtedly, since \(\lambda (A\cap B)\le \lambda (A\cup B)\) it is fulfilled that \(0\le S(A,B)\le 1\). Moreover, \(S(A,B)=0\) iff \(A\cap B=\emptyset \), \(S(A,B)=1\) if \(A=B\), and \(S(A,B)\in (0,1)\) iff \(A\cap B\ne \emptyset \) and \(A\ne B\). Some situations considering various possible similarity degrees are gathered in Fig. 1.

Notice, for instance, that the similarity measure of intervals \(A=[0,2]\) and \(B=[1,2]\) is 1/2, and so it is the similarity measures of A and \(C=[0,1]\), of A and \(D=[0,4]\), and of A and \(E=[-2,2]\).

4 Clustering criterion: two-sample similarity test for RIs

In this paper we aim at developing a method to cluster k independent RIs by paying attention to their expected values.

Given a probability space \((\varOmega ,{\mathcal {A}},P)\) and a set of s independent simple RIs \(X_i:\varOmega \rightarrow {\mathcal {K}}_{c}({\mathbb {R}})\), for \(i=1,\ldots ,s\), the purpose is to get different groups \(G_k\subset \{1,\ldots ,s\}\) so that the RIs belonging to the same group can be considered to be similar at some extent.

Concerning the generation process of the data, a simple random sample \(\{X_{ij}\}_{j=1}^n\) distributed as the RI \(X_i\) is drawn for each \(i\in \{i,\ldots ,s\}\). Thus, in practice the data collected is gathered in an interval data matrix \([x_{ij}]_{i=1,\ldots ,s}^{j=1,\ldots ,n}\) where each \(x_{ij}\in {\mathcal {K}}_{c}({\mathbb {R}})\).

In order to test if the expected values of two RIs can be considered to be similar at some extent, a version for different sample sizes of the two-sample similarity test for the expected value of RIs proposed in Ramos-Guajardo and Blanco-Fernández (2017) is suggested. It should be noted that the version of the test for different sample sizes is necessary to be able to establish such a comparison between clusters that include different number of RIs and, therefore, different sample sizes.

First, the study developed for equal sample sizes is recalled. Let \(X,Y:\varOmega \longrightarrow {\mathcal {K}}_{c}({\mathbb {R}})\) be two independent RIs such that \(\text {spr}\,E(X)>0\) and \(\text {spr}\,E(Y)>0\), and belonging to the class

in order to assure the non-singularity of the covariance matrices that relate \(\text {mid}\,X\) and \(\text {spr}\,X\), and \(\text {mid}\,Y\) and \(\text {spr}\,Y\). Note that in the expression of \({\mathcal {P}}\), \(\sigma ^2_{\text {mid}\,X}\) and \(\sigma ^2_{\text {spr}\,X}\) denote the variances of the real variables \(\text {mid}\,X\) and \(\text {spr}\,X\), whereas \(\sigma ^2_{\text {mid}\,X,\,\text {spr}\,X}\) is the square of the covariance between the real variables \(\text {mid}\,X\) and \(\text {spr}\,X\).

To test the null hypothesis \(H_0: S(E(X),E(Y)) \ge d\), where \(d\in [0,1]\) is the degree of similarity between E(X) and E(Y), is necessary to compute the probability that the empirical version of the Jaccard-based similarity coefficient introduced in (2) is less than d, whenever the null hypothesis is satisfied. However, such a computation is not immediate, since it is necessary to consider the measure of the intersection between two intervals is given by the expression in (1). Thus, based on this intersection measure, the test can also be expressed as follows by considering the mid/spr characterization of intervals (note that there has also been a change in the inequalities of the hypotheses):

Let \(\{X_i\}_{i=1}^n\) and \(\{Y_i\}_{i=1}^n\) be two simple random samples drawn from X and Y, respectively. The test statistic is defined as

Considerably large values of the statistic above would refer to a similarity degree between the expected values of X and Y lower than d and far from d and, as a consequence, a small p-value, so the null hypothesis should be rejected. The asymptotic distribution of the statistic T under \(H_0\) is discussed in Lemma 1 and its proof is provided in Ramos-Guajardo and Blanco-Fernández (2017). The corresponding limit distribution is based on the bivariate normal distributions \(Z=(z_1,z_2)^{\prime }\equiv {\mathcal {N}}_2\Big (\mathbf {0},\varSigma _1\Big )\) and \(U=(u_1,u_2)^{\prime }\equiv {\mathcal {N}}_2\Big (\mathbf {0},\varSigma _2\Big )\), where \(\varSigma _1\) is the covariance matrix for the random vector \((\text {mid}\,X,\text {spr}\,X)\) and \(\varSigma _2\) is the corresponding one for \((\text {mid}\,Y,\text {spr}\,Y)\).

Lemma 1

(Ramos-Guajardo and Blanco-Fernández 2017) For \(n\in {\mathbb {N}}\), let \(\{X_i\}_{i=1}^n\) and \(\{Y_i\}_{i=1}^n\) be two random samples independent and equally distributed from X and Y, respectively, and defined on the probability space \((\varOmega ,{{{\mathcal {A}}}},P)\). Let \(T_n\) be defined as in (4). If \(X,Y\in {\mathcal {P}}\), then:

-

(a)

If \(\text {spr}\,E(X)=d\,\text {spr}\,E(Y)\) and \(\text {mid}\,E(X)-\text {mid}\,E(Y)=(1-d)\text {spr}\,E(Y)\), then it is fulfilled that

$$\begin{aligned} T{\mathop {\longrightarrow }\limits ^{{\mathcal {L}}}}\max \{du_2-z_2,(1+d)(z_1-u_1) +(d-1)(z_2+u_2)\}. \end{aligned}$$ -

(b)

If \(\text {spr}\,E(X)=d\,\text {spr}\,E(Y)\) and \(-\text {mid}\,E(X)+\text {mid}\,E(Y)=(1-d)\text {spr}\,E(Y)\), then it is fulfilled that

$$\begin{aligned} T{\mathop {\longrightarrow }\limits ^{{\mathcal {L}}}}\max \{du_2-z_2,(1+d)(u_1-z_1) +(d-1)(z_2+u_2)\}. \end{aligned}$$ -

(c)

If \(d\,\text {spr}\,E(X)=\text {spr}\,E(Y)\) and \(\text {mid}\,E(X)-\text {mid}\,E(Y)=\displaystyle \frac{(1-d)}{d}\text {spr}\,E(Y)\), then it is fulfilled that

$$\begin{aligned} T{\mathop {\longrightarrow }\limits ^{{\mathcal {L}}}}\max \{dz_2-u_2,(1+d)(z_1-u_1) +(d-1)(z_2+u_2)\}. \end{aligned}$$ -

(d)

If \(d\,\text {spr}\,E(X)=\text {spr}\,E(Y)\) and \(-\text {mid}\,E(X)+\text {mid}\,E(Y)=\displaystyle \frac{(1-d)}{d}\text {spr}\,E(Y)\), then it is fulfilled that

$$\begin{aligned} T{\mathop {\longrightarrow }\limits ^{{\mathcal {L}}}}\max \{dz_2-u_2,(1+d)(u_1-z_1) +(d-1)(z_2+u_2)\}. \end{aligned}$$

It is also recalled that other situations under \(H_0\) different than the ones shown in the lemma above imply a weak convergence of T to a limit distribution stochastically bounded by one of those provided in the lemma. In addition, since the limit distribution of T depends on X, an alternative X-dependent statistic, called \(T^{\prime }\), based on the ideas described in Ramos-Guajardo (2015) is proposed, and the consistency and power of the test are also studied (see Lemma 2 in Ramos-Guajardo and Blanco-Fernández (2017) for more details).

The asymptotic distribution of the proposed statistic is not effective to compute critical values, so a bootstrap procedure is recommended. Specifically, a residual-type bootstrap based on the studies of Shao and Tu in Shao and Tu (1995) has been employed. Thus, if we consider bootstrap samples from X and Y, i.e. \(\{X_i^*\}_{i=1}^n\) and \(\{Y_i^*\}_{i=1}^n\) being chosen randomly and with replacement from \(\{X_i\}_{i=1}^n\) and \(\{Y_i\}_{i=1}^n\), respectively, the bootstrap statistic is defined as follows:

As it has been described in Ramos-Guajardo and Blanco-Fernández (2017), the minima-terms included in \(T^*\) are useful to determine the parts on its expression which have the influence to the maximum depending on the situation under \(H_0\). It has been also shown that \(T^{*}\) converges weekly (almost sure) to the same distributions as the ones provided in Lemma 1 under the same requisites. In addition, the bootstrap approach is also consistent and, in practice, the distribution of \(T^{*}\) is approximated by considering the Monte Carlo method. The p-value of the bootstrap test would be the probability that \(T^*>T\). In practice, the bootstrap procedure consists of getting a large sample B of values of the statistic, \(T^*_1,\ldots ,T^*_B\), close enough to the population distribution, and the p-value is approximated by the proportion of values in \(T^*_1,\ldots ,T^*_B\) which are greater than or equal to the value of the statistic T.

Remark 1

Note that the results above are valid for equal sample sizes. Nevertheless, if the size of the samples drawn from X and Y differs, the same statistics T and \(T^*\) are valid as long as the corresponding sample sizes, say n and m, satisfy that \(n/(n + m)\rightarrow p_1\in (0,1)\) and \(m/(n+m)\rightarrow (1-p_1)\in (0,1)\), as n and m tends to \(\infty \).

5 Hierarchical clustering method for RIs

The two sample similarity test for RIs given in Sect. 4 can be used to get a matrix of similarities to apply later a hierarchical clustering for interval-valued data following the ideas in González-Rodríguez and others (González-Rodríguez et al. 2009). In that paper the authors start from a matrix \(\mathbf{P }\) holding the p-values obtained by using a multi-sample test for random fuzzy sets to all the pairs of random fuzzy sets. The procedure to get the p-values to cluster RIs is provided in the next lines.

Assume that \(X_1\ldots ,X_s\) are s independent RIs taking values in \({\mathcal {K}}_{c}({\mathbb {R}})\). For each \(j\in \{1,\ldots ,s\}\), let \(\{X_{jl}\}_{l=1}^{n_j}\) be a simple random sample obtained from the RI \(X_j\), and let \(N\in {\mathbb {N}}\) be the overall sample size.

Maharaj (1996) showed the use of the p-value of a two-sample test in order to get a matrix of p-values, which can be interpreted as a matrix of similarities, to apply later a hierarchical clustering in the framework of time series, whenever the measure employed is symmetric and non-negative. The same procedure can be imitated by considering the similarity measure proposed in (2) due to its symmetry and no negativity. Thus, the two-sample similarity bootstrap test described in Sect. 4 can be applied to each pair \(i_1,i_2\in {1,\ldots ,s}\) in order to get a matrix of p-values \(\mathbf{P }=[p_{i_1,i_2}]\), where \(p_{i_1,i_2}\) is the p-value of the test \(H_0 : S(E(X_{i_1}),E(X_{i_2}))\ge d\). Of course, it is necessary to fix in advance a value \(d\in [0,1]\) as a threshold so that two RIs are grouped together whenever \(H_0\) is not rejected at the usual significance levels.

It is well-known that a p-value close to zero suggests rejecting the null hypothesis \(H_0\). Hence, the closer to zero the p-value is, the lower the similarity between \(E(X_{j_1})\) and \(E(X_{j_2})\) with respect to d is. On the other hand, one can assume that if a p-value is high, the similarity between \(E(X_{j_1})\) and \(E(X_{j_2})\) cannot be far below d, otherwise the test would be rejected. In fact, the higher the p-value is, the more similar \(E(X_{j_1})\) and \(E(X_{j_2})\) are and, in particular, if \(j_1=j_2\) the p-value should be equal to 1. In this way the obtained matrix \(\mathbf{P }\) can be seen as a kind of similarity matrix. In addition, consistently with (González-Rodríguez et al. 2009), \(d(j_1,j_2)=1-p_{j_1,j_2}\) is a distance that quantifies how similar \(E(X_{j_1})\) and \(E(X_{j_2})\) are with respect to d, so that the lower such a distance is, the more reason \(E(X_{j_1})\) and \(E(X_{j_2})\) must belong to the same cluster. Those distances can be used to obtain a hierarchical clustering method with any of the usual linkage criteria.

The proposed hierarchical clustering procedure starts from the singleton clusters, \(C_l=X_l\) for \(l\in \{1,\ldots ,s\}\), and computes the matrix of p-values \(\mathbf{P }\) resulting from the corresponding two-sample similarity bootstrap tests \(H_0 : S(E(X_{i_1}),E(X_{i_2}))\ge d\) for \(i_1,i_2\in {1,\ldots ,s}\). For that, the bootstrap procedure is applied to each pair of RIs by resampling from the individuals composing each variable to form a bootstrap sampling and computing the bootstrap statistic based on the midpoints and spreads of the resampling. At each stage, the procedure merges the two RIs with the highest p-value, whenever it is greater than a fixed significance level \(\alpha \). The two RIs involved in the new cluster form a unique RI (with double sample size) and the resampling in the next step is made over the individuals that make up such a joint RI. Then, the measures needed to start again the process are computed by considering the new cluster. Finally, the stopping criterion of the procedure is determined on the basis of the significance level \(\alpha \), which usually ranges from 0.01 to 0.1.

The reported method requires the computation of the bootstrap p-values for the two-sample similarity tests associated to all pairs of clusters at every step, which implies a moderate computational cost. However, one of the main advantages of this method in contrast to the one proposed in González-Rodríguez et al. (2009) is that the former is more flexible in the sense that the clustering of RIs is based on their degree of similarity and not on their exact equality. In this way, two random intervals could be grouped whenever their expectations present a high degree of similarity (according to the Jaccard-based similarity coefficient), and it is not necessary that both expectations are exactly equal. Besides, the multi-sample test employed in González-Rodríguez et al. (2009) is valid for fuzzy random variables or random intervals taking on a finite quantity of values, whereas the method proposed in the manuscript allows to classify all types of random intervals.

The clustering algorithm is displayed below.

Clustering algorithm

- Step 1 :

-

Fix a similarity degree d, a significance level \(\alpha \) and the number of bootstrap replications B. Let \(C_i=X_i\) be the singleton clusters for \(i\in \{1,\ldots ,s\}\).

- Step 2 :

-

For each \(i\in \{1,\ldots ,s\}\), obtain a large number B of boostrap samples \(\{X_{i1}^{*b},\ldots , X_{in_i}^{*b}\}_{b=1}^B\) drawn from \(\{X_{i1},\ldots , X_{in_i}\}\).

- Step 3 :

-

For each cluster \(C_i\), compute the values \(\text {mid}\,\overline{X_i}\) and \(\text {spr}\,\overline{X_i}\).

- Step 4 :

-

For each cluster \(C_i\) and each value \(b\in \{1,\ldots ,B\}\), compute the \(\text {mid}\,\) and spread of the sample mean of the values of each resample b, i.e., \(\text {mid}\,\overline{X_i^{*b}}\) and \(\text {spr}\,\overline{X_i^{*b}}\).

- Step 5 :

-

For each pair of clusters \(C_1\) and \(C_2\) compute the associate p-value \(p_{C_1,C_2}\) by using Monte Carlo method as follows:

- 5.1:

-

Initialize a counter \(W=0\) and compute the value of the two-sample similarity test statistic

$$\begin{aligned} \begin{aligned} T=&\sqrt{n_1}\max \Big \{D\,\text {spr}\,\overline{X_{C_2}}-\text {spr}\,\overline{X_{C_1}},d\,\text {spr}\,\overline{X_{C_1}}-\text {spr}\,\overline{X_{C_2}},\\&(1+d)\left| \text {mid}\,\overline{X_{C_1}}-\text {mid}\,\overline{X_{C_2}}\right| \\&+(d-1)\left( \text {spr}\,\overline{X_{C_1}}+\text {spr}\,\overline{X_{C_2}}\right) \Big \}\ . \end{aligned} \end{aligned}$$ - 5.2:

-

For each value \(b\in \{1,\ldots ,B\}\), compute the value of the bootstrap statistic

$$\begin{aligned} T^{*b}= & {} \max \Big \{\sqrt{n_1} \left( d\,\left( \text {spr}\,\overline{X^{*b}_{C_2}}-\text {spr}\,\overline{X_{C_2}}\right) +\text {spr}\,\overline{X_{C_1}}-\text {spr}\,\overline{X^{*b}_{C_1}}\right) \nonumber \\&+\min \left( 0,n_1^{1/4}(\text {spr}\,\overline{X_{C_2}}-\text {spr}\,\overline{X_{C_1}})\right) ,\nonumber \\&\sqrt{n_1}\left( d\,\left( \text {spr}\,\overline{X^{*b}_{C_1}}-\text {spr}\,\overline{X_{C_1}}\right) +\text {spr}\,\overline{X_{C_2}}-\text {spr}\,\overline{X^{*b}_{C_2}}\right) \nonumber \\&+\min \left( 0,n_1^{1/4}(\text {spr}\,\overline{X_{C_1}}-\text {spr}\,\overline{X_{C_2}}) \right) ,\nonumber \\&\sqrt{n_1}\Big ((1+d)\left( \text {mid}\,\overline{X^{*b}_{C_1}}-\text {mid}\,\overline{X_{C_1}}+\text {mid}\,\overline{X_{C_2}}-\text {mid}\,\overline{X^{*b}_{C_2}}\right) \nonumber \\&+(d-1)\left( \text {spr}\,\overline{X^{*b}_{C_1}}-\text {spr}\,\overline{X_{C_1}}+\text {spr}\,\overline{X^{*b}_{C_2}}-\text {spr}\,\overline{X_{C_2}}\right) \Big )\nonumber \\&+\min \left( 0,n_1^{1/4}(\text {mid}\,\overline{X_{C_1}}-\text {mid}\,\overline{X_{C_2}})\right) ,\nonumber \\&\sqrt{n_1}\Big ((1+d)\left( \text {mid}\,\overline{X_{C_1}}-\text {mid}\,\overline{X^{*b}_{C_1}}+\text {mid}\,\overline{X^{*b}_{C_2}}-\text {mid}\,\overline{X_{C_2}}\right) \nonumber \\&+(d-1)\left( \text {spr}\,\overline{X^{*b}_{C_1}}-\text {spr}\,\overline{X_{C_1}}+\text {mid}\,\overline{X^{*b}_{C_2}}-\text {mid}\,\overline{X_{C_2}}\right) \Big )\nonumber \\&+\min \left( 0,n_1^{1/4}(\text {mid}\,\overline{X_{C_2}}-\text {mid}\,\overline{X_{C_1}})\right) \Big \}, \end{aligned}$$and IF \(T^{*b}>T\), THEN W=W+1.

- 5.3:

-

Compute the bootstrap p-value as \(p_{C_1,C_2}=W/B\).

- Step 6 :

-

Find the maximum value of the matrix of p-values \({\mathbf {P}}\) obtained in the previous step, say \(\mathbf {max(P)}\), and label by \(C_{{\mathbf {P}}_1}\) and \(C_{{\mathbf {P}}_2}\) one of the pairs of clusters with this p-value.

- Step 7 :

-

IF

$$\begin{aligned} \mathbf {max(P)}>\alpha \end{aligned}$$THEN

join the clusters \(C_{{\mathbf {P}}_1}\) and \(C_{{\mathbf {P}}_2}\) in a new cluster \(C=C_{{\mathbf {P}}_1}\cup C_{{\mathbf {P}}_2}\) and repeat Steps 3 and 4 for this new cluster by computing the \(\text {mid}\,\) and spread of the sample mean of the elements in C, and of the mean of the elements of each resampling \(b\in \{1,\ldots ,B\}\). Compute the p-values between the new joint cluster and the other ones as in Step 5.1.-5.3. and go to Step 6.

ELSE

Stop.

Theorem 1 demonstrate that the clusters obtained by applying the approach described in the previous algorithm are statistically pairwise different. The result is supported by next proposition.

Proposition 1

Let \(C=\{X_1\}\cup \cdots \cup \{X_{m_1}\}\) be a cluster and let Y be a random interval so that \(S(E(X_i),E(Y))\ge d\) for a fixed similarity degree \(d\in [0,1]\) and \(i\in \{1,\ldots ,m_1\}\). Then, \(S(E(C),E(Y))\ge d\).

Proof

As \(S(E(X_i),E(Y))\ge d\) for \(i\in \{1,\ldots , m_1\}\), then, \(H_0\) in (3) is fulfilled for each pair \(\{X_i,Y\}\). In addition,

These conditions imply that

In addition, the same conditions together with the triangular inequality entails that

Therefore, \(\xi _3\le 0\) and \(\max \{\xi _1,\xi _2,\xi _3\}\le 0\), which means that \(H_0\) in (3) is fulfilled for \(\{C,Y\}\), so the result is given. \(\square \)

Theorem 1

Given a similarity degree \(d\in [0,1]\) and a fixed significance level \(\alpha \), the clustering algorithm provided in this section produces clusters \(\{C_i\}_{i=1}^k\) such that for all \(k_1\ne k_2\in \{1,\ldots ,k\}\) there exist \(i_1\in C_{k_1}\) and \(i_2\in C_{k_2}\) satisfying that \(S(E(X_{i_1}),E(X_{i_2})< d\) at the significance level \(\alpha \).

Proof

Let \(C_{k_1}\) and \(C_{k_2}\) be two clusters obtained by following the algorithm above such that \(C_{k_1}=\{X_1\}\cup \ldots \cup \{X_{m_1}\}\) and \(C_{k_2}=\{Y_1\}\cup \ldots \cup \{Y_{m_2}\}\).

Suppose that \(S(E(X_{i}),E(Y_{j})\ge d\) for \(i\in \{1,\ldots , m_1\}\) and \(j\in \{1,\ldots , m_2\}\). Then, \(H_0\) in (3) is fulfilled for each pair \(\{X_i,Y_j\}\). By Proposition 1, the inequality \(S(E(C_{k_1}),E(Y_j))\ge d\) is given for all \(j\in \{1,\ldots , m_2\}\). In the same way, by applying again Proposition 1 to the random interval \(C_{k_1}\) and \(\{Y_j\}_{j=1}^{m_2}\) it is satisfied that \(S(E(C_{k_1}), E(C_{k_2}))\ge d\), and the algorithm would have continued by joining \(C_{k_1}\) and \(C_{k_2}\). Thus, there must exist at least \(i_1\in C_{k_1}\) and \(i_2\in C_{k_2}\) so that \(S(E(X_{i_1}),E(X_{i_2})< d\). \(\square \)

From Theorem 1 it can be concluded that the clusters are different from each other. Besides, the procedure developed here is more flexible that the one in González-Rodríguez et al. (2009) since a degree of similarity between the expected values of the RIs can be chosen to compare with and not only exact equalities are allowed to classify the RIs. Moreover, in this case it is not required neither to prefix the number of clusters nor to solve any other optimization problem since the design of the clusters is given automatically by considering the probability of type I error that can be assumed.

6 Simulation studies

In this section some simulation studies are carried out in order to evaluate the performance of the proposal. In addition, a comparison between the method developed by González-Rodríguez et al. in González-Rodríguez et al. (2009) using the ANOVA test introduced in González-Rodríguez et al. (2012) particularizing it to RIs and the method proposed in this work is also established. From now on, the new proposal will be called ClustSim whereas the method in González-Rodríguez et al. (2009) will be called ClustANOVA. Note that the clustering algorithm used in ANOVA is the same as in González-Rodríguez et al. (2009) but considering the test and bootstrap statistics T and \(T^*\) proposed in González-Rodríguez et al. (2012) for the ANOVA test instead of the ones proposed in González-Rodríguez et al. (2006) for the multi-sample test for simple RIs.

As a first study, an inspection of the behavior of the type I error of the procedure ClustSim is developed. Thus, k populations belonging to the same cluster (i.e., whose similarity between the expectations of each pair of variables can be assumed to be greater than or equal to d) have been simulated for \(d\in \{0.8,0.9,1\}\). Moreover, the case \(d=1\) (equality of expectations) is also compared with method ClustANOVA in terms of type I error. RIs are randomly generated based on two parameters: their midpoint and their spreads. Therefore, any real continuous random variable will be valid for modeling the behavior of the midpoints, while for modeling the behavior of spreads it is necessary to consider a real continuous and positive random variable.

As a first attempt the following distributions have been chosen without loss of generality for \(j\in \{1,\ldots ,k\}\):

As an example of the generation process, the case \(d=0.8\) and \(k=3\) is being inspected, which is so that

-

\(X_1\) is so that \(\text {mid}\,X_1\hookrightarrow U(0,1)\) and \(\text {spr}\,X_1\hookrightarrow U(0,1)\),

-

\(X_2\) is so that \(\text {mid}\,X_2\hookrightarrow U(0.1,1)\) and \(\text {spr}\,X_2\hookrightarrow U(0,0.9)\),

-

\(X_3\) is so that \(\text {mid}\,X_3\hookrightarrow U(0.2,1)\) and \(\text {spr}\,X_3\hookrightarrow U(0,0.8)\).

Thus, \(E(X_1)=[0,1]\), \(E(X_2)=[0.1,1]\) and \(E(X_3)=[0.2,1]\), so we can assure that the degree of similarity between those expectations is at least 0.8 since \(S(E(X_1),E(X_2))=S(E(X_2),E(X_3))=0.9\) and \(S(E(X_1),E(X_3))=0.8\).

As it has been shown that the empirical sizes of the bootstrap similarity test and the ANOVA test are quite close to the expected nominal significance levels for moderate sample sizes (see Ramos-Guajardo 2015, and González-Rodríguez et al. 2012), then we will fix \(n=100\). In addition, the algorithm has been run for 1000 simulations with 500 bootstrap replications of the bootstrap test each by considering a significance level \(\alpha =0.05\) by considering 3, 5 and 8 populations belonging to one unique cluster. The proportion of times in which the correct number of clusters is obtained in every situation whenever uniform distributions are considered for \(\text {mid}\,/spr\) has been collected in Table 1.

An analogous study has been carried out given alternative distributions for the midpoints and spreads. Specifically, variations from normal/beta distributions and Student’s/chi-square distributions for midpoints/spreads have been considered by applying a variable generation process equivalent to the one described in Sect. 5. The proportion of times in which the correct number of clusters is obtained in both situations is gathered in Tables 2 and 3.

From Tables 1, 2 and 3 it can be concluded that the procedure has a success rate close to the empirical size of the bootstrap method (\(1-\alpha \)) for values \(d=0.8\) and \(d=0.9\). However, it can be observed that in the case \(d=1\) the results obtained remain below \(1-\alpha \). Although it is true that the method proposed in González-Rodríguez et al. (2009) based on the multi-sample test introduced in González-Rodríguez et al. (2006) for simple RIs presents better results, it should be noted that in that case, apart from being an appropriate procedure only for a very specific case of RIs, the bootstrap test statistic includes the variability of the RIs in its denominator, while in the ClustSim and ClustANOVA methods such variability is not included in the bootstrap statistics (see Ramos-Guajardo 2015; González-Rodríguez et al. 2012). Since the inclusion of variability of RIs is not a straightforward problem, it is left as an open problem to be addressed in the near future.

On the other hand, as a second scenario different sample sizes are considered, being them \(n\in \{50,80,100\}\), as well as \(s=10\) RIs and three similarity grades \(d\in \{0.8,0.9,1\}\). A structure of 3 clusters is considered so that 2 RIs belong to Cluster 1, 3 RIs belong to Cluster 2 and 5 RIs belong to Cluster 3. Regarding the distinction of clusters three different situations are considered:

-

Case 1 The clusters are distinguished with respect to the midpoints of the intervals.

-

Case 2 The clusters are distinguished with respect to the midpoints and the spreads of the intervals.

-

Case 3 The clusters are distinguished with respect to the spreads of the intervals.

In addition, two levels of separation for the clusters are taken into account:

-

Case A small separation between clusters.

-

Case B large separation between clusters.

The details of the generation process for the midpoints and spreads of the RIs in cases \(d=0.8\), \(d=0.9\) and \(d=1\) whenever uniform distributions are considered for \(\text {mid}\,/spr\) are gathered on Tables 4, 5 and 6.

An anologous generation process has been carried out for the situations in which normal/beta distributions and Student’s/chi-square distributions have been considered for \(\text {mid}\,/spr\). However, the specific details of such a generation process have been omitted to make the section easier to follow by the reader and due to extension reasons.

As an example, the RIs obtained through the generation process for case 2.A (clusters distinguished with respect to the midpoints and spreads of the intervals and small separation between clusters) when \(d=0.8\) proposed in Table 4 fulfil the following conditions:

-

The RIs involved in the first cluster (say \(X_1\) and \(X_2\)) satisfy that \(E(X_1)=[-1,0]\) and \(E(X_2)=[-1,-0.2]\), so \(S(E(X_1),E(X_2))=0.8\).

-

The RIs involved in the second cluster (say \(X_3\), \(X_4\) and \(X_5\)) satisfy that \(E(X_3)=[-0.5,0.5]\), \(E(X_4)=[-0.45,0.45]\) and \(E(X_5)=[-0.4,0.4]\), so the lowest similarity grade among each pair of random intervals in cluster 2 is \(S(E(X_3),E(X_5))=0.8\).

-

The RIs involved in the third cluster (say \(X_6\), \(X_7\), \(X_8\), \(X_9\) and \(X_{10}\)) satisfy that \(E(X_6)=[0,1]\), \(E(X_7)=[0.05,1]\), \(E(X_8)=[0.1,1]\), \(E(X_9)=[0.15,1]\) and \(E(X_{10})=[0.2,1]\), so the lowest similarity grade among each pair of random intervals in cluster 3 is \(S(E(X_6),E(X_{10}))=0.8\).

-

The highest similarity grade between RIs of different clusters is given by \(S(E(X_1),E(X_3))=S(E(X_3),E(X_6))=1/3\).

In order to gather the similarity degree between each pair of expected values of the variables involved in the different situations displayed in Tables 7, 8 and 9, the corresponding similarity degree matrices \(S^d_{{case}}\) are shown in Appendix A, for \(d\in \{0.8,0.9,1\}\) and \(case\in \{1.A,2.A,3.A,1.B,2.B,3.B\}\). Note that the similarity degree matrices provided do not depend on the distributions chosen for midpoints and spreads, since the three types of RIs generation processes considered (uniform/uniform, normal/beta and Student’s/chi-square) led to the same expected value for each RI, i.e., the expected value obtained for \(X_i\), for \(i\in \{1,\ldots ,10\}\), when applying an RI generation process based on uniform distributions for midpoints and spreads coincided with the one obtained when applying the alternatives normal/beta and Student’s/chi-square in the different frameworks analyzed.

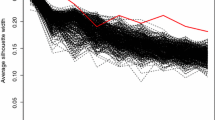

For every case and every sample size chosen, \(r=300\) random samples have been generated and, for each data set, \(B=500\) bootstrap replications of the two-sample similarity bootstrap test has been carried out in order to generate the p-values of the similarity matrix P. Tables 7, 8 and 9 collect the percentage of times in which the algorithm suggests 3 clusters containing the initial RIs of the generation process with respect to all the different cases proposed in Tables 4, 5 and 6 and the three types of distributions considered for \(\text {mid}\,/spr\). Again, the case \(d=1\) of method ClustSim has been compared with method ClustANOVA in this framework.

From the results gathered in Tables 7, 8 and 9 we can conclude that the procedure proposed in this work determines the correct number of clusters (as well as the RIs belonging to each cluster) in most of the suggested situations. However, once again it can be seen that in the case \(d = 1\) the correct detection of clusters is not so suitable, which may be due to the lack of a variability-related term in the corresponding test statistics. In all cases it should be remarked that the results are better when the sample size is larger. This is because both the two-sample similarity bootstrap test and the ANOVA test perform better for moderate/large sample sizes. Finally, there are no major differences in the performance of methods ClustSim and ClustANOVA when considering one type of distributions or another for \(\text {mid}\,/\text {spr}\,\) as well as when considering differences in midpoints, in spreads, or in both.

7 Real-life applications

The clustering method proposed in this work (ClustSim) is applied to the real cases presented in Sect. 2 by considering several similarity grades from which the different variables will be grouped. Situations \(d=1\), \(d=0.9\) and \(d=0.8\) are explored in both scenarios and \(B=5000\) bootstrap replications have been considered in all cases. The p-value matrices computed by following the procedure in the different cases are included in Appendices B and C. Besides, a comparison between case \(d=1\) of ClustSim, the procedure ClustANOVA developed in González-Rodríguez et al. (2009) and the classical hierarchical clustering method just using the midpoints is also provided.

7.1 Application 1: mathematics related beliefs questionnaire

The aim is to classify the RIs associated with the statements \(Q1-Q17\) about mathematics related beliefs according to the opinion given by 117 students. The representation of the sample means of the answers to each statement (which is also an interval) is gathered Fig. 2. It should be noticed that the spreads of the expected values considered are very similar (around 1 in all cases) which implies that the classification will be given based on the differences in midpoints in this case.

\(\mathbf{Case \,d=1\mathbf : }\)

A p-value lower than the significance level \(\alpha =0.05\) in the p-value matrix \({{\mathbf {P}}}_1\) (see Appendix B) implies that the the mean values of the rating of two statements involved in the questionnaire can be considered to be not completely equal. The optimal solution when applying the clustering procedure proposed in this work to the matrix \({{\mathbf {P}}}_1\) is composed by seven clusters: Cluster 1 = {Q2,Q14}, Cluster 2 = {Q3,Q10,Q15}, Cluster 3 = {Q4,Q7,Q9,Q11,Q5}, Cluster 4 = {Q1,Q8,Q13}, Cluster 5 = {Q16,Q17}, Cluster 6 = {Q6} and Cluster 7 = {Q12}.

Cluster 1 connects the statement related with the taste for mathematical learning and the one related to the perseverance when solving math problems; in both cases the average midpoints are around 5.2 points. Statements in Cluster 2 are those whose average midpoints are around 4.4 points and they talk about personal interest in math and self-abilities in understanding math. Cluster 3 includes those statements with average midpoints around 4.75 points which are related with general math liking, effort capacity and self-concept in math compared to other students. On the other hand, statements in Cluster 4 (average midpoints around 5.9 points) refer to learning interest, patience when solving math and self-expectations in understanding math the current course. Cluster 5 contains two statements regarding competitiveness and insecurity when dealing with mathematics with average midpoints around 3.5 points and, finally, the statement making up the sixth cluster has an average midpoint around 8 points and corresponds to the own general expectations in math marks, whereas the one making up the seventh cluster (whit an average midpoint around 6.4 points) entails self-concept about math achievements the current course.

The procedure ClustANOVA has also been applied leading to the same statements classification than ClustSim for \(d=1\). Besides, if the classical hierarchical algorithm for the mean of the midpoints of the intervals is applied, the dendrogram displayed in Fig. 3 is obtained.

Figure 3 shows that similar clusters are given when an appropriate cut of the dendrogram is considered. However, while an objective stopping criterion leads automatically to six groups when applying the other two procedures, further analyses are required to find a suitable cut for this classical approach. In addition, the classical hierarchical clustering approach does not consider the inherent imprecision in the students’ answers, producing a loss of information that can be avoided by using the whole intervals and not only their midpoints while it is true that this loss of information is not well reflected in this example due to the similarity in the spreads of the different expected values.

\(\mathbf{Case \,d=0.9\mathbf : }\)

In this case, a p-value lower than \(\alpha =0.05\) in the p-value matrix \({{\mathbf {P}}}_2\) (see Appendix B) implies that the similarity degree between the mean values of the rating of two statements involved in the questionnaire can be considered to be lower than 0.9. For instance, the p-value equal to .004 for statements Q1 and Q5 means that such statements are perceived in a very different way by the sample of students.

The method is applied to matrix \({{\mathbf {P}}}_2\), and five clusters have been obtained, mainly, Cluster 1 = {Q2,Q14}, Cluster 2 = {Q3,Q4,Q5,Q7,Q9,Q10,Q11,Q15}, Cluster 3 = {Q1,Q8,Q12,Q13}, Cluster 4 = {Q16,Q17} and Cluster 5={Q6}.

Clusters 1, 4 and 5 coincide with Clusters 1, 5 and 6 in case \(d=1\). On the other hand, Cluster 2 is obtained by linking Clusters 2 and 3 in case \(d=1\), having statements with average midpoints between 4 and 5 points. Thus, it includes statements related with general math liking, personal interest in math, self-abilities in understanding math, effort capacity in math and self-concept in math compared to other students. Statements included in Cluster 3 are the result of joining Clusters 4 and 7 in case \(d=1\) and refers to learning interest, patience when solving math, self-expectations in math understanding and math achievements during the current course. The statements included in new Cluster 3 have average midpoints between 5.5 and 6.5 points.

\(\mathbf{Case \, d=0.8\mathbf : }\)

The p-value matrix is \({{\mathbf {P}}}_3\) (see Appendix B) and, in this case, four clusters are obtained when applying the clustering procedure proposed in this work. The first cluster contains all elements of Clusters 1 and 2 for case \(d=0.9\) concerning math liking, math interest, self-concept in math compared to other students, effort capacity and self-expectations in the current course, perseverance and self-abilities in understanding math. All those statements have average midpoints moving betwen 4 and 5.5 points. Finally, Clusters 2, 3 and 4 coincide with clusters 3, 4 and 5 in case \(d=0.9\).

7.2 Application 2: daily concentration of PM10 particles in Spanish cities

Now the aim is to classify the RIs associated with 18 Spanish cities according to their concentration of PM10 particles during 122 days of the central months of 2019. Figure 4 shows the representation of the intervals corresponding to the sample means of the daily concentration of PM10 particles in the 18 cities.

\(\mathbf{Case \,d=1}\)

A p-value lower than \(\alpha =0.05\) in the p-value matrix \({{\mathbf {R}}}_1\) (see Appendix C) indicates that the mean values of the daily concentration of PM10 particles in the two cities compared are not equal. The solution obtained after applying the proposed procedure leads to nine clusters, which are the following: Cluster 1 = {Vitoria, Valladolid}, Cluster 2 = {Oviedo,Madrid}, Cluster 3 = {Palma de Mallorca, Sevilla, Valencia}, Cluster 4 = {Logroño, Las Palmas de Gran Canaria}, Cluster 5 = {Santiago de Compostela, Barcelona, Toledo.}, Cluster 6 = {Pamplona, Zaragoza}, Cluster 7 = {Santander, Ceuta}, Cluster 8 = {Murcia} and Cluster 9 = {Santa Cruz de Tenerife}.

It can be concluded Santa Cruz de Tenerife is, by far, the city that presents the least pollution by PM10 particles in mean in the central months of 2019 followed by the cities composing Cluster 6, that are Pamplona and Zaragoza. On the other hand, cities in Cluster 5 have the highest maximums, although the average intervals are quite wide because the variability of the daily concentration of PM10 particles during these months has been greater. The rest of the clusters contain cities that have had a moderate variability in terms of the concentration of PM10 particles, and said variability for the cities that make up each cluster can be considered to be the same.

The procedure ClusANOVA has also been applied given the analogies of both procedures, and the optimal solution coincides with the one above. Once again, the results obtained in the study of simulations are corroborated in relation to the similar performance of both methods.

On the other hand, the classical hierarchical algorithm for the mean of the midpoints of the intervals has been implemented leading to the classification shown in the dendrogram gathered in Fig. 5.

Figure 5 evidences that most of clusters are given when an appropriate cut of the dendrogram is considered. Nevertheless, in this case it should be noted that the imprecision and variability collected by an interval is lost when the information is summarized by a single value. This is the case, for example, of the city of Murcia, which according to the method proposed in this work does not belong to the same cluster as Santiago de Compostela, Toledo and Barcelona since the variability presented by the mean daily concentration of PM10 particles in the 4 cities cannot be considered to be the same.

\(\mathbf{Case \, d=0.9}\)

Now a p-value lower than \(\alpha =0.05\) in the p-value matrix \({{\mathbf {R}}}_2\) (see Appendix C) implies that the similarity degree between the mean values of the daily concentration of PM10 particles in the two cities compared can be considered to be lower than 0.9.

In this case, the clustering approach is applied to matrix \({{\mathbf {R}}}_2\), and five clusters have been obtained, mainly, Cluster 1 = {Vitoria, Valladolid, Oviedo, Madrid, Santander, Ceuta}, Cluster 2 = {Palma de Mallorca, Logroño, Las Palmas de Gran Canaria, Valencia}, Cluster 3 = {Santiago de Compostela, Murcia, Sevilla, Toledo, Barcelona}, Cluster 4 = {Pamplona, Zaragoza} and Cluster 5 = {Santa Cruz de Tenerife}.

Clusters 4 and 5 are the same that Clusters 6 and 9 in the case \(d=1\), and comprehend the cities with lower minimum pollution and lower variability of such pollution during the analyzed period of 2019. Cluster 1 comes from joining Clusters 1, 2 and 7 in the case \(d=1\), concerning cities that present moderate variabilities in terms of pollution with low minimums. On the other hand, Cluster 2 links Clusters 3 and 4 in case \(d=1\) except for the city of Sevilla which now is added to the previous Cluster 5 to form Cluster 3 (jointly with Murcia) in the new cluster composition. It can be concluded that Sevilla and Murcia are now linked with the cities that have the highest maximum values in terms of pollution, while the cities that include Cluster 2 have more moderate minimums and maximums, as well as a moderate variability. Therefore, it can be observed that if d reduces from 1, the cluster is obtained such that a degree of overlapping of two intervals are rightly considered even if the midpoints are slightly different.

\(\mathbf{Case \,d=0.8\mathbf : }\)

The p-value matrix obtained in this case is \({{\mathbf {R}}}_3\) (see Appendix C) and the application of the approach leads to four clusters: Clusters 1, 3 and 4 coincide with Clusters 1, 4 and 5 in case \(d=0.9\), referred to those cities with lower minimum daily concentration of PM10 particles and lower/moderate variability of such concentration. The second cluster is the result of linking Clusters 2 and 3 in case \(d=0.9\), corresponding to cities with higher maximum values and higher variability in terms of pollution.

8 Final remarks

In this contribution a novel approach for clustering RIs has been introduced. In contrast to the method developed in González-Rodríguez et al. (2009) for clustering simple fuzzy random variables (where RIs can be seen as a particular case of fuzzy random variables) based on the equality of expectations, the linkage criteria suggested here considers the similarity degree of expected values of the RIs belonging to the same cluster. It allows us to previously specify a degree of similarity in (0, 1) between expected values that we are willing to admit. Thus, a two-sample test for the degree of similarity of RIs is applied and a p-value matrix is obtained, that indicates somehow how similar are each pair of RIs and serves as a starting point of the clustering algorithm. The simulation studies to observe the performance of the approach along with the real data applications have shown the benefits of the proposed strategy of analysis, also compared to the method in González-Rodríguez et al. (2009) when the ANOVA test for all types RIs introduced in González-Rodríguez et al. (2012) is considered.

As future directions, the partition of RIs based on fuzzy clustering procedures and the similarity measure included here could be analyzed. The inclusion of the variability of the RIs both in the suggested statistic as well as in the ANOVA statistic in González-Rodríguez et al. (2012) can also be studied. The cluster analysis of more complex kind of variables (as, for instance, fuzzy random variables) considering such similarity measure can also be carried out. In addition, a distance between random intervals instead of a similarity index could be also considered for developing different clustering approaches. Finally, the classification of variables whose outputs are more complex objects in the context of simbolic data analysis based on a similarity grade could also be addressed.

Change history

05 November 2021

Simple differential equations for the correction functions B and C.

References

Aumann RJ (1965) Integrals of set-valued functions. J Math Anal Appl 12:1–12

Billard L, Diday E (2003) From the statistics of data to the statistics of knowledge: symbolic data analysis. J Am Stat Assoc 98:470–487

Blanco-Fernández A, Corral N, González-Rodríguez G (2011) Estimation of a flexible simple linear model for interval data based on set arithmetic. Comput Stat Data Ann 55(9):2568–2578

Blanco-Fernández A, Colubi A, García-Bárzana M (2013) A set arithmetic-based linear regression model for modelling interval-valued responses through real-valued variables. Inf Sci 247:109–122

Cappelli C, D’Urso P, Di Iorio F (2013) Change point analysis for imprecise time series. Fuzzy Sets Syst 225:23–38

Chavent M (1998) A monothetic clustering method. Pattern Recogn Lett 19(11):989–996

Chavent M, Lechevallier Y (2002) Dynamical clustering algorithm of interval data: Optimization of an adequacy criterion based on Hausdorff distance. In: Sokolowski A, Bock HH (eds) Classification, Clustering and Data Analysis. Springer-Verlag, Berlin, Germany, pp 53–59

Chavent M, Kuentz-Simonet V, Liquet B, Saracco J (2012) ClustOfVar: an R package for the clustering of variables. J Stat Softw 50(13):1–16

D’Urso P, Giordani P (2004) A least squares approach to principal component analysis for interval valued data. Chemometr Intell Lab Syst 70(2):179–192

D’Urso P, De Giovanni L, Massari R (2015) Trimmed fuzzy clustering for interval-valued data. Adv Data Anal Class 9(1):21–40

De Carvalho FdAT, De Souza RM, Chavent M, Lechevallier Y (2006) Adaptive hausdorff distances and dynamic clustering of symbolic interval data. Pattern Recogn Lett 27(3):167–179

De Carvalho FdAT, Brito P, Bock HH (2006) Dynamic clustering for interval data based on L2 distance. Comput Stat 21(2):231–250

El-Shaarawi AH, Piegorsch WW (2002) Encyclopedia of environmetrics. Wiley, New York

González-Rodríguez G, Montenegro M, Colubi A, Gil MA (2006) Bootstrap techniques and fuzzy random variables: synergy in hypothesis testing with fuzzy data. Fuzzy Sets Syst 157:2608–2613

González-Rodríguez G, Colubi A, D’Urso P, Montenegro M (2009) Multi-sample test-based clustering for fuzzy random variables. Int J Approx Reason 50:721–731

González-Rodríguez G, Colubi A, Gil MA (2012) Fuzzy data treated as functional data: a one-way ANOVA test approach. Comput Stat Data Anal 56:943–955

Gowda KC, Ravi T (1995) Agglomerative clustering of symbolic objects using the concepts of both similarity and dissimilarity. Pattern Recogn Lett 16(6):647–652

Hajjar C, Hamdan H (2013) Interval data clustering using self-organizing maps based on adaptive Mahalanobis distances. Neural Networks 46:124–132

Horowitz JL, Manski CF (2006) Identification and estimation of statistical functionals using incomplete data. J Econ 132:445–459

Hudgens MG (2005) On nonparametric maximum likelihood estimation with interval censoring and left truncation. J R Stat Soc Ser B 67:573–587

Jaccard P (1901) Étude comparative de la distribution florale dans une portion des Alpes et des Jura. Bull Soc Vaudoise Sci Nat 37:547–579

Kojadinovic I (2004) Agglomerative hierarchical clustering of continuous variables based on mutual information. Comput Stat Data Anal 46:269–294

Kreinovich V, Nguyen HT, Wu B (2007) On-line algorithms for computing mean and variance of interval data, and their use in intelligent systems. Inf Sci 177(16):3228–3238

Magnac T, Maurin E (2008) Partial identification in monotone binary models: discrete regressors and interval data. Rev Econ Stud 75:835–864

Maharaj EA (1996) A significance test for classifying ARMA models. J Stat Comput Simul 54:305–331

Matheron G (1975) Random sets and integral geometry. Wiley, New York

Nicolau F, Bacelar-Nicolau H (1998) Some trends in the classi.cation of variables. In: Hayashi C, Ohsumi N, Yajima K, Tanaka Y, Bock H, Baba Y (eds) Data science, classiffication and related methods. Springer, Berlin, pp 89–98

Park S, Lim J, Choi H, Kwak M (2020) Clustering of longitudinal interval-valued data via mixture distribution under covariance separability. J Appl Stat 47(10):1739–1756

Ralambondrainy H (1995) A conceptual version of the K-means algorithm. Pattern Recogn Lett 16(11):1147–1157

Ramos-Guajardo AB (2015) Similarity Test for the Expectation of a Random Interval and a Fixed Interval. In: Grzegorzewski P, Gagolewski M, Hryniewicz O, Gil MA (eds) Strengthening links between data analysis and soft computing. Advances in Intelligent Systems and Computing vol 315, pp 175–182

Ramos-Guajardo AB, Grzegorzewski P (2016) Distance-based linear discriminant analysis for interval-valued data. Inf Sci 372:591–607

Ramos-Guajardo AB, Blanco-Fernández A (2017) Two-sample similarity test for the expected value of random intervals. In: Ferraro MB, Giordani P, Vantaggi B, Gagolewski M, Gil MÁ, Grzegorzewski P, Hryniewicz O (eds) Soft methods for data science. Advances in Intelligent Systems and Computing vol 456, pp 423–430

Ramos-Guajardo AB, González-Rodríguez G, Colubi A (2020) Testing the degree of overlap for the expected value of random intervals. Int J Approx Reason 119:1–19

Rodriguez SIR, De Carvalho FdAT (2019) Clustering interval-valued data with automatic variables weighting. In: Proceedings of the international joint conference on neural networks pp 1–8

Shao J, Tu D (1995) The Jackknife and Bootstrap. Springer-Verlag, New York

Shawe-Taylor J, Cristianini N (2004) Kernel methods for pattern analysis. Cambridge University Press, Cambridge

Sinova B, Colubi A, Gil MA, González-Rodríguez G (2012) Interval arithmetic-based linear regression between interval data: discussion and sensitivity analysis on the choice of the metric. Inf Sci 199:109–124

Xu X, Lu J, Wang W (2018) Hierarchical clustering of complex symbolic data and application for emitter identification. J Comput Sci Technol 33(4):807–822

Acknowledgements

The research in this paper has been supported by the Spanish Government through MINECO-18-MTM2017-89632-P Grant. Its financial support is gratefully acknowledged.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A Similarity degree matrices for the situations considered in Tables 7, 8 and 9

Appendix B p-value matrices generated in the real-life Application 1

Appendix C p-value matrices generated in the real-life Application 2

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ramos-Guajardo, A.B. A hierarchical clustering method for random intervals based on a similarity measure. Comput Stat 37, 229–261 (2022). https://doi.org/10.1007/s00180-021-01121-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00180-021-01121-3