Abstract

High-quality machining is a crucial aspect of contemporary manufacturing technology due to the vast demand for precision machining for parts made from hardened tool steels and super alloys globally in the aerospace, automobile, and medical sectors. The necessity to upheave production efficiency and quality enhancement at minimum cost requires deep knowledge of this cutting process and development of machine learning-based modeling technique, adept in providing essential tools for design, planning, and incorporation in the machining processes. This research aims to develop a predictive surface roughness model and optimize its process parameters for ultra-precision hard-turning finishing operation. Ultra-precision hard-turning experiments were carried out on AISI D2 of HRC 62. The response surface method (RSM) was applied to understand the effect of process parameters on surface roughness and carry out optimization. Based on the data gained from experiments, machine learning models and algorithms were developed with support vector machine (SVM), Gaussian process relation (GPR), adaptive neuro-fuzzy inference system (ANFIS), and artificial neural network (ANN) for the prediction of surface roughness. The results show that all machine learning models gave excellent predictive accuracy with an average MAPE value of 7.38%. The validation tests were also statistically significant, with ANFIS and ANN having MAPE values of 9.98% and 3.43%, respectively. Additional validation tests for the models with new experimental data indicate average R, RMSE, and MAPE values of 0.78, 0.19, and 36.17%, respectively, which are satisfactory. The RSM analysis shows that the feed is the most significant factor for minimizing surface roughness Rɑ, among the process parameters, with 92% influence, and optimal cutting conditions were found to be cutting speed = 100 m/min, feed = 0.025 mm/rev, and depth of cut = 0.09 mm, respectively. This finding can be helpful in the decision-making on process parameters in the precision machining industry.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Over the years, the manufacturing sectors have developed various technologies for improving production efficiency and maximizing revenues at a reduced cost to be relevant in the competitive global market. These indices correlate with higher material removal rate, efficient machining time, and excellent surface quality finish on hardened steels through high-precision manufacturing. One such technology employed in phasing out the traditional grinding operations for better accuracy and surface quality in machining hard materials, superalloys, and other hard-to-cut materials to desired specification of components and parts is the ultra-precision hard-turning (UPHT) for finishing operations. Hard turning is a machining method for cutting the cylindrical surface of a workpiece of a hardness value of more than 45 HRC with a single-point cutting tool [1,2,3]. Ultra-precision hard-turning is a machining process involving high-precision machines and cutting conditions to produce parts with extremely high surface finish and geometric accuracy. It is typically used to produce parts with a surface roughness of less than 0.1 µm and form an accuracy of less than 1 µm.

Ultra-precision hard-turning typically requires specialized machine tools and cutting tools, such as diamond or CBN turning tools with negative rake angles, which can produce high-quality surface finishes and precise dimensional tolerances. The cutting conditions, such as cutting speed, feed rate, and depth of cut, must also be carefully controlled to achieve the desired level of precision. This process has been applied in different industries but not limited to the automobiles, aerospace, medical, and optical industry, where high precision and surface quality are crucial for optimal performance and economical manufacturing [4] and be more efficient than the grinding process [5]. Ultra-precision hard-turning is also used to produce machine parts, such as gears and bearings, and optical components, such as lenses and mirrors [6]. It is worth mentioning that the ultra-precision hard-turning process is a challenging task. It requires high skill and experience to achieve the desired precision and surface finish level. It also requires advanced measurement and control systems to ensure the finished parts meet the specifications.

In recent years, there have been studies on developing new cutting tools and techniques for hard turning. The cubic boron nitride (CBN) is a synthetic material recognized for its exceptional hardness, wear resistance, and thermal stability at high temperatures. It is second only to diamond in terms of hardness and is mostly well-suited for machining hardened steels, cast irons, and other difficult-to-cut materials [7,8,9]. More so, the polycrystalline cubic boron nitride among the likes of carbide and ceramic cutters is predominantly accepted for machining AISI D2 as perfect replacement for expensive grinding operations [10]. Additionally, research has been done on optimizing hard machining process parameters such as cutting speed, feed rate, depth of cut, chamfer angle (inserts), and novel prime inserts to improve surface finish, dimensional accuracy, and tool life [11,12,13]. Other investigations involving the use of coolant and lubrication during hard turning have been reported to have some remarkable improvement in surface quality and tool life, too [14, 15].

Modern manufacturing is undisputedly dependent on time, resources, and deployment of information from machines and processes across spatial constraints. These events enhance accuracy and dependability in predicting resources needed and allocation, maintenance scheduling, remaining useful life of tools and equipment, and the surface quality of product parts. Therefore, it is necessary to integrate machine learning (ML) algorithms to help actualize the goals of modern manufacturing. Artificial intelligence is the use of machines to imitate the functions of the human brain, using computers smartly based on algorithms [16, 17]. In contrast, machine learning is a branch of artificial intelligence that examines algorithms that can learn independently directly from input variables [13]. Machine learning algorithms are trained to generate predictions using statistical methodologies, discover vital insights in data mining schemes, and can effectively analyze extensive data, identify complex patterns, and make relevant deductions that are valuable for tool condition monitoring and damage detection of components [18,19,20,21]. Machine learning is generally categorized into three areas namely, thus, supervised, unsupervised, and reinforcement learning. Supervised learning has been reported in works of literature, among which are but not limited to artificial neural networks, genetic algorithms, response surface methods, support vector machines, decision trees, Gaussian process regression, random forest, adaptive neuro-fuzzy inference systems, logistic regression, and naïve Bayes classifiers [13]. In addition, ML methods have been applied in process system engineering, providing alternatives to first principle experimental models for traditional process engineering, for instance, in modeling, product design, fault diagnosis, and predictions of responses given input parameters [22, 23]. According to Imad et al. [24], the application of ML techniques in modeling and optimization problems in intelligent machining systems, including genetic algorithm (GA) and particle swarm optimization, has 23% each; due to their adaptability in comparison to other techniques for complex problems, followed by non-dominated sorting genetic algorithm (NSGA) recorded 15%, ANN and simulated annealing (SA) has 9%, multi-objective genetic algorithm (MOGA) and firefly algorithm (FA) recorded 6% each. In contrast, ant colony (ACO), grey relation algorithm (GRA), and response surface method (RSM) account for 3%, respectively, as of the period of the report.

Tang et al. [25] investigated the wear performance of the PCBN tool in dry hard turning of AISI D2 with various hardness from 40 to 60 HRC values with fixed cutting parameters of feed 0.1 mm/rev, depth of cut 0.15 mm, and cutting speed of 250 mm/min. The results show that workpiece hardness has a significant effect on flank wear. Patel and Gandhi [26] implored full factorial design of experiment (FFD) in the empirical modeling of surface roughness during hard turning of AISI D2 using the CBN tool. The analysis considered cutting speed, feed rate, and nose radius as cutting parameters. The results show that feed rate has the most significant impact on surface roughness, and the empirical model predictions are in close agreement with the experimental results when tested within conditions.

Rafighi et al. [27] utilized Taguchi L36 mixed orthogonal array design of experiment (DoE) in investigating the effect of CBN and ceramic inserts during the hard turning of AISI D2. An ANN and multivariate multiple regression (MMR) models were developed to predict surface roughness and force components. The results show that the ANN model had a better prediction accuracy than MMR, with 99.5% and 83.9%, respectively. More so, it was recorded that feed rate has a more significant effect (90.2%) on surface roughness than nose radius, cutting insert, depth of cut, and cutting speed. Kumar et al. [28] designed a Taguchi L9 orthogonal array to evaluate the effect of process parameters on cutting force and surface roughness on the hard turning of AISI D2 using CBN inserts. Results show that the feed rate has a more significant effect (56%) on cutting force than cutting speed while cutting speed has a more significant effect (72%) on surface roughness than feed rate. On the other hand, signal-to-noise ratio analysis was used for the optimal process parameters proposed at 80 m/min, 0.109 mm/rev, and 0.1 mm for cutting speed, feed, and depth of cut, respectively.

Srithar et al. [10], with a Taguchi L27 DoE, investigated the effect of process parameters on surface roughness during hard turning of AISI D2 using the PCBN tool. Considering feed rate, cutting speed, and depth of cut as process parameters, the results show that the feed rate is the most influential factor of the three cutting parameters. Sarnobat and Raval [29] utilized a central composite inscribed (CCI) design in investigating the effect of tool edge geometry and workpiece hardness on surface roughness and surface residual stress when turning AISI D2 using the CBN tool of the light, standard, and heavy-honed geometries, respectively. The results show that feed rate overall impacts the residual stress and surface roughness more than the depth of cut, hardness, and tool edge geometry. In contrast, CBN light-honed tool geometry in the low to moderate range cutting parameters generates a compressive residual stress state and better surface finish.

Zhang et al. [30] investigated the effect of process parameters, viz. feed, depth of cut, and cutting speed on surface quality, cutting temperature, and tool life using coolant and lubrication versus a dry environment during hard cutting of AISI D2 with PCBN. The results show that feed rate is the most influential process parameter on surface roughness in dry and lubricated conditions while cutting speed is the most significant factor for tool interface temperature and flank wear. It was concluded that the cooling and lubrication conditions could improve the surface quality and prolong tool life. Takács and Farkas [31] investigated the effect of varying the feed rate and cutting speed on cutting force components during ultra-precision hard-turning of AISI D2 using the CBN tool. The results show that the higher the feed rate, the larger the passive and cutting force components. The finite element model was developed to simulate the chip removal process, and it was deduced that the theoretical cutting force values compared to measured values were 45–120% higher.

Furthermore, to improve the surface quality and tool life, various machine learning techniques for predictive or/and optimization models have been employed in hard machining operations related to hardened AISI D2 or CBN cutters, providing good predictive accuracy and optimum combinations of process parameters. These results have been found more efficient and cost-effective in the long run compared to traditional control turning optimization methods [32,33,34,35,36,37,38,39,40,41,42].

Consequently, upon careful literature reviews, it is glaring that the optimization of process parameters which are essential selection considerations to attain better accuracy and surface quality during UPHT is insufficient as the earlier focus has been on dry hard turning operation with conventional lathe. More so, most investigation has implored DoE on Taguchi methods L9, L16, L27, L36 mixed orthogonal array and central composite design (CCD) and a few on FFD, which is a crucial aspect in precision machining. The inappropriate selection of process parameters will affect the surface quality and cost of production. Therefore, more investigation is required to evaluate the effect of process parameters deploying a full factorial design to include all possible combinations of the process parameters.

In addition, various researches have reported the application of different ML techniques as a predictive or optimization model; however, there are limited reports on the investigation of all RSM, GP, SVM, ANFIS, and ANN machine learning techniques, especially during UPHT of AISI D2 using the CBN cutting tool. It is reported that no appropriate ML technique has been certified as suitable for prediction [43]; thus, evaluating the performance of these machine-learning techniques is necessary.

Conclusively, very few reports have implored additional test data to validate the predictive accuracy of developed models for industrial applications. Therefore, the current research seeks to investigate the optimization of process parameters using RSM composite desirability with full factorial design method for all possible combinations of factors and prediction of surface quality using GP, SVM, ANFIS, and ANN in UPHT of AISI D2 using CBN tool and implore additional experimental data to validate the models for effective integration into industrial manufacturing technology and Industry 4.0 environment.

2 Experimental objectives, setup, and methods

2.1 Design of experiment

The specific objectives of the experiment include the prediction of surface quality using different machine learning models with various training algorithms, viz. Gaussian process regression (GPR), support vector method (SVM), adaptive neuro-fuzzy inference system (ANFIS), and artificial neural networks (ANN) and evaluating their performances with experimental results. Moreover, the optimization of process parameters (cutting speed, depth of cut, and feed), imploring the full factorial design for all possible combinations with the response surface method (RSM), in other to obtain the optimal combination of process parameters for minimum surface roughness or better surface quality during the ultra-precision hard-turning of AISI D2 with a CBN tool.

Ultra-precision hard-turning experiments were carried out on hollow cylindrical AISI D2 workpieces with outer and inner diameters of 12 mm and 60 mm, respectively. The length of the workpiece is 40 mm with a hardness value of 62 HRC (Fig. 1). The cubic boron nitride (CBN) cutters and inserts serve as fundamental tools utilized in machining operations, particularly in scenarios demanding high-speed cutting and removal of hard materials. The used insert for this experiment was SECO insert designated with DCGW11T308S-01020-L1-B CBN010. The manufacturer recommends this grade for finishing purposes [44]. The CBN cutter is an uncoated solid full-faced layer and brazed single tip of approximately 50% CBN grade with an average grain size of 2 µm, with TiC ceramic binder, nose radius of 0.8 mm, and chamfer size and rake angle of 0.10 mm and 20°, respectively. The tool shank has an ISO designation of SDHCL2020K11.

The operations were performed on a Hembrug ultra-precision machine (with Siemens 840 D CNC control) with a maximum spindle speed of 6000 rev/min, with repetitive and positioning accuracies of ± 1 µm (1 µm/150 mm), respectively. The AISI D2 steel was selected because of its great importance to the manufacturing industries like aerospace, mold-die making, and automotive, for die stamping, cold forming rolls, slitters, chipper knives, shear blades, tire shredders, and the list is endless due to its exceptional wear resistance and toughness properties [26], as shown in Table 1. A high-precision pneumatic 3-jaw chuck clamped the workpiece, and concentricity was checked with a dial indicator and versatile digital microscope. The front surface of the workpieces was machined in three sections, each with a new parameter combination (Fig. 2 (a)). The applied machine is a CNC machine with a’controller, which keeps the vc constant, coded in the NC codes (G-codes), by automatically calculating and setting the rotational speed in the case of face turning.

A full factorial design was implemented for the experiments for all possible combinations, with three factors having three levels each, providing 27 experiments (Table 2). The applied factors are as follows: cutting speed, \({v}_{c}\) (m/min), feed f (mm/rev), and depth of cut, \({a}_{p}\)(mm).

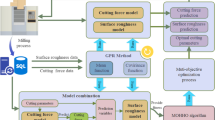

However, the experiment was done with two replicates; thus, 81 experiments were carried out. The essence of repeating the experiment twice is to ensure that error is minimized to validate the experimental process. The cutting environment is shown in Fig. 2b. The AISI D2 specimen is removed at the end of each systematic combination of 3 different parameters in each run. The surface roughness was measured with a Mitutoyo SJ-400 surface roughness tester with a diamond tip radii of 2 µm (Fig. 2c). Each surface was measured three times, and the arithmetic mean of these three measurements gives the roughness of the given surface. Regardless of this, the experiments were repeated three times. More so, to train the model, the data of all three experiments were used by taking their average (Rɑ). The experiment and ML model development have been represented in the flowchart, as shown in Fig. 3. In the case of successful training and testing of the model and the performance checked with reliable performance indicator MAPE [45, 46] and achieved satisfied result, the model is then further validated using validation test data and an additional test data (new experiment, with new dataset). Thus, if the validation performance is satisfied, the model is accepted, as it can satisfactorily predict the response for data from similar experimental conditions. However, if the model cannot be validated successfully, the model was tuned-up again by changing training function, training algorithm, membership function, number of neurons, rules, hidden layers, etc., in order to improve on it.

2.2 Response surface method

The response surface methodology (RSM) is a statistical approach utilized to examine the connections between various process or input parameters and the response of a system. It is a design of experiments (DoE) technique that involves constructing a mathematical model through several experimental trials. The goal of RSM is to enhance response by altering the input levels, such as process parameters, to discover the optimal combination that results in the preferred outcome. The RSM is expressed as shown in equation below [47, 48].

where Y is the predicted response, X are process parameters (X1, X2, X3,..., Xk), i and j are the linear and quadratic coefficients, respectively, k is the number of parameters, \(\beta\) is the regression coefficient, and \(\varepsilon\) is the experimental error. In this work, RSM in Minitab 21 statistical software was used to evaluate the effects of the process parameters on the response and approximate the best combinations of process parameters for optimal response.

2.3 Support vector machine

The support vector machine (SVM) is a popular machine learning algorithm that can be used for classification or regression and prediction for nonlinear problems. The fundamental concept of SVM is to locate the hyperplane that optimally divides the data points into their corresponding classes. SVM aims to maximize the margin, which refers to the space between the hyperplane and the nearest data points. These nearest data points are referred to as support vectors and are crucial in defining the hyperplane [49, 50]. Thus, the function for predicting new values is expressed in the equation:

where \({\alpha }_{n}\) and \({\alpha }_{n}^{*}\) are non-negative multipliers for each observation \({X}_{n}\), \(b\) is the bias, and \(G\left({X}_{n}, X\right)\) is the Gaussian kernel function. The SVM in this work was implemented using the Regression Learner of Statistics and Machine Learning Toolbox in MATLAB R2022b.

2.4 Gaussian process regression

The Gaussian process regression (GPR) is a type of non-parametric Bayesian machine learning method used for predicting target values of new data points based on the relationship between features and target values found in the training data [51,52,53]. The GPR can be summarized for prediction as expressed in the equation:

where \(m\left(X\right)\) and \(k \left(X, {X}{\prime}\right)\) represent the mean function often taken as zero with no loss of generality and kernel covariance function regulating model smoothness, respectively. The GPR in this work was deployed using the Regression Learner of Statistics and Machine Learning Toolbox in MATLAB R2022b.

2.5 Artificial neural network

Artificial neural networks (ANNs) are computer programs that aim to mimic the way the human nervous system works by replicating its complex network structure [54, 55] that could validate and predict responses from process parameters [56, 57]. These networks consist of an input layer, one or more hidden layers, and an output layer, each with multiple neurons, in which the neurons between adjacent layers are fully interconnected [45]. The ANN model works thus, with input or process parameters \({X}_{i}\) connected and transferred by multiplication with weights \({W}_{i}\) and summed with a bias, B, and an activation function (sigmoid, hyperbolic tangent, threshold, or ReLU) to obtain the output. A simple ANN model equation is expressed in the equation below [45].

During the several iterative processes, the output parameter(s) are compared with the original ones and adjusted accordingly to minimize error and maximize model accuracy. A simple multilayer perceptron for this work is presented in Fig. 4, with process parameters and response parameters such as cutting speed, depth of cut, feed, and surface roughness, respectively. The neural networks toolbox in MATLAB R2022b was used for the model training.

where \({X}_{i}\) are inputs or process parameters (\({X}_{1}, {X}_{2, } {X}_{3}, . . . {X}_{n}\)), \({W}_{i}\) are weights (\({W}_{1}, {W}_{2, } {W}_{3}, . . . {W}_{n}\)), and \(B\) is the bias.

2.6 Adaptive neuro-fuzzy inference system

The adaptive neuro-fuzzy inference system (ANFIS) is a fuzzy inference system that utilizes an adaptive algorithm to determine the fuzzy system parameters from the available data. ANFIS is an integrated machine learning system of two recognized methods, artificial neural networks (ANN) and fuzzy logic systems (FLS). ANFIS combines the strengths of both ANN and FLS to create a powerful and flexible model that can handle complex and nonlinear problems with fewer process and response parameters [38]. The ANFIS consists of a fuzzy inference system (FIS), responsible for decisions based on fuzzy rules, and the adaptive algorithm, which is the mechanism for adjusting the parameters of the FIS and enhancing its performance over time (backpropagation or the hybrid). Then comprises an input, fuzzy, rule, normalization, and output layers. The input layer receives the process parameters, which are used to make the predictions, and the fuzzy layer imposes a fuzzy rule (\({W}_{i}\)) on the inputs to process intermediate output, referred to as the membership functions (MF). The normalization layer (N, \(\overline{{W }_{i}}\)) normalizes the intermediate output between 0 and 1. The fuzzy output layer (\({W}_{i} {f}_{i}\)) combines the output from normalization using a specific weighted method to produce a linear membership function. In contrast, the final output layer defuzzifiers (\(\sum {W}_{i} {f}_{i}\)) and converts the fuzzy output to a concise and clear output for decision-making or prediction. This process is illustrated in Fig. 5. The ANFIS model for this investigation was developed using the NeurofuzzyDesigner toolbox in MATLAB R2022b.

2.7 Models performance evaluation

There are various statistical performance measures for evaluating predictive accuracy between two variables. In this case, we have measured values and predicted values. For this work, we shall be considering the correlation coefficient (R), root mean squared error (RMSE), and the mean absolute percentage error (MAPE).

2.7.1 Correlation coefficient (R)

The correlation coefficient (r or R) measures the relationship between two variables and varies between + 1 and 1. In the case of modeling, the relationship between the measured or experimental value (M) and the predicted value (P) can be evaluated by this parameter. Thus, an R-value that tends toward 1 means a strong positive correlation between the measured and the predicted values, and if it tends toward zero, the correlation will be weak. In contrast, negative values indicate a negative correlation between the two variables, and − 1 indicates a strong negative correlation. Therefore, the conventional polarity sign of R adopts the coefficient of regression [46]. It is also known as Pearson’s correlation coefficient and is expressed in the equation:

2.7.2 Root mean squared error (RMSE)

The root mean squared error (RMSE) is the square root of the sum of squared errors divided by the number of observations. The RMSE can be expressed in equation [27, 58]. In this case, the best value is that which tends to zero, while the worst is on the positive infinity [59].

2.7.3 Mean absolute percentage error (MAPE)

The mean absolute percentage error (MAPE) is the average of absolute errors divided by the measured or experimental values. The MAPE is the most effective measure in assessing the predictive accuracy of models in that it measures relative performance [45, 46, 57]. It is expressed in the equation below [58, 60]. The predicted values can be interpreted according to Lewis [46]; a model is classed as Highly accurate (if MAPE is less than 10), Good prediction (if MAPE is between 10 and 20%), and Satisfactory (if MAPE is between 20 and 50%). In comparison, Inaccurate (if MAPE is greater than 50%).

where \({P}_{i}\) = predicted values, \({M}_{i}\) = measured values, \(\overline{M}\) = mean of measured values, \(\overline{P}\) = mean of predicted values, \(N\) = number of observations, as represented in Eqs. 5-7.

3 Results and discussion

The process parameters, namely cutting speed (vc), feed (f), and depth of cut (ɑp), selected for the experiment, and the measured average surface roughness (Rɑ) have been investigated using the RSM in Minitab 21. The effect of process parameters on the response parameter has been reported in this section. In developing each ML model (ANFIS, SVM, GPR, and ANN), the input and measured parameters were divided systematically, training and testing the models. In the process parameters, the measured Rɑ and the ML-predicted Rɑ are summarized in Table 3.

3.1 Analysis of variance

The analysis of variance (ANOVA) in Table 4 shows that among the process parameters, only the feed rate has a significant effect on the surface roughness, and that the p-values at the linear, squares, and two-way interactions of the model were found to be far less than p-value at \(\propto\) = 0.05. The feed rate percentage contributions on surface roughness are about 92%, 82%, and 8% in the linear, square, and 2-way interactive effect (with cutting speed), respectively, as seen in the Normal plot and Pareto chart of standardized effect (Fig. 6).

The RSM model for surface roughness has an R-squared value of 94.93%, which shows that the model is highly satisfactory under the confidence interval of 95% at a significance of α = 0.05. The surface roughness model is found to be a quadratic regression, as expressed below, which is affirmed by the main effect plots shown in Fig. 7.

The main effect plot shows that the surface roughness decreases sharply as the cutting speed increases from 75 to 120 m/min, then gradually decreasing as the cutting speed increases to 175 m/min. On the other hand, surface roughness decreased gradually as the depth of cut increased from 0.06 to 0.08 mm and was observed to increase gradually at a further increase to 0.1 mm. However, it is glaring that the surface roughness increases for every increase in the feed from 0.025 to 0.125 mm/rev. The current results and the correlations found are similar to the general findings related to the effect of feed. This outcome could be explained by the direct relationship between surface roughness and feed, as represented in the equation below [27], where “nr” is the nose radius.

Figure 8 shows the contour plots of surface roughness, in which surface roughness is found to be less than 0.3 µm at the interaction between feed rate and cutting speed (for f ≤ 0.06 mm/rev and 75 m/min ≤ vc ≤ 175 m /min) with a constant value of ɑp = 0.08 mm, however for higher feeds, the surface roughness increases. Similarly, the surface roughness is found to be less than 0.3 µm at the interaction between feed and depth of cut (for f ≤ 0.06 mm/rev and 0.06 mm ≤ ɑp ≤ 0.1 mm) with constant value vc = 125 m/min; however, surface roughness increases with feeds higher than 0.06 mm. For the interaction between depth of cut and cutting speed (for ɑp ≤ 0.098 mm/rev and 80 m/min ≤ vc ≤ 175 m/min) with a constant value of f = 0.075 mm/rev, the Rɑ was found to be within 0.3 and 0.4 µm. Surface roughness values within these regions could be spotted on the contour as shown in Fig. 8 for vc = 81.9 m/min and f = 0.052 mm/rev Rɑ = 0.27 µm, while for ɑp = 0.098 mm and f = 0.058 mm/rev Rɑ = 0.28 µm.

3.2 Development and evaluation of machine learning models

The ML models were developed using different training algorithms, transfer functions, membership functions, and neurons, depending on the type of ML in each case. The best model in each technique that satisfied the training and test evaluation criteria based on the statistical performance indicators (R, RMSE, and MAPE) was saved and validated using additional test data afterward.

3.2.1 GPR model development

The regression learner application in MATLAB R2022b was used, and the data set was divided into 80% and 20% for training and testing (cross-validation) for the modeling. All GPRs of different regression learner algorithms were trained, including rational quadratic, squared exponential, matern 5/2, and exponential. The GPR algorithm with squared exponential algorithm of the isotropic kernel function, constant basic function and automatic kernel scale, sigma hyperparameters, the training time of 9.23 s, and prediction speed 390 obs/s was found to be the best with R-squared and RMSE values for trained data to be 0.88 and 0.08 respectively, and that for the test data were 0.77 and 0.11, respectively. These values are statistically significant compared to other GPRs. Figures 9 and 10 show the response plot and the predicted vs. measured values plot of the squared exponential GPR model.

3.2.2 SVM model development

The SVM model was developed using the regression learner in MATLAB R2022b. The data set was divided into training (80%) and testing (20%) data. The five inbuilt different kernel functions of SVM were investigated; they include SVM linear, quadratic, cubic, fine Gaussian, medium Gaussian, and coarse Gaussian. After all evaluations, the cubic SVM, with automatic kernel scale, box constraint, and epsilon hyperparameters and training time of 1.72 s with a predictive speed of 980 obs/s, was found to be better than the other four SVMs with R-squared and RSME values 0.88 and 0.07, respectively for training, while that of testing are 0.62 and 0.14, respectively. The response plot and prediction vs. measured response plot are shown in Figs. 11 and 12, respectively.

3.2.3 ANN model development

In developing the ANN models, the feedforward backpropagation with sigmoid hidden neurons and linear output neurons was considered due to its suitability for regression tasks with a gradient descent with momentum weight and bias adaptive learning function (learndgm). The data partitioning for each model was 80% and 20% of available data for training and testing, respectively. Three different training functions were used, namely thus, Levenberg–Marquardt (trainlm), Bayesian regularization (trainbr), and scaled conjugate gradient (trainscg). Two different types of architecture were considered, one hidden layer (3-N-1) and two-hidden layers (3-N–N-1), where N equals 6, 10, 12, and 20, and where the numbers 3 and 1 represent the number of input and output parameters, respectively. The results show that the Bayesian regularization model with one hidden layer 3–12-1 has the best predictive accuracy and minimum error with R, MSE, and RMSE values 0.9867, 0.0012, and 0.0346, respectively, at the 28th epoch. The performance and regression plots of the selected ANN model are shown in Figs. 13 and 14.

3.2.4 ANFIS model development

The ANFIS model, as earlier discussed, has the strength of fuzzy logic and ANN integrated by learning from the process and the capability of managing uncertain information. Therefore, it approximates nonlinear and uncertain systems without requiring an actual mathematical model. The ANFIS model was developed using the NeuroFuzzyDesigner in MATLAB R2202b. The model has three input parameters (cutting speed, feed rate, and depth of cut) and one output parameter (surface roughness) using the Sugeno fuzzy inference system, which was designated the m422c model, as shown in Fig. 15. It must be noted that different models were tested and named according to the membership function (MF) in each input parameter; however, the best was m422c. The ANFIS model m422c implies that 4-MF, 2-MF, and 2-MF are in the input parameters, cutting speed, feed rate, and depth of cut, respectively. The model utilizes Gaussian MF (gaussmf) in each input parameter, constant MF at the output with 16 rules connected with IF and AND logic operators, and default defuzzification method of weight average of all rules (wtaver). It was trained with the hybrid FIS optimization method. The architecture of the ANFIS model is shown in Fig. 16.

The result of the developed ANFIS model is statistically significant, with R and RMSE values of 0.99 and 0.03, respectively, for the training data. Conversely, that for the testing data was found to be 0.98 and 0.06, respectively (Table 5). More so, the surface interaction plots from the ANFIS model validated our ANOVA results using RSM, showing a rapid increase in surface roughness as the feed increases. In contrast, cutting speed does not significantly affect surface roughness (Fig. 17). Similarly, in Fig. 18, the feed shows a direct relationship with surface roughness. In contrast, the depth of cut has no significant effect. Finally, the cutting speed and depth of the cut surface interaction plot show no significant effect on the surface roughness (Fig. 19).

Therefore, based on the results, it could be seen that the predictive accuracy of all proposed ML models is said to be highly accurate since MAPE values are less than 10% for the trained data and good accuracy for trained data (since MAPE values are between 10 and 20%) [46]. Table 5 summarizes the statistical performance indicators of ML models. All models have high correlation coefficient R-values (ranges from 0.94 to 0.99) and low RMSE-values (ranges from 0.03 to 0.08) which are statistically satisfactory because they show a strong positive correlation between the measured and the predicted values and minimum errors. Similarly, validation test performance sees the R-values (from 0.88 to 0.99) and low RMSE-values (from 0.03 to 0.14), which are statistically satisfactory because they show a strong positive correlation between the measured and the predicted values and minimum errors respectively. However, it could also be deducted that the ANFIS and ANN models outperformed other ML model as highly accurate with MAPE values of 6.24% and 9.30%, respectively for trained data. While the validation test of the models were found to be 9.98% and 3.43% for ANFIS and ANN, respectively. More so, the ANN model has R (0.99) and RMSE (0.03) for trained data while the validation test values were R (0.99) and RMSE (0.03). Similarly, ANFIS has R (0.99) and RMSE (0.03) for trained data, then R (0.98) and RMSE (0.06) for validation test.

Figure 20 shows the comparative plots of measured and predicted surface roughness (Ra) values from the different developed machine learning models. It shows very close similarity and high correlation between the measured and predicted values. The predictive accuracies for the developed models are highly accurate, as the validation test affirms [45, 46]. However, it is essential to validate these models with additional experimental test data to recommend industrial applications and future integration for predicting surface quality in real-time.

3.3 Additional validation test of ML models

Furthermore, to validate the predictive accuracy of developed models for industrial application, an additional experiment test was carried out using a new set of machining parameters, and the corresponding surface roughness was measured. The cutting parameters were subsequently used as the input data for the developed models to predict the surface roughness (as output). The additional experimental test cutting parameters, the measured, and the predicted surface roughness have been summarized in Table 6. The additional validation test results are summarized in Table 7, and comparative plots of ML models predicted Rɑ with the measured Rɑ presented in Fig. 21. The R-values for GPR, SVM, ANN, and ANFIS are 0.79, 0.79, 0.78, and 0.81, respectively, showing strong correlations between measured and predicted values. Furthermore, it is essential to note that the MAPE values for all models were satisfactorily accurate, with an average value of 36.17% (MAPE is between 20 and 50%). However, it could be inferred that the ANFIS model has better predictive accuracy with a strong positive correlation between the measured and predicted values compared to other ML models, with an R-value of 0.81, a minimum RMSE of 0.17, and a MAPE value of 32.34%, which are statistically significant.

Table 6 shows some similarity among the ML models’ predicted surface roughness values with a strong correlation coefficient (R) value for GPR, SVM, ANFIS, and ANN models having 0.79, 0.79, 0.81, and 0.78, respectively. Figure 21 presents the comparison plot of measured and predicted surface roughness of additional validation test experiments. Additionally, it is interesting to note that there is a wide margin between the measured and predicted values of the ML models. This margin can be adequately explained by looking at the MAPE values in Table 7, in that the average error of the model’s prediction is 36.17% for entirely new data not used in model development. This result implies that the ML models’ predictive accuracy is statistically satisfactory because their errors are within 20–50%. However, the ANFIS has a better predictive accuracy among the developed ML models with a minimum MAPE value of 32.34% using additional new test data.

3.4 Optimization of process parameters

Furthermore, to investigate the appropriate process parameter selections for good surface quality in the hard-turning operations, the response surface method (RSM) was implored to optimize the process parameters to minimize surface roughness. After successful iterations, five different solutions were proposed for minimum Rɑ values. It could be observed that the depth of cut could be held at 0.08 or 0.09 mm, and feed of 0.025 mm or 0.029 mm with varying cutting speeds of 77 m/min, 78 m/min, 100 m/min, 120 m/min, and 121 m/min. The smallest surface roughness value investigated was 0.207 µm with process parameters as follows: 100 m/min, 0.025 mm/rev, and 0.09 mm for cutting velocity, feed, and depth of cut, respectively. The proposed optimization process parameter combinations for minimum surface roughness are summarized in Table 8.

4 Conclusion

This research has conducted a comprehensive review of predictive modeling related to the ultra-precision hard-turning operation. A systematic series of experiments were carried out on AISI D2 hardened tool using a CBN cutting insert with a full factorial design (FFD) for all possible combinations of process parameters (cutting velocity, feed, and depth of cut) at three levels, and the measured response was surface roughness. Different machine learning-based models were proposed and developed to predict and optimize surface roughness. Thus, the following main findings were made:

-

The effect of process parameters, investigated with analysis of variance (ANOVA), shows that the RSM model for surface roughness is statistically satisfactory; the R-squared and adjusted R-squared values are 94.93% and 92.25%, respectively. The feed was the only significant factor affecting the surface roughness, with about 92% influence, while cutting speed and depth of cut were insignificant within α = 0.05 at a 95% confidence interval (Fig. 6b).

-

The proposed and developed machine learning models were evaluated using correlation coefficient (R), root mean squared error (RMSE), and mean absolute percentage error (MAPE), and the results show that their predictive accuracies were satisfactory and statistically significant (Table 5). All machine learning models were highly accurate according to their MAPE values (6.24% at ANFIS, 9.30% at ANN, 7.88% at SVM, 6.11% at GPR) for the trained data, which are all less than 10% [46]. More so, the validation test indicates that ANFIS (9.98%) and ANN (3.43%) models are highly accurate, while SVM (16.18%) and GPR (13.51%) models have good predictions.

-

An additional validation test experiment was carried out to further validate the models’ predictive accuracy with new process parameters. The performance indices show that ML models’ average R, RMSE, and MAPE values are 0.79%, 0.18, and 36.17%, respectively. This implies that all proposed models are satisfactory and statistically significant [46], and ANFIS provides the minimum MAPE (32.34%). This could be attributed to its robust capabilities of incorporation of ANN and fuzzy logic algorithms.

-

More so, a full factorial design in RSM was implored to optimize the process parameters for minimum surface roughness to attain better surface quality, and a surface roughness model was developed for the process parameters, as expressed in Eq. 8.

-

Optimization of process parameters was investigated for minimum surface roughness (Rɑ), viz; vc (75, 125, 175 m/min), \(f\) (0.025, 0.075, 0.125 mm/rev), and ɑp (0.06, 0.08, 0.1 mm) in which five (5) solutions of composite desirabilities of 1 were obtained with minimum Rɑ, as presented in Table 8. However, the optimal parameters have been proposed to be 100 m/min, 0.025 mm/rev, and 0.09 mm for the cutting speed, feed, and depth of cut, respectively, in the case of machining parameter ranges of 75–175 m/min for vc, 0.025–0.125 mm/rev for f, and 0.06–0.1 mm for ap.

-

When integrated into the machining process, the proposed model could provide technical support on the shop floor for improving the process and good product quality by providing information on the proper process parameters selection and improving the overall manufacturing cost.

-

Further investigation could be done on integrating the machine learning models into the machining process for cloud-based and real-time prediction for response parameters during the machining process in future research.

Data availability

The datasets used or analyzed during the present research are available from the corresponding author upon satisfactory request.

Code availability

The code used during the present research is available from the corresponding author on satisfactory request.

References

Kumar R, Sahoo AK, Mishra PC, Das RK (2018) Comparative investigation towards machinability improvement in hard turning using coated and uncoated carbide inserts: part I experimental investigation. Adv Manuf 6(1):52–70. https://doi.org/10.1007/s40436-018-0215-z

Khan SA, Ahmad MA, Saleem MQ, Ghulam Z, Qureshi MA (2017) High-feed turning of AISI D2 tool steel using multi-radii tool inserts: tool life, material removed, and workpiece surface integrity evaluation. Mater Manuf Process 32(6):670–677. https://doi.org/10.1080/10426914.2016.1232815

Das SR, Kumar A, Dhupal D (2016) Experimental investigation on cutting force and surface roughness in machining of hardened AISI 52100 steel using CBN tool. Int J Mach Mach Mater. https://doi.org/10.1504/IJMMM.2016.078997

Geng H, Wu D, Wang H (2022) Experimental and simulation study of material removal behavior in ultra-precision turning of magnesium aluminate spinel (MgAl2O4). J Manuf Process 82:36–50. https://doi.org/10.1016/j.jmapro.2022.07.044

Gundarneeya TP, Golakiya VD, Ambaliya SD, Patel SH (2022) Experimental investigation of process parameters on surface roughness and dimensional accuracy in hard turning of EN24 steel. Mater Today Proc 57:674–680. https://doi.org/10.1016/j.matpr.2022.02.104

Hatefi S, Abou-El-Hossein K (2020) Review of non-conventional technologies for assisting ultra-precision single-point diamond turning. Int J Adv Manuf Technol 111(9):2667–2685. https://doi.org/10.1007/s00170-020-06240-7

Sirtuli LJ, Boing D, Schroeter RB (2019) Evaluation of layer adhered on PCBN tools during turning of AISI D2 steel. Int J Refract Met Hard Mater 84:104977, pp 1–9. https://doi.org/10.1016/j.ijrmhm.2019.104977

Srithar A, Palanikumar K, Durgaprasad B (2015) Hard turning of AISI D2 steel by polycrystalline cubic boron nitride (PCBN). Appl Mech Mater 766–767:649–654. https://doi.org/10.4028/www.scientific.net/AMM.766-767.649

Tang L, Yin J, Sun Y, Shen H, Gao C (2017) Chip formation mechanism in dry hard high-speed orthogonal turning of hardened AISI D2 tool steel with different hardness levels. Int J Adv Manuf Technol 93(5):2341–2356. https://doi.org/10.1007/s00170-017-0667-5

Srithar A, Palanikumar K, Durgaprasad B (2019) Experimental investigation and surface roughness analysis on hard turning of AISI D2 steel using polycrystalline cubic boron nitride (PCBN). Mater Today Proc 16:1061–1066. https://doi.org/10.1016/j.matpr.2019.05.196

Khan SA, Ameer MF, Uddin GM, Ali MA, Anwar S, Farooq MU, Alfaify A (2022) An in-depth analysis of tool wear mechanisms and surface integrity during high-speed hard turning of AISI D2 steel via novel inserts. Int J Adv Manuf Technol 122(9):4013–4028. https://doi.org/10.1007/s00170-022-10151-0

Karpat Y (2019) Influence of diamond tool chamfer angle on surface integrity in ultra-precision turning of single crystal silicon. Int J Adv Manuf Technol 101(5):1565–1572. https://doi.org/10.1007/s00170-018-3053-z

Bertolini M, Mezzogori D, Neroni M, Zammori F (2021) Machine learning for industrial applications: a comprehensive literature review. Expert Syst Appl 175:114820. https://doi.org/10.1016/j.eswa.2021.114820

Amigo FJ, Urbikain G, Pereira O, Fernández-Lucio P, Fernández-Valdivielso A, De Lacalle LNL (2020) Combination of high feed turning with cryogenic cooling on Haynes 263 and Inconel 718 superalloys. J Manuf Process 58:208–222. https://doi.org/10.1016/j.jmapro.2020.08.029

Kumar R, Sahoo AK, Mishra PC, Das RK (2019) Performance assessment of air-water and TiO2 nanofluid mist spray cooling during turning hardened AISI D2 steel. IJEMS Vol 26 (3&4) June-August, Accessed: Feb. 10, 2023. [Online]. http://nopr.niscpr.res.in/handle/123456789/51674

Sandhu V, Nivedha S, Prakash M (2020) An empirical science research on bioinformatics in machine learning. J Mech Contin Math Sci spl 7(1):86–94. https://doi.org/10.26782/jmcms.spl.7/2020.02.00006

Serin G, Sener B, Ozbayoglu AM, Unver HO (2020) Review of tool condition monitoring in machining and opportunities for deep learning. Int J Adv Manuf Technol 109(3–4):953–974. https://doi.org/10.1007/s00170-020-05449-w

Shastry KA, Sanjay HA (2020) Statistical modelling and machine learning principles for bioinformatics techniques, tools, and applications. Algorithms Intell Syst. Springer; pp. 25–39. https://doi.org/10.1007/978-981-15-2445-5_3

Taoufik N, Boumya W, Achak M, Chennouk H, Dewil R, Barka N (2022) The state of art on the prediction of efficiency and modeling of the processes of pollutants removal based on machine learning. Sci Total Environ 807:1–16. https://doi.org/10.1016/j.scitotenv.2021.150554

Janeliukstis R (2019) Review on time-frequency-based machine learning for structural damage assessment and condition monitoring, presented at the 18th International Scientific Conference Engineering for Rural Development. 22–24.05, pp. 833–838. https://doi.org/10.22616/ERDev2019.18.N364

Raffin T, Reichenstein T, Werner J, Kuhl A, Franke J (2022) A reference architecture for the operationalization of machine learning models in manufacturing. Procedia CIRP 115:130–135. https://doi.org/10.1016/j.procir.2022.10.062

Gao RX, Wang L, Helu M, Teti R (2020) Big data analytics for smart factories of the future. CIRP Ann 69(2):668–692. https://doi.org/10.1016/j.cirp.2020.05.002

Zhang Z, Wu Z, Rincon D, Christofides P (2021) Real-time optimization and control of nonlinear processes using machine learning. Mathematics 7(10):890 (1–25). https://doi.org/10.3390/math7100890

Imad M, Hopkins C, Hosseini A, Yussefian NZ, Kishawy HA (2022) Intelligent machining: a review of trends, achievements and current progress. Int J Comput Integr Manuf 35(4–5):359–387. https://doi.org/10.1080/0951192X.2021.1891573

Tang L, Sun Y, Li B, Shen J, Meng G (2019) Wear performance and mechanisms of PCBN tool in dry hard turning of AISI D2 hardened steel. Tribol Int 132:228–236. https://doi.org/10.1016/j.triboint.2018.12.026

Patel VD, Gandhi AH (2019) Analysis and modeling of surface roughness based on cutting parameters and tool nose radius in turning of AISI D2 steel using CBN tool. Measurement 138:34–38. https://doi.org/10.1016/j.measurement.2019.01.077

Rafighi M, Özdemir M, Al Shehabi S, Kaya MT (2021) Sustainable hard turning of high chromium AISI D2 tool steel using CBN and ceramic inserts. Trans Indian Inst Met 74(7):1639–1653. https://doi.org/10.1007/s12666-021-02245-2

Kumar S, Tamilselvan P, Feroskhan M, Doss AS, Sasikumar M, Elango M, Sivarajan S (2022) Hard turning of AISI D2 steel with cubic boron nitride cutting inserts. Mater Today Proc 72:2002–2006. https://doi.org/10.1016/j.matpr.2022.07.338

Sarnobat SS, Raval HK (2018) Experimental investigation and analysis of the influence of tool edge geometry and work piece hardness on surface residual stresses, surface roughness and work-hardening in hard turning of AISI D2 steel. Measurement 131:235–260. https://doi.org/10.1016/j.measurement.2018.08.048

Zhang J, Tang L, Ma F, Hu Y, Li B, Sun Y (2023) Experimental investigation of the low-temperature oil-on-water cooling and lubrication in turning the hardened AISI D2 steel. Int J Adv Manuf Technol 125:1161–1177. https://doi.org/10.1007/s00170-022-10692-4

Takacs M, Farkas BZ (2014) Hard cutting of AISI D2 steel. Proc 3rd Int Conf Mech Eng Mechatron 176:1–7 (https://avestia.com/ICMEM2014_Proceedings/papers/176.pdf)

Kumar R, Sahoo AK, Mishra PC, Das RK (2019) Performance of near dry hard machining through pressurised air water mixture spray impingement cooling environment. Int J Automot Mech Eng 16(1):6108–6133. https://doi.org/10.15282/ijame.16.1.2019.3.0465

Kumar R, Pandey A, Panda A, Mallick R, Sahoo AK (2021) Grey-fuzzy hybrid optimization and cascade neural network modelling in hard turning of AISI D2 steel. Int J Integr Eng 13(4):189–207

Pourmostaghimi V, Zadshakoyan M (2021) Designing and implementation of a novel online adaptive control with optimization technique in hard turning. Proc Inst Mech Eng Part J Syst Control Eng 235(5):652–663. https://doi.org/10.1177/0959651820952197

Pourmostaghimi V, Zadshakoyan M, Khalilpourazary S, Badamchizadeh MA (2022) A hybrid particle swarm optimization and recurrent dynamic neural network for multi-performance optimization of hard turning operation. AI EDAM 36:e28. https://doi.org/10.1017/S0890060422000087

Kara F, Karabatak M, Ayyıldız M, Nas E (2020) Effect of machinability, microstructure and hardness of deep cryogenic treatment in hard turning of AISI D2 steel with ceramic cutting. J Mater Res Technol 9(1):969–983. https://doi.org/10.1016/j.jmrt.2019.11.037

Kumar R, Sahoo AK, Mishra PC, Panda A, Das RK, Roy S (2019) Prediction of machining performances in hardened AISI D2 steel. Mater Today Proc 18:2486–2495. https://doi.org/10.1016/j.matpr.2019.07.105

Jamli MR, Fonna S (2018) Comparison of adaptive neuro fuzzy inference system and response surface method in prediction of hard turning output responses. J Adv Manuf Technol JAMT 12(1(3)):153–164 (https://jamt.utem.edu.my/jamt/article/view/4887)

Sivarajan S, Elango M, Sasikumar M, Doss AS (2022) Prediction of surface roughness in hard machining of EN31 steel with TiAlN coated cutting tool using fuzzy logic. Mater Today Proc 65:35–41. https://doi.org/10.1016/j.matpr.2022.04.161

Pourmostaghimi V, Zadshakoyan M, Badamchizadeh MA (2020) Intelligent model-based optimization of cutting parameters for high quality turning of hardened AISI D2. Artif Intell Eng Des Anal Manuf 34(3):421–429. https://doi.org/10.1017/S089006041900043X

D’Mello G, Pai PS, Puneet NP (2017) Optimization studies in high speed turning of Ti-6Al-4V. Appl Soft Comput 51:105–115. https://doi.org/10.1016/j.asoc.2016.12.003

Rajbongshi SK, Sarma DK (2019) Process parameters optimization using Taguchi’s orthogonal array and grey relational analysis during hard turning of AISI D2 steel in forced air-cooled condition. IOP Conf Ser Mater Sci Eng 491:012032. https://doi.org/10.1088/1757-899X/491/1/012032

Boga C, Koroglu T (2021) Proper estimation of surface roughness using hybrid intelligence based on artificial neural network and genetic algorithm. J Manuf Process 70:560–569. https://doi.org/10.1016/j.jmapro.2021.08.062

SECO Catalog & Technical Guide 2023.1 (2023) General ISO turning guides products guidance/insert products. Machining Navigator / Catalog Turning | Secotools.com, pp 10–153, 546–631, Accessed August 7, 2023. https://www.secotools.com/article/84585

Pant P, Chatterjee D (2020) Prediction of clad characteristics using ANN and combined PSO-ANN algorithms in laser metal deposition process. Surf Interfaces 21(100699):1–10. https://doi.org/10.1016/j.surfin.2020.100699

Lewis CD (1982) Industrial and business forecasting methods: a practical guide to exponential smoothing and curve fitting. London; Boston: Butterworth Scientific. Accessed February 12, 2023. http://archive.org/details/industrialbusine0000lewi

Shahmansouri AA, Nematzadeh M, Behnood A (2021) Mechanical properties of GGBFS-based geopolymer concrete incorporating natural zeolite and silica fume with an optimum design using response surface method. J Build Eng 36:102138. https://doi.org/10.1016/j.jobe.2020.102138

NIST/SEMATECH e-Handbook of statistical methods. Accessed November 15, 2022. https://www.itl.nist.gov/div898/handbook/

Liang Y, Hu S, Guo W, Tang H (2022) Abrasive tool wear prediction based on an improved hybrid difference grey wolf algorithm for optimizing SVM. Measurement 187(110247):1–13. https://doi.org/10.1016/j.measurement.2021.110247

Ghosh S, Dasgupta A, Swetapadma A (2019) A study on support vector machine based linear and non-linear pattern classification. International Conference on Intelligent Sustainable Systems (ICISS), 2019, pp 24–28. https://doi.org/10.1109/ISS1.2019.8908018

Liu H, Cai J, Ong YS, Wang Y (2019) Understanding and comparing scalable Gaussian process regression for big data. Knowl-Based Syst 164:324–335. https://doi.org/10.1016/j.knosys.2018.11.002

Deringer VL, Bartók AP, Bernstein B, Wilkins DM, Ceriotti M, Csányi G (2021) Gaussian process regression for materials and molecules. Chem Rev 121(16):10073–10141. https://doi.org/10.1021/acs.chemrev.1c00022

Karolczuk A, Skibicki D, Pejkowski L (2022) Gaussian process for machine learning-based fatigue life prediction model under multiaxial stress–strain conditions. Materials 15(21):7797(1–23). https://doi.org/10.3390/ma15217797

Paturi UMR, Cheruku S, Geereddy SR (2021) Process modeling and parameter optimization of surface coatings using artificial neural networks (ANNs): state-of-the-art review. Mater Today Proc 38:2764–2774. https://doi.org/10.1016/j.matpr.2020.08.695

Saoudi A, Fellah M, Hezil N, Lerari D, Khamouli F, Atoui L, Bachari K, Morozova J, Obrosov A, Samad MA (2020) Prediction of mechanical properties of welded steel X70 pipeline using neural network modelling. Int J Press Vessels Pip 186(104153):1–8. https://doi.org/10.1016/j.ijpvp.2020.104153

Jachak S, Giri J, Awari GK, Bonde AS (2021) Surface finish generated in turning of medium carbon steel parts using conventional and adhesive bonded tools. Mater Today Proc 43:2882–2887. https://doi.org/10.1016/j.matpr.2021.01.127

Chakraborty A, Kaur B, Ruchika (2018) Artificial neural network in a general perspective. JETIR 5(10):696–700. https://www.jetir.org. Accessed 06/12/2022

Adizue UL, Nwanya SC, Ozor PA (2020) Artificial neural network application to a process time planning problem for palm oil production. Eng Appl Sci Res 47(2):161–169. https://doi.org/10.14456/easr.2020.17 (https://ph01.tci-thaijo.org/index.php/easr/article/view/211130)

Chicco D, Warrens MJ, Jurman G (2021) The coefficient of determination R-squared is more informative than SMAPE, MAE, MAPE, MSE and RMSE in regression analysis evaluation. PeerJ Comput Sci 7:e623. https://doi.org/10.7717/peerj-cs.623

Roy SS, Samui P, Nagtode I, Jain H, Shivaramakrishnan V, Mohammadi-ivatloo B (2020) Forecasting heating and cooling loads of buildings: a comparative performance analysis. J Ambient Intell Humaniz Comput 11(3):1253–1264. https://doi.org/10.1007/s12652-019-01317-y

Acknowledgements

This research was partly funded by the National Research, Development and Innovation Office (NRDIO) through the projects ED_18-2018-0006 (research on prime exploitation of the potential provided by industrial digitalization) and OTKA-K-132430 (transient deformation, thermal, and tribological processes at fine machining of metal surfaces of high hardness). The research reported in this paper and carried out at BME has also been supported by the National Laboratory of Artificial Intelligence funded by the NRDIO under the auspices of the Ministry for Innovation and Technology.

Funding

Open access funding provided by Budapest University of Technology and Economics.

Author information

Authors and Affiliations

Contributions

All authors contributed to the research conceptualization and design. ULA, ADT, and EOI performed material preparation, data collection, and analysis. BZF configured the LabView software and set up the machine environment. ULA wrote the first draft of the manuscript, and all authors commented on previous versions. MT supervised the study and proofread the manuscript, and all authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Consent to participate

All authors participated in the research work of this study.

Consent for publication

All authors agreed to publish the findings of this research.

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Adizue, U.L., Tura, A.D., Isaya, E.O. et al. Surface quality prediction by machine learning methods and process parameter optimization in ultra-precision machining of AISI D2 using CBN tool. Int J Adv Manuf Technol 129, 1375–1394 (2023). https://doi.org/10.1007/s00170-023-12366-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00170-023-12366-1