Abstract

Particularly in sectors where mechanisation is increasing, there has been persistent effort to maximise the use of existing assets. Since maintenance management is accountable for the accessibility of assets, it stands to acquire prominence in this setting. One of the most common methods for keeping equipment in good working order is predictive maintenance with machine learning methods. Failures can be spotted before they cause any downtime or extra expenses, and with this aim, the present work deals with the online detection of wear and friction characteristics of stainless steel 316L under lubricating conditions with machine learning models. Wear rate and friction forces were taken into account as reaction parameters, and biomedical-graded stainless steel 316L was chosen as the work material. With more testing, the J48 method’s accuracy improves to 100% in low wear conditions and 99.27% in heavy wear situations. In addition, the graphic showed the accuracy values for several models. The J48 model is the most precise amongst all others, with a value of 100% (minimum wear) and an average of 98.92% (higher wear). Amongst all the models tested under varying machining conditions, the J48’s 98.92% (low wear) and 98.92% (high wear) recall scores stand out as very impressive (higher wear). In terms of F1-score, J48 performs better than any competing model at 99.45% (low wear) and 98.92% (higher wear). As a result, the J48 improves the model’s overall performance.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Stainless steels are widely employed in industry particularly for their high resistance to corrosive environment. Stainless steel 316L is one of the most utilized types of stainless steel group thanks to its ability to create an oxide film layer on its surface which increases corrosion strength [1]. The steel is broadly used in medical sector as an orthopedic implant to overcome injuries and to cure broken bones with its biocompatible properties [2]. This special type of stainless steel is equipped with high density when compared to popular material groups, and high content of nickel provides facility to avoid pitting corrosion [3]. As known, the tribological performance depends on the capability of prevailing wear and friction behaviour of a material [4]. Loss of nickel from the steel under sliding conditions makes this material weak against corrosive media [5]. Also, despite the material carries attractive features such as high toughness and good workability to be used as an ideal option for biomedical apparatus, it presents poor wear resistance, low hardness and surface characteristics [6]. Therefore, it is crucial to increase the wear resistance to obtain longer product life under tribological environments for medical usage. It is highly critical for a biomaterial in serving as a long-term device while keeping its desired functions and working in a harmony with living tissue [7]. As being one of the legs of tribology, lubrication is a determinant factor in measuring the performance of a device in service conditions which will be the main subject of paper. Minimization of friction should be regarded for the parts which interacted with each other during actual conditions [8]. This is also about protecting the contact surfaces from extreme wear and low surface texture. Lubricants are one of the most effective methods for reducing friction forces [9]. Synthetic oils are commonly applied, but their harms on the ecological balance are still argued. Increasing trends consider not only technological performances but also the measures about sustainability and environmental impacts [10, 11].

Consequently, a decrease of the consumed lubricant guarantees the reduction of the released toxins to the environment. Some approaches have gained importance such as minimum quantity lubrication, cryogenic cooling and nanoparticle-added lubricants, i.e. nanofluids and hybrid version of the mentioned methods in the recent years [12, 13]. On the other hand, setup of the experimental infrastructure, preparation of the materials and physical tests bring not only valuable knowledge but also economic burden and workload undoubtedly. Therefore, estimation of the experimental results opens new doors for the researchers with a fundamental data. Paradigm shifting developments about artificial intelligence occur in these days which provide to extend the limits of the studies. Machine learning as a subset of artificial intelligence is a competent tool in handling the large datasets [14]. Simplified overview of a machine learning workflow is given in Fig. 1.

Simplified overview of a machine learning workflow [15]

One important advantage of the software-based systems is the ability to overcome the uncertainties which can be observed in complex systems. For instance, metalworking area contains vagueness mostly due to the changing material behaviours with thermal and mechanical effects. In sum, this work focuses on the application of the lubrication and cooling alternatives and predictability of their performance measures under varying tribological conditions.

1.1 Background work

There are some outstanding studies published in the area that put lights into the mysteries of the tribology concept for stainless steel 316L [16,17,18]. Moreover, Tassin et al. [19] tried to enhance the wear resistance of stainless steel 316L via laser surface alloying method. The researchers utilized chromium and titanium carbides for alloying, and serious improvement was achieved without any hazardous effect. Wear resistance layer was created on stainless steel 316L by Li et al. [20] which showed effective protection against adhesion, abrasion and oxidation mechanisms compared to untreated material. Dearnley and Aldrich-Smith [21] presented a new approach for improved wear resistance of stainless steel 316L which includes using thin hard coatings. The authors categorized their solutions where each provided successful results if the preparation of the surfaces is correct. High-temperature wear behaviour was discussed by the authors [22] to improve the wear resistance of the stainless steel 316L. Lower wear rate was found for a determined range which was seen around 300–400 °C. A comparison study was conducted by Thomann and Uggowitzer [23] for stainless steel 316L, Rex734 and P558 steels due to their outstanding properties on wear-corrosion behaviour which showed the weakness of stainless steel 316L in terms of mechanical and tribological aspects. Attabi et al. [24] applied ball burnishing process to enhance surface process for better microhardness and wear resistance on stainless steel 316L. The examination was done based on micro-nano hardness and elastic modulus which leads to raised properties after the burnishing process. Shot peening and nitriding applications were done on stainless steel 316L for enhanced mechanical properties of surface [25]. The specimens exposed to the surface treatments showed better wear resistance and hardness. Low-temperature carburized stainless steel 316L and duplex steels were tested for wear and corrosion behaviour. Accordingly, treated steel indicated comparable results which were explained by the microstructure and material behaviour. Saravanan et al. [1] optimized wear behaviour of stainless steel 316L while comparing the output with the titanium ally. Different test conditions were tried to measure the wear properties of materials, and seemingly, stainless steel 316L showed better wear resistance and coefficient of friction which was discussed with the help of response surface methodology. The impact of the microstructure on wear mechanisms was discussed during dry sliding for stainless steel 316L by Bahshwan et al. [26]. Different additive manufacturing approaches and their effects on microstructure and wear properties were evaluated in the paper.

Recent studies showed the potential of the cooling and lubricating environments in enhancing the tribological mechanism. In a paper [27], stainless steel was adopted for friction and wear tests to compare the cold wind, minimum quantity lubrication and dry media. Frictional forces and wear rate were found less than the dry medium under cold wind and minimum lubricated conditions, respectively. In another study [16], researchers focused on the tribological behaviour of the stainless steel under hybrid cooling and lubrication environments versus single modes and dry trial. Using an oiling and/or refrigerating agent makes a large difference for all tribological indicators such as volume loss, friction and depth of wear.

1.2 Research gaps

Several previous reports on the tribological behaviour of stainless steels have simply considered dry circumstances, whereas others have preferred to examine the effects of lubrication and/or cooling. No open research investigating the contribution rates of cooling/lubricating media using machine learning methods has been located to the best of our knowledge. Due to this, there is a significant gap in the existing literature that calls for the development of methods to combine the results of physical trials of tribological behaviour under different lubricating and cooling settings with machine learning models. For that reason, as an objective of the study, stainless steel 316L was selected as the work material and wear rate and friction forces were considered as the response parameters. Moreover, an in-depth analysis was carried out using SEM micro images and EDS results of the ball. Comprehensive details of the tribological tests are given in the next sections.

2 Materials and methods

2.1 Specimen and abrasive ball

Stainless steel 316L specimens with dimensions of Ø30 × 4 mm were used. The chemical composition of the specimens is as follows: 0.032%, C; 2.021%, Mn; 0.046%, P; 0.028%, S; 1.0%, Si; 17.86%, Cr; 13.88%, Ni; and 2.80%, Mo, by weight. The chemical composition of abrasive ball is as follows: 0.96%, C; 0.35%, Mn; 0.25%, Si; 1.50%, Cr; 0.28%, Ni; and 0.12%, Cu, by weight. The chemical composition of the samples and abrasive ball was determined by EDX measurements in the FESEM device at Karabuk University. Sandpaper with grits ranging from 600 to 2000 on the SiC scale was used respectively to sand and polish the samples. The Vickers microhardness value of the samples, as measured from the sample surface using the Qness Q10A/A + equipment, is about 251 HV, whereas the value for the abrasive ball is about 269 HV.

2.2 Abrasive wear test and environment information

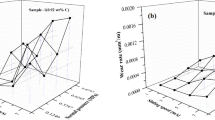

Wear tests were carried out at room temperature by a sliding speed of 75 mm/s. The experimental setup used is an experimental setup at the Metallurgy and Materials Laboratory, Karabük University, in compliance with the ASTM G133 standard and with a unique design similar to the setup specified in the standard. Mitutoyo SJ-410 Series measuring device, located in Iron and Steel Institute, Karabuk University, was used to profile the traces obtained after the wear tests. Volumetric loss values were calculated in mm3 using the wear profiles and length obtained. Experiments were repeated three times to ensure the results were stable. A lubricating medium was created between the two materials by using the minimum quantity lubrication (MQL) system. An oil flow rate of 40 ml/h and compressor air of 5 bar were used in the lubrication process. The process setup is shown in Fig. 2a.

2.3 Determination of volume loss and coefficient of friction

Volumetric loss (∆V) values were calculated by using the measurements made on the wear traces achieved from the experiments. The ∆V value used in this equation is the volumetric loss in mm3, the ww value is the wear width in mm, the wd value is the wear depth in mm and the s value is the trace length in mm, as shown in Eq. (1).

The friction coefficients were obtained as a result of continuous measurements during the experiment by using a dynamometer located on the experimental setup. The friction coefficients were obtained by the system using the friction force (Ff) and normal force (Fn) values. At the end of the experiments, SEM and EDX analyses were made on the traces and the results were examined.

3 Machine learning models for wear and friction characteristics

The present work deals with the wear and friction characteristics of stainless steel 316L under lubricating conditions. Different machine learning models are developed, and the performance is compared with each other. The complete procedure of machining learning models and data acquisition system is shown in Fig. 2b. The details of used models are given in following sections.

3.1 J48 decision tree

The decision tree (DT) is one of the popular supervised classifier which was formulated by Quinlan. The DT uses training data to construct a judgmental tree based on the principle of ‘entropy drop’ or ‘info gain’. A set of samples that are already classified (i.e. type of defect is known) is referred to as the training set. Suppose (A = a1, a2, a3, …, an) represents a training dataset, then each sector [22] comprises a vector with the dimension ‘k’ (b1,i, b2,i, b3,i, …, bk,i). Each bj represents attribute values of a sector, in addition to the category wherein ai is included. By the side of every node, J48 tries to distribute a set of samples into different subsets. Each subset belongs to a class (in our case, classes are faults). The criterion for splitting is the measure of the difference in entropy. The attribute with the highest difference of the entropy measure was chosen for making the decision. If at the node the classifier is not able to classify a whole subset into one class, then the process is repeated, considering another node. Some of the salient features of this algorithm are as follows:

-

a)

In a case wherein all sectors in the combination are of the same class, the classifier constructs a leaf node by providing clear categorization.

-

b)

In a case wherein no info gain is observed from any of the features, the algorithm constructs a decisive node.

-

c)

In a case wherein the difference in entropy is zero between all the attributes, the algorithm constructs a decisive node using the estimated identity of the class.

Basic terminology of decision tree is depicted in Fig. 3. The classification model is constructed using the J48 decision tree classifier, and hyper-parameters used in this training are stated here. The flow chart of decision tree is depicted in Fig. 4. J48 uses training data to construct a pruned or unpruned judgmental tree based on the C4.5 algorithm and the principle of ‘entropy drop’ or ‘info gain’. The hyper-parameters used for training the decision tree are as follows:

-

Seed: The random number generator assists the setting of primary weights of the connecting nodes. This also assists the shuffling of a training dataset and was set to 1.

-

ConfidenceFactor: It represents a threshold of allowed inherent error in data while pruning the algorithm. The smaller the value of ConfidenceFactor, the more will be the pruning. ConfidenceFactor was set to 2.5.

-

NumFolds: It defines the number of samples used in reduced-error pruning. Usually, one fold is employed for pruning and the others for training the rule sets and was set to 3.

-

MinNumObj: It represents the least possible samples per rule and was set to 2.

-

UseMDLcorrection: It was set to TRUE so that MDLcorrection is used for the discovery of splitting on numeric features.

-

subtreeRaising: It was set to TRUE which considers the sub-tree levitation at the stage of pruning.

-

collapse-Tree: It was set to TRUE so that splits are eliminated which does not decrease error in the training.

3.2 Random forest tree

The algorithm creates linkages between a number of different decision trees, and then integrates those relationships in order to arrive at improved, stable and truthful prediction estimations. The basic operational concept of the random forest classifier is depicted in Fig. 5. RF is observed as a collective technique since it uses regression and classification. The model’s training becomes reliable owing to extra randomness while training a tree arrangement. The critical attribute identifies the weightiest feature amongst random sub-divisions instead of splitting the node. This more comprehensive range helps grow consistent training. The first step is creating multiple sub-divisions and growing resultant decision trees simultaneously. It is self-explanatory; the ‘n’ sub-divisions correspond to the ‘n’ trees. The final step is to estimate the average of all decisions. It develops a variety of random trees and utilizes class modes for decision-making. Training the random forest tree assists in eliminating the over-fitting, and at times, it handles more extensive data of high dimensions. Figure 5 shows the induction of the random forest classifier. The classification model is constructed using a random forest tree classifier, and hyper-parameters used in this training are stated here. A forest of random trees is constructed. The flow chart of the random forest tree is depicted in Fig. 6. The classification model is constructed using a random forest tree classifier, and hyper-parameters used in this training are stated here.

-

Seed: The random number generator assists the setting of primary weights of the connecting nodes. This also assists the shuffling of a training dataset and was set to 1.

-

NumExecution-Slots: It is used for constructing the ensemble, and the number of implementable threads (slots) was set to 1.

-

bagSizePercent: This designates the % of a training dataset as the size of each bag and was assumed as 100.

-

numIterations: In this training, 100 iterations were performed.

-

max-Depth: It represents the depth of the tree at maximum extent and was set to 0 if for unlimited.

-

num-Features: It represents the number of randomly selected features. It was set to 0, and \(\mathrm{int}(\mathrm{log}\_2(\#\mathrm{predictors})+1)\) was used.

3.3 Best-first tree

The best-first tree (BFT) is a supervised learning algorithm used for the prediction of a class. It is a type of DT that is inducted in the best-first order, unlike the induction of the basic DT model. Both trees usually develop a similar kind of completely grown tree for the same set of data. However, if there is a constraint on the number of expansions, both the algorithms result in a different kind of tree. Given a set of data, unlike basic DT, the induction of the best-first tree model begins from a root node that separates the data based on the ‘best’ attribute. That is the attribute that results in minimum impurity in the following subsets. This procedure of selecting the ‘best’ attributes and splitting the data into subsets based on some criteria is repeated till the entire nodes are pure and/or a specified number of extensions have taken place. The flow chart of best-first tree is depicted in Fig. 7. In order to find such ‘best’ attribute for each split, a splitting criterion is required. This splitting criterion is based on the measure of impurity of a node. That is, the criterion resulting in the maximum reduction in node impurity is chosen to be the splitting criterion at that node. The classification model is constructed using the best-first tree classifier, and hyper-parameters used in this training are stated here. The best-first tree model begins from a root node that separates the data based on the ‘best’ attribute. That is the attribute that results in minimum impurity in the following subsets. This procedure of selecting the ‘best’ attributes and splitting the data into subsets on the basis of some criteria is repeated till the entire nodes are pure and/or a specified number of extensions have taken place. The hyper-parameters used for training the decision tree are the following:

-

Heuristic: It was set to TRUE for the binary splitting of nominal features.

-

MinNumObj: It represents minimum samples at the termination node and was set to 2.

-

NumFoldsPruning: It represents the folds used for internal cross-validation and was set to 5.

-

PruningStrategy: The strategy of ‘Post’ pruning was set.

-

Seed: The random number generator assists the setting of primary weights of the connecting nodes. This also assists the shuffling of a training dataset and was set to 1.

-

Size-Per: It represents the percentage of the training dataset and was set to 1.

-

Use-Gini: It was set to TRUE, so that for splitting criterion, the Gini index is used, or else, the information is employed.

4 Results and discussions

4.1 Parametric analysis on wear and friction characteristics

The tribological properties such as wear and coefficient of friction are studied with stainless steel 316L and abrasive ball under lubrication conditions. Figure 8 depicts the effect of load on the wear rate during the ball on flat test for stainless steel 316L with MQL as lubricant. With 20 N load during the sliding, a 0.0002 mm3/m wear rate was observed. Generally, due to continuous sliding of ball with flat surface, high contact temperature is generated which formed oxides on the surface [28]. The dislocation movements occur due to the plastic deformation at the interface which is limited by the formed oxides [29]. This exhibits high stress and great strain area. The flat surface rupture happens when the exhibited stress reached the fracture strength of the material and resulted in wear loss. However, due to the involvement of MQL, the debris produced during the sliding after reaching the fracture strength was removed [30]. It directly impacted the friction between the surfaces and reduced the energy required for the sliding. Also, it resulted in three-body abrasive behaviour which directly reduced the wear region and direct contact of the metal-to-metal surface as shown in schematic wear mechanism (Fig. 9). Moreover, MQL also lower down the temperature at the sliding surface interaction, which directly dissipated the heat from the surface and sustained the hardness of the material.

Schematic of wear mechanism by sustainable lubrication/cooling [31]

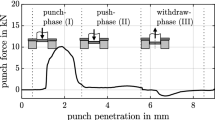

However, the higher load resulted in a large amount of wear rate. With 30 N and 40 N load of ball on the surface, 0.0006 mm3/m and 0.0023 mm3/m wear rates were measured. The wear rate was increased to 300% by increasing the load from 20 to 30 N and to 1150% by increasing the load from 30 to 40 N. The friction force trend obtained was similar as compared to the wear rate with respect to the applied load (see Fig. 10). The 20 N load during the sliding of two surfaces resulted in an average of 0.65 N friction force. The average friction force was measured to be 1.38 N with changing the ball load to 30 N. Furthermore, the large increment was observed from an average force ranging from 1.38 to 2.1 N with increasing the load to 40 N. Normally, in dry conditions, the friction forces are large owing to the high heat production by friction [32]. As mentioned above, the MQL provided cooling at the interaction of the surface and removed the debris by compressed air from the wear area by MQL aerosol [33]. It directly helps to lower down the friction forces in the wear area and dissipate the heat by reducing the friction [34]. Moreover, similar amount and pressure of MQL was used for the conditions with different loads (20 N, 30 N and 40 N). The high load on the surface increased the heat between the surfaces which resulted in large rupture of the surface. The amount of MQL at high-load conditions was not enough to overcome the large friction forces and resulted in high wear rate.

Furthermore, to analyse in detail the effect of load during the ball-on-flat test, SEM and EDS analyses were performed. Figure 11 depicts the SEM and EDS analyses of the ball after sliding stainless steel 316L with MQL assistance. A thin layer of the stainless steel 316L was observed on the surface of ball material 100Cr6 alloy. The detached material from the surface during sliding due to fracture was adhered on the ball surface. The high stress concentration and strain at wear area resulted in the rapture of the substrate material as discussed above [35, 36]. MQL was not sufficient to prevent the adhesion of the wear debris on the surface of the ball. The EDS analysis confirmed the involvement of the substrate debris on the ball surface.

Furthermore, SEM and EDS analyses of the worn surfaces by the ball-on-flat test with 20 N, 30 N and 40 N forces were studied (see Fig. 12). The work surface resulted in plastic deformation and ploughing out of the material. The plastic deformation acted due to the increment in the bond energy resistance by the rise in the friction forces as discussed above. MQL lubricants reduced the friction forces and resulted in a low wear rate. The surface was obtained with less fracture, wear depth and debris removal (marked by red in Fig. 12a). Moreover, the oxide formation was also low. However, by increasing the load during the sliding, the friction forces increased and resulted in high amount of fracture which is in line with the findings of Josyula and Narala [37]. The high wear depth and high rate of debris removal were observed with 30 N (marked by red in Fig. 12b). Also, the content of oxides was in higher quantity for the 30 N load as compared to that for the 20 N load. It confirms the formation of large oxides at the interaction surface which resulted in high wear rate. Furthermore, the SEM image of the surface slide at 40 N resulted in large wear depth and debris removal. The large load resulted in high concentration of the stresses and strains at the ball and substrate interface due to higher plastic deformation [38]. It resulted in the indentation of the sliding ball on the substrate and high wear rate [39].

4.2 ML result

ML is a subset of AI that focuses on the creation of algorithms and statistical models that enable computers to learn from data without being explicitly programmed. These models and algorithms are the primary emphasis of ML [40]. One of the key applications of ML is statistical analysis, which involves using algorithms to extract insights and patterns from data. ML methods can be used to perform a wide range of statistical analyses, such as regression analysis, clustering analysis, classification analysis and association rule learning. These techniques enable data scientists and analysts to identify relationships between variables, classify data into different categories and predict future outcomes. One of the key advantages of machine learning statistical analysis is its ability to handle large and complex datasets. ML algorithms can handle datasets with millions of variables and billions of observations, making it possible to extract insights from massive amounts of data that would be impossible to analyse using traditional statistical methods. Another advantage of ML statistical analysis is its ability to handle noisy and incomplete data. Traditional statistical methods rely on assumptions about the data, such as normality and linearity, which may not be held in real-world datasets. ML algorithms, on the other hand, can handle non-linear and non-parametric data. The models and various steps used in ML are shown in Fig. 13.

4.2.1 Mean

The mean is a measure of central tendency that indicates the average value of a dataset. It is often referred to as the ‘average’. To determine it, first, a dataset’s individual values are added together and then the resulting sum is divided by the entire number of values in the dataset. The mean is extremely sensitive to outlying values, which can have a considerable impact on the value it represents.

4.2.2 Median

The median is an additional measure of central tendency that indicates the middle value of a dataset when the data are sorted from smallest to biggest. The median is the middle value when the data are ordered from least to largest. It is the value that defines the boundary between the bottom half of the data and the upper half of the data. When compared to the mean, the median is less susceptible to the influence of numbers that are particularly severe.

4.2.3 Mode

The value that is most prevalent in a dataset is referred to as the mode of that dataset. It is helpful in determining which value appears the most frequently inside a distribution. A dataset could have one, more than one or no modes at all, depending on the frequency with which each value appears in the data.

4.2.4 Maximum value

The maximum value is the largest value in a dataset. It is useful for identifying the upper limit of a dataset and can be used to determine outliers or extreme values.

4.2.5 Minimum value

The minimum value is the smallest value in a dataset. It is useful for identifying the lower limit of a dataset and can also be used to determine outliers or extreme values.

4.2.6 Quartile 2

Quartile 2, also known as the median or the second quartile, is the value that separates the lower 50% of the dataset from the upper 50% when the data is ordered from smallest to largest. It is an important value in statistics because it provides a measure of the central tendency of a dataset that is less sensitive to extreme values than the mean. The range between quartile 1 (the 25th percentile) and quartile 3 (the 75th percentile) is known as the interquartile range, which is useful for identifying the spread of the data.

4.3 Comparative results with ML models

The performance of distinct supervised ML algorithms was done by the researchers using training and testing (random forest, best-first tree and J48). In the fields of machine learning and statistics, a confusion matrix is a performance assessment tool that is used to quantify the accuracy of a classification model. It is a matrix that summarizes the number of accurate and inaccurate predictions generated by a model for each class in a dataset. It is called a prediction accuracy matrix. The matrix is commonly split into four quadrants, with each quadrant representing one of the potential results of a binary classification job. These quadrants are labelled as follows: true positive (TP), false positive (FP), true negative (FN) and false negative (FN) (TN). TP denotes the number of occurrences in which the prediction of positive outcomes was made accurately, FP denotes the number of occurrences in which the prediction of positive outcomes was made incorrectly, FN denotes the number of occurrences in which the prediction of negative outcomes was made incorrectly and TN denotes the number of occurrences in which the prediction of negative outcomes was made accurately. The confusion matrix for low, medium and high wears for distinct models is presented in Fig. 14. Using a confusion matrix, several performance metrics can be calculated, including accuracy, precision, recall and F1-score. These metrics can help to assess the strengths and weaknesses of a model and to make informed decisions about how to improve its performance.

4.3.1 Accuracy

Accuracy may be defined as the degree to which a model properly recognizes both the positive and negative occurrences included within the data. The formula for determining it is the ratio of the number of accurate forecasts to the total number of predictions that were made.

4.3.2 Precision

The term ‘precision’ refers to the degree to which the actual number of positive cases matches the number that was expected. It is determined by dividing the total number of accurate positive predictions by the total number of positive forecasts made.

4.3.3 Recall

A model’s recall may be evaluated by determining what percentage of real-world positive examples it accurately identifies as positive. It is computed as the ratio of the total number of positive cases in the data to the number of predictions that were accurate in predicting those positive instances.

4.3.4 F1-score

The F1-score is a measurement of the overall performance of the model, taking into consideration both the precision and the recall of its predictions. It is computed using the harmonic mean, which is a mean that takes into account both accuracy and recall.

Overall, accuracy, precision, recall and F1-score are all important metrics for evaluating the performance of a machine learning model, and the choice of metric will depend on the specific problem and application at hand.

Figure 15 illustrates the effect of cross-validation of different algorithms. Accuracy alone is insufficient for selecting the optimal model to utilize; other performance outcomes of the model must also be considered. Furthermore, accuracy works best when the dataset is symmetric or has close or equal sample counts per class. The accuracy of the J48 approach is higher in the examination, with an overall accuracy of 100% in low wear condition and 99.27% in higher wear condition. The precision values of various models are also displayed in Fig. 15. When compared to all other models, the J48 model has the maximum precision value of 100% (low wear) and 98.92% (higher wear). The recall values of all the models under distinct machining condition show that the J48 performs well amongst all the other models with 98.92% (low wear) and 98.92% (higher wear). The comparison results of F1-score indicate that J48 outperforms with 99.45% (low wear) and 98.92% (higher wear). Thus, the overall performance of the model is higher with the J48.

5 Conclusion

This paper presents the tribological behaviour under MQL lubricating conditions and machine learning models for stainless steel 316L based on wear rate and friction forces as the output parameters. Some attractive results from the study are summarized in the following:

-

When sliding at 20 N, a wear rate of 0.0002 mm3/m was measured. The greater load significantly increased the wear rate. With 30 N and 40 N surface loads, the ball wore at a rate of 0.0006 mm3/m and a rate of 0.0023 mm3/m, respectively. Increasing the load from 20 to 30 N increases the wear rate by 300%, and increasing the load from 30 to 40 N increases the wear rate by 1150%.

-

When the two surfaces were sliding against one another under a pressure of 20 N, the average friction force was found to be 0.65 N. When the ball load was increased to 30 N, the average friction force was calculated to be 1.38 N. The average force increased dramatically from 1.38 to 2.1 N when the load was raised from 20 to 40 N. When the weather is dry, frictional forces tend to be strong since so much heat is generated.

-

All three load situations (20 N, 30 N and 40 N) utilized the same volume and pressure of MQL. Extreme surface cracking occurred as a result of the high stress on the surface, which increased heat between the surfaces. A significant wear rate was observed under high load circumstances because the amount of MQL was insufficient to counteract the strong friction forces.

-

The 30 N load had a higher oxide content than the 20 N load. High wear rates are confirmed to be the result of the production of massive oxides on the contact surface. The SEM picture of the surface slip at 40 N also shows deep wear and debris removal.

-

With a total inspection accuracy of 100% under low wear conditions and that of 99.27% under heavy wear conditions, the J48 method stands head and shoulders above the competition. The graphic also displays the accuracy numbers for several models. The J48 model outperforms all others with a maximum accuracy of 100% (minimal wear) and an overall accuracy of 98.92% (high wear). The J48 outperforms the competition when comparing recall values across models under varying machining settings, with a rating of 98.92% (low wear) (high wear). Results from the F1-score benchmark are 99.45% (low wear) and 98.92%, respectively, better than those from the J48 (high wear). This means the J48 improves the model’s performance in general.

Data availability

Not applicable.

References

Saravanan I, Elaya Perumal A, Vettivel SC et al (2015) Optimizing wear behavior of TiN coated SS 316L against Ti alloy using response surface methodology. Mater Des 67:469–482. https://doi.org/10.1016/j.matdes.2014.10.051

Dogan H, Findik F, Morgul O (2002) Friction and wear behaviour of implanted AISI 316L SS and comparison with a substrate. Mater Des 23:605–610. https://doi.org/10.1016/S0261-3069(02)00066-3

Guemmaz M, Mosser A, Grob J-J, Stuck R (1998) Sub-surface modifications induced by nitrogen ion implantation in stainless steel (SS316L). Correlation between microstructure and nanoindentation results. Surf Coatings Technol 100–101:353–357. https://doi.org/10.1016/S0257-8972(97)00647-6

Ren A, Kang M, Fu X (2023) Tribological behaviour of Ni/WC–MoS2 composite coatings prepared by jet electrodeposition with different nano-MoS2 doping concentrations. Eng Fail Anal 143:106934. https://doi.org/10.1016/j.engfailanal.2022.106934

Liu C, Lin G, Yang D, Qi M (2006) In vitro corrosion behavior of multilayered Ti/TiN coating on biomedical AISI 316L stainless steel. Surf Coatings Technol 200:4011–4016. https://doi.org/10.1016/j.surfcoat.2004.12.015

Sun Y, Bailey R, Moroz A (2019) Surface finish and properties enhancement of selective laser melted 316L stainless steel by surface mechanical attrition treatment. Surf Coatings Technol 378:124993. https://doi.org/10.1016/j.surfcoat.2019.124993

Williams DF (2008) On the mechanisms of biocompatibility. Biomaterials 29:2941–2953. https://doi.org/10.1016/j.biomaterials.2008.04.023

Martin KF (1978) A review of friction predictions in gear teeth. Wear 49:201–238. https://doi.org/10.1016/0043-1648(78)90088-1

Gupta MK, Niesłony P, Sarikaya M et al (2022) Tool wear patterns and their promoting mechanisms in hybrid cooling assisted machining of titanium Ti-3Al-2.5V/grade 9 alloy. Tribol Int 174:107773. https://doi.org/10.1016/j.triboint.2022.107773

Du N, Fathollahi-Fard AM, Wong KY (2023) Wildlife resource conservation and utilization for achieving sustainable development in China: main barriers and problem identification. Environ Sci Pollut Res. https://doi.org/10.1007/s11356-023-26982-7

Tian G, Lu W, Zhang X et al (2023) A survey of multi-criteria decision-making techniques for green logistics and low-carbon transportation systems. Environ Sci Pollut Res 30:57279–57301. https://doi.org/10.1007/s11356-023-26577-2

Maruda RW, Krolczyk GM, Feldshtein E et al (2017) Tool wear characterizations in finish turning of AISI 1045 carbon steel for MQCL conditions. Wear 372–373:54–67. https://doi.org/10.1016/j.wear.2016.12.006

Szczotkarz N, Mrugalski R, Maruda RW, et al (2020) Cutting tool wear in turning 316L stainless steel in the conditions of minimized lubrication. Tribol Int 106813

Rajesh AS, Prabhuswamy MS, Krishnasamy S (2022) Smart manufacturing through machine learning: a review, perspective, and future directions to the machining industry. J Eng 2022:1–6. https://doi.org/10.1155/2022/9735862

Chibani S, Coudert F-X (2020) Machine learning approaches for the prediction of materials properties. APL Mater 8:080701. https://doi.org/10.1063/5.0018384

Demirsöz R, Korkmaz ME, Gupta MK (2022) A novel use of hybrid Cryo-MQL system in improving the tribological characteristics of additively manufactured 316 stainless steel against 100 Cr6 alloy. Tribol Int 173:107613. https://doi.org/10.1016/j.triboint.2022.107613

Navarro CH, Martínez MF, Carvajal EEC et al (2021) Analysis of the wear behavior of multilayer coatings of TaZrN/TaZr produced by magnetron sputtering on AISI-316L stainless steel. Int J Adv Manuf Technol 117:1565–1573. https://doi.org/10.1007/s00170-021-07655-6

Riza SH, Masood SH, Wen C (2016) Wear behaviour of DMD-generated high-strength steels using multi-factor experiment design on a pin-on-disc apparatus. Int J Adv Manuf Technol 87:461–477. https://doi.org/10.1007/s00170-016-8505-8

Tassin C, Laroudie F, Pons M, Lelait L (1996) Improvement of the wear resistance of 316L stainless steel by laser surface alloying. Surf Coatings Technol 80:207–210. https://doi.org/10.1016/0257-8972(95)02713-0

Li G, Peng Q, Li C et al (2008) Effect of DC plasma nitriding temperature on microstructure and dry-sliding wear properties of 316L stainless steel. Surf Coatings Technol 202:2749–2754. https://doi.org/10.1016/j.surfcoat.2007.10.002

Dearnley PA, Aldrich-Smith G (2004) Corrosion–wear mechanisms of hard coated austenitic 316L stainless steels. Wear 256:491–499. https://doi.org/10.1016/S0043-1648(03)00559-3

Alvi S, Saeidi K, Akhtar F (2020) High temperature tribology and wear of selective laser melted (SLM) 316L stainless steel. Wear 448–449:203228. https://doi.org/10.1016/j.wear.2020.203228

Thomann UI, Uggowitzer PJ (2000) Wear–corrosion behavior of biocompatible austenitic stainless steels. Wear 239:48–58. https://doi.org/10.1016/S0043-1648(99)00372-5

Attabi S, Himour A, Laouar L, Motallebzadeh A (2021) Mechanical and wear behaviors of 316L stainless steel after ball burnishing treatment. J Mater Res Technol 15:3255–3267. https://doi.org/10.1016/j.jmrt.2021.09.081

Maleki E, Unal O, Reza Kashyzadeh K (2021) Influences of shot peening parameters on mechanical properties and fatigue behavior of 316 L steel: experimental, Taguchi method and response surface methodology. Met Mater Int 27:4418–4440. https://doi.org/10.1007/s12540-021-01013-7

Bahshwan M, Myant CW, Reddyhoff T, Pham M-S (2020) The role of microstructure on wear mechanisms and anisotropy of additively manufactured 316L stainless steel in dry sliding. Mater Des 196:109076. https://doi.org/10.1016/j.matdes.2020.109076

Chuangwen X, Ting X, Huaiyuan L et al (2017) Friction, wear, and cutting tests on 022Cr17Ni12Mo2 stainless steel under minimum quantity lubrication conditions. Int J Adv Manuf Technol 90:677–689. https://doi.org/10.1007/s00170-016-9406-6

García-León RA, Martínez-Trinidad J, Campos-Silva I et al (2021) Development of tribological maps on borided AISI 316L stainless steel under ball-on-flat wet sliding conditions. Tribol Int 163:107161. https://doi.org/10.1016/j.triboint.2021.107161

Qi Y, Zhao M, Feng M (2021) Molecular simulation of microstructure evolution and plastic deformation of nanocrystalline CoCrFeMnNi high-entropy alloy under tension and compression. J Alloys Compd 851:156923. https://doi.org/10.1016/j.jallcom.2020.156923

Hukkerikar AV, Arrazola P-J, Aristimuño P et al (2023) A tribological characterisation of Ti-48Al-2Cr-2Nb and Ti-6Al-4V alloys with dry, flood, and MQL lubricants. CIRP J Manuf Sci Technol 41:501–523. https://doi.org/10.1016/j.cirpj.2022.11.016

Wei Z, Wu Y, Hong S et al (2019) Effects of temperature on wear properties and mechanisms of HVOF sprayed CoCrAlYTa-10%Al2O3 coatings and H13 steel. Metals (Basel) 9:1224. https://doi.org/10.3390/met9111224

Zhang D, Li Y, Du X et al (2022) Microstructure and tribological performance of boride layers on ductile cast iron under dry sliding conditions. Eng Fail Anal 134:106080. https://doi.org/10.1016/j.engfailanal.2022.106080

Korkmaz ME, Gupta MK, Demirsöz R (2022) Understanding the lubrication regime phenomenon and its influence on tribological characteristics of additively manufactured 316 Steel under novel lubrication environment. Tribol Int 173:107686. https://doi.org/10.1016/j.triboint.2022.107686

Gupta MK, Demirsöz R, Korkmaz ME, Ross NS (2023) Wear and friction mechanism of stainless steel 420 under various lubrication conditions: a tribological assessment with ball on flat test. J Tribol 145

Bakhshandeh HR, Allahkaram SR, Zabihi AH, Barzegar M (2021) Evaluation of synergistic effect and failure characterization for Ni-based nanostructured coatings and 17–4PH SS under cavitation exposure in 3.5 wt % NaCl solution. Wear 466–467:203532. https://doi.org/10.1016/j.wear.2020.203532

Zou L, Zeng D, Wang J et al (2020) Effect of plastic deformation and fretting wear on the fretting fatigue of scaled railway axles. Int J Fatigue 132:105371. https://doi.org/10.1016/j.ijfatigue.2019.105371

Josyula SK, Narala SKR (2016) Experimental investigation on tribological behaviour of Al-TiCp composite under sliding wear conditions. Proc Inst Mech Eng Part J J Eng Tribol 230:919–929. https://doi.org/10.1177/1350650115620110

Gupta MK, El EH, Korkmaz ME et al (2022) Tribological and surface morphological characteristics of titanium alloys: a review. Arch Civ Mech Eng 22:72. https://doi.org/10.1007/s43452-022-00392-x

Demirsoz R, Uğur A, Erdoğdu AE et al (2022) Abrasive wear behavior of nano-sized steel scale on soft CuZn35Ni2 material. J Mater Eng Perform. https://doi.org/10.1007/s11665-022-07751-y

Paturi UMR, Palakurthy ST, Reddy NS (2023) The role of machine learning in tribology: a systematic review. Arch Comput Methods Eng 30:1345–1397. https://doi.org/10.1007/s11831-022-09841-5

Author information

Authors and Affiliations

Contributions

All authors contributed equally to this work.

Corresponding authors

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

The consent to submit this paper has been received explicitly from all co-authors.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Korkmaz, M.E., Gupta, M.K., Singh, G. et al. Machine learning models for online detection of wear and friction behaviour of biomedical graded stainless steel 316L under lubricating conditions. Int J Adv Manuf Technol 128, 2671–2688 (2023). https://doi.org/10.1007/s00170-023-12108-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00170-023-12108-3