Abstract

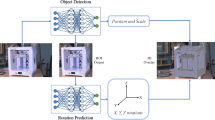

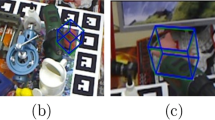

Tracking is the core problem of augmented reality. It has not yet been well resolved, which to some extent limits the wider application of industrial augmented reality in actual industrial scenarios. In this paper, we propose a general pose estimation, refinement, and tracking framework for industrial augmented reality systems. First, we propose a deep convolutional neural network that estimates the pose of texture-less objects at first sight, and a synthetic data generation pipeline synthesizes photorealistic training images for the network. Then, the object pose is used for the metric initialization of a monocular visual simultaneous localization and mapping (SLAM) system, which continuously tracks the real-time camera pose. During tracking, a back-end pose optimization thread calculates the pose residual between the model and the real scene and corrects it in real time. Finally, the entire assembly models are superimposed on the real scene through the transformation relationships contained in the CAD models. Experiments on a gearbox assembly scenario show that the proposed method achieves the highest tracking accuracy compared with a marker-based method and two state-of-the-art CNN-based object pose estimation methods. The whole pipeline runs at 25 FPS for real-time tracking. Moreover, the proposed method is also applied to a shipbuilding outfitting construction scenario, where it shows good scalability and generality for complex, large-scale industrial scenarios and different mobile terminals.
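The chain of transformations described in the abstract (object pose at initialization, camera pose from SLAM, part poses from the CAD assembly) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `anchor_object` and `part_in_camera`, the 4x4 homogeneous matrix convention, and the assumption that the SLAM map is already in metric scale are all hypothetical choices made for illustration.

```python
from typing import List

Mat4 = List[List[float]]  # 4x4 homogeneous rigid transform, row-major


def matmul(a: Mat4, b: Mat4) -> Mat4:
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert_rigid(t: Mat4) -> Mat4:
    """Invert a rigid transform [R | p] as [R^T | -R^T p]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    new_p = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [new_p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def translation(x: float, y: float, z: float) -> Mat4:
    """Pure translation, used here only to build toy poses."""
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def anchor_object(T_world_cam0: Mat4, T_cam0_obj: Mat4) -> Mat4:
    """Fix the object in the SLAM world frame from the initial pose estimate.

    Assumption: the SLAM map is already metric, so no scale factor is applied.
    """
    return matmul(T_world_cam0, T_cam0_obj)


def part_in_camera(T_world_cam: Mat4, T_world_obj: Mat4, T_obj_part: Mat4) -> Mat4:
    """Pose of one assembly part in the current camera frame.

    T_obj_part stands for the part's transform relative to the tracked
    object, as it would be read from the CAD assembly.
    """
    return matmul(matmul(invert_rigid(T_world_cam), T_world_obj), T_obj_part)


# Toy example: the object is detected 1 m in front of the first camera,
# the camera then moves 0.2 m along x, and a part sits 0.1 m along the
# object's y axis.
T_world_obj = anchor_object(translation(0.0, 0.0, 0.0), translation(0.0, 0.0, 1.0))
T_cam_part = part_in_camera(translation(0.2, 0.0, 0.0), T_world_obj,
                            translation(0.0, 0.1, 0.0))
part_position = [T_cam_part[i][3] for i in range(3)]
```

In this toy case the part ends up at roughly (-0.2, 0.1, 1.0) in the current camera frame. A real system would additionally need to handle scale alignment and the residual correction step mentioned in the abstract, both of which this sketch leaves out.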

Funding

This work is supported by the Ministry of Industry and Information Technology of the PRC (Grant No. CJ04N20) and the National Natural Science Foundation of China (Grant No. 51975362).

Author information

Contributions

Xu Yang: conceptualization, methodology, investigation, resources, data curation, validation, writing — original draft, writing — review and editing. Junqi Cai: software, investigation. Kunbo Li: software, investigation. Xiumin Fan: investigation, writing — review and editing. Hengling Cao: software, investigation.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

About this article

Cite this article

Yang, X., Cai, J., Li, K. et al. A monocular-based tracking framework for industrial augmented reality applications. Int J Adv Manuf Technol 128, 2571–2588 (2023). https://doi.org/10.1007/s00170-023-12082-w

DOI: https://doi.org/10.1007/s00170-023-12082-w