Abstract

The paper deals with the creation and implementation of a methodology for optimizing the parameters of cascade control of the machine tool axis drives. The first part presents the identification of a dynamic model of the axis based on experimental data from measuring the axis dynamics. The second part describes the controller model, selection of optimization objective functions, and optimization of constraint conditions. The optimization of controllers is tuned by simulation using identified state-space model. Subsequently, the optimization procedure is implemented on the identified model, and the found control parameters are used on a real machine tool linear axis with different loads. The implementation of the proposed complex procedure on a real horizontal machine tool proved the advantage of simultaneous tuning of all parameters using optimization methods. The strategy solves the problem of mutual interaction of all control law parameters disabling effective usability of gradual sequential tuning. The methodology was developed on a speed control loop, the tuning of which is usually the most difficult due to the close interaction with the dynamic properties of the machine mechanics. The whole procedure is also applicable to the position and current control loop.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Tuning the cascade controllers’ parameters is a demanding process that requires a high level of expertise of the operator performing this activity [1]. For this reason, a universal methodology has been developed that aims to automate this process as much as possible and significantly simplify it even for a less professional operator.

At the same time, the number of controller parameters that are subject to optimization is high, and these parameters interact. Based on this, there is a requirement for simultaneous optimization as an alternative to gradual optimization (manual tuning).

State feedback with Kalman filter state observer is the basis for the pole placement controller in [2]. The drive control parameters are determined from the multibody mechanical model representation in [3] to reduce the system vibrations. Tuning of cross coupling controller for the machine tool drives extends the stochastic linear quadratic Gaussian regulator, and it combines both the drive and the cutting dynamics into a unified model in [4]. Trajectory generation technique with a shaped frequency content to minimize residual vibrations is introduced in [5]; an alternative feedback control strategy with input signal shaper is presented in [6]. Improved artificial bee colony algorithm optimizes the settings of feedback PID and feedforward PD controller in [7] fulfilling the task of contour tracking. The adaptive sliding mode control with nonlinear sliding surface in [8] increases the feed drive motion accuracy. Static and dynamic machine tool stiffness [9] is influenced by force feedback applied to the PID cascade controller. The contour error–based sliding mode control is introduced in [10, 11] to control the industrial feed drive. The inverse dynamics compensation for machine feed drives using model predictive control is presented in [12]. Time-based linear programming links together feedrate optimization and servo error pre-compensation in [13] for obtaining machine servo drive input. In [14], a data-driven approach is used for controller tuning with Bayesian optimization approach.

The feed drives’ dynamics is identified using unbiased least squares scheme in [15]; parameters for identification are inertia and viscous friction. The genetic algorithm is used for drive axis identification in [16] covering rigid body motion and Coulomb friction. The moment of inertia system identification is performed in [17] by using relay feedback experiment in the time-domain combined with gradual pole compensation (it is supposed that single mass representation is satisfactory for the modeling of the system). Model-based system identification is used in [18] for obtaining the instantaneous model behavior, which can be used for system control. The reduced state-space model of the machine tool with feed drive is obtained in [19] covering dissipative phenomena (which were identified by measurement) and covering controller too. Theoretical estimation algorithm to determine modal parameters of a mechanical system is shown in [20]. Identification based on recursive least squares method is used to determine feed drive model including nonlinear friction [21]. In the paper [22], the pole search method is used in conjunction with least squares projection technique for identifying virtual machine tool drives, and it gives servo errors from the desired position with high accuracy. The system residual vibrations could be suppressed by active vibration absorber (delay resonator is presented in [23]) applied to the 2D robotic arm [24, 25].

This paper deals with the methodology for tuning the cascade control parameters of the machine drive axis. The methodology workflow is as follows: machine drive axis identification, cascade control loop assembly, formulation and processing of optimization task, and application of optimal controller settings. The paper is organized as follows: in Section 2, we describe the identification methods used. Section 3 describes the cascade control; Section 4 deals with the speed control model and emphasizes the free parameters (controller setting). Section 5 is the formulated optimization task objective function. Section 6 compares the simulation results (based on the model identified from measurement), performed with and without optimized controller settings. Section 7 presents the verification of auto-tuning algorithm on a real machine, and Section 8 summarizes the obtained results.

2 Identification of dynamic models

The model of electro-mechanical system with current feedback was obtained by separation from the identified model of velocity feedback. The identification was based on measured data of the desired engine velocity vd and the measured velocity vm, sampled with a time step Δt.

Inputs for identification are measured output data y1, y2, …, yq corresponding to measured inputs u1, u2, …, uq. The coefficient q denotes the total number of time steps. For the structured data, the most commonly used method is the Eigensystem realization algorithm (ERA) or methods derived from it (e.g., Multivariable Output Error State-Space (MOESP), [26, 27]).

2.1 Eigensystem realization algorithm method

The output of the ERA method (described by Gawronski [28]) is the discrete state description parameters in a balanced form (states vector is as controllable as observable). The state description is identified in the form described by Eqs. (3) and (4).

where A, B, C, and D are matrixes of state description, xi is the state vector, ui is the vector of inputs, and index i corresponds to a time step. The first step of identification is to construct the Markov parameters from the impulse response considering zero initial conditions. Generally, the construction of Markov parameters hk can be written as in Eq. (5).

The individual Markov parameters are then written to the Markov matrix H. The coefficient p denotes the prediction horizon (model order) and p ≪ q holds. Choice of order is significant, too low order would not affect part of dynamics, and too high order could bring undesirable dynamics (typically caused by noise in measured data), and in addition, it would increase computational demands.

To calculate Markov’s matrix H, it is first necessary to define an input matrix U and an output matrix Y.

The dependence (9) applies to such formulated matrices, and after pseudoinversion of the input matrix, we obtain the formula for determination of the Markov matrix (10) and its distribution yields also individual Markov parameters.

Observation matrix P and controllability matrix Q are expressed from the Hankel matrix H1 and H2; it results from Eq. (5).

By singular value decomposition (SVD), the Hankel matrix is broken down into a diagonal matrix of singular numbers Γ and an orthonormal matrices V and U. The diagonal matrix Γ is further broken down into Hankel matrices of singular numbers ΓV and ΓU.

The observation matrix P and controllability matrix Q are given by Eqs. (14) and (15).

The matrix A is expressed by a pseudoinversion from the shifted Hankel matrix H2.

The matrix B is determined as the first s columns of the matrix Q and the matrix C as the first r rows of the matrix P.

The matrix D corresponds to the zero Markov parameter.

2.2 Multivariable Output Error State-Space method

The MOESP method will be described using an approach by Katayama [29]. In the first step, the data matrices UK and YK are formed (q is supposed as sufficiently large).

Subsequently, LQ decomposition of these data matrices is performed.

where L11 and L22 are lower triangular matrices and Q1 and Q2 are orthogonal matrices. The next step is SVD decomposition of matrix L22

where U1 has dimension [p · l × n] and U2 has dimension [p · l × (p · l − n)], n is the dimension of the state vector, and l is the dimension of the output vector yi. Then, we define the extended observability matrix as:

where \( {\boldsymbol{S}}_{\mathbf{11}}^{1/2} \) is a matrix square root of the matrix S11.

Using the extended observability matrix, the matrix C is given by:

Note, that

Therefore, using the pseudoinverse of Op − 1, the matrix A can be solved as:

The calculation of B and D estimations is based on the solving equation:

Substituting \( {\boldsymbol{U}}_{\mathbf{2}}^T:= \left[{\mathcal{L}}_1{\mathcal{L}}_2\cdots {\mathcal{L}}_p\right] \) and \( {\boldsymbol{U}}_{\mathbf{2}}^T{\boldsymbol{L}}_{\mathbf{2}\mathbf{1}}{\boldsymbol{L}}_{\mathbf{11}}^{-1}:= \left[{\mathcal{M}}_1{\mathcal{M}}_2\cdots {\mathcal{M}}_p\right] \), we get overdetermined set of linear equations:

where \( {\overline{\mathcal{L}}}_i=\left[{\mathcal{L}}_i\cdots {\mathcal{L}}_p\right] \), i = 2, ⋯, p. It can be shown that a unique least-squares solution exists if p > n.

3 Cascade control

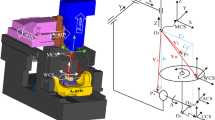

The cascade control of feed drive axis consists of three loops (Fig. 1). The innermost current loop, superior speed loop, and the most superior position loop. Determining influence has position control, and others are complementary. They significantly improve behavior of all feedback control. Further improvement brings velocity and acceleration feedforward. Significant influence on the quality of feedback control has the measuring systems and its placing on mechanical structure.

3.1 Current control

The most usual actuator in feed drive axes is brushless permanent magnet synchronous motor (PMSM). The indivisible part of this type of servomotor became the commutation sensor as a necessary tool for the computation of a right commutation angle. Such electronic, brushless, commutation superceded earlier used a mechanical commutator with brush. Further development brings sensorless motors, but the motors with position commutation sensor are still in front in precise applications.

Very advantageous feature of PMSM is linear relationship between the torque and the current (or force and current in the case of linear motor). This relationship can be described by using Eq. [30].

where the MK is a motor torque in [Nm], I is a current in [A], and KT is a torque constant in [Nm/A]. If the servomotor would be driven only by the voltage applied on its terminal, the movement would be flexible as the external moment would act on the shaft. Another phenomenon is the electro-magnetic force (EMF) which counteracts the terminal voltage lowering the motor current and thereby its moment. Both undesirable effects are suppressed by the introduction of the current control. PI current controller helps to suppress the effect of EMF and moreover significantly speeds up settling time of current in windings. With PI current control, the settling time of step response is approximately in milliseconds. The output of the current PI control is a desired motor voltage.

3.2 Speed control

Though the fact that the speed PI control works with speed signal, its main contribution is the theoretically infinite static stiffness of feedback control. This is because of the I component of the PI controller. The transfer function Gv (s) of speed PI control is:

where Kp is the proportional component and Tn is the integral component of the speed controller, and j is the imaginary unit. We could see that as ω→0, the value of Gv(s) goes to infinity.

Setting the speed control is crucial for the best performance of whole feed drive axis. The main aims are to reach wide bandwidth and high dynamic stiffness of control loop [30, 31]. High bandwidth allows good tracking properties, and high dynamical stiffness brings low sensitivity to external disturbance MD (Fig. 1). The key to reach these requirements is to set as high gain as possible. But there must be fulfilled requirements for stability and low content of mechanical resonances in step response. In praxis, the gain of the speed controller is limited due to mechanical resonance limits [32]. Such interaction is illustrated in Fig. 2 and Fig. 3.

Bode plot of speed control loop. Controlled mechanics has several resonances [33]

Bode plot of speed control loop. Comparison of bandwidths of speed feedback with and without frequency filters [33]

3.3 Position control

The most superior is the position control loop. The wide bandwidth control requirement assures the basic pre-requisite for accurate tracking in a wide range of frequencies. But the magnitude of the gain, the well-known Kv factor, must be set with regard to aperiodic response to input. No oscillations which can cause some undesirable cut into the workpiece contour are acceptable.

Figure 4 shows different shapes of position deviations. Each curve was captured for the same velocity. The position deviation is nonzero and constant during the constant velocity movement. Its value is proportional to the Kv factor and velocity. If Kv factors of interpolating axes are not equal, it causes contour deviation. It is readily seen during circular interpolation. Different Kv factors for axes cause elliptic instead circular shape. This effect is neglected when the feedforward (FFW) is used. In such case, the circular shape is held also for different Kv factors.

The feedforward (Fig. 1) can significantly reduce position deviation and allows accurate tracking of trajectory also for different Kv factors set in each moving axis. Control systems offer velocity feedforward and acceleration feedforward. Both of them work with the desired position as the input variable. Velocity feedforward derives a desired velocity in a way of derivative block series and weight coefficient Kw in range <0; 1>. Maximal suppression of position deviation is if Kw = 1. In a steady-state movement, the position deviation is in such case zero. Other suppression of position deviations in transition response is possible via acceleration feedforward.

4 Speed control loop model

The general methodology for tuning control loop controllers was developed on the speed control loop. This loop was chosen because its tuning is usually the most difficult. The first step of development was to build a simplified speed control loop model. The model (Fig. 5) consists of two parts, the speed controller and the model of the mechanical system with current control loop. The mechanical system model can be obtained either by modeling (analytical model) or by identification.

Simplified speed control loop model [34]

The speed controller consists of a PI controller, a series of notch filters, and a low-pass filter. The low-pass filter serves as a fuse; if the function of the notch filters is not guaranteed, therefore its use is not always required, and this must be considered when designing the mathematical model.

4.1 State-space description of PI regulator

The state-space model of the PI regulator (API, BPI, CPI, DPI) is given by 2 parameters: proportional gain Kp and integration time constant Tn.

4.2 State-space description of notch filter

The state-space model of the notch filter (ANi, BNi, CNi, DNi) is given by 4 parameters: eigenfrequencies Ω1,Zi, Ω2,Zi, and comparative damping ξ1,Ni, ξ2,Ni.

4.3 State-space description of low-pass filter

The state-space model of the low-pass filter (ALP, BLP, CLP, DLP) is given by 2 parameters: eigenfrequency ΩLP and comparative damping ξLP.

4.4 State-space description of the open-loop system

When constructing an open-loop model, a state-space description of a series of notch filters was first compiled. The shape of the state description matrices for n notch filters is described by using Eqs. (49)–(52). The notation of the matrix product is used in the notation. The short notation is used for the multiplication of matrixes DNi (e.g., DN3 → 1 = DN3 DN2 DN1).

The state-space description of the speed controller (ACi, BCi, CCi, DCi) was created in the next step. At this stage, either controller without low-pass filter (AC1, BC1, CC1, DC1) is constructed

or with low-pass filter controller (AC2, BC2, CC2, DC2) is created.

An open-loop state-space description was obtained by serial connection of the controller model and the mechanical system model.

where AMS, BMS, CMS, DMS are matrices of state-space mechanical model representation.

4.5 State-space description of the closed-loop system

A closed-loop system description (66)–(69) is obtained by input uOL substitution with the difference between the system output y and required value for the open-loop input u, Eq. (65). Arrays ACL, BCL, CCL, and DCL are matrices of the closed-loop state-space description.

4.6 Transformation between physical and machine parameters without reduction

The inputs of the optimization algorithm are physical parameters, but machine parameters of individual speed controller blocks are entered into the machine control system. For this reason, it was necessary to define forward and backward transformations between physical and machine parameters. Figure 6 shows the machine parameters of the notch filter (fZi - retention frequency, DZi - drop on fZi, WZi - bandwidth, RZi - reduction behind fZi).

5 Formulation of velocity controller tuning optimization task

The first step in the formulation of the optimization task was to select the quality criteria of the machine tool drive axis control [30]. Based on these criteria, the sub-objective functions were subsequently defined, and finally the overall objective function was compiled, and boundary conditions of the task were determined.

5.1 Influence of amplitude characteristics

The first selected quality control criterion was the amplitude characteristics of the system. This characteristic (see Fig. 7) can be divided into three areas: precision control area (zone 1), transition area (zone 2), and damping area (zone 3).

In the precision control area, the system response is required to match the best desired output. The aim is to approach the course of the amplitude characteristic to the horizontal axis. A partial objective function is defined as the absolute value of the area between the characteristic curve and the horizontal axis.

Since the amplitude characteristic is rendered discreetly, it was necessary to convert this function to a numerical form.

Conversely, in the damping area, resonant states are required to be suppressed. This requirement is ensured by reducing the global maximum in this region to the cutoff amplitude. The partial objective function is defined as the absolute value of the difference between the maximum and the limit amplitude.

The effect of the shape of the transition on the control quality has not been proven. For this reason, this partial objective function is zero. The key parameters of the transition area are edge frequencies f12 and f23 that determine the location and width of the area.

5.2 Influence of system unit step response

The second criterion of the objective function is the overshoot value in response to a unit jump. The optimum overshoot of step response is not zero (Fig. 8). The optimum overshoot value is usually selected around 20% to maintain machine tool dynamics.

The requirement for a sufficiently rapid response of the machine to the external stimulus is based on the effort to approach the maximum overshoot to the optimum value. The partial objective function is then defined as the absolute value of the difference between maximum and optimal overshoot.

5.3 Influence of system distance from stability limit

The last control quality criterion is the distance of the system from the stability limit (marked as e). This distance is given by the highest value of the real component of the poles of the system. If this value is lower than the limit distance, the system is guaranteed to be stable and the objective function is zero. Conversely, if it is greater than zero, the system is unstable, and the value of the partial objective function is very high. The transition of the partial objective function from the limit distance to the stability limit is given by linear dependence. Related objective function behavior is described by using Eq. (75) and is displayed in Fig. 9.

Partial target function of stability criterion [34]

5.4 The overall objective function

The overall objective function is the sum of the products of the partial objective functions and their weights. The value of these weights is chosen that the influence of individual objective functions on the total value is balanced.

The use of all criteria may not always be required, and if this is not the case, selected ones can be excluded from optimization by resetting the weight of the partial objective function. This step can be performed for criteria where fulfillment is ensured by a marginal condition of optimization and the partial objective function serves as an indicator of the quality of fulfillment of this criterion (amplitude characteristic - attenuation area, unit jump response, and stability criterion).

5.5 Boundary conditions of the optimization task

There are boundary conditions related to individual machine parameters. The choice of these conditions depends on the user experience and capabilities of the control software. Other optimization boundaries are inequality conditions based on control quality criteria, equations are as follows:

6 Simulation testing of developed optimization procedure

The optimization algorithm [35] was applied to the identified current control loop models of benchmark machine tool. The benchmark machine is a modern medium-sized horizontal machining center equipped with interchangeable technological pallets with a load capacity of 16 t. The machine itself has 5 fully controlled axes and two-axis indexed milling head. The machine is designed for productive machining; therefore, it works with high working feeds up to 36 m/min and spindle speeds up to 4000 1/min with a spindle diameter of 130 mm. The model identification was carried out at different loads on the machine tool. The machine tool load was simulated by placing the weight on the machine axis.

The identification process can be divided into two phases. In the first phase, an open speed control loop model with a weakly tuned speed controller is identified (the controller parameters are set to minimize the current control loop behavior); in the second phase, the current control loop model itself is separated. Figure 10 shows a comparison of the measured and simulated waveforms of open speed control loop response to the chirp signal.

All quality control criteria were used to calculate the objective function. Selected parameters of objective function calculation are recorded in Table 1.

6.1 Load state hm0

Optimization of the machine speed control loop parameters in the load state hm0 (i.e., without load) was chosen to present the functionality of the methodology. The change of the individual parameters before and after the optimization is recorded in Table 2.

On the course of the amplitude characteristic before and after optimization (Fig. 11), the extension of the precision control area and the attenuation of the influence of 2 natural machine frequencies in the attenuation area can be observed.

By comparing the system response to the unit jump (Fig. 12), it can be deduced that the condition of lowering the maximum overshoot below the optimum overshoot condition was met, but the system response was decreased.

6.2 Comparison of load states

For the sake of completeness, the results of optimization of all load cases (hm0 - without load, hm1 - light load (~5 t), hm2 - heavy load (~10 t)) are presented in this chapter.

Comparing the amplitude characteristics after optimization (Fig. 13), it can be concluded that increasing the load reduces the boundary of the precision control area. Similarly, a system response slowdown can be observed in comparing unit jump responses (Fig. 14).

7 Verification of auto-tuning algorithm on real machine

Verification tests proceeded on a real heavy machine tool with a ballscrew feed drive axis with parameters introduced in Section 6. The subject of the test was to verify computed constants of speed control by its transfer into the real control system and by measuring resulting behavior of the feed drive. Adjusted parameters were proportional Kp and integral component Tn of PI speed controller and the parameters of current filters, namely frequency and damping of low-pass filter and frequency, damping (notch), and bandwidth of band-stop filters.

The machine tool was equipped by the control system Heidenhain. An example of user screen with measured data is in Fig. 15.

7.1 Table without load — hm0

Figure 16 shows comparison of the original and optimized characteristic of speed control loop for the table without load. Comparison of the computed constants transferred into the machine parameters are presented in Table 3. Both optimized settings 1 and 2 bring stable and suitable behavior of the feed drive axis. Computed parameters are portable into the real control system. From a practical point of view, setting 1 is a little bit better than setting 2 because its filters are around the frequencies 150 Hz and 200 Hz less aggressive.

7.2 Table with one load — hm1 (~5 t)

Other verifications were carried out with one load of approximately 5 t on the table. Comparisons of such measurements are shown in Fig. 17; an overview of parameters transferred into the machine are in Table 4.

Automatic settings of speed control loop for axis with load approx. 5 t bring stable and suitable settings with more remarkable improvement of dynamic properties than in previous case hm0. Especially, optimization with setting 2 brings bandwidth increase of about 30%.

7.3 Table with two loads — hm2 (~10 t)

Further verifications were carried out with two loads of approximately 10 t on the table. Comparisons of such measurements are shown in Fig. 18, and transferred machine parameters are in Table 5.

Automated tuning brings a stable and suitable setting of speed control loop like in previous cases, and computed parameters are portable into a real machine control system. Comparisons of the machine parameters for presented measurements are in Table 5. In this case, three band-stop filters were used compared to two band-stop filters in previous two cases.

8 Conclusions

The main objective of the research was to create a methodology for optimization of the cascade regulation controller of the machine tool drive axes. This methodology was developed on the speed control loop. Its tuning is usually the most difficult because of close interaction with modal and other dynamic properties of the machine. The whole procedure is of course also applicable to the position and current control loop. During the development, a simplified experimentally identified model of system was built and used, the optimization task was formulated, and the quality criteria of control including optimization boundary conditions were applied.

Optimized settings applied on the real machine bring higher loop gain which improve dynamic behavior and stiffness of feed drive axis. Differences between the original auto-tuned settings seem to be low, but from the practical experience, further increasing of gain would lead to oscillating behavior with consequences in accuracy of machine. From this point of view, it can be resumed that the developed algorithm is capable to find the great compromise between the loop gain, stability, and measure of oscillation. Moreover, tests were carried out in a different level of the axis stroke (which brings small drift in natural frequencies) with no impact on results. Thereby, it is possible to highlight the robustness and portability of computed settings into the machine with the real control system.

The paper shows the efficiency of the proposed methodology applied to the identified model of the real machine tool axes. Furthermore, the possibility of using the proposed methodology as an alternative to manual tuning by a trained operator was proven. The implementation of the proposed complex procedure on a real machine tool proved the advantage of simultaneous tuning of all parameters using optimization methods. This strategy solves the problem of mutual interaction of all control law parameters disabling effective usability of gradual sequential tuning. As part of the further development of the methodology, it is planned to increase the automation of the preparatory phase (choice of initial optimization parameters) and to optimize parameters of all control loops (position, speed, current) together.

The subject of further work could be optimizing the shape of current filters.

Availability of data and material

Not applicable.

References

Yang H, Wang Z, Zhang T, Du F (2020) A review on vibration analysis and control of machine tool feed drive systems. Int J Adv Manuf Technol 107:503–525. https://doi.org/10.1007/s00170-020-05041-2

Erkorkmaz K, Altintas Y (2001) High speed CNC system design. Part III: high speed tracking and contouring control of feed drives. Int J Mach Tools Manuf 41(11):1637–1658. https://doi.org/10.1016/S0890-6955(01)00004-9

Chen C, Cheng C (2005) Integrated design for a mechatronic feed drive system of machine tools. Proceedings, 2005 IEEE/ASME International Conference on Advanced Intelligent Mechatronics 588-593. https://doi.org/10.1109/AIM.2005.1511046

Elbeheiry EM, Elmaraghy WH, Elmaraghy HA (2005) A structured linear quadratic gaussian based control design algorithm for machine tool controllers including both feed drive and process dynamics. J Vib Control 11(7):949–983. https://doi.org/10.1177/1077546305055542

Sencer B, Ishizaki K (2015) Smooth polynomial interpolation for point-to-point trajectories with vibration avoidance. IECON 2015 - 41st Annual Conference of the IEEE Industrial Electronics Society 002070-002075. https://doi.org/10.1109/IECON.2015.7392406

Beneš P, Valášek M, Šika Z, Zavřel J, Pelikán J (2019) SHAVO control: the combination of the adjusted command shaping and feedback control for vibration suppression. Acta Mech 230(5):1891–1905. https://doi.org/10.1007/s00707-019-2363-z

Chen S-Y, Chien Z-J, Wang W-Y, Chiang H-H (2020) Contour tracking control of a linear motors-driven X-Y-Y stage using auto-tuning cross-coupled 2DOF PID control approach. Appl Sci 10(24):9036. https://doi.org/10.3390/app10249036

Farrage A, Uchiyama N (2020) Improvement of motion accuracy and energy consumption for industrial feed drive systems using adaptive sliding mode control. ISA Trans 106:382–391. https://doi.org/10.1016/j.isatra.2020.06.025

Kehne S, Fey M, Brecher C, Herfs W (2020) Control of the static and dynamic stiffness of feed drive axes by using an external force sensor. 2020 International Conference on Electrical Machines (ICEM) 1081-1085. https://doi.org/10.1109/ICEM49940.2020.9271036

Msukwa MR, Nshama EW, Uchiyama N (2020) Contouring control based on reference adjustment and uncertainty compensator for precision motion of industrial feed drive systems. 2020 IEEE 29th International Symposium on Industrial Electronics (ISIE) 89-94. https://doi.org/10.1109/ISIE45063.2020.9152478

Msukwa MR, Nshama EW, Uchiyama N (2020) Adaptive sliding mode control with feedforward compensator for energy-efficient and high-speed precision motion of feed drive systems. IEEE Access 8:43571–43581. https://doi.org/10.1109/ACCESS.2020.2977395

Margolis BWL, Farouki RT (2020) Inverse dynamics toolpath compensation for CNC machines based on model predictive control. Int J Adv Manuf Technol 109(7):2155–2172. https://doi.org/10.1007/s00170-020-05719-7

Kim H, Okwudire CE (2020) Simultaneous servo error pre-compensation and feedrate optimization with tolerance constraints using linear programming. Int J Adv Manuf Technol 109(3):809–821. https://doi.org/10.1007/s00170-020-05651-w

Khosravi M, Behrunani V, Myszkor P, Smith RS, Rupenyan A, Lygeros J (2021) Performance-driven cascade controller tuning with Bayesian optimization. IEEE Transactions on Industrial Electronics. https://doi.org/10.1109/TIE.2021.3050356

Erkorkmaz K, Altintas Y (2001) High speed CNC system design. Part II: modeling and identification of feed drives. Int J Mach Tools Manuf 41(10):1487–1509. https://doi.org/10.1016/S0890-6955(01)00003-7

Wong WW-S, Erkorkmaz K (2010) Constrained identification of virtual CNC drives using a genetic algorithm. Int J Adv Manuf Technol 50(1):275–288. https://doi.org/10.1007/s00170-009-2496-7

Neugebauer R, Hofmann S, Hellmich A, Schlegel H (2011) Time-based method for the combined identification of velocity-loop parameters. Archive of Mechanical Engineering 58(2):175–184

Sekler P, Voß M, Verl A (2012) Model-based calculation of the system behavior of machine structures on the control device for vibration avoidance. Int J Adv Manuf Technol 58(9):1087–1095. https://doi.org/10.1007/s00170-011-3450-z

Rebelein C, Vlacil J, Zaeh MF (2017) Modeling of the dynamic behavior of machine tools: influences of damping, friction, control and motion. Prod Eng 11(1):61–74. https://doi.org/10.1007/s11740-016-0704-5

Khader N (2014) Modal parameters of a rotating multiple-disk-shaft system from simulated frequency response data. Applied and Computational Mechanics 8(1):187–198

Lee C-Y, Hwang S-H, Nam E, Min B-K (2020) Identification of mass and sliding friction parameters of machine tool feed drive using recursive least squares method. Int J Adv Manuf Technol 109(9):2831–2844. https://doi.org/10.1007/s00170-020-05858-x

Turhan MH, Tseng GWG, Erkorkmaz K, Fidan B (2020) Dynamic model identification for CNC machine tool feed drives from in-process signals for virtual process planning. Mechatronics 72:102445. https://doi.org/10.1016/j.mechatronics.2020.102445

Olgac N, Holm-Hansen BT (1994) A novel active vibration absorption technique: delayed resonator. J Sound Vib 176(1):93–104. https://doi.org/10.1006/jsvi.1994.1360

Kraus K, Šika Z, Beneš P, Krivošej J, Vyhlídal T (2020) Mechatronic robot arm with active vibration absorbers. J Vib Control 26(13-14):1145–1156. https://doi.org/10.1177/1077546320918488

Šika Z, Vyhlídal T, Neusser Z (2021) Two-dimensional delayed resonator for entire vibration absorption. J Sound Vib 500:116010. https://doi.org/10.1016/j.jsv.2021.116010

Jamaludin IW, Wahab NA, Khalib NS, Sahlan S, Ibrahim Z, Rahmat MF (2013) N4SID and MOESP subspace identification methods. 2013 IEEE 9th International Colloquium on Signal Processing and its Applications 140-145. https://doi.org/10.1109/CSPA.2013.6530030

Poshtan J, Mojallali H (2020) Subspace system identification. Iranian Journal of Electrical and Electronic Engineering 1(1):11-17

Gawronski WK (2004) Advanced structural dynamics and active control of structures, New York: Springer-Verlag New York. https://doi.org/10.1007/978-0-387-72133-0

Katayma T (2005) Subspace methods for system identification, London: Springer- Verlag. https://doi.org/10.1007/1-84628-158-X

Souček P (2004) Servomechanisms in production machines, Prague: ČVUT (in Czech)

Moravec J (2014) Minimization of errors and irregular motion of machine tool feed drive axes. Dissertation, Czech Technical University in Prague (in Czech)

Bubák A (2004) Increasing the dynamics and accuracy of machine tool feed axes. Dissertation, Czech Technical University in Prague (in Czech)

Moravec J, Šika Z (2019) Tuning of position control loop of machine tools based on experimentally identified mechanical model. Computational mechanics 2019: Proceedings of computational Mechanics 2019: 35th conference with international participation 127-128

Halamka V (2019) Optimization of control of machine drive axis. Masters thesis, Czech Technical University in Prague (in Czech)

Houck C, Joines J, Kay A (1994) A genetic algorithm for function optimization: a MATLAB implementation, North California State University

Funding

This paper has been supported by the project “Manufacturing engineering and precision engineering” funded as project No. CZ.02.1.01/0.0/0.0/16_026/0008404 by OP RDE (ERDF).

Author information

Authors and Affiliations

Contributions

All authors contributed on the paper; Vojtech Halamka provided the controller setting optimization, Jan Moravec contributed on the measurement and data acquisition, Petr Benes contributed on the measurement and system identification, Zdenek Neusser contributed on state of the art assembly and paper preparation, Jan Koubek contributed on data acquisition, Tomas Kozlok provided the measurement support, Michael Valasek contributed on the main paper idea, and Zbynek Sika supervised the measurement and controller parameters’ tuning process.

Corresponding author

Ethics declarations

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Halamka, V., Moravec, J., Beneš, P. et al. Drive axis controller optimization of production machines based on dynamic models. Int J Adv Manuf Technol 115, 1277–1293 (2021). https://doi.org/10.1007/s00170-021-07160-w

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00170-021-07160-w