Abstract

In selective laser melting (SLM), spattering is an important phenomenon that is closely related to the quality of the manufactured parts. Characterising and monitoring spattering behaviour is therefore valuable for understanding the manufacturing process and improving the manufacturing quality of SLM. This paper introduces a method of automatic visual classification to distinguish the spattering characteristics of SLM processes under different manufacturing conditions. A compact feature descriptor is proposed to represent spattering patterns, and its effectiveness is evaluated using real images captured under different conditions. The feature descriptor combines information on spatter trajectory morphology, spatial distribution, and temporal behaviour. Classification is performed using a support vector machine (SVM) and random forests, and the results show a highly promising classification accuracy of about 97%. The advantages of this work include a compact representation and the semantic interpretability of the feature description. In addition, the quality of the manufactured parts is mapped to spattering characteristics under different laser energy densities. Such a map table can then be used to define the desired spatter features, providing a non-contact monitoring solution for online anomaly detection. This work will lead to the further integration of a real-time vision monitoring system into an online closed-loop prognostic system for SLM, in order to improve performance in terms of manufacturing quality, power consumption, and fault detection.

1 Introduction

Additive manufacturing (AM) technologies, such as selective laser melting (SLM), adopt a layer-by-layer manufacturing principle to fabricate metallic components in a broad range of sectors [17, 18, 20, 45]. Despite increasing attention from both academia and industry, several challenges still form a barrier to the breakthrough of SLM technology; one of the most significant is the quality control of the laser-material interaction [13, 28, 32, 35]. Job failures and defects such as porosity and cracks can occur and can only be detected after the manufacturing process. All control parameters, such as laser power or scan patterns, are pre-determined empirically. The work must then be repeated with different control parameters to find the optimal combination, a process that is very costly and time consuming. In situ process monitoring and in situ metrology hold the potential to overcome the technological barrier posed by the lack of quality assurance in SLM.

Laser melting is generally accompanied by spattering during the laser-material interaction, and the spatters produced exhibit different characteristics as the process parameters vary. It has been shown that spatters can produce inclusions and discontinuities in the built parts, which degrade the mechanical properties by reducing structural integrity [3, 4, 31, 37, 41]. This implies that the characteristics of spatters under optimum conditions could be systematically analysed and treated as a significant factor for evaluating manufacturing quality during the SLM process.

Spattering is an important phenomenon that is closely related to the quality of the manufactured parts [3, 4, 37, 41, 42]. Characterising and monitoring spattering behaviour is valuable for understanding the manufacturing process and improving the manufacturing quality of SLM. However, most camera systems used for this purpose rely on either special imaging techniques, such as X-ray, or costly vision/optical systems, such as high-speed or thermal cameras.

Unlike costly high-speed cameras, standard cameras capture spattering motion as long-tailed trajectories because of the high speeds of the spatters; the trajectory lengths depend on the camera exposure time. The appearance of the captured spatter trajectories provides rich information on spattering distribution and motion dynamics, which can be understood as a form of optical flow. As indicated in [3, 4, 42], the spattering appearance can be considered a manufacturing process signature that can be associated with build quality. Limited research has been carried out on the in situ automatic identification of spattering characteristics, or on the correlation between spattering characteristics and part quality.

Inspired by this, this paper introduces a method for automatic visual monitoring to distinguish the spattering characteristics of SLM processes under different manufacturing conditions. The aim of this work is threefold: (1) to develop a novel algorithm that can classify spatters by their visual characteristics under multiple laser melting conditions using a consumer-grade video recording device; (2) to develop a compact, interpretable, and robust feature descriptor to represent SLM spatter trajectories; and (3) to investigate the feasibility of identifying the correlation between the quality of manufactured parts and the classification results of spatters.

The remainder of this paper is organised as follows. Section 2 reviews related work. Section 3 details the proposed method, followed by the experimental details in Section 4. The last section concludes the work.

2 Background

This section reviews related works with respect to three subjects: general SLM visual monitoring, spattering monitoring, and visual classification.

2.1 In situ visual monitoring for SLM/AM

State-of-the-art AM processes have not yet incorporated in situ monitoring technologies for closed-loop control, such as real-time detection of discontinuities. Many AM manufacturers offer additional monitoring modules that can be added onto the basic AM machines for in situ process monitoring and data collection. However, in most cases, the data generated are stored but not analysed in real time for closed-loop control feedback.

Comprehensive summaries of the in situ monitoring and closed-loop feedback modules currently available from prominent AM machine manufacturers can be found in [10, 13]. In-process monitoring research is still in its infancy. Vision, with the obvious advantage of requiring only simple hardware modification, is a very popular method for non-contact process monitoring. For instance, Bertoli et al. [34] used high-frame-rate video recording of the SLM of stainless steel to investigate the laser-powder interaction and melt pool evolution. Preliminary work on closed-loop control of melt pool temperature has been carried out using in situ monitoring with high-speed cameras in combination with photodiodes [6]. Most in-process monitoring systems are intended for offline study and thus have not been integrated into AM machines. Thermal cameras, such as pyrometric or IR cameras, have proved effective in producing clear visual features of melt pools [13, 14]. Along with melt pool monitoring, the detection of material discontinuities, such as pores, imperfections in the powder bed, and over-processing causing part curing, has also proved feasible. However, all such previous works rely on offline processing rather than being integrated into AM machines [10, 13].

Other imaging techniques, such as X-ray imaging, have also been used. Leung et al. [25] employed an in situ X-ray imaging technique to investigate the physical phenomena during the deposition of the first and second layers. They observed that the laser-induced gas/vapour jet promotes the formation of metal tracks and a denuded zone via spattering, and developed a mechanism map for predicting the evolution of melt features and changes in melt track morphology. Zhao et al. [47] used high-speed X-ray imaging to study the formation, evolution, and collapse of a keyhole instability leading to porosity formation. A recent work proposes a compressive sensing based method, named physics-based compressive sensing (PBCS), for real-time temperature monitoring of additive manufacturing [28], which uses sparse measurements to construct the three-dimensional temperature field. The aforementioned in situ imaging techniques, however, are fairly costly, and melt pool behaviour is difficult to capture because of the very high cooling rate.

2.2 Spattering monitoring

Spattering is highly related to the quality of the manufactured parts. Quantitative characterisation of spattering behaviours is valuable in understanding the physical process of manufacturing. There are a number of recent studies on spattering and how it relates to the quality of manufacturing.

Spattering has three main sources, namely recoil pressure, the Marangoni effect, and the heat effect in the molten pool, and each source results in a different spattering morphology. Figure 1 shows the three types of spatters, type I metallic jet, type II droplet, and type III powder spatter, which present different morphologies. Spattering particles become embedded in the surface and interior of SLM-fabricated parts. Such findings help in controlling the intensity of spattering and improving the stability and repeatability of the SLM fabrication process.

Spatter formation illustration [37]

The influence of laser energy on spattering is studied in [37], which analysed the formation principle, appearance, and composition of spatters. The results indicate that as the laser energy input increases, the intensity and quantity of spattering increase correspondingly. The authors of [37] also studied how spattering particles degrade the quality of the manufactured parts. Figure 2 illustrates the pores and impurities introduced by spatters, which are considerably larger (\(\sim 162\ \upmu \)m) than the original powder particles (\(\sim 32\ \upmu \)m) [37].

Spattering particles on powder recoating and the introduced defects. (a) Powder recoating with spatters in presence. (b) Gaps introduced near spatters after melting. (c) Large spatters impeding spreading of liquid metal within the molten pools where impurities solidify. [37]

Another relevant work studies the role of recoil pressure in SLM using multi-laser technology [3], in order to optimise process parameters and thereby enhance the mechanical properties of SLM products. The formation mechanism and behaviour of spatter particles during SLM fabrication are monitored using high-speed photography, image processing techniques are applied to characterise the spatters, and their influence on the fabrication is studied. A further study suggests that changing the laser scan velocity has more influence on spatter formation than the energy input [4]. Microscopic examination and density analysis of SLM parts were performed in that work to interpret the relationship among the number of created spatter particles, induced unmelted regions, and density variability. The analysis helps to enhance current methods for optimising manufacturing process parameters in SLM.

The correlation between ex situ melt track properties and in situ high-speed, high-resolution characterisation is studied in [42]. The work attempts to correlate the protrusion at the starting position of the melt track with the droplet ejection behaviour and the backward surging melt. The inclination angles of the depression walls are found to be consistent with the ejection angles of backward-ejected spatters. In addition, the vapour recoil pressure is quantified by in situ characterisation of the deflection of typical forward-ejected spatters.

Porosity, cracks, and delamination are common defects in SLM manufacturing. In [41], a near-infrared (NIR) camera is used to capture images for SLM process monitoring to study plume and spatter signatures, which are closely related to the melted states and laser energy density. An adapted deep belief network (DBN) framework was deployed to recognise the melted state from the NIR images and obtained a classification rate of 83.40% for five melted states.

In [31], statistical descriptors of the spattering behaviours along the laser scan path are introduced by analysing high-speed camera images with image segmentation and feature extraction. To classify energy density conditions corresponding to different quality states, a logistic regression model is introduced and the results show a significant increase in successful detection of under-melting and over-melting conditions.

In addition, stereovision has been used to measure and characterise spatter behaviour in terms of the size, speed, direction, and age of spatters ejected from the laser melt pool [5]. A pair of low-cost, high-speed cameras are integrated and synchronised to provide stereovision for monitoring and understanding spattering in 3D. The work presents a preliminary study on monitoring the health of the laser process, with the aim of ensuring that spatter does not contaminate the build.

2.3 Preliminary background on visual classification

Classification methods can be broadly categorised into two main families: conventional machine learning and the more recent deep learning based methods. Conventional machine learning methods generally involve two key steps: feature extraction and selection, which are usually manually defined, and classification using algorithms such as neural networks (NN) and support vector machines (SVM). Feature selection tries to find a subset of the original variables or attributes, whereas feature extraction transforms the measured data into more informative and non-redundant values, known as features, facilitating the subsequent learning and generalisation steps. Such features are sometimes more amenable to human interpretation.

In recent years, deep learning based methods have been shown to be the most promising solution, outperforming previous state-of-the-art machine learning techniques in many areas. The key principle is primarily the same as that of standard neural networks, but with more sophisticated and computationally intensive architectures with more layers. The deep convolutional neural network (DCNN) AlexNet [23], introduced in 2012, is considered an important milestone, as it was the first time a DCNN outperformed traditional hand-crafted features on ImageNet. One of the later developments in DCNNs is ResNet, introduced by He et al. [19], who empirically showed that traditional CNN models face a depth threshold beyond which performance degrades, by comparing the training and test errors of a 20-layer CNN and a 56-layer CNN. However, deep learning demands large amounts of high-quality labelled data, making it impractical in several domains [29]. Also, since a neural network works as a black box, it is very difficult or impossible to interpret the data inside the network, making it less attractive for applications that require detailed fault diagnosis [29].

In metal additive manufacturing, machine learning has been used in several aspects, such as quality inspection. For example, [46] proposes using a convolutional neural network (CNN) for online quality inspection by recognising the ‘beautiful-weld’ category from top-surface images of CoCrMo, with promising recognition accuracy. Zhu et al. [49] used machine learning to model shape deviations in AM by statistical learning from data on multiple shapes. [33] employed machine learning to identify in situ melt pool signatures in AM: a scale-invariant description of melt pool morphology was constructed using computer vision techniques, and unsupervised machine learning was used to distinguish the observed melt pools. [21] used a random forest machine learning model to optimise process parameters in the AM of IN718, demonstrating the procedural steps between input features and output parameters within a general machine learning framework.

In SLM, the spatters produced by the interaction between the laser and the melt pool appear very similar (see Fig. 3). Compared with typical image recognition applications, such as face or vehicle registration plate recognition, spatter images are not very informative. However, subtle differences can still be observed in the spattering trajectories, spark sizes, and temporal dynamics, and these differences are readily interpretable by humans. As a first attempt in this area, we use conventional methods with feature extraction to construct feature vectors, considering their advantages of requiring less data, interpretability, and lower computational demand.

Popular feature descriptors for object detection and recognition include the histogram of oriented gradients (HOG) [11], local binary patterns (LBP) [2], the scale-invariant feature transform (SIFT), and speeded-up robust features (SURF) [22]. LBP features are usually found to be robust for face recognition [2]. HOG features represent occurrences of gradient orientation in localised portions of an image or region of interest (ROI) and are often useful for representing the contour of an object [11]. SIFT and SURF features are designed to be invariant to scale changes and are widely used in tracking and recognition of general textured objects. Usually, additional dimensionality reduction algorithms, such as principal component analysis (PCA) and non-negative matrix factorisation (NMF), are used to reduce the dimensionality and computational complexity of the raw features [22].

Automatic fire detection, with many applications in safety and surveillance, has attracted increasing attention in the computer vision community as an alternative to conventional technologies such as smoke detection, temperature monitoring, and ion sensing, which are impractical for large spaces and costly to install [36]. Given the similarities to our problem, we review some object detection techniques, focusing on fire detection and related areas. Various methods for automatic fire detection using computer vision have been investigated, of which detection using a pre-built colour model is the most common. An early work adopts an RGB (red, green, blue) model based chromatic and disorder measurement for extracting fire and smoke pixels [36], in which the intensity of the red component plays a key role in deciding fire pixels. A rule-based generic colour model for flame pixel classification [8] uses the YCbCr colour space, which separates luminance from chrominance more effectively than colour spaces such as RGB. Conventional image processing techniques, such as region segmentation, are also applied to fire area detection [30, 39]. Similarly, the YUV colour space is also used to detect flames [26].

In addition to basic colour-based segmentation, local primitive features are also used to discriminate fire from the background. The bag-of-features (BoF) method is employed in the YUV colour space [26]. Advanced feature descriptors have also been developed to eliminate the effect of shape variety in smoke detection [43, 44]. In [40], a CNN (convolutional neural network) is combined with an SVM for fire detection: a Haar feature detector and an AdaBoost cascade classifier, implemented with OpenCV [1], first extract regions of interest (ROIs), and the CNN with SVM is then deployed to filter the results and reduce false positives.

Temporal characteristics, extracted from sequential video frames, are incorporated in fire detection as well. Liu and Ahuja [9] introduced a method fusing spectral (colour probability density), spatial (spatial structure of fire pixels), and temporal (autoregressive model of temporal changes) models of fire regions in image sequences. In [48], a specific flame pattern is defined for forest fires, with three types of fire colour labelled. An SVM-based classifier is deployed with a feature space of 11 static attributes, including colour distribution, texture parameters, and shape roundness, as well as 27 additional features computed with Fourier analysis to represent the temporal variations of colour, texture, roundness, area, and contour. Similarly, flame colour, motion dispersion, and similarity in consecutive frames have also been studied for fire detection [12, 38].

3 Proposed method for spattering classification

This work is inspired by the varying visual patterns of spatters under different process parameters, such as laser power, hatch spacing, and exposure time. The focus of this work is the effect of laser energy density on spattering characteristics. Figure 3 shows five typical images of spatters at a laser power of 200 W with laser exposure times of 45 μs, 55 μs, 80 μs, 110 μs, and 190 μs, respectively.

Despite a considerable level of similarity, subtle differences can be observed that identify the characteristics, mainly in the spatter sizes and their flight trajectories. It should also be noted that these images are merely selected static snapshots of the scene. The scene is very dynamic, and the patterns for the same control parameter vary greatly. Figure 4 illustrates 9 consecutive frames, each captured with a laser exposure time of 190 μs.

One key principle in this work is to construct feature descriptors that rely mainly on the morphology or shape of spatters, such as their trajectory segment lengths and orientations, and on their spatial information, such as their locations relative to the melt pool. Such information needs to be structured into a robust and compact feature vector before classification algorithms are applied. We first introduce the feature descriptor for single frames, and then integrate temporal information by incorporating multiple consecutive frames.

3.1 Data processing pipeline

As mentioned, this work is motivated by the observation that spatter sizes, particle trajectory orientations, particle trajectory lengths, and spatter distributions in different regions of the images vary, mainly because of differences in laser power. It should also be noted that the camera used in this work is a consumer-grade camera rather than a high-speed camera, so it cannot capture the individual particles, only their trajectories. In effect, such trajectories can be understood as a form of optical flow, comprising rich information on particle dynamics. One advantage of this optical flow information is that it allows particle dynamics to be extracted directly from a single image. This is the motivation for extracting the orientations and lengths of the spark trajectories.

The data pipeline used in this work is illustrated in Fig. 5. The top of the figure shows the four main steps in processing the data:

- Data pre-processing: The first step is image acquisition and pre-processing. Once an image is captured, the sparks need to be identified and localised automatically. One important requirement of the features is invariance to spark location: the centre of the spark blob needs to be aligned across all images to eliminate biased false detections caused by differences in spatial location. The full image is then cropped to a sub-image to reduce the data processing burden and background noise.

- Feature construction: This step constructs feature descriptions that encode discriminative information about the objects of interest. A feature vector, acting as a numerical fingerprint, can be constructed from an image using the feature descriptor and then used to differentiate one image from another. As depicted in Fig. 5, the feature construction in our work comprises three main steps: line segment detection, image decomposition, and feature construction using information on spark trajectories in different regions of the image.

- Data training and parameter tuning: This work is a typical supervised classification problem. This step trains the classification model with labelled data and fine-tunes its parameters using grid search and cross-validation.

- Classification result display and analysis: The last step is to test the model with the data and evaluate its performance.

The following sub-sections explain the details of the above four steps.

3.2 Data pre-processing

One requirement of a robust feature descriptor for this application is location invariance. Since the laser beam does not stay at a fixed location during the melting process, the laser heated zones (LHZ) in all images must be aligned, and a region of interest around the LHZ is cropped out of the whole image. Our work extracts the spatial information of spatters relative to the location of the LHZ, rather than in the global image coordinate frame. Estimating the LHZ in each frame allows the images to be aligned automatically with reference to the LHZs.

The interaction point between the laser beam and the sample surface always appears as the largest and brightest blob. However, this area is highly saturated, and the exact location of the laser beam is not visible to the camera. In this work, we assume that the centroid of the blob is the most likely location of the current LHZ.

To estimate the centroid of the brightest blob, several morphological image processing steps are carried out, namely binarisation, erosion, and distance transform. First, the image is binarised using Otsu's thresholding method, segmenting the bright sparks from the background as local regions or contours. Erosion of the binary image shrinks the local regions and also disconnects connected regions, e.g. the central blob and its attached spark trajectories. Finally, the distance transform is used to locate the centroid of the central blob. The distance transform produces a grey-scale image in which the intensity of each pixel inside the foreground region is its distance to the closest boundary. The working principle is similar to erosion, except that pixel values are updated by counting the iterations while the foreground regions shrink. The centroid is the last pixel to be removed and therefore has the largest iteration count, so it can simply be located as the pixel of highest intensity in the distance-transformed image. If multiple maxima are detected, we take their mean location. For images of SLM sparks, this method has been found to be very reliable. Figure 6 shows an example result of the above process, where the first panel is the cropped image around the detected centroid and the fourth panel shows the distance transform output with the centroid highlighted.
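The centroid-localisation steps above can be sketched with NumPy alone. This is a minimal sketch under stated assumptions, not the implementation used in this work: the function names (`otsu_threshold`, `erode`, `erosion_distance`, `locate_lhz`) and the number of erosion iterations are hypothetical, and the distance transform is computed as the text describes it, by counting how many 3 × 3 erosions each pixel survives. That yields a chessboard-metric distance rather than the Euclidean distance a library routine would typically return.

```python
import numpy as np


def otsu_threshold(img):
    """Otsu threshold t of an 8-bit greyscale image; foreground is img > t."""
    hist = np.bincount(img.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                   # class-0 probability for each t
    mu = np.cumsum(prob * np.arange(256))     # class-0 cumulative mean
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu[-1] * omega - mu) ** 2 / (omega * (1.0 - omega))
    # thresholds that leave one class empty give nan/inf; treat them as 0
    return int(np.argmax(np.nan_to_num(sigma_b, nan=0.0, posinf=0.0, neginf=0.0)))


def erode(mask):
    """One binary erosion with a 3x3 square structuring element."""
    h, w = mask.shape
    padded = np.pad(mask, 1, constant_values=False)  # outside the image = background
    out = np.ones((h, w), dtype=bool)
    for dy in range(3):
        for dx in range(3):
            out &= padded[dy:dy + h, dx:dx + w]
    return out


def erosion_distance(mask):
    """Distance transform by counting how many erosions each pixel survives.

    Assumption: with a 3x3 element this is a chessboard-metric distance to
    the background (boundary pixels get 1), not a Euclidean one.
    """
    dist = np.zeros(mask.shape, dtype=int)
    current = mask.copy()
    while current.any():
        dist += current
        current = erode(current)
    return dist


def locate_lhz(img, erosion_iters=2):
    """Estimate the LHZ centre (row, col) and the peak distance value.

    erosion_iters is a hypothetical tuning value, not taken from the paper.
    """
    mask = img > otsu_threshold(img)          # binarisation
    for _ in range(erosion_iters):            # detach thin spatter trails
        mask = erode(mask)
    dist = erosion_distance(mask)
    peak = dist.max()
    rows, cols = np.nonzero(dist == peak)     # ties: average all maxima
    return (rows.mean(), cols.mean()), int(peak)
```

For an 8-bit greyscale frame `img`, `locate_lhz(img)` returns the (row, column) centre used for alignment and cropping, together with the peak distance value, which serves as a rough proxy for the radius of the eroded central blob.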

3.3 Feature extraction and description

It can be seen that both spatial and temporal information, such as the locations of splashed particles and the motions of particle trajectories, serve as distinguishable visual cues for human operators to classify sparks from different settings (Fig. 3). The proposed feature descriptor encodes these visual cues: the spatial distribution of sparks, the motion of particle splashes (lengths and orientations), and the size of the main central spark.

To extract particle splashing motion features, the morphology of spattering trajectories in the image pixel space needs to be transformed into representations using elementary vector-graphics shapes, such as lines or curves. We extract line segments directly, as most spattering curves in such images appear relatively straight, and a curved line can be approximated by a series of line segments. Given the simplicity of the line representation, the feature descriptor in this work is constructed from the attributes of these local line segments. Figure 7 illustrates the feature composition and the process of feature construction.

First, the image is decomposed into local regions in a polar coordinate frame, with the centroid detected above as the origin, and the spatter distribution in each polar region is studied. Polar regions are used, rather than the grid regions used by methods such as HOG, because of the nature of spark distributions. Sparks move in all directions and start to fall or fade after splashing for a while. Spatters become sparser in regions further away, and this information plays an important role in discriminating spattering characteristics. As the polar regions become proportionally wider at longer distances, the probability that a particle remains in the same or a neighbouring polar section will be higher and more consistent than with pixel-wise matching or grid regions. Figure 8 illustrates the local regions decomposed from an example spark image. With the centre [cx, cy] detected, the image is sliced into n × m sections, where n is the number of angular sections and m is the number of range sections. The angular and range resolutions also need to be specified.
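A minimal sketch of the polar decomposition, assuming equal-width angular sections over the full circle and a constant range resolution in pixels. The function name and the defaults (`n_angular=4`, `range_res=40.0`, `m_range=4`) are hypothetical choices for illustration, not values reported in this work. Points beyond the outermost ring are flagged with a range index of -1.

```python
import numpy as np


def polar_cell(x, y, cx, cy, n_angular=4, range_res=40.0, m_range=4):
    """Return (r, a) polar-cell indices of pixel coordinates (x, y).

    The centre (cx, cy) is the detected LHZ centroid. Angular sections have
    equal width 2*pi / n_angular; range rings have width range_res pixels.
    Points outside the m_range rings receive r = -1.
    """
    dx = np.asarray(x, dtype=float) - cx
    dy = np.asarray(y, dtype=float) - cy
    theta = np.mod(np.arctan2(dy, dx), 2 * np.pi)      # angle in [0, 2*pi)
    # guard against rounding up to exactly 2*pi
    a = np.minimum((theta // (2 * np.pi / n_angular)).astype(int), n_angular - 1)
    r = (np.hypot(dx, dy) // range_res).astype(int)
    r = np.where(r < m_range, r, -1)                    # outside the outer ring
    return r, a
```

Because image rows grow downwards, angles increase clockwise on screen; this only relabels the sections and does not change the descriptor.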

To estimate line segments from the curves, the non-parametric LSD (line segment detector) algorithm [15, 16] is deployed. LSD is a linear-time line segment detector designed to require no parameter tuning and to give subpixel-accurate results. Local regions, known as line support regions, are detected first, and these regions are candidates for line segments. The principal inertial axis of each line support region determines the region's direction. LSD controls its own number of false detections by combining gradient orientations with a validation procedure based on the Helmholtz principle. Regions are iteratively approximated as rectangles and accepted as line candidates when the termination condition is met. In practice, the algorithm still has 6 predefined internal parameters that may be application dependent. In our case, the lines are generally much brighter than the background, making them easy to detect, and LSD proved very effective at extracting line segments when tested on a large number of images. Each segment is further split at the intersection points between the line and the region boundaries. Figure 9 shows an example of extracted line segments.
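The splitting of each detected segment at cell boundaries can be approximated as below. LSD itself is not reproduced; the sketch assumes the segments are already available as start and end points in pixel coordinates. Rather than solving for exact ray and circle intersections, it samples points along the segment (a hypothetical `step` of 0.5 px) and cuts wherever the polar cell index changes, so each boundary crossing is located to within half a sampling step. The helper names are hypothetical.

```python
import numpy as np


def cell_of(px, py, cx, cy, n_angular, range_res):
    """Polar cell indices (r, a) of sample points relative to centre (cx, cy)."""
    theta = np.mod(np.arctan2(py - cy, px - cx), 2 * np.pi)
    a = np.minimum((theta // (2 * np.pi / n_angular)).astype(int), n_angular - 1)
    r = (np.hypot(px - cx, py - cy) // range_res).astype(int)
    return r, a


def split_segment(p0, p1, cx, cy, n_angular=4, range_res=40.0, step=0.5):
    """Split segment p0 -> p1 into pieces that each lie in one polar cell.

    Returns a list of (start, end, (r, a)) tuples. Crossings are found by
    sampling (an approximation), so each cut is within step / 2 pixels.
    """
    p0, p1 = np.asarray(p0, dtype=float), np.asarray(p1, dtype=float)
    n = max(int(np.ceil(np.hypot(*(p1 - p0)) / step)), 1)
    pts = p0 + np.linspace(0.0, 1.0, n + 1)[:, None] * (p1 - p0)
    r, a = cell_of(pts[:, 0], pts[:, 1], cx, cy, n_angular, range_res)

    pieces = []
    seg_start, cell = pts[0], (int(r[0]), int(a[0]))
    for k in range(1, n + 1):
        this_cell = (int(r[k]), int(a[k]))
        if this_cell != cell:
            cut = 0.5 * (pts[k - 1] + pts[k])  # midpoint of the straddling samples
            pieces.append((tuple(seg_start), tuple(cut), cell))
            seg_start, cell = cut, this_cell
    pieces.append((tuple(seg_start), tuple(pts[-1]), cell))
    return pieces
```

A segment that passes through a cell narrower than one sampling step could be missed; a smaller `step` trades computation for accuracy.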

Technically, it is also possible to merge collinear neighbouring lines [24]. However, we consider that parallel lines contain extra information about the width of each spattering trajectory, so merging is not worth the extra computation.

In a local cell, the following attributes are computed, namely

- Total length of line segments::

-

This is defined as:

$$ L_{r,a} = \sum\limits_{i = 1}^{N}{\sqrt{\left( x^{start}_{i,r,a} - x^{end}_{i,r,a}\right)^{2} + \left( y^{start}_{i,r,a} - y_{i,r,a}^{end}\right)^{2}}} $$(1)where N is the number of line segments in the corresponding cell with the angular index of a and range index r for cell decomposition. \(x^{start}_{i,r,a}\), \(x^{end}_{i,r,a}\), \(y^{start}_{i,r,a}\) and \(y^{end}_{i,r,a}\) represent the coordinates of the start and end points of the i th line segment in the cell.

- Principal inertia axis of line segments::

-

This is to estimate the principal orientation of line segments in each cell. We calculate the angle difference between the principal inertia axis of each line segment and the orientation of the cell relative to the centre first, and compute the weighted expectation of the above, formulated below:

$$\bar{\delta \theta} = \frac{1}{{\sum}_{i=1}^{N} w_{i}} \sum\limits_{i=1}^{N} w_{i} (\theta_{i} - \theta_{cell}) $$(2)where θcell represents the orientation of the cell relative to the centre, and θi represents the principal inertial axis orientation of the i th line segment in the cell. wi denotes the weight for the i th line segment, defined as the length of the line segment; in other words, a longer line segment will have larger impact on the result.

- Size of the central spark. This is obtained from the result of the distance transform, which gives a value approximately equal to the LHZ radius.

- Standard deviation of line lengths. This is the standard deviation of the lengths of the line segments in the cell, defined as:

$$ \sigma_{l} = \sqrt{\frac{{\sum}_{i=1}^{N} (l_{i} - \bar{l})^{2}}{N - 1}} \tag{3} $$

where \(l_i\) is the length of the i-th line segment and \(\bar{l}\) is the average length of the line segments in the cell.

- Standard deviation of line orientations. Similarly, this is the length-weighted standard deviation of the orientations of all line segments in the cell:

$$ \sigma_{o} = \sqrt{\frac{\sum\limits_{i=1}^{N} w_{i}(\delta\theta_{i} - \bar{\delta \theta})^{2}}{\sum\limits_{i=1}^{N} w_{i}}} \tag{4} $$

where \(\delta\theta_i = \theta_i - \theta_{cell}\) is the difference between the principal inertia axis of the i-th line segment and the orientation of the cell.
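A minimal sketch of Eqs. (1) to (4) for a single cell is given below. It assumes segments are stored as end-point pairs and that each segment's orientation is measured from its start point to its end point; the text does not say how angle wrap-around is handled, so none is applied here. The name `cell_attributes` is hypothetical, and the central spark size is left out because it comes from the distance transform rather than from the segments.

```python
import math


def cell_attributes(segments, theta_cell):
    """Per-cell statistics following Eqs. (1) to (4).

    segments:   list of ((x_start, y_start), (x_end, y_end)) in one cell
    theta_cell: orientation of the cell relative to the LHZ centre (radians)
    Returns (L, mean_dtheta, sigma_l, sigma_o).
    """
    if not segments:
        return 0.0, 0.0, 0.0, 0.0
    lengths = [math.hypot(xe - xs, ye - ys) for (xs, ys), (xe, ye) in segments]
    total = sum(lengths)                                    # Eq. (1)
    if total == 0.0:
        return 0.0, 0.0, 0.0, 0.0
    # For a straight segment the principal inertia axis is the segment itself;
    # the angle is taken from start to end with no wrap-around handling (assumption).
    dtheta = [math.atan2(ye - ys, xe - xs) - theta_cell
              for (xs, ys), (xe, ye) in segments]
    # Eq. (2): length-weighted mean angle difference (w_i = l_i).
    mean_dtheta = sum(w * d for w, d in zip(lengths, dtheta)) / total
    n = len(lengths)
    mean_l = total / n
    # Eq. (3): sample standard deviation of lengths (N - 1 in the denominator).
    sigma_l = (math.sqrt(sum((l - mean_l) ** 2 for l in lengths) / (n - 1))
               if n > 1 else 0.0)
    # Eq. (4): length-weighted standard deviation of the angle differences.
    sigma_o = math.sqrt(sum(w * (d - mean_dtheta) ** 2
                            for w, d in zip(lengths, dtheta)) / total)
    return total, mean_dtheta, sigma_l, sigma_o
```

For a cell containing a horizontal segment of length 3 and a vertical segment of length 1, with \(\theta_{cell} = 0\), this gives L = 4, a mean angle difference of π/8 (the longer, horizontal segment dominates) and \(\sigma_l = \sqrt{2}\).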

An elementary feature vector of an individual cell is thus formed as:

where r and a represent the indices of the local cell in range and angle respectively. For simplicity, the indices r and a are not included in some of the equations above. A final feature vector can be constructed by concatenating all local features, as below:

where m and n are the number of range and angular cells respectively. In the case of m = 4 and n = 4, the dimension of the feature vector will be 16 × 5 = 80.
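The cell decomposition and concatenation can be sketched as below. The helper names are hypothetical, and several details are assumptions not stated in the text: segments are allocated to cells by their midpoints (reasonable once they have been split at cell boundaries), \(\theta_{cell}\) is taken as the bisector angle of each cell, the five values per cell follow the order in which the attributes are listed above, and the angular extent `span` is a parameter because it depends on how the angular resolution is defined. A per-cell function implementing Eqs. (1) to (4) is passed in as `cell_attributes`.

```python
import math


def cell_index(px, py, centre, n_a, n_r, r_max, span=2 * math.pi):
    """(r, a) index of the polar cell containing (px, py), or None if outside.

    Angles use the usual math convention; with image coordinates (y pointing
    down) they run clockwise instead.
    """
    dx, dy = px - centre[0], py - centre[1]
    rho = math.hypot(dx, dy)
    phi = math.atan2(dy, dx) % (2 * math.pi)
    if rho >= r_max or phi >= span:
        return None
    r = min(int(rho / (r_max / n_r)), n_r - 1)   # guard against rounding up
    a = min(int(phi / (span / n_a)), n_a - 1)
    return r, a


def build_feature_vector(segments, centre, n_a, n_r, r_max, spark_size,
                         cell_attributes, span=2 * math.pi):
    """Concatenate 5 values per cell into one vector of length n_r * n_a * 5."""
    cells = {(r, a): [] for r in range(n_r) for a in range(n_a)}
    for (xs, ys), (xe, ye) in segments:
        # Allocate each (already split) segment to a cell by its midpoint.
        idx = cell_index((xs + xe) / 2, (ys + ye) / 2, centre, n_a, n_r, r_max, span)
        if idx is not None:
            cells[idx].append(((xs, ys), (xe, ye)))
    feature = []
    for r in range(n_r):
        for a in range(n_a):
            theta_cell = (a + 0.5) * span / n_a      # cell bisector (assumption)
            total, mean_dtheta, sigma_l, sigma_o = cell_attributes(cells[(r, a)], theta_cell)
            # Attribute order follows the list in the text (an assumption).
            feature.extend([total, mean_dtheta, spark_size, sigma_l, sigma_o])
    return feature
```

With m = n = 4 the vector has 4 × 4 × 5 = 80 entries, matching the dimension stated above; doubling either resolution doubles the length, which is one reason very fine decompositions become sparse.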

When temporal information from consecutive frames is considered, a new feature vector is constructed by stacking together all line segments extracted from the individual frames at the pre-processing stage. The feature construction step is therefore the same as above, except that it operates on a larger number of line segments. Statistically, pooling line segments from consecutive frames is expected to be more robust and tolerant to outliers.
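Pooling segments over consecutive frames can be sketched with a small generator. Whether the windows overlap is not stated in the text, so the stride is a parameter (`step=1` for sliding windows, `step=k` for disjoint ones); the name `pooled_windows` is hypothetical. Each pooled list would then be passed unchanged to the single-frame descriptor, which is why the feature dimension does not grow with the number of frames.

```python
def pooled_windows(frames, k, step=1):
    """Yield the line segments of k consecutive frames pooled into one list.

    frames: list of per-frame segment lists, in capture order.
    step=1 gives overlapping sliding windows; step=k gives disjoint windows.
    """
    if k < 1 or step < 1:
        raise ValueError("k and step must be positive")
    for start in range(0, len(frames) - k + 1, step):
        pooled = []
        for frame_segments in frames[start:start + k]:
            pooled.extend(frame_segments)
        yield pooled
```

If fewer than k frames are available, no window is produced, so a caller must wait until a full window has been acquired.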

3.4 Classification

There is a wide range of classification methods. Two popular methods are evaluated here, namely random forests and support vector machines (SVMs), and both can be used for multi-class classification and one-class classification.

SVM is generally known to be better suited to binary classification, and multi-class classification is achieved by performing multiple binary classifications. Given a set of training data in which each sample is labelled as one of two classes, denoted as \(\{-1, 1\}\), SVM builds a model that separates the two (or more) sets of data by constructing a hyperplane or a set of hyperplanes in a high-dimensional space. The hyperplanes divide the two classes with a wide channel: data that fall on one side of the channel belong to one class, and data on the other side belong to the other class. The hyperplane is chosen to maximise the margin, that is, the distance to the nearest samples of each class, which are known as support vectors. When the data are highly non-linear, SVMs are usually combined with kernel functions that transform the data from the original space into another space in which they are linearly separable; this is called the kernel trick. Typical kernels include the RBF (radial basis function) and polynomial kernels. To formulate the problem, the labelled data are represented by a set of pairs of data \(\mathbf{x}_i\) and their corresponding classes \(y_i \in \{-1, 1\}\). A hyperplane can be represented as:

$$ \mathbf{w} \cdot \mathbf{x} - b = 0 \tag{7} $$

where ⋅ denotes the dot product and w is the normal vector to the hyperplane. The margin that separates the two classes is given by 2/||w||, and b/||w|| determines the offset of the hyperplane from the origin along w. As stated above, the margin between the two classes needs to be maximised, which is equivalent to minimising ||w|| under the constraint expressed by Eq. 8 below:

$$ \min_{\mathbf{w}, b} \lVert \mathbf{w} \rVert \quad \text{subject to} \quad y_i (\mathbf{w} \cdot \mathbf{x}_i - b) \geq 1 \quad \forall i \tag{8} $$

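The margin arithmetic can be checked numerically. The sketch below assumes the standard hard-margin convention, with hyperplane w · x − b = 0, margin 2/||w|| and constraint y_i(w · x_i − b) ≥ 1; the helper name `margin_and_feasibility` and the two-point data set are illustrative only. Shrinking w widens the margin until the constraint is violated, which is exactly the trade-off the optimisation resolves.

```python
import math


def margin_and_feasibility(w, b, X, y):
    """Margin 2/||w|| of the hyperplane w.x - b = 0, and whether every sample
    satisfies the hard-margin constraint y_i * (w.x_i - b) >= 1."""
    norm_w = math.sqrt(sum(wj * wj for wj in w))
    margin = 2.0 / norm_w
    feasible = all(
        yi * (sum(wj * xj for wj, xj in zip(w, xi)) - b) >= 1.0
        for xi, yi in zip(X, y)
    )
    return margin, feasible


# Two samples, one per class, on either side of the line x1 + x2 = 0.
X = [(1.0, 1.0), (-1.0, -1.0)]
y = [1, -1]
for w in [(1.0, 1.0), (0.5, 0.5), (0.25, 0.25)]:
    print(w, margin_and_feasibility(w, 0.0, X, y))
```

Here w = (0.5, 0.5) meets both constraints with equality and gives a margin of 2√2, which is the distance between the two points, whereas w = (0.25, 0.25) would give a wider margin but violates the constraints.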
Unlike SVM, the random forests algorithm [7, 27] belongs to the family of ensemble methods, which combine the predictions of several base estimators to improve generalisability and robustness over a single estimator. Like SVM, random forests are fitted with a set of pairs of data, each comprising a data sample \(\mathbf{x}_i\) and its corresponding class label \(y_i\). The principle is to build several independent estimators and average their predictions. Combining multiple estimators built in isolation usually outperforms most individual estimators owing to the reduced variance. A random forest has almost the same hyperparameters as a decision tree or a bagging classifier, but considers only a random subset of features when splitting a node.
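To make the bootstrap and random-feature ideas concrete, here is a deliberately simplified random forest in pure Python whose trees are depth-one decision stumps; it is not the configuration used in this work. With a single split per tree, drawing the random feature subset once per tree is the same as drawing it at each split. Predictions are combined by majority vote, whereas scikit-learn's `RandomForestClassifier` grows full trees by default and averages the trees' class probabilities. All function names are hypothetical.

```python
import random
from collections import Counter


def fit_stump(X, y, features):
    """Depth-one tree: best (feature, threshold, left_label, right_label)."""
    majority = Counter(y).most_common(1)[0][0]
    # Fallback: a constant stump that always predicts the majority class.
    best = (sum(v != majority for v in y), features[0], float("inf"), majority, majority)
    for f in features:
        values = sorted({x[f] for x in X})
        for thr in ((lo + hi) / 2 for lo, hi in zip(values, values[1:])):  # midpoints
            left = [yi for xi, yi in zip(X, y) if xi[f] <= thr]
            right = [yi for xi, yi in zip(X, y) if xi[f] > thr]
            l_lab = Counter(left).most_common(1)[0][0]
            r_lab = Counter(right).most_common(1)[0][0]
            err = sum(v != l_lab for v in left) + sum(v != r_lab for v in right)
            if err < best[0]:
                best = (err, f, thr, l_lab, r_lab)
    return best[1:]


def fit_forest(X, y, n_trees=25, max_features=1, seed=0):
    rng = random.Random(seed)
    n = len(X)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]            # bootstrap sample
        feats = rng.sample(range(len(X[0])), max_features)    # random feature subset
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feats))
    return forest


def predict(forest, x):
    """Majority vote over all stumps."""
    votes = Counter(l_lab if x[f] <= thr else r_lab for f, thr, l_lab, r_lab in forest)
    return votes.most_common(1)[0][0]
```

Each stump sees a different bootstrap sample and feature, so individual stumps differ, but their vote is stable; this variance reduction is the effect described in the paragraph above.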

4 Experiments

4.1 Experiment preparation and data acquisition

The images were captured using a standard consumer-grade optical camera. Due to practical constraints, such as the limited space inside the chamber of the SLM machine, the camera was located outside the machine and captured video frames through a transparent window. Figure 10 illustrates the hardware setup, which comprises a camera mounted on a tripod outside the SLM machine, monitoring the SLM process through the window.

The SLM machine used in this work is the Renishaw AM250 metal additive manufacturing system (Fig. 10). The window is transparent enough for the camera to resolve the details of the spatters. The window and camera were covered to block ambient light. Over 7000 images were collected for each class (i.e. each laser energy setting), giving about 35,000 images in total for the five classes. For efficiency, about 1000 images per class were chosen for training and another 1000 images per class, captured at different times, were used for testing.

The camera model used in this work is the Panasonic Lumix DMC-LX100. The camera was operated with a fixed set of parameters, particularly the exposure time and aperture, to avoid ambiguities in the lengths and brightness of spatter trajectories caused by automatic exposure. The exposure time must be chosen so that spatter trajectories appear as line segments: a long exposure produces trajectories with long tails, while a short exposure produces less line-like shapes. In the former case, very long lines tend to overlap and intersect with each other, which is not ideal for extracting line segments; in the latter case, the features are not line-like enough. Based on our observations, exposure times between 1/500 s and 1/125 s were found to be most suitable. The aperture is set to F/4 to ensure good brightness. This range spans two stops, and the camera offers only three settings within it (1/500 s, 1/250 s and 1/125 s). Figure 11 illustrates how the spatter patterns differ between exposure times of 1/125 s and 1/500 s: the trajectory lengths clearly differ, with the longer exposure time of 1/125 s producing longer spatter tails. Using the same exposure parameters throughout ensures that the visual patterns are not affected by the camera settings. In principle, there should be no significant performance difference between 1/500 s and 1/125 s, as long as the trajectories can be extracted as line segments and transformed into the proposed feature space. In this work, we empirically chose 1/125 s as the exposure time; detailed camera settings are listed in Table 1.
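The exposure trade-off can be made concrete with a back-of-the-envelope calculation, assuming a spatter particle moves at a roughly constant speed in the image plane during the exposure, so that its streak length scales linearly with exposure time. The speed below is a hypothetical value, not a measurement from this work, and `streak_length_px` is a hypothetical helper.

```python
import math
from fractions import Fraction


def streak_length_px(image_speed_px_per_s, exposure_s):
    """Approximate streak length of a particle moving at constant image speed."""
    return image_speed_px_per_s * exposure_s


short_exp, long_exp = Fraction(1, 500), Fraction(1, 125)
speed = 5000  # hypothetical image-plane speed of a spatter particle, px/s
print(streak_length_px(speed, short_exp), streak_length_px(speed, long_exp))
# Each stop doubles the exposure, so the number of stops is log2 of the ratio.
print("stops between settings:", math.log2(float(long_exp / short_exp)))
```

Whatever the particle speed, moving from 1/500 s to 1/125 s quadruples the streak length, which is the two-stop difference between the shortest and longest usable settings.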

In this work, a set of 316L stainless steel cubic samples (10 × 10 × 10 mm³) was fabricated under 15 different laser energy density conditions, ranging from 26 J/mm³ to 166.7 J/mm³ (Fig. 10). The laser energy density may be expressed as \(e = \frac {P}{h \cdot v \cdot t}\), where P and h denote the laser power and hatch spacing, respectively, and v and t represent the scanning speed and powder layer thickness, respectively. The relative density of the fabricated samples was measured using Archimedes’ principle to investigate the correlation between part quality and laser energy density, which is closely related to the spatter characteristics. The microstructure of the fabricated parts was also examined for each case to link the spatter characteristics with part quality.
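As a worked example of the energy density formula, the following sketch evaluates e = P/(h·v·t) with P in W, h and t in mm and v in mm/s, giving J/mm³. The parameter values are hypothetical and are not taken from Table 7; only the 0.05 mm layer thickness corresponds to the 50 μm layers used in this study. The function name is hypothetical.

```python
def energy_density(power_w, hatch_mm, speed_mm_per_s, layer_mm):
    """Volumetric laser energy density e = P / (h * v * t) in J/mm^3.

    Units: W / (mm * mm/s * mm) = (J/s) / (mm^3/s) = J/mm^3.
    """
    return power_w / (hatch_mm * speed_mm_per_s * layer_mm)


# Hypothetical settings (not from Table 7); 0.05 mm is the 50 um layer thickness.
print(energy_density(200.0, 0.1, 800.0, 0.05))
```

With these values, e = 200 / (0.1 × 800 × 0.05) = 50 J/mm³; halving the scanning speed would double e, which is how varying scanning speed and hatch spacing sweeps the energy density range.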

There are 3 × 5 = 15 samples, as shown in Fig. 10. For visual classification, five representative laser energy densities from row 1 were chosen; more details are given in Section 4.3.3. The other samples were not considered here because they were placed closer to the camera, so they appeared larger and were out of the camera's focus.

4.2 Data pre-processing and feature construction

The first step is to locate the spatters in order to compute spatial features relative to the centroid of the spatters, the LHZ. As mentioned, we use the distance transform to compute the LHZ in each frame. It should be noted that, since the LHZ moves, the viewing perspective from the camera to the spatters is not constant. In this study, we simplify the configuration at this stage by limiting the melting area of each sample to 1 cm². The effect of inconsistent perspectives between the camera and the spatters is considered negligible owing to the small area of the part.
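The idea of locating the LHZ with a distance transform can be illustrated on a toy binary mask: thin spatter streaks are only a pixel or two wide, so the largest distance-to-background value falls inside the bright central blob, and that value approximates its radius. The brute-force sketch below is for illustration only; the thresholding that would produce the mask is assumed, `lhz_from_mask` is a hypothetical name, and an OpenCV pipeline would more likely call `cv2.distanceTransform` (for example with `cv2.DIST_L2`) and take the location of the maximum.

```python
import math


def lhz_from_mask(mask):
    """Brute-force Euclidean distance transform: return the foreground pixel
    farthest from any background pixel, and that distance.

    mask: 2D list of booleans (True = bright pixel). O((h*w)^2), illustration only.
    """
    h, w = len(mask), len(mask[0])
    background = [(r, c) for r in range(h) for c in range(w) if not mask[r][c]]
    if not background:
        raise ValueError("mask has no background pixels")
    best_pixel, best_dist = None, 0.0
    for r in range(h):
        for c in range(w):
            if mask[r][c]:
                d = min(math.hypot(r - br, c - bc) for br, bc in background)
                if d > best_dist:
                    best_pixel, best_dist = (r, c), d
    return best_pixel, best_dist


# Toy frame: a bright disc (the LHZ) of radius 3 plus a thin spatter streak.
mask = [[(r - 7) ** 2 + (c - 7) ** 2 <= 9 or (r == 2 and c >= 8) for c in range(15)]
        for r in range(15)]
print(lhz_from_mask(mask))
```

For the toy mask, the farthest pixel is the disc centre (7, 7) at a distance of √10 ≈ 3.16, close to the disc radius of 3, while the one-pixel-wide streak only reaches a distance of 1.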

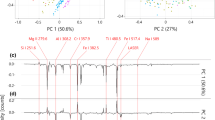

Next, the feature vectors are constructed. Each image is first cropped to a region of interest of 1052 × 640 pixels, which is then decomposed into sub-regions, or cells, in the polar coordinate frame; the line segments extracted from the images are allocated to the corresponding local cells, as shown in Figs. 12 and 13 for two example laser energy densities. Some randomly selected feature vectors are plotted in Figs. 14 and 15 for two cell decomposition settings and different laser energy densities. Higher energy densities are clearly more likely to produce higher peaks across the feature vectors. The feature values are also more spread out with na = 8 and nr = 8 than with na = 4 and nr = 4, reflecting the higher spatial resolution. It should be noted that the features shown here are randomly selected, and they clearly show the high complexity and dynamics of the spatters’ visual characteristics.

The values vary widely across dimensions because the feature vectors are not normalised for visualisation here; the trajectory segment lengths are naturally much larger than the orientations, which range from 0 to 2π. High energy density features tend to contain more spikes, especially in the cells farther from the centre. This is precisely the behaviour that motivated extracting spatial information of spatters relative to the LHZ: our rationale is that longer trajectory segments in these far cells are good indicators of higher laser power. Apart from this obvious discriminative characteristic, differences in other regions are also observable. Orientations of spatter trajectories are less distinctive than segment lengths. Statistically, however, they also appear to be distinctive in different regions, as higher laser power tends to produce more upright particle splash trajectories, while lower laser power produces spatter splashes that tend to fall off more quickly. When projected onto the 2D images, these phenomena produce differences in trajectory orientation.

4.3 Classification results and analysis

To evaluate the performance of the proposed method, we need to study the effects of different parameters and their combinations, including the number of cells for decomposition, number of consecutive frames for temporal feature construction, and classification algorithms.

We first study the effect of the parameters used for cell decomposition, and then compare the proposed descriptor with other popular feature construction methods. Next, we discuss the performance improvement gained by integrating temporal information into the features. We perform a thorough evaluation using two classification algorithms, SVM and random forests, with the SVM tested using both a linear kernel and an RBF (radial basis function) kernel for non-linear classification.

4.3.1 Number of cells

There are two parameters used to decompose the image into sub-regions, namely number of angular sections na and number of range sections nr, where na = π/α, nr = r/γ, r is the maximum range from the centre, and α and γ represent the angular resolution and range resolution for the cells. Several different combinations of the parameters are tested, as shown in Table 2. For demonstration purpose, the features with na = 4, nr = 4 and na = 8, nr = 8 are displayed in Figs. 14 and 15 respectively.

In summary, the proposed method achieves an average accuracy of about 86% in classifying the five laser power settings. The optimal configuration for cell decomposition is the 36 × 8 setting with the random forest algorithm; at higher resolutions, performance starts to decline or stays at this level. This is possibly due to the nature of the features, which are generally very sparse because a single continuous streak is treated as one unit. Increasing the resolution of the cell decomposition produces spatially very sparse features that may not be representative enough for classification, especially when data are limited.

4.3.2 Temporal features

To further enhance performance, temporal information is also encoded in the features. A sequence of consecutive frames is combined into a single data set for constructing the feature vector. The feature descriptor is kept unchanged, but all line segments from the sequence of images are stacked together after line segmentation at the pre-processing stage; the only difference is that line segments from multiple images are used rather than from a single frame. Notably, the new feature vector introduces no additional dimensions, preserving the compactness of the single-frame descriptor.

Here, we test the effect of the number of frames used to construct temporal features. Tables 3, 4, 5 and 6 show the results of using 4, 7, 10 and 13 frames, respectively, for classification with the four cell decomposition settings used in Section 4.3.1. Classification accuracy improves considerably. For example, for the lowest cell decomposition resolution (na = 4, nr = 4), the accuracy increases consistently from 76.8% with a single frame to a plateau of 93.6% with 13 frames. For the higher resolution of (na = 36, nr = 8), the accuracy rises from 85.9% to about 96.7% with 13 frames, and even with only four frames it improves considerably to more than 94%. Cell decomposition with (na = 8, nr = 8) performs similarly to (na = 4, nr = 4), with no significant improvement. Similarly, (na = 36, nr = 20) shows no obvious advantage over (na = 36, nr = 8), and even performs worse with SVM (RBF).

In the above experiments, the classifier parameters were chosen by grid search, and 10-fold cross-validation was used to avoid overfitting the trained model. In addition, because multiple experiments were performed, the test data and training data were taken from different sets of data, rather than from a random shuffle of the same data set as is typical, to ensure a fair and realistic performance evaluation.

The processing time depends on several factors, including the number of frames, the dimension of the feature vectors and the number of lines. This work was carried out on a workstation with an Intel Core i7-6700 3.40 GHz CPU. Feature extraction for a single image takes from about 180 ms (na = 4, nr = 4) to about 210 ms (na = 36, nr = 20), with slight fluctuations due to the varying number of lines in each image. For temporal features, we use Python’s parallel processing to process multiple images simultaneously (four images in this work). On average, processing multiple images takes from about 200 ms for 4 frames to about 1000 ms for 13 frames. The feature dimension has a negligible effect on classification, which can mostly be completed in about 1.5 ms. For example, using four frames with na = 36, nr = 8 takes about 201.5 ms for data processing plus about 80 ms to acquire the 4 frames at 50 fps. Similarly, using 13 frames with na = 4, nr = 4 requires about 1001.5 ms for data processing plus about 260 ms to acquire the 13 frames. Although it is not possible to process every single image in real time at high frame rates, we consider the proposed method efficient enough for analysing and characterising spattering behaviours in real-time applications, as not all frames are usually required. Moreover, the algorithm was developed in Python with the OpenCV and Scikit-Learn libraries and has only been run offline; the implementation could be further accelerated in C/C++ for real-time processing in our future research.
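The latency figures above follow from simple addition; the sketch below reproduces them using only the values stated in the text (about 1.5 ms for classification and image acquisition at 50 fps). The function name `window_latency_ms` is hypothetical.

```python
def window_latency_ms(n_frames, processing_ms, classify_ms=1.5, fps=50):
    """Return (processing + classification, acquisition) times in ms for one window."""
    compute_ms = processing_ms + classify_ms
    acquisition_ms = n_frames * 1000.0 / fps   # one frame every 1000/fps ms
    return compute_ms, acquisition_ms


print(window_latency_ms(4, 200.0))    # four frames, na = 36, nr = 8 -> (201.5, 80.0)
print(window_latency_ms(13, 1000.0))  # 13 frames, na = 4, nr = 4  -> (1001.5, 260.0)
```

Acquisition time grows linearly with the window length, so the 13-frame setting costs over a second per decision, whereas the four-frame setting stays under 300 ms while still exceeding 94% accuracy.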

4.3.3 Part post-processing and discussions

Figure 16a shows the measured relative density with respect to laser energy density; the spatters of five typical cases (named cases 1 to 5) were analysed using the proposed machine learning algorithm. Specifically, the five typical cases cover the range of laser energy densities employed in this study: case 1 = 33 J/mm³, case 2 = 40.8 J/mm³, case 3 = 55.6 J/mm³, case 4 = 72.7 J/mm³ and case 5 = 166.7 J/mm³.

The laser energy density was determined using the formula \(e = \frac {P}{h \cdot v \cdot t}\), as defined in Section 4.1. The SLM process parameters used in this study are shown in Table 7, with the corresponding densities for different settings. The thickness of each powder layer is 50 μm.

These five laser energy density values were selected because they correspond to actual process conditions. More specifically, an energy density of 72.7 J/mm³ corresponds to the normal melt condition; 33 J/mm³, 40.8 J/mm³ and 55.6 J/mm³ represent an insufficient melt condition, even though they cover a wide range of energy densities; and 166.7 J/mm³ reflects an over-melt condition, also known as the keyhole melting mode.
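The map table linking spatter classes to process conditions, proposed as a route to online anomaly detection, could be as simple as a lookup from the classifier's predicted case to its energy density and melt condition. The sketch below uses the five cases listed above; treating case 4 (normal melt) as the desired class, and the names `CASE_MAP` and `check_prediction`, are illustrative assumptions.

```python
# Cases from this section: case -> (energy density in J/mm^3, melt condition).
CASE_MAP = {
    1: (33.0, "insufficient melt"),
    2: (40.8, "insufficient melt"),
    3: (55.6, "insufficient melt"),
    4: (72.7, "normal melt"),
    5: (166.7, "over-melt (keyhole)"),
}
TARGET_CASE = 4  # assumption: the normal-melt case is the desired spatter class


def check_prediction(predicted_case):
    """Map a classifier output to (is_anomaly, energy density, melt condition)."""
    energy, condition = CASE_MAP[predicted_case]
    return predicted_case != TARGET_CASE, energy, condition
```

A monitoring loop would call `check_prediction` on each classified window and raise an alert, or in a closed-loop system adjust the process parameters, whenever the first element is `True`.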

The relative density increased with laser energy density up to an optimum of about 96 J/mm³, where a relative density of 99.1% was obtained; beyond that, the relative density decreased as the energy density increased further. Figure 16b–d show the microstructure of the samples from cases 1, 4 and 5, respectively. At a fairly low energy density (33 J/mm³, case 1), irregular pores formed due to partial melting of the stainless steel powder. This is consistent with the captured spatters shown in Fig. 17a, where very few spatters are produced compared with higher energy densities. When the energy density was increased to 72.7 J/mm³ (case 4), a large number of regular hemispherical molten pools formed. At 166.7 J/mm³, the molten pools were deeper and were associated with a few spherical pores. This is because the much higher energy input caused evaporation of the molten material, and the resulting recoil pressure generated pores about 50 μm in size. This keyhole melting led to porosity and reduced the relative density of the fabricated parts, which can be linked to the large spatters clearly visible in Fig. 17b for the 166.7 J/mm³ case.

In this study, the particle size distribution, layer thickness and scanning strategy parameters were fixed, while the effects of scanning speed and hatch spacing were studied. These two variables were therefore combined into a single quantity, the laser energy density. The five cases used in this work represent five different conditions with different combinations of process parameters. Further study is needed to examine the effects of scanning strategy on the spatter trajectories and to re-examine the spattering patterns.

5 Conclusion

Characterisation and monitoring of spattering behaviours are valuable for understanding the physical manufacturing process. This paper introduces a method for automatic classification of spattering characteristics using a consumer-grade camera. The study shows that machine vision algorithms can effectively distinguish the spattering characteristics of laser melting processes under different manufacturing conditions. This work introduces a new compact and robust feature descriptor designed for spatter behaviours, which combines information on spatter trajectory morphology, spatial distributions in a polar coordinate frame and temporal features. Spatters are extracted as line segments represented in vector format. Statistics of the line segments in various sub-regions, known as cells in the polar image decomposition, are highly distinguishable in this context and are used to construct the feature descriptor. Various parameters associated with the feature descriptor are also studied.

Classification was performed using both SVM and random forests. The results are highly promising, distinguishing spattering behaviours with an accuracy of about 97%. In addition, the quality of the manufactured parts was studied, demonstrating that part quality is closely related to the laser energy density, which in turn produces distinguishable spattering patterns. This shows the feasibility of closed-loop SLM control by classifying spattering characteristics under different SLM control parameters that are mapped to part quality. Such a map table can then define the desired spatter features, providing a non-contact monitoring solution for online anomaly detection. This work will lead to further integration of a real-time vision monitoring system into an online closed-loop prognostic system for SLM. We believe that full integration will considerably improve the performance of current SLM technologies in terms of manufacturing quality, power consumption and fault detection.

References

(2014) The opencv reference manual. https://opencv.org/

Ahonen T, Hadid A, Pietikainen M (2006) Face description with local binary patterns: application to face recognition. IEEE Trans Patt Anal Mach Intel 28(12):2037–2041. https://doi.org/10.1109/TPAMI.2006.244. https://ieeexplore.ieee.org/document/1717463

Andani MT, Dehghani R, Karamooz-Ravari MR, Mirzaeifar R, Ni J (2017) Spatter formation in selective laser melting process using multi-laser technology. Mater Design 131:460–469. https://doi.org/10.1016/j.matdes.2017.06.040

Andani MT, Dehghani R, Karamooz-Ravari MR, Mirzaeifar R, Ni J (2018) A study on the effect of energy input on spatter particles creation during selective laser melting process. Additive Manuf 20:33–43. https://doi.org/10.1016/j.addma.2017.12.009. https://www.sciencedirect.com/science/article/pii/S2214860417304529?via%3Dihub

Barrett C, Carradero C, Harris E, McKnight J, Walker J, MacDonald E, Conner B (2018) Low cost, high speed stereovision for spatter tracking in laser powder bed fusion. In: 29th Annual International Solid Freeform Fabrication Symposium, Austin, TX. https://www.semanticscholar.org/paper/Low-Cost%2C-High-Speed-Stereovision-for-Spatter-in-Barrett-Carradero/0fe3d8115113bf228083bfdeaa3342ea3414d331

Berumen S, Bechmann F, Lindner S, Kruth JP, Craeghs T (2010) Quality control of laser- and powder bed-based additive manufacturing (AM) technologies. Phys Procedia 5:617–622. https://doi.org/10.1016/j.phpro.2010.08.089. http://linkinghub.elsevier.com/retrieve/pii/S1875389210005158

Breiman L (2001) Random forests. Mach Learn 45(1):5–32. https://doi.org/10.1023/A:1010933404324

Çelik T, Demirel H (2009) Fire detection in video sequences using a generic color model. Fire Safety J 44(2):147–158. https://doi.org/10.1016/j.firesaf.2008.05.005. http://linkinghub.elsevier.com/retrieve/pii/S0379711208000568

Liu C-B, Ahuja N (2004) Vision based fire detection. In: Proceedings of the 17th International Conference on Pattern Recognition, 2004. ICPR 2004. https://doi.org/10.1109/ICPR.2004.1333722. IEEE, pp 134–137

Chua ZY, Ahn IH, Moon SK (2017) Process monitoring and inspection systems in metal additive manufacturing: status and applications. Int J Precision Eng Manuf-Green Technol 4(2):235–245. https://doi.org/10.1007/s40684-017-0029-7

Dalal N, Triggs B (2005) Histograms of oriented gradients for human detection. In: 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), vol 1. IEEE, pp 886–893. http://ieeexplore.ieee.org/document/1467360/

Dogan R, Karsligil ME (2010) Fire detection using color and motion features in video sequences. In: 2010 IEEE 18th Signal Processing and Communications Applications Conference, pp 451–454. https://ieeexplore.ieee.org/document/5651727

Everton SK, Hirsch M, Stravroulakis P, Leach RK, Clare AT (2016) Review of in-situ process monitoring and in-situ metrology for metal additive manufacturing. Mater Design 95:431–445. https://doi.org/10.1016/j.matdes.2016.01.099. http://linkinghub.elsevier.com/retrieve/pii/S0264127516300995

Furumoto T, Ueda T, Alkahari MR, Hosokawa A (2013) Investigation of laser consolidation process for metal powder by two-color pyrometer and high-speed video camera. CIRP Ann 62(1):223–226. https://doi.org/10.1016/j.cirp.2013.03.032. http://linkinghub.elsevier.com/retrieve/pii/S0007850613000334

Grompone von Gioi R, Jakubowicz J, Morel JM, Randall G (2012) LSD: a line segment detector. Image Process Line 2:35–55. https://doi.org/10.5201/ipol.2012.gjmr-lsd. http://www.ipol.im/pub/art/2012/gjmr-lsd/?utmsource=doi

Grompone von Gioi R, Jakubowicz J, Morel J, Randall G (2010) Lsd: a fast line segment detector with a false detection control. IEEE Trans Patt Anal Mach Intel 32(4):722–732. https://doi.org/10.1109/TPAMI.2008.300. http://ieeexplore.ieee.org/document/4731268/

Guo N, Leu MC (2013) Additive manufacturing: technology, applications and research needs. Front Mech Eng 8(3):215–243. https://doi.org/10.1007/s11465-013-0248-8

Han Q, Mertens R, Montero-Sistiaga ML, Yang S, Setchi R, Vanmeensel K, Hooreweder BV, Evans SL, Fan H (2018) Laser powder bed fusion of hastelloy x: effects of hot isostatic pressing and the hot cracking mechanism. Mater Sci Eng A 732:228–239. https://doi.org/10.1016/j.msea.2018.07.008. http://www.sciencedirect.com/science/article/pii/S0921509318309249

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). https://ieeexplore.ieee.org/document/7780459, pp 770–778

Huang SH, Liu P, Mokasdar A, Hou L (2013) Additive manufacturing and its societal impact: a literature review. Int J Adv Manuf Technol 67(5):1191–1203. https://doi.org/10.1007/s00170-012-4558-5

Kappes B, Moorthy S, Drake D, Geerlings H, Stebner A (2018) Machine learning to optimize additive manufacturing parameters for laser powder bed fusion of inconel 718. In: Ott E, Liu X, Andersson J, Bi Z, Bockenstedt K, Dempster I, Groh J, Heck K, Jablonski P, Kaplan M, Nagahama D, Sudbrack C (eds) Proceedings of the 9th International Symposium on Superalloy 718 & Derivatives: Energy, Aerospace, and Industrial Applications. Springer International Publishing, Cham, pp 595–610. https://doi.org/10.1007/978-3-319-89480-5_39

Klette R (2014) Concise computer vision - an introduction into theory and algorithms. XVIII, 429. Springer, London. https://doi.org/10.1007/978-1-4471-6320-6

Krizhevsky A, Sutskever I, Hinton GE (2012) Imagenet classification with deep convolutional neural networks. In: Proceedings of the 25th International Conference on Neural Information Processing Systems - Volume 1, NIPS’12. Curran Associates Inc., USA, pp 1097–1105. http://dl.acm.org/citation.cfm?id=2999134.2999257

Kwon YP (2014) Line Segment-based Aerial Image Registration. Technical Report, Electrical Engineering and Computer Sciences University of California at Berkeley. http://www.eecs.berkeley.edu/Pubs/TechRpts/2014/EECS-2014-121.html

Leung CLA, Marussi S, Atwood R, Towrie M, Withers P, Lee P (2018) In situ x-ray imaging of defect and molten pool dynamics in laser additive manufacturing. Nat Commun 9:1355. https://doi.org/10.1038/s41467-018-03734-7. https://www.nature.com/articles/s41467-018-03734-7

Liu ZG, Zhang XY, Wu Y-Y (2015) A flame detection algorithm based on Bag-of-Features in the YUV color space. In: Proc Int Conf Intelligent Computing and Internet of Things, pp 64–67. https://doi.org/10.1109/ICAIOT.2015.7111539

Louppe G (2014) Understanding random forests, from theory to practice. Ph.D. thesis, Department of Electrical Engineering & Computer Science, Faculty of Applied Sciences University of liège. https://arxiv.org/pdf/1407.7502.pdf

Lu Y, Wang Y (2018) Monitoring temperature in additive manufacturing with physics-based compressive sensing. Journal of Manufacturing Systems. https://linkinghub.elsevier.com/retrieve/pii/S0278612518300724

Marcus G (2018) Deep learning: a critical appraisal. arXiv:1801.00631

Noda S, Ueda K (1994) Fire detection in tunnels using an image processing method. In: Proceedings of VNIS’94 - 1994 Vehicle Navigation and Information Systems Conference, pp 57–62. https://doi.org/10.1109/vnis.1994.396866

Repossini G, Laguzza V, Grasso M, Colosimo BM (2017) On the use of spatter signature for in-situ monitoring of laser powder bed fusion. Additive Manuf 16:35–48. https://doi.org/10.1016/j.addma.2017.05.004. http://www.sciencedirect.com/science/article/pii/S2214860416303402

Sames WJ, List FA, Pannala S, Dehoff RR, Babu SS (2016) The metallurgy and processing science of metal additive manufacturing. Int Mater Rev 61(5):315–360. https://doi.org/10.1080/09506608.2015.1116649

Scime L, Beuth J (2019) Using machine learning to identify in-situ melt pool signatures indicative of flaw formation in a laser powder bed fusion additive manufacturing process. Additive Manuf 25:151–165. https://doi.org/10.1016/j.addma.2018.11.010. http://www.sciencedirect.com/science/article/pii/S2214860418306869

Scipioni Bertoli U, Guss G, Wu S, Matthews MJ, Schoenung JM (2017) In-situ characterization of laser-powder interaction and cooling rates through high-speed imaging of powder bed fusion additive manufacturing. Materials & Design 135:385–396. https://doi.org/10.1016/j.matdes.2017.09.044. http://www.sciencedirect.com/science/article/pii/S0264127517308894

Tapia G, Elwany A (2014) A review on process monitoring and control in metal-based additive manufacturing. J Manuf Sci Eng-Trans Asme 136(6):060801–10. https://doi.org/10.1115/1.4028540. https://asmedigitalcollection.asme.org/manufacturingscience/article-abstract/136/6/060801/377521/A-Review-on-Process-Monitoring-and-Control-in?redirectedFrom=fulltext

Chen T-H, Wu P-H, Chiou Y-C (2004) An early fire-detection method based on image processing. In: 2004 International Conference on Image Processing, 2004. ICIP ’04. http://ieeexplore.ieee.org/document/1421401/, vol 3. IEEE, pp 1707–1710

Wang D, Wu S, Fu F, Mai S, Yang Y, Liu Y, Song C (2017) Mechanisms and characteristics of spatter generation in slm processing and its effect on the properties. Materials & Design 117:121–130. https://doi.org/10.1016/j.matdes.2016.12.060. http://www.sciencedirect.com/science/article/pii/S0264127516315866

Wang T, Shi L, Yuan P, Bu L, Hou X (2017) A new fire detection method based on flame color dispersion and similarity in consecutive frames. In: Proc Chinese Automation Congress (CAC), pp 151–156. https://ieeexplore.ieee.org/document/8242754

Wang W, Zhou H (2012) Fire detection based on flame color and area. In: Proc IEEE Int Conf Computer Science and Automation Engineering (CSAE). https://doi.org/10.1109/CSAE.2012.6272943, vol 3, pp 222–226

Wang Z, Wang Z, Zhang H, Guo X (2017) A novel fire detection approach based on CNN-SVM using TensorFlow. In: Huang DS, Hussain A, Han K, Gromiha MM (eds) Intelligent computing methodologies. Springer International Publishing, Cham, pp 682–693

Ye D, Fuh JYH, Zhang Y, Hong GS, Zhu K (2018) In situ monitoring of selective laser melting using plume and spatter signatures by deep belief networks. ISA Trans 81:96–104. https://doi.org/10.1016/j.isatra.2018.07.021. https://www.sciencedirect.com/science/article/abs/pii/S0019057818302763

Yin J, Wang D, Yang L, Wei H, Dong P, Ke L, Wang G, Zhu H, Zeng X (2020) Correlation between forming quality and spatter dynamics in laser powder bed fusion. Additive Manuf 31:100958. https://doi.org/10.1016/j.addma.2019.100958. http://www.sciencedirect.com/science/article/pii/S2214860419317415

Yuan F (2012) A double mapping framework for extraction of shape-invariant features based on multi-scale partitions with AdaBoost for video smoke detection. Pattern Recogn 45(12):4326–4336. https://doi.org/10.1016/j.patcog.2012.06.008. http://www.sciencedirect.com/science/article/pii/S0031320312002786

Yuan F, Fang Z, Wu S, Yang Y, Fang Y (2015) Real-time image smoke detection using staircase searching-based dual threshold AdaBoost and dynamic analysis. IET Image Process 9(10):849–856. https://doi.org/10.1049/iet-ipr.2014.1032

Zhang B, Goel A, Ghalsasi O, Anand S (2019) CAD-based design and pre-processing tools for additive manufacturing. Journal of Manufacturing Systems. http://www.sciencedirect.com/science/article/pii/S0278612519300160

Zhang B, Jaiswal P, Rai R, Guerrier P, Baggs G (2019) Convolutional neural network-based inspection of metal additive manufacturing parts. Rapid Prototyp J 25(3):530–540. https://doi.org/10.1108/RPJ-04-2018-0096

Zhao H, Niu W, Zhang B, Lei Y, Kodama M, Ishide T (2011) Modelling of keyhole dynamics and porosity formation considering the adaptive keyhole shape and three-phase coupling during deep-penetration laser welding. J Phys D: Appl Phys 44(48):485302. https://doi.org/10.1088/0022-3727/44/48/485302. https://iopscience.iop.org/article/10.1088/0022-3727/44/48/485302/meta

Zhao J, Zhang Z, Han S, Qu C, Yuan Z, Zhang D (2011) SVM-based forest fire detection using static and dynamic features. Comput Sci Inform Syst 8(3):821–841. https://doi.org/10.2298/CSIS101012030Z. http://www.doiserbia.nb.rs/Article.aspx?ID=1820-02141100030Z

Zhu Z, Anwer N, Huang Q, Mathieu L (2018) Machine learning in tolerancing for additive manufacturing. CIRP Ann 67(1):157–160. https://doi.org/10.1016/j.cirp.2018.04.119. http://www.sciencedirect.com/science/article/pii/S0007850618301434

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ji, Z., Han, Q. A novel image feature descriptor for SLM spattering pattern classification using a consumable camera. Int J Adv Manuf Technol 110, 2955–2976 (2020). https://doi.org/10.1007/s00170-020-05995-3

DOI: https://doi.org/10.1007/s00170-020-05995-3