Abstract

Due to major technological improvements over the last decades and a significant decrease in costs, electronic projection systems show great potential for providing innovative applications across industries. In most cases, projectors are used to display entire images onto contiguous plane surfaces, but with no active consideration of their three-dimensional environment. Since spatial interactive projections promise new possibilities to display information in the manufacturing industry, we developed a practical approach to how common projection systems can be integrated into a working space and interact with their environment. In order to display information in a spatially dependent manner, a projection model was introduced along with a calibration method for mapping. Subsequently, the approach was validated in the context of robot-based optical inspection systems where texture projections are applied onto sheet metal parts as reference features, exclusively to designated regions. The results show that accurate region-specific projections were possible within the calibrated projection volume. In addition, the accuracy and computing speed were investigated to identify limitations. Our approach for interactive projections supports the transfer to other application areas, enables us to rethink current manual and automated procedures and processes in which visualized information benefits the task of interest, and provides new functionalities for manufacturing industries.

1 Introduction

Over the last decade, rapid developments and improvements in optical components and technological devices have resulted in electronic projection systems which are of high quality and now available at affordable costs [1, 2]. Although projectors are widely known from home entertainment systems and used for presentation purposes [1,2,3], their broad use in industrial applications, for example, in manufacturing, is still negligible.

Projection systems display information in the form of images or a sequence of images without establishing physical contact to the surface they project onto. This property benefits many applications. Modern projectors, for example, already assist in assembly tasks or increase quality standards by highlighting detected defects or flaws during production. In particular, geometric quality assurance benefits from high-quality state-of-the-art electronic projection systems, because they are capable of providing non-contact reference features, such as projected texture patterns.

The potential of current projectors continues to increase if they are also capable of spatially interacting with their environment. Instead of projecting a full-sized image, the texture information is placed only onto designated elements within the working space. This means that certain areas, for example, plane, featureless regions of a workpiece, are selected in advance, for instance by processing the CAD of the workpiece. Afterward, the projector displays texture information exclusively onto these regions. This concept incorporates the environment (e.g., workpiece, fixtures, and elements of a wall) into the projection image, opening up new possibilities for utilizing projected information.

In this respect, the main challenge consists of linking physical objects in the working space to corresponding pixels of the projection device. Parts of this challenge have been investigated in some form or another, but mostly with complex models, complicated calibration procedures, and with much expert knowledge necessary, which makes those concepts hardly applicable for industrial tasks. Our goal was to come up with a practical integration approach for off-the-shelf projection systems.

To this end, we developed an easy-to-use concept for embedding conventional projectors into their physical environment of a robot-based inspection system. We introduce the integration approach comprising a projection model, a calibration method, and guidelines. The concept is validated by a use case from the automotive industry.

The approach was investigated in the context of geometric quality assurance applications and a robot-based optical inspection system. In this respect, the automated generation of region-specific texture projections promises great potential to provide a new solution in comparison to the traditional procedure of manually applying physical markers (fiducials) to sheet metal parts for subsequent photogrammetry methods.

Overall, projection systems which are capable of interacting with their environment provide a new tool for manufacturing industries.

2 Background and motivation

2.1 Robot-based optical inspection systems

Robot-based inspection systems with optical 3D sensors are often employed for geometric quality assurance applications in the automobile industry. In order to monitor manufacturing tolerances, samples are extracted from the production line. Based on photogrammetric approaches, a digital representation of the manufactured part is generated and compared to a virtual reference (computer-aided design (CAD) part). This process usually implies manually attaching physical reference markers to the inspected part, which is time-consuming. During detachment, parts can get damaged, or potentially reenter the production cycle without all markers removed.

Current trends in production are giving rise to the demand to measure “faster, safer, more accurately and more flexibly” [4,5,6] as well as to the vision of autonomous robot-based inspection systems [7, 8]. They demonstrate the need for new automation concepts in manufacturing metrology.

Within this scope, Ulrich et al. [9, 10] introduced a robot-based 3D image stitching approach based on data-driven registration and the use of texture projection patterns. The purpose of the projections was to avoid the application of physical markers. Projected features, provided by a full-sized image with a certain pattern of squares, were applied onto the entire surface of a part. In subsequent data processing steps, the overlap areas of the point clouds were enriched by artificial points and passed on for algorithmic 3D matching. This concept introduced projectors into robot-based inspection systems and showed high accuracy without the need for any physical markers.

2.2 Region-specific projections

Ulrich [10] showed that high registration accuracy was obtained, especially for plane, featureless surfaces. Our first observations suggest that for parts with a medium or high level of detail, for example, the inside of a side door of an automobile, projected reference features can still be advantageous. However, they exhibit heavy distortions and variations of contrast on curved surfaces, which decreases registration accuracy.

In order to comply with the required measurement uncertainty of approximately 0.1 mm in the automotive industry, the impact of the underlying surface needs to be taken into account. For this purpose, a new offline concept for robot-based optical inspection systems was introduced [11], proposing the application of region-specific texture projections.

In this context, we developed and implemented an approach to integrate an off-the-shelf projector into the environment of a robot. The goal was to project geometric primitives such as squares, circles, or triangles onto a workpiece as reference features (see Section 2.1), but exclusively onto designated regions with predefined characteristics.

In addition, our aim is a simple solution to the described goal, which can be easily transferred to a broad range of different applications. Consequently, it also promotes new ideas for employing all kinds of electronic projection systems for spatial interactive projections in production sites. Among others, possible applications are providing augmented reality (AR) features very accurately on designated 3D objects, future mobile robots with deployed high-performance pico or pocket projectors, or live defect detection and visualization on moving parts on an assembly line based on automatic 2D and 3D data segmentation.

3 State-of-the-art and related work

3.1 Electronic projection systems

Over the years, a wide variety of projection displays have emerged. Brennesholtz and Stupp [1] use the following categorization of projection displays: direct view, projection, or virtual. Direct view implies that the image is generated on the same surface as it is viewed. Projection indicates that the viewing surface of an image is spatially disconnected from its source, whereas “virtual” refers to an image formation exclusively on the retina of an eye. In this paper, we focus on devices or systems that provide comparatively large projections on a separate surface.

A broad range of projection displays are available these days, reaching from pico projectors with a resolution of 320 × 240 pixels at 5–25 lm to high-end projectors with 2048 × 1080 or more pixels and 30,000 lm [1]. The targeted systems in the scope of this paper are frequently addressed by the categories “consumer home theater” projectors, “business projectors,” or “visualization and simulation projectors” [1, 3]. These systems usually provide sufficient technical specifications regarding resolution, lumen output, and contrast to be employed with a high variability in terms of tasks and applications.

The abovementioned projectors are often utilized in home entertainment systems to display videos, in (conference) rooms for data visualization for business and professional purposes, or for educational and academic applications [1, 3]. The devices are generally installed off-centered [3], and thus a feature for keystone correction is usually provided. The end user adjusts each corner of the projection display once the first time it is started up. This is usually performed interactively, where the user manually changes the displayed field of view (FOV) via remote control. Accordingly, no knowledge is necessary with regard to the specifications of the optical setup, fundamentals of light propagation, and mathematical image rectification techniques.

3.2 Projector calibration

The keystone correction and incorporating spatial (Euclidean) information is usually achieved by determining the intrinsic and extrinsic parameters of an optical device in combination with perspective projection. Therefore, the following paragraph reviews established geometric calibration approaches rather than photometric calibration techniques.

The procedure for calibrating a projection system for planar surfaces is widely researched: Sukthankar et al. [12, 13] presented a vision-based approach to automatically correct perspective distortion for presentations by planar homography. Park and Park [14] presented an active calibration method where projected and printed pattern corners were employed. Raskar et al. [15] demonstrated a multi-projector display by means of two calibrated cameras. Fiala [16] uses self-identifying patterns for projector calibration to obtain a rectified image composed by multiple projectors. In structured light applications for 3D measurement systems, the accuracy of projector–camera calibration determines the measurement accuracy. In this context, more elaborate models were researched, some also address radial and tangential lens distortion [17,18,19]. A comprehensive overview and more advanced camera-based projection techniques, especially for geometric and radiometric calibration, are provided by Bimber [2].

In conclusion, projector calibration usually involves determining the intrinsic and extrinsic parameters of a projection system, often by means of an additional camera [20]. This usually leads to complex setups and requires considerable knowledge of camera and projector calibration models. Software implementation skills are also required, although there are toolboxes available, such as the camera calibration toolbox in MATLAB [21]. Hence, there is a lack of a practical, user-friendly, and ready-to-use approach to incorporate information about the environment into a projection image. We address this issue by modeling the propagation volume of a projected rectified image instead of modeling the optical device itself. Therefore, there is no need for an additional vision device.

3.3 Applications of spatial interactive projections

Geometric calibration of projection systems and a deeper consideration of Euclidean information was addressed by Raskar et al. [22]. They presented a fully calibrated projector–camera unit with an additional incorporated tilt sensor. Their work focuses on displaying projections onto complex surfaces by conformal projection, object–adaptive display by fiducials (context–aware projections), and clustering several projectors for image registration. Among others, the idea of region-specific lighting to support the coupled camera as an adaptive flash was mentioned as well as the application of object augmentation for user guidance. Beardsley et al. [23] presented a handheld projector–camera device to achieve distortion-free static images despite hand jitter. Their concept enables user interaction applications, and they introduced control elements for a direct user interface. They identified three main application areas for interactive projections: free choice of possible display screens for visualizing content; projected AR to provide additional information about physical objects; and for further data processing, an active selection of a region-of-interest in the environment achieved by user interaction. In the context of AR, pose awareness of projections was also mentioned, i.e., anticipating the projection distance to the physical object, but was not further elaborated on [23].

Spatial augmentation or projection/projective mapping, i.e., the procedure of deliberately placing projections onto arbitrary 3D objects, has also been realized in different industrial scopes: In 2001, Piper and Ishii [24] proposed a concept for projecting procedural instructions for assembly tasks based on rendered CAD parts. Rodriguez et al. [25] use projection mapping to assist workers during the assembly of business card holders. The adaptation to the environment is based on a predefined grid with particular control points and subsequent manual adjustment of the projection image. Doshi et al. [26] augmented metal parts with projected cues to improve the precision and accuracy of manual welding spots. In medical engineering, a handheld projection device in combination with a tracking system was developed to display critical structures, such as blood vessels, on a patient’s body [27]. Gavaghan et al. presented a similar, more general approach by employing a portable calibrated projection device in an existing navigation system [28], in combination with a calibrated projector and a virtual camera for displaying anatomical information during open liver surgery [29]. Ekim [30] and Reinhardt and Sieck [31] presented the application of projections on facades of entire buildings as a form of digital art and communication. Across industries, providing visual information by a projection device capable of spatially interacting with its environment gives rise to innovative ideas which can benefit manufacturing industries and human beings.

In conclusion, most approaches are realized to some degree by a camera–projector calibration to accurately infer Euclidean information. In these approaches, the segmentation process of the environment is often not covered or it is assumed to project onto a planar surface over the entire FOV. In some cases, the selection of 3D elements is reduced to an object detection task in correspondent 2D images. Therefore, we present a detailed approach where the selection of environmental elements is based on 3D data processing of a point-based representation. This approach provides the possibility of automation, which still is difficult to achieve with current concepts. In addition, this paper addresses the accuracy of the projection mapping, which is often neglected in related work.

The next section covers the theoretical basis of the proposed projection model and introduces the calibration method developed for adapting the model to a physical setup.

4 Integration approach

4.1 Projection model

In order to compute a projection image which incorporates Euclidean information about the working environment, a projection model is necessary. The model enables us to map points to correspondent projector pixels. As a result, a default projection image—consisting of initialized pixels—is successively enriched by activating individual pixels belonging to points of the designated regions of interest. These pixels are referred to in the following as “activated pixels” and store the spatial mapping information.

The projection model comprises geometric relationships as well as hardware-specific and working space-specific parameters for adjustment to the available setup. The calibration method for determining the setup-specific parameters is presented in Section 4.2. Points and position vectors are referred to by bold capitals. Vectors are represented by bold lowercase letters.

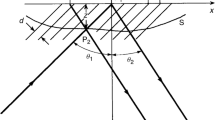

The model is based on the fundamentals of ray optics and perspective projections. It is considered as a one-point projection located in the projection center \(\boldsymbol{O}_{\text{Proj}}\) (see Fig. 1). Hence, the projector is regarded as an infinitesimally small pinhole analogous to a pinhole camera, since a projector acts as the exact opposite [14, 18]. Furthermore, the model introduced for the investigation is developed for an oblique projection setup, because in most industrial applications, the robot or worker is positioned right in front of the workpiece or workstation. The approach presented here can be transferred to the scenario of a frontal projection.

For our approach, the elements to project onto, for example, certain regions of a workpiece and/or particular faces of the surrounding, are required to be placed within the projection volume. In addition, they need to be digitally represented by points \(\boldsymbol {P}_{i} (x_{P_{i}}, y_{P_{i}}, z_{P_{i}})^{T}\) in reference to a global coordinate system (CS). It is assumed that the elements of interest are segmented in advance, either manually or by point cloud data processing techniques.

This paragraph briefly summarizes the mapping process. The projection model assigns each point \(\boldsymbol{P}_{i}\) to a projection plane \(\epsilon _{P_{i}}\) by its \(z_{P_{i}}\) value. A predefined straight line \(\boldsymbol{g}\) holds all image center points (principal points) \(\boldsymbol {C}_{P_{i}}\) of possible projection images \(\text {image}_{P_{i}}\) (see Fig. 1). By means of the center point \(\boldsymbol {C}_{P_{i}}\) and the theorem of intersecting lines, the dimensions of \(\text {image}_{P_{i}}\) (height and width) within \(\epsilon _{P_{i}}\) are calculated. In combination with the resolution of the projector applied to the \(\text {image}_{P_{i}}\) and the relative distances in x- and y-direction from \(\boldsymbol {C}_{P_{i}}\) to \(\boldsymbol{P}_{i}\), the link to a correspondent pixel is established. Finally, the point-pixel relation is stored. The routine described above is repeated for all available points. After that, a projection image is retrieved, indicating for every pixel whether or not it needs to be activated in order to interact with the segmented points. In the end, all activated pixels ensure the illumination of the designated regions. The individual steps of the mapping process are elaborated on in the subsequent paragraphs.

The calibration parameters (OProj, C1, C2, h2, w2) of image1 and image2 are acquired distortion-free (see Section 4.2). This means that all center points \(\boldsymbol {C}_{P_{i}}\) of possible displayed projection images run along a straight line within the projection volume. The line is described by the general linear equation \(\boldsymbol {g} \in \mathbb {R}^{3} \)

and defined a priori by the two calibration points C1 and C2. Since the planes 𝜖1 and 𝜖2 stand perpendicular to the z-axis of the global CS (definition of our setup), the z value of a point Pi determines the center point \(\boldsymbol {C}_{P_{i}}\) of the associated displayed projection image (\(\text {image}_{P_{i}}\)) (see Fig. 1). Finally, the center point is obtained by the following:
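The two relations referred to above can be written out from the surrounding definitions. The following is a reconstruction under the stated setup (planes perpendicular to the z-axis, \(\boldsymbol{g}\) fixed by \(\boldsymbol{C}_{1}\) and \(\boldsymbol{C}_{2}\)); the line parameter \(\lambda\) and the position vector \(\boldsymbol{X}\) are notation introduced here for illustration:

\[
\boldsymbol{g}:\quad \boldsymbol{X}(\lambda) = \boldsymbol{C}_{1} + \lambda \left( \boldsymbol{C}_{2} - \boldsymbol{C}_{1} \right), \qquad \lambda \in \mathbb{R}
\]

\[
\boldsymbol{C}_{P_{i}} = \boldsymbol{C}_{1} + \frac{z_{P_{i}} - z_{C_{1}}}{z_{C_{2}} - z_{C_{1}}} \left( \boldsymbol{C}_{2} - \boldsymbol{C}_{1} \right)
\]

The second relation follows from choosing \(\lambda\) such that the z-component of \(\boldsymbol{X}(\lambda)\) equals \(z_{P_{i}}\); it requires \(z_{C_{1}} \neq z_{C_{2}}\), i.e., two distinct calibration planes.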

Once the center point \(\boldsymbol {C}_{P_{i}}\) is computed, the width and height of the associated \(\text {image}_{P_{i}}\) need to be determined. On the basis of the ratio of the length \(\overline {\boldsymbol {O}_{\text {Proj}} \boldsymbol {C}_{P_{i}}}\), referred to as \(d_{i}\), and the length \(\overline {\boldsymbol {O}_{\text {Proj}} \boldsymbol {C}_{2}}\), referred to as \(d_{C_{2}}\) (see Fig. 1), the width \(w_{i}\) and height \(h_{i}\) of \(\text {image}_{P_{i}}\) are calculated. The measured width \(w_{2}\) and height \(h_{2}\) are obtained during the calibration of \(\text{image}_{2}\):
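By the theorem of intersecting lines, the scaling described here amounts to \(w_{i} = w_{2}\, d_{i} / d_{C_{2}}\) and \(h_{i} = h_{2}\, d_{i} / d_{C_{2}}\). A minimal C++ sketch of this step is given below. It is an illustration under the assumptions of the model (pinhole projector, planes perpendicular to the z-axis), not the original implementation; all type and function names (`Vec3`, `Calibration`, `ImagePlane`, `centerPointAt`, `imagePlaneAt`) are hypothetical.

```cpp
#include <cmath>

struct Vec3 { double x, y, z; };

// Calibration parameters named as in the text (hypothetical container).
struct Calibration {
    Vec3 oProj;  // projection center O_Proj
    Vec3 c1;     // image center C_1 in plane eps_1
    Vec3 c2;     // image center C_2 in plane eps_2
    double w2;   // measured image width in plane eps_2
    double h2;   // measured image height in plane eps_2
};

// Projection image belonging to one plane z = const.
struct ImagePlane { Vec3 center; double width; double height; };

double dist(const Vec3& a, const Vec3& b) {
    const double dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Center point C_Pi: intersection of the line through C_1 and C_2
// with the plane z = zP (assumes c1.z != c2.z).
Vec3 centerPointAt(const Calibration& cal, double zP) {
    const double lambda = (zP - cal.c1.z) / (cal.c2.z - cal.c1.z);
    return { cal.c1.x + lambda * (cal.c2.x - cal.c1.x),
             cal.c1.y + lambda * (cal.c2.y - cal.c1.y),
             zP };
}

// Width and height scale with the ratio d_i / d_C2 (intersecting lines).
ImagePlane imagePlaneAt(const Calibration& cal, double zP) {
    const Vec3 c = centerPointAt(cal, zP);
    const double scale = dist(cal.oProj, c) / dist(cal.oProj, cal.c2);
    return { c, cal.w2 * scale, cal.h2 * scale };
}
```

Calling `imagePlaneAt` with the z value of a point yields the center and the dimensions of the image in which that point is subsequently located.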

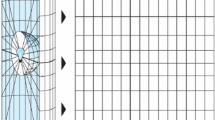

The dimensions of \(\text {image}_{P_{i}}\) in combination with the projector resolution specified by columns and rows \((n_{\text{res},c}, n_{\text{res},r})\) allow us to identify the correspondent projector pixel \(u_{r,c}\). The correct indices of a pixel addressing point \(\boldsymbol{P}_{i}\) are determined by the vector \(\overrightarrow{\boldsymbol{C}_{P_{i}} \boldsymbol{P}_{i}}\), referred to as \(\boldsymbol{l}_{\text{rel}}\) (see Fig. 2). Formulas 5 and 6 show the relationship between a point \(\boldsymbol{P}_{i}\) and a corresponding pixel \(u_{r,c}\) in the \(\text {image}_{P_{i}}\) with respect to the global CS:

Since every point is assigned to a full-sized pixel, the decimal place is always rounded up to the next full integer value when calculating the indices of a pixel. In the case of zero-based indexing, it is adjusted downward. When ur, c is found, the pixel is activated, meaning that the established connection is stored. The projection model iteratively outputs a projection image displaying activated regions indicating correspondent elements in the working space.
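The index computation with rounding can be sketched as follows. This is a hedged illustration rather than the original Formulas 5 and 6: it assumes that the column index grows with +x and the row index grows with -y of the global CS (row 0 at the top of the image), and that the image belonging to the plane of the point has already been determined. The names `ImagePlane`, `Pixel`, and `pointToPixel` are hypothetical.

```cpp
#include <cmath>

struct Vec3 { double x, y, z; };
// Projection image in the plane of the point: center C_Pi, width w_i, height h_i.
struct ImagePlane { Vec3 center; double width; double height; };
struct Pixel { int row; int col; };  // zero-based indices

// Maps point p to a projector pixel. Returns false if p lies outside the image.
// ASSUMPTION: columns run along +x, rows run along -y of the global CS.
bool pointToPixel(const Vec3& p, const ImagePlane& img,
                  int nCols, int nRows, Pixel& out) {
    // Relative vector l_rel from the image center to the point (x/y components).
    const double lx = p.x - img.center.x;
    const double ly = p.y - img.center.y;
    // Normalized position in the image: 0 = left/top edge, 1 = right/bottom edge.
    const double u = (lx + 0.5 * img.width) / img.width;
    const double v = (0.5 * img.height - ly) / img.height;
    if (u < 0.0 || u > 1.0 || v < 0.0 || v > 1.0) return false;
    // Round up to the next full pixel (one-based), then shift down by one
    // for zero-based indexing.
    int col = static_cast<int>(std::ceil(u * nCols)) - 1;
    int row = static_cast<int>(std::ceil(v * nRows)) - 1;
    if (col < 0) col = 0;  // point exactly on the left edge
    if (row < 0) row = 0;  // point exactly on the top edge
    out = Pixel{row, col};
    return true;
}
```

Repeating this call for every segmented point and marking the returned pixel in a mask of projector resolution gives the activated projection image described above.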

Those regions finally need to incorporate the desired visualization task. This process is highly application-specific and, therefore, cannot be generalized. For this reason, an example is demonstrated and depicted in Fig. 3. It shows geometric primitives, squares consisting of 2 × 2 pixels with a surrounding placeholder space, which represent the desired visualization information and are fitted into the activated regions by individualized algorithms. It is worth mentioning that the visualized data can consist of pure textures, but they can also contain semantic information, for instance, in the form of ARTags or QR codes. The projection image is finally derived by setting the values of the actual projecting pixels and processing the values of the remaining initialized, activated, and placeholder pixels in a predefined manner.

Fig. 3 Schematic of embedding a visualization task into an activated projection image. Activated pixels relate to points of designated elements in the working space in contrast to initialized pixels. In this case, the desired textures are squares consisting of 2 × 2 pixels and surrounded by placeholder pixels
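One simple way to fit such squares into the activated regions is a fixed tiling, sketched below in C++. This is only one possible routine, chosen here for brevity, and not the individualized algorithm used in the paper; the pixel states, the `Mask` type, and `embedSquares` are hypothetical names, and the margin of one placeholder pixel is an assumed default.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Pixel states following the terminology of the text.
enum PixelState : std::uint8_t {
    Initialized = 0, Activated = 1, Placeholder = 2, Projecting = 3
};

// Row-major image mask with the resolution of the projector.
struct Mask {
    int rows;
    int cols;
    std::vector<std::uint8_t> data;
    std::uint8_t& at(int r, int c) {
        return data[static_cast<std::size_t>(r) * cols + c];
    }
};

// Places square x square textures with a surrounding placeholder margin into
// tiles that are completely activated. Returns the number of placed squares.
int embedSquares(Mask& m, int square = 2, int margin = 1) {
    const int tile = square + 2 * margin;
    int placed = 0;
    for (int r0 = 0; r0 + tile <= m.rows; r0 += tile) {
        for (int c0 = 0; c0 + tile <= m.cols; c0 += tile) {
            // A tile is usable only if every pixel in it is activated.
            bool allActive = true;
            for (int r = r0; r < r0 + tile && allActive; ++r)
                for (int c = c0; c < c0 + tile; ++c)
                    if (m.at(r, c) != Activated) { allActive = false; break; }
            if (!allActive) continue;
            // Inner pixels project the texture, the rest becomes placeholder.
            for (int r = r0; r < r0 + tile; ++r)
                for (int c = c0; c < c0 + tile; ++c) {
                    const bool inner =
                        r >= r0 + margin && r < r0 + margin + square &&
                        c >= c0 + margin && c < c0 + margin + square;
                    m.at(r, c) = inner ? Projecting : Placeholder;
                }
            ++placed;
        }
    }
    return placed;
}
```

A fixed grid wastes activated pixels near region borders; a routine that slides the tile or adapts to the region shape would place more features at the cost of additional computation.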

As a result, the projection model connects a predefined space to a discretized image of a projector in order to deliberately visualize specified information on designated elements.

4.2 Calibration method

The calibration method adapts the projection model to an available physical setup. The methodical procedure presented here is a guideline that equips users with step-by-step instructions on how to embed electronic projection systems into the working environment.

The method mainly consists of two parts: positioning the projector relative to the objects to project onto and measuring predefined calibration parameters with the aid of a calibration device. The entire calibration method is depicted in Fig. 4.

Fig. 4 Flowchart of the calibration method to determine the geometric dimensions of an available setup for the projection model (see Fig. 1)

The projection system is placed at a suitable spot within the working space. Besides ensuring that there is no interference with a worker or a robot, it is also vital that the FOV of the projector, respectively the projection volume, is targeting the elements of interest, such as a workpiece, for example. Some projectors incorporate the “lens shift” feature, which enables a translational motion of the projection image instead of rotating the entire device. Although the lens shift in Fig. 1 is depicted as an angular change (simplification of the illustration), it is meant to indicate just a translational shift of the image in direction of the x- and y-axis, emphasized by the straight arrow. Due to perspective distortions resulting from the rotational change of the projector, the lens shift is preferred to align the projection image since it avoids keystoning [3]. After positioning, the electronic “keystone correction” feature is reset and the calibration image is displayed (see Fig. 5a). The calibration auxiliary device—a plane surface of some sort—helps the user to rectify the distortion. For this purpose, the calibration device is deployed analogously to plane 𝜖2 in Fig. 1. Applied markers (see Fig. 5b) facilitate adjustment of the FOV and setting the keystone correction in order to retrieve a distortion-free projection image. The adjustment is conducted iteratively until the corners of the calibration images match the printed markers. At the plane 𝜖2, the parameters center point C2, height h2, and width w2 are measured with respect to the global CS. Then, the calibration device is moved to a plane 𝜖1 and the parameters C1 and OProj are acquired. Finally, the calibration device is removed and the focus is adjusted to the distance of the element to project onto.

A successful calibration yields a projection volume in the shape of an oblique pyramid. In theory, its size increases without bound in the direction of propagation, but in practice it is limited by the illumination power of the projection device. The effective volume also depends on the depth of field (DOF) of the optical system. Once the volume is determined, it remains valid independent of the objects of interest.

Since the number of projector pixels is constant, the spatial resolution covered by a single pixel in the working space varies depending on the size of the FOV (zoom) and the distance between the element of interest and the projection device.
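Under the projection model of Section 4.1, this dependency can be expressed by the footprint of a single pixel in the plane of a point \(\boldsymbol{P}_{i}\). The symbols \(\Delta x_{i}\) and \(\Delta y_{i}\) are introduced here for illustration, and the relation neglects the effect of the keystone correction on the native resolution:

\[
\Delta x_{i} = \frac{w_{i}}{n_{\text{res},c}} = \frac{w_{2}}{n_{\text{res},c}} \cdot \frac{d_{i}}{d_{C_{2}}}, \qquad
\Delta y_{i} = \frac{h_{i}}{n_{\text{res},r}} = \frac{h_{2}}{n_{\text{res},r}} \cdot \frac{d_{i}}{d_{C_{2}}}
\]

As a purely hypothetical example, an image width of \(w_{i} = 960\) mm displayed with \(n_{\text{res},c} = 1920\) columns corresponds to \(\Delta x_{i} = 0.5\) mm per pixel; doubling the distance \(d_{i}\) doubles this footprint.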

Subsequent effects on the real projected resolution due to the keystone correction are not further investigated in the scope of this paper. Due to the simplified modeling without any intrinsic parameters of the optical device, current approaches for the correction of lens distortion, as in [17, 19], were not considered.

Besides, it is worth mentioning that for a robot vision system, the keystone correction could also be realized by using the present vision system and planar homography.

In summary, the measured parameters \(\boldsymbol{C}_{2}\), \(h_{2}\), \(w_{2}\), \(\boldsymbol{C}_{1}\), and \(\boldsymbol{O}_{\text{Proj}}\) allow us to adjust the projection model of Section 4.1 to a physical working space and, therefore, enable the linking of points, for example, of a workpiece, to correspondent projector pixels.

5 Experimental

5.1 Technical setup

The experimental investigation was conducted in the working space of the AIBox inspection system from Carl Zeiss Optotechnik, comprising an industrial robot and an optical 3D sensor (see Fig. 6). The Fanuc M-20iA robot functions as a flexible manipulator of the COMET Pro AE sensor, i.e., as a carrier of the imaging device.

The sensor acquires range images (point clouds) with a resolution of 4896 × 3264 pixels within the imaging plane of 600 × 450 mm² (center plane). The sensor directly provides a measured point representation of its FOV. By means of the axis angles of the robot and the known relation from robot base to the global CS (kinematic model), range images are directly positioned in the virtual working space.

Additionally, we used the VPL-PHZ10 front projection device from Sony Corporation. It uses a 3LCD system, displays images with a resolution of 1920 × 1200 pixels, and provides 5000 lm and a contrast ratio of 500,000:1. The device incorporates a manual zoom, focus, and lens shift as well as a distortion correction for each corner point of the FOV individually.

For data processing, an HP Z840 workstation with an Intel Xeon CPU E5-2637 v4 at 3.50 GHz and 128 GB RAM was used (not depicted in Fig. 6) in combination with an NVIDIA Quadro M4000 graphics card.

5.2 Software

The project was implemented in the programming language C++. The input consists of a point representation of the scene to project onto and the calibration parameters; the output is a corresponding 2D projection image, which incorporates the application-specific visualization task.

Additionally, the open source frameworks Point Cloud Library (PCL) [32] (version 1.8.1) and OpenCV [33] (version 3.4.1) are used for point cloud data processing and 2D image data processing, respectively, which enables an easy-to-use and fast implementation.

5.3 Procedure guideline

This subsection covers the actions required to utilize the presented approach. Some are optional, but help to adapt the approach to other applications. The following three steps, however, are crucial:

1. Calibration and processing point cloud data

2. Applying the projection model

3. Embedding the visualization task

First, the projection system is calibrated and the digital representation of the environment is processed. The calibration method is applied as presented in Section 4.2. The measured parameters are passed on to the projection model (see Section 4.1). In addition, the designated elements to project onto need to be processed, which implies on the one hand that the workpiece or the parts of the surrounding are digitally represented, for example, by a set of points, i.e., by point clouds. On the other hand, these elements need to be segmented either manually or by algorithmic segmentation approaches such as model-based or feature-based methods. Subsequently, the remaining 3D data set is positioned with respect to the global CS and the actual position in the working space. Among others, optional steps can comprise sampling of the point cloud to improve computing speed or filtering regions which are not in a direct line of sight towards the projection device.
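The optional sampling step can be illustrated by a voxel-grid reduction, in which all points falling into the same cubic cell are replaced by their centroid. PCL provides a filter of this kind (`pcl::VoxelGrid`); the dependency-free C++ sketch below only illustrates the idea and is not the routine used in the paper. The function name `voxelDownsample` and the leaf size parameter are hypothetical.

```cpp
#include <cmath>
#include <map>
#include <tuple>
#include <vector>

struct Vec3 { double x, y, z; };

// Replaces all points inside one cubic voxel of edge length `leaf`
// by their centroid. Fewer points means fewer point-to-pixel mappings.
std::vector<Vec3> voxelDownsample(const std::vector<Vec3>& cloud, double leaf) {
    struct Acc { double x = 0.0, y = 0.0, z = 0.0; int n = 0; };
    std::map<std::tuple<long, long, long>, Acc> voxels;
    for (const Vec3& p : cloud) {
        // Integer voxel index of the point along each axis.
        const auto key = std::make_tuple(
            static_cast<long>(std::floor(p.x / leaf)),
            static_cast<long>(std::floor(p.y / leaf)),
            static_cast<long>(std::floor(p.z / leaf)));
        Acc& a = voxels[key];
        a.x += p.x; a.y += p.y; a.z += p.z;
        ++a.n;
    }
    std::vector<Vec3> out;
    out.reserve(voxels.size());
    for (const auto& kv : voxels) {
        const Acc& a = kv.second;
        out.push_back({a.x / a.n, a.y / a.n, a.z / a.n});
    }
    return out;
}
```

A reasonable leaf size is on the order of the pixel footprint in the working space, since several points closer together than one projected pixel map to the same pixel anyway.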

Second, the segmented 3D data set is applied to the projection model. For each point, a corresponding projector pixel is determined. The model outputs an image with the resolution of the projector and activated pixels.

Third, the activated projection image is enriched by the visualization task. Individualized routines need to be implemented to enable integration of the intended information into these areas of activated pixels (see example in Fig. 3).

Apart from this, projections onto segmented non-planar surfaces (edges, curvatures \(\kappa_{1}, \kappa_{2} \neq 0\)) or segmented planes with orientations other than perpendicular to the z-axis of the global CS exhibit distortions. The rectification of geometrically complex surfaces was reported on by several researchers, e.g., [2, 15, 34].

6 Validation

6.1 Use case: projecting reference features

The use case presented here was investigated in the context of a new offline solution for robot-based inspection systems in the automotive industry. The goal was the application of region-specific texture projections as reference features (see Section 2.2).

The validation was conducted on the inside of a side door of an automobile. This part of the workpiece exhibits a highly featured surface. The point cloud representations were segmented by means of a curvature filter. The transformation of the reduced point clouds into the global CS was performed based on the odometric data of the robot and rigid transformations.

Figure 7 depicts the workpiece that was used. The red background indicates the calibrated projection volume (only for illustration purposes). The segmented area (black) corresponds to regions which comply with the defined curvature threshold, i.e., which resemble planar regions. The bright squares display the visualization task.

Furthermore, keystone correction was applied for planes perpendicular to the z-axis in the global CS (see Section 4). As a consequence, the display of projections onto regions (plane areas) with the same orientation shows almost no perspective distortion. In the case of our application, this facilitates subsequent image processing with regard to accurate and robust edge detection.

Overall, Fig. 7 demonstrates that our approach for spatial interactive projections works. A slight deviation in x-direction is observable, which is probably linked to minor inaccuracies in the kinematic model used and in the calibration procedures.

6.2 Accuracy analysis

In order to deal with the effects of oblique projection, the on-board keystone correction feature was employed. This also implies a modification of the native resolution of the projector [3] and an a priori transformation (pre-warping) of the original projection image, which influences mapping accuracy. In combination with calibration inaccuracies, such as the estimation of O_Proj and the measurement uncertainty (measuring tape), spatial deviations occur between the calculated projector pixel of a point and the actually illuminated spot in the working space.

For this purpose, we generated an image with the native projector resolution, divided it into 25 equal segments, each with one center pixel. These 25 pixels are displayed on a plane surface, similar to the calibration auxiliary device depicted in Fig. 5b. The goal was to examine the accuracy of individual pixels as an error representation for the projected FOV.
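Such a test image can be generated with a few lines of code. The sketch below is an illustration only: the function name `center_pixel_grid` is hypothetical, and the resolution of 1920 × 1080 pixels is an assumed example value, not necessarily the native resolution of the projector used here.

```python
def center_pixel_grid(rows, cols, n=5):
    """Activate the center pixel of each segment of an n x n grid."""
    image = [[0] * cols for _ in range(rows)]
    centers = []
    for i in range(n):
        for j in range(n):
            # Center of segment (i, j), rounded down to a pixel index.
            r = (2 * i + 1) * rows // (2 * n)
            c = (2 * j + 1) * cols // (2 * n)
            image[r][c] = 1
            centers.append((r, c))
    return image, centers


# Assumed resolution for illustration; 5 x 5 segments yield 25 center pixels.
image, centers = center_pixel_grid(rows=1080, cols=1920)
```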

Since the position of each pixel u_{r,c} in the test image is known, as is the z value of the deployed plane surface, the real-world coordinates can be calculated with the aid of the formulas introduced in Section 4.1. At this point, the displayed size of a pixel needs to be taken into account in each plane, because this calculation is based on the individual pixel indices. As a consequence, a pixel corresponds to a point in the working space which represents a particular corner of that pixel. Therefore, we corrected the measured deviations by this offset. Furthermore, we assume that a projected pixel is not skewed. The deviation is finally measured from the center of the illuminated spot to the calculated coordinate in the working space. Measured parameters for calibration and for conducting the analysis are shown in Table 1.

Figure 8 shows the deviations of the Euclidean distance across the FOV. The deviation magnitude of each center pixel is represented by the color according to the depicted heat map. The inaccuracies were identified at three different positions: C1z, zM, and C2z.

The auxiliary device was deployed right in front of the workpiece depicted in Fig. 6. The device consists of a purely plane surface which is aligned perpendicular to the global z-axis. The projector was refocused at each plane to reduce blurring. The illuminated spot and the calculated coordinates were both marked on millimeter paper. The x- and y-deviations were measured independently.

It is observable that at plane zM, high accuracy was obtained throughout the projected image, whereas the planes at C1z and C2z exhibit relatively higher inaccuracies, especially at the sides of the FOV. Most segments show deviations between approximately 1 and 2 mm. The mean deviations in the x- and y-axis at each plane (see Fig. 9) range from about 0.75 to 1.5 mm. The decrease of intensity at different planes and the effect of blurring due to insufficient FOV of the optical system were not accounted for separately. In summary, the Euclidean deviations remained below a threshold of about 3 mm for all three investigated planes in the scope of the described accuracy analysis.
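The relation between the independently measured x- and y-deviations, the Euclidean deviation, and the mean absolute deviations per plane can be summarized as follows. This is a minimal sketch: the function name `deviation_summary` is hypothetical, and the readings are invented example values, not measurements from this study.

```python
from math import hypot
from statistics import mean


def deviation_summary(deviations_mm):
    """Summarize (dx, dy) deviations in mm measured for the center pixels of one plane."""
    euclidean = [hypot(dx, dy) for dx, dy in deviations_mm]
    return {
        "mean_abs_x": mean(abs(dx) for dx, _ in deviations_mm),
        "mean_abs_y": mean(abs(dy) for _, dy in deviations_mm),
        "max_euclidean": max(euclidean),
    }


# Invented example readings in mm (not measured values).
readings = [(1.5, 2.0), (-1.0, 0.0), (0.5, -1.0)]
summary = deviation_summary(readings)
```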

Fig. 9 Mean deviations in x- and y-axis at three different planes in the projection volume (cf. CS in Fig. 6)

It is noticeable that deviations also exist at the calibration points C1 and C2, although a superposition is to be expected. This is probably caused by minor alignment deviations when repositioning the auxiliary device at the original calibration z-distance. In terms of other deviations within the FOV, additional superposed effects come into play, e.g., the digital preprocessing of the original test image to a keystone-corrected, distortion-free display leads to a modified resolution with rearranged pixels. This influence was assumed to be negligible and the real coordinates of a pixel were calculated on the basis of the original resolution. In this context, the spatial pixel density, which declines with increasing z values due to the propagation of the projection volume, also amplifies the effect. Furthermore, any optical system has aberrations, which were not separately compensated for. This error can amount to several pixels [34].

6.3 Computation time

In dynamic applications, i.e., projecting at certain frame rates, the computational cost for single processing tasks becomes critical. Therefore, we present a brief study determining how much time is actually needed and how processing time changes in relation to the size of a point cloud. The acquisition time was not taken into account. The test was conducted using the use case in Section 6.1.

Figure 10 shows the impact of the point cloud size on the computational cost measured in seconds. The computation was timed from the start of program execution until the output of the final projection image. The log–log graph depicts an exponential increase of the data processing time from about 5 s (rounded) for 336,280 points to about 938 s (rounded) for 7,134,998 points. Reading the data and the segmentation process (absolute curvature filter based on a principal component analysis) account for more than 94% of the computational cost. Using a mesh-like representation instead of points for the segmentation might improve the runtime behavior.
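As a rough plausibility check, the average slope between the two reported endpoints on log–log axes can be computed directly from the numbers above. The sketch assumes, purely for illustration, a relation of the form t = a · n^k between runtime t and point count n. A two-point estimate cannot distinguish between different growth laws; it only quantifies how much faster than linearly the runtime grows over the investigated range.

```python
from math import log

# Rounded endpoint values taken from the text above.
n_small, t_small = 336_280, 5.0        # points, seconds
n_large, t_large = 7_134_998, 938.0    # points, seconds

# Average slope on log-log axes, i.e., the exponent k if t = a * n**k held.
k = log(t_large / t_small) / log(n_large / n_small)

# Growth factors over the investigated range.
size_factor = n_large / n_small
time_factor = t_large / t_small
```

The point cloud size grows by a factor of about 21, whereas the runtime grows by a factor of about 188, which corresponds to an average slope of roughly 1.7.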

Fig. 10 Runtime analysis for the use case presented in Section 6.1. An exponential increase of the computational cost is noticeable for larger point cloud sizes

For the use case presented above (projecting squared reference features), point clouds with a size smaller than approximately 10^6 points within the FOV were not feasible, because they output an empty projection image. This is caused by the decrease of the point density through sampling. The pixel-point mapping only activates a projector pixel if a corresponding point is present in the working space. In combination with our method of fitting squares exclusively into contiguous, activated pixel regions, sparse point clouds experience difficulties. However, this can be circumvented by either upsampling the segmented regions of the point cloud to increase density or by image processing of the activated projection image to obtain uniform regions large enough to incorporate the visualization task.
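One conceivable form of such image processing is a morphological closing (dilation followed by erosion), which fills small gaps between activated pixels. The following sketch is an assumption for illustration and not part of the implementation evaluated here. It uses a fixed 3 × 3 neighborhood, and the helper names are hypothetical.

```python
def _morph(image, combine):
    """Apply `combine` (max or min) over the 3 x 3 neighborhood of every pixel.

    Neighbors outside the image are ignored.
    """
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [image[rr][cc]
                      for rr in range(max(r - 1, 0), min(r + 2, rows))
                      for cc in range(max(c - 1, 0), min(c + 2, cols))]
            out[r][c] = combine(window)
    return out


def dilate(image):
    return _morph(image, max)


def erode(image):
    return _morph(image, min)


def close_gaps(image):
    """Morphological closing: dilation followed by erosion."""
    return erode(dilate(image))


# Activated region with one missing pixel in its center.
sparse = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 1, 0, 0],
    [0, 0, 1, 0, 1, 0, 0],
    [0, 0, 1, 1, 1, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
closed = close_gaps(sparse)
```

In this example, the gap in the center is filled while the outline of the region is preserved. Larger gaps would require a larger neighborhood, at the risk of activating pixels that do not correspond to segmented regions.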

For applications requiring real-time capability, processing time becomes vital. Consequently, computation-intensive algorithms need to be evaluated and, if necessary, optimized in terms of runtime.

7 Conclusion and future work

The potential of current projection systems increases when spatially interacting with their environment. This ability provides a sophisticated tool and opens up new possibilities in manufacturing.

In this paper, we addressed the lack of a ready-to-use approach for integrating projection systems into the working space of a robot. Therefore, we introduced an integration approach comprising a projection model and a calibration method. The provided procedure guidelines aim at a quick transfer to other setups and applications across industries.

Our approach was validated in the context of quality assurance applications of sheet metal parts in the automotive industry. We showed that texture features were successfully projected onto predefined regions in the working space with high accuracy. The mean absolute x- and y-deviations of individual pixels were determined to be approximately 0.75–1.5 mm for three different planes of our projection volume. The computational cost of our implementation follows an exponential increase in the runtime with larger point cloud sizes.

A large projection volume and a high spatial resolution are important figures of merit for many applications. To reconcile these conflicting goals, we propose investigating how the approach presented here can be used with an auxiliary handling system such as an additional robot. This way, the projection device can be moved through the working space, providing a larger projection volume at high resolution.

In summary, we provide a new approach, which enables standard electronic projection systems to spatially interact with their environment based on existing state-of-the-art technologies, features, and open source software. This approach extends the range of possible applications for projection displays and may foster innovations in manufacturing industries.

Change history

09 June 2021

A Correction to this paper has been published: https://doi.org/10.1007/s00170-021-07309-7

Abbreviations

- AR: Augmented reality
- CAD: Computer-aided design
- CS: Coordinate system
- DOF: Depth of field
- FOV: Field of view

References

Brennesholtz MS, Stupp EH (2008) Projection displays, 2nd edn. Wiley, Chichester, pp 1–11

Bimber O (2007) Projector-based illumination and display techniques. Technical University of Munich

Vandenberghe P (2016) Data projectors. Handbook of visual display technology. Springer, Cham, pp 2919–2926. ISBN:978-3-319-14346-0

Berthold J, Imkamp D (2013) Looking at the future of manufacturing metrology: roadmap document of the German VDI/VDE society for measurement and automatic control. J Sensors Sensor Syst 2:1–7. https://doi.org/10.5194/jsss-2-1-2013

Kiraci E, Franciosa P, Turley GA, Olifent A, Attridge A, Williams MA (2017) Moving towards in-line metrology: evaluation of a Laser Radar system for in-line dimensional inspection for automotive assembly systems. Int J Adv Manuf Technol 91:69–78. https://doi.org/10.1007/s00170-016-9696-8

Tuominen V (2016) The measurement-aided welding cell—giving sight to the blind. Int J Adv Manuf Technol 86:371–386. https://doi.org/10.1007/s00170-015-8193-9

Bauer P, Gonnermann C, Magaña A, Reinhart G (2019) Autonome Prüfsysteme in der digitalen Fabrik: skill-basierte Modellierung in der geometrischen Qualitätsprüfung für roboterbasierte Messsysteme / Autonomous inspection systems in the digital factory: skill-based modelling within the geometric quality assurance for robot-based inspection systems. wt Werkstattstechnik online 109:321–328

Gonnermann C, Reinhart G (2019) Automatized setup of process monitoring in cyber-physical systems. Proc Conf Manuf Syst 81:636–640. https://doi.org/10.1016/j.procir.2019.03.168

Ulrich M, Forstner A, Reinhart G (2015) High-accuracy 3D image stitching for robot-based inspection systems. IEEE Int Conf Image Process, 1011–1015. https://doi.org/10.1109/ICIP.2015.7350952

Ulrich M (2018) 3D-image-stitching für roboterbasierte Messsysteme. Dissertation, Technische Universität München

Bauer P, Magaña A, Reinhart G (2019) Free-form surface analysis and linking strategies for high registration accuracy in quality assurance applications. Proc Conf Manuf Syst 81:968–973. https://doi.org/10.1016/j.procir.2019.03.236

Sukthankar R, Stockton RG, Mullin MD (2000) Automatic keystone correction for camera-assisted presentation interfaces. Adv Multimodal Interfaces—Proc ICMI: 607–614. https://doi.org/10.1007/3-540-40063-X_79

Sukthankar R, Stockton RG, Mullin MD (2001) Smarter presentations: exploiting homography in camera-projector systems. Proceedings of International Conference on Computer Vision. https://doi.org/10.1109/ICCV.2001.10045

Park S-Y, Park GG (2010) Active calibration of camera-projector systems based on planar homography. In: IEEE 20th International conference on pattern recognition, pp 320–323. https://doi.org/10.1109/ICPR.2010.87

Raskar R, Brown M, Yang R, Chen W-C, Welch G, Towles H, Scales B, Fuchs H (1999) Multi-projector displays using camera-based registration. Proc Conf Visual, 161–522. https://doi.org/10.1109/VISUAL.1999.809883

Fiala M (2005) Automatic projector calibration using self-identifying patterns. IEEE Computer Society Conference on Computer Vision and Pattern Recognition. https://doi.org/10.1109/CVPR.2005.416

Zhang Z (2000) A flexible new technique for camera calibration. IEEE Trans Pattern Anal Mach Intell 22:1330–1334. https://doi.org/10.1109/34.888718

Falcao G, Hurtos N, Massich J (2008) Plane-based calibration of a projector-camera system. VIBOT Master, 9

Chen X, Xi J, Jin Y, Sun J (2009) Accurate calibration for a camera–projector measurement system based on structured light projection. Opt Lasers Eng 47:310–319. https://doi.org/10.1016/j.optlaseng.2007.12.001

Bimber O, Emmerling A, Klemmer T (2005) Embedded entertainment with smart projectors. Computer 38:48–55. https://doi.org/10.1109/MC.2005.17

Bouguet J-Y (2019) Camera calibration toolbox for Matlab. http://www.vision.caltech.edu/bouguetj/calib_doc/. Accessed 18 October 2019

Raskar R, van Baar J, Beardsley P (2003) iLamps: geometrically aware and self-configuring projectors. ACM Trans Graph 22:809–818. https://doi.org/10.1145/882262.882349

Beardsley P, Raskar R, Forlines C, van Baar J (2005) Interactive projections. IEEE Computer Graphics and Applications

Piper B, Ishii H (2001) CADcast: a method for projecting spatially referenced procedural instructions. Tech. rep., MIT Media Lab

Rodriguez L, Quint F, Gorecky D, Romero D, Siller HR (2015) Developing a mixed reality assistance system based on projection mapping technology for manual operations at assembly workstations. Procedia Comput Sci 75:327–333. https://doi.org/10.1016/j.procs.2015.12.254

Doshi A, Smith RT, Thomas BH, Bouras C (2016) Use of projector based augmented reality to improve manual spot-welding precision and accuracy for automotive manufacturing. Int J Adv Manuf Technol 89:1279–1293. https://doi.org/10.1007/s00170-016-9164-5

Kobler J-P, Hussong A, Ortmaier T (2010) Mini-projektor basierte augmented reality für medizinische Anwendungen. Tagungsband der 9. Jahrestagung der Deutschen Gesellschaft für Computer- und Roboterassistierte Chirurgie e.V, Düsseldorf, pp 115–118. ISBN: 978-3-86247-078-5

Peterhans M, Vom Berg A, Dagon B, Inderbitzin D, Baur C, Candinas D, Weber S (2011) A navigation system for open liver surgery: design, workflow and first clinical applications. Int J Med Robotics Comput Assist Surg 7:7–16. https://doi.org/10.1002/rcs.360

Gavaghan KA, Peterhans M, Oliveira-Santos T, Weber S (2011) A portable image overlay projection device for computer-aided open liver surgery. Trans Biomed Eng 58:1855–1864. https://doi.org/10.1109/TBME.2011.2126572

Ekim B (2011) A video projection mapping conceptual design and application: YEKPARE. Turkish Online J Des Art Commun 1:10–19. https://doi.org/10.7456/10101100/002

Reinhardt J, Sieck J (2014) Dynamische Projektion auf Gebäudefassaden. Wireless Commun Inform, 151–173. ISBN: 9783864880711

Rusu RB, Cousins S (2011) 3D is here: point cloud library (PCL). IEEE International Conference on Robotics and Automation. https://doi.org/10.1109/ICRA.2011.5980567

Bradski GR, Kaehler A (2011) Learning OpenCV, 1st edn. O’Reilly Media, Beijing. ISBN: 978-0-596-51613-0

Bimber O (2007) Projector-based augmentation. Emerging technologies of augmented reality: interfaces and design. https://doi.org/10.4018/978-1-59904-066-0.ch004

Acknowledgments

The integration approach presented in this paper for electronic projection systems is being investigated within the scope of the research project “CyMePro” (Cyber-physical measurement technology for 3D digitization in the networked production). The coordination is supervised by the VDI ∣ VDE ∣ IT. Additionally, we would like to thank our research partners AUDI AG and Carl Zeiss Optotechnik GmbH for their effort and support.

Funding

Open Access funding enabled and organized by Projekt DEAL. The Bavarian Ministry of Economic Affairs, Energy and Technology is kindly funding our project (funding code ESB036 / 001).

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bauer, P., Fink, F., Magaña, A. et al. Spatial interactive projections in robot-based inspection systems. Int J Adv Manuf Technol 107, 2889–2900 (2020). https://doi.org/10.1007/s00170-020-05220-1

DOI: https://doi.org/10.1007/s00170-020-05220-1