Abstract

Manufacturing equipment embodies an increasing measure of tacit intelligence, in both capacity and value. However, this intelligence has yet to be exploited effectively, owing both to the costs and limitations of existing approaches and to a deficient understanding of data value and data origin. This work investigates the principal limitations of typical machine tool data and encourages consideration of these inherent limitations in order to improve potential monitoring strategies. It presents a novel approach to the acquisition and processing of machine tool cutting data that considers the condition of the monitored system and the deterioration of cutting tool performance. The approach is based on the management of the cutting process by the machine tool controller, and hence makes use of the tacit intelligence deployed in that task. By using available machine tool controller signals, the impact on day-to-day machining operations is minimised and the need to retrofit equipment or sensors is avoided. The potential of the approach in the contexts of the emerging Internet of Things and intelligent process management and monitoring is considered. The efficacy of the approach is evaluated by correlating the actively derived measure of process variation with an offline measurement of product form. Its potential is then underlined through a series of experiments in which the derived variation is assessed as a direct measure of cutting tool health. The proposed system is identified as both a viable alternative and a synergistic addition to current approaches, which mainly consider the form and features of the manufactured component.


1 Introduction

For organisations to remain both competitive and cost effective, they must produce high-quality parts quickly, with few defects or failures, and with fewer people involved. This presents a challenge, as advances in one of these areas are often detrimental to progress in another. Moreover, widespread change within an organisation is very often resisted. In many situations, an optimised process requires a balance between the capabilities of the system in use and the expectations of those involved for the finished product.

Finding the best balance is greatly helped when all involved understand the boundary between what is acceptable and what is not. However, the distinction between good and bad can be distorted by both the controlled and the stochastic elements within a process, which makes the compromise between design and manufacture difficult.

To find the best balance between quality and productivity, organisations should be able to measure their progress against each, in order to identify the resulting impact on overall process performance. Whilst quality can be difficult to quantify during manufacture, it is possible to classify and/or improve the performance or capability of a system. Current approaches include those enshrined in fit manufacturing (including Six Sigma) and overall equipment effectiveness (OEE) [1,2,3]. Established numerical capability indices include the process capability index (Cp) and/or its centring-adjusted counterpart (Cpk), as well as variations of these [4]. Related approaches include the use of control charts or consideration of the underpinning statistics. It is noted that all of these approaches risk being presented simply because authors believe they lend credibility to their work, rather than being used in practice as tools to influence process decision-making. Practical implementation is further hindered by uncertainty over costs versus benefits.
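For reference, the two indices mentioned above are conventionally defined as Cp = (USL − LSL)/(6σ) and Cpk = min(USL − μ, μ − LSL)/(3σ), where USL and LSL are the upper and lower specification limits, μ is the process mean and σ the process standard deviation. The short sketch below computes both from a sample of measurements; the function name and the example data are illustrative only and are not taken from this work.

```python
import statistics


def capability_indices(samples, lsl, usl):
    """Return (Cp, Cpk) for a sample of measurements.

    Cp compares the specification width with the process spread (6 sigma);
    Cpk additionally penalises a process mean that is off-centre.
    """
    mu = statistics.mean(samples)
    sigma = statistics.stdev(samples)  # sample standard deviation
    cp = (usl - lsl) / (6 * sigma)
    cpk = min(usl - mu, mu - lsl) / (3 * sigma)
    return cp, cpk


# Hypothetical diameters (mm) against a 10.00 +/- 0.05 mm tolerance.
diameters = [10.01, 9.99, 10.02, 10.00, 10.01, 9.98, 10.02, 10.01]
cp, cpk = capability_indices(diameters, lsl=9.95, usl=10.05)
print(f"Cp = {cp:.2f}, Cpk = {cpk:.2f}")  # prints: Cp = 1.18, Cpk = 1.06
```

Because the example mean (10.005 mm) sits off the nominal, Cpk is lower than Cp, which is exactly the effect Cpk is designed to expose.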

Expanding on manufacturing capability in the context of machine tool operation, some attempts have been made to characterise machine tools as a singular, all-inclusive entity [5, 6]. Other researchers seek to exploit machine tool intelligence to construct a digital representation of the entity, creating so-called digital twins [7,8,9]. These approaches are promising and seek to capitalise on the increasing intelligence and connectivity of machine tools. Currently, however, most of them fail to capture the complexity of the system and hence generate ambiguous information. Beyond this ambiguity, difficulties arise when considering specific components, the interactions between components, or the normal (baseline) versus abnormal states of an unknown process. Fundamentally, it can be argued that accessible data is not necessarily suitable data. To resolve some of these issues, one must consider whether the information gleaned from a machine tool indicates:

- A deterioration of, or change in, the entire system
- The deterioration of a system subset, e.g. axis drives or spindle
- Causal changes in the process, e.g. new cutters, materials, changed feeds/speeds
- Different process plans and/or machining methods employed by different operators

This complexity required further investigation into the possible influences behind process variation; an initial attempt at this is summarised in Fig. 1, which illustrates some of the underlying reasons for a changing or variable process. It is presented to support the argument that the exact cause of an observed process change is hard to determine, or confirm, with confidence. It also explains why systems that aim to monitor and/or diagnose complex systems, such as machine tools, require the assumptions given and a degree of process competence or experience. This implies that the process is both complex and stochastic, and that a perfect solution is perhaps process specific. This in turn creates a demand for approaches that provide the best fit for a given system.

Notwithstanding the above observations and challenges, modern manufacturing equipment and processes are outwardly capable of meeting tolerances and/or providing a specified surface finish. In part, this is because the introduction of computer numerical control (CNC) has reduced human error and process planning variability. The resulting process capability should therefore be, in some way, locally optimised, partly as a result of functions embedded within the CNC controller, such as spindle load management or volumetric error compensation. However, given the complex causes of variation highlighted in Fig. 1, there will always be room for improvement, and from a competitive standpoint, particularly in pursuit of a better market position, a process that can be improved should be. In that regard, one key optimisation that organisations aim for, yet struggle with, concerns the condition of the cutting tools used.

The assessment of cutting tool condition predominantly compares the original, ‘as new’ condition and quality of the tool with the combined effect of time-dependent and stochastic wear. Time-dependent wear represents the predictable deterioration arising from continued use. Stochastic wear can be considered an unpredictable variation within the process, which increases the risk of sudden or abrupt failure and otherwise confounds the prediction of remaining life. The original condition and quality of a cutting tool are normally established through a direct assessment of the cutting tool parameters prior to use, preferably in situ within the CNC machine environment. Industry has presented numerous methods to achieve such an assessment, including contact and non-contact tool setters and probes, and the machining and measurement of predefined geometry within so-called “slave features”, both pre- and in-process [10].

Both forms of cutting tool wear are challenging to ascertain, yet they are critical to process safety. The failure of a cutting tool can result in a damaged product (scrap) and a damaged machine, leading to unexpected downtime and additional economic loss [11, 12]. To reduce the risks associated with unexpected failure, manufacturers tend to combine proactive and reactive approaches to the management of cutting tools. Replacements are often based on a set schedule with a healthy safety margin of 20% of total life (proactive) [13,14,15]. Conversely, unexpected failure or poor performance leads to process adjustments or system replacements (reactive). These conservative methods ‘paper over the cracks’ and ultimately increase process waste and cost [16].

There is an evident need to implement effective tool condition monitoring (TCM) and, by extension, to consider whether predictive approaches can reliably identify process concerns. This paper and the work presented herein attempt to address some of the issues raised thus far in relation to end milling cutting tools. Firstly, two popular approaches to TCM are presented and discussed. Secondly, novel methods are presented that seek to optimise both the assessment and the use of cutting tools. The methods predominantly consider the use of typical machine tools within manufacturing organisations. This work aims to promote better-engineered solutions for the management of cutting tools that may shift the focus away from the traditional proactive and reactive approaches.

2 Tool condition monitoring

On the premise that process variation can be attributed to the progressive deterioration of the cutting tool, a considerable body of research has sought to quantify that deterioration during the manufacturing process. This is usually approached in one of two ways: directly, by attending to the tool itself, or indirectly, by considering the tool through auxiliary signals and/or outputs. ‘Direct’ and ‘indirect’ thus refer to the nature of the data acquisition. This division, and the methods it supports, has been expanded upon in the literature [17, 18]. This review focuses on indirect assessments of tool wear, considering the variability in the form of a manufactured part and the analysis of ‘smart’ machine data.

Assessing tool condition from the form of the manufactured part is established practice. Some studies take a direct approach by assessing the geometry of the cutting tool itself in-process [19]. Others evaluate the variation in the geometric form of the manufactured part and attribute this variation to the deterioration of the cutting tool [20,21,22,23]. These methods are predominantly post-process and are better suited to diagnostics than to active process control. They are viable from an academic perspective and enable a better understanding of tool wear phenomena, but they have limited bearing on the continuous management of machining operations.

It may be considered that the studies evaluating geometric form fail to take account of arguments made by fellow academics and industrial organisations. Both Astakhov [24] and Shaw [25] recognise that the association between geometry and cutting tool condition is difficult to quantify, and that individual features are difficult to attribute to the responsible process variations. Their conclusion corroborates the argument that complex systems may generate ambiguous information. In addition, industrial organisations add further complexity with innovations that mask or compensate for tool condition, for instance re-dimensioning cutting tools in-process, known as in-cycle gauging (ICG). From an economic and competitive standpoint, removing the geometric variation is both sensible and beneficial; in the best instances, dimension-related post-processing can be eliminated or at least significantly reduced [10]. In theory, the data arising from the re-dimensioning of cutting tools could provide the information required to analyse the geometric variation; however, this information is rarely retained beyond the correction of tool offsets.

Instead of geometric variation, some studies evaluate the variation in surface integrity of the manufactured part and attribute this variation to the deterioration of the cutting tool. This method is more popular within industrial organisations, being complementary to the re-dimensioning of cutting tools, and has proven popular for turning operations [28,29,30]. However, it has been reported to be highly susceptible to changes in machining parameters and tool geometry when applied to milling [22, 31, 32], and effective implementation may be impractical once the additional measurement apparatus is considered. This indicates that surface integrity analysis is equivalent to geometric analysis for the assessment of cutting tool condition, with perhaps added potential for systems implementing ICG. Being inherently post-process, however, surface integrity analysis shares the weakness of the other approaches: it either detects poor condition too late, resulting in scrapped parts, or detracts from process efficiency when measured in-process. This indicates that current form-based classification approaches are inadequate for monitoring time-dependent tool condition.

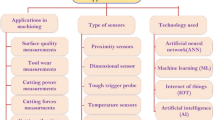

An alternative approach to the development of intelligent process monitoring (IPM) is to monitor the machine tool architecture itself [33]. Proposals of this kind have been made for most machine tool components. For the machine tool spindle, approaches primarily analyse the forces and/or vibrations occurring during the manufacturing process, although some also consider the power consumption of the spindle motor [34,35,36,37]. Cutting tool condition is then typically assessed using a threshold, time-series or neural-network-style approach [17]. Deciding on the best approach requires consideration of two issues: data suitability and data quantity. It is tempting to acquire all available data during an event since, inevitably, the data is no longer available post-process. However, all available data is not necessarily sensible data. A practical approach must therefore assess all available data and acquire only that which is necessary. With this in mind, developed approaches tend to restrict themselves to selected variables, reducing the complexity of the data. However, most conclude that additional data would be necessary for effective implementation. This indicates that a single variable is relatively ineffective at identifying deviations from nominal, yet can be effective when considered alongside others.

Within the many emerging themes of Internet-enabled connectivity, many organisations are tending towards acquiring all the information that is available [38,39,40,41]. These approaches attempt to work with the mass of information, offering ‘intelligent’ interpretations of the data. At present, however, they often require the data to be transferred off-site for processing, with results forwarded to the respective machine operators. In a data-sensitive world, this is both challenging and potentially risky for organisations to adopt. Additionally, as the data footprint of these ‘smart’ machines increases, the rate at which data can be sent, processed and returned is negatively affected. This implies that optimising data use requires on-site processing. Machine tool manufacturers often attempt to process the data at source, offering ‘smart’ functions intended to protect the machine and part from damage [42]. Currently, however, these functions are usually applied automatically to the real-time control of the machining operation to mitigate any detected changes, and thus extend the effectiveness of the machining process. They do not aim to provide evidence of, or supply data on, any change effected; rather, they support continued operation in a practical context and rely wholly on the machine operator to implement them effectively. Such methods in effect rely on the tacit knowledge of cutting process management that is built into modern, advanced ‘smart’ CNC systems.

Despite these supporting methodologies, tools still fail, indicating a need to devise alternative, on-site methods for monitoring the condition of cutting tools. In response, this work considers the possibility of diagnosing cutting tool condition using the information acquired from a ‘smart’ machine tool. It is the tacit knowledge relating to the management and control of specific cutting processes that makes such smart operations possible. This includes the response of the machine controller to changes in cutter condition, which will not normally be obvious to the user. Data acquisition is limited to the spindle motor load, with the aim of proving the efficacy of the system before introducing additional variables. The primary aim is to create a practical basis for exploiting the intelligence inherent in modern machine tools. Consideration is given to the further development of this approach to overcome some of the inherent limitations of acquiring ‘big data’ from relatively unknown sources. The intention is to consider practical strategies for observing cutting tool wear during an intelligent process, based on that process intelligence.

3 Tool monitoring system development

This work considered the application of existing machine tool architecture to the development of cutting tool monitoring strategies. It uses machine tool information that is typically either unavailable to the operator or used only for superficial infographics. To achieve this, a Mazak Vertical Centre Smart 430A VMC with a Mazatrol Matrix Nexus 2 CNC controller (NC) was studied. This represents a small-scale version of the typical shop-floor machine tool employed within numerous manufacturing organisations. In-built functions include active vibration control, volumetric error compensation and active feed rate control. The comparatively small table area (0.241 m²) and actual machine volume (AMV, 0.123 m³) of the VMC do not reduce the relevance of the control functions accessed and utilised. The VMC was calibrated before the empirical studies commenced.

The effective machine volume (EMV) is considerably less than the AMV because the internal machine volume accommodates build fixtures, an in-process TS27R ‘online tool setter’ (OTS) and the operator-adjusted table offset (100 mm). The OTS was included to provide an on-machine (online) estimate of the initial dimensions of the cutting tool. Measuring the tool online is more reliable than measuring it offline because the tool is seated within the machine and is therefore measured in situ, accounting for the actual workable volume of the cutting tool. Figure 2 shows an outline drawing of the Mazak indicating its major units; Table 1 lists these units and highlights the objects of interest.

Fig. 2 Mazak outline, adapted from user manual [43]

Communication with the machine controller was achieved through a direct port into the electrical control cabinet (ECC). This was installed by the original equipment manufacturer (OEM) to support this research, and it provides access to the machine tool information at the point between the machine and the NC operating panel, but after primary processing. Primary processing is defined here as the conversion of raw numerical data (RNData) into information that the OEM considered more meaningful. Access to the RNData is not provided, owing to concerns about the potential impact on the ordinary operation of the machine. The machine tool information after primary processing is referred to herein as ‘MTData’ and was acquired in semi-real time. The process outline is presented in Fig. 3.

The MTData refers to the closed-loop information available within the controller that enables the VMC to maintain the process settings required by the user. The information returned includes common machining parameters, such as the machine feed rate, spindle rotational speed and machine temperatures. All parameters are derived from internal, pre-installed sensors identified by the OEM as beneficial for the typical control, usage and/or maintenance of the machine. As no machine volume needs to be sacrificed for retrofit sensors, this is considered beneficial to the operation and subsequent assessment of the machine tool. Others have also highlighted forgoing additional sensors as a preferred approach [33].

The MTData was transferred from the controller to the user by establishing an Ethernet link between the machine tool ECC and a PC using a crossover cable. The data was transferred in packets from the controller to the local memory of a Hilscher CifX50E-RE interface board (HIB), each packet being processed in the order received (inline) and subsequently overwritten. A complete data transfer takes 0.1 s, although 0.0284 s is possible, because the requested packet interval (RPI) was set to 100 ms (10 Hz) to avoid any detriment to the controller’s normal functions. It is noted that the architecture has no control over the application; hence, future proponents of this technology could reasonably expect it to be integrated within the controller.

Data was acquired from the local memory of the HIB by implementing a modular program (written in C), designed to communicate with the board. The program monitored the VMC for activity and initiated data acquisition when appropriate. The process information considered for this study was the spindle motor load (SML). The respective information is presented in Table 2.
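How the C program detected machine activity is not described here. Purely as an illustration, and in Python rather than C for brevity, one simple way to divide a stream of SML samples into per-part windows (the start a and end b used in the energy calculation) is to open a window when the load exceeds an activity threshold and to close it after several consecutive quiet samples. The function name, the threshold and the hold-off count are hypothetical.

```python
def segment_activity(sml, threshold=5, hold=3):
    """Split a sequence of spindle-load samples (integer %) into active windows.

    A window opens at the first sample above `threshold` and closes once
    `hold` consecutive samples have been at or below it. Returns a list of
    (start, end) index pairs, with `end` exclusive.
    """
    windows = []
    start = None
    quiet = 0
    for i, value in enumerate(sml):
        if value > threshold:
            if start is None:
                start = i
            quiet = 0
        elif start is not None:
            quiet += 1
            if quiet >= hold:
                # Close the window at the first quiet sample of the run.
                windows.append((start, i - hold + 1))
                start = None
                quiet = 0
    if start is not None:
        # Stream ended while still cutting: close after the last active sample.
        windows.append((start, len(sml) - quiet))
    return windows


# Hypothetical 10 Hz stream containing two cutting passes.
stream = [0, 0, 12, 15, 14, 0, 0, 0, 0, 20, 22, 21, 0]
print(segment_activity(stream))  # prints [(2, 5), (9, 12)]
```

The hold-off count stops brief dips in load (for example, between tool-path segments) from splitting one part into several windows.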

3.1 Signal development

As only one register is allocated to the acquisition of SML, the data acquired is limited to the range 0–256 and is provided as integer percentages (quantised information). As percentages are more useful when considered in context, the raw data was converted into energy consumption using the manufacturer-supplied motor specification. A simple power law relationship was applied to identify the rated power (Pmax) and rated torque (Tmax) at any given rotational speed (ω) (Eqs. 1 and 2).
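Equations 1 and 2 and the Table 3 coefficients are not reproduced in this section, so the following is only a minimal sketch under stated assumptions: the power laws are assumed to take the form Pmax = A·ω^B and Tmax = C·ω^D, with one coefficient set per speed range and ω in rev/min, and the instantaneous spindle power is assumed to be the reported SML percentage multiplied by Pmax at the current speed. The breakpoint and coefficient values are hypothetical placeholders describing a generic 7.5 kW spindle (constant torque below 1500 rev/min, constant power above it); they are not the values in Table 3.

```python
from bisect import bisect_right

# Hypothetical speed-range breakpoint (rev/min): the low range ends here.
RANGE_BREAKS = [1500.0]

# Hypothetical (A, B, C, D) per range for Pmax = A * w**B (kW) and
# Tmax = C * w**D (N m). Low range: constant torque, so power rises with
# speed. High range: constant power, so torque falls as 1/w. Placeholders only.
COEFFS = [
    (0.005, 1.0, 47.75, 0.0),
    (7.5, 0.0, 71620.0, -1.0),
]


def rated_power_torque(w_rpm):
    """Return (Pmax in kW, Tmax in N m) at spindle speed w_rpm."""
    a, b, c, d = COEFFS[bisect_right(RANGE_BREAKS, w_rpm)]
    return a * w_rpm**b, c * w_rpm**d


def sml_to_power_kw(sml_percent, w_rpm):
    """Convert an integer spindle-load percentage into power (kW),
    assuming SML is expressed relative to the rated power at this speed."""
    p_max, _ = rated_power_torque(w_rpm)
    return sml_percent / 100.0 * p_max


print(sml_to_power_kw(40, 1000.0))  # low (constant-torque) range
print(sml_to_power_kw(40, 3000.0))  # high (constant-power) range
```

A piecewise lookup mirrors the idea of separate coefficients per speed range; the placeholder values were chosen so that torque × angular speed equals the rated power in both ranges.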

The power law relationships were derived from the spindle speed-power-torque (SPT) characteristic presented in Fig. 4. Coefficients A, B, C and D are identified in Table 3 for the different speed ranges. Obtaining the maximum power allows the process energy consumption (E) to be calculated (3).

where the calculated energy is in joules, the sampling frequency (FS) is the rate of data acquisition, and the limits a and b are the start and end of each machined part, respectively. This is simplified further by accounting for the discrete, quantised nature of the signal, which allows the integral to be approximated by the sum of the samples per part (4).
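Equations 3 and 4 are not reproduced in this section. Assuming Eq. 3 has the usual form E = ∫ P(t) dt taken from a to b, the discrete approximation for samples taken at frequency FS is E ≈ (1/FS) Σ P_i over the samples of one part, since each sample represents 1/FS seconds. The minimal sketch below applies this with power in watts, so the result is in joules; the half-open sample range [a, b) and the function name are illustrative choices.

```python
def part_energy(power_w, fs_hz, a, b):
    """Approximate the energy (J) of one part from power samples (W).

    Each sample at frequency fs_hz represents 1 / fs_hz seconds, so the
    integral of power over samples a..b-1 becomes their sum divided by fs_hz.
    """
    if fs_hz <= 0:
        raise ValueError("sampling frequency must be positive")
    return sum(power_w[a:b]) / fs_hz


# Hypothetical 10 Hz record: a steady 3 kW for 5 s (50 samples).
record = [3000.0] * 50
print(part_energy(record, fs_hz=10.0, a=0, b=len(record)))  # prints 15000.0
```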

Applying this procedure to the raw SML information results in the transition illustrated in Fig. 5, where the final stage follows outlier detection and removal using a Hampel filter. Figure 5 is intended to provide an overview of the approach; for clarity, axes are intentionally omitted and scales vary.
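The Hampel filter settings used in this work are not stated. A common formulation, sketched below under that caveat, compares each sample with the median of a sliding window and replaces it with that median when it lies more than n_sigma scaled median absolute deviations (MAD) away; the factor 1.4826 scales the MAD to estimate the standard deviation of Gaussian data. The window half-width and threshold are illustrative defaults, not the authors’ settings.

```python
import statistics


def hampel(values, half_window=3, n_sigma=3.0):
    """Return a copy of `values` with outliers replaced by the local median.

    For each sample, a window of up to `half_window` samples on either side
    is taken (truncated at the ends of the series). A sample is treated as
    an outlier if |x - median| > n_sigma * 1.4826 * MAD.
    """
    k = 1.4826  # scales MAD to a standard-deviation estimate (Gaussian data)
    out = list(values)
    n = len(values)
    for i in range(n):
        window = values[max(0, i - half_window): min(n, i + half_window + 1)]
        med = statistics.median(window)
        mad = statistics.median([abs(x - med) for x in window])
        if abs(values[i] - med) > n_sigma * k * mad:
            out[i] = med
    return out


# Hypothetical quantised SML trace with a single spike at index 4.
trace = [20, 21, 20, 21, 90, 20, 21, 20]
print(hampel(trace))  # prints [20, 21, 20, 21, 21, 20, 21, 20]
```

With strongly quantised data the MAD can be zero in flat regions, in which case any departure from the local median is flagged; a small floor on the MAD is one possible safeguard.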

Fig. 4 Spindle motor speed-power-torque characteristic, derived from user manual [43]

The significant signal fluctuations visible in Fig. 5b are primarily a result of the machining process incorporating multiple stages. However, it is acknowledged that some of the variation results directly from the methods used for data acquisition and processing (and the associated losses). The observed ‘staircase effect’ (Fig. 5c) results from the quantised nature of the MTData propagating through the processing stages.

Noise is minimal owing to the quantised nature of the signal; nevertheless, applying the Hampel filter ensures that any remaining noise is negligible.

3.2 Health diagnostics and remaining life

In 1906, Taylor introduced his tool wear equations, and the matching curves, to show the relationship between cutting speed and tool life [44]. These relationships also sought to split the condition of a cutting tool into three stages:

1. Rapid initial wear
2. Gradual wear
3. Rapid wear and failure

One could consider that these three stages accurately describe the step changes in the deterioration of the cutting process and, therefore, that Taylor’s wear curves are characteristic of true tool deterioration. These well-known and generally accepted stages could thus be adopted to establish a benchmark for the process and a basis for subsequent prognostics. The objective is to identify the key stages of cutting tool deterioration. Achieving this independently of the material and process conditions requires a departure from Taylor’s equation for cutting tool wear. A process benchmark can therefore be developed based on a relatively straightforward third-order (cubic) polynomial (5).

A cubic function simplifies the mathematics involved and can be derived relatively easily using linear regression. In Excel, the required regression formula is implemented through two Visual Basic for Applications (VBA) functions (Figs. 6 and 7). Using VBA enables simpler formulae for the calculations, allowing shorter array formulae and reducing the Excel file size by about 90% (in this case, from 7 MB to 786 KB).
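The VBA functions of Figs. 6 and 7 are not reproduced here. As an illustration of the same idea, and not a transcription of that code, the sketch below fits y ≈ A·x³ + B·x² + C·x + D by ordinary least squares, solving the 4 × 4 normal equations by Gaussian elimination with partial pivoting. The assumed form of the cubic and the function names are illustrative. Because raw part numbers up to 192 make the cubic normal equations badly conditioned, x is centred and scaled before fitting and the coefficients are then converted back to powers of x.

```python
def _solve(m):
    """Gaussian elimination with partial pivoting on an n x (n+1) augmented matrix."""
    n = len(m)
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    beta = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        acc = sum(m[i][j] * beta[j] for j in range(i + 1, n))
        beta[i] = (m[i][n] - acc) / m[i][i]
    return beta


def fit_cubic(xs, ys):
    """Least-squares fit of y = A*x**3 + B*x**2 + C*x + D; returns (A, B, C, D)."""
    if len(xs) != len(ys) or len(xs) < 4:
        raise ValueError("need at least four (x, y) pairs of equal length")
    lo, hi = min(xs), max(xs)
    mid, s = (lo + hi) / 2.0, (hi - lo) / 2.0
    if s == 0:
        raise ValueError("x values must not all be equal")
    # Fit in the scaled variable t = (x - mid) / s, which lies in [-1, 1].
    rows = [[t**3, t**2, t, 1.0] for t in ((x - mid) / s for x in xs)]
    # Augmented normal equations [T^T T | T^T y].
    m = [[sum(r[i] * r[j] for r in rows) for j in range(4)]
         + [sum(r[i] * y for r, y in zip(rows, ys))] for i in range(4)]
    a, b, c, d = _solve(m)
    # Expand a*t**3 + b*t**2 + c*t + d back into powers of x.
    A = a / s**3
    B = b / s**2 - 3.0 * a * mid / s**3
    C = c / s - 2.0 * b * mid / s**2 + 3.0 * a * mid**2 / s**3
    D = d - c * mid / s + b * mid**2 / s**2 - a * mid**3 / s**3
    return A, B, C, D


# Noise-free data from a known cubic over 192 parts should be recovered closely.
xs = [float(x) for x in range(1, 193)]
ys = [2e-5 * x**3 - 6e-3 * x**2 + 0.5 * x + 100.0 for x in xs]
A, B, C, D = fit_cubic(xs, ys)
print(A, B, C, D)
```

For production use, a library least-squares routine such as `numpy.polyfit` would normally be preferred; the hand-written version simply makes each step of the regression visible.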

The coefficients A, B, C and D are calculated from the filtered energy consumption data. Using the unfiltered data, whilst workable, results in the cubic tending to zero, making additional processing inevitable. A further departure from Taylor’s tool life theories was to consider four step changes in cutting tool condition, achieved by splitting the second stage in two. This decision was based on the complexity of modelling such a stochastic and variable process, and it allows greater flexibility over the shape of the model. An example of the derived cubic is presented in Fig. 8, identifying the four condition stages.

The four stages are defined as follows:

1. Extreme negative gradient (ENG): Healthy
2. Negative gradient (NG): Used
3. Positive gradient (PG): Failing
4. Extreme positive gradient (EPG): Failed

Following the derivation of the cubic function, a novel algorithm was established to model the process. The algorithm relies primarily on the acquired process-generated data and follows four steps:

1. The curve is translated towards zero by subtracting the magnitude of the curve’s value at the stationary point (SP), here the point at which the second derivative is zero (strictly, the point of inflection), from all values. Using |SP| as the reference prevents excessive variation in the x-axis intercept during active monitoring, because both the mean and the median vary considerably in-process. Moreover, the mean and median become weighted towards the latter stages of the process owing to the heavy skew towards positive-gradient (PG) data, whereas |SP| remains centrally located. The resulting polynomial f(x,k) is given by Eq. 6, where k is the value of x at which the second derivative equals zero (Eq. 7).

$$ f(x,k)=A(x^{3}-k^{3})+B(x^{2}-k^{2})+C(x-k) \tag{6} $$

$$ k=\frac{B}{3A} \tag{7} $$

2. The curve is split into two parts on either side of zero, and each part is normalised to lie between zero and ±1, following the rule −1 ≤ NG < 0 ≤ PG ≤ 1. The normalised curve, f(x,k)α, is given by Eq. 8.

$$ f(x,k)_{\alpha}= \left\{\begin{array}{ll} \frac{f(x,k)}{\min f(x,k)} & f(x,k)<0 \\ \frac{f(x,k)}{\max f(x,k)} & f(x,k)\geq 0 \end{array}\right. \tag{8} $$

3. The change in magnitude of the slope of the first derivative, g(x), is calculated per part from the general equation f(x) to give the probable fit (P.Fit) (Eq. 9), where the adjustment factor γ is calculated iteratively (Eq. 10) and shifts g(x) according to the tool life observed for the specific process.

$$ g(x)=\frac{3A(x_{n}^{2}-x_{n-1}^{2})-2B(x_{n}-x_{n-1})}{(x_{n-1}^{2}+x_{n-1}+C)+\gamma} \tag{9} $$

$$ \gamma=a\,e^{b(\mathrm{RTL}/100)}+c\,e^{d(\mathrm{RTL}/100)} \tag{10} $$

The remaining tool life (RTL) is found from the intersection of g(x) and f(x,k). The coefficients a, b, c and d are distinct from A, B, C and D.

4. The polynomial f(x,k) is subtracted from the P.Fit, g(x), following the logic of Eq. 11. The result (E.Fit) shows the changing tool condition when plotted alongside P.Fit.

$$ \text{E.Fit}=|g(x)\cdot(g(x)>0)|-|f(x,k)\cdot(f(x,k)>0)| \tag{11} $$

The changing condition of the cutting tool is separated into the previously defined stages:

- ENG: until E.Fit deviates from zero
- NG: until E.Fit equals f(x,k)α
- PG: until E.Fit returns to zero
- EPG: until the process ends
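Steps 1, 2 and 4 of the algorithm, together with the stage rules, can be sketched as follows. This is a minimal illustration, not the authors’ implementation, and it rests on several assumptions. The cubic benchmark is taken as f(x) = A·x³ + B·x² + C·x + D, whose second derivative 6Ax + 2B vanishes at x = −B/(3A) (Eq. 7 gives B/3A, which would correspond to writing the quadratic term as −B·x²). Negative values are divided by |min f(x,k)| so that they fall in [−1, 0), as the stated rule requires. The P.Fit series g(x) from step 3 is taken as an input, because it depends on the process-specific adjustment factor γ. The tolerance `tol` used to test “equals” in the stage rules is hypothetical.

```python
def cubic(coeffs, x):
    """Evaluate f(x) = A*x**3 + B*x**2 + C*x + D (Horner form)."""
    a, b, c, d = coeffs
    return ((a * x + b) * x + c) * x + d


def inflection_point(coeffs):
    """x at which f''(x) = 6*A*x + 2*B is zero, for the cubic form above."""
    a, b, _, _ = coeffs
    return -b / (3.0 * a)


def translate(coeffs, xs):
    """Step 1: f(x, k) = f(x) - f(k); for this cubic form this equals Eq. 6."""
    k = inflection_point(coeffs)
    f_k = cubic(coeffs, k)
    return [cubic(coeffs, x) - f_k for x in xs]


def normalise(fk):
    """Step 2: scale negative values into [-1, 0) and the rest into [0, 1]."""
    lo, hi = min(fk), max(fk)
    out = []
    for v in fk:
        if v < 0:
            out.append(v / abs(lo))  # lo < 0 whenever a negative value exists
        else:
            out.append(v / hi if hi > 0 else 0.0)
    return out


def e_fit(g, fk):
    """Step 4 (Eq. 11): positive part of g minus positive part of f(x, k)."""
    return [max(gi, 0.0) - max(fi, 0.0) for gi, fi in zip(g, fk)]


def classify_stages(efit, f_alpha, tol=1e-3):
    """Label each part ENG, NG, PG or EPG using the stage rules.

    The part at which a boundary condition is first met is assigned to the
    following stage. 'Equals' is tested within the hypothetical tolerance tol.
    """
    stages = ["ENG", "NG", "PG", "EPG"]
    current = 0
    labels = []
    for e, fa in zip(efit, f_alpha):
        if current == 0 and abs(e) > tol:  # E.Fit leaves zero
            current = 1
        elif current == 1 and abs(e - fa) <= tol:  # E.Fit meets f(x,k)_alpha
            current = 2
        elif current == 2 and abs(e) <= tol:  # E.Fit returns to zero
            current = 3
        labels.append(stages[current])
    return labels


# Example with a hypothetical cubic and hypothetical E.Fit / f_alpha series.
fk = translate((1.0, -3.0, 0.0, 5.0), [0.0, 1.0, 2.0, 3.0])
print(fk, normalise(fk))
print(classify_stages([0, 0, 0.2, 0.5, 0.8, 0.3, 0.0, 0.4],
                      [-1, -0.5, -0.2, 0.1, 0.8, 0.9, 1.0, 1.0]))
```

Treating the stage rules as a one-way state machine reflects the assumption that tool condition only progresses forwards through the four stages.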

It should be noted that, despite the data-driven nature of the algorithm, the approach can only be guaranteed under the specific conditions implemented herein. Further work is necessary to identify the system’s response to different conditions.

4 Application

A series of regular cylindrical artefacts and single-axis slots was machined into a section of bright mild steel (125 × 25 × 220 mm) (Fig. 9). Each cylindrical artefact was machined in four stages, each stage being a separate cylinder at increasing depth, and each stage is henceforth considered equivalent to an individual manufactured part. Single-axis slots were machined in two passes, and again each pass was treated as an individual part. This resulted in 48 equivalent parts per section of steel. The process continued until either four series (192 parts) were completed or the cutting tool broke. The cutting tool used was a 10 mm, four-flute, square end mill made from high-speed steel (HSS).

The manufacture of each part was divided into five stages as outlined in Fig. 10. The machine feed rates for each stage are presented in Table 4 as dimensionless values, each calculated relative to the helical cut.

Slotting is defined as using the full width of the cutting tool, whilst partial cutting uses only a fraction of it. For partial cutting, the fraction of the full cutting tool width (CutW) is approximated by the effective cutting tool diameter (\(\varnothing _{\text {CT}}\)) over the proportional feed (FR) (12).

where k is included to account for subsequent deviation due to operator adjustments.

The process was completed under fully flooded cutting conditions with an uncoated HSS tool. It is acknowledged that HSS has become less popular for production operations; however, it was chosen to encourage deterioration of the process within a shorter timescale than would be experienced with carbide or coated tools in practice. To obtain a reliable indication of the process change, the manufacture of 192 parts was repeated four times, once with each of four cutting tools. The process settings for these repetitions are outlined in Table 5.

5 Verification and comparative diagnostics

It is accepted that identifying variations in post-process geometry is of limited use in managing tool life. However, such variations can be used to verify the efficacy of MTData-based cutting tool monitoring strategies. Consequently, in this work, the in-process tool measurement system was deliberately not used to reset tool offsets. Geometric variation was therefore not controlled, allowing natural variation to propagate through to the finished part. Tool condition can then be obtained as a proportion of the observable change in product condition and used to verify the efficacy of the proposed approach. To achieve a meaningful result for tool condition, the change in product form was adapted to emphasise the systematic process deterioration (13) [45].

The product variation is calculated in two dimensions because the actual depth of cut per part cannot be derived post-process; without an appropriate measure of cutting depth, a volumetric measure of variation is unobtainable.

Figure 11 shows the variation in cross-sectional area (CSA) per part for each of the four cutting tools, using the fourth part of each artefact. Since the fourth part represented the last stage (i.e. the deepest cut) in each cylindrical artefact, its dimensions were taken to represent the tool wear at the end of the machining cycles applied to that artefact. The first three parts of each artefact were also measured but are omitted here for clarity. They are better considered separately, since they may reflect significant variation introduced by influences other than the cutting tool condition. Similar observations have been made by Wilkinson et al. [31] and Ahmed et al. [18, 23]. The final two parts of each artefact (parts 5 and 6, the single-axis slots) were not measured.

The recommended limit marked in Fig. 11 is taken from ISO 8688-2:1989 [46]. The standard recommends that HSS tools be withdrawn when the average flank wear exceeds 0.3 mm, or when the maximum local flank wear reaches 0.5 mm (e.g. a chipped tooth). The equivalent threshold in terms of the CSA per part was found to be 37.42 mm², calculated by considering a 0.6 mm reduction in diameter (twice the 0.3 mm average flank wear limit). The recommended limit was surpassed by tools 1 and 3 after 142 parts. Tools 2 and 4 operated for 178 parts. All tools were used well beyond their recommended threshold; however, tools 1 and 3 failed, whereas tools 2 and 4 remained intact.
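A short calculation can check the 37.42 mm² figure. The Python sketch below rests on one stated assumption: the measured feature is a circular section with a nominal diameter of 40 mm. This section does not give that diameter. It is simply a value that reproduces the quoted threshold when the diameter shrinks by 0.6 mm. The function name `csa_change` is hypothetical.

```python
import math


def csa_change(nominal_diameter_mm, diameter_reduction_mm):
    """Loss of circular cross-sectional area (mm^2) when the machined
    diameter shrinks by diameter_reduction_mm (hypothetical helper)."""
    d0 = nominal_diameter_mm
    d1 = d0 - diameter_reduction_mm
    return math.pi / 4.0 * (d0 ** 2 - d1 ** 2)


# ISO 8688-2 average flank wear limit for HSS end mills (mm)
flank_wear_limit_mm = 0.3
# The limit is applied on each side of the tool, so the effective diameter shrinks by twice this
reduction_mm = 2 * flank_wear_limit_mm
# 40 mm is an ASSUMED nominal diameter, chosen because it reproduces the quoted value
print(round(csa_change(40.0, reduction_mm), 2))  # prints 37.42
```

Other feature geometries would give other thresholds for the same 0.6 mm reduction. The sketch only shows that the quoted figure is consistent with a simple circular-section model.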

Having identified a visual indication of the tool condition from the product geometry, attention now turns to the information derived from the machine tool itself. Figure 12 shows the process energy consumption (PEC), as derived from the spindle motor load (SML); the plots are split across two panels for clarity. All four processes are visibly similar, with the greatest variation seen between tools 3 and 4 (Fig. 12b). This is to be expected, since tool 4 cut at 36 m/min whereas all preceding tools cut at 52 m/min, consistent with the well-established potential for longer tool life at lower cutting speeds. The similarity between the processes is also useful for showing whether processes conform, or for highlighting issues with individual parts. In batch production, this could allow machine operators to focus their attention on parts flagged as abnormal. However, this would require the operator to know the process and how its output is affected, or else additional information to identify where and how the process trends deviate from the norm.
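The following sketch shows one way parts might be flagged as abnormal. It compares a new tool's per-part PEC against the per-part mean of earlier reference runs. A part is flagged when its deviation exceeds k standard deviations of the reference scatter. The function name, the default k = 3 and the use of a single pooled standard deviation are all assumptions for illustration. This is not the method used in this work.

```python
from statistics import mean, stdev


def flag_abnormal_parts(reference_runs, new_run, k=3.0):
    """Return indices of parts in new_run whose PEC deviates from the
    per-part reference mean by more than k pooled standard deviations.

    reference_runs: list of PEC series (one per earlier tool), each at least
    as long as new_run and indexed by part number (hypothetical layout).
    """
    n = len(new_run)
    # Per-part mean of the reference runs
    ref_mean = [mean(run[i] for run in reference_runs) for i in range(n)]
    # Pooled scatter of the reference runs about their own per-part mean
    residuals = [run[i] - ref_mean[i] for run in reference_runs for i in range(n)]
    sigma = stdev(residuals)
    return [i for i in range(n) if abs(new_run[i] - ref_mean[i]) > k * sigma]
```

Tool 4 ran at a lower cutting speed than the other tools. A check of this kind would therefore only be meaningful against reference runs made with comparable process settings.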

The quantised nature of the MTData is evident for all cutting tools as pronounced ‘steps’ in the plotted data. These steps limit the precision of the system; however, they may also benefit the system by reducing signal noise. The proposed improvement in the resolution of the MTData is expected to reduce the observed ‘staircase effect’ significantly. Figure 12 also illustrates the sensitivity of the system to process/data interruptions, all of which result in a significant drop in the measured PEC. These are marked as areas 1 to 3 in Fig. 12 and correspond to the following events:

1. Operator influence: process stop for cutting fluid replacement
2. Network failure: interruption to the communication between controller and PC (data loss)
3. System shutdown: process stop/shutdown for an extended time period

In two of the observed interruptions (items 1 and 3), the process was stopped, adding unplanned machine downtime. The resulting energy signature is repeatable, suggesting that such occurrences could be monitored. However, as the information is derived from the spindle load, abrupt changes in spindle speed will produce a similar energy signature. Identifying the exact nature of these signatures in a practical application would therefore require additional information. The remaining interruption (item 2) represents a fault in the transfer of MTData, resulting in a gap in the tool history. In theory, this fault could be confused with a process or condition change, and hence may be difficult to identify in practice. However, the system recovered within a reasonable time, and the error code, incident time and incident duration were recorded. If this information remains available, the fault can be classified. It is important to note that the system accommodated all process interruptions and continued to operate normally once they were resolved.
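The next sketch is a rule-based way to separate the two kinds of interruption described above in a logged stream. A gap between timestamps longer than `max_gap` is labelled as data loss. A run of samples at or below `idle_load` is labelled as a process stop. The thresholds, units and function name are hypothetical. As the text notes, a rule based on load alone cannot tell a genuine stop from an abrupt change in spindle speed, so further signals would be needed in practice.

```python
def classify_interruptions(times, loads, max_gap=1.0, idle_load=2.0):
    """Label interruption events in a spindle-load stream (hypothetical rules).

    times: sample timestamps in seconds, increasing
    loads: spindle motor load samples (% of rated), same length as times
    Returns a list of (label, start_time, end_time) tuples.
    """
    events = []
    stop_start = None  # timestamp at which the current low-load run began
    for i, (t, load) in enumerate(zip(times, loads)):
        # A long gap between consecutive samples suggests lost data
        if i > 0 and t - times[i - 1] > max_gap:
            events.append(("data_loss", times[i - 1], t))
        # Track runs of near-zero load as candidate process stops
        if load <= idle_load and stop_start is None:
            stop_start = t
        elif load > idle_load and stop_start is not None:
            events.append(("process_stop", stop_start, t))
            stop_start = None
    # Close a stop that is still open at the end of the log
    if stop_start is not None:
        events.append(("process_stop", stop_start, times[-1]))
    return events
```

Each event records a start and end time. A short stop (item 1) and an extended shutdown (item 3) could then be separated by their duration.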

The recommended limit marked in Fig. 12 again corresponds to ISO 8688-2:1989 [46], consistent with the CSA threshold. In the absence of a direct calculation, the PEC threshold is derived from the CSA variation: the PEC of each tool is taken at the instant its CSA threshold is exceeded, and these values are averaged across the four tools. This gives an equivalent PEC threshold of 19.29 kJ. Because it is derived from post-process analysis, this PEC threshold is of limited relevance in practice and is included only as a guide. The recommended limit was surpassed by all tools in a similar manner to the variation in CSA per part. Having considered the data originating within the machine tool, a direct comparison of the two methods is now appropriate (Fig. 13).
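The averaging step used to obtain the equivalent PEC threshold can be sketched as follows. The sketch assumes, hypothetically, that the CSA and PEC series for each tool are indexed by the same part (or artefact) positions. The function names are illustrative only, and any tool labels or values used with it are made up. They do not reproduce the 19.29 kJ result.

```python
def first_exceedance(series, threshold):
    """Return the index of the first value strictly above threshold, or None."""
    for i, value in enumerate(series):
        if value > threshold:
            return i
    return None


def equivalent_pec_threshold(csa_by_tool, pec_by_tool, csa_threshold):
    """Average each tool's PEC at the first part where its CSA exceeds the threshold.

    Assumes csa_by_tool[tool][i] and pec_by_tool[tool][i] refer to the same part.
    Tools whose CSA never exceeds the threshold are skipped.
    """
    pec_at_limit = []
    for tool, csa in csa_by_tool.items():
        i = first_exceedance(csa, csa_threshold)
        if i is not None:
            pec_at_limit.append(pec_by_tool[tool][i])
    if not pec_at_limit:
        raise ValueError("no tool exceeded the CSA threshold")
    return sum(pec_at_limit) / len(pec_at_limit)
```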

Figure 13 shows a direct comparison between the CSA variation and the PEC variation for each of the observed cutting tools. The data in each set are standardised using Eq. 14, enabling direct comparison between sets on consistent axes.
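Eq. 14 is not reproduced in this section. A common form of standardisation is the z-score, which subtracts the mean of a series and divides by its standard deviation. The sketch below assumes that form and uses the population standard deviation. Both choices are assumptions and may differ from Eq. 14.

```python
from statistics import mean, pstdev


def standardise(values):
    """Z-score a series: subtract its mean, divide by its population std dev."""
    mu = mean(values)
    sigma = pstdev(values, mu)
    if sigma == 0:
        raise ValueError("cannot standardise a constant series")
    return [(v - mu) / sigma for v in values]
```

Standardising each tool's CSA and PEC series separately puts both on a dimensionless scale, so their trends can share one axis.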

In addition, the PEC variation is presented as an area plot showing the potential range per part, rather than single values, so that measurement uncertainty is incorporated in the analysis. The PEC measurement uncertainty, which accumulates because of the discrete nature of the MTData, shows that the progression in product CSA lies within the equivalent PEC range. However, the uncertainty margin is relatively large (1.26 units compared with a maximum measurement range of 5.20 units), so the probability that CSA measurements fall within the given PEC range is high, irrespective of any correlation. The potential PEC range could be reduced by acquiring more precise MTData, or MTData from additional sources.
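Quantisation can be turned into a per-part PEC range as sketched below. The sketch makes three hypothetical assumptions: the SML is reported as a percentage of a rated spindle power, it is sampled at a fixed interval, and it is rounded to the nearest `step_percent`. Each sample then lies within half a step of the true load. Summing the worst case over all samples gives bounds that widen with the number of samples, which is one way the uncertainty can accumulate. The bound is conservative, since rounding errors will partly cancel in practice.

```python
def pec_bounds(sml_percent, rated_power_w, dt_s, step_percent=1.0):
    """Lower, nominal and upper energy (J) for one part from quantised load samples.

    Assumes (hypothetically) that each reported sample is the true load,
    as % of rated_power_w, rounded to the nearest step_percent, so the
    true value lies within +/- step_percent / 2 of the reported value.
    Energy is approximated as the sum of power x sample interval.
    """
    half = step_percent / 2.0
    to_joules = rated_power_w / 100.0 * dt_s  # converts a %-load sample to energy over dt_s
    nominal = sum(sml_percent) * to_joules
    lower = sum(max(x - half, 0.0) for x in sml_percent) * to_joules  # load cannot be negative
    upper = sum(x + half for x in sml_percent) * to_joules
    return lower, nominal, upper
```

For example, four 1 s samples reported as 10 % of a 1 kW rating give a nominal 400 J with worst-case bounds of 380 J to 420 J.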

Nevertheless, the two trends are superficially similar, both rising towards higher magnitudes, which is attributed to a deterioration in the condition of the cutting tool. However, the time-dependent detail within the signals reveals clear differences between the two trends. One could argue in favour of the PEC variation, as its level of detail is distinctly greater than that of the CSA variation; however, the inherent limitations of this detail should be considered:

- Data quality is not yet assured to prevent false details carrying through
- Staircase effect from quantised MTData
- False positives from process changes/adjustments may not be completely filtered

It should also be recognised that the tool condition (including bluntness) may not be fully evident in the CSA. Changes to the dimensions of the component will reflect changes in important tool features, but some variations may not be identified. For example, should one tooth (flute) become blunted, this will not be apparent from the changes in component geometry, since the effect is mitigated by the action of the remaining teeth. It will, however, be reflected in changes to the PEC, especially if MTData of higher resolution are acquired.

To provide a more quantitative comparison, the similarity was assessed in terms of the stages of cutting tool wear and expressed as a delay (in manufactured parts) between the CSA and PEC approaches, with the CSA taken as the nominal variation. The individual stages are identified in Table 6, and the deviation between PEC and CSA is presented in Table 7.

As before, ISO 8688-2:1989 [46] indicates the recommended usage limit derived from the CSA. The delay (Table 7) is measured from the CSA to the equivalent PEC point; negative values indicate that the respective limit is flagged by the PEC earlier than by the CSA. Table 7 shows that using the PEC to estimate wear gives a negative delay for tools 1, 3 and 4 (mean delay). This equates to PEC estimates of tool life that are shorter than the CSA estimates by at least three artefacts. Conversely, for tool 2, the PEC estimates of tool life were longer than the CSA estimates by more than one artefact. Indeed, the PEC response for tool 2 indicates that the tool has substantially more life remaining than the CSA estimates suggest. It should be stressed that the mean delay is heavily skewed by the estimates for the ENG and NG. In practice, the PG and EPG would dictate the continued use of a cutting tool, and at these stages the PEC indicated, for all tools, substantially more remaining life than the CSA estimates.
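The delay convention above can be written as the difference between first-exceedance indices. The delay is negative when the PEC flags a stage earlier than the CSA. In the sketch below, the stage names and per-stage limits are hypothetical placeholders for the stages of Table 6. The delay is in whatever unit the series are indexed by, parts or artefacts.

```python
def first_exceedance(series, threshold):
    """Return the index of the first value strictly above threshold, or None."""
    for i, value in enumerate(series):
        if value > threshold:
            return i
    return None


def stage_delays(csa, pec, csa_limits, pec_limits):
    """Delay per wear stage as (PEC index - CSA index).

    Negative values mean the PEC flags the stage earlier than the CSA.
    Stages not reached by both series are omitted.
    """
    delays = {}
    for stage, csa_limit in csa_limits.items():
        i_csa = first_exceedance(csa, csa_limit)
        i_pec = first_exceedance(pec, pec_limits[stage])
        if i_csa is not None and i_pec is not None:
            delays[stage] = i_pec - i_csa
    return delays


def mean_delay(delays):
    """Mean of the per-stage delays."""
    return sum(delays.values()) / len(delays)
```

A large delay at an early stage can dominate the mean, echoing the skew noted above. This is one reason to report per-stage delays alongside the mean.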

Supposing the CSA estimates are accurate, one could infer that monitoring the process using the PEC variation would result in at least one sub-par artefact with tool 2, whilst tools 1, 3 and 4 would be stopped early. However, supposing the CSA estimates are not accurate, one could suggest that using the PEC to estimate wear makes better use of tool 2 (so that potential remaining life is not wasted), whilst tools 1, 3 and 4 are stopped before deteriorating substantially. Either proposition could be considered correct. This underlines a significant limitation of proving a method through comparison with another: the efficacy of the new method relies entirely on the efficacy of the old one.

Nevertheless, the performance of the PEC is considered to justify its use as a viable alternative to the CSA, provided the process change is attributed to the deterioration of the cutting tool and is similar in nature to that seen within this work. Moreover, the variations in PEC and CSA should not be expected to correspond perfectly. In neither case is the measurement uncertainty or the uncertainty in the fault diagnosis accounted for. It should also be noted that acquiring and processing the data required to estimate the PEC does not demand undue time or effort. In a practical application, for example in a batch manufacturing context, many more manufacturing cycles could be captured and used to further refine the approach. It is important to stress that changes in the PEC arise from the decisions made by the controller in response to embedded tacit intelligence, whereas changes in the CSA can only partly be attributed to these responses. It is fundamentally better to monitor the system for evidence of process change than to wait for its consequences.

The results indicate that, although the PEC performed as hoped, relying on the PEC alone requires further work. This supports the argument that additional signals are required to ensure that system diagnostics are robust to system and process changes, especially if prognostics-oriented approaches are pursued. Additional signals would also provide a firmer basis for conclusions.

6 Conclusions

This paper explored the potential for enhancing the tool management capability of manufacturing equipment, with the aim of improving the safe utilisation of cutting tools within an active process. An initial investigation identified the predominant uncertainty in data acquired from what is inherently a challenging environment. This highlighted the confusion that is often apparent over data value and origin, despite their influence on current monitoring approaches. It also confirmed the limitations that some current approaches face in diagnosing tool condition, particularly given industrial innovations that improve process conformance and consistency by suppressing the physical evidence of tool wear.

This paper presented a novel approach to the acquisition and processing of machine tool cutting data using available machine tool controller signals. The approach minimised the impact on machining operations and avoided the need to retrofit equipment or sensors. It did so by exploiting the changes in machining that result from the tacit intelligence deployed by the controller during such operations. It is argued that a sensible approach should not endeavour to acquire all available data, but should assess data on merit and retain those of most value. The approach presented in this paper acquired only the spindle motor load information. The results indicated that the approach is both a viable alternative and a synergistic addition to current approaches that mainly consider the form and features of the manufactured component.

It was noted that users of this approach, or similar approaches built upon these principles, may in future wish to see integration of all technologies within the controller and/or machine tool entity.

It is acknowledged that one signal is insufficient to reliably diagnose tool condition. Additionally, the presented approach should be compared with further measures of process condition. Future work will consider additional process variables to further support the observations made, and will compare the presented approach with the variation in product surface finish.

References

Esmaeel RI, Zakuan N, Jamal NM, Taherdoost H (2018) Understanding of business performance from the perspective of manufacturing strategies: fit manufacturing and overall equipment effectiveness. In: Eleventh international conference inter-eng: interdisciplinarity in engineering

Sahoo S, Yadav S (2017) Analyzing the effectiveness of lean manufacturing practices in Indian small and medium sized businesses. In: IEEE international conference on industrial engineering and engineering management

Gupta P, Vardhan S (2016) Optimizing OEE, productivity and production cost for improving sales volume in an automobile industry through TPM: a case study. Int J Prod Res 54(10):2976

Ganji ZA, Gildeh BS (2016) A modified multivariate process capability vector. Int J Adv Manuf Technol 83:1221

Laloix T, Vu HC, Voisin A, Romagne E, Iung B (2018) A priori indicator identification to support predictive maintenance: application to machine tool. In: Fourth European conference of the prognostics and health management society

Xu X (2017) Machine Tool 4.0 for the new era of manufacturing. Int J Adv Manuf Technol 92(5–8):1893

Sobie C, Freitas C, Nicolai M (2018) Simulation-driven machine learning: bearing fault classification. Mech Syst Signal Process 99(1):403

Liu C, Xu X, Peng Q, Zhou Z (2018) MTConnect-based cyber-physical machine tool: a case study. In: CIRP conference on manufacturing systems

Liu C, Xu X (2017) Cyber-physical machine tool - the era of machine tool 4.0. In: CIRP conference on manufacturing systems

Renishaw plc (2011) Productive process pattern: cutter parameter update AP301. Renishaw plc, Wotton-under-Edge

Amer W, Grosvenor R, Prickett P (2007) Machine tool condition monitoring using sweeping filter techniques. Proc Inst Mech Eng Part I: J Syst and Control Eng 221(1):103

Lee K, Lee L, Teo S (1992) On-line tool-wear monitoring using a PC. J Mater Process Technol 29(1–3):3

Wiklund H (1998) Bayesian and regression approaches to on-line prediction of residual tool life. Qual Reliab Eng Int 14(5):303

Liu C, Wang GF, Li ZM (2015) Incremental learning for online tool condition monitoring using Ellipsoid ARTMAP network model. Appl Soft Comput 35(1):186

Zhou Y, Xue W (2018) Review of tool condition monitoring methods in milling processes. Int J Adv Manuf Technol 96(5–8):2509

Aliustaoglu C, Ertunc HM, Ocak H (2009) Tool wear condition monitoring using a sensor fusion model based on fuzzy inference system. Mech Syst Signal Process 23(2):539

Jun CH, Suh SH (1999) Statistical tool breakage detection schemes based on vibration signals in NC milling. Int J Mach Tool Manuf 39(11):1733

Ahmed ZJ, Prickett PW, Grosvenor RI (2016) The difficulties of the assessment of tool life in CNC milling. In: The international conference for students on applied engineering

Čerče L, Pušavec F, Kopač J (2015) A new approach to spatial tool wear analysis and monitoring. Strojniški vestnik—J Mech Eng 61(9):489

Liu X, Zhang C, Fang J, Guo S (2010) A new method of tool wear measurement. In: The international conference on electrical and control engineering

Zhang C, Zhou L (2013) Modeling of tool wear for ball end milling cutter based on shape mapping. Int J Interact Des Manuf 7(3):171

Li H, He G, Qin X, Wang G, Lu C, Gui L (2014) Tool wear and hole quality investigation in dry helical milling of Ti-6Al-4V alloy. Int J Adv Manuf Technol 71(5–8):1511

Ahmed ZJ, Prickett PW, Grosvenor RI (2016) Assessing uneven milling cutting tool wear using component measurement. In: Thirtieth conference on condition monitoring and diagnostic engineering management (COMADEM)

Astakhov VP (2004) The assessment of cutting tool wear. Int J Mach Tool Manuf 44(6):637

Shaw MC (1984) Metal cutting principles. Oxford University Press, New York

López de Lacalle LN, Lamikiz A, Fernández de Larrinoa J, Azkona I (2011) Machining of hard materials. In: Davim JP (ed) Machining of hard materials, 1st edn. Springer, London, pp 33–86

Hosseini A, Kishawy HA (2014) Cutting tool materials and tool wear. In: Davim JP (ed) Machining of titanium alloys. Materials forming, machining and tribology. Springer, Berlin, pp 31–56

Kwon Y, Ertekin Y, Tseng TL (2004) Characterization of tool wear measurement with relation to the surface roughness in turning. Mach Sci Technol 8(1):39

Karim Z, Azuan SAS, Yasir AMS (2013) A study on tool wear and surface finish by applying positive and negative rake angle during machining. Aust J Basic Appl Sci 7(10):46

Danesh M, Khalili K (2015) Determination of tool wear in turning process using undecimated wavelet transform and textural features. In: Eighth international conference inter-eng: interdisciplinarity in engineering

Wilkinson P, Reuben RL, Jones JD, Barton JS, Hand DP, Carolan TA, Kidd SR (1997) Surface finish parameters as diagnostics of tool wear in face milling. Wear 205(1–2):47

Waydande P, Ambhore N, Chinchanikar S (2016) A review on tool wear monitoring system. J Mech Eng Autom 6(5):49

Prickett P, Johns C (1999) An overview of approaches to end milling tool monitoring. Int J Mach Tool Manuf 39(1):105

Fu L, Ling SF, Tseng CH (2007) On-line breakage monitoring of small drills with input impedance of driving motor. Mech Syst Signal Process 21(1):457

Tseng PC, Chou A (2002) The intelligent on-line monitoring of end milling. Int J Mach Tool Manuf 42(1):89

Axinte D, Gindy N (2004) Assessment of the effectiveness of a spindle power signal for tool condition monitoring in machining processes. Int J Prod Res 42(13):2679

Abbass JK, Al-Habaibeh A (2015) A comparative study of using spindle motor power and eddy current for the detection of tool conditions in milling processes. In: IEEE international conference on industrial informatics

Bosch Rexroth AG (2015) ODiN condition monitoring. https://www.boschrexroth.com/en/xc/service/service-by-market-segment/machinery-applications-and-engineering/preventive-and-predictive-services/start-preventive-and-predicitive-marginal-13

MTConnect Institute (2018) A free, open standard for the factory. http://www.mtconnect.org/

Siemens AG (2018) SIPLUS CMS - Stepping up your production. https://www.siemens.com/uk/en/home/products/automation/products-for-specific-requirements/siplus-cms.html

Yamazaki Mazak Corp (2014) What is Mazak’s role in industry 4.0. https://www.mazakeu.co.uk/industry4/

Yamazaki Mazak Corp (2014) New functions. https://www.mazakeu.co.uk/smooth-new-functions/

Yamazaki Mazak Corp (2015) Operating manual vertical center Smart 430A: UD32SG0010E0. Yamazaki Mazak Corp, Worcester

Taylor F (1906) On the art of cutting metals. ASME, New York

Hill JL, Prickett PW, Grosvenor RI, Hankins G (2018) CNC spindle signal investigation for the prediction of cutting tool health. In: Fourth European conference of the prognostics and health management society

International Organization for Standardization (1989) ISO 8688-2:1989(en). Tool life testing in milling - part 2: end milling. ISO, Geneva

Funding

The Engineering and Physical Sciences Research Council and Renishaw jointly fund this research under an iCASE award, reference no. 16000122.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Hill, J.L., Prickett, P.W., Grosvenor, R.I. et al. The practical exploitation of tacit machine tool intelligence. Int J Adv Manuf Technol 104, 1693–1707 (2019). https://doi.org/10.1007/s00170-019-03963-0


DOI: https://doi.org/10.1007/s00170-019-03963-0