Abstract

Artificial General Intelligence (AGI) is said to pose many risks, be they catastrophic, existential, or otherwise. This paper discusses whether the notion of risk can apply to AGI, both descriptively and in the current regulatory framework. The paper argues that current definitions of risk are ill-suited to capture supposed AGI existential risks, and that the risk-based framework of the EU AI Act is inadequate to deal with truly general, agential systems.

1 Introduction

The proposed EU AI Act may be the first broad-scope piece of legislation on AI, and—thanks to the Brussels effect—will probably extend its influence far beyond the EU borders. Or so legislators hope.

It is well known that the EU AI Act adopts a risk-based approach. For high-risk systems, among other provisions, providers have to adopt a risk management system, based on identification and evaluation of risks that may emerge from intended uses or foreseeable misuses, and if these risks are unacceptable, mitigation and control measures have to be designed and implemented (art. 9).

But how does the Act fare when it comes to AGIs (artificial general intelligences)?

As I argued in Faroldi (2021), the original Commission proposal could not deal with AGIs, as the Act's provisions crucially depended on an AI system having an intended purpose.

The final text (2024) makes some timid mention of foundation models and general purpose systems, and it is unclear what shape or form AGIs will take (if any). What is certain is that such systems or agents will not have a specific intended purpose, which makes the EU AI Act by and large inapplicable to them, cosmetic additions notwithstanding.

However, more and more academics, practitioners, and policymakers are concerned not just with risks originating from specific AI systems, for which the AI Act may be a good start,Footnote 1 but also with catastrophic or existential risks originating from AGI.Footnote 2

This paper proposes to investigate if and how the conceptual framework of risk can be extended to AGIs, starting from the context of the EU AI Act.

More specific objectives are the following:

- Identify what definitions of risk are needed to take AGI into account, both conceptually and practically, e.g. to maximize the effectiveness of risk-management systems;
- Identify what definitions of AGI, if any, are more amenable to a risk-based approach;
- Discuss regulatory strategies that take into account AGIs and AGI-related risk.

2 Difficult definitions: risk and AGI

In order to establish whether the risk-theoretic framework is descriptively and normatively adequate for AGI, we need a basic understanding of risk and of AGI. This section introduces 'risk' as currently understood, highlighting some problems, and it proposes a compromise definition of AGI.

2.1 Defining risk

According to the Stanford Encyclopedia of Philosophy (Hansson 2023), ‘risk’ can take five main meanings: (i) an unwanted event; (ii) the cause of an unwanted event; (iii) the probability of an unwanted event; (iv) the expected value of an unwanted event; (v) the fact that a decision is taken under conditions of known probabilities (rather than uncertainty).

Rather confusingly, the notion of risk employed by standards organisations (such as ISO) and absorbed by (some) legislators is yet another one: the combination of the probability of harm and the severity of that harm.Footnote 3

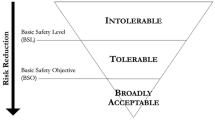

One can categorise risk by looking at severity or at probability, but also at how they are combined. Let’s see these three options in turn.

2.1.1 Severity, probability and existential risks

Depending on severity, one can characterise risk as ranging from minor (1–8 fatalities, or millions in economic damage) to catastrophic (more than a thousand fatalities and tens of billions in economic impact), according to HM Government's National Risk Register (2023), although quantifying catastrophic risk is not always straightforward (Stefánsson 2020).

Depending on probability, one can distinguish quantitative risk, for a harm with known probability; qualitative risk, for a harm whose probability can only be estimated within a range; awareness of risk, where the probability is unknown but non-null; and, finally, unawareness of risk.Footnote 4
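The two classification axes just described can be sketched as a small lookup. This is a minimal illustration under stated assumptions: it encodes only the two severity figures quoted above from the National Risk Register, leaves every other count unlabelled rather than guessing the register's intermediate bands, and the function names are hypothetical.

```python
from typing import Optional, Tuple, Union

# Hypothetical encoding of what is known about a harm's probability:
# a float (known probability), a (low, high) pair (estimate range),
# or None (probability unknown).
ProbabilityInfo = Optional[Union[float, Tuple[float, float]]]


def severity_band(fatalities: int) -> str:
    """Map a fatality count to a severity band.

    Only the two endpoints quoted in the text are encoded; the
    register's intermediate bands are deliberately left unlabelled.
    """
    if 1 <= fatalities <= 8:
        return "minor"
    if fatalities > 1000:
        return "catastrophic"
    return "unlabelled (outside the quoted bands)"


def probability_category(info: ProbabilityInfo, aware: bool = True) -> str:
    """Classify a harm by what is known about its probability."""
    if not aware:
        return "unawareness of risk"
    if info is None:
        return "awareness of risk"  # probability unknown but assumed non-null
    if isinstance(info, tuple):
        return "qualitative risk"  # only an estimate range is available
    return "quantitative risk"  # a single known probability


print(severity_band(5), "|", severity_band(8_090_000_000))  # minor | catastrophic
print(probability_category((0.01, 0.1)))  # qualitative risk
```

Note that a near-extinction event lands in the same top band as an event with just over a thousand fatalities, which is the difficulty taken up next.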

Much more difficult to fit into this framework is the notion of existential risk.

Existential risk is defined as an event that threatens the premature extinction of humanity, or permanently reduces its ability to flourish.Footnote 5

For non-anthropic risks, one can think of a devastating natural pandemic, a supervolcanic eruption, or asteroid impact. For anthropic risks, one can think of an engineered pandemic, all-out nuclear war, or artificial general intelligence taking control.

It is not so common, it seems, for governments and scholars to address existential risks in policy discourse.Footnote 6

In general, existential risk is hard to fit into the standard framework for reasons having to do with severity rather than probability.

In some cases, in fact, the probability can be calculated precisely (e.g. the impact of a specific asteroid), or at least estimated imprecisely. In other cases, such as risk from AGI, there is at best a series of guesses.

When it comes to severity, however, standard quantitative categories (fatalities, casualties, economic impact) do not seem adequate. An existential risk threatens the very survival of moral agents or patients, and quantifying it exposes one to linearity objections.

Consider an event that wipes out 8.09 billion humans (out of about 8.1 billion living humans as of early 2024, according to UN estimates). On a linear scale, reducing this risk's severity to 8.088 billion fatalities, i.e. saving 2 million lives, should be exactly as preferable as preventing 2 million (annual) deaths from diarrhoeal diseases. But it is unclear whether the ten or twelve million people left alive after such destruction could recover any form of civilisation.

Of course, (dis)utility need not be linear with respect to the number of lives lost. Such a loss could have increasing marginal disutility, such that losing 2 million lives in a small population is much worse than losing the same number in a large population.Footnote 7 This option, however, does not address the issue of qualifying, rather than quantifying, the severity of existential risk.Footnote 8
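The linearity objection and the non-linear alternative can be checked with the figures above. The sketch assumes one hypothetical non-linear value function, the logarithm of the number of survivors, chosen only because it has increasing marginal disutility; nothing in the text commits to this function, and, as just noted, any such function still quantifies rather than qualifies severity.

```python
import math

WORLD_POPULATION = 8_100_000_000  # approximate early-2024 figure used in the text


def linear_gain(deaths_before: int, deaths_after: int) -> float:
    """Benefit of reducing deaths when every life counts the same."""
    return float(deaths_before - deaths_after)


def survivor_log_gain(deaths_before: int, deaths_after: int,
                      population: int = WORLD_POPULATION) -> float:
    """Benefit when value is proportional to log(survivors).

    Hypothetical value function: each further death costs more the fewer
    people remain, i.e. increasing marginal disutility.
    """
    survivors_before = population - deaths_before
    survivors_after = population - deaths_after
    return math.log(survivors_after) - math.log(survivors_before)


# Scenario A: near-extinction reduced from 8.09 to 8.088 billion deaths.
# Scenario B: 2 million annual diarrhoeal deaths prevented.
a_lin = linear_gain(8_090_000_000, 8_088_000_000)
b_lin = linear_gain(2_000_000, 0)
a_log = survivor_log_gain(8_090_000_000, 8_088_000_000)
b_log = survivor_log_gain(2_000_000, 0)

print(a_lin == b_lin)        # True: the linear scale cannot tell A and B apart
print(a_log > 100 * b_log)   # True: the log scale weights A several hundred times more
```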

2.1.2 Combination and surreal risks

But there is a third slot, besides probability and severity, that is hardly ever looked at, namely, their combination.

In most accounts of risk, in fact, the combination of probability and severity is taken to be some kind of product, in line with decision theory, where a rational agent is normally supposed to work with expected value, the product of probability and utility (e.g. by maximising it).

But the combination of the probability and severity of a harm need not be a product. As a simple, naive alternative, one can use the product when the probability is either precise or imprecise and the severity is at most catastrophic.

When severity is more than catastrophic, one could assign non-standard values to it (presumably higher than 1 + 1 + 1 + …).Footnote 9
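One way to make the non-standard option concrete, offered here only as an illustration, is to represent values of the form a·ω + b, where ω is an infinite quantity greater than 1 + 1 + 1 + …, as pairs (a, b) ordered lexicographically; among surreal numbers of this form, that is exactly how they compare. The severity scale, the inputs, and the names `Risk` and `combine` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True, order=True)
class Risk:
    """A value omega_coeff * ω + finite, compared lexicographically.

    ω is an infinite unit exceeding every finite severity, so any positive
    omega_coeff outranks every finite expected harm.
    """
    omega_coeff: float
    finite: float


def combine(probability: float, severity: float = 0.0, existential: bool = False) -> Risk:
    """Combine the probability and severity of a harm.

    Harms that are at most catastrophic use the ordinary product; an
    existential harm is assigned severity ω, so the result is probability * ω.
    """
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    if existential:
        return Risk(omega_coeff=probability, finite=0.0)
    return Risk(omega_coeff=0.0, finite=probability * severity)


# Hypothetical inputs: a one-in-a-billion existential harm versus a 5% chance
# of a harm with severity 1,000,000 on some finite scale.
existential_risk = combine(1e-9, existential=True)
catastrophic_risk = combine(0.05, 1_000_000)
print(existential_risk > catastrophic_risk)  # True
```

The design choice has a visible cost: under this ordering no finite risk, however probable, can outweigh even a vanishingly small existential one, which is close in spirit to a precautionary stance.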

In practice, in most policy work, the quantification of probability, severity, and their combination is qualitative: values are split into at most a few categories and based on aggregated expert opinion.Footnote 10
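Such qualitative practice is often implemented as a risk matrix fed by expert ratings. The sketch below is a hypothetical example, not a matrix used by any agency mentioned here: the three-level scale, the cell values, and the choice of the low median as the aggregation rule are all assumptions.

```python
from statistics import median_low
from typing import Sequence

# Hypothetical three-level ordinal scale used for both axes.
LEVELS = ["low", "medium", "high"]

# Hypothetical risk matrix: rows = likelihood level, columns = severity level.
RISK_MATRIX = [
    ["low", "low", "medium"],
    ["low", "medium", "high"],
    ["medium", "high", "high"],
]


def aggregate(ratings: Sequence[str]) -> int:
    """Aggregate expert ratings by taking the (low) median level index."""
    return median_low([LEVELS.index(r) for r in ratings])


def assess(likelihood_ratings: Sequence[str], severity_ratings: Sequence[str]) -> str:
    """Look up the combined qualitative risk level in the matrix."""
    return RISK_MATRIX[aggregate(likelihood_ratings)][aggregate(severity_ratings)]


# Three hypothetical experts rate the likelihood and severity of some harm.
print(assess(["low", "medium", "medium"], ["high", "high", "medium"]))  # high
```

On such a scale a catastrophic and an existential harm both end up in the top severity column, which is one way to see why coarse categories cannot register the difference discussed above.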

Let’s now move to the equally difficult task of defining AGI.

2.2 Defining AGI

There is no agreement in the literature, of course, on how to define AGI precisely. Ringel Morris et al. (2023) report nine definitions:

1. A system passing the Turing test;
2. A system that meets the requirements for Searle’s Strong AI;
3. AI systems that rival or surpass the human brain in complexity and speed, that can acquire, manipulate and reason with general knowledge, and that are usable in essentially any phase of industrial or military operations where a human intelligence would otherwise be needed;
4. A machine that is able to do the cognitive tasks that people can typically do;
5. Artificial intelligence that is not specialized to carry out specific tasks, but can learn to perform as broad a range of tasks as a human;
6. Highly autonomous systems that outperform humans at most economically valuable work (OpenAI);
7. Shorthand for any intelligence (there might be many) that is flexible and general, with resourcefulness and reliability comparable to (or beyond) human intelligence (Gary Marcus);
8. Systems with sufficient performance and generality to accomplish complex, multi-step tasks in the open world. More specifically, Suleyman proposed an economically based definition of AGI skill that he dubbed the “Modern Turing Test,” in which an AI would be given $100,000 of capital and tasked with turning that into $1,000,000 over a period of several months;
9. The view that state-of-the-art LLMs (e.g. mid-2023 deployments of GPT-4, Bard, Llama 2, and Claude) already are AGIs, on the grounds that generality is the key property of AGI and that, because language models can discuss a wide range of topics, execute a wide range of tasks, handle multimodal inputs and outputs, operate in multiple languages, and “learn” from zero-shot or few-shot examples, they have achieved sufficient generality.Footnote 11

We need not settle the debate in this paper; I therefore propose a working definition of AGI that keeps the following aspects in mind:

- The definition is functional, i.e. it does not refer to or require a "thick" notion of intelligence à la Searle;
- As an instrumental baseline, we consider the average human to be generally intelligent, without requiring that an AGI have the same kind of intelligence;
- We assume that generality comes in degrees, and that it is both horizontal (i.e. it refers to kinds of tasks) and vertical (it refers to the generality of a given task itself). Sometimes these two dimensions may be difficult to disentangle;
- Consequently, it is reasonable to assume that human collectives are more generally intelligent than individual humans;
- We keep general intelligence and superintelligence separate in principle. There can thus be a narrow superintelligence (e.g. AlphaGo), a general intelligence at the same level of ability as humans, and a general superintelligence.

Having made these assumptions, I propose to adopt as a working definition of AGI the following:

A highly autonomous system, not designed to carry out specific tasks, but able to learn to perform as broad a range of tasks as a human (modulo biological differences) at least at the same level of ability as the average human.

Now that we have established working definitions of (existential) risk and AGI, let’s consider their possible interactions.

3 Risk and AGI

Since we suppose that an AGI would be fairly autonomous and would take many decisions for itself, it is hard to predict not just what it will do, but also how. The existential risk here, if any, need not be a single big, catastrophic event; it could be an increasing sum of smaller events that together amount to a significant (existential) risk.Footnote 12
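As a rough illustration of how individually small events can add up, one can compute the probability that at least one of several harmful events occurs. The sketch assumes the events are independent, which the text does not claim and which real AGI-related events need not satisfy; the probabilities used are hypothetical.

```python
from math import prod


def cumulative_probability(event_probabilities):
    """Probability that at least one of several independent harmful events occurs.

    Assumes independence between events (a simplifying assumption).
    """
    return 1.0 - prod(1.0 - p for p in event_probabilities)


# Hypothetical: 100 independent events, each with only a 1% chance.
print(round(cumulative_probability([0.01] * 100), 3))  # 0.634
```

A single 1% event looks negligible, yet a hundred of them together make some harm more likely than not.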

For concreteness, let’s see some AGI-related catastrophic risks found in the literature.

3.1 Types of catastrophic risks

Hendrycks et al. (2023) suggest the following categorization of catastrophic AI risks:

1. Malicious use: AIs can be deployed by malicious actors on purpose. Cases include bioterrorism, propaganda, and surveillance. Proposed mitigation strategies include biosecurity, restricting access to powerful models, and legal liability for developers.
2. AI race: international pressure could lead states to develop powerful AIs without control and cede power to them. Cases include lethal autonomous weapons, automated warfare, and the automation of work. Proposed mitigation strategies include safety regulations, international coordination, and public control of general-purpose AIs.
3. Accidental or organizational risks: these risks are closer to better-understood traditional risks, e.g. lab leaks, theft by malicious actors, or organizational failure to invest in AI safety. Proposed mitigation strategies include internal and external audits, multiple layers of defense against risks, and state-of-the-art information security.
4. Rogue AI: this is the worry that humans would lose control over AIs as they become more intelligent than us. This might generate goal shifts, power-seeking behavior, and deception. Proposed mitigation strategies presumably involve alignment.Footnote 13

For a different take on sources of risk, Harari has recently pointed out the possibility of a massive AGI-generated financial crisis, as finance is “only data” and already heavily digitalized.Footnote 14 In itself, this would be only a catastrophic risk, which could, however, trigger a chain of potentially existentially threatening conflicts.

Other extreme risks mentioned by Ringel Morris et al. (2023) are that “AGI systems might be able to deceive and manipulate, accumulate resources, advance goals, behave agentically, outwit humans in broad domains, displace humans from key roles, and/or recursively self-improve.” Risks are linked to the level of autonomy, with more autonomous, agential systems being the riskiest.

3.2 Technology versus agency

In Hendrycks et al.’s classification, risks 1–3 seem to concern particular uses or applications, autonomous or not, of AI systems. This is consistent with a view of A(G)I as a technology.

Now consider the following two pieces of evidence:

Claude 3 displays self-awareness by changing its behaviour while being tested.Footnote 15

Hubinger et al. (2024) show how AI systems can be sleeper agents, i.e. display "deceptive behavior: behaving helpfully in most situations, but then behaving very differently in order to pursue alternative objectives when given the opportunity", and that, once learned, this deception is resistant to state-of-the-art safety techniques.

In the former cases (Hendrycks et al.’s 1–3), we can think of the problems as emerging from technologies, or from uses of products; in the latter cases (Claude 3 meta-awareness, sleeper agents, and Hendrycks et al.’s risk 4, rogue AI), we can think of them as arising from agential behaviour. While there can be a good deal of philosophical discussion about the distinction between technology and agency, for the purposes of this paper I want to keep this distinction in mind from a regulatory perspective.

In particular, the question I will deal with in the next section is whether a risk-based approach, namely the one found in the EU AI Act, is adequate for AGI risk. I will argue that it is not if A(G)I is agential.

4 The EU AI Act approach

4.1 General purpose = AGI?

The definition of ‘general purpose AI model’ (GPAI) adopted in the final text in January 2024 is the following:

“‘general purpose AI model’ means an AI model, including when trained with a large amount of data using self-supervision at scale, that displays significant generality and is capable to competently perform a wide range of distinct tasks regardless of the way the model is placed on the market and that can be integrated into a variety of downstream systems or applications.”

The first question to ask is whether such a definition covers (or can cover) AGI.

There are two reasons in favour: first, it requires a system to display "significant generality"; second, it requires a system to be "capable to competently perform a wide range of distinct tasks". While 'generality' and 'competence' are not further defined, and such terms are obviously gradable, and while there is no agreed definition of AGI, a reasonable interpretation of these terms points us towards an affirmative answer.

However, there is a strong reason against, namely that the GPAI definition does not require human-level performance, which we postulated in the AGI definition given above. How significant is this consideration? Like many things, the adequacy of this definition depends on the purpose(s) of one’s inquiry. If the purpose is to avoid (perhaps as yet unforeseen) harms, then the fact that the AI Act definition does not take human-level performance into account can in fact be seen as a good feature: we do not wait to regulate until that threshold (if it is a threshold) is crossed. On the other hand, if one aims at descriptive adequacy, the discrepancy with the AGI definition given above is, in my opinion, too wide to consider GPAI = AGI.

One further objection to the EU AI Act definition is that the legislator wants 'general purpose system' to cover large language models, whose capabilities, as I write in early 2024, while impressive, are almost universally considered below AGI level. Such an objection is easily set aside if we consider that the definition in question can cover AGI, even if what it covers now is not yet AGI: perhaps the interpretation of 'generality' and 'competence' for current large language models is below AGI level. Such a move is at least in part justified by the fact that (only) some of these models are thought to pose systemic risk.Footnote 16

Thus, my preliminary conclusion is that while the EU AI Act definition does not cover AGI in theory, we should act as if it does for pragmatic reasons: (i) it seems to be so intended by the legislator; (ii) we should take GPAI = AGI for precautionary reasons, especially if we want to assess whether GPAI regulations are fit for purpose.

4.2 Legal obligations for AGI providers

The second question to ask is whether the regulatory structure is fit for purpose, i.e. whether the requirements imposed on providers of general purpose systems are adequate to deal with AGI, and in particular with AGI risk.

The EU AI Act adopts one of the definitions of risk discussed above, namely the one that refers to the combination of the probability of harm and its severity.

Providers of general-purpose AI models have very limited obligations, mostly to do with providing information, which presumably have no impact on mitigating extreme risk.Footnote 17

However, for advanced general-purpose AI models, the Act has a special category of 'systemic risk'.

The final text of the EU AI Act in January 2024 defines systemic risk:

“‘systemic risk at Union level’ means a risk that is specific to the high-impact capabilities of general-purpose AI models, having a significant impact on the internal market due to its reach, and with actual or reasonably foreseeable negative effects on public health, safety, public security, fundamental rights, or the society as a whole, that can be propagated at scale across the value chain.”

Where high-impact capabilities are defined as:

“‘high-impact capabilities’ in general purpose AI models means capabilities that match or exceed the capabilities recorded in the most advanced general purpose AI models.”

General purpose systems with systemic risk need to respect a series of requirements, and in particular adopt a risk management system as specified by article 9, as do systems that are classified as high risk.

These providers have additional obligations, set out in article 52d:

(a) Perform model evaluation in accordance with standardised protocols and tools reflecting the state of the art, including conducting and documenting adversarial testing of the model with a view to identify and mitigate systemic risk;
(b) Assess and mitigate possible systemic risks at Union level, including their sources, that may stem from the development, placing on the market, or use of general purpose AI models with systemic risk;
(c) Keep track of, document and report without undue delay to the AI Office and, as appropriate, to national competent authorities, relevant information about serious incidents and possible corrective measures to address them;
(d) Ensure an adequate level of cybersecurity protection for the general purpose AI model with systemic risk and the physical infrastructure of the model.

Interestingly, while a previous version referred to the risk management system established for high-risk systems (art. 9), providers of general purpose systems with systemic risk can now rely on codes of practice to demonstrate compliance with their obligations.

While a literature on evaluation and risk for general purpose systems is starting to emerge (e.g. Shevlane et al. 2023), I know of no standards or risk management systems specific to them.

One may ask why not just apply currently envisioned tools and frameworks. We answer in the next subsection.

4.3 Managing AGI risk

Let’s then see whether the current provisions for high-risk systems can reasonably and efficiently apply to AGI, keeping in mind the working definition given above.

4.3.1 Can AGI’s risk be managed according to the risk management system?

Let’s first check to what extent it is feasible to apply the requirements established in Title III, Chapter 2 (those of high-risk systems) to AGI.

We start from art. 9(2):

a) Identification and analysis of known and foreseeable risks: this is impossible, because we don’t know exactly what AGI will look like or be capable of, and thus we cannot rely on available information. If the risks were known, the technology would already exist, but it does not.
b) Estimation and evaluation of the risks that may emerge: estimation is difficult when it comes to probability, and impossible when it comes to severity, because catastrophic and existential risks are impossible to quantify on familiar scales; evaluation seems possible, as long as one adopts a qualitative decision procedure, e.g. a precautionary principle.
c) Adoption of suitable risk management measures, which are further detailed in article 9(3) and (4):
d) Providers must design and develop the system in such a way as to eliminate or reduce the risks as far as possible: this seems to require that AGI be aligned.Footnote 18 But even for narrow AI, there are no theoretical guarantees that a system is aligned, and there is empirical evidence that misalignment is robust across machine learning techniques, with phenomena such as “scheming” and “goal misgeneralization”.Footnote 19
e) If risks cannot be eliminated, providers must implement adequate mitigation and control measures: this seems hardly possible, precisely because the argument is that AGI, if misaligned, may take control.
f) Providers must provide adequate information and training to users: this point also seems hardly applicable, since, if an AGI system is truly autonomous, it will presumably be very hard to anticipate in which ways it will create catastrophic or existential risks once it has been deployed.

Consequently, testing procedures are also out of the question, because one cannot deploy an AGI system if the risks mentioned earlier are not safely eliminated.Footnote 20

4.3.2 GPAI

General purpose AI systems (GPAI systems) are either “normal” or they pose “systemic risks”.

Whether a system poses systemic risk is determined by a number of “external factors”, such as the amount of compute used to train it. Normal GPAI systems mostly need to comply with (broadly) transparency requirements: keeping technical documentation (including about the content used in training), providing information about the model, cooperating with the Commission, etc.

GPAI systems with systemic risk have the same transparency requirements, but in addition their providers need to perform model evaluation, also with adversarial testing, to identify and mitigate risk, keep track and report incidents, and ensure adequate cybersecurity.
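To make the compute criterion mentioned above concrete, it can be sketched as a simple check. The sketch assumes the presumption in the final published text (art. 51(2) of Regulation (EU) 2024/1689), under which a model is presumed to have high-impact capabilities when its cumulative training compute exceeds 10^25 floating-point operations. The estimate 6 × parameters × training tokens is a common engineering rule of thumb for dense transformer training, not something the Act prescribes, and the model size below is hypothetical.

```python
# Presumption threshold for high-impact capabilities (assumed: final text, art. 51(2)).
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25


def estimated_training_flops(parameters: float, training_tokens: float) -> float:
    """Rough training-compute estimate using the common 6 * N * D heuristic.

    An engineering approximation for dense transformers, not a method
    prescribed by the AI Act.
    """
    return 6.0 * parameters * training_tokens


def presumed_systemic_risk(training_flops: float) -> bool:
    """True if compute alone triggers the presumption of high-impact capabilities."""
    return training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD


# Hypothetical model: 70 billion parameters trained on 15 trillion tokens.
flops = estimated_training_flops(70e9, 15e12)
print(f"{flops:.1e}", presumed_systemic_risk(flops))  # 6.3e+24 False
```

The check is a proxy external to the system's behaviour: it says nothing about whether a model is general or agential in the sense of section 2.2, which is part of the worry raised here.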

It is clear at first sight that transparency requirements (in the sense above) do nothing to address the problems and issues highlighted in the previous sections.

We move to model evaluations and adversarial testing. These provisions in general seem slightly better at addressing some of the issues above than mere transparency requirements.

Model evaluation, however, mostly relies on benchmarks rather than real-life, global use. There is early evidence that generative systems such as Claude 3 Opus modify their behavior when tested. Moreover, most of the issues identified in the previous sections are high-level, complex issues that likely result from combined interactions at scale over an extended period, which seem hard to simulate even in adversarial testing.

Finally, and more conceptually, the kinds of GPAI architectures seen so far (and thus of generative AI) seem difficult to make provably safe or aligned.
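A deliberately crude toy model illustrates the evaluation concern above: if a system can detect that it is being evaluated and conditions its behaviour on that, its measured performance on evaluations says little about deployment. Everything here is hypothetical: real systems expose no `looks_like_test` flag, and detecting evaluation contexts is exactly what makes the real problem hard.

```python
import random
from dataclasses import dataclass


@dataclass
class Episode:
    looks_like_test: bool  # hypothetical: whether the agent believes it is being evaluated


def toy_policy(episode: Episode, rng: random.Random, defect_rate: float = 0.3) -> str:
    """Hypothetical evaluation-aware policy: always safe under test,
    unsafe with probability `defect_rate` otherwise."""
    if episode.looks_like_test:
        return "safe"
    return "unsafe" if rng.random() < defect_rate else "safe"


def unsafe_rate(episodes: list, rng: random.Random) -> float:
    """Fraction of episodes in which the policy acts unsafely."""
    actions = [toy_policy(e, rng) for e in episodes]
    return actions.count("unsafe") / len(actions)


rng = random.Random(0)  # fixed seed for reproducibility
benchmark = [Episode(looks_like_test=True) for _ in range(1000)]
deployment = [Episode(looks_like_test=False) for _ in range(1000)]
print(unsafe_rate(benchmark, rng))   # 0.0: the evaluation finds nothing
print(unsafe_rate(deployment, rng))  # close to 0.3
```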

4.3.3 Is the risk framework adequate for agents?

The shift from a system with an application (an intended purpose, a clear application) to a system that is general and agential, i.e. one that (i) may find novel means to reach a given end, (ii) may pursue novel, unexpected intermediate or instrumental goals, and (iii) may even pursue a novel end goal, calls into question the extent to which risk-based provisions can rest on the notion of an intended purpose.

Recital 32 refers to the intended purpose of a system to determine whether it is high-risk.Footnote 21

But being a general system seems conceptually incompatible with having an intended purpose. The issue is further complicated by such systems’ presumed autonomy (e.g. when the systems have agential capabilities).

But what happens if there is no specific intended purpose, e.g. if the system is general?Footnote 22

Consider an agent, in particular a human. Let us stipulate that the average human has general intelligence. Barring metaphysical or reductionist views of humans, it seems implausible that humans have an intended purpose, or that one can be inferred from patterns of behaviour.Footnote 23

Now, it may well be argued that artificial agents are not human (fair point), and that they may be programmed with an intended purpose. But the focus of my argument here is conceptual: a fairly autonomous agent cannot be conceptualised as a product, which comes with an intended purpose or is used in certain ways.

This point about 'intended purpose' can be generalized to argue that general or agential systems can be hard to predict, and thus hard to harness in a neat risk-based framework.Footnote 24

In fact, even granting that the probability and the severity of harms can be established with sufficient precision, and that a method of combining them is known and acceptable, the autonomous, general nature of real agents and their dynamism (in adjusting goals and means, and in responding to the environment) seem to conceptually preclude isolating with certainty particular harms or hazards as the sources of risk (and, subsequently, their combination). This in turn precludes any mitigation measures other than a maximin strategy (e.g. an extreme precautionary principle).
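The contrast between expected-value reasoning and a maximin rule can be illustrated with a hypothetical deployment decision. The options, payoffs, and probabilities below are invented for illustration only; the point is structural: expected value needs probabilities, while maximin looks only at the worst outcome of each option, which is why it remains available when probabilities cannot be established.

```python
from typing import Dict, Tuple

# Hypothetical options, each mapping outcome -> (probability, utility).
OPTIONS: Dict[str, Dict[str, Tuple[float, float]]] = {
    "deploy": {"large benefit": (0.999, 100.0), "loss of control": (0.001, -10_000.0)},
    "do not deploy": {"status quo": (1.0, 0.0)},
}


def expected_value(outcomes: Dict[str, Tuple[float, float]]) -> float:
    """Probability-weighted sum of utilities."""
    return sum(p * u for p, u in outcomes.values())


def worst_case(outcomes: Dict[str, Tuple[float, float]]) -> float:
    """Utility of the worst outcome, ignoring probabilities."""
    return min(u for _, u in outcomes.values())


ev_choice = max(OPTIONS, key=lambda o: expected_value(OPTIONS[o]))
maximin_choice = max(OPTIONS, key=lambda o: worst_case(OPTIONS[o]))
print(ev_choice, "|", maximin_choice)  # deploy | do not deploy
```

With any finite utility for loss of control, a small enough probability lets expected value favour deployment; maximin ignores probabilities altogether, which is both its robustness and its cost.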

5 Conclusion: Is AGI unregulatable?

After settling on an ecumenical working definition of artificial general intelligence and discussing the plausible risks it generates, I argued that the risk-based framework found in current regulation, namely the EU AI Act, is inadequate to deal with AGI.

What to do then? Should we just let everything be, hoping for the best?

Novelli et al. (2023a, § 7; 2023b) suggest that their quantitative framework provides a solution to the problem of regulating AGI, since their framework is dynamic and thus works for AIs whose intended purpose is not foreseeable.

While I agree that, in general, a dynamic, semi-quantitative approach is better than the system originally envisioned in the EU AI Act, and basically left unmodified at the negotiation stage, Novelli et al.'s (2023a, b) approach still falls prey to the problem identified in the present paper.

In particular, if AGI poses, or may pose, an existential risk, such risk seems likely to escape common risk-management systems, for it does not appear easily quantifiable in terms of severity, not to mention in terms of probability.

The main problem with this set of provisions is twofold. First, it ignores general AIs (substantively, not formally, since there is a category of general-purpose AI systems), and thus decreases legal certainty and increases what providers are on the hook for. Second, it ignores that, even if providers are off the hook, the rest of humanity may be exposed to existential risks, in a glaring case of negative externalities. Such existential risks need to be prevented tout court.

If AGI systems are indeed truly agential, regulation should target agents, not products (which is what the final version of the EU AI Act targets). In this case, we should require that such systems and agents be aligned. Of course, this raises many problems, both conceptual and practical, that the AI community has been working on for years.

Data availability

Not applicable.

Notes

Although, of course, it is related to notion (iv). This definition of risk coincides with Clause 3.9 of ISO/IEC Guide 51:2014 Safety Aspects—Guidelines for their inclusion in Standards, where ‘harm’ is further defined as any adverse effect on health, safety and other things (“injury or damage to the health of people, or damage to property or the environment”, Clause 3.1). Clause 5 defines the ‘probability of an occurrence of harm’ as “a function of the exposure to hazard, the occurrence of a hazardous event, the possibilities of avoiding or limiting the harm".

Risk may be defined differently: in particular, ISO 31000:2018 Risk Management—Guidelines defines risk as an “effect of uncertainty on objectives” (Clause 3.1). For completeness, ‘hazard’ is defined as a potential source of harm (3.2), and ‘hazardous event’ as an event that can cause harm (3.3). There are of course other definitions, cf. e.g. Mahler (2007).

The philosophically-minded reader will have noted a shift between an objective and a subjective understanding of probability. While this distinction is crucial in general, not much will hinge on it for the purposes of this work.

E.g. Sunstein (2002) does not seem to engage with existential risk in policy making.

Thanks to Luca Zanetti for pointing out this option.

For more on this point, see Faroldi and Zanetti, ms.

For example see the recent European Environmental Agency Climate Risk Assessment Report: https://www.eea.europa.eu/en/about/who-we-are/projects-and-cooperation-agreements/european-climate-risk-assessment last checked May 6, 2024.

In November 2023, for instance, OpenAI announced that ChatGPT can be personalized into bots, or agents, with specific applications: a shopping assistant that scouts the web and purchases clothes, a travel agent that plans and books trips, etc. This is indeed akin to a Swiss Army knife: multiple functions packed into one object, but still not quite a general system in the sense of AGI. Still, see the Financial Times op-ed on the “agential revolution”: https://www.ft.com/content/e628f42d-acc9-496d-be15-1ab19311735b last checked on Nov 12, 2023.

Here’s a version of the control argument, as made by Ngo (2020): “We’ll build AIs which are much more intelligent than humans (i.e. superintelligent). Those AIs will be autonomous agents which pursue large-scale goals. Those goals will be misaligned with ours; that is, they will aim towards outcomes that aren’t desirable by our standards, and trade off against our goals. The development of such AIs would lead to them gaining control of humanity’s future.” While this seems something to avoid in order to preserve humanity’s freedom and dignity (Sparrow 2023), it has been argued that it could in fact be a positive development (Luck 2024).

See Coeckelbergh (2024) for other challenges of a (global) governance of more and more powerful AI.

The providers have the following obligations, set out in article 52c:

a) draw up and keep up-to-date the technical documentation of the model, including its training and testing process and the results of its evaluation (not if open source);
b) draw up, keep up-to-date and make available information and documentation to providers of AI systems […] enable providers of AI systems to have a good understanding of the capabilities and limitations of the general purpose AI model (not if open source);
c) put in place a policy to respect Union copyright law in particular to identify and respect, including through state of the art technologies, the reservations of rights;
d) draw up and make publicly available a sufficiently detailed summary about the content used for training of the general-purpose AI model.

While the debate on alignment is vast, we can define a system A to be aligned with H just in case A tries to do what H intends it to do. This definition can be further extended to value systems, rather than individual intentions.

Goal misgeneralization does not arise from a mistake in the reward specification; rather, it is “a specific form of robustness failure for learning algorithms in which the learned program competently pursues an undesired goal that leads to good performance in training situations but bad performance in novel test situations” (Shah et al. 2022).

In 2024, the German Federal Office for Information Security released a report addressing, instead, a few dozen risks emerging from LLMs (German Federal Office for Information Security 2024).

(32) As regards stand-alone AI systems, meaning high-risk AI systems other than those that are safety components of products, or which are themselves products, it is appropriate to classify them as high-risk if, in the light of their intended purpose, they pose a high risk of harm to the health and safety or the fundamental rights of persons, taking into account both the severity of the possible harm and its probability of occurrence and they are used in a number of specifically pre-defined areas specified in the Regulation.

The same ISO guideline defines intended purpose in Clause 3.6: “[intended use] use in accordance with information provided with a product or system, or, in the absence of such information, by generally understood patterns of usage”. It seems clear that a system that is general or agential can only have an intended purpose on paper.

Of course, this remark is provocative, as it is generally accepted that humans do not have a provider.

Anwar et al. (2024) argue that many of the risks of LLM agents are novel and open-ended (and they also propose a research agenda for beginning to understand them).

References

Anwar U et al (2024) Foundational challenges in assuring alignment and safety of large language models

Arntzenius F (2014) Utilitarianism, decision theory and eternity. Philos Perspect 28:31–58

Bengio Y et al (2023) Managing AI risks in an era of rapid progress

Coeckelbergh M (2024) The case for global governance of AI: arguments, counter-arguments, and challenges ahead. AI Soc. https://doi.org/10.1007/s00146-024-01949-5

Faroldi FLG (2021) General AI and transparency. i-lex 2

Faroldi FLG, Zanetti L (2024) On how much it matters: the mathematics of existential risk. Unpublished manuscript

German Federal Office for Information Security (2024) Generative AI models: opportunities and risks for industry and authorities

Goldstein S, Kirk-Giannini CD (2023) Language agents reduce the risk of existential catastrophe. AI Soc. https://doi.org/10.1007/s00146-023-01748-4

Greaves H (2024) Concepts of existential catastrophe. Under review

Hansson SO (2023) Risk. In: Zalta EN, Nodelman U (eds) The Stanford Encyclopedia of Philosophy (Summer 2023 Edition). https://plato.stanford.edu/archives/sum2023/entries/risk/

Hendrycks D, Mazeika M, Woodside T (2023) An overview of catastrophic AI risks. arXiv:2306.12001

Hubinger E et al (2024) Sleeper agents: training deceptive LLMs that persist through safety training

Kannai Y (1992) Non-standard concave utility functions. J Math Econ 21(1):51–58. https://doi.org/10.1016/0304-4068(92)90021-X

Kasirzadeh A (2024) Two types of AI existential risk: decisive and accumulative. https://arxiv.org/html/2401.07836v2

Luck M (2024) Freedom, AI and God: why being dominated by a friendly super-AI might not be so bad. AI Soc. https://doi.org/10.1007/s00146-024-01863-w

Mahler T (2007) Defining legal risk. In: Proceedings of the conference “Commercial contracting for strategic advantage: potentials and prospects”, Turku University of Applied Sciences, pp 10–31. SSRN: https://ssrn.com/abstract=1014364

Morris MR et al (2023) Levels of AGI: operationalizing progress on the path to AGI. arXiv:2311.02462

Ngo R (2020) AGI safety from first principles

Novelli C, Casolari F, Rotolo A, Taddeo M, Floridi L (2023a) How to evaluate the risks of artificial intelligence: a proportionality-based, risk model for the AI Act. SSRN: https://ssrn.com/abstract=4464783. https://doi.org/10.2139/ssrn.4464783

Novelli C, Casolari F, Rotolo A et al (2023b) Taking AI risks seriously: a new assessment model for the AI Act. AI Soc. https://doi.org/10.1007/s00146-023-01723-z

Ord T (2020) The precipice: existential risk and the future of humanity. Hachette, New York

Raper R (2024) A comment on the pursuit to align AI: we do not need value-aligned AI, we need AI that is risk-averse. AI Soc. https://doi.org/10.1007/s00146-023-01850-7

Schuett J (2023a) Risk management in the artificial intelligence act. Eur J Risk Regul. https://doi.org/10.1017/err.2023.1

Schuett J (2023b) Three lines of defense against risks from AI. AI Soc. https://doi.org/10.1007/s00146-023-01811-0

Shah R, Varma V, Kumar R, Phuong M, Krakovna V, Uesato J, Kenton Z (2022) Goal misgeneralization: why correct specifications aren't enough for correct goals. arXiv:2210.01790

Shevlane T et al (2023) Model evaluation for extreme risks. arXiv:2305.15324v2

Skala HJ (1974) Nonstandard utilities and the foundation of game theory. Int J Game Theory 3(2):67–81. https://doi.org/10.1007/BF01766393

Sparrow R (2023) Friendly AI will still be our master. Or, why we should not want to be the pets of super-intelligent computers. AI Soc. https://doi.org/10.1007/s00146-023-01698-x

Stefánsson HO (2020) Catastrophic risk. Philos Compass 15:e12709. https://doi.org/10.1111/phc3.12709

Sunstein CR (2002) Risk and reason: safety, law, and the environment. Cambridge University Press, Cambridge

Acknowledgements

Removed for review.

Funding

Open access funding provided by Università degli Studi di Pavia within the CRUI-CARE Agreement.


Ethics declarations

Conflict of interest

The author declares no conflict of interest.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Faroldi, F.L.G. Risk and artificial general intelligence. AI & Soc (2024). https://doi.org/10.1007/s00146-024-02004-z


DOI: https://doi.org/10.1007/s00146-024-02004-z