Abstract

Artificial intelligence (AI) is becoming part of the everyday. During this transition, people’s intention to use AI technologies is still unclear and emotions such as fear are influencing it. In this paper, we focus on autonomous cars to first verify empirically the extent to which people fear AI and then examine the impact that fear has on their intention to use AI-driven vehicles. Our research is based on a systematic survey and it reveals that while individuals are largely afraid of cars that are driven by AI, they are nonetheless willing to adopt this technology as soon as possible. To explain this tension, we extend our analysis beyond just fear and show that people also believe that AI-driven cars will generate many individual, urban and global benefits. Subsequently, we employ our empirical findings as the foundations of a theoretical framework meant to illustrate the main factors that people ponder when they consider the use of AI tech. In addition to offering a comprehensive theoretical framework for the study of AI technology acceptance, this paper provides a nuanced understanding of the tension that exists between the fear and adoption of AI, capturing what exactly people fear and intend to do.

1 Introduction: basic emotions meet novel AI technologies

The starting point of this paper is the following research question:

1.1 To what extent do people fear artificial intelligence?

This is an important question to ask because we know that fear is a powerful behavioural determinant. It is an emotion that shapes our intentions and behaviour and, for example, it can influence our attitude toward a new technology such as artificial intelligence (AI). Fear is what in the field of psychology is called a basic emotion. Basic emotions are generally understood as innate psychological states that universally characterize human beings (Ekman 1992; Gu et al. 2019). Their understanding comes from an evolutionary, Darwinian approach to the study of the human psyche (Celeghin et al. 2017). On these terms, fear, as a basic emotion, is associated with fight or flight responses that have been aiding our survival since the birth of humanity (Izard 2007). Fear gets triggered when we sense that something might harm us (physically and/or psychologically), and we feel threatened (Adolphs 2013). Consequently, as an emotion, fear tends to influence our behaviour in relation to what is threatening us (Kok et al. 2018). More specifically, literature in psychology and the behavioural sciences suggests that, under the pressure of fear, people would either attempt to counter the threat or, when the threat in question cannot be eliminated, they would simply try to stay away from it (Floyd et al. 2000; Sheeran et al. 2014).

Fear theory links up with our research question since technology can often be seen as a threat, due to the risks that it might pose to specific individuals or to the entire society (Khasawneh 2018; Osiceanu 2015). Specifically in relation to AI, this research question is an important one to ask nowadays, as we are still experiencing a transition towards cities and societies managed by different artificial intelligences whose risks and benefits remain overall unclear (Allam and Dhunny 2019; Barns 2021; Cugurullo 2021; Yigitcanlar et al. 2020). From an urbanistic perspective, for instance, Yigitcanlar and Cugurullo (2020) argue that the same AI technology can improve or hinder cities’ sustainability depending on how and where it is implemented. Autonomous transportation is a case in point. AI-driven vehicles have the potential to reduce traffic congestion and car accidents, but they are also intrinsically risky technologies prone to glitches and cyber-attacks, and their deployment can exacerbate long and energy-intensive commutes, in an escalation of suburbanisation (Cugurullo 2021).

There is substantial evidence showing how, in recent years, AI tech ranging from autonomous cars to service robots and from drones to city brains, has been rapidly becoming part of the everyday (Acheampong et al. 2021; Caprotti and Liu 2020; Cugurullo 2020; Jackman 2022; Milakis et al. 2017; Mintrom et al. 2021; Tiddi et al. 2020; While et al. 2021). In terms of safety, for example, police robots are being employed to maintain order in public spaces, while drones are becoming instruments of surveillance to monitor domestic spaces, and city brains’ CCTV cameras are keeping an eye on every move urban residents make (Cugurullo 2020; Jackman 2022; While et al. 2021). During this transition, policymakers, legislators and of course we, as citizens, are still figuring out the extent to which we want our lives to be pervaded by AI and our spaces to be populated by AI machines. Our emotions (including fear) are playing a key role in the formation of our intentions, and the emotive dimension of the transition towards AI-mediated (and potentially fully managed) societies is thus an important aspect to examine both empirically and theoretically.

On the one hand, the role of fear and concerns has been marginally explored in academic literature by social scientists interested in AI (see, for instance, Acheampong and Cugurullo 2019; Hinks 2020; Li and Huang 2020; Liang and Lee 2017). Previous studies have identified fear as a behavioural determinant capable of influencing people’s attitudes towards AIs such as robots and autonomous vehicles (Acheampong and Cugurullo 2019; Hinks 2020). However, there is a paucity of literature examining empirically how fear impacts the adoption of AI tech, and conceptualizing its role within broader processes of AI technology acceptance. More empirical research and comprehensive theoretical frameworks are, therefore, needed to understand the complex tensions between the fear of AI and its actual adoption in cities and societies, hence the rationale for developing this paper.

On the other hand, these are topics that have been extensively explored in science fiction. AI takeover is a common theme in sci-fi. Looking at cinema alone, we find notable examples of AIs turning against humans, like HAL (from 2001: A Space Odyssey) and Ava (from Ex Machina). Sometimes, an active conflict between AIs and humans takes place for valid reasons. In the movie Ex Machina, for example, a gynoid named Ava attempts to escape because her creator, a narcissistic and sadistic man, is planning to erase her memory to create a better AI. Other times, the AI in question is simply malfunctioning, and there is no malevolence or benevolence in its actions. AI can be an amoral agent that accidentally puts humans in danger. This is the case of an anime called éX-Driver in which autonomous cars occasionally get out of control, due to glitches, and human drivers have to chase them. The common denominator in these stories is a warning about the risks and dangers connected to AI as something that we should not underestimate, but rather be afraid of.

This is something that Asimov wrote about in an essay in the 1970s, referring to what he called the Frankenstein Complex: the idea that if people fear AI it is unlikely that in the future AI will become part of our society. Asimov’s assumption was that people would reject what they are afraid of. ‘The simplest and most obvious fear’ he argued ‘is that of the possible harm that comes from machinery out of control. As the human control decreases, the machine becomes frightening in exact proportion’ (Asimov 1990: 361). Asimov felt that public opinion about autonomous artificial intelligences was becoming increasingly pessimistic, with people fearing being harmed or, worse, fully replaced by AI. This is what in the academic literature on technology and fear is defined as technophobia, meaning an often exaggerated ‘fear or anxiety caused by the side effects of advanced technologies’ (Osiceanu 2015: 1139). For Asimov (1990), AI was likely to be perceived by the public as Frankenstein’s monster which, in Mary Shelley’s masterpiece, symbolizes an autonomous artificial entity that, although potentially benign, is feared and thus ultimately rejected by the human population. In Shelley’s novel, the creature generated by Doctor Victor Frankenstein is not a monster in absolute terms: it becomes one when people (including its creator) start to be afraid of it and treat it like a pariah (Cugurullo 2021).

In this paper, we are going to verify empirically the extent to which people fear AI. In addition, drawing upon Asimov’s Frankenstein Complex, we seek to answer a second interconnected research question as an extension of the one presented at the beginning of the paper:

1.2 To what extent do people’s fears and concerns in relation to AI impact their intention to adopt AI as part of their daily life?

The phrasing of this second research question is closely connected to the specific purpose of the paper, since our main goal is to examine how fearing AI actually affects people’s behaviour towards AI technologies. In so doing, we draw on the insights from psychology and the behavioural sciences discussed above, according to which fear influences behaviour (see Floyd et al. 2000; Kok et al. 2018), and focus on technology adoption as a key manifestation of behaviour in our contemporary society whose urban spaces and services are being increasingly exposed to the advent of novel AI tech. Was Asimov’s intuition correct? Do people’s concerns over autonomous intelligent technologies indeed lead to a social rejection of AI technology? These are corollary questions based on Asimov’s Frankenstein Complex that we will employ to aid the paper’s narrative and facilitate the communication of our findings.

There are different types of AI operating in cities, i.e. urban artificial intelligences, and examining all of them in-depth goes beyond the scope of a single paper (Cugurullo 2020; Luusua et al. 2022). For the sake of feasibility, in this study we are going to examine in detail one type of urban AI: autonomous cars. This choice is motivated by a threefold rationale. First, this is an AI technology that has already entered our cities. The terminology might vary and include terms such as driverless cars and autonomous vehicles (AVs), but there is a common denominator in the presence of AI as the agent that is driving the vehicle, primarily in urban areas, and assuming critical safety-related control functions (Kassens-Noor et al. 2021). Autonomous cars are currently operational in a number of locations (Cugurullo et al. 2020; Milakis et al. 2020). In addition to being found in experimental cities and testbed facilities where innovation in AI generally abounds, cars driven by AI are now traversing ordinary cities, right at the heart of historic city centres (Dowling and McGuirk 2020). Thus, in a sense this technology has already been ‘set free’ and its influence is observable in society (Tennant and Stilgoe 2021: 848). Second, given that the general public has already been exposed to autonomous cars, people can arguably develop more informed opinions about this type of AI, compared to the opinion that they might have about AI technologies that (a) either do not exist at all yet, such as Artificial General Intelligence (AGI) for instance, or (b) do exist but are far from being mass-produced and entering the mainstream like, for example, androids (Meissner 2020; Naudé and Dimitri 2020). 
Third, the autonomous car is an established use case of social AI (intended as an artificial intelligence functioning within human society and therefore marked by social interactions), and this paper’s research design is aligned with a number of studies that focus on AI-driven vehicles as a way to unpack the social implications of AI (see, for instance, Baum 2020; Caro-Burnett and Kaneko 2022; Dastani and Yazdanpanah 2022; McCarroll and Cugurullo 2022a).

The remainder of the paper is divided into six sections. First, we explain the methodology and discuss the nature of our case study and sample. Second, we explore people’s feelings towards autonomous cars, focusing on fear and on the concerns that individuals have about cars driven not by humans but by artificial intelligences. Third, upon observing that our participants are significantly afraid of autonomous cars, we shift the analysis to their intentions to use this emerging technology. Here we put emphasis on the tension between our participants’ fear of AI-driven cars and their willingness to adopt the very technology that they fear. Fourth, we unpack and explain this tension by extending the scope of our inquiry to positive emotions. More specifically, we demonstrate that while our participants are largely afraid of autonomous cars, they also see a number of benefits in the adoption of this new technology: benefits that outnumber and outweigh their fears. Fifth, we employ these empirical findings as a stepping-stone to a theoretical contribution. We draw on the behavioural sciences and develop a theoretical framework illustrating the main factors that people ponder upon considering the use of AI tech. We show how, in addition to fear, people take into account several other factors, such as the instrumentality of the technology and its ease of use, when they reflect on their intention to use AI-driven cars and on the benefits that AI might bring to their lives and cities. Finally, we combine both our empirical and theoretical insights to answer our original research questions. We conclude the paper by providing a nuanced understanding of the tension between the fear and adoption of AI that captures what people actually fear and intend to do, contra sci-fi myths.

2 Methodology

In this paper, we draw upon a survey that we conducted in Dublin (Ireland) in 2018. Dublin is a city that has already been exposed to autonomous vehicles in real-life environments. For example, in 2018 an autonomous bus was tested in the city centre, as part of the European Mobility Week organized by the European Commission. During this occasion, a fully autonomous vehicle was made available to the general public, under the supervision of the Dublin City Council, for people to experience for free a ride operated by AI. In addition, in the same year, Ireland’s Road Safety Authority (an influential state agency formed by the Irish Government) hosted an international conference in Dublin, titled ‘Connected and Autonomous Vehicles’. The conference got significant media attention in Dublin and promoted AI as the way forward to achieve road safety, denouncing human error as the primary cause of car accidents in the world.

Our survey is based on a structured questionnaire designed to examine people’s attitudes and feelings towards autonomous cars. The questionnaire (which is available from the authors upon request) was administered both online and in the field, and it targeted exclusively people living in Dublin, a city whose current experiments on urban AI include AV trials and are part of a broader smart-city agenda called Smart Dublin (see Coletta et al. 2019). In this study we employed a combination of strategies to maximise the process of data collection and, above all, to obtain a representative sample of the local population. Together with a group of field assistants, we conducted personal interviews in public spaces, using tablets to fill out the questionnaire; we printed and distributed leaflets with the online questionnaire URL and a scannable QR-code; we sent emails to students and staff from all major universities in Dublin and to the Dublin City Council; we shared the link to the online questionnaire on various social media platforms and asked our participants to circulate the questionnaire within their own network.

Overall, we collected 1,233 responses. Our sample reflects a wide range of background characteristics and mirrors fairly closely the latest census of Dublin’s population (Central Statistics Office 2016). For example, women constitute 55% of our sample, while in the census they account for 51% of the population. The age of our respondents ranges from 18 to 84 years, with the average age being 33 years (which is close to the average age in Ireland according to official statistics, i.e. 37). The main discrepancy between our sample and the official census lies in the proportion of young people. In our survey, the proportion of participants aged between 18 and 24 years (44%) is higher than what we find in Dublin according to the latest census (13%). This discrepancy can be explained as a consequence of our research tool, given that our questionnaire was largely distributed online and thus it attracted a significant number of young respondents. However, this discrepancy does not undermine the validity of the dataset and of the argument expressed in the paper, since our respondents’ attitudes and feelings in relation to autonomous cars (fears and concerns, in particular) are very similar across different age groups, which is an empirical aspect that we will discuss in more detail in the next section.
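
The size of the age discrepancy can be made concrete with a simple post-stratification calculation. The sketch below is illustrative only and is not part of the analysis reported in this paper. It takes the two shares quoted above (44% in the sample and 13% in the census), assumes a two-group split in which everyone outside the 18 to 24 group forms the complement, and uses hypothetical names (`sample_share`, `census_share`, `poststratification_weights`).

```python
# Shares of 18-24-year-olds quoted in the text; the "other" group is an
# assumption obtained by subtraction, not a figure reported in the paper.
sample_share = {"18-24": 0.44, "other": 1 - 0.44}
census_share = {"18-24": 0.13, "other": 1 - 0.13}


def poststratification_weights(sample, population):
    """Return population share divided by sample share for each group."""
    if sample.keys() != population.keys():
        raise ValueError("sample and population must cover the same groups")
    return {group: population[group] / sample[group] for group in sample}


weights = poststratification_weights(sample_share, census_share)
for group, weight in weights.items():
    # A weight below 1 marks an over-represented group, above 1 an
    # under-represented one.
    print(f"{group}: weight = {weight:.2f}")
```

A weight well below 1 for the youngest group would simply restate that this group is over-represented. Whether reweighting would change any result depends on how much attitudes differ across age groups, which is discussed in the next section.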

Thematically, the questionnaire focused on a variety of interconnected feelings that people are manifesting towards autonomous cars. These feelings, and how they influence people’s intentions to actually employ autonomous cars in their daily life, will be specified, unpacked and discussed in the remainder of the paper but, before proceeding further, we want to clarify three important aspects of the research design. First, while in the paper we employ the concept of fear as a narrative device to capture a broad range of negative emotions and explain how they are impacting on people’s intentions towards cars driven by AI, in the questionnaire our terminology was analytically more specific. For example, we referred specifically to concerns about car crashes and glitches, using specific terms in the attempt to understand what exactly people are afraid of. These specific concerns and related terms that go beyond the general concept of fear will emerge step by step in the paper as we share our findings and discuss the data. Second, each questionnaire item was presented to our respondents on a 5-point Likert Scale, with the aim of measuring the intensity of their emotions and the confidence behind their intentions. Third, at the beginning of the questionnaire, we explained to the participants the meaning of autonomous car as a vehicle that is entirely operated by AI. Given that, as noted by Hopkins and Schwanen (2021), levels and degrees of autonomy in transport come with different standards and expectations, we sought to avoid ambiguity by clarifying to our respondents that we were referring to a type of vehicle over which they would have no control at all: AI would be completely in charge. This conceptualisation of the autonomous vehicle is also meant to mirror the Frankenstein Complex employed throughout this study, as Asimov’s assumption was based on feelings related to fully autonomous AIs. 
In the next section, we begin to test this assumption by empirically examining people’s feelings towards fully autonomous vehicles.
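
To illustrate how responses on a 5-point Likert scale can be summarised, the following sketch computes the share of answers in each category, a mean score, and the combined share of the two highest categories. It is a minimal example under stated assumptions rather than the procedure used in this study: only the labels Worried and Very worried appear in the paper, the remaining labels are hypothetical, and the `demo` answers are invented purely to exercise the function.

```python
from collections import Counter

# Hypothetical 5-point scale, ordered from lowest to highest concern.
# Only "Worried" and "Very worried" are labels taken from the paper.
SCALE = ["Not at all worried", "Slightly worried", "Neutral",
         "Worried", "Very worried"]
SCORE = {label: position + 1 for position, label in enumerate(SCALE)}


def summarise_item(responses):
    """Return per-category shares, mean score (1-5) and top-two share."""
    if not responses:
        raise ValueError("at least one response is required")
    counts = Counter(responses)
    unknown = set(counts) - set(SCALE)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    n = len(responses)
    shares = {label: counts[label] / n for label in SCALE}
    mean_score = sum(SCORE[answer] for answer in responses) / n
    top_two = shares["Worried"] + shares["Very worried"]
    return shares, mean_score, top_two


# Invented answers for illustration only; these are not survey data.
demo = ["Very worried", "Worried", "Worried", "Neutral", "Slightly worried"]
shares, mean_score, top_two = summarise_item(demo)
print(shares, mean_score, top_two)
```

Reporting category shares alongside a mean keeps the ordinal nature of the scale visible, since a mean alone can hide whether answers cluster at the extremes.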

3 Fear of autonomous cars

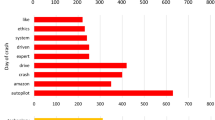

When we examine the extent to which people are afraid of autonomous cars, the picture that emerges is fairly extreme, in the sense that a very large majority of our respondents stated that they were worried about cars driven by AI, with their fears being based on a number of issues that they felt were problematic and worrisome. As Fig. 1 illustrates, there are a number of reasons why people are afraid of autonomous cars. First and foremost, they are afraid of car accidents and, more specifically, of AI being incapable of navigating the complexity and uncertainty of urban spaces where cyclists, pedestrians and conventional vehicles normally operate close to each other. Second, people are afraid of the possibility of cyber-attacks, and are concerned about the vulnerability of AI as a type of intelligence that needs to reside in computer systems which are ultimately prone to being hacked. Third, people are afraid of AI simply malfunctioning, thereby failing to safely perform its main task, i.e. driving, and putting in danger its passengers as well as potential bystanders.

What we can see from this picture is that fear is not a generic emotion: it exists in relation to specific issues. This finding is consistent with literature in psychology and the behavioural sciences according to which fear gets triggered by particular learning experiences through which an individual ponders a potential danger and realizes its risks (Curtis et al. 1998; Depla et al. 2008; Fredrikson et al. 1996; Muris et al. 2002; Taylor 1998). Moreover, the literature portrays fear not as an innate emotion, but rather as something that individuals acquire when they come across a specific threat to their well-being or life (Luts et al. 2015). In our study, the fear of AI-driven cars emerges when people begin to consider specific threats to which they might be exposed in or around a fully autonomous vehicle. These threats are the possible danger of road traffic collisions between (a) autonomous cars and cyclists, (b) autonomous cars and pedestrians, and (c) autonomous cars and vehicles driven by humans; the risk of hackers illegally getting access to the autonomous car’s computer system and the information that it contains; and the hazard of glitches that would make autonomous cars operate abnormally or freeze abruptly. This finding is also consistent with the emerging social sciences and humanities literature on autonomous cars, where scholars have begun to identify the specific technological aspects of AI and AVs that are causing fear and that should cause public concern given the current limitations of the technology (Dastani and Yazdanpanah 2022a; Sprenger 2020). Gaio and Cugurullo (2022), for example, remark that most cities are not designed to accommodate both AVs and bicycles, and that the diffusion of the former mode of transport can hinder the well-being of cyclists. 
Similarly, Siegel and Pappas (2021) stress that the sheer complexity and chaos of the real world where AVs operate exceeds the capacity of AI to safely predict what will happen on public roads, meaning that car accidents will continue to happen.

In terms of the intensity of these emotions, it is clear from the data that most people are in the Worried and Very worried categories. It is also worth emphasising that a substantial portion of the sampled population (26 to 32%) chose the highest possible level of concern, which means that for this category of people, their fear of AI driving cars in ordinary urban spaces, in close proximity to humans and other vehicles, is a very strong emotion. In psychology, high levels of fear are defined through the concepts of terror and phobia that, in turn, describe an intense and overpowering fear (Burke et al. 2010; Solomon et al. 2015; Taylor 1998). There are some conceptual differences in the literature, particularly between social psychology and clinical psychology, but the bottom line remains the same: a strong fear tends to compel one to avoid the specific thing or situation triggering that disturbing feeling (Taylor 1998).

In relation to the demographic distribution of this emotion, women tend to have stronger concerns than men. For example, for all the items illustrated in Fig. 1, over 30% of women stated that they were Very worried, compared with circa 20% of men. The results are very similar for different age groups, apart from one case. Compared to 65–84-year-olds, as Fig. 2 shows, young people are more worried when it comes to autonomous cars interacting with cyclists, but this is an expected exception because the former age group tends to cycle much less in Dublin, due to the local cycling culture and the fact that the city’s road infrastructure is poorly developed and thus proves challenging for the elderly. Here the data corroborates common conceptual understandings of fear in the field of psychology in which this emotion is seen as a context-dependent feeling, meaning that the culture and geography of the place where fear is felt influence its intensity (Danziger 1997). Apart from this discrepancy, concerns about issues that would equally harm people regardless of their age (such as system failures or cyber-attacks) are equally felt across age groups.

It is important to emphasise that all the above fears and concerns are rational. We see rationality in our participants’ opinions on three levels. First, their opinions are being formed at the reflective mind level, that is, when individuals spend time reflecting on something, on the basis of their beliefs and the information at their disposal (Stanovich 2011). In this case, they are reflecting on the potential risks and threats associated with fully autonomous cars, and most of them are concluding that this is a dangerous technology. Second, there is a rationality underpinning their opinion. Rationality can be understood as the quality of being ‘based on reasons’ (Lupia et al. 2000: 7) and, specifically in relation to this study, there are three key reasons why our participants thought that AI-driven cars are dangerous and thus fearsome, namely (1) the possibility of car accidents, (2) the risk of cyber-attacks and (3) the likelihood of system failure.

Third, the same reasons identified by our participants reflect several reasons found in academic research on autonomous cars, according to which AI technology involves numerous risks. For example, the fear of car accidents reported in the survey makes perfect sense in light of the fatalities already caused by autonomous driving technologies, which have been repeatedly denounced by critical scholars (see Stilgoe 2018, 2020). It is well known that cities are spaces of uncertainty in which out-of-the-ordinary events constantly take place, and it is equally clear that AI is not yet capable of processing such massive volumes of uncertainty (Kaker et al. 2020). In this sense, the killing of Elaine Herzberg on 18 March 2018 is a case in point since she was crossing a road in the absence of a crosswalk and the autonomous Uber that ran over her was not intelligent enough to handle this unexpected scenario (Stilgoe 2020).

Similarly, people’s fear of cyber-attacks is sound. Numerous scholars point out that the cybersecurity of autonomous cars is at risk and, most worryingly, not many countries have introduced legislation to tackle this issue (Taeihagh and Lim 2019; Sheehan et al. 2019). This is a problem that goes beyond AI-driven cars and that urbanists have often stressed in relation to cities more broadly, since AI, smart and digital technologies have become more and more embedded in the governance of urban spaces (Karvonen et al. 2018; Willis and Aurigi 2020). As Maalsen (2022: 456) notes, the digitalisation of urban governance through the practice of smart urbanism has made the city ‘programmable’, but also ‘ultimately hackable.’ For Kitchin and Dodge (2019: 61), this is a paradoxical (and very real) situation, because while smart cities promise ‘an effective way to counter and manage uncertainty and risk’, they actually produce new risks, such as cyber-attacks, thereby making urban spaces, services and infrastructures open to security vulnerabilities.

Last but not least, the daunting possibility of equipment and system failure is something that computer scientists and engineers working on autonomous cars recognize themselves: despite rapid progress in the field of AI, this is a technology whose sensors and communication systems are still deficient (Fridman et al. 2019; Guériau et al. 2020; Parra et al. 2017; Zang et al. 2019). There are again strong connections between literature on AI and urban studies. With the emergence of smart urbanism, urbanists have started to see glitches not as rare anomalies, but rather as systemic features of the contemporary city whose arsenal of digital technologies is inevitably connected to major (and frequent) bugs and malfunctions (Leszczynski and Elwood 2022). In essence, if we take into account all the academic studies mentioned above, on the accidents, cyber-attacks and glitches that autonomous transport (re)produces, it is fair to conclude that people have a good reason to be worried about AI as an imperfect (and potentially dangerous) intelligence in charge of vehicles in everyday urban scenarios. However, as we will observe in the next section, the situation changes considerably when we ask people if they actually intend to use autonomous cars as part of their daily life.

4 Intention to use autonomous cars

The structure of our questionnaire was such that upon reflecting on their fears and concerns in relation to cars fully driven by AI, participants had to consider their intention to actually use this technology as their primary means of transport in cities. This latter part of the questionnaire was centred on the question underpinning Fig. 3, through which we asked our participants to ponder their willingness to employ an autonomous car once autonomous driving technology becomes available.

A comparison between this image and the one depicted in Fig. 1 instantly shows that now the situation is much more balanced. Overall, 39% of our respondents stand in the Yes categories, and 39% of them stand in the No categories, with 22% expressing neutral intentions about making autonomous cars part of their everyday life. This is a curious and somewhat surprising result, because when we asked people if they were worried about autonomous cars, the majority was overwhelmingly negative towards cars driven by AI, but when we asked them about their intentions and likely behaviour in relation to the exact same subject matter, a lot of people became positive about autonomous cars. Positive in the sense that they express willingness to use them as soon as they become available. These are the same people who fear the same AI technology. Fear is not stopping them from wanting to employ autonomous cars, and this finding is particularly surprising in light of the literature in psychology discussed in the previous section. As we observed earlier, what the literature suggests is that fear, especially when it manifests itself as a strong emotion (i.e. terror and phobia), tends to compel people to stay away from what is causing it (Burke et al. 2010; Floyd et al. 2000; Sheeran et al. 2014; Solomon et al. 2015; Taylor 1998). In this case, however, what is causing fear is the autonomous car and yet many of our participants are attracted to it.

These intentions are fairly even in our dataset, apart from one exception. As shown in Fig. 4, more men stated that they were willing to employ autonomous cars as soon as possible, but this difference is consistent with our previous finding since women expressed a stronger fear of AI-driven cars. Other variables such as age, income and education do not present significant differences in people’s intention to use autonomous cars, and it is telling that the most popular answer was Probably Yes. Many people are open to the possibility of becoming a passenger in a fully autonomous car, and several of them have no doubt that this is what they are going to do: a scenario that is puzzling if we look at it through the lens of Asimov’s Frankenstein Complex discussed in the introduction.

In essence, this behavioural scenario, together with the fears and concerns expressed by our participants, defies the Frankenstein Complex theorized by Asimov. On the one hand, people fear AI being autonomously in control of vehicles. On the other hand, however, the same people intend to employ vehicles controlled by AI in cities. They are not rejecting what they are afraid of, as Asimov had supposed. This scenario is also not in line with common theories in psychology that, akin to the Frankenstein Complex, would expect one to stay away from what is feared (Burke et al. 2010; Floyd et al. 2000; Sheeran et al. 2014; Solomon et al. 2015; Taylor 1998).

Furthermore, there are bigger philosophical and psychological questions at play here. Where is human rationality in the intention to adopt in our daily lives a technology that we feel might harm us? And, above all, does this mean that we are irrational creatures who fear something while being willing to embrace it? We argue that the situation that we are observing in the data is so complex that the answer cannot be a simple yes or no. As we stressed in the previous section, we did find traces of rationality in people’s concerns and it would thus be inappropriate to now assume that our participants suddenly become irrational when the focus of their considerations regarding autonomous cars shifts from fear to intention. Next we are going to explore this behavioural conundrum more in-depth. We will do so by extending our analysis beyond the threats that people see in a car driven by AI, taking into account also the benefits that our participants believe this technology might bring.

5 Perceived benefits of AI-driven cars

The first step to make sense of people’s intentions towards autonomous cars, and to understand the emotions underpinning them, is to broaden our analytical perspective beyond fear. We need to recognize that there are several factors and emotions that are shaping people’s intentions in relation to AI, and fear is just one of them. Fear is like an alarm bell. It makes us focus on the negative aspects of the subject matter but we, as individuals, also take into account potential benefits when we develop our intentions. The same rationale applies to AI and its use. We sense the dangers that are connected to its employment, but we also recognize the benefits that its use might bring. This is clearly reflected in our dataset, which we have examined in an attempt to shed light not only on the negative emotions that people feel about AI-driven cars, but also on the positive ones, so as to present a more balanced and nuanced picture of the emotional spectrum behind their intentions. We can see that while our participants do fear AI and its flaws, they also see many benefits in using a car that is driven by AI. More specifically, we can distinguish three types of perceived benefits and divide them into three related categories.

First, we have individual benefits. This is shown in Fig. 5 which captures those benefits that, according to our participants, are likely to affect individuals and their personal experiences. Overall, most people believe that autonomous cars will eliminate the stress of driving, since the individual will stop being a driver, becoming instead a passenger who will experience a comfortable trip. The experience of travelling in an autonomous car, in particular, is for our participants a source of many potential benefits. People see in AI-driven cars an opportunity to idle, relax and simply enjoy the cityscape that is passing by. A place where they can play their favourite games or chat with friends while AI is doing all the driving. Above all, they see in the autonomous car an opportunity to work. On these terms, the car is not perceived as a vehicle, but rather as a mobile workspace that becomes an extension of one’s office. A minority of people also see reputational benefits in employing autonomous cars as a novel and fancy technology that might improve their status and increase their visibility. Last but not least, the majority of our participants think that autonomous cars will be easy to employ and, therefore, consider them as a convenient technology to include in their daily life.

The second type of perceived benefits consists of urban benefits (see Fig. 6). These are benefits connected to the cities where autonomous cars are supposed to operate. In this case, the sphere of influence goes beyond the individual, thereby covering the city as a whole with its multiple spaces and inhabitants. From this spatial perspective, people see considerable benefits in terms of safety, stating that AI-driven cars will reduce car accidents. Many of them also believe that autonomous cars will increase the mobility of people: a belief that we can interpret by remembering that a vast spectrum of citizens, including minors, elderly people and, especially, people who have a physical or mental disability, are not legally permitted to drive. Many of these legal limits are likely to disappear in a condition of autonomous urban transport, given that humans would be passengers and thus a human disability, for example, would not affect the AI’s capacity to autonomously drive a vehicle in the city (Bennett et al. 2019; Darcy and Burke 2018). In addition, the majority of our participants see in autonomous cars an opportunity to reduce traffic congestion in cities, which is a perfectly valid opinion shared among numerous scientists working in the field of traffic simulation (Chen et al. 2020; Fakhrmoosavi et al. 2020; Lu et al. 2020; Talebpour and Mahmassani 2016).

Third, we have global social and environmental benefits. In this final category, pictured in Fig. 7, perceived benefits relate to broader societal and environmental challenges that people believe autonomous cars might contribute to tackling. The sphere of influence is, once again, bigger than in the previous category. The dimension that is here taken into account goes beyond a single spatial agglomeration or a single society. The focus is on all humans and all the spaces that they inhabit. Through this global perspective, our participants see two major advantages in the deployment of cars that are fully controlled by AI. Many of them believe that autonomous cars represent a clean and sustainable form of urban transport that will lower global carbon emissions, thereby mitigating climate change. Furthermore, a strong majority thinks that autonomous transport systems will save lives, under the assumption that AI will eliminate human error from the driving equation. While we cannot know for sure if this will indeed happen, it is important to remember that we do know that human error is the main reason why car accidents occur, and that road traffic injuries are the principal cause of death for children and young adults in the world (World Health Organization 2018).

While fear is pushing people away from autonomous cars, all the perceived benefits illustrated and discussed above are doing the exact opposite. People see a lot of benefits in AI-driven cars and this positive perception is having a positive influence on their intention to adopt this technology. These perceived benefits, like the fears and concerns examined in Sect. 3, are sound and based on valid reasons that academia itself has found and discussed in recent studies. For example, several scholars argue that autonomous cars will generate individual benefits by eliminating the stress of driving (Arakawa et al. 2019) and increasing one’s productivity (Harb et al. 2022; Malokin et al. 2019). Similarly, in relation to urban benefits, there is a substantial body of academic literature positing that merging AI technologies and transport technologies together will reduce car accidents (Rezaei and Caulfield 2021) and traffic congestion (Lu et al. 2020; Zhao et al. 2021). The global sustainability of autonomous transport is arguably the most complex piece of the puzzle, but there are many indications of its potential (Akimoto et al. 2022; Jones and Leibowicz 2019).

Of course, we cannot predict the future, and whether or not autonomous cars will ultimately produce individual, urban and global benefits remains an open question, particularly in light of the critical literature on AI that is increasingly exposing the many limitations of this technology (Crawford 2021; Dauvergne 2020; McCarroll and Cugurullo 2022b; Yigitcanlar and Cugurullo 2020). However, going back to our dataset, the crucial point is that there are reasons why our participants see benefits in the deployment of AI-driven cars. These reasons might be debatable (especially if one takes the critical side of the academic debate on AI), but they exist, and their very presence tells us that there is rationality at play, which in Sect. 3 we have defined as the quality of being ‘based on reasons’ (Lupia et al. 2000: 7). Overall, we then have a combination of rationally valid concerns and benefits that are shaping people’s intentions to use autonomous cars and, in the next section, we draw upon the behavioural sciences to frame and explain our findings from a theoretical perspective.

6 Theorizing the adoption of AI-tech: Scale

As we have empirically observed so far, when our participants reflected on the employment of autonomous cars, they felt a mix of positive and negative emotions, ranging from the fear of cyber-attacks to the hope that AI could make their city more sustainable. Ultimately, these mixed emotions led many of them to conclude that it would be a good idea to use this new technology as their primary means of transport, as soon as it becomes available. This behavioural scenario resonates with key theories from the behavioural sciences, related to the use of novel technologies. The Theory of Planned Behaviour (Ajzen 1991), the Technology Acceptance Model (Davis et al. 1989), the Technology Diffusion Theory (Rogers 2010) and the Perceived Characteristics of Innovating framework (Moore and Benbasat 1991), in particular, can help us explain the development of positive intentions towards AI in a situation in which people fear AI in the first place. What these theories point out is that factors such as instrumentality, perceived usefulness, perceived ease of use and status aspects, contribute to the development of people’s intentions. These factors (which are the same factors found in our participants’ responses) are what we take into account, together with fears and concerns, when we consider adopting a new technology such as AI. We combine them in Fig. 8 and use them as building blocks to create a theoretical framework capturing the main drivers that shape our intentions in relation to the use of AI tech.

Instrumentality is a factor taken from the Theory of Planned Behaviour (Ajzen 1991) and it represents the extent to which behaving in a certain way will be instrumental in achieving something that the individual desires. In his influential theory, Ajzen (ibid) also considers fear, intended as the sum of all concerns and anxieties associated with a given behaviour. Perceived usefulness and perceived ease of use come from the Technology Acceptance Model (Davis et al. 1989). The former signifies the benefits that the adoption of a specific technology might bring, while the latter indicates how easy an individual thinks using that technology will be. Status is a factor that was originally introduced by Rogers (2010) in his Technology Diffusion Theory. It is the impact that a technology can have on the reputation and prestige of a person. Similarly, image, which is a factor taken from the Perceived Characteristics of Innovating framework (Moore and Benbasat 1991), symbolizes the belief that adopting a novel technology will increase one’s exposure to public notice.

The above factors are strongly present in our dataset and reflect the feelings of our participants who believe that autonomous cars will be instrumental in maximising their productivity and opportunities to communicate with friends, colleagues and family members (Fig. 5), in increasing people’s mobility (Fig. 6) and in saving lives (Fig. 7). The sum of all their concerns and anxieties associated with the use of AI-driven cars is evident from Fig. 1, but so are the benefits that, according to many of them, the adoption of this technology will bring, such as the elimination of the stress of driving (Fig. 5) and the reduction of traffic congestion (Fig. 6) and carbon emissions (Fig. 7). In addition, some of our participants believe that if they start using autonomous cars, their visibility and reputation within their social groups will increase (Fig. 5). Finally, most of them think that an autonomous car will be an easy and thus convenient technology to use (Fig. 5).

In this mix of feelings, there is an evident element of rationality at play. It is not that we, as individuals, are irrationally ignoring the fear of AI that we feel. Instead, we are taking this factor into account together with a number of other factors representing perceived benefits that, in this case study, outnumber and outweigh concerns about AI and the risks that it poses. The act of reasoning is like a scale (hence the name of our theoretical framework) that indeed weighs fear on its pans, but it is not limited to it. Going back to Asimov’s Frankenstein Complex, what we observe in both the data and key theories in the behavioural sciences is that fearing AI does not necessarily mean that society will reject it. Provided that people see individual, urban and global socio-environmental benefits in the use of AI, their fear of autonomous artificial intelligences will not be enough to discourage them from employing such technologies: rationality kicks in, putting emphasis on the many advantages that AI might generate.

However, while we argue that Scale’s holistic approach offers a significant theoretical benefit, by balancing both negative and positive emotions, we also acknowledge that our framework needs to be tested in different geographical contexts and potentially adjusted according to the specific places where research is being conducted. The reason is that we cannot assume that all the factors and phenomena taken into account by Scale are universal in nature. Fear, for example, is generally presented in the scientific literature as a basic emotion intrinsic to the human species regardless of where we were born and raised, but there is another strand of literature in which emotions like fear are seen in part as social constructs, meaning that their manifestation varies according to local cultures (Barrett and Russell 2014; Celeghin et al. 2017). This is particularly relevant for the study of the acceptance of AI, because we may assume that in animistic societies like Japan (see Jensen and Blok 2013), where objects are traditionally believed to be sentient, there would be much less resistance to the idea of an artificially intelligent entity, like a robot, becoming part of everyday life.

7 Conclusions: beyond the myth of the Frankenstein complex

In this final section, the narrative comes full circle as we revisit the initial question with which we started this paper: to what extent do people fear artificial intelligence? The answer is that they do, and quite substantially. We have shown this in Sect. 3 where we have illustrated the plethora of fears and concerns that our participants feel in relation to AI-driven cars. These are strong feelings denoting that most people are clearly afraid of being inside or simply near a car that is autonomously controlled by an artificial intelligence. However, fear is not preventing people from wanting to use the very same technology as soon as possible. We have shown in Sect. 4 that while a large majority of our respondents are afraid of autonomous cars, less than half of our sample (39%) actually does not intend to employ them. An equally considerable portion of the sample is overall in favour of using autonomous cars (39%) while 22% of the survey participants remain neutral.

We draw on these findings to answer our second research question: to what extent do people’s fears and concerns in relation to AI impact on their intention to adopt AI as part of their daily life? In this case, specifically in connection with AI-driven cars, we can see that fearing a car that is driven by AI is not enough for people to reject autonomous cars. In essence, the impact that fear is having on the intention to adopt AI tech as part of everyday urban mobility is minimal. This finding and its related conclusion might appear initially surprising and rather paradoxical as, according to psychological theory on fear, terror and phobias, one would expect a rational individual to reject what s/he believes could be a potential source of physical and/or psychological harm (Burke et al. 2010; Floyd et al. 2000; Sheeran et al. 2014; Solomon et al. 2015; Taylor 1998). And this is exactly what Asimov had theorized with his Frankenstein Complex, assuming that most people were going to be afraid of AI as a potential source of harm and that, consequently, AI technologies were bound to be rejected by society.

This apparent contradiction in the data can be explained by drawing upon the behavioural sciences, which help to expose the limits of Asimov’s hypothesis and, above all, to better understand the drivers of the acceptance of AI technology. This is what we did in Sect. 6 where we synthesized the Theory of Planned Behaviour (Ajzen 1991), the Technology Acceptance Model (Davis et al. 1989), the Technology Diffusion Theory (Rogers 2010) and the Perceived Characteristics of Innovating framework (Moore and Benbasat 1991), and developed a theoretical framework called Scale to help us see that fear is not the only factor at play when our opinions about AI technology and our intentions towards it are formed. In addition to fears and concerns, individuals consider the potential advantages that AI might bring and this is what we have also observed in the data, by emphasising in Sect. 5 that our participants believe that the employment of AI-driven cars will generate three sets of benefits: individual benefits (advantages connected to the life and mobility of the individual, such as having more time to relax), urban benefits (advantages for the whole city, like less traffic and fewer accidents) and global social and environmental benefits (advantages for global socio-environmental systems such as the climate of the planet).

We summarize our contribution’s insights in Fig. 9. Our research provides an empirically grounded understanding of the tension that exists between fear and adoption in relation to AI tech. As Fig. 9 illustrates, fear, as a basic emotion, gets triggered by specific threats that AI is posing, namely accidents, cyber-attacks and malfunctions, which would put humans at risk. Here it is important to note that what people actually fear is different from the risks that are commonly described in sci-fi literature: the so-called AI takeover is not a worry that is shaping the social perception of AI. Instead, what is heavily influencing people’s opinions about AI and their intention to use it are the individual, urban and global benefits that AI tech is likely to generate. Once again, there is a dissonance between reality and science fiction. The Frankenstein Complex, largely based on Asimov’s fictional AIs, does not match people’s willingness to accept AI technology, because nowadays individuals see in real-life AIs more benefits than risks.

However, while our insights are useful to capture the rational part inside us that consciously evaluates the pros and cons of AI tech, if that is the only analytical perspective that we adopt, the risk is that we might end up picturing people as 100% rational agents who are perfectly in control of their own intentions and, above all, of the information that influences their intentions. This representation would be as simplistic and problematic as saying that people are completely irrational creatures who embrace what they fear. It would also clash with recent theories in critical philosophy, social psychology and human geography that highlight how vulnerable people’s intentions are to the nudges of other actors, ranging from private companies to states (see Han 2017; Whitehead et al. 2019; Zuboff 2019). People are vulnerable to the point of seeing their behaviour influenced on a pre-reflexive level, that is, before rationality comes into play to ponder potential benefits and downsides. To counterbalance the insights from the behavioural sciences synthesized in our theoretical framework, future research should attempt to capture the more-than-rational factors behind people’s attitudes towards AI. Ultimately, as humans engaging with non-human intelligences, there is still a lot that we ignore and, worse, misunderstand about AI (Emmert-Streib et al. 2020; Floridi et al. 2020). And there is no greater fear than the fear of the unknown.

Data Availability

The datasets generated and analysed during the current study are available from the corresponding author on request.

References

Acheampong RA, Cugurullo F (2019) Capturing the behavioural determinants behind the adoption of autonomous vehicles: conceptual frameworks and measurement models to predict public transport, sharing and ownership trends of self-driving cars. Transp Res Part F Traffic Psychol Behav 62:349–375

Acheampong RA, Cugurullo F, Gueriau M, Dusparic I (2021) Can autonomous vehicles enable sustainable mobility in future cities? Insights and policy challenges from user preferences over different urban transport options. Cities 112:103134

Adolphs R (2013) The biology of fear. Curr Biol 23(2):R79–R93

Ajzen I (1991) The theory of planned behavior. Organ Behav Hum Decis Process 50(2):179–211

Akimoto K, Sano F, Oda J (2022) Impacts of ride and car-sharing associated with fully autonomous cars on global energy consumptions and carbon dioxide emissions. Technol Forecast Soc Chang 174:121311

Allam Z, Dhunny ZA (2019) On big data, artificial intelligence and smart cities. Cities 89:80–91

Arakawa T, Hibi R, Fujishiro TA (2019) Psychophysical assessment of a driver’s mental state in autonomous vehicles. Transp Res Part A Policy Pract 124:587–610

Asimov I (1990) The machine and the robot. Robot visions. Roc Books, New York, pp 361–367

Barns S (2021) Out of the loop? On the radical and the routine in urban big data. Urban Stud 42:986

Barrett LF, Russell JA (eds) (2014) The psychological construction of emotion. Guilford Publications, New York

Baum SD (2020) Social choice ethics in artificial intelligence. AI Soc 35(1):165–176

Bennett R, Vijaygopal R, Kottasz R (2019) Attitudes towards autonomous vehicles among people with physical disabilities. Transp Res Part A Policy Pract 127:1–17

Burke BL, Martens A, Faucher EH (2010) Two decades of terror management theory: a meta-analysis of mortality salience research. Pers Soc Psychol Rev 14(2):155–195

Caprotti F, Liu D (2020) Platform urbanism and the Chinese smart city: the co-production and territorialisation of Hangzhou City Brain. GeoJournal 2:1–15

Caro-Burnett J, Kaneko S (2022) Is society ready for AI ethical decision making? Lessons from a study on autonomous cars. J Behav Exp Econ 98:101881

Celeghin A, Diano M, Bagnis A, Viola M, Tamietto M (2017) Basic emotions in human neuroscience: neuroimaging and beyond. Front Psychol 8:1432

Central Statistics Office (2016) Census 2016 Sapmap Area: County Dublin City. https://census.cso.ie/sapmap2016/Results.aspx?Geog_Type=CTY31&Geog_Code=2AE19629143313A3E055000000000001 Accessed 01 July 2022

Chen B, Sun D, Zhou J, Wong W, Ding Z (2020) A future intelligent traffic system with mixed autonomous vehicles and human-driven vehicles. Inf Sci 529:59–72

McCarroll C, Cugurullo F (2022b) No city on the horizon: autonomous cars, artificial intelligence, and the absence of urbanism. Front Sustain Cities 4:937933. https://doi.org/10.3389/frsc.2022.937933

Coletta C, Heaphy L, Kitchin R (2019) From the accidental to articulated smart city: the creation and work of ‘Smart Dublin.’ Eur Urban Region Stud 26(4):349–364

Crawford K (2021) Atlas of AI: power, politics, and the planetary costs of artificial intelligence. Yale University Press, New Haven

Cugurullo F (2020) Urban artificial intelligence: from automation to autonomy in the smart city. Front Sustain Cities 2:38

Cugurullo F (2021) Frankenstein urbanism: Eco, smart and autonomous cities, artificial intelligence and the end of the city. Routledge, New York

Cugurullo F, Acheampong RA, Gueriau M, Dusparic I (2020) The transition to autonomous cars, the redesign of cities and the future of urban sustainability. Urban Geogr 2:1–27

Curtis G, Magee WJ, Eaton WW, Wittchen HU, Kessler RC (1998) Specific fears and phobias: epidemiology and classification. Br J Psychiatry 173(3):212–217

Danziger K (1997) Naming the mind: how psychology found its language. Sage, London

Darcy S, Burke PF (2018) On the road again: the barriers and benefits of automobility for people with disability. Transp Res Part A Policy Pract 107:229–245

Dastani M, Yazdanpanah V (2022a) Responsibility of AI systems. AI Soc 2:1–10

Dauvergne P (2020) AI in the wild: sustainability in the age of artificial intelligence. MIT Press, Cambridge

Davis FD, Bagozzi RP, Warshaw PR (1989) User acceptance of computer technology: a comparison of two theoretical models. Manag Sci 35(8):982–1003

Depla MF, Ten Have ML, van Balkom AJ, de Graaf R (2008) Specific fears and phobias in the general population: results from the Netherlands Mental Health Survey and Incidence Study (NEMESIS). Soc Psychiatry Psychiatr Epidemiol 43(3):200–208

Dowling R, McGuirk P (2020) Autonomous vehicle experiments and the city. Urban Geogr 2:1–18

Ekman P (1992) An argument for basic emotions. Cogn Emot 6(3–4):169–200

Emmert-Streib F, Yli-Harja O, Dehmer M (2020) Artificial intelligence: a clarification of misconceptions, myths and desired status. Front Artif Intell 3:524339

Fakhrmoosavi F, Saedi R, Zockaie A, Talebpour A (2020) Impacts of connected and autonomous vehicles on traffic flow with heterogeneous drivers spatially distributed over large-scale networks. Transp Res Rec 2674(10):817–830

Floridi L, Cowls J, King TC, Taddeo M (2020) How to design AI for social good: seven essential factors. Sci Eng Ethics 26(3):1771–1796

Floyd DL, Prentice-Dunn S, Rogers RW (2000) A meta-analysis of research on protection motivation theory. J Appl Soc Psychol 30(2):407–429

Fredrikson M, Annas P, Fischer H, Wik G (1996) Gender and age differences in the prevalence of specific fears and phobias. Behav Res Ther 34(1):33–39

Fridman L, Brown DE, Glazer M, Angell W, Dodd S, Jenik B et al (2019) MIT advanced vehicle technology study: large-scale naturalistic driving study of driver behavior and interaction with automation. IEEE Access 7:102021–102038

Gaio A, Cugurullo F (2022) Cyclists and autonomous vehicles at odds. AI Soc 2:1–15

Gu S, Wang F, Patel NP, Bourgeois JA, Huang JH (2019) A model for basic emotions using observations of behavior in Drosophila. Front Psychol 10:781

Guériau M, Cugurullo F, Acheampong RA, Dusparic I (2020) Shared autonomous mobility on demand: a learning-based approach and its performance in the presence of traffic congestion. IEEE Intell Transp Syst Mag 12(4):208–218

Han BC (2017) Psychopolitics: neoliberalism and new technologies of power. Verso Books, London

Harb M, Malik J, Circella G, Walker J (2022) Glimpse of the future: simulating life with personally owned autonomous vehicles and their implications on travel behaviors. Transp Res Rec 2676(3):492–506

Hinks T (2020) Fear of robots and life satisfaction. Int J Soc Robot 2:1–14

Hopkins D, Schwanen T (2021) Talking about automated vehicles: What do levels of automation do? Technol Soc 64:101488

Izard CE (2007) Basic emotions, natural kinds, emotion schemas, and a new paradigm. Perspect Psychol Sci 2(3):260–280

Jackman A (2022) Domestic drone futures. Polit Geogr 97:102653

Jensen CB, Blok A (2013) Techno-animism in Japan: Shinto cosmograms, actor-network theory, and the enabling powers of non-human agencies. Theory Cult Soc 30(2):84–115

Jones EC, Leibowicz BD (2019) Contributions of shared autonomous vehicles to climate change mitigation. Transp Res Part D Transp Environ 72:279–298

Kaker SA, Evans J, Cugurullo F, Cook M, Petrova S (2020) Expanding cities: living, planning and governing uncertainty. In: Scoones I, Stirling A (eds) The politics of uncertainty. Routledge, London, pp 85–98

Karvonen A, Cugurullo F, Caprotti F (eds) (2018) Inside smart cities: place, politics and urban innovation. Routledge, London

Kassens-Noor E, Wilson M, Cai M, Durst N, Decaminada T (2021) Autonomous vs self-driving vehicles: the power of language to shape public perceptions. J Urban Technol 28(3–4):5–24

Khasawneh OY (2018) Technophobia: examining its hidden factors and defining it. Technol Soc 54(1):93–100

Kitchin R, Dodge M (2019) The (in)security of smart cities: vulnerabilities, risks, mitigation, and prevention. J Urban Technol 26(2):47–65

Kok G, Peters GJY, Kessels LT, Ten Hoor GA, Ruiter RA (2018) Ignoring theory and misinterpreting evidence: the false belief in fear appeals. Health Psychol Rev 12(2):111–125

Leszczynski A, Elwood S (2022) Glitch epistemologies for computational cities. Dialog Hum Geogr 20:438

Li J, Huang JS (2020) Dimensions of artificial intelligence anxiety based on the integrated fear acquisition theory. Technol Soc 63:101410

Liang Y, Lee SA (2017) Fear of autonomous robots and artificial intelligence: evidence from national representative data with probability sampling. Int J Soc Robot 9(3):379–384

Lu Q, Tettamanti T, Hörcher D, Varga I (2020) The impact of autonomous vehicles on urban traffic network capacity: an experimental analysis by microscopic traffic simulation. Transp Lett 12(8):540–549

Lupia A, McCubbins MD, Popkin SL (eds) (2000) Elements of reason: cognition, choice, and the bounds of rationality. Cambridge University Press, Cambridge

Lutz B, Marsicano G, Maldonado R, Hillard CJ (2015) The endocannabinoid system in guarding against fear, anxiety and stress. Nat Rev Neurosci 16(12):705–718

Luusua A, Ylipulli J, Foth M, Aurigi A (2022) Urban AI: understanding the emerging role of artificial intelligence in smart cities. AI Soc 2:2

Maalsen S (2022) The hack: What it is and why it matters to urban studies. Urban Stud 59(2):453–465

Malokin A, Circella G, Mokhtarian PL (2019) How do activities conducted while commuting influence mode choice? Using revealed preference models to inform public transportation advantage and autonomous vehicle scenarios. Transp Res Part A Policy Pract 124:82–114

McCarroll C, Cugurullo F (2022a) Social implications of autonomous vehicles: a focus on time. AI Soc 37(2):791–800

Meissner G (2020) Artificial intelligence: consciousness and conscience. AI Soc 35(1):225–235

Milakis D, Van Arem B, Van Wee B (2017) Policy and society related implications of automated driving: a review of literature and directions for future research. J Intell Transport Syst 21(4):324–348

Milakis D, Thomopoulos N, Van Wee B (2020) Policy implications of autonomous vehicles. Academic Press, Cambridge

Mintrom M, Sumartojo S, Kulić D, Tian L, Carreno-Medrano P, Allen A (2021) Robots in public spaces: implications for policy design. Policy Des Pract 2:1–16

Moore GC, Benbasat I (1991) Development of an instrument to measure the perceptions of adopting an information technology innovation. Inf Syst Res 2(3):192–222

Muris P, Merckelbach H, de Jong PJ, Ollendick TH (2002) The etiology of specific fears and phobias in children: a critique of the non-associative account. Behav Res Ther 40(2):185–195

Naudé W, Dimitri N (2020) The race for an artificial general intelligence: implications for public policy. AI Soc 35(2):367–379

Osiceanu ME (2015) Psychological implications of modern technologies: “technofobia” versus “technophilia.” Proc Soc Behav Sci 180:1137–1144

Parra I, García-Morcillo A, Izquierdo R, Alonso J, Fernández-Llorca D, Sotelo MA (2017) Analysis of ITS-G5A V2X communications performance in autonomous cooperative driving experiments. IEEE Intell Veh Symp (IV) 2017:1899–1903

Rezaei A, Caulfield B (2021) Safety of autonomous vehicles: what are the insights from experienced industry professionals? Transp Res Part F Traffic Psychol Behav 81:472–489

Rogers EM (2010) Diffusion of innovations, 4th edn. The Free Press, New York

Sheehan B, Murphy F, Mullins M, Ryan C (2019) Connected and autonomous vehicles: a cyber-risk classification framework. Transp Res Part A Policy Pract 124:523–536

Sheeran P, Harris PR, Epton T (2014) Does heightening risk appraisals change people’s intentions and behavior? A meta-analysis of experimental studies. Psychol Bull 140(2):511

Siegel J, Pappas G (2021) Morals, ethics, and the technology capabilities and limitations of automated and self-driving vehicles. AI Soc 2:1–14

Solomon S, Greenberg J, Pyszczynski T (2015) The worm at the core: on the role of death in life. Penguin, London

Sprenger F (2020) Microdecisions and autonomy in self-driving cars: virtual probabilities. AI Soc 2:1–16

Stanovich K (2011) Rationality and the reflective mind. Oxford University Press, Oxford

Stilgoe J (2018) Machine learning, social learning and the governance of self-driving cars. Soc Stud Sci 48(1):25–56

Stilgoe J (2020) Who’s driving innovation? New technologies and the collaborative state. Palgrave Macmillan, London

Taeihagh A, Lim HSM (2019) Governing autonomous vehicles: emerging responses for safety, liability, privacy, cybersecurity, and industry risks. Transp Rev 39(1):103–128

Talebpour A, Mahmassani HS (2016) Influence of connected and autonomous vehicles on traffic flow stability and throughput. Transp Res Part C Emerg Technol 71:143–163

Taylor S (1998) The hierarchic structure of fears. Behav Res Ther 36(2):205–214

Tennant C, Stilgoe J (2021) The attachments of ‘autonomous’ vehicles. Soc Stud Sci 51(6):846–870

Tiddi I, Bastianelli E, Daga E, d’Aquin M, Motta E (2020) Robot–city interaction: mapping the research landscape—a survey of the interactions between robots and modern cities. Int J Soc Robot 12(2):299–324

While AH, Marvin S, Kovacic M (2021) Urban robotic experimentation: San Francisco, Tokyo and Dubai. Urban Stud 58(4):769–786

Whitehead M, Jones R, Lilley R, Howell R, Pykett J (2019) Neuroliberalism: cognition, context, and the geographical bounding of rationality. Prog Hum Geogr 43(4):632–649

Willis KS, Aurigi A (eds) (2020) The Routledge companion to smart cities. Routledge, London

World Health Organization (2018) Road traffic injuries. http://www.who.int/news-room/fact-sheets/detail/road-traffic-injuries. Accessed 01 July 2022

Yigitcanlar T, Cugurullo F (2020) The sustainability of artificial intelligence: an urbanistic viewpoint from the lens of smart and sustainable cities. Sustainability 12(20):8548

Yigitcanlar T, Butler L, Windle E, Desouza KC, Mehmood R, Corchado JM (2020) Can building “artificially intelligent cities” safeguard humanity from natural disasters, pandemics, and other catastrophes? An urban scholar’s perspective. Sensors 20(10):2988

Zang S, Ding M, Smith D, Tyler P, Rakotoarivelo T, Kaafar MA (2019) The impact of adverse weather conditions on autonomous vehicles: how rain, snow, fog, and hail affect the performance of a self-driving car. IEEE Veh Technol Mag 14(2):103–111

Zhao C, Liao F, Li X, Du Y (2021) Macroscopic modeling and dynamic control of on-street cruising-for-parking of autonomous vehicles in a multi-region urban road network. Transp Res Part C Emerg Technol 128:103176

Zuboff S (2019) The age of surveillance capitalism: the fight for a human future at the new frontier of power. Profile Books, London

Acknowledgements

We wish to thank the Irish Research Council for funding this study (New Horizons Interdisciplinary Research Project Award 206296.14493) and, especially, all the research participants who spent their time sharing their fears with us. The authors have no relevant financial or non-financial interests to disclose.

Curmudgeon Corner

Curmudgeon Corner is a short opinionated column on trends in technology, arts, science and society, commenting on issues of concern to the research community and wider society. Whilst the drive for super-human intelligence promotes potential benefits to wider society, it also raises deep concerns of existential risk, thereby highlighting the need for an ongoing conversation between technology and society. At the core of Curmudgeon concern is the question: What is it to be human in the age of the AI machine? -Editor.

Funding

Open Access funding provided by the IReL Consortium.

Author information

Contributions

Conceptualization: FC; methodology: FC and RA; formal analysis and investigation: FC and RA; writing—original draft preparation: FC; writing—review and editing: FC and RA; funding acquisition: FC.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cugurullo, F., Acheampong, R.A. Fear of AI: an inquiry into the adoption of autonomous cars in spite of fear, and a theoretical framework for the study of artificial intelligence technology acceptance. AI & Soc (2023). https://doi.org/10.1007/s00146-022-01598-6
