Abstract

Putting laypeople in an active role as direct expert contributors to the design of service robots is becoming increasingly prominent in the research fields of human–robot interaction (HRI) and social robotics (SR). Currently, though, HRI is caught in a dilemma of how to create meaningful service robots for human social environments that reconcile expectations shaped by popular media with technology readiness. We recapitulate traditional stakeholder involvement, including two cases in which new intelligent robots were conceptualized and realized for close interaction with humans. Thereby, we show how three aspects pose specific challenges for participatory design processes in HRI: the robot narrative (shaped by science fiction, the term robot itself, and assumptions about human-like intelligence); the power balance between stakeholders, affected by hardware constraints and missing perspectives beyond primary users; and the adaptivity of robots through machine learning, which creates unpredictability. We conclude with thoughts on a way forward for the HRI community in developing a culture of participation that considers humans when conceptualizing, building, and using robots.

1 Introduction

In recent years, the development of co-existing robots (Riek 2014) has gained increasing momentum and interest among researchers and industry. These robots are defined as operating in a human social environment, being physically embodied, and having at least some degree of autonomy (Riek 2014). They are envisioned to support humans in useful ways and to allow humans to interact with them naturally and intuitively (Dautenhahn et al. 2005). This vision encompasses a range of challenges, e.g. robots having to cope with dynamic spaces, needing some form of “social” learning and affect awareness, and requiring an understanding of social norms (Riek 2014).

Long-term field trials, however, revealed that commercially available co-existing robots, such as Karotz (Graaf et al. 2017b), Pleo (Fernaeus et al. 2010), and Anki Vector (Tsiourti et al. 2020), fail to be sustainably integrated into people’s everyday lives [with vacuum cleaning robots being an exception (Fink 2014; Sung et al. 2009)]. Reasons for rejecting these robots include a perceived lack of functionality, utility, and social intelligence, as well as a mismatch with people’s expectations (Graaf et al. 2017a; Rantanen et al. 2018). For future co-existing robots to succeed in our everyday lives, they need to respond to human needs. In human–computer interaction (HCI) research, participatory design (PD) has a long tradition and holds potential for the collaborative development of meaningful technologies (Harrington et al. 2019).

At the core of PD stands an emphasis on mutual learning between researchers (designers) and laypeople (experts in lived experience), and on reducing social hierarchies by acknowledging both parties’ agency in the decision-making process (Bannon and Ehn 2012). PD is considered particularly suitable for real-world problems that require close collaboration between designers, developers, and domain experts to achieve relevant and meaningful solutions (Von Hippel 2009).

Traditional user-centered development (UCD) of social service robots has so far often resulted in specific design factors derived from user studies (Lee et al. 2009; Sirkin et al. 2015; Weiss et al. 2015), but has not successfully engaged lay experts in all stages of conceptualizing, building, and using these robots (Frederiks et al. 2019). Faced with the challenges of developing robots for sustained and meaningful use, PD has increasingly gained popularity in human–robot interaction (HRI) and social robotics (SR) in recent years. The role of lay experts has shifted from mere informants to co-designers of robotic technology. Bertel et al. (2013), for instance, proposed a framework for PD in educational robotics. Lee et al. (2017) outlined how to involve older adults in the development of care robots. Azenkot et al. (2016) used a PD approach with a group of designers and lay experts with a range of visual disabilities to elicit design recommendations for service robots that guide a blind person through a building in an effective and socially acceptable way. Rose and Björling (2017) conducted a series of participatory design sessions with teenagers to design a social robot envisioned to measure their stress.

These previous works mention challenges for PD in robot development, such as its time-consuming nature and the fact that off-the-shelf components have made robot prototyping faster and more cost-efficient, but not necessarily easier. We agree that the development of co-existing social service robots poses additional challenges to PD that go beyond those we face in the development of other interactive technologies. In this article, we critically reflect on the involvement of lay experts in the development of two co-existing robots: IURO, a robot for citizen-assisted navigation, and Hobbit, a robot for elderly care. Both projects followed a user-centered approach (led by the first author) and faced HRI-specific challenges of which we want to make fellow researchers aware. This might collectively allow us to avoid encountering the same issues over and over again and instead put us in a better position to address the robot-specific challenges to PD more adequately. Based on our analysis, we propose a way forward for the HRI community in developing a culture of participation when conceptualizing, building, and using robots.

2 Involving lay experts in technology development

Participatory design (PD) has its origins in Scandinavia and was developed in the context of the changing nature of work through technology (Gregory 2003; Simonsen and Robertson 2012). Technology was seen as a means to replace low-skilled workers and increase productivity; as a counter-proposal, PD aimed to create alternative futures in which workers are enabled and “re-qualified” through technology by involving them in the design process. In this way, PD was meant to support the democratization of labor markets (Bødker et al. 1987). Since then, PD has become an important HCI research topic for the design of technology for and with people (Muller 2003), combining technological expertise with domain and practical knowledge to design alternative technological futures.

2.1 Challenges of PD in HCI research

Within HCI, PD researchers have wrestled with a range of challenges affecting processes and the realization of PD’s democratizing and empowering potential. One challenge concerns the technological priming of lay experts, who might exhibit less creativity regarding novel forms of interaction and struggle with ‘design thinking’ (Bjögvinsson et al. 2012). To account for this issue, the field has developed a range of diverse methods allowing researchers and lay experts to engage with each other collaboratively and at eye level [cf. (Simonsen and Robertson 2012)]. However, the field has only just started to review these methods, to develop more flexible approaches that respond to the abilities and preferences of a diverse range of potential lay experts (Frauenberger et al. 2017), and to assess the outcomes of PD processes in a similarly collaborative manner (Spiel et al. 2017). In addition, PD researchers have increasingly discussed power dynamics and differentials and their effects on design (Bratteteig and Wagner 2012) and have formulated suggestions for more equitable processes (Harrington et al. 2019). While these complex concerns are also relevant for HRI research, the design and development of robots come with an additional set of situated challenges.

2.2 Feasibility of PD in HRI research

The development of robotic systems based on the understanding and interpretation of a domain problem is one of the core interests of human-centered HRI research (Dautenhahn 2007). Already in 2010, Šabanović (2010) stressed that in this process, society (the context of use and the stakeholders involved) is an active shaper rather than a “passive receptor” of robots. After carefully considering requirements from various stakeholders and getting to know the envisioned contexts of use and potential tasks in which the robot could be helpful, many design decisions are made to best support the intended populations. However, these decisions are often shaped mainly by designers and developers. The resulting working prototype may suit the target group or fail to meet its expectations.

For example, in the context of residential care: to develop appropriate robot designs, we need to get input from various stakeholders: roboticists, designers, psychologists, gerontologists etc. (a broad consensus in the HRI research community). However, we also need to acknowledge that every prototypical robot design is inextricably related to the specific problem context, the technological readiness level, the design, and the people, including the researchers involved in the development (Hornecker et al. 2020). What HRI researchers learn in the development process of robotic systems is, therefore, very personal, positional, and specific. This situated nature of HRI research means that our studies and prototypes can only be understood contextually. Robot sociality is mutually constructed through socially situated interactions with humans and with their broader social environment (Chang and Sabanovic 2015).

However, context relates not only to stakeholders, domain, and cultural backdrop, but also to the views, values, intuitions, and expertise of researchers. Personal stories and experiences of roboticists feed into the development of robot designs: robotic scientists were often unaware that the models they used for their machines were connected with their own personal difficulties; robots are intertwined with their makers in psycho-physical terms [Richardson 2015, p. 109]. Similarly, the relationship between humans and artifacts is constantly and mutually reconstituted, and this process depends on the imagination and ingenuity of robot designers [Niu et al. 2015, p. 7]. However, engineering perspectives still dominate these imaginations (Cheon and Su 2016).

The involvement of lay experts in HRI and SR can happen at different phases: (1) the conceptualization/design of a new social service robot, (2) the building of a new robot or the amendment of an existing robot platform for a new use case, and (3) the use of a new social service robot. The phases of building/amendment and use are likely to pose more challenges for PD, as they offer a more constrained space in which participants are given the power to shape the technological context (Bratteteig and Wagner 2012). When new social service robots are conceptualized, potential stakeholders get the chance to express their thoughts and opinions to identify appropriate designs and uses of future robots. Existing PD techniques have already been used successfully in this phase to involve lay experts in the design. For example, to explore ideal domestic robots, Caleb-Solly et al. (2014) used embodiment and scenario-focused workshops with older adults. Lee et al. (2012) chose a similar approach to develop concepts for domestic robots. Stollnberger et al. (2016) describe the development of a medical robotic system in the project ReMeDi, in which they worked together with doctors, patients, and assistants to mutually shape and negotiate specifications. In principle, the design space at this stage is completely open, but it is often driven by researcher assumptions and/or project visions, i.e. the context and intent of use are usually predetermined.

Involving lay experts in building a new robot or in amending an existing platform poses challenges that are harder to address with current PD methods. Typically, after a first conceptualization phase with lay experts, an initial prototype is built or reconfigured to be partially or fully functional and is then studied with participants in the lab. A series of lab studies follows, and the resulting findings are iteratively applied to the robot design.

Involving lay experts in the phase of use is typically done in a summative evaluation of the fully integrated and autonomously working system “in the wild”, i.e. in its actual context of use. Rarely, though with the potential for more applicable findings, participants in such field trials are additionally involved in evaluating and making meaning of a robot’s intended purpose. Long-term field studies of complex robotic systems in which lay experts can actively shape and personalize their robots are still an exception. This phase poses a significant challenge for PD, because lay experts are meant to actually use the robots in the deployment domain. Here, we need to design means by which people can customize, program, and train robots after they have been deployed (Dautenhahn 2004; Frederiks et al. 2019; Saunders et al. 2015). However, long-term field trials mainly deploy commercially available robot systems with limited capabilities [e.g. Fernaeus et al. 2010; Fink 2014; Graaf et al. 2017b], while others have so far focused on usability and acceptance studies of developed systems rather than on laypeople involvement [e.g. (Bajones et al. 2019)].

In the example projects IURO (Weiss et al. 2015) and Hobbit (Bajones et al. 2018), we aimed to involve lay experts in all phases (conceptualizing, building, and using) and experienced how difficult it can be to establish a participation culture, above all in the implementation and use of the robot. We reflect on particularities of social service robot design that might indicate why a “users as informants” paradigm is still dominant in the HRI academic culture, and on how to address the related hurdles.

3 The cases of IURO and Hobbit

IURO stands for Interactive Urban Robot. This robot was developed following a UCD approach within an EU-funded project from 2010 to 2013. The main vision of the IURO project was to develop a robot that can independently and autonomously navigate from a starting point to a designated place in a public urban environment by asking pedestrians for guidance to find its way. In other words, the robot is placed in a public environment without any previous topological information and navigates to a destination using only information obtained from people and its own proprioception. Laypeople involvement happened at all stages of the design process (see Table 1) and informed the spiral design of the robot’s mechanical construction (see Fig. 1).

Fig. 1 The spiral design of the robot’s mechanical construction (Weiss et al. 2015). The process consists of nine individual steps across designing, prototyping, and testing that are iteratively repeated.

The basic idea of the EU-funded project Hobbit (2011–2015) was to build a service robot that enables older people to feel safe and to stay longer in their private homes using new technology, including smart environments (Ambient Assisted Living, AAL). The project also followed a user-centered design approach.

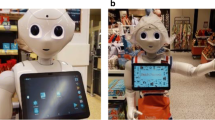

The main goal of the robot (see Fig. 2 for different development stages) was to provide a “feeling of safety and being supported” while maintaining or increasing older adults’ sense of self-efficacy (their belief in their own ability to complete tasks). Consequently, the design team focused the robot’s functionalities on emergency detection (mobile vision and AAL), handling emergencies (calming dialogues, communication with relatives, etc.), and fall prevention measures (keeping floors clutter-free, transporting small items, searching for and bringing objects, and reminders) (Bajones et al. 2018). Moreover, high usability, acceptance, and a reasonable level of affordability were considered key criteria for the sustainable success of the robot (Vincze et al. 2014). As in IURO, laypeople involvement occurred at all stages of the Hobbit project (see Table 2).

Fig. 2 The Hobbit robot at different development stages: on the left, two different mock-ups; in the middle, the first prototype; and on the right, the final robot (Bajones et al. 2018).

Even though both IURO and Hobbit aimed to involve laypeople at all stages of development, the main research directions (e.g. basing the interaction with IURO on natural dialogue, or Hobbit fetching and carrying objects for people) were given by the project visions (and funding proposals) and reinforced by the researchers’ ambitions. Participants had a chance to express their thoughts about mobile robots in the street or a helping companion robot for older adults, but had little opportunity to bring up other issues that were important to them. Participants mainly acted as informants to the research team rather than as collaborators actively joining in the agenda-setting and decision-making process of design. In the Hobbit project, this was also critically noted by some of the people who participated in several activities; they explicitly stated that they felt their voices were not heard (Frennert et al. 2013). Researchers in both projects experienced the transfer of knowledge from one stage to the next, and mutual learning about how this knowledge could be integrated into the separate stages of robot development, as the main challenge.

4 Challenges for a participation culture in robot development

Developing IURO and Hobbit revealed several challenges with regard to a participation culture. These challenges differ from those of other computing technologies in that (1) the narrative of service robots comes with a lot of baggage (from popular media as well as from researchers), (2) power balancing between stakeholders is affected by hardware constraints and a lack of perspectives beyond primary users, and (3) robots as autonomous agents will be adaptive, meaning that their behavior changes over time, is not completely predictable, and cannot be fully designed in advance. As we will argue later, the first aspect can be (and already is) addressed well with current PD methods if they are properly applied within an HRI project. The second and third, however, are more critical if we want to let all stakeholders participate in actually conceptualizing, building, and using robots.

4.1 The robot narrative

To make design suggestions and take design decisions for service robots, participants need to know enough about the technological possibilities and limitations to participate on an equal and firm footing in the design process. However, the struggle often begins with the term robot itself and all the narratives that come along with it. Even roboticists do not agree on a single definition of what makes a robot a robot (Nourbakhsh 2013), and one often hears the statement “I recognize a robot when I see one”. However, roboticists and HRI researchers share common ground on the technologies underlying an interactive and/or autonomous system, such as machine vision, behavior coordination, localization, and mapping.

4.1.1 The impact of science fiction

Lay experts, in contrast, often use popular culture or media representations as a baseline for what a robot is (Bruckenberger et al. 2013; Feil-Seifer and Matarić 2009; Kriz et al. 2010). These reference points can result in unrealistic expectations, as shown with children in educational robotics workshops (Veselovská and Mayerová 2014). Science fiction movies have often been inspired by real science and, in turn, have inspired scientists to keep up with the fictional inventions presented (Lorenčík et al. 2013). Personal contact with real robots has influenced people’s expectations and made them more realistic, e.g. in the context of field studies with service robots (Mirnig et al. 2012b) or in a private context with a robot pet such as AIBO (Bartneck et al. 2007).

Three studies performed within the IURO project (Bruckenberger et al. 2013) revealed that previous experience with fictional robots through the media not only increased expectations towards the actual IURO robot, but also led people to take a future society that includes robots for granted. Hence, on the one hand, science fiction makes it more difficult for actual robots to be accepted; on the other hand, it paves the way for the acceptance of service robots. In light of this trade-off, we suggest actively introducing state-of-the-art robotic technology to participants (e.g. akin to technology immersion (Druin 1999)) to reduce the impact of unrealistic expectations shaped by science fiction.

4.1.2 The connotations of the term robot

Participation in creating design ideas for service robots therefore relies on the competence of all participants (including researchers and/or designers) to imagine possible futures and to recognize, acknowledge, and value each other’s suggestions. Classic PD storytelling methods such as excursions (visiting functioning system installations), scenarios, or future workshops can help people understand what state-of-the-art service robots can do and indicate what future robots will potentially be capable of.

In the Hobbit project, the term “robot” was intentionally avoided in the requirement workshops (Huber et al. 2014). To identify expectations for the design of the robot, only the term “helper” was used in an association study procedure. This was done to prevent technologically colored associations [Huber et al. 2014, p. 105]. Techniques such as imagining what a “perfect helper” would look like were meant to avoid all the baggage that comes with the term robot; however, they also opened up an unrealistic design space for the project. For example, one participant stated: “This helper will need to clean my windows and water my plants”. Equipping a service robot with the capability of cleaning windows and watering plants would be a project in its own right. One can also imagine how frustrated that participant was when she took part in the first laboratory trials with the robot and it had no functionality remotely close to cleaning.

Such situations can be avoided if participants have a basic understanding of the capabilities and limitations of state-of-the-art service robots. One approach is to show pictures or videos of robots as a discussion stimulus. In the IURO project, this approach was chosen to familiarize participants with state-of-the-art robotic platforms and to avoid too much bias from science fiction (Förster et al. 2011). Lee et al. (2017) decided to show videos of existing robotic systems for elderly care to learn how older adults interpret and critique them. In their 5-step plan, Lammer et al. (2015) suggest working with definitions of the terms ‘technology’ and ‘robot’ to give children a basic understanding of the boundary object ‘robot’, which is the core concept of their educational robotics approach.

A participatory stance here would not try to avoid disillusioning participants, but would rather try to identify the higher-level concepts that are addressed through the rhetoric of robots or helpers. This means investigating what these metaphors and allegories might mean and what is important to whom in using them. To appropriately acknowledge participants’ ideas, this might sometimes mean abandoning the idea of creating a ‘robot’ when other interactive technologies might be better suited to addressing a specific need or desire.

4.1.3 The assumption of human-like intelligence

Another critical aspect lies in the expectation of human-like intelligence in robots. Since humans acquire capabilities in a particular order, such that more basic capabilities provide the basis for more complex ones, they find it hard to imagine that a robot can have a sophisticated ability without having acquired all the more basic capabilities (Fischer 2006). This was also observed in the IURO field trials, when pedestrians asked us after the interaction how it was possible that the robot was capable of autonomously navigating from A to B but did not know where to go in the first place. Similarly, field trial participants in the Hobbit project found it difficult to comprehend why the robot could pick up objects from the floor but was only able to find and grasp specific objects that it had previously learned about, i.e. that the activity (grasping) was not generalized beyond specifically defined instances (objects).

At a later stage of the development process of a service robot (after a first requirement analysis and implementation phase), Wizard-of-Oz (WoZ) settings may foster unrealistic expectations. WoZ is a technique frequently used in HRI research: a person (usually a developer) remotely operates a robot and puppeteers many of its attributes, such as speech, navigation, and manipulation, with varying degrees of control (Riek 2012). HRI practitioners are aware of the ethical risks involved in creating an illusion of robot intelligence. However, WoZ can also foster inappropriate expectations among people interacting with the robot (Riek and Howard 2014). For example, Bertel et al. (2013) argued that in educational robotics, children become disappointed when they meet “real” robots after a wizarded study.

Another critical aspect of WoZ studies was observed in the IURO project: findings from the wizarded lab trials (Mirnig et al. 2012a) did not transfer to the field trials. As a design element, developers decided to add a pointer on the head of the robot to indicate the direction in which it would go. This decision was made because they did not want to give the robot arms, which could wrongly raise the expectation that it could manipulate objects (e.g. open doors).¹ In the lab trial with a WoZ setup, participants considered the information conveyed by the pointer helpful; therefore, the team decided to keep it for later prototypes. However, in the field trial, not a single glance at the now functional pointer was observed, and none of the participants mentioned it in the post-interaction interviews (Weiss et al. 2015).

4.2 Power balancing of stakeholders

Traditional stakeholder involvement in HRI does not easily allow researchers and participants to gradually reach an equal footing in robot design across sessions. As a result, participants often do not develop competency in interacting with the robot and consequently do not feel more confident about their ability to contribute to design. In turn, researchers do not become more conscious of different perspectives on robot capabilities, usefulness, and challenges, and continue to consider their technical expert knowledge superior to the lived expertise of participants. This mostly affects decisions concerning system integration after the conceptualization phase.

4.2.1 Dominant hardware decisions

Researchers’ and developers’ personal robot narratives and their interpretation of outside contributions to the design process (Cheon and Su 2016) heavily affect the actual building of the robot. This stage of the process fundamentally lacks equitable PD approaches for sharing decision power with lay experts. Concretizing ideas about service robots means physically building them and integrating all the necessary behavior modules. Many decisions about the actually implemented HRI are made during development without any outside involvement, and many necessary decisions cannot be predicted in advance and even come as a surprise to the researchers during the process.

Some design decisions in service robotics are based solely on hardware constraints, such as where to put sensors, the overall size of a robot, and its general appearance. However, these design decisions are hardly ever made together with stakeholders outside the research and development teams, and they are scarcely documented in scientific papers. Often, the team itself is not consciously aware of how, when, and why these decisions were made. As in design research in general, “much of the value of prototypes as carriers of knowledge can be implicit or hidden. They embody solutions, but the problems they solve may not be recognized” [Stappers 2007, p. 7].

For example, the final design of the Hobbit robot was heavily determined by its sensor set-up and the hardware that needed to be integrated into one mobile service robot platform. Even though the project team had design suggestions from participants of co-design workshops (see Fig. 2), decisions such as the height of the robot, the width of its torso, and the height and tilting angle of the head were driven by the sensor set-up required for the fundamental functionalities, namely object detection, obstacle avoidance, object grasping, and object learning (see Fig. 3).

Fig. 3 Visualization of the sensor set-up of two RGB-D sensors, which dominated the appearance design decisions for the second Hobbit prototype (Puente et al. 2014)

Similarly, in the IURO project, the participatory conceptualization of the robot in a design workshop (Förster et al. 2011) was not transferred to the actual building of the robot. The workshop specifically challenged the assumption that a service robot in public space is expected to have a human-like appearance and revealed a preference for a non-anthropomorphic design. Participants wished for a robot with an animal-like head and only one arm for object manipulation (see Fig. 4). However, the design decisions on the humanoid head, the four-wheeled platform, and the two rubbery “dummy arms” stem from the spiral design of IURO’s mechanical construction and its hardware manufacturing (see Fig. 4). For example, one project decision was to base the head of IURO on an existing robotic head but to create a nicer cover for it; in this process, the participants’ wish for an animal-like appearance got lost. Similarly, other design decisions were ultimately made by the project researchers alone, without any outside involvement:

“Based on the findings […], it was decided that the IURO robot should appear as a combination of human-oriented perception cues with an anthropomorphic, but not entirely humanoid appearance. Therefore, we aimed to combine a humanoid robot head with a functionally designed body. As the robot did not need to be able to grasp or manipulate anything we decided against equipping it with an arm with a pointing hand. Thereby, we wanted to avoid wrong expectations if the robot had a hand, but would not be able to grasp with it. Instead, we used a pointer, mounted on the head of the robot, for indicating directions.” [Weiss 2009, p. 45f]

Both cases illustrate how designers and developers, left to their own devices, tend to prioritize their own technical expertise or that of their peers over participants’ expertise regarding their lived experiences. Hence, before design decisions are implemented, designers should check in with their participants to allow them to challenge those decisions. Note, though, that it can then be tempting to fall into confirmation bias when defending one’s design decisions to participants. To account for this, researchers and designers need to take on the position of their participants without challenging it or defending their own.

4.2.2 Missing perspectives beyond primary users

Creating meaningful design ideas for service robots (as for every other technology) requires the inclusion of all relevant stakeholders. Typically, stakeholder involvement in service robot design is limited mainly to the primary target audiences of the system (Payr et al. 2015). However, in the development of service robots for older adults, some projects, including Hobbit and Lee et al. (2017), considered secondary stakeholders relevant. Secondary stakeholders are the people who carry out care tasks, thereby directly supporting the primary target audiences. The so-called tertiary stakeholders, however, are hardly ever actively involved in the design process. In the context of care robots, tertiary stakeholders do not directly support older people but have an interest in financing and supporting them; they include care organizations, social services, and health insurance companies, among others. Some design decisions in the Hobbit project were, for instance, affected by standardization and certification aspects, such as the decision not to include walking aids, as this would have made the robot a piece of medical equipment subject to stricter standardization requirements.

In the IURO project, the identification of secondary stakeholders was not even considered. The basic challenge was already the definition of the primary target audiences, as the project narrative was to develop a robot for “potentially every by-passer on the street”. This is a recurring design challenge for service robots intended as tour guides (Karreman et al. 2012; Triebel et al. 2016), receptionist robots, and information desk robots (Lee et al. 2010). In the IURO project, this led to the decision that every naive person engaging with the robot is suitable for involvement, but workshops and other requirement studies did not reflect the necessary range of diversity among participants. Rather, stakeholder involvement became convenience or “non-probabilistic sampling” [Weiss et al. 2015, p. 50]: whoever is available for a study is a good fit. To compensate for that, it was decided early in the project to work with personas and scenarios (Zlotowski et al. 2011). However, these were informed only by available pre-existing data and were subsequently shaped solely by researchers' assumptions.

Overall, many future problems and uses of social service robots cannot be fully anticipated at design time. This insight, central to meta-design, is nothing new to the PD research community (Fischer and Giaccardi 2006). Meta-design especially acknowledges the need to design a socio-technical environment in which humans can express themselves and engage in personally meaningful activities (also referred to as infrastructuring). Future social service robots will have to be designed as open systems that can be modified by their users acting as co-designers [Fischer and Giaccardi 2006, p. 206] on two distinct timescales: “the life cycle of a single robot (ontogenetic), and the successive life cycles of different generations of robot models/prototypes (phylogenetic)” [McGinn 2019, p. 3]. Ontogenetic changes in performance (due to machine learning, improvement of software, addition of hardware modules, etc.) are a challenging design aspect that social service robot projects have rarely addressed so far. In the PD community, artificial intelligence (AI) algorithms are already considered a new type of design material (Holmquist 2017). In social service robotics, however, this view will be needed in the future to ensure that the appearance and behavior design of the robot accurately matches its cognitive and interactive capabilities over time (Tapus et al. 2007).

4.3 The adaptivity of robots

In the HRI community, there is an ongoing debate on the following question: “Do we want robots that are under our total direct control with interfaces that can directly manipulate the robot? Or should they be widely autonomous, with their own agendas, robots that are not under our total control but that can be trained, robots that get to know us, that we can live with and that can live with us?” [Dautenhahn 2004, p. 18]. Recent developments in machine learning have shifted the landscape towards the latter. Developing autonomous robots that involve human interaction is a challenging endeavor and opens up a continuum of design choices [Dautenhahn 2004, p. 18]. Up to now, stakeholder involvement in HRI has mainly focused on exploring the (social) roles robots could play in the future (Huber et al. 2014), appearances (Förster et al. 2011), aspects of multimodal communication (Mirnig et al. 2012b), or social navigation (Carton et al. 2013), without considering the adaptivity of a learning robot. Designing robots as adaptive agents is a wicked problem: the task resists complete definition and resolution, and tackling one problem often results in new, previously unanticipated challenges (Rittel and Webber 1973).

4.3.1 Robot learning

In the IURO project, researchers considered learning only in terms of the robot “learning” from pedestrians, which required identifying the value of human input (e.g., contradictory route descriptions) for the algorithm. However, none of this was discussed with laypeople, who were most likely not even aware of these constraints. At some stages of the project, humans were even treated as “sensor noise”: not as interaction partners, but as ambivalent input sources.

In the Hobbit project, HRI researchers and developers “intentionally designed” the adaptivity: in a first step, HRI researchers created one set of parameters for a purely device-like robot, which showed no proactive behavior, and another for a very companion-like Hobbit, which proactively approached people (it was a project vision to show that a more adaptive robot is more accepted by the target audience). None of this behavior was learned; it was only parameterized. For example, after a pre-specified number of hours of inactivity, the robot proactively approached the person interacting with it and suggested some entertainment functionalities (Bajones et al. 2014). However, even implementing and integrating these parameters into the overall autonomous robot behavior was challenging, and the actual robot behavior was not predictable for the developers. This parameterized approach could have been iteratively defined with stakeholders in a participatory way; however, this would have been far more resource-intensive and was deemed to exceed by far the possibilities of a 4-year project.
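To make concrete what “parameterized rather than learned” means, the following minimal Python sketch encodes two hypothetical behavior profiles: a device-like one without proactivity and a companion-like one that approaches the user after a period of inactivity. The class, field names, action labels, and the two-hour threshold are illustrative assumptions, not the Hobbit project's actual implementation or settings.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BehaviorProfile:
    """Hypothetical parameter set for one robot 'personality'."""
    name: str
    proactive: bool                        # may the robot initiate interaction at all?
    approach_after_hours: Optional[float]  # inactivity threshold before approaching


# Illustrative values only; these are NOT the Hobbit project's actual settings.
DEVICE_LIKE = BehaviorProfile("device-like", proactive=False, approach_after_hours=None)
COMPANION_LIKE = BehaviorProfile("companion-like", proactive=True, approach_after_hours=2.0)


def next_action(profile: BehaviorProfile, hours_inactive: float) -> str:
    """Rule-based decision: nothing is learned, the outcome follows from the parameters."""
    if (
        profile.proactive
        and profile.approach_after_hours is not None
        and hours_inactive >= profile.approach_after_hours
    ):
        # Proactive branch: approach the person and offer entertainment functions.
        return "approach_user_and_suggest_entertainment"
    # Reactive branch: the robot only responds to explicit commands.
    return "wait_for_user_command"
```

Every value in such a profile (whether proactivity is enabled, how long the robot waits, what it suggests) is fixed by designers rather than learned, which makes each one a candidate for the kind of iterative negotiation with stakeholders mentioned above. Even in a real system, though, the interplay of many such rules with navigation and perception can make the overall behavior hard to foresee.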

This shows how robot researchers and designers tend to take on the perspective of the robot more easily, as it is a constrained parameter they know very well. Participants are paradigmatically a source of unknown consequences and rarely fully understood knowledge, whereas the robot is under the researchers' control; taking the robot's perspective therefore provides researchers with authority. For a participatory stance within HRI research and development, researchers and designers need to actively make time to engage with participants and acknowledge this inherent structural bias influencing their role.

4.3.2 Unpredictability

Interacting with service robots regularly involves unpredictability, from a robot-centered as well as from a human-centered perspective. From a robot-centered perspective, research focuses on how robots can deal with unpredictable worlds; from a human-centered perspective, it focuses on how interaction can be designed to be predictable or legible for lay experts (Lichtenthäler et al. 2012; Mubin and Bartneck 2015). A challenge that has received little attention so far is the complexity and unpredictability that come with all systems involving machine learning and automated decision-making: due to the increased complexity, it becomes impossible to explain and predict exactly why and how a system does what it does (Holmquist 2017).

This relates to the challenge that all systems involving machine learning need training. In the HRI research field, training a robot is often considered part of the interaction and usage. A prominent interaction paradigm in HRI is “learning by demonstration” (Weiss et al. 2009) or “learning by imitation” (Alissandrakis et al. 2011). In these circumstances, the robot derives a policy based on one or more demonstrations by a person (Argall et al. 2009). Roboticists consider this approach very promising for enabling future service robots to learn from their (social) environment.
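A very simple way for a robot to derive a policy from demonstrations is to store the demonstrated state-action pairs and, in a new state, repeat the action that was demonstrated in the most similar recorded state. The Python sketch below assumes that states are short numeric vectors (e.g., a hypothetical distance to an object in meters and a gripper-opening value) and uses Euclidean distance; it is a 1-nearest-neighbor illustration of one family of techniques covered in the survey by Argall et al. (2009), not the method used in the cited HRI studies.

```python
import math
from typing import List, Sequence, Tuple

State = Tuple[float, ...]
Demonstration = List[Tuple[State, str]]  # sequence of (observed state, demonstrated action)


class NearestNeighborPolicy:
    """Policy derived from demonstrations: act as the teacher did in the most similar recorded state."""

    def __init__(self) -> None:
        self._examples: List[Tuple[State, str]] = []

    def add_demonstration(self, demo: Demonstration) -> None:
        # Each new demonstration simply extends the example set, so a lay
        # teacher can keep adding demonstrations while using the robot.
        self._examples.extend(demo)

    def act(self, state: Sequence[float]) -> str:
        if not self._examples:
            raise ValueError("no demonstrations recorded yet")
        # Pick the recorded example whose state is closest (Euclidean distance).
        _, action = min(self._examples, key=lambda ex: math.dist(ex[0], state))
        return action


# Hypothetical usage: state = (distance to object in m, gripper opening 0..1).
policy = NearestNeighborPolicy()
policy.add_demonstration([((0.5, 1.0), "move_forward"), ((0.05, 1.0), "close_gripper")])
print(policy.act((0.4, 1.0)))   # -> move_forward
print(policy.act((0.07, 1.0)))  # -> close_gripper
```

Even in this toy case, what the robot does in a state nobody demonstrated depends on which recorded example happens to be closest, which hints at why learned behavior is hard for lay users to predict.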

With respect to PD, Holmquist (2017) as well as Bratteteig and Verne raise the concern that it may be hard for users to differentiate between interaction and training [Bratteteig and Verne 2018, p. 4]. This might be different for service robots, where training involves more active teaching of the robot (as, for instance, with Hobbit, which could be effectively taught new objects to search for in the apartment) than, for instance, the passive training of voice assistants. Future social service robots will have to allow for training/teaching and customization/personalization during use, and little research has been done so far on techniques for better involving lay experts in that stage.

The compounded challenge for the design process is, therefore, not only to get the appearance and behavior right for the current version, but to do so for the entire life cycle, so that robots become functionally relevant and enjoyable and remain relevant to people's changing needs and desires in the longer term (Graaf et al. 2017b). In the following, we outline the possibilities of a culture of participation within HRI that can enable us to involve laypeople more actively in the phases of building/amending and using robots.

5 A call for a culture of participation

We agree with Lee et al. (2017) that participants' active roles in the PD of social service robots are closely related to constructing ways to make these robots substantively meaningful. Co-existing social service robots need to be socially constructed through interaction by diverse groups of people representing the breadth and depth of the intended target audience, as these robots in turn affect other actors and their context of use. To develop IURO and Hobbit as potentially capable co-existing social service robots, the projects focused on technical challenges such as enabling proactive HRI in densely populated human environments [e.g. (Wollherr et al. 2016)] or grasping unknown objects [e.g. (Fischinger et al. 2015)] and, therefore, considered laypeople mainly as informants. Even though these projects tried to involve stakeholders in all stages of design (dialogue strategies, input and feedback modalities, appearance design, etc.), an actual culture of participation was present only during conceptualization. Afterwards, outside feedback entered solely through iterative studies.

How can we embed a culture of participation in the building and using phases of robots as well? Crucial for success in developing a culture of participation will be a change in power structures in PD projects for service robots. Besides the dominance of engineering-based robot narratives and a preference for lab-based studies in the HRI research field, the community is also becoming increasingly aware that people evaluate and categorize robots based on how they fit within their everyday lives. Sociality needs to be understood as a co-shaping outcome of a socio-technical network and not only as a social cue used to design “intuitive” interaction paradigms [see also (Šabanović 2010)]. We are aware that robot development projects that emphasize lay expert involvement in the phases of building and using robots as well will face numerous obstacles: from cost, time, and required management efforts to participant attrition and ethical concerns about participants' privacy, and from the familiar high rate of mechanical robot failures to the robots' unforeseen effects on daily living.

As we saw, discrepancies between perceived and actual capacities of robots have multiple sources in the robot narrative. Traditional UCD requirement studies followed up by Wizard-of-Oz studies in the IURO project even reinforced these discrepancies instead of challenging them. However, carefully conducted PD can prevent that. An alternative strategy could be incremental robot co-design. This would commit researchers not only to suitably informing lay experts in the design process about state-of-the-art robot capacities, but also to advancing robots in small steps, each of which is well grounded in participatory activities and eases people into a changing reality of robots as complex autonomous systems, including budgeting the appropriate time to have designs challenged and changed. Thereby, power within the process can be more balanced, and the co-shaping of technology and sociality becomes more traceable, which has the potential to lead to more meaningful participation that accounts for the different expertise researchers (and/or designers) and participants bring to the table. A PD approach for current service robots needs to “gradually equalize the footing of researchers and participants in robot design over several sessions” [Lee et al. 2017, p. 8]. This is needed not only to make lay experts more confident about their potential contribution to the design and development of a service robot, but also for researchers to learn to recognize and acknowledge participants' situated expertise and suggestions. We argue that this would also involve adequately compensating lay experts for the work they contribute to the development of a robot.

Another critical aspect is allowing lay experts control over the process outcomes while aiming to create robots that are self-explaining. Studies have shown that hand-crafted explanations can help maintain trust when a robot is not fully reliable (Wang et al. 2016). PD approaches could provide fundamental insights into the ways in which robots could best explain their status, above all in failure situations (Tolmeijer et al. 2020). This would support the call of Holmquist (2017) to put people and robots in shared control. However, even the best-designed explanations cannot cover all potential incidents, as learned autonomous behavior will always include unpredictable aspects. Nevertheless, we expect that such explanations will smooth the interaction and allow recovery from failure situations. Again, this has the potential to rebalance power structures not just within the design process, but also as part of the interaction paradigm between humans and robots, as the robot behavior becomes more traceable for humans.
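One way to picture hand-crafted explanations, and their limits, is a table that maps known robot status codes to sentences drafted together with lay experts, plus an explicit fallback for everything the table does not cover. The status names, the `explain` helper, and the wording below are hypothetical and only illustrate this structure; they are not taken from Wang et al. (2016), Tolmeijer et al. (2020), or any specific robot.

```python
from enum import Enum


class RobotStatus(Enum):
    OBJECT_NOT_FOUND = "object_not_found"
    PATH_BLOCKED = "path_blocked"
    LOW_BATTERY = "low_battery"
    GRASP_FAILED = "grasp_failed"  # deliberately left without an explanation below


# Hypothetical wording; in a PD process, these sentences would be drafted and revised with lay experts.
EXPLANATIONS = {
    RobotStatus.OBJECT_NOT_FOUND: "I looked in all the places I know, but I could not find the {item}.",
    RobotStatus.PATH_BLOCKED: "Something is blocking my way, so I stopped.",
    RobotStatus.LOW_BATTERY: "My battery is low. I am going back to my charging station.",
}

FALLBACK = "Something unexpected happened and I cannot explain it yet. Please check on me."


def explain(status: RobotStatus, **details: str) -> str:
    """Return a hand-crafted explanation for a status, or the fallback if none exists."""
    template = EXPLANATIONS.get(status)
    if template is None:
        # Hand-crafted explanations cannot cover every incident; admit the gap explicitly.
        return FALLBACK
    # Fill in situation-specific details such as the name of the searched object.
    return template.format(**details)


print(explain(RobotStatus.OBJECT_NOT_FOUND, item="keys"))
print(explain(RobotStatus.GRASP_FAILED))
```

The fallback branch makes the limitation described above visible: uncovered situations are acknowledged rather than silently ignored, and extending the table is a natural activity for a participatory workshop.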

Involving lay experts in teaching and training a robot can be a fruitful strategy to compensate for the side effects of an unclear robot status. For example, iCustomPrograms (Chung et al. 2016) was developed as a system for programming socially interactive behaviors for service robots, and a series of field studies confirmed its usefulness. Similarly, Saunders et al. (2015) developed an interface intended to allow intuitive and nontechnical teaching of a service robot. Interfaces like these could be integrated into PD approaches and enable various stakeholders to be meaningfully involved in the behavioral design of the robotic system. This can also be considered actual co-learning and reablement [Saunders 2015, p. 7], which can subsequently reinforce engagement with the robot and co-create meaning about the physical artifact as well as about interacting with it. Thus, rather than passively accepting imposed solutions to a particular need, the user actively participates in formulating their own solutions with the robot and thus remains dominant over the technology and is empowered, physically, cognitively, and socially [Saunders 2015, p. 27].

Clearly, these suggestions are just a starting point for how we might strengthen the participation culture in the development of service robots and thereby address the practical challenges outlined above. We understand this as a first step enabling researchers to reflect on their development cultures and as a starting point for developing their individual cultures of participation in HRI research and design. Nevertheless, PD in this process has the potential to (1) increase the knowledge of participants and intended audiences about the technology, (2) empower both participants and researchers, and (3) provide participants with shared control over the process and its outcomes as they are involved in the training and personalization of a given robot.

6 Conclusion

In recent years, stakeholder involvement has found its way into the development processes of social service robots. However, we still observe that robotics researchers prefer to envision a passive role for lay experts instead of conceptualizing them as relevant participants (Cheon and Su 2016). Robotic and other off-the-shelf technologies, though, are evolving rapidly, making it possible to involve lay experts in the design of robots in more substantial ways than before, including in building and using robots (Frederiks et al. 2019).

We agree with Bratteteig and Verne [2018, p. 5] that AI (and with it robotics) poses complex challenges, but we do not think that they reach beyond the scope of any PD project. We argue for a close collaboration between PD, HRI, and robotics researchers as a chance to develop social service robots that co-exist with us in our social environments and meet lay experts' actual needs and expectations. PD philosophy, in combination with its established methods, can change the culture of service robot development to be more participatory and oriented towards shared agency in design and development. In turn, the engineering complexity of social service robots offers a rich design space, full of learning opportunities for future PD practices. The robot-related challenges we identified should encourage PD and HRI researchers to work more closely together to build up a solid base of methods, techniques, and experiences for this situated design space, accounting for the general and specific challenges alike.

Specifically, we will need innovative approaches and methods in how to involve lay experts in the teaching and training of robots, not only while researchers are present, but also later when people interact with the robots in the deployed context-of-use.

The IURO and Hobbit projects illustrate that robot development is distributed across different actors (not only scientific disciplines, but also the companies and organizations building its parts) and that the primary target audience we initially had in mind might not be the only one affected by the eventual robot. Even though we deeply cared about involving laypeople in all phases of the development process in these projects, we faced several challenges, above all in building and using the robots.

In a future PD culture for robot development, we should aim to see humans and robots less as a dichotomy and more as human and non-human actors in a sociotechnical network that needs to be designed for as such. We experienced that stakeholders who would have been directly affected by the deployment of these robots in pedestrian areas (IURO) and in private homes of older adults (Hobbit) were not given enough space for their perspectives during the building phase, and that decisions were taken in the inner circle of the research team. We need to find ways to address the stark power imbalance between these groups as robots move from research projects into broader societal uptake. All storytelling is normative and is reflected in lay experts' expectations towards co-existing robots. If we continue to create our stories about social service robots in the inner circle of our project teams, we do not do justice to the public funding we receive for most of these projects, and we fail to take up our direct public responsibility.

Notes

Later in the project, this design decision was reversed and non-functional arms were added that could clearly not grasp anything, but gave the robot a more human-like look-and-feel.

References

Alissandrakis A, Syrdal DS, Miyake Y (2011) Helping robots imitate: acknowledgment of, and adaptation to, the robot’s feedback to a human task demonstration. New Front Hum Robot Interact 2:9–34. https://doi.org/10.1075/ais.2.03ali

Argall BD, Chernova S, Veloso M, Browning B (2009) A survey of robot learning from demonstration. Robot Auton Syst 57(5):469–483

Azenkot S, Feng C, Cakmak M (2016) Enabling building service robots to guide blind people: a participatory design approach. In: 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI). IEEE, pp 3–10

Bajones M, Huber A, Weiss A, Vincze M (2014) Towards more flexible HRI: how to adapt to the user? In: Workshop on Cognitive Architectures for Human-Robot Interaction at the 9th ACM/IEEE International Conference on Human-Robot Interaction. Citeseer

Bajones M, Fischinger D, Weiss A, Wolf D, Vincze M, de la Puente P, Körtner T, Weninger M, Papoutsakis K, Michel D et al (2018) Hobbit: providing fall detection and prevention for the elderly in the real world. J Robot. https://doi.org/10.1155/2018/1754657

Bajones M, Fischinger D, Weiss A, Puente PD, Wolf D, Vincze M, Körtner T, Weninger M, Papoutsakis K, Michel D, Qammaz A, Panteleris P, Foukarakis M, Adami I, Ioannidi D, Leonidis A, Antona M, Argyros A, Mayer P, Panek P, Eftring H, Frennert S (2019) Results of field trials with a mobile service robot for older adults in 16 private households. J Hum Robot Interact 9(2):1–27. https://doi.org/10.1145/3368554

Bannon LJ, Ehn P (2012) Design: design matters in participatory design. In: Simonsen J, Robertson T (eds) Routledge International Handbook of Participatory Design. Routledge, pp 57–83

Bartneck C, Suzuki T, Kanda T, Nomura T (2007) The influence of people’s culture and prior experiences with aibo on their attitude towards robots. AI & Soc 21(1–2):217–230

Bertel LB, Rasmussen DM, Christiansen E (2013) Robots for real: Developing a participatory design framework for implementing educational robots in real-world learning environments. In: IFIP Conference on Human-Computer Interaction. Springer, pp 437–444

Bjögvinsson E, Ehn P, Hillgren PA (2012) Design things and design thinking: contemporary participatory design challenges. Des Issues 28(3):101–116

Bødker S, Ehn P, Kammersgaard J, Kyng M, Sundblad Y (1987) A Utopian Experience: On design of Powerful Computer-based tools for skilled graphic workers. In: Bjerknes G, Ehn P, Kyng M (eds) Computers and democracy: a Scandinavian challenge. Aldershot, pp 251–278

Bratteteig T, Verne G (2018) Does AI make PD obsolete?: exploring challenges from artificial intelligence to participatory design. In: proceedings of the 15th participatory design conference: short papers, situated actions, workshops and tutorial, vol 2. ACM, p 8

Bratteteig T, Wagner I (2012) Disentangling power and decision-making in participatory design. In: proceedings of the 12th participatory design conference: research papers, Vol 1, pp 41–50

Bruckenberger U, Weiss A, Mirnig N, Strasser E, Stadler S, Tscheligi M (2013) The good, the bad, the weird: audience evaluation of a “real” robot in relation to science fiction and mass media. In: International Conference on Social Robotics. Springer, pp 301–310

Buchner R, Weiss A, Tscheligi M (2011) Development of a context model based on video analysis. In: Proceedings of the 6th International Conference on Human-robot interaction. ACM, pp 117–118

Caleb-Solly P, Dogramadzi S, Ellender D, Fear T, Heuvel HV (2015) A mixed-method approach to evoke creative and holistic thinking about robots in a home environment. In: Proceedings of the 2014 ACM/IEEE International Conference on Human-robot interaction. ACM, pp 374–381

Carton D, Turnwald A, Wollherr D, Buss M (2013) Proactively approaching pedestrians with an autonomous mobile robot in urban environments. In: Experimental Robotics. Springer, pp 199–214

Chang WL, Sabanovic S (2015) Interaction expands function: social shaping of the therapeutic robot Paro in a nursing home. In: 2015 10th ACM/IEEE International Conference on Human-Robot Interaction (HRI). IEEE, pp 343–350

Cheon E, Su NM (2016) Integrating roboticist values into a value sensitive design framework for humanoid robots. In: 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI). IEEE, pp 375–382

Chung MJY, Huang J, Takayama L, Lau T, Cakmak M (2016) Iterative design of a system for programming socially interactive service robots. In: International Conference on Social Robotics. Springer, pp 919–929

Dautenhahn K (2004) Robots we like to live with?! A developmental perspective on a personalized, life-long robot companion. In: RO-MAN 2004. 13th IEEE International Workshop on Robot and Human Interactive Communication (IEEE Catalog No. 04TH8759). IEEE, pp 17–22

Dautenhahn K (2007) Socially intelligent robots: dimensions of human–robot interaction. Phil Trans R Soc B 362(1480):679–704

Dautenhahn K, Woods S, Kaouri C, Walters ML, Koay KL, Werry I (2005) What is a robot companion: friend, assistant or butler? In: 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE, pp 1192–1197

De Graaf M, Ben Allouch S, van Dijk J (2017) Why do they refuse to use my robot?: Reasons for non-use derived from a long-term home study. In: 2017 12th ACM/IEEE International Conference on Human-Robot Interaction. HRI, pp 224–233

De Graaf M, Ben Allouch S, van Dijk J (2017) Why do they refuse to use my robot?: Reasons for non-use derived from a long-term home study. In: Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, HRI ’17. ACM, New York, USA, pp 224–233. https://doi.org/10.1145/2909824.3020236

de la Puente P, Bajones M, Einramhof P, Wolf D, Fischinger D, Vincze M (2014) RGB-D sensor setup for multiple tasks of home robots and experimental results. In: 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE, pp 2587–2594

Druin A (1999) Cooperative inquiry: developing new technologies for children with children. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’99. Association for Computing Machinery, New York, USA, pp 592–599. https://doi.org/10.1145/302979.303166

Feil-Seifer DJ, Matarić MJ (2009) Human-robot interaction. Springer, New York, pp 4643–4659. http://robotics.usc.edu/publications/585/

Fernaeus Y, Hakansson M, Jacobsson M, Ljungblad S (2010) How do you play with a robotic toy animal? A long-term study of Pleo. In: Proceedings of the 9th International Conference on Interaction Design and Children, pp 39–48

Fink J (2014) Dynamics of human-robot interaction in domestic environments. Tech rep, EPFL

Fischer K (2006) What computer talk is and isn’t: human-computer conversation as intercultural communication, vol 17

Fischer G, Giaccardi E (2006) Meta-design: a framework for the future of end-user development. In: End user development. Springer, pp 427–457

Fischinger D, Weiss A, Vincze M (2015) Learning grasps with topographic features. Int J Robot Res 34(9):1167–1194

Fischinger D, Einramhof P, Papoutsakis K, Wohlkinger W, Mayer P, Panek P, Hofmann S, Koertner T, Weiss A, Argyros A et al (2016) Hobbit, a care robot supporting independent living at home: first prototype and lessons learned. Robot Auton Syst 75:60–78

Förster F, Weiss A, Tscheligi M (2011) Anthropomorphic design for an interactive urban robot: the right design approach? In: 2011 6th ACM/IEEE International Conference on Human-Robot Interaction (HRI). IEEE, pp 137–138

Frauenberger C, Makhaeva J, Spiel K (2017) Blending methods: developing participatory design sessions for autistic children. In: Proceedings of the 2017 Conference on Interaction Design and Children, IDC ’17. Association for Computing Machinery, New York, USA, pp 39–49. https://doi.org/10.1145/3078072.3079727.

Frederiks AD, Octavia JR, Vandevelde C, Saldien J (2019) Towards participatory design of social robots. In: IFIP Conference on Human-Computer Interaction. Springer, pp 527–535

Frennert S, Östlund B, Eftring H (2012) Would granny let an assistive robot into her home? In: International Conference on Social Robotics. Springer, pp 128–137

Frennert S, Eftring H, Östlund B (2013) Older people’s involvement in the development of a social assistive robot. In: International Conference on Social Robotics. Springer, pp 8–18

Gonsior B, Landsiedel C, Glaser A, Wollherr D, Buss M (2011) Dialog strategies for handling miscommunication in task-related HRI. In: 2011 RO-MAN. IEEE, pp 369–375

Gonsior B, Sosnowski S, Mayer C, Blume J, Radig B, Wollherr D, Kühnlenz K (2011) Improving aspects of empathy and subjective performance for HRI through mirroring facial expressions. In: 2011 RO-MAN. IEEE, pp 350–356

Gregory J (2003) Scandinavian approaches to participatory design. Int J Eng Educ 19(1):62–74

Harrington C, Erete S, Piper AM (2019) Deconstructing community-based collaborative design: Towards more equitable participatory design engagements. Proc. ACM Hum-Comput. Interact. 3(CSCW). https://doi.org/10.1145/3359318

Holmquist LE (2017) Intelligence on tap: artificial intelligence as a new design material. Interactions 24(4):28–33

Hornecker E, Bischof A, Graf P, Franzkowiak L, Krüger N (2020) The interactive enactment of care technologies and its implications for human-robot-interaction in care. In: Proceedings of the 11th Nordic Conference on Human-Computer Interaction: Shaping Experiences, Shaping Society, NordiCHI ’20. Association for Computing Machinery, New York, USA. https://doi.org/10.1145/3419249.3420103

Huber A, Lammer L, Weiss A, Vincze M (2014) Designing adaptive roles for socially assistive robots: a new method to reduce technological determinism and role stereotypes. J Hum Robot Interact 3(2):100–115

Karreman DE, van Dijk EM, Evers V (2012) Contextual analysis of human non-verbal guide behaviors to inform the development of FROG, the fun robotic outdoor guide. In: International Workshop on Human Behavior Understanding. Springer, pp 113–124

Körtner T, Schmid A, Batko-Klein D, Gisinger C, Huber A, Lammer L, Vincze M (2012) How social robots make older users really feel well: a method to assess users’ concepts of a social robotic assistant. In: International Conference on Social Robotics. Springer, pp 138–147

Kriz S, Ferro TD, Damera P, Porter JR (2010) Fictional robots as a data source in HRI research: exploring the link between science fiction and interactional expectations. In: 19th International Symposium in Robot and Human Interactive Communication. IEEE, pp 458–463

Lammer L, Huber A, Weiss A, Vincze M (2014) Mutual care: How older adults react when they should help their care robot. In: AISB2014: Proceedings of the 3rd international symposium on new frontiers in human–robot interaction. Routledge London, UK, pp 1–4

Lammer L, Weiss A, Vincze M (2015) The 5-step plan: a holistic approach to investigate children’s ideas on future robotic products. In: Proceedings of the Tenth Annual ACM/IEEE International Conference on Human-Robot Interaction Extended Abstracts. ACM, pp 69–70

Lee MK, Forlizzi J, Rybski PE, Crabbe F, Chung W, Finkle J, Glaser E, Kiesler S (2009) The Snackbot: documenting the design of a robot for long-term human-robot interaction. In: Proceedings of the 4th ACM/IEEE International Conference on Human Robot Interaction. ACM, pp 7–14

Lee MK, Kiesler S, Forlizzi J (2010) Receptionist or information kiosk: how do people talk with a robot? In: Proceedings of the 2010 ACM Conference on Computer Supported Cooperative Work, CSCW ’10. ACM, New York, USA, pp 31–40. https://doi.org/10.1145/1718918.1718927

Lee HR, Sung J, Šabanović S, Han J (2012) Cultural design of domestic robots: a study of user expectations in Korea and the United States. In: 2012 IEEE RO-MAN: The 21st IEEE International Symposium on Robot and Human Interactive Communication. IEEE, pp 803–808

Lee HR, Šabanović S, Chang WL, Nagata S, Piatt J, Bennett C, Hakken D (2017) Steps toward participatory design of social robots: mutual learning with older adults with depression. In: Proceedings of the 2017 ACM/IEEE international conference on human-robot interaction pp 244–253

Lichtenthäler C, Lorenz T, Karg M, Kirsch A (2012) Increasing perceived value between human and robots: measuring legibility in human aware navigation. In: 2012 IEEE Workshop on Advanced Robotics and its Social Impacts (ARSO). IEEE, pp 89–94

Lorenčík D, Tarhaničová M, Sinčák P (2013) Influence of sci-fi films on artificial intelligence and vice-versa. In: 2013 IEEE 11th international symposium on applied machine intelligence and informatics (SAMI). IEEE, pp 27–31

McGinn C (2019) Why do robots need a head? The role of social interfaces on service robots. Int J Soc Robotics. https://doi.org/10.1007/s12369-019-00564-5

Mirnig N, Weiss A, Tscheligi M (2011) A communication structure for human-robot itinerary requests. In: Proceedings of the 6th international conference on Human-robot interaction. ACM, pp 205–206

Mirnig N, Gonsior B, Sosnowski S, Landsiedel C, Wollherr D, Weiss A, Tscheligi M (2012) Feedback guidelines for multimodal human-robot interaction: How should a robot give feedback when asking for directions? In: 2012 IEEE RO-MAN: The 21st IEEE International Symposium on Robot and Human Interactive Communication. IEEE, pp 533–538

Mirnig N, Strasser E, Weiss A, Tscheligi M (2012) Studies in public places as a means to positively influence people’s attitude towards robots. In: International Conference on Social Robotics. Springer, pp 209–218

Mubin O, Bartneck C (2015) Do as I say: exploring human response to a predictable and unpredictable robot. In: Proceedings of the 2015 British HCI Conference, pp 110–116

Muller MJ (2003) Participatory design: the third space in HCI. In: Jacko JA, Sears A (eds) The human-computer interaction handbook. L. Erlbaum Associates Inc., pp 1051–1068. http://dl.acm.org/citation.cfm?id=772072.772138

Niu S, McCrickard DS, Harrison S (2015) Exploring humanoid factors of robots through transparent and reflective interactions. In: 2015 International Conference on Collaboration Technologies and Systems (CTS). IEEE, pp 47–54

Nourbakhsh IR (2013) Robot futures. MIT Press

Payr S, Werner F, Werner K (2015) Potential of robotics for ambient assisted living. FFG benefit, Vienna

Pripfl J, Körtner T, Batko-Klein D, Hebesberger D, Weninger M, Gisinger C, Frennert S, Eftring H, Antona M, Adami I, Weiss A (2016) Results of a real world trial with a mobile social service robot for older adults. In: 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI). IEEE, pp 497–498

Rantanen T, Lehto P, Vuorinen P, Coco K (2018) The adoption of care robots in home care: a survey on the attitudes of Finnish home care personnel. J Clin Nurs 27(9–10):1846–1859. https://doi.org/10.1111/jocn.14355

Richardson K (2015) An anthropology of robots and AI: Annihilation anxiety and machines. Routledge

Riek LD (2012) Wizard of Oz studies in HRI: a systematic review and new reporting guidelines. J Hum Robot Interact 1(1):119–136

Riek LD (2014) The social co-robotics problem space: six key challenges. In: Robotics Challenges and Vision (RCV2013)

Riek L, Howard D (2014) A code of ethics for the human-robot interaction profession. In: Proceedings of We Robot

Rittel HW, Webber MM (1973) Dilemmas in a general theory of planning. Policy Sci 4(2):155–169

Rose EJ, Björling EA (2017) Designing for engagement: using participatory design to develop a social robot to measure teen stress. In: Proceedings of the 35th ACM International Conference on the Design of Communication, pp 1–10

Šabanović S (2010) Robots in society, society in robots. Int J Soc Robotics 2(4):439–450

Saunders J, Syrdal DS, Koay KL, Burke N, Dautenhahn K (2015) “teach me–show me”—end-user personalization of a smart home and companion robot. IEEE Trans Hum Mach Syst 46(1):27–40

Simonsen J, Robertson T (2012) Routledge international handbook of participatory design. Routledge

Sirkin D, Mok B, Yang S, Ju W (2015) Mechanical ottoman: how robotic furniture offers and withdraws support. In: Proceedings of the Tenth Annual ACM/IEEE International Conference on Human-Robot Interaction. ACM, pp 11–18

Spiel K, Malinverni L, Good J, Frauenberger C (2017) Participatory evaluation with autistic children. In: Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, CHI ’17. Association for Computing Machinery, New York, USA, pp 5755–5766. https://doi.org/10.1145/3025453.3025851

Stappers PJ (2007) Doing design as a part of doing research. In: Design research now, pp 81–91

Stollnberger G, Moser C, Giuliani M, Stadler S, Tscheligi M, Szczesniak-Stanczyk D, Stanczyk B (2016) User requirements for a medical robotic system: Enabling doctors to remotely conduct ultrasonography and physical examination. In: 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN). IEEE, pp 1156–1161

Sung J, Christensen HI, Grinter RE (2009) Robots in the wild: understanding long-term use. In: Proceedings of the 4th ACM/IEEE International Conference on Human Robot Interaction, pp 45–52

Tapus A, Matarić MJ, Scassellati B (2007) The grand challenges in socially assistive robotics. IEEE Robot Autom Mag 14(1):35–42

Tolmeijer S, Weiss A, Hanheide M, Lindner F, Powers TM, Dixon C, Tielman ML (2020) Taxonomy of trust-relevant failures and mitigation strategies. In: Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, pp 3–12

Triebel R, Arras K, Alami R, Beyer L, Breuers S, Chatila R, Chetouani M, Cremers D, Evers V, Fiore M, et al (2016) Spencer: a socially aware service robot for passenger guidance and help in busy airports. In: Field and service robotics. Springer, pp 607–622

Tsiourti C, Pillinger A, Weiss A (2020) Was Vector a companion during shutdown? Insights from an ethnographic study in Austria. In: Proceedings of the 8th International Conference on Human-Agent Interaction, HAI ’20. Association for Computing Machinery, New York, USA, pp 269–271. https://doi.org/10.1145/3406499.3418767

Veselovská M, Mayerová K (2014) Pilot study: educational robotics at lower secondary school. In: Constructionism and Creativity Conference, Vienna

Vincze M, Zagler W, Lammer L, Weiss A, Huber A, Fischinger D, Koertner T, Schmid A, Gisinger C (2014) Towards a robot for supporting older people to stay longer independent at home. In: ISR/Robotik 2014; 41st International Symposium on Robotics. VDE, pp 1–7

Vincze M, Bajones M, Suchi M, Wolf D, Lammer L, Weiss A, Fischinger D (2018) User experience results of setting free a service robot for older adults at home. Service Robots, InTech, p 23

Von Hippel E (2009) Democratizing innovation: the evolving phenomenon of user innovation. Int J Innov Sci

Wang N, Pynadath DV, Hill SG (2016) Trust calibration within a human-robot team: comparing automatically generated explanations. In: The Eleventh ACM/IEEE International Conference on Human Robot Interaction. IEEE Press, pp 109–116

Weiss A, Igelsböck J, Calinon S, Billard A, Tscheligi M (2009) Teaching a humanoid: a user study on learning by demonstration with HOAP-3. In: RO-MAN 2009: The 18th IEEE international symposium on robot and human interactive communication. IEEE, pp 147–152

Weiss A, Mirnig N, Buchner R, Förster F, Tscheligi M (2011) Transferring human-human interaction studies to HRI scenarios in public space. In: IFIP Conference on Human-Computer Interaction. Springer, pp 230–247

Weiss A, Mirnig N, Bruckenberger U, Strasser E, Tscheligi M, Kuhnlenz B, Wollherr D, Stanczyk B (2015) The interactive urban robot: user-centered development and final field trial of a direction requesting robot. Paladyn J Behav Robot. https://doi.org/10.1515/pjbr-2015-0005

Wollherr D, Khan S, Landsiedel C, Buss M (2016) The Interactive Urban Robot IURO: towards robot action in human environments. In: Hsieh MA, Khatib O, Kumar V (eds) Experimental robotics. Springer, pp 277–291

Złotowski J, Weiss A, Tscheligi M (2011) Interaction scenarios for HRI in public space. In: Mutlu B, Bartneck C, Ham J, Evers V, Kanda T (eds) International conference on social robotics. Springer, pp 1–10

Acknowledgements

We gratefully acknowledge the financial support from the Austrian Science Fund (FWF) under Grant Agreement No. V587-G29 (SharedSpace) and T 1146-G (Exceptional Norms).

Funding

Open access funding provided by TU Wien (TUW).

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Weiss, A., Spiel, K. Robots beyond Science Fiction: mutual learning in human–robot interaction on the way to participatory approaches. AI & Soc 37, 501–515 (2022). https://doi.org/10.1007/s00146-021-01209-w

DOI: https://doi.org/10.1007/s00146-021-01209-w