Abstract

Over the past two decades, researchers in the field of biometrics have presented a wide variety of coding-based palmprint recognition methods. These approaches mainly rely on extracting texture features, e.g. line orientations and phase information, using different filters. In this paper, we propose a new, efficient palmprint recognition method based on the Difference of Block Means (DBM). The proposed scheme uses only basic operations (mainly additions and subtractions) and therefore incurs a much lower computational cost than existing systems, which makes it suitable for online palmprint identification and verification. Furthermore, the technique is shown to deliver superior performance over related systems.

1 Introduction

Since the early 2000s, the human palmprint has emerged as a robust means of verifying and/or identifying individuals. Indeed, the inner surface of the human hand offers a large region rich in distinctive features that can be used for personal identification, and this has attracted widespread attention from researchers in the field of biometrics. Besides, palmprints contain all the distinctive features of fingerprints, e.g. singular points and ridges. Furthermore, the palmprint contains other discriminative features, e.g. principal lines and wrinkles, that can be extracted at low cost for recognition purposes using different filters such as the Gabor filter [1, 2], the ordinal filter [3], and the wavelet filter [4]. Existing palmprint recognition techniques can be viewed from a number of perspectives depending on the way discriminative features are described. For instance, in [5, 6] edge detection filters were used to extract palm lines, which served as key information for matching. Other techniques rely on subspace projection to reduce feature dimensionality and adopt distance measures or classifiers to perform matching [7,8,9]. Finally, some techniques employ a transform-based approach using low-pass and rotational filters to encode the palmprint features in the form of a mapping matrix [2]. Such coding-based techniques have gained significant attention because of their high performance on well-aligned and well-segmented low-resolution palmprint images.

In this context, the online palm authentication method proposed by Zhang et al. [2] can be considered the first attempt in the literature to identify individuals from low-resolution palmprint images with a coding-based technique. It is based on the fact that the inner surface of the human hand can be represented by texture attributes such as line and phase information. The technique, called PalmCode, uses a single 2D Gabor filter to describe the phase features of palmprints in the form of a phase-feature template, which is then used to generate a feature vector that efficiently represents the original palm. PalmCode achieves high accuracy and speed when verifying palmprints from low-resolution sets. Inspired by PalmCode, the authors of [10] presented a new palm-based recognition method called the competitive coding (CompCode) scheme. The scheme employs the real part of a neurophysiology-based 2D Gabor filter to extract the orientation information of a palmprint, followed by a coding method that generates a feature vector uniquely describing the original palmprint. This filter is simply a Gabor filter whose degrees of freedom are restricted according to neurophysiological findings, with the palm-line pattern modelled as an upside-down Gaussian function. In [11], a variant of the CompCode scheme was considered by adopting a block-wise approach to compute the competitive codes. To tackle the problem of correlation in the original PalmCode, the same authors introduced two main alterations in [1] to their earlier work [2]: (1) replacing the static threshold of the original PalmCode with a dynamic one; and (2) using 2D Gabor filters with multiple orientations, instead of a single orientation, to generate multiple PalmCodes.
These PalmCodes are then merged to generate a feature vector called the Fusion Code (FusionCode). In [3], 2D Gaussian filters were adopted to obtain the weighted intensity of each line-like region in the palmprint; the idea is to compare pairs of filtered regions whose filter orientations are orthogonal to each other. Generally speaking, coding-based palmprint approaches are highly sensitive to small image transformations, e.g. shifts and rotations. In [12], the authors attempted to make coding-based palmprint identification more robust to such transformations by using a modified version of the finite Radon transform (FRAT), called the modified finite Radon transform (MFRAT), to generate a code-like matrix. Furthermore, they presented a new matching method that accounts for small geometric changes by considering the neighbourhood of each pixel in the Radon-filtered image. This approach is referred to in the literature as the robust line orientation code (RLOC). Although RLOC shows slight superiority over related competitors in the presence of geometric distortions, it suffers from high computational complexity. The same authors addressed the high dimensionality of RLOC in [13] using the histogram of oriented lines, where the magnitude and orientation of the Radon-filtered image are used to compute histograms in a manner similar to the conventional histogram of oriented gradients (HOG). More recently, it was found in [14] that coding-based techniques are more robust when only two orientation angles are used in the filtering stage, which significantly improved RLOC and the competitive coding technique. In [15], the authors developed a technique to estimate the Difference of Vertex Normal Vectors (DoN), which describes 3D information of the palm.
These features are extracted from 2D palmprint images in the form of binary codes and offer high performance on public datasets. More recently, the work in [16] explored the connection between the feature extraction model and the discriminative power of direction features in order to obtain highly discriminative palmprint features. The idea of exploiting latent direction features has also been reported in [17], where the authors extracted latent direction features from the energy map layer of the apparent direction. The apparent and latent direction features were described in histogram form for palmprint recognition, and the technique in [17] achieved state-of-the-art performance on four benchmark palmprint databases. The aforementioned coding-based techniques mainly rely on texture, contour, and edge features and are characterised by high identification accuracy and low computational complexity, making them suitable for real-time applications. Such features have also recently been exploited on palm-vein images in biometric systems [18, 19].

Unlike existing coding-based palmprint identification techniques, this paper proposes a new, simple, and efficient palmprint coding technique based on the Difference of Block Means (DBM) that does not require any filtering operations. The proposed scheme uses only basic operations (mainly additions and subtractions) and thus incurs a much lower computational cost than existing systems, which makes it suitable for online palmprint identification and verification. Furthermore, the technique is shown to deliver superior performance over related systems. The rest of the paper is structured as follows. Section 2 describes the proposed DBM code extraction scheme. Section 3 discusses the matching methodology adopted for palmprint identification and verification. Section 4 analyses the computational complexity of DBM. Section 5 provides an experimental evaluation of the system in comparison with recent and related techniques. Section 6 concludes the paper.

2 Proposed DBM palmprint code

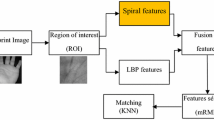

The proposed system for generating the palmprint code is illustrated in Fig. 1. First, the input hand image is pre-processed to obtain the region of interest (ROI) describing the palmprint area (see Fig. 3); here, the pre-processing stage reported in existing research works such as [2, 20] is adopted. Then, the differences between the means of overlapping blocks are computed to describe the palm information in each direction. Finally, a threshold is applied to obtain the final palmprint code in each direction. Note that the palmprint codes are binary and smaller than the original image, since they are computed from block means rather than from individual pixels. This makes the matching process extremely fast for online palmprint recognition.

The idea of extracting differences of block means in the spatial domain was initially applied to videos for perceptual video hashing [21, 22]. However, to the best of our knowledge, no studies on the use of DBM in biometrics have yet been published. In this section, we argue that the properties of DBM make it more robust than the directional filters adopted in the literature (such as Gabor, wavelet, Radon, and Gaussian filters) for constructing palmprint codes. The use of DBM features is justified by their efficiency in representing textured areas, including edges and contours, at low computational cost. Moreover, only two directions are used to derive DBM codes. This is inspired by previous research [14], which found that two perpendicular directions describe the principal lines and the key texture of the palm more robustly than multiple directions. First, a two-dimensional (2D) array is formed by computing the means of overlapping blocks of the same size in the palmprint ROI (region of interest) image. Overlapping blocks are used in feature extraction because of their robustness against small geometric changes, as demonstrated elsewhere [23]. Let \(M \times N\) be the number of overlapping blocks, and denote by A(i, j) the resulting array whose elements are the means of the overlapping blocks of the processed palmprint ROI image, with \(0 \le i \le M-1\), \(0 \le j \le N-1\). Next, two 2D arrays of the same size are derived from A by computing the differences in the horizontal (H) and vertical (V) directions, respectively, as:

Finally, the horizontal and vertical codes, \(C_{h}\) and \(C_{v}\), are derived by thresholding the previous DBM features as follows:

Figure 2 illustrates, on a sample palmprint image, the extraction of block means with a block size of \(16 \times 16\) and an overlap of 5 pixels, together with the resulting DBM features and the corresponding codes.
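
The following Python sketch shows one way to compute the DBM codes described above. It is an illustration under stated assumptions, not the authors' implementation: neighbouring blocks are placed with a stride of p − s (so they share s pixels), the number of blocks per dimension is assumed to be ⌊(P − p)/(p − s)⌋ + 1, H and V are assumed to be forward differences of neighbouring block means cropped to a common (M − 1) × (N − 1) size, and a code bit is assumed to be 1 when the difference is positive. The exact boundary handling and threshold rule used by the authors may differ.

```python
import numpy as np


def block_means(roi: np.ndarray, p: int = 16, q: int = 16, s: int = 5) -> np.ndarray:
    """Array A of means of overlapping p x q blocks; neighbouring blocks share s pixels."""
    P, Q = roi.shape
    step_r, step_c = p - s, q - s          # stride between block origins
    M = (P - p) // step_r + 1              # number of block rows (assumed formula)
    N = (Q - q) // step_c + 1              # number of block columns (assumed formula)
    A = np.empty((M, N), dtype=np.float64)
    for i in range(M):
        for j in range(N):
            r, c = i * step_r, j * step_c
            A[i, j] = roi[r:r + p, c:c + q].mean()
    return A


def dbm_codes(roi: np.ndarray, p: int = 16, q: int = 16, s: int = 5):
    """Binary horizontal and vertical DBM codes (C_h, C_v) of a palmprint ROI."""
    A = block_means(roi.astype(np.float64), p, q, s)
    # Differences between neighbouring block means; both arrays are cropped
    # to (M-1) x (N-1) so that H and V have the same size (an assumption).
    H = A[:-1, 1:] - A[:-1, :-1]   # horizontal: right neighbour minus current block
    V = A[1:, :-1] - A[:-1, :-1]   # vertical: lower neighbour minus current block
    # Threshold at zero: 1 where the mean increases, 0 otherwise (assumed rule).
    C_h = (H > 0).astype(np.uint8)
    C_v = (V > 0).astype(np.uint8)
    return C_h, C_v
```

With a 128 × 128 ROI, 16 × 16 blocks and a 5-pixel overlap, this sketch yields an 11 × 11 array of block means and 10 × 10 horizontal and vertical codes. The double loop is kept for readability; a summed-area table would compute every block sum with a constant number of additions.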

3 Palmprint matching distance

The Hamming distance, \(D_{H}\), between a shifted version of a binary code \(C_{1}\) and a binary code \(C_{2}\) can be defined as

where k and l are integer shift indices. The Hamming distance is the core of the proposed palmprint matching distance. At the matching stage, the binary palmprint codes are shifted by one pixel in all possible directions to take small geometric changes into account. Let \(C_{1}=(C_{h,1},C_{v,1})\) and \(C_{2}=(C_{h,2},C_{v,2})\) be the code pairs corresponding to two palm images, respectively. The horizontal distance \(d_{h}(C_{h,1},C_{h,2})\) is defined as:

Likewise, the vertical distance \(d_{v}(C_{v,1},C_{v,2})\) is defined as:

Finally, the proposed palmprint matching distance \(D_{\mathrm{palm}}(C_{1},C_{2})\) is defined as the average of the horizontal and vertical distances:

\[ D_{\mathrm{palm}}(C_{1},C_{2}) = \frac{1}{2}\left[ d_{h}(C_{h,1},C_{h,2}) + d_{v}(C_{v,1},C_{v,2}) \right]. \qquad (8) \]
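
A minimal Python sketch of the matching distance follows. It assumes that \(D_{H}\) is the fraction of disagreeing bits over the region where the shifted code and the other code overlap, that \(d_{h}\) and \(d_{v}\) take the minimum of \(D_{H}\) over the nine shifts (k, l) ∈ {−1, 0, 1}², and that each palm is represented by a tuple (C_h, C_v) of NumPy arrays. The function names are hypothetical.

```python
import numpy as np

# The nine one-position shifts (k, l) tried at matching time (assumed set).
SHIFTS = [(k, l) for k in (-1, 0, 1) for l in (-1, 0, 1)]


def shifted_hamming(code1: np.ndarray, code2: np.ndarray, k: int, l: int) -> float:
    """Fraction of disagreeing bits between code1 shifted by (k, l) and code2.

    Compares code1[i + k, j + l] with code2[i, j] over the overlapping region only.
    """
    R, C = code1.shape
    r0, r1 = max(0, -k), min(R, R - k)
    c0, c1 = max(0, -l), min(C, C - l)
    a = code1[r0 + k:r1 + k, c0 + l:c1 + l]
    b = code2[r0:r1, c0:c1]
    return np.count_nonzero(a != b) / a.size


def directional_distance(code1: np.ndarray, code2: np.ndarray) -> float:
    """d_h or d_v: smallest shifted Hamming distance over all one-position shifts."""
    return min(shifted_hamming(code1, code2, k, l) for k, l in SHIFTS)


def palm_distance(C1, C2) -> float:
    """D_palm: average of the horizontal and vertical distances; each C is (C_h, C_v)."""
    (h1, v1), (h2, v2) = C1, C2
    return 0.5 * (directional_distance(h1, h2) + directional_distance(v1, v2))
```

Taking the minimum over shifts means that a code and a copy of itself displaced by one position still match perfectly, which is the tolerance to small geometric changes described above.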

4 Computational complexity of DBM

Let \(P \times Q\) be the size of the palmprint image. Denote by \(p \times q\) the size of each block, where P and Q are multiples of p and q, respectively, and let s be the overlap size. It is sensible to assume that \(p,q,s \ll \min(P,Q)\). It follows that

And

where \(\lfloor \cdot \rfloor \) denotes the floor function, which returns the largest integer not greater than its argument. The number of additions per block is \(p \times q-1\). Therefore, since there are \(M \times N\) blocks, the computational cost of the additions is O(PQ). Computing the mean of each block requires one division, so the number of divisions is of order \(O(\frac{P Q}{p q})\). The block differences in the two directions (horizontal and vertical) require 2MN subtractions, which is also \(O(\frac{P Q}{p q})\). Finally, thresholding the differences of block means consists of an element-wise sign comparison applied to all entries of the horizontal and vertical matrices, each of size \(M \times N\), which also costs \(O(\frac{P Q}{p q})\). Consequently, the total computational cost of the DBM coding technique for an image of size \(P \times Q\) is O(PQ).
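
As a worked example, the sketch below counts the operations listed above for a 128 × 128 ROI with 16 × 16 blocks and a 5-pixel overlap (the settings of Fig. 2). The block-count formula M = ⌊(P − p)/(p − s)⌋ + 1 (and similarly for N) is an assumption made for illustration, and the subtraction count follows the 2MN figure used in the text.

```python
def dbm_operation_counts(P: int, Q: int, p: int, q: int, s: int) -> dict:
    """Rough operation counts for DBM coding of a P x Q image (illustrative)."""
    M = (P - p) // (p - s) + 1   # assumed number of block rows
    N = (Q - q) // (q - s) + 1   # assumed number of block columns
    return {
        "blocks": M * N,
        "additions": M * N * (p * q - 1),  # summing each block
        "divisions": M * N,                # one division per block mean
        "subtractions": 2 * M * N,         # horizontal and vertical differences
        "comparisons": 2 * M * N,          # sign thresholding of both arrays
    }


counts = dbm_operation_counts(128, 128, 16, 16, 5)
print(counts)
```

For these settings M = N = 11, giving 30,855 additions against 121 divisions and 242 subtractions and comparisons each. The additions dominate and amount to just under twice the number of pixels (16,384), which is consistent with the O(PQ) bound.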

5 Experimental results

5.1 Experimental settings and datasets

In this research, four public palmprint datasets have been considered to validate the proposed system, namely PolyU II [24], PolyU M-B [25], IITD [26], and CASIA [27], as detailed below. Figure 3 shows samples from each dataset.

5.1.1 PolyU palmprint database (version 2.0)

The PolyU palmprint database (PolyU II) has been published by the Hong Kong Polytechnic University [24]. In biometrics, this dataset in particular has been widely used for evaluating palmprint recognition systems. However, PolyU II is available only in a full palmprint version. Hence, we used a universal cropping algorithm to extract the ROI which consists of a square of \(128 \times 128\) pixels. Samples of the ROIs that were extracted from PolyU II are depicted in Fig. 3a.

PolyU II consists of 7752 low-resolution palmprint images collected from 193 individuals (males and females) over two sessions separated by a minimum interval of two months. In each session, each participant provided at least 10 low-resolution palmprint images from each of his/her left and right hands. Hence, PolyU II can be viewed as a collection of 386 palmprint classes. In our experiments, 1500 palmprint images (6 images per class \(\times \) 250 classes) from the first session are used as reference images, whereas an equal number of palmprint images from the second session are used as query images. In each database, the reference and query samples are selected following the original image file order \(1,2,3,\ldots \) as provided by the database authors. For instance, in PolyU II the first 6 images of each participant in the first session (denoted by Set\(_{1}\)) are used as reference images and the first 6 images of each participant in the second session (denoted by Set\(_{2}\)) are used as query images. Our experiments suggest that the difference in performance when using different images within the same session is negligible. However, the difference when the reference and query sets are swapped (Set\(_{2}\) vs Set\(_{1}\) and Set\(_{1}\) vs Set\(_{2}\)) is clearly noticeable, so results for both protocols are reported below.
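
The reference/query selection by file order, and the swapped protocol, can be sketched as follows. The data layout (a dictionary mapping each class to its two session file lists, already sorted in the original file order) and the function name are hypothetical.

```python
def split_sessions(sessions: dict, n: int = 6, swap: bool = False):
    """Build (reference, query) lists of (file, class) pairs.

    sessions maps class_id -> (session1_files, session2_files), each in file order.
    swap=False: Set1 (first n of session 1) is the reference, Set2 the query.
    swap=True:  Set2 is the reference and Set1 the query.
    """
    reference, query = [], []
    for cls, (s1, s2) in sessions.items():
        ref_files, query_files = (s2, s1) if swap else (s1, s2)
        reference += [(f, cls) for f in ref_files[:n]]
        query += [(f, cls) for f in query_files[:n]]
    return reference, query


demo = {"c1": (["a1", "a2", "a3"], ["b1", "b2", "b3"])}
ref, qry = split_sessions(demo, n=2)
```

Here `ref` holds the first two session-1 files and `qry` the first two session-2 files; passing `swap=True` exchanges their roles.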

5.1.2 PolyU multispectral palmprint database

The PolyU multispectral (PolyU M) palmprint dataset was also collected by the Hong Kong Polytechnic University (PolyU) using a purpose-built capture device, with which low-resolution images can be obtained under different illuminations, namely red (PolyU M-R), green (PolyU M-G), blue (PolyU M-B), and near-infrared (PolyU M-NIR) [25]. For each illumination, 250 individuals provided 24 palmprint images, 12 of the right hand and 12 of the left hand. The images were acquired over two sessions separated by an interval of 9 days; in each session, each user provided six palmprint images of each of the right and left hands. In total, 6000 palmprint images corresponding to 500 classes were collected for each illumination. Unlike PolyU II, PolyU M is available in both full and cropped versions. Figure 3b shows samples of the ROIs extracted from the PolyU blue-band palmprint (PolyU M-B) database. In our experiments, 3000 blue-band palmprint images corresponding to 250 classes have been considered: 1500 images acquired in the first session (i.e. 6 images per class) are used as reference images, and 1500 images of the same classes taken in the second session are used as query images.

5.1.3 IIT Delhi touchless Palmprint (version 1.0)

The IIT Delhi Touchless Palmprint (IITD) database [26] was developed over a period of one year (July 2006–June 2007) by the Biometrics Research Laboratory at IIT Delhi. It was collected from 230 participants aged between 12 and 57 years. There are 14 high-resolution palmprint images of size 800 \(\times \) 600 pixels taken from the left or right hand of each participant. This makes a total of 3220 palmprint images corresponding to 230 classes (7 images per class). In addition to the full palmprint images, cropped versions of size 150 \(\times \) 150 pixels are also available, see Fig. 3c. In our settings, each of the reference and query sets contains 1380 palmprint images representing the 230 classes, i.e. each class is represented by 6 images in each set.

5.1.4 CASIA palmprint database

The CASIA palmprint database was collected by the Chinese Academy of Sciences’ Institute of Automation (CASIA) [27]. The database consists of 5502 full palmprint images collected from 312 individuals. Each participant provided 16 palmprint images, with at least 8 images taken from each of the left and right hands. This represents 624 classes in total, each with at least 8 images. The device used to acquire CASIA does not include any pegs to restrict the posture and position of the hand. The full palmprint images were cropped to a size of \(192 \times 192\) pixels, see Fig. 3d. In our settings, each of the reference and query sets contains 1800 palmprint images representing 450 classes, with 4 images per class. As highlighted in [15], there are some issues with the acquisition and sorting of images in CASIA, such as palmprint images placed in the wrong classes or the distorted image of individual 76. The classes affected by these issues are excluded in our setup.

5.2 System analysis

In this section, validation experiments are conducted on the reference images. To this end, we split the reference set into two subsets (a reference subset and a validation subset). The purpose of this experiment is twofold: first, to understand the sensitivity of the proposed system to changes in block size; and second, to determine the optimal block size for each dataset, since the datasets were acquired with different devices, under different illumination conditions, and at various resolutions. The proposed DBM technique has been analysed using the following protocol. For PolyU II, PolyU M-B, and IITD, the first 3 images of each class form the reference subset and the remaining 3 images form the validation subset. For CASIA, the first 2 images of each class form the reference subset and the remaining 2 images are used for validation. The proportions of correctly identified palmprint images are reported in Table 1 for different block sizes \((p \times q)\).

As can be seen, the system performs well on the validation subset, although the accuracy changes slightly with the block size \((p \times q)\). Based on these validation results, the block size is set to \((16 \times 16)\) for PolyU II, PolyU M-B, and IITD and to \((20 \times 20)\) for CASIA in the rest of the paper.

5.3 Comparison with state-of-the-art techniques

In order to assess the performance of the proposed palmprint recognition system, extensive experiments have been conducted on the four aforementioned standard palmprint databases. A number of related state-of-the-art techniques have also been applied using the same experimental protocol for all tested techniques to ensure a fair comparison. All the competing palm-identification systems used in this paper have been implemented by the authors. This is because the performance of the reported techniques varies with the number and quality of the palmprint images used, and since the images used were not specified in detail, it is not possible to replicate exactly the experimental settings reported in the literature. This also explains why different results for these techniques on the same palmprint databases can be found in the literature. To demonstrate this point, a slight variation of our experimental settings has been considered in our evaluation: to illustrate the sensitivity of palmprint recognition systems to image quality within each database, the roles of the two sets, denoted here by Set\(_{1}\) and Set\(_{2}\), have been interchanged. In the protocol Set\(_{2}\) vs Set\(_{1}\), Set\(_{2}\) contains the query images and Set\(_{1}\) the reference images. Conversely, in Set\(_{1}\) vs Set\(_{2}\), Set\(_{1}\) contains the query images and Set\(_{2}\) the reference images.

To the best of our knowledge, there are very few systematic studies on deep learning for palmprint recognition. We believe that current deep learning systems are not yet mature enough to compete with traditional methods in terms of both performance and low complexity. In fact, as mentioned in [29], systems that directly use global, high-level features extracted by a CNN are not well suited to the palmprint recognition problem. This has been clearly shown in [30] (Table V), where coding-based methods (Competitive Code, DoN, and Ordinal Code) outperform common deep learning architectures such as AlexNet-S, GoogleNet, ResNet-50, and VGG-16. The deep learning-based palmprint recognition systems that have been shown to perform well also rely on low-level features or on filters other than standard ones, including Gabor filters and PCA, or on a shift-based loss function as in [30, 31], or on an alignment network preceding the CNN as in [29]; this adds extra complexity to improve performance. Deep learning systems inherently involve a much higher computational cost than coding-based palmprint recognition techniques. The fact that such systems require GPUs clearly indicates that a large number of calculations must be performed in parallel: GPUs contain thousands of processor cores running simultaneously, enabling massive parallelism. On a standard CPU, deep learning systems would require a significant amount of time to train and test. Furthermore, CNN-based systems are normally trained on a large dataset and tested on a smaller subset, unlike our experimental protocol, in which the number of test images equals the number of training images.

In this section, the performance of both palmprint identification and verification is assessed. In the identification experiments, performance is measured as the proportion of correctly identified palmprint images to the total number of query images, referred to as 'Iden' in the rest of this paper. In palmprint verification, the task is instead to decide whether or not a query palmprint image belongs to a genuine participant. Because this decision requires a threshold, performance can be measured over a range of threshold values by computing the false positive rate (FPR) and true positive rate (TPR) for each value, which yields the receiver operating characteristic (ROC) curve. The equal error rate (EER) has also been adopted in the verification experiments. The EER is the point, in percent, at which the false rejection rate, i.e. \(100(1-TPR)\), equals the false acceptance rate, i.e. \(100\,FPR\). It can be determined by finding a threshold \(T^{*}\) such that \(100\,FPR(T^{*})=100(1-TPR(T^{*}))\).
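
The EER computation can be sketched as follows, assuming that lower matching distances indicate more similar palms (as with \(D_{\mathrm{palm}}\)), that a claim is accepted when the distance is at most the threshold, and that the candidate thresholds are the observed distances. With a finite set of thresholds, FAR and FRR rarely coincide exactly, so the sketch reports their mean at the threshold where they are closest; this is a common approximation, not necessarily the procedure used in the paper.

```python
import numpy as np


def equal_error_rate(genuine, impostor):
    """Approximate EER (%) and its threshold from matching distances (lower = more similar)."""
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    best_gap, eer, best_t = np.inf, None, None
    for t in np.unique(np.concatenate([genuine, impostor])):
        far = np.mean(impostor <= t)   # FPR: impostor attempts wrongly accepted
        frr = np.mean(genuine > t)     # 1 - TPR: genuine attempts wrongly rejected
        gap = abs(far - frr)
        if gap < best_gap:
            best_gap, eer, best_t = gap, 100.0 * (far + frr) / 2.0, t
    return eer, best_t


eer, threshold = equal_error_rate([0.1, 0.2, 0.5], [0.3, 0.4, 0.6])
```

In this toy example, one genuine distance (0.5) lies above one impostor distance (0.3), so at the threshold 0.3 one third of each group is misclassified and the EER is about 33.3%.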

Note that a query palmprint image is assigned to a given class if its nearest reference image belongs to that class, i.e. if the matching distance to that reference image, as given in (8), is the lowest among all reference images. The experimental results are reported in Tables 2, 3, 4, 5 and Figs. 4, 5, 6, 7.
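
The nearest-neighbour decision rule and the 'Iden' measure can be sketched as follows, given a precomputed matrix of matching distances between query images (rows) and reference images (columns). The function name is hypothetical; multiplying the result by 100 gives a percentage.

```python
import numpy as np


def rank1_identification_rate(dist, ref_labels, query_labels) -> float:
    """'Iden': fraction of queries whose nearest reference has the correct class."""
    dist = np.asarray(dist, dtype=float)
    nearest = np.argmin(dist, axis=1)            # closest reference for each query
    predicted = np.asarray(ref_labels)[nearest]  # class of that reference
    return float(np.mean(predicted == np.asarray(query_labels)))


dist = [[0.1, 0.5, 0.4],
        [0.6, 0.2, 0.3],
        [0.2, 0.1, 0.9]]
iden = rank1_identification_rate(dist, ["a", "b", "c"], ["a", "b", "c"])
```

The third query is closest to a reference of class "b" rather than "c", so two of the three queries are identified correctly.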

Tables 2, 3, 4, 5 show that the proposed palmprint recognition system achieves high performance on low-resolution palmprint image datasets compared with existing techniques such as PalmCode and CompCode. The results also show that coding-based palmprint identification techniques perform well on both PolyU II and PolyU M-B, owing to the highly accurate alignment of images at the acquisition stage. The DoN technique [15] offers the best performance among the competing techniques, which is in line with its authors' claim of superiority over related techniques in [15]. DoN delivers the best performance on PolyU II, slightly outperforming our DBM technique. However, DBM clearly has the upper hand over its competitors on the more challenging IITD and CASIA datasets. This is mainly attributed to the block-based nature of the algorithm, which offers more robustness against illumination noise as well as geometric changes at the image acquisition stage.

Although the authors of [14] argued that the pixel-to-pixel matching strategy is more robust than the pixel-to-area matching approach proposed in [12], our results seem to differ from their findings and agree with the claim made in [12] that the one-to-many matching approach accounts for small shifts and rotations in palmprint images. Finally, it is worth noting the sensitivity of the techniques to the quality of the images used: although the same number of images from each database was used, swapping the query and reference images yields different results.

5.4 Complexity analysis

To analyse the computational complexity of the proposed system and the other competing coding-based techniques, the average run time on 100 test palmprint images of size \(128 \times 128\) pixels is measured. All source code was implemented in MATLAB and run on an Intel Core i7-4770 CPU at 3.40 GHz with 16 GB of memory. Note that MATLAB is a high-level language, and the reported run times could be significantly reduced with a lower-level language such as C or C++. As previously mentioned, all the competing techniques have been implemented by the authors. The results, in milliseconds (ms), are reported in Table 6.
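
The measurements in Table 6 were obtained in MATLAB. For readers who wish to reproduce the timing protocol in Python, a minimal harness for averaging the run time of a code extraction function over a set of test images might look like the hypothetical sketch below; its absolute numbers will not be comparable with Table 6.

```python
import time


def average_runtime_ms(fn, inputs, repeats: int = 1) -> float:
    """Average wall-clock time of fn(x) per input, in milliseconds."""
    start = time.perf_counter()
    for _ in range(repeats):
        for x in inputs:
            fn(x)
    elapsed = time.perf_counter() - start
    return 1000.0 * elapsed / (repeats * len(inputs))


# Example with a stand-in workload; replace it with a code extractor applied to 100 ROIs.
avg = average_runtime_ms(lambda n: sum(range(n)), [10_000] * 100)
```

Using `time.perf_counter` gives a monotonic, high-resolution clock, and timing the whole loop once avoids the overhead of reading the clock for every image.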

The computational cost of the proposed coding system is low compared with its competitors at both the code extraction and matching stages. This is because the code extraction stage does not require any filtering operations and uses only basic operations. Furthermore, the process is conducted in only two directions (horizontal and vertical), unlike other related techniques. The matching stage operates on binary codes, as in the competing techniques, but at a lower cost, since the extracted codes are much smaller than those of the compared techniques.

6 Conclusion

In this paper, a simple, fast, and efficient coding-based palmprint recognition system has been proposed. The technique relies on the Difference of Block Means (DBM), which requires only a small number of basic operations (mainly additions and subtractions) and is therefore suitable for online and real-time applications. The system has been analysed and assessed on a number of palmprint databases and has been shown to achieve superior performance compared with related state-of-the-art techniques at a lower computational cost.

References

[1] Kong, A., Zhang, D., Kamel, M.: Palmprint identification using feature-level fusion. Pattern Recognit. 39(3), 478–487 (2006)

[2] Zhang, D., Kong, W.K., You, J., Wong, M.: Online palmprint identification. IEEE Trans. Pattern Anal. Mach. Intell. 25(9), 1041–1050 (2003)

[3] Sun, Z., Tan, T., Wang, Y., Li, S.Z.: Ordinal palmprint representation for personal identification. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), vol. 1, pp. 279–284 (June 2005)

[4] Wu, X.-Q., Wang, K.-Q., Zhang, D.: Wavelet based palm print recognition. Intern. Conf. Mach. Learn. Cybern. 3, 1253–1257 (2002)

[5] Boles, W.W., Chu, S.Y.T.: Personal identification using images of the human palm. IEEE Annual Conf. Speech Image Technol. Comput. Telecommun. 1, 295–298 (1997)

[6] Diaz, M.R., Travieso, C.M., Alonso, J.B., Ferrer, M.A.: Biometric system based in the feature of hand palm. In: Annual International Carnahan Conference on Security Technology, pp. 136–139 (Oct. 2004)

[7] Lu, G., Zhang, D., Wang, K.: Palmprint recognition using eigenpalms features. Pattern Recognit. Lett. 24, 1463–1467 (2003)

[8] Wu, X., Zhang, D., Wang, K.: Fisherpalms based palmprint recognition. Pattern Recognit. Lett. 24, 2829–2838 (2003)

[9] Laadjel, M., Bouridane, A., Kurugollu, F., Yan, W.: Palmprint recognition based on subspace analysis of Gabor filter bank. Intern. J. Dig. Crime Forensics 4, 1–15 (2010)

[10] Kong, A.W.K., Zhang, D.: Competitive coding scheme for palmprint verification. In: Proceedings of the 17th International Conference on Pattern Recognition, vol. 1, pp. 520–523 (2004)

[11] Zhang, L., Li, L., Yang, A., Shen, Y., Yang, M.: Towards contactless palmprint recognition: a novel device, a new benchmark, and a collaborative representation based identification approach. Pattern Recognit. 69, 199–212 (2017)

[12] Jia, W., Huang, D.-S., Zhang, D.: Palmprint verification based on robust line orientation code. Pattern Recognit. 41(5), 1504–1513 (2008)

[13] Jia, W., Hu, R.-X., Lei, Y.-K., Zhao, Y., Gui, J.: Histogram of oriented lines for palmprint recognition. IEEE Trans. Syst. Man Cybern. Syst. 44(3), 385–395 (2014)

[14] Zheng, Q., Kumar, A., Pan, G.: Suspecting less and doing better: new insights on palmprint identification for faster and more accurate matching. IEEE Trans. Inf. Forensics Secur. 11, 633–641 (2016)

[15] Zheng, Q., Kumar, A., Pan, G.: 3D feature descriptor recovered from a single 2D palmprint image. IEEE Trans. Pattern Anal. Mach. Intell. 38(6), 1272–1279 (2016)

[16] Fei, L., Zhang, B., Xu, Y., Huang, D., Jia, W., Wen, J.: Local discriminant direction binary pattern for palmprint representation and recognition. IEEE Trans. Circuits Syst. Video Technol. 30(2), 468–481 (2020)

[17] Fei, L., Zhang, B., Zhang, W., Teng, S.: Local apparent and latent direction extraction for palmprint recognition. Inf. Sci. 473, 59–72 (2019)

[18] Bhilare, S., Jaswal, G., Kanhangad, V., Nigam, A.: Single-sensor hand-vein multimodal biometric recognition using multiscale deep pyramidal approach. Mach. Vis. Appl. 29, 1269–1286 (2018)

[19] Ahmad, F., Cheng, L.-M., Khan, A.: Lightweight and privacy-preserving template generation for palm-vein-based human recognition. IEEE Trans. Inf. Forensics Secur. 15(5), 184–194 (2019)

[20] Almaghtuf, J., Khelifi, F.: Self-geometric relationship filter for efficient SIFT key-points matching in full and partial palmprint recognition. IET Biom. 7(4), 296–304 (2018)

[21] Oostveen, J., Kalker, T., Haitsma, J.: Feature extraction and a database strategy for video fingerprinting. In: Proceedings of the 5th International Conference on Recent Advances in Visual Information Systems, Taiwan (Mar. 2002)

[22] Khelifi, F., Bouridane, A.: Perceptual video hashing for content identification and authentication. IEEE Trans. Circuits Syst. Video Technol. 29(1), 50–67 (2019)

[23] Khelifi, F., Jiang, J.: Perceptual image hashing based on virtual watermark detection. IEEE Trans. Image Process. 19, 981–994 (2010)

[24] PolyU palmprint database. http://www4.comp.polyu.edu.hk/~biometrics/, accessed Jan. 2016

[25] PolyU multispectral palmprint database. http://www4.comp.polyu.edu.hk/~biometrics/multispectralpalmprint/msp.htm, accessed Jan. 2016

[26] IIT Delhi touchless palmprint database version 1.0. http://www4.comp.polyu.edu.hk/~csajaykr/iitd/database_palm.htm, accessed Jan. 2016

[27] CASIA palmprint database. http://biometrics.idealtest.org/, accessed Mar. 2020

[28] Fei, L., Zhang, B., Xu, Y., Yan, L.: Palmprint recognition using neighboring direction indicator. IEEE Trans. Human–Mach. Syst. 46(6), 787–798 (2016)

[29] Liu, Y., Kumar, A.: Contactless palmprint identification using deeply learned residual features. IEEE Trans. Biom. Behav. Identity Sci. 2(2), 172–181 (2020)

[30] Matkowski, W.M., Chai, T., Kong, A.W.K.: Palmprint recognition in uncontrolled and uncooperative environment. IEEE Trans. Inf. Forensics Secur. 15, 1601–1615 (2020)

[31] Genovese, A., Piuri, V., Scotti, F., Plataniotis, K.N.: PalmNet: Gabor-PCA convolutional networks for touchless palmprint recognition. IEEE Trans. Inf. Forensics Secur. 14(12), 3160–3174 (2019)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Almaghtuf, J., Khelifi, F. & Bouridane, A. Fast and efficient difference of block means code for palmprint recognition. Machine Vision and Applications 31, 51 (2020). https://doi.org/10.1007/s00138-020-01103-3
