Abstract

Purpose

To provide an overview and evaluate the performance of mortality prediction models for patients requiring extracorporeal membrane oxygenation (ECMO) support for refractory cardiocirculatory or respiratory failure.

Methods

A systematic literature search was undertaken to identify studies developing and/or validating multivariable prediction models for all-cause mortality in adults requiring or receiving veno-arterial (V-A) or veno-venous (V-V) ECMO. Estimates of model performance (observed versus expected (O:E) ratio and c-statistic) were summarized using random effects models and sources of heterogeneity were explored by means of meta-regression. Risk of bias was assessed using the Prediction model Risk Of BiAS Tool (PROBAST).

Results

Among 4905 articles screened, 96 studies described a total of 58 models and 225 external validations. Out of all 58 models which were specifically developed for ECMO patients, 14 (24%) were ever externally validated. Discriminatory ability of frequently validated models developed for ECMO patients (i.e., SAVE and RESP score) was moderate on average (pooled c-statistics between 0.66 and 0.70), and comparable to general intensive care population-based models (pooled c-statistics varying between 0.66 and 0.69 for the Simplified Acute Physiology Score II (SAPS II), Acute Physiology and Chronic Health Evaluation II (APACHE II) score and Sequential Organ Failure Assessment (SOFA) score). Nearly all models tended to underestimate mortality with a pooled O:E > 1. There was a wide variability in reported performance measures of external validations, reflecting a large between-study heterogeneity. Only 1 of the 58 models met the generally accepted Prediction model Risk Of BiAS Tool criteria of good quality. Importantly, all predicted outcomes were conditional on the fact that ECMO support had already been initiated, thereby reducing their applicability for patient selection in clinical practice.

Conclusions

A large number of mortality prediction models have been developed for ECMO patients, yet only a minority has been externally validated. Furthermore, we observed only moderate predictive performance, large heterogeneity between-study populations and model performance, and poor methodological quality overall. Most importantly, current models are unsuitable to provide decision support for selecting individuals in whom initiation of ECMO would be most beneficial, as all models were developed in ECMO patients only and the decision to start ECMO had, therefore, already been made.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

This extensive systematic review addresses the characteristics, quality and performance of all mortality prediction models which have ever been developed (n = 58) or externally validated (n = 225) in patients requiring extra-corporeal membrane oxygenation (ECMO) for refractory circulatory and/or respiratory failure. Despite the large number of ECMO mortality prediction models that have been published, current models seem unsuitable for individual decision making due to high concerns for bias and methodological shortcomings. Furthermore, the conditionality of models on the fact that ECMO had already been initiated in all cohorts further limits their applicability for ECMO allocation. |

Introduction

Extracorporeal membrane oxygenation (ECMO) is increasingly used as a salvage support modality in patients with refractory cardiogenic shock and/or severe respiratory failure. Despite its widespread application [1, 2], ECMO remains associated with high complication rates [3], and a considerable number of patients eventually die [4], or experience negative long-term sequelae [5, 6]. The increased use of ECMO for a broadened range of indications poses a significant socio-economic burden [7, 8] as its management requires extensive critical care by highly qualified personnel. Therefore, it is important to reserve ECMO support mainly for those who benefit most.

Prediction models could potentially aid in important decisions such as the allocation of ECMO to patients who would experience a clear survival advantage when receiving such support. In recent years, many prediction models have been developed for the dedicated use in ECMO patients [9,10,11,12,13,14,15,16], but a comprehensive overview of developed models with their predictive performance, and practical applicability to inform clinicians when and in which context these models could be applied currently lacks.

The primary objective of this systematic review was to summarize and appraise all published prognostic models for patients requiring veno-arterial (V-A) or veno-venous (V-V) ECMO support for severe cardiocirculatory shock and/or respiratory failure. As a secondary objective, model performance was assessed for each model.

Methods

This review was registered at the international prospective register of systematic reviews PROSPERO (University of York) with registration number CRD42021251873. The formalized scope of this review is outlined in the Population, Intervention, Comparator, Outcomes, Timing and Setting (PICOTS) table available in Table 1 of Electronic Supplementary Material (ESM) Appendix 1.

Eligibility criteria

We considered all original research reports reporting on the development, redevelopment and/or external validation of a multivariable (≥ 2 predictors) model aimed at the prediction of all-cause mortality in adult (> 18 years) patients receiving V-A or V-V ECMO support for severe cardiocirculatory and/or respiratory failure. Only peer-reviewed research articles published in English were considered for inclusion. There were no limitations on prediction horizon or year of publication.

Search and data extraction

A systematic literature search (details available in ESM Appendix 2) was undertaken in PubMed and EMBASE on January 3, 2022. Two reviewers (LP and JB) independently screened all articles for eligibility based on title and abstract. Subsequently, both reviewers assessed the remaining articles in full text. Discrepancies between reviewers were resolved through discussion with a third and independent reviewer (CM). Thereafter, articles citing studies reporting on the derivation of prognostic models were extracted through reference search in ISI Web of Science. In addition, a cross-reference check was performed in all retrieved articles to identify other eligible articles.

Two reviewers (LP and JB) independently extracted data using a standardized data extraction form (ESM Appendix 3). Again, discrepancies between reviewers were resolved through discussion with a third independent reviewer (CM). Items of the data extraction form were based on the CHecklist for critical Appraisal and data extraction for systematic Reviews of prediction Modeling Studies (CHARMS) which addresses key information on the source of data, study design, participants, outcomes, candidate predictors, prediction horizon, sample size, missing data, model development, model performance and model evaluation [17]. The Prediction model Risk Of BiAS Tool (PROBAST) was used to assess the risk of bias and concern for applicability per model, using signaling questions in four different domains (participants, predictors, outcomes and analysis) [18]. The overall risk of bias was judged ‘low’ when all four domains were scored as having low risk of bias. When at least one domain indicated a high risk of bias, overall risk of bias was scored high. When the risk of bias was ‘unclear’ in at least one domain and all other domains were scored low risk of bias, the overall risk of bias was scored ‘unclear’. This scoring system was also applied to the assessment of concerns of study applicability, indicating the extent to which the participants, predictors and outcomes in the study matched the review question.

We extracted concordance (c) statistics as a measure of discrimination. A c-statistic represents the ability of a model to distinguish a patient with—versus an individual without—the outcome of interest. A higher c-statistic illustrates a higher discriminatory capability (range 0–1). For the assessment of calibration, observed versus expected (O:E) ratios were extracted. O:E ratios reflect the agreement between the observed and predicted outcomes and the direction of misclassification, i.e., O:E > 1 reflects underprediction and O:E < 1 overprediction. When the predicted mortality was not reported, the reported mean model score in the dataset was used to estimate the corresponding predicted mortality or survival, if possible. When a study reported separate performance metrics for derivation and validation datasets, both values were included as independent observations.

Statistical analysis

We pooled the logit c-statistics and log O:E ratios [19] of prediction models with random-effects meta-analysis when these metrics were available in at least 10 independent datasets with comparable outcome of interest, i.e., we did not mix short-term and long-term mortality. If measures of uncertainties, i.e., confidence intervals (CI) and/or standard errors (SE) of the c-statistic or O:E ratio, were not reported they were approximated as described earlier [19]. Pooled c-statistics and O:E ratios were presented using forest plots. In addition, we calculated 95% prediction intervals (PI) [19]. A 95% PI reflects the range in which each performance metric is expected to fall in future validation studies and reflects the degree of between-study heterogeneity.

To assess whether model performance was influenced by differences in study characteristics (prediction moment, geographical location, year of start recruitment, mean model score and risk of bias), patient characteristics (median age, percentage male patients, percentage of patients with the predicted outcome) or features relating to the disease or ECMO configuration (percentage V-A ECMO, percentage bacterial and viral pneumonia, pre-ECMO cardiac arrest, postcardiotomy, immunocompromised status prior to ECMO), univariable random effects meta-regression was performed. In addition, subgroup analysis was performed to assess performance of prediction models in specific cohorts (e.g., after excluding ECPR patients). This systematic review was reported according to the PRISMA statement for reporting systematic reviews and meta-analysis [20]. R version 1.3.1093 with packages ‘metamisc’ and ‘metafor’ was used for data analysis.

Results

Our search yielded 4905 unique publications (Fig. 1). 4485 articles were excluded based on title/abstract, and 328 based on full text review, respectively, leaving 92 eligible articles for inclusion. After identification of 4 other articles through cross-reference checking, 96 articles were included in our study. Of these 96 articles, 46 articles showed the developments of 58 models. 77 articles reported on 225 external model validations and 27 articles reported on both model development and external validation(s).

Patients and settings

Of 58 developed models, 21 (15 articles) [9, 11, 13, 21,22,23,24,25,26,27,28,29,30,31,32] and 31 (29 articles) [10, 12, 14,15,16, 30, 33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57] were derived from ECMO recipients suffering from respiratory and cardiocirculatory failure, respectively. Six other models (in two studies) were derived in a mixed cohort [58, 59]. All models were developed in patients who received ECMO and a majority were single-center cohorts (n = 40, 69%). The models’ derivation cohort sizes ranged from 17 to 4175 patients. A comprehensive overview of the populations, geographical location and recruitment years of derived models is available in Fig. 2a. Recruitment of cohorts for model derivation and validation occurred between 2002 and 2020. Patients’ median or mean age (depending on normality of the distribution) ranged from 37 to 76 years across all cohorts. All cohorts comprised predominantly males with the percentage of male patients varying between 50 and 85%. The time point of prediction was shortly (within 48 h) after ECMO initiation in the majority of models (n = 42, 72%) [9,10,11,12,13,14,15,16, 21,22,23,24,25,26,27, 29,30,31,32,33,34,35, 37,38,39, 41,42,43,44,45, 49, 50, 52, 54, 59], during ECMO support (n = 8, 14%) [28, 40, 51, 53], after weaning (n = 1, 2%) [36], before durable mechanical circulatory support implantation (n = 1, 2%) [46], before dialysis while on ECMO (n = 1, 2%) [58] and pre-surgery in postcardiotomy patients (n = 1, 2%) [55]. In three models (5%) [47, 48, 57], the time point of prediction was unclear. Pre-ECMO variables were used for mortality prediction in the majority of models (67%) (the time point of prediction was after initiation of ECMO, also if only pre-ECMO variables were used). The most frequently included predictors were age, lactate, Sequential Organ Failure Assessment (SOFA) score, duration of mechanical ventilation prior to ECMO, weight, gender and immunocompromised status pre-ECMO (in 23, 18, 14, 7, 6, 5 and 5 models, respectively). An overview of the included predictors per model for ECMO patients suffering respiratory failure, cardiocirculatory failure and mixed cohorts is available in ESM Appendix 4 (Tables 2, 3 and 4).

The outcome of interest, i.e., the predicted outcome of the model, ought to occur in short term (in-hospital mortality or survival, n = 41 (71%), ICU mortality/survival, n = 3 (5%), 30-day mortality/survival, n = 2 (3%), mortality during ECMO, n = 2 (3%) and mortality in the first 7 days after initiation, n = 1 (2%)) in 49 (84%) of models and mid-to-long term (mortality/survival at 3–36 months after ECMO support) in 9 (16%) studies. No dynamic models were identified, i.e., none of the models was updated over time by taking into account serial changes in patient status or events for updated predictions. C-statistics in derivation cohorts varied between 0.602 and 0.970. Relevant study characteristics of derived models are presented in ESM Appendix 5, Table 5.

External validation

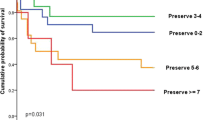

Of all 58 models which were developed for ECMO patients, only 14 (24%) models were externally validated (see Table 5 of ESM Appendix 5). Of these, the Survival after Veno-Arterial ECMO score (SAVE), Respiratory ECMO Survival Prediction Score (RESP) and Predicting death for severe ARDS on VV-ECMO (PRESERVE) score were externally validated more than 10 times (20, 18, and 12 times, respectively). Six prognostic models aiming to predict all-cause mortality in general ICU patients were externally validated in ECMO patients. Of these, the SOFA score, the Simplified Acute Physiology (SAPS II) Score, and the Acute Physiology and Chronic Health Evaluation (APACHE II) score were externally validated in more than 10 ECMO cohorts (37, 21 and 22 validations, respectively). External validation studies’ study and population characteristics are presented in Fig. 2b. The c-statistics of externally validated models ranged from 0.440 to 0.920. An overview of external validations grouped by model is provided in ESM Appendix 6, Table 6.

Predictive performance

Discrimination

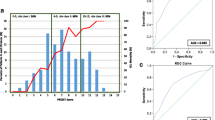

Figure 3a presents the pooled c-statistics for the various models. Pooled c-statistics of the SAVE, RESP, SAPS II, APACHE II and SOFA score were comparable (range 0.66–0.70). 95% PIs were wide for all aforementioned models indicating extensive between-study heterogeneity (Fig. 3b).

Calibration

Pooled O:E ratios were available for the SAVE, SAPS II, APACHE II and SOFA score (Fig. 3c. All models tended to underestimate mortality (O:E ratio > 1), except for the SAPS II score which, on average, overestimated mortality risk (O:E ratio < 1). Similar to the prediction intervals of the c-statistics, the prediction intervals of the O:E ratios were wide suggesting large between-study heterogeneity (Fig. 3d). Forest plots displaying reported c-statistics and O:E ratios of studies validating the SAVE, RESP, SAPS II, APACHE II and SOFA score are available in ESM Appendix 7, Fig. 1a–i.

Sources of heterogeneity

Meta-regression analyses for the SAVE, RESP, SAPS II, APACHE II and SOFA score did not reveal an association with previously specified factors (ESM Appendix 8, Figs. 2–6). After excluding studies with a high risk of bias in more than two domains (see below), the pooled O:E ratio of the APACHE II model changed from a tendency to underpredict to overprediction of mortality (from 1.09, 95% CI 0.92–1.30 to 0.64, 95% CI 0.54–0.74). No significant change in direction of pooled estimates was observed for the other models (ESM Appendix 9.1). Discriminatory performance of the SAVE Score was better in cohorts without ECPR patients (n = 7, c-statistic: 0.740 (0.590–0.840) than in cohorts also comprising patients after ECPR (n = 16, 0.700 (0.640–0.750) (ESM Appendix 9.2). The SAPS II, APACHE II and SOFA score performed best in cardiocirculatory and mixed cohorts (ESM Appendix 9.3). Performance in cohorts of adequate sample size (> 100 patients with the outcome) was comparable to performance in smaller cohorts (ESM Appendix 9.4). Lastly, performance of models in external validations published after the Transparent Reporting of a Multivariate Prediction Model for Individual Prognosis or Diagnosis (TRIPOD) guidelines [60] did not differ significantly after excluding studies published before the guidelines became available (ESM Appendix 9.5). However, all aforementioned outcomes have to be interpreted with caution due to the small number of studies available for subanalysis.

Risk of bias assessment

Risk of bias was scored high for all but one derived model [14] (n = 57, 98%) and in 213 (95%) out of 225 external validations (Fig. 4a, c). The main reason for the high risk of bias for both model derivations and validations were small sample sizes. Only 4 (7%) derived models met the recommended event-per-variable (EPV) of ≥ 10 events (death or survival) per candidate variable, and 36 (16%) of the 225 external validations met the PROBAST recommendations of having at least 100 outcome events. Another reason for high risk of bias was a lack of reported calibration measures (a calibration plot was provided in 29 (13%) of external validations. The number of studies with a low risk of bias increased in recent years (from 2018 onwards, see Fig. 8 in ESM Appendix 9.5). Risk of bias assessment for each included derived model and external validation is provided in ESM Appendix 10. Concerns for applicability arose in 41% of derived models and 12% of external validations (Fig. 4b, d) and seemed primarily caused by a mismatch between the type of included patients and the aim of the study, being to provide decision support to the question whether or not to initiate ECMO support [10,11,12, 16, 21, 25, 27, 33, 34, 42, 50]. Namely, all models were developed in patients who had already received ECMO support.

Discussion

In this first, comprehensive systematic review and meta-analysis of studies focusing on mortality prediction in the setting of ECMO, we identified 58 unique prediction models designed specifically for such purpose. Among them, the SAVE and RESP score were most frequently externally validated but only had a moderate discriminative performance overall. A similar observation was made for the performance of general ICU prediction derived models SAPS II, APACHE II and SOFA score in ECMO cohorts. In addition, the majority of models had a high risk of bias and were conditional on the fact that ECMO support had already been initiated. As such, current models seem unfit to aid in decisions regarding individual cases.

The necessity for accurate and reliable prognostications as an aid for clinical decision making in patients with ECMO support is underscored by the large number of publications and exists because of several reasons. First, prediction models may assist in the decision to cease further treatment during ECMO support when models would indicate (a near) certainty for a fatal or unwanted outcome. Second, reliable prediction models may improve cost-effectiveness of ECMO, as the expanding growth of this resource-intensive support modality creates a considerable economic burden [1]. Finally, although current models would not suffice for such purpose, future prediction models, based on source populations where also patients would be included who did not receive ECMO after all, could provide a more objective assessment tool for selecting patients in whom chances of success (survival and weaning) are best.

Despite the abundance of prediction models that have been published, their clinical applicability seems limited by several factors. At first, nearly all (both derivation and external validation) studies had high risk of bias which was mainly attributable to the criterium of small sample sizes. Small sample sizes may cause bias through overfitting of predictors in derivation studies [18, 61] and provide distorted estimates of model performance in external validation studies. Second, overall performance for the different models was merely moderate rendering a significant possibility of misclassification. Of special interest was the post hoc observation that performance measures were not substantially different between general ICU prediction models and models that were specifically developed in—and for—ECMO recipients. It was to be expected that ECMO-specific models would outperform general ICU models as the ECMO population likely features additional and typical clinical characteristics and hence different predictors compared to a general ICU population; as similarly holds for models derived specifically for sepsis [62] and cancer patients [63] in the ICU. The overall observed equal performance of ICU models is yet likely explained by the clinical heterogeneity of ECMO patients in terms of age, indications and management, and the fact that important prognostic information occurring during the course of ECMO is not uniformly incorporated in all, i.e., ECMO-specific and general ICU models. In general, it appears that predictors of mortality in patients suffering from cardiocirculatory and/or respiratory failure are similar to a certain extent in patients with and without ECMO in current models.

On top of the previous discussion, a large heterogeneity in model performance (as illustrated by wide prediction intervals) was found throughout different studies and populations. We were not able to reveal significant associations between model performance and study/patient/disease characteristics in extensive meta-regression analyses. The absence of explanatory findings in meta-regression analyses could possibly be caused by small numbers of external validation studies. The heterogeneity may find its explanation in differences regarding ECMO indications and management between physicians, centers and countries. The observation of a lower model performance in external validations often prompted the development of a new model, which in turn ultimately led to a wide and undesirable variation of available prediction models. This dilemma is well recognized, and therefore it is recommended to update models instead of ‘starting from scratch’, especially when it is difficult to generate sufficiently large sample sizes [64].

The clinical applicability of all the models analyzed here was additionally limited by important conditionalities, i.e., the patients selected in the development cohorts and the timing of prediction. A physician may use the predicted mortality of a specific patient for two potential scenarios: one after—, and one before—the institution of ECMO (as described above). It should be noted that all 58 prediction models were derived in patients who had already received ECMO. Thus, physicians are currently merely informed about the first scenario, i.e., with ECMO, as the predicted mortality is conditional on having already received ECMO and being admitted in an ECMO practicing center. This is still the case when only pre-ECMO variables are incorporated in the prediction model. This conditionality renders current models methodologically unfit for the purpose of decision making prior to the initiation of ECMO, despite the aim of a substantial number of publications to do so [10,11,12, 16, 21, 25, 27, 33, 34, 42, 50]. For prediction in patients on ECMO, cohorts only comprising patients on ECMO support would obviously be preferred. However, a specific prediction moment limits their clinical applicability as most models are based on data acquired only around a single time point of prediction, usually upon initiation of ECMO.

Future directions

Findings from this systematic review of current prediction models in ECMO highlights the urgent need to (re)design and create reliable models that can assist in the potential irreversible clinical decision-making processes that are inevitably encountered during ECMO support. Currently available prediction models should be externally validated, regularly updated and recalibrated to adjust for changes in case mix and survival rates [65]). Models should also be evaluated and, possibly, be adjusted for specific subgroups of patients (e.g., ARDS or postcardiotomy). For this purpose, individual patient data (IPD) meta-analysis could help to generate sufficiently large patient cohorts. Another interesting development to improve performance of prediction models would be to update probabilities for survival over time by incorporating variables (e.g., complications and parameters reflecting organ (dys-) function collected during the course ECMO support. For this purpose, dynamic prediction approaches and artificial intelligence techniques could be of significant interest.

To aid in the decision to start or withhold ECMO for a given patient, it is essential to develop a model in one or multiple source populations also comprising patients who did not yet receive ECMO, or would not receive ECMO support at all. The actual initiation of ECMO can then be modeled as an additional variable in the equation. Bayesian modeling techniques might also prove helpful for this, by incorporating available knowledge from the literature and expert opinion about patients who are rarely represented in current models (i.e., elderly, cancer patients) in the statistical model.

Limitations of this review

In our approach, we decided a-priori to pool c-statistics and O:E ratios of external validation studies irrespective of heterogeneity in patient cohorts (Prospero, CRD42021251873), and potentially also in pooled outcomes. We decided to do so because all studies clearly addressed the same research question, being to externally validate a mortality risk prediction in critically ill ECMO patients. As such, we consider the observed heterogeneity rather as an outcome measure than a shortcoming of the study design. Second, pooling was only possible when c-statistics and O:E ratios were reported or could be estimated in more than 10 studies. As this was only true for the SAVE, RESP, SAPS II, APACHE II and SOFA score, it could have resulted in selective reporting of pooled c-statistics and O:E ratios and the possibility of bias. To mitigate this risk as much as possible, we calculated these performance metrics in as many validation studies as possible through calculating predicted mortality on basis of average model scores. We did so as these metrics are of great importance for the interpretation of performance and a subsequent safe use of prediction models in clinical practice [66].

Conclusions

Robust mortality prognostications could significantly help for important treatment decisions regarding treatment benefit and futility in patients supported with ECMO. Although a large number of ECMO mortality prediction models have been published for such purpose, discriminative performance of these models in external validation studies was moderate at best. In addition, these models suffered from methodological shortcomings and were largely conditional on the fact that ECMO had just been initiated. As such, currently available models seem unsuitable for individual decision-making pre-ECMO and while on ECMO support. Future research should focus on model development in cohorts of eligible ECMO patients as well as the incorporation of time-dependent parameters and events.

Data availability

Data and code are available upon request.

References

Friedrichson B, Mutlak H, Zacharowski K, Piekarski F (2021) Insight into ECMO, mortality and ARDS: a nationwide analysis of 45,647 ECMO runs. Crit Care 25(1):38

Organization Els. ECLS Registry Report: international summary. Ann Arbor: Extracorporeal life support organization; 2021 19th October 2021. Contract No.: 1.

Zangrillo A, Landoni G, Biondi-Zoccai G, Greco M, Greco T, Frati G et al (2013) A meta-analysis of complications and mortality of extracorporeal membrane oxygenation. Crit Care Resusc 15(3):172–178

Karagiannidis C, Brodie D, Strassmann S, Stoelben E, Philipp A, Bein T et al (2016) Extracorporeal membrane oxygenation: evolving epidemiology and mortality. Intensive Care Med 42(5):889–896

Cheng R, Hachamovitch R, Kittleson M, Patel J, Arabia F, Moriguchi J et al (2014) Complications of extracorporeal membrane oxygenation for treatment of cardiogenic shock and cardiac arrest: a meta-analysis of 1,866 adult patients. Ann Thorac Surg 97(2):610–616

Hodgson CL, Hayes K, Everard T, Nichol A, Davies AR, Bailey MJ et al (2012) Long-term quality of life in patients with acute respiratory distress syndrome requiring extracorporeal membrane oxygenation for refractory hypoxaemia. Crit Care 16(5):R202

Oude Lansink-Hartgring A, van den Hengel B, van der Bij W, Erasmus ME, Mariani MA, Rienstra M et al (2016) Hospital costs of extracorporeal life support therapy. Crit Care Med 44(4):717–723

Dennis M, Zmudzki F, Burns B, Scott S, Gattas D, Reynolds C et al (2019) Cost effectiveness and quality of life analysis of extracorporeal cardiopulmonary resuscitation (ECPR) for refractory cardiac arrest. Resuscitation 139:49–56

Schmidt M, Bailey M, Sheldrake J, Hodgson C, Aubron C, Rycus PT et al (2014) Predicting survival after extracorporeal membrane oxygenation for severe acute respiratory failure: the respiratory extracorporeal membrane oxygenation survival prediction (RESP) score. Am J Respir Crit Care Med 189(11):1374–1382

Muller G, Flecher E, Lebreton G, Luyt CE, Trouillet JL, Brechot N et al (2016) The ENCOURAGE mortality risk score and analysis of long-term outcomes after VA-ECMO for acute myocardial infarction with cardiogenic shock. Intensive Care Med 42(3):370–378

Schmidt M, Zogheib E, Roze H, Repesse X, Lebreton G, Luyt CE et al (2013) The PRESERVE mortality risk score and analysis of long-term outcomes after extracorporeal membrane oxygenation for severe acute respiratory distress syndrome. Intensive Care Med 39(10):1704–1713

Wang L, Yang F, Wang X, Xie H, Fan E, Ogino M et al (2019) Predicting mortality in patients undergoing VA-ECMO after coronary artery bypass grafting: the REMEMBER score. Crit Care 23(1):11

Pappalardo F, Pieri M, Greco T, Patroniti N, Pesenti A, Arcadipane A et al (2013) Predicting mortality risk in patients undergoing venovenous ECMO for ARDS due to influenza A (H1N1) pneumonia: the ECMOnet score. Intensive Care Med 39(2):275–281

Wengenmayer T, Duerschmied D, Graf E, Chiabudini M, Benk C, Mühlschlegel S et al (2019) Development and validation of a prognostic model for survival in patients treated with venoarterial extracorporeal membrane oxygenation: the PREDICT VA-ECMO score. Eur Heart J Acute Cardiovasc Care 8(4):350–359

Biancari F, Dalén M, Fiore A, Ruggieri VG, Saeed D, Jónsson K et al (2020) Multicenter study on postcardiotomy venoarterial extracorporeal membrane oxygenation. J Thorac Cardiovasc Surg 159(5):1844–54.e6

Schmidt M, Burrell A, Roberts L, Bailey M, Sheldrake J, Rycus PT et al (2015) Predicting survival after ECMO for refractory cardiogenic shock: the survival after veno-arterial-ECMO (SAVE)-score. Eur Heart J 36(33):2246–2256

Moons KG, de Groot JA, Bouwmeester W, Vergouwe Y, Mallett S, Altman DG et al (2014) Critical appraisal and data extraction for systematic reviews of prediction modelling studies: the CHARMS checklist. PLoS Med 11(10):e1001744

Wolff RF, Moons KGM, Riley RD, Whiting PF, Westwood M, Collins GS et al (2019) PROBAST: a tool to assess the risk of bias and applicability of prediction model studies. Ann Intern Med 170(1):51–58

Debray TP, Damen JA, Snell KI, Ensor J, Hooft L, Reitsma JB et al (2017) A guide to systematic review and meta-analysis of prediction model performance. BMJ 356:i6460

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD et al (2021) The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 372:n71

Hilder M, Herbstreit F, Adamzik M, Beiderlinden M, Bürschen M, Peters J et al (2017) Comparison of mortality prediction models in acute respiratory distress syndrome undergoing extracorporeal membrane oxygenation and development of a novel prediction score: the PREdiction of Survival on ECMO Therapy-Score (PRESET-Score). Crit Care 21(1):301

Cheng YT, Wu MY, Chang YS, Huang CC, Lin PJ (2016) Developing a simple preinterventional score to predict hospital mortality in adult venovenous extracorporeal membrane oxygenation: a pilot study. Medicine (Baltimore) 95(30):e4380

Kaestner F, Rapp D, Trudzinski FC, Olewczynska N, Wagenpfeil S, Langer F et al (2018) High serum bilirubin levels, NT-pro-BNP, and lactate predict mortality in long-term, severely ill respiratory ECMO patients. Asaio J 64(2):232–237

Wu MY, Chang YS, Huang CC, Wu TI, Lin PJ (2017) The impacts of baseline ventilator parameters on hospital mortality in acute respiratory distress syndrome treated with venovenous extracorporeal membrane oxygenation: a retrospective cohort study. BMC Pulm Med 17(1):181

Wu MY, Huang CC, Wu TI, Chang YS, Wang CL, Lin PJ (2017) Is there a preinterventional mechanical ventilation time limit for candidates of adult respiratory extracorporeal membrane oxygenation. Asaio j 63(5):650–658

Lazzeri C, Cianchi G, Mauri T, Pesenti A, Bonizzoli M, Batacchi S et al (2018) A novel risk score for severe ARDS patients undergoing ECMO after retrieval from peripheral hospitals. Acta Anaesthesiol Scand 62(1):38–48

Roch A, Hraiech S, Masson E, Grisoli D, Forel JM, Boucekine M et al (2014) Outcome of acute respiratory distress syndrome patients treated with extracorporeal membrane oxygenation and brought to a referral center. Intensive Care Med 40(1):74–83

Posluszny J, Engoren M, Napolitano LM, Rycus PT, Bartlett RH (2020) Predicting survival of adult respiratory failure patients receiving prolonged (≥14 days) extracorporeal membrane oxygenation. Asaio J 66(7):825–833

Chiu LC, Tsai FC, Hu HC, Chang CH, Hung CY, Lee CS et al (2015) Survival predictors in acute respiratory distress syndrome with extracorporeal membrane oxygenation. Ann Thorac Surg 99(1):243–250

Hsin CH, Wu MY, Huang CC, Kao KC, Lin PJ (2016) Venovenous extracorporeal membrane oxygenation in adult respiratory failure: Scores for mortality prediction. Medicine (Baltimore) 95(25):e3989

Enger T, Philipp A, Videm V, Lubnow M, Wahba A, Fischer M et al (2014) Prediction of mortality in adult patients with severe acute lung failure receiving veno-venous extracorporeal membrane oxygenation: a prospective observational study. Crit Care 18(2):R67

Zayat R, Kalverkamp S, Grottke O, Durak K, Dreher M, Autschbach R et al (2021) Role of extracorporeal membrane oxygenation in critically ill COVID-19 patients and predictors of mortality. Artif Organs 45(6):E158–E170

Siao FY, Chiu CW, Chiu CC, Chang YJ, Chen YC, Chen YL et al (2020) Can we predict patient outcome before extracorporeal membrane oxygenation for refractory cardiac arrest? Scand J Trauma Resusc Emerg Med 28(1):58

Park SB, Yang JH, Park TK, Cho YH, Sung K, Chung CR et al (2014) Developing a risk prediction model for survival to discharge in cardiac arrest patients who undergo extracorporeal membrane oxygenation. Int J Cardiol 177(3):1031–1035

Hong TH, Kuo SW, Hu FC, Ko WJ, Hsu LM, Huang SC et al (2014) Do interleukin-10 and superoxide ions predict outcomes of cardiac extracorporeal membrane oxygenation patients? Antioxid Redox Signal 20(1):60–68

Burrell AJC, Pellegrino VA, Wolfe R, Wong WK, Cooper DJ, Kaye DM et al (2015) Long-term survival of adults with cardiogenic shock after venoarterial extracorporeal membrane oxygenation. J Crit Care 30(5):949–956

Akin S, Caliskan K, Soliman O, Muslem R, Guven G, van Thiel RJ et al (2020) A novel mortality risk score predicting intensive care mortality in cardiogenic shock patients treated with veno-arterial extracorporeal membrane oxygenation. J Crit Care 55:35–41

Ayers B, Wood K, Gosev I, Prasad S (2020) Predicting survival after extracorporeal membrane oxygenation by using machine learning. Ann Thorac Surg 110(4):1193–1200

Lee SW, Han KS, Park JS, Lee JS, Kim SJ (2017) Prognostic indicators of survival and survival prediction model following extracorporeal cardiopulmonary resuscitation in patients with sudden refractory cardiac arrest. Ann Intensive Care 7:1

Tsai TY, Tu KH, Tsai FC, Nan YY, Fan PC, Chang CH et al (2019) Prognostic value of endothelial biomarkers in refractory cardiogenic shock with ECLS: a prospective monocentric study. Bmc Anesthesiol 19:1–8

Choi KH, Yang JH, Park TK, Lee JM, Song YB, Hahn JY et al (2019) Risk prediction model of in-hospital mortality in patients with myocardial infarction treated with venoarterial extracorporeal membrane oxygenation. Rev Esp Cardiol (Engl Ed) 72(9):724–731

Becher PM, Twerenbold R, Schrage B, Schmack B, Sinning CR, Fluschnik N et al (2020) Risk prediction of in-hospital mortality in patients with venoarterial extracorporeal membrane oxygenation for cardiopulmonary support: the ECMO-ACCEPTS score. J Crit Care 56:100–105

Peigh G, Cavarocchi N, Keith SW, Hirose H (2015) Simple new risk score model for adult cardiac extracorporeal membrane oxygenation: simple cardiac ECMO score. J Surg Res 198(2):273–279

Worku B, Khin S, Gaudino M, Avgerinos D, Gambardella I, D’Ayala M et al (2019) A simple scoring system to predict survival after venoarterial extracorporeal membrane oxygenation. J Extra Corpor Technol 51(3):133–139

Chen F, Wang L, Shao J, Wang H, Hou X, Jia M (2020) Survival following venoarterial extracorporeal membrane oxygenation in postcardiotomy cardiogenic shock adults. Perfusion 35(8):747–755

Saeed D, Potapov E, Loforte A, Morshuis M, Schibilsky D, Zimpfer D et al (2020) Transition from temporary to durable circulatory support systems. J Am Coll Cardiol 76(25):2956–2964

Wu MY, Lin PJ, Lee MY, Tsai FC, Chu JJ, Chang YS et al (2010) Using extracorporeal life support to resuscitate adult postcardiotomy cardiogenic shock: treatment strategies and predictors of short-term and midterm survival. Resuscitation 81(9):1111–1116

Masha L, Peerbhai S, Boone D, Shobayo F, Ghotra A, Akkanti B et al (2019) Yellow means caution: correlations between liver injury and mortality with the use of VA-ECMO. ASAIO J 65(8):812–818

Wang H, Chen C, Li B, Cheng Z, Wang Z, Huang X et al (2021) Nomogram to predict survival outcome of patients with veno-arterial extracorporeal membrane oxygenation after refractory cardiogenic shock. Postgrad Med 134:1–10

Chen WC, Huang KY, Yao CW, Wu CF, Liang SJ, Li CH et al (2016) The modified SAVE score: predicting survival using urgent veno-arterial extracorporeal membrane oxygenation within 24 hours of arrival at the emergency department. Crit Care 20(1):336

Miroz JP, Ben-Hamouda N, Bernini A, Romagnosi F, Bongiovanni F, Roumy A et al (2020) Neurological pupil index for early prognostication after venoarterial extracorporeal membrane oxygenation. Chest 157(5):1167–1174

Pabst D, Foy AJ, Peterson B, Soleimani B, Brehm CE (2018) Predicting survival in patients treated with extracorporeal membrane oxygenation after myocardial infarction. Crit Care Med 46(5):e359–e363

Distelmaier K, Roth C, Binder C, Schrutka L, Schreiber C, Hoffelner F et al (2016) Urinary output predicts survival in patients undergoing extracorporeal membrane oxygenation following cardiovascular surgery. Crit Care Med 44(3):531–538

Kim HS, Park KH, Ha SO, Lee SH, Choi HM, Kim SA et al (2020) Predictors of survival following veno-arterial extracorporeal membrane oxygenation in patients with acute myocardial infarction-related refractory cardiogenic shock: clinical and coronary angiographic factors. J Thorac Dis 12(5):2507–2516

Bartko PE, Wiedemann D, Schrutka L, Binder C, Santos-Gallego CG, Zuckermann A et al (2017) Impact of right ventricular performance in patients undergoing extracorporeal membrane oxygenation following cardiac surgery. J Am Heart Assoc. https://doi.org/10.1161/JAHA.116.005455

Muller G, Flecher E, Lebreton G, Luyt CE, Trouillet JL, Bréchot N et al (2016) The ENCOURAGE mortality risk score and analysis of long-term outcomes after VA-ECMO for acute myocardial infarction with cardiogenic shock. Intensive Care Med 42(3):370–378

Ye J (2021) Adverse prognostic factors for rescuing patients with acute myocardial infarction–induced cardiac arrest receiving percutaneous coronary intervention under extracorporeal membrane oxygenation. Hong Kong J Emerg Med. https://doi.org/10.1177/1024907921997614

Wu VC, Tsai HB, Yeh YC, Huang TM, Lin YF, Chou NK et al (2010) Patients supported by extracorporeal membrane oxygenation and acute dialysis: acute physiology and chronic health evaluation score in predicting hospital mortality. Artif Organs 34(10):828–835

Kim HS, Cheon DY, Ha SO, Han SJ, Kim HS, Lee SH et al (2018) Early changes in coagulation profiles and lactate levels in patients with septic shock undergoing extracorporeal membrane oxygenation. J Thorac Dis 10(3):1418–1430

Collins GS, Reitsma JB, Altman DG, Moons KG (2015) Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): the TRIPOD statement. BMJ 350:g7594

Nieboer D, Vergouwe Y, Ankerst DP, Roobol MJ, Steyerberg EW (2016) Improving prediction models with new markers: a comparison of updating strategies. BMC Med Res Methodol 16(1):128

Zhang K, Zhang S, Cui W, Hong Y, Zhang G, Zhang Z (2020) Development and validation of a sepsis mortality risk score for sepsis-3 patients in intensive care unit. Front Med (Lausanne) 7:609769

Groeger JS, Lemeshow S, Price K, Nierman DM, White P Jr, Klar J et al (1998) Multicenter outcome study of cancer patients admitted to the intensive care unit: a probability of mortality model. J Clin Oncol 16(2):761–770

Enger TB, Müller T (2018) Predictive tools in VVECMO patients: handicap or benefit for clinical practice? J Thorac Dis 10(3):1347–1351

Shah N, Said AS (2021) Extracorporeal support prognostication-time to move the goal posts? Membranes 11(7):537

Van Calster B, McLernon DJ, van Smeden M, Wynants L, Steyerberg EW (2019) Calibration: the Achilles heel of predictive analytics. BMC Med 17(1):230

Funding

Stichting Gezondheidszorg Spaarneland (SGS) Fund, Zilveren Kruis Achmea.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interest

None of the authors has conflicts of interest to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, which permits any non-commercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc/4.0/.

About this article

Cite this article

Pladet, L.C.A., Barten, J.M.M., Vernooij, L.M. et al. Prognostic models for mortality risk in patients requiring ECMO. Intensive Care Med 49, 131–141 (2023). https://doi.org/10.1007/s00134-022-06947-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00134-022-06947-z