Abstract

Medical practice is dogged by dogma. A conclusive evidence base is lacking for many aspects of patient management. Clinicians, therefore, rely upon engrained treatment strategies as the dogma seems to work, or at least is assumed to do so. Evidence is often distorted, overlooked or misapplied in the re-telling. However, it is incorporated as fact in textbooks, policies, guidelines and protocols with resource and medicolegal implications. We provide here four examples of medical dogma that underline the above points: loop diuretic treatment for acute heart failure; the effectiveness of heparin thromboprophylaxis; the rate of sodium correction for hyponatraemia; and the mantra of “each hour counts” for treating meningitis. It is notable that the underpinning evidence is largely unsupportive of these doctrines. We do not necessarily advocate change, but rather encourage critical reflection on current practices and the need for prospective studies.

Dogma often dictates the management of acutely ill patients; however, the underpinning evidence base is often slight at best leading to potential over-interpretation and misapplication. We encourage critical reappraisal of current practices.

Introduction

Dogma represents an opinion believed to be true or irrevocable. It originates from the Greek word ‘δόγμα’ (‘that which one thinks is true’), a cousin of ‘δόξα’ (doxa) which itself derives from the verb δοκεῖν (dokein) meaning ‘to seem’, ‘to think’, ‘to accept’. Plato considered doxa a belief unrelated to reason and framed it as the opponent of knowledge [1]. Aristotle, however, took the view that doxa’s value was in its practicality and common usage and was the first step in finding knowledge [2]. ‘Fact’—something known or proved to be true—derives from the Latin factum, ‘do’. The original sense was an act but later it described a crime (as in “after the fact”). The current sense of ‘fact’, indicating truth or reality, dates from the late sixteenth century.

Fact and dogma are frequently superimposed in medicine. Everyday medical practice revolves around ‘facts’ enshrined in textbooks and management guidelines, and then reiterated in subsequent versions. However, such ‘facts’ often lack adequate source verification. Not infrequently, the source is absent, misinterpreted, misapplied from other situations, or outdated. Yet ‘incestuous amplification’ (a reinforcement of ideas and convictions held by like-minded individuals) and the wish to remain within a perceived tried-and-trusted comfort zone tend to quash any iconoclastic challenge to these cherished beliefs.

Dogma plays an important part in current clinical practice as it offers long-established Aristotelian solutions that are considered to increase patient safety, optimise care, and standardise operating procedures. Strict adherence appeals as the dogma seems to work, or at least is assumed to do so. Treatment failures and covert side effects are conveniently ascribed to the patient, blaming frailty, comorbidity or illness severity rather than the intervention.

Reliance or, worse still, insistence, on dogma can deter clinicians from personalising care for the individual patient. The intervention may be appropriate for some but ineffective, wasteful or even harmful to others. It may suppress innovation for fear of error. Litigation may ensue as failure to follow an established dogma can be viewed as breach of duty, with arguments on causation often passing unchallenged for lack of appreciation of the weak or misquoted evidence base.

An excellent recent example of a successful challenge to dogma is the change in sepsis management guidelines that now recommend a more measured approach to commencing antibiotics [3,4,5]. In this article, we offer a further selection of long-standing management practices that are often taken as gospel but, in reality, the evidence base is non-existent, weak, outdated, and/or physiologically questionable. The practice is assumed to work when the patient improves or the prophylactically treated complication fails to materialise. Whether this is a consequence of the intervention itself, the body’s ability to withstand an unnecessary iatrogenic insult, or simply overkill is moot. We do not advocate wholesale change but hope that this article will remind the reader that all that glistens is not necessarily gold. We should also revisit long-established though scientifically dubious practices to confirm whether they remain valid in the current era, either in part or in totality.

Dogma: “Loop diuretics are needed to treat acute heart failure”

The recent European Society of Cardiology 2021 guideline describes intravenous diuretics as the ‘cornerstone of acute heart failure management’ albeit with a low (‘C grade’) level of evidence [6]. Clinical benefit is often ascribed to the diuresis that follows an intravenous furosemide bolus. The full urine bag is taken as proof positive of successful fluid removal from the lung that has led to symptomatic improvement. Yet this oft-stated paradigm can be readily challenged by conflicting observations. The diuretic effect only commences after 20–30 min and peaks after an hour or so, yet the patient has often improved markedly beforehand, an observation noted in 1966 [7]. The following year, significant clinical and biochemical improvement was reported within an hour of furosemide administration despite ongoing oliguria [8]. The lack of rapid radiological resolution of pulmonary oedema also argues against concurrent pulmonary water clearance. The X-ray does not ‘lag behind the patient’, as frequently cited, but simply demonstrates excess lung fluid still present despite symptomatic relief. It can take several weeks for clearance of pulmonary (interstitial and alveolar) oedema by lymphatic drainage and decreased ingress of fluid into the lung from raised hydrostatic pressures.

The benefit from intravenous furosemide arises from its rapid vasodilating effect [9]. Dikshit and colleagues described this change in cardiac loading conditions with a 27% fall in left ventricular filling pressure and a 52% increase in mean calf venous capacitance within 5–15 min following a furosemide bolus [10].

The downsides of furosemide should also be highlighted. Multiple studies report haemodynamic deterioration with falls in stroke volume and increases in heart rate, blood pressure and systemic vascular resistance [11,12,13]. This relates to arterial vasoconstriction induced by hypovolaemia and rapid activation of the neurohormonal axis [9, 13]. The corollary of hypovolaemia and compensatory rises in aldosterone and vasopressin is a decrease in renal blood flow, a fall in glomerular filtration and consequent oliguria. The knee-jerk response is to administer further, if not larger, doses of furosemide. Subsequent worsening in renal function is then blamed on the patient’s poor underlying cardiac status. A propensity-matched retrospective analysis of medical and cardiac patients admitted to the intensive care unit (ICU) and receiving loop diuretics within 24 h of ICU admission reported an association with acute kidney injury and electrolyte abnormalities, though this did not impact on other outcomes [14].

The rationale for using furosemide as first-line therapy in patients with acute heart failure who are not intravascularly volume overloaded is thus questionable. Is it not better to aim for normovolaemia and optimal vasodilation, both arterial and venous, to optimise both ventricular filling and the resistance against which the dysfunctional ventricle has to pump? Nitrates are pharmacologically better suited to achieve rapid resolution of symptoms but with improved haemodynamics. At low dose, they are predominantly venodilators but an arterial dilating effect occurs with increasing dose. In addition, appropriate and cautious fluid loading may be advantageous to correct any hypovolaemic contribution to a compromised circulatory status which may be unmasked by vasodilator therapy. Few prospective randomised studies have compared nitrates and furosemide in acute heart failure. One open-label trial conducted in mobile coronary care units in Israel randomised 110 patients to high-dose furosemide plus low-dose isosorbide dinitrate, or the converse [15]. This design was at the insistence of the ethics committee since both agents were deemed essential. The rather large composite outcome of death, myocardial infarction and requirement for mechanical ventilation was significantly reduced (from 46 to 25%) in the high-dose nitrate/low-dose furosemide group. Smaller studies comparing furosemide against isosorbide dinitrate [16, 17] did not report outcomes but demonstrated a superior haemodynamic profile with nitrates. Similar findings have been made with sodium nitroprusside [18] and sodium nitrite [19]. An open-label randomised trial of sustained vasodilation using sublingual and transdermal nitrates and oral vasodilators against standard-of-care, however, reported no benefit with either all-cause mortality or rehospitalisation rates [20]. Notably, there was no difference in blood pressure and no sparing of furosemide use, with a fifth of patients in both groups suffering worse renal function. Another open-label randomised multicentre trial, performed in the emergency department, compared a bundle of care including intravenous nitrate boluses against standard-of-care in very elderly patients [21]. No outcome differences were shown though it is noteworthy that 98% of the intervention group received diuretics (median [IQR] dose 40 [40–80] mg). The study that needs to be done, in our view, is a comparison—preferably blinded—of diuretics against vasodilators with avoidance of diuretics unless specifically indicated (e.g. chronic diuretic use, true volume overload).

Dogma: “Every ICU patient should get heparin thromboprophylaxis”

Unless contraindicated by coagulopathy or active bleeding, the use of heparin has become a standard-of-care to prevent thromboembolic events in ICU patients. The underlying rationale is reasonable in that critically ill patients are generally immobile for prolonged periods and many inflammatory conditions are associated with a prothrombotic state [22]. Yet, how strong is the evidence that thromboprophylaxis actually makes a difference in this patient population?

In a 2013 meta-analysis, Alhazzani and colleagues could only identify three studies published between 1982 and 2000 comparing either unfractionated (UFH) or low molecular weight (LMWH) heparin prophylaxis against placebo in medical-surgical ICU patients [23]. Worryingly, the largest of the three trials, comprising 73% of included patients and which reported the most positive outcomes, has only been published in abstract form [24]. The other two studies did not record any cases of pulmonary embolism in their control groups. No effect was seen on symptomatic deep-vein thrombosis, mortality or bleeding rates.

For acutely ill medical patients (mainly non-ICU), a Cochrane meta-analysis [25] reported a significant reduction in deep venous thrombosis (DVT) incidence, from 6.7% with either placebo or an unblinded control group to 3.8% with heparin thromboprophylaxis, but no significant impact on either non-fatal (0.5 vs. 0.2%) or fatal (0.3 vs. 0.2%) pulmonary embolism, nor on mortality. Major bleeding increased with heparin use (from 0.4 to 0.6%), although more so with unfractionated rather than LMW heparin.

The use of heparin thromboprophylaxis is more compelling in some surgical populations. In trauma patients, the heparin-treated group showed a significant reduction in DVT rates (8.7 to 4.3%) [26] and a non-significant reduction in pulmonary embolism rates (3.3 to 1.7%). Nonetheless, a meta-analysis of 3130 patients enrolled into eight randomised controlled trials (RCTs) for vascular surgery showed no significant difference in thromboembolic events nor bleeding complications [27]. A Cochrane Review including almost 3000 patients undergoing hip fracture repair reported a reduction in DVT events with any type of heparin, but no clear impact on fatal pulmonary embolisms or mortality, nor blood loss [28]. In patients with coronavirus disease 2019 (COVID-19) requiring intensive care, thromboembolic events, particularly pulmonary emboli, were fivefold higher compared to a matched non-COVID acute respiratory distress syndrome population [29]. Not unreasonably, heparin dosing was increased empirically to counter this risk. However, subsequent RCTs in ICU-level care patients showed no benefit of therapeutic dose over prophylactic (low or intermediate) dose heparin anticoagulation [30, 31], nor intermediate versus standard dose prophylaxis [32]. Of note, studies in non-ICU COVID patients reported a significant reduction in major thromboembolism events and death with therapeutic LMWH compared to low/intermediate dose LMWH anticoagulation [31, 33].

The other aspect of thromboprophylaxis that requires critical reappraisal is the evidence (or lack of) underpinning a fixed-dose subcutaneous LMWH regimen in ICU patients. This approach is advocated for ease of administration such that routine monitoring of anti-Factor Xa (anti-FXa) activity is not recommended. Nonetheless, what evidence does exist suggests many ICU patients are under-dosed, with pharmacodynamics in a critically ill population failing to mirror less sick ward cohorts. Priglinger et al. found significantly lower anti-FXa activity (e.g. 50% reduction in peak levels) after administration of enoxaparin 40 mg sc once daily in a critically ill ICU population with normal renal function compared to medical ward patients [34]. Robinson et al. also found 40 mg sc enoxaparin once daily was subtherapeutic in nearly 30% of their ICU patients, and 5% using 60 mg dosing [35]. In a mixed cohort of 219 ICU patients receiving enoxaparin thromboprophylaxis (40 mg sc non-obese, 60 mg obese, 20 mg renal failure), 30% were subtherapeutic in terms of anti-FXa activity and a further 30% just reached the therapeutic window [36]. In this study, the incidence of deep-vein thrombosis, adjudged by twice weekly ultrasound screening, was 2.7% yet all were asymptomatic. No pulmonary emboli were recognised. The question of once or twice daily dosing has also not been adequately addressed in the ICU setting [37].

Recent attention has been drawn to thromboprophylaxis in the COVID-19 population who are markedly pro-coagulopathic. Significantly lower mean anti-FXa activity was seen with 40 mg sc enoxaparin in COVID-19 patients in the ICU compared to those on general wards (0.1 vs. 0.25 IU/ml), with 95% of ICU patients failing to achieve targeted anti-FXa activity levels [38]. Similar observations were made by Stattin et al. [39].

In summary, the evidence base supporting heparin thromboprophylaxis in all ICU patients is lacking in terms of outcome benefit. Worryingly, recommended fixed dosing regimens are often subtherapeutic. Nonetheless, the incidence of symptomatic DVT and pulmonary embolism is low. Whether personalised targeted dosing does indeed provide an outcome difference, or whether alternatives such as direct oral anticoagulants (DOACs) are preferable, should be investigated in prospective studies.

Dogma: “A slow rate of sodium correction prevents central pontine myelinolysis”

Hyponatraemia (‘mild’ usually describes sodium values 130–135 mmol/l, ‘moderate’ between 120 and 130 mmol/l, and ‘severe’ < 120 mmol/l) is a common condition requiring hospitalisation. It is often due to excess sodium losses (including diuretic use) or excess water ingestion and, when lasting > 48 h, is deemed ‘chronic’ [40]. Risk of severe complications such as seizures and coma increases markedly with severe hyponatraemia. Management guidelines promote rapid correction by up to 5 mmol/l in the first hour for such severe complications, and thereafter gradual correction to avoid the feared complication of central pontine myelinolysis (perhaps more correctly termed osmotic demyelination syndrome as extrapontine myelinolysis is also reported). What constitutes ‘gradual’ remains a matter of conjecture, though more gradual correction is usually promoted for chronic hyponatraemia. National and expert panel recommendations are heterogeneous. For instance, European guidelines [41] suggest targeting a rise of 5–10 mmol/l over the first 24 h, and then 8 mmol/l/24 h thereafter for both acute and chronic hyponatraemia. An American guideline from 2007 [42] recommended a rise < 10–12 mmol/l over 24 h, and < 18 mmol/l over 48 h for chronic hyponatraemia. No correction rate was offered for acute hyponatraemia. This was addressed in their revised 2013 guideline [43] where a review of the ‘limited available literature’ suggested an initial rapid rise of 4–6 mmol/l in serum sodium was sufficient to reverse the most serious manifestations of acute hyponatraemia; thereafter they considered that the correction rate need not be restricted for true acute hyponatraemia, nor was relowering of excessive correction indicated. A recent Best Practice document advised use of 3% hypertonic saline for any acute onset (< 48 h) and/or symptomatic hyponatraemia, and cited variable recommendations for correction rate ranging from 3–6 to 8–12 mmol/l/day in the first 24 h. For chronic asymptomatic hyponatraemia, they recommended a correction rate < 8 mmol/l/day [44].

This divergence of views largely relates to a lack of randomised, controlled trial data assessing correction rates. Three important questions to address are, first, how strong is the evidence linking correction of hyponatraemia to the development of osmotic demyelination syndrome; second, how commonly does osmotic demyelination syndrome arise; and, third, how often does osmotic demyelination syndrome cause permanent neurological disability.

A 1990 paper [45] performed magnetic resonance imaging in 13 patients with a mean sodium level at baseline of 103.7 mmol/l (range 93–113). The three patients who developed pontine lesions had a rise in mean (SD) serum sodium from 97.3 (6.7) to 127.3 (5.1) mmol/l in the first 24 h, i.e. a correction rate of 1.25 (0.4) mmol/l/h. Of note, the ten patients not developing lesions still had a high correction rate of 0.74 (0.3) mmol/l/h, equating to 17.5 mmol/l over the first day. A 2015 systematic review characterised 158 cases with osmotic demyelination caused by hyponatraemia where sodium correction rates were described [46]. No difference in death nor degree of neurological outcome was seen, regardless of whether plasma sodium correction was slow (≤ 0.5 mmol/l/h) or rapid (> 0.5 mmol/l/h). Ayus and Arieff also failed to establish a correlation between patient outcome and either baseline level of sodium or the rate of correction [47]. In a retrospective analysis of 1490 patients with severe hyponatraemia (< 120 mmol/l), an overly rapid correction of serum sodium (defined as > 8 mmol/l in the first 24 h) occurred in 606 (41%) cases [48]. However, osmotic demyelination confirmed by MRI was described in only nine patients (0.6%) of whom one presented with demyelination at admission. Of the eight who developed subsequent demyelination, four had correction rates falling within the 10–12 mmol/l threshold recommended by the US [43] and European [41] guidelines.
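The arithmetic behind the rates and proportions quoted above can be checked with a short sketch. The function name and variable names are hypothetical and chosen only for illustration; the inputs are the group means and counts given in the text, so the results describe averages and not individual patients.

```python
def correction_rate(start_mmol_l, end_mmol_l, hours):
    """Mean change in serum sodium per hour (mmol/l/h)."""
    return (end_mmol_l - start_mmol_l) / hours

# Mean values quoted for the three patients who developed pontine lesions [45]
rate_lesions = correction_rate(97.3, 127.3, 24)

# Hourly rate quoted for the ten patients without lesions, scaled to 24 h.
# This gives roughly 17.8 mmol/l, close to the 17.5 mmol/l quoted in the text.
rise_no_lesions = 0.74 * 24

# Proportions from the retrospective analysis of 1490 patients [48]
pct_overcorrected = 606 / 1490 * 100
pct_demyelination = 9 / 1490 * 100

print(f"Rate with lesions: {rate_lesions:.2f} mmol/l/h")
print(f"24 h rise without lesions: {rise_no_lesions:.1f} mmol/l")
print(f"Over-rapid correction: {pct_overcorrected:.0f}%")
print(f"Osmotic demyelination: {pct_demyelination:.1f}%")
```

The contrast between the last two figures is the point of interest: over-rapid correction was common in that cohort, whereas confirmed osmotic demyelination was rare.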

Nearly a quarter of patients who develop osmotic demyelination do so irrespective of hyponatraemia [49]. Other risk factors identified were alcohol abuse (54.7% of cases), cirrhosis (17.2%), malnutrition (16.4%) and liver transplantation (12.9%). Of the eight patients cited above [48] who developed osmotic demyelination, five had beer potomania, four had chronic alcohol abuse, and four were malnourished.

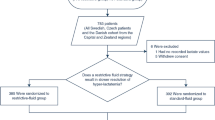

In terms of recovery, most studies report good functional outcomes either in the short or long term. A recent multicentre randomised trial of 178 patients with symptomatic hyponatraemia (sodium ≤ 125 mmol/l, 111 (62.5%) of whom had chronic hyponatraemia) compared rapid intermittent bolus vs slow continuous infusion of hypertonic saline [50]. Both strategies proved effective and safe though, even in this trial setting, 20.8% suffered from over-correction (increase in serum sodium > 12 mmol/l in 24 h or 18 mmol/l within 48 h). No patient, however, developed osmotic demyelination syndrome.

In summary, overly aggressive correction can predispose to osmotic demyelination [45]. However, all recent studies with more moderate correction rates (e.g. below 0.75 mmol/l/h) do not show any relationship with poor outcomes. Furthermore, osmotic demyelination is an uncommon event. A slower correction rate should perhaps be applied if other predisposing risk factors exist such as alcoholism and malnutrition. We should acknowledge the difficulty in precise hour-by-hour correction of sodium levels due to intrinsic patient variability and the challenge of balancing sodium and fluid input against losses (diuresis, vomiting, and diarrhoea).

Dogma: “Each hour counts for treating meningitis”

For acute bacterial meningitis, the current paradigm is to administer antibiotics as soon as possible, even commencing in the community. This dogma is applied to meningitis more than any other infectious condition. While we do not disagree with treatment urgency, the literature does not reflect this long-standing belief that “each hour counts”. Not surprisingly, there are no randomised controlled trials but 16 retrospective observational studies have sought an association between time-to-antibiotic from presentation (usually at hospital admission) and mortality (Supplement Table 1). Only one study [51] reported on duration of symptoms and trajectory of deterioration prior to admission and few reported on the incidence of long-term neurological sequelae. Overall, worse outcomes were associated with treatment delays post-hospital admission exceeding 4–6 h, usually related to delayed diagnosis due to non-classical presentations. Examples are shown in Fig. 1a [52] and 1b [53]. Two studies reported an increasing risk of an unfavourable outcome per hour delay. Koster-Rasmussen et al. [54] gave an odds ratio of 1.09 (95% confidence interval 1.01–1.19; p = 0.035) per hour delay for an unfavourable outcome, but did not report separately on mortality. Paradoxically, the effect was greater (OR 1.30 per hour, 95% CI 1.08–1.57, p < 0.01) when they excluded patients with a delay in antibiotic administration greater than 12 h. Glimaker et al. indicated a relative mortality increase of 8.8% (95% CI 3.4–14.4%; p < 0.01) per hour of delay [55]. However, the overall mortality rate was only 9.6% and so this equates to an absolute mortality increase of less than 1% per hour of delay. They provided a linear relationship extending to 14 h of delay; however, the mortality rate was curvilinear with little change over the first 4 h and with very wide and overlapping 95% confidence intervals (Fig. 1c). They reported that the risk of neurological sequelae was significantly associated with gender, age, aetiology, and mental status on admission, but made no mention of antibiotic delay.
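The conversion from the relative to the absolute mortality increase quoted above for Glimaker et al. [55] can be reproduced in a few lines. This is a minimal sketch that treats the overall mortality rate as a stand-in for baseline risk, which is a simplifying assumption; the function name is hypothetical.

```python
def absolute_increase_per_hour(baseline_mortality_pct, relative_increase_pct):
    """Convert a relative mortality increase into percentage points of absolute risk."""
    return baseline_mortality_pct * relative_increase_pct / 100

# Figures quoted in the text from Glimaker et al. [55]
overall_mortality_pct = 9.6   # assumption: used as the baseline risk
relative_increase_pct = 8.8   # relative increase per hour of delay

absolute_pct_points = absolute_increase_per_hour(
    overall_mortality_pct, relative_increase_pct
)
print(f"{absolute_pct_points:.2f} percentage points per hour of delay")
```

The same function can be applied to the bounds of the reported confidence interval to see how wide the plausible range of absolute effects is.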

Fig. 1 Mortality related to time-to-antibiotics in three studies. a Case fatality rate according to door-to-antibiotic time interval in adult acute bacterial meningitis. (Figure redrawn from Ref [52]. By permission of Oxford University Press). b Time to antibiotic therapy and in-hospital mortality in community-acquired bacterial meningitis and time-to-antibiotic therapy and unfavourable outcome at discharge. *P value < 0.05 compared with patients treated 0–2 h from admission. (Figure redrawn from Ref [53]. By permission of Creative Commons Attribution 4.0 International License http://creativecommons.org/licenses/by/4.0/). c Probability of death related to time from admission to start of antibiotic treatment with 95% confidence intervals. (Figure redrawn from Ref [55]. By permission of Oxford University Press)

An interesting statistical commentary by Perera [56] highlighted Simpson’s paradox when performing analyses on such data, whereby the direction of the estimated effect can even change from benefit to harm. He noted that adjustment must be made for disease severity at the point at which the decision is made to give antibiotics as disease progression and the lack of specific signs and symptoms early in the illness are two important sources of confounding. Unfortunately, such data are not generally available in retrospective database analyses though this is clearly demonstrated by Bodilsen et al. (Fig. 1b) where patients treated with prehospital antibiotics had a higher mortality compared to patients treated post-admission. Such confounding has been recognised in sepsis; patients presenting in shock are more likely to be identified sooner and aggressively treated though their baseline mortality risk will be much higher [57]. Conversely, prolonged treatment delay is more likely in patients presenting with vague, non-specific features [58]. After adjustment for this particular confounder, time-to-antibiotic was not associated with mortality (adjusted OR 1.01; 95% CI 0.94–1.08; p = 0.78).
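Simpson’s paradox is easier to appreciate with numbers. The sketch below uses hypothetical counts, invented purely for illustration and not taken from any cited study. It assumes that severely ill patients are mostly treated early and carry a high baseline mortality, while mildly ill patients are mostly treated late.

```python
# Hypothetical counts for illustration only (not from any cited study).
# Each entry is (deaths, patients).
data = {
    "severe": {"early": (24, 80), "late": (8, 20)},
    "mild": {"early": (1, 20), "late": (8, 80)},
}

def mortality(deaths, patients):
    """Crude mortality as a fraction."""
    return deaths / patients

def pooled(timing):
    """Mortality for one timing group, ignoring severity."""
    deaths = sum(data[stratum][timing][0] for stratum in data)
    patients = sum(data[stratum][timing][1] for stratum in data)
    return mortality(deaths, patients)

# Within each severity stratum, compare early against late treatment
for stratum, arms in data.items():
    early = mortality(*arms["early"])
    late = mortality(*arms["late"])
    print(f"{stratum}: early {early:.0%}, late {late:.0%}")

# Pooling across strata hides the difference in case mix
print(f"pooled: early {pooled('early'):.0%}, late {pooled('late'):.0%}")
```

With these invented numbers, early treatment has the lower mortality within each severity stratum, yet the pooled comparison makes early treatment look worse because the early group is dominated by severely ill patients. This is the reversal that adjustment for severity at the time of the treatment decision is meant to prevent.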

Age, mental status at admission and systemic disturbance prognosticate for poor outcomes [51, 55, 59, 60]. Aronin et al. [60] developed a prognostic model with low-, intermediate- and high-risk subgroups based on the absence (low-risk) or presence of hypotension, altered mental state and seizures either alone (intermediate-risk) or in combination (high-risk). Adverse clinical outcomes (death or persisting neurologic deficit) were more frequent in 42 patients progressing from low/intermediate to high risk before receiving antibiotics. No benefit was seen in 194 patients who remained at the same prognostic stage.

Delayed presentation in the community is also a poor prognosticator [51]. Of 286 patients with community-acquired bacterial meningitis, 125 had unfavourable outcomes. Pre-hospital delay in starting antibiotics from either onset of disease or onset of altered conscious level was a median one day longer in those suffering unfavourable outcomes. However, antibiotic delay post-admission did not impact on outcomes.

Finally, the long-running debate about delaying antibiotic administration until after computed tomography (CT) scan and lumbar puncture have been performed also remains unresolved. Glimaker et al. [55] demonstrated safety and outcome improvements with antibiotics given before lumbar puncture but pre-CT scanning, even in patients with moderate-severe impairment of mental status and/or new onset seizures. On the other hand, in their cohort of 1536 patients, Costerus et al. found no outcome impact in delaying antibiotics until post-CT scan [61].

In summary, in terms of outcome improvement, the urgency in treating meningitis appears to relate principally to severity of illness on presentation and a rapid trajectory of deterioration. This reflects findings for sepsis in general [3,4,5].

Conclusions

As we illustrate in the above examples, pros and cons of which are summarised in Fig. 2, adherence to dogma is commonplace in critical care practice. We could have offered multiple other examples where either the physiological rationale is questionable or the evidence base does not support either the practice or the rigidly held beliefs. For lack of space in this article, we have not performed a formal systematic review on each dogma but believe we have captured the most important publications, utilising both search engines and reference lists from recent papers. In addition, we have scrutinised the content of each paper in depth and not relied on the headline findings in the abstract. We fully acknowledge that our interpretations of the data could potentially be challenged but this will lead to healthy, open discussion.

Clearly, there are many instances where the principle underlying the dogma must be accepted. No one would argue against treating symptomatic hypoglycaemia or life-threatening hyperkalaemia but specific aspects could be challenged. For example, what level of acute hyperkalaemia is life threatening? What protection, if any and for how long, is offered by an intravenous injection of calcium given as a membrane stabiliser? The general perception is that calcium is a non-harmful intervention (or the possibility of harm is not even considered), but can we be sure?

It is easier to challenge established practice when the condition is frequently seen and high-quality evidence can be amassed. Updated iterations of cardiac arrest guidelines have reflected this increasing knowledge base though, notably, some components have been discarded for lack of proof of benefit, e.g. the use of atropine for asystole, or bicarbonate to reverse acidosis. Amassing evidence is more problematic in less commonly seen situations so there is an understandable reliance on ‘tried and trusted’ protocols, aspects of which may be superfluous or even harmful.

How do we move forward? As a good starting point, we should remind each other, and particularly trainees, that protocols and policies should not be blindly accepted. Expert opinion carries a not insignificant risk of academic bias. As part of medical training, statements and positions must not be taken at face value but the underpinning literature carefully scrutinised for balance, misinterpretation and important omissions. The above-cited studies highlighting frequent subtherapeutic dosing of fixed LMWH regimens in ICU patients are a good example.

Big data drawn from multiple centres could be applied to examining low-incidence conditions, comparing outcomes and complications where different interventions are applied. ‘Nudges’ could be deployed by computerised prescribing systems to propose suggested interventions where equipoise or uncertainty exists about specific treatments [62].

Fear of litigation can be assuaged by hospitals reclassifying their clinical management policies as guidelines or recommendations. Policies are interpreted by prosecution lawyers as rules of stone that do not allow practice deviation. Breach of duty is often claimed for over-rapid correction of hyponatraemia but, as illustrated above, the literature is not supportive of causation unless correction is excessive. Medicine is not one-size-fits-all and the clinician should be allowed to personalise care, albeit providing contemporaneous justification in the patient records for selecting a particular management strategy (Fig. 3).

References

Moss J (2020) Analytic Philosophy 61:193–217. https://doi.org/10.1111/phib.12192

Reeve CDC (2021) Hupolêpsis, doxa, and epistêmê in Aristotle. Ancient Philos Today 3:172–199. https://doi.org/10.3366/anph.2021.0051

Evans L, Rhodes A, Alhazzani W, Antonelli M, Coopersmith CM, French C, Machado FR, Mcintyre L, Ostermann M, Prescott HC, Schorr C, Simpson S, Wiersinga WJ, Alshamsi F, Angus DC, Arabi Y, Azevedo L, Beale R, Beilman G, Belley-Cote E, Burry L, Cecconi M, Centofanti J, Coz Yataco A, De Waele J, Dellinger RP, Doi K, Du B, Estenssoro E, Ferrer R, Gomersall C, Hodgson C, Møller MH, Iwashyna T, Jacob S, Kleinpell R, Klompas M, Koh Y, Kumar A, Kwizera A, Lobo S, Masur H, McGloughlin S, Mehta S, Mehta Y, Mer M, Nunnally M, Oczkowski S, Osborn T, Papathanassoglou E, Perner A, Puskarich M, Roberts J, Schweickert W, Seckel M, Sevransky J, Sprung CL, Welte T, Zimmerman J, Levy M (2021) Surviving sepsis campaign: international guidelines for management of sepsis and septic shock 2021. Intensive Care Med 47:1181–1247. https://doi.org/10.1007/s00134-021-06506-y

Yealy DM, Mohr NM, Shapiro NI, Venkatesh A, Jones AE, Self WH (2021) Early care of adults with suspected sepsis in the emergency department and out-of-hospital environment: a consensus-based task force report. Ann Emerg Med 78:1–19. https://doi.org/10.1016/j.annemergmed.2021.02.006

Rhee C, Chiotos K, Cosgrove SE, Heil EL, Kadri SS, Kalil AC, Gilbert DN, Masur H, Septimus EJ, Sweeney DA, Strich JR, Winslow DL, Klompas M (2021) Infectious Diseases Society of America Position Paper: Recommended Revisions to the National Severe Sepsis and Septic Shock Early Management Bundle (SEP-1) Sepsis Quality Measure. Clin Infect Dis 72:541–552. https://doi.org/10.1093/cid/ciaa059

McDonagh TA, Metra M, Adamo M, Gardner RS, Baumbach A, Böhm M, Burri H, Butler J, Čelutkienė J, Chioncel O, Cleland JGF, Coats AJS, Crespo-Leiro MG, Farmakis D, Gilard M, Heymans S, Hoes AW, Jaarsma T, Jankowska EA, Lainscak M, Lam CSP, Lyon AR, McMurray JJV, Mebazaa A, Mindham R, Muneretto C, Francesco Piepoli M, Price S, Rosano GMC, Ruschitzka F, Kathrine Skibelund A, ESC Scientific Document Group (2021) ESC Guidelines for the diagnosis and treatment of acute and chronic heart failure. Eur Heart J 42:3599–3726. https://doi.org/10.1093/eurheartj/ehab368

Weinstein H, Solis-Gil V (1966) The treatment of acute pulmonary edema with furosemide. Curr Ther Res Clin Exp 8:435–440

Biagi RW, Bapat BN (1967) Frusemide in acute pulmonary oedema. Lancet 1:849. https://doi.org/10.1016/s0140-6736(67)92818-8

Jhund PS, McMurray JJ, Davie AP (2000) The acute vascular effects of frusemide in heart failure. Br J Clin Pharmacol 50:9–13. https://doi.org/10.1046/j.1365-2125.2000.00219.x

Dikshit K, Vyden JK, Forrester JS, Chatterjee K, Prakash R, Swan HJ (1973) Renal and extrarenal hemodynamic effects of furosemide in congestive heart failure after acute myocardial infarction. N Engl J Med 288:1087–1090. https://doi.org/10.1056/NEJM197305242882102

Tattersfield AE, McNicol MW, Sillett RW (1972) Relationship between haemodynamic and respiratory function in patients with myocardial infarction and left ventricular failure. Clin Sci 42:751–768. https://doi.org/10.1042/cs0420751

Nelson GI, Ahuja RC, Silke B, Okoli RC, Hussain M, Taylor SH (1983) Haemodynamic effects of frusemide and its influence on repetitive rapid volume loading in acute myocardial infarction. Eur Heart J 4:706–711. https://doi.org/10.1093/oxfordjournals.eurheartj.a061382

Francis GS, Siegel RM, Goldsmith SR, Olivari MT, Levine TB, Cohn JN (1985) Acute vasoconstrictor response to intravenous furosemide in patients with chronic congestive heart failure. Activation of the neurohumoral axis. Ann Intern Med 103:1–6. https://doi.org/10.7326/0003-4819-103-1-1

McCoy IE, Montez-Rath ME, Chertow GM, Chang TI (2019) Estimated effects of early diuretic use in critical illness. Crit Care Explor 1:e0021. https://doi.org/10.1097/CCE.0000000000000021

Cotter G, Metzkor E, Kaluski E, Faigenberg Z, Miller R, Simovitz A, Shaham O, Marghitay D, Koren M, Blatt A, Moshkovitz Y, Zaidenstein R, Golik A (1998) Randomised trial of high-dose isosorbide dinitrate plus low-dose furosemide versus high-dose furosemide plus low-dose isosorbide dinitrate in severe pulmonary oedema. Lancet 351:389–393. https://doi.org/10.1016/S0140-6736(97)08417-1

Nelson GI, Silke B, Ahuja RC, Hussain M, Taylor SH (1983) Haemodynamic advantages of isosorbide dinitrate over frusemide in acute heart-failure following myocardial infarction. Lancet 1:730–733. https://doi.org/10.1016/s0140-6736(83)92025-1

Baitsch G, Bertel O, Burkart F, Steiner A, Vettiger K, Ritz R (1979) Hämodynamische Konsequenzen der Herzinsuffizienzbehandlung mit Furosemid beim frischen Myokardinfarkt [Hemodynamic consequences of the furosemide treatment of cardiac insufficiency in recent myocardial infarct]. Schweiz Med Wochenschr 109:1663–1666

Franciosa JA, Silverstein SR (1982) Hemodynamic effects of nitroprusside and furosemide in left ventricular failure. Clin Pharmacol Ther 32:62–69. https://doi.org/10.1038/clpt.1982.127

Ormerod JO, Arif S, Mukadam M, Evans JD, Beadle R, Fernandez BO, Bonser RS, Feelisch M, Madhani M, Frenneaux MP (2015) Short-term intravenous sodium nitrite infusion improves cardiac and pulmonary hemodynamics in heart failure patients. Circ Heart Fail 8:565–571. https://doi.org/10.1161/CIRCHEARTFAILURE.114.001716

Kozhuharov N, Goudev A, Flores D, Maeder MT, Walter J, Shrestha S et al (2019) Effect of a strategy of comprehensive vasodilation vs usual care on mortality and heart failure rehospitalization among patients with acute heart failure: the GALACTIC Randomized Clinical Trial. JAMA 322:2292–2302. https://doi.org/10.1001/jama.2019.18598

Freund Y, Cachanado M, Delannoy Q, Laribi S, Yordanov Y, Gorlicki J et al (2020) Effect of an emergency department care bundle on 30-day hospital discharge and survival among elderly patients with acute heart failure: the ELISABETH Randomized Clinical Trial. JAMA 324:1948–1956. https://doi.org/10.1001/jama.2020.19378

Minet C, Potton L, Bonadona A, Hamidfar-Roy R, Somohano CA, Lugosi M et al (2015) Venous thromboembolism in the ICU: main characteristics, diagnosis and thromboprophylaxis. Crit Care 19:287. https://doi.org/10.1186/s13054-015-1003-9

Alhazzani W, Lim W, Jaeschke RZ, Murad MH, Cade J, Cook DJ (2013) Heparin thromboprophylaxis in medical-surgical critically ill patients: a systematic review and meta-analysis of randomized trials. Crit Care Med 41:2088–2098. https://doi.org/10.1097/CCM.0b013e31828cf104

Kapoor M, Kupfer YY, Tessler S (1999) Subcutaneous heparin prophylaxis significantly reduces the incidence of venous thromboembolic events in the critically ill. Abstr Crit Care Med 27(Suppl):A69

Alikhan R, Bedenis R, Cohen AT (2014) Heparin for the prevention of venous thromboembolism in acutely ill medical patients (excluding stroke and myocardial infarction). Cochrane Database Syst Rev. https://doi.org/10.1002/14651858.CD003747.pub4

Barrera LM, Perel P, Ker K, Cirocchi R, Farinella E, Morales Uribe CH (2013) Thromboprophylaxis for trauma patients. Cochrane Database Syst Rev 3:CD008303. https://doi.org/10.1002/14651858.CD008303.pub2

Haykal T, Zayed Y, Kerbage J, Deliwala S, Long CA, Ortel TL (2021) Meta-analysis and systematic review of randomized controlled trials assessing the role of thromboprophylaxis after vascular surgery. J Vasc Surg Venous Lymphat Disord. https://doi.org/10.1016/j.jvsv.2021.08.019

Handoll HH, Farrar MJ, McBirnie J, Tytherleigh-Strong G, Milne AA, Gillespie WJ (2002) Heparin, low molecular weight heparin and physical methods for preventing deep vein thrombosis and pulmonary embolism following surgery for hip fractures. Cochrane Database Syst Rev 4:CD000305. https://doi.org/10.1002/14651858.CD000305

Helms J, Tacquard C, Severac F, Leonard-Lorant I, Ohana M, Delabranche X, Merdji H, Clere-Jehl R, Schenck M, Fagot Gandet F, Fafi-Kremer S, Castelain V, Schneider F, Grunebaum L, Anglés-Cano E, Sattler L, Mertes PM, Meziani F, CRICS TRIGGERSEP Group (2020) High risk of thrombosis in patients with severe SARS-CoV-2 infection: a multicenter prospective cohort study. Intensive Care Med 46:1089–1098. https://doi.org/10.1007/s00134-020-06062-x

Goligher EC, Bradbury CA, McVerry BJ, Lawler PR, Berger JS, Gong MN, Carrier M, Reynolds HR, Kumar A, Turgeon AF, Kornblith LZ, Kahn SR, Marshall JC, Kim KS, Houston BL, Derde LPG, Cushman M, Tritschler T, Angus DC, Godoy LC, McQuilten Z, Kirwan BA, Farkouh ME, Brooks MM, Lewis RJ, Berry LR, Lorenzi E, Gordon AC, Ahuja T, Al-Beidh F, Annane D, Arabi YM, Aryal D, Baumann Kreuziger L, Beane A, Bhimani Z, Bihari S, Billett HH, Bond L, Bonten M, Brunkhorst F, Buxton M, Buzgau A, Castellucci LA, Chekuri S, Chen JT, Cheng AC, Chkhikvadze T, Coiffard B, Contreras A, Costantini TW, de Brouwer S, Detry MA, Duggal A, Džavík V, Effron MB, Eng HF, Escobedo J, Estcourt LJ, Everett BM, Fergusson DA, Fitzgerald M, Fowler RA, Froess JD, Fu Z, Galanaud JP, Galen BT, Gandotra S, Girard TD, Goodman AL, Goossens H, Green C, Greenstein YY, Gross PL, Haniffa R, Hegde SM, Hendrickson CM, Higgins AM, Hindenburg AA, Hope AA, Horowitz JM, Horvat CM, Huang DT, Hudock K, Hunt BJ, Husain M, Hyzy RC, Jacobson JR, Jayakumar D, Keller NM, Khan A, Kim Y, Kindzelski A, King AJ, Knudson MM, Kornblith AE, Kutcher ME, Laffan MA, Lamontagne F, Le Gal G, Leeper CM, Leifer ES, Lim G, Gallego Lima F, Linstrum K, Litton E, Lopez-Sendon J, Lother SA, Marten N, Saud Marinez A, Martinez M, Mateos Garcia E, Mavromichalis S, McAuley DF, McDonald EG, McGlothlin A, McGuinness SP, Middeldorp S, Montgomery SK, Mouncey PR, Murthy S, Nair GB, Nair R, Nichol AD, Nicolau JC, Nunez-Garcia B, Park JJ, Park PK, Parke RL, Parker JC, Parnia S, Paul JD, Pompilio M, Quigley JG, Rosenson RS, Rost NS, Rowan K, Santos FO, Santos M, Santos MO, Satterwhite L, Saunders CT, Schreiber J, Schutgens REG, Seymour CW, Siegal DM, Silva DG Jr, Singhal AB, Slutsky AS, Solvason D, Stanworth SJ, Turner AM, van Bentum-Puijk W, van de Veerdonk FL, van Diepen S, Vazquez-Grande G, Wahid L, Wareham V, Widmer RJ, Wilson JG, Yuriditsky E, Zhong Y, Berry SM, McArthur CJ, Neal MD, Hochman JS, Webb SA, Zarychanski R, REMAP-CAP Investigators; ACTIV-4a Investigators; ATTACC Investigators (2021) Therapeutic anticoagulation with heparin in critically ill patients with Covid-19. N Engl J Med 385:777–789. https://doi.org/10.1056/NEJMoa2103417

Spyropoulos AC, Goldin M, Giannis D, Diab W, Wang J, Khanijo S, Mignatti A, Gianos E, Cohen M, Sharifova G, Lund JM, Tafur A, Lewis PA, Cohoon KP, Rahman H, Sison CP, Lesser ML, Ochani K, Agrawal N, Hsia J, Anderson VE, Bonaca M, Halperin JL, Weitz JI, HEP-COVID Investigators (2021) Efficacy and safety of therapeutic-dose heparin vs standard prophylactic or intermediate-dose heparins for thromboprophylaxis in high-risk hospitalized patients with COVID-19: the HEP-COVID Randomized Clinical Trial. JAMA Intern Med. https://doi.org/10.1001/jamainternmed.2021.6203

INSPIRATION Investigators, Sadeghipour P, Talasaz AH, Rashidi F, Sharif-Kashani B, Beigmohammadi MT, Farrokhpour M, Sezavar SH, Payandemehr P, Dabbagh A, Moghadam KG, Jamalkhani S, Khalili H, Yadollahzadeh M, Riahi T, Rezaeifar P, Tahamtan O, Matin S, Abedini A, Lookzadeh S, Rahmani H, Zoghi E, Mohammadi K, Sadeghipour P, Abri H, Tabrizi S, Mousavian SM, Shahmirzaei S, Bakhshandeh H, Amin A, Rafiee F, Baghizadeh E, Mohebbi B, Parhizgar SE, Aliannejad R, Eslami V, Kashefizadeh A, Kakavand H, Hosseini SH, Shafaghi S, Ghazi SF, Najafi A, Jimenez D, Gupta A, Madhavan MV, Sethi SS, Parikh SA, Monreal M, Hadavand N, Hajighasemi A, Maleki M, Sadeghian S, Piazza G, Kirtane AJ, Van Tassell BW, Dobesh PP, Stone GW, Lip GYH, Krumholz HM, Goldhaber SZ, Bikdeli B (2021) Effect of intermediate-dose vs standard-dose prophylactic anticoagulation on thrombotic events, extracorporeal membrane oxygenation treatment, or mortality among patients with COVID-19 admitted to the intensive care unit: the INSPIRATION Randomized Clinical Trial. JAMA 325:1620–1630. https://doi.org/10.1001/jama.2021.4152

Lawler PR, Goligher EC, Berger JS, Neal MD, McVerry BJ, Nicolau JC, Gong MN, Carrier M, Rosenson RS, Reynolds HR, Turgeon AF, Escobedo J, Huang DT, Bradbury CA, Houston BL, Kornblith LZ, Kumar A, Kahn SR, Cushman M, McQuilten Z, Slutsky AS, Kim KS, Gordon AC, Kirwan BA, Brooks MM, Higgins AM, Lewis RJ, Lorenzi E, Berry SM, Berry LR, Aday AW, Al-Beidh F, Annane D, Arabi YM, Aryal D, Baumann Kreuziger L, Beane A, Bhimani Z, Bihari S, Billett HH, Bond L, Bonten M, Brunkhorst F, Buxton M, Buzgau A, Castellucci LA, Chekuri S, Chen JT, Cheng AC, Chkhikvadze T, Coiffard B, Costantini TW, de Brouwer S, Derde LPG, Detry MA, Duggal A, Džavík V, Effron MB, Estcourt LJ, Everett BM, Fergusson DA, Fitzgerald M, Fowler RA, Galanaud JP, Galen BT, Gandotra S, García-Madrona S, Girard TD, Godoy LC, Goodman AL, Goossens H, Green C, Greenstein YY, Gross PL, Hamburg NM, Haniffa R, Hanna G, Hanna N, Hegde SM, Hendrickson CM, Hite RD, Hindenburg AA, Hope AA, Horowitz JM, Horvat CM, Hudock K, Hunt BJ, Husain M, Hyzy RC, Iyer VN, Jacobson JR, Jayakumar D, Keller NM, Khan A, Kim Y, Kindzelski AL, King AJ, Knudson MM, Kornblith AE, Krishnan V, Kutcher ME, Laffan MA, Lamontagne F, Le Gal G, Leeper CM, Leifer ES, Lim G, Lima FG, Linstrum K, Litton E, Lopez-Sendon J, Lopez-Sendon Moreno JL, Lother SA, Malhotra S, Marcos M, Saud Marinez A, Marshall JC, Marten N, Matthay MA, McAuley DF, McDonald EG, McGlothlin A, McGuinness SP, Middeldorp S, Montgomery SK, Moore SC, Morillo Guerrero R, Mouncey PR, Murthy S, Nair GB, Nair R, Nichol AD, Nunez-Garcia B, Pandey A, Park PK, Parke RL, Parker JC, Parnia S, Paul JD, Pérez González YS, Pompilio M, Prekker ME, Quigley JG, Rost NS, Rowan K, Santos FO, Santos M, Olombrada Santos M, Satterwhite L, Saunders CT, Schutgens REG, Seymour CW, Siegal DM, Silva DG Jr, Shankar-Hari M, Sheehan JP, Singhal AB, Solvason D, Stanworth SJ, Tritschler T, Turner AM, van Bentum-Puijk W, van de Veerdonk FL, van Diepen S, Vazquez-Grande G, Wahid L, Wareham V, Wells BJ, Widmer RJ, Wilson JG, Yuriditsky E, Zampieri FG, Angus DC, McArthur CJ, Webb SA, Farkouh ME, Hochman JS, Zarychanski R, ATTACC Investigators; ACTIV-4a Investigators; REMAP-CAP Investigators (2021) Therapeutic anticoagulation with heparin in noncritically ill patients with Covid-19. N Engl J Med 385:790–802. https://doi.org/10.1056/NEJMoa2105911

Priglinger U, Delle Karth G, Geppert A, Joukhadar C, Graf S, Berger R, Hülsmann M, Spitzauer S, Pabinger I, Heinz G (2003) Prophylactic anticoagulation with enoxaparin: Is the subcutaneous route appropriate in the critically ill? Crit Care Med 31:1405–1409. https://doi.org/10.1097/01.CCM.0000059725.60509.A0

Robinson S, Zincuk A, Strøm T, Larsen TB, Rasmussen B, Toft P (2010) Enoxaparin, effective dosage for intensive care patients: double-blinded, randomised clinical trial. Crit Care 14:R41. https://doi.org/10.1186/cc8924

Benes J, Skulec R, Jobanek J, Cerny V (2021) Fixed-dose enoxaparin provides efficient DVT prophylaxis in mixed ICU patients despite low anti-Xa levels: A prospective observational cohort study. Biomed Pap Med Fac Univ Palacky Olomouc Czech Repub. https://doi.org/10.5507/bp.2021.031

Cauchie P, Piagnerelli M (2021) What do we know about thromboprophylaxis and its monitoring in critically ill patients? Biomedicines 9:864. https://doi.org/10.3390/biomedicines9080864

Dutt T, Simcox D, Downey C, McLenaghan D, King C, Gautam M, Lane S, Burhan H (2020) Thromboprophylaxis in COVID-19: anti-FXa-the missing factor? Am J Respir Crit Care Med 202:455–457. https://doi.org/10.1164/rccm.202005-1654LE

Stattin K, Lipcsey M, Andersson H, Pontén E, Bülow Anderberg S, Gradin A, Larsson A, Lubenow N, von Seth M, Rubertsson S, Hultström M, Frithiof R (2020) Inadequate prophylactic effect of low-molecular weight heparin in critically ill COVID-19 patients. J Crit Care 60:249–252. https://doi.org/10.1016/j.jcrc.2020.08.026

Hoorn EJ, Zietse R (2017) Diagnosis and treatment of hyponatremia: compilation of the guidelines. J Am Soc Nephrol 28:1340–1349. https://doi.org/10.1681/ASN.2016101139

Spasovski G, Vanholder R, Allolio B, Annane D, Ball S, Bichet D, Decaux G, Fenske W, Hoorn EJ, Ichai C, Joannidis M, Soupart A, Zietse R, Haller M, van der Veer S, Van Biesen W, Nagler E (2014) Clinical practice guideline on diagnosis and treatment of hyponatraemia. Intensive Care Med 40:320–331. https://doi.org/10.1007/s00134-014-3210-2

Verbalis JG, Goldsmith SR, Greenberg A, Schrier RW, Sterns RH (2007) Hyponatremia treatment guidelines 2007: expert panel recommendations. Am J Med 120:S1-21. https://doi.org/10.1016/j.amjmed.2007.09.001

Verbalis JG, Goldsmith SR, Greenberg A, Korzelius C, Schrier RW, Sterns RH, Thompson CJ (2013) Diagnosis, evaluation, and treatment of hyponatremia: expert panel recommendations. Am J Med 126:S1-42. https://doi.org/10.1016/j.amjmed.2013.07.006

https://bestpractice.bmj.com/topics/en-gb/1214. Accessed 5 Dec 2021

Brunner JE, Redmond JM, Haggar AM, Kruger DF, Elias SB (1990) Central pontine myelinolysis and pontine lesions after rapid correction of hyponatremia: a prospective magnetic resonance imaging study. Ann Neurol 27:61–66. https://doi.org/10.1002/ana.410270110

Pham PM, Pham PA, Pham SV, Pham PT, Pham PT, Pham PC (2015) Correction of hyponatremia and osmotic demyelinating syndrome: have we neglected to think intracellularly? Clin Exp Nephrol 19:489–495. https://doi.org/10.1007/s10157-014-1021-y

Ayus JC, Arieff AI (1999) Chronic hyponatremic encephalopathy in postmenopausal women: association of therapies with morbidity and mortality. JAMA 281:2299–2304. https://doi.org/10.1001/jama.281.24.2299

George JC, Zafar W, Bucaloiu ID, Chang AR (2018) Risk factors and outcomes of rapid correction of severe hyponatremia. Clin J Am Soc Nephrol 13:984–992. https://doi.org/10.2215/CJN.13061117

Singh TD, Fugate JE, Rabinstein AA (2014) Central pontine and extrapontine myelinolysis: a systematic review. Eur J Neurol 21:1443–1450. https://doi.org/10.1111/ene.12571

Baek SH, Jo YH, Ahn S, Medina-Liabres K, Oh YK, Lee JB, Kim S (2021) Risk of overcorrection in rapid intermittent bolus vs slow continuous infusion therapies of hypertonic saline for patients with symptomatic hyponatremia: the SALSA Randomized Clinical Trial. JAMA Intern Med 181:81–92. https://doi.org/10.1001/jamainternmed.2020.5519

Lepur D, Barsić B (2007) Community-acquired bacterial meningitis in adults: antibiotic timing in disease course and outcome. Infection 35:225–231. https://doi.org/10.1007/s15010-007-6202-0

Proulx N, Fréchette D, Toye B, Chan J, Kravcik S (2005) Delays in the administration of antibiotics are associated with mortality from adult acute bacterial meningitis. QJM 98:291–298. https://doi.org/10.1093/qjmed/hci047

Bodilsen J, Dalager-Pedersen M, Schønheyder HC, Nielsen H (2016) Time to antibiotic therapy and outcome in bacterial meningitis: a Danish population-based cohort study. BMC Infect Dis 16:392. https://doi.org/10.1186/s12879-016-1711-z

Køster-Rasmussen R, Korshin A, Meyer CN (2008) Antibiotic treatment delay and outcome in acute bacterial meningitis. J Infect 57:449–454. https://doi.org/10.1016/j.jinf.2008.09.033

Glimåker M, Johansson B, Grindborg Ö, Bottai M, Lindquist L, Sjölin J (2015) Adult bacterial meningitis: earlier treatment and improved outcome following guideline revision promoting prompt lumbar puncture. Clin Infect Dis 60:1162–1169. https://doi.org/10.1093/cid/civ011

Perera R (2006) Statistics and death from meningococcal disease in children. BMJ 332:1297–1298. https://doi.org/10.1136/bmj.332.7553.1297

Seymour CW, Gesten F, Prescott HC, Friedrich ME, Iwashyna TJ, Phillips GS, Lemeshow S, Osborn T, Terry KM, Levy MM (2017) Time to treatment and mortality during mandated emergency care for sepsis. N Engl J Med 376:2235–2244. https://doi.org/10.1056/NEJMoa1703058

Filbin MR, Lynch J, Gillingham TD, Thorsen JE, Pasakarnis CL, Nepal S, Matsushima M, Rhee C, Heldt T, Reisner AT (2018) Presenting symptoms independently predict mortality in septic shock: importance of a previously unmeasured confounder. Crit Care Med 46:1592–1599. https://doi.org/10.1097/CCM.0000000000003260

Grindborg Ö, Naucler P, Sjölin J, Glimåker M (2015) Adult bacterial meningitis—a quality registry study: earlier treatment and favourable outcome if initial management by infectious diseases physicians. Clin Microbiol Infect 21:560–566. https://doi.org/10.1016/j.cmi.2015.02.023

Aronin SI, Peduzzi P, Quagliarello VJ (1998) Community-acquired bacterial meningitis: risk stratification for adverse clinical outcome and effect of antibiotic timing. Ann Intern Med 129:862–869. https://doi.org/10.7326/0003-4819-129-11_part_1-199812010-00004

Costerus JM, Brouwer MC, Bijlsma MW, Tanck MW, van der Ende A, van de Beek D (2016) Impact of an evidence-based guideline on the management of community-acquired bacterial meningitis: a prospective cohort study. Clin Microbiol Infect 22:928–933. https://doi.org/10.1016/j.cmi.2016.07.026

Chen Y, Harris S, Rogers Y, Ahmad T, Asselbergs FW (2022) Nudging within learning health systems: next generation decision support to improve cardiovascular care. Eur Heart J 9:ehac030. https://doi.org/10.1093/eurheartj/ehac030

Acknowledgement

We thank Conrad Mattli for his assistance with Greek philosophy citations.

Funding

None.

Author information

Contributions

DAH and MS conceived the idea to write this manuscript. DAH did the initial literature search and first draft of the manuscript. DAH and MS finalised the manuscript and approved the final version.

Ethics declarations

Conflicts of interest

None.

Ethics approval

Not applicable.

About this article

Cite this article

Hofmaenner, D.A., Singer, M. Challenging management dogma where evidence is non-existent, weak or outdated. Intensive Care Med 48, 548–558 (2022). https://doi.org/10.1007/s00134-022-06659-4

DOI: https://doi.org/10.1007/s00134-022-06659-4