Abstract

We prove new bounds on the dimensions of distance sets and pinned distance sets of planar sets. Among other results, we show that if \(A\subset {\mathbb {R}}^2\) is a Borel set of Hausdorff dimension \(s>1\), then its distance set has Hausdorff dimension at least \(37/54\approx 0.685\). Moreover, if \(s\in (1,3/2]\), then outside a set of exceptional points y of Hausdorff dimension at most 1, the pinned distance set \(\{ |x-y|:x\in A\}\) has Hausdorff dimension \(\ge \tfrac{2}{3}s\) and packing dimension at least \( \tfrac{1}{4}(1+s+\sqrt{3s(2-s)}) \ge 0.933\). These estimates improve upon the existing ones by Bourgain, Wolff, Peres–Schlag and Iosevich–Liu for sets of Hausdorff dimension \(>1\). Our proof uses a multi-scale decomposition of measures in which, unlike previous works, we are able to choose the scales subject to certain constraints. This leads to a combinatorial problem, which is a key new ingredient of our approach, and which we solve completely by optimizing a certain variation of Lipschitz functions.


1 Introduction and Statement of Results

1.1 Introduction.

Given \(A\subset {\mathbb {R}}^d\), its distance set is \(\Delta (A)=\{|x-y|:x,y\in A\}\). Falconer [Fal85] pioneered the study of the relationship between the Hausdorff dimensions of A and \(\Delta (A)\). He proved that if \(d\ge 2\) and \(A\subset {\mathbb {R}}^d\) is a Borel (or even analytic) set then \({{\,\mathrm{dim_H}\,}}(\Delta (A)) \ge \min ({{\,\mathrm{dim_H}\,}}(A)-\tfrac{1}{2}(d-1),1)\), where \({{\,\mathrm{dim_H}\,}}\) stands for Hausdorff dimension. Falconer also constructed compact sets \(A\subset {\mathbb {R}}^d\) (based on lattices) of any Hausdorff dimension such that \({{\,\mathrm{dim_H}\,}}(\Delta (A)) \le \min (2{{\,\mathrm{dim_H}\,}}(A)/d,1)\). Although it is not explicitly stated in [Fal85], the conjecture that these lattice constructions are extremal, in the sense that one should have \({{\,\mathrm{dim_H}\,}}(\Delta (A))=1\) if \({{\,\mathrm{dim_H}\,}}(A)\ge d/2\), has become known as the Falconer distance set problem.

Falconer’s problem is a continuous version of the celebrated P. Erdős distinct distances problem [Erd46], asserting (in the plane) that if \(|A|=N\), \(A\subset {\mathbb {R}}^2\), then \(|\Delta (A)|\ge c N/\sqrt{\log N}\). Guth and Katz [GN15] (building on work of Elekes and Sharir [ES11]) famously solved this problem, up to logarithmic factors, by showing that \(|\Delta (A)| \ge c N/\log N\). However, the approach of Guth and Katz and, indeed, all previous methods developed to tackle Erdős’ problem do not appear to be able to yield progress on Falconer’s problem.

From now on, we focus on the case \(d=2\), which is the first non-trivial case, the best understood, and the focus of this article. Wolff [Wol99], based on a method of Mattila [Mat87] and extending ideas of J. Bourgain [Bou94], proved that if \(A\subset {\mathbb {R}}^2\) is a Borel set with \({{\,\mathrm{dim_H}\,}}(A)\ge 4/3\), then \({{\,\mathrm{dim_H}\,}}(\Delta (A))=1\). In fact, he proved that \({{\,\mathrm{dim_H}\,}}(A)>4/3\) ensures that \(\Delta (A)\) has positive length, and established the more general dimension formula

\[{{\,\mathrm{dim_H}\,}}(\Delta (A))\ge \min \left(\tfrac{3}{2}{{\,\mathrm{dim_H}\,}}(A)-1,1\right) \tag{1.1}\]

whenever \({{\,\mathrm{dim_H}\,}}(A)>1\). The method developed by Mattila and Wolff is strongly Fourier-analytic, depending on difficult estimates for the decay of circular averages of the Fourier transform of measures.

Later Bourgain [Bou03], crucially relying on earlier work of Katz and Tao [KT01], proved that if \(A\subset {\mathbb {R}}^2\) satisfies \({{\,\mathrm{dim_H}\,}}(A)\ge 1\), then

\[{{\,\mathrm{dim_H}\,}}(\Delta (A))\ge \tfrac{1}{2}+\delta , \tag{1.2}\]

where \(\delta >0\) is a universal constant. Although non-explicit, it is clear from the proof that the value of \(\delta \) one would get is extremely small. The method of Katz–Tao and Bourgain is based on additive combinatorics, and it seems difficult for arguments of this type to yield reasonable values of \(\delta \).

A related problem concerns the dimensions of pinned distance sets

\[\Delta _y(A)=\{|x-y|:x\in A\},\qquad y\in {\mathbb {R}}^2.\]

Peres and Schlag [PS00, Theorem 8.3] proved that if \(A\subset {\mathbb {R}}^2\) is a Borel set with \({{\,\mathrm{dim_H}\,}}(A)=s\), then for all \(0<t\le \min (s,1)\),

\[{{\,\mathrm{dim_H}\,}}\{y\in {\mathbb {R}}^2:{{\,\mathrm{dim_H}\,}}(\Delta _y A)<t\}\le 2+t-s. \tag{1.3}\]

Recently, Iosevich and Liu [IL17] proved that (1.3) remains true with \(3+3t-3s\) in the right-hand side, which is an improvement in part of the parameter region. Both results imply that if \({{\,\mathrm{dim_H}\,}}(A)>3/2\), then there is \(y\in A\) such that \({{\,\mathrm{dim_H}\,}}(\Delta _y A)=1\), and it is unknown whether 3/2 can be replaced by a smaller number. We remark that the results of both [PS00] and [IL17] extend to higher dimensions.
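The threshold 3/2 and the comparison between the two bounds reduce to elementary arithmetic. The computation below assumes that the Peres–Schlag bound (1.3) on the exceptional set reads \(2+t-s\), and uses the Iosevich–Liu variant \(3+3t-3s\). Since an exceptional set of dimension \(<s={{\,\mathrm{dim_H}\,}}(A)\) cannot contain A, any such bound with \(t=1\) yields a pin \(y\in A\) with \({{\,\mathrm{dim_H}\,}}(\Delta _y A)=1\).

```latex
\[
\begin{aligned}
&t=1:\quad 2+1-s<s \iff s>\tfrac{3}{2},\qquad 3+3-3s<s \iff s>\tfrac{3}{2};\\
&\text{Iosevich--Liu improves on Peres--Schlag:}\quad 3+3t-3s<2+t-s \iff t<s-\tfrac{1}{2}.
\end{aligned}
\]
```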

These were the best known results towards Falconer’s problem in the plane for general sets prior to this article. For some special classes of sets, better results are known. In particular, the second author proved in [Shm17] that if \(A\subset {\mathbb {R}}^2\) is a Borel set of equal Hausdorff and packing dimension, and this value is \(>1\), then \({{\,\mathrm{dim_H}\,}}(\Delta _y(A))=1\) for all y outside of a set of exceptions of Hausdorff dimension at most 1, and in particular for many \(y\in A\). This verifies Falconer’s conjecture for this type of sets, outside of the endpoint. We remark that Orponen [Orp17b] and the second author [Shm17b] had previously proved weaker results of the same kind. See also [Mat87, IL16] for other results on the distance sets of special classes of sets.

1.2 Main results.

In this article we prove new lower bounds on the dimensions of (pinned) distance sets, which in particular greatly improve the best previously known estimates when \({{\,\mathrm{dim_H}\,}}(A)=1+\delta \), \(\delta >0\) small.

Theorem 1.1

If A is a Borel subset of \({\mathbb {R}}^2\) with \({{\,\mathrm{dim_H}\,}}A=s\), then

In particular, if \(s>1\), then one can find many \(y\in A\) such that

We remark that we get better bounds for the dimension of the full distance set, see Theorem 1.4 below.

The last claim in Theorem 1.1 improves the previously known bounds for the dimensions of pinned distance sets \(\Delta _y(A)\) with \(y\in A\) for all \(s\in (1,3/2]\). The bound (1.4) also improves upon (1.3) (and the variant of Iosevich and Liu) in large regions of parameter space, and in particular for \(t=\min (\tfrac{2}{3}s,1)\) and all \(s\in (3/5,5/3)\).

Theorem 1.1 is a special case of a more general result that takes into account the Hausdorff and also the packing dimension of A. We refer to [Fal14, §3.5] for the definition and main properties of packing dimension \({{\,\mathrm{dim_P}\,}}\), and simply note that it satisfies \({{\,\mathrm{dim_H}\,}}(A)\le {{\,\mathrm{dim_P}\,}}(A)\le {{\,\mathrm{{\overline{\dim }}_B}\,}}(A)\), where \({{\,\mathrm{{\overline{\dim }}_B}\,}}\) denotes the upper box-counting (or Minkowski) dimension. For our method, the worst case is that in which A has maximal packing dimension 2, and we get better bounds for the distance set under the assumption that the packing dimension is smaller:

Theorem 1.2

Let

Given \(0< s\le u \le 2\), the following holds: if A is a Borel subset of \({\mathbb {R}}^2\) with \({{\,\mathrm{dim_H}\,}}A\ge s\) and \({{\,\mathrm{dim_P}\,}}A \le u\), then

In particular, if \(s>1\) then there are many \(y\in A\) such that

and hence if \({{\,\mathrm{dim_H}\,}}(A)>1\) and \({{\,\mathrm{dim_P}\,}}A\le 2{{\,\mathrm{dim_H}\,}}A-1\), then \({{\,\mathrm{dim_H}\,}}(\Delta _y(A))=1\) for many \(y\in A\).

Note that Theorem 1.1 follows immediately by taking \(u=2\). A simple calculation shows that if \(0\le s\le u \le 2\) and \(s<2\), then

We remark that, taking \(u=s\), this theorem recovers the main result of [Shm17] mentioned above, namely that if \({{\,\mathrm{dim_H}\,}}(A)={{\,\mathrm{dim_P}\,}}(A)>1\), then \({{\,\mathrm{dim_H}\,}}(\Delta _y A)=1\) for many \(y\in A\). On the other hand, it was known from (1.3) that if \({{\,\mathrm{dim_H}\,}}(A)>3/2\) then there is \(y\in A\) such that \({{\,\mathrm{dim_H}\,}}(\Delta _y A)=1\). The last claim in Theorem 1.2 can be seen as interpolating between these two situations, and hence provides a new, more general, geometric condition under which Falconer’s conjecture is known to hold.

When \({{\,\mathrm{dim_H}\,}}(A)>1\), we are able to get much better lower bounds for the packing dimension of the pinned distance sets:

Theorem 1.3

Let A be a Borel subset of \({\mathbb {R}}^2\) with \(s={{\,\mathrm{dim_H}\,}}(A)\in (1,3/2)\). Then

In particular, there is \(y\in A\) such that

We recall that since upper box-counting dimension is at least as large as packing dimension, the above theorem also holds for upper box-counting dimension. Even though Falconer’s conjecture is about the Hausdorff dimension of the distance set, this result presents further evidence towards its validity.

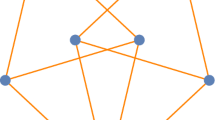

The three solid graphs show, from top to bottom: (1) the lower bound given by Theorem 1.3 for \({{\,\mathrm{dim_P}\,}}(\Delta _y(A))\) for y outside of a one dimensional set of y, (2) the lower bound for \({{\,\mathrm{dim_H}\,}}(\Delta (A))\) given by Theorem 1.4, (3) the lower bound given by Theorem 1.1 for \({{\,\mathrm{dim_H}\,}}(\Delta _y(A))\) outside of a one dimensional set of y. The dashed line is Wolff’s lower bound for \({{\,\mathrm{dim_H}\,}}(\Delta (A))\) (which was the previously known best bound, outside of a tiny interval to the right of 1). In all cases the variable is \({{\,\mathrm{dim_H}\,}}(A)\).

Finally, as anticipated above, we get a better bound for the dimension of the full distance set when \({{\,\mathrm{dim_H}\,}}(A)\) is slightly larger than 1:

Theorem 1.4

If \(A\subset {\mathbb {R}}^2\) is a Borel set with \({{\,\mathrm{dim_H}\,}}(A)=s\in (1,4/3)\), then

A calculation shows that this indeed improves upon Wolff’s bound (1.1) for the dimension of the full distance set for \(s\in (1,1.21931\ldots )\) (and upon Bourgain’s bound (1.2) for all \(s>1\)). We remark that this theorem is obtained by combining the idea of the proof of Theorem 1.2 with a known effective variant of Wolff’s bound (1.1). Although achieving this combination takes quite a bit of work, Theorem 1.2 should perhaps be considered the most basic result, since its proof is shorter and already contains most of the main ideas, and the improvement given by Theorem 1.4 is relatively modest. Note also that already applying Theorem 1.1 for the full distance set improves upon (1.1) for \(s\in (1,6/5)\). See Figure 1 for a comparison of the lower bounds from Theorems 1.1, 1.3 and 1.4 and Wolff’s lower bound.
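The threshold 6/5 can be checked directly. Since \(\Delta _y(A)\subset \Delta (A)\) for \(y\in A\), the pinned bound \(\tfrac{2}{3}s\) from Theorem 1.1 (valid for many \(y\in A\) when \(s\in (1,3/2]\), as stated in the abstract) is also a lower bound for the full distance set. Assuming Wolff’s bound (1.1) takes the form \(\min (\tfrac{3}{2}s-1,1)\), which equals \(\tfrac{3}{2}s-1\) for \(s\in (1,4/3]\), the comparison is:

```latex
\[
\tfrac{2}{3}s>\tfrac{3}{2}s-1
\iff 1>\left(\tfrac{3}{2}-\tfrac{2}{3}\right)s=\tfrac{5}{6}s
\iff s<\tfrac{6}{5}.
\]
```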

After this paper was made public, Liu [Liu18] posted a preprint extending Wolff’s result to pinned distance sets. In particular, he shows that if \(A\subset {\mathbb {R}}^2\) is a Borel set with \({{\,\mathrm{dim_H}\,}}(A)>4/3\), then \(\Delta _x(A)\) has positive Lebesgue measure for some \(x\in A\) (with bounds on the dimension of the exceptional set). This is stronger than our Theorem 1.1 for \(s>4/3\) (other than the exceptional set being larger).

1.3 Strategy of proof.

Our approach is completely different from those of Wolff, Bourgain, Peres–Schlag and Iosevich–Liu. Rather, it can be seen as a continuation of the ideas successively developed in [Orp17b, Shm17b, Shm17] to attack the distance set problem for sets with certain regularity. Thus, one of the main points of this paper is to extend the strategy of these papers so that it can be applied to general sets.

At the core of our method is a lower box-counting estimate for pinned distance sets \(\Delta _y A\) in terms of a multi-scale decomposition of A or, rather, a Frostman measure \(\mu \) supported on A. See Section 4 for precise statements. A key aspect of these estimates is that they recover a global lower box-counting estimate for \(\Delta _y A\) from bounds on local, discretized and linearized estimates for the pinned distance measures \(\Delta _y \mu \).

The general philosophy of obtaining lower bounds for the dimension of projected sets and measures in terms of multi-scale averages of local projections underlies a large number of results in fractal geometry from the last few years; see e.g. [Hoc12, Hoc14] and references therein. The insight that this approach can also be used to study distance sets is due to Orponen [Orp12, Orp17b].

Up until the paper [Shm17], the scales in the multi-scale decomposition behind all the variants of the method described above were of the form \(2^{-N j}\) for some fixed N. One of the innovations of [Shm17] was to modify the method so that it could handle also scales of the form \(2^{-(1+\varepsilon )^j}\) (the point being that \((1+\varepsilon )^j\) is exponential in j, rather than linear). Although this was flexible enough to handle sets of equal Hausdorff and packing dimension (as opposed to Ahlfors-regular sets as in [Orp17b, Shm17b]), it was still too restrictive for dealing with general sets.

One of the main innovations of this paper is that we are able to work with scales \(2^{-M_j}\) where the \(M_j\) only need to satisfy \(\tau M_j \le M_{j+1}-M_j\le M_j+T\) (where \(\tau >0, T\in {\mathbb {N}}\) are fixed parameters). This provides a major degree of flexibility. In particular, a crucial point is that we are able to pick the sequence \((M_j)\) depending on the set A (or the Frostman measure \(\mu \)), while in all previous works the scales in the multi-scale decomposition were basically fixed. See Proposition 4.4. This leads us to the combinatorial problem of optimizing the choice of \((M_j)\) for each measure \(\mu \). We solve this problem completely, up to negligible error terms, in Section 5.
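The constraint on the scales is easy to test mechanically. The sketch below (the helper names `is_admissible` and `geometric_scales` are hypothetical, not from the paper) checks \(\tau M_j\le M_{j+1}-M_j\le M_j+T\) for a finite candidate sequence, and builds one admissible sequence of geometric type, \(M_{j+1}=\lceil (1+\tau )M_j\rceil \), in the spirit of the scales \(2^{-(1+\varepsilon )^j}\) mentioned above. It only illustrates the constraint; choosing \((M_j)\) adapted to a given measure is the optimization problem of Section 5 and is not attempted here.

```python
import math
from typing import List


def is_admissible(M: List[int], tau: float, T: int) -> bool:
    """Return True if tau*M_j <= M_{j+1} - M_j <= M_j + T for every consecutive pair."""
    for m, m_next in zip(M, M[1:]):
        gap = m_next - m
        if not (tau * m <= gap <= m + T):
            return False
    return True


def geometric_scales(M0: int, tau: float, n: int) -> List[int]:
    """Hypothetical helper: n terms of M_{j+1} = ceil((1 + tau) * M_j), starting at M0 >= 1."""
    M = [M0]
    for _ in range(n - 1):
        M.append(math.ceil((1 + tau) * M[-1]))
    return M
```

For \(0<\tau \le 1\) and \(T\ge 1\) the geometric choice is always admissible: \(\lceil (1+\tau )M_j\rceil -M_j\ge \tau M_j\), and \(\lceil (1+\tau )M_j\rceil \le 2M_j\) because \(2M_j\) is an integer not smaller than \((1+\tau )M_j\).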

In fact, we deduce the combinatorial statements we need from several statements about the variation of Lipschitz functions, which might be of independent interest. More precisely, given a 1-Lipschitz function \(f:[0,a]\rightarrow {\mathbb {R}}\) satisfying certain additional assumptions, we seek to minimize

where \((a_n)_{n=0}^\infty \) is a strictly decreasing sequence tending to 0 with \(a=a_0\) and \(a_{n} \le 2 a_{n+1}\). Conversely, we also study the structure of functions f for which these sums are (for some sequence \((a_i)\)) close to the minimum possible value. We underline that this part of the method is completely new as the combinatorial problem does not arise for fixed multi-scale decompositions.

Another obstacle to dealing with arbitrary sets and measures is that energies of measures (which play a key role throughout) do not have a nice multi-scale decomposition in general. We deal with this by decomposing a general measure supported on \([0,1)^2\) as a superposition of measures with a regular Cantor structure, plus a small error term: see Corollary 3.5. This step is an adaptation of some ideas of Bourgain we learned from [Bou10]. After overcoming some technical difficulties, this reduces our study to regular measures, for which a suitable multi-scale expression of the energy does exist, see Lemma 3.3.

The strategy just discussed is behind the proofs of Theorems 1.2, 1.3 and 1.4. However (as briefly indicated above), the proof of Theorem 1.4 is based on merging these ideas with a more quantitative version of Wolff’s result that if \({{\,\mathrm{dim_H}\,}}(A)\ge 4/3\) then \({{\,\mathrm{dim_H}\,}}(\Delta (A))=1\), see Theorem 6.4 below. The fact that one can improve upon Theorem 1.1 (for the full distance set) is based on the observation that for some sets \(A\subset {\mathbb {R}}^2\) of Hausdorff dimension \(s>1\) for which the method of the proof of Theorem 1.1 cannot give anything better than \({{\,\mathrm{dim_H}\,}}(\Delta (A))\ge 2s/3\), the quantitative version of Wolff’s theorem can give a much better bound. The fact that these two methods are based on totally different techniques and also have different “enemies” that one must overcome suggests that neither of them (even in combination, as we do here) provides a definitive line of attack on Falconer’s problem.

1.4 Sets of directions, and the case of dimension 1.

Although Theorem 1.1 does provide new information on the pinned distance sets \(\Delta _y A\) when \({{\,\mathrm{dim_H}\,}}A=1\), it gives no information whatsoever on \({{\,\mathrm{dim_H}\,}}(\Delta (A))\) in this case. There are some well-known “enemies” that one must handle in order to improve upon the easy bound \({{\,\mathrm{dim_H}\,}}(\Delta (A))\ge 1/2\) when \({{\,\mathrm{dim_H}\,}}A=1\). One is that the corresponding fact is false over the complex numbers: \({\mathbb {R}}^2\) is a subset of \({\mathbb {C}}^2\) of half the dimension of the ambient space for which the (squared) distance set

\[\{(x_1-y_1)^2+(x_2-y_2)^2:(x_1,x_2),(y_1,y_2)\in {\mathbb {R}}^2\}=[0,\infty )\subset {\mathbb {C}}\]

also has half the dimension of the ambient space \({\mathbb {C}}\). Hence any improvement over 1/2 in the real case must take into account the order structure of \({\mathbb {R}}\). The other obstacle is a well-known counterexample to a naive discretization of the problem: see [KT01, Eq. (2) and Figure 1]. These enemies do not arise when \({{\,\mathrm{dim_H}\,}}(A)>1\). Despite these conceptual differences, we underline that, with the exception of the work of Katz and Tao [KT01] underpinning Bourgain’s bound (1.2), none of the other methods developed so far makes any distinction between the cases \({{\,\mathrm{dim_H}\,}}(A)=1\) and \({{\,\mathrm{dim_H}\,}}(A)=1+\delta \).

From the point of view of our strategy, the key significance of the assumption \({{\,\mathrm{dim_H}\,}}(A)>1\) is that in this case the set of directions determined by points in A has positive Lebesgue measure. In fact, we need a far more quantitative “pinned” version of this fact, which is due to Orponen [Orp17], improving upon a related result by Mattila and Orponen [MO16] (see Proposition 3.11 below). However, even the fact that the direction set has positive measure clearly fails if \({{\,\mathrm{dim_H}\,}}A=1\) and A is contained in a line. Since \({{\,\mathrm{dim_H}\,}}(\Delta _y A) = {{\,\mathrm{dim_H}\,}}(A)\) trivially when A is contained in a line, this does not rule out an extension of our approach to the case \({{\,\mathrm{dim_H}\,}}(A)=1\). However, this would require some variant of Proposition 3.11 when both s, u are slightly less than 1, under a suitable hypothesis of non-concentration on lines, and this appears to be very hard. In [Orp17, Corollary 1.8], Orponen also proved that the direction set of a planar set of Hausdorff dimension 1 which is not contained in a line has Hausdorff dimension \(\ge 1/2\), but this is very far from positive measure, let alone from anything resembling Proposition 3.11.

To understand why directions arise naturally, we recall that our whole approach is based on bounding the size of pinned distance sets in terms of a multi-scale average of local linearized pinned distance measures. The derivative of the distance function \(x\mapsto |x-y|\) is precisely the direction spanned by x and y. Thus we are led to study orthogonal projections of certain measures localized around x, where the angle is given by the direction determined by x and y. The fact that these directions are “well distributed” in a suitable sense can then be used in conjunction with a finitary version of Marstrand’s projection theorem (see Lemma 3.6) and several applications of Fubini’s theorem to conclude that one can choose y such that, for “many” x, the direction determined by x and y is good in the sense that the \(L^2\) norm of the projection is controlled by the 1-energy of the measure being projected.

1.5 Structure of the paper.

In Section 2 we introduce notation to be used in the rest of the paper. Section 3 contains some preliminary definitions and results that will be repeatedly used in the later proofs. In Section 4 we establish a lower bound for the box-counting numbers of pinned distance sets that will be at the heart of the proofs of all main theorems. Section 5 contains a number of optimization results about Lipschitz functions on the line, as well as corollaries of these results for discrete \([-1,1]\)-sequences; these corollaries play a key role in the proofs of the main theorems. Theorems 1.2, 1.3 and 1.4 are proved in Section 6. We conclude with some remarks on the sharpness of our results in Section 7.

We remark that Sections 5.2 and 5.3 are not needed for the proof of Theorem 1.2 (the results from Section 5.2 are required only in the proof of Theorem 1.3, and Section 5.3 is needed only for the proof of Theorem 1.4).

We also wish to thank T. Orponen for many useful discussions at the early stage of this project, and an anonymous referee for several suggestions that improved the paper, and in particular for suggesting a simplification of the statement and proof of Proposition 3.12.

2 Notation

We use Landau’s \(O(\cdot )\) notation: given \(X>0\), O(X) denotes a positive quantity bounded above by CX for some constant \(C>0\). If C is allowed to depend on some other parameters, these are denoted by subscripts. We sometimes write \(X\lesssim Y\) in place of \(X=O(Y)\) and likewise with subscripts. We write \(X\gtrsim Y\), \(X\approx Y\) to denote \(Y\lesssim X\), \(X\lesssim Y\lesssim X\) respectively.

Throughout the rest of the paper, we work with three parameters that we assume fixed: a large integer T and small positive numbers \(\varepsilon ,\tau \). We briefly indicate their meaning:

- (1) We will decompose sets and measures in the base \(2^T\). In particular, we will work with sets and measures that have a regular tree (or Cantor) structure when represented in this base: see Definition 3.2.

- (2) The parameter \(\tau \) will be used to define sets of bad projections: see Definition 3.8. The assumption \(\tau >0\) is needed to ensure that these sets have small measure; it also keeps some error terms negligible, see Proposition 4.4.

- (3) Finally, \(\varepsilon \) will denote a generic small parameter; it can play different roles at different places.

We will use the notation \(o_{T,\varepsilon ,\tau }(1)=o_{T\rightarrow \infty ,\varepsilon \rightarrow 0^+,\tau \rightarrow 0^+}(1)\) to denote any function \(f(T,\varepsilon ,\tau )\) such that

If a particular instance of o(1) is independent of some of the variables, we drop these variables from the notation. Different instances of the o(1) notation may refer to different functions of \(T,\varepsilon ,\tau \), and they may depend on each other, so long as they can always be made arbitrarily small.

Note that e.g. \(O_\varepsilon (1)\) denotes any (finite) function of \(\varepsilon \), while \(o_\varepsilon (1)\) denotes a function of \(\varepsilon \) that tends to 0 as \(\varepsilon \rightarrow 0^+\).

We will often work at a scale \(2^{-T\ell }\); it is useful to think that \(\ell \rightarrow \infty \) while \(T,\varepsilon ,\tau \) remain fixed.

The family of Borel probability measures on a metric space X is denoted by \({\mathcal {P}}(X)\). If \(\mu (A)>0\), then \(\mu _A\) denotes the normalized restriction \(\mu (A)^{-1}\mu |_A\). If \(f:X\rightarrow Y\) is a Borel map, then by \(f\mu \) we denote the push-forward measure, i.e. \(f\mu (A)= \mu (f^{-1}A)\).

We let \({\mathcal {D}}_j\) be the half-open \(2^{-jT}\)-dyadic cubes in \({\mathbb {R}}^d\) (where d is understood from context), and let \({\mathcal {D}}_j(x)\) be the only cube in \({\mathcal {D}}_j\) containing \(x\in {\mathbb {R}}^d\). Given a measure \(\mu \in {\mathcal {P}}({\mathbb {R}}^d)\), we also let \({\mathcal {D}}_j(\mu )\) be the cubes in \({\mathcal {D}}_j\) with positive \(\mu \)-measure. Note that these families depend on T. Given \(A\subset {\mathbb {R}}^d\), we also denote by \({\mathcal {N}}(A,j)\) the number of cubes in \({\mathcal {D}}_j\) that intersect A.
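For concreteness, here is one way to compute \({\mathcal {D}}_j(x)\), the parent cube \(\widehat{Q}\), and \({\mathcal {N}}(A,j)\) when A is a finite set of points. This is a sketch: labelling a cube by the integer vector of its lower-left corner in units of \(2^{-jT}\) is our own convention, and the function names are hypothetical. Multiplying by the power of two \(2^{jT}\) is exact in floating point (barring overflow), so the half-open convention of \({\mathcal {D}}_j\) is respected.

```python
import math
from typing import Iterable, Sequence, Tuple

Cube = Tuple[int, ...]


def dyadic_cube(x: Sequence[float], j: int, T: int) -> Cube:
    """Label (k_1, ..., k_d) of D_j(x), the cube prod_i [k_i 2^{-jT}, (k_i + 1) 2^{-jT})."""
    scale = 2 ** (j * T)
    return tuple(math.floor(xi * scale) for xi in x)


def parent(q: Cube, T: int) -> Cube:
    """Label of the cube in D_{j-1} containing the cube q of D_j (the cube written Q-hat)."""
    return tuple(k // 2 ** T for k in q)


def covering_number(points: Iterable[Sequence[float]], j: int, T: int) -> int:
    """N(A, j) for a finite set A: the number of cubes of D_j that meet A."""
    return len({dyadic_cube(p, j, T) for p in points})
```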

A \(2^{-m}\)-measure is a measure in \({\mathcal {P}}([0,1)^d)\) such that the restriction to any \(2^{-m}\)-dyadic cube Q is a multiple of Lebesgue measure on Q, i.e. a measure defined down to resolution \(2^{-m}\). Likewise, a \(2^{-m}\)-set is a union of \(2^{-m}\)-dyadic cubes. If \(\mu \in {\mathcal {P}}({\mathbb {R}}^d)\) is an arbitrary measure, then we denote

\[R_\ell (\mu )=\sum _{Q\in {\mathcal {D}}_\ell }\mu (Q)\,\frac{\mathrm{Leb}|_Q}{\mathrm{Leb}(Q)},\]

where \(\mathrm{Leb}\) is Lebesgue measure; that is, \(R_\ell (\mu )\) is the \(2^{-T\ell }\)-measure that agrees with \(\mu \) on all dyadic cubes of side length \(2^{-T\ell }\). We also define the corresponding analog for sets: given \(A\subset {\mathbb {R}}^d\), \(R_\ell (A)\) denotes the union of all cubes in \({\mathcal {D}}_\ell \) that intersect A.

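Due to our use of dyadic cubes, it will often be convenient to deal with supports in the dyadic metric, i.e. given \(\mu \in {\mathcal {P}}([0,1)^d)\) we let

\[{{\,\mathrm{supp}\,}}_{{\mathsf {d}}}(\mu )=\{x\in [0,1)^d:\mu ({\mathcal {D}}_j(x))>0 \text { for all } j\ge 0\}.\]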

Note that \(\mu ({{\,\mathrm{supp}\,}}_{{\mathsf {d}}}(\mu ))=1\) and that \({{\,\mathrm{supp}\,}}_{{\mathsf {d}}}(\mu )\subset {{\,\mathrm{supp}\,}}(\mu )\).

If a measure \(\mu \in {\mathcal {P}}({\mathbb {R}}^d)\) has a density in \(L^p\), then its density is sometimes also denoted by \(\mu \), and in particular \(\Vert \mu \Vert _p\) stands for the \(L^p\) norm of its density.

We make some further definitions. Let \(\mu \in {\mathcal {P}}([0,1)^d)\). If Q is a dyadic cube and \(\mu (Q)>0\), then we denote \(\mu ^Q = \text {Hom}_Q\mu _Q\), where \(\text {Hom}_Q\) is the homothety renormalizing Q to \([0,1)^d\). If \(M<N\) are integers, then for \(x\in {{\,\mathrm{supp}\,}}_{{\mathsf {d}}}(\mu )\), we define

\[\mu (x;M\rightarrow N)=R_{N-M}\left(\mu ^{{\mathcal {D}}_M(x)}\right).\]

In other words, \(\mu (x;M\rightarrow N)\) is the conditional measure on \({\mathcal {D}}_M(x)\), rescaled back to the unit cube, and then stopped at resolution \(2^{-(N-M)T}\). Likewise, for \(Q\in {\mathcal {D}}_M\) with \(\mu (Q)>0\) we define

\[\mu (Q;N)=R_{N-M}\left(\mu ^{Q}\right).\]

Note that \(\mu (x;M\rightarrow N)\) and \(\mu (Q;N)\) are \(2^{-(N-M)T}\)-measures.
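The operations \(R_\ell \) and \(\mu (x;M\rightarrow N)\) depend only on the masses of dyadic cubes, so for a finitely supported measure \(\mu =\sum _i w_i\delta _{p_i}\) they can be encoded by dictionaries of cube masses. The sketch below (function names hypothetical; cubes labelled by the integer coordinates of their lower-left corners) follows the verbal descriptions above: \(R_\ell (\mu )\) is determined by the masses \(\mu (Q)\), \(Q\in {\mathcal {D}}_\ell \), and \(\mu (x;M\rightarrow N)\) is obtained by normalizing \(\mu \) on \({\mathcal {D}}_M(x)\), rescaling this cube to \([0,1)^d\) and recording the masses of the \(2^{-(N-M)T}\)-cubes.

```python
import math
from collections import defaultdict
from typing import Dict, Sequence, Tuple

Cube = Tuple[int, ...]  # lower-left corner of a dyadic cube, in units of its side length


def cube_masses(points: Sequence[Sequence[float]], weights: Sequence[float],
                level: int, T: int) -> Dict[Cube, float]:
    """Masses mu(Q), Q in D_level, of mu = sum_i w_i delta_{p_i}.

    R_level(mu) spreads each mu(Q) uniformly over Q, so these masses determine it."""
    scale = 2 ** (level * T)
    masses: Dict[Cube, float] = defaultdict(float)
    for p, w in zip(points, weights):
        masses[tuple(math.floor(c * scale) for c in p)] += w
    return dict(masses)


def rescaled_conditional(points: Sequence[Sequence[float]], weights: Sequence[float],
                         x: Sequence[float], M: int, N: int, T: int) -> Dict[Cube, float]:
    """Cube masses of mu(x; M -> N): normalize mu on D_M(x), rescale D_M(x) to
    [0,1)^d and record the masses of the cubes of side 2^{-(N-M)T}."""
    corner = tuple(math.floor(c * 2 ** (M * T)) for c in x)  # label of D_M(x)
    ratio = 2 ** ((N - M) * T)  # number of level-N cubes per side of D_M(x)
    inside = {q: m for q, m in cube_masses(points, weights, N, T).items()
              if tuple(k // ratio for k in q) == corner}
    total = sum(inside.values())  # equals mu(D_M(x)); positive if x is in the dyadic support
    return {tuple(k - c * ratio for k, c in zip(q, corner)): m / total
            for q, m in inside.items()}
```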

Logarithms are always to base 2.

3 Preliminary Results

3.1 Regular measures and energy.

In this section we define some important notions and prove some preliminary results.

Recall that the s-energy of \(\mu \in {\mathcal {P}}({\mathbb {R}}^d)\) is

\[{\mathcal {E}}_s(\mu )=\iint |x-y|^{-s}\,d\mu (x)\,d\mu (y).\]

Lemma 3.1

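For any Borel probability measure \(\mu \) on \([0,1]^d\), if \(s>0\) then

\[{\mathcal {E}}_s(\mu )\approx _{T,d,s}\sum _{j=0}^{\infty }2^{sTj}\sum _{Q\in {\mathcal {D}}_j}\mu (Q)^2.\]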

If \(\mu \) is a \(2^{-{T\ell }}\)-measure and \(0<s<d\), then the sum runs up to \(\ell \) (in particular, the s-energy is finite).

Proof

First of all, by [PP95, Theorem 3.1], we can replace \({\mathcal {E}}_s(\mu )\) by the s-energy on the \(2^T\)-ary tree, i.e. by

\[\iint 2^{sT|x\wedge y|}\,d\mu (x)\,d\mu (y),\]

where \(|x\wedge y| = \max \{ j: y\in {\mathcal {D}}_j(x)\}\) (both energies are comparable up to a \(O_{T,d}(1)\) factor). The formula for \({\mathcal {E}}_s(\mu )\) now follows from a standard calculation, see e.g. [Shm17, Lemma 3.1] for the case \(T=1\) (the proof of the general case is identical).

Finally, the case in which \(\mu \) is a \(2^{-{T\ell }}\)-measure follows again from another simple calculation, see e.g. [Shm17, Lemma 3.2] for the case \(T=1\). \(\square \)
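As a sanity check on the multi-scale formula, the sketch below evaluates \(\sum _{j=0}^{\ell }2^{sTj}\sum _{Q\in {\mathcal {D}}_j}\mu (Q)^2\) from the list of cube masses at each level. We take this expression to be the right-hand side in Lemma 3.1 (the tree energy in the proof is comparable to it, by summation by parts over levels); this reading of the exact form of the statement is an assumption on our part. For Lebesgue measure on \([0,1)^2\) the level-j term is \(2^{(s-2)Tj}\), so the sum stays bounded when \(s<2\), while a measure concentrated on a single cube at every level gives \(\sum _{j\le \ell }2^{sTj}\), which grows like \(2^{sT\ell }\).

```python
from typing import Iterable, Sequence


def multiscale_energy(level_masses: Sequence[Iterable[float]], s: float, T: int) -> float:
    """sum_{j=0}^{ell} 2^{sTj} * sum_{Q in D_j} mu(Q)^2.

    level_masses[j] lists the masses mu(Q) of the cubes Q in D_j(mu)."""
    return sum(2 ** (s * T * j) * sum(m * m for m in masses)
               for j, masses in enumerate(level_masses))
```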

One of the key steps in the proof of the main theorems is to decompose an arbitrary \(2^{-T\ell }\)-measure in terms of measures which have a uniform tree structure when represented in base \(2^T\). This notion (which is inspired by some constructions of Bourgain [Bou10]) is made precise in the next definition.

Definition 3.2

Given a sequence \(\sigma =(\sigma _1,\ldots ,\sigma _{\ell })\in [-1,d-1]^\ell \), we say that \(\mu \in {\mathcal {P}}([0,1)^d)\) is \(\sigma \)-regular if it is a \(2^{-{T\ell }}\)-measure, and for any \(Q\in {\mathcal {D}}_{j}(\mu )\), \(1\le j\le \ell \), we have

where \(\widehat{Q}\) is the only cube in \({\mathcal {D}}_{j-1}\) containing Q.

The expression \(2^{-T(\sigma _j+1)}\) in the definition may appear strange, but it turns out to be a convenient normalization. The key point in this definition is that, in a \(\sigma \)-regular measure, all cubes of positive mass in the same generation have roughly the same mass, and the sequence \((\sigma _j)\) helps quantify this common mass.

Lemma 3.3

If \(\nu \in {\mathcal {P}}([0,1)^d)\) is \(\sigma \)-regular for some \(\sigma \in {\mathbb {R}}^\ell \) and \(s\in (0,d)\), then

Proof

We use crude bounds which are enough for our purposes. From the definition it is clear that if \(Q\in {\mathcal {D}}_j(\nu )\) then

This implies, in particular, that

From the two displayed equations and Lemma 3.1 it follows that

Write \({\mathcal {M}}_s(\sigma ) := T \max _{j=1}^{\ell } \sum _{i=1}^j (s-1-\sigma _i)\). Bounding \(\sum _{j=1}^\ell \) by \(\ell \) times the maximal term in the right-hand side, we deduce that

This yields the claim. \(\square \)
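The quantity \({\mathcal {M}}_s(\sigma )\) and the crude bound in the proof can be illustrated numerically. The sketch below reads the sum in \({\mathcal {M}}_s\) as \(\sum _{i=1}^j(s-1-\sigma _i)\) and assumes an idealized \(\sigma \)-regular measure in which every \(Q\in {\mathcal {D}}_j(\nu )\) has mass exactly \(2^{-T\sum _{i\le j}(\sigma _i+1)}\) (the normalization of Definition 3.2 with equality). Since all cubes at level j then carry the same mass, \(\sum _{Q\in {\mathcal {D}}_j}\nu (Q)^2=\nu (Q)\) for any such Q, and the level-j term of the energy sum is \(2^{T\sum _{i\le j}(s-1-\sigma _i)}\). The largest term equals \(2^{{\mathcal {M}}_s(\sigma )}\), so the sum over \(1\le j\le \ell \) lies between \(2^{{\mathcal {M}}_s(\sigma )}\) and \(\ell \,2^{{\mathcal {M}}_s(\sigma )}\). Function names are hypothetical.

```python
import math
from typing import Sequence


def M_s(sigma: Sequence[float], s: float, T: int) -> float:
    """T * max_{1 <= j <= ell} sum_{i <= j} (s - 1 - sigma_i)."""
    best, partial = -math.inf, 0.0
    for sig in sigma:
        partial += s - 1 - sig
        best = max(best, partial)
    return T * best


def regular_energy_sum(sigma: Sequence[float], s: float, T: int) -> float:
    """sum_{j=1}^{ell} 2^{sTj} sum_{Q in D_j} nu(Q)^2 for an idealized sigma-regular nu
    in which every Q in D_j(nu) has mass 2^{-T sum_{i<=j}(sigma_i + 1)}.
    All level-j cubes have equal mass, so sum_Q nu(Q)^2 equals that common mass."""
    total, log_mass = 0.0, 0.0
    for j, sig in enumerate(sigma, start=1):
        log_mass -= T * (sig + 1)  # log2 of the common mass at level j
        total += 2 ** (s * T * j + log_mass)
    return total
```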

Heuristically, the previous lemma says that for \(\log {\mathcal {E}}_s(\nu )\) to be small, it must hold that

Recalling the connection of \(\sigma _i\) to branching numbers, this means that the average branching number over any initial set of scales has to be sufficiently large, in a manner depending on s.

The following is a variant of Bourgain’s regularization argument (see e.g. [Bou10, Section 2] for a clean example). Recall that \({{\,\mathrm{supp}\,}}_{{\mathsf {d}}}(\mu )\) denotes the dyadic support of \(\mu \).

Lemma 3.4

Let \(\mu \) be a \(2^{-{T\ell }}\)-measure on \([0,1)^d\) for some \(\ell \ge 1\). There exists a \(2^{-{T\ell }}\)-set X, contained in \({{\,\mathrm{supp}\,}}_{{\mathsf {d}}}(\mu )\) and satisfying \(\mu (X) \ge (2Td+2)^{-\ell }\), such that \(\mu _X\) is \(\sigma \)-regular for some sequence \(\sigma \in [-1,d-1]^\ell \).

Proof

Recall that \(\widehat{Q}\) is the only cube in \({\mathcal {D}}_{j-1}\) containing \(Q\in {\mathcal {D}}_j\). For each \(k\in [0,Td]\cap {\mathbb {Z}}\), let

and set

Note that

and that \({{\,\mathrm{supp}\,}}_{{\mathsf {d}}}(\mu )\) is the union of the \(X_\ell ^{(k)}\) together with \(X_{\ell }^{(>Td)}\). Pick the smallest \(k=k(\ell )\in [0,Td]\) which maximizes \(\mu (X_{\ell }^{(k)})\) and set \(\sigma _\ell = k/T-1\in [-1,d-1]\). Then

Set \(X_{\ell }:= X_{\ell }^{(k)}\) and \(\mu _{\ell }=\mu _{X_{\ell }}\).

Now continue inductively, replacing \(\ell \) by \(\ell -1\) and \(\mu \) by \(\mu _{\ell }\), until we eventually get a set \(X_1\) and a sequence \((\sigma _1,\ldots ,\sigma _\ell )\in [-1,d-1]^\ell \). Note that for \(Q\in {\mathcal {D}}_j(\mu _i)\) the value of \(\mu _i(Q)/\mu _i(\widehat{Q})\) remains constant for \(i\le j\) and, in particular, for \(i=1\). Hence \(X=X_1\) has the desired properties. \(\square \)
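One step of this construction can be sketched as follows (function name hypothetical). We assume the natural convention that a cube Q belongs to class k when \(2^{-k-1}<\mu (Q)/\mu (\widehat{Q})\le 2^{-k}\); this is our reading of the definition of \(X_\ell ^{(k)}\). Under this convention the mass bound in Lemma 3.4 is transparent: cubes in classes \(k>Td\) satisfy \(\mu (Q)\le 2^{-Td-1}\mu (\widehat{Q})\), and each \(\widehat{Q}\) has at most \(2^{Td}\) children, so these cubes carry at most half of the mass; the rest is split among the \(Td+1\) classes \(0\le k\le Td\), so the heaviest one has mass at least \(1/(2Td+2)\).

```python
import math
from collections import defaultdict
from typing import Dict, Set, Tuple

Cube = Tuple[int, ...]


def regularize_top_level(masses: Dict[Cube, float], T: int, d: int) -> Tuple[Set[Cube], float]:
    """One step of the regularization in Lemma 3.4 (sketch).

    masses maps level-ell cubes (integer labels) to mu(Q). Returns the cubes of
    the chosen class k and sigma_ell = k/T - 1."""
    b = 2 ** T

    def parent(q: Cube) -> Cube:
        return tuple(c // b for c in q)

    masses = {q: m for q, m in masses.items() if m > 0}
    parent_mass: Dict[Cube, float] = defaultdict(float)
    for q, m in masses.items():
        parent_mass[parent(q)] += m

    k_of: Dict[Cube, int] = {}
    bucket: Dict[int, float] = defaultdict(float)
    for q, m in masses.items():
        # assumed convention: class k means 2^{-k-1} < mu(Q)/mu(parent) <= 2^{-k}
        k = math.floor(-math.log2(m / parent_mass[parent(q)]))
        k_of[q] = k
        if k <= T * d:  # classes k > Td carry at most half of the mass
            bucket[k] += m
    best = max(bucket.values())
    k_star = min(k for k, v in bucket.items() if v == best)  # smallest maximizer
    kept = {q for q, k in k_of.items() if k == k_star}
    return kept, k_star / T - 1
```

Full branching at a level (all \(2^{Td}\) children with equal mass) gives \(k=Td\) and \(\sigma _\ell =d-1\), while a single child gives \(k=0\) and \(\sigma _\ell =-1\), matching the range \([-1,d-1]\).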

The set X given by the lemma will have far too little measure for our purposes: later we will need \(\mu _X(A)\) to be large (in particular nonzero) for certain sets A of mass roughly \(\ell ^{-2}\). By iterating the construction, we are able to get a moderately long sequence of sets \(X_i\) such that \(\mu ({\mathbb {R}}^d\setminus \cup _i X_i)\ll \ell ^{-2}\); by pigeonholing we will then be able to select some \(X_i\) with \(\mu _{X_i}(A)\) suitably large.

Corollary 3.5

Fix \(\ell \ge 1\), write \(m=T\ell \), and let \(\mu \) be a \(2^{-m}\)-measure on \([0,1)^d\). There exists a family of pairwise disjoint \(2^{-m}\)-sets \(X_1,\ldots , X_N\) with \(X_i\subset {{\,\mathrm{supp}\,}}_{{\mathsf {d}}}(\mu )\), and such that:

- (i) \(\mu \left( \bigcup _{i=1}^N X_i\right) \ge 1-2^{-\varepsilon m}\). In particular, if \(\mu (A)> 2^{-\varepsilon m}\), then there exists i such that \(\mu _{X_i}(A)\ge \mu (A)-2^{-\varepsilon m}\).

- (ii) \(\mu (X_i) \ge 2^{-(\varepsilon +(1/T)\log (2d T+2)) m} \ge 2^{-o_{T,\varepsilon }(1) m}\).

- (iii) Each \(\mu _{X_i}\) is \(\sigma (i)\)-regular for some \(\sigma (i)\in [-1,d-1]^\ell \).

Moreover, the family \((X_i)_{i=1}^N\) may be constructed so that it is determined by \(d, T,\varepsilon ,\ell \) and \(\mu \) (even though there may be other families satisfying the above properties).

Proof

Let \(X_1\) be the set given by Lemma 3.4, and put \(B_1=[0,1)^d\setminus X_1\). Continue inductively: once \(X_j,B_j\) are defined, let \(X_{j+1}\) be the set given by Lemma 3.4 applied to \(\mu _{B_j}\), and set \(B_{j+1}=B_j\setminus X_{j+1}\). Then (setting \(B_0=[0,1)^d\))

Let N be the smallest integer such that \(\mu (B_N) \le 2^{-\varepsilon m}\); such N exists thanks to (3.2).

It is clear that in this construction the family \(X_1,\ldots ,X_N\) is determined by \(d,T,\varepsilon ,\ell ,\mu \), since the set X constructed in the proof of Lemma 3.4 is determined by \(d,T,\ell ,\mu \).

The first part of claim (i) is immediate. Then note that

so there must be i such that \(\mu _{X_i}(A) \ge \mu (A)-2^{-\varepsilon m}\), as claimed.

Finally, (ii) is immediate from (3.2) and the definition of N, and (iii) is clear since the sets \(X_i\) were provided by Lemma 3.4. \(\square \)
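The exhaustion argument above can be sketched on a finite model. In the sketch below, which is illustrative and not the construction of Lemma 3.4 itself, \(\mu \) is a probability vector on finitely many atoms (standing in for dyadic cubes), and the hypothetical helper `extract` returns a subset of the remaining atoms carrying at least a fixed fraction c of their mass. If each application of Lemma 3.4 captured at least the fraction \(c=(2dT+2)^{-\ell }\) of the remaining mass (an assumption made here, chosen because \(2^{-(\varepsilon +(1/T)\log (2dT+2))m}=2^{-\varepsilon m}(2dT+2)^{-\ell }\) when \(m=T\ell \)), then every piece would satisfy \(\mu (X_i)\ge c\,\mu (B_{i-1})>c\,2^{-\varepsilon m}\), which is (ii). The last check is the pigeonhole step in (i): since \(\mu (A)\le \sum _i \mu (X_i)\mu _{X_i}(A)+\mu (B_N)\) and \(\sum _i\mu (X_i)\le 1\), some \(\mu _{X_i}(A)\) is at least \(\mu (A)-2^{-\varepsilon m}\).

```python
import random

def extract(remaining, weights, c):
    """Hypothetical stand-in for Lemma 3.4: take atoms of `remaining` (in index
    order) until they carry at least a fraction c of the remaining mass."""
    target = c * sum(weights[i] for i in remaining)
    chosen, mass_so_far = set(), 0.0
    for i in sorted(remaining):
        chosen.add(i)
        mass_so_far += weights[i]
        if mass_so_far >= target:
            break
    return chosen

def exhaust(weights, c, threshold):
    """Peel off disjoint pieces X_1, X_2, ... until the leftover B_N has mass <= threshold."""
    remaining = set(range(len(weights)))
    pieces = []
    while sum(weights[i] for i in remaining) > threshold:
        piece = extract(remaining, weights, c)
        pieces.append(piece)
        remaining -= piece
    return pieces, remaining

def mass(weights, atoms):
    return sum(weights[i] for i in atoms)

def best_conditional_mass(weights, pieces, A):
    """max_i mu_{X_i}(A) = max_i mu(A & X_i) / mu(X_i)."""
    return max(mass(weights, A & X) / mass(weights, X) for X in pieces)

random.seed(1)
w = [random.random() for _ in range(400)]
total = sum(w)
w = [x / total for x in w]                 # a probability vector on 400 atoms
eps_bound = 2.0 ** -6                      # plays the role of 2^{-eps m}
pieces, leftover = exhaust(w, c=0.05, threshold=eps_bound)
A = set(random.sample(range(400), 200))

print(len(pieces), mass(w, leftover) <= eps_bound)
print(best_conditional_mass(w, pieces, A) >= mass(w, A) - eps_bound)
```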

3.2 Sets of bad projections.

In this subsection, we introduce sets of “bad” multi-scale projections for a measure \(\mu \) around a point x. The simple fact that these sets can be taken to have small measure (independently of \(\mu \) and x) will play a crucial role later. Although a similar notion was introduced in [Shm17], the sets of bad projections we use here are far more flexible and also more involved, depending on the decomposition into regular measures provided by Corollary 3.5.

Given \(\theta \in S^1\), we denote the orthogonal projection \(x\mapsto x\cdot \theta \) by \(\Pi _\theta \). Normalized Lebesgue measure on \(S^1\) will be denoted by \(|\cdot |\). We recall the following consequence of the energy version of Marstrand’s projection theorem.

Lemma 3.6

Let \(\mu \in {\mathcal {P}}([0,1)^2)\) have finite 1-energy. Then, for any \(R>0\),

Proof

This is just a consequence of Markov’s inequality and the identity

see e.g. [Mat04, Equation 1.7]. \(\square \)

We restate [Shm17, Lemma 3.7] using our notation, for later reference.

Lemma 3.7

For any \(\nu \in {\mathcal {P}}({\mathbb {R}}^2)\), \(k\in {\mathbb {N}}\) and \(\theta \in S^1\),

Next, we define the various sets of “bad projections”.

Definition 3.8

Given \(\mu \in {\mathcal {P}}([0,1)^2)\), \(x\in {{\,\mathrm{supp}\,}}_{{\mathsf {d}}}(\mu )\) and non-negative integers \(j,k, j_0, \ell \), we let

We underline that the definition of \({{\,\mathrm{\mathbf {Bad}}\,}}_{j_0\rightarrow \ell }(\mu ,x)\) depends on the parameters \(T, \varepsilon \) and \(\tau \). Note that, since \(\mu (x;j\rightarrow j+k)\) has a bounded density by definition, both quantities in the definition of \({{\,\mathrm{\mathbf {Bad}}\,}}(\mu ,x,j, k)\) are finite.

Our next goal is to combine Lemma 3.6 with the decomposition given by Corollary 3.5. Starting with a \(2^{-T\ell }\)-measure \(\mu \in {\mathcal {P}}([0,1)^2)\) and \(x\in {{\,\mathrm{supp}\,}}_{{\mathsf {d}}}(\mu )\), we define

where \((X_i)_{i=1}^N\) are the sets given by Corollary 3.5. Note that \({{\,\mathrm{supp}\,}}_{{\mathsf {d}}}(\mu _{X_j})=X_j\).

Lemma 3.9

There exists a further constant \(\varepsilon '=\varepsilon '(T,\varepsilon ,\tau )>0\) such that, for any \(2^{-T\ell }\)-measure \(\mu \in {\mathcal {P}}([0,1)^2)\),

Proof

According to the definitions and Lemma 3.6, for any \(\nu \in {\mathcal {P}}([0,1)^2)\) and \(x\in {{\,\mathrm{supp}\,}}_{{\mathsf {d}}}(\nu )\),

The point here is that the bound does not depend on \(\nu \) or x. Hence the claim follows with \(\varepsilon ' = \varepsilon ^2 T\tau \).\(\square \)

Finally, if \(\mu \in {\mathcal {P}}([0,1)^2)\) and \(x\in {{\,\mathrm{supp}\,}}_{{\mathsf {d}}}(\mu )\), we let

We record the following immediate consequence of Lemma 3.9 for later use.

Lemma 3.10

for all \(x\in {{\,\mathrm{supp}\,}}_{{\mathsf {d}}}(\mu )\), where \(\varepsilon '=\varepsilon '(T,\varepsilon ,\tau )>0\) is the constant from Lemma 3.9.

3.3 Radial projections.

The following result was recently established by Orponen [Orp17]. We state it only in the plane. We denote the radial projection with center y by \(P_y\), i.e. \(P_y(x)=(y-x)/|y-x|\in S^1\) is the (oriented) direction determined by x and y.

Proposition 3.11

Let \(\mu ,\nu \in {\mathcal {P}}([0,1)^2)\) be measures with disjoint supports, such that \({\mathcal {E}}_s(\mu )<\infty \), \({\mathcal {E}}_u(\nu )<\infty \) for some \(u>1\), \(2-u<s<1\). Then there is \(p=p(s,u)>1\) such that \(P_x\nu \) is absolutely continuous with a density in \(L^p(S^1)\) for \(\mu \) almost all x. Moreover,

Proof

This is stated in [Orp17, Equation (3.5)], except that Orponen deals with weighted measures \(\mu _y=|x-y|^{-1}d\mu \) instead of \(\mu \) (note that the roles of \(\mu \) and \(\nu \) are interchanged in [Orp17]). Since the weight \(|x-y|^{-1}\) is bounded away from 0 and \(\infty \) by the assumption that the supports of \(\mu \) and \(\nu \) are bounded and disjoint, the claim also holds for \(\mu \). \(\square \)

We point out that Proposition 3.11 relies on the Fourier transform, and that this is the only place in the proofs of Theorems 1.2 and 1.3 where Fourier analysis is used (the proof of Theorem 1.4, on the other hand, relies heavily on the Fourier-analytic approach of Mattila-Wolff).

Proposition 3.11 has the following key consequence. A similar statement was obtained in [Shm17] using a slightly more involved argument.

Proposition 3.12

Let \(\mu ,\nu \in {\mathcal {P}}([0,1)^2)\) have disjoint supports and satisfy \({\mathcal {E}}_s(\mu ), {\mathcal {E}}_u(\nu )<\infty \) for some \(s\in (0,2), u>\max (1,2-s)\). Then there exists \(\kappa =\kappa (\mu ,\nu )>0\) such that the following holds:

Suppose that \(\Theta \subset [0,1)^2\times S^1\) is a Borel set such that

Then

Proof

Since \({\mathcal {E}}_s(\mu )<\infty \) implies that \({\mathcal {E}}_{s'}(\mu )<\infty \) for all \(s'<s\), we may assume that \(s<1\). By Proposition 3.11, there is \(p>1\) such that

Denote \(\Theta _x = \{ \theta \in S^1: (x,\theta )\in \Theta \}\) and \(-\Theta _x=\{ -\theta :\theta \in \Theta _x\}\). Using Fubini and Hölder, each twice, we estimate

The claim follows by choosing \(\kappa \) so that \(\kappa ^{1/p'} C^{1/p}\le 1/3\). \(\square \)

4 Box-Counting Estimates for Pinned Distance Sets

In this section we derive a lower bound on box-counting numbers of pinned distance sets that will be crucial in the proofs of Theorems 1.2, 1.3 and 1.4. Our estimate will be in terms of a multiscale decomposition where, unlike previous works in the literature, we are allowed to choose the sequence of scales (depending on the set or measure for which we are seeking estimates). This additional flexibility will ultimately allow us to improve upon the easy bounds on the dimensions of distance sets.

To begin, we recall some basic facts about entropy. If \(\nu \in {\mathcal {P}}({\mathbb {R}}^d)\) and \({\mathcal {A}}\) is a finite partition of \({\mathbb {R}}^d\) (or of a set of full \(\nu \)-measure), then the entropy of \(\nu \) with respect to \({\mathcal {A}}\) is given by

with the usual convention \(0\cdot \log 0=0\). It follows from the concavity of the logarithm that one always has

Hence, a lower bound for \(H(\nu ,{\mathcal {D}}_j)\) provides a lower bound for \({\mathcal {N}}(A,j)\) if A is a Borel set of full measure (recall that \({\mathcal {N}}(A,j)\) denotes the number of elements in \({\mathcal {D}}_j\) that intersect A). We will apply this when \(\nu \) is supported on a pinned distance set. Although box-counting numbers in principle give bounds only for box dimension, together with standard mass pigeonholing arguments we will be able to get bounds also for Hausdorff and packing dimension.
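A small numerical illustration of the inequality \(H(\nu ,{\mathcal {D}}_j)\le \log {\mathcal {N}}(A,j)\): the sketch below computes, with \(\log =\log _2\) as elsewhere in this paper, the entropy of a finitely supported measure on \([0,1)^2\) with respect to the dyadic partition \({\mathcal {D}}_j\), together with the number of cubes of \({\mathcal {D}}_j\) that it charges. The finitely supported model measure and the helper name `dyadic_entropy` are illustrative assumptions.

```python
import numpy as np

def dyadic_entropy(points, weights, j):
    """Return (H(nu, D_j), number of cubes of D_j charged by nu) for the discrete
    measure nu = sum_k weights[k] * delta_{points[k]} on [0,1)^d (log base 2)."""
    cells = np.floor(np.asarray(points) * 2**j).astype(int)   # dyadic cube index
    masses = {}
    for cell, w in zip(map(tuple, cells), weights):
        masses[cell] = masses.get(cell, 0.0) + w
    p = np.array([m for m in masses.values() if m > 0])
    return float(-(p * np.log2(p)).sum()), len(p)

rng = np.random.default_rng(0)
pts = rng.random((2000, 2))
wts = rng.random(2000)
wts /= wts.sum()
for j in range(1, 6):
    H, count = dyadic_entropy(pts, wts, j)
    print(j, round(H, 3), round(float(np.log2(count)), 3))   # H never exceeds log2(count)

# Equality case: the uniform measure on the 64 centers of the cubes of D_3.
grid = (np.arange(8) + 0.5) / 8
centers = np.array([(x, y) for x in grid for y in grid])
print(dyadic_entropy(centers, np.full(64, 1 / 64), 3))      # entropy 6 with 64 cubes
```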

The following proposition is the key device that will allow us to bound from below the entropy of pinned distance measures (and hence also the box-counting numbers of pinned distance sets). Roughly speaking, we bound the entropy of the projection of a measure \(\mu \) under the pinned distance map from below by an average, over both scales and space (the latter weighted by \(\mu \)), of a quantity involving the \(L^2\) norms of projected local pinned distance measures. We emphasize that this method of bounding the dimension of (linear or nonlinear) projections from below goes back in various forms to [Hoc12, Hoc14, Orp17b], although projected \(L^2\) norms (rather than projected entropies) were first used in [Shm17].

Before stating the proposition we introduce some definitions. Given \(L\in {\mathbb {N}}\), a good partition of (0, L] is an integer sequence \(0=N_0<\cdots <N_q=L\) such that \(N_{j+1}-N_j\le N_j+1\) for every \(0\le j<q\). We write \(\Delta _y(x)=|x-y|\) for the pinned distance map, and \(\theta (x,y)=P_y(x)=(x-y)/|x-y|\).
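The good-partition condition \(N_{j+1}\le 2N_j+1\) is easy to test, and taking the largest allowed step at each stage produces the coarsest good partition. A minimal sketch, with illustrative helper names:

```python
def is_good_partition(N, L):
    """Check 0 = N_0 < N_1 < ... < N_q = L with N_{j+1} - N_j <= N_j + 1."""
    return (N[0] == 0 and N[-1] == L
            and all(a < b <= 2 * a + 1 for a, b in zip(N, N[1:])))

def doubling_partition(L):
    """Take the largest step allowed at each stage: N_{j+1} = min(2 N_j + 1, L)."""
    N = [0]
    while N[-1] < L:
        N.append(min(2 * N[-1] + 1, L))
    return N

print(doubling_partition(20))                        # [0, 1, 3, 7, 15, 20]
print(is_good_partition([0, 1, 3, 7, 15, 20], 20))   # True
print(is_good_partition([0, 2, 4, 20], 20))          # False: the first step is too long
```

By induction, \(N_j\le 2^j-1\) for every good partition, so any good partition of (0, L] has \(q\ge \log (L+1)\) steps; the greedy partition above attains \(q=\lceil \log (L+1)\rceil \).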

Proposition 4.1

Let \(\mu \in {\mathcal {P}}([0,1)^d)\), let \(y\in {\mathbb {R}}^d\) be at distance \(\ge \varepsilon \) from \({{\,\mathrm{supp}\,}}(\mu )\), and fix a good partition \((N_i)_{i=0}^q\) of \((0,\ell ]\). Then

where \(x_Q\) is an arbitrary point in Q.

Proof

Write \(D_i=N_{i+1}-N_i\). Note that our \({\mathcal {D}}_i\) correspond to \({\mathcal {D}}_{T i}\) and our \(T N_i\) to \(m_i\) in [Shm17]. Recall also that \(\mu ^Q\) denotes the magnification of \(\mu _Q\) to the unit cube. It is shown in [Shm17, Proposition 3.8 and Remark 3.10] that

Applying Lemma 3.7 to \(\nu = \mu ^Q\) for some \(Q\in {\mathcal {D}}_{N_i}\) and \(k=D_i\), we get that

On the other hand, a simple convexity argument (see [Shm17, Lemma 3.6]) yields that, for any \(\nu \in {\mathcal {P}}({\mathbb {R}})\) and \(k\in {\mathbb {N}}\),

Applying this with \(k=D_i\) and \(\nu = \Pi _{\theta (y,x_Q)} \mu ^Q\), and recalling (4.3), we deduce that

Using this bound in each term in the right-hand side of (4.2), and absorbing the sum of the q terms of order O(1) into \(O_{T,\varepsilon }(q)\), we get the claim. \(\square \)

We remark that the assumption that \(N_{j+1}-N_j\le N_j+1\) in the definition of good partition (which will play a crucial role later) arises from the linearization of the distance function, and cannot be substantially weakened. The key advantage of having \(L^2\) norms instead of entropies in this proposition is that the estimate one gets is robust under passing to subsets of moderately large measure:

Proposition 4.2

With the assumptions and notation from Proposition 4.1, let us write \({\mathcal {F}}(\mu )\) for the right-hand side of (4.1) (we assume y and the partition \((N_i)\) are fixed). If \(\mu \in {\mathcal {P}}([0,1)^2)\), \(\nu =\mu _A\) where A is Borel and \(\mu (A)>0\), then

Proof

We start with the trivial observation that if \(\rho ,\rho '\in {\mathcal {P}}({\mathbb {R}}^d)\) have \(L^2\) densities and \(\rho '(S)\le K\rho (S)\) for all Borel sets S, then the same bound holds for the densities at almost every point, and so \(\Vert \rho '\Vert _2^2 \le K^2 \Vert \rho \Vert _2^2\).

Let \(\zeta =1/(T\ell )\in (0,1)\). Fix \(i\in \{0,\ldots ,q-1\}\), and note that

Suppose \(\nu (Q) = \mu (A\cap Q)/\mu (A) \ge \zeta \mu (Q)>0\) for a given \(Q\in {\mathcal {D}}_{N_i}\). Then

for any Borel set \(S\subset [0,1)^2\). This domination is preserved under push-forwards and the action of \(R_{D_i}\) (where as before \(D_i = N_{i+1}-N_i\)), so in light of our initial observation we get

always assuming that \(\nu (Q)\ge \zeta \mu (Q)>0\) and \(Q\in {\mathcal {D}}_{N_i}\). Also, since the measure \(\Pi _{\theta (y,x_Q)} \mu (Q;N_{i+1})\) is supported on an interval of length \(\sqrt{2}\), it follows from Cauchy–Schwarz that

On the other hand, for any \(2^{-T D}\)-measure \(\rho \) on \({\mathbb {R}}\) one has \(\Vert \rho \Vert _2^2 \le 2^{T D}\). In light of Lemma 3.7, this implies that

Splitting (for each i) the sum \(\sum _{Q\in {\mathcal {D}}_{N_i}}\) in Proposition 4.1 into the cubes with \(\nu (Q) \ge \zeta \mu (Q)\) and \(\nu (Q)< \zeta \mu (Q)\), and recalling (4.4), we arrive at the estimate

where we merged the sum of the (\(\log \) of the) implicit constants in (4.6) into \(O_{T,\varepsilon }(q)\). Recalling that \(\zeta =1/(T\ell )\) and using (4.5) we get the desired result. \(\square \)
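The elementary \(L^2\) facts used in this proof (domination \(\rho '\le K\rho \) passes to \(L^2\) norms with the factor \(K^2\); Cauchy–Schwarz gives a lower bound for measures with short support; and \(\Vert \rho \Vert _2^2\le 2^{n}\) for a \(2^{-n}\)-measure) can be checked on a discrete model. The sketch assumes that a \(2^{-n}\)-measure on \({\mathbb {R}}\) is one with constant density on each dyadic interval of length \(2^{-n}\); the helper name and the random data are illustrative.

```python
import numpy as np

def l2_norm_sq(masses, n):
    """||rho||_2^2 for a measure on R whose density is constant on each dyadic
    interval of length 2^-n; masses[k] is the mass of the k-th such interval."""
    masses = np.asarray(masses, dtype=float)
    return float(2.0**n * np.sum(masses**2))      # sum_k (mass_k 2^n)^2 2^-n

rng = np.random.default_rng(1)
n = 8
rho = rng.random(64)
rho /= rho.sum()                 # probability measure supported in [0, 64 * 2^-8] = [0, 1/4]
norm = l2_norm_sq(rho, n)
# Cauchy-Schwarz lower bound 1/(support length) = 4, trivial upper bound 2^n = 256
print(1 / (64 * 2.0**-n) <= norm <= 2.0**n)

A = rng.random(64) < 0.5         # a set made of some of the dyadic intervals
rho_A = np.where(A, rho, 0.0) / rho[A].sum()     # the normalized restriction rho_A
K = 1 / rho[A].sum()             # rho_A(S) <= K rho(S) for every Borel set S
print(l2_norm_sq(rho_A, n) <= K**2 * norm)       # domination passes to L^2 norms
```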

Our next goal is to get a simpler lower bound in the context of Proposition 4.2 when \(\mu \) is \(\sigma \)-regular (recall Definition 3.2), and \(\nu \) is the restriction of \(\mu \) to the set of points which are not bad in the sense of Section 3.2. Combining the results of Section 3.2 and Section 3.3, we will later be able to deal with general measures via a reduction to this special case.

We require some additional definitions:

Definition 4.3

We say that \(0=N_0<N_1<\cdots <N_q=L\) is a \(\tau \)-good partition of (0, L] if

for every \(0\le j< q\). In other words \((N_j)\) is a good partition and additionally \(N_{j+1}\ge (1+\tau ) N_j\).
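The extra condition in a \(\tau \)-good partition forces geometric growth. Since a good partition must have \(N_1=1\), one gets \(N_q\ge (1+\tau )^{q-1}\), hence \(q\le 1+\log L/\log (1+\tau )\), that is, \(q=O_\tau (\log L)\). A minimal checker, with illustrative helper names:

```python
import math

def is_tau_good(N, tau):
    """Good partition (N_{j+1} - N_j <= N_j + 1) with N_{j+1} >= (1 + tau) N_j."""
    return N[0] == 0 and all(
        a < b <= 2 * a + 1 and b >= (1 + tau) * a for a, b in zip(N, N[1:])
    )

def max_steps(L, tau):
    """Upper bound on q: since N_1 = 1, N_q >= (1 + tau)^(q - 1)."""
    return 1 + math.floor(math.log(L) / math.log(1 + tau))

print(is_tau_good([0, 1, 3, 7, 15, 31], 0.5))   # True
print(is_tau_good([0, 1, 2, 3, 4], 0.5))        # False: 4 < 1.5 * 3
print(max_steps(31, 0.5))                        # 9
```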

Given a finite sequence \((\sigma _1,\ldots ,\sigma _L)\in {\mathbb {R}}^L\), let

For any good partition \({\mathcal {P}}=(N_j)_{j=0}^q\) of (0, L] and any \(\sigma \in {\mathbb {R}}^L\), we denote

where \(\sigma |I\) denotes the restriction of the sequence \(\sigma \) to the interval I.

Finally, given \(\sigma \in {\mathbb {R}}^L\) and \(\tau \in (0,1)\), we let

Recall that \(o_{T,\varepsilon }(1)\) denotes a function of T and \(\varepsilon \) which tends to 0 as \(T\rightarrow \infty ,\varepsilon \rightarrow 0^+\).

Proposition 4.4

Suppose that \(\rho \in {\mathcal {P}}([0,1)^2)\) is a \((\sigma _1,\ldots ,\sigma _\ell )\)-regular measure. Assume that there are a Borel set \(A\subset [0,1)^2\), a point \(y\in {\mathbb {R}}^2\) and a number \(\beta \in (0,1)\) such that \(\rho (A)>0\), \(\mathrm {dist}(y,{{\,\mathrm{supp}\,}}(\rho ))\ge \varepsilon \), and for all \(x\in A\cap {{\,\mathrm{supp}\,}}_{{\mathsf {d}}}(\rho )\) there is \(\widetilde{x} \in {\mathcal {D}}_\ell (x)\) such that

Then

where

Proof

Let \({\mathcal {P}}=(N_i)_{i=0}^q\) be a \(\tau \)-good partition of \((0,\ell ]\). We have to show that

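Fix \(i_0\) as the smallest value of i such that \(N_i\ge \beta \ell \), and note that \(N_{i_0}< 2\beta \ell +1\), since \(N_{i_0-1}<\beta \ell \) and \(N_{i_0}\le 2N_{i_0-1}+1\) by the definition of a good partition.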
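The bound \(N_{i_0}<2\beta \ell +1\) uses only the good-partition property, so it can be tested on random good partitions. The generator below is an illustrative assumption (each step is drawn uniformly up to the maximal allowed length \(N_j+1\) and truncated at \(\ell \)):

```python
import random

def random_good_partition(L, rng):
    """A random good partition of (0, L]: each step has length at most N_j + 1."""
    N = [0]
    while N[-1] < L:
        N.append(min(N[-1] + rng.randint(1, N[-1] + 1), L))
    return N

def first_index_at_least(N, threshold):
    """Smallest i with N_i >= threshold (this is i_0 when threshold = beta * ell)."""
    return next(i for i, n in enumerate(N) if n >= threshold)

rng = random.Random(0)
for _ in range(10_000):
    ell = rng.randint(1, 500)
    beta = rng.uniform(0.01, 0.99)
    N = random_good_partition(ell, rng)
    i0 = first_index_at_least(N, beta * ell)
    assert N[i0] < 2 * (beta * ell) + 1
print("N_{i_0} < 2*beta*ell + 1 held in every trial")
```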

Let us rewrite the inequality from Proposition 4.2 applied to \(\rho \) and \(\rho _A\) in the form

where

where \(x_Q\) are arbitrary points in Q. By assumption, we may choose these points so that

Using that \((1+\tau )^q\le \ell \), we bound

Write \(D_i=N_{i+1}-N_i\). To estimate \(\Sigma _{\text {I}}\), we use the trivial bound \(\Vert R_{D_i}(\cdot )\Vert _2^2 \le 2^{D_i T}\) together with Lemma 3.7 and the bounds \(N_{i_0}<2\beta \ell +1\), \((1+\tau )^q \le \ell \), so that

Now, to estimate the main term \(\Sigma _{\text {II}}\), we need to go back to Definition 3.8. By (4.8), and using that \({\mathcal {P}}\) is a \(\tau \)-good partition of \((0,\ell ]\), we have \(\theta (x_Q,y)\notin {{\,\mathrm{\mathbf {Bad}}\,}}(\rho ,x_Q,N_i,D_i)\) for \(i_0 \le i < q\). We deduce that

for \(i_0 \le i < q\). On the other hand, by the assumption that \(\rho \) is \((\sigma _1,\ldots ,\sigma _\ell )\)-regular, and since \({\mathcal {P}}\) is a good partition of \((0,\ell ]\), the measure \(\rho (Q;N_{i+1})\) is \((\sigma _{N_i+1},\ldots ,\sigma _{N_{i+1}})\)-regular. Hence, using Lemma 3.3, we obtain

Combining the last two displayed formulas, we deduce that

Adding up from \(i=i_0\) to \(q-1\) and again using \(q= O_{\tau }(\log \ell )\), we get

Combining (4.9), (4.10) and (4.11), we conclude that

where \({{\,\mathrm{Error}\,}}\) is as in the statement. Recall that \({\mathcal {F}}(\mu )\) denotes the right-hand side of (4.1) in Proposition 4.1. Now Proposition 4.1 guarantees that

Since \(H(\mu ,{\mathcal {A}}) \le \log |{\mathcal {A}}|\) for any finite Borel partition \({\mathcal {A}}\) of a set of full \(\mu \)-measure, this finishes the proof. \(\square \)

Note that in this proposition, the sequence \(\sigma \) depends on the measure \(\rho \) and the bound is in terms of \(M_\tau (\sigma )\) (we will be able to make the error term arbitrarily small). Thus we are led to the combinatorial problem of minimizing \({\mathbf {M}}(\sigma ,(N_i))\) over all \(\tau \)-good partitions for a given \(\sigma \in [-1,1]^\ell \). This problem will be tackled in the next section: see Proposition 5.23, and also Proposition 5.24 for the case in which we are allowed to restrict \(\sigma \) to (0, L] for some large L.

5 Finding Good Scale Decompositions: Combinatorial Estimates

5.1 An optimization problem for Lipschitz functions.

We begin by defining suitable analogs of the concepts from Definition 4.3 for Lipschitz functions, instead of \([-1,1]\)-sequences.

Definition 5.1

A sequence \((a_n)_{n=0}^\infty \) is a partition of the interval [0, a] if \(a=a_0>a_1>\cdots >0\) and \(a_n\rightarrow 0\); it is a good partition if we also have \(a_{k-1} / a_{k} \le 2\) for every \(k\ge 1\).

A sequence \((a_n)_{n=0}^{\infty }\) is a \(\tau \)-good partition for a given \(0< \tau <1\) if it is a good partition and we also have \(a_{k-1} / a_{k} \ge 1+\tau \) for every \(k\ge 1\).

Let \(f:[0,a]\rightarrow {\mathbb {R}}\) be continuous and \((a_n)\) be a partition of [0, a]. By the total drop of f according to \((a_n)\) we mean

and we also introduce the notation

We call the interval \([a_{n},a_{n-1}]\) increasing if \(\min _{[a_n,a_{n-1}]} f=f(a_n)\) and decreasing if \(\min _{[a_n,a_{n-1}]} f=f(a_{n-1})\). (Note that f need not be increasing or decreasing on \([a_n,a_{n-1}]\).)
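The total drop can be evaluated numerically for a truncated partition. The sketch assumes, consistently with how it is used in the proofs below, that \({\mathbf {T}}(f,(a_n))=\sum _{n\ge 1}\bigl(f(a_n)-\min _{[a_n,a_{n-1}]} f\bigr)\), so each term is nonnegative and vanishes exactly on increasing intervals; truncating the partition can therefore only decrease the sum. The minimum over each interval is approximated by sampling, and the helper names are illustrative.

```python
import numpy as np

def total_drop(f, partition, samples=2001):
    """Truncated total drop sum_{n>=1} [f(a_n) - min_{[a_n, a_{n-1}]} f] for
    a_0 > a_1 > ... > a_K > 0; the minimum is taken over sample points."""
    drop = 0.0
    for right, left in zip(partition, partition[1:]):    # interval [a_n, a_{n-1}]
        xs = np.linspace(left, right, samples)
        drop += float(f(left) - f(xs).min())
    return drop

def is_good(partition):
    """Strictly decreasing with a_{k-1} / a_k <= 2 for every k."""
    return all(1 < prev / cur <= 2 for prev, cur in zip(partition, partition[1:]))

dyadic = [2.0 ** -n for n in range(51)]          # a_n = 2^{-n}, truncated at n = 50
print(is_good(dyadic))                           # True
print(total_drop(lambda x: -x, dyadic))          # 1 - 2**-50: every interval is decreasing
print(total_drop(lambda x: x, dyadic))           # 0.0: every interval is increasing
```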

In this section we investigate the following question: given a 1-Lipschitz function \(f:[0,a]\rightarrow {\mathbb {R}}\) satisfying certain bounds, how large can \({\mathbf {T}}(f)\) and \({\mathbf {T}}_\tau (f)\) be?

First we study \({\mathbf {T}}(f)\). Later we show (see Corollary 5.20) that for small \(\tau \) the quantities \({\mathbf {T}}(f)\) and \({\mathbf {T}}_\tau (f)\) are close. Finally, from the bounds on \({\mathbf {T}}_\tau (f)\) we deduce corresponding bounds on \({\mathbf {M}}_\tau (\sigma )\): see for example Proposition 5.23. Hence this problem is closely related to that of minimizing the dimension loss when estimating the dimension of the pinned distance set via Proposition 4.4. Dealing first with Lipschitz functions rather than \([-1,1]\)-sequences allows us to avoid certain technicalities and make the arguments more transparent.

The basic result is the following.

Proposition 5.2

Let \(a>0\), \(-1\le D < C \le 1\) be given parameters such that \(C\ge 2D\). Let \(f:[0,a]\rightarrow {\mathbb {R}}\) be a 1-Lipschitz function such that \(Dx\le f(x)\le Cx\) for every \(x\in [0,a]\). Then

Proof

Since \(f(a)\ge Da\) and \(a>0\), the second inequality of (5.1) is clear, so it is enough to prove the first inequality.

Let

Note that

and \(h,\rho \ge 0\) since we assumed \(C\ge 2D\) and \(C\ge D\), so \(2C\ge 3D\).

We will construct a good partition \((a_n)\) with the following two extra properties:

(*) every interval \([a_n,a_{n-1}]\) (\(n=1,2,\ldots \)) is either increasing or decreasing (recall Definition 5.1), and

(**) if \([a_k,a_{k-1}],\ldots ,[a_{l+1},a_l]\) (\( k \ge l+1 \ge 1\)) is a maximal block of consecutive decreasing intervals, then

First we show that this is enough to prove our claim. Let \(a=a'_0>a'_1>\ldots \) be the endpoints of the union of each maximal block of consecutive intervals of the same type (increasing or decreasing). It easily follows from the definitions and telescoping that \({\mathbf {T}}(f,(a_n))={\mathbf {T}}(f,(a'_k))\). Hence to obtain (5.1) it is enough to prove

We claim that

Indeed, by construction, the interval \([a'_{k},a'_{k-1}]\) is either increasing or decreasing. If it is increasing then

since f is 1-Lipschitz and \(a'_k<a'_{k-1}\).

If \([a'_{k},a'_{k-1}]\) is decreasing then, using first (**) and the fact that \(\rho <1\), and then (5.2), we get

which completes the proof of (5.4).

By adding up (5.4) for \(k=1,2,\ldots \) and using that \(a'_0=a\), \(a'_k\rightarrow 0\) and \(f(a'_k)\rightarrow 0\) we get (5.3), which implies (5.1).

Therefore it is enough to construct a good partition \((a_n)\) with properties (*) and (**). Let \(a_0=a\) and suppose that \(a_0>\cdots>a_{n}>0\) are already constructed with properties (*) and (**) (up to n).

We distinguish three cases.

Case 1. \(\min _{[a_n/2,a_n]} f < f(a_n)\).

In this case let \(a_{n+1}\in [a_n/2,a_n]\) be the smallest number such that \(f(a_{n+1})=\min _{[a_n/2,a_n]}f\). Then \([a_{n+1},a_n]\) is an increasing interval and so (*) and (**) still hold and we can continue the procedure.

Case 2. \(\min _{[a_n/2,a_n]} f = f(a_n)\) and \(f(a_n/2)-f(a_n)\le h\cdot (a_n - a_n/2)\).

In this case let \(a_{n+1}=a_n/2\), and again (*), (**) hold for the extended sequence and we can continue the procedure.

Case 3. \(\min _{[a_n/2,a_n]} f = f(a_n)\) and \(f(a_n/2)-f(a_n)> h\cdot (a_n - a_n/2)\).

First we claim that \(h\ge -D\). Indeed, since \(-1\le D\le C\) we have

which implies that

and this implies \(h\ge -D\).
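The identity \(D+h=(C-D)(1-h)\), used later in this proof, is linear in h and therefore determines it. Assuming h is the value so determined, both identities quoted there and the claim \(h\ge -D\) can be verified directly (note that \(1+C-D>1\) because \(D<C\), and that \(h\ge 0\) follows from \(C\ge 2D\)):

$$\begin{aligned} D+h=(C-D)(1-h) &\iff h\,(1+C-D)=C-2D \iff h=\frac{C-2D}{1+C-D},\\ C+h-(C-D)(2-h) &= h\,(1+C-D)-(C-2D)=0,\\ h+D &= \frac{C-2D+D(1+C-D)}{1+C-D}=\frac{(C-D)(1+D)}{1+C-D}\ \ge 0 . \end{aligned}$$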

Since \(h\ge -D\) and \(f(x)\ge Dx\) we have \(f(a_n)\ge Da_n\ge -ha_n\) and so

This and the assumption \(f(a_n/2)-f(a_n)> h\cdot (a_n - a_n/2)\) imply that there exists a largest \(b\in [0,a_n/2)\) such that

Now our goal is to find a sequence \(b=b_0<b_1<\cdots <b_M=a_n\) with \(M\ge 2\) such that

The sequence \((b_i)\) is constructed by induction. Let \(b_0=b\). Suppose that \(m\ge 0\), \(b=b_0<\cdots<b_m< a_n\) are already constructed and (5.6) holds for \(M=m\). If \(b_m\ge a_n/2\) then we can take \(b_{m+1}=a_n\) and \(M=m+1\). Then the construction is completed and (5.6) holds.

Now consider the case \(b_m< a_n/2\). Let \(b_{m+1}\in [b_m,2b_m]\) be maximal such that \(f(b_{m+1})=\min _{[b_m,2b_m]}f\). Our goal is to show that \(b_{m+1}>b_m\). For this it is enough to show that \(f(2b_m)\le f(b_m)\).

Using that b is the largest number in \([0,a_n/2]\) for which (5.5) holds, \(b_m\ge b\) and \(f(a_n/2)-f(a_n)> h\cdot (a_n - a_n/2)\), we get

Hence to get \(f(2b_m)\le f(b_m)\) it is enough to show that

Using (5.7) and \(Dx\le f(x)\le Cx\) we get

which implies that

Direct calculation shows that \(D+h=(C-D)(1-h)\) and \(C+h=(C-D)(2-h)\). Thus the last inequality and \(D<C\) imply that

Hence, using also that f is 1-Lipschitz and \(b_m<a_n/2\), we obtain

This completes the proof of (5.8) and so also the proof of \(b_{m+1}>b_m\). It is easy to see that (5.6) holds for \(M=m+1\). Note also that the property \(b_i/b_{i-2}\ge 2\) implies that the construction of the sequence \((b_i)\) is completed after finitely many steps.

Now, to finish Case 3 we take \(a_{n+j}=b_{M-j}\) for \(j=1,\ldots ,M\). Then (*) and (**) hold (up to \(n+M\)) and so the procedure can be continued.

This way we obtain a sequence \(a=a_0>a_1>\cdots >0\) that forms a good partition with (*) and (**), provided \(a_n\rightarrow 0\). Therefore it remains to prove that \(a_n\rightarrow 0\).

Since \(a_{n+1}=a_{n}/2\) when Case 2 is applied and \(a_{n+M}=b_0=b\le a_n/2\) in Case 3, we are done if Case 2 or Case 3 is applied infinitely many times. It is easy to see that if both \(a_{n+1}\) and \(a_{n+2}\) were obtained from Case 1, then we have \(a_{n}/a_{n+2}\ge 2\). Thus \(a_n\rightarrow 0\), which completes the proof. \(\square \)

5.2 Small drop on initial segments.

The results in this subsection are required in the proof of Theorem 1.3. We aim to minimize \({\mathbf {T}}(f|[0,u])/u\), where \(u>0\) is a new parameter that we are allowed to choose, subject to not being too small. The analysis will be strongly based on the study of hard points which we now define:

Definition 5.3

If \(f:[0,a]\rightarrow {\mathbb {R}}\) is a function, we say that \(p\in [0,a]\) is a hard point of f if \(\min _{[p/2,p]} f=f(p)\).

We will say that a function f defined on an interval I is piecewise linear if I can be decomposed into finitely many intervals such that f is linear on each of them.

Lemma 5.4

Let \(f:[0,a]\rightarrow {\mathbb {R}}\) be a 1-Lipschitz function, which is piecewise linear on every closed subinterval of (0, a]. Then:

- (i)

The set of hard points of f can be written as a (possibly empty) finite or infinite union of closed (possibly degenerate) intervals \(H = \cup _j [u_j,v_j]\) such that \(v_1\ge u_1>v_2\ge u_2>\ldots \) and every closed subinterval of (0, a] intersects only finitely many \([u_j,v_j]\).

- (ii)

We have

$$\begin{aligned} {\mathbf {T}}(f)=\sum _{j} \bigl(f(u_j)-f(v_j)\bigr), \end{aligned}\tag{5.9}$$
where the empty sum is meant to be zero.

Proof

The first statement is easy, using that f is piecewise linear.

First we prove \(\ge \) in (5.9). Let \((a_n)\) be a good partition of [0, a] and let \(a=a'_0>a'_1>\ldots \) be an ordered enumeration of the set \(\{a_n\} \cup \{u_j\} \cup \{v_j\}\). It is easy to check that \((a'_n)\) is also a good partition of [0, a], and that by inserting a hard point of f into a good partition \((a_n)\), the value of \({\mathbf {T}}(f,(a_n))\) is not changed. Thus \({\mathbf {T}}(f,(a'_n))={\mathbf {T}}(f,(a_n))\). Now every \([u_j,v_j]\) is of the form \([u_j,v_j]=\cup _{n=n_j}^{m_j} [a'_{n},a'_{n-1}]\). Since f must be nonincreasing on any interval \([u_j,v_j]\) we obtain

for every j. Adding up, and using that \(f(a'_n)-\min _{[a'_n,a'_{n-1}]} f\ge 0\) and \({\mathbf {T}}(f,(a'_n))={\mathbf {T}}(f,(a_n))\) we get the claim.

To prove the other inequality we construct by induction a good partition of [0, a] such that \({\mathbf {T}}(f,(a_n))\le \sum _{j} f(u_j)-f(v_j)\). Let \(a_0=a\). Suppose that \(a_0,\ldots ,a_n\) are already defined.

Case 1. If \(a_n\in (u_j,v_j]\) for some j then choose \(k\ge 1\) and \(a_n>a_{n+1}>\cdots >a_{n+k}=u_j\) so that \(a_{n+i-1}/a_{n+i}\le 2\) for \(i=1,\ldots ,k\).

Case 2. Otherwise let \(a_{n+1}\in [a_n/2,a_n]\) be the smallest number for which \(f(a_{n+1})=\min _{[a_n/2,a_n]}f\). We claim that \(a_{n+1}<a_n\). If \(a_n\not \in H\) then this is clear from the definition. Since the only points of H that are not handled in the previous case are the left endpoints of the intervals \([u_j,v_j]\) we can suppose that \(a_n=u_j\) for some j. By the piecewise linearity of f, there exists \(w\in (u_j/2, u_j)\) such that f is linear on \([w,u_j]\) and \(w>v_{j+1}\). Since \(u_j\) is a hard point, f cannot be increasing on \([w,u_j]\). If f is constant on \([w,u_j]\) then \(a_{n+1}\le w<u_j=a_n\), so we are done. So we can suppose that f is decreasing on \([w,u_j]\). Since \(w>v_{j+1}\), every \(x\in [w,u_j)\) is not hard, so there exists an \(x'\in [x/2,x)\) such that \(f(x')<f(x)\). Since f is decreasing on \([w,u_j]\), \(x'<w\). By the continuity of f, this implies that there exists \(x_0\in [u_j/2,w]\) such that \(f(x_0)\le f(u_j)\). Thus indeed \(a_{n+1}<u_j=a_n\).

Note that if Case 2 was applied to obtain both \(a_{n+1}\) and \(a_{n+2}\) then \(a_n/a_{n+2}\ge 2\). This implies that \(a_n\rightarrow 0\), so \((a_n)\) is a good partition of [0, a]. It remains to show that \({\mathbf {T}}(f,(a_n))\le \sum _j f(u_j)-f(v_j)\).

If \(a_n\) was obtained in Case 1 then \([a_n,a_{n-1}]\) is a subinterval of some \([u_j,v_j]\) and \(f(a_n)-\min _{[a_n,a_{n-1}]} f=f(a_n)-f(a_{n-1})\). If \(a_n\) was obtained in Case 2 then \(f(a_n)-\min _{[a_n,a_{n-1}]} f=0\). Note also that f is nonincreasing on each \([u_j,v_j]\) since all points of \([u_j,v_j]\) are hard points of f. These show that indeed \({\mathbf {T}}(f,(a_n))\le \sum _j f(u_j)-f(v_j)\), which completes the proof. \(\square \)
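For piecewise linear f, formula (5.9) turns the infimum defining \({\mathbf {T}}(f)\) into a finite computation. The sketch below approximates it on a grid: a grid point p is declared hard when f(p) does not exceed the sampled minimum of f on [p/2, p] (Definition 5.3), maximal runs of consecutive hard grid points stand in for the intervals \([u_j,v_j]\), and the drops \(f(u_j)-f(v_j)\) are summed. The grid resolution, tolerance and helper name are illustrative assumptions, and the result is a numerical approximation, not an exact algorithm. For \(f(x)=-x\) on [0, 1], which satisfies \(f(x)\ge Dx\) with \(D=-1\), every point is hard and the result is \(1=(1-2D)/3\).

```python
import numpy as np

def drop_via_hard_points(f, a=1.0, n=1000, samples=200, tol=1e-12):
    """Approximate T(f) = sum_j [f(u_j) - f(v_j)] (Lemma 5.4) on a uniform grid."""
    xs = np.linspace(0.0, a, n + 1)
    fx = f(xs)
    # p is (approximately) hard if f(p) <= min of f over samples of [p/2, p]
    hard = [fx[k] <= f(np.linspace(p / 2, p, samples)).min() + tol
            for k, p in enumerate(xs)]
    total, k = 0.0, 0
    while k <= n:
        if hard[k]:
            start = k                          # u_j = xs[start]
            while k < n and hard[k + 1]:
                k += 1                         # v_j = xs[k]
            total += fx[start] - fx[k]
        k += 1
    return float(total)

print(drop_via_hard_points(lambda x: -x))                                 # 1.0
print(drop_via_hard_points(lambda x: x))                                  # 0.0
print(drop_via_hard_points(lambda x: np.where(x <= 0.5, -x, x - 1.0)))   # 0.5
```

In the last example the hard points form the single interval [0, 1/2], so (5.9) gives \(f(0)-f(1/2)=1/2\).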

The next proposition (or rather, the discrete corollary given in Proposition 5.24 below) will be crucial to get estimates on the packing dimension of the pinned distance sets.

Proposition 5.5

Let \(a>0\) and \(0\le D < 1/2\) be given parameters. Let \(f:[0,a]\rightarrow {\mathbb {R}}\) be a 1-Lipschitz function, which is piecewise linear on every closed subinterval of (0, a], and suppose that \(f(0)=0\) and \(Dx\le f(x)\) for every \(x\in [0,a]\). Let

Then for every \(\delta \in (0,1/2)\) there exists \(u\in [3a\Phi (D)2^{-1/\delta },a]\) such that

Proof

Let \(H\subset [0,a]\) be the set of hard points of f. If \(H=\emptyset \) then by Lemma 5.4, \({\mathbf {T}}(f)=0\), so \(u=a\) is clearly a good choice in this case. So suppose that H is nonempty.

First we briefly explain the idea of the proof in this nontrivial case. For simplicity, suppose that \(a=1\) and \(D=0\), which is the most interesting case anyway. Assume that the maximum of f(x) / x on \(H\cap (0,1]\) exists and is attained at u, and let B be this maximum. Since u is a hard point, \(f(x)\ge f(u)=Bu\) on [u / 2, u], and a calculation using that f is 1-Lipschitz shows that

Let \(F(x)=\min (f(x),2B x)\). Then it is not hard to show (see below for details) that every \(p\in H\cap [0,u]\) is also a hard point of F and that \(F=f\) on \(H\cap [0,u]\). By Lemma 5.4 this implies that \({\mathbf {T}}(f|[0,u])={\mathbf {T}}(F|[0,u])\), so we can study F|[0, u] instead of f|[0, u]. Let v be the largest number in [0, u) such that \(F(v)=Bv\). It follows from (5.11) that also \(F(x)> Bx\) if \(u'<x<u\), so we must have \(v\le u'\), and hence

and \(F(x)>Bx\) on (v, u). Since \(F(x)\le 2Bx\), for any hard point y of F we must have \(F(y)\le By\), and this implies that F has no hard point in (v, u). By Lemma 5.4 this implies that \({\mathbf {T}}(F|[0,u])={\mathbf {T}}(F|[0,v])\). Again using that \(F(x)\le 2Bx\), we can apply Proposition 5.2 on [0, v] to obtain

Calculus shows that \(\frac{(1/2-B)2B}{1+4B}\le \frac{2-\sqrt{3}}{4}=\Phi (0)\) for \(B\in [0,1]\), so we obtain \({\mathbf {T}}(f|[0,u])\le u\Phi (0)\).
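The calculus step can also be checked numerically. Setting the derivative of \((1/2-B)2B/(1+4B)\) equal to zero gives \(8B^2+4B-1=0\), whose root in [0, 1] is \(B=(\sqrt{3}-1)/4\), where the expression equals \((2-\sqrt{3})/4\). The grid search below is an illustrative check, not part of the argument:

```python
import numpy as np

B = np.linspace(0.0, 1.0, 200_001)
g = (0.5 - B) * 2 * B / (1 + 4 * B)          # the expression bounded in the text
phi0 = (2 - np.sqrt(3)) / 4

print(g.max(), phi0)                          # both approximately 0.0669873
print(B[g.argmax()], (np.sqrt(3) - 1) / 4)    # maximizer, approximately 0.1830127
```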

Unfortunately, f(x) / x may not have a maximum on \(H\cap (0,1]\) and, even if it does, we might get a u which is too small. To avoid these problems we replace f(x) / x by \(f(x)/x +\delta \log x\). Then we can show that u exists, is not too small, and still satisfies the claim of the proposition.

We now continue with the actual proof. Note that H is a closed set, and let \(h=\max H\). By Lemma 5.4, \({\mathbf {T}}(f)={\mathbf {T}}(f|[0,h])\).

If \(h<3a\Phi (D)\) then, applying Proposition 5.2 on [0, h] with \(C=1\), we get

so \(u=a\) is a good choice in this case.

Therefore in the rest of the proof we can suppose that \(h\ge 3a\Phi (D)\). Let

(Recall that in this paper \(\log \) denotes \(\log _2\).) Since f is nonnegative and 1-Lipschitz, \(0\le f(x)/x\le 1\) on (0, a], so for any \(x\in (0,2^{-1/\delta }h)\) we have

Now we claim that

To prove this we define a sequence \(u_0>u_1>\ldots \in H\) inductively. Let \(u_0=h\). Suppose that \(u_n\in H\) is already defined. Let \(v\in H\cap [\delta u_n, u_n]\) be the largest number such that \(\phi (v)=\max _{H\cap [\delta u_n, u_n]}\phi \). If \(v=u_n\) then let \(N=n\) and the procedure is terminated.

Otherwise letting \(u_{n+1}=v\) we have \(u_{n+1}<u_n\), so the procedure can be continued. Note that it follows from the construction that \(\phi (h)=\phi (u_0)\le \ldots \le \phi (u_n)\) and \(u_{n+2}<\delta u_n\) (\(n=0,1,\ldots \)). Thus (5.12) implies that the procedure must be terminated in finitely many steps and (5.13) holds for \(u=u_N\).

Let u be chosen according to (5.13). Then, using that \(h\ge 3a\Phi (D)\), we have \(u\ge 2^{-1/\delta }h\ge 2^{-1/\delta }\cdot 3a\Phi (D)\), so the requirement \(u\in [3a\Phi (D)2^{-1/\delta },a]\) is satisfied. Thus it remains to prove (5.10).

Let

Since u is chosen according to (5.13), we have

Let \(F(x)=\min (f(x),2Bx)\) (\(x\in [0,u]\)).

Now we claim that every \(p\in H\cap [\delta u,u]\) is also a hard point of F. Suppose, on the contrary, that \(p\in H\cap [\delta u,u]\) is not a hard point of F. Then there exists a \(q\in [p/2,p]\) such that \(F(q)<F(p)\). By (5.14) we have \(f(p)\le Bp\le 2Bp\), so by definition \(F(p)=f(p)\), and consequently we have

which implies that \(f(q)=F(q)\). Thus \(f(q)<f(p)\), so p cannot be a hard point of f, which is a contradiction.

Note that, by Lemma 5.4 and since \(F(p)=f(p)\) for any hard point of F, the above claim and the trivial estimate \({\mathbf {T}}(f|[0,\delta u])\le \delta u\) imply

First we consider the case when \(f(u)/u<-\delta \log \delta \).

Then \(B<-2\delta \log \delta \), and so

If \(-4\delta \log \delta >1\) then, since \(\Phi (D)\ge 0\) for \(D\le 1/2\), the right-hand side of (5.10) is larger than u. Since clearly \({\mathbf {T}}(g)\le u\) for any 1-Lipschitz function \(g:[0,u]\rightarrow {\mathbb {R}}\), we are done if \(-4\delta \log \delta >1\). So we may suppose that \(-4\delta \log \delta \le 1\). By Proposition 5.2 applied to F, with \(a=u, C=-4\delta \log \delta \) and \(D=0\), we obtain

By (5.15) (applied to \(v=u\)) this implies that

Since \(D\le 1/2\), we have \(\Phi (D)\ge 0\), so (5.16) implies (5.10), which completes the proof in the case when \(f(u)/u<-\delta \log \delta \).

So in the rest of the proof we may assume that

Since \(\delta <1/2\) this also implies that \(f(u)/u>\delta \). Putting this together with the fact that u was chosen according to (5.13), and with the inequality \(\log y\le y-1\), we get that if \(x\in H\cap [\delta u, u)\), then

Since u is a hard point, \(f(x)\ge f(u)\) on \([u/2,u]\), and so (5.18) implies that \(H\cap [u/2,u)=\emptyset \).

Again because u is a hard point, \(f(u/2)\ge f(u)\). Using this, \(\delta <1/2\) and the fact that f is 1-Lipschitz, we get

Using again that f is 1-Lipschitz and \(f(u/2)\ge f(u)\), we get

Thus

Let \(v_0= \frac{u/2-f(u)}{1-B}\). Note that \(f(x)>Bx\) also holds on the closed interval \([v_0,u/2]\) unless \(f(v_0)=Bv_0\). The definition \(B=\frac{f(u)}{u}-\delta \log \delta \) and the assumption (5.17) imply that \(B\le 2f(u)/u\), hence \(v_0\le u/2\). Let \(v=\max \{x\in [0,u/2]\ :\ f(x)=Bx\}\) (the maximum over a nonempty compact set). By (5.19) we have \(v\le v_0\) and \(f(x)>Bx\) on \((v,u/2]\). By (5.14), this implies that \(H \cap [\delta u,u]\cap (v, u/2)=\emptyset \). Since above we obtained \(H\cap [u/2,u)=\emptyset \), we get \(H\cap (v,u)\subset [0,\delta u]\). Hence, using Lemma 5.4 and the trivial estimate \({\mathbf {T}}(f|[0,\delta u])\le \delta u\), we get

Since \(v\le v_0=\frac{u/2-f(u)}{1-B}\) and \(f(v)=Bv\),

Let \(C=\min (2B,1)\). We have just seen that

Note also that \(D\le f(u)/u=B+\delta \log \delta <B\), and so \(D\le C/2\) since we assumed that \(D\le 1/2\). Then \(Dx\le F(x)\le Cx\) on \([0,v]\subset [0,u]\), so we can apply Proposition 5.2 to get

Note that \(\frac{C/2-D}{1+2C-3D}< 1\). Using calculus, we get that \(\frac{(1-C)(C/2-D)}{1+2C-3D}\le \Phi (D)\) for \(C\in [2D,1]\). Therefore

Combining the above inequality with (5.15) and (5.20), we get (5.10). \(\square \)

5.3 Stability results.

The results of this subsection are only needed for the proof of Theorem 1.4. Moreover, to get the bound \({{\,\mathrm{dim_H}\,}}(\Delta (A))\ge 37/54\) whenever \({{\,\mathrm{dim_H}\,}}(A)>1\), one only needs to consider the case \(D=0\) below. While there is no conceptual difference between the cases \(D=0\) and \(D>0\), the calculations are easier in the former case, so the reader may want to assume that \(D=0\) on a first reading.

In the \(C=1\) special case of Proposition 5.2, we get that if \(D\in [-1,1/2]\) and \(f:[0,1]\rightarrow {\mathbb {R}}\) is a 1-Lipschitz function such that \(f(0)=0\) and \(f(x)\ge Dx\) on [0, 1], then \({\mathbf {T}}(f)\le (1-2D)/3\). As we will see in Section 7 (and as is not hard to check directly), this estimate is sharp: if

then \({\mathbf {T}}(f)=(1-2D)/3\). In this subsection we prove a quantitative stability result (Proposition 5.15) for \(D\in [0,1/3]\), stating that if \({\mathbf {T}}(f)\) is close to \((1-2D)/3\), then f(x) must be close to the above function when x is not too far from 0 or from 1.

The general plan to get this result is the following. Let \(b=\min _{[1/2,1]} f\) and choose \(a\in [1/2,1]\) such that \(f(a)=b\). It is easy to see that \({\mathbf {T}}(f)={\mathbf {T}}(f|[0,a])\), so it is enough to study f|[0, a] instead of f. We need an upper estimate on \({\mathbf {T}}(f)\) when f is not close to the function defined in the previous paragraph. This upper estimate will be obtained by finding a point \(p\in [0,a]\) such that, in a good partition used in the definition of \({\mathbf {T}}(f)\), the points \(a_n\) in [p, a] can be chosen so that \(\min _{[a_n,a_{n-1}]}f = f(a_{n-1})\). For these indices the sum of the terms \(f(a_n)-\min _{[a_n,a_{n-1}]}f\) telescopes to \(f(p)-f(a)\), which is the smallest possible value. Combining this with a near optimal good partition for f|[0, p] guaranteed by Proposition 5.2, we get a near optimal upper bound for \({\mathbf {T}}(f)\), in terms of p and f(p), for every f having such a special point p. These points p will be called simple points. After proving the near optimal upper estimate described above, most of the proof consists of finding a simple point for which the resulting estimate for \({\mathbf {T}}(f)\) is the upper estimate we claim.

First we collect some assumptions and define precisely the above mentioned notion of simple points.

Definition 5.6

Suppose that

A point \(p\in [0,a]\) is called simple if there exists a finite sequence \(p=p_0<p_1<\cdots <p_k=a\) such that

Lemma 5.7

If (5.21) holds and \(p\in [0,a]\) is a simple point then

Proof

Applying Proposition 5.2 to f|[0, p] with \(C=1\) we get \({\mathbf {T}}(f|[0,p])\le \alpha (p-f(p))\). Hence for any \(\delta >0\) there exists a good partition \((a_n)\) of [0, p] such that

Since p is simple there exists a finite sequence \(p=p_0<p_1<\cdots <p_k=a\) such that (5.22) holds.

For \(n\le k\) let \(a'_n=p_{k-n}\) and for \(n>k\) let \(a'_n=a_{n-k}\). Then \(( a'_n)\) is a good partition of [0, a] and

which completes the proof. \(\square \)
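The bookkeeping in this proof can be illustrated on a grid. The sketch below assumes, following the description before Definition 5.6, that the quantity minimised in the definition of \({\mathbf {T}}\) is the sum of the terms \(f(a_n)-\min _{[a_n,a_{n-1}]}f\) over a good partition with \(a_{n-1}\le 2a_n\). It also reads (5.22) as \(\min _{[p_i,p_{i+1}]}f=f(p_{i+1})\) and \(p_{i+1}\le 2p_i\). Partitions are truncated to finitely many points, and the helper names are hypothetical. The check shows that the cost of \((a'_n)\) splits into the cost of the simple chain, which telescopes to \(f(p)-f(a)\), plus the cost of the partition of [0, p].

```python
def partition_cost(vals, part):
    """Sum of vals[a_n] - min(vals on [a_n, a_{n-1}]) over consecutive entries of a
    decreasing list part = [a_0, a_1, ...] of grid indices (finite truncation)."""
    return sum(vals[cur] - min(vals[cur:prev + 1]) for prev, cur in zip(part, part[1:]))


def is_good_ratio(part):
    """Each step shrinks by at most a factor 2: a_n < a_{n-1} <= 2 a_n."""
    return all(cur < prev <= 2 * cur for prev, cur in zip(part, part[1:]))


def concatenate(chain, part):
    """a'_n = p_{k-n} for n <= k and a'_n = a_{n-k} for n > k (chain[0] == part[0] == p)."""
    assert chain[0] == part[0]
    return chain[::-1] + part[1:]


# Grid {0, ..., 1000}: f(x) = min(x, 800 - x) is nonincreasing on [400, 1000],
# so the chain 400 < 800 < 1000 satisfies min_{[p_i, p_{i+1}]} f = f(p_{i+1}).
vals = [min(x, 800 - x) for x in range(1001)]
chain = [400, 800, 1000]
part = [400, 200, 100, 50, 25]        # truncated good partition of [0, p]
a_prime = concatenate(chain, part)    # [1000, 800, 400, 200, 100, 50, 25]

chain_cost = partition_cost(vals, chain[::-1])
assert chain_cost == vals[400] - vals[1000]   # telescopes to f(p) - f(a)
assert partition_cost(vals, a_prime) == chain_cost + partition_cost(vals, part)
assert is_good_ratio(a_prime)
```

Integer grid values keep every comparison exact, so the telescoping identity can be checked with `==`.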

Lemma 5.8

Suppose that (5.21) holds and let \(p\in [0,a]\). If

then p is simple.

Proof

Let \(p_0=p\). Suppose that \(n\ge 0\) and \(p_0<\cdots <p_n\) are defined such that (5.22) holds for \(k=n\). If \(p_n\ge a/2\) then let \(p_{n+1}=a\) and we are done. Otherwise, let \(p_{n+1}\in [p_n,2p_n]\) be the largest number such that \(f(p_{n+1})=\min _{[p_n,p_{n+1}]} f\). By (5.23) we also have \(p_{n+1}>p_n\). It remains to check that the procedure terminates, which follows from the simple observation that \(p_{n+2}\ge \min (2 p_n,a)\) by definition. \(\square \)
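The construction in this proof is a greedy procedure and can be run directly on a grid. The sketch below is a discretised version, assuming integer grid points \(\{0,\dots ,a\}\), the reading of (5.22) as \(p_{i+1}\le 2p_i\) and \(\min _{[p_i,p_{i+1}]}f=f(p_{i+1})\), and the normalisation \(\min _{[a/2,a]}f=f(a)\) from (5.21), which is what makes the last step to a valid. The function names are hypothetical.

```python
def simple_chain(vals, p, a):
    """Greedy construction from the proof of Lemma 5.8 on the grid {0, ..., a}.

    While p_n < a/2, take p_{n+1} to be the largest q in [p_n, 2 p_n] with
    vals[q] == min(vals[p_n..q]); once p_n >= a/2, finish with p_{n+1} = a.
    Raises ValueError if a step makes no progress, i.e. the grid analogue
    of (5.23) fails at p_n.
    """
    chain = [p]
    while 2 * chain[-1] < a:
        pn = chain[-1]
        run_min, best = vals[pn], pn
        for q in range(pn, 2 * pn + 1):
            run_min = min(run_min, vals[q])
            if vals[q] == run_min:      # q attains the running minimum on [p_n, q]
                best = q
        if best == pn:
            raise ValueError(f"no progress at p_n = {pn}")
        chain.append(best)
    if chain[-1] < a:
        chain.append(a)
    return chain


def satisfies_5_22(vals, chain):
    """p_{i+1} <= 2 p_i and the minimum of vals on [p_i, p_{i+1}] is attained at p_{i+1}."""
    return all(q <= 2 * r and min(vals[r:q + 1]) == vals[q] for r, q in zip(chain, chain[1:]))


vals = [min(x, 600 - x) for x in range(1001)]   # min over [500, 1000] is vals[1000]
chain = simple_chain(vals, 250, 1000)
print(chain)  # [250, 500, 1000]
assert satisfies_5_22(vals, chain)
```

The loop mirrors the observation in the proof: whenever \(p_{n+1}<a/2\), the next step jumps past \(2p_n\), so the chain reaches a after finitely many steps.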

Lemma 5.9

Suppose that (5.21) holds. If \(p\in [a/2,a]\), or if \(p\in [0,a/2]\) and \(f(p)\ge -2p+a+b\), then p is a simple point.

Proof

The case \(p\in [a/2,a]\) is clear, so suppose that \(p\in [0,a/2]\) and \(f(p)\ge -2p+a+b\). Then the 1-Lipschitz property of f implies that for any \(x\in [p,a]\) we also have \(f(x)\ge -2x+a+b\). Since f is 1-Lipschitz and \(f(a)=b\) we have \(f(y)\le -y+a+b\) for any \(y\in [0,a]\). Thus \(f(x)\ge -2x+a+b\ge f(2x)\) for any \(x\in [p,a/2]\), so Lemma 5.8 completes the proof. \(\square \)
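The second case of the proof rests on two Lipschitz inequalities, \(f(x)\ge -2x+a+b\) for \(x\ge p\) and \(f(2x)\le -2x+a+b\), which together give the hypothesis of Lemma 5.8. The following Python sketch checks this inequality chain on random integer-valued 1-Lipschitz functions with \(f(0)=0\), taking \(a=n\) and \(b=f(a)\). The sampling scheme is an illustrative assumption, and (5.21) itself is not needed for this part of the argument.

```python
import random


def check_lemma_5_9(n=200, trials=300, seed=1):
    """For random integer 1-Lipschitz f on {0, ..., n} with f(0) = 0, a = n, b = f(a):
    whenever f(p) >= -2p + a + b for some p <= a/2, check that
    f(x) >= -2x + a + b >= f(2x) for every grid point x in [p, a/2].
    Returns how many such p were found."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        f = [0]
        for _step in range(n):
            f.append(f[-1] + rng.choice((-1, 0, 1)))
        a, b = n, f[n]
        for p in range(a // 2 + 1):
            if f[p] >= -2 * p + a + b:
                hits += 1
                for x in range(p, a // 2 + 1):
                    assert f[x] >= -2 * x + a + b >= f[2 * x], (p, x)
    return hits


if __name__ == "__main__":
    print(check_lemma_5_9())
```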

Lemma 5.10

Condition (5.21) implies that \(1-a+2b-2D\ge 0\).

Proof

Note that \(b=f(a)\ge Da\), so, using also \(a\le 1\) and \(D\le 1/2\),

\[1-a+2b-2D\ge 1-a+2Da-2D=(1-a)(1-2D)\ge 0.\]

\(\square \)

Lemma 5.11

If (5.21) holds and \({\mathbf {T}}(f)> \frac{1-2D}{3}-\delta \) for some \(\delta \in (0,a/3)\) then

Proof

First note that \(\delta <a/3\) implies that \(t_0<a\). Since \(f(0)<-2\cdot 0+a+b\) and \(f(a)\ge -2\cdot a + a +b\) there exists a \(t\in (0,a]\) such that \(f(t)=-2t+a+b\). By Lemma 5.9, t is a simple point, so writing \(\alpha = \frac{1-2D}{3(1-D)}\) and using Lemma 5.7, we get

Combining this with the assumption \({\mathbf {T}}(f)> \frac{1-2D}{3} - \delta \) and multiplying through by \(3(1-D)\), we get

which can be rewritten as

By Lemma 5.10, this implies \(t < \frac{a+b}{3} +\delta (1-D)=t_0\). Using this and the 1-Lipschitz property of f, we obtain

Using again that f is 1-Lipschitz, this gives the claim. \(\square \)

Lemma 5.12

Suppose that (5.21) holds, \(0\le p\le \frac{a+b-v}{2} < u \le a\), \(f(u)=v\) and \(f(x)\ge v\) on \([p,\frac{a+b-v}{2}]\). If \(v\ge u/2\) or \(f(p)=-2p+u+v\), then p is simple.

Proof

It is useful to note that by the 1-Lipschitz property of f, the assumptions \(u\le a\), \(f(u)=v\) and \(f(a)=b\) imply that \(u+v\le a+b\), and so \(\frac{u}{2}\le \frac{a+b-v}{2}\).

By Lemma 5.8 it is enough to check (5.23). So let \(z\in [p,a/2)\). We distinguish three cases.

First suppose that \(z\ge \frac{a+b-v}{2}\). Then, using that \(f(\frac{a+b-v}{2})\ge v\), f is 1-Lipschitz, \(2z<a\) and \(f(a)=b\), we get

Therefore (5.23) holds in this case.

Now suppose that \(z\in [\frac{u}{2}, \frac{a+b-v}{2}]\). Since we consider only \(z\in [p,a/2)\) we also have \(z\in [p, \frac{a+b-v}{2}]\). Then \(f(z)\ge v\), \(u\in (z,2z]\) and \(f(u)=v\le f(z)\), so (5.23) holds in this case as well.

Finally, suppose that \(z\in [p,\frac{u}{2})\). Then \(v \le f(z)\le z < u/2\), hence we cannot have \(v \ge u/2\), so we must have \(f(p)=-2p+u+v\). Using that f is 1-Lipschitz and \(z\ge p\), this implies \(f(z)\ge -2z+u+v\). Since f is 1-Lipschitz and \(f(u)=v\) we have \(f(x)\le u+v-x\) on [0, u]. Thus \(f(2z)\le u+v-2z\le f(z)\), which completes the proof. \(\square \)

Lemma 5.13

If (5.21) holds and \({\mathbf {T}}(f)> \frac{1-2D}{3}-\delta \) for some \(\delta \in (0,a/3)\) then

Proof

Let \(v=\frac{a+b}{3}-2\delta (1-D)\). If \(v<0\) then the claim is clear, so we can suppose that \(v\ge 0\). By Lemma 5.11, \(f(t_0)>v\). Thus if the claim is false then there exists a \(u\in (t_0,2t_0-6\delta (1-D)]\) such that \(f(u)=v\).

By (5.21), we have \(b\le \frac{a}{2}\), which implies

Since \(f(0)\le v<f(t_0)\) we also have a largest \(p\in [0,t_0)\) such that \(f(p)=v\). Then \(f(x)\ge v\) on \([p,t_0]\). Since \(\frac{a+b-v}{2}=t_0\) and \(u/2\le t_0-3\delta (1-D)=v\), all the assumptions of Lemma 5.12 hold, so we get that p is simple.

Then by Lemma 5.7 we have \({\mathbf {T}}(f)\le \alpha p + (1-\alpha ) v - b\), where \(\alpha = \frac{1-2D}{3(1-D)}\). Since \(p<t_0=v+3\delta (1-D)\), this implies that \({\mathbf {T}}(f)\le v+(1-2D)\delta -b\). Combining this with the assumption \({\mathbf {T}}(f)> \frac{1-2D}{3}-\delta \) we get

Note that Lemma 5.10 implies that \(b+\frac{1-2D}{3}\ge \frac{a+b}{3}\), so we obtain \( v> \frac{a+b}{3}-2\delta (1-D)\), which is a contradiction. \(\square \)
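The proof of Lemma 5.13 is driven by a few exact algebraic identities in \(t_0=\frac{a+b}{3}+\delta (1-D)\) and \(v=\frac{a+b}{3}-2\delta (1-D)\). The Python sketch below checks them with exact rational arithmetic on randomly sampled parameters satisfying \(D\in [0,1/2)\), \(a\in [1/2,1]\), \(Da\le b\le a/2\), \(0<\delta <a/3\) and \(0\le p<t_0\). The sampling ranges are assumptions chosen to match the constraints used in the proof.

```python
import random
from fractions import Fraction as Fr


def check_lemma_5_13_algebra(trials=1000, seed=2):
    rng = random.Random(seed)
    for _ in range(trials):
        D = Fr(rng.randint(0, 49), 100)                            # 0 <= D < 1/2
        a = Fr(rng.randint(50, 100), 100)                          # 1/2 <= a <= 1
        b = D * a + (a / 2 - D * a) * Fr(rng.randint(0, 100), 100)  # D a <= b <= a/2
        delta = (a / 3) * Fr(rng.randint(1, 99), 100)              # 0 < delta < a/3
        alpha = (1 - 2 * D) / (3 * (1 - D))
        t0 = (a + b) / 3 + delta * (1 - D)
        v = (a + b) / 3 - 2 * delta * (1 - D)
        # identities used to verify the hypotheses of Lemma 5.12
        assert (a + b - v) / 2 == t0
        assert t0 - 3 * delta * (1 - D) == v
        # the bound from Lemma 5.7 for a simple point p < t0 = v + 3 delta (1 - D)
        p = t0 * Fr(rng.randint(0, 99), 100)
        assert alpha * p + (1 - alpha) * v - b < v + (1 - 2 * D) * delta - b
        # lower bound on v forced by T(f) > (1-2D)/3 - delta ...
        lower = b + (1 - 2 * D) / 3 - 2 * delta * (1 - D)
        # ... exceeds v by (1 - a + 2b - 2D)/3, which is >= 0 by Lemma 5.10
        assert lower - v == (1 - a + 2 * b - 2 * D) / 3 >= 0
    return True
```

The last assertion is the contradiction at the end of the proof: the assumption on \({\mathbf {T}}(f)\) would force v to be strictly larger than a quantity that is at least v.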

From the last lemma and the Lipschitz property of f one can easily derive a good lower estimate also on \([2t_0-6\delta (1-D),a]\). However, the next lemma will lead to an even better (and, as we will see later, sharp) estimate on the right part of [0, a].

Lemma 5.14

Suppose that (5.21) holds,

Then

Proof

Since \(f(0)<-2\cdot 0+u+v\) and \(f(a)\ge -2\cdot a + u+v\) there exists a \(p\in (0,a]\) such that \(f(p)=-2p+u+v\). First we prove that p is a simple point. To get this, by Lemma 5.12, it is enough to check that \(p\le \frac{a+b-v}{2}<u\) and \(f(x) \ge v\) on \([p,\frac{a+b-v}{2}]\).

Since \(u\in (a/2,a]\), \(v=f(u)\) and \(b=\min _{[a/2,a]} f\), we have \(b\le v\), so \(\frac{a+b-v}{2}\le a/2<u\).

Note (as in Lemma 5.12) that \(u+v\le a+b\). By Lemma 5.11, we have \(f(t_0)>t_0-3\delta (1-D)\), where \(t_0=\frac{a+b}{3} +\delta (1-D)\). Then

Since f is 1-Lipschitz this implies that \(p< t_0\). Using this, \(u+v\le a+b\) and finally the assumption \(u\ge 2v+6\delta (1-D)\), we get

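On \([0,t_0]\) we have \(f(x)-x> -3\delta (1-D)\) by Lemma 5.11, and on [p, a] we have \(2x+f(x)\ge 2p + f(p) = u+v\) by the 1-Lipschitz property of f. Taking the linear combination of these inequalities with weights 2/3 and 1/3, we get, for \(x\in [p,t_0]\),

\[f(x)=\tfrac{2}{3}\big (f(x)-x\big )+\tfrac{1}{3}\big (2x+f(x)\big )> \frac{u+v}{3}-2\delta (1-D).\]
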
By the assumption \(u \ge 2v + 6\delta (1-D)\), this gives \(f(x)\ge v\) on \([p,t_0]\).

Using that f is 1-Lipschitz and then (5.24), we get that on \([t_0,a]\) we have \(2x+f(x)\ge 2t_0 +f(t_0)>a+b\), which implies that \(f(x)\ge v\) also on \([t_0,\frac{a+b-v}{2}]\).

Therefore, by Lemma 5.12, p is indeed a simple point. Now Lemma 5.7 gives

Recalling that \(\alpha =\frac{1-2D}{3(1-D)}\), it is easy to check that \(D<1\) implies that \(3\alpha -2 <0\). The 1-Lipschitz property of f and \(f(0)=0\) imply that \(0\le p-f(p)=3p-(u+v)\), so \(p\ge \frac{u+v}{3}\). Using these facts, the last displayed equation yields

Combining this with the assumption \({\mathbf {T}}(f)>\frac{1-2D}{3}-\delta \) we get \(u+v> 1-2D-3\delta +3b\). Note that \(u+v\le a+b\) and \(b=f(a)\ge Da\) imply that \(b \ge \frac{D}{D+1}(u+v)\). Combining these facts, we conclude that

which, since \(D<1/2\), implies the claim. \(\square \)
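The last step of the proof eliminates b: from \(u+v>1-2D-3\delta +3b\) and \(b\ge \frac{D}{D+1}(u+v)\) one gets \(\frac{1-2D}{1+D}(u+v)>1-2D-3\delta \), that is, \(u+v>1+D-3\delta \frac{1+D}{1-2D}\), which is the line that reappears in the proof of Proposition 5.15. The Python sketch below checks this implication, and the weighted-combination step giving \(f(x)\ge v\) on \([p,t_0]\), with exact rationals on randomly sampled parameters. Here s stands for \(u+v\); the sampling ranges are illustrative assumptions.

```python
import random
from fractions import Fraction as Fr


def threshold(D, delta):
    """Lower bound for u + v obtained at the end of the proof of Lemma 5.14."""
    return (1 + D) - 3 * delta * (1 + D) / (1 - 2 * D)


def check_lemma_5_14_algebra(trials=2000, seed=3):
    rng = random.Random(seed)
    used = 0
    for _ in range(trials):
        D = Fr(rng.randint(0, 49), 100)          # 0 <= D < 1/2
        delta = Fr(rng.randint(1, 50), 1000)
        s = Fr(rng.randint(0, 300), 100)         # s plays the role of u + v
        b = D * s / (1 + D) + Fr(rng.randint(0, 100), 1000)  # b >= D s / (1 + D)
        if s > 1 - 2 * D - 3 * delta + 3 * b:
            used += 1
            assert s > threshold(D, delta)
        # weighted combination: (u+v)/3 - 2 delta (1-D) >= v iff u >= 2v + 6 delta (1-D)
        u, v = Fr(rng.randint(0, 100), 100), Fr(rng.randint(0, 100), 100)
        if u >= 2 * v + 6 * delta * (1 - D):
            assert (u + v) / 3 - 2 * delta * (1 - D) >= v
    return used
```

The division by \(1-2D\) in `threshold` is where \(D<1/2\) is needed.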

The following proposition provides a global quantitative estimate for functions \(f:[0,1]\rightarrow {\mathbb {R}}\) for which \({\mathbf {T}}(f)\) is close to the maximum possible value.

Proposition 5.15

Fix \(D\in [0,1/3]\), \(\delta \in (0,1/21]\) and let \(f:[0,1]\rightarrow {\mathbb {R}}\) be a 1-Lipschitz function such that \(f(0)=0\), \(f(x)\ge Dx\) on [0, 1] and \({\mathbf {T}}(f) > \frac{1-2D}{3}-\delta \). Let

Then

Proof

Let \(b=\min _{[1/2,1]} f\) and choose \(a\in [1/2,1]\) such that \(f(a)=b\). Then it is easy to see that \({\mathbf {T}}(f)={\mathbf {T}}(f|[0,a])\). So combining the assumption \({\mathbf {T}}(f) > \frac{1-2D}{3}-\delta \) and Proposition 5.2 for f|[0, a] and \(C=1\), and then using \(b=f(a)\ge Da\), we get

This implies

and so

By (5.29),

Since f is 1-Lipschitz this implies that

which (using again that f is 1-Lipschitz) yields the upper estimate of (5.28) on [0, 1].

By definition we have \(\min _{[1/2,a]}f=f(a)=b\), but in order to apply our lemmas to f|[0, a] we have to show \(\min _{[a/2,a]}f=f(a)\). Suppose then that \(\min _{[a/2,a]}f<f(a)\). Then there exists an \(a'\in [a/2,1/2)\) such that \(\min _{[a/2,a]}f=f(a')\). Using Proposition 5.2 applied to \(f|[0,a']\) and \(C=1\) we get

which is impossible, since we assumed \(D\le 1/3\) and \(\delta \le 1/21\).

Therefore (5.21) holds for f|[0, a]. Note that (5.31) implies that \(t_0> t_1\), where \(t_0=\frac{a+b}{3}+\delta (1-D)\) (as in Lemma 5.13).

By (5.30), \(D\le 1/3\) and \(\delta \le 1/21\), we get \(a>4/7\), so \(\delta <a/3\) holds. Then applying Lemmas 5.11 and 5.13 to f|[0, a] and using that f is 1-Lipschitz we get the lower estimate of (5.26) and (5.27). The upper estimate of (5.26) is clear.

It remains to prove the lower estimate of (5.28). Lemma 5.14 (applied to f|[0, a]) shows that every point of the graph of f|(a/2, a] must lie above at least one of the lines \(y=1+D-x-3\delta \frac{1+D}{1-2D}\) and \(y=\frac{x}{2}-3\delta (1-D)\). These two lines intersect at \((2t_1,t_1-3\delta (1-D))\). On the other hand, a calculation using \(\delta \le 1/21\), \(D\le 1/3\), \(a\le 1\) and (5.30) shows that \(a/2\le 1/2< 2t_1 \le 1-\frac{3\delta }{1-2D}< a\). We deduce that \(f(2t_1)> t_1-3\delta (1-D)\). Using the 1-Lipschitz property of f, this gives the lower estimate of (5.28). \(\square \)
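Part of the "calculation" in the last paragraph can be made explicit. Solving for the intersection of the two lines gives \(2t_1=\frac{2}{3}\big (1+D-3\delta \frac{1+D}{1-2D}+3\delta (1-D)\big )\), and then \(1-\frac{3\delta }{1-2D}-2t_1=\frac{1-2D}{3}-\delta (3-2D)\), which is nonnegative exactly when \(\delta \le \frac{1-2D}{3(3-2D)}\); for \(D\le 1/3\) this bound is at least 1/21. The Python sketch below checks these formulas and the inequality \(1/2<2t_1\le 1-\frac{3\delta }{1-2D}\) on a grid of rational parameters with \(D\in [0,1/3]\) and \(\delta \in (0,1/21]\). The inequality \(1-\frac{3\delta }{1-2D}<a\) depends on (5.30) and is not checked here.

```python
from fractions import Fraction as Fr


def intersection(D, delta):
    """Intersection of y = 1 + D - x - 3 delta (1+D)/(1-2D) and y = x/2 - 3 delta (1-D)."""
    c = 3 * delta * (1 + D) / (1 - 2 * D)
    x = 2 * (1 + D - c + 3 * delta * (1 - D)) / 3
    return x, x / 2 - 3 * delta * (1 - D)


def check(D, delta):
    c = 3 * delta * (1 + D) / (1 - 2 * D)
    x, y = intersection(D, delta)
    t1 = x / 2
    # the point lies on both lines and has the form (2 t1, t1 - 3 delta (1 - D))
    assert 1 + D - x - c == y == x / 2 - 3 * delta * (1 - D) == t1 - 3 * delta * (1 - D)
    right = 1 - 3 * delta / (1 - 2 * D)
    assert right - 2 * t1 == (1 - 2 * D) / 3 - delta * (3 - 2 * D)
    assert Fr(1, 2) < 2 * t1 <= right
    return True


# D = k/30 covers [0, 1/3]; delta = j/210 covers (0, 1/21]
assert all(check(Fr(k, 30), Fr(j, 210)) for k in range(11) for j in range(1, 11))
```

At \(D=1/3\) and \(\delta =1/21\) the inequality \(2t_1\le 1-\frac{3\delta }{1-2D}\) holds with equality (both sides equal 4/7), which shows that the assumption \(\delta \le 1/21\) is exactly what this step needs.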

Remark 5.16