Abstract

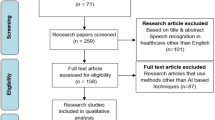

Spoken language change detection (LCD) refers to identifying the language transitions in a code-switched utterance. Similarly, identifying the speaker transitions in a multispeaker utterance is known as speaker change detection (SCD). Since the two tasks are similar, the architecture/framework developed for the SCD task may be suitable for the LCD task. Hence, the aim of the present work is to develop LCD systems inspired by SCD. Initially, both LCD and SCD are performed by humans. The study suggests that, compared to SCD, humans require (a) a larger duration around the change point and (b) language-specific prior exposure to perform LCD. The larger duration requirement is incorporated by increasing the analysis window length of the unsupervised distance-based approach. This leads to a relative performance improvement of \(29.1\%\) and \(2.4\%\), and a priori language knowledge provides a relative improvement of \(31.63\%\) and \(4.01\%\), on the synthetic and practical code-switched datasets, respectively. The performance difference between the practical and synthetic datasets is mostly due to differences in the distribution of the monolingual segment duration.


1 Introduction

Spoken language diarization (LD) is a task to automatically segment and label the monolingual segments in a given multilingual speech signal. The existing works towards LD are very few [30]. The majority of them use phonotactic (i.e. the distribution of sound units) based approaches [5, 17, 32]. The development of LD using a phonotactic-based approach requires transcribed speech utterances. The same is difficult to obtain as most of the languages present in the code-switched multilingual utterances are resource-scarce in nature [30, 32]. Even though there exist some transfer learning approaches that adapt the phonotactic models of the high resource language to obtain the models for the low resource language, they may end up with performance degradation if both the languages are not from the same language group [30]. Further, LD is effortless for humans, especially for known languages, and challenging for machines. Hence there is a need for exploring alternative approaches for LD.

Speaker diarization (SD) is a task to automatically segment and label the mono-speaker segments for a given multispeaker utterance, which is well explored in the literature. Though there exist differences in the information that needs to be captured to perform LD and SD tasks, there exist many similarities like the features approximating the vocal tract resonances that have been successfully used for the modeling of both speaker and language-specific phonemes [4, 14, 15]. Furthermore, most of the approaches used for spoken language identification (LID) are inspired by the approaches used for the speaker identification/verification (SID/SV) task [25, 31]. In addition to that most of the successful LID systems that are borrowed from SID/SV literature do not require transcribed speech data [14, 31]. Alternatively, LID systems developed using the phonotactic approach require transcribed speech data. This motivates a close association study between the LD and SD tasks and may be exploited to come up with approaches for LD.

The SD field has evolved mainly in two ways: (1) change point detection followed by clustering and boundary refinement, and (2) fixed duration segmentation followed by i-vector/ embedding vector extraction, clustering, and boundary refinement [21, 23, 33]. [3, 7, 13, 23] reported that initial change point detection improved overall SD performance. Thus this study focuses on the development of spoken language change detection (LCD) through a comparative analysis between LCD and speaker change detection (SCD). The available SCD approaches can be broadly classified into two groups: (1) distance-based unsupervised approach and (2) model-based supervised approach [21, 23]. The distance-based approach applies hypothesis testing (either coming from a unique speaker or not) for predicting the speaker change to the speaker’s specific features extracted from the speech signal with sliding consecutive windows [21, 23]. Following this approach, many feature extraction techniques like excitation source [9, 27], fundamental frequency contour [13], etc., and distance metrics like Kullback-Leibler (KL) divergence [29], Bayesian information criteria (BIC) [6], KL2 [29], generalized likelihood ratio (GLR) [11] and information bottleneck (IB) [7] are proposed in the literature. Generally, the performance of the distance-based unsupervised approach degrades with variation in environment and background noise (it may predict false changes), hence to resolve the issue supervised model-based approaches are proposed in the literature [21, 23]. In the early days, the proposed approaches model individual speakers using the Gaussian mixture model and universal background model (GMM-UBM) [2, 21], hidden Markov model (HMM) [19], etc, but nowadays, using the deep learning framework the approach predicts the speaker change by discriminating between the speaker change segments (neighborhood of the speaker change point) with no change segments [21, 23]. 
However, the model-based approach smooths the output evidence and may lead to miss detection of the change points [21]. In addition to that training of the supervised model requires labeled speech data from a similar environment/recording condition, speaking style, language, etc., making the system development complicated. Therefore the distance-based unsupervised approaches are more popular and widely used for SCD tasks [7, 21, 23].

Even though the available SCD frameworks look simple to adopt, there are challenges in doing so. Figure 1a and b show the time-domain speech signals of utterances having a speaker change and a language change, respectively. The speaker/language change points are marked manually by listening to the utterances and observing their time-domain representations. From the time-domain signal alone, it is very difficult to locate either the speaker or the language change point. Figure 1c and d show the spectrograms of both utterances. The spectrograms show that the high-energy spectral structures vary significantly around the speaker change, whereas they remain intact around the language change. When the speaker changes, the speech production system changes, hence there may be a variation in the spectral structures. However, the structure of the high-energy frequencies remains intact during a language change, as a single speaker is speaking both languages.

It is interesting to note that humans discriminate between spoken languages without knowing the detailed lexical rules and phonemic distribution of the respective languages. Of course, humans need to have prior exposure to the languages [14]. Humans may exploit the long-term phoneme dynamics to discriminate between languages. Therefore, the language change may be detected by capturing the long-term language-specific spectral-temporal dynamics. This may represent valid phoneme sequences and their combinations to form syllables and subwords of a language. Based on the need to exploit the long-term spectro-temporal evidence, it can be hypothesized that the LCD by human/machine may require more neighborhood duration around the change point than the SCD. In addition, the LCD may also benefit from the prior exposure of respective languages. A human subjective study that focuses on language/speaker change detection is set up for validating the same. Further, for the automatic detection of language change, initial studies are performed by considering the unsupervised distance-based SCD framework. After that, based on the experimental results, the framework will be appropriately tuned up to improve the performance of the LCD task.

The main contributions of this work are summarized as follows:

1. Analysis of spectro-temporal representation around speaker and language changes indicates that, unlike speaker change detection, identifying language changes requires a larger duration around the change point and prior knowledge of the involved languages.
2. The same hypothesis is investigated by the human subjective study.
3. We generate a synthetic dataset to closely examine the relationship between Language Change Detection (LCD) and Speaker Change Detection (SCD) using the gold standard Indian Institute of Technology Madras text-to-speech (IITM-TTS) dataset.
4. Initial baselines for LCD are established using SCD frameworks, and their performances are evaluated.
5. Finally, these frameworks are further refined to improve the performance of LCD.

2 Database Setup

This section provides a brief description of the databases used in this study. For performing the LCD/SCD task with human listeners, we selected 32 and 15 utterances for the language and speaker change studies, respectively. All the utterances have only one language/speaker change point and have approximately \(6-8\) syllables on either side of the change point. For the language change study, the 32 utterances were selected from publicly available sources (mostly from YouTube), whereas the 15 utterances for the speaker change study were chosen from the IITG-MV phase 3 and DIHARD datasets [12, 26]. The 32 utterances used for the language change study come from 10 language pairs, with 4, 4, 4, 4, 4, 2, 2, 4, 2, and 2 utterances from (1) Hindi-English (HIE), (2) Bengali-English (BEE), (3) Telugu-English (TEE), (4) Tamil-English (TAE), (5) Bengali-Assamese (BEA), (6) Bengali-Bengali (BEB), (7) Assamese-Assamese (ASA), (8) Tamil-Malayalam (TAM), (9) Tamil-Tamil (TAT), and (10) Malayalam-Malayalam (MAM), respectively. It is difficult to obtain utterances of the language pairs Bengali-Assamese and Tamil-Malayalam spoken by a single speaker. Hence, for these language pairs, utterances with and without a language change are considered along with a speaker change. The selected utterances for both LCD and SCD tasks, along with their change point annotations, are available online (see Footnote 1).

Initially, the studies have been performed with synthetically generated code-switch and multi-speaker utterances. For generating the utterances, we have used the IITM-TTS corpus [1]. The IITM-TTS corpus consists of speech data recordings from native speakers of 13 Indian languages. For each native language, two speakers (a male and a female) recorded their utterances in their native language and English. In this study for synthesizing the code-switch utterances, a female speaker speaking her native language Hindi, and her second language English is considered. For each language, the first 5 hours of data are used for training purposes. The rest of the monolingual utterances are stitched randomly for generating code-switched utterances. Altogether, 4000 utterances are generated having one to five language change points. The average monolingual segment duration of the generated code-switch utterances for Hindi and English languages are approximately 6.5 and 5.2 secs, respectively. The generated dataset is termed as TTS female language change (TTSF-LC) corpus. Similarly, for generating speaker change utterances by keeping the language identical, we have used English speech utterances from native Hindi and Assamese female speakers. The average mono-speaker segment duration of the generated utterances are 5.19 and 4.86 secs respectively. The generated dataset is termed as TTS female speaker change corpus (TTSF-SC). The detailed algorithm of the generated data is given in Appendix 2.

Finally, for generalizing the obtained observations, the experiments are performed on the standard LCD corpus. Microsoft code-switched challenge task-B (MSCSTB) dataset is used. The dataset has development and training partitions that consist of code-switched utterances and language tags (each 200 msec) from three language pairs: Gujarati-English (GUE), Tamil-English (TAE), and Telugu-English (TEE). The approximate duration of each language in the training and development set is 16 and 2 hours respectively. The details about the database can be found at [10].

3 Human Subjective Study for Language and Speaker Change Detection

An experimental procedure has been set up, where each human subject is exposed to a pool of utterances that may or may not have a language/speaker change. The human subjects are asked to mark, if there exists a language/speaker change or not. The utterances are classified into five groups. Each group is represented with approximate duration considered in terms of the number of energy frames (NEF) taken around the true/false change point. The true change point refers to the actual change points of the selected utterances. The selected utterances are split around the change point to generate the mono-language/speaker utterance. The false change point represents the centered energy frame’s start location of the given mono-language/speaker utterance. The energy frame is decided by taking \(6\%\) of the average short time frame energy (computed with a frame size of 20 msec and a frameshift of 10 msec) of a given utterance as a threshold [24]. The 30 mono-speaker utterances are generated by splitting the selected 15 utterances around the true change point. Out of 30, with respect to duration, the largest 15 has been chosen for this study. The same procedure has been followed to generate the mono-lingual utterances using the selected code-switched utterances belonging to the HIE, BEE, TAE, and TEE language pairs. However, there is an exception for the utterances belonging to BEA and TAM, as the utterances have a speaker change along with the language change. Hence for a fair comparison, the mono-lingual utterances for these cases are synthesized, such that they also have a speaker change, i.e. BEB, ASA, MAM, and TAT respectively. After that, each utterance S(n) is masked by considering x number of energy frames (NEF-x) from the left and right of the true/false change point. According to the value of x, the masked utterances are grouped into five different groups, termed NEF-10, NEF-20, NEF-30, NEF-50, and NEF-75. 
To avoid abrupt masking, the utterance is multiplied by a Gaussian mask G(n) with appropriate parameters to obtain the masked utterance \(S_{m}(n)=S(n) \times G(n)\). The masked signal is passed through an energy-based endpoint detection algorithm to obtain the final masked utterance [24]. The detailed procedure of the masked utterance generation is given in Appendix 1, and the generated utterances are available online (see Footnote 2).
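The energy-frame selection and Gaussian masking described above can be sketched as follows. This is a minimal illustration, not the procedure of Appendix 1: the function names, the use of the sum of squared samples as frame energy, and the mask width `sigma` are assumptions made here, since the text only states that the mask parameters are chosen appropriately.

```python
import math

def frame_energies(signal, sr, frame_ms=20, shift_ms=10):
    """Short-time energy (sum of squared samples) per frame."""
    frame_len = int(sr * frame_ms / 1000)
    shift = int(sr * shift_ms / 1000)
    last_start = max(len(signal) - frame_len, 0)
    return [sum(s * s for s in signal[i:i + frame_len])
            for i in range(0, last_start + 1, shift)]

def energy_frame_indices(energies, ratio=0.06):
    """Frames whose energy exceeds `ratio` times the average frame energy."""
    threshold = ratio * sum(energies) / len(energies)
    return [i for i, e in enumerate(energies) if e > threshold]

def gaussian_mask(length, center, sigma):
    """Gaussian window G(n) centred on the true/false change point.

    `sigma` (in samples) is a hypothetical parameter chosen for illustration.
    """
    return [math.exp(-0.5 * ((n - center) / sigma) ** 2) for n in range(length)]

def mask_utterance(signal, center, sigma):
    """Masked utterance S_m(n) = S(n) * G(n)."""
    return [s * g for s, g in zip(signal, gaussian_mask(len(signal), center, sigma))]
```

In such a sketch, `sigma` would be tied to the span covered by the x energy frames kept on either side of the change point, so that NEF-10 yields a narrower mask than NEF-75.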

The listening experiment is conducted with 18 subjects, of whom 13 are male and 5 are female. The selected subjects are from the \(20-30\) years age group. The subjects have no prior exposure to the speech samples of the speakers used in this study. All the subjects are comfortable with English, whereas their comfort with the other languages varies. To quantify this, each subject is asked to provide a language comfortability score (LCS) from zero to three for each pair of languages.

The listening study is conducted with 390 utterances (i.e., 240 for LCD and 150 for SCD). The LCD and SCD tasks are conducted in two separate sessions, and the subjects are well rested to avoid listener fatigue. A graphical user interface (GUI) has been designed to perform the listening study. For a specific LCD/SCD study, all the masked utterances are presented to the listener in random order, irrespective of their segment duration. If a listener is unable to respond after a single playback, s/he is allowed to play the utterance multiple times. Our objective here is to observe how correctly humans recognize the speaker and language change by listening to the utterances from the five different groups. Hence, the kinds of responses recorded in [28] for analyzing the talker change detection ability of humans are used here. Three kinds of responses are recorded: (1) whether a language/speaker change is detected or not, (2) the number of times replayed (NR), and (3) the response time (RT). RT is the time taken by a subject to provide his/her response after listening to the full utterance. It is computed by subtracting the respective utterance duration (UD) from the total duration (TD), i.e. \(RT=TD-UD\), where TD is the time from pressing the play button to pressing the yes/no button.

For a given subject, there are three kinds of performance measures computed in this study: (1) average detection error rate (DER) (2) average number of times replayed (NR), and (3) average response time (RT). The DER is defined in Eq. 1, where N is the total number of trials, FA is the number of false language/speaker change utterances, marked as true by the subject and FR is the number of true language/speaker change utterances, marked as false by the subject, respectively. The DER measure defines the inability of the subject to detect language/speaker change. The NR provides an estimation of the average number of replays required for the subject to mark their response comfortably. Similarly, the RT provides an estimation of the average duration required for the subject to perceive the language/speaker change, after listening to the respective utterances. A higher value of the performance measures indicates the inability of the human subject to perceive the language/speaker change and vice versa.
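As an illustration of the measures above, the sketch below computes the DER and RT for one subject. It assumes that Eq. 1 has the form DER = (FA + FR) / N, expressed as a fraction, which matches the definitions of N, FA, and FR given in the text; the function names are hypothetical.

```python
def detection_error_rate(truth, response):
    """DER = (FA + FR) / N over paired boolean lists.

    truth[i]    -- True if utterance i really contains a change
    response[i] -- True if the subject marked a change
    Assumed form of Eq. 1; returned as a fraction in [0, 1].
    """
    if not truth or len(truth) != len(response):
        raise ValueError("truth and response must be non-empty and equal in length")
    fa = sum(1 for t, r in zip(truth, response) if not t and r)  # false acceptances
    fr = sum(1 for t, r in zip(truth, response) if t and not r)  # false rejections
    return (fa + fr) / len(truth)

def response_time(total_duration, utterance_duration):
    """RT = TD - UD, in the same time unit as the inputs."""
    return total_duration - utterance_duration
```

For example, a subject who wrongly accepts one of two no-change utterances and wrongly rejects one of two change utterances obtains a DER of 0.5.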

After performing both the LCD and SCD experiments, the subject-specific DER, NR, and RT are computed with respect to NEF. The distributions of the obtained DER with respect to the NEF are depicted in Fig. 2a. It can be seen that the DER values are smaller for the SCD than for the LCD, regardless of the NEF. This suggests that human subjects are more comfortable detecting a change of speaker than a change of language. Furthermore, as the NEF increases from 10 to 75, the DER decreases for both SCD and LCD. The difference between the DER distributions of the LCD and SCD decreases with an increase in the NEF. This suggests that the human subjects' comfort in detecting language change increases and becomes on par with that for speaker change as the NEF increases. To further validate this, a statistical test called analysis of variance (ANOVA) has been performed between the DER distributions (after removing the outliers) of LCD and SCD. The obtained F-statistics values are depicted in Fig. 2b. A higher F-statistics value suggests better discrimination between the two distributions, and vice versa. From the figure, it can be observed that the F-statistics values reduce with an increase in NEF. This supports the claim that humans' language discrimination ability improves and comes closer to their speaker discrimination ability with an increase in NEF. The median values of the recorded NR and RT are depicted in Fig. 3. It can be observed from the figure that, like DER, the median values of NR and RT reduce with an increase in NEF. The median values of NR and RT are also smaller for SCD than for LCD. This leads to the conclusion that human subjects require a larger duration around the change point to comfortably detect a language change than a speaker change.
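The F-statistic used in this comparison is the standard one-way ANOVA ratio of between-group to within-group variance. A minimal sketch is given below; the function name is hypothetical, and the outlier removal mentioned in the text is not included.

```python
def anova_f(*groups):
    """One-way ANOVA F-statistic for two or more groups of observations."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: spread of observations around their group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))
```

With two groups, one would pass the per-subject DER values of the LCD and SCD studies for a given NEF. Groups whose means lie far apart relative to their internal spread give a large F, which is why a shrinking F with increasing NEF indicates that the two DER distributions are converging.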

For observing the effect of language comfortability on detecting language change, the responses of the human subjects are considered for the groups NEF-50 and NEF-75, which have a median DER of less than 0.25 (assuming sufficient duration on either side). The responses are segregated into four groups according to the LCS: 0: very low, 1: lower medium, 2: medium, and 3: excellent. The obtained DER distribution with respect to LCS is depicted in Fig. 4. From the figure, it can be observed that the DER values decrease with an increase in LCS. This leads to the conclusion that a priori knowledge of languages helps people to better discriminate between languages.

4 LCD and SCD Using Unsupervised Distance-Based Approach

The objective of this section is to perform LCD tasks inspired by the existing unsupervised distance-based SCD framework. In general, the SCD task is performed by computing and thresholding the distance contour obtained between the features of the sliding analysis window with a fixed length N. Initially, this study uses the Mel-frequency cepstral coefficients (MFCC), linear prediction cepstral coefficients (LPCC), perceptual linear prediction (PLP), and shifted delta coefficients (SDC) [14] as feature representations to compare their language discrimination ability. Then taking evidence from the discrimination study, LCD is performed. The basic block diagram of the change detection approach is depicted in Fig. 5.

First, feature vectors are extracted from the speech signal, and then energy-based speech activity detection (SAD) is performed to obtain the energy frame indices. The energy frame indices are stored for future reference, and the feature vectors corresponding to the energy frames are used for further processing. Two Gaussian distributions (\(g_{a}\) and \(g_{b}\)) are estimated using the feature vectors from two consecutive windows, each having a fixed length of N frames. The divergence distance contour is obtained by scanning the entire test utterance, sliding the analysis windows by one frame at a time, as mentioned in Eq. 2.
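The sliding-window divergence computation can be sketched as below. Since the exact form of Eq. 2 is not reproduced here, the sketch assumes a symmetric KL divergence between diagonal-covariance Gaussians; the function names and the variance floor are also assumptions made for illustration.

```python
import math

def fit_diag_gaussian(frames, floor=1e-6):
    """Mean and (floored) per-dimension variance of a list of feature vectors."""
    n, dim = len(frames), len(frames[0])
    mean = [sum(f[d] for f in frames) / n for d in range(dim)]
    var = [max(sum((f[d] - mean[d]) ** 2 for f in frames) / n, floor)
           for d in range(dim)]
    return mean, var

def kl_diag(ga, gb):
    """KL(ga || gb) for diagonal-covariance Gaussians given as (mean, var)."""
    (ma, va), (mb, vb) = ga, gb
    return 0.5 * sum(math.log(q / p) + (p + (m1 - m2) ** 2) / q - 1.0
                     for m1, p, m2, q in zip(ma, va, mb, vb))

def divergence_contour(features, N):
    """Symmetric KL between the N frames before and after each candidate frame k."""
    contour = []
    for k in range(N, len(features) - N + 1):
        ga = fit_diag_gaussian(features[k - N:k])   # left window  -> g_a
        gb = fit_diag_gaussian(features[k:k + N])   # right window -> g_b
        contour.append(kl_diag(ga, gb) + kl_diag(gb, ga))
    return contour
```

Entry i of the returned contour corresponds to energy frame i + N, so the stored energy frame indices are still needed to map a peak back to a sample location.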

The divergence distance contour is then smoothed with a Hamming window of length \(h_{l}\). The smoothed contour is then used for peak detection, with a peak-picking algorithm having a minimum peak distance parameter called \(\gamma \). A higher value of \(\gamma \) reduces the number of detected peaks, and vice versa. For reducing the number of false change points, the approach of deriving a threshold contour proposed in [16] is used here. The computation procedure of the threshold contour is mentioned in Eq. 3.

where \(\alpha \) is the threshold amplification factor and M is the number of samples in the divergence distance contour. Finally, the change frame is obtained by comparing the strength of the detected peaks with the threshold contour. The change point’s actual frame index and sample location are obtained by using the stored energy frame locations.
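The smoothing, peak-picking, and thresholding steps can be sketched as follows. The threshold contour of Eq. 3 is not reconstructed here; the sketch takes it as an input array supplied by the caller. The greedy minimum-distance peak selection and all function names are assumptions made for illustration.

```python
import math

def hamming(length):
    """Hamming window coefficients."""
    if length == 1:
        return [1.0]
    return [0.54 - 0.46 * math.cos(2 * math.pi * n / (length - 1))
            for n in range(length)]

def smooth(contour, h_l):
    """Convolve the contour with a normalised Hamming window (zero-padded edges)."""
    w = hamming(h_l)
    total, half = sum(w), h_l // 2
    out = []
    for i in range(len(contour)):
        acc = 0.0
        for j, wj in enumerate(w):
            idx = i + j - half
            if 0 <= idx < len(contour):
                acc += wj * contour[idx]
        out.append(acc / total)
    return out

def pick_peaks(contour, min_distance):
    """Local maxima, keeping the strongest and dropping any within min_distance."""
    cands = [i for i in range(1, len(contour) - 1)
             if contour[i - 1] < contour[i] >= contour[i + 1]]
    cands.sort(key=lambda i: contour[i], reverse=True)
    kept = []
    for i in cands:
        if all(abs(i - j) >= min_distance for j in kept):
            kept.append(i)
    return sorted(kept)

def detect_changes(contour, threshold, h_l, gamma):
    """Peaks of the smoothed contour whose strength exceeds the threshold contour."""
    s = smooth(contour, h_l)
    return [p for p in pick_peaks(s, gamma) if s[p] > threshold[p]]
```

Here `gamma` plays the role of the minimum peak distance \(\gamma \), and `threshold` stands in for the output of Eq. 3 scaled by \(\alpha \).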

We then used the TTSF-SC dataset for designing and tuning the hyperparameters of the SCD system. Out of 4000 test utterances, the first 100 utterances are used to tune the hyperparameters. It has been observed that the performance is optimal with \(\alpha =1\), \(\gamma \) equal to 0.9 times the analysis window length, and an analysis window length of 150 frames. Keeping the methodology and hyperparameters identical, the TTSF-LC and MSCSTB datasets are used to perform the LCD task. For evaluating the performance, the commonly used performance measures for event detection tasks, i.e. identification rate (IDR), false acceptance rate (FAR), miss rate (MR), and mean deviation (\(D_{m}\)), are used here [20, 22]. The measures are independent of the tolerance window and are calculated by observing the event activity in each region of interest (ROI) segment. The ROI segments are the durations between the mid-locations of consecutive ground-truth change points. The obtained performances of both tasks are tabulated in Table 1.
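A sketch of the ROI-based evaluation is given below. The text does not spell out the measures, so the definitions follow a convention common in event detection evaluation and should be read as assumptions: an ROI with exactly one detection counts towards IDR, an ROI with none towards MR, an ROI with more than one towards FAR, and \(D_{m}\) is the mean absolute deviation of the identified detections from the ground truth. Extending the first and last ROI to the utterance boundaries is likewise an assumption.

```python
def roi_metrics(true_points, detected_points, utterance_end):
    """IDR, FAR, MR (as fractions) and mean deviation D_m over ROI segments."""
    true_points = sorted(true_points)
    mids = [(a + b) / 2 for a, b in zip(true_points, true_points[1:])]
    bounds = [0.0] + mids + [float(utterance_end)]
    identified = missed = false_alarm = 0
    deviations = []
    for t, lo, hi in zip(true_points, bounds, bounds[1:]):
        hits = [d for d in detected_points if lo <= d < hi]
        if not hits:
            missed += 1                      # no detection in this ROI
        elif len(hits) == 1:
            identified += 1                  # exactly one detection
            deviations.append(abs(hits[0] - t))
        else:
            false_alarm += 1                 # more than one detection
    n = len(true_points)
    return {
        "IDR": identified / n,
        "FAR": false_alarm / n,
        "MR": missed / n,
        "D_m": sum(deviations) / len(deviations) if deviations else None,
    }
```

Because each ROI is scored by how many detections fall inside it rather than by their distance from the ground truth, no tolerance window enters the computation.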

From the results, it can be observed that the performance of the SCD in terms of IDR is \(84.1\%\), whereas the performance of the LCD in terms of IDR is \(51.2\%\). The reduction in performance may be due to two reasons, (1) the used MFCC features may fail to capture language-specific discriminative evidence, and (2) the hyperparameters, mostly the analysis window length, are tuned for SCD and may not be appropriate for LCD. Hence to understand the issue a study is carried out by varying the features and analysis window length around the change point. The most used features in literature for language identification (LID) tasks, i.e. MFCC, LPCC, SDC, and PLP are considered here. The objective here is to observe the language discriminative ability of the features by considering a fixed number of energy frames (NEF), x around the change point, and compare it with the speaker discrimination ability of the MFCC feature. This study will help us to reason out the performance degradation of LCD as compared to SCD. Further, the observation will also help us to optimally decide the feature and analysis window length for performing LCD.

For performing the study, the TTSF-SC and TTSF-LC datasets are considered. Out of 4000 test utterances, the utterances having only one change point are selected. The numbers of utterances selected for speaker change and language change are 799 and 836, respectively. To assess the discrimination ability, the idea here is to observe the distributional difference between the true and false distances. The true distance is the KL divergence distance between the x feature vectors on either side of the ground-truth change point. Similarly, the false distance is computed by placing the change point randomly anywhere in the mono-language/speaker segments. The procedure of computing the true and false distances is also depicted in Fig. 6. For observing the effect of duration on the discrimination, the value of x is set to 10, 20, 30, 50, 75, 100, 150, 200, 250, and 300. For a given x value, the ANOVA test is conducted between the obtained true and false distances. The obtained F-statistics values of the ANOVA test are depicted in Fig. 7.

From the figure, it can be observed that the F-statistics values increase with an increase in NEF, saturate after a certain number of energy frames, and start decreasing after that. A similar trend was observed in the LCD and SCD study by humans. However, in the case of humans, the performance does not degrade with an increase in NEF. The degradation observed here may be due to the inability of the Gaussian (with its assumption of statistical independence) to model the speaker and language spectral dynamics, which leads to an increase of the class-specific variance in the distance distribution. Using the MFCC feature, the F-statistics values of the SCD are higher than those of the LCD irrespective of the NEF. Further, it can also be observed that the discrimination ability (in terms of F-statistics) of the LCD follows that of the SCD with an increase in the NEF. Furthermore, the highest F-statistics values for the speaker and language change studies are obtained at NEF 150 and 200, respectively.

In addition to this, for the language change study, the MFCC features provide the best F-statistics values, followed by PLP, LPCC, and SDC. For clear observation, the distance distributions of the MFCC feature for SCD and of the MFCC and PLP features for LCD, with NEF of 50, 150, 200, and 250, are depicted through box plots in Fig. 8. From the box plots, it can also be noticed that the speaker and language discrimination saturates at NEF 150 and 200, respectively. Although the box plots at larger NEF appear to show better discrimination, the increase in the within-class variance leads to a decrease of the F-statistics values. Furthermore, the discrimination ability of the MFCC is better than that of PLP, as the separation between the true and false distance distributions of the MFCC feature is larger than that of the PLP feature for LCD at NEF equal to 200. This motivates us to consider the MFCC feature with an analysis window length of 200 for performing LCD on the TTSF-LC dataset. The performance of the LCD task with the modified analysis window length is tabulated in Table 1.

The table shows that the performance in terms of IDR, FAR, and MR follows the observations noticed with respect to the F-statistics. The performance obtained for the TTSF-LC dataset with the MFCC feature (considering an analysis window length of 200) is \(66.1\%\) in terms of IDR, a relative improvement of \(29.1\%\), followed by an IDR of \(64.06\%\) using the PLP feature. Similar observations are made on the MSCSTB dataset, where the IDR improves relatively by \(2.72\%\), \(2.85\%\), and \(1.63\%\) with analysis window lengths of 160, 180, and 170 for the GUE, TAE, and TEE language pairs, respectively. The analysis window lengths 160, 180, and 170 are chosen greedily by evaluating the performance for analysis window lengths from 100 to 250 with a shift of 10 on the first 100 test trials. Hence, this supports the hypothesis that LCD requires information over a relatively longer duration than SCD.
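As a check on the reported figure, the relative improvement on TTSF-LC follows from the two IDR values quoted in this section (\(51.2\%\) with the SCD-tuned window of 150 frames and \(66.1\%\) with the window of 200 frames):

```latex
\[
\text{relative improvement}
  = \frac{\mathrm{IDR}_{N=200} - \mathrm{IDR}_{N=150}}{\mathrm{IDR}_{N=150}} \times 100
  = \frac{66.1 - 51.2}{51.2} \times 100
  \approx 29.1\%
\]
```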

5 Language Change Detection by Model-Based Approach

The SCD and LCD studies by humans suggest that prior exposure to the languages makes humans more efficient in detecting language change. This motivates extracting statistical/embedding vectors from a trained machine learning (ML)/deep learning (DL) framework and using them to perform the change detection task. The detailed procedure is explained in the following subsections.

5.1 Model-Based Change Detection Framework

The block diagram of the model-based change detection framework is depicted in Fig. 9.

5.1.1 Training of Statistical/Embedding Vector Extractor and PLDA Classifier

From the training data, initially, MFCC\(+\Delta +\Delta \Delta \) features are computed, and then SAD is used to select the feature vectors. The feature vectors are then used to train statistical models, namely the universal background model (UBM), the adapted models, and the total variability matrix (T-matrix), as well as a DL model, namely the TDNN-based x-vector model. The statistical vectors, i.e. the u/a/i-vectors, are extracted using the trained UBM/adapted models/T-matrix, respectively. The u-vectors and a-vectors are obtained by computing the zeroth-order statistics from the UBM and the adapted models, respectively. The zeroth-order statistics are computed using Eq. 4, where \(1 \le i \le M\), M is the number of mixture components, \(x_{j}\) are the MFCC features, and T is the number of frames. The u-vectors are the M-dimensional vectors extracted using the UBM, whereas the a-vectors are the concatenation of the M-dimensional vectors extracted from the class-specific adapted models. The i-vectors are extracted as mentioned in [8]. Similarly, the x-vectors are extracted from the trained TDNN-based x-vector model. Both the statistical and the embedding vectors are computed by considering N feature vectors as the analysis window length. The extracted vectors are then used to train the linear discriminant analysis (LDA) matrix, the within-class covariance normalization (WCCN) matrix, and the probabilistic LDA (PLDA) model.
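The zeroth-order statistics can be sketched as the accumulated posterior probability of each mixture component over the T frames of an analysis window. Since Eq. 4 is not reproduced here, the form \(n_{i}=\sum _{j=1}^{T} P(i \mid x_{j})\) is assumed, without any normalization by T; the diagonal-covariance GMM representation and the function names are also assumptions.

```python
import math

def log_gauss_diag(x, mean, var):
    """Log-density of a diagonal-covariance Gaussian at x."""
    return -0.5 * sum(math.log(2 * math.pi * v) + (xi - m) ** 2 / v
                      for xi, m, v in zip(x, mean, var))

def zeroth_order_stats(frames, weights, means, variances):
    """Accumulate component posteriors over all frames: n_i = sum_j P(i | x_j)."""
    M = len(weights)
    stats = [0.0] * M
    for x in frames:
        logp = [math.log(w) + log_gauss_diag(x, m, v)
                for w, m, v in zip(weights, means, variances)]
        mx = max(logp)                      # subtract the max for numerical stability
        post = [math.exp(lp - mx) for lp in logp]
        z = sum(post)
        for i in range(M):
            stats[i] += post[i] / z
    return stats
```

Under this sketch, a u-vector is the output of `zeroth_order_stats` with the UBM parameters, and an a-vector is the concatenation of the outputs obtained with each class-specific adapted model, which is consistent with the a-vector having twice the dimension of the u-vector for two classes.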

5.1.2 Change Detection During Testing

During testing, the feature vectors are extracted from the code-switched utterances. After that, using the SAD labels, the statistical/embedding (S/E) vectors are extracted from windows of a fixed number of frames using the trained models. The S/E vector extraction and the distance contour for each test utterance are computed using Eq. 5, where \(x_{i}\) are the feature vectors, \(\psi (.)\) is the distance computation function, and \({\mathbb {F}}(.)\) is the mapping function from the feature space to the S/E vector space.

The distance contour is then smoothed using a Hamming window of length \(h_{l}\), where \(h_{l}\) is taken as \(1/\delta \) times N. The peaks of the smoothed contour are computed, and the peaks whose magnitude is greater than the threshold contour are considered as the change points.
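The test-time distance contour can be sketched generically: a mapping function stands in for \({\mathbb {F}}(.)\) and a distance function for \(\psi (.)\), applied to the N frames on either side of each candidate frame. The exact indexing of Eq. 5 is not reproduced here, so the window placement is an assumption. The cosine distance is shown because it is one of the distances used in this work; `mean_embed` is a toy placeholder for a trained u/a/i/x-vector extractor, not a real one.

```python
import math

def cosine_distance(u, v):
    """1 - cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    if nu == 0.0 or nv == 0.0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / (nu * nv)

def mean_embed(frames):
    """Toy stand-in for the S/E vector extractor: the mean feature vector."""
    return [sum(f[d] for f in frames) / len(frames) for d in range(len(frames[0]))]

def embedding_distance_contour(features, N, embed, distance=cosine_distance):
    """Distance between the S/E vectors of the N frames before and after frame k."""
    return [distance(embed(features[k - N:k]), embed(features[k:k + N]))
            for k in range(N, len(features) - N + 1)]
```

Swapping `distance` for a PLDA scoring function and `embed` for a trained extractor leaves the contour computation unchanged.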

5.2 Experimental Setup

The TTSF-SC dataset is used for SCD, whereas TTSF-LC and MSCSTB are used for performing LCD tasks. The training partition of the TTSF-SC, TTSF-LC, and MSCSTB is used to train the statistical/embedding vector extractor, and the testing partition is used to evaluate the performance of the LCD and SCD tasks.

The 39-dimensional MFCC\(+\Delta +\Delta \Delta \) feature vectors are computed from the speech signal with a window duration of 20 msec and a hop duration of 10 msec. A frame is treated as an energy frame if its energy is greater than \(6\%\) of the utterance’s average frame energy. The UBM and adapt models are trained with a cluster size of 32. The dimensions of the u/a/i-vectors are 32, 64, and 50, respectively. The x-vector framework has 8 layers, of which 5 operate at the frame level and the remaining 3 operate at the segment level (i.e. over N frames). The number of neurons in the layers is 512, 512, 512, 512, 1500, 512, 512, and 2, respectively. For the speaker-specific study, the x-vector models are trained without dropout and L2 normalization, whereas for the language-specific study, a dropout of 0.2 is used in the second, third, fourth, and sixth layers along with L2 normalization.
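The energy-frame selection rule described above can be sketched in a few lines. The 20 msec window, 10 msec hop, and \(6\%\) ratio come from the text; the function names and the toy signal are hypothetical, and frame energy is assumed to be the sum of squared samples.

```python
def frame_energies(signal, sample_rate, win_ms=20, hop_ms=10):
    """Short-time energy of each frame (sum of squared samples)."""
    win = int(sample_rate * win_ms / 1000)
    hop = int(sample_rate * hop_ms / 1000)
    return [sum(s * s for s in signal[start:start + win])
            for start in range(0, len(signal) - win + 1, hop)]

def select_energy_frames(signal, sample_rate, ratio=0.06):
    """Indices of frames whose energy exceeds ratio * average frame energy."""
    energies = frame_energies(signal, sample_rate)
    if not energies:
        return []
    threshold = ratio * sum(energies) / len(energies)
    return [i for i, e in enumerate(energies) if e > threshold]

# Toy signal at a 1 kHz sampling rate: silence, a constant burst, silence.
signal = [0.0] * 100 + [1.0] * 100 + [0.0] * 100
energy_frames = select_energy_frames(signal, sample_rate=1000)
```

Because the threshold is relative to the utterance's own average energy, the rule adapts to the recording level without a fixed absolute threshold.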

During training, the speaker/language-specific feature vectors are used to extract the S/E vectors disjointly with a fixed N, whereas during testing the S/E vectors are extracted with a sample frameshift. All the models have been trained for 20 epochs. For TTSF-LC, the optimal N is determined experimentally as 200, and for TTSF-SC, N is set to 50. After training, by observing the validation loss and accuracy, the models corresponding to the \(15^{th}\) and \(11^{th}\) epochs are chosen for the language-specific and speaker-specific studies, respectively. Similarly, for MSCSTB, an x-vector model is trained for each language pair. After training for 100 epochs, by observing the validation loss and accuracy, the models from the \(54^{th}\), \(29^{th}\), and \(26^{th}\) epochs (with \(N=200\)) are chosen for the GUE, TAE, and TEE language pairs, respectively.

For TTSF-LC and TTSF-SC, the extracted embedding vectors are normalized without applying LDA and WCCN. The normalized vectors are used for modeling the PLDA and computing the distance contour for the LCD and SCD tasks. For the MSCSTB dataset, it is observed that applying LDA and WCCN, together with using the cosine kernel distance instead of the PLDA-based distance contour, improves the change detection performance. This may be due to the nature of the datasets: TTSF-LC and TTSF-SC are studio recordings of read speech, whereas MSCSTB consists of conversational recordings made in an office environment.

For the SCD task, after extracting the i/x-vectors, the change points are detected for each test utterance using the hyperparameters \(\alpha \), \(\delta \), and \(\gamma \) set to 2.6, 1.3, and 0.9, respectively. The hyperparameters are decided greedily by observing the change detection performance on the first 100 test trials. For the LCD task (using TTSF-LC), the hyperparameters are set to 3.2, 1.3, and 0.9, respectively. Similarly, for MSCSTB (\(N=200\)), the optimal hyperparameters for GUE, TAE, and TEE are (0.3, 4.5, and 1.1), (0.3, 4.5, and 1.1), and (0.3, 3.9, and 1.1), respectively.

5.3 Language Discrimination by Statistical/Embedding Vectors

The aim here is to observe the discrimination ability of the extracted S/E vectors for language discrimination, by synthetically emulating the CS scenario. The TTSF-LC, where the same speaker is speaking two languages is considered for this study. The training partition is used to train the UBM, adapt, T-matrix, and TDNN-based x-vector model. From the test partitions, two utterances are selected, one from each language, spoken by a speaker. Using the selected utterances the MFCC\(+\Delta +\Delta \Delta \) features and the S/E vectors are extracted and projected in two dimensions using t-SNE [18].

The two-dimensional representations are depicted in Fig. 10a–e. From the figure, it can be observed that the overlapping between the languages reduces by moving from the feature space to the S/E vector space. This shows, like human subjects, prior exposure to the languages through ML/DL models helps in better discrimination. Furthermore, among the S/E vectors, the overlap between the languages is least in the x-vector space, followed by the i-vector, adapt, and UBM posterior space. This is due to the ability of the modeling techniques to capture the language-specific feature dynamics.

To strengthen the observation, the features are extracted from the test utterances and pooled together for each language. The pooled feature vectors are randomly segmented with a context of 200 and used to extract the S/E vectors. The extracted S/E vectors are paired to form 2000 within-language (WL) and 2000 between-language (BL) trials. The WL and BL vector pairs are compared using the PLDA scores. Figure 10f–i shows boxplots of the PLDA score distributions of the WL and BL pairs. From the boxplots, it can be observed that the overlap between the WL and BL PLDA score distributions reduces as the modeling technique improves from UBM to x-vector.

In the change point detection task, the aim is to obtain a sudden change in the distance contour whenever there is a change in language. This can be achieved if the contour (the negative of the PLDA score) varies little for WL pairs and changes sharply for BL pairs. To ensure this, the separation between the PLDA score distributions of the WL and BL pairs should be maximized. Taking this into account, the equal error rate (EER) is used as an objective measure, where the WL and BL trials are termed false scores and true scores, respectively. The obtained EERs for the UBM, adapt, i-vector, and x-vector representations are 28.5, 17.35, 12.55, and 3.6, respectively. Hence, based on their discrimination ability, the change point detection study is carried out using i/x-vectors as the representations of the speaker and language.
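The EER measure can be illustrated with a short sketch. It assumes that the scores are distances (for example, the negative of the PLDA score), so that BL (true) trials should score higher than WL (false) trials, and it uses a simple threshold sweep that reports the point where the miss rate and false-alarm rate are closest. The function name and the toy scores are hypothetical.

```python
def equal_error_rate(true_scores, false_scores):
    """EER in percent; higher scores should indicate the 'true' class."""
    best_gap, eer = float("inf"), None
    for thr in sorted(set(true_scores) | set(false_scores)):
        # Miss: a true (BL) trial scored below the threshold.
        miss = sum(s < thr for s in true_scores) / len(true_scores)
        # False alarm: a false (WL) trial scored at or above the threshold.
        false_alarm = sum(s >= thr for s in false_scores) / len(false_scores)
        gap = abs(miss - false_alarm)
        if gap < best_gap:
            best_gap, eer = gap, 100.0 * (miss + false_alarm) / 2.0
    return eer

# Toy distance scores (hypothetical, not from the paper).
bl_distances = [0.9, 0.8, 0.7, 0.3]   # between-language (true) trials
wl_distances = [0.1, 0.2, 0.4, 0.6]   # within-language (false) trials
eer = equal_error_rate(bl_distances, wl_distances)
```

A lower EER means the WL and BL score distributions overlap less, which is the property the change detector relies on.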

5.4 Experimental Results

Initially, the change detection study is conducted with TTSF-SC and TTSF-LC using i/x-vectors as the speaker/language representation. The discrimination ability and the LCD/SCD study suggest that the x-vector is a better representation of the speaker/language than the i-vector. Therefore, the LCD task on the MSCSTB dataset is conducted by considering x-vectors as language representations.

The experimental results are tabulated in Table 2. The performance obtained in terms of IDR on SCD task using i-vector and x-vector is \(87.75\%\) and \(92.27\%\), respectively. Similarly, for LCD tasks the performances on TTSF-LC are \(80.58\%\) and \(87.01\%\), respectively. As evidenced by the language discrimination study, the performance of LCD provides a relative improvement of \(21.9\%\) and \(31.63\%\) using i-vectors and x-vectors, over the best performance achieved on the unsupervised distance-based approach, respectively. This justifies the claim that, like humans, the performance of the LCD can be improved by incorporating language-specific prior information through computational models.

The performance of the LCD task on the MSCSTB dataset using x-vectors as the language representation, with N set to 200 (the same as for TTSF-LC), is \(46.56\%\), \(49.91\%\), and \(47.13\%\) in terms of IDR for the GUE, TAE, and TEE partitions, respectively. This corresponds to a relative improvement of \(5.6\%\), \(2.3\%\), and \(4.2\%\), respectively, over the best performance achieved with the unsupervised distance-based approach. However, the improvement is small compared to that achieved on the TTSF-LC data. This may be due to the difference in the distribution of the monolingual segment duration between the TTSF-LC and MSCSTB datasets. A boxplot of the monolingual segment durations in the test sets of TTSF-LC and MSCSTB is depicted in Fig. 11. From the figure, it can be observed that the median monolingual segment durations for the primary and secondary languages are 5.54 and 4.9 seconds for TTSF-LC, and (1.46 and 0.51), (1.54 and 0.41), and (1.61 and 0.41) seconds for the GUE, TAE, and TEE partitions of MSCSTB, respectively. Further, it has been observed that language discrimination is better with N equal to 200 (i.e. approx. 2 seconds). Hence, because the monolingual segment duration in the MSCSTB dataset is smaller than the considered analysis window duration, the resultant distance contour is smoothed, which leads to an increase in the MDR. An alternative is therefore to reduce the analysis window length, but that may affect the language discrimination ability of the x-vectors.

The language discrimination test is performed using the GUE partition of the MSCSTB dataset by reducing the analysis window length from 200 to 50. The cosine score distributions of the x-vectors’ WL and BL pairs after the LDA and WCCN projection, for varying analysis window lengths, are depicted in Fig. 12. The test shows that the language discrimination ability reduces with a decrease in analysis window duration. From the figure, it can be observed that the overlap between the WL and BL score distributions increases as the value of N decreases. As an objective measure, the computed EERs for N equal to 200, 150, 100, 75, and 50 are 7.1, 9.8, 12.8, 19.8, and 29.2, respectively. Hence, resolving the issue may require either a better embedding extraction strategy that provides better language discrimination with a small analysis window duration, or a framework that is independent of the variation in analysis window duration.

6 Discussion

The human-based LCD and SCD study suggests that language discrimination requires more neighborhood information than speaker discrimination. Further, prior exposure to the languages helps humans to better discriminate between the languages. Motivated by this, it is hypothesized that the performance of LCD by machine can be improved by (a) incorporating a larger-duration analysis window (N) and (b) providing language-specific exposure through computational models.

In the unsupervised distance-based approach, it has been observed that the performance of the LCD improves by increasing the value of N. The optimal N value for the SCD study is 150. Considering the same value of N, the LCD task is carried out for both the TTSF-LC and MSCSTB datasets, and the performances are tabulated in Table 3. In the case of the MSCSTB dataset, the IDR values averaged over all three language pairs are tabulated. Motivated by the LCD/SCD study by humans, the N value is increased, and the obtained optimal N value for the LCD with TTSF-LC is 200. Similarly, the optimal N values for MSCSTB are 160, 180, and 170 for GUE, TAE, and TEE, respectively. The performance with the optimal N value for TTSF-LC and MSCSTB is \(66.1\%\) and \(46.02\%\), which provides a relative improvement of \(29.1\%\) and \(2.4\%\), respectively. These observations justify the claim that the performance of the LCD by machines can be improved by increasing the analysis window duration.

Furthermore, as hypothesized from the subjective study, the incorporation of language-specific exposure through computational models improves LCD performance. The i/x-vector models are trained to capture the language-specific cepstral dynamics. With the x-vector approach, the obtained performance in terms of IDR is \(87.01\%\) for TTSF-LC and \(52.59\%\) for MSCSTB, which provides a relative improvement of \(31.63\%\) and \(4.01\%\) over the performance of the unsupervised distance-based approach. Similarly, for the SCD task using the TTSF-SC dataset, the performance provides a relative improvement of \(9.71\%\). Comparing the performance of LCD and SCD on synthetic data, it can be observed that the improvement is larger for LCD than for SCD. This suggests that, as in the human subjective study, under ideal conditions (only speaker/language variation, with other variations kept limited), a model-based approach is more beneficial for LCD than for SCD.
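As a worked check of the TTSF-LC figures, and assuming that relative improvement is defined in the usual way as the gain divided by the baseline, the x-vector IDR of \(87.01\%\) can be compared against the best unsupervised IDR of \(66.1\%\) reported in the preceding paragraph. The i-vector IDR of \(80.58\%\) from Sect. 5.4 is checked in the same way.

```latex
\[
\frac{87.01 - 66.1}{66.1} \times 100 \approx 31.63\%,
\qquad
\frac{80.58 - 66.1}{66.1} \times 100 \approx 21.9\%
\]
```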

It is also observed that in the LCD task, the performance improvement on the MSCSTB data is limited compared to the improvement achieved on the synthetic TTSF-LC dataset. This is due to the difference in the monolingual segment duration. The trade-off between the analysis window duration and the language discrimination ability shows that the discrimination ability improves with an increase in analysis window duration. At the same time, during change detection, as the monolingual segment duration can be less than 500 msec (approx. 50 energy frames), a larger analysis window degrades the performance by smoothing the evidence contour, which leads to an increase in MDR. Hence, to overcome this issue, there is a need (1) to achieve significant language discrimination with an N value as small as possible, and (2) to develop a framework whose performance is least affected by, or independent of, variations in the analysis window duration.

7 Conclusion

In this work, we performed LCD using the available frameworks for SCD. From the subjective study, it is observed that humans require comparatively more neighborhood information around the change point for language than for speaker. It is also observed that prior language-specific exposure improves the performance of the LCD task. In the unsupervised distance-based approach, the incorporation of larger neighborhood information improves the LCD performance by a relative \(29.1\%\) and \(2.4\%\) on the synthetic TTSF-LC and the practical MSCSTB dataset, respectively. Similarly, incorporating language-specific prior information through the computational models provides a relative improvement of \(31.63\%\) and \(4.01\%\) over the unsupervised distance-based approach. This shows that it is indeed possible to perform LCD by appropriately tuning the SCD frameworks.

It has also been observed that the performance on the practical dataset is not as good as on the synthetic data. This is due to the difference in the distribution of the monolingual segment duration between the two datasets. The MSCSTB dataset contains monolingual segments with a duration of less than 0.5 secs, whereas the duration required for better language discrimination is about 2 secs (about 200 energy frames). Hence, it is challenging to decide on the analysis window duration. A larger duration smooths the evidence contour and increases the MDR, whereas a smaller duration of 0.5 secs does not provide adequate language discrimination.

Therefore, our future work will attempt to develop a better framework that can provide better language discrimination over a small duration, and to come up with a change detection framework whose performance is independent of, or less affected by, variations in the analysis window duration.

Data Availability

The MSCS and IITM-TTS datasets used in this study are publicly available. The synthetically generated data can also be made available on request, or may be generated using the pseudo algorithm attached in Appendix 2.

Notes

https://github.com/jagabandhumishra/HUMAN-SUBJECTIVE-STUDY-FOR-LCD-and-SCD.

References

A. Baby, A.L. Thomas, N. Nishanthi, T. Consortium, et al.: Resources for indian languages, in: Proceedings of Text, Speech and Dialogue (2016)

C. Barras, X. Zhu, S. Meignier, J.L. Gauvain, Multistage speaker diarization of broadcast news. IEEE Trans. Audio Speech Lang. Process. 14(5), 1505–1512 (2006)

H. Bredin, C. Barras, et al.: Speaker change detection in broadcast tv using bidirectional long short-term memory networks, in: Interspeech 2017. ISCA (2017)

P. Carrasquillo, E. Singer, M. Kohler, R. Greene, D. Reynolds, J. Deller, Approaches to language identification using gaussian mixture models and shifted delta cepstral features, in Proceedings of ICSLP2002-INTERSPEECH2002 pp. 16–20 (2002)

J.Y. Chan, P. Ching, T. Lee, H.M. Meng, Detection of language boundary in code-switching utterances by bi-phone probabilities. in: 2004 International Symposium on Chinese Spoken Language Processing, pp. 293–296. IEEE (2004)

S. Chen, P. Gopalakrishnan, et al.: Speaker, environment and channel change detection and clustering via the bayesian information criterion, in: Proceedings of DARPA broadcast news transcription and understanding workshop, vol. 8, pp. 127–132. Virginia, USA (1998)

N. Dawalatabad, S. Madikeri, C.C. Sekhar, H.A. Murthy, Novel architectures for unsupervised information bottleneck based speaker diarization of meetings. IEEE/ACM Trans. Audio Speech Lang. Process. 29, 14–27 (2020)

N. Dehak, P.J. Kenny, R. Dehak, P. Dumouchel, P. Ouellet, Front-end factor analysis for speaker verification. IEEE Trans. Audio Speech Lang. Process. 19(4), 788–798 (2010)

N. Dhananjaya, B. Yegnanarayana, Speaker change detection in casual conversations using excitation source features. Speech Commun. 50(2), 153–161 (2008)

A. Diwan, R. Vaideeswaran, S. Shah, A. Singh, S. Raghavan, S. Khare, V. Unni, S. Vyas, A. Rajpuria, C. Yarra, A. Mittal, P.K. Ghosh, P. Jyothi, K. Bali, V. Seshadri, S. Sitaram, S. Bharadwaj, J. Nanavati, R. Nanavati, K. Sankaranarayanan, T. Seeram, B. Abraham, Multilingual and code-switching asr challenges for low resource indian languages. Proceedings of Interspeech (2021)

H. Gish, M.H. Siu, J.R. Rohlicek, Segregation of speakers for speech recognition and speaker identification, in: Icassp, vol. 91, pp. 873–876 (1991)

B.C. Haris, G. Pradhan, A. Misra, S. Prasanna, R.K. Das, R. Sinha, Multivariability speaker recognition database in indian scenario. Int. J. Speech Technol. 15(4), 441–453 (2012)

A.O. Hogg, C. Evers, P.A. Naylor, Speaker change detection using fundamental frequency with application to multi-talker segmentation, in: ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 5826–5830. IEEE (2019)

H. Li, B. Ma, K.A. Lee, Spoken language recognition: from fundamentals to practice. Proc. IEEE 101(5), 1136–1159 (2013)

H. Liu, L.P.G. Perera, X. Zhang, J. Dauwels, A.W. Khong, S. Khudanpur, S.J. Styles, End-to-end language diarization for bilingual code-switching speech, in: 22nd Annual Conference of the International Speech Communication Association, INTERSPEECH 2021, vol. 2. International Speech Communication Association (2021)

L. Lu, H.J. Zhang, Speaker change detection and tracking in real-time news broadcasting analysis, in: Proceedings of the tenth ACM international conference on Multimedia, pp. 602–610 (2002)

D.C. Lyu, E.S Chng, H. Li, Language diarization for conversational code-switch speech with pronunciation dictionary adaptation, in: Signal and Information Processing (ChinaSIP), 2013 IEEE China Summit and International Conference on, pp. 147–150. IEEE (2013)

L.V.D. Maaten, G. Hinton, Visualizing data using t-SNE. J. Mach. Learn. Res. 9, 2579–2605 (2008)

S. Meignier, D. Moraru, C. Fredouille, J.F. Bonastre, L. Besacier, Step-by-step and integrated approaches in broadcast news speaker diarization. Comput. Speech Lang. 20(2–3), 303–330 (2006)

J. Mishra, A. Agarwal, S.M. Prasanna, Spoken language diarization using an attention based neural network, in: 2021 National Conference on Communications (NCC), pp. 1–6. IEEE (2021)

M.H. Moattar, M.M. Homayounpour, A review on speaker diarization systems and approaches. Speech Commun. 54(10), 1065–1103 (2012)

K.S.R. Murty, B. Yegnanarayana, Epoch extraction from speech signals. IEEE Trans. Audio Speech Lang. Process. 16(8), 1602–1613 (2008)

T.J. Park, N. Kanda, D. Dimitriadis, K.J. Han, S. Watanabe, S. Narayanan, A review of speaker diarization: recent advances with deep learning. Comput. Speech Lang. 72, 101317 (2022)

L.R. Rabiner, Digital processing of speech signals (Pearson Education India, London, 1978)

F. Richardson, D. Reynolds, N. Dehak, Deep neural network approaches to speaker and language recognition. IEEE Signal Process. Lett. 22(10), 1671–1675 (2015)

N. Ryant, K. Church, C. Cieri, A. Cristia, J. Du, S. Ganapathy, M. Liberman, First dihard challenge evaluation plan. 2018, Technical Report (2018)

M. Sarma, S.N. Gadre, B.D. Sarma, S.M. Prasanna, Speaker change detection using excitation source and vocal tract system information, in: 2015 Twenty First National Conference on Communications (NCC), pp. 1–6. IEEE (2015)

N.K. Sharma, S. Ganesh, S. Ganapathy, L.L. Holt, Talker change detection: a comparison of human and machine performance. J. Acoust. Soc. Am. 145(1), 131–142 (2019)

M.A. Siegler, U. Jain, B. Raj, R.M. Stern, Automatic segmentation, classification and clustering of broadcast news audio, in: Proceeding DARPA speech recognition workshop, vol. 1997 (1997)

S. Sitaram, K.R. Chandu, K.K. Rallabandi, A.W. Black, A survey of code switching speech and language processing. arXiv:1904.00784 [cs.CL] (2019)

D. Snyder, D. Garcia-Romero, A. McCree, G. Sell, D. Povey, S. Khudanpur, Spoken language recognition using x-vectors, in: Odyssey, pp. 105–111 (2018)

V. Spoorthy, V. Thenkanidiyoor, D.A. Dinesh, SVM Based Language Diarization for Code-Switched Bilingual Indian Speech Using Bottleneck Features, in: Proceedings of The 6th Intl. Workshop on Spoken Language Technologies for Under-Resourced Languages, pp. 132–136 (2018). https://doi.org/10.21437/SLTU.2018-28

S.E. Tranter, D.A. Reynolds, An overview of automatic speaker diarization systems. IEEE Trans. Audio Speech Lang. Process. 14(5), 1557–1565 (2006)

Acknowledgements

The authors would like to acknowledge "Anatganak", the high-performance computation (HPC) facility at IIT Dharwad, for enabling us to perform our experiments, and the Ministry of Electronics and Information Technology (MeitY), Govt. of India, for supporting us through different projects.

Funding

Open access funding provided by University of Eastern Finland (including Kuopio University Hospital).

Ethics declarations

Conflict of interest

This is to certify that this work is supported by the Ministry of Electronics and Information Technology (MeitY), Govt. of India, through various projects. Further, we also certify that the submission is original work and is not under review at any other publication.

Appendices

Appendix-1: Data generation: Language and speaker change detection by humans

The dataset used for this study consists of 32 utterances having a language change and 15 utterances having a speaker change. The selected utterances are split around the change point to generate the mono-lingual/mono-speaker utterances in each case. After splitting, the 32 mono-lingual and 15 mono-speaker utterances with the longer durations are considered. For the two-language/speaker utterances, the actual change points are termed true change points, while for the mono-language/speaker utterances, the beginning of the middle energy frame is termed the false change point.

Each utterance S(n) is masked by retaining x energy frames (NEF-x) to the left and right of the true/false change point. To detect the energy frames, a short-time energy (STE) based approach is used with a frame size of 20 msec and a frameshift of 10 msec. According to the value of x, the masked utterances are grouped into five groups, termed NEF-10, NEF-20, NEF-30, NEF-50, and NEF-75. To avoid abrupt masking, each utterance is multiplied by a Gaussian mask with appropriate parameters. If an utterance is represented by S(n) and the Gaussian mask by G(n), then the masked utterance \(S_{m}(n)\) is computed as the sample-wise product \(S_{m}(n)=S(n)\cdot G(n)\). The masked signal is then passed through an energy-based endpoint detection algorithm to obtain the final masked utterance [24]. For a given value of x, the parameters m and \(\sigma \) of the Gaussian mask are computed using Eq. 6, where st is the start sample location of the \((cfi-x)^{th}\) energy frame and en is the end sample location of the \((cfi+x)^{th}\) energy frame. The region between st and en is termed the region of interest (ROI), and cfi is the index of the center energy frame, whose starting sample is just greater than the true/false change point.

The parameters m and \(\sigma \) of the Gaussian mask are chosen such that, for a given x value, the final masked utterance preserves as much as possible of the information present in the ROI and smoothly discards as much as possible of the information present in the non-ROI regions. To achieve this, the mean is chosen as the midpoint of the st and en locations, and the standard deviation is chosen empirically, by selecting \(l=3\), such that the ROI boundaries lie at a distance of \(1.5\sigma \) from the mean (which preserves 86.6% of the energy in the main lobe).
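The masking step can be sketched as follows. Eq. 6 is not reproduced in this text, so the sketch assumes the form implied by the description: the mean is the midpoint of st and en, and \(\sigma = (en - st)/l\), which places the ROI boundaries at \(1.5\sigma \) from the mean when \(l=3\). The function names and the toy signal are hypothetical.

```python
import math

def gaussian_mask(num_samples, st, en, l=3):
    """Gaussian mask centered on the ROI [st, en] (assumed form of Eq. 6)."""
    m = (st + en) / 2.0      # mean at the ROI midpoint
    sigma = (en - st) / l    # assumption: ROI width equals l * sigma
    return [math.exp(-0.5 * ((n - m) / sigma) ** 2) for n in range(num_samples)]

def apply_mask(signal, st, en, l=3):
    """Sample-wise product S_m(n) = S(n) * G(n)."""
    mask = gaussian_mask(len(signal), st, en, l)
    return [s * g for s, g in zip(signal, mask)]

# Toy constant signal with an ROI from sample 400 to sample 600.
signal = [1.0] * 1000
masked = apply_mask(signal, st=400, en=600)

# Fraction of a Gaussian's area that lies within 1.5 sigma of the mean.
coverage = math.erf(1.5 / math.sqrt(2.0))
```

The `coverage` value of roughly 0.866 matches the 86.6% figure quoted above, and a larger `l` narrows the mask so that more of the non-ROI signal is discarded at the cost of attenuating the ROI edges.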

Figure 13 shows the masked signal for different values of l. From the figure, it can be observed that for \(l=2\) the masked output preserves the information present in the ROI but does not significantly discard the information present in the non-ROI regions. For \(l=3\), there is some attenuation of energy in the ROI, but the information remains intact, and most of the information present outside the ROI is discarded. For \(l=4\) and 6, although the information present in the non-ROI regions is totally discarded, the information present in the ROI is not preserved (significant attenuation of energy can be observed in Fig. 13c and d). Thus \(l=3\) has been selected for this study.

The distributions of the ROI durations for the five groups, for both the speaker and language change studies, are shown in Fig. 14. The average ROI durations of the masked utterances in groups NEF-10, NEF-20, NEF-30, NEF-50, and NEF-75 for the speaker change study are 0.45, 0.78, 1.07, 1.68, and 2.43 seconds, respectively. Similarly, for the language change study, they are 0.35, 0.63, 0.90, 1.54, and 2.25 seconds, respectively. Irrespective of the group, the average ROI duration of the speaker change study is higher than that of the language change study. This may be because the utterances chosen for the speaker change study are extempore speech, whereas those for the language change study are read speech.

Appendix-2: Data generation: Language and speaker change data from IITM-TTS

The IITM-TTS corpus consists of speech recordings from native speakers of 13 Indian languages. For each native language, two speakers (a male and a female) recorded utterances in their native language and in English. In this study, to synthesize the code-switched utterances, a female speaker speaking her native language, Hindi, and her second language, English, is considered. For each language, the first 5 hours of data are used for training. The remaining monolingual utterances are stitched randomly to generate code-switched utterances. Altogether, 4000 utterances are generated, each having one to five language change points. The CS utterance generation procedure is given in Algorithm 1. The algorithm takes as input the lists of primary (\(U_{p}\)) and secondary (\(U_{s}\)) utterances, the number of utterances (\(N_{u}\)) to be generated, and the minimum (\(N_{umin}\)) and maximum (\(N_{umax}\)) number of utterances to be concatenated. The outputs are the concatenated CS utterances and their corresponding rich transcription time-marked (RTTM) files. To generate a CS utterance, the number of utterances to be concatenated (\(N_{uc}\)) is first decided by sampling from a discrete uniform distribution (\({\mathcal {U}}\)) ranging from \(N_{umin}\) to \(N_{umax}\), and the initial language (\(L_{init}\)) is decided by sampling from a Bernoulli distribution (\({\mathcal {B}}\)). The language sequence is generated according to the decided \(N_{uc}\) and \(L_{init}\). The utterances are then sampled uniformly from the language-specific utterance lists to fill the language sequence. The selected utterances are concatenated to obtain a CS utterance, and during concatenation the duration of each utterance is used to generate the RTTM file. For both the speaker and language studies, 4000 test utterances are synthetically generated.
The mean monolingual segment durations for Hindi and English are 6.5 and 5.2 seconds, respectively. Similarly, the mean mono-speaker segment durations of the generated two-speaker utterances are 5.19 and 4.86 seconds, respectively. The generated datasets for the LCD and SCD studies are called TTSF-LC and TTSF-SC, respectively.
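The generation procedure described above can be sketched as follows. Algorithm 1 itself is not reproduced in this text, so this is an illustration under stated assumptions: the Bernoulli parameter is taken as 0.5, the language sequence is assumed to alternate strictly between the two languages, each utterance is represented by a hypothetical (identifier, duration) pair instead of audio samples, and the output records are simplified RTTM-style entries and not the exact RTTM file format.

```python
import random

def generate_cs_utterances(U_p, U_s, N_u, N_umin, N_umax, seed=0):
    """Stitch monolingual utterances into N_u code-switched sequences."""
    rng = random.Random(seed)
    pools = {"primary": U_p, "secondary": U_s}
    outputs = []
    for _ in range(N_u):
        N_uc = rng.randint(N_umin, N_umax)  # discrete uniform, inclusive
        # Bernoulli draw for the initial language (p = 0.5 is an assumption).
        L_init = "primary" if rng.random() < 0.5 else "secondary"
        other = "secondary" if L_init == "primary" else "primary"
        # Assumed alternation: every boundary is a language change point.
        lang_seq = [L_init if k % 2 == 0 else other for k in range(N_uc)]
        segments, onset = [], 0.0
        for lang in lang_seq:
            utt_id, duration = rng.choice(pools[lang])  # uniform sampling
            segments.append({"utt": utt_id, "lang": lang,
                             "start": onset, "dur": duration})
            onset += duration  # durations give the segment boundaries
        outputs.append(segments)
    return outputs

# Hypothetical utterance lists: (identifier, duration in seconds).
U_p = [("hin_001", 6.5), ("hin_002", 6.0)]
U_s = [("eng_001", 5.2), ("eng_002", 4.8)]
cs = generate_cs_utterances(U_p, U_s, N_u=5, N_umin=2, N_umax=6)
```

With `N_umin=2` and `N_umax=6`, each generated sequence contains one to five language change points, which matches the range stated for the synthetic data.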

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mishra, J., Prasanna, S.R.M. Spoken Language Change Detection Inspired by Speaker Change Detection. Circuits Syst Signal Process (2024). https://doi.org/10.1007/s00034-024-02743-w

DOI: https://doi.org/10.1007/s00034-024-02743-w