Abstract

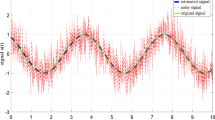

In this paper we propose a novel robust method for estimating the unknown parameters of a fundamental frequency and its harmonics model. Although the least squares estimators (LSEs) and the periodogram-type estimators are the most efficient estimators, it is well known that they are not robust: in the presence of outliers, the LSEs are no longer efficient. In that case, robust estimators such as the least absolute deviation estimators (LADEs) or Huber’s M-estimators (HMEs) may be used. However, implementing the LADEs or HMEs is quite challenging, particularly if the number of components is large. Finding initial guesses in higher dimensions is always a non-trivial issue. Moreover, the theoretical properties of these robust estimators can be established only under stronger assumptions than those needed for the LSEs. We propose novel weighted least squares estimators (WLSEs), which are more robust than the LSEs or periodogram estimators in the presence of outliers. The proposed WLSEs can be implemented very conveniently in practice, as they involve solving only one non-linear equation. We establish the theoretical properties of the proposed WLSEs. Extensive simulations suggest that, in the presence of outliers, the WLSEs behave better than the LSEs, periodogram estimators, LADEs and HMEs. The performance of the WLSEs depends on the weight function, and we discuss how to choose it. We analyze one synthetic data set to show how the proposed method can be used in practice.
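To make the idea of a weighted least squares fit concrete, the following is a minimal sketch and not the estimator analyzed in the paper. It assumes that the model is a sum of p harmonics of one fundamental frequency observed at t = 1, ..., N, that the weights enter the criterion as w(t/N), and that the frequency is located by a plain grid search with the amplitudes profiled out. The weight function `weight`, the helper names, the simulated signal and the single artificial outlier are all hypothetical choices made for illustration.

```python
import math
import random


def weight(u):
    """Hypothetical weight on [0, 1]; NOT the paper's weight function."""
    return 1.0 + u * (1.0 - u)


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[piv] = m[piv], m[c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for k in range(c, n + 1):
                m[r][k] -= f * m[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))
        x[r] = s / m[r][r]
    return x


def profile_criterion(y, lam, p):
    """Weighted residual sum of squares at frequency lam, with the
    amplitudes replaced by their weighted least squares fit."""
    n = len(y)
    w = [weight(t / n) for t in range(1, n + 1)]
    # Design row at time t: cos(j*lam*t), sin(j*lam*t) for j = 1..p.
    rows = [[f(j * lam * t) for j in range(1, p + 1) for f in (math.cos, math.sin)]
            for t in range(1, n + 1)]
    d = 2 * p
    xtwx = [[sum(w[i] * rows[i][a] * rows[i][b] for i in range(n))
             for b in range(d)] for a in range(d)]
    xtwy = [sum(w[i] * rows[i][a] * y[i] for i in range(n)) for a in range(d)]
    beta = solve(xtwx, xtwy)
    return sum(w[i] * (y[i] - sum(rows[i][a] * beta[a] for a in range(d))) ** 2
               for i in range(n))


def estimate_fundamental(y, p, lo=0.1, hi=1.4, grid_size=400):
    """One-dimensional grid search for the fundamental frequency."""
    grid = [lo + k * (hi - lo) / (grid_size - 1) for k in range(grid_size)]
    return min(grid, key=lambda lam: profile_criterion(y, lam, p))


random.seed(0)
N, p, lam0 = 100, 2, 0.5
y = [2.0 * math.cos(lam0 * t) + 1.0 * math.sin(lam0 * t)
     + 1.0 * math.cos(2 * lam0 * t) + random.gauss(0.0, 0.2)
     for t in range(1, N + 1)]
y[49] += 5.0  # a single artificial outlier
lam_hat = estimate_fundamental(y, p)
print(f"true fundamental frequency {lam0}, grid estimate {lam_hat:.4f}")
```

Because the amplitudes enter the model linearly, only the frequency requires a non-linear search in this sketch, so the problem reduces to one dimension. A grid search is used here purely for simplicity; its accuracy is limited by the grid spacing.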

References

Y. Chen, X.L. Yang, L.T. Huang, H.C. So, Robust frequency estimation in symmetric \(\alpha \)-stable noise. Circuits Syst. Signal Process. 37, 4637–4650 (2018)

A. de Cheveigné, H. Kawahara, YIN, a fundamental frequency estimator for speech and music. J. Acoust. Soc. Am. 111, 1917–1930 (2002)

N.H. Fletcher, T.D. Rossing, The physics of musical instruments (Springer-Verlag, New York, 1991)

L. Fu, M. Zhang, Z. Liu, H. Li, Robust frequency estimation of multi-sinusoidal signals using orthogonal matching pursuit with weak derivatives criterion. Circuits Syst. Signal Process. 38, 1194–1205 (2019)

P. Huber, Robust statistics (John Wiley, New York, 1981)

J.R. Jensen, G.O. Glentis, M.G. Christensen, A. Jakobsson, S.H. Jensen, Fast LCMV-based methods for fundamental frequency estimation. IEEE Trans. Signal Process. 61, 3159–3172 (2013)

T.S. Kim, H.K. Kim, S.H. Choi, Asymptotic properties of LAD estimators of a nonlinear time series regression model. J. Korean Stat. Soc. 29, 187–199 (2000)

D. Kundu, A. Mitra, A note on the consistency of the undamped exponential signals model. Statistics 28, 25–33 (1996)

D. Kundu, S. Nandi, A note on estimating the fundamental frequency of a periodic function. Signal Process. 84, 653–661 (2004)

D. Kundu, S. Nandi, Estimating the number of components of the fundamental frequency model. J. Jpn. Stat. Soc. 35, 41–59 (2005)

H. Li, P. Stoica, J. Li, Computationally efficient parameter estimation for harmonic sinusoidal signals. Signal Process. 80, 1937–1944 (2000)

V. Mangulis, Handbook of series for scientists and engineers (Academic Press, New York, 1965)

S. Nandi, D. Kundu, Estimating the fundamental frequency of a periodic function. Stat. Methods Appl. 12, 341–360 (2003)

S. Nandi, D. Kundu, Estimating the fundamental frequency using modified Newton-Raphson algorithm. Statistics 53, 440–458 (2019)

S. Nandi, D. Kundu, Statistical signal processing: frequency estimation, 2nd edn. (Springer, Singapore, 2020)

J.K. Nielsen, T.L. Jensen, J.R. Jensen, M.G. Christensen, S.H. Jensen, Fast fundamental frequency estimation: making a statistically efficient estimator computationally efficient. Signal Process. 135, 188–197 (2017)

L. Qiu, H. Yang, S.N. Koh, Fundamental frequency determination based on instantaneous frequency estimation. Signal Process. 44, 233–241 (1995)

P. Rengaswamy, K.S. Rao, P. Dasgupta, SongF0: a spectrum-based fundamental frequency estimation for monophonic songs. Circuits Syst. Signal Process. 40, 772–797 (2021)

A.M. Walker, On the estimation of a harmonic component in a time series with stationary residuals. Biometrika 58, 21–36 (1971)

C.F.J. Wu, Asymptotic theory of the nonlinear least squares estimation. Ann. Stat. 9, 501–513 (1981)

Acknowledgements

The author would like to thank the Associate Editor and three anonymous reviewers for their constructive comments, which have helped to improve the earlier version of the paper significantly.

Funding

The author did not receive any funding for this paper.

Ethics declarations

Conflict of interest

The author does not have any conflict of interest in the preparation of this manuscript.

Appendices

Appendix A

To prove Theorem 1, we need the following lemmas.

Lemma 1

Let \(\{X(n)\}\) be a sequence of independent and identically distributed random variables with mean 0 and finite variance. Then for \(k = 0,1,\ldots \),

The same result holds when \(\cos (t\theta )\) is replaced by \(\sin (t \theta )\).

Proof

For \(k = 0\), the result is available in Kundu and Mitra [8]. For general \(k\), the result follows from the fact that \(\displaystyle \frac{n}{N} \le 1\) for \(1 \le n \le N\). \(\square \)
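As a numerical illustration, the sketch below assumes that the lemma concerns the uniform smallness, over the frequency, of a normalized sum of the form (1/N) Σ (n/N)^k X(n) cos(nθ); this form is an assumption suggested by the proof and is not taken from a displayed statement. The function name, the Gaussian noise, the choice k = 1 and the grid over θ are hypothetical, and the maximum over a grid is only a lower bound for the supremum over all θ.

```python
import math
import random


def sup_weighted_average(x, k, grid_size=200):
    """Maximum over a theta grid on [0, pi] of
    |(1/N) * sum_n (n/N)**k * x[n] * cos(n * theta)|."""
    n_obs = len(x)
    best = 0.0
    for g in range(grid_size + 1):
        theta = math.pi * g / grid_size
        s = sum((n / n_obs) ** k * x[n - 1] * math.cos(n * theta)
                for n in range(1, n_obs + 1))
        best = max(best, abs(s) / n_obs)
    return best


random.seed(1)
noise = [random.gauss(0.0, 1.0) for _ in range(800)]  # i.i.d., mean 0, finite variance
sups = {n_obs: sup_weighted_average(noise[:n_obs], k=1) for n_obs in (50, 200, 800)}
for n_obs, value in sups.items():
    print(n_obs, round(value, 4))
```

A single simulated path cannot establish almost sure convergence; the printout only indicates the scale of the normalized sums as N grows.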

Lemma 2

Let \(\{X(n)\}\) be a sequence of independent and identically distributed random variables with mean 0 and finite variance, and let the weight function w(t) have the form (8). Then

The same result holds when \(\cos (t\theta )\) is replaced by \(\sin (t \theta )\).

Proof

Using Lemma 1, Lemma 2 can be easily obtained. \(\square \)

Lemma 3

Let \({{\varvec{\Theta }}}\) be the same as before, and let us define the set

If for any \(\delta > 0\) and for some \(M < \infty \),

where \(Q({{\varvec{\Theta }}})\) is as defined in (10), then \(\widehat{{\varvec{\Theta }}}\) is a strongly consistent estimator of \({{\varvec{\Theta }}}^0\).

Proof

The proof is mainly by contradiction, along the same lines as the proof of Lemma 1 of Wu [20]; the details are omitted. \(\square \)
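For orientation, lemmas modelled on Lemma 1 of Wu [20] are commonly stated in the form sketched here. The set name \(S_{\delta , M}\), the parameter ordering \({{\varvec{\Theta }}} = (A_1, B_1, \ldots , A_p, B_p, \lambda )\) and the \(1/N\) normalization are assumptions made for illustration and should be checked against the original displays.

```latex
% Assumed form of the restricted parameter set and of the sufficient condition
S_{\delta, M} = \left\{ \boldsymbol{\Theta} : |\boldsymbol{\Theta} - \boldsymbol{\Theta}^0| \ge \delta,\
|A_j| \le M,\ |B_j| \le M,\ j = 1, \ldots, p \right\},
\qquad
\liminf_{N \to \infty} \ \inf_{\boldsymbol{\Theta} \in S_{\delta, M}}
\frac{1}{N} \left[ Q(\boldsymbol{\Theta}) - Q(\boldsymbol{\Theta}^0) \right] > 0
\quad \text{a.s.}
```

Under a condition of this kind, the minimizer of \(Q\) cannot stay outside a \(\delta \)-neighbourhood of the true parameter infinitely often, which is the contradiction argument referred to in the proof.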

Lemma 4

For any given \(\delta > 0\) and for some \(M < \infty \),

Proof

Consider

Hence, using Lemma 2, and the condition of the weight function w(t), we obtain

Consider the following sets for \(k = 1, \ldots , p\):

and \(\displaystyle \Gamma _1 = \cup _{k=1}^p \Gamma _{1k}\), \(\displaystyle \Gamma _2 = \cup _{k=1}^p \Gamma _{2k}\). Since

Observe that

The other sets can be handled similarly; hence the result follows. \(\square \)

Proof of Theorem 1:

The result follows immediately from Lemmas 3 and 4. \(\square \)

Proof of Theorem 2:

To prove this result, let us consider the following \((2p+1)\)-dimensional vector \(Q'({{\varvec{\Theta }}})\), where

\(\displaystyle Q''({{\varvec{\Theta }}})\) is the \((2p+1)\times (2p+1)\) matrix containing the second derivatives of \(Q({{\varvec{\Theta }}})\). Now using the Taylor series expansion

here \(\overline{{\varvec{\Theta }}}\) is a point on the line joining \(\widehat{{\varvec{\Theta }}}\) and \({{\varvec{\Theta }}}^0\). Since \(Q'(\widehat{{\varvec{\Theta }}}) = {{\varvec{0}}}\), (2) can be written as

Now using the central limit theorem and (13) to (19), it follows that

and using (13) to (19), it can be shown that

hence, the result follows.
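The Taylor expansion step in the proof of Theorem 2 typically takes the following shape. This is a sketch under stated assumptions: the row-vector convention for \(Q'\) and the diagonal normalizing matrix \({{\varvec{D}}}\) are hypothetical choices, not quoted from the paper, and \(Q''(\overline{{\varvec{\Theta }}})\) is assumed to be invertible.

```latex
% Mean-value form of the expansion around the true parameter
Q'(\widehat{\boldsymbol{\Theta}}) - Q'(\boldsymbol{\Theta}^0)
  = (\widehat{\boldsymbol{\Theta}} - \boldsymbol{\Theta}^0)\, Q''(\overline{\boldsymbol{\Theta}}),
% With Q'(\widehat{\Theta}) = 0 this rearranges to
\widehat{\boldsymbol{\Theta}} - \boldsymbol{\Theta}^0
  = - Q'(\boldsymbol{\Theta}^0) \left[ Q''(\overline{\boldsymbol{\Theta}}) \right]^{-1},
% and, after normalizing by an (assumed) diagonal matrix D,
(\widehat{\boldsymbol{\Theta}} - \boldsymbol{\Theta}^0)\, \boldsymbol{D}^{-1}
  = - \left[ Q'(\boldsymbol{\Theta}^0)\, \boldsymbol{D} \right]
      \left[ \boldsymbol{D}\, Q''(\overline{\boldsymbol{\Theta}})\, \boldsymbol{D} \right]^{-1}.
```

The last identity follows from the second because \(\left[ {{\varvec{D}}} Q'' {{\varvec{D}}} \right]^{-1} = {{\varvec{D}}}^{-1} [Q'']^{-1} {{\varvec{D}}}^{-1}\). In arguments of this kind, a central limit theorem is applied to the first factor and a law of large numbers to the second.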

Appendix B

To prove Theorem 3, we need the following lemma.

Lemma 5

Let \(\{X(n)\}\) be a sequence of independent and identically distributed random variables with mean 0 and finite variance, and the weight function w(t) satisfies Assumption 1, then

The same result holds when \(\cos (t\theta )\) is replaced by \(\sin (t \theta )\).

Proof

Let \(Z(n) = X(n) I_{[|X(n)| \le \sqrt{n}]}\), where \(I_{[|X(n)| \le \sqrt{n}]} = 1\), if \(|X(n)| \le \sqrt{n}\), and 0, otherwise. Thus

Hence, \(\{X(n)\}\) and \(\{Z(n)\}\) are equivalent sequences. Thus it is enough to show that

Let \(U(n) = Z(n) - E(Z(n))\). Note that if \(G(\cdot )\) denotes the distribution function of X(1), then

Hence, the result is proved if we can show that

For any fixed \(\theta \) and \(\epsilon > 0\), and for \(\displaystyle 0< h < \frac{1}{4\sqrt{N}}\), we have

Note that \(\displaystyle h U(n) w \left( \frac{n}{N} \right) \cos (n \theta ) \le \frac{1}{4}\) for all \(n = 1, \ldots , N\). Using the facts that \(e^{|x|} \le 2 e^x\) and \(e^x \le 1+x+x^2\) for \(\displaystyle |x| \le \frac{1}{4}\), we have

Choose \(\displaystyle h = \frac{1}{4\sqrt{N}}\), then for large N,

for some constant \(C > 0\). Let \(k = N^2\), and choose \(\theta _1, \ldots , \theta _k\) such that for each \(\theta \in [0,\pi ]\) there exists a \(\theta _j\) with \(\displaystyle |\theta _j-\theta | \le \frac{\pi }{N^2}\). Hence

Therefore, for large N, we have

Since \(\displaystyle \sum _{N=1}^{\infty } N^2 \exp \left( -\frac{\sqrt{N} \epsilon }{4} \right) < \infty \), by the Borel-Cantelli lemma

The case in which \(\cos (n\theta )\) is replaced by \(\sin (n \theta )\) can be shown similarly.
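The elementary facts used in the proof of Lemma 5 can be checked numerically. The sketch below verifies the two exponential inequalities on a grid of \(|x| \le 1/4\) and examines partial sums of the series \(\sum N^2 \exp (-\sqrt{N} \epsilon /4)\) for the illustrative value \(\epsilon = 1\). The grid, the value of \(\epsilon \) and the cut-off points are hypothetical choices, and a finite computation is a sanity check, not a proof.

```python
import math

# Grid of 1001 points covering the interval [-1/4, 1/4].
xs = [-0.25 + 0.5 * i / 1000 for i in range(1001)]

# e^{|x|} <= 2 e^{x} on the grid.
ok_abs = all(math.exp(abs(x)) <= 2.0 * math.exp(x) for x in xs)

# e^{x} <= 1 + x + x^2 on the grid.
ok_quad = all(math.exp(x) <= 1.0 + x + x * x for x in xs)


def partial_sum(eps, upto):
    """Partial sum of N^2 * exp(-sqrt(N) * eps / 4) for N = 1..upto."""
    return sum(n * n * math.exp(-math.sqrt(n) * eps / 4.0)
               for n in range(1, upto + 1))


s_25k = partial_sum(1.0, 25_000)
s_50k = partial_sum(1.0, 50_000)
tail = s_50k - s_25k  # contribution of the terms 25001..50000

print(ok_abs, ok_quad)
print(f"partial sum to 25000: {s_25k:.3f}, added by the next 25000 terms: {tail:.2e}")
```

The terms of the series first grow and then decay faster than any power of N, so the partial sums stabilize once N is large compared with \(1/\epsilon ^2\).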

Lemma 6

For any given \(\delta > 0\) and for some \(M < \infty \), if the weight function w(t) satisfies Assumption 1, then

Proof

The result follows from Lemma 5 by the same argument as in the proof of Lemma 4. \(\square \)

Proof of Theorem 3:

A version of Lemma 3 holds when the weight function w(t) satisfies Assumption 1; combining it with Lemma 5, Theorem 3 follows. \(\square \)

We need the following lemma to prove Theorem 4.

Lemma 7

Suppose \(0< \theta < \pi \) and w(t) satisfies Assumption 1, then

Proof of Lemma 7:

We show the result for \(k = 0\); for general k, it follows along the same lines.

For \(\epsilon > 0\), there exists a polynomial \(p_{\epsilon }(t)\), such that \(|w(t) - p_{\epsilon }(t)| \le \epsilon \), for all \(t \in [0,1]\). Hence

Further,

Suppose

Now using (14),

Taking \(N \rightarrow \infty \) in (4), we obtain

and using (3), it follows

Since \(\epsilon \) is arbitrary, the result follows. \(\square \)
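The polynomial approximation step in the proof of Lemma 7 can be illustrated numerically. The sketch below uses Bernstein polynomials, which are one constructive way to obtain a polynomial that is uniformly close to a continuous function on [0, 1]; the proof itself does not specify how \(p_{\epsilon }(t)\) is obtained. The weight function `w`, the degrees, the frequency and the sample size are hypothetical choices, and the weighted average with \(\cos ^2\) is an assumed example of the kind of sum to which the approximation is applied.

```python
import math


def w(t):
    """Hypothetical continuous weight function on [0, 1] (an assumption)."""
    return math.exp(-t)


def bernstein(f, degree, t):
    """Value at t of the Bernstein polynomial of f of the given degree."""
    return sum(f(j / degree) * math.comb(degree, j)
               * t ** j * (1.0 - t) ** (degree - j)
               for j in range(degree + 1))


def sup_error(degree, grid_size=200):
    """Maximum of |w - p| over an evenly spaced grid on [0, 1]."""
    return max(abs(w(i / grid_size) - bernstein(w, degree, i / grid_size))
               for i in range(grid_size + 1))


def weighted_average(g, theta, n_obs):
    """(1/N) * sum_{n=1}^{N} g(n/N) * cos(n*theta)**2."""
    return sum(g(n / n_obs) * math.cos(n * theta) ** 2
               for n in range(1, n_obs + 1)) / n_obs


eps_coarse = sup_error(5)
eps = sup_error(40)
theta, n_obs = 1.0, 500
gap = abs(weighted_average(w, theta, n_obs)
          - weighted_average(lambda t: bernstein(w, 40, t), theta, n_obs))
print(f"grid sup error: degree 5 -> {eps_coarse:.5f}, degree 40 -> {eps:.5f}")
print(f"gap between the two weighted averages: {gap:.5f}")
```

Since \(\cos ^2\) is bounded by 1, replacing w by a polynomial within \(\epsilon \) of it changes such a weighted average by at most \(\epsilon \), which is why it suffices to treat polynomial weights and then let \(\epsilon \) tend to zero.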

Proof of Theorem 4:

The result can be proved along the same lines as the proof of Theorem 2. \(\square \)

About this article

Cite this article

Kundu, D. Fundamental Frequency and its Harmonics Model: A Robust Method of Estimation. Circuits Syst Signal Process 43, 1007–1029 (2024). https://doi.org/10.1007/s00034-023-02498-w

DOI: https://doi.org/10.1007/s00034-023-02498-w

Keywords

- Sinusoidal model

- Least squares estimators

- Weighted least squares estimators

- Robust estimators

- Consistency

- Asymptotic normality