Abstract

We will consider the multidimensional truncated \(p \times p\) Hermitian matrix-valued moment problem. We will prove a characterisation of truncated \(p \times p\) Hermitian matrix-valued multisequences with a minimal positive semidefinite matrix-valued representing measure via the existence of a flat extension, i.e., a rank-preserving extension of a multivariate Hankel matrix (built from the given truncated matrix-valued multisequence). Moreover, the support of the representing measure can be computed via the intersecting zeros of the determinants of matrix-valued polynomials which describe the flat extension. We will also use a matricial generalisation of Tchakaloff’s theorem due to the first author together with the above result to prove a characterisation of truncated matrix-valued multisequences which have a representing measure. When \(p = 1\), our result recovers the celebrated flat extension theorem of Curto and Fialkow. The bivariate quadratic matrix-valued problem and the bivariate cubic matrix-valued problem are explored in detail.

1 Introduction

In this paper, we will investigate the multidimensional truncated matrix-valued moment problem. Given a truncated multisequence \(S = (S_{\gamma })_{{\mathop {\gamma \in {\mathbb {N}}_0^d}\limits ^{0 \le |\gamma | \le m}} }\), where \(S_{\gamma } \in {\mathcal {H}}_p\) (i.e., \(S_{\gamma }\) is a \(p \times p\) Hermitian matrix), we wish to find necessary and sufficient conditions on S for the existence of a \(p \times p\) positive matrix-valued measure T on \({\mathbb {R}}^d\), with convergent moments, such that

$$\begin{aligned} S_{\gamma } = \int _{{\mathbb {R}}^d} x^{\gamma } \, dT(x) \end{aligned}$$ (1.1)

for all \(\gamma = (\gamma _1, \ldots , \gamma _d) \in {\mathbb {N}}_0^d\) such that \(0 \le |\gamma | \le m\). We would also like to find a positive matrix-valued measure \(T = \sum _{a=1}^{\kappa } Q_a \delta _{w^{(a)}}\) on \({\mathbb {R}}^d\) such that (1.1) holds and

$$\begin{aligned} \sum _{a=1}^{\kappa } {{\,\mathrm{rank}\,}}Q_a = {{\,\mathrm{rank}\,}}M(n), \end{aligned}$$ (1.2)

i.e., T is a finitely atomic measure of the form \(T = \sum _{a=1}^{\kappa } \delta _{w^{(a)}} Q_a\) with \(\sum _{a=1}^{\kappa } {{\,\mathrm{rank}\,}}Q_a = {{\,\mathrm{rank}\,}}M(n)\) (confer Remark 3.4). If (1.1) holds, then T is called a representing measure for S. If (1.1) and (1.2) are in force, then T is called a minimal representing measure for S.
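For orientation, here is a small worked example of a minimal representing measure. The points and weights are our own illustrative choice and are not taken from the paper. Take \(d = 1\), \(p = 2\), \(n = 1\) and the two-atom measure \(T = Q_1 \delta _{0} + Q_2 \delta _{1}\) with \(Q_1 = \mathrm{diag}(1, 0)\) and \(Q_2 = \mathrm{diag}(0, 1)\). Its moments are \(S_0 = Q_1 + Q_2 = I_2\) and \(S_1 = S_2 = Q_2\). Assuming the usual block Hankel form \(M(1) = (S_{j+k})_{j,k=0}^{1}\) (see Definition 3.2), we obtain

$$\begin{aligned} M(1) = \begin{pmatrix} S_0 & S_1 \\ S_1 & S_2 \end{pmatrix} = \begin{pmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 1 \\ 0 & 0 & 0 & 0 \\ 0 & 1 & 0 & 1 \end{pmatrix}, \qquad {{\,\mathrm{rank}\,}}M(1) = 2 = {{\,\mathrm{rank}\,}}Q_1 + {{\,\mathrm{rank}\,}}Q_2. \end{aligned}$$

In this sketch the sum of the ranks of the weights equals \({{\,\mathrm{rank}\,}}M(1)\), so T is minimal in the above sense. Note that minimality counts the ranks of the weights \(Q_a\) rather than the number of atoms.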

Before proceeding any further, we will first introduce frequently used notation. Commonly used sets are \( {\mathbb {N}}_0, {\mathbb {R}}, {\mathbb {C}}\) denoting the sets of nonnegative integers, real numbers and complex numbers respectively. Given a nonempty set E, we let

Next, we let \({\mathbb {C}}^{p \times p}\) denote the set of \(p\times p\) matrices with entries in \({\mathbb {C}}\) and \({\mathcal {H}}_p\subseteq {\mathbb {C}}^{p \times p}\) denote the set of \(p\times p\) Hermitian matrices with entries in \({\mathbb {C}}.\) Given \(x= (x_1,\dots , x_d) \in {\mathbb {R}}^d\) and \(\lambda = (\lambda _1, \dots , \lambda _d)\in {\mathbb {N}}_0^d,\) we define

$$\begin{aligned} |\lambda | = \sum _{j=1}^d \lambda _j \end{aligned}$$

and

$$\begin{aligned} x^{\lambda } = \prod _{j=1}^d x_j^{\lambda _j}. \end{aligned}$$

Throughout the entirety of this paper we will assume that the given \({\mathcal {H}}_p\)-valued truncated multisequence \(S = (S_{\gamma })_{\gamma \in \Gamma _{2n,d}}\) satisfies

$$\begin{aligned} S_{0_d} = I_p. \end{aligned}$$

Let us justify this assumption. If \(S_{0_d} \succ 0\) (i.e., \(S_{0_d}\) is positive definite), then we can simply replace S by \({\widetilde{S}} = ({\widetilde{S}}_{\gamma } )_{\gamma \in \Gamma _{2n,d}}\), where \({\widetilde{S}}_{\gamma } = S_{0_d}^{-1/2} \, S_{\gamma } \, S_{0_d}^{-1/2}\). If \(S_{0_d} \succeq 0\) (i.e., \(S_{0_d}\) is positive semidefinite) and not invertible, then Lemma 5.50 and Smuljan’s lemma (see Lemma 2.1) readily show that we must necessarily have that \({{\,\mathrm{Ran}\,}}S_{\gamma } \subseteq {{\,\mathrm{Ran}\,}}S_{0_d}\) and hence \(\mathrm{Ker}S_{0_d} \subseteq \mathrm{Ker}S_{\gamma }\) for all \(\gamma \in \Gamma _{2n,d}\). Consequently, we can find a unitary matrix \(U \in {\mathbb {C}}^{p \times p}\) such that

and we may replace S by \({\widetilde{S}} = ({\widetilde{S}}_{\gamma })_{\gamma \in \Gamma _{2n,d}}\), where \({\widetilde{S}}_{\gamma } \in {\mathbb {C}}^{{\tilde{p}} \times {\tilde{p}}}\) with \({\tilde{p}} = {{\,\mathrm{rank}\,}}S_{0_d}\), and normalise as above.

Main Contributions

(C1) We will characterise positive infinite d-Hankel matrices based on a \({\mathcal {H}}_p\)-valued multisequence via an integral representation. Indeed, we will see that \(S^{(\infty )} = (S_{\gamma })_{\gamma \in {\mathbb {N}}_0^d}\) gives rise to a positive infinite d-Hankel matrix \(M(\infty )\) with finite rank if and only if there exists a finitely atomic positive \({\mathcal {H}}_p\)-valued measure T on \({\mathbb {R}}^d\) such that

$$\begin{aligned} S_{\gamma } = \int _{{\mathbb {R}}^d} x^{\gamma } \, dT(x) \quad \quad \mathrm{for} \quad \gamma \in {\mathbb {N}}_0^d. \end{aligned}$$

In this case, the support of the positive \({\mathcal {H}}_p\)-valued measure T agrees with \({\mathcal {V}}({\mathcal {I}})\), where \({\mathcal {V}}({\mathcal {I}})\) is the variety of a right ideal of matrix-valued polynomials based on the kernel of \(M(\infty )\) (see Definition 5.20) and the cardinality of the support of T is exactly \({{\,\mathrm{rank}\,}}M(\infty )\) (see Theorem 5.65).

-

(C2)

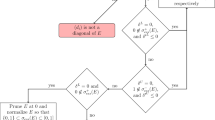

Let \(S = (S_{\gamma })_{\gamma \in \Gamma _{2n,d}}\) be a given truncated \({\mathcal {H}}_p\)-valued multisequence. We will see that S has a minimal representing measure \(T = \sum _{a=1}^{\kappa } Q_a \delta _{w^{(a)}}\) if and only if the corresponding d-Hankel matrix M(n) based on S (see Definition 3.2) has a flat extension \(M(n+1)\), i.e., a positive rank preserving extension. In this case, the support of T agrees with \({\mathcal {V}}(M(n+1))\), where \({\mathcal {V}}(M(n+1))\) is the variety of the d-Hankel matrix \(M(n+1)\) (see Definition 3.5) and \(\sum _{a=1}^{\kappa } {{\,\mathrm{rank}\,}}Q_a = \sum _{a=1}^{\kappa } {{\,\mathrm{rank}\,}}M(n)\).

(C3) Let S be as in (C2). S has a representing measure if and only if the corresponding d-Hankel matrix M(n) has an eventual extension \(M(n+k)\) which admits a flat extension.

(C4) Let \(S = (S_{00}, S_{10}, S_{01}, S_{20}, S_{11}, S_{02})\) be a given \({\mathcal {H}}_p\)-valued truncated bisequence. We will see that necessary and sufficient conditions for S to have a minimal representing measure consist of M(1) being positive semidefinite and a system of matrix equations having a solution. We will also see that if M(1) is positive definite and obeys an extra condition (which is automatically satisfied if \(p=1\)), then S has a minimal representing measure. However, if M(1) is singular and \(p \ge 2\), then S need not have a minimal representing measure.

Background and Motivation

The moment problem on \({\mathbb {R}}^d\) is a well-known problem in classical analysis and has been studied by mathematicians and engineers since the late 19th century, beginning with Stieltjes [77], Hamburger [42, 43], Hausdorff [44] and Riesz [67]. The full moment problem on \({\mathbb {R}}\) has a concrete solution discovered by Hamburger [42, 43] which can be communicated solely in terms of the positivity of Hankel matrices built from the given sequence. It is natural to wonder about a multidimensional analogue of the full moment problem on \({\mathbb {R}},\) that is, the full moment problem on \({\mathbb {R}}^d,\) where the given sequence is a multisequence indexed by d-tuples of nonnegative integers. It is well known that a natural analogue of Hamburger’s theorem fails (see, e.g., Schmüdgen [72]), i.e., there exist multisequences such that the corresponding multivariable Hankel matrices are positive semidefinite yet the multisequences do not have a representing measure. It turns out that the Hamburger moment problem on \({\mathbb {R}}^d\) is a special case of the full K-moment problem on \({\mathbb {R}}^d\) (where we wish to find a positive measure which is supported on a given closed set \(K\subseteq {\mathbb {R}}^d\)). We refer the reader to Riesz [67] (solution on \({\mathbb {R}}\)), Haviland [45, 46] (generalisation for \(d>1\)) and Schmüdgen [70] (when K is a compact semialgebraic set). For a solution to the truncated K-moment problem on \({\mathbb {R}}^d\) based on commutativity conditions of certain matrices see the first author [52], where an application to the subnormal completion problem is considered. Moment problems on \({\mathbb {R}}^d\) intertwine many different areas of mathematics such as matrix and operator theory, probability theory, optimisation theory, and the theory of orthogonal polynomials. 
Various applications for moment problems on \({\mathbb {R}}^d\) can be found in control theory, polynomial optimisation and mathematical finance (see, e.g., Lasserre [59] and Laurent [60]). For approaches to the multidimensional moment problem which utilise techniques from real algebra see Marshall [61] and Prestel and Delzell [66]. For a treatment of the abstract multidimensional moment problem see Berg, Christensen and Ressel [7] and Sasvári [68], which, in addition, treats indefinite analogues of multidimensional moment problems.

The truncated moment problem on \({\mathbb {R}},\) that is, where one is given a truncated sequence \((s_j)_{j=0}^m\) with \(s_j\in {\mathbb {R}}\) for \(j=0, \dots , m,\) has a concrete solution which can be communicated in terms of positivity of a Hankel matrix and checking a range inclusion. Moreover, a minimal representing measure can be constructed from the zeros of the polynomial describing a rank-preserving positive extension. We refer the reader to the classical works of Akhiezer [1], Akhiezer and Krein [2], Krein and Nudel’man [58], Shohat and Tamarkin [73] and the fairly recent work of Curto and Fialkow [15]. An area of active interest concerns the truncated moment problem on \({\mathbb {N}}_0\) where one seeks a measure whose support is contained in a given closed subset \(K\subseteq {\mathbb {N}}_0\) (see, e.g., Infusino, Kuna, Lebowitz and Speer [49]).

Curto and Fialkow in a series of papers studied scalar truncated moment problems on \({\mathbb {R}}^d\) and \({\mathbb {C}}^d\) (which is equivalent to the truncated moment problem on \({\mathbb {R}}^{2d}\)). We refer the reader to [16,17,18,19,20,21,22] where concrete conditions for a solution to various moment problems are investigated. For connections between bivariate moment matrices and flat extensions see Fialkow and Nie [37, 38], Fialkow [35] and Curto and Yoo [25]. For the bivariate cubic moment problem we refer the reader to Curto, Lee and Yoon [24], the first author [50], and Curto and Yoo [26].

We next wish to mention alternative approaches to the flat extension theorem for the truncated moment problem on \({\mathbb {R}}^d\). The core variety approach to the truncated moment problem began with the study of Fialkow [36]. Subsequently, Blekherman and Fialkow in [8] strengthened the core variety approach to feature a necessary and sufficient condition for a solution. For additional results related to the core variety approach see Schmüdgen [72] and di Dio and Schmüdgen [30]. Recently, in [23], Curto, Ghasemi, Infusino and Kuhlmann investigated the theory of positive extensions of linear functionals showing the existence of an integral representation for the linear functional.

We now wish to bring the matrix-valued and operator-valued moment problem into focus. The matrix-valued moment problem on \({\mathbb {R}}\) was initially investigated by Krein [56, 57]. See [65] for a thorough review on Krein’s work on moment problems. Andô in [4] was the first to study the truncated moment problem in the operator-valued case. Narcowich studied the matrix-valued and operator-valued Stieltjes moment problem in [64]. Kovalishina studied the nondegenerate case in [54, 55]. Bolotnikov considered the degenerate truncated matrix-valued Hamburger and Stieltjes moment problems in terms of a linear fractional transformation, see [10,11,12]. Dym [31] considered the truncated matrix-valued Hamburger moment problem associating it with parametrised solutions of a matrix interpolation problem. Alpay and Loubaton in [3] treated the partial trigonometric moment problem on an interval in the matrix case, where Toeplitz matrices built from the moments are associated to orthogonal polynomials. For connections between matrix-valued orthogonal polynomials and CMV matrices we refer the reader to Dym and the first author [32].

Simonov studied the strong matrix-valued Hamburger moment problem in [74, 75]. The truncated matrix-valued moment problem on a finite closed interval was studied by Choque Rivero, Dyukarev, Fritzsche and Kirstein [13, 14]. Using Potapov’s method of Fundamental Matrix Inequalities they characterised the solutions by nonnegative Hermitian block Hankel matrices and they investigated further the case of an odd number of prescribed moments. Dyukarev, Fritzsche, Kirstein, Mädler and Thiele [34] studied the truncated matrix-valued Hamburger moment problem with an algebraic approach based on matrix-valued polynomials built from a nonnegative Hermitian block Hankel matrix. Dyukarev, Fritzsche, Kirstein and Mädler [33] studied the truncated matrix-valued Stieltjes moment problem via a similar approach.

Bakonyi and Woerdeman in [5] studied the univariate truncated matrix-valued Hamburger moment problem and the odd case of the bivariate truncated matrix-valued moment problem. The first author and Woerdeman in [53] investigated the odd case of the truncated matrix-valued K-moment problem on \({\mathbb {R}}^d,\) \({\mathbb {C}}^d\) and \({\mathbb {T}}^d,\) where they discovered easily checked commutativity conditions for the existence of a minimal representing measure.

Applications of matrix-valued moment problems and related topics have been studied extensively in recent years. Geronimo [39] studied scattering theory and matrix orthogonal polynomials with the construction of a matrix-valued distribution function built from matrix-valued moments. Dette and Studden in [27] investigated matrix orthogonal polynomials and matrix-valued measures associated with certain matricial moments from a numerical analysis point of view. In [28], Dette and Studden considered optimal design problems in linear models as a statistical application of the problem of maximising matrix-valued Hankel determinants built from matricial moments. Moreover, Dette and Tomecki in [29] studied the distribution of random Hankel block matrices and random Hankel determinant processes with respect to certain matricial moments.

In [63], Mourrain and Schmüdgen studied extensions and representations for Hermitian functionals \(L: {\mathscr {A}}\rightarrow {\mathbb {C}},\) where \({\mathscr {A}}\) is a unital \(*\)-algebra. Let \({\mathscr {C}}\) be a \(*\)-invariant subspace of a unital \(*\)-algebra \({\mathscr {A}}\) and \({\mathscr {C}}^2:=\mathrm {span}\{a b:a, b\in {\mathscr {C}} \}.\) Suppose \({\mathscr {B}}\subseteq {\mathscr {C}}\) is a \(*\)-invariant subspace of \({\mathscr {A}}\) such that \(1\in {\mathscr {B}}.\) Mourrain and Schmüdgen say that a Hermitian linear functional \(L: {\mathscr {C}}^2\rightarrow {\mathbb {C}}\) has a flat extension with respect to \({\mathscr {B}}\) if

where \(K_{L}({\mathscr {C}}):=\{a\in {\mathscr {C}}: L(b^*a)=0 \; \text {for all} \; b\in {\mathscr {C}} \}\). In [63], Mourrain and Schmüdgen showed that every positive flat linear functional \(L: {\mathscr {C}}^2\rightarrow {\mathbb {C}}\) has a unique extension \({\tilde{L}}: {\mathscr {A}}\rightarrow {\mathbb {C}}.\) Mourrain and Schmüdgen also showed that if \({\mathscr {A}}={\mathbb {C}}^{d\times d}[x_1,\dots , x_d]\) (see Definition 2.12), \({\mathscr {B}}={\mathbb {C}}^{d\times d}_n[x_1,\dots , x_d]\) (see Definition 2.13), \({\mathscr {C}}={\mathbb {C}}^{d\times d}_{n+1}[x_1,\dots , x_d]\) and \(L: {\mathscr {C}}^2\rightarrow {\mathbb {C}}\) is a positive linear functional which has a flat extension with respect to \({\mathscr {B}},\) then

for some choice of \(t_1,\dots , t_r\in {\mathbb {R}}^d\) and \(u_1,\dots , u_r \in {\mathbb {C}}^d\) with \(u_i={{\,\mathrm{col}\,}}(u_{ki})_{k=1}^{d}\) for \(i=1,\dots ,r,\) and in particular,

Structure

The paper is organised as follows. In Sects. 2, 3 and 4, we will formulate a number of definitions and basic results for future use. In Sect. 5.1, we will define infinite d-Hankel matrices and prove a number of results on the right ideal of matrix polynomials belonging to the kernel of a d-Hankel matrix. In Sect. 5.2 we will show that every \({\mathcal {H}}_p\)-valued multisequence which gives rise to a positive infinite d-Hankel matrix with finite rank has a representing measure. In Sect. 5.3, we will prove a number of necessary conditions for a \({\mathcal {H}}_p\)-valued multisequence to have a representing measure. In Sect. 5.4, we will precisely formulate and prove (C1). In Sect. 5.5, we will formulate a lemma which states that once a d-Hankel matrix has a flat extension, one can construct a sequence of flat extensions of all orders giving rise to a positive infinite d-Hankel matrix with finite rank. In Sect. 6, we will prove our flat extension theorem, i.e., (C2). In Sect. 7, we will prove an abstract characterisation of truncated \({\mathcal {H}}_p\)-valued multisequences with a representing measure, i.e., (C3). In Sect. 8.1, we will provide necessary and sufficient conditions for the bivariate quadratic matrix-valued moment problem to have a minimal solution. In Sect. 8.2, we will analyse the bivariate quadratic matrix-valued moment problem when the 2-Hankel matrix M(1) is block diagonal. In Sect. 8.3, we will consider some singular cases of the bivariate quadratic matrix-valued moment problem. In Sect. 8.4, we will see that the bivariate quadratic matrix-valued moment problem has a minimal solution whenever the corresponding 2-Hankel matrix M(1) is positive definite and satisfies a certain condition which automatically holds if \(p=1\), i.e., the first part of (C4). In Sect. 8.5 we will go through a number of examples for the bivariate quadratic matrix-valued moment problem. 
In particular, we will see that \(S_{00} = I_p\) and \(M(1) \succeq 0\) is not enough to guarantee that a minimal solution exists. Finally, in Sect. 9, we will consider a particular case of the bivariate cubic matrix-valued moment problem.

For the convenience of the reader we have compiled a list of commonly used notation that appears throughout the paper.

Notation

\({\mathbb {R}}^{m \times n}\) and \({\mathbb {C}}^{m \times n }\) denote the vector spaces of real and complex matrices of size \(m \times n\), respectively. We will let \({\mathcal {H}}_p\) denote the real vector space of \(p \times p\) Hermitian matrices in \({\mathbb {C}}^{p \times p}\).

The \(p \times p\) identity matrix will be denoted by \(I_p\) or I (when no confusion can possibly arise) and the \(p \times p\) matrix of zeros will be denoted by \(0_{p \times p}\) or 0 (when no confusion can possibly arise).

\({\mathbb {C}}^p\) denotes the p-dimensional complex vector space equipped with the standard inner product \(\langle \xi , \eta \rangle = \eta ^*\xi ,\) where \(\xi , \eta \in {\mathbb {C}}^p.\) The standard basis vectors in \({\mathbb {C}}^p\) will be denoted by \(\mathrm{e}_1, \ldots , \mathrm{e}_p\).

\(A^*\) and \(A^T\) denote the conjugate transpose and transpose, respectively, of a matrix \(A \in {\mathbb {C}}^{n \times n}\). If \(A \in {\mathbb {C}}^{n \times n}\) is invertible, then we will let \(A^{-*}:= (A^{-1})^* = (A^*)^{-1}\).

Let \(M \in {\mathbb {C}}^{m \times n}\). Then \({\mathcal {C}}_{M}\) and \(\mathrm{ker}(M)\) denote the column space and null space of M, respectively.

\(\sigma (A)\) denotes the spectrum of a matrix \(A \in {\mathbb {C}}^{n \times n}\).

We will write \(A \succeq 0\) (resp. \(A \succ 0\)) if A is positive semidefinite (resp. positive definite).

\({{\,\mathrm{col}\,}}(C_{\lambda })_{\lambda \in \Lambda }\) and \({{\,\mathrm{row}\,}}(C_{\lambda })_{\lambda \in \Lambda }\) denote the column and row vectors with entries \((C_{\lambda })_{\lambda \in \Lambda }\), respectively.

\({\mathbb {N}}_0^d\), \({\mathbb {R}}^d\) and \({\mathbb {C}}^d\) denote the set of d-tuples of nonnegative integers, real numbers and complex numbers, respectively.

\(|\gamma |:= \displaystyle \sum _{j=1}^d \gamma _j\) for \(\gamma = (\gamma _1, \ldots , \gamma _d) \in {\mathbb {N}}_0^d\).

\(x^{\gamma }:= \displaystyle \prod _{j=1}^d x_j^{\gamma _j}\) for \(x = (x_1, \ldots ,x_d) \in {\mathbb {R}}^d\) and \(\gamma = (\gamma _1, \ldots , \gamma _d) \in {\mathbb {N}}_0^d\).

\(\varepsilon _j \in {\mathbb {N}}_0^d\) denotes a d-tuple of zeros with 1 in the j-th entry.

\(\Gamma _{m, d}:= \{ \gamma \in {\mathbb {N}}_0^d: 0 \le |\gamma | \le m \}\).

\(\prec _{\mathrm {grlex}}\) denotes the graded lexicographic order (see Definition 3.1).

\({\mathbb {C}}^{p \times p}[x_1, \ldots , x_d]\) denotes the ring of matrix polynomials in d indeterminate variables \(x_1, \ldots , x_d\) with coefficients in \({\mathbb {C}}^{p \times p}\).

\({\mathbb {C}}^{p \times p}_n[x_1, \ldots , x_d]\) denotes the subset of \({\mathbb {C}}^{p \times p}[x_1, \ldots , x_d]\) of matrix polynomials of total degree at most n, i.e., matrix polynomials of the form

$$\begin{aligned} P(x) = \sum _{\lambda \in \Gamma _{n, d}} x^\lambda P_\lambda . \end{aligned}$$

\({\mathcal {V}}(M(n))\) will denote the variety of the d-Hankel matrix M(n) (see Definition 3.5).

\({\mathscr {B}}({\mathbb {R}}^d)\) will denote the sigma algebra of Borel sets on \({\mathbb {R}}^d\).

\({{\,\mathrm{card}\,}}\Omega \) denotes the cardinality of the set \(\Omega \subseteq {\mathbb {R}}^d\).

2 Preliminaries

In this section we shall provide preliminary definitions and results for future use.

We will begin with a useful characterisation for positive extensions given by Smuljan [76] via the following result.

Lemma 2.1

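([76]) Let \(A\in {\mathbb {C}}^{n\times n}, A \succeq 0, B\in {\mathbb {C}}^{n\times m}, C\in {\mathbb {C}}^{m\times m}\) and let

$$\begin{aligned} {\tilde{A}} = \begin{pmatrix} A &{} B \\ B^* &{} C \end{pmatrix}. \end{aligned}$$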

Then the following statements hold:

(i) \({\tilde{A}}\) is positive semidefinite if and only if \(B=AW\) for some \(W\in {\mathbb {C}}^{n\times m}\) and \(C\succeq W^*AW.\)

(ii) \({\tilde{A}}\) is positive semidefinite and \({{\,\mathrm{rank}\,}}{\tilde{A}}= {{\,\mathrm{rank}\,}}A\) if and only if \(B=AW\) for some \(W\in {\mathbb {C}}^{n\times m}\) and \(C= W^*AW.\)
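As a quick sanity check of Lemma 2.1, consider the scalar case \(n = m = 1\) with \(A = 1\), assuming that \({\tilde{A}}\) denotes the block matrix with first row \((A, B)\) and second row \((B^*, C)\). The numbers below are illustrative only. Every \(B = b \in {\mathbb {C}}\) is of the form \(B = AW\) with \(W = b\), and \(W^*AW = |b|^2\), so for \(C = c \in {\mathbb {R}}\) the two statements read

$$\begin{aligned} \begin{pmatrix} 1 & b \\ {\bar{b}} & c \end{pmatrix} \succeq 0 \iff c \ge |b|^2, \qquad \begin{pmatrix} 1 & b \\ {\bar{b}} & c \end{pmatrix} \succeq 0 \ \text {with rank } 1 \iff c = |b|^2. \end{aligned}$$

By contrast, if \(A = 0\), then the range condition \(B = AW\) forces \(b = 0\). Indeed, for \(b \ne 0\) the matrix with rows \((0, b)\) and \(({\bar{b}}, c)\) has determinant \(-|b|^2 < 0\) and cannot be positive semidefinite, whatever the value of c.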

Definition 2.2

Let \((v_{\gamma })_{\gamma \in \Gamma _{m, d}},\) where \(v_{\gamma }\in {\mathbb {C}}^{p \times q}\) for \(\gamma \in \Gamma _{m, d}.\) We let

Definition 2.3

Let \({\mathcal {B}}({\mathbb {R}}^d)\) denote the collection of Borel sets on \({\mathbb {R}}^d\) and \(\langle u, v \rangle = v^* u\) for \(u, v \in {\mathbb {C}}^p\). A function \(T: {{\mathcal {B}}({\mathbb {R}}^d)}\rightarrow {\mathcal {H}}_p\) is called a positive \({\mathcal {H}}_p\)-valued Borel measure on \({\mathbb {R}}^d,\) if for each \(u\in {\mathbb {C}}^p\), \(\langle T(\sigma )u, u\rangle \) defines a positive Borel measure on \({\mathbb {R}}^d\) for all \(\sigma \in {{\mathcal {B}}({\mathbb {R}}^d)},\) or, equivalently,

The support of an \({\mathcal {H}}_p\)-valued measure T, denoted by \({{\,\mathrm{supp}\,}}T,\) is defined as the smallest closed subset \({\mathcal {S}}\subseteq {\mathbb {R}}^d\) such that \(T({\mathbb {R}}^d{\setminus }{\mathcal {S}})=0_{p \times p}.\)

Definition 2.4

For a measurable function \(f: {\mathbb {R}}^d\rightarrow {\mathbb {C}}\), we let its integral

be given by

for all \(u, v \in {\mathbb {C}}^p\), provided all integrals on the right-hand side converge.

Remark 2.5

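If an \({\mathcal {H}}_p\)-valued measure T is of the form

$$\begin{aligned} T = \sum _{a=1}^{\kappa } Q_a \delta _{w^{(a)}}, \end{aligned}$$

then

$$\begin{aligned} \int _{{\mathbb {R}}^d} f(x) \, dT(x) = \sum _{a=1}^{\kappa } f(w^{(a)}) \, Q_a. \end{aligned}$$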

Definition 2.6

The power moments of a positive \({\mathcal {H}}_p\)-valued measure \(T = (T_{a,b})_{a,b=1}^p\) on \({\mathbb {R}}^d\) are given by

provided

Definition 2.7

For a measurable function \(f: {\mathbb {R}}^d\rightarrow {\mathbb {C}}\), we let its integral

be given by

for all \(u, v \in {\mathbb {C}}^p\), provided all integrals on the right-hand side converge, that is,

or, equivalently,

where \(T_{ab}\) is as in Definition 2.3.

Remark 2.8

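If an \({\mathcal {H}}_p\)-valued measure T is of the form

$$\begin{aligned} T = \sum _{a=1}^{\kappa } Q_a \delta _{w^{(a)}}, \end{aligned}$$

then it is easy to check that

$$\begin{aligned} \int _{{\mathbb {R}}^d} f(x) \, dT(x) = \sum _{a=1}^{\kappa } f(w^{(a)}) \, Q_a. \end{aligned}$$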

Definition 2.9

The power moments of a positive \({\mathcal {H}}_p\)-valued measure T on \({\mathbb {R}}^d\) are given by

provided

Definition 2.10

Given distinct points \(w^{(1)}, \dots , w^{(k)}\in {\mathbb {R}}^d\) and a subset \(\Lambda =\{\lambda ^{(1)}, \dots , \lambda ^{(k)}\}\) of \({\mathbb {N}}_0^d,\) we define the multivariable Vandermonde matrix by

We now present [53, Theorem 2.13], which is based on [69, Algorithm 1] and provides useful machinery when the invertibility of a multivariable Vandermonde matrix is needed to compute the weights of a representing measure.

Theorem 2.11

Given distinct points \(w^{(1)}, \dots ,w^{(\kappa )} \in {\mathbb {R}}^d,\) there exists \(\Lambda \subseteq {\mathbb {N}}_0^d\) such that \({{\,\mathrm{card}\,}}\Lambda =\kappa \) and \(V(w^{(1)}, \dots ,w^{(\kappa )};\Lambda )\) is invertible.
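The following small example illustrates why the index set \(\Lambda \) in Theorem 2.11 has to be adapted to the points. It is a sketch under the assumption that the \((a, b)\) entry of \(V(w^{(1)}, \dots , w^{(\kappa )};\Lambda )\) is \((w^{(a)})^{\lambda ^{(b)}}\) (transposing would not affect invertibility). The points are our own choice. Let \(d = 2\), \(\kappa = 3\) and take the collinear points \(w^{(1)} = (0,0)\), \(w^{(2)} = (1,0)\), \(w^{(3)} = (2,0)\). For \(\Lambda = \{(0,0), (1,0), (0,1)\}\) and \(\Lambda ' = \{(0,0), (1,0), (2,0)\}\) we get

$$\begin{aligned} V(w^{(1)}, w^{(2)}, w^{(3)};\Lambda ) = \begin{pmatrix} 1 & 0 & 0 \\ 1 & 1 & 0 \\ 1 & 2 & 0 \end{pmatrix}, \qquad V(w^{(1)}, w^{(2)}, w^{(3)};\Lambda ') = \begin{pmatrix} 1 & 0 & 0 \\ 1 & 1 & 1 \\ 1 & 2 & 4 \end{pmatrix}. \end{aligned}$$

The first matrix is singular, since the monomial \(x_2\) vanishes at all three points, while the second has determinant 2 and is therefore invertible.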

Definition 2.12

Let \({\mathbb {C}}^{p \times p}[x_1,\dots , x_d]\) denote the set of \(p\times p\) matrix-valued polynomials with real indeterminates \(x_1,\dots , x_d,\) that is, \({\mathbb {C}}^{p \times p}[x_1,\dots , x_d]\) consists of matrix-valued polynomials of the form

$$\begin{aligned} P(x) = \sum _{\lambda \in \Gamma _{n, d}} x^\lambda P_\lambda , \end{aligned}$$

where \(P_\lambda \in {\mathbb {C}}^{p \times p},\) \(x^\lambda = \prod \nolimits _{j=1}^{d} x_j^{ \lambda _j}\) for \(\lambda \in \Gamma _{n, d}\) and \(n\in {\mathbb {N}}_0 \) is arbitrary.

Definition 2.13

Let \({\mathbb {C}}^{p \times p}_n[x_1,\dots , x_d]\) denote the set of \(p\times p\) matrix-valued polynomials with degree at most n with real indeterminates \(x_1,\dots , x_d,\) that is, \({\mathbb {C}}^{p \times p}_n[x_1,\dots , x_d]\) consists of matrix-valued polynomials of the form

$$\begin{aligned} P(x) = \sum _{\lambda \in \Gamma _{n, d}} x^\lambda P_\lambda , \end{aligned}$$

where \(P_\lambda \in {\mathbb {C}}^{p \times p},\) \(x^\lambda = \prod \nolimits _{j=1}^{d} x_j^{ \lambda _j}\) for \(\lambda \in \Gamma _{n, d}.\)

3 d-Hankel Matrices

In this section we will define d-Hankel matrices and the variety of a d-Hankel matrix.

Definition 3.1

We order \({\mathbb {N}}_0^d\) by the graded lexicographic order \(\prec _{\mathrm {grlex}},\) that is, \(\gamma \prec _{\mathrm {grlex}} {\tilde{\gamma }}\) if \(|\gamma |< |{\tilde{\gamma }}|,\) or, if \(|\gamma |= |{\tilde{\gamma }}|\) then \(x^{\gamma }\prec _{{{\,\mathrm{lex}\,}}} x^{\tilde{{\gamma }}}.\) We note that \(\Gamma _{m, d}\) inherits the ordering of \({\mathbb {N}}_0^d\) and is such that

Definition 3.2

Let \(S:=(S_\gamma )_{\gamma \in \Gamma _{2n, d}}\) be a given truncated \( {\mathcal {H}}_p\)-valued multisequence and M(n) be the corresponding d-Hankel matrix based on S and defined as follows. We label the block rows and block columns by a family of monomials \((x^\gamma )_{\gamma \in \Gamma _{n, d}}\) ordered by \(\prec _{\mathrm {grlex}}.\) We let the entry in the block row indexed by \(x^\gamma \) and in the block column indexed by \(x^{{\tilde{\gamma }}}\) be given by

$$\begin{aligned} S_{\gamma + {\tilde{\gamma }}}. \end{aligned}$$
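To make the block structure concrete, consider \(d = 2\) and \(n = 1\). This is a sketch which assumes that the entry indexed by \((x^\gamma , x^{{\tilde{\gamma }}})\) is \(S_{\gamma + {\tilde{\gamma }}}\) and that the monomials of degree at most one are listed as \(1, x_1, x_2\), in line with the listing \((S_{00}, S_{10}, S_{01}, S_{20}, S_{11}, S_{02})\) used for bisequences in the Introduction. Then

$$\begin{aligned} M(1) = \begin{pmatrix} S_{00} & S_{10} & S_{01} \\ S_{10} & S_{20} & S_{11} \\ S_{01} & S_{11} & S_{02} \end{pmatrix} \in {\mathbb {C}}^{3p \times 3p}. \end{aligned}$$

For instance, the block in the row labelled \(x_1\) and the column labelled \(x_2\) is \(S_{(1,0)+(0,1)} = S_{11}\). In general M(n) has \({{\,\mathrm{card}\,}}\Gamma _{n,d} = \left( {\begin{array}{c}n+d\\ d\end{array}}\right) \) block rows and block columns.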

Definition 3.3

We will say that a representing measure T for a given truncated \( {\mathcal {H}}_p\)-valued multisequence \(S:=(S_\gamma )_{\gamma \in \Gamma _{2n, d}}\) is minimal, if \(\sum \nolimits _{a=1}^{\kappa } {{\,\mathrm{rank}\,}}Q_a\) (see Definition 2.3) is as small as possible.

Remark 3.4

It turns out that the corresponding d-Hankel matrix M(n) of S has the property that \({{\,\mathrm{rank}\,}}M(n) \le \sum \nolimits _{a=1}^{\kappa } {{\,\mathrm{rank}\,}}Q_a\) for any representing measure of S (see Lemma 5.57) and hence, any minimal representing measure T satisfies

$$\begin{aligned} \sum _{a=1}^{\kappa } {{\,\mathrm{rank}\,}}Q_a = {{\,\mathrm{rank}\,}}M(n). \end{aligned}$$

We next define the variety of a d-Hankel matrix in our matrix-valued setting. We introduce zeros of determinants of matrix-valued polynomials, thereby abstracting the notion of the variety of a d-Hankel matrix, which first appeared implicitly in Curto and Fialkow [16].

Definition 3.5

(variety of a d-Hankel matrix) Let \(S:=(S_\gamma )_{\gamma \in \Gamma _{2n, d}}\) be a truncated \({\mathcal {H}}_p\)-valued multisequence and let M(n) be the corresponding d-Hankel matrix. Let \(P(x)= \sum _{\lambda \in \Gamma _{n, d}} x^\lambda P_\lambda \in {\mathbb {C}}^{p \times p}_n[x_1,\dots , x_d]\) such that \(P(X)\in C_{M(n)}.\) The variety of M(n), denoted by \({\mathcal {V}}(M(n)),\) is given by

4 Matrix-Valued Polynomials

We introduce important definitions and notation while establishing several algebraic results involving matrix-valued polynomials with several real indeterminates which will be important for proving our flat extension theorem for matricial moments.

Definition 4.1

A set \({\mathscr {I}}\subseteq {\mathbb {C}}^{p \times p}[x_1,\dots , x_d]\) is a right ideal if it satisfies the following conditions:

(i) \(P+Q\in {\mathscr {I}}\) whenever \(P, Q\in {\mathscr {I}}\).

(ii) \(PQ\in {\mathscr {I}}\) whenever \(P\in {\mathscr {I}}\) and \(Q\in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d].\)
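A simple example may help to see why Definition 4.1 is genuinely one-sided when \(p \ge 2\). The sets below are our own illustration and are not taken from the paper. Since the indeterminates are scalar, evaluation is multiplicative, i.e., \((PQ)(w) = P(w)Q(w)\) for \(w \in {\mathbb {R}}^d\). Fix \(w \in {\mathbb {R}}^d\) and put

$$\begin{aligned} {\mathscr {J}}:= \{ P \in {\mathbb {C}}^{2 \times 2}[x_1,\dots , x_d]: \mathrm{e}_1^* P(w) = 0 \}. \end{aligned}$$

If \(P \in {\mathscr {J}}\), then \(\mathrm{e}_1^* (PQ)(w) = (\mathrm{e}_1^* P(w)) Q(w) = 0\) for every Q, so \({\mathscr {J}}\) satisfies (i) and (ii). However, \({\mathscr {J}}\) is not closed under multiplication from the left. Writing \(E_{jk}\) for the matrix with 1 in the (j, k)-th entry and zeros elsewhere, the constant polynomial \(P = E_{22}\) belongs to \({\mathscr {J}}\), while \(E_{12} P = E_{12}\) does not, because \(\mathrm{e}_1^* E_{12} = \mathrm{e}_2^* \ne 0\).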

Definition 4.2

Let \({\mathscr {I}}\subseteq {\mathbb {C}}^{p \times p}[x_1,\dots , x_d]\) be a right ideal. We shall let

be the variety associated with the ideal \({\mathscr {I}}.\)

Definition 4.3

A right ideal \({\mathscr {I}}\subseteq {\mathbb {C}}^{p \times p}[x_1,\dots , x_d]\) is real radical if

Remark 4.4

We wish to justify the usage of the moniker real radical of Definition 4.3 when \(p=1.\) We note that one usually says that a real ideal \({\mathscr {K}} \subseteq {\mathbb {R}}[x_1,\dots , x_d]\) is real radical if

(see, e.g., [60]). Suppose \({\mathscr {I}} ={\mathscr {I}}_1+{\mathscr {I}}_2\mathrm {i},\) where

and let \(f^{(a)}=q^{(a)}+r^{(a)}\mathrm {i},\) where

We claim that

holds. We wish to demonstrate a connection between the notion of a real ideal \({\mathscr {K}}\subseteq {\mathbb {R}}[x_1,\dots , x_d]\) being real radical and our notion of a complex ideal \({\mathscr {I}}\subseteq {\mathbb {C}}[x_1,\dots , x_d]\) being real radical, that is,

Then

Notice that \({\mathscr {I}}_1, {\mathscr {I}}_2\) are closed under scalar addition and multiplication and so they are ideals in \({\mathbb {R}}[x_1,\dots , x_d].\) If \(\sum \nolimits _{a=1}^\kappa |f^{(a)}(x)|^2\in {\mathscr {I}},\) then \(q^{(a)}+r^{(a)}\mathrm {i} \in {\mathscr {I}}\) for all \(a=1, \dots , \kappa .\) But then

since \({\mathscr {I}}={\mathscr {I}}_1+{\mathscr {I}}_2\mathrm {i}.\) However \(|f^{(a)}(x)|^2=(q^{(a)}(x))^2+(r^{(a)}(x))^2\) and so

can be written as

Notice that \(\sum \nolimits _{a=1}^\kappa ((q^{(a)}(x))^2+(r^{(a)}(x))^2)\in {\mathscr {I}}_1\) from which we conclude that the claim (4.1) holds.
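For intuition, here are three ideals in \({\mathbb {C}}[x]\) (so \(p = 1\) and \(d = 1\)). We test them against the condition used in this remark, namely that \(\sum \nolimits _{a=1}^\kappa |f^{(a)}(x)|^2\in {\mathscr {I}}\) should imply \(f^{(a)} \in {\mathscr {I}}\) for all a. The examples are our own and assume this reading of Definition 4.3 when \(p = 1\).

$$\begin{aligned} {\mathscr {I}} = \langle x^2 \rangle :&\quad |x|^2 = x^2 \in {\mathscr {I}} \ \text {but} \ x \notin {\mathscr {I}}, \ \text {so} \ {\mathscr {I}} \ \text {is not real radical}; \\ {\mathscr {I}} = \langle x^2 + 1 \rangle :&\quad |x|^2 + |1|^2 = x^2 + 1 \in {\mathscr {I}} \ \text {but} \ 1 \notin {\mathscr {I}}, \ \text {so} \ {\mathscr {I}} \ \text {is not real radical}; \\ {\mathscr {I}} = \langle x \rangle :&\quad \sum \nolimits _{a=1}^\kappa |f^{(a)}(0)|^2 = 0 \ \text {forces} \ f^{(a)}(0) = 0, \ \text {i.e.,} \ f^{(a)} \in {\mathscr {I}}, \ \text {so} \ {\mathscr {I}} \ \text {is real radical}. \end{aligned}$$

The second ideal shows that being radical in the usual sense is not enough: \(x^2 + 1\) has no real zeros, so the ideal it generates cannot be recovered from its (empty) real variety.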

In the following remark we will introduce an additional assumption on \({\mathscr {I}}\subseteq {\mathbb {C}}[x_1,\dots , x_d]\) which appears in Remark 4.4. As we noted in Remark 4.4, \({\mathscr {I}}={\mathscr {I}}_1+{\mathscr {I}}_2\mathrm {i},\) where \({\mathscr {I}}_1, {\mathscr {I}}_2\) are real ideals in \({\mathbb {R}}[x_1,\dots , x_d].\) Thus, it is clear that \(f\in {\mathscr {I}}\) vanishes on a set \(V\subseteq {\mathbb {R}}^d\) if and only if \({{\,\mathrm{Re}\,}}(f(x))\) and \(\pm {{\,\mathrm{Im}\,}}(f(x))\) vanish on V. In view of the Real Nullstellensatz (see, e.g., [9]), any real radical ideal must agree with its vanishing ideal (that is, the set of polynomials which vanish on the variety). Therefore, if \({\mathscr {I}}\subseteq {\mathbb {C}}[x_1,\dots , x_d]\) is real radical, then \(f\in {\mathscr {I}}\) implies that \({\bar{f}}\in {\mathscr {I}}.\)

Remark 4.5

Let \({\mathscr {I}}\subseteq {\mathbb {C}}[x_1,\dots , x_d]\) and \({\mathscr {I}}_1, {\mathscr {I}}_2\subseteq {\mathbb {R}}[x_1,\dots , x_d]\) be as in Remark 4.4. Suppose \({\mathscr {I}}\) has the additional property that \(f\in {\mathscr {I}}\) implies \({\bar{f}}\in {\mathscr {I}}.\) Then

-

(i)

\({\mathscr {I}}_1\subseteq {\mathbb {R}}[x_1,\dots , x_d]\) is real radical.

-

(ii)

\({\mathscr {I}}_2\subseteq {\mathbb {R}}[x_1,\dots , x_d]\) is real radical.

Since \({\mathscr {I}}\) is an ideal in \({\mathbb {C}}[x_1,\dots , x_d]\) which is closed under complex conjugation, we have that \({\mathscr {I}}_1\) and \({\mathscr {I}}_2\) are subideals of \({\mathscr {I}}\) over \({\mathbb {R}}[x_1,\dots , x_d].\) Hence, we may use the fact that \({\mathscr {I}}\) is real radical to deduce (i) and (ii).

Lemma 4.6

Fix \(\gamma \in {\mathbb {N}}_0^d \) with \(|\gamma |>n\) and let \(P(x)= x^{\gamma }I_p + \sum \nolimits _{\lambda \in \Gamma _{n, d}} x^\lambda P_\lambda \in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d].\) Then

where \(\gamma p:= (\gamma _1 p, \dots , \gamma _d p)\in {\mathbb {N}}_0^d \) and \(m<|\gamma |p.\)

Proof

We proceed by induction on p. For \(p=2,\) \(P(x) =\begin{pmatrix} x^{\gamma } + \beta _{11}(x) &{} \beta _{12}(x)\\ \beta _{21}(x) &{} x^{\gamma } + \beta _{22}(x) \end{pmatrix},\) where \(\beta _{ab}(x)= \sum \nolimits _{\lambda \in \Gamma _{n, d}} x^\lambda P_\lambda ^{(a, b)} \in {\mathbb {C}}[x_1,\dots , x_d]\) with \(P_\lambda ^{(a, b)}\) the (a, b)-th entry of \(P_\lambda \) and \(1\le a, b \le 2.\) We also have

where \(L(x)=x^{\gamma }\beta _{22}(x) + x^{\gamma }\beta _{11}(x),\;C(x)= \beta _{11}(x)\beta _{22}(x)- \beta _{12}(x)\beta _{21}(x)\in {\mathbb {C}}[x_1,\dots , x_d].\) Suppose the claim holds for \(p>2.\) We have

and so

Let \({\widetilde{L}}(x)\) be the sum of the terms of \(\det P(x)\) of degree up to \( \gamma (p-1)\) with \(|\gamma |>0 \) and \({\widetilde{C}}(x)\) the sum of the terms of \(\det P(x)\) of degree up to \( \gamma p\) with \(|\gamma |=0.\) Then

where \(m<|\gamma |p.\) Thus

\(\square \)
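
As a sanity check of Lemma 4.6 in the smallest case, the following worked example takes \(p=2,\) \(d=1,\) \(\gamma =2\) and \(n=1.\) The entries \(\beta _{11}(x)=x,\) \(\beta _{22}(x)=-x\) and \(\beta _{12}(x)=\beta _{21}(x)=1\) are chosen here purely for illustration and are not taken from the text.

```latex
\[
P(x) = \begin{pmatrix} x^{2} + x & 1 \\ 1 & x^{2} - x \end{pmatrix},
\qquad
\det P(x) = (x^{2}+x)(x^{2}-x) - 1 = x^{4} - x^{2} - 1 .
\]
% In the notation of the proof (illustrative values only):
%   L(x) = x^{2}\,(\beta_{11}(x) + \beta_{22}(x)) = x^{2}(x - x) = 0,
%   C(x) = \beta_{11}(x)\beta_{22}(x) - \beta_{12}(x)\beta_{21}(x) = -x^{2} - 1.
```

In this instance the leading term is \(x^{\gamma p}=x^{4}\) and every other monomial has degree at most \(2<|\gamma |p=4.\)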

We order the monomials in \({\mathbb {C}}[x_1,\dots , x_d]\) by the graded lexicographic order \(\prec _{\mathrm {grlex}}.\)

Remark 4.7

Fix \(\gamma \in {\mathbb {N}}_0^d \) with \(|\gamma |>n\) and let \(P(x)= x^{\gamma }I_p + \sum \nolimits _{\lambda \in \Gamma _{n, d}} x^\lambda P_\lambda \in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d].\) For a polynomial \(\varphi (x) \in {\mathbb {C}}[x_1,\dots , x_d]\) given by

where \(\gamma p:= (\gamma _1 p, \dots , \gamma _d p)\in {\mathbb {N}}_0^d\) and \(m<|\gamma |p,\) the leading term of \(\varphi (x)\) is

Definition 4.8

We define the basis of \({\mathbb {C}}^{p \times p}\) viewed as a vector space over \({\mathbb {C}}\)

where \(E_{jk} \in {\mathbb {C}}^{p \times p} \) is the matrix with 1 in the (j, k)-th entry and 0 in the rest of the entries, \(j, k= 1, \dots , p.\)

Definition 4.9

Given a right ideal \({\mathscr {I}}\subseteq {\mathbb {C}}^{p \times p}[x_1,\dots , x_d],\) we define

where \(E_{jk}\in {\mathbb {C}}^{p \times p}\) is as in Definition 4.8 for all \(j, k= 1, \dots , p.\)

Lemma 4.10

Suppose \({\mathscr {I}}\subseteq {\mathbb {C}}^{p \times p}[x_1,\dots , x_d] \) is a right ideal. Then \({\mathscr {I}}_{jk}\subseteq {\mathbb {C}}[x_1,\dots , x_d]\) is an ideal for all \(j, k= 1, \dots , p.\)

Proof

If \(f, g \in {\mathscr {I}}_{jk},\) then

and

Since \((f+g)(x)E_{jk}=(F+G)(x)E_{jk}, \) we have

If \(f\in {\mathscr {I}}_{jk}\) and \(h \in {\mathbb {C}}[x_1,\dots , x_d], \) then

and thus \( fh \in {\mathscr {I}}_{jk}. \) \(\square \)

Lemma 4.11

Suppose \({\mathscr {I}}\subseteq {\mathbb {C}}^{p \times p}[x_1,\dots , x_d] \) is a right ideal. If \({\mathscr {I}} \) is real radical, then \({\mathscr {I}}_{jj}\) is real radical for all \(j= 1, \dots , p.\)

Proof

We need to show

Let \(f(x)=\sum \nolimits _{a=1}^{\kappa } |f^{(a)} (x)|^2 \in {\mathscr {I}}_{jj}.\) Then there exists \(F\in {\mathscr {I}}\) such that

Without loss of generality, we may assume that \(F(x)=f(x)E_{jj}.\) If we let \(F^{(a)}(x)= f^{(a)}(x)E_{jj},\) then

Thus

and hence

which implies that \(F^{(a)}(x) \in {\mathscr {I}}\; \text {for all} \; a=1, \dots , \kappa ,\) since \({\mathscr {I}}\) is real radical. Consequently,

and \({\mathscr {I}}_{jj}\) is real radical. \(\square \)
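
To illustrate the real radical property used in Lemma 4.11 in the scalar case, the following sketch contrasts two ideals of \({\mathbb {C}}[x_1, x_2].\) It assumes the formulation suggested by the proof above, namely that \(\sum \nolimits _{a=1}^{\kappa } |f^{(a)}|^2 \in {\mathscr {I}}\) forces \(f^{(a)} \in {\mathscr {I}}\) for every a. Both ideals are examples chosen here and do not appear in the text.

```latex
% Not real radical: a sum of squares lies in the ideal, the summands do not.
\[
\mathscr{I} = \langle x_1^{2} + x_2^{2} \rangle :
\qquad
|x_1|^{2} + |x_2|^{2} = x_1^{2} + x_2^{2} \in \mathscr{I},
\qquad
x_1 \notin \mathscr{I}
\quad (\text{nonzero elements of } \mathscr{I} \text{ have degree} \ge 2).
\]
% Real radical: the vanishing ideal of the real hyperplane x_1 = 0.
\[
\mathscr{I}' = \langle x_1 \rangle :
\qquad
\sum_{a=1}^{\kappa} |f^{(a)}|^{2} \in \mathscr{I}'
\;\Longrightarrow\;
f^{(a)}(0, x_2) = 0 \ \text{for all real } x_2
\;\Longrightarrow\;
f^{(a)} \in \mathscr{I}' .
\]
```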

5 Positive Infinite d-Hankel Matrices with Finite Rank

We shall study positive infinite d-Hankel matrices with finite rank, necessary conditions for a truncated \({\mathcal {H}}_p\)-valued multisequence to have a representing measure and extension results for positive d-Hankel matrices.

5.1 Infinite d-Hankel Matrices

In this subsection we define d-Hankel matrices associated with an \({\mathcal {H}}_p\)-valued multisequence. We investigate positive infinite d-Hankel matrices with finite rank and a right ideal of matrix-valued polynomials generated by column relations.

Definition 5.1

Let \((V_\lambda )_{\lambda \in {\mathbb {N}}_0^d},\) where \(V_\lambda \in {\mathbb {C}}^{p\times p}\) for \(\lambda \in {\mathbb {N}}_0^d.\) We let

Definition 5.2

Let

Lemma 5.3

\(({\mathbb {C}}^{p \times p})_{0}^{\omega }\) is a right module over \({\mathbb {C}}^{p \times p},\) under the operation of addition given by

for \(A={{\,\mathrm{col}\,}}(A_\lambda )_{\lambda \in {\mathbb {N}}_0^d}, B={{\,\mathrm{col}\,}}(B_\lambda )_{\lambda \in {\mathbb {N}}_0^d}\in ({\mathbb {C}}^{p \times p})_{0}^{\omega },\) together with the right multiplication given by

for \(A={{\,\mathrm{col}\,}}(A_\lambda )_{\lambda \in {\mathbb {N}}_0^d}\in ({\mathbb {C}}^{p \times p})_{0}^{\omega }\) and \(C \in {\mathbb {C}}^{p \times p}.\)

Proof

The verification that \(({\mathbb {C}}^{p \times p})_{0}^{\omega }\) is a right module over \({\mathbb {C}}^{p \times p}\) is straightforward. \(\square \)

We now give the definition of an infinite d-Hankel matrix based on \(S^{(\infty )}:=(S_\gamma )_{\gamma \in {\mathbb {N}}_0^d},\) where \(S_\gamma \in {\mathcal {H}}_p\) for all \(\gamma \in {\mathbb {N}}_0^d.\)

Definition 5.4

(infinite d-Hankel matrix) Let \(S^{(\infty )}:=(S_\gamma )_{\gamma \in {\mathbb {N}}_0^d}\) be a given \( {\mathcal {H}}_p\)-valued multisequence. We define \(M(\infty )\) to be the corresponding moment matrix based on \(S^{(\infty )}\) as follows. We label the block rows and block columns by a family of monomials \((x^\gamma )_{\gamma \in {\mathbb {N}}_0^d}\) ordered by \(\prec _{\mathrm {grlex}}.\) We let the entry in the block row indexed by \(x^\gamma \) and in the block column indexed by \(x^{{\tilde{\gamma }}}\) be given by

Let \(X^\lambda :={{\,\mathrm{col}\,}}(S_{\lambda +\gamma })_{\gamma \in {\mathbb {N}}_0^d},\; \lambda \in \Gamma _{n, d}\) and \(C_{M(\infty )}=\{M(\infty )V: V \in ({\mathbb {C}}^{p \times p})_{0}^{\omega }\}.\) We notice that \(X^\lambda \in C_{M(\infty )}.\)

For \(S:=(S_\gamma )_{\gamma \in \Gamma _{2n, d}}\) a given truncated \( {\mathcal {H}}_p\)-valued multisequence and M(n) the corresponding d-Hankel matrix, we let \(X^\lambda :={{\,\mathrm{col}\,}}(S_{\lambda +\gamma })_{\gamma \in \Gamma _{n, d}}\) for \(\lambda \in \Gamma _{n, d}\) and \(C_{M(n)}\) be the column space of M(n).
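
For orientation, the block structure of M(n) can be written out in two small cases. The sketch below assumes that the block entry in row \(x^\gamma \) and column \(x^{{\tilde{\gamma }}}\) is \(S_{\gamma +{\tilde{\gamma }}},\) which is consistent with the columns \(X^\lambda ={{\,\mathrm{col}\,}}(S_{\lambda +\gamma })\) above, and that \(\Gamma _{n, d}=\{\gamma \in {\mathbb {N}}_0^d: |\gamma |\le n\}.\) The order of \(x_1\) and \(x_2\) within degree one is an assumption made only for this display.

```latex
% d = 1, n = 2: block rows and columns labelled by 1, x, x^2.
\[
M(2) = \begin{pmatrix} S_0 & S_1 & S_2 \\ S_1 & S_2 & S_3 \\ S_2 & S_3 & S_4 \end{pmatrix},
\qquad
X^{1} = \operatorname{col}(S_{1+\gamma})_{\gamma \in \Gamma_{2,1}}
      = \begin{pmatrix} S_1 \\ S_2 \\ S_3 \end{pmatrix}.
\]
% d = 2, n = 1: block rows and columns labelled by 1, x_1, x_2 (assumed order).
\[
M(1) = \begin{pmatrix}
S_{(0,0)} & S_{(1,0)} & S_{(0,1)} \\
S_{(1,0)} & S_{(2,0)} & S_{(1,1)} \\
S_{(0,1)} & S_{(1,1)} & S_{(0,2)}
\end{pmatrix}.
\]
```

In both cases the column labelled by \(x^\lambda \) is exactly \(X^\lambda ,\) and M(n) uses the moments \(S_\gamma \) with \(|\gamma |\le 2n.\)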

Remark 5.5

Let \(S^{(\infty )}:=(S_\gamma )_{\gamma \in {\mathbb {N}}_0^d}\) be a given \( {\mathcal {H}}_p\)-valued multisequence. Then we can view \(M(\infty ): ({\mathbb {C}}^{p \times p})_{0}^{\omega }\rightarrow C_{M(\infty )}\) as a right linear operator, that is,

for \(V={{\,\mathrm{col}\,}}(V_\lambda )_{\lambda \in {\mathbb {N}}_0^d}\in ({\mathbb {C}}^{p \times p})_{0}^{\omega }\;\text {and}\; Q={{\,\mathrm{col}\,}}(Q_\lambda )_{\lambda \in {\mathbb {N}}_0^d}\in ({\mathbb {C}}^{p \times p})_{0}^{\omega }.\)

Definition 5.6

Let \(S^{(\infty )}:=(S_\gamma )_{\gamma \in {\mathbb {N}}_0^d}\) be a given \( {\mathcal {H}}_p\)-valued multisequence and let \(M(\infty )\) be the corresponding d-Hankel matrix. Suppose \(M(\infty ) \succeq 0\). We define

where M(n) is the corresponding d-Hankel matrix based on \(S:=(S_\gamma )_{\gamma \in \Gamma _{2n, d} }.\)

Definition 5.7

Let \(S^{(\infty )}:=(S_\gamma )_{\gamma \in {\mathbb {N}}_0^d}\) be a given \( {\mathcal {H}}_p\)-valued multisequence and let \(M(\infty )\) be the corresponding d-Hankel matrix. Suppose \(M(\infty ) \succeq 0\). We define the right linear map

to be given by

where \(P(x)= \sum \nolimits _{\lambda \in \Gamma _{n, d}} x^\lambda P_\lambda \in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d].\)

Definition 5.8

Given \(P(x)= \sum \nolimits _{\lambda \in \Gamma _{n, d}} x^\lambda P_\lambda \in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d],\) we let

and

Remark 5.9

Let \(S^{(\infty )}:=(S_\gamma )_{\gamma \in {\mathbb {N}}_0^d}\) be a given \( {\mathcal {H}}_p\)-valued multisequence and let \(M(\infty )\succeq 0\) be the corresponding d-Hankel matrix. Given \(P(x)= \sum \nolimits _{\lambda \in \Gamma _{n, d}} x^\lambda P_\lambda \in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d],\) we observe that

Indeed, notice that

Definition 5.10

Let \(S^{(\infty )}:=(S_\gamma )_{\gamma \in {\mathbb {N}}_0^d}\) be a given \( {\mathcal {H}}_p\)-valued multisequence and let \(M(\infty )\) be the corresponding infinite d-Hankel matrix. Suppose \(M(\infty ) \succeq 0\) and

We will write \(M(\infty )\succeq 0\) if

or, equivalently, \(M(n)\succeq 0\) for all \(n\in {\mathbb {N}}_0.\)

Definition 5.11

Let \({\mathbb {C}}^p[x_1,\dots , x_d]\) be the set of vector-valued polynomials, that is,

where \(q_\lambda \in {\mathbb {C}}^p,\) \(x^\lambda = \prod \nolimits _{j=1}^{d} x_j^{ \lambda _j}\) for \(\lambda \in \Gamma _{n, d}\) and n is arbitrary.

We shall proceed with a result on positivity when \(M(\infty )\) is treated as a linear operator \(M(\infty ): ({\mathbb {C}}^p)_{0}^{\omega } \rightarrow {\tilde{C}}_{M(\infty )}. \)

Lemma 5.12

Let \(S^{(\infty )}:=(S_\gamma )_{\gamma \in {\mathbb {N}}_0^d}\) be a given \( {\mathcal {H}}_p\)-valued multisequence and let \(M(\infty )\) be the corresponding d-Hankel matrix. Suppose

If \(M(\infty )\succeq 0,\) then

Proof

Let \(P(x)= \sum \nolimits _{\lambda \in \Gamma _{n, d}} x^\lambda P_\lambda \in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d].\) Then by Definition 5.10, \(M(\infty )\succeq 0\) if

If \(e_{1} \) is a standard basis vector in \({\mathbb {C}}^p,\) then

Let \(q(x):= P(x)e_{1}.\) Notice that

Since \(P \in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d]\) is arbitrary, so is \(q\in {\mathbb {C}}^p[x_1,\dots , x_d]. \) Thus

\(\square \)
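
The step "since P is arbitrary, so is q" in the proof of Lemma 5.12 can be made explicit as follows. This is a sketch which assumes that \({\widehat{P}}={{\,\mathrm{col}\,}}(P_\lambda )\) and \({\hat{q}}={{\,\mathrm{col}\,}}(q_\lambda )\) denote the coefficient columns, and that \(e_1\) is the first standard basis vector of \({\mathbb {C}}^p.\)

```latex
% Any vector polynomial q is the first column of some matrix polynomial P.
\[
P(x) := \begin{pmatrix} q(x) & 0_p & \cdots & 0_p \end{pmatrix}
\in \mathbb{C}^{p \times p}[x_1, \dots, x_d]
\quad\Longrightarrow\quad
P(x)\, e_1 = q(x), \qquad \widehat{q} = \widehat{P}\, e_1 ,
\]
% Positivity of the matrix-valued form then passes to the scalar form.
\[
\widehat{q}^{\,*} M(\infty)\, \widehat{q}
= e_1^{*} \bigl( \widehat{P}^{*} M(\infty)\, \widehat{P} \bigr) e_1 \;\ge\; 0 .
\]
```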

Definition 5.13

Suppose \(M(\infty )\succeq 0.\) Let \(P \in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d].\) We define the set

and the kernel of the map \(\Phi : {\mathbb {C}}^{p \times p}[x_1,\dots , x_d]\rightarrow C_{M(\infty )}\) by

Lemma 5.14

Suppose \(M(\infty )\succeq 0.\) Then

where \({\mathcal {I}}\) and \(\ker \Phi \) are as in Definition 5.13.

Proof

By Definition 5.10, \(M(\infty )\succeq 0\) if \({\widehat{P}}^*M(\infty ){\widehat{P}}\succeq 0_{p\times p}\;\text {for all}\; P \in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d]\) and thus by Lemma 5.12, the corresponding d-Hankel matrix M(m) based on \(S:=(S_\gamma )_{\gamma \in \Gamma _{2m, d} }\) is positive semidefinite for all \(m\in {\mathbb {N}}.\) Hence \(M(m)^{\frac{1}{2}}\) exists and we let \(A:= M(m)^{\frac{1}{2}}{{\,\mathrm{col}\,}}(P_\lambda )_{\lambda \in \Gamma _{m, d}},\) for \(P(x)= \sum \nolimits _{\lambda \in \Gamma _{m, d}} x^\lambda P_\lambda .\) Since \(P\in {\mathcal {I}},\)

But \({\widehat{P}}^*M(\infty ){\widehat{P}}=A^*A\) and hence \(A^*A=0_{p\times p}.\) Thus, all singular values of A are 0 and so \({{\,\mathrm{rank}\,}}A=0,\) which forces

Therefore

and

We have to show

We will show that for all \(\ell \ge m,\)

First notice

and

We write

where

and

Since \(M(\ell )\succeq 0,\) by Lemma 2.1, there exists \(W\in {\mathbb {C}}^{({{\,\mathrm{card}\,}}\Gamma _{m, d})p \times ({{\,\mathrm{card}\,}}( \Gamma _{\ell , d}\setminus \Gamma _{m, d}))p}\) such that \( M(m)W=B\quad \text {and}\quad C \succeq W^*M(m) W.\) Then

by Eq. (5.1).

Thus, Eq. (5.2) holds for all \(\ell \ge m\) and we obtain

which implies \(P \in \ker \Phi .\)

Conversely, if \(P \in \ker \Phi \) then

and so \({\widehat{P}}^*M(\infty ){\widehat{P}}=0_{p\times p},\) that is, \(P \in {\mathcal {I}}.\) \(\square \)

Lemma 5.15

Suppose \(M(\infty )\succeq 0.\) Then \({\mathcal {I}}=\ker \Phi \) is a right ideal.

Proof

Let \(P, Q\in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d].\) We have to show the following:

(i) If \(P\in \ker \Phi \) and \(Q\in \ker \Phi ,\) then \(P+Q\in \ker \Phi .\)

(ii) If \(P\in \ker \Phi \) and \(Q\in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d],\) then \(PQ\in \ker \Phi .\)

To prove (i) notice that since \(P\in \ker \Phi ,\)

and similarly, since \(Q\in \ker \Phi ,\)

We then have

that is, \(P+Q\in \ker \Phi .\)

To prove (ii) we need to show that if \(P\in \ker \Phi \) and \(Q\in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d],\) then

For

we let

We will show

We have

But since \(P\in \ker \Phi ,\)

which means that

For \({\tilde{\gamma }}=\gamma +\lambda ',\) we have

and Eq. (5.3) holds. For any fixed \(\lambda '\in \Gamma _{n, d},\) by Eq. (5.3),

and so

Hence

Finally, since

we have

as desired and we derive that \(\ker \Phi \) is a right ideal. By Lemma 5.14, \({\mathcal {I}}=\ker \Phi \) and so \({\mathcal {I}}\) is a right ideal as well. \(\square \)

Definition 5.16

Suppose \(M(\infty )\succeq 0\) and let \({\mathcal {I}}\) be as in Definition 5.13. We define the right quotient module

of equivalence classes modulo \({\mathcal {I}},\) that is, we will write

whenever

Lemma 5.17

Suppose \(M(\infty ) \succeq 0\). Then \({\mathbb {C}}^{p \times p}[x_1,\dots , x_d]/{\mathcal {I}}\) is a right module over \({\mathbb {C}}^{p \times p},\) under the operation of addition \((+)\) given by

for \(P, P' \in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d],\) together with the right multiplication \((\cdot )\) given by

for \(P \in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d]\) and \(R\in {\mathbb {C}}^{p \times p}.\)

Proof

Let \(P, Q\in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d].\) The following properties can be easily checked:

(i) \(((P+{\mathcal {I}})+(Q+{\mathcal {I}}))R=(P+{\mathcal {I}})R+(Q+{\mathcal {I}})R \quad \text {for all}\; R\in {\mathbb {C}}^{p \times p}.\)

(ii) \((P+{\mathcal {I}})(R+S)=(P+{\mathcal {I}})R+(P+{\mathcal {I}})S\quad \text {for all}\; R,S\in {\mathbb {C}}^{p \times p}.\)

(iii) \((P+{\mathcal {I}})(SR)=((P+{\mathcal {I}})S)R\quad \text {for all}\; R,S\in {\mathbb {C}}^{p \times p}.\)

(iv) \((P+{\mathcal {I}})I_p=P+{\mathcal {I}}.\)

\(\square \)

Definition 5.18

Suppose \(M(\infty ) \succeq 0\). For every \(P, Q \in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d], \) we define the form

given by

The following lemma shows that the form in Definition 5.18 is a well-defined positive semidefinite sesquilinear form.

Lemma 5.19

Suppose \(M(\infty )\succeq 0\) and let \(P, Q \in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d].\) Then \([P+ {\mathcal {I}}, Q +{\mathcal {I}}]\) is well-defined, sesquilinear and positive semidefinite.

Proof

We first show that the form \([P+ {\mathcal {I}}, Q +{\mathcal {I}}]\) is well-defined. We need to prove that if \(P+ {\mathcal {I}}=P'+ {\mathcal {I}}\;\;\text {and}\;\; Q+ {\mathcal {I}}=Q'+ {\mathcal {I}},\) then

where \(P,P',Q,Q'\in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d].\) We have

Since \(P-P'\in {\mathcal {I}},\)

and since \(Q-Q'\in {\mathcal {I}},\)

We write

and

Summing both sides of Eqs. (5.4) and (5.5), we obtain

that is,

Therefore

We now show that \([P+ {\mathcal {I}}, Q +{\mathcal {I}}]\) is sesquilinear. Let \(A, {\tilde{A}} \in {\mathbb {C}}^{p \times p}.\) If

then

Let \({\tilde{m}}:=\max (m,n).\) Without loss of generality suppose \({\tilde{m}}=m.\) For \(\lambda \in \Gamma _{m, d} {\setminus }\Gamma _{n, d},\) let \(Q_\lambda :=0_{p\times p}.\) We may view Q as \(Q(x)= \sum \nolimits _{\lambda \in \Gamma _{m, d}} x^\lambda Q_\lambda .\) We have

and

and so \([P+ {\mathcal {I}}, Q +{\mathcal {I}}]\) is sesquilinear. Finally, we show that \([P+ {\mathcal {I}}, Q +{\mathcal {I}}]\) is positive semidefinite. By definition,

Moreover, it follows from the definition of \(M(\infty )\succeq 0\) (see Definition 5.10) that

Thus \([P+ {\mathcal {I}}, Q +{\mathcal {I}}]\) is positive semidefinite. \(\square \)

In analogy to Definition 3.5, we define the variety associated with the right ideal \({\mathcal {I}}.\)

Definition 5.20

Suppose \(M(\infty ) \succeq 0\). Let \({\mathcal {I}}\) be the right ideal as in Definition 5.13 and let the matrix-valued polynomial \(P(x)= \sum \nolimits _{\lambda \in \Gamma _{n, d}} x^\lambda P_\lambda \in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d].\) We define the variety associated with \({\mathcal {I}}\) by

Lemma 5.21

Let \(S:=(S_\gamma )_{\gamma \in \Gamma _{2n, d}}\) be a given truncated \( {\mathcal {H}}_p\)-valued multisequence and M(n) the corresponding d-Hankel matrix. Suppose \(M(n)\succeq 0\) has an extension \(M(n+1)\succeq 0. \) If there exists \(P(x)= \sum \nolimits _{\lambda \in \Gamma _{n, d}} x^\lambda P_\lambda \in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d]\) such that \(P(X)={{\,\mathrm{col}\,}}( 0_{p\times p})_{\gamma \in \Gamma _{n, d}}\in C_{M(n)},\) then

Proof

If there exists \(P\in {\mathbb {C}}^{p \times p}[x_1,\dots , x_d]\) such that \(P(X)={{\,\mathrm{col}\,}}( 0_{p\times p})_{\gamma \in \Gamma _{n, d}}\in C_{M(n)},\) then since \(M(n)\succeq 0,\) we have

We will show

Notice that

and

We write

where

and

Since \(M(n+1)\succeq 0,\) by Lemma 2.1, there exists \(W\in {\mathbb {C}}^{({{\,\mathrm{card}\,}}\Gamma _{n, d})p \times ({{\,\mathrm{card}\,}}( \Gamma _{n+1, d}\setminus \Gamma _{n, d}))p}\) such that

Then

by Eq. (5.6). Thus, Eq. (5.7) holds and the proof is complete. \(\square \)
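
The mechanism behind Lemma 5.21 (and the analogous step in the proof of Lemma 5.14) can be summarised in block form. The following is a sketch under the assumption that \(M(n+1)\) is partitioned with M(n) in the upper left corner and that W is the matrix supplied by Lemma 2.1; the symbol 0 stands for a zero block column of the appropriate size.

```latex
\[
M(n+1) = \begin{pmatrix} M(n) & B \\ B^{*} & C \end{pmatrix},
\qquad B = M(n)\, W ,
\]
% A column relation in M(n) is inherited by the new block row, since M(n) is Hermitian.
\[
M(n)\, \operatorname{col}(P_\lambda)_{\lambda \in \Gamma_{n,d}} = 0
\;\Longrightarrow\;
B^{*} \operatorname{col}(P_\lambda)_{\lambda \in \Gamma_{n,d}}
= W^{*} M(n)\, \operatorname{col}(P_\lambda)_{\lambda \in \Gamma_{n,d}} = 0 ,
\]
\[
M(n+1) \begin{pmatrix} \operatorname{col}(P_\lambda)_{\lambda \in \Gamma_{n,d}} \\ 0 \end{pmatrix}
= \begin{pmatrix} M(n)\, \operatorname{col}(P_\lambda)_{\lambda \in \Gamma_{n,d}} \\
                  B^{*} \operatorname{col}(P_\lambda)_{\lambda \in \Gamma_{n,d}} \end{pmatrix}
= 0 .
\]
```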

The following lemma is well-known, see, e.g., Horn and Johnson [47]. However, for the convenience of the reader, we provide a statement.

Lemma 5.22

Let \(A \in {\mathbb {C}}^{n \times n}\) and \(B\in {\mathbb {C}}^{m \times m}\) be given. Then

\(A \otimes B\) and \(B \otimes A\) are invertible if and only if A and B are both invertible.

Remark 5.23

By Lemma 5.22, the multivariable Vandermonde matrix \(V^{p \times p}(w^{(1)}, \dots , w^{(k)}; \Lambda )\) is invertible if and only if \(V(w^{(1)}, \dots , w^{(k)}; \Lambda )\) is invertible and \(I_p\) is invertible. However, since \(I_p\) is obviously invertible, we have \(V^{p \times p}(w^{(1)}, \dots , w^{(k)}; \Lambda )\) is invertible if and only if \(V(w^{(1)}, \dots , w^{(k)}; \Lambda )\) is invertible.
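
A two-point example in one variable makes Remark 5.23 concrete. The sketch below assumes, as the remark suggests, that \(V^{p \times p}(w^{(1)}, \dots , w^{(k)}; \Lambda )=V(w^{(1)}, \dots , w^{(k)}; \Lambda )\otimes I_p;\) the points \(w_1, w_2\in {\mathbb {R}},\) the monomials \(1, x\) and the row and column convention for V are choices made here for illustration. It uses the standard identity \(\det (A\otimes B)=(\det A)^m(\det B)^n\) for \(A \in {\mathbb {C}}^{n \times n}\) and \(B\in {\mathbb {C}}^{m \times m}.\)

```latex
\[
V = \begin{pmatrix} 1 & w_1 \\ 1 & w_2 \end{pmatrix},
\qquad
V \otimes I_p = \begin{pmatrix} I_p & w_1 I_p \\ I_p & w_2 I_p \end{pmatrix},
\]
\[
\det (V \otimes I_p) = (\det V)^{p}\, (\det I_p)^{2} = (w_2 - w_1)^{p} .
\]
```

Thus, in this instance, \(V \otimes I_p\) is invertible exactly when \(w_1\ne w_2,\) which is the same condition as for V.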

5.2 Existence of a Representing Measure for a Positive Infinite d-Hankel Matrix with Finite Rank

In this subsection we shall see that if \(M(\infty )\succeq 0\) and \({{\,\mathrm{rank}\,}}M(\infty )<\infty ,\) then the associated \({\mathcal {H}}_p\)-valued multisequence has a representing measure T.

Definition 5.24

We define the vector space

Definition 5.25

We let \({\tilde{C}}_{M(\infty )}\) be the complex vector space

Remark 5.26

We note that

Definition 5.27

Given \(q(x)=\sum \nolimits _{\lambda \in \Gamma _{n, d}} q_\lambda x^\lambda \in {\mathbb {C}}^p[x_1,\dots , x_d],\) we let

Lemma 5.28

Suppose \(M(\infty )\succeq 0\) and \(r={{\,\mathrm{rank}\,}}M(\infty )<\infty .\) Then \(r=\dim {\tilde{C}}_{M(\infty )}.\)

Proof

If \(\dim {\tilde{C}}_{M(\infty )}=m\) and \(m\ne r,\) then there exists a basis

of \({\tilde{C}}_{M(\infty )}\) for \(1\le k_a\le p,\) where \(e_{k_a}\) is a standard basis vector in \({\mathbb {C}}^p\) and \(a=1, \dots , m.\) We will show that

is a basis of \({\tilde{C}}_{M(\kappa )},\) where

First we need to show that \(\tilde{{\mathcal {B}}}\) is linearly independent in \({\tilde{C}}_{M(\kappa )}.\) For this, suppose that there exist \(c_1, \dots , c_m \in {\mathbb {C}}\) not all zero such that

Let \(v={{\,\mathrm{col}\,}}(v_{\lambda })_{\lambda \in \Gamma _{\kappa , d}}\) be a vector in \({\mathbb {C}}^{({{\,\mathrm{card}\,}}\Gamma _{\kappa , d} )p} \) with

Then by Eq. (5.8), \(M(\kappa )v={{\,\mathrm{col}\,}}(0_p)_{\gamma \in \Gamma _{\kappa , d}} \in {\tilde{C}}_{M(\kappa )}.\) Since \(M(\kappa +\ell )\succeq 0\) for all \(\ell =1, 2, \dots , \) we have

For \(\eta \in {\mathbb {C}}^p[x_1,\dots , x_d] \) with \({\hat{\eta }}:=v\oplus {{\,\mathrm{col}\,}}( 0_p)_{{\gamma \in {\mathbb {N}}_0^d} \setminus \Gamma _{ \kappa , d}},\) we have

that is, there exist \(c_1, \dots , c_m \in {\mathbb {C}}\) not all zero such that

However, this contradicts the fact that \({\mathcal {B}}\) is linearly independent. Hence \(\tilde{{\mathcal {B}}}\) is linearly independent in \({\tilde{C}}_{M(\kappa )}.\) It remains to show that \(\tilde{{\mathcal {B}}}\) spans \({\tilde{C}}_{M(\kappa )}.\) Since \({\mathcal {B}}\) is a basis of \({\tilde{C}}_{M(\infty )},\) for any \({{\,\mathrm{col}\,}}(d_\gamma )_{\gamma \in {\mathbb {N}}_0^d }\in {\tilde{C}}_{M(\infty )}\) with \(d_\gamma \in {\mathbb {C}}^p,\) there exist \(c_1, \dots , c_m \in {\mathbb {C}}\) such that

We next let \({\mathcal {X}}^{\lambda ^{(a)}}={{\,\mathrm{col}\,}}(S_{\lambda ^{(a)}+\gamma })_{\gamma \in {\mathbb {N}}_0^d{\setminus }\Gamma _{ \kappa , d}}.\) We have

and so

Hence \(\tilde{{\mathcal {B}}}\) spans \({\tilde{C}}_{M(\kappa )}.\) Therefore \(\tilde{{\mathcal {B}}}\) is a basis of \({\tilde{C}}_{M(\kappa )},\) which forces \({{\,\mathrm{rank}\,}}M(\kappa )=m\; \text {for all}\;\kappa .\) Thus \( \sup _{\kappa } {{\,\mathrm{rank}\,}}M(\kappa )=m,\) that is, \(r=m,\) a contradiction. Consequently \(\dim {\tilde{C}}_{M(\infty )}=r. \) \(\square \)

Remark 5.29

Presently, we shall view \(M(\infty )\) as a linear operator

and not as a linear operator

as in Sect. 3.1.

Remark 5.30

Assume \(r:={{\,\mathrm{rank}\,}}M(\infty )<\infty \) (or, equivalently, \(\dim {\tilde{C}}_{M(\infty )}<\infty \)). Suppose

is a basis for \({\tilde{C}}_{M(\infty )},\) where \(e_{k_a}\) is a standard basis vector in \({\mathbb {C}}^p\) and \(a=1, \dots , r.\) Then for any \(w \in {\tilde{C}}_{M(\infty )}\) there exist \(c_1, \dots , c_r \in {\mathbb {C}}\) such that w can be written as

In analogy to results from Sect. 3.1, we move on to the following.

Definition 5.31

We define the map \(\phi : {\mathbb {C}}^p[x_1,\dots , x_d]\rightarrow {\tilde{C}}_{M(\infty )}\) given by

where \(v(x)=\sum \nolimits _{\lambda \in \Gamma _{n, d}} v_\lambda x^\lambda \in {\mathbb {C}}^p[x_1,\dots , x_d]. \)

Definition 5.32

Suppose \(M(\infty )\succeq 0.\) Let \(q \in {\mathbb {C}}^p[x_1,\dots , x_d].\) We define the subspace of \({\mathbb {C}}^p[x_1,\dots , x_d]\)

and the kernel of the map \(\phi \)

where \(\phi \) is as in Definition 5.31.

Lemma 5.33

Suppose \(M(\infty )\succeq 0.\) Then

where \({\mathcal {J}}\) and \(\ker \phi \) are as in Definition 5.32.

Proof

If \(q(x)=\sum \nolimits _{\lambda \in \Gamma _{n, d}} q_\lambda x^\lambda \in \ker \phi , \) then

that is, \(M(\infty ){\hat{q}}={{\,\mathrm{col}\,}}(0_p)_{\gamma \in {\mathbb {N}}_0^d },\) where \({\hat{q}} \in ({\mathbb {C}}^p)_{0}^{\omega }. \) Thus \(\langle M(\infty ){\hat{q}}, {\hat{q}} \rangle = 0\) and so \(q\in {\mathcal {J}}.\)

Conversely, let \(q(x)=\sum _{\lambda \in \Gamma _{n, d}} q_\lambda x^\lambda \in {\mathcal {J}}.\) Then \(\langle M(\infty ){\hat{q}}, {\hat{q}} \rangle = 0.\) It suffices to show that for every \(\eta (x)=\sum _{\lambda \in \Gamma _{m, d}} \eta _\lambda x^\lambda \in {\mathbb {C}}^p[x_1,\dots , x_d], \)

Let \({\tilde{m}}=\max (n,m).\) Without loss of generality suppose \({\tilde{m}}=m.\) Let \(\eta _\lambda =0_p\) for \(\lambda \in \Gamma _{n, d} {\setminus }\Gamma _{m, d}. \) We may view \(\eta \) as \(\eta (x)=\sum \nolimits _{\lambda \in \Gamma _{n, d}} \eta _\lambda x^\lambda . \) Since \(\langle M(\infty ){\hat{q}}, {\hat{q}} \rangle = 0,\) we have \({\hat{q}}^* M(\infty ) {\hat{q}}=0\) and so

Moreover, since \(M(\infty )\succeq 0,\) \( M(m)\succeq 0\) and hence, the square root of M(m) exists. Next, \(\langle M(m)^{\frac{1}{2}}{\hat{q}}, {\hat{q}} \rangle = 0\) implies \(\langle M(m)^{\frac{1}{2}}{\hat{q}}, M(m)^{\frac{1}{2}}{\hat{q}} \rangle = 0,\) that is,

Then \( M(m)^{\frac{1}{2}}{\hat{q}}= {{\,\mathrm{col}\,}}(0_p)_{\lambda \in \Gamma _{m, d}}\) and

which implies

If \(q(x)=\sum \nolimits _{\lambda \in \Gamma _{n, d}} q_\lambda x^\lambda \in {\mathcal {J}}\) and \(\eta (x)=\sum \nolimits _{\lambda \in \Gamma _{n, d}} \eta _\lambda x^\lambda \in {\mathcal {J}}\) with \({\hat{q}}, {\hat{\eta }} \in ({\mathbb {C}}^p)_{0}^{\omega },\) then

by Eq. (5.9). \(\square \)
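
The role of positivity in Lemma 5.33 can be seen in a \(2\times 2\) example. The matrices below are illustrations chosen here: M is the Hankel matrix M(1) for \(p=1,\) \(d=1\) and \(S_0=S_1=S_2=1\) (the moments of the point mass at 1), while N is an indefinite matrix included only for contrast.

```latex
% Positive semidefinite case: a vanishing quadratic form forces a kernel vector.
\[
M = \begin{pmatrix} 1 & 1 \\ 1 & 1 \end{pmatrix} \succeq 0, \quad
\hat{q} = \begin{pmatrix} 1 \\ -1 \end{pmatrix} :
\qquad
\hat{q}^{*} M \hat{q} = 0 \ \text{ and } \ M \hat{q} = 0 .
\]
% Indefinite case: the quadratic form can vanish off the kernel.
\[
N = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix} \not\succeq 0, \quad
\hat{\eta} = \begin{pmatrix} 1 \\ 1 \end{pmatrix} :
\qquad
\hat{\eta}^{*} N \hat{\eta} = 0 \ \text{ but } \
N \hat{\eta} = \begin{pmatrix} 1 \\ -1 \end{pmatrix} \neq 0 .
\]
```

In the first case \({\hat{q}}\) corresponds to the polynomial \(q(x)=1-x,\) which vanishes at the single atom \(x=1.\)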

Definition 5.34

Let \(M(\infty )\succeq 0\) and \({\mathcal {J}}\) be as in Definition 5.32. We define the quotient space

of equivalence classes modulo \({\mathcal {J}},\) that is, if

then

Definition 5.35

For every \(h,q \in {\mathbb {C}}^p[x_1,\dots , x_d], \) we define the inner product

given by

Lemma 5.36

Suppose \(M(\infty )\succeq 0\) and let \(h, q \in {\mathbb {C}}^p[x_1,\dots , x_d].\) Then \(\langle h+ {\mathcal {J}}, q +{\mathcal {J}}\rangle \) is well-defined, linear and positive semidefinite.

Proof

We first show that the inner product \(\langle h+ {\mathcal {J}}, q +{\mathcal {J}}\rangle \) is well-defined. We need to prove that if \(h+ {\mathcal {J}}=h'+ {\mathcal {J}}\;\;\text {and}\;\; q+ {\mathcal {J}}=q'+ {\mathcal {J}},\) then

where \(h,h',q,q'\in {\mathbb {C}}^p[x_1,\dots , x_d].\) We write

Since \(h-h'\in {\mathcal {J}},\)

and since \(q-q'\in {\mathcal {J}},\)

We write

and

Summing both sides of Eqs. (5.10) and (5.11), we obtain

that is,

and hence

We now show that the inner product \(\langle h+ {\mathcal {J}}, q +{\mathcal {J}} \rangle \) is linear. We must prove that for every \(h, {\tilde{h}}, q \in {\mathbb {C}}^p[x_1,\dots , x_d]\) and \(a, {\tilde{a}} \in {\mathbb {C}},\)

Let

Then

Let \({\tilde{m}}=\max (n,m).\) Without loss of generality suppose \({\tilde{m}}=m.\) Let \(q_\lambda =0_p\) for \(\lambda \in \Gamma _{n, d} {\setminus }\Gamma _{m, d}. \) We may view q as \(q(x)=\sum \nolimits _{\lambda \in \Gamma _{n, d}} q_\lambda x^\lambda \) and we have

Finally, we show \(\langle h+ {\mathcal {J}}, q +{\mathcal {J}} \rangle \) is positive semidefinite. By definition,

Since \(M(\infty )\succeq 0,\) by Lemma 5.12,

Hence \(\langle h+ {\mathcal {J}}, h +{\mathcal {J}}\rangle \) is positive semidefinite. \(\square \)

Definition 5.37

We define the map \(\Psi : {\tilde{C}}_{M(\infty )} \rightarrow {\mathbb {C}}^p[x_1,\dots , x_d]/{\mathcal {J}}\) given by

where

Lemma 5.38

\(\Psi \) as in Definition 5.37 is an isomorphism.

Proof

We consider the map \(\phi : {\mathbb {C}}^p[x_1,\dots , x_d]\rightarrow {\tilde{C}}_{M(\infty )}\) as in Definition 5.31 and first show that \(\phi \) is a homomorphism. For \(\sum \nolimits _{a=1}^{r}d_a x^{\lambda ^{(a)}}e_{k_a} \in {\mathbb {C}}^p[x_1,\dots , x_d],\) where \( d_1, \dots , d_r \in {\mathbb {C}},\) we have

Moreover, we shall see that \(\phi \) is surjective. Indeed, for every \(\sum \nolimits _{a=1}^{r}c_a X^{\lambda ^{(a)}}e_{k_a} \in {\tilde{C}}_{M(\infty )},\) there exists \( \sum \nolimits _{a=1}^{r}c_a x^{\lambda ^{(a)}}e_{k_a} \in {\mathbb {C}}^p[x_1,\dots , x_d] \) such that

By the Fundamental homomorphism theorem (see, e.g., [41, Theorem 1.11]), \({\tilde{C}}_{M(\infty )}\) is isomorphic to \({\mathbb {C}}^p[x_1,\dots , x_d]/\ker \phi \) and thus to \({\mathbb {C}}^p[x_1,\dots , x_d]/{\mathcal {J}},\) by Lemma 5.33. Hence, the map \(\Psi \) is an isomorphism. \(\square \)

Remark 5.39

By Lemma 5.28, \(r={{\,\mathrm{rank}\,}}M(\infty )=\dim {\tilde{C}}_{M(\infty )}<\infty .\) Since \(\Psi \) is an isomorphism, we derive that \(r=\dim ({\mathbb {C}}^p[x_1,\dots , x_d]/{\mathcal {J}} ).\)

In this setting, we present the multiplication operators \(M_{x_j},\;j=1, \dots , d,\) as defined below.

Definition 5.40

Let \(q\in {\mathbb {C}}^p[x_1,\dots , x_d].\) We define the multiplication operators

given by

where

is the multiplication operator defined by

for all \(j=1, \dots , d\) and \(\varepsilon _j \in {\mathbb {N}}_0^d,\) \(j=1, \dots , d.\)

Let us now continue with lemmas on properties of the multiplication operators \(M_{x_j}\).

Lemma 5.41

Let \(M_{x_j},\) \(j=1, \dots , d,\) be the multiplication operators as in Definition 5.40. Then \(M_{x_j}\) is well-defined for all \(j=1, \dots , d.\)

Proof

Let \(q(x)=\sum \nolimits _{\lambda \in \Gamma _{m, d}} q_\lambda x^\lambda \) and \(h(x)=\sum \nolimits _{\lambda \in \Gamma _{m, d}} h_\lambda x^\lambda .\) If \(q+{\mathcal {J}}=h+{\mathcal {J}},\) then

that is,

or equivalently,

which is equivalent to

that is,

and hence

as required. \(\square \)

Lemma 5.42

Let \(M_{x_j},\) \(j=1, \dots , d,\) be as in Definition 5.40. Then \(M_{x_j}(q+ {\mathcal {J}})=x^{\varepsilon _j}q+ {\mathcal {J}}\) for all \(j=1, \dots , d.\)

Proof

For all \(j=1, \dots , d,\)

as required. \(\square \)

Lemma 5.43

Let \(M_{x_j},\) \(j=1, \dots , d,\) be as in Definition 5.40. Then \(M_{x_j}M_{x_\ell }=M_{x_\ell }M_{x_j}\) for all \(j, \ell =1, \dots , d.\)

Proof

We need to show that for every \(q, f \in {\mathbb {C}}^p[x_1,\dots , x_d], \)

that is, \( \langle x^{\varepsilon _j} x^{\varepsilon _\ell } (q+ {\mathcal {J}}), f+ {\mathcal {J}}\rangle = \langle x^{\varepsilon _\ell }x^{\varepsilon _j} (q+ {\mathcal {J}}), f+ {\mathcal {J}}\rangle .\) We have

Thus \(M_{x_j}M_{x_\ell }=M_{x_\ell }M_{x_j}\;\text {for all}\; j, \ell =1, \dots , d.\) \(\square \)

Lemma 5.44

Let \(M_{x_j},\) \(j=1, \dots , d,\) be as in Definition 5.40. Then \(M_{x_j}\) is self-adjoint for all \(j=1, \dots , d.\)

Proof

We need to show that

that is,

We have

and

Equation (5.12) is equal to

where \( {\hat{f}} \in ({\mathbb {C}}^p)_{0}^{\omega }\) and \(\widehat{(x_j q)}\in ({\mathbb {C}}^p)_{0}^{\omega }\) and equation (5.13) is equal to

where \( \widehat{(x_j f)} \in ({\mathbb {C}}^p)_{0}^{\omega }\) and \({\hat{q}}\in ({\mathbb {C}}^p)_{0}^{\omega }.\) It remains to show that \( {\hat{f}}^* M(\infty ) \widehat{(x_j q)} = \widehat{(x_j f)}^* M(\infty ) {\hat{q}}.\) We have

and the proof is now complete. \(\square \)
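
The identity at the heart of Lemma 5.44 is a reindexing of the Hankel structure. The following sketch writes it out for \(f(x)=\sum \nolimits _{\gamma } f_\gamma x^\gamma \) and \(q(x)=\sum \nolimits _{\lambda } q_\lambda x^\lambda ,\) assuming that \(\varepsilon _j\) is the j-th unit multi-index (so that \(x^{\varepsilon _j}=x_j\)) and that the block entry of \(M(\infty )\) in row \(x^\gamma \) and column \(x^{{\tilde{\gamma }}}\) is \(S_{\gamma +{\tilde{\gamma }}}.\)

```latex
% The coefficient q_\lambda of q sits at position \lambda + \varepsilon_j in \widehat{(x_j q)}.
\[
\widehat{f}^{\,*} M(\infty)\, \widehat{(x_j q)}
= \sum_{\gamma} \sum_{\lambda} f_\gamma^{*}\, S_{\gamma + (\lambda + \varepsilon_j)}\, q_\lambda
= \sum_{\gamma} \sum_{\lambda} f_\gamma^{*}\, S_{(\gamma + \varepsilon_j) + \lambda}\, q_\lambda
= \widehat{(x_j f)}^{\,*} M(\infty)\, \widehat{q} .
\]
```

Both sums are finite, since f and q have only finitely many nonzero coefficients.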

Next, we shall use spectral theory involving the preceding multiplication operators. First, we denote by \({\mathcal {P}}\) the set of the orthogonal projections on \({\mathbb {C}}^p[x_1,\dots , x_d]/{\mathcal {J}}.\)

Remark 5.45

\(M_{x_j}\) is self-adjoint for all \(j=1, \dots ,d\) and so by the spectral theorem for bounded self-adjoint operators on a Hilbert space (see, e.g., [71, Theorem 5.1]), there exists a unique spectral measure \(E_j: {{\mathcal {B}}(\sigma (E_j))} \rightarrow {\mathcal {P}},\; \sigma (E_j)\subseteq {\mathcal {B}}({\mathbb {R}}^d),\) such that

\(E_j\) is unique, in the sense that if \(F_j: {\mathcal {B}}({\mathbb {R}}) \rightarrow {\mathcal {P}}\) is another spectral measure such that

then we have

By [71, Lemma 4.3], \(E_j(\alpha )E_j(\beta )=E_j(\alpha \cap \beta )\;\text {for}\; \alpha , \beta \in {{\mathcal {B}}(\sigma (E_j))},\) which implies that

Since the operators \(M_{x_1}, \dots , M_{x_d}\) are self-adjoint (see Lemma 5.44) and pairwise commute, that is, \(M_{x_j}M_{x_k}=M_{x_k}M_{x_j}\) for all \(j, k=1, \dots , d\) (see Lemma 5.43), we have that for all Borel sets \(\alpha , \beta \in {{\mathcal {B}}({\mathbb {R}}^d)}, \)

Thus, by [71, Theorem 4.10], there exists a unique spectral measure E on the Borel algebra \({\mathcal {B}}(\Omega )\) of the product space \(\Omega = \sigma (E_1) \times \dots \times \sigma (E_d)\) such that

Remark 5.46

[71, Theorem 5.23] For \(M_{x_j},\) \(j=1, \dots ,d,\) commuting self-adjoint operators on the quotient space \({\mathbb {C}}^p[x_1,\dots , x_d]/{\mathcal {J}},\) there exists a joint spectral measure \(E: {{\mathcal {B}}({\mathbb {R}}^d)} \rightarrow {\mathcal {P}}\) such that for every \(q, f \in {\mathbb {C}}^p, \)

Definition 5.47

[71, Definition 5.3] The support of the spectral measure E is called the joint spectrum of \(M_{x_1},\dots , M_{x_d} \) and is denoted by \(\sigma (M_x)=\sigma (M_{x_1},\dots , M_{x_d}).\)

Lemma 5.48

If \(r={{\,\mathrm{rank}\,}}M(\infty )=\dim ( {\mathbb {C}}^p[x_1,\dots , x_d]/{\mathcal {J}})< \infty ,\) then

where \(\sigma (M_x)\) is as defined in Definition 5.47.

Proof

Since \(M_{x_j},\) \(j=1, \dots ,d,\) are self-adjoint operators on the finite-dimensional Hilbert space \({\mathbb {C}}^p[x_1,\dots , x_d]/{\mathcal {J}},\) we have

with

We next fix a basis \({\mathcal {D}}\) of \({\mathbb {C}}^p[x_1,\dots , x_d]/{\mathcal {J}}\) and let \(A_j\in {\mathbb {C}}^{r\times r}\) be the matrix representation of \(M_{x_j}\) with respect to \({\mathcal {D}}.\) Then since \(M_{x_j}\) are commuting self-adjoint operators we get

By [47, Theorem 2.5.5], there exists a unitary matrix \(U \in {\mathbb {C}}^{r\times r}\) such that

and \(\mathrm {diag}(\nu _1^{(j)}, \ldots , \nu _r^{(j)})\in {\mathbb {C}}^{r\times r} \) with \(\nu _1^{(j)}, \ldots , \nu _r^{(j)}\) the eigenvalues of \(A_j.\) Therefore

from which we derive

\(\square \)
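The simultaneous diagonalisation used in the proof above can be illustrated numerically. The following is a minimal sketch, assuming \(d = 2,\) \(r = 2\) and two hypothetical commuting real symmetric matrices `A1`, `A2` standing in for \(A_1, A_2\) (these matrices are illustrative and do not come from the paper). The common eigenvectors are left unnormalised so that the arithmetic stays exact; normalising them would give the columns of a unitary U.

```python
from fractions import Fraction

# Hypothetical commuting symmetric matrices standing in for A_1 and A_2.
A1 = [[2, 1], [1, 2]]
A2 = [[0, 1], [1, 0]]

def matmul(A, B):
    """Product of two square matrices given as nested lists."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def matvec(A, v):
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def eigenvalue(A, v):
    """Return nu with A v = nu v; raises if v is not an eigenvector of A."""
    Av = matvec(A, v)
    i = next(k for k, x in enumerate(v) if x != 0)
    nu = Fraction(Av[i], v[i])
    assert Av == [nu * x for x in v], "v is not an eigenvector"
    return nu

# Common (unnormalised) eigenvectors of A1 and A2.
eigvecs = [[1, 1], [1, -1]]

commute = matmul(A1, A2) == matmul(A2, A1)
# Each common eigenvector yields one tuple (nu^(1), nu^(2)) of joint eigenvalues.
joint_eigenvalues = [(eigenvalue(A1, v), eigenvalue(A2, v)) for v in eigvecs]
```

In this toy case the two matrices commute and the joint eigenvalue tuples are read off one common eigenvector at a time, which mirrors how the diagonal entries \(\nu _a^{(j)}\) are paired across \(j\) in the proof.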

The following proposition proves the existence of a representing measure T for a given \({\mathcal {H}}_p\)-valued multisequence \(S^{(\infty )}:=(S_\gamma )_{\gamma \in {\mathbb {N}}_0^d}\) which gives rise to an infinite d-Hankel matrix with finite rank. In Sect. 5.4 we will obtain additional information on the representing measure T.

Proposition 5.49

Let \(S^{(\infty )}:=(S_\gamma )_{\gamma \in {\mathbb {N}}_0^d}\) be a given \({\mathcal {H}}_p\)-valued multisequence with corresponding d-Hankel matrix \(M(\infty )\succeq 0.\) Suppose \(r:={{\,\mathrm{rank}\,}}M(\infty )< \infty .\) Then \(S^{(\infty )}\) has a representing measure T.

Proof

First we show

that is,

for all \(v\in {\mathbb {C}}^p\) and \(\gamma \in {\mathbb {N}}_0^d.\) For all \(v\in {\mathbb {C}}^p,\) we have

Therefore, we have obtained the left hand side of the equation (5.14). The right hand side is implied by Remark 5.46. Indeed we have

for \(\gamma \in {\mathbb {N}}_0^d\) and Eq. (5.15) holds. Let \(v^*T(\alpha )v:=\langle E(\alpha )(v+ {\mathcal {J}}), v+ {\mathcal {J}}\rangle \) for every \(\alpha \in {{\mathcal {B}}({\mathbb {R}}^d)}.\) We rewrite Eq. (5.15) as

and let \(T_{v, v}(\alpha ):= v^*T(\alpha )v,\) where \(\alpha \in {{\mathcal {B}}({\mathbb {R}}^d)}.\) Notice that \(T_{v, v}(\alpha ) \succeq 0.\) We need to show

Fix \(\alpha \in {\mathcal {B}}({\mathbb {R}}^d)\) and define

We observe

for all \(\gamma \in {\mathbb {N}}_0^d \) and \(v, w \in {\mathbb {C}}^p.\) Thus

Let \(\beta (w, v):{\mathbb {C}}^p \times {\mathbb {C}}^p \rightarrow {\mathbb {C}}\) be given by \(\beta (w, v ):=T_{w, v}(\alpha )\) where \(\alpha \in {\mathcal {B}}({\mathbb {R}}^d)\) is fixed. Using the assumption (1.3) we have

by the Rayleigh-Ritz Theorem (see, e.g., [47, Theorem 4.2.2]), where \(\max \frac{v^*I_pv}{v^*v},\) \(v\ne 0_p,\) is the maximum eigenvalue of the matrix \(I_p\). For all \( w, v \in {\mathbb {C}}^p,\) formula (5.16) yields

by the Cauchy-Schwarz inequality. Hence \(\beta \) is a bounded sesquilinear form. For every \(v \in {\mathbb {C}}^p,\) the linear functional \(L_{v}: {\mathbb {C}}^p\rightarrow {\mathbb {C}}\) given by \(L_{v}(w)= \beta (w, v)\) is such that

By the Riesz Representation Theorem for Hilbert spaces (see, e.g., [62, Theorem 4, Section 6.3]), there exists a unique \(\varphi \in {\mathbb {C}}^p\) such that \(L_{v}(w)= \langle \varphi , v \rangle \; \text {for all}\; v \in {\mathbb {C}}^p. \) Let \(T: {{\mathcal {B}}({\mathbb {R}}^d)}\rightarrow {\mathcal {H}}_p\) be given by

for which \(T(\alpha ) w =\varphi ,\) \(\alpha \in {{\mathcal {B}}({\mathbb {R}}^d)}.\) Since

we have \(T(\alpha )\succeq 0\;\;\text {for} \;\;\alpha \in {{\mathcal {B}}({\mathbb {R}}^d)}.\) Therefore, formula (5.17) implies

and so, \(S^{(\infty )}\) has a representing measure T. \(\square \)

5.3 Necessary Conditions for the Existence of a Representing Measure

In this subsection we prove a series of lemmas on the variety of the d-Hankel matrix and its connection with the support of a representing measure.

Lemma 5.50

Let \(S:=(S_\gamma )_{\gamma \in \Gamma _{2n, d}}\) be a given truncated \( {\mathcal {H}}_p\)-valued multisequence and M(n) the corresponding d-Hankel matrix. If S has a representing measure T, then \(M(n)\succeq 0.\)

Proof

For \(\eta = {{\,\mathrm{col}\,}}(\eta _{\lambda })_{\lambda \in \Gamma _{n, d}},\) we have

where \(\zeta (x)=\sum \nolimits _{\lambda \in \Gamma _{n, d}} x^\lambda \eta _\lambda .\) \(\square \)
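The identity behind Lemma 5.50 can be checked on a small case. The sketch below assumes \(d = 1,\) \(p = 2,\) \(n = 1,\) real data, and a hypothetical two-atom measure \(T = Q_1 \delta _{-1} + Q_2 \delta _{2}\) (none of these values come from the paper). It compares the quadratic form \(\eta ^* M(1) \eta \) with \(\sum _a \zeta (w^{(a)})^* Q_a \zeta (w^{(a)}),\) which is nonnegative because each \(Q_a \succeq 0.\)

```python
# Hypothetical atoms (w_a, Q_a) with positive semidefinite weights Q_a.
atoms = [(-1, [[1, 1], [1, 1]]), (2, [[2, 0], [0, 1]])]

def quad(u, Q, v):
    """Return u^T Q v for real vectors u, v and a real matrix Q."""
    return sum(u[i] * Q[i][j] * v[j]
               for i in range(len(u)) for j in range(len(v)))

def moment(k):
    """S_k = sum_a (w_a)^k Q_a, the k-th moment of the atomic measure."""
    return [[sum(w ** k * Q[i][j] for w, Q in atoms) for j in range(2)]
            for i in range(2)]

# eta = col(eta_0, eta_1), an arbitrary test vector.
eta = [[1, -2], [3, 1]]

# Left-hand side: eta^T M(1) eta, where block (i, j) of M(1) is S_{i+j}.
lhs = sum(quad(eta[i], moment(i + j), eta[j])
          for i in range(2) for j in range(2))

def zeta(x):
    """zeta(x) = eta_0 + x * eta_1."""
    return [eta[0][c] + x * eta[1][c] for c in range(2)]

# Right-hand side: the integral of zeta^T dT zeta for the atomic measure.
rhs = sum(quad(zeta(w), Q, zeta(w)) for w, Q in atoms)
```

Both sides evaluate to the same nonnegative number for this choice of `eta`; repeating the check with other vectors is a quick sanity test, not a proof.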

Definition 5.51

Let T be a representing measure for \(S:=(S_\gamma )_{\gamma \in \Gamma _{2n, d}},\) where \(S_\gamma \in {\mathcal {H}}_p\) for \(\gamma \in \Gamma _{2n, d}\) and \(P(x)= \sum \nolimits _{\lambda \in \Gamma _{n, d}} x^\lambda P_{\lambda } \in {\mathbb {C}}^{p \times p}_n[x_1, \dots ,x_d].\) We define

Remark 5.52

In view of [51, Theorem 2], if S has a representing measure T, then we can always find a representing measure \({\tilde{T}}\) for S of the form \({\tilde{T}}=\sum \nolimits _{a=1}^{\kappa } Q_a \delta _{w^{(a)}}\) with \(\kappa \le \left( {\begin{array}{c}2n+d\\ d\end{array}}\right) p.\) Then we may let

The following lemma will connect the support of a representing measure of an \( {\mathcal {H}}_p\)-valued truncated multisequence and the variety of the d-Hankel matrix M(n) and is a matricial generalisation of Proposition 3.1 in [16] (albeit in an equivalent complex moment problem setting). As we will see in Example 5.54, unlike the scalar setting (i.e., when \(p = 1\)), we only have one direction of the implication. Moreover, the proof of Lemma 5.53 is more cumbersome than in the scalar case.

Lemma 5.53

Let \(S:=(S_\gamma )_{\gamma \in \Gamma _{2n, d}}\) be a given truncated \( {\mathcal {H}}_p\)-valued multisequence with a representing measure T. Suppose M(n) is the corresponding d-Hankel matrix. If

then

where \( P(x)= \sum \nolimits _{\lambda \in \Gamma _{n, d}} x^\lambda P_{\lambda } \in {\mathbb {C}}^{p \times p}_n[x_1, \dots ,x_d]. \)

Proof

If \({{\,\mathrm{col}\,}}\bigg ( \sum \nolimits _{\lambda \in \Gamma _{n, d}} S_{\gamma +\lambda } P_{\lambda }\bigg )_{\gamma \in \Gamma _{n, d}}={{\,\mathrm{col}\,}}(0_{p \times p})_{\gamma \in \Gamma _{n, d}},\) then

that is, \(\sum \nolimits _{{\lambda , \gamma }\in \Gamma _{n, d}} P_{\lambda } ^* S_{\gamma +\lambda } P_{\gamma } = 0_{p \times p},\) which is equivalent to \( \int _{{\mathbb {R}}^d} P(x)^*dT(x) P(x)= 0_{p \times p}.\) Indeed

and so

Suppose to the contrary that

Then there exists a point \(u^{(0)}\in {{\,\mathrm{supp}\,}}T\) such that \(u^{(0)} \notin {\mathcal {Z}}(\det P(x))\) and

has the property \(T(\overline{B_{\varepsilon }(u^{(0)})})\ne 0_{p\times p}\) and \(\overline{B_{\varepsilon }(u^{(0)})}\cap {\mathcal {Z}}(\det P(x))=\emptyset .\) We write

and we note that both terms on the right hand side are positive semidefinite.

Let \(Y:=T|_{\overline{B_{\varepsilon }(u^{(0)})}},\) that is, \(Y(\sigma ):=T(\sigma \cap \overline{B_{\varepsilon }(u^{(0)})} )\) for \(\sigma \in {\mathcal {B}}({\mathbb {R}}^d).\) Consider \({\tilde{S}}:=({\tilde{S}}_\gamma )_{\gamma \in \Gamma _{2n, d}},\) where

and note that \({\tilde{S}}_{0_{d}}=\int _{{\mathbb {R}}^d} dY(x)=Y(\overline{B_{\varepsilon }(u^{(0)})})\ne 0_{p\times p}.\) Applying [51, Theorem 2] we obtain a representing measure for \({\tilde{S}}\) of the form \({\widetilde{Y}}= \sum \nolimits _{a=1}^{\kappa } Q_a \delta _{u^{(a)}},\) with nonzero \(Q_a \succeq 0,\) \(\kappa \le \left( {\begin{array}{c}2n+d\\ d\end{array}}\right) p\) and \(u^{(1)}, \dots , u^{(\kappa )}\in \overline{B_{\varepsilon }(u^{(0)})}.\) But then

by Remark 5.52. Since \(P(u^{(a)}) ^* Q_a P(u^{(a)}) \succeq 0_{p \times p} \;\;\text {for} \; a=1,\dots ,\kappa ,\) we derive

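But \(P(u^{(a)})\) is invertible, since \(u^{(a)} \in \overline{B_{\varepsilon }(u^{(0)})}\) and \(\overline{B_{\varepsilon }(u^{(0)})}\cap {\mathcal {Z}}(\det P(x))=\emptyset ,\) and therefore formula (5.18) implies \(Q_a= 0_{p \times p}\) for \(a=1,\dots ,\kappa ,\) a contradiction. \(\square \)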
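A small instance of Lemma 5.53 may help fix ideas. The sketch below assumes \(d = 1,\) \(p = 2,\) \(n = 1,\) a hypothetical measure \(T = Q_1 \delta _0 + Q_2 \delta _1\) and the hypothetical polynomial \(P(x) = \mathrm {diag}(x, 1 - x)\) (illustrative choices, not taken from the paper). It computes the blocks \(\sum _{\lambda } S_{\gamma +\lambda } P_{\lambda }\) of \(P(X)\) and the values of \(\det P\) at the atoms.

```python
# Hypothetical atoms (w_a, Q_a): T = Q1*delta_0 + Q2*delta_1.
atoms = [(0, [[1, 0], [0, 0]]), (1, [[0, 0], [0, 1]])]

def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def scale(c, A):
    return [[c * a for a in row] for row in A]

def moment(k):
    """S_k = sum_a (w_a)^k Q_a."""
    S = [[0, 0], [0, 0]]
    for w, Q in atoms:
        S = add(S, scale(w ** k, Q))
    return S

# P(x) = P[0] + x * P[1] = diag(x, 1 - x), so det P(x) = x * (1 - x).
P = [[[0, 0], [0, 1]], [[1, 0], [0, -1]]]

# Blocks of P(X): sum over lambda of S_{gamma + lambda} P_lambda, gamma = 0, 1.
P_of_X = [add(mul(moment(g), P[0]), mul(moment(g + 1), P[1]))
          for g in range(2)]

def det_P(x):
    M = add(P[0], scale(x, P[1]))
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]

dets_at_atoms = [det_P(w) for w, _ in atoms]
```

Here every block of \(P(X)\) is the zero matrix and \(\det P\) vanishes at both atoms, in agreement with the inclusion \({{\,\mathrm{supp}\,}}T\subseteq {\mathcal {Z}}(\det P(x))\) used in the proof of Lemma 5.55.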

The next example illustrates that the converse of Lemma 5.53 does not hold.

Example 5.54

Let \(S:=(S_\gamma )_{\gamma \in \Gamma _{2, 2}}\) be a truncated \({\mathcal {H}}_2\)-valued bisequence with \(S_{00}=I_2,\) \(S_{10}=\frac{1}{2}\begin{pmatrix} 1&{}0\\ 0&{}0 \end{pmatrix}=S_{20},\) \(S_{01}=\frac{1}{2}\begin{pmatrix} 0&{}0\\ 0&{}1 \end{pmatrix}=S_{02}\) and \(S_{11}=0_{2\times 2}.\) Then S has a representing measure T given by

Choose the matrix-valued polynomial in \({\mathbb {C}}^{2 \times 2}_1[x,y]\)

and notice that \(\det P(x, y)=xy\) and

We have

which shows that the converse of Lemma 5.53 does not hold.
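The moments stated in Example 5.54 can be checked against a concrete atomic measure. The displayed formulas for T and P are not reproduced in the text above, so the measure `atoms` and the polynomial \(P(x, y) = \mathrm {diag}(x, y)\) used below are assumptions, chosen only to be consistent with the stated moments and with \(\det P(x, y) = xy;\) they need not coincide with the authors' choices.

```python
from fractions import Fraction

half = Fraction(1, 2)
E1, E2 = [[1, 0], [0, 0]], [[0, 0], [0, 1]]
I2, Z2 = [[1, 0], [0, 1]], [[0, 0], [0, 0]]

def scale(c, A):
    return [[c * a for a in row] for row in A]

def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

# Moments exactly as stated in Example 5.54.
S = {(0, 0): I2, (1, 0): scale(half, E1), (2, 0): scale(half, E1),
     (0, 1): scale(half, E2), (0, 2): scale(half, E2), (1, 1): Z2}

# ASSUMED measure: atoms at (1,0), (0,1), (0,0) with these weights.
atoms = [((1, 0), scale(half, E1)), ((0, 1), scale(half, E2)),
         ((0, 0), scale(half, I2))]

def moment(g):
    total = Z2
    for (x, y), Q in atoms:
        total = add(total, scale(x ** g[0] * y ** g[1], Q))
    return total

moments_match = all(moment(g) == S[g] for g in S)

# ASSUMED polynomial P(x, y) = diag(x, y), stored by coefficient P_lambda.
P = {(0, 0): Z2, (1, 0): E1, (0, 1): E2}

def block(g):
    """Block of P(X) indexed by g: sum over lambda of S_{g+lambda} P_lambda."""
    out = Z2
    for l, Pl in P.items():
        out = add(out, mul(S[(g[0] + l[0], g[1] + l[1])], Pl))
    return out

P_of_X = {g: block(g) for g in P}  # the keys of P are exactly Gamma_{1,2}

def det_P(x, y):
    M = Z2
    for (i, j), Pl in P.items():
        M = add(M, scale(x ** i * y ** j, Pl))
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]

dets_at_atoms = [det_P(x, y) for (x, y), _ in atoms]
```

Under these assumptions, \(\det P\) vanishes at every atom while the blocks of \(P(X)\) are nonzero, which is the failure of the converse that the example describes.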

We continue with results on the variety of a d-Hankel matrix and its connection with the support of a representing measure T.

Lemma 5.55

Suppose \(S:=(S_\gamma )_{\gamma \in \Gamma _{2n, d}}\) is a given truncated \( {\mathcal {H}}_p\)-valued multisequence with a representing measure T. Let M(n) be the corresponding d-Hankel matrix and let \({\mathcal {V}}(M(n))\) be the variety of M(n) (see Definition 3.5). Let \( P(x)= \sum \nolimits _{\lambda \in \Gamma _{n, d}} x^\lambda P_{\lambda } \in {\mathbb {C}}^{p \times p}_n[x_1, \dots ,x_d].\) If

then

Proof

By Lemma 5.53, for any \( P(x) \in {\mathbb {C}}^{p \times p}_n[x_1, \dots ,x_d] \) with

we have \({{\,\mathrm{supp}\,}}T\subseteq {\mathcal {Z}}(\det P(x)).\) Thus

which implies that

\(\square \)

Lemma 5.56

Let \(S:=(S_\gamma )_{\gamma \in \Gamma _{2n, d}}\) be a given truncated \({\mathcal {H}}_p\)-valued multisequence and let M(n) be the corresponding d-Hankel matrix. If S has a representing measure T and \(w^{(1)}, \dots , w^{(\kappa )}\in {\mathbb {R}}^d\) are given such that

then there exists \(P(x) \in {\mathbb {C}}^{p \times p}_n[x_1, \dots ,x_d] \) such that

Moreover

and

where \( {\mathcal {V}}(M(n))\) is the variety of M(n) (see Definition 3.5).

Proof

If we let \(P(x):= \prod _{a=1}^{\kappa } \prod _{j=1}^{d} (x_j -w_{j} ^{(a)})I_p,\) then \(\det P(x)= \prod _{a=1}^{\kappa } \prod _{j=1}^{d} (x_j -w_{j} ^{(a)})^p\) and so

Thus

If we let \({P}(x):= \prod _{a=1}^{\kappa } \bigg ( \sum _{j=1}^{d} (x_j -w_{j} ^{(a)})^2 \bigg )I_p,\) then \(\det {P}(x)= \prod _{a=1}^{\kappa } \bigg ( \sum _{j=1}^{d} (x_j -w_{j} ^{(a)})^2 \bigg )^p\) and hence

which yields

Then by inclusions (5.19) and (5.20), we obtain

Hence \( {\mathcal {Z}}(\det P(x))= \{ w^{(1)}, \dots , w ^{(\kappa )} \}= {{\,\mathrm{supp}\,}}T,\) where \(T=\sum \nolimits _{a=1}^{\kappa } Q_a \delta _{w^{(a)}}.\) We will next show that for both choices of \(P\in {\mathbb {C}}^{p \times p}_{n}[x_1, \dots ,x_d],\) one obtains

and thus \( {\mathcal {V}}(M(n))\subseteq {{\,\mathrm{supp}\,}}T.\) For the choice of \(P(x):= \prod _{a=1}^{\kappa } \prod _{j=1}^{d} (x_j -w_{j} ^{(a)})I_p\in {{\mathbb {C}}}^{p\times p}_n[x_1,\dots , x_d],\)

Consider \(P(X)\in C_{M(n)}.\) We have \({\mathcal {Z}}(\det P(x))= {{\,\mathrm{supp}\,}}T\) and we shall see \(P(X)= {{\,\mathrm{col}\,}}(0_{p\times p})_{\gamma \in \Gamma _{n, d}}. \) We notice

where \(\varphi (x)= \prod _{a=1}^{\kappa } \prod _{j=1}^{d} (x_j -w_{j} ^{(a)}) \in {\mathbb {R}}[x_1, \dots ,x_d].\) Since \(T=\sum \nolimits _{a=1}^{\kappa } Q_a \delta _{w^{(a)}},\) P(X) becomes

and hence \(P(X)={{\,\mathrm{col}\,}}(0_{p\times p})_{\gamma \in \Gamma _{n, d}}.\) Since there exists a matrix-valued polynomial P(x) such that \(P(X)={{\,\mathrm{col}\,}}(0_{p\times p})_{\gamma \in \Gamma _{n, d}}\) and \( {\mathcal {Z}}(\det P(x))= {{\,\mathrm{supp}\,}}T,\) we then have

which implies

Next, for the choice of \(P(x):= \prod _{a=1}^{\kappa } \bigg ( \sum _{j=1}^{d} (x_j -w_{j} ^{(a)})^2 \bigg )I_p \in {\mathbb {C}}^{p \times p}_{n}[x_1, \dots ,x_d],\) we will show that

We have \({\mathcal {Z}}(\det P(x))= {{\,\mathrm{supp}\,}}T\) and we consider \(P(X)\in C_{M(n)}\). We need to show that for this choice of P(x), \(P(X)= {{\,\mathrm{col}\,}}(0_{p\times p})_{\gamma \in \Gamma _{n, d}}.\) Notice that

where \({\tilde{\varphi }}(x)= \prod _{a=1}^{\kappa } \sum _{j=1}^{d} (x_j -w_{j} ^{(a)})^2 \in {\mathbb {R}}[x_1, \dots ,x_d].\) Since \(T=\sum \nolimits _{a=1}^{\kappa } Q_a \delta _{w^{(a)}},\) P(X) becomes

and so \(P(X)={{\,\mathrm{col}\,}}(0_{p\times p})_{\gamma \in \Gamma _{n, d}}.\) We thus conclude that there exists a matrix-valued polynomial P(x) such that \(P(X)={{\,\mathrm{col}\,}}(0_{p\times p})_{\gamma \in \Gamma _{n, d}}\) and \( {\mathcal {Z}}(\det P(x))= {{\,\mathrm{supp}\,}}T\) and thus we obtain

which asserts

\(\square \)
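The two scalar factors used in the proof of Lemma 5.56 can be compared on a toy case. The sketch below assumes \(d = 2,\) \(p = 2\) and two hypothetical atoms \((1, 0)\) and \((0, 1)\) with rank-one weights (illustrative values, with the degree bound n assumed large enough for both polynomials). It evaluates \(\varphi \) and \({\tilde{\varphi }}\) at the atoms and at one further point, and computes blocks \(\sum _a (w^{(a)})^{\gamma } \varphi (w^{(a)}) Q_a\) of \(P(X)\) for \(P = \varphi I_p.\)

```python
# Hypothetical atoms (w_a, Q_a) in R^2 with 2 x 2 weights.
atoms = [((1, 0), [[1, 0], [0, 0]]), ((0, 1), [[0, 0], [0, 1]])]

def phi(x):
    """Product over atoms a and coordinates j of (x_j - w_j^(a))."""
    out = 1
    for w, _ in atoms:
        for xj, wj in zip(x, w):
            out *= (xj - wj)
    return out

def phi_tilde(x):
    """Product over atoms a of sum_j (x_j - w_j^(a))^2."""
    out = 1
    for w, _ in atoms:
        out *= sum((xj - wj) ** 2 for xj, wj in zip(x, w))
    return out

def block(poly, g):
    """Block of P(X) for P = poly * I_p: sum_a (w_a)^g poly(w_a) Q_a."""
    out = [[0, 0], [0, 0]]
    for w, Q in atoms:
        c = w[0] ** g[0] * w[1] ** g[1] * poly(w)
        out = [[o + c * q for o, q in zip(ro, rq)] for ro, rq in zip(out, Q)]
    return out

gammas = [(0, 0), (1, 0), (0, 1), (1, 1)]
blocks_phi = [block(phi, g) for g in gammas]
blocks_phi_tilde = [block(phi_tilde, g) for g in gammas]

# A point that is not an atom: phi vanishes there, phi_tilde does not.
off_atom = (1, 1)
values_off_atom = (phi(off_atom), phi_tilde(off_atom))
```

Both scalar polynomials vanish at the atoms, so all computed blocks are zero. In this toy case the product of coordinate factors also vanishes at the non-atom \((1, 1),\) whereas the sum-of-squares product vanishes only at the atoms.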

Lemma 5.57

Let \(S:= (S_\gamma )_{\gamma \in \Gamma _{2n, d}}\) be a truncated \({\mathcal {H}}_p\)-valued multisequence and let M(n) be the corresponding d-Hankel matrix. If T is a representing measure for S, then

Proof

If \({{\,\mathrm{supp}\,}}T \) is infinite, then \({{\,\mathrm{rank}\,}}M(n) \le \sum \nolimits _{a=1}^{\kappa } {{\,\mathrm{rank}\,}}Q_a\) holds trivially. If \({{\,\mathrm{supp}\,}}T \) is finite, that is, T is of the form \(T=\sum \nolimits _{a=1}^{\kappa } Q_a \delta _{w^{(a)}},\) then

where \(V:= V^{p\times p}(w^{(1)}, \dots , w^{(\kappa )}; \Lambda )\in {\mathbb {C}}^{\kappa p\times \kappa p} \) with \(\Lambda \subseteq {\mathbb {N}}_0^d\) and \({{\,\mathrm{card}\,}}\Lambda =\kappa \) and

Hence

and the proof is complete. \(\square \)
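The rank inequality \({{\,\mathrm{rank}\,}}M(n) \le \sum \nolimits _{a=1}^{\kappa } {{\,\mathrm{rank}\,}}Q_a\) can be checked on small atomic measures. The sketch below assumes \(d = 1,\) \(p = 2,\) \(n = 1\) and two hypothetical measures (illustrative values only); ranks are computed by exact Gaussian elimination over the rationals.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a matrix (nested lists) by exact Gaussian elimination."""
    M = [[Fraction(x) for x in r] for r in rows]
    rk = 0
    for c in range(len(M[0])):
        piv = next((i for i in range(rk, len(M)) if M[i][c] != 0), None)
        if piv is None:
            continue
        M[rk], M[piv] = M[piv], M[rk]
        for i in range(rk + 1, len(M)):
            f = M[i][c] / M[rk][c]
            M[i] = [a - f * b for a, b in zip(M[i], M[rk])]
        rk += 1
    return rk

def hankel(atoms, n=1, p=2):
    """Assemble M(n) for d = 1: block (i, j) is S_{i+j} = sum_a w_a^(i+j) Q_a."""
    def moment(k):
        return [[sum(w ** k * Q[r][c] for w, Q in atoms) for c in range(p)]
                for r in range(p)]
    return [[moment(i + j)[r][c] for j in range(n + 1) for c in range(p)]
            for i in range(n + 1) for r in range(p)]

def compare(atoms):
    """Return (rank M(1), sum of rank Q_a) for an atomic measure."""
    return rank(hankel(atoms)), sum(rank(Q) for _, Q in atoms)

E1, E2, I2 = [[1, 0], [0, 0]], [[0, 0], [0, 1]], [[1, 0], [0, 1]]

# Two atoms with rank-one weights: equality in the bound.
tight = compare([(0, E1), (1, E2)])
# Three atoms with full-rank weights: strict inequality.
slack = compare([(0, I2), (1, I2), (2, I2)])
```

The first measure attains equality in the bound, while the second has \({{\,\mathrm{rank}\,}}M(1)\) strictly smaller than the sum of the ranks of its weights.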

Proposition 5.58

Let \(S^{(\infty )}:=(S_\gamma )_{\gamma \in {\mathbb {N}}_0^d}\) be a given \( {\mathcal {H}}_p\)-valued multisequence with a representing measure T which has \(\sum \nolimits _{a=1}^{\kappa } {{\,\mathrm{rank}\,}}Q_a < \infty \) and let \(M(\infty )\) be the corresponding d-Hankel matrix. Then

Proof