Abstract

In this work, we examine the solution properties of the Burgers’ equation with stochastic transport. First, we prove results on the formation of shocks in the stochastic equation and then obtain a stochastic Rankine–Hugoniot condition that the shocks satisfy. Next, we establish the local existence and uniqueness of smooth solutions in the inviscid case and construct a blow-up criterion. Finally, in the viscous case, we prove global existence and uniqueness of smooth solutions.


1 Introduction

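We prove the well-posedness of a stochastic Burgers’ equation of the form

$$ {{\,\mathrm{d\!}\,}}u + u\,\partial _x u\,{{\,\mathrm{d\!}\,}}t + \sum _{k=1}^\infty \xi _k(x)\,\partial _x u \circ {{\,\mathrm{d\!}\,}}W_t^k = \nu \,\partial _{xx} u\,{{\,\mathrm{d\!}\,}}t, \tag{1.1}$$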

where \(x \in {\mathbb {T}}\) or \({\mathbb {R}},\) \(\nu \ge 0\) is constant, \(\{W_t^k\}_{k \in {\mathbb {N}}}\) is a countable set of independent Brownian motions, \(\{\xi _k(\cdot )\}_{k \in {\mathbb {N}}}\) is a countable set of prescribed functions depending only on the spatial variable, and \(\circ \) means that the stochastic integral is interpreted in the Stratonovich sense. If the set \(\{\xi _k(\cdot )\}_{k \in {\mathbb {N}}}\) forms an orthonormal basis of some separable Hilbert space \({\mathcal {H}}\) (for example \(L^2({\mathbb {T}})\)), then the process \({{\,\mathrm{d\!}\,}}W := \sum _{k=1}^\infty \xi _k(x) \circ {{\,\mathrm{d\!}\,}}W_t^k\) is a cylindrical Wiener process on \({\mathcal {H}}\), generalising the notion of a standard Wiener process to infinite dimensions.
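To make the structure of this noise concrete, the following Python sketch (assuming NumPy) samples one time increment of a truncated version of \(\sum _{k} \xi _k(x)\, {{\,\mathrm{d\!}\,}}W_t^k\) on a spatial grid. The choice \(\xi _k \in \{\sin (kx)/k^2, \cos (kx)/k^2\}\) (the family used in Example 3.6), the truncation level `n_modes`, and the helper names `basis` and `noise_increment` are illustrative assumptions. The increment is a smooth function of x, while in time it has Brownian scaling (its variance is proportional to the time step).

```python
import numpy as np


def basis(x, n_modes):
    """Hypothetical truncated family xi_k: sin(kx)/k^2 and cos(kx)/k^2, k = 1..n_modes.

    Returns an array of shape (2 * n_modes, len(x)); each row is one xi_k on the grid.
    """
    k = np.arange(1, n_modes + 1)[:, None]  # wavenumbers as a column vector
    return np.vstack([np.sin(k * x) / k**2, np.cos(k * x) / k**2])


def noise_increment(x, dt, n_modes, rng):
    """One increment sum_k xi_k(x) * (W^k_{t+dt} - W^k_t) of the truncated noise."""
    xi = basis(x, n_modes)                                 # (2 * n_modes, len(x))
    dW = rng.normal(0.0, np.sqrt(dt), size=xi.shape[0])    # independent Brownian increments
    return dW @ xi                                         # smooth in x, Gaussian with variance ~ dt
```

For example, `noise_increment(np.linspace(0, 2 * np.pi, 64, endpoint=False), 1e-3, 10, np.random.default_rng(0))` returns one noise increment on a 64-point grid. Sampling the increments does not depend on the Stratonovich interpretation; that choice only matters once the increments multiply a solution-dependent integrand such as \(\partial _x u\).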

The multiplicative noise in (1.1) makes the transport velocity stochastic, which allows the Burgers’ equation to retain the form of a transport equation \(\partial _t u + \tilde{u} \,\partial _x u = 0,\) where \(\tilde{u}(t,x) := u(t,x) + \dot{W}\) is a stochastic vector field with noise \(\dot{W}\) that is smooth in space and rough in time. Compared with the well-studied Burgers’ equation with additive noise, where the noise appears as an external random forcing, this type of noise arises by taking the diffusive limit of the Lagrangian flow map regarded as a composition of a slow mean flow and a rapidly fluctuating one [9]. In several recent works, this type of noise, which we call stochastic transport, has been used to stochastically parametrise unresolved scales in fluid models while retaining the essential physics of the system [6, 7, 32]. On the other hand, it has also been shown to have a regularising effect on certain PDEs that are ill-posed [17, 20, 21, 25]. Therefore, it is of interest to investigate how the stochastic transport in (1.1) affects the Burgers’ equation, which in the inviscid case \(\nu =0\) is a prototypical model for shock formation. In particular, we ask whether this noise can prevent the system from developing shocks or, on the contrary, produce new shocks. We also ask whether this system is well-posed or not. In this paper, we will show that:

- (1)

For \(\nu = 0\), Eq. (1.1) has a unique solution of class \(H^s\) for \(s > 3/2\) until some stopping time \(\tau > 0\).

- (2)

However, shock formation cannot be avoided almost surely in the case \(\xi (x) = \alpha x + \beta \), and for a broader class of \(\{\xi _k(\cdot )\}_{k \in {\mathbb {N}}}\) we prove that it occurs in expectation.

- (3)

For \(\nu > 0\), we have global existence and uniqueness in \(H^2\).

In addition, we prove a continuation criterion for the inviscid equation (\(\nu =0\)), which generalises the result for the deterministic case. The above results are not immediately evident, for reasons we discuss below. Although we cannot prove it here, we believe that shocks in Burgers’ equation are too robust and ubiquitous to be prevented by noise, regardless of the choice of \(\{\xi _k(\cdot )\}_{k \in {\mathbb {N}}}\). Our results provide rigorous evidence in support of this claim.

The question of whether noise can regularise PDEs is not new. In finite dimensions, it is well known that additive noise can restore the well-posedness of ODEs whose vector fields are merely bounded and measurable (see [41]). For PDEs, no general result is known; however, there has been a significant effort in recent years to generalise this celebrated result to PDEs. In a remarkable paper, Flandoli et al. [20] demonstrated that the linear transport equation \(\partial _t u + b(t,x) \cdot \nabla u = 0\), which is ill-posed if b is sufficiently irregular, recovers existence and uniqueness of \(L^\infty \) solutions that are strong in the probabilistic sense when a “simple” transport noise, driven by a d-dimensional Brownian motion \(W_t\), is added:

$$ {{\,\mathrm{d\!}\,}}u + b(t,x)\cdot \nabla u\,{{\,\mathrm{d\!}\,}}t + \nabla u \circ {{\,\mathrm{d\!}\,}}W_t = 0,$$

where the drift b is bounded, measurable, Hölder continuous, and satisfies an integrability condition on the divergence \(\nabla \cdot b \in L^p([0,T] \times {\mathbb {R}}^d)\). In a subsequent paper [17], the same noise was shown to retain some regularity of the initial condition, thus restoring well-posedness of strong solutions, and a selection principle based on taking the zero-noise limit as opposed to the inviscid limit was considered in [1].

However, for nonlinear transport equations such as Burgers’, the same type of noise \({{\,\mathrm{d\!}\,}}u + u\,\partial _xu {{\,\mathrm{d\!}\,}}t + \partial _x u \circ {{\,\mathrm{d\!}\,}}W_t = 0\) does not help, since the simple change of variables \(v(t,x) := u(t,x+W_t)\) leads back to the original equation \(\partial _t v + v \,\partial _x v = 0\). Hence, if noise were to prevent shock formation, a more general class would be required, such as the cylindrical transport noise \(\sum _{k=1}^\infty \xi _k(x) \partial _x u \circ {{\,\mathrm{d\!}\,}}W_t^k\) that we consider in this paper. In [21] and [12], it was shown that collapse in Lagrangian point particle solutions of certain nonlinear PDEs (point vortices in 2D Euler and point charges in the Vlasov–Poisson system) can be prevented by this cylindrical transport noise with \(\xi _k(x)\) satisfying a certain hypoellipticity condition, thus providing hope for the regularisation of nonlinear transport equations by noise. More recently, Gess and Maurelli [25] showed that adding a simple stochastic transport term into a nonlinear transport equation

which in the deterministic case admits non-unique entropy solutions for sufficiently irregular b, can restore uniqueness of entropy solutions, providing a first example of a nonlinear transport equation that becomes well-posed when adding a suitable noise.

We should now stress the difference between the present work and previous works. First, we acknowledge that in Flandoli [23], Chapter 5.1.4, it is argued that shock formation does not occur even with the most general cylindrical transport noise, by writing the characteristic equation as an Itô SDE

which is a martingale perturbation of straight lines that will cross without noise. Thus, using the property that a martingale \(M_t\) grows slower than t almost surely as \(t \rightarrow \infty \), it is shown that the characteristics cross almost surely. However, the characteristic equation for the system (1.1) is in fact a Stratonovich SDE,

$$ {{\,\mathrm{d\!}\,}}X_t = u(t,X_t)\,{{\,\mathrm{d\!}\,}}t + \sum _{k=1}^\infty \xi _k(X_t) \circ {{\,\mathrm{d\!}\,}}W_t^k, \tag{1.5}$$

and therefore Flandoli’s argument can be applied to the martingale term, but not to the additional drift term arising from the Stratonovich-to-Itô correction, which may disrupt shock formation. The techniques we use here apply to Stratonovich equations; however, due to the difficulty caused by the additional drift term, we were only able to prove that the characteristics cross almost surely in the very particular case \(\xi (x) = \alpha x + \beta \), leaving the general case open for future investigation. Using a different strategy, in which we instead look at how the slope \(\partial _x u\) evolves along a characteristic (1.5), we show the following for a wider class of \(\{\xi _k(\cdot )\}_{k=1}^\infty \), namely those for which the infinite sum \(\sum _{k\in {\mathbb {N}}} ((\partial _x \xi _k)^2 - \xi _k \partial _{xx}\xi _k)\) is bounded:

if \(\partial _x u(0,X_0) > 0\), then \(\partial _x u(t,X_t) < \infty \) almost surely for all \(t>0\) and

if \(\partial _x u(0,X_0)\) is sufficiently negative, then there exists \(0< t_* < \infty \) such that \(\lim _{t \rightarrow t_*} {\mathbb {E}}[\partial _x u (t,X_t)] = -\infty \).

In summary, shock formation occurs in expectation if the initial profile has a sufficiently negative slope and no new shocks can form from a positive slope.

We finally address the question of well-posedness. We prove that, for a sufficiently regular initial condition, equation (1.1) admits a unique local solution that is smooth enough for the arguments on shock formation employed in Section 3 to be valid (in fact, we show this for a noise of the type \(Qu \circ {{\,\mathrm{d\!}\,}}W_t,\) where \(Qu = a(x) \partial _x u + b(x) u,\) which generalises the one considered in (1.1)). For Burgers’ equation with additive space-time white noise, there have been many previous works showing well-posedness [3, 11, 15, 16]. The techniques used in these works are primarily based on reformulating the equation by a change of variable or on studying its linear part. The main difference in our work is that the multiplicative noise we consider depends on the solution and its gradient, which gives rise to several complications. For instance, when deriving a priori estimates, certain higher-order terms appear, which need to be treated carefully. Recently, the same type of multiplicative noise has been treated for the Euler equation [8, 22] and the Boussinesq system [2], whose techniques we follow closely in our proof. We note that the well-posedness of more general stochastic conservation laws, which include the inviscid stochastic Burgers’ equation as a special case, has also been studied, for instance in [18, 19, 27]. However, these works deal with weak kinetic and entropy solutions, in contrast to the classical solutions we consider here. There is also the recent work [31] showing the local well-posedness of weak solutions of the viscous Burgers’ equation (\(\nu > 0\)) driven by rough paths in the transport velocity.
An important contribution of this paper is showing the global well-posedness of strong solutions in the viscous case by proving that the maximum principle is retained under perturbation by stochastic transport of type \(Qu \circ {{\,\mathrm{d\!}\,}}W_t\).

1.1 Main results

Let us state here the main results of the article:

Theorem 1.1

(Shock formation in the stochastic Burgers’ equation) In the following, we use the notation \(\psi (x) := \frac{1}{2}\sum _{k=1}^\infty \left( (\partial _x \xi _k(x))^2 - \xi _k(x) \partial _{xx} \xi _k(x)\right) \). The main results regarding shock formation in (1.1) are as follows:

- (1)

Let \(\xi _1(x) = \alpha x + \beta \), \(x \in {\mathbb {R}}\) and \(\xi _k \equiv 0\) for \(k=2,3,\ldots \) and assume that u(0, x) has a negative slope. Then, there exist two characteristics satisfying (1.5) with different initial conditions that cross in finite time almost surely.

- (2)

Let \(X_t\) be a characteristic solving (1.5) with \(\{\xi _k(\cdot )\}_{k \in {\mathbb {N}}}\) satisfying the conditions in Assumption A1 below and let \(\partial _x u(0,X_0) \ge 0\). Then, if \(\psi \) is bounded above, i.e. \(\psi (x) < D\) for some constant \(D \in {\mathbb {R}}\) and all \(x \in {\mathbb {T}}\) or \({\mathbb {R}}\), we have that \(\partial _x u(t,X_t) < \infty \) almost surely for all \(t>0\).

- (3)

Again, let \(X_t\) be a characteristic solving (1.5) with \(\{\xi _k(\cdot )\}_{k \in {\mathbb {N}}}\) satisfying the conditions in Assumption A1 and let \(\partial _x u(0,X_0) < 0\). Also assume that \(\partial _x u(0,X_0) < \psi (x)\) for all \(x \in {\mathbb {T}}\) or \({\mathbb {R}}\). Then there exists \(0<t_*<\infty \) such that \(\lim _{t \rightarrow t_*} {\mathbb {E}} [\partial _x u(t,X_t)] = - \infty \).

Theorem 1.2

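(Stochastic Rankine–Hugoniot condition) The curve of discontinuity \((t,s(t)) \in [0,\infty ) \times {\mathbb {T}}\) (or \({\mathbb {R}}\)) of the inviscid stochastic Burgers’ equation (1.1) (\(\nu = 0\)) satisfies the following:

$$ {{\,\mathrm{d\!}\,}}s(t) = \frac{1}{2}\left( u_+(t,s(t)) + u_-(t,s(t))\right) {{\,\mathrm{d\!}\,}}t + \sum _{k=1}^\infty \xi _k(s(t)) \circ {{\,\mathrm{d\!}\,}}W_t^k,$$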

where \(u_\pm (t,s(t)) := \lim _{x \rightarrow s(t)^\pm } u(t,x)\) are the left and right limits of u.

Theorem 1.3

(Well-posedness in the inviscid case) Let \(u_{0}\in H^{s} (\mathbb {T},\mathbb {R}),\) for \(s>3/2\) fixed. Then there exists a unique maximal solution \((\tau _{max},u)\) of the 1D stochastic Burgers’ equation (1.1) with \(\nu = 0\). In particular, if \((\tau ',u')\) is another maximal solution, then necessarily \(\tau _{max}=\tau '\) and \(u=u'\) on \([0,\tau _{max})\). Moreover, either \(\tau _{max}=\infty \) or \(\displaystyle \limsup _{t\nearrow \tau _{max}} ||u(t)||_{H^{s}}= \infty \).

Theorem 1.4

(Global well-posedness in the viscous case) Let \(u_{0} \in H^{2} (\mathbb {T},\mathbb {R}).\) Then there exists a unique maximal strong global solution \(u:[0,\infty ) \times \mathbb {T} \times \Xi \rightarrow \mathbb {R}\) of the viscous stochastic Burgers’ equation (1.1) with \(\nu > 0\) in \(H^{2} (\mathbb {T},\mathbb {R})\).

Remark 1.5

Theorems 1.3 and 1.4 can be extended in a straightforward manner to the full line \({\mathbb {R}}\) and to higher dimensions.

Remark 1.6

We prove Theorems 1.3 and 1.4 for a more general noise \({\mathcal {Q}} u \circ dW_t\), where \({\mathcal {Q}}\) is a first-order linear differential operator, which includes the transport noise as a special case. For the sake of clarity, our proof deals only with one noise term \(\mathcal {Q}u \circ dW_t\); however, we can readily extend it to cylindrical noise with a countable set of first-order linear differential operators,

$$\sum _{k=1}^\infty \mathcal {Q}_k u \circ dW_t^k, \qquad \mathcal {Q}_k u = a_k(x)\,\partial _x u + b_k(x)\,u,$$

by imposing certain smoothness and boundedness conditions on the sums of the coefficients.

1.2 Structure of the paper

This manuscript is organised as follows. In Sect. 2 we review some classical deterministic and stochastic background. We also fix the notation we will employ and state some definitions. Section 3 contains the main results regarding shock formation in the stochastic Burgers’ equation. Using a characteristic argument, we show that noise cannot prevent shocks from occurring for certain classes of \(\{\xi _k(\cdot )\}_{k \in {\mathbb {N}}}\). Moreover, we prove that these shocks satisfy a Rankine–Hugoniot type condition in the weak formulation of the problem. In Sect. 4, we show local well-posedness of the stochastic Burgers’ equation in Sobolev spaces and a blow-up criterion. We also establish global existence of smooth solutions of a viscous version of the stochastic Burgers’ equation, which is achieved by proving a stochastic analogue of the maximum principle. In Sect. 5, we provide conclusions, propose possible future lines of research, and comment on several open problems.

2 Preliminaries and notation

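Let us begin by reviewing some standard function spaces and mathematical background that will be used throughout this article. Sobolev spaces are given by

$$W^{s,p}(\mathbb {T}) := \left\{ f \in L^{p}(\mathbb {T}) : ||(I-\partial _{xx})^{s/2}f||_{L^{p}} < \infty \right\} $$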

for any \(s\ge 0\) and \(p\in [1,\infty ),\) equipped with the norm \(||f||_{W^{s,p}}=||(I-\partial _{xx})^{s/2}f||_{L^{p}}\). We will also use the notation \(\Lambda ^{s}=(-\partial _{xx})^{s/2}\). Recall that \(L^{2}\) based spaces are Hilbert spaces and may alternatively be denoted by \(H^{s}=W^{s,2}\). For \(s>0\), we also define \(H^{-s}:=(H^{s})^{\star }\), i.e. the dual space of \(H^s\). Let us gather here some well-known Sobolev embedding inequalities:

Let us also recall the well-known commutator estimate of Kato and Ponce:

Lemma 2.1

[34] If \(s\ge 0\) and \(1<p<\infty \), then

We will also use the following result as the main tool for proving the existence results and the blow-up criterion:

Theorem 2.2

[2] Let \(\mathcal {Q}\) be a first-order linear differential operator

$$\mathcal {Q} f(x) = a(x)\,\partial _x f(x) + b(x)\,f(x),$$

where the coefficients are smooth and bounded. Then for \(f\in H^{2}(\mathbb {T},\mathbb {R})\) we have

Moreover, if \(f\in H^{2+s}(\mathbb {T},\mathbb {R})\), and \(\mathcal {P}\) is a pseudodifferential operator of order s, then

for every \(s\in [1, \infty )\).

Remark 2.3

Theorem 2.2 is fundamental for closing the energy estimates when showing well-posedness of the stochastic Burgers’ equation. It allows us to reduce the order of a sum of terms that at first sight appears hopelessly singular.

Next, we briefly recall some aspects of the theory of stochastic analysis. Fix a stochastic basis \(\mathcal {S}=(\Xi ,\mathcal {F},\lbrace \mathcal {F}_{t}\rbrace _{t\ge 0}, \mathbb {P},\lbrace W^{k}\rbrace _{k\in \mathbb {N}}),\) that is, a filtered probability space together with a sequence \(\lbrace W^{k} \rbrace _{k\in \mathbb {N}}\) of scalar independent Brownian motions relative to the filtration \(\lbrace \mathcal {F}_{t}\rbrace _{t\ge 0}\) satisfying the usual conditions.

Given a stochastic process \(X\in L^{2}(\Xi ;L^{2}([0,\infty );L^{2}(\mathbb {T},\mathbb {R}))),\) the Burkholder–Davis–Gundy inequality is given by

for any \(p\ge 1\), where \(C_{p}\) is a constant depending only on p.

We also state the celebrated Itô–Wentzell formula, which we use throughout this work.

Theorem 2.4

[36, Theorem 1.2] For \(0\le t<\tau \), let \(u(t,\cdot )\) be \(C^3\) almost surely, and \(u(\cdot ,x)\) be a continuous semimartingale satisfying the SPDE

where \(\{N_t^j\}_{j = 0}^\infty \) is a family of continuous semimartingales and \(\{\sigma _j(t,x)\}_{j=0}^\infty \) is also a family of continuous semimartingales that are \(C^2\) in space for \(0\le t<\tau \). Also, let \(X_t\) be a continuous semimartingale. Then, we have the following

Let us also introduce three different notions of solutions:

Definition 2.5

(Local solution) A local solution \(u\in H^s(\mathbb {T},\mathbb {R})\), for \(s>3/2\), of the Burgers’ equation (1.1) is a random variable \(u:[0,\tau ] \times \mathbb {T} \times \Xi \rightarrow \mathbb {R},\) with trajectories of class \(C([0,\tau ]; H^{s}(\mathbb {T},\mathbb {R}))\), together with a stopping time \(\tau : \Xi \rightarrow [0, \infty ],\) such that \(u(t \wedge \tau )\) is adapted to \(\lbrace \mathcal {F}_{t}\rbrace _{t\ge 0}\) and (1.1) holds in the \(L^2\) sense, that is,

for finite stopping times \(\tau ' \le \tau \).

Definition 2.6

(Maximal solution) A maximal solution of (1.1) is a stopping time \(\tau _{max}:\Xi \rightarrow [0,\infty ]\) and random variable \(u:[0,\tau _{max})\times \mathbb {T} \times \Xi \rightarrow \mathbb {R}\), such that:

\(\mathbb {P} (\tau _{max} >0) = 1, \ \tau _{max} = \lim _{n \rightarrow \infty } \tau _n,\) where \(\tau _n\) is an increasing sequence of stopping times, i.e. \(\tau _{n+1}\ge \tau _{n}\), \(\mathbb {P}\) almost surely.

\((\tau _{n},u)\) is a local solution for every \(n\in \mathbb {N}\).

If \((\tau ',u')\) is another pair satisfying the above conditions and \(u'=u\) on \([0,\tau '\wedge \tau _{max} )\), then \(\tau '\le \tau _{max}\), \(\mathbb {P}\) almost surely.

A maximal solution is said to be global if \(\tau _{max}=\infty \), \(\mathbb {P}\) almost surely.

Definition 2.7

(Weak solution) We say that a random variable \(u \in L^2( \Xi ; L^\infty ([0, \infty ) \times {\mathbb {T}}))\) that satisfies the following integral equation

\({\mathbb {P}}\) almost surely for any test function \(\varphi \in C_0^\infty ([0,\infty ) \times {\mathbb {T}})\) is a weak solution to the problem (3.1). It is easy to show that a local solution in the sense of Definition 2.5 is indeed a weak solution.

Notations: Let us fix some notation that we will use throughout this work. We denote the \(L^{2}\)-based Sobolev spaces by \(H^{s}(\text {domain}, \text {target space})\). However, we will sometimes omit the domain and target space and just write \(H^s\) when these are clear from the context. \(a\lesssim b\) means that there exists a positive constant C such that \(a \le Cb\), where C may depend on fixed parameters and constant quantities and may differ from line to line. Finally, the qualifier “almost surely” is not always stated explicitly when it is clear from the context.
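On the torus, the norm \(||f||_{H^s} = ||(I-\partial _{xx})^{s/2}f||_{L^2}\) is a Fourier multiplier, so it can be approximated from grid values with an FFT. The following is a minimal Python/NumPy sketch, assuming \({\mathbb {T}} = [0, 2\pi )\) and a uniform grid; the function name `sobolev_norm` is hypothetical. Replacing the weight \(1+k^2\) by \(k^2\) gives the homogeneous seminorm \(||\Lambda ^s f||_{L^2}\).

```python
import numpy as np


def sobolev_norm(f_samples, s, length=2 * np.pi):
    """Approximate ||f||_{H^s} = ||(I - d_xx)^{s/2} f||_{L^2} on the torus [0, length).

    f_samples holds f on a uniform grid of N points with the endpoint excluded.
    """
    n = f_samples.size
    coeffs = np.fft.fft(f_samples) / n                  # Fourier coefficients c_k of f
    k = 2 * np.pi * np.fft.fftfreq(n, d=length / n)     # angular wavenumbers
    weights = (1.0 + k**2) ** s                         # symbol of (I - d_xx)^s
    # Parseval on [0, length): ||g||_{L^2}^2 = length * sum_k |c_k(g)|^2
    return np.sqrt(length * np.sum(weights * np.abs(coeffs) ** 2))
```

For example, for \(f(x) = \sin x\) sampled on 128 points, `sobolev_norm(f, 1.0)` is close to \(\sqrt{2\pi }\), since \(\int _0^{2\pi } (\sin ^2 x + \cos ^2 x)\,{{\,\mathrm{d\!}\,}}x = 2\pi \).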

3 Shocks in Burgers’ equation with stochastic transport

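Recall that we are dealing with a stochastic Burgers’ equation of the form

$$ {{\,\mathrm{d\!}\,}}u + u\,\partial _x u\,{{\,\mathrm{d\!}\,}}t + \sum _{k=1}^\infty \xi _k(x)\,\partial _x u \circ {{\,\mathrm{d\!}\,}}W_t^k = \nu \,\partial _{xx} u\,{{\,\mathrm{d\!}\,}}t,$$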

for \(x \in {\mathbb {T}}\) or \({\mathbb {R}},\) where \(\nu \ge 0\) is constant, \(\{\xi _k(x)\}_{k \in {\mathbb {N}}}\) is an orthonormal basis of some separable Hilbert space \({\mathcal {H}},\) and \(\circ \) means that the integration is carried out in the Stratonovich sense. In this section, we study the problem of whether shocks can form in the inviscid Burgers’ equation with stochastic transport. By using a characteristic argument, we prove that for some classes \(\{\xi _k(x)\}_{k \in {\mathbb {N}}}\), the transport noise cannot prevent shock formation. We also consider a weak formulation of the problem and prove that the shocks satisfy a stochastic version of the Rankine–Hugoniot condition.

3.1 Inviscid Burgers’ equation with stochastic transport

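The inviscid Burgers’ equation with stochastic transport is given by

$$ {{\,\mathrm{d\!}\,}}u + u\,\partial _x u\,{{\,\mathrm{d\!}\,}}t + \sum _{k=1}^\infty \xi _k(x)\,\partial _x u \circ {{\,\mathrm{d\!}\,}}W_t^k = 0, \tag{3.1}$$

which in integral form is interpreted as

$$ u(t,x) = u(0,x) - \int _0^t u(s,x)\,\partial _x u(s,x)\,{{\,\mathrm{d\!}\,}}s - \sum _{k=1}^\infty \int _0^t \xi _k(x)\,\partial _x u(s,x) \circ {{\,\mathrm{d\!}\,}}W_s^k, \tag{3.2}$$

for all \(x \in {\mathbb {T}}\) or \({\mathbb {R}}\). Also, we will assume throughout this paper that the initial condition is positive, that is, \(u(0,x) > 0\) for all \(x \in {\mathbb {T}}\) or \({\mathbb {R}}\).

Consider a process \(X_t\) that satisfies the Stratonovich SDE

$$ {{\,\mathrm{d\!}\,}}X_t = u(t,X_t)\,{{\,\mathrm{d\!}\,}}t + \sum _{k=1}^\infty \xi _k(X_t) \circ {{\,\mathrm{d\!}\,}}W_t^k, \tag{3.3}$$

which, in Itô form, reads

$$ {{\,\mathrm{d\!}\,}}X_t = \Big ( u(t,X_t) + \frac{1}{2}\sum _{k=1}^\infty \xi _k(X_t)\,\partial _x \xi _k(X_t)\Big ) {{\,\mathrm{d\!}\,}}t + \sum _{k=1}^\infty \xi _k(X_t)\,{{\,\mathrm{d\!}\,}}W_t^k. \tag{3.4}$$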

We call this process the characteristic of (3.1), analogous to the characteristic lines in the deterministic Burgers’ equation. We assume the following conditions on \(\{\xi _k(\cdot )\}_{k \in {\mathbb {N}}}\).

Assumption A1

The functions \(\xi _k\) are smooth for all \(k \in {\mathbb {N}}\) and, together with the Stratonovich-to-Itô correction term \(\varphi (x):=\frac{1}{2} \sum _{k=1}^\infty \xi _k(x) \partial _x\xi _k(x)\), satisfy the following:

Lipschitz continuity

$$\begin{aligned} |\varphi (x) - \varphi (y)| \le C_0 |x-y|, \quad |\xi _k(x) - \xi _k(y)| \le C_k |x-y|, \quad k \in {\mathbb {N}}, \end{aligned}\tag{3.5}$$

Linear growth condition

$$\begin{aligned} |\varphi (x)| \le D_0 (1 + |x|), \quad |\xi _k(x)| \le D_k (1+|x|), \quad k \in {\mathbb {N}} \end{aligned}\tag{3.6}$$

for real constants \(C_0, C_1, C_2, \ldots \) and \(D_0, D_1, D_2, \ldots \) with

Provided \(u(t,\cdot )\) is sufficiently smooth, with \(u\) and \(\partial _x u\) bounded (so that it is Lipschitz continuous with linear growth), until some stopping time \(\tau \), and \(\{\xi _k(\cdot )\}_{k \in {\mathbb {N}}}\) satisfies the conditions in Assumption A1, the characteristic equation (3.4) is locally well-posed. One feature of the multiplicative noise in (3.1) is that u is transported along the characteristics; that is, we can show that \(u(t,x) = (\Phi _t)_*u_0(x)\) for \(0\le t < \tau _{max},\) where \(\Phi _t\) is the stochastic flow of the SDE (3.4), \((\Phi _t)_*\) denotes the pushforward by \(\Phi _t,\) and \((\tau _{max}, X_t)\) is the maximal solution of (3.4). This is an easy corollary of the Itô–Wentzell formula (2.9).
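The transport property suggests a simple way to simulate characteristics before any crossing occurs: since \(u(t,X_t) = u_0(X_0)\), the Itô form of the characteristic equation reduces to \({{\,\mathrm{d\!}\,}}X_t = (u_0(X_0) + \varphi (X_t))\,{{\,\mathrm{d\!}\,}}t + \sum _k \xi _k(X_t)\,{{\,\mathrm{d\!}\,}}W_t^k\), with \(\varphi \) the correction term of Assumption A1. The Python sketch below applies the Euler–Maruyama scheme to this equation for several starting points driven by the same Brownian motions. It assumes finitely many noise modes passed as callables together with their derivatives; the function and argument names are hypothetical, and the scheme is only meaningful up to the first crossing time.

```python
import numpy as np


def simulate_characteristics(x0, u0, xis, dxis, T, n_steps, rng):
    """Euler-Maruyama for dX = (u0(X_0) + phi(X)) dt + sum_k xi_k(X) dW^k,
    where phi = 0.5 * sum_k xi_k * xi_k' (Ito correction).

    x0: starting points; u0: initial profile (callable);
    xis, dxis: lists of callables xi_k and their derivatives (same length).
    Returns an array of shape (n_steps + 1, len(x0)). Valid only before characteristics cross.
    """
    dt = T / n_steps
    x = np.array(x0, dtype=float)
    speed = u0(x)                      # u(t, X_t) = u0(X_0) along each characteristic
    path = [x.copy()]
    for _ in range(n_steps):
        # one Brownian increment per mode, shared by all characteristics
        dW = rng.normal(0.0, np.sqrt(dt), size=len(xis))
        phi = sum(0.5 * xi(x) * dxi(x) for xi, dxi in zip(xis, dxis))
        noise = sum(xi(x) * dw for xi, dw in zip(xis, dW))
        x = x + (speed + phi) * dt + noise
        path.append(x.copy())
    return np.array(path)
```

A crossing can then be detected by checking when the ordering of the columns of the returned path changes, for instance with the positive, decreasing profile `u0 = lambda x: 1.5 - np.tanh(x)`.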

Corollary 3.0.1

Let \(u(t,\cdot )\) be \(C^3 \cap L^\infty \) in space for \(0<t<\tau \). Assume also that \(u(\cdot ,x)\) is a continuous semimartingale satisfying (3.2), \(\partial _x u (\cdot ,x)\) is a continuous semimartingale satisfying the spatial derivative of (3.2), and \(\{\xi _k(\cdot )\}_{k \in {\mathbb {N}}}\) satisfies the conditions in Assumption A1. If \((\tau _{max},X_t)\) is a maximal solution to (3.4), then \(u(t,X_t) = u(0,X_0)\) almost surely for \(0<t<\tau _{max}\).

Remark 3.1

Notice that, due to our local well-posedness result (Theorem 1.3) and the maximum principle (Proposition 4.10), one has \(u(t,\cdot ) \in C^3 \cap L^\infty \) for \(t < \tau _{max}\) provided \(u_0\) is smooth enough and bounded. For instance, \(u_0 \in H^4 \cap L^\infty \) is sufficient.

Proof of Corollary 3.0.1

Note that under the given assumptions, \(\sigma _0(t,x) := u(t,x)\partial _x u (t,x),\) and \(\sigma _k(t,x) := \xi _k(x)\partial _x u(t,x)\) for all \(k \in {\mathbb {N}},\) satisfy the conditions in Theorem 2.4. We take \(N_t^0 = t\) and \(N_t^k = W_t^k\) for \(k \in {\mathbb {N}}\). Using the Itô-Wentzell formula (2.9) for the stochastic field u(t, x) satisfying (3.2), and the semimartingale \(X_t\), we obtain

Now, we have \(I_1 = I_2\) almost surely so indeed, \(u(t,X_t) = u(0,X_0)\) almost surely for \(0<t<\tau _{max}\). \(\square \)

3.2 Results on shock formation

In order to investigate the crossing of characteristics in the stochastic Burgers’ equation (3.1) with transport noise, we define the first crossing time \(\tau \) as

$$\tau := \inf \left\{ t > 0 : X_t^a = X_t^b \ \text {for some } a \ne b \right\} ,$$

where \(X_t^a, X_t^b\) are two characteristics that solve the SDE (3.3) with initial conditions \(X_0^a = a\) and \(X_0^b = b\). This gives the first time at which two characteristics intersect. In the following, we show that in the special case \(\xi _1(x) = \alpha x + \beta \) (where we only consider one noise term and the other terms \(\xi _k\) are identically zero for \(k = 2,3,\ldots \)), the first crossing time is equivalent to the first hitting time of the integrated geometric Brownian motion. We note that in this case, Eq. (3.4) is explicitly solvable and, writing \(W_t = W_t^1\), its general solution for \(\alpha \ne 0\) is given by

$$X_t = e^{\alpha W_t}\left( X_0 + \frac{\beta }{\alpha } + u_0(X_0)\int _0^t e^{-\alpha W_s}\,{{\,\mathrm{d\!}\,}}s\right) - \frac{\beta }{\alpha }, \tag{3.9}$$

where we used that \(u(t,X_t) = u_0(X_0)\) along characteristics (Corollary 3.0.1).

Proposition 3.2

The first crossing time of the inviscid stochastic Burgers’ equation (3.1) with \(\xi _1(x) = \alpha x + \beta \) for constants \(\alpha , \beta \in {\mathbb {R}}\) and \(\xi _k(\cdot ) \equiv 0\) for \(k = 2,3,\ldots \) is equivalent to the first hitting time for the integrated geometric Brownian motion \(I_t := \int ^t_0 e^{-\alpha W_s}ds\).

Proof

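Consider two arbitrary characteristics \(X_t^a\) and \(X_t^b\) with \(X_0^a = a\) and \(X_0^b = b\), where \(a < b\). From (3.9), one can check that \(X_t^a = X_t^b\) if and only if

$$I_t = \int _0^t e^{-\alpha W_s}\,{{\,\mathrm{d\!}\,}}s = \frac{b-a}{u_0(a)-u_0(b)},$$

which can only happen if \(u_0(a) > u_0(b)\). Now, since the left-hand side is continuous, strictly increasing with \(I_0 = 0,\) and independent of a and b, we have

$$\tau = \inf \left\{ t > 0 : I_t = \frac{1}{m}\right\} ,$$

where

$$m := \sup _{a<b} \frac{u_0(a)-u_0(b)}{b-a} = -\inf _{x} \partial _x u_0(x)$$

is the magnitude of the steepest negative slope of \(u_0\). Hence, the first crossing time is equivalent to the first hitting time of the process \(I_t\). \(\square \)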

Remark 3.3

Note that the constant \(\beta \) does not affect the first crossing time, hence we can set \(\beta =0\) without loss of generality. Also in the following, we simply write \(\xi (\cdot )\) without the index when we only consider one noise term.
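Proposition 3.2 reduces the crossing problem for \(\xi (x) = \alpha x + \beta \) to a hitting problem for \(I_t = \int _0^t e^{-\alpha W_s}\,{{\,\mathrm{d\!}\,}}s\), which is easy to explore by Monte Carlo. In the Python/NumPy sketch below, `m > 0` denotes the magnitude of the steepest negative slope of \(u_0\), i.e. \(m = -\inf _x \partial _x u_0(x)\) (our notation), so that two characteristics first meet when \(I_t\) reaches \(1/m\). The uniform time grid, the left Riemann sum, and the function name are assumptions of the sketch. For \(\alpha = 0\) one has \(I_t = t\), and the sketch reduces to the classical breaking time \(1/m\) of the deterministic Burgers’ equation.

```python
import numpy as np


def first_crossing_time(alpha, m, T, n_steps, rng):
    """First time I_t = int_0^t exp(-alpha W_s) ds reaches 1/m, on a uniform grid.

    m > 0 is the magnitude of the steepest negative slope of u0 (hypothetical input).
    Returns np.inf if the level is not reached by time T.
    """
    dt = T / n_steps
    dW = rng.normal(0.0, np.sqrt(dt), size=n_steps)
    W_left = np.concatenate(([0.0], np.cumsum(dW)[:-1]))  # W at the left end of each step
    I = np.cumsum(np.exp(-alpha * W_left)) * dt           # left Riemann sum: I at t_1, ..., t_n
    hit = np.flatnonzero(I >= 1.0 / m)
    return (hit[0] + 1) * dt if hit.size else np.inf
```

Repeating the call with independent generators and increasing T gives an empirical picture of Corollary 3.3.1: the fraction of paths returning `np.inf` should decrease as T grows, since \(I_t\) is increasing in t.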

As an immediate consequence of Proposition 3.2, we prove that the transport noise with \(\xi (x) = \alpha x\) cannot prevent shocks from forming almost surely in the stochastic Burgers’ equation (3.1).

Corollary 3.3.1

Let \(\xi (x) = \alpha x\) for some \(\alpha \in {\mathbb {R}}\). If the initial profile \(u_0\) has a negative slope, then \(\tau < \infty \) almost surely.

Proof

To prove this, it is enough to show that

where we have assumed \(\alpha > 0,\) without loss of generality, and \(W_{\bullet } : {\mathbb {R}}_{\ge 0} \times \Xi \rightarrow {\mathbb {R}}\) is the standard Wiener process on the Wiener space \((\Xi , {\mathcal {F}}, {\mathbb {P}}),\) adapted to the natural filtration \({\mathcal {F}}_t\). This implies that \(\tau < \infty \) a.s. by Proposition 3.2.

First, define the set

Fixing \(\omega \in A\), choose \(t_1, t_2, \ldots \in {\mathbb {R}}_{\ge 0}\) with \(t_n < t_{n+1},\) such that \(\lim _{n \rightarrow \infty } t_n = \infty \) and \(\liminf _{n \rightarrow \infty } (t_{n+1}-t_n) > 0\), and consider the sequence

Clearly, \(\{I_n(\omega )\}_{n \in {\mathbb {N}}}\) is monotonically increasing, and it is also bounded since \(\omega \in A\). Hence, it is convergent by the monotone convergence theorem and, in particular, it is a Cauchy sequence. Therefore we have

Since the integrand is strictly positive, this implies \(\lim _{t \rightarrow \infty } e^{\alpha W_t(\omega )} = 0,\) and hence \(W_t(\omega ) \rightarrow -\infty \). On the other hand, for \(\omega \in \Xi \) such that \(W_t(\omega ) \rightarrow -\infty \), it is easy to see that \(\omega \in A\). This implies that, under the identification \(\Xi \cong C([0,\infty );{\mathbb {R}})\), the set A coincides with the set of paths with \(W_t \rightarrow -\infty \), which is measurable since it can be written as \(\bigcap _{M \in {\mathbb {N}}} \bigcup _{N \in {\mathbb {N}}} \bigcap _{t \in {\mathbb {Q}},\, t \ge N} \{W_t < -M\}\). In particular, for \(\omega \in A\), we have

but since \(\limsup _{t \rightarrow \infty } W_t = +\infty ,\) a.s., this implies \({\mathbb {P}}(A) = 0\). \(\square \)

In the following, we show that for a broader class of \(\{\xi _k(\cdot )\}_{k \in {\mathbb {N}}}\), shock formation occurs in expectation provided the initial profile has a sufficiently negative slope. Moreover, no new shocks can develop from positive slopes. We show this by looking at how the slope \(\partial _x u\) evolves along the characteristics \(X_t\), which resembles the argument given in [10] for the stochastic Camassa–Holm equation.

Theorem 3.4

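Consider a characteristic \(X_t\) and a smooth initial profile \(u(0,x)=u_0(x)\) such that \(\partial _x u(0,X_0) = - \sigma < 0\). If

$$-\sigma < \inf _{x} \psi (x), \qquad \psi (x) = \frac{1}{2}\sum _{k=1}^\infty \left( (\partial _x \xi _k(x))^2 - \xi _k(x) \partial _{xx} \xi _k(x)\right) ,$$

then there exists \(0<t_*<\infty \) such that \(\lim _{t \rightarrow t_*} {\mathbb {E}} [\partial _x u(t,X_t)] = - \infty \). On the other hand, if \(\partial _x u(0,X_0) \ge 0\) and

$$\sup _{x} \psi (x) < \infty ,$$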

then \(\partial _x u(t,X_t) < \infty \) almost surely for all \(t > 0\).

Proof

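Taking the spatial derivative of (3.2), and evaluating the stochastic field \(\partial _x u(t,x)\) along the semimartingale \(X_t\) by the Itô–Wentzell formula (2.9) (again, this is valid due to the local well-posedness result, Theorem 1.3), we find that the process \(Y_t := \partial _x u(t,X_t)\), together with \(X_t\), satisfies the following coupled Stratonovich SDEs

$$\begin{aligned} {{\,\mathrm{d\!}\,}}X_t&= u(t,X_t)\,{{\,\mathrm{d\!}\,}}t + \sum _{k=1}^\infty \xi _k(X_t) \circ {{\,\mathrm{d\!}\,}}W_t^k, \\ {{\,\mathrm{d\!}\,}}Y_t&= -Y_t^2\,{{\,\mathrm{d\!}\,}}t - \sum _{k=1}^\infty \partial _x \xi _k(X_t)\,Y_t \circ {{\,\mathrm{d\!}\,}}W_t^k. \end{aligned}$$

In Itô form, this reads

$$\begin{aligned} {{\,\mathrm{d\!}\,}}X_t&= \big ( u(t,X_t) + \varphi (X_t)\big ) {{\,\mathrm{d\!}\,}}t + \sum _{k=1}^\infty \xi _k(X_t)\,{{\,\mathrm{d\!}\,}}W_t^k, \\ {{\,\mathrm{d\!}\,}}Y_t&= \big ( -Y_t^2 + \psi (X_t)\,Y_t\big ) {{\,\mathrm{d\!}\,}}t - \sum _{k=1}^\infty \partial _x \xi _k(X_t)\,Y_t\,{{\,\mathrm{d\!}\,}}W_t^k, \end{aligned}\tag{3.14}$$

where \(\varphi \) is the correction term of Assumption A1 and \(\psi \) is as in Theorem 1.1. Taking the expectation of (3.14) on both sides, we obtain

$$\frac{{{\,\mathrm{d\!}\,}}}{{{\,\mathrm{d\!}\,}}t}{\mathbb {E}}[Y_t] = -{\mathbb {E}}[Y_t^2] + {\mathbb {E}}[\psi (X_t)\,Y_t]. \tag{3.15}$$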

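Now, assume that there exists a constant \(C \in {\mathbb {R}}\) such that

$$\sum _{k=1}^\infty \left( (\partial _x \xi _k(x))^2 - \xi _k(x) \partial _{xx} \xi _k(x)\right) \ge C \tag{3.16}$$

for all \(x \in {\mathbb {R}}\). If \(Y_0 = -\sigma < 0\), we have \(Y_t < 0\) for all \(t > 0,\) since \(Y = 0\) is a fixed line in the phase space (X, Y) and therefore cannot be crossed. Hence, from (3.16), we have \(\psi (X_t)\,Y_t \le \frac{C}{2}\,Y_t\), so that

$${\mathbb {E}}[\psi (X_t)\,Y_t] \le \frac{C}{2}\,{\mathbb {E}}[Y_t],$$

and (3.15) becomes

$$\frac{{{\,\mathrm{d\!}\,}}}{{{\,\mathrm{d\!}\,}}t}{\mathbb {E}}[Y_t] \le -{\mathbb {E}}[Y_t^2] + \frac{C}{2}\,{\mathbb {E}}[Y_t] \le -{\mathbb {E}}[Y_t]^2 + \frac{C}{2}\,{\mathbb {E}}[Y_t],$$

since \({\mathbb {E}}[Y_t^2] - {\mathbb {E}}[Y_t]^2 = {\mathbb {E}}\left[ (Y_t - {\mathbb {E}}[Y_t])^2\right] \ge 0\).

Solving this differential inequality by comparison with the corresponding Riccati equation, we get, for \(C \ne 0\),

$${\mathbb {E}}[Y_t] \le -\frac{C\sigma e^{Ct/2}}{C + 2\sigma - 2\sigma e^{Ct/2}},$$

and \({\mathbb {E}}[Y_t] \le -\sigma /(1-\sigma t)\) for \(C = 0\). The right-hand side tends to \(-\infty \) in finite time, namely at \(t_* = \frac{2}{C}\log \left( 1 + \frac{C}{2\sigma }\right) \) (or \(t_* = 1/\sigma \) if \(C = 0\)), provided \(-\sigma < C/2\).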

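Hence, if

$$-\sigma < \inf _{x \in {\mathbb {R}}} \frac{1}{2}\sum _{k=1}^\infty \left( (\partial _x \xi _k(x))^2 - \xi _k(x) \partial _{xx} \xi _k(x)\right) ,$$

then there exists \(t_* < \infty \) such that \(\lim _{t \rightarrow t_*} {\mathbb {E}} [\partial _x u(t, X_t)] = - \infty \).

Similarly, if \( \frac{1}{2}\sum _{k=1}^\infty \left( (\partial _x \xi _k(x))^2 - \xi _k(x) \partial _{xx} \xi _k(x)\right) < D\) for all x and some \(D \in {\mathbb {R}}\), then for \(Y_0 > 0\) we have \(Y_t > 0\) for all \(t > 0\) and, again,

$$\frac{{{\,\mathrm{d\!}\,}}}{{{\,\mathrm{d\!}\,}}t}{\mathbb {E}}[Y_t] \le -{\mathbb {E}}[Y_t]^2 + D\,{\mathbb {E}}[Y_t].$$

The right-hand side is negative whenever \({\mathbb {E}}[Y_t] > \max \{D, 0\}\), so \({\mathbb {E}}[Y_t] \le \max \{Y_0, D\} < \infty \) for all \(t>0\). Since \(Y_t > 0\), this implies \(Y_t < \infty \) almost surely. \(\square \)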
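The blow-up argument above rests on comparison with the Riccati equation \(y' = -y^2 + a\,y\), \(y(0) = -\sigma \), where a plays the role of C/2. Its explicit solution is \(y(t) = -a\sigma e^{at}/(a + \sigma - \sigma e^{at})\) (with \(y(t) = -\sigma /(1-\sigma t)\) when a = 0), which reaches \(-\infty \) at \(t_* = \log (1 + a/\sigma )/a\) exactly when \(-\sigma < a\). The short Python sketch below (standard library only; the function names are hypothetical) evaluates this upper bound and the blow-up time. It checks the comparison argument only and does not simulate \(\partial _x u\) itself.

```python
import math


def riccati_bound(t, sigma, a):
    """Solution y(t) of y' = -y**2 + a*y with y(0) = -sigma (sigma > 0), before blow-up.

    In the proof of Theorem 3.4, a = C/2 and E[d_x u(t, X_t)] <= y(t) by comparison.
    """
    if a == 0.0:
        return -sigma / (1.0 - sigma * t)
    e = math.exp(a * t)
    return -a * sigma * e / (a + sigma - sigma * e)


def blowup_time(sigma, a):
    """Time at which y(t) reaches -infinity, or math.inf if -sigma >= a (no blow-up)."""
    if -sigma >= a:
        return math.inf
    if a == 0.0:
        return 1.0 / sigma
    return math.log(1.0 + a / sigma) / a
```

For instance, with \(\sigma = 1\) and \(a = 0\) the bound reduces to the deterministic Riccati solution \(-1/(1-t)\), which blows up at \(t_* = 1\); a negative a delays blow-up, and \(a \le -\sigma \) prevents it.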

Remark 3.5

Blow-up in expectation does not imply pathwise blow-up. It is merely a necessary condition, which suggests that the law of \(\partial _x u\) becomes increasingly fat-tailed with time, making it more likely for it to take extreme values. Nonetheless, it is a good indication of blow-up occurring with some probability.

Example 3.6

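Consider the family \(\{\xi _k(x)\}_{k \in {\mathbb {N}}} = \left\{ \frac{1}{k^2}\sin (kx), \frac{1}{k^2}\cos (kx)\right\} _{k \in {\mathbb {N}}}\), which is orthogonal in \(L^2({\mathbb {T}})\) and whose closed span is the mean-zero subspace of \(L^2({\mathbb {T}})\) (the constant mode is not included). Then, one can easily check that each pair of modes contributes \(1/k^2\) to \(\psi \), so that

$$\psi (x) = \sum _{k=1}^\infty \frac{1}{k^2} = \frac{\pi ^2}{6} > 0$$

for all \(x \in {\mathbb {T}},\) so blow-up occurs in expectation for any initial profile with negative slope, but no new shocks can form from positive slopes.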
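The value of \(\psi \) for the family in Example 3.6 can be checked numerically by truncating the sum at `n_modes` terms and using the exact derivatives of \(\sin (kx)/k^2\) and \(\cos (kx)/k^2\). The Python/NumPy sketch below (hypothetical function name) also evaluates the Itô correction \(\varphi \) from Assumption A1. Each pair of modes contributes \(1/k^2\) to \(\psi \), so the truncated \(\psi \) equals \(\sum _{k\le N} 1/k^2\) and approaches \(\pi ^2/6\). The sin and cos contributions to \(\varphi \) cancel, so \(\varphi \) vanishes identically and the Stratonovich and Itô forms of the characteristic equation coincide for this family.

```python
import numpy as np


def psi_and_phi(x, n_modes):
    """Truncated psi(x) = 0.5 * sum((xi_k')**2 - xi_k * xi_k'') and
    phi(x) = 0.5 * sum(xi_k * xi_k') for {sin(kx)/k^2, cos(kx)/k^2}, k = 1..n_modes."""
    psi = np.zeros_like(x, dtype=float)
    phi = np.zeros_like(x, dtype=float)
    for k in range(1, n_modes + 1):
        s, c = np.sin(k * x), np.cos(k * x)
        # (xi, xi', xi'') for xi = sin(kx)/k^2 and for xi = cos(kx)/k^2
        for xi, dxi, ddxi in ((s / k**2, c / k, -s), (c / k**2, -s / k, -c)):
            psi += 0.5 * (dxi**2 - xi * ddxi)
            phi += 0.5 * xi * dxi
    return psi, phi
```

Evaluating it on a grid confirms that the truncated \(\psi \) is constant in x, so the conditions of Theorem 3.4 hold uniformly on \({\mathbb {T}}\) for this family.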

3.3 Weak solutions

We saw that if the initial profile \(u_0\) has a negative slope, then shocks may form in finite time (almost surely in the linear case \(\xi (x) = \alpha x\)), so solutions to (3.1) cannot exist in the classical sense. This motivates us to consider weak solutions to (3.1) in the sense of Definition 2.7.

Suppose that the profile u is differentiable everywhere except for a discontinuity along the curve \(\gamma = \left\{ (t,s(t)) \in [0,\infty ) \times M \right\} \), where \(M = {\mathbb {T}}\) or \({\mathbb {R}}\). Then the curve of discontinuity must satisfy the following for u to be a solution of the integral equation (2.10).

Proposition 3.7

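(Stochastic Rankine–Hugoniot condition) The curve of discontinuity s(t) of the stochastic Burgers’ equation in weak form (2.10) satisfies the following SDE

$$ {{\,\mathrm{d\!}\,}}s(t) = \frac{1}{2}\left( u_+(t,s(t)) + u_-(t,s(t))\right) {{\,\mathrm{d\!}\,}}t + \sum _{k=1}^\infty \xi _k(s(t)) \circ {{\,\mathrm{d\!}\,}}W_t^k,$$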

where \(u_\pm (t,s(t)) := \lim _{x \rightarrow s(t)^\pm } u(t,x)\) are the left and right limits of u.
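As a consistency check in the simplest setting, assume a single noise term with spatially constant \(\xi \equiv \beta \) and take (1.1) with \(\nu =0\) in the form \({{\,\mathrm{d\!}\,}}u + u\,\partial _x u\,{{\,\mathrm{d\!}\,}}t + \beta \,\partial _x u \circ {{\,\mathrm{d\!}\,}}W_t = 0\), consistent with the transport velocity \(u + \dot{W}\) of the introduction. Then \(u(t,x) = v(t, x-\beta W_t)\), where v solves the deterministic Burgers’ equation, so a shock of v travelling at the classical speed \((u_-+u_+)/2\) is carried along by \(\beta W_t\), and its position satisfies \({{\,\mathrm{d\!}\,}}s = \frac{1}{2}(u_- + u_+)\,{{\,\mathrm{d\!}\,}}t + \beta \,{{\,\mathrm{d\!}\,}}W_t\) (Itô and Stratonovich agree here because \(\beta \) is constant). The Python sketch below, with hypothetical Riemann data and parameters, integrates this SDE by Euler–Maruyama and compares the result with the jump location of the shifted deterministic profile.

```python
import numpy as np

def burgers_riemann_shock(t, x, u_minus, u_plus, s0=0.0):
    """Entropy solution of u_t + u u_x = 0 with Riemann data u_minus > u_plus:
    a single shock at s0 + t*(u_minus + u_plus)/2 (classical Rankine-Hugoniot)."""
    s_det = s0 + 0.5 * (u_minus + u_plus) * t
    return np.where(x < s_det, u_minus, u_plus)

def shock_sde_euler(u_minus, u_plus, beta, T, n_steps, rng, s0=0.0):
    """Euler-Maruyama for ds = (u_- + u_+)/2 dt + beta dW_t.
    For constant xi = beta the Ito and Stratonovich forms coincide."""
    dt = T / n_steps
    dW = rng.normal(0.0, np.sqrt(dt), size=n_steps)
    s = s0
    for k in range(n_steps):
        s += 0.5 * (u_minus + u_plus) * dt + beta * dW[k]
    return s, dW.sum()

rng = np.random.default_rng(0)
u_minus, u_plus, beta, T = 1.0, 0.0, 0.3, 1.0
s_T, W_T = shock_sde_euler(u_minus, u_plus, beta, T, 1000, rng)

# Stochastic solution: the deterministic profile seen in the frame x - beta*W_t.
x = np.linspace(-5.0, 5.0, 10001)
u_T = burgers_riemann_shock(T, x - beta * W_T, u_minus, u_plus)
i = np.argmax(np.abs(np.diff(u_T)))     # grid cell containing the jump
jump = 0.5 * (x[i] + x[i + 1])
dx = x[1] - x[0]
print(s_T, jump, abs(s_T - jump) <= dx)
```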

The main obstacle here is that the curve s(t) is not piecewise smooth, so we cannot apply the standard divergence theorem, which is how the Rankine–Hugoniot condition is usually derived. Extending classical identities such as Green’s theorem to domains with non-smooth boundaries is delicate, but several works have done so, for non-smooth but rectifiable boundaries in [40] and for non-rectifiable boundaries in [28,29,30, 38].

Lemma 3.8

(Green’s theorem for non-smooth boundaries) Let \(\Omega \) be a bounded domain in the (x, y)-plane such that its boundary \(\partial \Omega \) is a Jordan curve and let u, v be sufficiently regular functions in \(\Omega \) (see Remark 3.9 below). Then

where the contour integral on the right-hand side is understood as the limit of standard contour integrals along smooth approximations of the boundary, taken in the anticlockwise direction.

Remark 3.9

For the above to hold, there must be a trade-off between the regularity of \(\partial \Omega \) and that of the functions u, v (i.e. the less regular the boundary, the more regular the integrand must be). In particular, the following condition is known to suffice:

\(\partial \Omega \) has box-counting dimension \(d < 2\) and u, v are \(\alpha \)-Hölder continuous for some \(\alpha > d-1\) (Harrison and Norton [30]).
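Remark 3.9 matters here because the shock curve s(t) solves an SDE driven by Brownian motion, so, where the noise coefficient does not vanish, its graph is expected to be as rough as a Brownian graph. The graph of a Brownian path is known to have box-counting dimension 3/2 almost surely, so, heuristically, the Harrison–Norton condition would then require Hölder exponents \(\alpha > 1/2\). The sketch below estimates box-counting dimensions numerically by counting, for each box size \(\varepsilon = 2^{-j}\), the \(\varepsilon \)-boxes met by the graph column by column and fitting \(\log N(\varepsilon )\) against \(\log (1/\varepsilon )\). The sample sizes and scales are our own choices; the estimate is rough, but it separates a smooth curve (dimension 1) from a simulated Brownian graph (dimension close to 3/2).

```python
import numpy as np

def box_counting_dimension(y, exponents):
    """Estimate the box-counting dimension of the graph {(t, y(t)) : t in [0, 1]},
    with y sampled at len(y) equally spaced times (len(y) - 1 a power of two).
    For eps = 2**-j, each column of width eps meets about ceil(range/eps) boxes."""
    n = len(y) - 1
    log_inv_eps, log_counts = [], []
    for j in exponents:
        eps, cols = 2.0 ** -j, 2**j
        m = n // cols                        # sample intervals per column
        count = 0
        for c in range(cols):
            col = y[c * m:(c + 1) * m + 1]   # samples in [c*eps, (c+1)*eps]
            count += max(1, int(np.ceil((col.max() - col.min()) / eps)))
        log_inv_eps.append(j * np.log(2.0))
        log_counts.append(np.log(count))
    slope, _ = np.polyfit(log_inv_eps, log_counts, 1)
    return slope

n = 2**16
t = np.linspace(0.0, 1.0, n + 1)
rng = np.random.default_rng(1)
brownian = np.concatenate(([0.0], np.cumsum(rng.normal(0.0, np.sqrt(1.0 / n), n))))
smooth = np.sin(2 * np.pi * t)

d_brownian = box_counting_dimension(brownian, range(4, 11))
d_smooth = box_counting_dimension(smooth, range(4, 11))
print(d_brownian, d_smooth)   # expected near 3/2 and near 1, respectively
```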

Proof of Proposition 3.7

We provide a proof in the case \(M = {\mathbb {T}}\) with only one noise term. Extending it to the case \(M={\mathbb {R}}\) and countably many noise terms is straightforward. Take the atlas \(\{(U_1,\varphi _1),(U_2,\varphi _2)\}\) on \({\mathbb {T}} ={\mathbb {R}}/{\mathbb {Z}},\) where \(U_1 := (0,1)\), \(\varphi _1:(0,1) \rightarrow U_1\) and \(U_2 := (-\frac{1}{2},\frac{1}{2})\), \(\varphi _2 : (0,1) \rightarrow U_2\). Without loss of generality, assume that the shock \(s(\cdot )\) starts at time \(t=0\).

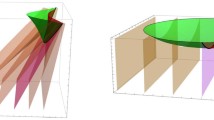

Fig. 1. In the proof of the stochastic Rankine–Hugoniot condition (3.17), the domain \(\Omega _n := [\tau _{n-1},\tau _n) \times (0,1) \subset [0,\infty ) \times {\mathbb {T}}\) is split into two parts: \(\Omega _-^n\), which lies to the left of the shock curve (t, s(t)), and \(\Omega _+^n\), which lies to the right.

Now, consider a sequence \(0 = \tau _0< \tau _1< \tau _2 < \cdots ,\) with \(\lim _{n \rightarrow \infty } \tau _n = \infty \), such that for all \(n \in \mathbb {N}\), the curve \(\gamma _n := \{s(t) : t \in [\tau _{n-1}, \tau _n)\}\) is contained in one of the charts \(U_1\) or \(U_2\); such a sequence exists by the continuity of s. For convenience, we write \((U_n, \varphi _n)\) for the chart \((U_1,\varphi _1)\) or \((U_2,\varphi _2)\) that contains \(\gamma _n\). In local coordinates, we split the domain \(\Omega _n := [\tau _{n-1},\tau _n) \times \varphi _n^{-1}(U_n)\) into two regions (see Fig. 1)
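The existence of the times \(\tau _n\) can be made concrete with a greedy procedure, sketched below for a sampled path on \({\mathbb {T}} = {\mathbb {R}}/{\mathbb {Z}}\) represented by a real-valued lift. Here we identify \(U_1\) with \({\mathbb {T}}\setminus \{0\}\) and \(U_2\) with \({\mathbb {T}}\setminus \{1/2\}\) (our reading of the atlas above), and extend each piece for as long as its range avoids 0 or 1/2 modulo 1. An arc of length less than 1/2 cannot contain both points, so every piece has positive length; for a continuous path, uniform continuity on \([0,T]\) gives finitely many pieces per bounded time interval. The function names and the simulated lift are illustrative only.

```python
import numpy as np

def chart_containing(lo, hi):
    """Return 1 if the arc [lo, hi] (mod 1) avoids 0, i.e. lies in U_1 = T minus {0};
    return 2 if it avoids 1/2, i.e. lies in U_2 = T minus {1/2}; otherwise None."""
    if np.ceil(lo) > np.floor(hi):                # no integer in [lo, hi]
        return 1
    if np.ceil(lo - 0.5) > np.floor(hi - 0.5):    # no half-integer in [lo, hi]
        return 2
    return None

def split_into_charts(lift):
    """Greedily cut a sampled path on T = R/Z, given by its real-valued lift, into
    consecutive pieces (start, end, chart) of sample indices, each lying in one chart.
    Assumes consecutive samples differ by less than 1/2, so every single step fits."""
    pieces, start = [], 0
    while start < len(lift) - 1:
        end = start + 1
        chart = chart_containing(min(lift[start], lift[end]), max(lift[start], lift[end]))
        while end + 1 < len(lift):
            seg = lift[start:end + 2]
            c = chart_containing(seg.min(), seg.max())
            if c is None:          # one more sample would leave both charts
                break
            chart, end = c, end + 1
        pieces.append((start, end, chart))
        start = end
    return pieces

rng = np.random.default_rng(2)
lift = np.cumsum(rng.normal(0.0, 0.02, size=5000))   # Brownian-like lift of s(t)
pieces = split_into_charts(lift)
print(len(pieces), pieces[:3])
```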

For \(n \in {\mathbb {N}}\), consider the following integrals

Then by Lemma 3.8, we have

where

and

where

One can check by direct calculation that

where we used the assumption that \(\varphi (0,\cdot ) \equiv 0\).

Now, from (2.10), we have \(\lim _{N\rightarrow \infty }\sum _{n=1}^N (I_n + J_n) = 0\) and since \(\varphi \) has compact support, there exists \(N > 0\) such that \(\varphi (\tau _{N'},\cdot ) \equiv 0\) for all \(N' \ge N\). Hence,

and since \(\varphi \) is arbitrary, we have

for all \(t > 0\). \(\square \)

4 Well-posedness results

4.1 Local well-posedness of a stochastic Burgers’ equation

Now, we prove local well-posedness of the stochastic Burgers’ equation (1.1) with \(\nu =0\). In fact, since the techniques used in the proof are essentially the same, we prove local well-posedness of a more general equation, which includes (1.1) as a special case. The stochastic Burgers’ equation we treat is given by

Here \(\mathcal {Q}\) represents a first order differential operator

where the coefficients a(x), b(x) are smooth and bounded. We state the main result of this section:
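For concreteness, assume, as in the proof of Lemma 4.10, that \(\mathcal {Q}u = a(x)\partial _x u + b(x)u\). Expanding the Itô–Stratonovich correction then gives \(\mathcal {Q}^2 u = a^2\partial _{xx}u + (aa' + 2ab)\partial _x u + (ab' + b^2)u\), a second-order operator with leading coefficient \(a^2 \ge 0\), so \(\frac{1}{2}\mathcal {Q}^2 u\) acts like a (possibly degenerate) diffusion. The NumPy sketch below checks this expansion on \([0,2\pi )\) with spectral derivatives; the coefficients a, b and the test function u are hypothetical choices.

```python
import numpy as np

N = 256
x = 2 * np.pi * np.arange(N) / N          # uniform grid on [0, 2*pi)
k = np.fft.fftfreq(N, d=1.0 / N)          # integer wavenumbers

def ddx(f):
    """Spectral derivative of a smooth 2*pi-periodic function sampled on x."""
    return np.real(np.fft.ifft(1j * k * np.fft.fft(f)))

# Hypothetical smooth, bounded coefficients and test function (illustration only).
a = 1.0 + 0.5 * np.sin(x)
b = np.cos(2 * x)
u = np.exp(np.sin(x))

def Q(f):
    """First-order operator Q f = a f' + b f."""
    return a * ddx(f) + b * f

# Closed form: Q^2 u = a^2 u'' + (a a' + 2 a b) u' + (a b' + b^2) u
a_x, b_x = 0.5 * np.cos(x), -2.0 * np.sin(2 * x)
u_x = np.cos(x) * u
u_xx = (np.cos(x) ** 2 - np.sin(x)) * u
closed_form = a**2 * u_xx + (a * a_x + 2 * a * b) * u_x + (a * b_x + b**2) * u

print(np.max(np.abs(Q(Q(u)) - closed_form)))   # spectrally small
```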

Theorem 4.1

Let \(u_{0}\in H^{s} (\mathbb {T},\mathbb {R})\) for some fixed \(s>3/2\). Then there exists a unique maximal solution \((\tau _{max},u)\) of the 1D stochastic Burgers’ equation (4.1); that is, if \((\tau ',u')\) is another maximal solution, then necessarily \(\tau _{max}=\tau '\) and \(u=u'\) on \([0,\tau _{max})\). Moreover, either \(\tau _{max}=\infty \) or \(\displaystyle \limsup _{t\nearrow \tau _{max}} ||u(t)||_{H^{s}}= \infty \).

We provide a sketch of the proof, which closely follows the approach developed in [2, 8]. For clarity of exposition, we divide the argument into several steps.

Step 1: Uniqueness of local solutions. To show uniqueness of local solutions, one argues by contradiction. More concretely, one can prove that any two solutions to (4.1) with the same initial data, defined up to a stopping time, must coincide, as stated in the following proposition.

Proposition 4.2

Let \(\tau \) be a stopping time, and let \(u^1,u^2: [0,\tau ] \times \mathbb {T}\times \Xi \rightarrow \mathbb {R}\) be two solutions with the same initial data \(u_{0}\) and continuous paths of class \(C\left( [0,\tau ]; H^{s}(\mathbb {T},\mathbb {R})\right) \). Then \(u^{1}=u^{2}\) on \([0,\tau ]\), almost surely.

Proof

For details, we refer the reader to [2, 8]. It suffices to define \(\bar{u} = u^1 - u^2\) and perform standard estimates on the evolution of the \(L^2\) norm of \(\bar{u}\). \(\square \)


Step 2: Existence and uniqueness of truncated maximal solutions. Consider the truncated stochastic Burgers’ equation

$$\begin{aligned} {{\,\mathrm{d\!}\,}}u_{r} + \theta _{r}(||\partial _{x}u_{r}||_\infty )u_{r}\partial _{x}u_{r} {{\,\mathrm{d\!}\,}}t+ \mathcal {Q}u_{r} {{\,\mathrm{d\!}\,}}W_{t}= \frac{1}{2} \mathcal {Q}^{2}u_{r} {{\,\mathrm{d\!}\,}}t, \end{aligned}\tag{4.2}$$

where \(\theta _{r}:[0,\infty )\rightarrow [0,1]\) is a smooth function such that

$$\begin{aligned} \theta _{r}(x)= {\left\{ \begin{array}{ll} 1, \ \text {for } |x| \le r, \\ 0, \ \text {for } |x| \ge 2r, \end{array}\right. } \end{aligned}$$

for some \(r>0\). We now state the result that is the cornerstone of the proof of existence and uniqueness of maximal local solutions of the stochastic Burgers’ equation (4.1).
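One standard way to build such a cut-off, shown below as a sketch (the construction is ours; the text only requires some smooth \(\theta _r\) with these properties), uses the \(C^\infty \) function \(\psi (t) = e^{-1/t}\) for \(t>0\), \(\psi (t) = 0\) otherwise. The ratio \(\psi (s)/(\psi (s) + \psi (1-s))\) is a smooth step from 0 to 1 on [0, 1], and composing it with \(s = (2r - x)/r\) gives \(\theta _r = 1\) on \([0,r]\) and \(\theta _r = 0\) on \([2r,\infty )\).

```python
import numpy as np

def _psi(t):
    """exp(-1/t) for t > 0 and 0 otherwise: C-infinity, but not analytic at 0."""
    t = np.asarray(t, dtype=float)
    out = np.zeros_like(t)
    pos = t > 0
    out[pos] = np.exp(-1.0 / t[pos])
    return out

def smooth_step(s):
    """C-infinity step: 0 for s <= 0, 1 for s >= 1, increasing in between.
    The denominator never vanishes, since s > 0 or 1 - s > 0 always holds."""
    return _psi(s) / (_psi(s) + _psi(1.0 - s))

def theta(x, r):
    """Cut-off theta_r: equal to 1 on [0, r], 0 on [2r, infinity), smooth in between."""
    return smooth_step((2.0 * r - np.asarray(x, dtype=float)) / r)

r = 2.0
xs = np.linspace(0.0, 6.0, 601)
th = theta(xs, r)
print(th[::100])
```

Any other smooth step would serve equally well; this one has the convenient property that it is exactly 1 and exactly 0 outside the transition region \((r,2r)\).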

Proposition 4.3

Given \(r>0\) and \(u_{0}\in H^{s}(\mathbb {T},\mathbb {R})\) for \(s>3/2\), there exists a unique global solution \(u_r\) in \(H^{s}\) of the truncated stochastic Burgers’ equation (4.2).

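Once Proposition 4.3 is proven, Theorem 4.1 follows by a standard argument (cf. [8]): as long as \(||\partial _{x}u_{r}||_\infty \le r\), the cut-off satisfies \(\theta _{r} = 1\), so \(u_r\) solves (4.1) up to the corresponding stopping time, and letting \(r\rightarrow \infty \) yields the maximal solution. Therefore, we focus our efforts on proving Proposition 4.3.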

Step 3: Global existence of solutions of the hyper-regularised truncated stochastic Burgers’ equation. Let us consider the following hyper-regularisation of our truncated equation

$$\begin{aligned} {{\,\mathrm{d\!}\,}}u^{\nu }_{r} + \theta _{r}(||\partial _{x}u^{\nu }_{r}||_\infty )u^{\nu }_{r}\partial _{x}u^{\nu }_{r} {{\,\mathrm{d\!}\,}}t+\mathcal {Q}u^{\nu }_{r} {{\,\mathrm{d\!}\,}}W_{t}= \nu \partial ^{s'}_{xx} u^{\nu }_{r} {{\,\mathrm{d\!}\,}}t +\frac{1}{2} \mathcal {Q}^{2}u^{\nu }_{r} {{\,\mathrm{d\!}\,}}t, \end{aligned}\tag{4.3}$$

where \(\nu >0\) is a parameter and \(s'= 2s+1\). The added dissipation allows the calculations to be carried out rigorously. Equation (4.3) is understood in the mild sense, i.e., as a solution to an integro-differential equation (see (4.4)).

Proposition 4.4

For every \(\nu ,r >0\) and initial data \(u_{0}\in H^{s}(\mathbb {T},\mathbb {R})\) for \(s>3/2\), there exists a unique global strong solution \(u^{\nu }_{r}\) of Eq. (4.3) in the class \(L^{2}(\Xi ; C([0,T]; H^{s}(\mathbb {T},\mathbb {R})))\), for all \(T>0\). Moreover, its paths gain extra regularity, namely \(C([\delta ,T]; H^{s+2}(\mathbb {T},\mathbb {R}) )\), for every \(T>\delta >0\).

Proof of Proposition 4.4

The proof is based on a fixed point iteration argument using Duhamel’s principle. We omit the subscripts \(\nu \) and r throughout the proof. Given \(u_{0}\in L^{2}(\Xi ; H^{s}(\mathbb {T},\mathbb {R}))\), consider the mild formulation of the hyper-regularised truncated equation (4.3).

where

Now, consider the space \(\mathcal {W}_{T}:=L^{2}(\Xi ; C([0,T];H^{s}(\mathbb {T},\mathbb {R})))\). Following the same arguments as in [2, 8], one can show that \(\Upsilon \) is a contraction on \(\mathcal {W}_{T}\) for T sufficiently small, so Picard’s iteration yields a local solution. To extend it to a global one, it suffices to show that for a given \(T>0\) and initial data \(u_{0}\in H^{s}(\mathbb {T},\mathbb {R})\), we have

so that the local solutions can be glued together to cover any time interval. Furthermore, by standard properties of the semigroup \(e^{tA}\) (cf. [26]), one can prove that for positive times \(T>\delta >0\), each term in the mild equation (4.4) enjoys higher regularity, namely, \(u\in L^{2}(\Xi ; C([\delta ,T];H^{s+2}))\) for every \(T>\delta >0\). The computations follow the same ideas as in [2, 8]. \(\square \)
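The fixed point argument can be illustrated on a deterministic scalar toy problem, which is our own simplification and not the operator setting of (4.4): for \(u' = -\lambda u - u^2\), Duhamel’s principle gives the mild form \(u(t) = e^{-\lambda t}u_0 + \int _0^t e^{-\lambda (t-\sigma )}(-u(\sigma )^2)\,{{\,\mathrm{d\!}\,}}\sigma \), and Picard iteration of the right-hand side converges to the solution. The sketch approximates the convolution integral with the trapezoidal rule and compares the limit with the closed-form solution of this Bernoulli equation. Here \(e^{-\lambda t}\) plays the role of the semigroup \(e^{tA}\); the parameter values are hypothetical.

```python
import numpy as np

def picard_mild(u0, lam, T, n, iters):
    """Picard iteration for the mild (Duhamel) form of u' = -lam*u - u**2:
        u(t) = e^{-lam t} u0 + int_0^t e^{-lam (t - s)} (-u(s)**2) ds,
    with the convolution integral approximated by the composite trapezoidal rule."""
    t = np.linspace(0.0, T, n + 1)
    dt = t[1] - t[0]
    decay = np.exp(-lam * dt)
    u = np.full_like(t, u0)            # initial guess: constant in time
    for _ in range(iters):
        g = -u**2                      # nonlinearity evaluated on the previous iterate
        duhamel = np.zeros_like(t)
        for m in range(n):             # I(t_{m+1}) = e^{-lam dt} I(t_m) + one trapezoid
            duhamel[m + 1] = decay * duhamel[m] + 0.5 * dt * (decay * g[m] + g[m + 1])
        u = np.exp(-lam * t) * u0 + duhamel
    return t, u

def exact(t, u0, lam):
    """Closed-form solution of the Bernoulli equation u' = -lam*u - u**2."""
    e = np.exp(-lam * t)
    return lam * u0 * e / (lam + u0 * (1.0 - e))

t, u = picard_mild(u0=1.0, lam=2.0, T=0.5, n=2000, iters=30)
print(np.max(np.abs(u - exact(t, 1.0, 2.0))))
```

The remaining error is dominated by the quadrature, not by the iteration: for a Volterra-type fixed point the Picard error decays factorially in the number of iterations.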


Step 4: Limiting and compactness argument. The main objective of this step is to show that the family of solutions \(\{u^{\nu }_{r} \}_{\nu >0}\) of the hyper-regularised stochastic Burgers’ equation (4.3) is compact in a suitable sense, so that we can extract a subsequence converging strongly to a solution of the truncated stochastic Burgers’ equation (4.2). The argument relies on proving that the probability laws of this family are tight in some metric space. Once this is proven, one only needs to invoke standard arguments for stochastic partial differential equations based on Prokhorov’s theorem and the Skorokhod representation theorem. A more thorough treatment can be found in [8, 24]. In the next proposition, we present the main argument showing that the sequence of laws is indeed tight.

Proposition 4.5

Assume that for some \(\alpha >0,\) \(N \in \mathbb {N},\) there exist constants \(C_1(T)\) and \(C_2(T)\) such that

uniformly in \(\nu \). Then the family of laws of \(\{u_{r}^{\nu }\}_{\nu >0}\) is tight on the Polish space E given by

with \(\beta >\frac{1}{2}\) and \(s>3/2\).

Proof of Proposition 4.5

It is enough to imitate the techniques in [2, 8]. \(\square \)

Step 5: Hypothesis estimates. We are left to show that hypotheses (4.6)–(4.7) hold. First, we show that condition (4.6) implies condition (4.7). Applying Minkowski’s and Jensen’s inequalities and carrying out some standard computations, we obtain

$$\begin{aligned} \mathbb {E} [ ||u^{\nu }_{r}(t)-u^{\nu }_{r}(s)||^2_{H^{-N}} ]&\lesssim (t-s) \int _s^t \mathbb {E} [\theta _{r}(||\partial _{x}u^{\nu }_{r}||_\infty )||u^{\nu }_{r}\partial _{x}u^{\nu }_{r}(\gamma )||^2_{H^{-N}}] {{\,\mathrm{d\!}\,}}\gamma \\&\quad + (t-s)\int _s^t \mathbb {E} [||\nu \partial ^{s'}_{xx} u^{\nu }_{r} (\gamma )||^2_{H^{-N}}] {{\,\mathrm{d\!}\,}}\gamma \\&\quad + (t-s) \int _s^t \mathbb {E} [|| \mathcal {Q}^{2}u^{\nu }_{r} (\gamma )||^2_{H^{-1}}] {{\,\mathrm{d\!}\,}}\gamma \\&\quad + \mathbb {E} \left[ \left| \left| \int _s^t \mathcal {Q} u^{\nu }_{r} (\gamma ) {{\,\mathrm{d\!}\,}}W_{\gamma } \right| \right| ^2_{L^2} \right] . \end{aligned}$$

It is easy to infer that

since

and hypothesis (4.6). In the same way, one can check that for \(N=3s+2\),

since

Similarly,

since \(\left| \left| \mathcal {Q}^{2}u^{\nu }_{r} \right| \right| ^{2}_{H^{-1}} \lesssim ||u^{\nu }_{r}||^{2}_{H^{s}}\). We also have that the stochastic term can be controlled by

Combining estimates (4.8)–(4.11), we deduce that

Hence for \(0<\alpha < 1/2,\)
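To see why the range \(0<\alpha <1/2\) appears, suppose (this is our reading, as the displays (4.6)–(4.7) are not reproduced here) that the time-regularity hypothesis (4.7) is a bound on the Sobolev–Slobodeckij seminorm \(\mathbb {E}\int _0^T\int _0^T ||u(t)-u(\sigma )||^2_{H^{-N}}|t-\sigma |^{-1-2\alpha }{{\,\mathrm{d\!}\,}}\sigma {{\,\mathrm{d\!}\,}}t\), as is common in such tightness arguments, and that the combined increment estimate has the form \(\mathbb {E}||u(t)-u(\sigma )||^2_{H^{-N}} \le C|t-\sigma |\). Substituting the increment bound reduces the seminorm to an elementary integral:

$$\begin{aligned} \int _0^T\!\!\int _0^T \frac{C\,|t-\sigma |}{|t-\sigma |^{1+2\alpha }}\,{{\,\mathrm{d\!}\,}}\sigma \,{{\,\mathrm{d\!}\,}}t&= 2C\int _0^T\!\!\int _0^t (t-\sigma )^{-2\alpha }\,{{\,\mathrm{d\!}\,}}\sigma \,{{\,\mathrm{d\!}\,}}t = \frac{2C}{1-2\alpha }\int _0^T t^{1-2\alpha }\,{{\,\mathrm{d\!}\,}}t \\&= \frac{2C\,T^{2-2\alpha }}{(1-2\alpha )(2-2\alpha )} < \infty , \qquad 0<\alpha <\tfrac{1}{2}. \end{aligned}$$

For \(\alpha \ge 1/2\) the inner integral diverges, so an increment bound that is only linear in \(|t-\sigma |\) cannot give more.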

We are left to prove that hypothesis (4.6) holds true, i.e., there exists a constant C such that

Indeed, the evolution of the \(L^{2}\) norm of \(\Lambda ^{s}u\) is given by

The nonlinear term is estimated via the Kato–Ponce commutator estimate (2.4),

Integrating by parts in the dissipative term, we see that it has a favourable sign, so we can drop it:

The last two terms can be bounded using the general estimates (2.6) recently derived in [2].

To conclude this proof, we only need to bound the local martingale terms. This is done by estimating their quadratic variation and using the Burkholder–Davis–Gundy inequality (2.7). Indeed, let us denote

We denote \(u_{r}^{\nu }\) by u to simplify the notation in the following estimates, keeping in mind that it depends on \(\nu \) and r. Therefore we get that

Applying Grönwall’s inequality and taking expectation,

Invoking the Burkholder–Davis–Gundy inequality (2.7), the term \(|M_s|^{2}\) can be bounded as

where \([M]_t\) is the quadratic variation of \(M_t,\) given by

One can check that

as in the proof of Theorem 2.2 in [2]. Therefore, the right-hand side above can be bounded as

Hence, combining (4.14)–(4.17) with Grönwall’s inequality yields

Finally, the bound (4.12) follows from a simple application of Jensen’s inequality, concluding the proof.
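The role of the Burkholder–Davis–Gundy inequality in these estimates is to bound the supremum of a martingale by its quadratic variation. For \(p = 2\) this reduces to Doob’s maximal inequality, \(\mathbb {E}[\sup _{t\le T}|M_t|^2] \le 4\,\mathbb {E}[[M]_T]\). The Monte Carlo sketch below checks this for a scalar Itô integral \(M_t = \int _0^t h(\sigma )\,{{\,\mathrm{d\!}\,}}W_\sigma \) with a hypothetical deterministic integrand h, for which \([M]_T = \int _0^T h^2\,{{\,\mathrm{d\!}\,}}\sigma \); it illustrates the inequality itself, not the specific martingales \(M_t\) above.

```python
import numpy as np

rng = np.random.default_rng(3)
n_paths, n_steps, T = 2000, 500, 1.0
dt = T / n_steps
grid = np.arange(n_steps) * dt                   # left endpoints of the time steps
h = 1.0 + 0.5 * np.cos(2 * np.pi * grid)         # hypothetical deterministic integrand

dW = rng.normal(0.0, np.sqrt(dt), size=(n_paths, n_steps))
M = np.cumsum(h * dW, axis=1)      # Ito sums for M_t = int_0^t h dW at the grid times
sup_sq = np.max(M**2, axis=1)      # sup over the grid of |M_t|^2, path by path

quad_var = np.sum(h**2) * dt       # [M]_T = int_0^T h^2, deterministic here
lhs = sup_sq.mean()                # Monte Carlo estimate of E[sup_t |M_t|^2]
print(lhs, quad_var, 4 * quad_var)
```

The lower bound \(\mathbb {E}[\sup _t|M_t|^2] \ge \mathbb {E}|M_T|^2 = \mathbb {E}[[M]_T]\) is trivial, so the inequality pins the supremum down up to a factor of 4.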

4.2 Blow-up criterion

We are now interested in deriving a blow-up criterion for the stochastic Burgers’ equation (1.1) with \(\nu =0\). However, we keep working with the generalised version (4.1), since the techniques needed are essentially the same. First, we note that for the deterministic Burgers’ equation

there exists a well-known blow-up criterion. For this one-dimensional PDE, local existence and uniqueness of strong solutions is guaranteed for initial data in \(H^s(\mathbb {T},\mathbb {R}),\) for \(s>3/2.\) This follows from a priori estimates combined with a Picard iteration argument. Assume that u is a local solution to (4.18) in \(H^s,\) and let \(T^*>0.\) The deterministic blow-up criterion states that if

then the local solution u can be extended to \([0,T^{*}]\), and conversely. We show an analogous result for the stochastic case, which reads as follows.
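For orientation, the deterministic mechanism can be made explicit. Assuming (4.18) is the inviscid equation \(\partial _t u + u\,\partial _x u = 0\) and that the criterion concerns \(\int _0^{T^*}||\partial _x u(t)||_{L^\infty }{{\,\mathrm{d\!}\,}}t\) (the usual form of this criterion; the display is not reproduced here), differentiating along characteristics gives \(\dot{z} = -z^2\) for \(z = \partial _x u\), so \(z(t) = z_0/(1+z_0 t)\) and the solution blows up at \(T^* = -1/\min u_0'\). The sketch below takes the hypothetical datum \(u_0 = \sin x\), for which \(T^* = 1\) and \(||\partial _x u(t)||_{L^\infty } = 1/(T^*-t)\) for all \(t<T^*\), so the time integral grows like \(\log (T^*/(T^*-t))\) and diverges.

```python
import numpy as np

# u0(x) = sin(x) on [0, 2*pi]; along the characteristic from x0, z = u_x solves
# dz/dt = -z**2, so z(t) = z0 / (1 + z0 t) with z0 = u0'(x0) = cos(x0).
x0 = np.linspace(0.0, 2 * np.pi, 1001)   # contains x0 = pi, where cos(x0) = -1
z0 = np.cos(x0)
T_star = -1.0 / z0.min()                  # first time at which 1 + z0 t vanishes

def sup_norm_ux(t):
    """||u_x(t)||_inf computed along characteristics (valid for t < T_star)."""
    return np.max(np.abs(z0 / (1.0 + z0 * t)))

# The integral in the criterion grows like log(T_star / (T_star - t)).
t_end = 0.99 * T_star
ts = np.linspace(0.0, t_end, 2001)
vals = np.array([sup_norm_ux(t) for t in ts])
integral = np.sum(0.5 * (vals[1:] + vals[:-1]) * np.diff(ts))   # trapezoidal rule
print(T_star, integral, np.log(T_star / (T_star - t_end)))
```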

Theorem 4.6

(Blow-up criterion for the stochastic Burgers’ equation) Let us define the stopping times \(\tau ^2\) and \(\tau ^{\infty }\) by

Then \(\tau ^2 = \tau ^{\infty }\), \(\mathbb {P}\)-almost surely.

Remark 4.7

The norm in the definition of \(\tau _n^2\) in Theorem 4.6 could be replaced with \(||u(t, \cdot )||_{H^s},\) for any \(s> 3/2,\) but we choose \(s=2\) for the sake of simplicity.

Proof of Theorem 4.6

We show both \(\tau ^{2}\le \tau ^{\infty }\) and \(\tau ^{\infty }\le \tau ^{2}\) in two steps.

Step 1: \(\tau ^{2}\le \tau ^{\infty }\). This is straightforward to establish, since it follows from the well-known Sobolev embedding inequality (2.3) that

Step 2: \(\tau ^{\infty }\le \tau ^{2}\). Consider the hyper-regularised truncated Burgers’ equation introduced in (4.3), which is given by

For the sake of simplifying notation, we omit the subscripts \(\nu \) and r throughout the proof. We proceed now to compute the evolution of the \(H^{2}\) norm of u. First, we obtain

Integrating the dissipative term by parts, applying Hölder’s inequality in the nonlinear term, and using the cancellation property (2.5), we derive the inequality

The \(L^2\) norm of \(\partial _{xx} u\) evolves as follows:

Again, applying standard estimates for the nonlinear term, dropping the dissipative term, and invoking inequality (2.6), one obtains

Hence, combining inequalities (4.21) and (4.22), we get

To finish the argument, we have to treat the stochastic term. To do so, we apply Itô’s formula to the logarithm, in a similar fashion as in [8]. Without loss of generality, we assume \(||u||_{H^2} \ne 0\) and obtain

where \(N_t\) is the local martingale

By making use of (4.23), we can estimate the derivative of the logarithm as

where \(M_t\) is a local martingale defined as

Integrating in time, we derive

We need good control of the local martingale term in (4.25), which can be obtained by invoking the Burkholder–Davis–Gundy inequality (2.7). Hence, it suffices to estimate the quadratic variation of the stochastic process

Here, we have used estimate (4.16) to bound the numerator of the integrand above. Finally, applying the Burkholder–Davis–Gundy inequality (2.7), we obtain

Taking expectations in (4.25) and using estimate (4.26), we establish

for any \(n, m \in \mathbb {N}\). Therefore

which in particular means that for \(n,m \in \mathbb {N},\) \(\displaystyle \sup _{s\in [0,\tau ^{\infty }_{n}\wedge m ]} ||u(s)||_{H^2}\) is finite \(\mathbb {P}\)-almost surely. To conclude the proof, one just needs to notice that if

for every \(n,m\in \mathbb {N}\), then \(\tau ^{\infty }\le \tau ^{2}\) (cf. [8]). Recall that we have omitted the subscripts \(\nu \) and r; Fatou’s lemma allows us to pass to the limit in the estimates for \(u^{\nu }_{r}\) as \(\nu \rightarrow 0\) and \(r\rightarrow \infty \), recovering the result in the limit. \(\square \)
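The logarithmic step in Step 2 relies on the Itô correction in \({{\,\mathrm{d\!}\,}}\log X = {{\,\mathrm{d\!}\,}}X/X - {{\,\mathrm{d\!}\,}}[X]/(2X^2)\). A scalar illustration, unrelated to the specific process \(||u||_{H^2}\) and with hypothetical parameters, is geometric Brownian motion \({{\,\mathrm{d\!}\,}}X = \mu X\,{{\,\mathrm{d\!}\,}}t + \sigma X\,{{\,\mathrm{d\!}\,}}W_t\), for which Itô’s formula gives \({{\,\mathrm{d\!}\,}}\log X = (\mu - \sigma ^2/2)\,{{\,\mathrm{d\!}\,}}t + \sigma \,{{\,\mathrm{d\!}\,}}W_t\). The Monte Carlo sketch shows that \(\mathbb {E}[\log X_T]\) matches \((\mu - \sigma ^2/2)T\) rather than \(\mu T\).

```python
import numpy as np

rng = np.random.default_rng(4)
mu, sigma, T, n_steps, n_paths = 0.3, 0.8, 1.0, 500, 2000
dt = T / n_steps

X = np.ones(n_paths)                           # X_0 = 1 on every path
for _ in range(n_steps):
    dW = rng.normal(0.0, np.sqrt(dt), size=n_paths)
    X = X + mu * X * dt + sigma * X * dW       # Euler-Maruyama for dX = mu X dt + sigma X dW

log_mean = np.mean(np.log(X))
# Ito: d log X = dX/X - d[X]/(2 X^2) = (mu - sigma^2/2) dt + sigma dW
print(log_mean, (mu - 0.5 * sigma**2) * T, mu * T)
```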

4.3 Global well-posedness of a viscous stochastic Burgers’ equation

The viscous stochastic Burgers’ equation is given by

in the Stratonovich sense, supplemented with the initial condition \(u(0,x)=u_{0}(x)\). In the Itô sense, this can be rewritten as

The main result of this section establishes the global regularity of solutions of (4.29).

Theorem 4.8

Let \(u_{0} \in H^{2} (\mathbb {T},\mathbb {R}).\) Then there exists a unique global strong solution \(u:[0,\infty ) \times \mathbb {T} \times \Xi \rightarrow \mathbb {R}\) of the viscous stochastic Burgers’ equation (4.29) with \(\nu > 0\) in \(H^{2} (\mathbb {T},\mathbb {R})\).

To this end, we prove the following a priori estimate:

Proposition 4.9

Let \(u_{0}\in H^{2}(\mathbb {T},\mathbb {R}),\) \(T>0,\) and let u(t, x) be a sufficiently smooth solution to Eq. (4.29) defined for \(t \in [0,T]\). Then there exists a constant C(T), depending only on \(||u_{0}||_{H^2}\) and T, such that

Once the a priori estimate (4.30) is proven, we can repeat the arguments in Sect. 4.1 to obtain Theorem 4.8. Since this is repetitive, we do not carry out these arguments explicitly here and only provide a proof of Proposition 4.9.

Proof

Let us start by computing the evolution of the \(L^{2}\) norm of the solution u. First note that

Taking this into account and applying the same techniques as in Sect. 4.1 (using estimate (2.5)), we obtain

and therefore, by following techniques in Sect. 4.1, we get

The evolution of \(||\partial _{x}u||_{L^{2}}\) can be estimated as

Integrating by parts in the first term on the right-hand side and using Hölder’s and Young’s inequalities, we obtain

The second term on the right-hand side can be rewritten as

Finally, the sum in the last line can be estimated (thanks to inequality (2.6) for \(\mathcal {P}=\partial _{x}\)) as

Notice that, to bound the local martingale terms rigorously, we should introduce a sequence of stopping times, so that these terms vanish upon taking expectations. To simplify the exposition, we do not repeat this argument here. Putting together (4.31)–(4.33), one derives

Therefore, mimicking the arguments in Sect. 4.1, one obtains

and

Now we claim that the following maximum principle holds true

for some positive constant C(T), which we prove in Lemma 4.10. Using (4.36) in (4.34) and (4.35), together with Grönwall’s lemma, we infer that the quantities

are bounded by constants depending only on T and \(u_0\). Finally, when carrying out the estimates for the \(H^2\) norm of u, one can apply once again similar arguments, realising that thanks to (4.36) the quantities

can be bounded by constants depending only on T and \(u_0.\) This concludes the proof. \(\square \)
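The mechanism behind the maximum principle (4.36) is easiest to see in the deterministic case without noise. The sketch below, with a hypothetical grid and initial datum, discretises \(\partial _t u + u\,\partial _x u = \nu \,\partial _{xx}u\) on \([0,2\pi )\) by an explicit central scheme. When \(\nu \,\Delta t/\Delta x^2 \le 1/2\) and \(|u|\,\Delta x/2 \le \nu \), each update is a convex combination of neighbouring values, so \(||u||_{L^\infty }\) cannot increase; this is a discrete analogue of (4.36), not the proof of Lemma 4.10.

```python
import numpy as np

N, nu, T = 200, 0.1, 1.0
dx = 2 * np.pi / N
x = dx * np.arange(N)
dt = 0.4 * dx**2 / nu                       # lam = nu*dt/dx^2 = 0.4 <= 1/2
u = np.sin(x) + 0.5 * np.cos(3 * x)         # hypothetical smooth initial datum
assert nu / dx >= 0.5 * np.max(np.abs(u))   # cell-Peclet condition: nonnegative weights

lam, sup_norms = nu * dt / dx**2, [np.max(np.abs(u))]
for _ in range(int(T / dt)):
    up, um = np.roll(u, -1), np.roll(u, 1)  # u_{i+1}, u_{i-1} with periodic wrap-around
    c = dt * u / (2 * dx)
    # u_i^{n+1} = (lam - c) u_{i+1} + (1 - 2 lam) u_i + (lam + c) u_{i-1}:
    # a convex combination when lam <= 1/2 and |c| <= lam, so max|u| cannot grow.
    u = (lam - c) * up + (1 - 2 * lam) * u + (lam + c) * um
    sup_norms.append(np.max(np.abs(u)))

print(sup_norms[0], sup_norms[-1])
```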

Lemma 4.10

The maximum principle (4.36) is satisfied.

Proof

Let Q be of the form \(Qu = a(x) \partial _xu + b(x) u,\) where a, b are assumed to be smooth and bounded. We perform the following change of variables: \(v(t,x) = e^{b(x)W_t} u(t,x)\), and by Itô’s formula in Stratonovich form we obtain

Next, following an argument similar to that used in [4], consider the SDE

and let \(\psi _t(X_0)\) be the corresponding flow of (4.37), which is a diffeomorphism provided a(x) is smooth and satisfies conditions (3.5) and (3.6) (see [37]). By the Itô–Wentzell formula (2.9), we evaluate v along \(X_t := \psi _t(X_0)\), getting

so the stochastic term cancels almost surely. Consider the change of variables \(w(t,X_0) := v(t, \psi _t(X_0))\); by the chain rule, we obtain

Hence, (4.38) is equivalent to a PDE with random coefficients

where

We claim that w satisfies the maximum principle \(\Vert w_t\Vert _\infty \le \Vert w_0\Vert _\infty \). Indeed, we perform the change of variables \(f(t,X_0) = e^{-\alpha t}w(t,X_0)\) for any \(\alpha > 0\). We obtain

Now, assume by contradiction that f attains a maximum at \((t^*, X_0^*) \in [0,T] \times {\mathbb {T}}\) with \(t^* > 0\). Then \(\partial _t f(t^*, X_0^*) \ge 0\), \(\partial _{X_0} f(t^*, X_0^*) = 0,\) and \(\partial _{X_0}^2 f(t^*, X_0^*) \le 0\). However, since \(f(t,\cdot ) > 0\) for all \(t>0\), we have \(-\alpha f(t,\cdot ) < 0\). Since also \(\tilde{\nu } > 0\), the left-hand side of (4.40) is nonnegative while the right-hand side is strictly negative, which is a contradiction. Letting \(\alpha \rightarrow 0\), the claim follows.

Since \(\psi _t\) is a diffeomorphism, we have \(||v(t,\cdot )||_\infty = ||w(t,\cdot )||_\infty \) so the maximum principle also follows for v. Hence, we have shown

for all \(t>0\). Now, let \(c>0\) be a constant such that \(|b(x)| \le c\) for all \(x \in {\mathbb {T}}\), which exists since b is bounded. Then we have

where \(C(T) = \sup _{0\le t \le T} e^{c|W_t|} < \infty \) almost surely, by the continuity of Brownian paths. With this, we conclude the proof. \(\square \)
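The proof uses the stochastic flow \(\psi _t\) of a Stratonovich SDE. As an illustration, assume that (4.37) has the form \({{\,\mathrm{d\!}\,}}X_t = a(X_t)\circ {{\,\mathrm{d\!}\,}}W_t\) and take the hypothetical linear coefficient \(a(x) = \sigma x\); it is unbounded, so it lies outside the lemma’s assumptions, but it gives the explicit flow \(\psi _t(X_0) = X_0 e^{\sigma W_t}\). The sketch uses the Heun (stochastic trapezoidal) scheme, which is consistent with the Stratonovich interpretation, and checks that the computed flow matches the explicit one and stays strictly increasing in \(X_0\), as a one-dimensional diffeomorphism must.

```python
import numpy as np

rng = np.random.default_rng(5)
sigma, T, n_steps = 0.5, 1.0, 10_000
dt = T / n_steps

def a(x):
    """Hypothetical linear noise coefficient a(x) = sigma * x."""
    return sigma * x

X0 = np.linspace(-2.0, 2.0, 41)            # initial points X_0
X, W = X0.copy(), 0.0
for _ in range(n_steps):
    dW = rng.normal(0.0, np.sqrt(dt))
    X_pred = X + a(X) * dW                 # Euler predictor
    X = X + 0.5 * (a(X) + a(X_pred)) * dW  # Heun corrector: Stratonovich-consistent
    W += dW

exact = X0 * np.exp(sigma * W)             # Stratonovich solution of dX = sigma X o dW
print(np.max(np.abs(X - exact)), np.all(np.diff(X) > 0))
```

An Euler–Maruyama step in place of the Heun corrector would instead converge to the Itô solution \(X_0 e^{\sigma W_t - \sigma ^2 t/2}\), which is why the scheme must match the Stratonovich interpretation used in the proof.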

5 Conclusion and outlook

In this paper, we studied the solution properties of a stochastic Burgers’ equation on the torus and the real line, with the noise appearing in the transport velocity. We have shown that this stochastic Burgers’ equation is locally well-posed in \(H^s(\mathbb {T},\mathbb {R}),\) for \(s>3/2,\) and we have established a blow-up criterion that extends the deterministic one to the stochastic case. We also proved that if the noise is of the form \(\xi (x)\partial _x u \circ dW_t\) with \(\xi (x) = \alpha x + \beta \), then shocks form almost surely from a negative slope. Moreover, for a more general type of noise, we showed that blow-up occurs in expectation, which follows from the stochastic blow-up criterion mentioned above. Also, in the weak formulation of the problem, we provided a Rankine–Hugoniot type condition that is satisfied by the shocks, analogous to the deterministic case. Finally, we also studied the stochastic Burgers’ equation with a viscous term, which we proved to be globally well-posed in \(H^2\).

Let us conclude by proposing some future research directions and open problems that have emerged during the course of this work:

Regarding shock formation, it is natural to ask whether our results can be extended to show that shock formation occurs almost surely for more general types of noise.

Another possible question is whether our global well-posedness result can be extended to the viscous Burgers’ equation with the Laplacian replaced by a fractional Laplacian \((-\Delta )^{\alpha },\) \(\alpha \in (0,1)\). The main difficulty here is that in the stochastic case, the proof of the maximum principle (Lemma 4.10) does not follow immediately, since the pointwise chain rule for the fractional Laplacian is not available. In the deterministic case, this question has been settled, and it is known that the solution exhibits very different behaviour depending on the value of \(\alpha \): for \(\alpha \in [1/2,1]\), the solution is global in time, and for \(\alpha \in (0,1/2)\), the solution can develop singularities in finite time [33, 35]. Interestingly, when an Itô noise of type \(\beta u {{\,\mathrm{d\!}\,}}W_t\) is added, it is shown in [39] that the probability of solutions blowing up for small initial conditions tends to zero when \(\beta > 0\) is sufficiently large. It would be interesting to investigate whether the transport noise considered in this paper can have a similar regularising effect on the equation.

Similar results could be derived for other one-dimensional equations with non-local transport velocity [5, 13, 14]. For instance, the so-called CCF model [5] is also known to develop singularities in finite time, although by a different mechanism from that of Burgers’ equation. To our knowledge, these types of equations have not yet been investigated with transport noise.

References

[1] Attanasio, S., Flandoli, F.: Zero-noise solutions of linear transport equations without uniqueness: an example. C. R. Math. 347(13–14), 753–756 (2009)

[2] Alonso-Orán, D., Bethencourt de León, A.: On the well posedness of a stochastic Boussinesq equation (2018). arXiv:1807.09493

[3] Bertini, L., Cancrini, N., Jona-Lasinio, G.: The stochastic Burgers equation. Commun. Math. Phys. 165, 211–231 (1994)

[4] Beck, L., Flandoli, F., Gubinelli, M., Maurelli, M.: Stochastic ODEs and stochastic linear PDEs with critical drift: regularity, duality and uniqueness (2014). arXiv:1401.1530

[5] Córdoba, A., Córdoba, D., Fontelos, M.: Formation of singularities for a transport equation with nonlocal velocity. Ann. Math. 162, 1377–1389 (2005)

[6] Cotter, C., Crisan, D., Holm, D.D., Pan, W., Shevchenko, I.: Modelling uncertainty using circulation-preserving stochastic transport noise in a 2-layer quasi-geostrophic model (2018). arXiv:1802.05711

[7] Cotter, C.J., Crisan, D., Holm, D.D., Pan, W., Shevchenko, I.: Numerically modelling stochastic Lie transport in fluid dynamics (2018). arXiv:1801.09729

[8] Crisan, D., Flandoli, F., Holm, D.D.: Solution properties of a 3D stochastic Euler fluid equation (2017). arXiv:1704.06989

[9] Cotter, C.J., Gottwald, G.A., Holm, D.D.: Stochastic partial differential fluid equations as a diffusive limit of deterministic Lagrangian multi-time dynamics. Proc. R. Soc. A 473(2205), 20170388 (2017)

[10] Crisan, D., Holm, D.D.: Wave breaking for the stochastic Camassa–Holm equation. Physica D 376, 138–143 (2018)

[11] Catuogno, P., Olivera, C.: Strong solution of the stochastic Burgers equation. Appl. Anal. Int. J. 93(3), 646–652 (2013). https://doi.org/10.1080/00036811.2013.797074

[12] Delarue, F., Flandoli, F., Vincenzi, D.: Noise prevents collapse of Vlasov–Poisson point charges. Commun. Pure Appl. Math. 67(10), 1700–1736 (2014)

[13] De Gregorio, S.: On a one-dimensional model for the three-dimensional vorticity equation. J. Stat. Phys. 59, 1251–1263 (1990)

[14] De Gregorio, S.: A partial differential equation arising in a 1D model for the 3D vorticity equation. Math. Methods Appl. Sci. 19, 1233–1255 (1996)

[15] Da Prato, G., Debussche, A., Temam, R.: Stochastic Burgers’ equation. Nonlinear Differ. Equ. Appl. 1, 389–402 (1994)

[16] Da Prato, G., Gatarek, D.: Stochastic Burgers equation with correlated noise. Stoch. Stoch. Rep. 52(1–2), 29–41 (2007)

[17] Fedrizzi, E., Flandoli, F.: Noise prevents singularities in linear transport equations. J. Funct. Anal. 264, 1329–1354 (2013)

[18] Friz, P.K., Gess, B.: Stochastic scalar conservation laws driven by rough paths. In: Annales de l’Institut Henri Poincaré (C) Non Linear Analysis, vol. 33, pp. 933–963. Elsevier (2016)

[19] Funaki, T., Gao, Y., Hilhorst, D.: Uniqueness of the entropy solution of a stochastic conservation law with a Q-Brownian motion. hal-02159743 (2019)

[20] Flandoli, F., Gubinelli, M., Priola, E.: Well-posedness of the transport equation by stochastic perturbation. Invent. Math. 180, 1–53 (2010)

[21] Flandoli, F., Gubinelli, M., Priola, E.: Full well-posedness of point vortex dynamics corresponding to stochastic 2D Euler equations. Stoch. Process. Appl. 121, 1445–1463 (2011)

[22] Flandoli, F., Luo, D.: Euler–Lagrangian approach to 3D stochastic Euler equations (2018). arXiv:1803.05319

[23] Flandoli, F.: Random Perturbation of PDEs and Fluid Dynamic Models: École d’été de Probabilités de Saint-Flour XL-2010. Lecture Notes in Mathematics, vol. 2015. Springer, Berlin (2011)

[24] Glatt-Holtz, N., Vicol, V.: Local and global existence of smooth solutions for the stochastic Euler equation with multiplicative noise. Ann. Probab. 42, 80–145 (2014). https://doi.org/10.1214/12-AOP773

[25] Gess, B., Maurelli, M.: Well-posedness by noise for scalar conservation laws (2017). arXiv:1701.05393

[26] Goldstein, J.A.: Semigroups of Linear Operators and Applications. Courier Dover Publications, New York (1985)

[27] Gess, B., Souganidis, P.E.: Stochastic non-isotropic degenerate parabolic–hyperbolic equations. Stoch. Process. Appl. 127(9), 2961–3004 (2017)

[28] Harrison, J.: Stokes’ theorem for nonsmooth chains. Bull. Am. Math. Soc. 29(2), 235–242 (1993)

[29] Harrison, J.: Flux across nonsmooth boundaries and fractal Gauss/Green/Stokes’ theorems. J. Phys. A 32, 5317 (1999)

[30] Harrison, J., Norton, A.: The Gauss–Green theorem for fractal boundaries. Duke Math. J. 67(3), 575–588 (1992)

[31] Hocquet, A., Nilssen, T., Stannat, W.: Generalized Burgers equation with rough transport noise (2018). arXiv:1804.01335

[32] Holm, D.D.: Variational principles for stochastic fluid dynamics. Proc. R. Soc. Lond. A Math. Phys. Eng. Sci. 471(2176) (2015)

[33] Kiselev, A.: Regularity and blow up for active scalars. Math. Model. Nat. Phenom. 5(4), 225–255 (2010)

[34] Kato, T., Ponce, G.: Commutator estimates and the Euler and Navier–Stokes equations. Commun. Pure Appl. Math. 41(7), 891–907 (1988)

[35] Kiselev, A., Nazarov, F., Shterenberg, R.: Blow up and regularity for fractal Burgers equation. Dyn. PDE 5(3), 211–240 (2008)

[36] Kunita, H.: Some extensions of Itô’s formula. Séminaire de probabilités (1981)

[37] Kunita, H.: Stochastic Flows and Stochastic Differential Equations. Cambridge Studies in Advanced Mathematics, vol. 24. Cambridge University Press, Cambridge (1997)

[38] Lyons, T.J., Yam, P.S.C.: On Gauss–Green theorem and boundaries of a class of Hölder domains. J. Math. Pures Appl. 85(1), 38–53 (2006)

[39] Röckner, M., Zhu, R., Zhu, X.: Local existence and non-explosion of solutions for stochastic fractional partial differential equations driven by multiplicative noise. Stoch. Process. Appl. 124(5), 1974–2002 (2014)

[40] Shapiro, V.L.: The divergence theorem without differentiability conditions. Proc. Natl. Acad. Sci. USA (1957)

[41] Veretennikov, A.J.: On strong solutions and explicit formulas for solutions of stochastic integral equations. Sb. Math. 39(3), 387–403 (1981)

Acknowledgements

The authors would like to thank José Antonio Carrillo de la Plata, Dan Crisan, Theodore Drivas, Franco Flandoli, Darryl Holm, James-Michael Leahy, Erwin Luesink, and Wei Pan for encouraging comments and discussions that helped put together this work. DAO has been partially supported by the Grant MTM2017-83496-P from the Spanish Ministry of Economy and Competitiveness, and through the Severo Ochoa Programme for Centres of Excellence in R&D (SEV-2015-0554). ABdL has been supported by the Mathematics of Planet Earth Centre of Doctoral Training (MPE CDT). ST acknowledges the Schrödinger scholarship scheme for funding during this work.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Alonso-Orán, D., Bethencourt de León, A. & Takao, S. The Burgers’ equation with stochastic transport: shock formation, local and global existence of smooth solutions. Nonlinear Differ. Equ. Appl. 26, 57 (2019). https://doi.org/10.1007/s00030-019-0602-6


DOI: https://doi.org/10.1007/s00030-019-0602-6