Abstract

Haglund et al. (Trans Am Math Soc 370(6):4029–4057, 2018) introduced their Delta conjectures, which give two different combinatorial interpretations of the symmetric function \(\Delta '_{e_{n-k-1}} e_n\) in terms of rise-decorated or valley-decorated labelled Dyck paths. While the rise version has been recently proved (D’Adderio and Mellit in Adv Math 402:108342, 2022; Blasiak et al. in A Proof of the Extended Delta Conjecture, arXiv:2102.08815, 2021), not much is known about the valley version. In this work, we prove the Schröder case of the valley Delta conjecture, the Schröder case of its square version (Iraci and Wyngaerd in Ann Combin 25(1):195–227, 2021), and the Catalan case of its extended version (Qiu and Wilson in J Combin Theory Ser A 175:105271, 2020). Furthermore, assuming the symmetry of (a refinement of) the combinatorial side of the extended valley Delta conjecture, we deduce also the Catalan case of its square version (Iraci and Wyngaerd 2021).

1 Introduction

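In [16], the authors introduced their Delta conjectures, which give two different combinatorial interpretations of the symmetric function \(\Delta '_{e_{n-k-1}} e_n\) in terms of rise-decorated or valley-decorated labelled Dyck paths. More precisely,

\[ \Delta '_{e_{n-k-1}} e_n = \sum _{\pi \in \textsf{LD}(n)^{*k}} q^{\textsf{dinv}(\pi )} t^{\textsf{area}(\pi )} x^\pi = \sum _{\pi \in \textsf{LD}(n)^{\bullet k}} q^{\textsf{dinv}(\pi )} t^{\textsf{area}(\pi )} x^\pi \tag{1} \]

(see Sects. 2 and 3 for the missing definitions).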

This symmetric function is of particular interest as it gives conjecturally the Frobenius characteristic of the so-called super diagonal coinvariants [25].

The rise version has been extensively studied [5, 6, 9, 12, 23] before being finally proved: the compositional refinement introduced in [8], which implies the original conjecture, has been proved in [10], while the extended version (already appearing in the original paper [16]) has been proved in [3]. On the other hand, the valley version has received significantly less attention, mainly because of technical difficulties. Before mentioning what is known about the valley Delta conjecture to this day, we want to stress the intrinsic interest of this version of the conjecture: indeed, in [17], a conjectural basis of the super diagonal coinvariants of [25] is provided that would explain the Hilbert series predicted by the valley Delta conjecture (but not by the rise Delta conjecture).

To this date, extended [22] and square versions [19] of the valley Delta have been formulated. In [18, 19], it is proved that the original valley Delta conjecture implies these other versions, a surprising fact that has no analogue for the rise Delta conjectures: indeed, the rise version of the square Delta conjecture [7] is still open.

On the valley Delta conjecture itself, almost nothing is known: for example, it is not even clear that the combinatorial side (the rightmost sum in (1)) is a symmetric function.

In this work, we make a first step in this direction, by proving the so-called Schröder case of the valley version of the Delta conjecture, i.e. the case obtained by taking the scalar product \(\langle -,e_{n-d}h_d\rangle \). The strategy, similar to the one used in [9], also allows us to prove the Schröder case of the valley Delta square conjecture in [19]. Using results from [18], we are also able to deduce the Catalan case (i.e. the scalar product \(\langle -,e_{n}\rangle \)) of the extended valley Delta conjecture. Finally, assuming the symmetry of (a refinement of) the combinatorial side of the extended valley Delta conjecture, we also deduce the Catalan case of its square version [19].

The paper is organised in the following way: in Sects. 2 and 3, we introduce the combinatorial objects and the symmetric function tools, respectively, needed in the rest of the paper. In Sect. 4, we prove our main results, providing in particular a recursion leading to the proof of the Schröder cases of the valley Delta and the valley Delta square conjectures. In Sect. 5, we prove how the Schröder case of the valley Delta conjecture implies the Catalan case of the extended valley Delta conjecture, and in Sect. 6, assuming the symmetry of (a refinement of) the combinatorial side of the extended valley Delta conjecture, we deduce also the Catalan case of its square version.

2 Combinatorial Definitions

We recall the relevant combinatorial definitions (see also [16, 19, 22]).

Definition 2.1

A square path of size n is a lattice path going from (0, 0) to (n, n) consisting of horizontal or vertical unit steps, always ending with a horizontal step. The set of such paths is denoted by \(\textsf{SQ}(n)\). The shift of a square path is the maximum value s such that the path intersects the line \(y=x-s\) in at least one point. We refer to the line \(y=x+i\) as the i-th diagonal of the path, to the line \(x=y\) (the 0-th diagonal) as the main diagonal, and to the line \(y=x-s\), where s is the shift of the square path, as the base diagonal. A vertical step whose starting point lies on the i-th diagonal is said to be at height i. A Dyck path is a square path whose shift is 0. The set of Dyck paths is denoted by \(\textsf{D}(n)\). Of course, \(\textsf{D}(n) \subseteq \textsf{SQ}(n)\).

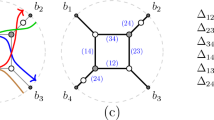

For example, the path (ignoring the circled numbers) in Fig. 1 has shift 3.
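
As a small sanity check of Definition 2.1, the following sketch enumerates square paths and Dyck paths of a given size and computes the shift. The encoding of a path as a string of `N` (vertical) and `E` (horizontal) steps, and the function names, are assumptions made for this illustration only; the example path is the one determined by the area word given for Fig. 1 in Definition 2.2.

```python
from itertools import combinations

def square_paths(n):
    """Yield all square paths of size n as strings over {'N', 'E'} ending with 'E'."""
    if n == 0:
        yield ""
        return
    # Choose the positions of the n vertical steps among the first 2n - 1 steps;
    # the last step is forced to be horizontal.
    for north in combinations(range(2 * n - 1), n):
        steps = ["E"] * (2 * n)
        for pos in north:
            steps[pos] = "N"
        yield "".join(steps)

def shift(path):
    """Largest s such that the path meets the line y = x - s."""
    x = y = 0
    best = 0  # the starting point (0, 0) lies on y = x
    for step in path:
        if step == "N":
            y += 1
        else:
            x += 1
        best = max(best, x - y)
    return best

def dyck_paths(n):
    """Square paths of size n whose shift is 0."""
    return [p for p in square_paths(n) if shift(p) == 0]

# Path of size 8 with area word (0, -3, -3, -2, -2, -1, 0, 0).
FIG1_PATH = "NEEEENENNENNNENE"
print(shift(FIG1_PATH), len(list(square_paths(3))), len(dyck_paths(3)))
```

Under this encoding, the number of square paths of size n is the binomial coefficient \(\left( {\begin{array}{c}2n-1\\ n\end{array}}\right) \), and the Dyck paths are counted by the Catalan numbers.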

Definition 2.2

Let \(\pi \) be a square path of size n. We define its area word to be the sequence of integers \(a(\pi ) = (a_1(\pi ), a_2(\pi ), \ldots , a_n(\pi ))\) such that the i-th vertical step of the path starts from the diagonal \(y=x+a_i(\pi )\). For example the path in Fig. 1 has area word \((0, \, -\!3, \, -\!3, \, -\!2, \, -\!2, \, -\!1, \, 0, \, 0)\).
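
The area word can be read off a path step by step. The sketch below assumes the same hypothetical `N`/`E` string encoding of a path (not part of the paper) and also recovers the shift from the area word, using the observation that, for a path ending with a horizontal step, the shift is either 0 or attained at the starting point of some vertical step.

```python
def area_word(path):
    """Return the list (a_1, ..., a_n) for a path given as a string over {'N', 'E'}."""
    x = y = 0
    word = []
    for step in path:
        if step == "N":
            # The step starts at (x, y), which lies on the diagonal y = x + (y - x).
            word.append(y - x)
            y += 1
        elif step == "E":
            x += 1
        else:
            raise ValueError(f"unexpected step {step!r}")
    return word

def shift_from_area_word(word):
    """Shift of a square path, recovered from its area word."""
    return max(0, -min(word)) if word else 0

# Path determined by the area word stated for Fig. 1.
FIG1_PATH = "NEEEENENNENNNENE"
print(area_word(FIG1_PATH))
print(shift_from_area_word(area_word(FIG1_PATH)))
```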

Definition 2.3

A partial labelling of a square path \(\pi \) of size n is an element \(w \in {\mathbb {N}}^n\) such that

- if \(a_i(\pi ) > a_{i-1}(\pi )\), then \(w_i > w_{i-1}\),

- \(a_1(\pi ) = 0 \implies w_1 > 0\),

- there exists an index i such that \(a_i(\pi ) = - \textsf{shift}(\pi )\) and \(w_i > 0\),

i.e. if we label the i-th vertical step of \(\pi \) with \(w_i\), then the labels appearing in each column of \(\pi \) are strictly increasing from bottom to top, with the additional restrictions that, if the path starts with a vertical step then the first label cannot be a 0, and that there is at least one positive label lying on the base diagonal.

We omit the word partial if the labelling consists of strictly positive labels only.
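
The three conditions of Definition 2.3 can be checked mechanically from the area word. The following is a minimal sketch; the function name, the representation of paths by their area words, and the sample labelling are hypothetical and only serve as illustration.

```python
def is_partial_labelling(area, w):
    """Check the conditions of Definition 2.3 for an area word and a label sequence."""
    n = len(area)
    if len(w) != n or any(label < 0 for label in w):
        return False
    if n == 0:
        # Convention of this sketch: the empty path carries the empty labelling.
        return True
    # Labels must strictly increase along consecutive vertical steps (columns).
    for i in range(1, n):
        if area[i] > area[i - 1] and not w[i] > w[i - 1]:
            return False
    # A path starting with a vertical step cannot start with a 0 label.
    if area[0] == 0 and w[0] == 0:
        return False
    # At least one positive label must lie on the base diagonal.
    s = max(0, -min(area))
    return any(a == -s and label > 0 for a, label in zip(area, w))

area = [0, -3, -3, -2, -2, -1, 0, 0]  # area word stated for Fig. 1
print(is_partial_labelling(area, [2, 0, 2, 4, 0, 1, 3, 4]))  # hypothetical labelling
```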

Definition 2.4

A (partially) labelled square path (resp. Dyck path) is a pair \((\pi , w)\) where \(\pi \) is a square path (resp. Dyck path) and w is a (partial) labelling of \(\pi \). We denote by \(\textsf{LSQ}(m,n)\) (resp. \(\textsf{LD}(m,n)\)) the set of labelled square paths (resp. Dyck paths) of size \(m+n\) with exactly n positive labels, and thus exactly m labels equal to 0. See Fig. 1 for an example.

The following definitions will be useful later on.

Definition 2.5

Let w be a labelling of a square path of size n. We define

\[ x^w :=\prod _{i=1}^{n} x_{w_i} \Big \vert _{x_0 = 1}. \]

The fact that we set \(x_0 = 1\) explains the use of the expression partially labelled, as the labels equal to 0 do not contribute to the monomial.

Sometimes, with an abuse of notation, we will write \(\pi \) as a shorthand for a labelled path \((\pi , w)\). In that case, we use the notation

\[ x^\pi :=x^w. \]

Now, we want to extend our sets introducing some decorations.

Definition 2.6

The contractible valleys of a labelled square path \(\pi \) of size n are the indices \(1 \le i \le n\) such that one of the following holds:

- \(i = 1\) and either \(a_1(\pi ) < -1\), or \(a_1(\pi ) = -1\) and \(w_1 > 0\),

- \(i > 1\) and \(a_i(\pi ) < a_{i-1}(\pi )\),

- \(i > 1\), \(a_i(\pi ) = a_{i-1}(\pi )\), and \(w_i > w_{i-1}\).

We define

\[ v(\pi , w) :=\{1 \le i \le n \mid i \text{ is a contractible valley}\}, \]

corresponding to the set of vertical steps that are directly preceded by a horizontal step and, if we were to remove that horizontal step and move it after the vertical step, we would still get a square path with a valid labelling. In particular, if the vertical step is in the first row and it is attached to a 0 label, then we require that it is preceded by at least two horizontal steps (as otherwise by removing it we get a path starting with a vertical step with a 0 label in the first row).
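
The conditions of Definition 2.6 translate directly into a test on the area word and the labels. The sketch below returns the contractible valleys as 1-based indices; the function name and the sample labelling are assumptions of this illustration.

```python
def contractible_valleys(area, w):
    """Return the set of contractible valleys (1-based indices) of a labelled path."""
    valleys = set()
    for i in range(len(area)):  # i is 0-based; the index used in the text is i + 1
        if i == 0:
            ok = area[0] < -1 or (area[0] == -1 and w[0] > 0)
        else:
            ok = area[i] < area[i - 1] or (
                area[i] == area[i - 1] and w[i] > w[i - 1]
            )
        if ok:
            valleys.add(i + 1)
    return valleys

area = [0, -3, -3, -2, -2, -1, 0, 0]  # area word stated for Fig. 1
labels = [2, 0, 2, 4, 0, 1, 3, 4]      # hypothetical labelling
print(sorted(contractible_valleys(area, labels)))
```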

Definition 2.7

The rises of a (labelled) square path \(\pi \) of size n are the indices in

\[ r(\pi ) :=\{2 \le i \le n \mid a_i(\pi ) > a_{i-1}(\pi )\}, \]

i.e. the vertical steps that are directly preceded by another vertical step.

Definition 2.8

A valley-decorated (partially) labelled square path is a triple \((\pi , w, dv)\) where \((\pi , w)\) is a (partially) labelled square path and \(dv \subseteq v(\pi , w)\). A rise-decorated (partially) labelled square path is a triple \((\pi , w, dr)\) where \((\pi , w)\) is a (partially) labelled square path and \(dr \subseteq r(\pi )\).

Again, we will often write \(\pi \) as a shorthand for the corresponding triple \((\pi , w, dv)\) or \((\pi , w, dr)\).

We denote by \(\textsf{LSQ}(m,n)^{\bullet k}\) (resp. \(\textsf{LSQ}(m,n)^{*k}\)) the set of partially labelled valley-decorated (resp. rise-decorated) square paths of size \(m+n\) with n positive labels and k decorated contractible valleys (resp. decorated rises). We denote by \(\textsf{LD}(m,n)^{\bullet k}\) (resp. \(\textsf{LD}(m,n)^{*k}\)) the corresponding subset of Dyck paths.

We also define \(\textsf{LSQ}'(m,n)^{\bullet k}\) as the set of paths in \(\textsf{LSQ}(m,n)^{\bullet k}\) such that there exists an index i with \(a_i(\pi ) = - \textsf{shift}(\pi )\), \(i \not \in dv\), and \(w_i > 0\), i.e. there is at least one positive label lying on the base diagonal that is not a decorated valley. See Fig. 2 for examples.

Notice that, because of the restrictions we have on the labelling and the decorations, the only path with \(n=0\) is the empty path, for which \(m=0\) and \(k=0\).

We also recall the two relevant statistics on these sets (see [19]) that reduce to the ones defined in [20] when \(m=0\) and \(k=0\).

Definition 2.9

Let \((\pi , w, dr) \in \textsf{LSQ}(m,n)^{*k}\) and s be its shift. We define

\[ \textsf{area}(\pi , w, dr) :=\sum _{i \not \in dr} (a_i(\pi ) + s), \]

i.e., the number of whole squares between the path and the base diagonal that are not in rows containing a decorated rise.

For \((\pi , w, dv) \in \textsf{LSQ}(m,n)^{\bullet k}\), we set \(\textsf{area}(\pi , w, dv) :=\textsf{area}(\pi , w, \varnothing )\), where \((\pi , w, \varnothing ) \in \textsf{LSQ}(m,n)^{*0}\).

For example, the paths in Fig. 2 have area 13 (left) and 10 (right). Notice that the area does not depend on the labelling.
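
The verbal description of the area can be turned into a short computation: row i contains \(a_i(\pi ) + s\) whole squares between the path and the base diagonal, and rows containing a decorated rise are skipped. The sketch below implements this reading of Definition 2.9; the function name and the choice of example are assumptions.

```python
def area(area_word, decorated_rises=()):
    """Sum of a_i + shift over the rows that do not contain a decorated rise."""
    s = max(0, -min(area_word)) if area_word else 0
    dr = set(decorated_rises)  # 1-based row indices
    return sum(a + s for i, a in enumerate(area_word, start=1) if i not in dr)

word = [0, -3, -3, -2, -2, -1, 0, 0]  # area word stated for Fig. 1
print(area(word))        # no decorated rises
print(area(word, {4}))   # row 4 is a rise, since a_4 > a_3
```

As noted above, the labelling does not enter the computation.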

Definition 2.10

Let \((\pi , w, dv) \in \textsf{LSQ}(m,n)^{\bullet k}\). For \(1 \le i < j \le n\), the pair (i, j) is a diagonal inversion if

- either \(a_i(\pi ) = a_j(\pi )\) and \(w_i < w_j\) (primary inversion),

- or \(a_i(\pi ) = a_j(\pi ) + 1\) and \(w_i > w_j\) (secondary inversion),

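where \(w_i\) denotes the i-th letter of w, i.e., the label of the vertical step in the i-th row. Then we define

\[ \textsf{dinv}(\pi , w, dv) :=\#\{1 \le i < j \le n \mid i \not \in dv \text{ and } (i,j) \text{ is a diagonal inversion}\} + \#\{1 \le i \le n \mid a_i(\pi ) < 0 \text{ and } w_i > 0\} - \# dv. \]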

For \((\pi , w, dr) \in \textsf{LSQ}(m,n)^{*k}\), we set \(\textsf{dinv}(\pi , w, dr) :=\textsf{dinv}(\pi , w, \varnothing )\), where \((\pi , w, \varnothing ) \in \textsf{LSQ}(m,n)^{\bullet 0}\).

We refer to the middle term, counting the non-zero labels below the main diagonal, as bonus or tertiary dinv.

For example, the path in Fig. 2 (left) has dinv equal to 4: 2 primary inversions in which the leftmost label is not a decorated valley, i.e., (1, 7) and (1, 8); 1 secondary inversion in which the leftmost label is not a decorated valley, i.e., (1, 6); 3 bonus dinv, coming from the rows 3, 4, and 6; 2 decorated contractible valleys. Note that (2, 3) is not counted because 2 is a decorated contractible valley.

It is easy to check that if \(j \in dv\), then either there exists some diagonal inversion (i, j) or \(a_j(\pi ) < 0\), and so the dinv is always non-negative (see [19, Proposition 1]).
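
The dinv can be computed directly from the area word, the labels and the set of decorated valleys. The sketch below assumes the following reading of Definition 2.10 and of the example above: count the diagonal inversions (i, j) with i not a decorated valley, add the number of positive labels strictly below the main diagonal, and subtract the number of decorated valleys. The function name and the small test paths are hypothetical.

```python
def dinv(area, w, dv=()):
    """dinv of a valley-decorated labelled path; dv holds 1-based indices."""
    dv = set(dv)
    n = len(area)
    inversions = 0
    for i in range(n):
        if i + 1 in dv:
            continue  # inversions whose left entry is a decorated valley are skipped
        for j in range(i + 1, n):
            primary = area[i] == area[j] and w[i] < w[j]
            secondary = area[i] == area[j] + 1 and w[i] > w[j]
            if primary or secondary:
                inversions += 1
    # Bonus (tertiary) dinv: positive labels strictly below the main diagonal.
    bonus = sum(1 for a, label in zip(area, w) if a < 0 and label > 0)
    return inversions + bonus - len(dv)

print(dinv([0, 0], [1, 2]))        # one primary inversion
print(dinv([0, 0], [1, 2], {2}))   # the decorated valley cancels it
print(dinv([-1, 0], [1, 2], {1}))  # bonus dinv cancels the decorated valley
```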

To lighten the notation, we will usually refer to a labelled path \((\pi , w, dr)\) or \((\pi , w, dv)\) simply by \(\pi \), so for example we will write \(\pi \in \textsf{LSQ}(m,n)^{*k}\) and \(\textsf{dinv}(\pi )\).

Let \(\pi \) be any labelled path defined above, with shift s. We define its reading word as the sequence of labels read starting from the ones attached to vertical steps in the base diagonal (\(y=x-s\)), read from bottom to top, left to right; next the ones in the diagonal \(y=x-s+1\), again from bottom to top, left to right; then the ones in the diagonal \(y=x-s+2\) and so on. For example, the path in Fig. 2 (left) has reading word 02401234.
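
Since the base diagonal is the lowest diagonal containing a vertical step, reading the labels diagonal by diagonal amounts to sorting the rows by the pair (area word entry, row index). A minimal sketch, with a hypothetical function name and hypothetical sample paths:

```python
def reading_word(area, w):
    """Labels read diagonal by diagonal from the base diagonal, bottom to top."""
    order = sorted(range(len(area)), key=lambda i: (area[i], i))
    return [w[i] for i in order]

print(reading_word([0, 1, 0], [1, 3, 2]))
print(reading_word([-1, 0, 0], [0, 2, 1]))
```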

Let us consider the paths in \(\textsf{LSQ}(m,n)^{\bullet k}\) where the reading word is a shuffle of m 0’s, the string \(1, 2,\ldots , n-d\), and the string \(n, n-1, \ldots , n-d+1\). Notice that, given this restriction and the information about the position of the zero labels, all the information we need to keep track of the labelling is the position of the d biggest labels, which will end up labelling peaks, i.e., vertical steps followed by a horizontal step. Hence, we can denote the d biggest labels by decorated peaks and forget about the positive labels. We can, thus, identify our set with the set \(\textsf{SQ}(m,n)^{\bullet k, \circ d}\) of square paths with m vertical steps labelled by a zero, n non-labelled vertical steps, k decorated contractible valleys and d decorated peaks, where a valley is contractible if it is contractible in the corresponding labelled path, and the statistics are inherited from the labelled path as well. Similarly, we define \(\textsf{SQ}'(m,n)^{\bullet k, \circ d} \subseteq \textsf{SQ}(m,n)^{\bullet k, \circ d}\) to be the subset coming from \(\textsf{LSQ}'(m,n)^{\bullet k}\subseteq \textsf{LSQ}(m,n)^{\bullet k}\), \(\textsf{SQ}(m,n)^{*k, \circ d}\) the one coming from rise-decorated paths, and \(\textsf{D}(m,n)^{\bullet k, \circ d}\) and \(\textsf{D}(m,n)^{*k, \circ d}\) for the Dyck counterparts.
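
The shuffle condition on the reading word can be tested by extracting three subsequences: the zeros, the labels in \(\{1, \ldots , n-d\}\) (which must appear in increasing order), and the labels in \(\{n-d+1, \ldots , n\}\) (which must appear in decreasing order). The function name below is hypothetical and the sketch is only meant to make the condition concrete.

```python
def is_schroeder_shuffle(word, m, n, d):
    """True if word is a shuffle of m zeros, 1, ..., n-d and n, n-1, ..., n-d+1."""
    zeros = [x for x in word if x == 0]
    small = [x for x in word if 1 <= x <= n - d]
    big = [x for x in word if x > n - d]
    return (
        len(word) == m + n
        and len(zeros) == m
        and small == list(range(1, n - d + 1))   # increasing string
        and big == list(range(n, n - d, -1))     # decreasing string
    )

print(is_schroeder_shuffle([0, 1, 4, 2, 3], m=1, n=4, d=2))
print(is_schroeder_shuffle([0, 1, 3, 2, 4], m=1, n=4, d=2))
```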

Finally, we sometimes omit writing m or k when they are equal to 0, e.g.

3 Symmetric Functions

For all the undefined notations and the unproven identities, we refer to [9, Sect. 1], where definitions, proofs and/or references can be found.

We denote by \(\Lambda \) the graded algebra of symmetric functions with coefficients in \(\mathbb {Q}(q,t)\), and by \(\langle \,, \rangle \) the Hall scalar product on \(\Lambda \), defined by declaring that the Schur functions form an orthonormal basis.

The standard bases of the symmetric functions that will appear in our calculations are the monomial \(\{m_\lambda \}_{\lambda }\), complete homogeneous \(\{h_{\lambda }\}_{\lambda }\), elementary \(\{e_{\lambda }\}_{\lambda }\), power \(\{p_{\lambda }\}_{\lambda }\) and Schur \(\{s_{\lambda }\}_{\lambda }\) bases.

For a partition \(\mu \vdash n\), we denote by

the (modified) Macdonald polynomials, where

are the (modified) q, t-Kostka coefficients, and \(n(\mu ) = \sum _{i} (i-1) \mu _i\) (see [14, Chapter 2] for more details).

Macdonald polynomials form a basis of the ring of symmetric functions \(\Lambda \). This is a modification of the basis introduced by Macdonald [21].

If we identify the partition \(\mu \) with its Ferrers diagram, i.e., with the collection of cells \(\{(i,j)\mid 1\le i\le \mu _j, 1\le j\le \ell (\mu )\}\), then for each cell \(c\in \mu \) we refer to the arm, leg, co-arm and co-leg (denoted respectively as \(a_\mu (c), l_\mu (c), a'_\mu (c), l'_\mu (c)\)) as the number of cells in \(\mu \) that are strictly to the right, above, to the left and below c in \(\mu \), respectively (see Fig. 3).

Let \(M :=(1-q)(1-t)\). For every partition \(\mu \), we define the following constants:

We will make extensive use of the plethystic notation (cf. [14, Chapter 1 page 19]). We will use also the standard shorthand \(f^*= f \left[ \frac{X}{M}\right] \).

We define the star scalar product by setting for every \(f,g\in \Lambda \)

where \(\omega \) is the involution of \(\Lambda \) sending \(e_\lambda \) to \(h_\lambda \) for every partition \(\lambda \).

It is well known that for any two partitions \(\mu ,\nu \) we have

We also need several linear operators on \(\Lambda \).

Definition 3.1

([2, 3.11]) We define the linear operator \(\nabla :\Lambda \rightarrow \Lambda \) on the eigenbasis of Macdonald polynomials as

Definition 3.2

We define the linear operator \(\mathbf {\Pi } :\Lambda \rightarrow \Lambda \) on the eigenbasis of Macdonald polynomials as

where we conventionally set \(\Pi _{\varnothing } :=1\).

Definition 3.3

For \(f \in \Lambda \), we define the linear operators \(\Delta _f, \Delta '_f :\Lambda \rightarrow \Lambda \) on the eigenbasis of Macdonald polynomials as

Observe that on the vector space of symmetric functions homogeneous of degree n, denoted by \(\Lambda ^{(n)}\), the operator \(\nabla \) equals \(\Delta _{e_n}\). Notice also that \(\nabla \), \(\Delta _f\) and \(\mathbf {\Pi }\) are all self-adjoint with respect to the star scalar product.

Definition 3.4

([8, (28)]) For any symmetric function \(f \in \Lambda ^{(n)}\) we define the Theta operators on \(\Lambda \) in the following way: for every \(F \in \Lambda ^{(m)}\), we set

and we extend by linearity the definition to any \(f, F \in \Lambda \).

It is clear that \(\Theta _f\) is linear, and moreover, if f is homogeneous of degree k, then so is \(\Theta _f\), i.e.,

\[ \Theta _f \Lambda ^{(n)} \subseteq \Lambda ^{(n+k)} \qquad \text{for } f \in \Lambda ^{(k)}. \]

It is convenient to introduce the so-called q-notation. In general, a q-analogue of an expression is a generalisation involving a parameter q that reduces to the original one for \(q \rightarrow 1\).

Definition 3.5

For a natural number \(n \in \mathbb {N}\), we define its q-analogue as

\[ [n]_q :=\frac{1-q^n}{1-q} = 1 + q + q^2 + \cdots + q^{n-1}. \]

Given this definition, one can define the q-factorial and the q-binomial as follows.

Definition 3.6

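We define

\[ [n]_q! :=\prod _{k=1}^{n} [k]_q = [1]_q [2]_q \cdots [n]_q \qquad \text{and} \qquad \genfrac[]{0.0pt}{}{n}{k}_q :=\frac{[n]_q!}{[k]_q! \, [n-k]_q!}. \]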
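
To experiment with these q-analogues without a computer algebra system, one can represent a polynomial in q by its list of coefficients. The sketch below assumes the standard definitions \([n]_q = 1 + q + \cdots + q^{n-1}\), \([n]_q! = [1]_q \cdots [n]_q\) and the q-Pascal recurrence for the q-binomial; all function names are hypothetical.

```python
def poly_add(a, b):
    """Add two polynomials given as coefficient lists (index = power of q)."""
    size = max(len(a), len(b))
    return [
        (a[i] if i < len(a) else 0) + (b[i] if i < len(b) else 0)
        for i in range(size)
    ]

def poly_mul(a, b):
    """Multiply two polynomials given as coefficient lists."""
    if not a or not b:
        return []  # the empty list stands for the zero polynomial
    out = [0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out

def q_int(n):
    """[n]_q = 1 + q + ... + q^(n-1)."""
    return [1] * n

def q_factorial(n):
    """[n]_q! = [1]_q [2]_q ... [n]_q."""
    result = [1]
    for k in range(1, n + 1):
        result = poly_mul(result, q_int(k))
    return result

def q_binomial(n, k):
    """q-binomial via the recurrence [n,k] = [n-1,k-1] + q^k [n-1,k]."""
    if k < 0 or k > n:
        return []
    if k == 0 or k == n:
        return [1]
    return poly_add(q_binomial(n - 1, k - 1), [0] * k + q_binomial(n - 1, k))

print(q_binomial(4, 2))  # coefficients of 1 + q + 2q^2 + q^3 + q^4
```

Setting q = 1, i.e. summing the coefficients, recovers the usual binomial coefficient, in line with the remark that a q-analogue reduces to the original expression for \(q \rightarrow 1\).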

Definition 3.7

For x any variable and \(n \in \mathbb {N}\cup \{ \infty \}\), we define the q-Pochhammer symbol as

\[ (x;q)_n :=\prod _{k=0}^{n-1} (1 - x q^k) = (1-x)(1-xq)(1-xq^2) \cdots (1-xq^{n-1}). \]
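
For finite n, the q-Pochhammer symbol can be evaluated exactly at rational values of x and q. The sketch below assumes the standard convention \((x;q)_n = \prod _{k=0}^{n-1} (1 - xq^k)\) and does not handle \(n = \infty \); the function name is hypothetical. It also checks numerically the familiar expression of the q-binomial as a quotient of q-Pochhammer symbols.

```python
from fractions import Fraction

def q_pochhammer(x, q, n):
    """Evaluate (x; q)_n for finite n at rational x and q."""
    x, q = Fraction(x), Fraction(q)
    result = Fraction(1)
    for k in range(n):
        result *= 1 - x * q**k
    return result

q = Fraction(1, 2)
# (q;q)_4 / ((q;q)_2 (q;q)_2) is the q-binomial [4 choose 2]_q evaluated at q = 1/2.
ratio = q_pochhammer(q, q, 4) / (q_pochhammer(q, q, 2) ** 2)
print(ratio)
```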

We can now introduce yet another family of symmetric functions.

Definition 3.8

For \(0 \le k \le n\), we define the symmetric function \(E_{n,k}\) [13] by the expansion

Notice that \(E_{n,0} = \delta _{n,0}\). Setting \(z=q^j\), we get

and in particular, for \(j=1\), we get

\[ e_n = \sum _{k=0}^{n} E_{n,k}, \]

so these symmetric functions split \(e_n\), in some sense.

The Theta operators will be useful to restate the Delta conjectures in a new fashion, thanks to the following results.

Theorem 3.9

([8, Theorem 3.1])

We will also need the following identity.

Theorem 3.10

([11, Corollary 9.2]) Given \(m,n,k,r \in \mathbb {N}\), we have

where \(h_m^\perp \) is the adjoint of the multiplication by \(h_m\) with respect to the Hall scalar product.

We applied the change of variables \(j \mapsto m, m \mapsto k, p \mapsto n-k-r, k \mapsto r, s \mapsto p, r \mapsto p-i\) in [11, Corollary 9.2] to make it easier to interpret combinatorially and more consistent with the notation used in [18].

4 The Schröder Case of the Valley Version of the Delta Conjectures

First of all, we state the extended valley Delta conjecture and the extended valley Delta square conjecture.

Conjecture 4.1

(Extended valley Delta conjecture [22])

Conjecture 4.2

(Extended valley Delta square conjecture [19])

Remark 4.3

It should be noticed that in general, the combinatorial sides of (3) and (4) are not even known to be symmetric functions. Hence, these conjectures include the statement that those combinatorial sums are indeed symmetric functions.

The main result we want to prove is the so-called Schröder case of the valley version of the Delta conjecture and the Delta square conjecture. In other words, we want to show that the identities hold if we take the scalar product with \(e_{n-d}h_d\). On the combinatorial side, assuming that those sums are symmetric functions (cf. Remark 4.3), the theory of shuffles (cf. [9, Section 3.3]) tells us that taking the scalar product with \(e_{n-d}h_d\) in (3) and (4) gives (cf. end of Sect. 2)

respectively.

Theorem 4.4

Theorem 4.5

To prove these results, we proceed as follows. First, we recall ((2) and [8, (26)]) that

when \(n-k > 0\), and \(e_0 = \omega (p_0) = 1\) when \(n-k=0\).

Then, we find an algebraic recursion satisfied by the polynomials \(\langle \Theta _{e_k} \nabla E_{n-k,r}, e_{n-d} h_d \rangle \), which we use to derive a similar one satisfied by the polynomials \(\langle \Theta _{e_k} \nabla \frac{[n-k]_q}{[r]_q} E_{n-k,r}, e_{n-d} h_d \rangle \).

Next, we prove that the q, t-enumerators of the sets

and

satisfy the same recursions as the aforementioned polynomials with the same initial conditions, thus proving the equality.

Finally, we take the sum over r of the identities we get, completing the proof of Theorems 4.4 and 4.5.

4.1 Algebraic Recursions

We begin by proving the following lemma.

Lemma 4.6

Proof

By [8, Lemma 6.1], we have

and by repeatedly applying the well-known identity \(\langle \Delta _{e_a} f, h_n \rangle = \langle f, e_a h_{n-a} \rangle \) (see [9, Lemma 4.1]), we have

as desired. \(\square \)

Now, the polynomial \(\langle \Delta _{h_k} \Delta _{e_{n-k-d}} E_{n-k, r}, e_{n-k} \rangle \) coincides with the expression \(F_{n,r}^{(d,k)}\) in [9, Section 4.3, (4.77)], so by [9, Theorem 4.18], up to some simple rewriting, we have the following.

Proposition 4.7

The expressions \(\langle \Theta _{e_k} \nabla E_{n-k, r}, e_{n-d} h_d \rangle \) satisfy the recursion

with initial conditions \(\langle \Theta _{e_k} \nabla E_{n-k, r}, e_{n-d} h_d \rangle = \delta _{r,0} \delta _{k,0} \delta _{d,0}\) when \(n=0\).

Notice that this implies that \(\langle \Theta _{e_k} \nabla E_{n-k, r}, e_{n-d} h_d \rangle \) is actually a polynomial in \(\mathbb {N}[q,t]\). We want to rewrite it slightly, for which we need the following lemma.

Lemma 4.8

Proof

We have

where in the first equality, we used the well-known q-Chu-Vandermonde identity [1, (3.3.10)] and the other ones are simple algebraic manipulations. \(\square \)

Combining Proposition 4.7 and Lemma 4.8, we get the following.

Theorem 4.9

The expressions \(\langle \Theta _{e_k} \nabla E_{n-k, r}, e_{n-d} h_d \rangle \) satisfy the recursion

with initial conditions \(\langle \Theta _{e_k} \nabla E_{n-k, r}, e_{n-d} h_d \rangle = \delta _{r,0} \delta _{k,0} \delta _{d,0}\) when \(n=0\).

Using Theorem 4.9, we can get a similar recursion for the other family of polynomials.

Theorem 4.10

The expressions \(\langle \Theta _{e_k} \nabla \frac{[n-k]_q}{[r]_q} E_{n-k, r}, e_{n-d} h_d \rangle \) satisfy the recursion

with initial conditions \(\langle \Theta _{e_k} \nabla \frac{[n-k]_q}{[r]_q} E_{n-k, r}, e_{n-d} h_d \rangle = \delta _{r,0} \delta _{k,0} \delta _{d,0}\).

Proof

We need to be careful with the initial conditions, as we have the factor \(\frac{[n-k]_q}{[r]_q}\) that becomes \(\frac{[0]_q}{[0]_q}\) when \(n-k=r=0\). However, the property we actually need is

which only holds for \(n-k>0\). For \(n-k = 0\) we have \(\omega (p_0) = 1\), so we need to set \(\frac{[n-k]_q}{[r]_q} E_{n-k,r} = 1\) whenever \(n-k=r=0\) for our identities to hold, which satisfies the initial conditions.

Using Theorem 4.9, we have

as desired. \(\square \)

Once again notice that this implies that \(\langle \Theta _{e_k} \nabla \frac{[n-k]_q}{[r]_q} E_{n-k, r}, e_{n-d} h_d \rangle \) is also a polynomial in \(\mathbb {N}[q,t]\).

4.2 Combinatorial Recursions

Let

and

be the q, t-enumerators of our set of lattice paths. We have the following results.

Theorem 4.11

The polynomials \(\textsf{D}_{q,t}(n \backslash r)^{\bullet k, \circ d}\) satisfy the recursion

with initial conditions \(\textsf{D}_{q,t}(0 \backslash r)^{\bullet k, \circ d} = \delta _{r,0} \delta _{k,0} \delta _{d,0}\) when \(n=0\).

Proof

The initial conditions are trivial, as the only Dyck path of size 0 has 0 steps on the main diagonal, 0 decorated valleys, and 0 decorated peaks.

We give an overview of the combinatorial interpretations of all the variables appearing in this formula. We say that a vertical step of a path is at height i if there are i whole cells in its row between it and the main diagonal.

- r is the number of vertical steps at height 0 that are not decorated valleys.

- j is the number of vertical steps at height 0 that are decorated valleys.

- \(r-v\) is the number of decorated peaks at height 0.

- u is the number of decorated peaks at height 0 that are also decorated valleys.

- \(u+v\) is the number of vertical steps at height 0 without any kind of decoration.

- s is the number of vertical steps at height 1 that are not decorated valleys.

Note that the vertical step following a decorated peak on the main diagonal cannot be a decorated valley, so we necessarily have \(r-v-1 \le r\), where the \(-1\) comes from the fact that the last decorated peak might be the last step of the path. Indeed, since the first vertical step cannot be a decorated valley either, and it does not follow any decorated peak, we actually have \(r-v-1 \le r-1\), so the number of decorated peaks at height 0 is at most r, so \(v \ge 0\) and the expressions all make sense.

Also note that the number of vertical steps at height 0 without any kind of decoration is given by the number of steps that are not decorated valleys (i.e. r), minus the number of decorated peaks (i.e. \(r-v\)), plus the number of these that are also decorated valleys (i.e. u). Indeed, \(r-(r-v)+u = u+v\), as expected.

The recursive step consists of removing all the steps that touch the main diagonal. There are \(r+j\) vertical steps that touch the main diagonal, of which j are decorated valleys and \(r-v\) are decorated peaks (which are not necessarily disjoint), so after the recursive step, we end up with a path in \(\textsf{D}(n-r-j \backslash s)^{\bullet k-j, \circ d-(r-v)}\).

Let us look at what happens to the statistics of the path.

The area goes down by the size (i.e. n) minus the number of vertical steps at height 0 (i.e. \(r+j\)). This explains the term \(t^{n-r-j}\).

The factor \(q^{\left( {\begin{array}{c}u+v\\ 2\end{array}}\right) }\) takes into account the primary dinv among all the vertical steps at height 0 that are neither decorated valleys nor decorated peaks.

The factor \(q^{\left( {\begin{array}{c}u\\ 2\end{array}}\right) } \genfrac[]{0.0pt}{}{u+v}{v}_q\) takes into account the primary dinv between all the vertical steps at height 0 that are neither decorated valleys nor decorated peaks (\(u+v\) of them), and all the vertical steps at height 0 that are both decorated valleys and decorated peaks (u of them), and the expression is explained by the fact that the latter cannot be consecutive (a decorated peak on the main diagonal cannot be followed by a decorated valley).

The factor \(\genfrac[]{0.0pt}{}{r}{u+v}_q\) takes into account the primary dinv between all the vertical steps at height 0 that are neither decorated valleys nor decorated peaks (\(u+v\) of them), and all the vertical steps at height 0 that are decorated peaks but not decorated valleys (\(r-u-v\) of them), which can be interlaced in any possible way.

The factor \(\genfrac[]{0.0pt}{}{v+j-1}{j-u}_q\) takes into account the primary dinv between all the vertical steps at height 0 that are neither decorated valleys nor decorated peaks (\(u+v\) of them), and all the vertical steps at height 0 that are decorated valleys but not decorated peaks (\(j-u\) of them), considering that the first of those must be non-decorated (we cannot start the path with a decorated valley).

Finally, the factor \(\genfrac[]{0.0pt}{}{v+j+s-1}{s}_q\) takes into account the secondary dinv between all the vertical steps at height 1 that are not decorated valleys (s of them), and all the vertical steps at height 0 that are not decorated peaks (\(v+j\) of them), considering that the first of those must belong to the latter set (we need a vertical step that is not a decorated peak on the main diagonal to go up to the diagonal \(y=x+1\)).

Summing over all the possible values of j, s, v, and u, we obtain the stated recursion. \(\square \)

Theorem 4.12

The polynomials \(\textsf{SQ}'_{q,t}(n \backslash r)^{\bullet k, \circ d}\) satisfy the recursion

with initial conditions \(\textsf{SQ}'_{q,t}(0 \backslash r)^{\bullet k, \circ d} = \delta _{r,0} \delta _{k,0} \delta _{d,0}\).

Proof

The initial conditions are trivial, as the only square path of size 0 has 0 steps on the main diagonal, 0 decorated valleys, and 0 decorated peaks.

We give an overview of the combinatorial interpretations of all the variables appearing in this formula. We say that a vertical step of a path is at height i if there are i whole cells in its row between it and the base diagonal.

- r is the number of vertical steps at height 0 that are not decorated valleys.

- j is the number of vertical steps at height 0 that are decorated valleys.

- \(r-v\) is the number of decorated peaks at height 0.

- u is the number of decorated peaks at height 0 that are also decorated valleys.

- \(u+v\) is the number of vertical steps at height 0 without any kind of decoration.

- s is the number of vertical steps at height 1 that are not decorated valleys.

The recursive step consists of removing all the steps that touch the base diagonal. We should distinguish whether we start with a Dyck path or a square path: if we start with a Dyck path, then the recursive step is the same as in Theorem 4.11, which corresponds to the first summand; if we start with a square path that is not a Dyck path, we get the second summand. Since the case of a Dyck path is already dealt with, we only describe the recursion for square paths that are not Dyck paths.

There are \(r+j\) vertical steps that touch the base diagonal, of which j are decorated valleys and \(r-v\) are decorated peaks (which are not necessarily disjoint), so after the recursive step we end up with a path in \(\textsf{SQ}'(n-r-j \backslash s)^{\bullet k-j, \circ d-(r-v)}\).

Let us look at what happens to the statistics of the path.

The area goes down by the size (i.e. n), minus the number of vertical steps at height 0 (i.e. \(r+j\)). This explains the term \(t^{n-r-j}\).

The factor \(q^{\left( {\begin{array}{c}u+v\\ 2\end{array}}\right) }\) takes into account the primary dinv among all the vertical steps at height 0 that are neither decorated valleys nor decorated peaks.

The factor \(q^{\left( {\begin{array}{c}u\\ 2\end{array}}\right) } \genfrac[]{0.0pt}{}{u+v}{v}_q\) takes into account the primary dinv between all the vertical steps at height 0 that are neither decorated valleys nor decorated peaks (\(u+v\) of them), and all the vertical steps at height 0 that are both decorated valleys and decorated peaks (u of them), and the expression is explained by the fact that the latter cannot be consecutive (a decorated peak on the main diagonal cannot be followed by a decorated valley).

The factor \(\genfrac[]{0.0pt}{}{r-1}{u+v-1}_q\) takes into account the primary dinv between all the vertical steps at height 0 that are neither decorated valleys nor decorated peaks (\(u+v\) of them), and all the vertical steps at height 0 that are decorated peaks but not decorated valleys (\(r-u-v\) of them), and the last of these must be a step without decorations: a decorated peak cannot be followed by a decorated valley, and the last vertical step at height 0 cannot be a decorated peak, since the shift of the path is positive and it has to finish above the main diagonal (as it must end with a horizontal step). This explains the \(r-1\) and the \(u+v-1\).

The factor \(\genfrac[]{0.0pt}{}{v+j}{j-u}_q\) takes into account the primary dinv between all the vertical steps at height 0 that are neither decorated valleys nor decorated peaks (\(u+v\) of them), and all the vertical steps at height 0 that are decorated valleys but not decorated peaks (\(j-u\) of them); unlike the previous case, any interlacing is now allowed.

Finally, the factor \(\genfrac[]{0.0pt}{}{v+j+s-1}{s-1}_q\) takes into account the secondary dinv between all the vertical steps at height 1 that are not decorated valleys (s of them), and all the vertical steps at height 0 that are not decorated peaks (\(v+j\) of them), and since the shift of the path is positive and it has to finish above the main diagonal, the last vertical step at height 1 must occur after the last vertical step at height 0.

Summing over all the possible values of j, s, v, and u, we obtain the stated recursion. \(\square \)

4.3 The Main Theorems

We are now ready to prove Theorem 4.4 and Theorem 4.5.

Theorem 4.13

Proof

It follows immediately by combining Theorems 4.9 and 4.11. \(\square \)

Theorem 4.14

Proof

Use (5) together with Theorems 4.10 and 4.12. \(\square \)

Proof of Theorems 4.4 and 4.5. Take the sum over r of both (5) and (6). \(\square \)

5 The Catalan Case of the Extended Valley Delta Conjecture

In [18], the authors show that the valley version of the Delta conjecture implies the extended version of the same conjecture. Their argument only works if the conjecture holds in full generality, but we can still adapt it to derive the Catalan case of the extended conjecture from the Schröder case of the original one.

We need the following result, suggested by a combinatorial argument by the second author and Vanden Wyngaerd, and then proved by the first author and Romero.

Theorem 5.1

([11, Corollary 9.2]) Given \(d,n,k,r \in \mathbb {N}\), we have

Taking the scalar product with \(e_{n-d}\), we get the following.

Proposition 5.2

Given \(d,n,k,r \in \mathbb {N}\), we have

We want to prove that the q, t-enumerators of the corresponding sets satisfy the same relations.

Theorem 5.3

Given \(d,n,k,r \in \mathbb {N}\), we have

Proof

Using the same idea as in [18, Theorem 5.1], we delete the decorated peaks on the main diagonal and apply the pushing algorithm to the remaining decorated peaks: that is, we swap the horizontal and the vertical step each of them is composed of, so that they become valleys. If the peak is also a decorated valley, it becomes a decorated valley. As in [18, Theorem 5.1], pushing a peak this way does not change the dinv. If p is the number of decorated peaks on the main diagonal, and i the number of those that are also decorated valleys, then the loss of area given by the pushing contributes a factor \(t^{d-p}\), and removing the decorated peaks from the main diagonal contributes a factor

Indeed, the factor \(\genfrac[]{0.0pt}{}{r}{p-i}_q\) counts the primary dinv between the \(p-i\) steps on the main diagonal that are decorated peaks but not decorated valleys, and the remaining \(r-p+i\) steps with no decoration: every time one of the latter appears to the left of one of the former, a unit of dinv is created, and there is no restriction on how they appear.

The factor \(q^{\left( {\begin{array}{c}i\\ 2\end{array}}\right) } \genfrac[]{0.0pt}{}{r-p+i}{i}_q\) counts the primary dinv between the i steps on the main diagonal that are both decorated peaks and decorated valleys, and the \(r-p+i\) steps on the main diagonal with no decoration: again, whenever one of the latter appears to the left of one of the former, a unit of dinv is created, but we must have a step with no decoration in between every two steps that have both kinds of decoration, as a decorated peak cannot be followed by a decorated valley.
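The extra power \(q^{\left( {\begin{array}{c}i\\ 2\end{array}}\right) }\) can be checked on small cases. The Python sketch below works under a simplifying assumption that is not spelled out in this form in the text: every doubly decorated step (letter B) is immediately followed by an undecorated step (letter A), and all other steps are ignored. Under this assumption, a brute-force count of the pairs in which an A lies to the left of a B is compared with \(q^{\left( {\begin{array}{c}i\\ 2\end{array}}\right) } \genfrac[]{0.0pt}{}{m}{i}_q\), where m is the number of undecorated steps. All function names are hypothetical.

```python
from itertools import combinations
from math import comb


def q_binomial(n, k):
    """Coefficient list of the Gaussian binomial [n choose k]_q (index = power of q)."""
    if k < 0 or k > n:
        return []
    if k == 0 or k == n:
        return [1]
    # q-Pascal recurrence: [n, k]_q = [n-1, k-1]_q + q^k [n-1, k]_q
    first = q_binomial(n - 1, k - 1)
    second = [0] * k + q_binomial(n - 1, k)
    size = max(len(first), len(second))
    first += [0] * (size - len(first))
    second += [0] * (size - len(second))
    return [x + y for x, y in zip(first, second)]


def restricted_enumerator(m, i):
    """Words with m letters A and i letters B in which every B is immediately
    followed by an A (assumed restriction), enumerated by the number of
    pairs (A, B) with the A to the left of the B."""
    n = m + i
    counts = {}
    for b_positions in combinations(range(n), i):
        b_set = set(b_positions)
        # Reject words where some B is last or is followed by another B.
        if any(pos + 1 >= n or pos + 1 in b_set for pos in b_positions):
            continue
        inv = sum(pos - idx for idx, pos in enumerate(b_positions))
        counts[inv] = counts.get(inv, 0) + 1
    top = max(counts, default=-1)
    return [counts.get(e, 0) for e in range(top + 1)]


def predicted(m, i):
    """Coefficient list of q^(i choose 2) [m choose i]_q."""
    qb = q_binomial(m, i)
    return [0] * comb(i, 2) + qb if qb else []


# Example: m = 3 undecorated steps, i = 2 doubly decorated steps.
print(restricted_enumerator(3, 2))
print(predicted(3, 2))
```

In the notation of the proof, one would take \(m = r-p+i\).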

In the end, we are left with a path in \(\textsf{D}(d-p,n-d \backslash r-p+i)^{\bullet k-i}\): see [18, Theorem 5.1] for more details. The thesis follows. \(\square \)

Combining the two statements, we get the following.

Proposition 5.4

Given \(d,n,k,r \in \mathbb {N}\), we have

Proof

We can rewrite (7) as

and (8) as

Using (5) and induction on d (the base case \(d=0\) is simply (5)), we see that the right-hand sides are equal, hence so are the left-hand sides. This completes the proof. \(\square \)

Taking the sum over r in (9), we get the desired result.

Theorem 5.5

Given \(d,n,k \in \mathbb {N}\), we have

6 The Catalan Case of the Extended Valley Square Conjecture

Next, we want to prove the analogous statement for the square version. In this case, we need to assume that the combinatorial side of (a refinement of) Conjecture 4.1 is a symmetric function (cf. Remark 4.3).

Indeed, the argument in [19] can be recycled to show that, if the Catalan case of the valley version of the extended Delta conjecture holds, then the same case of the square version of the same conjecture also holds.

We need the following result.

Proposition 6.1

([19, Corollary 3]) Let

and

Then

Now, assuming that (10) (and hence also (11)) is a symmetric function, again by the theory of shuffles, taking the scalar product with \(e_{n}\) isolates the subsets of the paths whose reading word is \(1, 2, \dots , n\), which are in statistic-preserving bijection with unlabelled paths by simply removing the positive labels (there is a unique way to put them back once the reading word is fixed). The following theorem is now immediate.

Theorem 6.2

Given \(d,n,k,r \in \mathbb {N}\), if (10) is a symmetric function, then

Proof

Using (9) and Proposition 6.1, we have

so by taking the sum over r, the thesis follows.

\(\square \)

7 Future Directions

In [5], the authors gave an algebraic recursion for the (conjectural) q, t-enumerators of the Schröder case of the extended valley Delta conjecture. Hence, it would be enough to find a combinatorial recursion that matches the algebraic one to prove that case as well; however, we were not able to do so.

In [4], a combinatorial recursion for the “ehh” case of the shuffle theorem is given, and it would be interesting to extend such recursion to the valley-decorated version of the combinatorial objects, which in turn would lead to a proof of the Schröder case of the extended valley Delta conjecture via the pushing algorithm in [19]; once again, our attempts were unfruitful.

Finally, as the classical recursion for the q, t-Catalan [13] is an iterated version of the compositional one [15], and both these recursions extend to the rise version of the Delta conjecture [10, 24], it might be the case that the same phenomenon occurs here, and that there is a compositional refinement of the recursion for \(d=0\) that might lead to a full proof of the conjecture; at the moment, we do not know what such a refinement should look like.

Data Availability

My manuscript has no associated data.

References

George E. Andrews, The theory of partitions, Cambridge Mathematical Library, Cambridge University Press, Cambridge, 1998. Reprint of the 1976 original. MR1634067

François Bergeron and Adriano M. Garsia, Science fiction and Macdonald’s polynomials, Algebraic methods and q-special functions (Montréal, QC, 1996), 1999, pp. 1–52. MR1726826

Jonah Blasiak, Mark Haiman, Jennifer Morse, Anna Pun, and George H. Seelinger, A proof of the Extended Delta Conjecture, ArXiv e-prints (February 17, 2021), available at arXiv:2102.08815.

Michele D’Adderio and Alessandro Iraci, The new dinv is not so new, Electron J Comb 26 (2019), no. 3, P3.48.

Michele D’Adderio, Alessandro Iraci, and Anna Vanden Wyngaerd, The Schröder case of the generalized Delta conjecture, Eur J Combin 81 (2019), 58–83.

Michele D’Adderio, Alessandro Iraci, and Anna Vanden Wyngaerd, The generalized Delta conjecture at t = 0, Eur J Combin 86 (2020), 103088.

Michele D’Adderio, Alessandro Iraci, and Anna Vanden Wyngaerd, The delta square conjecture, Int. Math. Res. Not. IMRN 1 (2021), 38–86. MR4198493

Michele D’Adderio, Alessandro Iraci, and Anna Vanden Wyngaerd, Theta operators, refined delta conjectures, and coinvariants, Adv. Math. 376 (2021), Paper No. 107447, 59. MR4178919

Michele D’Adderio, Alessandro Iraci, and Anna Vanden Wyngaerd, Decorated Dyck paths, polyominoes, and the Delta conjecture, Mem. Amer. Math. Soc. 278 (2022), no. 1370. MR4429265

Michele D’Adderio and Anton Mellit, A proof of the compositional Delta conjecture, Adv. Math. 402 (2022), Paper No. 108342, 17. MR4401822

Michele D’Adderio and Marino Romero, New identities for Theta operators, ArXiv e-prints (2020).

Adriano Garsia, Jim Haglund, Jeffrey B. Remmel, and Meesue Yoo, A Proof of the Delta Conjecture When q = 0, Ann Comb 23 (June 2019), no. 2, 317–333.

Adriano M. Garsia and James Haglund, A proof of the q, t-Catalan positivity conjecture, Discrete Math 256 (2002), no. 3, 677–717. LaCIM 2000 Conference on Combinatorics, Computer Science and Applications (Montreal, QC). MR1935784

James Haglund, The q,t-Catalan numbers and the space of diagonal harmonics, University Lecture Series, vol. 41, American Mathematical Society, Providence, RI, 2008. With an appendix on the combinatorics of Macdonald polynomials. MR2371044

James Haglund, Jennifer Morse, and Mike Zabrocki, A Compositional Shuffle Conjecture Specifying Touch Points of the Dyck Path, Canadian J Math 64 (2012), no. 4, 822–844. MR2957232

James Haglund, Jeffrey B. Remmel, and Andrew T. Wilson, The Delta Conjecture, Trans. Amer. Math. Soc. 370 (2018), no. 6, 4029–4057. MR3811519

James Haglund and Emily Sergel, Schedules and the Delta Conjecture, Annals of Combinatorics 25 (March 2021), no. 1, 1–31.

Alessandro Iraci and Anna Vanden Wyngaerd, “Pushing” our way from the valley Delta to the generalised valley Delta, ArXiv e-prints (January 2021), available at arXiv:2101.02600.

Alessandro Iraci and Anna Vanden Wyngaerd, A Valley Version of the Delta Square Conjecture, Ann Comb 25 (March 2021), no. 1, 195–227.

Nicholas A. Loehr and Gregory S. Warrington, Square q, t-lattice paths and \(\nabla (p_n)\), Trans. Amer. Math. Soc. 359 (2007), no. 2, 649–669. MR2255191

Ian G. Macdonald, Symmetric functions and Hall polynomials, Second edition, Oxford Mathematical Monographs, The Clarendon Press, Oxford University Press, New York, 1995. With contributions by A. Zelevinsky, Oxford Science Publications. MR1354144

Dun Qiu and Andrew Timothy Wilson, The valley version of the Extended Delta Conjecture, J. Combin. Theory Ser. A 175 (2020), 105271.

Marino Romero, The Delta conjecture at \(q=1\), Trans. Amer. Math. Soc. 369 (2017), no. 10, 7509–7530. MR3683116

Mike Zabrocki, A proof of the \(4\)-variable Catalan polynomial of the Delta conjecture, ArXiv e-prints (September 2016), available at arXiv:1609.03497.

Mike Zabrocki, A module for the Delta conjecture, ArXiv e-prints (February 2019), available at arXiv:1902.08966.

Funding

Open access funding provided by Università di Pisa within the CRUI-CARE Agreement.

Ethics declarations

Conflict of Interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Communicated by Matjaž Konvalinka.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

D’Adderio, M., Iraci, A. Some Consequences of the Valley Delta Conjectures. Ann. Comb. (2023). https://doi.org/10.1007/s00026-023-00663-1

DOI: https://doi.org/10.1007/s00026-023-00663-1