Abstract

The article examines a linear-quadratic Neumann control problem that is governed by a non-coercive elliptic equation. Due to the non-self-adjoint nature of the linear control-to-state operator, it is necessary to independently study both the state and adjoint state equations. The article establishes the existence and uniqueness of solutions for both equations, with minimal assumptions made about the problem’s data. Next, the regularity of these solutions is studied in three frameworks: Hilbert–Sobolev spaces, Sobolev–Slobodeckiĭ spaces, and weighted Sobolev spaces. These regularity results enable a numerical analysis of the finite element approximation of both the state and adjoint state equations. The results cover both convex and non-convex domains and quasi-uniform and graded meshes. Finally, the optimal control problem is analyzed and discretized. Existence and uniqueness of the solution, first-order optimality conditions, and error estimates for the finite element approximation of the control are obtained. Numerical experiments confirming these results are included.

1 Introduction

Let us consider a domain \(\Omega \subset {\mathbb {R}}^2\) with a polygonal boundary \(\Gamma \). We are concerned with the Neumann boundary control problem

where \(y_d \in L^2(\Omega )\) and \(g_\varphi \in L^2(\Gamma )\) are given functions, \(\nu > 0\),

with \(-\infty \le u_{a}< u_{b}\le +\infty \), and \(y_u\) is the solution of

Assumptions regarding the symmetric second order differential operator A and the coefficients b and \(a_0\) will be described later. Let us just emphasize now that we will make no assumptions on b and \(a_0\) that would imply coerciveness of the associated bilinear form.

The main objective of this paper is to discretize the optimal control problem using the finite element method and to obtain error estimates for the approximations of the optimal control in terms of the discretization parameter h. We try to keep the assumptions on the data to a minimum. The results are valid for possibly non-convex domains and both quasi-uniform and graded meshes. The theory for Neumann boundary optimal control problems governed by elliptic equations is quite complete; see Casas, Mateos and Tröltzsch 2005 [1], Casas and Mateos 2007 [2], Mateos and Rösch 2011 [3], Apel, Pfefferer and Rösch 2012 and 2015 [4, 5], Krumbiegel and Pfefferer 2015 [6] or the thesis by Winkler 2015 [7]. To the best of our knowledge, however, the issues that arise when the elliptic operator governing the equation is not coercive have not been addressed yet. The only papers we are aware of that deal with optimal control problems governed by a non-coercive elliptic equation are about distributed controls; see Casas, Mateos and Rösch 2020 and 2021 [8, 9]. In both papers, this fact and the convexity of the domain are used in an essential way in some of the proofs, and hence those results are not applicable to our problem.

We will see that problem (P) has a unique solution \({\bar{u}}\), and that it satisfies the optimality conditions, which we state now in an informal way: there exist \({\bar{y}}\) and \({\bar{\varphi }}\) such that

Since (P) is a linear-quadratic strictly convex problem, existence and uniqueness of the solution follow in a standard way once we have proved existence and uniqueness of the solution of the state equation and continuity of the control-to-state mapping. However, since we will not formulate any assumptions on b or \(a_0\) that would lead to a coercive operator, this task is not standard. In particular, \(\textrm{div}\,b\) may be so large that the usual assumption \(a_0-\frac{1}{2}\textrm{div}\,b\ge c_0>0\) is not satisfied. The state equation is analyzed in Sect. 2.

In Sect. 3 we investigate the regularity properties of the solutions of the state equation and the adjoint state equation. Since these are different, we perform this task in two steps resulting in Theorems 3.4 and 3.5, respectively. We obtain results in Hilbert–Sobolev, in Sobolev–Slobodeckiĭ and in weighted Sobolev spaces, with our focus on treating the numerical approximation of (P) in non-convex domains. The regularity results in non-weighted spaces serve as intermediate results to prove the error estimates in weighted Sobolev spaces, but they are also of independent interest. Note that, although regularity results for elliptic boundary value problems are widely investigated, see, e.g., the monographs [10,11,12,13,14], the particular results which we need for our approximation theory were not available for non-coercive problems with variable coefficients.

In Sect. 4 we study the numerical discretization of both the state and adjoint state equation. We obtain existence and uniqueness of the solution as well as error estimates. Our results are valid in convex and non-convex domains and for quasi-uniform and graded meshes, with possibly a non-optimal grading parameter \(\mu \).

With these results at hand, we will be able to deduce existence, uniqueness, and optimality conditions in Sect. 5. Moreover, regularity properties of the optimal solution and its related state and adjoint state are given in terms of weighted Sobolev spaces. Finally, we will discretize the control problem. The control is approximated using piecewise constant functions whereas the state and adjoint state are discretized by continuous piecewise linear functions. A close inspection of the proofs in the above mentioned papers about Neumann control problems suggests that, if no postprocessing step is done, the order of convergence of the error in \(L^2(\Gamma )\) for the control variable will be limited by the order of convergence of the finite element error in \(H^1(\Omega )\) for the state or the adjoint state equation; see e.g. the proof of Lemma 4.7 in [1]. This means that, for a non-convex domain and a quasi-uniform mesh, the order of convergence that can be obtained (applying the usual techniques in optimal control together with the regularity results and the finite element error estimates provided in this paper) is approximately \(h^\lambda \), where \(1/2<\lambda <1\). For instance, in the problem shown as an example in Sect. 6, \(h^{2/3}\) would be expected. Nevertheless, the numerical experiments clearly show order h, and we are able to prove this in Theorem 5.7: If the corner singularities are of type \(r^{\lambda _j}\), the index j counting the corners, and the mesh is graded near the corners with parameter \(\mu _j\), then the approximation order of the control is \(s^*\le 1\) with \(s^*<\frac{3\lambda _j}{2\mu _j}\), i.e., \(s^*=1\) is achieved if \(\mu _j<\frac{3}{2}\lambda _j\) for all j.
In the works by Apel, Pfefferer and Rösch [4, 5] a stronger grading \(\mu _j<\lambda _j\) is used to obtain an optimal order of convergence for the so-called post-processed control, i.e., the pointwise projection onto the admissible set of \(-{\bar{\varphi }}_h/\nu \), where \({\bar{\varphi }}_h\) is the discrete adjoint state associated to the discrete optimal control. In Theorem 4.2.1 of the thesis of Winkler [7] it is shown that for quasi-uniform meshes, i.e., \(\mu _j=1\), the order \(s^*=1\) is achieved for any angle.
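The grading condition quoted above from Theorem 5.7 is easy to evaluate for given corner data. The following Python sketch is only an illustration: the function names `order_bound` and `full_order_guaranteed` are hypothetical and not part of the paper, and the exponents \(\lambda _j\) and grading parameters \(\mu _j\) are assumed to be supplied by the user.

```python
def order_bound(lam, mu):
    """Upper bound for the admissible orders at one corner.

    Encodes s* <= 1 together with s* < 3*lam/(2*mu); the returned value
    is the supremum, which is attained only when it equals 1 strictly
    inside the grading condition.
    """
    return min(1.0, 3.0 * lam / (2.0 * mu))


def full_order_guaranteed(lams, mus):
    """True if mu_j < (3/2) * lambda_j holds at every corner j."""
    return all(mu < 1.5 * lam for lam, mu in zip(lams, mus))
```

For example, with \(\lambda _j=4/7\) and a quasi-uniform mesh (\(\mu _j=1\)) the condition fails and the bound evaluates to \(6/7\), whereas a right-angle corner of the Laplacian (\(\lambda _j=2\)) satisfies the condition without grading.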

2 Existence, Uniqueness and Continuous Dependence of the Solution of the State and Adjoint State Equations

On A, b and \(a_0\) we make the following assumptions.

Assumption 2.1

A is the operator given by

\(a_{ik}=a_{ki}\) for \(1\le i,k\le 2\), and satisfying the following ellipticity condition:

The function \(b:\Omega \rightarrow \mathbb {R}^2\) satisfies \(b\in L^{{\hat{p}}}(\Omega )^2\) with \({{\hat{p}}} > 2\) and there exists \({{\hat{q}}}>1\) such that \(\nabla \cdot b\in L^{{\hat{q}}}(\Omega )\) and \(b\cdot n\in L^{{\hat{q}}}(\Gamma )\). For the function \(a_0:\Omega \rightarrow \mathbb {R}\) it is assumed that \(a_0 \in L^{{\hat{q}}}(\Omega )\), \(a_0(x)\ge 0\) for a.e. \(x\in \Omega \) and there exists \(E\subset \Omega \) with \(\vert E\vert >0\) such that \(a_0(x)\ge \Lambda /2\) for all \(x\in E\).

Remark 2.2

Note that this assumption does not lead to a coercive bilinear form. While Assumption 2.1 is sufficient for the purposes of proving existence and uniqueness of solution, to establish adequate regularity results for the solution, further regularity must be imposed on the coefficients. The reader is referred to the results of Sect. 3 for the details required in the different scenarios.

Before addressing the main results of this section, we recall some well known inequalities that will be used throughout this paper.

We will often use the following form of Hölder’s inequality: for \(q,p_1,\ldots , p_k\in [1,\infty ]\) such that \(1/p_1+\cdots +1/p_k\le 1/q\) and \(f_i\in L^{p_i}(\Omega )\), \(i=1,\ldots ,k\) there exists a constant \(C_\Omega =\vert \Omega \vert ^{1/q-(1/p_1+\cdots +1/p_k)}\), such that \(\Vert f_1\cdots f_k\Vert _{L^q(\Omega )}\le C_\Omega \Vert f_1\Vert _{L^{p_1}(\Omega )}\cdots \Vert f_k\Vert _{L^{p_k}(\Omega )}\).
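As a small illustration of how the constant \(C_\Omega \) in this form of Hölder's inequality depends on the exponents, the following Python sketch evaluates \(\vert \Omega \vert ^{1/q-(1/p_1+\cdots +1/p_k)}\). The helper name `holder_constant` and the rounding tolerance are assumptions made for this example only.

```python
import math


def holder_constant(measure, q, ps):
    """Return |Omega|**(1/q - sum(1/p_i)) for exponents in [1, inf].

    Raises ValueError if the admissibility condition
    1/p_1 + ... + 1/p_k <= 1/q is violated.
    """
    total = sum(1.0 / p for p in ps)  # 1.0 / math.inf evaluates to 0.0
    gap = 1.0 / q - total
    if gap < -1e-12:  # small tolerance for floating-point rounding
        raise ValueError("need 1/p_1 + ... + 1/p_k <= 1/q")
    return measure ** max(gap, 0.0)
```

When the exponents are exactly conjugate, the gap is zero and the constant equals 1 regardless of \(\vert \Omega \vert \).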

The inequality

is a generalization of Poincaré’s inequality and can be found, e.g., in [15, Theorem 11.19]. In dimension 2, Sobolev’s embedding theorem gives that for all \(r<\infty \) there exists \(K_{\Omega ,r}>0\) such that

We will denote by \(\langle \cdot ,\cdot \rangle _\Omega \) the duality product in \(H^1(\Omega )'\times H^1(\Omega )\) and by \(\langle \cdot ,\cdot \rangle _\Gamma \) the duality product in \(H^{1/2}(\Gamma )'\times H^{1/2}(\Gamma )\). We notice that any \(g\in H^{1/2}(\Gamma )'\) defines an element in \(H^1(\Omega )'\), which will be denoted in the same way by

In this case, we will simply write \(\langle g,z\rangle _\Gamma \). Also notice that for any fixed \(q>1\), the functions \(f\in L^q(\Omega )\) and \(g\in L^q(\Gamma )\) define elements in \(H^1(\Omega )'\) and \(H^{1/2}(\Gamma )'\) respectively by

For every \(y\in H^1(\Omega )\), we define \({\mathcal {A}}y\) by

Using this operator, we have that the weak form of the state Eq. (1.1) is: find \(y_u\in H^1(\Omega )\) such that

We first prove continuity of the operator \({\mathcal {A}}\) and Gårding’s inequality. We adapt the proof of [8, Lemma 2.1].

Lemma 2.3

Under Assumption 2.1 we have that \({\mathcal {A}}\in {\mathcal {L}}(H^1(\Omega ),H^{1}(\Omega )')\) and there exists a constant \(C_{\Lambda ,E,b}\) such that

where \(\Lambda \) and \(C_E\) are the constants from (2.1) and (2.2), respectively.

Proof

Let us show that \({\mathcal {A}}\) is a linear continuous operator. Denote \(S =\{z\in H^1(\Omega ):\ \Vert z\Vert _{H^1(\Omega )} = 1\}\). We split \({\mathcal {A}}\) into three parts \({\mathcal {A}}_i\), \(i=1,2,3\).

Take now \(s_p>1\) such that \(1/s_p = 1/{{\hat{p}}} + 1/2\). From (2.3) and Hölder’s inequality we infer for every \(y \in H^1(\Omega )\)

Fix now some \(s_q\in (1,{{\hat{q}}})\) and take \(r\in (1,+\infty )\) such that \(1/{{\hat{q}}}+1/r = 1/s_q\). From (2.3) we infer that

Hence, we have that \({\mathcal {A}}\) is a well-defined linear and continuous operator.

Let us prove (2.8). Using Assumption 2.1, (2.2), and Young and Hölder inequalities we get

Observe that the assumption \({{\hat{p}}} > 2\) implies that \(2< \dfrac{2 {{\hat{p}}}}{{{\hat{p}}} - 2} < \infty \). Now, we apply Lions’ Lemma, [16, Chapter 2, Lemma 6.1], to the chain of embeddings \(H^1(\Omega ) \subset \subset L^{\frac{2 {{\hat{p}}}}{ {{\hat{p}}} - 2}}(\Omega ) \subset L^2(\Omega )\), the first one being compact and the second one continuous, to deduce the existence of a constant \(C_0\) depending on \(\Lambda \), \(C_E\) and \(\Vert b\Vert _{L^{{\hat{p}}}(\Omega )^2}\) such that

From the last two inequalities we conclude (2.8) with

and the proof is complete. \(\square \)

Remark 2.4

Notice that, to prove Lemma 2.3, we use neither \(\nabla \cdot b\in L^{{\hat{q}}}(\Omega )\) nor \(b\cdot n\in L^{{\hat{q}}}(\Gamma )\) for some \({{\hat{q}}}>1\).

The adjoint operator of \({\mathcal {A}}\) is \({\mathcal {A}}^*\). We have \({\mathcal {A}}^*z\in H^1(\Omega )'\) for every \(z\in H^1(\Omega )\). In the next lemma, we justify that under the mild Assumption 2.1, we can integrate by parts and use the expected form of the adjoint Eq. (1.2b).

Lemma 2.5

Suppose that Assumption 2.1 holds. Then

for all \(y\in H^1(\Omega )\).

Proof

By definition

and we only have to justify that, under Assumption 2.1, we can do integration by parts to get

This is equivalent to proving that we can apply the Gauss theorem to obtain

Using that \(y,z\in H^1(\Omega )\hookrightarrow L^r(\Omega )\) for all \(r<+\infty \), \(b\in L^{{\hat{p}}}(\Omega )^2\) for some \({{\hat{p}}}>2\) and \(\nabla \cdot b\in L^{{\hat{q}}}(\Omega )\) for some \({{\hat{q}}}>1\), applying Hölder’s inequality, we have

so \(\nabla \cdot (yzb)\in L^s(\Omega )\), where \(s = \min \left\{ \dfrac{2{{\hat{p}}}}{2+{{\hat{p}}}},\dfrac{{{\hat{q}}}+1}{2}\right\} \) satisfies \(1<s <2\). From Assumption 2.1, it is also clear that \(yzb\in L^s(\Omega )^2\), and using [17, Lemma II.1.2.2], we deduce that yzb has a normal trace \(yzb\cdot n\) defined in the space \((W^{1-1/s',s'}(\Gamma ))'\) via the Gauss theorem: for every \(v\in W^{1,s'}(\Omega )\)

Since we are assuming that \(b\cdot n\in L^{{\hat{q}}}(\Gamma )\) for some \({{\hat{q}}}>1\), then \(yz b\cdot n\in L^{\frac{{{\hat{q}}}+1}{2}}(\Gamma )\hookrightarrow L^s(\Gamma )\). Therefore, we have that

Taking \(v = 1\) in the above equalities, we have that

and the proof is complete. \(\square \)
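The exponent \(s\) used in the proof above, together with its conjugate \(s'\), can be computed directly from \({\hat{p}}\) and \({\hat{q}}\). The Python sketch below is a hedged illustration; the function name `normal_trace_exponents` is hypothetical and the input check only mirrors the requirements \({\hat{p}}>2\), \({\hat{q}}>1\) of Assumption 2.1.

```python
def normal_trace_exponents(p_hat, q_hat):
    """Return (s, s_conj) with s = min(2*p_hat/(2+p_hat), (q_hat+1)/2).

    s_conj = s/(s-1) is the conjugate exponent, so that test functions
    v are taken in W^{1,s_conj}(Omega).
    """
    if p_hat <= 2 or q_hat <= 1:
        raise ValueError("Assumption 2.1 requires p_hat > 2 and q_hat > 1")
    s = min(2.0 * p_hat / (2.0 + p_hat), (q_hat + 1.0) / 2.0)
    s_conj = s / (s - 1.0)
    return s, s_conj
```

For \({\hat{p}}=4\) and \({\hat{q}}=2\) this gives \(s=4/3\) and \(s'=4\), consistent with \(1<s<2\).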

Next, we adapt the proof of [8, Theorem 2.2] to show existence and uniqueness of the solution of the state equation.

Lemma 2.6

Under Assumption 2.1, the linear operator \({\mathcal {A}}:H^1(\Omega ) \longrightarrow H^{1}(\Omega )'\) is an isomorphism.

Proof

Let us first see that \({\mathcal {A}}\) is injective. Consider \(y\in H^1(\Omega )\) such that \({\mathcal {A}}y =0\). We will prove that \(y\le 0\). The contrary inequality follows by arguing on \(-y\). Suppose there exists some \({\mathcal {O}}\subset \Omega \) with positive measure such that \(y (x) > 0\) if \(x\in {\mathcal {O}}\). Take \(0< \rho < \text {ess}\sup _{x\in \Omega } y(x)\le +\infty \) and define \(y_\rho (x) = (y(x)-\rho )^+ = \max \{y(x)-\rho ,0\}\). Denote \(\Omega _\rho = \{x\in \Omega :\ \nabla y_\rho (x)\ne 0\}\). Notice that \(y_\rho \in H^1(\Omega )\),

which means that \(\Omega _\rho \subset \{x:\ y(x)>\rho \}\). We also remark that \(y_\rho (x) = 0\text { if }y(x)\le 0\), and that \(y(x)\ge y_\rho (x) \ge 0\) if \(y(x) \ge 0 \). Using these properties, and Hölder’s and Young’s inequalities, we have that

Next we use that \(\Omega _\rho \subset \Omega \), (2.3), (2.2) and the just proved inequality to obtain:

We can deduce from this a positive lower bound for the norm of b in \(L^{{\hat{p}}}(\Omega _\rho )^2\) independent of \(\rho \):

But we have that \(\vert \Omega _\rho \vert \rightarrow 0\) as \(\rho \rightarrow \text {ess}\sup _{x\in \Omega } y(x)\); see [8, Theorem 2.2]. So we have achieved a contradiction.

Finally, we only have to check that the range of \({\mathcal {A}}\) is dense and closed. Since we already have established Gårding’s inequality (2.8) for the operator \({\mathcal {A}}\), the proof of closedness done in [8, Theorem 2.2] applies to our case changing the norms in \(H^1_0(\Omega )\) and \(H^{-1}(\Omega )\) respectively by the norms in \(H^1(\Omega )\) and its dual space, and thus it is omitted. By a well known duality argument, the denseness of the range of \({\mathcal {A}}\) follows from the injectivity of \({\mathcal {A}}^*\).

The argument used above to obtain the injectivity of \({\mathcal {A}}\) does not work for \({\mathcal {A}}^*\). Notice that at one moment we use that \(\int _\Omega (b\cdot \nabla y) y_\rho \,\textrm{d}x= \int _{\Omega _\rho } (b\cdot \nabla y_\rho ) y_\rho \,\textrm{d}x\). When dealing with the adjoint operator, we would find the term \(\int _\Omega (b\cdot \nabla z_\rho ) z\,\textrm{d}x\), which in general is different from \(\int _{\Omega _\rho } (b\cdot \nabla z_\rho ) z_\rho \,\textrm{d}x\). But we can obtain injectivity of the adjoint operator as follows. Consider \(z\in H^1(\Omega )\) such that \({\mathcal {A}}^*z =0\). For all \(\varepsilon \ge 0\) define

Let us see that \(\vert \Omega ^0 \vert = 0\), which readily implies that \(z=0\). Let us define \(z^\varepsilon (x) = {\text {Proj}}_{[-\varepsilon ,\varepsilon ]}(z(x))\). Using integration by parts, that \(z=0\) in \(\Omega \setminus \Omega ^0\), that \(\nabla z^\varepsilon =0\) in \(\Omega ^\varepsilon \) and \(\nabla z^\varepsilon = \nabla z\) in \(\Omega {\setminus }\Omega ^\varepsilon \), and that \(z z^\varepsilon \ge (z^\varepsilon )^2\), we have

So, using this and that \(\vert z^\varepsilon (x)\vert \le \varepsilon \) for a.e. \(x\not \in \Omega ^\varepsilon \) we get

On the other hand, using that \(\vert z^\varepsilon \vert =\varepsilon \) in \(\Omega ^\varepsilon \) and the previous inequality, we have

Since \(\vert \Omega ^0{\setminus }\Omega ^\varepsilon \vert = \textrm{meas}\{x\in \Omega : 0< \vert z(x)\vert < \varepsilon \}\rightarrow 0\) as \(\varepsilon \rightarrow 0\), we have proved that \(\vert \Omega ^\varepsilon \vert \rightarrow 0\) as \(\varepsilon \rightarrow 0\) and hence \(\vert \Omega ^0\vert =0\). \(\square \)

Corollary 2.7

Under Assumption 2.1, the linear operator \({\mathcal {A}}^*:H^1(\Omega ) \longrightarrow H^{1}(\Omega )'\) is an isomorphism.

3 Regularity of the Solution of the State and Adjoint State Equations

To obtain further regularity, from now on we will suppose

Assumption 3.1

The coefficients \(a_{ik}\) belong to \(C^{0,1}({\bar{\Omega }})\), \(1\le i,k\le 2\).

Let us denote by m the number of sides of \(\Gamma \) and \(\{S_j\}_{j=1}^m\) its vertices, ordered counterclockwise. For convenience denote also \(S_0=S_m\) and \(S_{m+1}=S_1\). We denote by \(\Gamma _j\) the side of \(\Gamma \) connecting \(S_{j}\) and \(S_{j+1}\), and by \(\omega _j\in (0,2\pi )\) the angle interior to \(\Omega \) at \(S_j\), i.e., the angle defined by \(\Gamma _{j}\) and \(\Gamma _{j-1}\), measured counterclockwise. Notice that \(\Gamma _{0}=\Gamma _m\). We use \((r_j,\theta _j)\) as local polar coordinates at \(S_j\), with \(r_j=\vert x-S_j\vert \) and \(\theta _j\) the angle defined by \(\Gamma _j\) and the segment \([S_j,x]\). In order to describe and analyze the regularity of the functions near the corners, we will introduce for every \(j\in \{1,\ldots ,m\}\) the infinite cone

For every \(j\in \{1,\ldots ,m\}\) we call \(A_j\) the operator with constant coefficients, corresponding to the corner \(S_j\), given by

We denote by \(\lambda _j\) the leading singular exponent associated with the operator \(A_j\) at the corner \(S_j\), i.e., the smallest \(\lambda _j>0\) such that there exists a solution of the form \(y_j=r_j^{\lambda _j}\varphi _j(\theta _j)\), with \(\varphi _j\) smooth enough, of

For instance, for \(Ay = -\Delta y\) we have \(\lambda _j = \pi /\omega _j\). We denote \(\lambda = \min \{\lambda _j\}\).
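For the Laplacian, the exponents \(\lambda _j=\pi /\omega _j\) follow directly from the geometry of the polygon. The Python sketch below computes the interior angles from a counterclockwise vertex list, following the convention stated above (the angle from \(\Gamma _j\) to \(\Gamma _{j-1}\), measured counterclockwise). The function names and the L-shaped test domain are assumptions of this example, and the formula \(\pi /\omega _j\) applies only to \(A=-\Delta \).

```python
import math


def interior_angles(vertices):
    """Interior angles omega_j of a polygon with counterclockwise vertices."""
    m = len(vertices)
    angles = []
    for j in range(m):
        px, py = vertices[j - 1]          # previous vertex S_{j-1}
        cx, cy = vertices[j]              # current vertex S_j
        nx, ny = vertices[(j + 1) % m]    # next vertex S_{j+1}
        ax, ay = nx - cx, ny - cy         # direction of Gamma_j
        bx, by = px - cx, py - cy         # direction of Gamma_{j-1}
        # signed angle from Gamma_j to Gamma_{j-1}, mapped into [0, 2*pi)
        omega = math.atan2(ax * by - ay * bx, ax * bx + ay * by) % (2.0 * math.pi)
        angles.append(omega)
    return angles


def laplace_exponents(vertices):
    """Leading singular exponents pi/omega_j, valid for A = -Laplacian only."""
    return [math.pi / omega for omega in interior_angles(vertices)]


# L-shaped domain (-1,1)^2 minus [0,1]x[-1,0], vertices counterclockwise
L_SHAPE = [(0, 0), (1, 0), (1, 1), (-1, 1), (-1, -1), (0, -1)]
```

For the L-shaped domain the re-entrant corner at the origin has \(\omega =3\pi /2\), so \(\lambda =\min \{\lambda _j\}=2/3\), while the five convex corners give \(\lambda _j=2\).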

With the usual technique of taking a partition of unity to localize the problem at the corners, freezing the coefficients and doing an appropriate linear change of variable, the classical results for the Laplace operator are also valid in our case; see, e.g. [18, Section 2.1] for a detailed example of application of this technique. Notice that the symmetry hypothesis \(a_{ik}=a_{ki}\) introduced in Assumption 2.1 implies that the same change of variable that transforms \(A_j\) into \(-\Delta \) will transform the conormal derivative \(\partial _{n_{A_j}}\) into the normal derivative \(\partial _n\) in the new variables, and not into an oblique derivative.

We continue with regularity results for problems with \(b\equiv 0\) and \(a_0\equiv 0\) and use the standard Sobolev and Sobolev–Slobodeckiĭ spaces as well as weighted Sobolev spaces defined as follows. Let \(k\in \mathbb {N}_0\), \(1\le p\le \infty \), and \(\mathbf {\beta }=(\beta _1,\ldots ,\beta _m)^T\in \mathbb {R}^m\), \(j\in \{1,\ldots ,m\}\). For ball-neighborhoods \(\Omega _{R_j}\) of \(S_j\) with radius \(R_j\le 1\) and \(\Omega ^0:=\Omega \setminus \bigcup _{j=1}^m \Omega _{R_j/2}\) we define norms via

where the standard modification for \(p=\infty \) is used. The spaces \(W^{k,p}_{\mathbf {\beta }}(\Omega )\) and \(V^{k,p}_{\mathbf {\beta }}(\Omega )\) denote the set of all functions v such that

respectively, are finite. The corresponding seminorms are defined by setting \(\vert \alpha \vert =k\) instead of \(\vert \alpha \vert \le k\). For the definition of the corresponding trace spaces \(W^{k-1/p,p}_{\mathbf {\beta }}(\Gamma _j)\), \(V^{k-1/p,p}_{\mathbf {\beta }}(\Gamma _j)\), \(W^{k-1/p,p}_{\mathbf {\beta }}(\Gamma )\) and \(V^{k-1/p,p}_{\mathbf {\beta }}(\Gamma )\) we refer to [13, Sect. 6.2.10], see also [19, Section 2.2]. We will also use the notation \(L^p_{\mathbf {\beta }}(\Omega )\) for \(W^{0,p}_{\mathbf {\beta }}(\Omega )\).

Lemma 3.2

Suppose that Assumption 3.1 holds. Consider \(f\in H^1(\Omega )'\) and \(g\in H^{1/2}(\Gamma )'\) such that

and let \(y\in H^{1}(\Omega )\) be the unique solution, up to a constant, of

We have the following regularity results.

(a) If \(f\in H^{2-t}(\Omega )'\), and \(g\in \displaystyle \prod _{j=1}^m H^{t-3/2}(\Gamma _j)\) for some \(1<t<1+\lambda \), \(t\le 2\), then

(b) If \(f\in L^r(\Omega )\) and \(g\in \displaystyle \prod _{j=1}^m W^{1-1/r,r}(\Gamma _j)\) for some \(1<r<\dfrac{2}{2-\lambda }\) if \(\lambda <2\), \(r>1\) arbitrary if \(\lambda \ge 2\), then

(c) Consider \(s\in (1,\infty )\) and \({\mathbf {\beta }}\) such that \(2-\dfrac{2}{s}-\lambda _j<\beta _j<2-\dfrac{2}{s}\), \(\beta _j\ge 0\) for all \(j\in \{1,\ldots ,m\}\). If \(f\in L^s_{\mathbf {\beta }}(\Omega )\) and \(g\in \prod _{j=1}^m V^{1-1/s,s}_{\mathbf {\beta }}(\Gamma _j)\), then

Remark 3.3

Let us briefly comment on the function spaces appearing in the lemma. Notice that for \(t=2\), \(H^{t-2}(\Omega ) = H^{2-t}(\Omega )=L^2(\Omega )\), and for \(3/2<t\), \(H^{2-t}(\Omega ) = H^{2-t}_0(\Omega )\) and hence \(H^{t-2}(\Omega ) = H^{2-t}(\Omega )'\). Nevertheless, for \(1<t<3/2\), \(H^{2-t}(\Omega )' \ne H^{t-2}(\Omega )\). Also take into account that

We remark that the mapping \(y\mapsto \partial _{n_A}y\) is linear and continuous from \(H^2(\Omega )\) onto \(\prod _{j=1}^m H^{1/2}(\Gamma _j)\); see [10, Theorem 1.5.2.8].

Regarding weighted spaces, we notice that \(V^{1-1/s,s}_{\mathbf {\beta }}(\Gamma )=W^{1-1/s,s}_{\mathbf {\beta }}(\Gamma )\) if \(\beta _j>1-\frac{2}{s}\) or \(\beta _j<-\frac{2}{s}\) for all \(j\in \{1,\ldots ,m\}\), while these spaces differ by a constant in the vicinity of each corner \(S_j\) where \(-\frac{2}{s}<\beta _j<1-\frac{2}{s}\), see [12, Theorem 2.1] or [14, page 131].

Proof of Lemma 3.2

The result in (a) can be deduced from [20, Theorem 9.2] for \(1<t<3/2\), from [21, Theorem (23.3)] for \(3/2<t<2\), and from [10, Corollary 4.4.4.14] for \(t=2\). The case \(t=3/2\) follows by interpolation. Statement (b) follows from [10, Corollary 4.4.4.14]. Part (c) follows by standard arguments but we did not find this particular result in the literature. Therefore we sketch the proof here for the case of constant coefficients. As said above, the result in the case of Lipschitz coefficients follows from this one using the localization-and-freezing technique.

We will use [13, Theorem 1.2.5] stating a similar result for a cone K and weighted V-spaces. For the problem under consideration and in our notation it says that \(y\in V^{2,s}_\beta (K)\) if \(f\in L^s_\beta (K)\) and \(g\in V^{1-1/s,s}_\beta (\partial K{\setminus } O)\) provided that \(s\in (1,\infty )\) and \(2-\frac{2}{s}-\beta \not \in \{k\lambda , k\in \mathbb {Z}\}\). To satisfy the latter condition we assume \(2-\frac{2}{s}-\lambda _j<\beta _j < 2-\frac{2}{s}\) for all \(j\in \{1,\ldots ,m\}\).
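The admissible range for the weights in Lemma 3.2(c) can be checked corner by corner. The following Python sketch is a minimal illustration under the stated conditions \(2-\frac{2}{s}-\lambda _j<\beta _j<2-\frac{2}{s}\) and \(\beta _j\ge 0\); the function name `admissible_beta` is hypothetical.

```python
def admissible_beta(beta, s, lam):
    """Check 2 - 2/s - lam < beta < 2 - 2/s together with beta >= 0."""
    if s <= 1:
        raise ValueError("s must lie in (1, infinity)")
    upper = 2.0 - 2.0 / s
    lower = upper - lam
    return beta >= 0.0 and lower < beta < upper
```

For \(s=2\) and \(\lambda _j=2/3\) the admissible weights are \(\beta _j\in (1/3,1)\), whereas for \(\lambda _j=2\) the unweighted choice \(\beta _j=0\) is allowed.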

The reformulation from the vicinity of a vertex of the domain \(\Omega \) is achieved by using cut-off functions \(\zeta _j:\Omega \rightarrow [0,1]\) with \(\zeta _j\equiv 1\) in \(\Omega _{R_j/2}\), \(\zeta _j\equiv 0\) in \(\Omega {\setminus }\Omega _{R_j}\), and \(\partial _{n_{A_j}}\zeta _j=0\) on \(\partial \Omega \cap \partial \Omega _{R_j}\). We split \(y\in H^1(\Omega )\) into

With this construction we get \(y_j(S_j)=0\) and \(\text {supp}\,y_j={\bar{\Omega }}_{R_j}\) such that we can consider the problem \(A y_j=f_j\) with Neumann boundary condition \(\partial _{n_{A_j}}y_j=g_j=\zeta _j g\) in the cone \(K_j\). For \(f_j\), we have

with smooth functions \({\mathfrak {b}}_j\) and \({\mathfrak {a}}_j\) due to the constant coefficients in A. From \(f\in L^s_{\mathbf {\beta }}(\Omega )\) and \(y\in H^1(\Omega )\) we conclude \(f_j\in L^{{\hat{s}}}_{\beta _j}(K_j)\), \({\hat{s}}=\min (s,2)\) where we use that \(\beta _j\ge 0\). Moreover, the assumption \(g\in \prod _{j=1}^mV^{1-1/s,s}_{\mathbf {\beta }}(\Gamma _j)\) leads to \(g_j\in V^{1-1/s,s}_{\beta _j}(\partial K_j{\setminus } O_j)\) such that [13, Theorem 1.2.5] leads to \(y_j\in V^{2,{\hat{s}}}_{\beta _j}(K_j)\hookrightarrow W^{2,{\hat{s}}}_{\beta _j}(K_j)\). Since the function w does not contain corner singularities, we have \(w\in W^{2,s}(\Omega )\), and we obtain \(y\in W^{2,{\hat{s}}}_{\mathbf {\beta }}(\Omega )\). If \(s\le 2\) we are done.

Otherwise, when \(s>2\), we have \(y\in H^2(\Omega _{R_j}{\setminus }\Omega _{R_j/2})\hookrightarrow W^{1,s}(\Omega _{R_j}{\setminus }\Omega _{R_j/2})\), and repeating the argument we obtain \(f_j\in L^s_{\beta _j}(K_j)\) and \(y_j\in V^{2,s}_{\beta _j}(K_j)\hookrightarrow W^{2,s}_{\beta _j}(K_j)\), leading to \(y\in W^{2,s}_{\mathbf {\beta }}(\Omega )\). \(\square \)

Theorem 3.4

Suppose that Assumptions 2.1 and 3.1 hold. Consider \(f\in H^1(\Omega )'\) and \(u\in H^{1/2}(\Gamma )'\) and let \(y\in H^{1}(\Omega )\) be the unique solution of

We have the following regularity results.

(a) If \(a_0\in L^q(\Omega )\), \(f\in H^{2-t}(\Omega )'\) and \(u\in \prod _{j=1}^m H^{t-3/2}(\Gamma _j)\) for some t such that \(1<t<1+\lambda \), \(t\le 2\) and \(q = \dfrac{2}{3-t}\), then \(y\in H^{t}(\Omega )\) and there exists a constant \(C_{{\mathcal {A}},t}>0\) such that

(b) If \(a_0\in L^r(\Omega )\), \(f\in L^r(\Omega )\) and \(u\in \prod _{j=1}^m W^{1-1/r,r}(\Gamma _j)\) for some \(r\in (1,{{\hat{p}}}]\) satisfying \(r<\dfrac{2}{2-\lambda }\) in case of \(\lambda <2\), then \(y\in W^{2,r}(\Omega )\) and there exists a constant \(C_{{\mathcal {A}},r}>0\) such that

(c) If \(a_0\in L^{ p}_{\mathbf {\beta }}(\Omega )\), \(f\in L^{p}_{\mathbf {\beta }}(\Omega )\) and \(u\in \prod _{j=1}^m W^{1-1/{ p}, p}_{\mathbf {\beta }}(\Gamma _j)\) for some \(p\in (1,2]\) and some \(\mathbf {\beta }\) such that \(2-\dfrac{2}{p}-\lambda _j<\beta _j <2-\dfrac{2}{p}\) and \(\beta _j\ge 0\) for all \(j\in \{1,\ldots ,m\}\), then \(y\in W^{2,p}_{\mathbf {\beta }}(\Omega )\) and there exists a constant \(C_{{\mathcal {A}},\mathbf {\beta },p}>0\) such that

Proof

Let us define

From the proof of Lemma 2.3, we know that \(F\in H^1(\Omega )'\). Also, taking \(z=1\) in (3.1), we have that

so the conditions of Lemma 3.2 apply to the problem

We have to investigate the regularity of F.

(a) For \(1<\tau \le t\), define \(S = \{z\in H^{2-\tau }(\Omega ):\ \Vert z\Vert _{H^{2-\tau }(\Omega )} = 1\}\). We have that \(F \in H^{2-\tau }(\Omega )'\) if and only if

Applying Hölder’s inequality, we can deduce the existence of a constant \(C_\Omega >0\), that may depend on the measure of \(\Omega \), such that

where

Let us also notice that \(H^{2-\tau }(\Omega )\hookrightarrow L^s(\Omega )\) if and only if

We will apply a boot-strap argument.

Step 1. We know that \(y\in H^1(\Omega )\), so \(r_p=2\) and for \(r_q\) we can take any real number. Noting that \(q>1\), using (3.3) and taking

we have that \(1/s>0\) for \(r_q\) big enough and both conditions in (3.3) are satisfied. Hence we deduce that \(F\in H^{2-\tau }(\Omega )'\). Since \(u\in \prod _{j = 1}^{m}H^{t-3/2}(\Gamma _j)\), a direct application of Lemma 3.2 yields that \(y\in H^{\min \{t,\tau \}}(\Omega )\). If \(\tau \ge t\), the proof is complete.

Step 2. Otherwise we have that \(\nabla y\in H^{\tau -1}(\Omega )^2\hookrightarrow L^{r_p}(\Omega )^2\) for

and, since \(\tau > 1\), we can take \(r_q = +\infty \). As before, we select

We have two possibilities now.

Step 3. If \(\dfrac{1}{s} = 1-\dfrac{1}{q}\), then, applying (3.4) and taking into account our choice of q, we have that \(y\in H^{{\hat{\tau }}}(\Omega )\) with

and the proof is complete.

Step 4. Otherwise, \(\dfrac{1}{s} = 1-\dfrac{1}{{{\hat{p}}}}-\dfrac{1}{r_p}\) and we will have \(y\in H^{{\hat{\tau }}}(\Omega )\) with

and we have advanced a fixed amount \(1-\dfrac{2}{{{\hat{p}}}}\). If \(\hat{\tau } \ge t\), the proof is complete.

Step 5. Otherwise, we can redefine \(\tau = {\hat{\tau }}\) and go back to step 2.

Every time we repeat the process, either we finish the proof or we increment the size of \(\tau \) by the fixed amount \(1-\frac{2}{{{\hat{p}}}}\), so the proof will end in a finite number of steps.
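The termination argument can be made concrete by counting how many increments of size \(1-\frac{2}{{{\hat{p}}}}\) are needed to reach \(t\). The Python sketch below is a simplified model: it follows only the branch of Step 4 (the branch of Step 3 ends the proof at once), and the starting value `tau0` and the function name `bootstrap_steps` are assumptions of this example rather than quantities fixed in the proof.

```python
def bootstrap_steps(tau0, t, p_hat):
    """Count the increments of size 1 - 2/p_hat needed until tau >= t."""
    if p_hat <= 2:
        raise ValueError("Assumption 2.1 requires p_hat > 2")
    gain = 1.0 - 2.0 / p_hat  # fixed positive gain per iteration
    tau, steps = tau0, 0
    while tau < t:
        tau += gain
        steps += 1
    return steps
```

Since the gain is strictly positive for \({\hat{p}}>2\), the loop stops after at most \(\lceil (t-\tau _0)/(1-2/{\hat{p}})\rceil \) iterations; the count grows as \({\hat{p}}\) approaches 2.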

(b) From the Sobolev embedding theorem, we have that

for \(t =\min \{2, 3-2/r\}\). The conditions imposed on r imply that \(1<t<1+\lambda \), \(t\le 2\), so we can apply Theorem 3.4(a) to obtain \(y\in H^t(\Omega )\) and we readily have that \(y\in L^\infty (\Omega )\) and hence \(a_0 y\in L^r(\Omega )\). Let us investigate the regularity of \(b\cdot \nabla y\). We use again a boot-strap argument.

We have that \(\nabla y\in H^{t-1}(\Omega )\hookrightarrow L^{\frac{2}{2-t}}(\Omega )\). Therefore \(b\cdot \nabla y\in L^s(\Omega )\) where

Applying Lemma 3.2(b), we have that \(y\in W^{2,\min \{s,r\}}(\Omega )\). If \(s\ge r\), the proof is complete. Otherwise, we have that \(\nabla y\in W^{1,s}(\Omega )\hookrightarrow L^{s^*}(\Omega )\), with

Therefore \(b\cdot \nabla y\in L^{{\hat{s}}}(\Omega )\) where

If \(\frac{1}{{\hat{s}}}\le \frac{1}{r}\), then the proof is complete. Otherwise, we can rename \(s:={\hat{s}}\) and repeat the argument subtracting at each step the positive constant \(\dfrac{1}{2}-\dfrac{1}{{{\hat{p}}}}\) until \(\dfrac{1}{{\hat{s}}}\le \dfrac{1}{r}\).

(c) To obtain this result, we want to apply Lemma 3.2(c), but the boundary datum in that result is in the space \(\prod _{j = 1}^m V^{1-1/p,p}_{\mathbf {\beta }}(\Gamma _j)\), while the boundary datum in this result is in \(\prod _{j = 1}^m W^{1-1/p,p}_{\mathbf {\beta }}(\Gamma _j)\). Taking into account Remark 3.3, it is clear that for \(p<2\), the condition \(\beta _j\ge 0\) implies that \(\beta _j > 1-2/p\) and hence \(W^{1-1/p,p}_{\mathbf {\beta }}(\Gamma _j) = V^{1-1/p,p}_{\mathbf {\beta }}(\Gamma _j)\) for all \(j\in \{1,\ldots ,m\}\). If \(p=2\), we define

where the \(\zeta _j\) are the cutoff functions introduced in the proof of Lemma 3.2(c). Taking into account again Remark 3.3 and noting that \(u_s\equiv 0\) in a neighbourhood of the corners \(S_j\) with \(\beta _j=0\), it is readily deduced that \(u_s\in \prod _{j=1}^m V^{1-1/p,p}_{\mathbf {\beta }}(\Gamma _j)\). We also have that the function \(u_r = u-u_s\in \prod _{j=1}^m W^{1-1/p,p}(\Gamma _j)\), because \(u_r\equiv 0\) in a neighbourhood of the corners \(S_j\) such that \(\beta _j > 0\). In the same way we define

and consider \(y_s,y_r\in H^1(\Omega )\) such that

so that \(y =y_r+y_s\). As an application of Theorem 3.4(b), \(y_r\in W^{2,2}(\Omega )\), which is continuously embedded in \(W^{2,2}_{\mathbf {\beta }}(\Omega )\) because \(\beta _j\ge 0\) for all \(j\in \{1,\ldots ,m\}\).

Taking into account the above considerations, in the rest of the proof we assume that \(\beta _j > 1-2/p\). If \(p< 2\) then this holds, as discussed before. If \(p=2\), we denote \(u_s=u\) to treat both cases simultaneously, and hence we can use both that \(u\in \prod _{j=1}^m W^{1-1/p,p}_{\mathbf {\beta }}(\Gamma _j)\), which is needed to have an embedding in a non-weighted Sobolev space, and \(u\in \prod _{j=1}^m V^{1-1/p,p}_{\mathbf {\beta }}(\Gamma _j)\), which is needed to apply Lemma 3.2(c).

From [19, Lemma 2.29(ii)], we deduce that \(L^{p}_{\mathbf {\beta }}(\Omega )\hookrightarrow L^r(\Omega )\) for all \(r<\frac{2}{{\beta _j}+2/p}\le \frac{2}{2/p}= p\) for all \(j\in \{1,\ldots ,m\}\). On the other hand, using the definition of the \(\prod _{j = 1}^mW^{1-1/p,p}_{\mathbf {\beta }}(\Gamma _j)\)-norm and [19, Lemma 2.29(i)], we have the embedding \(\prod _{j = 1}^mW^{1-1/p,p}_{\mathbf {\beta }}(\Gamma _j)\hookrightarrow \prod _{j=1}^m W^{1-1/r,r}(\Gamma _j)\) for the same r as above. We notice at this point that the assumption \(\beta _j<2-\frac{2}{p}\) implies that \(\frac{2}{{\beta _j}+2/p}>1\), and \(2-\frac{2}{p}-\lambda _j <{\beta _j}\) implies \(r < \dfrac{2}{2-\lambda _j}\) for all j, therefore we can choose some \(r>1\) satisfying the assumptions of Theorem 3.4(b) and we have that \(a_0\in L^r(\Omega )\), \(f\in L^r(\Omega )\), and \(u\in \prod _{j=1}^m W^{1-1/r,r}(\Gamma _j)\). By Theorem 3.4(b) we obtain \(y\in W^{2,r}(\Omega )\) for some \(r>1\).

In particular, the result \(y\in W^{2,r}(\Omega )\) implies \(y\in L^\infty (\Omega )\), and hence \(a_0 y \in L^{ p}_{{\mathbf {\beta }}}(\Omega )\). We also have that \(\nabla y\in W^{1,r}(\Omega )^2\hookrightarrow L^{s_y}(\Omega )^2\) for \(s_y = \dfrac{2r}{2-r}\) if \(r<2\), any \(s_y<+\infty \) if \(r=2\) and \(s_y=+\infty \) if \(r>2\). From this we deduce that \(b\cdot \nabla y \in L^s(\Omega )\) for

Now we use that \({\mathbf {\beta }}\ge 0\) to deduce that \(b\cdot \nabla y\in L^{s}_{{\mathbf {\beta }}}(\Omega )\) and hence \(F = -b\cdot \nabla y -a_0 y \in L^{\min \{s,p\}}_{{\mathbf {\beta }}}(\Omega )\). By applying Lemma 3.2(c), we have that \(y\in W^{2,{\min \{s,p\}}}_{\mathbf {\beta }}(\Omega )\). If \(s\ge p\), the proof is complete.

Otherwise, in case \(s < p \le 2\), from Sobolev’s embedding theorem, we have that \(\nabla y\in W^{1,s}_{\mathbf {\beta }}(\Omega )\hookrightarrow L^{s_y}_{\mathbf {\beta }}(\Omega )\) for

Since \(\mathbf {\beta }\ge \textbf{0}\), using that \(b\in L^{{\hat{p}}}(\Omega )\), we have that \(b\cdot \nabla y \in L^{{\hat{s}}}_{\mathbf {\beta }}\), where

By applying Lemma 3.2(c), we have that \(y\in W^{2,\min \{p,\hat{s}\}}_{\mathbf {\beta }}(\Omega )\). If \({\hat{s}}\ge 2\), the proof is complete. Otherwise, we redefine \(s:={\hat{s}}\) and repeat the last step. Since at each iteration we subtract the positive constant \(\dfrac{1}{2}-\dfrac{1}{{{\hat{p}}}}\), the proof will end in a finite number of steps. \(\square \)
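The finite termination of this bootstrap can be checked with a few lines of exact arithmetic. The following is a minimal sketch, assuming (as the text indicates) that each step replaces \(1/s\) by \(1/s - (1/2 - 1/{\hat{p}})\) and that the iteration stops once the exponent reaches the target \(p\). The displayed formula for \({\hat{s}}\) is not reproduced here, and the helper name `bootstrap_exponents` and the sample values are purely illustrative.

```python
from fractions import Fraction

def bootstrap_exponents(s, p_hat, p):
    """Return the successive integrability exponents s, s_1, s_2, ...

    Assumption (hypothetical helper, not from the paper): each step maps
    1/s to 1/s - (1/2 - 1/p_hat); the loop stops as soon as the current
    exponent is at least the target p.
    """
    if p_hat <= 2:
        raise ValueError("the gain 1/2 - 1/p_hat must be positive, so p_hat > 2")
    gain = Fraction(1, 2) - 1 / Fraction(p_hat)
    inv_s = 1 / Fraction(s)
    inv_p = 1 / Fraction(p)
    exponents = [1 / inv_s]
    while inv_s > inv_p:
        inv_s -= gain
        if inv_s <= 0:
            # The exponent would be infinite, so the target is certainly reached.
            exponents.append(None)
            break
        exponents.append(1 / inv_s)
    return exponents

steps = bootstrap_exponents(Fraction(6, 5), 4, 2)
print(steps)
```

For the sample values \(s=6/5\), \({\hat{p}}=4\), \(p=2\), the gain per step is \(1/4\) and the target is reached after two steps.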

We conjecture that the result of Theorem 3.4(c) holds for \(p\in (1,{\hat{p}}]\), but the proof is limited to \(p\le 2\).

Notice that the operator \({\mathcal {A}}^*\) is different from \({\mathcal {A}}\), and hence the results in Theorem 3.4 are not immediately applicable. For the adjoint state equation, we will need an additional assumption on \(b\cdot n\), which stems from the boundary term produced by integration by parts.

Theorem 3.5

Suppose Assumptions 2.1 and 3.1 hold. Consider \(f\in H^1(\Omega )'\) and \(g\in H^{1/2}(\Gamma )'\) and let \(\varphi \in H^1(\Omega )\) be the unique solution of

(a) If \(a_0,\, \nabla \cdot b\in L^q(\Omega )\), \(b\cdot n\in L^{q_\Gamma }(\Gamma )\cap H^{t-3/2}(\Gamma )\), \(f\in H^{2-t}(\Omega )'\), and \(g\in \prod _{j=1}^m H^{t-3/2}(\Gamma _j)\) for \(1<t<1+\lambda \), \(t\le 2\), \(q = \dfrac{2}{3-t}\), and \(q_\Gamma = \min \{2,1/(2-t)\}\), then \(\varphi \in H^{t}(\Omega )\), and there exists a constant \(C_{{\mathcal {A}}^*,t}>0\) such that

(b) If \(a_0,\, \nabla \cdot b,\, f\in L^r(\Omega )\), and \(g,b\cdot n\in \prod _{j=1}^m W^{1-1/r,r}(\Gamma _j)\) for some \(r\in (1,{{\hat{p}}}]\) satisfying \(r<\dfrac{2}{2-\lambda }\) in case of \(\lambda <2\), then \(\varphi \in W^{2,r}(\Omega )\), and there exists a constant \(C_{{\mathcal {A}}^*,r}>0\) such that

(c) If \(a_0,\, \nabla \cdot b,\, f\in L^{p}_{{\mathbf {\beta }}}(\Omega )\), and \(b\cdot n,\,g\in \prod _{j = 1}^m W^{1-1/p,p}_{\mathbf {\beta }}(\Gamma _j)\) for some \(p\in (1,2]\) and some \(\mathbf {\beta }\) such that \( 2-\frac{2}{p}-\lambda _j<\beta _j < 2-\frac{2}{p}\), \(\beta _j \ge 0\), for all \(j\in \{1,\ldots ,m\}\), then \(\varphi \in W^{2,p}_{\mathbf {\beta }}(\Omega )\) and there exists a constant \(C_{{\mathcal {A}}^*,\mathbf {\beta },p}>0\) such that

Proof

The expression for \(\langle {\mathcal {A}}^*\varphi ,z\rangle _\Omega \) is derived in Lemma 2.5. Using the product rule, we have that the function \(\varphi \) satisfies

and we can apply Theorem 3.4 to this problem provided \(\varphi \nabla \cdot b\) and \(\varphi b\cdot n\) are in the appropriate spaces.

Notice that statement (a) for \(t=2\) is the same as statement (b) for \(r=2\). We will prove (a) for \(t<2\), and refer to (b) for \(t=2\).

Step 1: First, we prove \(W^{1,\delta }(\Omega )\) regularity for some \(\delta >2\).

Let us write the equation as

This is a Neumann problem posed on a Lipschitz domain. We will apply the regularity results in [22]. To that end, we first investigate the existence of \(r_f>2\) and \(q_\Gamma > 1\) such that \(f\in W^{1,r_f'}(\Omega )'\) and \(b\cdot n\in L^{q_\Gamma }(\Gamma )\). In each of the three cases, we have:

(a) \(f\in H^{2-t}(\Omega )'\hookrightarrow W^{1,r_f'}(\Omega )'\) for \(r_f=\dfrac{2}{2-t}>2\) since \(1<t<2\). The exponent \(q_\Gamma \) is given in the theorem.

(b) \(f\in L^r(\Omega )\hookrightarrow W^{1,r_f'}(\Omega )'\) for \(r_f = \dfrac{2r}{2-r} > 2\) if \(1<r<2\) and all \(r_f<+\infty \) if \(r\ge 2\). In this case we take \(q_\Gamma = r>1\).

(c) \(f\in L^{p}_{\mathbf {\beta }}(\Omega )\subset L^r(\Omega )\) for \(r<\dfrac{2}{\beta _j+\frac{2}{p}}\) for all \(j\in \{1,\ldots ,m\}\). The condition \(\beta _j < 2-\dfrac{2}{p}\) implies that \(\dfrac{2}{\beta _j+\frac{2}{p}} > 1\), so we can choose \(r>1\) and \(L^r(\Omega )\hookrightarrow W^{1,r_f'}(\Omega )'\) for \(r_f = \dfrac{2r}{2-r} > 2\). Therefore \(f\in W^{1,r_f'}(\Omega )'\) for all \(2< r_f <\dfrac{2p}{2-(1-\beta _j) p}\). In this case we take \(q_\Gamma = r>1\).
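The exponent \(r_f = 2r/(2-r)\) used in cases (b) and (c) comes from duality: in two dimensions \(W^{1,r_f'}(\Omega )\hookrightarrow L^{r'}(\Omega )\), hence \(L^r(\Omega )\hookrightarrow W^{1,r_f'}(\Omega )'\). The sketch below checks this arithmetic exactly for a sample value of \(r\); the helper names are illustrative and not part of the paper.

```python
from fractions import Fraction

def conjugate(p):
    """Hoelder conjugate p' with 1/p + 1/p' = 1, for p > 1."""
    p = Fraction(p)
    return p / (p - 1)

def sobolev_conjugate_2d(p):
    """Exponent p* with W^{1,p} embedded in L^{p*} in two dimensions, 1 <= p < 2."""
    p = Fraction(p)
    return 2 * p / (2 - p)

def r_f_from_r(r):
    """r_f = 2r/(2-r), as in cases (b) and (c), for 1 < r < 2."""
    r = Fraction(r)
    return 2 * r / (2 - r)

r = Fraction(3, 2)
r_f = r_f_from_r(r)
# W^{1,r_f'} embeds into L^{r'}, so by duality L^r embeds into (W^{1,r_f'})'.
print(r_f, conjugate(r_f), sobolev_conjugate_2d(conjugate(r_f)), conjugate(r))
```

The same computation with \(r_f' = 2/t\) recovers \(r_f = 2/(2-t)\) in case (a).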

Note that also in each of the three cases we have different assumptions on \(a_0\) and \(\nabla \cdot b\), but in any case there exists \(q_0>1\) such that \(a_0, \nabla \cdot b\in L^{q_0}(\Omega )\).

Let us check that also \(F = \varphi \nabla \cdot b + b\cdot \nabla \varphi - a_0\varphi +\varphi \in W^{1,r_\Omega '}(\Omega )'\) for some \(r_\Omega >2\). To this end define \(r_\varphi \), \(s_\Omega \) and \(r_\Omega \) by

such that

and \(W^{1,r_\Omega '}(\Omega )\hookrightarrow L^{s_\Omega }(\Omega )\). Using Lemma 2.6, we have that \(\varphi \in H^1(\Omega )\hookrightarrow L^{r_\varphi }(\Omega )\). Denote \(S = \{z\in W^{1,r_\Omega '}(\Omega ): \Vert z\Vert _{W^{1,r_\Omega '}(\Omega )} = 1\}\). Then

On the boundary, we want to check that \(b\cdot n \varphi \in W^{-1/r_\Gamma ,r_\Gamma }(\Gamma ) = W^{1/r_\Gamma ,r_\Gamma '}(\Gamma )'\) for some \(r_\Gamma >2\). To this end, define \(\hat{r}_\varphi \), \(s_\Gamma \) and \(r_\Gamma \) by

such that

and \(W^{1/r_\Gamma ,r_\Gamma '}(\Gamma )\hookrightarrow L^{s_\Gamma }(\Gamma )\). From Lemma 2.3 and the trace theorem, we have that \(\varphi \in H^{1/2}(\Gamma )\hookrightarrow L^{\hat{r}_\varphi }(\Gamma )\). Denote \(S = \{z\in W^{1/r_\Gamma ,r_\Gamma '}(\Gamma ):\ \Vert z\Vert _{W^{1/r_\Gamma ,r_\Gamma '}(\Gamma )} =1\}\). Then

Noting that for a general Lipschitz domain the \(W^{1,\delta }(\Omega )\) regularity is limited to \(\delta \le 4\), see [22], from the previous estimates, we can deduce that, for \(\delta = \min \{4,r_f,r_\Omega ,r_\Gamma \}>2\), \(\varphi \in W^{1,\delta }(\Omega )\).

Step 2: Let us check that \(\varphi \nabla \cdot b\) and \(\varphi b\cdot n\) satisfy the regularity assumptions for the source and the Neumann data respectively of the different cases of Theorem 3.4.

(a) On the one hand, \(\varphi \in W^{1,\delta }(\Omega )\hookrightarrow L^\infty (\Omega )\) and the assumption \(\nabla \cdot b\in L^q(\Omega )\) imply \(\varphi \nabla \cdot b\in L^q(\Omega )\hookrightarrow H^{2-t}(\Omega )'\), by the definition of q. On the boundary, by the trace theorem \(\varphi \in W^{1-1/\delta ,\delta }(\Gamma )\). If \(1<t\le 3/2\), then we use that \(W^{1-1/\delta ,\delta }(\Gamma )\hookrightarrow L^\infty (\Gamma )\) to conclude that \(\varphi b\cdot n\in L^{q_\Gamma }(\Gamma )\hookrightarrow H^{t-3/2}(\Gamma )\). The last inclusion follows by duality and the Sobolev embedding \(H^{3/2-t}(\Gamma )\hookrightarrow L^{\frac{1}{t-1}}(\Gamma )\). If \(3/2<t<2\), we use that \(W^{1-1/\delta ,\delta }(\Gamma )\hookrightarrow H^{s_1}(\Gamma )\) for \(s_1=1-1/\delta > 1/2\). Since we are assuming that \(b\cdot n\in H^{s_2}(\Gamma )\) with \(s_2 = t-3/2\in (0,1/2)\), from the trace theorem and the multiplication theorem [23, Theorem 7.4], we have that \(\varphi b\cdot n\in H^{t-3/2}(\Gamma )\).

The result follows from Theorem 3.4(a).

(b) Using again that \(\varphi \in L^\infty (\Omega )\), we readily deduce that \(\varphi \nabla \cdot b\in L^r(\Omega )\). Let us check that \(\varphi b\cdot n\in \prod _{j = 1}^m W^{1-1/r,r}(\Gamma _j)\).

For all \(j\in \{1,\ldots ,m\}\), by the trace theorem and the assumption on \(b\cdot n\) we deduce the existence of \(B_j\in W^{1,r}(\Omega )\) such that the trace of \(B_j\) on \(\Gamma _j\) is \(b\cdot n\).

Suppose first that \(r\le 2\). Then, a straightforward application of the multiplication Lemma 3.6 below (in the case \(\beta _j=0\)) yields \(\varphi B_j\in W^{1,r}(\Omega )\), and hence, its trace on \(\Gamma _j\) belongs to \(W^{1-1/r,r}(\Gamma _j)\). Therefore, \(\varphi b\cdot n\in \prod _{j = 1}^m W^{1-1/r,r}(\Gamma _j)\) and the result follows from Theorem 3.4(b).

If \(r > 2\), from the previous paragraph we have that \(\varphi \in W^{2,2}(\Omega )\hookrightarrow W^{1,\delta }(\Omega )\) for all \(\delta < +\infty \). Repeating the previous argument, we obtain the desired result.

(c) Since \(\varphi \in L^\infty (\Omega )\) and \(\nabla \cdot b\in L^p_{\mathbf {\beta }}(\Omega )\), we have that \(\varphi \nabla \cdot b\in L^p_{\mathbf {\beta }}(\Omega )\). Next, we show that \(\varphi b\cdot n\in \prod _{j = 1}^m W^{1-1/p,p}_{\mathbf {\beta }}(\Gamma _j)\).

For all \(j\in \{1,\ldots ,m\}\), by the trace theorem and the assumption on \(b\cdot n\) we deduce the existence of \(B_j\in W^{1,p}_{\mathbf {\beta }}(\Omega )\) such that the trace of \(B_j\) on \(\Gamma _j\) is \(b\cdot n\).

Since \(p\le 2<\delta \), a straightforward application of the multiplication Lemma 3.6 below yields \(\varphi B_j\in W^{1,p}_{\mathbf {\beta }}(\Omega )\), and hence, its trace on \(\Gamma _j\) belongs to \(W^{1-1/p,p}_{\mathbf {\beta }}(\Gamma _j)\). Therefore, \(\varphi b\cdot n\in \prod _{j = 1}^m W^{1-1/p,p}_{\mathbf {\beta }}(\Gamma _j)\) and the result follows from Theorem 3.4(c). \(\square \)

It remains to prove the multiplication theorem used in the proofs of cases (b) and (c) in Theorem 3.5.

Lemma 3.6

(A multiplication theorem in weighted Sobolev spaces) Let \(1<q < +\infty \). Consider \(\varphi \in W^{1,\delta }(\Omega )\) for some \(\delta >\max \{2, q \}\) and \(\psi \in W^{1,q }_{{\mathbf {\beta }}}(\Omega )\) for some \(\mathbf {\beta }\) such that \(2-\frac{2}{q }-\lambda _j< \beta _j <2-\frac{2}{q }\), \(\beta _j\ge 0\) for all \(j\in \{1,\ldots ,m\}\). Then \(\psi \varphi \in W^{1,q }_{{\mathbf {\beta }}}(\Omega )\).

Proof

Since \(\delta > 2\), \(\varphi \in L^\infty (\Omega )\). Also it is clear that \(\psi \in L^{q}_{\mathbf {\beta }}(\Omega )\), and hence \(\psi \varphi \in L^{q}_{\mathbf {\beta }}(\Omega )\).

Let us check that also \(\vert \nabla (\psi \varphi )\vert \in L^{q}_{\mathbf {\beta }}(\Omega )\). We write \(\nabla (\psi \varphi ) = \varphi \nabla \psi + \psi \nabla \varphi \). Using again that \(\varphi \in L^\infty (\Omega )\) it is immediate to deduce that \(\vert \nabla \psi \vert \in L^{q}_{\mathbf {\beta }}(\Omega )\) implies that \(\vert \varphi \nabla \psi \vert \in L^{q}_{\mathbf {\beta }}(\Omega )\).

Checking that the term \(\psi \vert \nabla \varphi \vert \in L^{q}_{\mathbf {\beta }}(\Omega )\) is more involved. By localizing the problem at corner \(x_j\), and applying Hölder’s inequality we obtain

and therefore it is sufficient to prove that \(r^{\beta _j}\psi \in L^{\frac{q \delta }{\delta -{q}}}(\Omega _{R_j})\). Let us introduce \(1\le q_\delta < q \) and \(2\le q_\delta ^*<+\infty \) such that

so that \(q_\delta ^* \ge \frac{q \delta }{\delta -q}\), and \(W^{1,q_\delta }(\Omega _{R_j})\hookrightarrow L^{q_\delta ^*}(\Omega _{R_j})\hookrightarrow L^{\frac{q \delta }{\delta -{q}}}(\Omega _{R_j})\). We are going to prove that \(r^{\beta _j}\psi \in W^{1,q_{\delta }}(\Omega _{R_j})\).

First of all we notice that \(\nabla (r^{{\beta _j}} \psi ) = r^{{\beta _j}} \nabla \psi + \psi \nabla r^{{\beta _j}}\). By definition of \(W^{1,q}_{\mathbf {\beta }}(\Omega )\), we have that \( r^{\beta _j} \vert \nabla \psi \vert \in L^{q}(\Omega _{R_j})\hookrightarrow L^{q_\delta }(\Omega _{R_j})\).

For the second term we notice that \(\vert \psi \nabla r^{\beta _j}\vert \sim r^{{{\beta _j}}-1} \psi \). Since \(1-2/q_\delta =\max \{-1,\frac{2}{\delta }-\frac{2}{q}\} <0\le \beta _j\), we have that \(W^{1,q_{\delta }}_{\mathbf {\beta }}(\Omega _{R_j})\hookrightarrow L^{q_{\delta }}_{\mathbf {\beta }-1}(\Omega _{R_j})\); see e.g. [19, Lemma 2.29(i)]. We deduce that \(\psi \in W^{1,q}_{\mathbf {\beta }}(\Omega _{R_j}) \hookrightarrow W^{1,q_\delta }_{\mathbf {\beta }}(\Omega _{R_j})\hookrightarrow L^{q_{\delta }}_{\mathbf {\beta }-1}(\Omega _{R_j})\). This means that \(r^{\beta _j-1} \psi \in L^{q_{\delta }}(\Omega _{R_j})\), and we gather that \(\vert \psi \nabla r^{{\beta _j}}\vert \in L^{q_{\delta }}(\Omega _{R_j})\).

Therefore \(\nabla (r^{\beta _j} \psi )\in L^{q_{\delta }}(\Omega _{R_j})\), so we have that \(r^{\beta _j} \psi \in W^{1,q_{\delta }}({\Omega _{R_j}})\).

Using this, we conclude that \(\psi \vert \nabla \varphi \vert \in L^{q}_{{\mathbf {\beta }}}(\Omega )\) and consequently \(\vert \nabla (\psi \varphi )\vert \in L^{q}_{{\mathbf {\beta }}}(\Omega )\), which leads to the desired result. \(\square \)
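The exponent bookkeeping of this proof can be written out explicitly. The following is a sketch under the assumption that \(q_\delta \) and \(q_\delta ^*\) are given by \(1-2/q_\delta =\max \{-1,\frac{2}{\delta }-\frac{2}{q}\}\) (the identity used in the proof) and by the two-dimensional Sobolev relation \(1/q_\delta ^* = 1/q_\delta - 1/2\). The displayed definitions are not reproduced in this text, so these formulas are a reconstruction rather than a quotation.

```latex
% Hoelder's inequality with exponents \delta/q and \delta/(\delta-q):
\[
\bigl\| r^{\beta_j}\,\psi\,|\nabla\varphi| \bigr\|_{L^{q}(\Omega_{R_j})}
\le
\bigl\| r^{\beta_j}\psi \bigr\|_{L^{\frac{q\delta}{\delta-q}}(\Omega_{R_j})}
\bigl\| \nabla\varphi \bigr\|_{L^{\delta}(\Omega_{R_j})},
\qquad
\frac{1}{q} = \frac{\delta-q}{q\delta} + \frac{1}{\delta}.
\]
% Exponent check (assumed definitions of q_\delta and q_\delta^*):
\[
\frac{1}{q_\delta} = \min\Bigl\{1,\ \frac12+\frac1q-\frac1\delta\Bigr\},
\qquad
\frac{1}{q_\delta^*} = \frac{1}{q_\delta}-\frac12
= \min\Bigl\{\frac12,\ \frac1q-\frac1\delta\Bigr\}
\le \frac1q-\frac1\delta = \frac{\delta-q}{q\delta}.
\]
```

Under these assumptions \(1/q_\delta > 1/q\) because \(\delta >2\) and \(q>1\), which is consistent with \(1\le q_\delta <q\), and the last inequality is exactly \(q_\delta ^*\ge \frac{q\delta }{\delta -q}\).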

4 Discretization

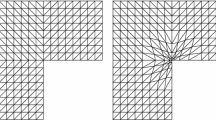

Consider a family of regular triangulations \(\{{\mathcal {T}}_h\}\) graded with mesh grading parameters \(\mu _j\in (0,1]\), \(j\in \{1,\ldots ,m\}\) in the sense of [24, Section 3.1], see also [25]. As usual, \(Y_h\subset H^1(\Omega )\cap C({\bar{\Omega }})\) is the space of continuous piecewise linear functions.

Lemma 4.1

There exists a constant \(c_{\mathbf {\mu }}>0\) such that

where \(I_h \) is the Lagrange interpolation operator, the vector \(\mathbf {\beta }\) satisfies that \(1-\lambda _j< \beta _j < 1\) and \(\beta _j\ge 0\) for all \(j\in \{1,\ldots ,m\}\) and the exponent s satisfies that \(s\le 1\) and \(s <\frac{\lambda _j}{\mu _j}\) for all \(j\in \{1,\ldots ,m\}\).

Proof

The case \(\mu _j=1\) (quasi-uniform mesh) is classical. For \(\mu _j<\lambda _j\) see [4, Lemma 4.1]. The case \(\lambda _j\le \mu _j<1\) can be proved with the same techniques and the additional idea that \(h_T\sim h^s r_T^{1-s\mu _j}\), \(1-s\mu _j=\beta _j > 1-\lambda _j\); see equation (3.14) in [24, Theorem 3.2], where it was used for a Dirichlet problem. \(\square \)
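To visualise how grading ties the element size to the distance from a corner, here is a one-dimensional sketch. It uses the common construction \(x_i=(i/n)^{1/\mu }\), which is an assumption made for illustration and not necessarily the definition of [24, Section 3.1]; the function names are hypothetical.

```python
def graded_nodes(n, mu):
    """Nodes x_i = (i/n)**(1/mu) on [0, 1], graded towards x = 0.

    mu = 1 gives a uniform partition; smaller mu concentrates nodes near 0.
    Illustrative construction only (an assumption, not taken from the paper).
    """
    if not 0.0 < mu <= 1.0:
        raise ValueError("grading parameter must satisfy 0 < mu <= 1")
    return [(i / n) ** (1.0 / mu) for i in range(n + 1)]

def element_sizes(nodes):
    """Lengths of the consecutive elements defined by the nodes."""
    return [b - a for a, b in zip(nodes[:-1], nodes[1:])]

n, mu = 16, 0.5
nodes = graded_nodes(n, mu)
sizes = element_sizes(nodes)
h = 1.0 / n
# The element touching the corner has size h**(1/mu); for the others the
# ratio h_T / (h * r_T**(1 - mu)) stays bounded, with r_T the right endpoint.
ratios = [size / (h * right ** (1.0 - mu)) for size, right in zip(sizes[1:], nodes[2:])]
print(sizes[0], h ** (1.0 / mu), min(ratios), max(ratios))
```

In this example the ratios stay between 1 and 2, which is the one-dimensional analogue of the relation \(h_T\sim h\, r_T^{1-\mu _j}\) away from the corner.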

Define the bilinear form \(a(y,z)= \langle {\mathcal {A}}y,z\rangle _\Omega \). For a datum \(u\in H^{1/2}(\Gamma )'\), the discrete state equation reads

Existence and uniqueness of the solution of this equation is not immediate since \(a(\cdot ,\cdot )\) is not coercive over \(Y_h\).
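The difficulty can be seen on a toy example. The sketch below is a one-dimensional model chosen for illustration only (it is not the two-dimensional setting of the paper): the form \(a(y,z)=\int _0^1 (y'z' + b\,y'z + a_0\,yz)\,\textrm{d}x\) with the assumed constants \(b=10\), \(a_0=1\) satisfies \(a(v,v)<0\) for \(v(x)=1-x\), so it is not coercive, yet the piecewise linear Galerkin system on a uniform mesh with 64 elements is solved without difficulty.

```python
def assemble_tridiagonal(n, b=10.0, a0=1.0):
    """P1 Galerkin matrix of a(y,z) = int_0^1 (y'z' + b y'z + a0 y z) dx.

    Uniform mesh with n elements; returns the (lower, diag, upper) diagonals.
    Model problem for illustration; coefficients are assumptions.
    """
    h = 1.0 / n
    lower = [0.0] * (n + 1)  # lower[i] = A[i][i-1]
    diag = [0.0] * (n + 1)   # diag[i]  = A[i][i]
    upper = [0.0] * (n + 1)  # upper[i] = A[i][i+1]
    for e in range(n):
        # Local entries A[i][j] = a(phi_j, phi_i) on the element [x_e, x_{e+1}].
        diag[e] += 1.0 / h - b / 2.0 + a0 * h / 3.0
        upper[e] += -1.0 / h + b / 2.0 + a0 * h / 6.0
        lower[e + 1] += -1.0 / h - b / 2.0 + a0 * h / 6.0
        diag[e + 1] += 1.0 / h + b / 2.0 + a0 * h / 3.0
    return lower, diag, upper

def matvec(lower, diag, upper, v):
    """Product of the tridiagonal matrix with the vector v."""
    n = len(v)
    out = []
    for i in range(n):
        s = diag[i] * v[i]
        if i > 0:
            s += lower[i] * v[i - 1]
        if i < n - 1:
            s += upper[i] * v[i + 1]
        out.append(s)
    return out

def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm (Gaussian elimination without pivoting)."""
    n = len(rhs)
    c = [0.0] * n
    d = [0.0] * n
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x

n = 64
lower, diag, upper = assemble_tridiagonal(n)
v = [1.0 - i / n for i in range(n + 1)]          # nodal values of v(x) = 1 - x
energy = sum(vi * wi for vi, wi in zip(v, matvec(lower, diag, upper, v)))
rhs = [0.0] * n + [1.0]                          # Neumann datum: 1 at x = 1, 0 at x = 0
y_h = solve_tridiagonal(lower, diag, upper, rhs)
residual = max(abs(r - f) for r, f in zip(matvec(lower, diag, upper, y_h), rhs))
print(energy, residual)
```

Since \(v\) is piecewise linear, the discrete energy equals \(a(v,v)=1-5+\tfrac{1}{3}=-\tfrac{11}{3}\), which confirms the lack of coercivity over the discrete space in this toy case.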

Theorem 4.2

There exists \(h_0>0\) that depends on A, b, \(a_0\), \(\Omega \) and the mesh grading parameter \(\mathbf {\mu }\), such that the system (4.1) has a unique solution for every \(h\le h_0\) and every \(u\in H^{1/2}(\Gamma )'\). Further, there exists a constant \(K_0\) that depends on A, b, \(a_0\), \(\Omega \) and is independent of \(\mathbf {\mu }\) and h such that

The scheme of the proof is similar to that of [9, Lemma 3.1] for distributed control problems with homogeneous Dirichlet boundary conditions, but that proof is done for quasi-uniform meshes and uses this fact explicitly; see equations (3.8) and (3.9) in [9]. Since the mesh grading and the boundary terms imply some extra technicalities, we include a complete proof for the convenience of the reader.

Proof

Due to the linearity of the system, to show existence it is sufficient to prove uniqueness of solution in the case \(u=0\). Suppose \(y_h\in Y_h\) satisfies

Taking \(z_h=y_h\) and using Gårding’s inequality established in Lemma 2.3, we have that

Therefore

Since \(y_h\in L^2(\Omega )\subset L^2_{\mathbf {\beta }}(\Omega )\) for all \(\mathbf {\beta }\ge \textbf{0}\) such that \(1-\lambda _j < \beta _j \) for all \(j\in \{1,\ldots ,m\}\), from Theorem 3.5(c), we have that there exists a unique \(\psi \in W^{2,2}_{\mathbf {\beta }}(\Omega )\) such that

and there exists a constant \(C_{{\mathcal {A}}^*,\mathbf {\beta }}\) such that

Let us denote by \({\hat{\psi }}_h\in Y_h\) the Ritz–Galerkin projection of \(\psi \) onto \(Y_h\) in the sense of \(H^1(\Omega )\), i.e., \({\hat{\psi }}_h\) is the unique solution of

From [5, Eq. (4.2)], Theorem 3.5(c), and the embedding \(L^2(\Omega )\hookrightarrow L^2_{\mathbf {\beta }}(\Omega )\), with embedding constant 1 due to the choice \(R_j\le 1\), we have that there exists a constant \({\hat{c}}_{\mathbf {\mu }}\) such that

where \(s\le 1\) and \(s <\frac{\lambda _j}{\mu _j}\) for all \(j\in \{1,\ldots ,m\}\); see Lemma 4.1. Taking \(z=y_h\) in the adjoint Eq. (4.5), and \(z_h={\hat{\psi }}_h\) in the homogeneous discrete Eq. (4.3), we deduce

Along the proof we will denote \(\Vert {\mathcal {A}}\Vert = \Vert {\mathcal {A}}\Vert _{{\mathcal {L}}(H^1(\Omega ),H^1(\Omega )')}\). Choosing \(h_0\) such that

we have that, for all \(h \le h_0\)

Using this and estimate (4.4), we deduce that

and hence \(y_h=0\).

Take now \(u\in H^{1/2}(\Gamma )'\) and denote \(y={\mathcal {A}}^{-1}u\). For \(h\le h_0\), let \(y_h\) be the solution of (4.1). Taking \(z=y_h\) in the adjoint Eq. (4.5), and \(z_h={\hat{\psi }}_h\) in the discrete Eq. (4.1), we deduce

where we have used that \(\Vert {\hat{\psi }}_h\Vert _{H^1(\Omega )}\le \Vert \psi \Vert _{H^1(\Omega )}\le {\hat{c}}_{\mathbf {\beta }}\Vert \psi \Vert _{W^{2,2}_{\mathbf {\beta }}(\Omega )}\le c_{\mathbf {\beta }} C_{{\mathcal {A}}^*,\mathbf {\beta }}\Vert y_h\Vert _{L^2(\Omega )}\); see [19, Lemma 2.29(i)] for the embedding \(W^{2,2}_{\mathbf {\beta }}(\Omega )\hookrightarrow H^1(\Omega )\). Now, using that \(h\le h_0\) and (4.7) we have

and applying Young’s inequality we deduce

Using Gårding’s inequality, the discrete Eq. (4.1) and \(y={\mathcal {A}}^{-1}u\), we infer

Multiplying (4.8) by \(C_{\Lambda ,E,b}\) and using the resulting inequality in (4.9), we obtain

where in the second step we have used Young’s inequality. Gathering the terms with \(\Vert y_h\Vert _{H^1(\Omega )}^2\) and taking the square root, we finally obtain:

Notice that the constant depends on \(\mathbf {\beta }\), which is itself limited by the value of \(\mathbf {\lambda }\), and hence the constant will finally depend on \(\mathbf {\lambda }\). \(\square \)

Theorem 4.3

There exists \(h_0^*>0\) that depends on A, b, \(a_0\), \(\Omega \) and the mesh grading parameter \(\mathbf {\mu }\), such that the discrete adjoint problem

has a unique solution for every \(y\in H^1(\Omega )'\) and every \(0< h \le h_0^*\). Further, there exists a constant \(K_0^*\) that depends on A, b, \(a_0\), \(\Omega \) and is independent of \(\mathbf {\mu }\) and h such that

Proof

Existence and uniqueness of solution of the discrete adjoint Eq. (4.10) follows for all \(0<h<h_0\) from Theorem 4.2 and the finite-dimensional character of the problem, since the matrix of the discrete adjoint system is the transpose of the matrix of (4.1). To get the estimate (4.11), we follow the steps of the proof of Theorem 4.2. Notice that in this case, the value of \(h_0^*\), which is used explicitly in the proof, may be different from the value of \(h_0\) provided in (4.7). \(\square \)

The following estimate is an immediate consequence of the previous results, Lemma 2.6, Corollary 2.7 and the trace theorem.

Corollary 4.4

Let \({\bar{h}}=\min \{h_0,h_0^*\}\) with \(h_0\) from Theorem 4.2 and \(h_0^*\) from Theorem 4.3. For \(u\in L^2(\Gamma )\) let \(y_h\in Y_h\) be the unique solution of (4.1). There exists a constant \(c_2>0\) that depends on the data of the problem, but not on the mesh grading parameters \(\mathbf {\mu }\) or on h, such that, for all \(h<{\bar{h}}\)

Proof

Let us denote by \(C_{\textrm{TR}}\) the norm of the trace operator from \(H^1(\Omega )\) to \(L^2(\Gamma )\). We use Theorem 4.2, Lemma 2.6, and the fact that u can be seen as an element of \(H^1(\Omega )'\) and \(\Vert u\Vert _{H^1(\Omega )'} \le C_{\textrm{TR}} \Vert u\Vert _{L^2(\Gamma )}\), cf. (2.4) and (2.5). A straightforward estimation shows that

where \(\Vert {\mathcal {A}}^{-1}\Vert \) denotes the norm in \({\mathcal {L}}(H^1(\Omega )',H^1(\Omega ))\). The result follows for \(c_2 = C_{\textrm{TR}}^2 K_0 \Vert {\mathcal {A}}^{-1}\Vert \). \(\square \)

Theorem 4.5

(Error estimates in the domain). For \(0< h<{\bar{h}}\), where \({\bar{h}}\) is defined in Corollary 4.4, and \(u\in H^{1/2}(\Gamma )'\), let \(y_h\in Y_h\) be the solution of (4.1) and \(y\in H^1(\Omega )\) be the solution of (3.1) for \(f=0\). There exists \(C>0\) that depends on A, b, \(a_0\), \(\Omega \) but is independent of h such that

If further \(u\in \prod _{j=1}^m W^{1/2,2}_{\mathbf {\beta }}(\Gamma _j)\), where \(1-\lambda _j< \beta _j < 1\) and \(\beta _j\ge 0\) for all \(j\in \{1,\ldots ,m\}\), there exists \(C>0\) that depends on A, b, \(a_0\), \(\Omega \), \(\mathbf {\beta }\), and the mesh grading parameter \(\mathbf {\mu }\), but is independent of h and u such that

for all \(s\le 1\) and \(s <\frac{\lambda _j}{\mu _j}\) for all \(j\in \{1,\ldots ,m\}\).

Furthermore, for all \(f\in L^{2}_{\mathbf {\beta }}(\Omega )\) and \(g\in \prod _{j=1}^m W^{1/2,2}_{\mathbf {\beta }}(\Gamma _j)\), let \(\varphi \in W^{2,2}_{\mathbf {\beta }}(\Omega )\) be the solution of (3.6) and \(\varphi _h\) be the unique solution of

Then

Proof

We will prove (4.13) and (4.14). The proof of (4.15) follows the same lines.

We first prove that

Consider \(\psi \in W^{2,2}_{\mathbf {\beta }}(\Omega )\) the solution of the adjoint problem

and let \({\hat{\psi }}_h\in Y_h\) be its Ritz–Galerkin projection onto \(Y_h\) in the sense of \(H^1(\Omega )\), as in the proof of Theorem 4.2. We have, with (4.6), that

and (4.16) follows. Estimate (4.13) follows from this, Theorem 4.2 and Lemma 2.6.

Using Gårding’s inequality established in Lemma 2.3, estimate (4.16), and the definition of \(h_0>0\) in (4.7), we have that for all \(h < h_0\)

and hence

Using Theorem 3.4(c) and Lemma 4.1

Using that \(a(y,I_h y_h) = a(y_h,I_h y_h)\), (4.17) and the above inequality, we have that

and the result follows. \(\square \)

Corollary 4.6

There exists \(C>0\) that depends on A, b, \(a_0\), \(\Omega \), and the mesh grading parameter \(\mathbf {\mu }\), but is independent of h such that for \(0< h<{\bar{h}}\)

for all \(s\le 1\) and \(s <\frac{\lambda _j}{\mu _j}\) for all \(j\in \{1,\ldots ,m\}\).

Further, for all \(f\in L^{2}_{\mathbf {\beta }}(\Omega )\) and \(g\in \prod _{j=1}^m W^{1/2,2}_{\mathbf {\beta }}(\Gamma _j)\) and all \(\theta \in (0,1)\), we have

where C is independent of \(\theta \).

Proof

If \(u\in H^{1/2}(\Gamma )\), then, by (4.14) and the embedding \(H^{1/2}(\Gamma )\hookrightarrow W^{1/2,2}_{\mathbf {\beta }}(\Gamma )\hookrightarrow \prod _{j=1}^m W^{1/2,2}_{\mathbf {\beta }}(\Gamma _j)\) for some \(\mathbf {\beta }\) with \(\beta _j\ge 0\), \(1-\lambda _j< \beta _j < 1\), we obtain

The first result follows by complex interpolation between this estimate and (4.13).

The second one follows by interpolation between the estimates for \(\theta = 0\) and \(\theta =1\) that follow from (4.15). \(\square \)

5 Analysis of the Control Problem

Now, we turn to the analysis of the control problem

where \(y_u\in H^1(\Omega )\) solves (2.7). For every \(u\in H^{1/2}(\Gamma )'\), we define \(\varphi _u\in H^1(\Omega )\) as the unique solution of

We have that

Theorem 5.1

For any \(y_d\in L^2(\Omega )\) and \(g_\varphi \in L^2(\Gamma )\), problem (P) has a unique solution \({\bar{u}}\in U_\textrm{ad}\) and there exist \({\bar{y}},{\bar{\varphi }}\in H^1(\Omega )\) such that

and \({\bar{u}}\in H^{1/2}(\Gamma )\).

If, further, \(g_\varphi \in \prod _{j=1}^m W^{1/2,2}_{\mathbf {\beta }}(\Gamma _j)\) for some \(\mathbf {\beta }\) such that \(1-\lambda _j<\beta _j < 1\) and \(\beta _j\ge 0\) for all \(j\in \{1,\ldots ,m\}\), then \({\bar{y}}, {\bar{\varphi }}\in W^{2,2}_{\mathbf {\beta }}(\Omega )\cap C({\bar{\Omega }})\), \({\bar{\varphi }}\in W^{3/2,2}_{\mathbf {\beta }}(\Gamma )\cap C(\Gamma )\), \({\bar{u}}\in C(\Gamma )\).

If, moreover, the weights also satisfy \(\beta _j < 1/2\), for all \(j\in \{1,\ldots ,m\}\) then \({\bar{\varphi }}, {\bar{u}}\in H^1(\Gamma )\).

Proof

The existence of the solution follows from the appropriate continuity properties of the involved operators that are deduced from Lemma 2.6. Uniqueness is deduced from the strict convexity of the functional. The first order optimality conditions are deduced, hence, in a standard way from the Euler-Lagrange equation \(J'({\bar{u}})(u-{\bar{u}})\ge 0\) for all \(u\in U_\textrm{ad}\) and Corollary 2.7. The \(H^1(\Omega )\) regularity of \({\bar{y}}\) follows from Lemma 2.3 and the regularity of the adjoint state from Lemma 2.6. By the trace theorem, we have that \({\bar{\varphi }}\in H^{1/2}(\Gamma )\). This and the projection formula

which follows in a standard way from the third optimality condition, imply the regularity of \({\bar{u}}\).

Suppose now that \(g_\varphi \) belongs to \(L^2(\Gamma )\cap \prod _{j=1}^m W^{1/2,2}_{\mathbf {\beta }}(\Gamma _j)\) for some \(\mathbf {\beta }\) such that \( 1-\lambda _j<\beta _j < 1\) and \(\beta _j\ge 0\) for all \(j\in \{1,\ldots ,m\}\). The \(W^{2,2}_{\mathbf {\beta }}(\Omega )\) regularity of the state and adjoint state follow from a bootstrapping argument: since \({\bar{y}}\in H^1(\Omega )\) and \(\beta _j\ge 0\) for all j, we have that \({\bar{y}}-y_d\in L^2(\Omega )\hookrightarrow L^2_{\mathbf {\beta }}(\Omega )\). From Theorem 3.5(c) we deduce that \({\bar{\varphi }}\in W^{2,2}_{\mathbf {\beta }}(\Omega )\). This readily implies that \({\bar{\varphi }}\in W^{3/2,2}_{\mathbf {\beta }}(\Gamma )\). Using that \(L^2_{\mathbf {\beta }}(\Omega )\subset L^r(\Omega )\) for all \(1<r < 2/(1+\beta _j)\), we deduce from Theorem 3.5(b) that \({\bar{\varphi }}\in W^{2,r}(\Omega )\hookrightarrow C({\bar{\Omega }})\), so \({\bar{\varphi }}\in C(\Gamma )\). Again the projection formula leads to \({\bar{u}}\in C(\Gamma )\).

If \(\beta _j < 1/2\), then \(2/(1+\beta _j) > 4/3\), so there exists \(r>4/3\) such that \({\bar{\varphi }}\in W^{2,r}(\Omega )\hookrightarrow H^{3-2/r}(\Omega )\). Since \(3-2/r>3/2\), by the trace theorem we have that \({\bar{\varphi }}\in C(\Gamma )\cap \prod _{j=1}^m H^1(\Gamma _j) = H^1(\Gamma )\). This last equality follows because \(\Gamma \) is one-dimensional and polygonal. This regularity is preserved by the projection formula, and therefore \({\bar{u}}\in H^1(\Gamma )\). \(\square \)
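The role of the projection in this proof can be sketched pointwise. The block assumes that the projection formula has the usual form \({\bar{u}}=\textrm{Proj}_{[u_a,u_b]}(-{\bar{\varphi }}/\nu )\) on \(\Gamma \); the displayed formula is not reproduced in this text, so the sign and scaling are an assumption, and the function name and sample values are illustrative.

```python
def project_control(phi_values, nu, u_a, u_b):
    """Pointwise projection of -phi/nu onto the interval [u_a, u_b].

    Assumed form of the projection formula (not quoted from the paper).
    Infinite bounds can be passed as float('-inf') and float('inf').
    """
    if nu <= 0:
        raise ValueError("nu must be positive")
    return [min(u_b, max(u_a, -phi / nu)) for phi in phi_values]

phi_bar = [-2.0, -0.5, 0.0, 0.25, 3.0]   # sample adjoint values at boundary nodes
u_bar = project_control(phi_bar, nu=0.5, u_a=-1.0, u_b=1.0)
print(u_bar)
```

Because the clipping map is 1-Lipschitz, differences of the control values never exceed the corresponding differences of \(-{\bar{\varphi }}/\nu \); this is the mechanism by which continuity and \(H^1(\Gamma )\) regularity pass from \({\bar{\varphi }}\) to \({\bar{u}}\).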

Notice that for any polygonal domain \(\lambda _j > 1/2\) for all \(j\in \{1,\ldots ,m\}\), so the condition \(\beta _j < 1/2\) may be a constraint on the regularity of the datum \(g_\varphi \), but it is not a constraint on the domain. Although some of the intermediate results below can be proved for \(g_\varphi \in L^2(\Gamma )\), since the main result requires \(H^1(\Gamma )\) regularity of the optimal control, in the rest of the work we make the following assumption.

Assumption 5.2

We assume that \(g_\varphi \in \prod _{j=1}^m W^{1/2,2}_{\mathbf {\beta }}(\Gamma _j)\) for some \(\mathbf {\beta }\) such that \(1-\lambda _j<\beta _j<1/2\), \(\beta _j\ge 0\) for all \(j\in \{1,\ldots ,m\}\). We denote

For every \(u\in L^2(\Gamma )\), we will denote \(y_h(u)\) the solution of the discrete state Eq. (4.1) and \(\varphi _h(u)\) the solution of

Our discrete functional reads

To discretize the control, we notice that every triangulation \({\mathcal {T}}_h\) of \(\Omega \) defines a segmentation \({\mathcal {E}}_h\) of \(\Gamma \) and define \(U_{h,\textrm{ad}}= U_h\cap U_\textrm{ad}\), where

Here and elsewhere \({\mathcal {P}}^i(K)\) is the set of polynomials of degree i in the set K. For every \(u\in L^1(\Gamma )\), we define \(Q_h u\in U_h\) by

where \(E\in {\mathcal {E}}_h\) and \(h_E\) is the length of E. Notice that \(u\in U_\textrm{ad}\) implies \(Q_h u\in U_{h,\textrm{ad}}\).
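A small numerical sketch may help to fix ideas. It assumes that \(Q_h u\) is the element-wise mean value \(\frac{1}{h_E}\int _E u\) on each segment E (the displayed definition is not reproduced in this text, but this choice is consistent with the orthogonality property used in the proof of Lemma 5.3). The boundary is replaced by the interval [0, 1] and all names are illustrative.

```python
import math

def simpson(f, a, b):
    """Simpson's rule on [a, b]; exact for polynomials of degree <= 3."""
    return (b - a) / 6.0 * (f(a) + 4.0 * f(0.5 * (a + b)) + f(b))

def element_means(u, nodes):
    """Approximate (1/h_E) * int_E u on each segment E = [nodes[k], nodes[k+1]]."""
    return [simpson(u, a, b) / (b - a) for a, b in zip(nodes[:-1], nodes[1:])]

def l2_error(u, nodes, means):
    """L2 norm of u - Q_h u, again by Simpson's rule on each segment."""
    total = 0.0
    for a, b, c in zip(nodes[:-1], nodes[1:], means):
        total += simpson(lambda x: (u(x) - c) ** 2, a, b)
    return math.sqrt(total)

u = lambda x: x                      # sample control with values in [0, 1]
nodes = [k / 4 for k in range(5)]    # uniform segmentation with h = 1/4
q_h_u = element_means(u, nodes)
err = l2_error(u, nodes, q_h_u)
print(q_h_u, err)
```

For this sample the means stay within the bounds of \(u\), illustrating why admissibility is preserved, and the error equals \(h/\sqrt{12}\), in line with a first-order estimate in h.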

Lemma 5.3

For every \(u\in H^1(\Gamma )\) there exists a constant \(C>0\) independent of h such that

If Assumption 5.2 holds, then we also have that

Proof

It is well known that for every \(E\in {\mathcal {E}}_h\) we have \(\Vert u-Q_h u\Vert _{L^2(E)} \le C h_E\Vert u\Vert _{H^1(E)}\). Using that \(h_E\le c h\), we have

The estimate for the norm in \(H^1(\Gamma )'\) follows now by duality since \(\int _\Gamma (u-Q_hu)w_h\,\textrm{d}x=0\) for all \(w_h\in U_h\). This estimate implies the third one taking into account that, using the same arguments as in the proof of Theorem 5.1, \(\varphi _u\in H^1(\Gamma )\), and

Therefore, we obtain

and the result follows using Young’s inequality. \(\square \)

Our discrete problem reads

Existence and uniqueness of solution of problem \((\textrm{P}_h) \), as well as first order optimality conditions follow in a standard way. We state the result in the next theorem for further reference.

Theorem 5.4

For every \(0<h<{\bar{h}}\), problem \((\textrm{P}_h) \) has a unique solution \(\bar{u}_h\in U_{h,\textrm{ad}}\). Further, if we denote \({\bar{y}}_h = y_h({\bar{u}}_h)\) and \({\bar{\varphi }}_h = \varphi _h({\bar{u}}_h)\), then

Before stating and proving the main theorem of this section, we prove two auxiliary results.

Lemma 5.5

There exists \(C>0\) independent of h, \(y_d\) and \(g_\varphi \) such that for all \(0<h<{\bar{h}}\),

If, moreover, Assumption 5.2 holds, then

Proof

Consider a fixed \(u_{\textrm{ad}}\in U_\textrm{ad}\) such that \(u_{\textrm{ad}}\in U_{h,\textrm{ad}}\) for all \(h>0\). Using that \(\Vert \bar{y}_h-y_d\Vert ^2_{L^2(\Omega )}\ge 0\) and the optimality of \({\bar{u}}_h\) together with Young’s inequality and estimate (4.12), we have for all \(\varepsilon > 0\) that

where \(c_2\) is introduced in (4.12). Taking \(\varepsilon = \nu /(4c_2^2)\), we readily deduce that \(\{{\bar{u}}_h\}\) is uniformly bounded in \(L^2(\Gamma )\). The estimate for \(\Vert \bar{y}_h\Vert _{H^1(\Omega )}\) follows from this one and estimate (4.2).

Estimates for \(\Vert {\bar{u}}\Vert _{L^2(\Gamma )}\) and \(\Vert \bar{y}\Vert _{H^1(\Omega )}\) follow in a similar way. From this last one and Lemma 2.6 an estimate for \(\Vert {\bar{\varphi }}\Vert _{H^1(\Omega )}\) in terms of the data is obtained. The trace theorem and the projection formula (5.1) lead to the estimate for \(\Vert {\bar{u}}\Vert _{H^{1/2}(\Gamma )}\).

If Assumption 5.2 holds, then, using the estimate for \(\Vert {\bar{y}}\Vert _{L^2(\Omega )}\) and noting that the condition \(\beta _j<1/2\) implies \(\prod _{j=1}^m W^{1/2,2}_{\mathbf {\beta }}(\Gamma _j)\hookrightarrow L^2(\Gamma )\) and hence

we obtain an estimate of \(\Vert {\bar{\varphi }}\Vert _{W^{2,2}_{\mathbf {\beta }}(\Omega )}\) in terms of \(M_d\). The trace theorem and the projection formula (5.1) lead to the estimate for \(\Vert {\bar{u}}\Vert _{H^1(\Gamma )}\). \(\square \)

In the rest of the work s represents any positive number satisfying \(s\le 1\) and \(s<\lambda _j/\mu _j\).
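The admissible range of s is easy to evaluate for a given geometry and grading. The sketch below assumes \(\lambda _j=\pi /\omega _j\), where \(\omega _j\) is the interior angle at the corner; this is the leading singular exponent for the Laplacian with Neumann conditions, and for a general operator A the exponents may differ. The function name and the L-shaped example are illustrative.

```python
import math

def order_bound(angles, gradings):
    """Return (s_sup, attained) for the constraints s <= 1 and s < lambda_j/mu_j.

    Assumption: lambda_j = pi/omega_j (Laplacian-type principal part).
    'attained' is True when s = s_sup itself is admissible, i.e. s_sup = 1.
    """
    ratios = [(math.pi / omega) / mu for omega, mu in zip(angles, gradings)]
    smallest = min(ratios)
    if smallest > 1.0:
        return 1.0, True
    return smallest, False

# L-shaped domain: one re-entrant corner of angle 3*pi/2 and five right angles.
angles = [1.5 * math.pi] + [0.5 * math.pi] * 5
uniform = order_bound(angles, [1.0] * 6)
graded = order_bound(angles, [0.6] + [1.0] * 5)
print(uniform, graded)
```

On a quasi-uniform mesh the order is limited by \(s<2/3\) in this example, while grading with \(\mu =0.6\) at the re-entrant corner already allows \(s=1\).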

Lemma 5.6

Suppose Assumption 5.2 holds. Then, there exists \(C>0\) independent of h, \(y_d\), \(g_\varphi \) and \(\{{\bar{u}}_h\}\) such that

Moreover, for all \(\theta \in (1/2,1]\) we have the following estimate:

Proof

By the triangle inequality

where \(\varphi ^h\) is the unique element in \(H^1(\Omega )\) such that \(a(z,\varphi ^h) = \int _\Omega ({\bar{y}}_h-y_d)z\,\textrm{d}x+ \int _\Gamma g_\varphi z\,\textrm{d}x\) for all \(z\in H^1(\Omega )\), i.e., \({\bar{\varphi }}_h\) is the finite element approximation of \(\varphi ^h\).

Let us estimate the first term in the right hand side of (5.5). Noting that

we deduce from Theorem 3.5 the existence of \(C>0\) independent of h such that

Applying the finite element error estimate for the state (4.19) of Corollary 4.6 and Lemma 5.5, we have

This, together with (5.6) leads to

To estimate the second summand in the right hand side of (5.5) we apply the finite element error estimate (4.15), the uniform boundedness result in Lemma 5.5 and the embedding \(\prod _{j = 1}^m W^{1/2,2}_{\mathbf {\beta }}(\Gamma _j) \hookrightarrow L^2(\Gamma )\):

Hence, estimate (5.3) follows from (5.5) together with this last estimate and (5.7).

Let us prove (5.4). First we notice that for \(1/2<\theta \le 1\), the trace operator is continuous from \(H^\theta (\Omega )\) to \(L^2(\Gamma )\), so

To estimate the term \(\Vert \varphi _{{\bar{u}}_h}-{\bar{\varphi }}_h \Vert _{H^\theta (\Omega )}\), we first introduce \(\phi _h\in Y_h\), the finite element approximation of \(\varphi _{{\bar{u}}_h}\), that satisfies \(a(z_h,\phi _h) = \int _\Omega ( y_{{\bar{u}}_h}-y_d)z_h\,\textrm{d}x+ \int _\Gamma g_\varphi z_h\,\textrm{d}x\) for all \(z_h\in Y_h\). The difference \(\phi _h-{\bar{\varphi }}_h\) satisfies \(a(z_h,\phi _h-{\bar{\varphi }}_h) = \int _\Omega (y_{{\bar{u}}_h}-{\bar{y}}_h) z_h\,\textrm{d}x\) for all \(z_h\in Y_h\). From the continuity estimate for the discrete adjoint equation of Theorem 4.3 we deduce that

Using the triangle inequality, the fact that \(\theta \le 1\), the finite element error estimate for the adjoint state equation (4.19) of Corollary 4.6, (5.8), and the finite element error estimate for the state equation (4.20), together with the uniform boundedness of \(\Vert \bar{u}_h\Vert _{L^2(\Gamma )}\) provided in Lemma 5.5, we obtain

where the last inequality is a result of Lemma 5.5 and the condition \(\theta > 1/2\).\(\square \)

We are now in a position to prove the main result of this section.

Theorem 5.7

Suppose Assumption 5.2 holds. Then, there exists a constant independent of h, \(y_d\) and \(g_\varphi \) such that, for all \(0< h<{\bar{h}}\)

for all \(s^*\le 1\) such that \(s^* <\dfrac{3}{2}\dfrac{\lambda _j}{\mu _j}\) for all \(j\in \{1,\ldots ,m\}\).

Proof

Testing the equality \(a(z,{\bar{\varphi }}-\varphi _{{\bar{u}}_h}) = \int _\Omega ({\bar{y}}-y_{{\bar{u}}_h})z\,\textrm{d}x\) for \(z = {\bar{y}}-y_{{\bar{u}}_h}\) and using the state equation, we have that

So we can write

Let us bound the first term. First we insert in appropriate places \(Q_h{\bar{u}}\) and \({\bar{u}}\). Next, we apply the first order optimality conditions for the continuous and discrete problem. Finally we insert \(\varphi _{{\bar{u}}_h}\) to obtain

From Lemmas 5.3 and 5.5, it is clear that \(I_C\le C h^2 M_d^2\).

Let us study \(I_A\). Testing the equality \(a(z,{\bar{\varphi }}-\varphi _{{\bar{u}}_h}) = \int _\Omega ({\bar{y}}-y_{\bar{u}_h})z\,\textrm{d}x\) for \(z = {\bar{y}}-y_{Q_h{\bar{u}}}\) and using the state equation, Cauchy–Schwarz inequality, and Theorem 3.4(a), we obtain

Using this and Lemmas 5.3 and 5.5, we obtain

Next we bound \(I_B\) and II. By the Cauchy–Schwarz inequality, we have that, for every \(v\in L^2(\Gamma )\),

Taking \(v = {\bar{u}}- Q_h{\bar{u}}\) in (5.9) and using (5.4) and Lemmas 5.3 and 5.5, we conclude that

Finally, taking \(v = {\bar{u}}-{\bar{u}}_h\) in (5.9) and using (5.4), we have

Gathering all the estimates we have that

and the proof concludes using Young’s inequality. Notice that the appearance of the terms \(h \Vert {\bar{u}}-{\bar{u}}_h\Vert _{L^2(\Gamma )} M_d \) and \(h^2 M_d^2\) implies that the resulting exponent \(s^*\) is less than or equal to one. On the other hand, since \(\theta > 1/2\), the term \(h^{(2-\theta )s}\Vert {\bar{u}}-{\bar{u}}_h\Vert _{L^2(\Gamma )} M_d\) yields the bound \(s^*\le (2-\theta )s< \dfrac{3}{2}s <\dfrac{3}{2}\dfrac{\lambda _j}{\mu _j}\). Finally, from the term \(h^{(2-\theta )s+1} M_d^2\) we obtain the bound \(s^*\le \min \{(2-\theta )s,1\}\), so no new conditions are imposed on \(s^*\). \(\square \)
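The admissible range for \(s^*\) in Theorem 5.7 can be evaluated mechanically once the corner exponents \(\lambda _j\) and the grading parameters \(\mu _j\) are known. The following is a minimal sketch, not part of the original analysis; the function name and the use of exact rational arithmetic are illustrative choices. It returns the supremum of the admissible exponents and a flag telling whether that supremum is itself admissible.

```python
from fractions import Fraction


def control_rate_supremum(lambdas, mus):
    """Supremum of the exponents s* allowed by Theorem 5.7.

    The theorem requires s* <= 1 and s* < (3/2) * lambda_j / mu_j for every
    corner j. Returns (bound, attained), where `attained` is True when
    s* = bound itself satisfies both conditions.
    """
    corner_bound = min(Fraction(3, 2) * lam / mu for lam, mu in zip(lambdas, mus))
    bound = min(Fraction(1), corner_bound)
    # The strict inequality only bites when the corner bound does not exceed 1.
    attained = corner_bound > 1
    return bound, attained


# Hypothetical input: one reentrant corner with lambda = 2/3, five right angles
# with lambda = 2, and a quasi-uniform mesh (all mu_j = 1).
lambdas = [Fraction(2, 3)] + [Fraction(2)] * 5
quasi_uniform = control_rate_supremum(lambdas, [Fraction(1)] * 6)
```

For this input the result is `(1, False)`: every exponent below 1 is admissible, but 1 itself is excluded by the strict inequality. Grading towards the reentrant corner with, say, \(\mu _1 = 1/2\) gives `(1, True)`.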

6 A Numerical Example

Let \(\Omega \) be the L-shaped domain \(\Omega = \{x\in \mathbb {R}^2: r<\sqrt{2}, \theta < 3\pi /2\}\cap (-1,1)^2\). We consider a functional of the form

where

with data \(\nu \), \(y_d\), \(g_\varphi \), b, \(a_0\), f and \(g_y\) described below. The inclusion of the data f and \(g_y\) makes it possible to write a problem with a known exact solution. Notice that, if we denote by \(y_0\in L^2(\Omega )\) the state related to \(u\equiv 0\) and redefine \(y_d:=y_d-y_0\) and \(y_u:=y_u-y_0\), the problem fits into the framework of problem (P) and Eq. (1.1).

Let \((r,\theta )\) be the polar coordinates in the plane, \(r\ge 0\), \(\theta \in [0,2\pi ]\). The interior angle at the vertex of the domain located at the origin is \(\omega = \omega _1 = 3\pi /2\) and we denote \(\lambda = \lambda _1 =\pi /\omega _1 = 2/3\). For \(j=2,\ldots ,6\), \(\omega _j=\pi /2\) and \(\lambda _j=2\).
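As a quick check of the corner data, the exponents \(\lambda _j = \pi /\omega _j\) can be computed exactly by storing each interior angle as a rational multiple of \(\pi \). This is only an illustrative sketch; the variable names are not taken from the paper.

```python
from fractions import Fraction

# Interior angles of the L-shaped domain divided by pi:
# omega_1 = 3*pi/2 at the origin, the remaining five corners are right angles.
angles_over_pi = [Fraction(3, 2)] + [Fraction(1, 2)] * 5

# Leading singular exponent lambda_j = pi / omega_j at each corner.
lambdas = [1 / w for w in angles_over_pi]

# A corner is reentrant (non-convex) exactly when omega_j > pi, i.e. lambda_j < 1.
reentrant = [w > 1 for w in angles_over_pi]
```

Only the corner at the origin is reentrant, so it is the only one where \(\lambda _j < 1\).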

We introduce \({\bar{y}} = r^\lambda \cos (\lambda \theta ),\) \({\bar{\varphi }} = -{\bar{y}}\) and \({\bar{u}} = -{\bar{\varphi }}/\nu \) on \(\Gamma \) and, for some \(\alpha > -3/2\) and some \(\delta \ge 0\), we consider \(b(x) = \delta r^{\alpha +1}(\cos \theta ,\sin \theta )^T\) and \(a_0(x) = r^\alpha \).

The data for this problem are defined as \(f = b\cdot \nabla {\bar{y}}+ a_0 {\bar{y}}\), \( g_y = \partial _{n} {\bar{y}} - {\bar{u}}\) on \(\Gamma \), \( y_d = {\bar{y}} +\nabla \cdot ({\bar{\varphi }} b)-a_0{\bar{\varphi }}\) and \(g_\varphi = \partial _n{\bar{\varphi }} + (b{\bar{\varphi }})\cdot n\).
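Since \(b\) points in the radial direction, the source term has a simple closed form: \(b\cdot \nabla {\bar{y}} = \delta r^{\alpha +1}\partial _r{\bar{y}} = \delta \lambda r^{\alpha +\lambda }\cos (\lambda \theta )\), and therefore \(f = (\delta \lambda + 1)\, r^{\alpha +\lambda }\cos (\lambda \theta )\). The sketch below compares this expression with a central finite-difference evaluation of \(b\cdot \nabla {\bar{y}}+ a_0 {\bar{y}}\). The function names and the step size are illustrative assumptions, not taken from the authors' code.

```python
import math

LAM = 2.0 / 3.0  # lambda = pi / omega_1 for the L-shaped domain


def polar(x1, x2):
    """Polar coordinates with theta in [0, 2*pi)."""
    return math.hypot(x1, x2), math.atan2(x2, x1) % (2.0 * math.pi)


def y_bar(x1, x2):
    r, theta = polar(x1, x2)
    return r**LAM * math.cos(LAM * theta)


def f_closed_form(x1, x2, alpha, delta):
    r, theta = polar(x1, x2)
    return (delta * LAM + 1.0) * r ** (alpha + LAM) * math.cos(LAM * theta)


def f_finite_difference(x1, x2, alpha, delta, eps=1e-6):
    r, _ = polar(x1, x2)
    # Central differences for the gradient of y_bar (valid away from the boundary).
    dy1 = (y_bar(x1 + eps, x2) - y_bar(x1 - eps, x2)) / (2.0 * eps)
    dy2 = (y_bar(x1, x2 + eps) - y_bar(x1, x2 - eps)) / (2.0 * eps)
    # b = delta * r^(alpha+1) * (cos theta, sin theta) = delta * r^alpha * (x1, x2)
    b1, b2 = delta * r**alpha * x1, delta * r**alpha * x2
    return b1 * dy1 + b2 * dy2 + r**alpha * y_bar(x1, x2)


# Interior sample point, with the values alpha = -1.25 and delta = 6 used in the text.
gap = abs(f_finite_difference(0.5, 0.5, -1.25, 6.0) - f_closed_form(0.5, 0.5, -1.25, 6.0))
```

The two evaluations should agree up to the finite-difference error at any interior point of the domain.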

For all \(\alpha > -2\), \(b\in L^{{\hat{p}}}(\Omega )\) for some \({{\hat{p}}}>2\) (Assumption 2.1). For \(\alpha > -1-\beta \), \(a_0,\nabla \cdot b, f, y_d\in L^2_{\mathbf {\beta }}(\Omega )\) and \(b\cdot n, g_y, g_\varphi \in W^{1/2,2}_{\mathbf {\beta }}(\Gamma )\), so the assumptions of Theorems 3.4(c) and 3.5(c) hold. If we impose \(\beta <1/2\) (assumption in Theorem 5.1), we have that for \(\alpha > -3/2\) all the assumptions of the paper hold. In our experiments, we fix \(\alpha = -1.25\).
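The threshold \(\alpha > -1-\beta \) for \(a_0 = r^\alpha \) can be traced to a one-dimensional radial integral. The sketch below assumes that, near the origin, the weighted norm is \(\Vert r^\beta v\Vert _{L^2}\); this definition is stated elsewhere in the paper, not in this section, so it is an assumption here. Under it, the integrand in polar coordinates is \(r^{2(\alpha +\beta )+1}\), which is integrable near \(r=0\) exactly when \(\alpha +\beta > -1\).

```python
import math


def radial_weighted_l2_integral(alpha, beta):
    """Integral over (0, 1) of (r^beta * r^alpha)^2 * r dr.

    Returns math.inf when the integral diverges at r = 0.
    """
    exponent = 2.0 * (alpha + beta) + 1.0  # power of r in the integrand
    if exponent <= -1.0:
        return math.inf
    return 1.0 / (exponent + 1.0)


# With alpha = -1.25 the condition alpha > -1 - beta reads beta > 0.25.
finite_case = radial_weighted_l2_integral(-1.25, 0.4)
borderline_case = radial_weighted_l2_integral(-1.25, 0.25)
```

For \(\alpha = -1.25\) the integral is finite for \(\beta = 0.4\) and diverges at the borderline value \(\beta = 0.25\).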

The given \({\bar{u}}\) is the solution of the control problem

with related state \({\bar{y}}\) and adjoint state \({\bar{\varphi }}\), which satisfy the optimality system

It is clear that \({\bar{y}},{\bar{\varphi }}\in W^{2,2}_{\mathbf {\beta }}(\Omega )\) and \({\bar{u}}\in H^1(\Gamma )\cap W^{1/2,2}_{\mathbf {\beta }}(\Gamma )\) for \(\mathbf {\beta }=(\beta ,0,0,0,0,0)\) for all \(\beta > 1-\lambda = 1/3\).

For \(\delta = 6\), we have checked numerically that the operator is not coercive,

To discretize the problem we use the finite element approximation described in this work. We use a family of graded meshes obtained by bisection; see, e.g., [25, Figure 1.2]. This meshing method does not lead to superconvergence properties in the gradients. The code was written in Matlab and run on a desktop PC with an Intel(R) Core(TM) i5-7500 CPU at 3.4 GHz and 24 GB of RAM. The meshes were prepared using functions provided by Johannes Pfefferer. The finite element approximations are obtained with our own code, and the linear systems are solved using Matlab’s [L,U,P,Q,D] = lu(S) method. The optimization of the resulting finite-dimensional quadratic program is done using Matlab’s pcg.
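For readers without Matlab, the role of the conjugate gradient solver can be illustrated with a textbook, unpreconditioned version. This is only a sketch under the assumption that no bound constraints are active, so that the reduced quadratic program amounts to a linear system with a symmetric positive definite matrix. It is not the authors' code, and the 2-by-2 matrix below is a hypothetical stand-in for the reduced Hessian.

```python
def conjugate_gradient(apply_A, b, tol=1e-10, max_iter=200):
    """Textbook CG for A x = b with A symmetric positive definite.

    apply_A is a callable returning the product A @ v as a list.
    """
    x = [0.0] * len(b)
    r = list(b)  # residual b - A x for the initial guess x = 0
    p = list(r)  # first search direction
    rs_old = sum(ri * ri for ri in r)
    b_norm = rs_old**0.5 or 1.0  # avoid a zero reference norm when b = 0
    for _ in range(max_iter):
        if rs_old**0.5 <= tol * b_norm:
            break
        Ap = apply_A(p)
        step = rs_old / sum(pi * api for pi, api in zip(p, Ap))
        x = [xi + step * pi for xi, pi in zip(x, p)]
        r = [ri - step * api for ri, api in zip(r, Ap)]
        rs_new = sum(ri * ri for ri in r)
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x


# Hypothetical SPD matrix [[4, 1], [1, 3]] and right hand side [1, 2].
solution = conjugate_gradient(lambda v: [4 * v[0] + v[1], v[0] + 3 * v[1]], [1.0, 2.0])
```

Passing the matrix as a callable mirrors the matrix-free setting, where each product with the reduced Hessian would require one state solve and one adjoint solve.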

First we check estimates (4.14) and (4.15) for the error in the solution of the boundary value problem. For appropriately graded meshes, \(\mu < 2/3 = \lambda \), we expect order \(h^2\) in \(L^2(\Omega )\) and order h in \(H^1(\Omega )\). For a quasi-uniform family, \(\mu = 1\), we have \(s < 2/3\), so we expect order \(h^{1.33}\) in \(L^2(\Omega )\) and order \(h^{0.66}\) in \(H^1(\Omega )\). We summarize the results in Tables 1, 2, 3 and 4. We include results for both the state and adjoint state equation. Notice that \({{\tilde{\varphi }}}_h\) is the finite element approximation of \({\bar{\varphi }}\), obtained using the exact \({\bar{y}}\), i.e., \(a(z_h,{{\tilde{\varphi }}}_h) = \int _\Omega (\bar{y}-y_d)z_h\,\textrm{d}x+ \int _\Gamma g_\varphi z_h\,\textrm{d}x\) for all \(z_h\in Y_h\).
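Convergence tables of this kind are commonly summarized by the experimental order of convergence between consecutive meshes, \(\log (e_i/e_{i+1})/\log (h_i/h_{i+1})\). The sketch below computes it, together with the expected orders read off from the values quoted above: order \(2s\) in \(L^2(\Omega )\) and order \(s\) in \(H^1(\Omega )\), with \(s\) arbitrarily close to \(\min \{1,\lambda /\mu \}\). The function names and the synthetic error data are illustrative and are not taken from the paper's tables.

```python
import math


def experimental_orders(hs, errors):
    """Experimental order of convergence between consecutive mesh sizes."""
    pairs = list(zip(hs, errors))
    return [
        math.log(e0 / e1) / math.log(h0 / h1)
        for (h0, e0), (h1, e1) in zip(pairs, pairs[1:])
    ]


def expected_orders(lam, mu):
    """Limiting (L2 order, H1 order) for corner exponent lam and grading mu."""
    s_sup = min(1.0, lam / mu)
    return 2.0 * s_sup, s_sup


# Synthetic errors behaving exactly like h^(4/3), to exercise the formula.
hs = [2.0**-k for k in range(2, 6)]
orders = experimental_orders(hs, [h ** (4.0 / 3.0) for h in hs])
```

For \(\lambda = 2/3\) and \(\mu = 1\), `expected_orders` returns approximately `(1.33, 0.67)`, the limiting values that the text rounds to 1.33 and 0.66. Any \(\mu < 2/3\) gives `(2.0, 1.0)`.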

Next, we turn to the control problem and check the estimate in Theorem 5.7. Notice that we should obtain order of convergence h for both graded and quasi-uniform meshes. We summarize the results in Table 5.

Note that in this example the regularity of the adjoint state is even \({\bar{\varphi }}\in W^{2,\infty }_{\mathbf {\gamma }}(\Gamma )\) for \(\mathbf {\gamma }=(\gamma ,0,0,0,0,0)\) with \(\gamma >4/3\). This leads to superconvergence in the norms of \(L^2(\Omega )\) and \(L^2(\Gamma )\) for both the state and adjoint state variables: although order of convergence 1 is expected, as for the control, we obtain the same order of convergence as for the boundary value problem, i.e., 1.33 or almost 2 in our examples. This phenomenon will be studied in a future paper.

Data availability

Not applicable.

References

Casas, E., Mateos, M., Tröltzsch, F.: Error estimates for the numerical approximation of boundary semilinear elliptic control problems. Comput. Optim. Appl. 31, 193–219 (2005). https://doi.org/10.1007/s10589-005-2180-2

Casas, E., Mateos, M.: Error estimates for the numerical approximation of Neumann control problems. Comput. Optim. Appl. 39, 265–295 (2008). https://doi.org/10.1007/s10589-007-9056-6

Mateos, M., Rösch, A.: On saturation effects in the Neumann boundary control of elliptic optimal control problems. Comput. Optim. Appl. 49(2), 359–378 (2011). https://doi.org/10.1007/s10589-009-9299-5

Apel, T., Pfefferer, J., Rösch, A.: Finite element error estimates for Neumann boundary control problems on graded meshes. Comput. Optim. Appl. 52(1), 3–28 (2012). https://doi.org/10.1007/s10589-011-9427-x

Apel, T., Pfefferer, J., Rösch, A.: Finite element error estimates on the boundary with application to optimal control. Math. Comp. 84(291), 33–70 (2015). https://doi.org/10.1090/S0025-5718-2014-02862-7

Krumbiegel, K., Pfefferer, J.: Superconvergence for Neumann boundary control problems governed by semilinear elliptic equations. Comput. Optim. Appl. 61(2), 373–408 (2015). https://doi.org/10.1007/s10589-014-9718-0

Winkler, M.: Finite element error analysis for Neumann boundary control problems on polygonal and polyhedral domains. Ph.D. thesis, Universität der Bundeswehr München (2015)

Casas, E., Mateos, M., Rösch, A.: Analysis of control problems of nonmontone semilinear elliptic equations. ESAIM Control Optim. Calc. Var. 26(80), 21 (2020). https://doi.org/10.1051/cocv/2020032

Casas, E., Mateos, M., Rösch, A.: Numerical approximation of control problems of non-monotone and non-coercive semilinear elliptic equations. Numer. Math. 149(2), 305–340 (2021). https://doi.org/10.1007/s00211-021-01222-7

Grisvard, P.: Elliptic Problems in Nonsmooth Domains. Pitman, Boston (1985)

Kozlov, V.A., Maz’ya, V.G., Rossmann, J.: Elliptic boundary value problems in domains with point singularities. In: Mathematical Surveys and Monographs, vol. 52, p. x+414. American Mathematical Society, Providence (1997). https://doi.org/10.1090/surv/052

Maz’ya, V.G., Plamenevskij, B.A.: Weighted spaces with nonhomogeneous norms and boundary value problems in domains with conical points. Transl. Ser. 2 Am. Math. Soc. 123, 89–107 (1984). https://doi.org/10.1090/trans2/123/03

Maz’ya, V., Rossmann, J.: Elliptic equations in polyhedral domains. In: Mathematical Surveys and Monographs, vol. 162, p. viii+608. American Mathematical Society, Providence (2010). https://doi.org/10.1090/surv/162

Nazarov, S.A., Plamenevsky, B.A.: Elliptic problems in domains with piecewise smooth boundaries. In: De Gruyter Expositions in Mathematics, vol. 13, p. viii+525. Walter de Gruyter & Co., Berlin (1994). https://doi.org/10.1515/9783110848915.525

Casas, E.: Introducción a las Ecuaciones en Derivadas Parciales. University of Cantabria, Santander (1992)

Nečas, J.: Les Méthodes Directes en Théorie des Equations Elliptiques. Editeurs Academia, Ottignies-Louvain-la-Neuve (1967)

Sohr, H.: The Navier-Stokes Equations: An Elementary Functional Analytic Approach. Birkhäuser Advanced Texts: Basler Lehrbücher, p. x+367. Birkhäuser Verlag, Basel (2001). https://doi.org/10.1007/978-3-0348-8255-2

Mateos, M.: Optimal control problems governed by semilinear equations with integral constraints on the gradient of the state. Ph.D. thesis, Universidad de Cantabria, Spain (2000). https://digibuo.uniovi.es/dspace/handle/10651/28986

Pfefferer, J.: Numerical analysis for elliptic Neumann boundary control problems on polygonal domains. Dissertation, Universität der Bundeswehr München, Fakultät für Bauingenieurwesen und Umweltwissenschaften, Neubiberg (2014). http://athene-forschung.unibw.de/download/92055/92055.pdf

Fabes, E., Mendez, O., Mitrea, M.: Boundary layers on Sobolev–Besov spaces and Poisson’s equation for the Laplacian in Lipschitz domains. J. Funct. Anal. 159(2), 323–368 (1998). https://doi.org/10.1006/jfan.1998.3316

Dauge, M.: Elliptic Boundary Value Problems on Corner Domains: Smoothness and Asymptotics of Solutions. Lecture Notes in Mathematics, vol. 1341, p. 259. Springer, Berlin (1988). https://doi.org/10.1007/BFb0086682

Geng, J.: \(W^{1, p}\) estimates for elliptic problems with Neumann boundary conditions in Lipschitz domains. Adv. Math. 229(4), 2427–2448 (2012). https://doi.org/10.1016/j.aim.2012.01.004

Behzadan, A., Holst, M.: Multiplication in Sobolev spaces, revisited. Ark. Mat. 59(2), 275–306 (2021). https://doi.org/10.4310/arkiv.2021.v59.n2.a2