Abstract

In this paper, we consider a subclass of piecewise deterministic Markov processes with a Polish state space that involve a deterministic motion punctuated by random jumps, occurring in a Poisson-like fashion with some state-dependent rate, between which the trajectory is driven by one of the given semiflows. We prove that there is a one-to-one correspondence between stationary distributions of such processes and those of the Markov chains given by their post-jump locations. Using this result, we further establish a criterion guaranteeing the existence and uniqueness of the stationary distribution in a particular case, where the post-jump locations result from the action of a random iterated function system with an arbitrary set of transformations.

1 Introduction

Let Y be a Polish metric space, and suppose that we are given a finite collection \(\{S_i:\, i\in I\}\) of continuous semiflows acting from \({\mathbb {R}}_+ \times Y\) to Y, where \({\mathbb {R}}_+:=[0,\infty )\). The object of our study will be a piecewise deterministic Markov process (PDMP) \(\Psi :=\{(Y(t),\xi (t))\}_{t\in {\mathbb {R}}_+}\), evolving on the space \(X:=Y\times I\) in such a way that

Here, \(\Phi :=\{(Y_n,\xi _n)\}_{n\in {\mathbb {N}}_0}\) stands for a given Markov chain describing the post-jump locations of \(\Psi \), and \(\{\tau _n\}_{n\in {\mathbb {N}}_0}\) is a sequence of non-negative random variables, representing the jump times, which increases to infinity almost surely (a.s.) and whose inter-jump times \(\Delta \tau _{n+1}:=\tau _{n+1}-\tau _{n}\), \(n\in {\mathbb {N}}_0\), are independent and satisfy

with a given bounded continuous function \(\lambda :Y\rightarrow (0,\infty )\), for which \(\inf _{y\in Y} \lambda (y)>0\). The transition law of \(\Phi \), further denoted by P, will be defined using an arbitrary stochastic kernel J on Y and a state-dependent stochastic matrix \(\{\pi _{ij}:\,i,j\in I\}\), consisting of continuous functions from Y to [0, 1], so that J(y, B) is the probability of entering a Borel set \(B\subset Y\) by \(Y_n\) given \(Y(\tau _n-)=y\), while \(\pi _{ij}(y)\) specifies the probability of transition from \(\xi _{n-1}=i\) to \(\xi _n=j\) given \(Y_n=y\).

The main goal of the paper is to prove that there is a one-to-one correspondence between the families of stationary (invariant) distributions of the process \(\Psi \) and the chain \(\Phi \), assuming that the kernel J enjoys a strengthened form of the Feller property (Assumption 4.4). This result (i.e., Theorem 4.5) generalizes [5, Theorem 5.1], which has been established in the case where \(\Delta \tau _n\) are exponentially distributed with a constant rate \(\lambda \), and \(\pi _{ij}\) are constant. The latter, in turn, was intended to extend the scope of [4, Theorem 4.4], which refers to a model with a specific form of the kernel J.

Originally, PDMPs were introduced by Davis in [6] (see also [7]) as a general class of non-diffusive stochastic processes that combine a deterministic motion and random jumps. In this traditional framework, such processes evolve on the union of a countable indexed family of open sets in Euclidean spaces. Associated with each component of the state space is a flow generated by a locally Lipschitz continuous vector field, determining the trajectory’s evolution within this component between the jumps. The process \(\Psi \), investigated here, is defined in a similar manner, but it evolves on a general Polish space, and the semiflows are not generated by vector fields but given a priori. At the same time, let us emphasize that our model does not involve the so-called active boundaries (forcing the jumps), which occur in [6, 7]. Nevertheless, everything indicates that the techniques employed in this paper should also prove effective in their presence, and it is highly likely that we will present such results in another article.

For the classical PDMPs, results analogous to those we aim to achieve here were established in [2, Theorems 1–3] and [7, Ch. 34]. Their proofs are based on the concept of the extended generator of a Markov semigroup (see [7, Definition 14.15]), defined as an extension of its strong generator (see [10, Ch. 1]) using a martingale interpretation of the Dynkin identity. Specifically, the key observation (resulting from [7, Proposition 34.7]) is as follows: If, for some finite Borel measure \(\mu \), the extended (or strong) generator \({\mathfrak {A}}\) of a given Markov semigroup satisfies

with \(D({\mathfrak {A}})\) standing for the domain of \({\mathfrak {A}}\), then \(\mu \) is invariant for this semigroup, provided that \(D({\mathfrak {A}})\) intersected with the space of bounded Borel measurable functions separates probability measures. On the other hand, Davis (in [6, Theorem 5.5]; cf. also [7, Theorem 26.14]) has completely characterized the extended generators of classical PDMPs and shown that their domains enjoy the separating property (see [7, Proposition 34.11]), which enabled the use of the aforementioned fact in the reasoning presented in [2, 7]. In our framework, establishing such a property would be rather challenging, if not impossible, as the state space is not Euclidean, and the deterministic dynamics do not correspond to systems of differential equations, which is essential for the discussion in [7, §31–32], leading to [7, Proposition 34.11].

A result similar to those discussed above, but in a much simpler setting, where the state space is compact and the randomness of the examined process arises solely from the switching between semiflows, which occurs with a constant intensity, was established in [1, Proposition 2.4]. When proving this proposition, the authors utilize the compactness of the state space to show that the Markov semigroup under consideration (which enjoys the Feller property) is strongly continuous on the space of (bounded) continuous functions. According to the Hille–Yosida theorem ([10, Theorem 1.2.6]), this guarantees that the domain of its strong generator is dense in such a space and, therefore, separates the measures. Obviously, in our framework, this argument fails due to the lack of compactness.

For the purposes of our study, we employ the concept of weak generator ([8, 9]), similarly to the approach in [4]. This choice enables us to use an argument resembling the one utilized in [1], but in the context of the weak star (\(w^*\)-)topology. More precisely, by showing that the transition semigroup of \(\Psi \) is Feller (which follows from the corresponding property of J) and continuous in the weak sense on the space of bounded continuous functions, one can conclude that the \(w^*\)-closure of the domain of its weak generator contains that space. This, in turn, allows one to argue the \(\Psi \)-invariance of measures by verifying (1.2) for such a generator. Compared to the results of [4, 5], the primary contribution of the present paper lies in Lemmas 5.3 and 6.2, which allow overcoming the difficulty arising from the fact that the jump intensity \(\lambda \) is not constant. In particular, the latter enables us to establish (in Lemma 5.1) a suitable generalization of [5, Lemma 5.1], which underlies the arguments used to prove [5, Theorem 5.1] (itself based on the proof of [4, Theorem 4.4]).

We conclude the paper by applying our main result to a special case of the process \(\Psi \), where J is the transition law of the Markov chain arising from a random iterated function system involving an arbitrary family \(\{w_{\theta }:\,\theta \in \Theta \}\) of continuous transformations from Y into itself (see, e.g., [12]). Specifically, we provide a set of user-friendly conditions on the component functions guaranteeing that \(\Psi \) has a unique stationary distribution with finite first moment (see Theorem 7.10), which leads to a generalization of [4, Corollary 4.5] (cf. also [11, Theorem 5.3.1]). In an upcoming paper, under similar assumptions, we also plan to prove the exponential ergodicity of \(\Psi \) in the bounded Lipschitz distance (and thus generalize [5, Proposition 7.2]).

Finally, it is worth emphasizing that considering jumps occurring with a state-dependent intensity is often significant in applications. For example, the above-mentioned special case of \(\Psi \) with \(Y={\mathbb {R}}_+\), \(\Theta \) being a compact interval, and \(w_{\theta }(y)=y+\theta \) proves to be useful in analysing the stochastic dynamics of gene expression in the presence of transcriptional bursting (see, e.g., [13] or [4, §5.1]). In short, \(\{Y(t)\}_{t\in {\mathbb {R}}_+}\) then describes the concentration of a protein encoded by some gene of a prokaryotic cell. The protein molecules undergo a degradation process, which is interrupted by production appearing in the so-called bursts at random times \(\tau _n\). From a biological point of view, it is known that the intensity of these bursts depends on the current number of molecules, and thus taking into account the non-constancy of \(\lambda \) makes the model more accurate.

The outline of the paper is as follows. In Sect. 2, we introduce notation and review several basic concepts related to Markov processes and weak generators of contraction semigroups. Section 3 presents a formal construction of the model under consideration. The main result is formulated in Sect. 4. Here, we also provide a simple observation regarding the finiteness of the first moments of the invariant measures under consideration. The proof of the main theorem, along with the statements of all necessary auxiliary facts, is given in Sect. 5. The latter are proved in Sect. 6. Finally, Sect. 7 discusses an application of the main result to the aforementioned specific case of \(\Psi \).

2 Preliminaries

Consider an arbitrary metric space E, and let \({\mathcal {B}}(E)\) stand for its Borel \(\sigma \)-field. By \(B_b(E)\) we will denote the space of all bounded Borel measurable functions from E to \({\mathbb {R}}\), endowed with the supremum norm, i.e., \(\left\| f\right\| _{\infty }:=\sup _{x\in E} |f(x)|\) for \(f\in B_b(E)\), whilst by \(C_b(E)\) we will mean the subspace of \(B_b(E)\) consisting of all continuous functions. Further, let \({\mathcal {M}}_{sig}(E)\) be the family of all finite signed Borel measures on E (that is, all \(\sigma \)-additive real-valued set functions on \({\mathcal {B}}(E)\)), and let \({\mathcal {M}}(E)\), \({\mathcal {M}}_1(E)\) stand for its subsets containing all non-negative measures and all probability measures, respectively. Additionally, for any given Borel measurable function \(V:E\rightarrow [0,\infty )\), the symbol \({\mathcal {M}}_1^V(E)\) will denote the set of all \(\mu \in {\mathcal {M}}_1(E)\) with finite first moment w.r.t. V, i.e., such that \(\int _E V\,d\mu <\infty \). Moreover, for notational brevity, given \(f\in B_b(E)\) and \(\mu \in {\mathcal {M}}(E)\), we will often write \(\left\langle f,\mu \right\rangle \) for the Lebesgue integral \(\int _E f \,d\mu \).

2.1 Markov Processes and their Transition Laws

Let us now recall several basic concepts from the theory of Markov processes, which we will refer to throughout the paper.

A function \(K: E\times {\mathcal {B}}(E)\rightarrow [0,\infty ]\) is said to be a (transition) kernel on E whenever, for every \(A\in {\mathcal {B}}(E)\), the map \(E\ni x \mapsto K(x,A)\) is Borel measurable, and, for every \(x\in E\), \({\mathcal {B}}(E)\ni A \mapsto K(x,A)\) is a non-negative Borel measure. If, additionally, \(\sup _{x\in E} K(x,E)<\infty \), then K is said to be bounded. In the case where \(K(x,E)=1\) for every \(x\in E\), K is called a stochastic kernel (or a Markov kernel) and usually denoted by P rather than K.

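For any two kernels \(K_1\) and \(K_2\), we can consider their composition \(K_1K_2\) of the form

$$\begin{aligned} K_1K_2(x,A):=\int _E K_2(y,A)\,K_1(x,dy)\quad \text {for}\quad x\in E,\; A\in {\mathcal {B}}(E). \end{aligned}$$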

The iterates of a kernel K are defined as usual by \(K^1:=K\) and \(K^{n+1}:=K K^n\) for every \(n\in {\mathbb {N}}\). Obviously, the composition of any two bounded kernels is again bounded.

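Given a kernel K on E, for any non-negative Borel measure \(\mu \) on E and any bounded below Borel measurable function \(f:E\rightarrow {\mathbb {R}}\), we can consider the measure \(\mu K\) and the function Kf defined as

$$\begin{aligned} \mu K(A):=\int _E K(x,A)\,\mu (dx)\quad \text {for}\quad A\in {\mathcal {B}}(E), \end{aligned}\tag{2.1}$$

$$\begin{aligned} Kf(x):=\int _E f(y)\,K(x,dy)\quad \text {for}\quad x\in E, \end{aligned}\tag{2.2}$$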

respectively. They are related to each other in such a way that \(\left\langle f, \mu K\right\rangle =\left\langle Kf,\mu \right\rangle \). Obviously, if K is bounded, then the operator \(\mu \mapsto \mu K\) transforms \({\mathcal {M}}(E)\) into itself, whilst \(f\mapsto Kf\) maps \(B_b(E)\) into itself. In the case where K is stochastic, the operator defined by (2.1) also leaves the set \({\mathcal {M}}_1(E)\) invariant.

Let us stress here that the notation consistent with (2.1) and (2.2) will be used for all the kernels considered in the paper, without further emphasis.
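On a finite state space, a kernel is simply a non-negative matrix, and the operators \(\mu \mapsto \mu K\) and \(f\mapsto Kf\) reduce to vector-matrix products. The following minimal sketch illustrates this special case only; the helper names `mu_K`, `K_f`, `compose`, `pairing` and the toy numbers are hypothetical and chosen purely for illustration. It checks the duality \(\left\langle f, \mu K\right\rangle =\left\langle Kf,\mu \right\rangle \) and the composition rule numerically.

```python
# Finite-state illustration: E = {0, 1, 2}; a kernel K is a matrix with
# K[x][a] = K(x, {a}). Measures act as row vectors, functions as column vectors.

def mu_K(mu, K):
    """Measure mu K: (mu K)({a}) = sum_x mu({x}) K(x, {a})."""
    return [sum(mu[x] * K[x][a] for x in range(len(K))) for a in range(len(K[0]))]

def K_f(K, f):
    """Function Kf: (Kf)(x) = sum_a f(a) K(x, {a})."""
    return [sum(K[x][a] * f[a] for a in range(len(f))) for x in range(len(K))]

def compose(K1, K2):
    """Composition K1 K2: (K1 K2)(x, {a}) = sum_y K1(x, {y}) K2(y, {a})."""
    return [mu_K(row, K2) for row in K1]

def pairing(f, mu):
    """The integral <f, mu> of f with respect to mu."""
    return sum(fx * mx for fx, mx in zip(f, mu))

P = [[0.5, 0.5, 0.0],      # a stochastic kernel: every row sums to 1
     [0.25, 0.5, 0.25],
     [0.0, 0.5, 0.5]]
mu = [1.0, 0.0, 0.0]       # Dirac measure at state 0
f = [1.0, 2.0, 4.0]        # a bounded function on E

lhs = pairing(f, mu_K(mu, P))   # <f, mu P>
rhs = pairing(K_f(P, f), mu)    # <Pf, mu>
P2 = compose(P, P)              # the second iterate of P
```

Both pairings agree, and `P2` is again stochastic, in line with the remark that compositions of bounded kernels are bounded.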

A kernel K on E is said to be Feller if \(Kf\in C_b(E)\) for every function \(f\in C_b(E)\). Furthermore, a non-negative Borel measure \(\mu \) is called invariant for a kernel K (or for the operator on measures induced by this kernel) whenever \(\mu K=\mu \). These two concepts can also be used in reference to any family of transition kernels. The family of this kind is said to be Feller if all its members are Feller. A measure \(\mu \) is called invariant for such a family if \(\mu \) is invariant for each of its members.

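For a given E-valued time-homogeneous Markov chain \(\Phi =\{\Phi _n\}_{n\in {\mathbb {N}}_0}\), defined on a probability space \((\Omega ,{\mathcal {F}},{\mathbb {P}})\), a stochastic kernel P on E is called the transition law of this chain if

$$\begin{aligned} {\mathbb {P}}(\Phi _{n+1}\in A\,|\,\Phi _n=x)=P(x,A)\quad \text {for any}\quad x\in E,\; A\in {\mathcal {B}}(E),\; n\in {\mathbb {N}}_0. \end{aligned}$$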

Letting \(\mu _n\) denote the distribution of \(\Phi _n\) for each \(n\in {\mathbb {N}}_0\), we then have \(\mu _{n+1}=\mu _n P\) for every \(n\in {\mathbb {N}}_0\). The operator \((\cdot )P\) on \({\mathcal {M}}_1(E)\) is thus referred to as the transition operator of \(\Phi \), and the invariant probability measures of P are simply the stationary distributions of \(\Phi \). Moreover, in fact, \({\mathbb {P}}(\Phi _{n+k} \in A \,|\, \Phi _k=x)=P^n(x,A)\) for any \(x\in E\), \(A\in {\mathcal {B}}(E)\) and \(k,n\in {\mathbb {N}}_0\), which implies that

where \({\mathbb {E}}_x\) stands for the expectation with respect to \({\mathbb {P}}_x:={\mathbb {P}}(\cdot \,|\,\Phi _0=x)\).
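The recursion \(\mu _{n+1}=\mu _n P\) and the notion of a stationary distribution can be seen at work on a small example. The sketch below uses a hypothetical three-state chain (the matrix and the helper name `step` are illustrative assumptions, not part of the model studied here); it iterates the transition operator and compares the result with the invariant probability vector.

```python
# Toy chain on E = {0, 1, 2}; P[x][a] is the one-step probability of x -> a.
P = [[0.5, 0.5, 0.0],
     [0.25, 0.5, 0.25],
     [0.0, 0.5, 0.5]]

def step(mu, P):
    """One application of the transition operator: mu -> mu P."""
    return [sum(mu[x] * P[x][a] for x in range(len(P))) for a in range(len(P))]

mu = [1.0, 0.0, 0.0]           # distribution of Phi_0 (start at state 0)
for _ in range(100):           # mu_{n+1} = mu_n P
    mu = step(mu, P)

stationary = [0.25, 0.5, 0.25]  # candidate invariant measure of this toy chain
# Invariance means stationary P = stationary; the residual measures the defect.
residual = max(abs(a - b) for a, b in zip(step(stationary, P), stationary))
```

For this particular matrix the iterates approach `stationary` geometrically; in general, convergence of \(\mu _n\) is a separate question from the existence of an invariant measure.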

On the other hand, it is well-known (see, e.g., [14]) that, for every stochastic kernel P on a separable metric space E, there exists an E-valued Markov chain with transition law P. More precisely, putting \(\Omega :=E^{{\mathbb {N}}_0}\), \({\mathcal {F}}={\mathcal {B}}(E^{{\mathbb {N}}_0})\) and, for each \(n\in {\mathbb {N}}_0\), defining \(\Phi _n:\Omega \rightarrow E\) as

$$\begin{aligned} \Phi _n(\omega ):=\omega _n\quad \text {for}\quad \omega =(\omega _0,\omega _1,\ldots )\in \Omega , \end{aligned}\tag{2.3}$$

one can show that, for every \(x\in E\), there exists a probability measure \({\mathbb {P}}_x\) on \({\mathcal {F}}\) such that, for any \(n\in {\mathbb {N}}_0\), \(A_0,\ldots ,A_n\in {\mathcal {B}}(E)\) and \(F:=\{\Phi _0\in A_0,\ldots ,\Phi _n\in A_n\}\), we have

Then \(\{\Phi _n\}_{n\in {\mathbb {N}}_0}\) specified by (2.3) is a time-homogeneous Markov chain on \((\Omega ,{\mathcal {F}},{\mathbb {P}}_x)\) with initial state x and transition law P. The Markov chain constructed in this way is called the canonical one.

Finally, recall that a family \(\{P_t\}_{t\in {\mathbb {R}}_+}\) of stochastic kernels on E (or the corresponding family of operators on \({\mathcal {M}}_1(E)\) or \(B_b(E)\)) is called a Markov transition semigroup whenever \(P_{s+t}=P_sP_t\) for all \(s,t\ge 0\) and \(P_0(x,\cdot )=\delta _x\) for every \(x\in E\), where \(\delta _x\) stands for the Dirac measure at x. While using this term in the context of a time-homogeneous Markov process \(\Psi =\{\Psi (t)\}_{t\ge 0}\), defined on some probability space \((\Omega ,{\mathcal {F}},{\mathbb {P}})\), we will mean that

Clearly, if \(\mu _t\) denotes the distribution of \(\Psi (t)\) for every \(t\ge 0\), then \(\mu _{s+t}=\mu _s P_t\) for any \(s,t\ge 0\), which, in particular, shows that the invariant probability measures of \(\{P_t\}_{t\in {\mathbb {R}}_+}\) are, in fact, the stationary distributions of the process \(\Psi \). Moreover, analogously to the discrete case, we have

where \({\mathbb {E}}_x\) stands for the expectation with respect to \({\mathbb {P}}_x:={\mathbb {P}}(\cdot \,|\,\Psi (0)=x)\).

2.2 Weak Infinitesimal Generators

As mentioned in the introduction, we shall use certain tools relying on the concept of a weak infinitesimal generator, which generally pertains to contraction semigroups on subspaces of Banach spaces. In our study, we adopt this concept from [8, 9].

Let us first recall that, given a normed space \((L, \left\| \cdot \right\| _L)\), a family \(\{H_t\}_{t\in {\mathbb {R}}_+}\) of linear operators from L to itself is called a contraction semigroup on L if \(H_0={\text {id}}_L\), \(H_{s+t}=H_s\circ H_t\) for any \(s,t\ge 0\), and \(\left\| H_t f\right\| _L\le \left\| f\right\| _L\) for all \(t\ge 0\) and \(f\in L\).

In what follows, we will only focus on the case where L is a subspace of \((B_b(E), \left\| \cdot \right\| _{\infty })\). Note that any Markov transition semigroup, regarded as a family of operators on \(B_b(E)\), is a contraction semigroup of linear operators. Obviously, if such a semigroup enjoys the Feller property, then it forms a semigroup on \(C_b(E)\).
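The contraction property of a Markov transition semigroup \(\{P_t\}_{t\in {\mathbb {R}}_+}\) is a one-line consequence of \(P_t(x,E)=1\). Indeed, for any \(f\in B_b(E)\), \(t\ge 0\) and \(x\in E\),

$$\begin{aligned} |P_t f(x)|=\left| \int _E f(y)\,P_t(x,dy)\right| \le \int _E |f(y)|\,P_t(x,dy)\le \left\| f\right\| _{\infty }P_t(x,E)=\left\| f\right\| _{\infty }. \end{aligned}$$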

To introduce the notion of the weak generator (adapted to our purposes), let us first consider the Banach space \(({\mathcal {M}}_{sig}(E), \left\| \cdot \right\| _{TV})\) with the total variation norm, defined by

and let \({\mathcal {M}}_{sig}(E)^*\) denote its dual space. Further, for every \(f\in B_b(E)\), define the bounded linear functional \(\ell _f:{\mathcal {M}}_{sig}(E)\rightarrow {\mathbb {R}}\) by

Then \(f\mapsto \ell _f\) is an isometric embedding of \((B_b(E), \left\| \cdot \right\| _{\infty })\) in \({\mathcal {M}}_{sig}(E)^*\) (see [8, p. 50]), i.e., an injective linear map satisfying \(\left\| \ell _f\right\| =\left\| f\right\| _{\infty }\) for all \(f\in B_b(E)\), where \(\left\| \cdot \right\| \) denotes the operator norm. Therefore, \(B_b(E)\) can be regarded as a subspace of \({\mathcal {M}}_{sig}(E)^*\), and thus it can be endowed with the \(w^*\)-topology inherited from the latter. Moreover, it is easy to check that \(B_b(E)\) is \(w^*\)-closed in \({\mathcal {M}}_{sig}(E)^*\).

In view of the above, a sequence \(\{f_n\}_{n\in {\mathbb {N}}}\subset B_b(E)\) is said to be \(\hbox {weak}^*\) convergent to \(f\in B_b(E)\), which is written as \(w^*\text{- }\lim _{n\rightarrow \infty } f_n=f\), whenever \(\{\ell _{f_n}\}_{n\in {\mathbb {N}}}\) converges \(\hbox {weakly}^*\) to \(\ell _f\) in \({\mathcal {M}}_{sig}(E)^*\), i.e.,

$$\begin{aligned} \lim _{n\rightarrow \infty }\int _E f_n\,d\mu =\int _E f\,d\mu \quad \text {for every}\quad \mu \in {\mathcal {M}}_{sig}(E). \end{aligned}\tag{2.6}$$

On the other hand, it is well-known that (2.6) is equivalent to the pointwise convergence of \(\{f_n\}_{n\in {\mathbb {N}}}\) to f in conjunction with the boundedness of the sequence \( \{\left\| f_n\right\| _{\infty } \}_{n\in {\mathbb {N}}}\).
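A simple example, not taken from the sources cited here, shows how \(\hbox {weak}^*\) convergence differs from convergence in the supremum norm. Take \(E=[0,1]\) and \(f_n(x):=x^n\). The sequence is bounded by 1 and converges pointwise to the indicator \(f:={\mathbb {1}}_{\{1\}}\), so the dominated convergence theorem gives \(w^*\text{- }\lim _{n\rightarrow \infty } f_n=f\), whereas

$$\begin{aligned} \left\| f_n-f\right\| _{\infty }=\sup _{x\in [0,1)} x^n=1\quad \text {for every}\quad n\in {\mathbb {N}}. \end{aligned}$$

In particular, the \(\hbox {weak}^*\) limit of continuous functions need not be continuous.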

Given a subspace L of \(B_b(E)\subset {\mathcal {M}}_{sig}(E)^*\) and a contraction semigroup \(\{H_t\}_{t\in {\mathbb {R}}_+}\) of linear operators on L, by the weak (infinitesimal) generator of this semigroup we will mean (following [8, Ch.1 § 6]) the operator \(A_H:D(A_H)\rightarrow L_{0,H}\) given by

where

while \(L_{0,H}\) denotes the center of \(\{H_t\}_{t\ge 0}\), i.e.

At the end of this section, let us quote several basic properties of weak generators that will be useful in the further course of the paper.

Remark 2.1

(see [8, p. 40] or [9, pp. 437-448]). Let \(\{H_t\}_{t\ge 0}\) be a contraction semigroup of linear operators on a subspace L of \(B_b(E)\), and let \(A_H\) stand for the weak generator of this semigroup. Then

(i) \(w^*\text{- }{\text {cl}}D(A_H) =w^*\text{- }{\text {cl}}L_{0,H},\) where \(w^*\text{- }{\text {cl}}\) denotes the weak-\(*\) closure in \(B_b(E)\).

(ii) For every \(f\in D(A_H)\) the derivative

$$\begin{aligned} {\mathbb {R}}_+\ni t\mapsto \frac{d^+ H_t f}{dt}:=w^*\text{- }\lim _{h\rightarrow 0^+} \frac{H_{t+h} f - H_t f}{h} \end{aligned}$$

exists (in \(L_{0,H}\)) and is weak-\(*\) continuous from the right. Consequently,

$$\begin{aligned} H_t f\in D(A_H)\quad \text {and}\quad \frac{d^+ H_t f}{dt}=A_H H_t f =H_t A_H f\quad \text {for all}\ \quad t\ge 0. \end{aligned}$$

Moreover, the Dynkin formula holds, i.e.,

$$\begin{aligned} H_t f-f=\int _0^t H_s A_Hf\,ds\quad \text {for all}\ \quad t\ge 0. \end{aligned}$$
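As an illustration of Remark 2.1, consider the translation semigroup \(H_t f(x):=f(x+t)\) on \(L=C_b({\mathbb {R}})\); this example is ours and assumes the usual reading of the definitions above, namely that \(D(A_H)\) consists of those \(f\in L\) for which the \(\hbox {weak}^*\) limit of \((H_hf-f)/h\) as \(h\rightarrow 0^+\) exists and belongs to \(L_{0,H}\). Since \(f(x+t)\rightarrow f(x)\) pointwise and boundedly for every \(f\in C_b({\mathbb {R}})\), we have \(L_{0,H}=C_b({\mathbb {R}})\). If f has a bounded continuous derivative, then the difference quotients are bounded by \(\left\| f'\right\| _{\infty }\) and converge pointwise to \(f'\), so that \(A_Hf=f'\), and the Dynkin formula reduces to the fundamental theorem of calculus:

$$\begin{aligned} H_tf(x)-f(x)=f(x+t)-f(x)=\int _0^t f'(x+s)\,ds=\int _0^t H_sA_Hf(x)\,ds. \end{aligned}$$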

3 Definition of the Model

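Let \((Y,\rho _Y)\) be a non-empty complete separable metric space, and let I stand for an arbitrary non-empty finite set endowed with the discrete topology. Moreover, let us introduce

$$\begin{aligned} X:=Y\times I\quad \text {and}\quad {\bar{X}}:=X\times {\mathbb {R}}_+, \end{aligned}$$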

both equipped with the product topologies, upon assuming that \({\mathbb {R}}_+:=[0,\infty )\) is supplied with the Euclidean topology.

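Consider a family \(\{S_i:\,i\in I\}\) of jointly continuous semiflows acting from \({\mathbb {R}}_+\times Y\) to Y. By calling \(S_i\) a semiflow we mean, as usual, that

$$\begin{aligned} S_i(0,y)=y\quad \text {and}\quad S_i(s+t,y)=S_i(s,S_i(t,y))\quad \text {for all}\quad s,t\in {\mathbb {R}}_+,\; y\in Y. \end{aligned}$$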

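Further, let \(\{\pi _{ij}:\, i,j\in I\}\) be a collection of continuous maps from Y to [0, 1] such that

$$\begin{aligned} \sum _{j\in I}\pi _{ij}(y)=1\quad \text {for all}\quad y\in Y,\; i\in I. \end{aligned}$$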

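Moreover, let \(\lambda :Y\rightarrow (0,\infty )\) be a continuous function satisfying

$$\begin{aligned} {\underline{\lambda }}\le \lambda (y)\le {\bar{\lambda }}\quad \text {for all}\quad y\in Y, \end{aligned}\tag{3.1}$$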

with certain constants \({\underline{\lambda }}, {\bar{\lambda }}>0\), and put

Finally, suppose that we are given an arbitrary stochastic kernel \(J: Y\times {\mathcal {B}}(Y)\rightarrow [0,1]\).

Let us now define a stochastic kernel \({\bar{P}}: {\bar{X}}\times {\mathcal {B}}({\bar{X}})\rightarrow [0,1]\) by setting

for any \(y\in Y\), \(i\in I\), \(s\in {\mathbb {R}}_+\) and \({\bar{A}}\in {\mathcal {B}}({\bar{X}})\). Furthermore, let \(P:X\times {\mathcal {B}}(X)\rightarrow [0,1]\) be given by

Remark 3.1

Taking into account the continuity of the maps \(X\ni (y,i) \mapsto S_i(t,y)\) for \(t\ge 0\), \((y,i)\mapsto \pi _{ij}(y)\) for \(j\in I\), and \((y,i)\mapsto \lambda (y)\), it is easy to see that P is Feller whenever so is the kernel J.

By \({\bar{\Phi }}:=\{(Y_n,\xi _n,\tau _n)\}_{n\in {\mathbb {N}}_0}\) we will denote a time-homogeneous Markov chain with state space \({\bar{X}}\) and transition law \({\bar{P}}\), wherein \(Y_n\), \(\xi _n\), \(\tau _n\) take values in Y, I, \({\mathbb {R}}_+\), respectively. For simplicity of analysis, we shall regard \({\bar{\Phi }}\) as the canonical Markov chain (starting from some point of \({\bar{X}}\)), constructed on the coordinate space \(\Omega :={\bar{X}}^{{\mathbb {N}}_0}\), equipped with the \(\sigma \)-field \({\mathcal {F}}:={\mathcal {B}}\left( {\bar{X}}^{{\mathbb {N}}_0}\right) \) and a suitable probability measure \({\mathbb {P}}\) on \({\mathcal {F}}\). Obviously, \(\Phi :=\{(Y_n,\xi _n)\}_{n\in {\mathbb {N}}_0}\) is then a Markov chain with respect to its own natural filtration, governed by the transition law P, given by (3.3). Moreover, for every \(n\in {\mathbb {N}}_0\), we have

where \(\Delta \tau _{n+1}:=\tau _{n+1}-\tau _n\), and

for \(t\in {\mathbb {R}}_+\) and \((y,i,s)\in {\bar{X}}\).

In particular, (3.4) implies that the conditional distributions of \(\Delta \tau _{n+1}\) given \({\bar{\Phi }}_n\) are of the form

This yields that \(\Delta \tau _n>0\) a.s. for all \(n\in {\mathbb {N}}\) (so \(\{\tau _n\}_{n\in {\mathbb {N}}_0}\) is a.s. strictly increasing), and, together with the Markov property of \({\bar{\Phi }}\), shows that \(\Delta \tau _1,\Delta \tau _2,\ldots \) are mutually independent. Further, it follows that, for any \(n,r\in {\mathbb {N}}\),

whence, in view of (3.1), we get

Consequently, Kolmogorov’s criterion guarantees that \((\Delta \tau _n-{\mathbb {E}}\Delta \tau _n)_{n\in {\mathbb {N}}}\) obeys the strong law of large numbers. Hence, writing

for \(n\in {\mathbb {N}}\), we can conclude that \(\tau _n \uparrow \infty \) a.s.
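The passage from the strong law of large numbers to \(\tau _n\uparrow \infty \) can be sketched as follows. The sketch assumes (as a reading of the model, not a formula quoted from the text) that the jumps occur with a hazard rate bounded above by \({\bar{\lambda }}\), so that \({\mathbb {P}}(\Delta \tau _{n}>t)\ge e^{-{\bar{\lambda }}t}\) for all \(t\ge 0\), and hence \({\mathbb {E}}\Delta \tau _n=\int _0^{\infty }{\mathbb {P}}(\Delta \tau _n>t)\,dt\ge 1/{\bar{\lambda }}\). Writing \(\tau _n=\tau _0+\sum _{k=1}^n \Delta \tau _k\) and using the strong law for the centred increments, we get, almost surely,

$$\begin{aligned} \liminf _{n\rightarrow \infty }\frac{\tau _n}{n}=\liminf _{n\rightarrow \infty }\frac{1}{n}\sum _{k=1}^n {\mathbb {E}}\Delta \tau _k\ge \frac{1}{{\bar{\lambda }}}>0, \end{aligned}$$

which forces \(\tau _n\rightarrow \infty \); the monotonicity follows from \(\Delta \tau _n>0\) a.s.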

The main focus of our study will be the PDMP \(\Psi :=\{\Psi (t)\}_{t\in {\mathbb {R}}_+}\) of the form

defined via interpolation of \(\Phi \) according to formula (1.1). Clearly, this definition is well-posed since \(\tau _n \uparrow \infty \) a.s., and \(\Phi \) describes the post-jump locations of the process \(\Psi \), that is,

In what follows, the Markov transition semigroup of \(\Psi \) will be denoted by \(\{P_t\}_{t\ge 0}\).
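To make the construction more tangible, here is a minimal simulation sketch of a single trajectory of \(\Psi \). It rests on assumptions that are not quoted from the text: the waiting time after a post-jump state y is taken to have hazard rate \(\lambda (S(t,y))\) at time t since the last jump (our reading of the "Poisson-like" mechanism), sampled by thinning against the upper bound \({\bar{\lambda }}\); the set I is a singleton, so there is no switching; and the toy ingredients (the semiflow \(S(t,y)=ye^{-t}\), the rate `lam`, and jumps \(y\mapsto y+\theta \) with \(\theta \) uniform on [0, 1]) only loosely follow the gene-expression example from the introduction. All function names are hypothetical.

```python
import math
import random

LAM_LOW, LAM_HIGH = 0.5, 2.0   # assumed bounds: LAM_LOW <= lam(y) <= LAM_HIGH

def flow(t, y):
    """Assumed semiflow S(t, y) = y * exp(-t) (exponential degradation)."""
    return y * math.exp(-t)

def lam(y):
    """Assumed continuous jump rate, valued in (LAM_LOW, LAM_HIGH] for y >= 0."""
    return LAM_LOW + (LAM_HIGH - LAM_LOW) / (1.0 + y)

def jump(y, rng):
    """Assumed kernel J(y, .): law of y + theta with theta ~ U[0, 1]."""
    return y + rng.uniform(0.0, 1.0)

def next_jump_delay(y, rng):
    """Sample a waiting time with hazard lam(flow(t, y)) by thinning."""
    t = 0.0
    while True:
        t += rng.expovariate(LAM_HIGH)                  # candidate at rate LAM_HIGH
        if rng.random() * LAM_HIGH <= lam(flow(t, y)):  # accept w.p. lam / LAM_HIGH
            return t

def simulate(y0, n_jumps, seed=0):
    """Return jump times tau_n and post-jump states Y_n for n = 0..n_jumps."""
    rng = random.Random(seed)
    taus, ys = [0.0], [y0]
    for _ in range(n_jumps):
        delay = next_jump_delay(ys[-1], rng)
        pre_jump = flow(delay, ys[-1])    # state just before the jump
        taus.append(taus[-1] + delay)
        ys.append(jump(pre_jump, rng))    # post-jump state drawn from J
    return taus, ys

def state_at(t, taus, ys):
    """Interpolate Y(t) = S(t - tau_n, Y_n) on [tau_n, tau_{n+1}); use t < taus[-1]."""
    n = max(k for k, tau in enumerate(taus) if tau <= t)
    return flow(t - taus[n], ys[n])

taus, ys = simulate(y0=1.0, n_jumps=200)
```

Thinning is used here because it only requires the bound \(\lambda \le {\bar{\lambda }}\) and pointwise evaluations of \(\lambda \) along the flow; inverting the integrated rate would be an alternative when it is available in closed form.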

4 Main Results

In this section, we shall formulate the main result of this paper, concerning a one-to-one correspondence between invariant probability measures of the transition semigroup \(\{P_t\}_{t\in {\mathbb {R}}_+}\) of the process \(\Psi \), determined by (1.1), and those of the transition operator P of the chain \(\Phi \), given by (3.3).

To this end, let us consider two stochastic kernels \(G,W:X\times {\mathcal {B}}(X)\rightarrow [0,1]\) given by

for all \(x=(y,i)\in X\) and \(A\in {\mathcal {B}}(X)\), where J stands for the kernel involved in (3.2). Further, define

and introduce two (generally non-stochastic) kernels \({\widetilde{G}},{\widetilde{W}}:X\times {\mathcal {B}}(X)\rightarrow [0,\infty )\) of the form

Remark 4.1

It is easily seen that \(GW={\widetilde{G}}{\widetilde{W}}=P\), where P is given by (3.3).

Remark 4.2

According to (3.1), for any \(x\in X\) and \(A\in {\mathcal {B}}(X)\), we have

Consequently, the kernels \({\widetilde{G}}\) and \({\widetilde{W}}\) are bounded, and thus the sets \({\mathcal {M}}(X)\) and \(B_b(X)\) are invariant under the operators induced by these kernels according to (2.1) and (2.2), respectively. Moreover, note that, if \(\mu \in {\mathcal {M}}(X)\) is a non-zero measure, then the measures \(\mu {\widetilde{G}}\) and \(\mu {\widetilde{W}}\) are non-zero as well.

Remark 4.3

The kernels G and \({\widetilde{G}}\) are Feller. Moreover, if the kernel J is Feller then so are the kernels W and \({\widetilde{W}}\).

Essentially, apart from the conditions imposed on the model components in Sect. 3, the only assumption that we need to make in our main theorem is the following strengthened version of the Feller property for the kernel J:

Assumption 4.4

For every \(g\in C_b(Y\times {\mathbb {R}}_+)\), the map \(Y\times {\mathbb {R}}_+\ni (y,t)\mapsto J g(\cdot ,t)(y)\) is jointly continuous.

Although the above assumption might appear somewhat technical, it is crucial to ensure the joint continuity of a certain specific function used to obtain an explicit form of the semigroup \(\{P_t\}_{t\in {\mathbb {R}}_+}\) in the upcoming Lemma 5.1(ii). This continuity, in turn, will be needed to prove the subsequent Lemma 5.2, which plays a key role in our approach.

The main result of the paper reads as follows:

Theorem 4.5

Suppose that the kernel J satisfies Assumption 4.4.

(a) If \(\mu _*^{\Phi }\in {\mathcal {M}}_1(X)\) is invariant for P, then

$$\begin{aligned} \mu _*^{\Psi }:=\frac{\mu _*^{\Phi }{\widetilde{G}}}{\mu _*^{\Phi }{\widetilde{G}}(X)} \end{aligned}\tag{4.4}$$

is an invariant probability measure of \(\{P_t\}_{t\in {\mathbb {R}}_+}\), and

$$\begin{aligned} \mu _*^{\Phi }=\frac{ \mu _*^{\Psi } {\widetilde{W}}}{\mu _*^{\Psi }{\widetilde{W}}(X)}. \end{aligned}\tag{4.5}$$

(b) If \(\mu _*^{\Psi }\in {\mathcal {M}}_1(X)\) is invariant for \(\{P_t\}_{t\in {\mathbb {R}}_+}\), then \(\mu _*^{\Phi }\) defined by (4.5) is an invariant probability measure of P, and \(\mu _*^{\Psi }\) can then be expressed as in (4.4).

The proof of Theorem 4.5, together with all needed auxiliary results, is given in Sect. 5.

Remark 4.6

Note that

Hence, in particular, if \(\lambda \) is constant, then \(\mu _*^{\Psi }=\mu _*^{\Phi }G\) and \(\mu _*^{\Phi }= \mu _*^{\Psi } W.\)

Corollary 4.7

Suppose that Assumption 4.4 holds. Then \(\{P_t\}_{t\in {\mathbb {R}}_+}\) admits a unique invariant probability measure if and only if so does P.
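The corollary follows directly from Theorem 4.5; the argument can be sketched as follows, with \(\nu _1,\nu _2\) and \(\mu \) being symbols introduced here only for illustration. Existence transfers in both directions by parts (a) and (b). For uniqueness, suppose that P has a unique invariant probability measure \(\mu \), and let \(\nu _1,\nu _2\in {\mathcal {M}}_1(X)\) be invariant for \(\{P_t\}_{t\in {\mathbb {R}}_+}\). By part (b), both measures \(\nu _k{\widetilde{W}}/\nu _k{\widetilde{W}}(X)\), \(k\in \{1,2\}\), are invariant for P, hence equal to \(\mu \), and the last assertion of (b) yields

$$\begin{aligned} \nu _1=\frac{\mu {\widetilde{G}}}{\mu {\widetilde{G}}(X)}=\nu _2. \end{aligned}$$

The converse implication is obtained symmetrically from part (a) together with (4.5). The normalizing constants are positive by Remark 4.2.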

Let us finish this section with a simple observation concerning the finiteness of the first moments of the measures featured in Theorem 4.5. We are interested in the moments with respect to the function \(V:X\rightarrow [0,\infty )\) given by

where \(y_*\) is an arbitrarily fixed point of Y. To state an appropriate result, let us introduce additionally the following two assumptions, which will also be utilized in Sect. 7:

Assumption 4.8

For some point \(y_*\in Y\) we have

Assumption 4.9

There exist constants \(L>0\) and \(\alpha <{\underline{\lambda }}\) such that

It should be noted here that, if Assumptions 4.8 and 4.9 are fulfilled, then \(\beta (y_*)<\infty \) for every \(y_*\in Y\).

Proposition 4.10

Let V be the function given by (4.6). Then, the following holds:

(i) Under Assumptions 4.8 and 4.9, for any \(\mu _*^{\Phi }\in {\mathcal {M}}_1^V(X)\), the measure \(\mu _*^{\Psi }\) defined by (4.4) also belongs to \({\mathcal {M}}_1^V(X)\).

(ii) Suppose that there exist constants \({\tilde{a}},{\tilde{b}}\ge 0\) such that, for \({\widetilde{V}}:=\rho _Y(\cdot ,y_*)\), we have

$$\begin{aligned} J{\widetilde{V}}(y)\le {\tilde{a}} {\widetilde{V}}(y)+{\tilde{b}}\quad \text {for all}\quad y\in Y. \end{aligned}\tag{4.7}$$

Then, for any \(\mu _*^{\Psi }\in {\mathcal {M}}_1^V(X)\), the measure \(\mu _*^{\Phi }\) defined by (4.5) also belongs to \({\mathcal {M}}_1^V(X)\).

Proof

To see (i) it suffices to observe that, for every \((y,i)\in X\),

Statement (ii) follows from the fact that, for any \((y,i)\in X\),

\(\square \)

5 Proof of the Main Theorem

Before we proceed to prove Theorem 4.5, let us first formulate certain auxiliary results (specifically, Lemmas 5.1–5.3), whose proofs are given in Sect. 6.

The first result (proved in Sect. 6.1) collects certain properties of the transition semigroup \({\{P_t\}}_{t\in {\mathbb {R}}_+}\), which are essential for establishing the forthcoming Lemma 5.2, concerning the weak generator of this semigroup. It is also worth noting that this result extends the scope of [4, Lemma 5.1], which was previously established only for constant \(\lambda \) and \(\pi _{ij}\).

Lemma 5.1

The following statements hold for the transition semigroup \(\{P_t\}_{t\in {\mathbb {R}}_+}\) of the process \(\Psi \), specified by (1.1):

(i) If J is Feller, then \(\{P_t\}_{t\in {\mathbb {R}}_+}\) is Feller too.

(ii) For every \(f\in B_b(X)\), there exist functions \(u_f:X\times {\mathbb {R}}_+\rightarrow {\mathbb {R}}\) and \(\psi _f:X\times D_{\psi }\rightarrow {\mathbb {R}}\), with \(D_{\psi }:=\left\{ (t,T)\in {\mathbb {R}}_+^2: t\le T\right\} \), such that

$$\begin{aligned} P_T f(x)=e^{-\Lambda (y,i,T)} f(S_i(T,y),i)+\int _0^T \psi _f((y,i),t,T)\,dt+u_f((y,i),T) \end{aligned}\tag{5.1}$$

for any \(x=(y,i)\in X\) and \(T\in {\mathbb {R}}_+\), and that the following conditions hold:

$$\begin{aligned} u_f(\cdot ,T)\in B_b(X) \quad \text {for all}\quad T\in {\mathbb {R}}_+,\quad \lim _{T\rightarrow 0} \left\| u_f(\cdot ,T)\right\| _{\infty }/{T}=0, \end{aligned}\tag{5.2}$$

$$\begin{aligned} \psi _f(\cdot ,t,T)\in B_b(X),\quad \left\| \psi _f(\cdot ,t,T)\right\| _{\infty }\le {\bar{\lambda }}\left\| f\right\| _{\infty }\quad \text {for any}\quad (t,T)\in D_{\psi }, \end{aligned}\tag{5.3}$$

$$\begin{aligned} \psi _f(x,0,0)={\hat{\lambda }}(x) Wf(x)\quad \text {for every}\quad x\in X, \end{aligned}\tag{5.4}$$

with \({\hat{\lambda }}\) given by (4.3), and, if Assumption 4.4 is fulfilled, then \(D_{\psi }\ni (t,T)\mapsto \psi _f(x,t,T)\) is jointly continuous for every \(x\in X\) whenever \(f\in C_b(X)\).

(iii) \(\{P_t\}_{t\in {\mathbb {R}}_+}\) is stochastically continuous, i.e.

$$\begin{aligned} \lim _{T\rightarrow 0} P_T f(x)=f(x)\quad \text {for any}\quad x\in X,\; f\in C_b(X). \end{aligned}$$

Obviously, according to statement (i) of Lemma 5.1, if J is Feller (or, in particular, if Assumption 4.4 is fulfilled), then \(\{P_t\}_{t\in {\mathbb {R}}_+}\) can be viewed as a contraction semigroup of linear operators on \(C_b(X)\), and thus we can consider its weak generator (in the sense specified in Sect. 2.2). In what follows, this generator will be denoted by \(A_P\). Apart from this, we also employ the weak generator \(A_Q\) of the semigroup \(\{Q_t\}_{t\in {\mathbb {R}}_+}\) defined by

Clearly, \(D(A_P)\) and \(D(A_Q)\) are then subsets of \(C_b(X)\). Furthermore, having in mind (2.7), we see that \(L_{0,P}=C_b(X)\) by statement (iii) of Lemma 5.1, and also \(L_{0,Q}=C_b(X)\), due to the continuity of \(S_i(\cdot ,y)\), \(y\in Y\).

The main idea underlying the proof of our main theorem is that the generator \(A_P\) can be expressed in terms of \(A_Q\) and the operator W, determined by (4.2) (similarly to [6, Theorem 5.5]), which is exactly the statement of the lemma below. The proof of this result (given in Sect. 6.2) rests on assertion (ii) of Lemma 5.1, which, incidentally, is the reason why we require Assumption 4.4 rather than just the Feller property of J. It should also be noted that, according to Remark 4.3, the kernel W is Feller under this assumption, which makes the formula below meaningful.

Lemma 5.2

Suppose that Assumption 4.4 holds. Then \(D(A_P)=D(A_Q)\) and, for every \(f\in D(A_P)\), we have

$$\begin{aligned} A_P f=A_Q f+{\hat{\lambda }}Wf-{\hat{\lambda }}f, \end{aligned} \tag{5.6}$$

where \({\hat{\lambda }}\) is given by (4.3), and W is the operator on \(C_b(X)\), induced by the kernel specified in (4.2).

Another fact playing a significant role in the proof of Theorem 4.5 concerns the invertibility of the operator G on \(C_b(X)\) (cf. Remark 4.3), induced by the kernel given by (4.1). Clearly, if \(\lambda \) is constant, then \(G/\lambda \) is simply the resolvent of the semigroup \(\{Q_t\}_{t\in {\mathbb {R}}_+}\). As is well known (see [8, Theorem 1.7]), in this case, one has \(G/\lambda \,|_{\,C_b(X)}= (\lambda {\text {id}}-A_Q)^{-1}\), which is a key observation in [4]. Since this argument fails in the present framework, we will prove (in Sect. 6.2) the following:

Lemma 5.3

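Let \(A_Q/{\hat{\lambda }}\) be the operator defined by

$$\begin{aligned} (A_Q/{\hat{\lambda }})f(x):=\frac{A_Q f(x)}{{\hat{\lambda }}(x)}\quad \text {for}\quad x\in X,\; f\in D(A_Q), \end{aligned}$$

with \({\hat{\lambda }}\) given by (4.3). Then the operator \({\text {id}}-A_Q/{\hat{\lambda }}: D(A_Q)\rightarrow C_b(X)\) is invertible, and its inverse is the operator on \(C_b(X)\) induced by the kernel G, specified in (4.1). Equivalently, this means that

$$\begin{aligned} ({\text {id}}-A_Q/{\hat{\lambda }})\,Gf=f\quad \text {for all}\quad f\in C_b(X), \end{aligned} \tag{5.7}$$

$$\begin{aligned} G\,({\text {id}}-A_Q/{\hat{\lambda }})f=f\quad \text {for all}\quad f\in D(A_Q). \end{aligned} \tag{5.8}$$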

Armed with the results above, we are now prepared to prove Theorem 4.5. It is worth highlighting here that, although the reasoning below explicitly utilizes only Lemmas 5.2 and 5.3, it fundamentally relies on Remark 2.1, which is valid for \(\{P_t\}_{t\in {\mathbb {R}}_+}\) with \(L_{0,P}=C_b(X)\) owing to Lemma 5.1.

Proof of Theorem 4.5

(a) To prove statement (a), suppose that \(\mu _*^{\Phi }\in {\mathcal {M}}_1(X)\) is invariant for P, and let \(\mu _*^{\Psi }\) be given by (4.4).

We will first show that

To this end, let \(f\in D(A_P)\) and define \(\nu _*:=\mu _*^{\Phi } G\). Taking into account that \(GW=P\) (cf. Remark 4.1), we see that

which means that \(\nu _*\) is an invariant probability measure of WG. Further, from Lemma 5.2 it follows that \(f\in D(A_Q)\), and that

Using the WG-invariance of \(\nu _*\) and identity (5.8), resulting from Lemma 5.3, we further obtain

In view of (5.10), this gives

which finally implies that

as claimed.

Now, according to Remark 2.1(ii), for any \(f\in D(A_P)\) and \(t\ge 0\), we have

which, together with (5.9), yields that

Since \(C_b(X)\subset w^*-{\text {cl}}D(A_P)\) due to Remark 2.1(i), one can easily conclude that, in fact, the above equality holds for all \(f\in C_b(X)\). We have therefore shown that

This obviously implies that, for any \(t\in {\mathbb {R}}_+\) and \(f\in C_b(X)\),

and thus proves that \(\mu _*^{\Psi }\) is invariant for \(\{P_t\}_{t\in {\mathbb {R}}_+}\).

Furthermore, since \({\widetilde{G}}{\widetilde{W}}=P\) (as emphasized in Remark 4.1) and \(\mu _*^{\Phi }\) is invariant for P, we see that

whence, in particular,

These two observations finally yield that

as claimed in (4.5).

(b) We now proceed to the proof of claim (b). To this end, suppose that \(\mu _*^{\Psi }\in {\mathcal {M}}_1(X)\) is invariant for \(\{P_t\}_{t\in {\mathbb {R}}_+}\) and consider the measure \(\mu _*^{\Phi }\) defined by (4.5).

Let us begin by showing that

To do this, let \(f\in C_b(X)\) and define \(g:=Gf\). Clearly, Lemmas 5.2 and 5.3 guarantee that \(g\in D(A_Q)=D(A_P)\). Taking into account statement (ii) of Remark 2.1 and the fact that \(\mu _*^{\Psi }\) is invariant for \(\{P_t\}_{t\in {\mathbb {R}}_+}\), we infer that

Hence, referring to Lemma 5.2, we get

which gives

Eventually, having in mind that \(g=Gf\) and using (5.7), following from Lemma 5.3, we obtain

which is the desired claim.

If we now fix an arbitrary \(f\in C_b(X)\) and apply (5.11) with \(f/{\hat{\lambda }}\) in place of f, then we obtain

which shows that \(\mu _*^{\Psi }\) is invariant for \({\widetilde{W}}{\widetilde{G}}\). From this and the identity \({\widetilde{G}}{\widetilde{W}}=P\) it follows that

which, in turn, gives

Hence \(\mu _*^{\Phi }\) is indeed invariant for P.

Finally, it remains to show that \(\mu _*^{\Psi }\) can be represented according to (4.4). To see this, we again use the fact that \(\mu _*^{\Psi }\) is invariant for \({\widetilde{W}}{\widetilde{G}}\), to obtain

which, in particular, implies that

Consequently, it now follows that

This completes the proof of the theorem. \(\square \)
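For intuition only, the correspondence can be illustrated in a degenerate finite-state setting, which is an assumption made here and not a case treated in the paper: if Y is a finite set and every semiflow is trivial, \(S_i(t,y)=y\), the process reduces to a continuous-time Markov chain with holding rates \(\lambda (y)\), and the classical relation between its stationary distribution and that of the embedded jump chain is a reweighting by \(1/\lambda \) (and by \(\lambda \) in the opposite direction). The sketch below, with hypothetical rates and a hypothetical transition matrix, checks this relation numerically.

```python
def stationary_distribution(P, iterations=5000):
    """Approximate the invariant row vector of a stochastic matrix by power iteration."""
    n = len(P)
    mu = [1.0 / n] * n
    for _ in range(iterations):
        mu = [sum(mu[k] * P[k][j] for k in range(n)) for j in range(n)]
    return mu


# Hypothetical three-state example: holding rates and jump-chain transition matrix.
rates = [1.0, 2.0, 4.0]
P = [
    [0.0, 0.5, 0.5],
    [0.25, 0.0, 0.75],
    [0.5, 0.5, 0.0],
]

mu_phi = stationary_distribution(P)  # invariant for the jump chain

# Reweight by the mean holding times 1/rate and normalise.
weights = [m / r for m, r in zip(mu_phi, rates)]
mu_psi = [wgt / sum(weights) for wgt in weights]

# mu_psi should annihilate the generator Q = diag(rates) (P - I).
residual = [
    sum(mu_psi[k] * rates[k] * (P[k][j] - (1.0 if k == j else 0.0)) for k in range(3))
    for j in range(3)
]

# Going back: reweight by the rates and normalise.
back = [m * r for m, r in zip(mu_psi, rates)]
mu_phi_back = [b / sum(back) for b in back]

print("jump chain:", mu_phi)
print("continuous time:", mu_psi)
```

In this toy case the round trip returns the original jump-chain distribution, mirroring the one-to-one nature of the correspondence; nothing here replaces the general argument above.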

6 Proofs of the Auxiliary Results

In this part of the paper we provide the proofs of Lemmas 5.1–5.3, which have been used to prove Theorem 4.5.

6.1 Proof of Lemma 5.1

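Let us begin this section by defining

$$\begin{aligned} \eta (t):=\max \{n\in {\mathbb {N}}_0:\, \tau _n\le t\}\quad \text {for}\quad t\in {\mathbb {R}}_+. \end{aligned} \tag{6.1}$$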

Obviously, \(\{\eta (t)=n\}=\{\tau _n\le t <\tau _{n+1}\}\) for any \(t\in {\mathbb {R}}_+\) and \(n\in {\mathbb {N}}_0\).

Although (6.1) generally does not define a Poisson process (unless \(\lambda \) is constant), using assumption (3.1), one can find an upper bound on the probability of \(\{\eta (t)=n\}\) that is close to the corresponding probability for such a process, which is crucial in the proof of Lemma 5.1. This is done in Lemma 6.2, which relies on the following observation:

Lemma 6.1

For any \(x\in X\), \(t\in {\mathbb {R}}_+\) and \(n\in {\mathbb {N}}_0\),

Proof

The inequality is obvious for \(n=0\).

According to (3.4), for every \(n\in {\mathbb {N}}_0\) and each \((y,i,s)\in {\bar{X}}\), the conditional probability density function of \(\tau _{n+1}\) given \({\bar{\Phi }}_n=(y,i,s)\) is of the form

Let \(x=(y,i)\in X\) and put \({\bar{x}}:=(x,0)\in {\bar{X}}\). We shall proceed by induction. Taking into account (3.1), for \(n=1\) we have

Now, suppose inductively that (6.2) holds for some arbitrarily fixed \(n\in {\mathbb {N}}\) and all \(t\in {\mathbb {R}}_+\). Then, for every \(T\in {\mathbb {R}}_+\), we can write

Taking the expectation of both sides of this inequality and, further, using the inductive hypothesis gives

which ends the proof. \(\square \)

Lemma 6.2

For any \(x\in X\), \(t\in {\mathbb {R}}_+\) and \(n\in {\mathbb {N}}_0\),

Proof

Let \(x\in X\), \({\bar{x}}:=(x,0)\), \(t\in {\mathbb {R}}_+\), \(n\in {\mathbb {N}}_0\), and observe that

From (3.4) and (3.1) it follows that

Having this in mind and applying Lemma 6.1 we therefore get

which is the desired claim. \(\square \)
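The comparison with a Poisson process also suggests a practical way of sampling the inter-jump times. The following is a minimal sketch, assuming the standard thinning technique (not a method taken from this paper): candidate times are generated at the constant rate \({\bar{\lambda }}\), and a candidate at elapsed time t is accepted with probability \(\lambda (S_i(t,y))/{\bar{\lambda }}\). The semiflow, the rate function and all names below are hypothetical choices made for illustration.

```python
import math
import random


def sample_inter_jump_time(y, flow, lam, lam_bar, rng):
    """Draw one inter-jump time by thinning.

    Candidates arrive as a Poisson process with rate lam_bar; a candidate at
    elapsed time t is accepted with probability lam(flow(t, y)) / lam_bar.
    This requires lam(.) <= lam_bar everywhere along the trajectory.
    """
    t = 0.0
    while True:
        t += rng.expovariate(lam_bar)
        if rng.random() * lam_bar <= lam(flow(t, y)):
            return t


# Hypothetical example: Y = R, one semiflow contracting towards 0,
# and a bounded continuous rate with values in [1, 2].
def flow(t, y):
    return y * math.exp(-t)


def lam(y):
    return 1.0 + 1.0 / (1.0 + y * y)


LAM_LOWER, LAM_UPPER = 1.0, 2.0

rng = random.Random(12345)
samples = [
    sample_inter_jump_time(2.0, flow, lam, LAM_UPPER, rng) for _ in range(20000)
]
mean_wait = sum(samples) / len(samples)
print(f"empirical mean inter-jump time: {mean_wait:.3f}")
```

Since the rate stays between the two bounds, the mean waiting time must lie between \(1/{\bar{\lambda }}\) and \(1/{\underline{\lambda }}\), which gives a simple sanity check for the sampler.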

Having established this, we are now in a position to conduct the announced proof.

Proof of Lemma 5.1

In what follows, we will write \({\bar{x}}:=(x,0)\) for any given \(x\in X\).

Let \(f\in B_b(X)\) and \(T\in {\mathbb {R}}_+\). Then, appealing to (2.5) and (1.1), for every \(x\in X\), we get

with \(\eta (\cdot )\) defined by (6.1). On the other hand, in view of (2.4), it is clear that, for any \(x\in X\), \(g,h\in B_b({\bar{X}})\) and \(n\in {\mathbb {N}}_0\),

Hence, we can write

where \(g_T,h_T\in B_b({\bar{X}})\) are given by

for any \(u\in Y\), \(j\in I\) and \(s\in {\mathbb {R}}_+\).

(i) Suppose that J is Feller, \(f\in C_b(X)\) and let \(T\in {\mathbb {R}}_+\). The aim is to prove that \(P_T f\) is continuous.

Let us first observe that, for any function \(\varphi \in B_b({\bar{X}})\) such that \(X\ni x\mapsto \varphi (x,s)\) is continuous for every \(s\ge 0\) (which is obviously the case for \(g_T\) and \(h_T\)), the map \(X\ni x\mapsto {\bar{P}}\varphi (x,s)\) is continuous for each \(s\ge 0\) as well. Indeed, fix \(s\in {\mathbb {R}}_+\), and note that, for any \(i,j\in I\) and \(t\in {\mathbb {R}}_+\), the map \(Y \ni y \mapsto J(\pi _{ij} \varphi (\cdot ,j,t))(y)\) is continuous since the kernel J is Feller, and \(\pi _{ij},\, \varphi (\cdot ,j,t)\in C_b(Y)\). Consequently, taking into account the continuity of both \(\lambda \) and the semiflows, it follows that, for all \(j\in I\) and \(t\ge 0\), the maps

are continuous. Moreover, all these maps are bounded by \({\bar{\lambda }}\, e^{-{\underline{\lambda }}t}\left\| \varphi \right\| _{\infty }\). Hence, using the Lebesgue dominated convergence theorem, we can conclude that the function

is continuous, as claimed.

In light of the observation above, all the maps \(X\ni x\mapsto {\bar{P}}^n(g_T{\bar{P}}h_T)({\bar{x}})\), \(n\in {\mathbb {N}}_0\), are continuous. Furthermore, from (6.5) and Lemma 6.2 it follows that

for all \(x\in X\) and \(n\in {\mathbb {N}}_0\). Hence, the continuity of \(P_Tf\) can be now deduced by applying the discrete version of the Lebesgue dominated convergence theorem to (6.6).

(ii) Let us define

and

Obviously, \(u_f(\cdot , T)\) and \(\psi _f(\cdot , t, T)\) are Borel measurable for any \(T\in {\mathbb {R}}_+\) and \(0\le t\le T\).

Now, fix \(T\in {\mathbb {R}}_+\). Then, according to (6.6), we have

Keeping in mind (3.2) and (6.7), we see that for any \(x=(y,i)\in X\) and \(s\in {\mathbb {R}}_+\),

which, in particular, gives

Appealing to (6.10), we can also conclude that

which, together with (6.9) and (6.11) implies (5.1).

Further, referring to (6.8), we obtain

which shows that \(u_f\) fulfills the conditions specified in (5.2). In turn, the properties of the function \(\psi _f\) stated in (5.3) and (5.4) follow directly from its definition and (3.1).

Now, suppose that J satisfies Assumption 4.4, \(f\in C_b(X)\), and let \(x=(y,i)\in X\). To prove that the map \(D_{\psi }\ni (t,T)\mapsto \psi _f(x,t,T)\) is jointly continuous, define

Then

Taking into account (3.1), for every \(j\in I\) and any \((u,t), (u_0,t_0)\in Y\times {\mathbb {R}}_+\), we have

Hence, in view of the continuity of \(\lambda \) and \(S_j(h,\cdot )\) for any \(h\ge 0\), we can conclude (by applying the Lebesgue dominated convergence theorem) that \((u,t)\mapsto \Lambda (u,j,t)\) is jointly continuous for each \(j\in I\). This, together with the continuity of f, \(S_j\) and \(\pi _{ij}\), \(j\in I\), shows that g is jointly continuous as well, which, in turn, guarantees that \(g\in C_b(Y\times {\mathbb {R}}_+)\), since \(|g(u,t)|\le \left\| f\right\| _{\infty }\) for all \((u,t)\in Y\times {\mathbb {R}}_+\). Eventually, it now follows from Assumption 4.4 and the continuity of \(S_i(\cdot ,y)\) that the map \(D_{\psi }\ni (t,T)\mapsto Jg(\cdot , T-t)(S_i(t,y))\) is jointly continuous, and thus so is \(D_{\psi }\ni (t,T)\mapsto \psi _f(x,t,T)\).

(iii) Statement (iii) follows immediately from (ii). \(\square \)

6.2 Proofs of Lemmas 5.2 and 5.3

Before we proceed, let us recall that \(A_P\) and \(A_Q\) stand for the weak generators of \(\{P_t\}_{t\in {\mathbb {R}}_+}\) and \(\{Q_t\}_{t\in {\mathbb {R}}_+}\), respectively, considered as contraction semigroups on \(C_b(X)\), where \(\{Q_t\}_{t\in {\mathbb {R}}_+}\) is defined by (5.5). Also, keep in mind that G and W are the kernels specified in (4.1) and (4.2), respectively, while \({\hat{\lambda }}\) is given by (4.3).

Proof of Lemma 5.2

Let \(f\in C_b(X)\) and define

for any \(x\in X\) and \(T>0\), where \(\psi _f\) is the function specified in statement (ii) of Lemma 5.1. Recall that, according to this lemma, the map \(D_{\psi }\ni (t,T) \mapsto \psi _f(x,t,T)\) is jointly continuous for every \(x\in X\) (due to Assumption 4.4).

We will first show that

To do this, let \(x\in X\). Since the maps \([0,T]\ni t\mapsto \psi _f(x,t,T)\), \(T>0\), are continuous, the mean value theorem for integrals guarantees that, for every \(T>0\), the first component on the right-hand side of (6.13) is equal to \(\psi _f(x,t_x(T),T)\) for some \(t_x(T)\in [0,T]\). Consequently, taking into account the continuity of \(T\mapsto \psi _f(x,t_x(T),T)\) and (5.4), we see that

This, together with the fact that

resulting from (5.3), implies that \(w^*\text{- }\lim _{T\rightarrow 0}T^{-1}\int _0^T \psi _f(\cdot ,t,T)\,dt={\hat{\lambda }}Wf\). Further, from l’Hospital’s rule it follows that

and (3.1) ensures that

whence \(w^*\text{- }\lim _{T\rightarrow 0} (1-e^{-\Lambda (\cdot ,T)})/T={\hat{\lambda }}\). Moreover, (5.2) gives \(w^*\text{- }\lim _{T\rightarrow 0} u_f(\cdot ,T)/T=0\). Finally, we see that \({\hat{\lambda }}Wf-{\hat{\lambda }}f\in C_b(X)\), since W is Feller (by Assumption 4.4) and \({\hat{\lambda }}\in C_b(X)\). Altogether, this proves that (6.14) holds.

Now, referring to (5.1), we can write

which, due to (5.5) and (6.13), gives

In view of (6.14) and the fact that \(w^*\text{- }\lim _{T\rightarrow 0} e^{-\Lambda (\cdot ,T)}=1\), this observation shows that \(f\in D(A_P)\) iff \(f\in D(A_Q)\), and that (5.6) is satisfied for all \(f\in D(A_P)=D(A_Q)\). The proof of the lemma is therefore complete. \(\square \)

Proof of Lemma 5.3

Obviously, it suffices to show that (5.7) and (5.8) hold.

To this end, let \(f\in C_b(X)\). Then, using the flow property, for any \(x=(y,i)\in X\) and any \(T>0\), we obtain

Making the substitutions \(t={\bar{t}}+T\) and \(h={\bar{h}}+T\) therefore gives

Further, since

it follows that

Hence, for all \(x=(y,i)\in X\) and \(T>0\), we have

Now, using l’Hospital’s rule and taking into account the continuity of the integrand on the right-hand side of this equality, we can conclude that

and \({\hat{\lambda }}(Gf-f)\in C_b(X)\), since G is Feller. Moreover, bearing in mind (3.1), we see that

for every \(T\in (0,\delta )\) with sufficiently small \(\delta >0\). We have therefore shown that

This obviously means that \(Gf\in D(A_Q)\) and \(A_Q(Gf)={\hat{\lambda }}(Gf-f)\), which immediately implies (5.7).
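The identity \(A_Q(Gf)={\hat{\lambda }}(Gf-f)\) can be checked numerically in a toy case. The sketch below is only an illustration under assumptions that are not part of the paper: \(Y={\mathbb {R}}\), a single semiflow \(S(t,y)=y+t\) (so that \(A_Q g=g'\) for smooth g), the rate \(\lambda (y)=2+\sin y\), and the test function \(f(y)=\cos (3y)\). The operator G is approximated by Simpson's rule on a truncated interval, and the generator by a central difference.

```python
import math


def lam(y):
    # Hypothetical jump rate: bounded, continuous, bounded below by 1.
    return 2.0 + math.sin(y)


def big_lambda(y, t):
    # Lambda(y, t) = int_0^t lam(y + h) dh, in closed form for this lam.
    return 2.0 * t + math.cos(y) - math.cos(y + t)


def f(y):
    return math.cos(3.0 * y)


def G(func, y, t_max=40.0, n=40000):
    """Composite Simpson approximation (n even) of
    G func(y) = int_0^inf lam(y + t) * exp(-Lambda(y, t)) * func(y + t) dt,
    truncated at t_max, where the integrand is negligible."""
    h = t_max / n

    def integrand(t):
        return lam(y + t) * math.exp(-big_lambda(y, t)) * func(y + t)

    total = integrand(0.0) + integrand(t_max)
    for k in range(1, n):
        total += (4.0 if k % 2 else 2.0) * integrand(k * h)
    return total * h / 3.0


def generator_of_Gf(y, eps=1e-4):
    # For the translation flow, A_Q g = g'; use a central difference.
    return (G(f, y + eps) - G(f, y - eps)) / (2.0 * eps)


y0 = 0.7
lhs = generator_of_Gf(y0)               # approximates A_Q(Gf)(y0)
rhs = lam(y0) * (G(f, y0) - f(y0))      # lam * (Gf - f) at y0
print(f"A_Q(Gf) ~ {lhs:.6f},  lam*(Gf - f) ~ {rhs:.6f}")
```

The two printed values should agree up to discretisation error; the choice of flow and rate is arbitrary, and any bounded continuous rate with a positive lower bound could be substituted as long as `big_lambda` is updated accordingly.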

What is left is to prove (5.8). To this end, let \(f\in D(A_Q)\) and \(x=(y,i)\in X\). Then

According to statement (ii) of Remark 2.1, we have

whence it finally follows that

which clearly yields (5.8). The proof is now complete. \(\square \)

7 Application to a Particular Subclass of PDMPs

In this section we shall use Theorem 4.5 and [3, Theorem 4.1] (cf. also [4, Theorem 4.1] for the case of constant \(\lambda \)) to provide a set of tractable conditions guaranteeing the existence and uniqueness of a stationary distribution for some particular PDMP, where J is the transition law of a random iterated function system with an arbitrary family of transformations and state-dependent probabilities of selecting them.

Let \(\Theta \) be a topological space, and suppose that \(\vartheta \) is a non-negative Borel measure on \(\Theta \). Further, consider an arbitrary set \(\{w_{\theta }:\,\theta \in \Theta \}\) of continuous transformations from Y to itself and an associated collection \(\{p_{\theta }:\, \theta \in \Theta \}\) of continuous maps from Y to \({\mathbb {R}}_+\) such that, for every \(y\in Y\), \(\theta \mapsto p_{\theta }(y)\) is a probability density function with respect to \(\vartheta \). Moreover, assume that \((y,\theta )\mapsto w_{\theta }(y)\) and \((y,\theta )\mapsto p_{\theta }(y)\) are product measurable. Given this framework, we are concerned with the kernel J defined by

$$\begin{aligned} J(y,B):=\int _{\Theta } \mathbb {1}_B(w_{\theta }(y))\,p_{\theta }(y)\,\vartheta (d\theta )\quad \text {for}\quad y\in Y,\; B\in {\mathcal {B}}(Y). \end{aligned} \tag{7.1}$$

The transition law P, specified by (3.3), can be then expressed exactly as in [3], i.e.,

for any \(y\in Y\), \(i\in I\) and \(A\in {\mathcal {B}}(X)\). Moreover, note that, in this case, the first coordinate of \(\Phi \) satisfies the recursive formula:

where \(\{\eta _n\}_{n\in {\mathbb {N}}}\) is a sequence of random variables with values in \(\Theta \), such that

The aforementioned [3, Theorem 4.1] (whose proof relies on [12, Theorem 2.1]) provides certain conditions under which the transition operator P, induced by (7.2), is exponentially ergodic (in the so-called bounded Lipschitz distance) and notably possesses a unique invariant distribution, which belongs to \({\mathcal {M}}_1^V(X)\). To establish the main result of this section, we shall therefore incorporate several additional requirements, which, along with Assumptions 4.8 and 4.9, will enable us to apply this theorem and, simultaneously, ensure the fulfillment of Assumption 4.4, involved in Theorem 4.5.
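To make the recursive description of the post-jump chain more concrete, here is a minimal simulation sketch. Everything specific in it is a hypothetical choice made for illustration: \(Y={\mathbb {R}}\), \(I=\{0,1\}\), two contracting semiflows, \(\Theta =\{-1,1\}\) with the counting measure, affine maps \(w_{\theta }\), place-dependent probabilities \(p_{\theta }\), and switching probabilities \(\pi _{ij}\) evaluated at the new post-jump location. Inter-jump times are drawn by thinning, which is a standard technique assumed here rather than one prescribed by the paper.

```python
import math
import random

THETAS = (-1.0, 1.0)   # Theta, with the counting measure as vartheta
RATES = (1.0, 3.0)     # contraction speeds of the two semiflows
LAM_UPPER = 2.0        # upper bound of the jump rate


def flow(i, t, y):
    # S_i(t, y) = y * exp(-RATES[i] * t)
    return y * math.exp(-RATES[i] * t)


def lam(y):
    return 1.0 + 1.0 / (1.0 + y * y)


def w(theta, y):
    return 0.5 * y + theta


def p(theta, y):
    # p_{-1}(y) + p_{+1}(y) = 1 for every y, both values in [0.25, 0.75]
    return 0.5 - 0.25 * theta * math.tanh(y)


def stay_prob(y):
    # pi_ii(y); the probability of switching is 1 - pi_ii(y)
    return 0.5 + 0.25 * math.tanh(y)


def next_post_jump(y, i, rng):
    """One step (Y_n, xi_n) -> (Y_{n+1}, xi_{n+1}), plus the inter-jump time."""
    t = 0.0
    while True:  # thinning against the constant rate LAM_UPPER
        t += rng.expovariate(LAM_UPPER)
        if rng.random() * LAM_UPPER <= lam(flow(i, t, y)):
            break
    pre_jump = flow(i, t, y)  # location just before the jump
    theta = THETAS[0] if rng.random() < p(THETAS[0], pre_jump) else THETAS[1]
    y_new = w(theta, pre_jump)
    j = i if rng.random() < stay_prob(y_new) else 1 - i
    return y_new, j, t


rng = random.Random(2024)
y, i = 0.0, 0
path = [(y, i)]
for _ in range(1000):
    y, i, _dt = next_post_jump(y, i, rng)
    path.append((y, i))
print("last post-jump state:", path[-1])
```

With these particular choices the flows never increase \(|y|\) and \(|w_{\theta }(y)|\le 0.5|y|+1\), so a chain started in \([-2,2]\) stays there; this is a property of the toy example, not a statement about the general model.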

In connection with the above, we first make one more assumption on the semiflows \(S_i\):

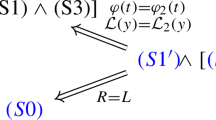

Assumption 7.1

There exist a Lebesgue measurable function \(\varphi :{\mathbb {R}}_+\rightarrow {\mathbb {R}}_+\) satisfying

and a function \({\mathcal {L}}:Y\rightarrow {\mathbb {R}}_+\), bounded on bounded sets, such that

Further, we employ the following assumption on the probabilities \(\pi _{ij}\), associated with the semiflows switching:

Assumption 7.2

There exist positive constants \(L_{\pi }\) and \(\delta _{\pi }\) such that

Finally, let us impose certain conditions on the components of the kernel J, defined by (7.1).

Assumption 7.3

There exists \(y_*\in Y\) such that

Assumption 7.4

There exist positive constants \(L_w\), \(L_p\) and \(\delta _p\) such that

where

In addition to that, we will require that the constants \(L,\alpha \) in Assumption 4.9 and \(L_w\) in Assumption 7.4 are interrelated by the inequality

$$\begin{aligned} L L_w{\bar{\lambda }}+\alpha <{\underline{\lambda }}. \end{aligned} \tag{7.6}$$

It is worth stressing here that conditions similar in form to those gathered in Assumptions 7.3 and 7.4 (with \(L_w<1\)) are commonly utilized when examining the existence of invariant measures and stability properties of random iterated function systems; see, e.g., [12, Proposition 3.1] or [15, Theorem 3.1].

Remark 7.5

In what follows, we shall demonstrate that [3, Theorem 4.1], involving conditions [3, (A1)-(A6) and (20)], remains valid (with almost the same proof) under Assumptions 4.8, 4.9 and 7.1–7.4, provided that the constants \(L,\alpha \) and \(L_w\) satisfy (7.6) (which corresponds to (20) in [3]), and that \(\lambda \) is Lipschitz continuous.

(i) Hypothesis (A1), which states that, for some \(y_*\in Y\),

$$\begin{aligned} \max _{i\in I}\sup _{y\in Y}\int _0^{\infty } e^{-{\underline{\lambda }}t}\int _{\Theta }\rho _Y(w_{\theta }(S_i(t,y_*)),y_*)p_{\theta }(S_i(t,y))\,\vartheta (d\theta )\,dt<\infty , \end{aligned}$$

can be replaced with the conjunction of Assumptions 4.8 and 7.3. More precisely, the only place where (A1) is used in the proof of [3, Theorem 4.1] is Step 1, which aims to show that P satisfies the Lyapunov condition with V given by (4.6), i.e., there exist \(a\in [0,1)\) and \(b\ge 0\) such that

$$\begin{aligned} PV(x)\le a V(x)+b\quad \text {for all}\quad x\in X. \end{aligned} \tag{7.7}$$

This condition is achieved there with

$$\begin{aligned} a:=\frac{{\bar{\lambda }}L_w L}{{\underline{\lambda }}-\alpha }, \end{aligned} \tag{7.8}$$

which is obviously \(<1\) due to inequality (7.6). However, as will be seen in Lemma 7.8 (established below), condition (7.7) can be derived (with the same a) using Assumptions 4.8, 4.9, 7.3, and property (7.4) (included in Assumption 7.4).

(ii) Condition (A2) is equivalent to the conjunction of Assumptions 4.9 and 7.1 with \(\varphi (t)=t\). Nevertheless, a simple analysis of the proof of [3, Theorem 4.1] shows that it remains valid if \(\varphi :{\mathbb {R}}_+\rightarrow {\mathbb {R}}_+\) is an arbitrary Lebesgue measurable function satisfying (7.3).

(iii) Hypotheses (A3), (A5) and (A6) simply coincide with Assumptions 7.2 and 7.4.

(iv) Hypothesis (A4) just corresponds to the assumed Lipschitz continuity of \(\lambda \).

Remark 7.6

Regarding Remark 7.5(i), the sole reason we employ Assumptions 4.8 and 7.3 in this paper instead of hypothesis [3, (A1)] is to get the conclusion of Proposition 4.10(i), which ensures that the invariant measures of \(\{P_t\}_{t\in \mathbb {R_+}}\) inherit the property of having finite first moments w.r.t. V from the invariant measures of P.

It is worth emphasizing that Assumptions 4.8 and 7.3 do not generally imply [3, (A1)]. However, it is easy to check (using the triangle inequality) that such an implication does hold in at least three special cases: first, when all \(w_{\theta }\) are Lipschitz continuous (thus strengthening (7.4)); second, when all the maps \(p_{\theta }\) are constant; and third, when there exists a point \(y_*\in Y\) such that \(S_i(t,y_*)=y_*\) for all \(t\ge 0\) and \(i\in I\) (in this case Assumption 4.8 holds trivially).

The following two lemmas justify statement (i) of Remark 7.5.

Lemma 7.7

Suppose that Assumption 7.3 is fulfilled, and that condition (7.4) holds for some \(L_w>0\). Then the operator \(J(\cdot )\) on \(B_b(X)\) induced by the kernel specified in (7.1) satisfies (4.7) with \({\widetilde{V}}:=\rho _Y(\cdot ,y_*)\), \({\tilde{a}}:=L_w\) and \({\tilde{b}}:=\gamma (y_*)\).

Proof

For every \(y\in Y\) we have

\(\square \)
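As a hedged sketch of the estimate behind this proof, suppose that (7.4) has the usual form \(\int _{\Theta }\rho _Y(w_{\theta }(u),w_{\theta }(v))\,p_{\theta }(u)\,\vartheta (d\theta )\le L_w\rho _Y(u,v)\), that \(\gamma (y_*)\) denotes the finite supremum \(\sup _{y\in Y}\int _{\Theta }\rho _Y(w_{\theta }(y_*),y_*)\,p_{\theta }(y)\,\vartheta (d\theta )\) from Assumption 7.3, and that (4.7) is the drift condition \(J{\widetilde{V}}\le {\tilde{a}}{\widetilde{V}}+{\tilde{b}}\). These forms are assumptions made here for illustration. Under them, the triangle inequality would give, for every \(y\in Y\),

$$\begin{aligned} J{\widetilde{V}}(y)&=\int _{\Theta }\rho _Y(w_{\theta }(y),y_*)\,p_{\theta }(y)\,\vartheta (d\theta )\\&\le \int _{\Theta }\rho _Y(w_{\theta }(y),w_{\theta }(y_*))\,p_{\theta }(y)\,\vartheta (d\theta )+\int _{\Theta }\rho _Y(w_{\theta }(y_*),y_*)\,p_{\theta }(y)\,\vartheta (d\theta )\\&\le L_w\,\rho _Y(y,y_*)+\gamma (y_*)=L_w{\widetilde{V}}(y)+\gamma (y_*). \end{aligned}$$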

Lemma 7.8

Under Assumptions 4.8 and 4.9, if (4.7) holds with \({\widetilde{V}}:=\rho _Y(\cdot ,y_*)\) and certain constants \({\tilde{a}},{\tilde{b}}\ge 0\), then (7.7) is satisfied with V given by (4.6) and the constants

In particular, if J is of the form (7.1), then Assumptions 4.8, 4.9, 7.3 and condition (7.4) yield that (7.7) holds with the constant a given by (7.8).

Proof

Clearly, \(b<\infty \) by Assumption 4.8. Let \(x=(y,i)\in X\). Using condition (4.7) and Assumption 4.9, we obtain

This, in turn, gives

The second part of the assertion follows directly from Lemma 7.7. \(\square \)

What is now left to establish the main theorem of this section is to show that the model under consideration fulfills Assumption 4.4.

Lemma 7.9

If condition (7.5) holds for some \(L_p>0\), then the kernel J defined by (7.1) fulfills Assumption 4.4.

Proof

Let \(g\in C_b(Y\times {\mathbb {R}}_+)\) and fix \((y_0,t_0)\in Y\times {\mathbb {R}}_+\). Then, for any \(y\in Y\) and \(t\ge 0\), we can write

It now suffices to observe that both terms on the right-hand side of this estimate tend to 0 as \((t,y)\rightarrow (t_0,y_0)\). The convergence of the first term follows from the Lebesgue dominated convergence theorem, since \(w_{\theta }\) and g are continuous (and the latter is also bounded). The second one converges by condition (7.5) and the boundedness of g, which allows us to bound it from above by \(\left\| g\right\| _{\infty }L_p\rho _Y(y_0,y)\). \(\square \)
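For orientation, a two-term bound of the kind used in this proof can be sketched as follows, assuming that J acts by \(Jg(\cdot ,t)(y)=\int _{\Theta }g(w_{\theta }(y),t)\,p_{\theta }(y)\,\vartheta (d\theta )\) and that (7.5) reads \(\int _{\Theta }|p_{\theta }(u)-p_{\theta }(v)|\,\vartheta (d\theta )\le L_p\rho _Y(u,v)\); both forms are assumptions made here, and the decomposition shown is one natural choice rather than necessarily the author's exact display:

$$\begin{aligned} \left| Jg(\cdot ,t)(y)-Jg(\cdot ,t_0)(y_0)\right|&\le \int _{\Theta }\left| g(w_{\theta }(y),t)-g(w_{\theta }(y_0),t_0)\right| p_{\theta }(y_0)\,\vartheta (d\theta )\\&\quad +\int _{\Theta }\left| g(w_{\theta }(y),t)\right| \left| p_{\theta }(y)-p_{\theta }(y_0)\right| \vartheta (d\theta ). \end{aligned}$$

In the first integral the integrand tends to 0 pointwise in \(\theta \) and is dominated by \(2\left\| g\right\| _{\infty }p_{\theta }(y_0)\), which is \(\vartheta \)-integrable; the second integral is at most \(\left\| g\right\| _{\infty }L_p\rho _Y(y_0,y)\).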

Theorem 7.10

Let J be of the form (7.1). Further, suppose that Assumptions 4.8, 4.9 and 7.1–7.4 are fulfilled, and that the constants \(\alpha , L\) and \(L_w\) can be chosen so that (7.6) holds. Moreover, assume that the function \(\lambda \) is Lipschitz continuous. Then the transition semigroup \(\{P_t\}_{t\in {\mathbb {R}}_+}\) of the process \(\Psi \), determined by (1.1), has a unique invariant probability measure, which belongs to \({\mathcal {M}}_1^V(X)\) with V given by (4.6).

Proof

First of all, according to Remark 7.5, we can apply [3, Theorem 4.1] to conclude that the transition operator P of the chain \(\Phi \), specified by (7.2), possesses a unique invariant probability measure \(\mu _*^{\Phi }\), which is a member of \({\mathcal {M}}_1^V(X)\). On the other hand, Lemma 7.9 guarantees that the kernel J fulfills Assumption 4.4. Consequently, it now follows from Theorem 4.5 (cf. also Corollary 4.7) that \(\mu _*^{\Psi }\) given by (4.4) is the unique invariant probability measure of the semigroup \(\{P_t\}_{t\in {\mathbb {R}}_+}\). Finally, Proposition 4.10(i) yields that \(\mu _*^{\Psi }\in {\mathcal {M}}_1^V(X)\), which ends the proof. \(\square \)

Data Availability Statement

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

References

[1] Benaïm, M., Le Borgne, S., Malrieu, F., et al.: Qualitative properties of certain piecewise deterministic Markov processes. Ann. Inst. Henri Poincaré Probab. 51(3), 1040–1075 (2015). https://doi.org/10.1214/14-aihp619

[2] Costa, O.: Stationary distributions for piecewise-deterministic Markov processes. J. Appl. Prob. 27(01), 60–73 (1990). https://doi.org/10.1017/s0021900200038420

[3] Czapla, D., Kubieniec, J.: Exponential ergodicity of some Markov dynamical systems with application to a Poisson driven stochastic differential equation. Dyn. Syst. 34(1), 130–156 (2018). https://doi.org/10.1080/14689367.2018.1485879

[4] Czapla, D., Horbacz, K., Wojewódka-Ściążko, H.: Ergodic properties of some piecewise-deterministic Markov process with application to gene expression modelling. Stoch. Proc. Appl. 130(5), 2851–2885 (2020). https://doi.org/10.1016/j.spa.2019.08.006

[5] Czapla, D., Horbacz, K., Wojewódka-Ściążko, H.: Exponential ergodicity in the bounded-Lipschitz distance for some piecewise-deterministic Markov processes with random switching between flows. Nonlinear Anal. 215, 112678 (2022). https://doi.org/10.1016/j.na.2021.112678

[6] Davis, M.: Piecewise-deterministic Markov processes: a general class of non-diffusion stochastic models. J. R. Stat. Soc. Ser. B (Methodol.) 46(3), 353–376 (1984). https://doi.org/10.1111/j.2517-6161.1984.tb01308.x

[7] Davis, M.: Markov Models and Optimization. Monographs on Statistics and Applied Probability, vol. 49, 1st edn. Chapman & Hall/CRC, Boca Raton (1993). https://doi.org/10.1201/9780203748039

[8] Dynkin, E.: Markov Processes I. Springer-Verlag, Berlin (1965)

[9] Dynkin, E.: Selected Papers of E.B. Dynkin with Commentary. American Mathematical Society, Providence, RI; International Press, Cambridge (2000)

[10] Ethier, S., Kurtz, T.: Markov Processes: Characterization and Convergence. Wiley Series in Probability and Statistics, John Wiley & Sons Inc, Hoboken (1986). https://doi.org/10.1002/9780470316658

[11] Horbacz, K.: Invariant measures for random dynamical systems. Diss. Math. 451, 1–63 (2008). https://doi.org/10.4064/dm451-0-1

[12] Kapica, R., Ślęczka, M.: Random iteration with place dependent probabilities. Probab. Math. Stat. 40(1), 119–137 (2020). https://doi.org/10.37190/0208-4147.40.1.8

[13] Mackey, M., Tyran-Kamińska, M., Yvinec, R.: Dynamic behavior of stochastic gene expression models in the presence of bursting. SIAM J. Appl. Math. 73(5), 1830–1852 (2013). https://doi.org/10.1137/12090229X

[14] Revuz, D.: Markov Chains. North-Holland Elsevier, Amsterdam (1975)

[15] Szarek, T.: Invariant measures for Markov operators with application to function systems. Stud. Math. 154(3), 207–222 (2003). https://doi.org/10.4064/sm154-3-2

Funding

The author declares that no funds, grants, or other support were received during the preparation of this manuscript.

Ethics declarations

Conflict of interest

The author declares that he has no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Czapla, D. On the Existence and Uniqueness of Stationary Distributions for Some Piecewise Deterministic Markov Processes with State-Dependent Jump Intensity. Results Math 79, 177 (2024). https://doi.org/10.1007/s00025-024-02195-3

DOI: https://doi.org/10.1007/s00025-024-02195-3

Keywords

- Piecewise deterministic Markov process

- invariant measure

- one-to-one correspondence

- state-dependent jump intensity

- switching semiflows