Abstract

Many of today’s problems require techniques that involve the solution of arbitrarily large systems \(A\textbf{x}=\textbf{b}\). A popular numerical approach is the so-called Greedy Rank-One Update Algorithm, based on a particular tensor decomposition. Numerical experiments indicate that this algorithm converges especially fast when the matrix of the linear system is Laplacian-like. These matrices, which follow the tensor structure of the Laplacian operator, are formed by sums of Kronecker products of matrices following a particular pattern. Moreover, this set of matrices is not only a linear subspace but also a Lie subalgebra of a matrix Lie algebra. In this paper, we characterize this class of matrices and give its main properties. These results allow us to propose an algorithm that explicitly computes the orthogonal projection of a given square matrix \(A \in \mathbb {R}^{N\times N}\) onto this subspace.


1 Introduction

The study of linear systems dates back to the time of the Babylonians, who used words like ‘length’ or ‘width’ to designate the unknowns even when these were unrelated to measurement problems. The Greeks also solved some systems of equations, but using geometric methods [1]. Over the years, methods for solving linear systems continued to be developed, leading to iterative methods, whose use goes back to Gauss in the first half of the 19th century. The development of computers in the mid-20th century prompted numerous mathematicians to delve into the study of this problem [2, 3].

Nowadays, linear systems arise widely in computational models in the applied sciences, for example, in mechanics, after the discretization of a partial differential equation. The literature offers numerous approaches to this type of problem, such as matrix decompositions (QR decomposition, LU decomposition), iterative methods (Newton, quasi-Newton, ...), and optimization algorithms (stochastic gradient descent, alternating least squares, ...), among others; see for instance [4,5,6]. However, most of them lose efficiency as the size of the matrices or vectors involved increases. This effect is known as the curse of dimensionality.

To mitigate this drawback, we can use tensor-based algorithms [7], since they significantly reduce the number of operations required. For example, a matrix of size \(100 \times 100\) (i.e., a total of 10,000 entries) can be obtained as the tensor product of two matrices of size \(10 \times 10\), which requires storing only \(100 + 100 = 200\) entries [8].
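The storage count in this example can be checked with a few lines of plain Python. This is only an illustrative sketch: the helper `kron` and the two \(10 \times 10\) factors are assumptions introduced here, not part of the original text.

```python
def kron(A, B):
    """Kronecker product of two matrices stored as lists of rows."""
    return [[a * b for a in row_a for b in row_b]
            for row_a in A for row_b in B]

n = 10
# Two arbitrary 10 x 10 factors (illustrative values only).
A = [[i + j for j in range(n)] for i in range(n)]
B = [[i * j + 1 for j in range(n)] for i in range(n)]

C = kron(A, B)

full_entries = len(C) * len(C[0])   # entries of the assembled 100 x 100 matrix
factor_entries = n * n + n * n      # entries stored when only the factors are kept
print(len(C), len(C[0]), full_entries, factor_entries)
```

Keeping only the factors is what makes tensor formats attractive: the assembled matrix never has to be stored.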

Among the algorithms based on tensor product strategies [9], the Proper Generalized Decomposition (PGD) family, based on the so-called Greedy Rank-One Update (GROU) algorithm [10, 11], is one of the most popular techniques. PGD methods can be interpreted as ‘a priori’ model reduction techniques because they provide a way for the ‘a priori’ construction of optimally reduced bases for the representation of the solution. In particular, they impose a separation of variables to approximate the exact solution of a problem without knowing, in principle, the functions involved in this decomposition [12, 13]. The GROU procedure is given in pseudocode in Algorithm 1 (where \(\otimes \) denotes the Kronecker product, which is briefly introduced in Sect. 2).

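A good example is provided by the Poisson equation \(-\Delta \phi = \textbf{f}\). Let us consider the following problem in 3D,

$$\begin{aligned} -\Delta \phi = \textbf{f} \quad \text { in } \Omega = (0,1)^3, \qquad \phi |_{\partial \Omega } = 0, \end{aligned} \tag{1}$$

where \(\textbf{f}(x,y,z)=3 \cdot (2\pi )^2 \cdot \sin (2\pi x-\pi )\sin (2\pi y-\pi )\sin (2\pi z-\pi )\). This problem has the closed-form solution

$$\begin{aligned} \phi (x,y,z) = \sin (2\pi x-\pi )\sin (2\pi y-\pi )\sin (2\pi z-\pi ). \end{aligned}$$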

By using the Finite Element Method (see [10] for more details), we can write the Poisson equation (1) in discrete form as a linear system \(A \cdot \phi _{ijk} = -\textbf{f}_{ijk}\), where the indices i, j, k correspond to the discretization of x, y and z respectively, and A is a matrix with a particular representation, called Laplacian-like (see Definition 2.2 below), that allows a high-dimensional linear system to be solved efficiently. In Fig. 1, we compare the CPU time employed in solving this discrete Poisson problem using the GROU Algorithm and the Matlab operator \(\textbf{x}=A\backslash \textbf{b}\), for different numbers of nodes in \((0,1)^3\).

Fig. 1 CPU time comparison for solving the discrete Poisson equation. For this numerical test, we have used a computer with the following characteristics: 11th Gen Intel(R) Core(TM) i7-11370H @ 3.30GHz, RAM 16 GB, 64 bit operating system; and a Matlab version R2021b [14]

So, we will use this fact to study whether, for a given generic square matrix, a characterization can be stated that allows us to decide whether it is Laplacian-like or not. Clearly, in the affirmative case, we expect the analysis of the associated linear system \(A\textbf{x}=\textbf{b}\) to be simpler. This kind of linear operator also exists in infinite dimensional vector spaces to describe evolution equations in tensor Banach spaces [15]. Its main property is that the associated dynamical system has an invariant manifold, the manifold of elementary tensors (see [16] for the details about its manifold structure).

Thus, the goal of this paper is to obtain a complete description of this linear space of matrices, showing that it is, in fact, a Lie subalgebra of \(\mathbb {R}^{N\times N}\), and to provide an algorithm that computes the best approximation of a given matrix in this linear space, that is, that explicitly computes the orthogonal projection onto that space.

The paper is organized as follows: in Sect. 2, we introduce the linear subspace of Laplacian-like matrices and prove that it is also a matrix Lie subalgebra associated to a particular Lie group. Then, in Sect. 3, we prove that any Laplacian-like matrix is uniquely decomposed as the sum of a matrix in the subspace generated by the identity matrix and a Laplacian-like matrix with null trace, and we show that the latter part is a direct sum of some particular orthogonal subspaces. Section 4 is devoted, with the help of the results of the previous section, to proposing an algorithm that explicitly computes the orthogonal projection onto the subspace of Laplacian-like matrices. To illustrate this result, we also give two different numerical examples: the first one on the adjacency matrix of a simple graph, and the second on the numerical solution of the Poisson equation (1) by using a Finite Difference Scheme. Finally, in Sect. 5 some conclusions and final remarks are given.

2 The Algebraic Structure of Laplacian-Like Matrices

First of all, we introduce some definitions that will be used throughout this work.

Definition 2.1

Let \(A \in \mathbb {R}^{M \times N}.\) Then, the Frobenius norm (or the Hilbert-Schmidt norm) is defined as

$$\begin{aligned} \Vert A\Vert _F = \sqrt{\sum _{i=1}^{M}\sum _{j=1}^{N} |a_{ij}|^2} = \sqrt{\textrm{tr}\left( A^{\top }A\right) }. \end{aligned}$$

The Frobenius norm is the norm induced by the trace inner product; therefore, when \(N=M\), we can work with the scalar product given by \(\langle A,B\rangle =\textrm{tr}\left( A^{\top }B\right) \). Let us observe that, in \(\mathbb {R}^{N \times N}\),

1. \(\langle A,B\rangle _{\mathbb {R}^{N\times N}}=\textrm{tr}\left( A^{\top }B\right) \)

2. \(\langle A,\textrm{id}_{N}\rangle _{\mathbb {R}^{N\times N}}=\textrm{tr}(A)=\textrm{tr}\left( A^{\top }\right) \)

3. \(\langle \textrm{id}_{N},\textrm{id}_{N}\rangle _{\mathbb {R}^{N\times N}}=\Vert \textrm{id}_{N} \Vert _F^2=N.\)

Given a linear subspace \({\mathcal {U}} \subset \mathbb {R}^{N \times N}\) we will denote:

(a) the orthogonal complement of \({\mathcal {U}}\) in \(\mathbb {R}^{N \times N}\) by

$$\begin{aligned} {\mathcal {U}}^{\bot } = \left\{ V \in \mathbb {R}^{N \times N}: \langle U, V\rangle _{\mathbb {R}^{N \times N}} = 0 \text { for all } U \in {\mathcal {U}} \right\} , \end{aligned}$$

and,

(b) the orthogonal projection of \( \mathbb {R}^{N \times N}\) on \({\mathcal {U}}\) as

$$\begin{aligned} P_{{\mathcal {U}}}(V):= \arg \min _{U \in {\mathcal {U}}}\Vert U-V\Vert _F, \end{aligned}$$

and hence

$$\begin{aligned} P_{{\mathcal {U}}^{\bot }} = \textrm{id}_N - P_{{\mathcal {U}}}. \end{aligned}$$

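Before defining a Laplacian-like matrix, we recall that the Kronecker product of two matrices \(A \in \mathbb {R}^{N_1 \times M_1}\), \(B \in \mathbb {R}^{N_2 \times M_2}\) is defined by

$$\begin{aligned} A \otimes B = \begin{pmatrix} a_{1,1}B & a_{1,2}B & \cdots & a_{1,M_1}B \\ a_{2,1}B & a_{2,2}B & \cdots & a_{2,M_1}B \\ \vdots & \vdots & \ddots & \vdots \\ a_{N_1,1}B & a_{N_1,2}B & \cdots & a_{N_1,M_1}B \end{pmatrix} \in \mathbb {R}^{N_1N_2 \times M_1M_2}. \end{aligned}$$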

Some of the well-known properties of the Kronecker product are:

1. \(A \otimes (B \otimes C) = (A \otimes B) \otimes C\).

2. \((A + B) \otimes C = (A \otimes C)+(B \otimes C)\).

3. \(AB \otimes CD = (A \otimes C)(B \otimes D)\).

4. \((A \otimes B)^{-1}=A^{-1}\otimes B^{-1}\).

5. \((A \otimes B)^{\top } = A^{\top } \otimes B^{\top }\).

6. \(\textrm{tr}(A \otimes B) = \textrm{tr}(A) \textrm{tr}(B).\)
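Properties 3, 5 and 6 are the ones used repeatedly in the sequel, and they are easy to check numerically. The following plain-Python sketch does so on small integer matrices; the helper functions and the test matrices are illustrative assumptions, not part of the original text.

```python
def kron(A, B):
    """Kronecker product of two matrices stored as lists of rows."""
    return [[a * b for a in row_a for b in row_b]
            for row_a in A for row_b in B]

def matmul(A, B):
    """Ordinary matrix product."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def trace(A):
    return sum(A[i][i] for i in range(len(A)))

# Arbitrary small test matrices (A, B are 2 x 2; C, D are 3 x 3).
A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 1]]
C = [[2, 0, 1], [1, 3, 0], [0, 1, 1]]
D = [[1, 1, 0], [0, 2, 1], [1, 0, 1]]

# Property 3: AB (x) CD = (A (x) C)(B (x) D)
mixed_product_ok = kron(matmul(A, B), matmul(C, D)) == matmul(kron(A, C), kron(B, D))
# Property 5: (A (x) C)^T = A^T (x) C^T
transpose_ok = transpose(kron(A, C)) == kron(transpose(A), transpose(C))
# Property 6: tr(A (x) C) = tr(A) tr(C)
trace_ok = trace(kron(A, C)) == trace(A) * trace(C)

print(mixed_product_ok, transpose_ok, trace_ok)
```

Integer entries are used so that the comparisons are exact and free of rounding effects.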

From the example given in the introduction, we observe that there is a particular type of matrix for which the GROU algorithm works particularly well when solving high-dimensional linear systems: convergence is very fast and the approximation of the solution is very good. These are the so-called Laplacian-like matrices that we define below.

Definition 2.2

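Given a matrix \(A \in \mathbb {R}^{N \times N},\) where \(N=n_1\cdots n_d,\) we say that A is a Laplacian-like matrix if there exist matrices \(A_i \in \mathbb {R}^{n_i \times n_i}\) for \(1 \le i \le d\) such that

$$\begin{aligned} A = \sum _{i=1}^d \textrm{id}_{n_1} \otimes \cdots \otimes \textrm{id}_{n_{i-1}} \otimes A_i \otimes \textrm{id}_{n_{i+1}} \otimes \cdots \otimes \textrm{id}_{n_d}, \end{aligned} \tag{2}$$

where \(\textrm{id}_{n_j}\) is the identity matrix of size \(n_j \times n_j.\)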
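To make the definition concrete, the sketch below assembles a Laplacian-like matrix from its factors \(A_1,\ldots ,A_d\) by placing each \(A_i\) in the i-th slot of a Kronecker product of identities. The function name `laplacian_like` and the sample factors are hypothetical, chosen only for illustration.

```python
def kron(A, B):
    """Kronecker product of two matrices stored as lists of rows."""
    return [[a * b for a in row_a for b in row_b]
            for row_a in A for row_b in B]

def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def add(A, B):
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(A, B)]

def laplacian_like(factors):
    """Sum over i of id (x) ... (x) A_i (x) ... (x) id for square factors A_1..A_d."""
    sizes = [len(F) for F in factors]
    N = 1
    for n in sizes:
        N *= n
    total = [[0] * N for _ in range(N)]
    for i, A_i in enumerate(factors):
        term = [[1]]  # 1 x 1 seed, neutral for the Kronecker product
        for j, n_j in enumerate(sizes):
            term = kron(term, A_i if j == i else identity(n_j))
        total = add(total, term)
    return total

# Example with d = 2, n_1 = 2, n_2 = 3 (arbitrary factors).
A1 = [[1, 2], [3, 4]]
A2 = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
L = laplacian_like([A1, A2])
for row in L:
    print(row)
```

For \(d=2\) this reduces to \(A_1 \otimes \textrm{id}_{n_2} + \textrm{id}_{n_1} \otimes A_2\), which is the form used in the examples of Sect. 4.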

Observe that for \(1< i < d,\)

hence

Moreover,

It is not difficult to see that the set of Laplacian-like matrices is a linear subspace of \(\mathbb {R}^{N \times N}\). From now on, we will denote by \(\mathcal {L}\left( \mathbb {R}^{N \times N}\right) \) the subspace of Laplacian-like matrices in \(\mathbb {R}^{N \times N}\) for a fixed decomposition of \(N=n_1\cdots n_d\).

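These matrices can be easily related to the classical Laplacian operator [17, 18] by writing:

$$\begin{aligned} \Delta = \sum _{i=1}^d \frac{\partial ^2}{\partial x_i^2} = \sum _{i=1}^d \frac{\partial ^0}{\partial x_1^0} \otimes \cdots \otimes \frac{\partial ^0}{\partial x_{i-1}^0} \otimes \frac{\partial ^2}{\partial x_i^2} \otimes \frac{\partial ^0}{\partial x_{i+1}^0} \otimes \cdots \otimes \frac{\partial ^0}{\partial x_d^0}, \end{aligned}$$

where \(\frac{\partial ^0}{\partial x_j^0}\) is the identity operator for functions in the variable \(x_j\) for \(j\ne i\).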

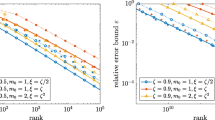

As the next numerical example shows, matrices written as in (2) provide very good performance of the GROU algorithm. In Fig. 2 we give a comparison of the speed of convergence to solve a linear system \(A \textbf{x} = \textbf{b}\), where for each fixed size, we randomly generated two full-rank matrices: one given in the classical form and a Laplacian-like matrix. Both systems were solved following Algorithm 1.

Fig. 2 CPU time comparison for solving an \(A\textbf{x}=\textbf{b}\) problem. This graph has been generated by using the following data in Algorithm 1: \(\texttt {tol} = 2.22e-6\); \(\varepsilon = 1.0e-06\); \(\texttt {rank\_max} = 3000\); (an \(\texttt {iter-max}=15\) was used to perform an ALS strategy); and the matrices have been randomly generated for each different size, in Laplacian and classical form. The characteristics of the computer used here are the same as in the case of Fig. 1

The above results, together with the Poisson example given in the introduction, motivate the question of how far a given matrix \(A \in \mathbb {R}^{N \times N}\) is from the linear subspace of Laplacian-like matrices. More precisely, we are interested in decomposing any matrix A as a sum of two mutually orthogonal matrices L and \(L^{\bot },\) where L is in \(\mathcal {L}(\mathbb {R}^{N \times N})\) and \(L^{\bot }\) in \(\mathcal {L}(\mathbb {R}^{N \times N})^{\bot }.\) Clearly, if we obtain that \(L^{\bot } =0,\) that is, \(A \in \mathcal {L}(\mathbb {R}^{N \times N}),\) then we can solve any associated linear system by means of the GROU algorithm.

Recall that the set of matrices \(\mathbb R^{N \times N}\) is a Lie algebra that appears as the tangent space at the identity matrix of the general linear group \(\textrm{GL}(\mathbb {R}^N),\) the Lie group composed of the non-singular matrices of \(\mathbb {R}^{N \times N}\) (see [19]). Furthermore, the exponential map

$$\begin{aligned} \exp : \mathbb {R}^{N \times N} \longrightarrow \textrm{GL}(\mathbb {R}^N), \quad A \mapsto \exp (A) = \sum _{n=0}^{\infty } \frac{A^n}{n!}, \end{aligned}$$

is well defined; however, it is not surjective because \(\det (\exp (A)) = e^{\textrm{tr}(A)} > 0.\) A linear subspace \(\mathfrak {S} \subset \mathbb R^{N \times N}\) is a Lie subalgebra if for all \(A,B \in \mathfrak {S}\) their Lie bracket is also in \(\mathfrak {S},\) that is, \([A,B]=AB-BA \in \mathfrak {S}\).

The linear space \(\mathcal {L}(\mathbb R^{N \times N})\) is more than a linear subspace of \(\mathbb R^{N \times N},\) it is also a Lie sub-algebra of \(\mathbb R^{N \times N}\) as the next result shows.

Proposition 2.1

Assume \(\mathbb {R}^{N \times N},\) where \(N=n_1\cdots n_d.\) Then the following statements hold.

(a) The linear subspace \(\mathcal {L}(\mathbb {R}^{N \times N})\) is a Lie subalgebra of the matrix Lie algebra \({\mathbb {R}}^{N \times N}.\)

(b) The matrix group

$$\begin{aligned} \mathfrak {L}(\mathbb R^{N \times N})=\left\{ \bigotimes _{i=1}^d A_i: A_i \in \textrm{GL}(\mathbb R^{n_i}) \text { for } 1 \le i \le d \right\} \end{aligned}$$

is a Lie subgroup of \(\textrm{GL}\left( \mathbb {R}^N\right) .\)

(c) The exponential map \(\exp :\mathcal {L}(\mathbb R^{N \times N}) \longrightarrow \mathfrak {L}(\mathbb R^{N \times N})\) is well defined and it is given by

$$\begin{aligned} \exp \left( \sum _{i=1}^d A_{i} \otimes \textrm{id}_{[n_i]}\right) = \bigotimes _{i=1}^d \exp (A_i). \end{aligned}$$

Proof

(a) To prove the first statement, take \(A,B \in \mathcal {L}(\mathbb R^{N \times N}).\) Then there exist matrices \(A_i,B_i \in \mathbb {R}^{n_i \times n_i}\) for \(1 \le i \le d\) such that

Observe, that for \(i < j\)

both products are equal to

A similar expression is obtained for \(i > j.\) Thus,

for all \(i \ne j.\)

On the other hand, for \(i=j\) we have

and

Thus,

that is,

Here \([A_i,B_i]\) is the Lie bracket in \(\mathbb {R}^{n_i \times n_i}.\)

In consequence, from all said above, we conclude

This proves that \(\mathcal {L}(\mathbb R^{N \times N})\) is a Lie sub-algebra of \(\mathbb R^{N \times N}.\)
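The closure under the Lie bracket can be checked numerically for \(d=2\): the cross terms commute, so the bracket of two Laplacian-like matrices is the Laplacian-like matrix built from the factor-wise brackets. The sketch below is an illustrative check with arbitrary integer factors; all helper names are assumptions made here.

```python
def kron(A, B):
    """Kronecker product of two matrices stored as lists of rows."""
    return [[a * b for a in row_a for b in row_b]
            for row_a in A for row_b in B]

def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def add(A, B):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(A, B)]

def sub(A, B):
    return [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(A, B)]

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def bracket(X, Y):
    """Lie bracket [X, Y] = XY - YX."""
    return sub(matmul(X, Y), matmul(Y, X))

def lap2(X1, X2):
    """Laplacian-like matrix X1 (x) id + id (x) X2 for d = 2."""
    return add(kron(X1, identity(len(X2))), kron(identity(len(X1)), X2))

# Arbitrary factors with n_1 = 2 and n_2 = 3.
A1, B1 = [[1, 2], [0, 3]], [[0, 1], [4, 1]]
A2 = [[1, 0, 2], [0, 1, 1], [3, 0, 0]]
B2 = [[0, 1, 0], [2, 0, 1], [1, 1, 0]]

lhs = bracket(lap2(A1, A2), lap2(B1, B2))        # [A, B]
rhs = lap2(bracket(A1, B1), bracket(A2, B2))     # built from [A_i, B_i]
print(lhs == rhs)
```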

(b) It is not difficult to see that \(\mathfrak {L}(\mathbb {R}^{N\times N})\) is a subgroup of \(\textrm{GL}(\mathbb R^{N}).\) From Theorem 19.18 in [19], to prove that \(\mathfrak {L}(\mathbb {R}^{N\times N})\) is a Lie subgroup of \(\textrm{GL}(\mathbb {R}^N)\) we only need to show that \(\mathfrak {L}(\mathbb {R}^{N\times N})\) is a closed set in \(\textrm{GL}(\mathbb {R}^N).\) This follows from the fact that the map

$$\begin{aligned} \Phi : \textrm{GL}(\mathbb {R}^{n_1}) \times \cdots \times \textrm{GL}(\mathbb {R}^{n_d}) \longrightarrow \textrm{GL}(\mathbb {R}^N), \quad (A_1,\ldots ,A_d) \mapsto \bigotimes _{i=1}^d A_i, \end{aligned}$$

is continuous. Assume that there exists a sequence \(\{A_n\}_{n \in \mathbb {N}} \subset \mathfrak {L}(\mathbb {R}^{N\times N})\) convergent to \(A \in \textrm{GL}(\mathbb {R}^N).\) Then the sequence \(\{A_n\}_{n \in \mathbb {N}}\) is bounded. Since there exists a sequence \(\{(A_1^{(n)},\ldots ,A_d^{(n)})\}_{n \in \mathbb {N}} \subset \textrm{GL}(\mathbb {R}^{n_1}) \times \cdots \times \textrm{GL}(\mathbb {R}^{n_d})\) such that \(A_n = \bigotimes _{j=1}^d A_j^{(n)},\) the sequence \(\{(A_1^{(n)},\ldots ,A_d^{(n)})\}_{n \in \mathbb {N}}\) is also bounded. Thus, there exists a subsequence, also denoted by \(\{(A_1^{(n)},\ldots ,A_d^{(n)})\}_{n \in \mathbb {N}},\) convergent to \((A_1,\ldots ,A_d) \in \textrm{GL}(\mathbb {R}^{n_1}) \times \cdots \times \textrm{GL}(\mathbb {R}^{n_d}).\) The continuity of \(\Phi \) implies that \(A = \bigotimes _{i=1}^d A_i.\) Thus \(\mathfrak {L}(\mathbb {R}^{N\times N})\) is closed in \(\textrm{GL}(\mathbb {R}^N),\) and hence a Lie subgroup.

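(c) From Lemma 4.169(b) [8], the following equality

$$\begin{aligned} \exp \left( \sum _{i=1}^d A_{i} \otimes \textrm{id}_{[n_i]}\right) = \bigotimes _{i=1}^d \exp (A_i) \end{aligned}$$

holds. Thus, the exponential map is well defined. This ends the proof of the proposition. \(\square \)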
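For \(d=2\), the identity in (c) can also be traced by hand. The following worked computation is a sketch that relies only on two facts: \(A_1 \otimes \textrm{id}_{n_2}\) and \(\textrm{id}_{n_1} \otimes A_2\) commute (as seen in the proof of (a)), and \((A_1 \otimes \textrm{id}_{n_2})^k = A_1^k \otimes \textrm{id}_{n_2}\) by property 3 of the Kronecker product, so the exponential series can be summed factor by factor:

$$\begin{aligned} \exp \left( A_1 \otimes \textrm{id}_{n_2} + \textrm{id}_{n_1} \otimes A_2\right)&= \exp \left( A_1 \otimes \textrm{id}_{n_2}\right) \exp \left( \textrm{id}_{n_1} \otimes A_2\right) \\&= \left( \exp (A_1) \otimes \textrm{id}_{n_2}\right) \left( \textrm{id}_{n_1} \otimes \exp (A_2)\right) \\&= \exp (A_1) \otimes \exp (A_2). \end{aligned}$$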

We conclude this section by describing in more detail the structure of matrices \(A\in \mathbb {R}^{N \times N}\) for which there exists \(A_i \in \mathbb {R}^{n_i \times n_i}\) for \(1 \le i \le d\) such that

For dealing easily with Laplacian-like matrices, we introduce the following notation. For each \(1 < i \le d\) consider the integer number \(n_1n_2\cdots n_{i-1}.\) Then, we will denote by

a block square matrix composed by \(n_1n_2\cdots n_{i-1} \times n_1n_2\cdots n_{i-1}\)-blocks.

Since \(A_i \otimes \textrm{id}_{n_{i+1}\cdots n_d} \)

then \(\textrm{id}_{n_1\cdots n_{i-1}} \otimes A_i \otimes \textrm{id}_{n_{i+1}\cdots n_d}\)

where \(O_i\) denotes the zero matrix in \(\mathbb {R}^{n_i \times n_i}\) for \(1 \le i \le d.\) To conclude, we have the following cases

and

We wish to point out that the above operations are widely used in quantum computing.

3 A Decomposition of the Linear Space of Laplacian-Like Matrices

We start by introducing some definitions and preliminary results needed to give an interesting decomposition of the linear space of Laplacian-like matrices. The next lemma describes the decomposition of \(\mathbb {R}^{N \times N}\) as a direct sum of \(\textrm{span}\{\textrm{id}_{N}\}\) and its orthogonal complement \(\textrm{span}\{ \textrm{id}_{N} \}^{\bot },\) with respect to the inner product \(\langle A,B \rangle _{\mathbb {R}^{N \times N}} = \textrm{tr}(A^{\top }B).\) From now on, we will denote by

$$\begin{aligned} \mathfrak {h}_n = \left\{ A \in \mathbb {R}^{n \times n}: \textrm{tr}(A) = 0 \right\} \end{aligned}$$

the linear subspace of null trace matrices in \(\mathbb {R}^{n \times n}.\)

Lemma 3.1

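Consider \(\left( \mathbb {R}^{n \times n}, \Vert \cdot \Vert _F\right) \) as a Hilbert space. Then there exists a decomposition

$$\begin{aligned} \mathbb {R}^{n \times n} = \mathfrak {h}_n \oplus \textrm{span}\{\textrm{id}_{n}\}. \end{aligned}$$

Moreover, the orthogonal projection from \( \mathbb {R}^{n \times n}\) on \(\textrm{span}\{\textrm{id}_{n}\}\) is given by

$$\begin{aligned} P_{\textrm{span}\{\textrm{id}_{n}\}}(A) = \frac{\textrm{tr}(A)}{n}\, \textrm{id}_{n}, \end{aligned}$$

and hence for each \(A \in \mathbb {R}^{n \times n}\) we have the following decomposition,

$$\begin{aligned} A = \left( A - \frac{\textrm{tr}(A)}{n}\, \textrm{id}_{n}\right) + \frac{\textrm{tr}(A)}{n}\, \textrm{id}_{n}, \end{aligned}$$

where \(\left( A - \frac{\textrm{tr}(A)}{n} \textrm{id}_{n}\right) \in \mathfrak {h}_n.\)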

Proof

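The lemma follows from the fact that

$$\begin{aligned} P_{\textrm{span}\{\textrm{id}_{n}\}}(A) = \frac{\langle \textrm{id}_{n}, A\rangle _{\mathbb {R}^{n \times n}}}{\Vert \textrm{id}_{n}\Vert _F^2}\, \textrm{id}_{n} = \frac{\textrm{tr}(A)}{n}\, \textrm{id}_{n} \end{aligned}$$

is the orthogonal projection onto \(\textrm{span}\{\textrm{id}_{n}\}.\) \(\square \)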
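The decomposition of Lemma 3.1 is straightforward to compute. The sketch below splits a small matrix into its multiple of the identity and its null trace part, using exact rational arithmetic; the function name `split_identity_part` and the test matrix are illustrative assumptions.

```python
from fractions import Fraction

def trace(A):
    return sum(A[i][i] for i in range(len(A)))

def frobenius_inner(A, B):
    """Trace inner product <A, B> = tr(A^T B) = sum of entrywise products."""
    return sum(a * b for row_a, row_b in zip(A, B) for a, b in zip(row_a, row_b))

def split_identity_part(A):
    """Return (tr(A)/n * id_n, A - tr(A)/n * id_n) for a square integer matrix A."""
    n = len(A)
    c = Fraction(trace(A), n)
    identity_part = [[c if i == j else Fraction(0) for j in range(n)]
                     for i in range(n)]
    trace_free_part = [[Fraction(A[i][j]) - identity_part[i][j] for j in range(n)]
                       for i in range(n)]
    return identity_part, trace_free_part

A = [[4, 1, 0], [2, 5, 3], [7, 0, 0]]   # arbitrary matrix with tr(A) = 9
identity_part, trace_free_part = split_identity_part(A)

print(trace(trace_free_part))                            # trace of the second summand
print(frobenius_inner(identity_part, trace_free_part))   # orthogonality of the summands
```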

Now, we consider the matrix space \(\mathbb {R}^{N \times N}\) where \(N=n_1\cdots n_d,\) and hence \(\mathbb {R}^{N \times N} = \bigotimes _{i=1}^d \mathbb {R}^{n_i \times n_i}\) can be considered as a tensor space. A norm \(\Vert \cdot \Vert \) defined over \(\mathbb {R}^{N \times N}\) is called a tensor norm if and only if there exists a norm \(\Vert \cdot \Vert _i\) over \(\mathbb {R}^{n_i \times n_i}\) for \(1 \le i \le d,\) such that for any tensor \(A=A_1 \otimes \dots \otimes A_d \in \mathbb {R}^{N \times N},\) where \(A_i \in \mathbb {R}^{n_i \times n_i}\) \((1 \le i \le d),\) it holds

$$\begin{aligned} \Vert A\Vert = \prod _{i=1}^d \Vert A_i\Vert _i. \end{aligned}$$

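We remark that for tensors \(A=A_1 \otimes \dots \otimes A_d\) and \(B=B_1 \otimes \dots \otimes B_d\) where \(A_i,B_i \in \mathbb {R}^{n_i \times n_i},\) we have

$$\begin{aligned} \langle A, B \rangle _{\mathbb {R}^{N \times N}} = \prod _{i=1}^d \langle A_i, B_i \rangle _{\mathbb {R}^{n_i \times n_i}}, \end{aligned}$$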

and hence \(\Vert A\Vert _F= \sqrt{\langle A, A \rangle _{\mathbb {R}^{N \times N}}},\) is a tensor-norm. In particular, the inner product \(\langle \cdot , \cdot \rangle _{\mathbb R^{N \times N}}\) satisfies

The next result gives a first characterization of the linear space \({\mathcal {L}}\left( \mathbb R^{N \times N}\right) .\)

Theorem 3.1

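Let \(\mathbb {R}^{N \times N},\) where \(N=n_1\cdots n_d.\) Then

$$\begin{aligned} \mathcal {L}\left( \mathbb {R}^{N \times N}\right) = \textrm{span}\{\textrm{id}_{N}\} \oplus \Delta , \end{aligned}$$

where \(\Delta = \mathfrak {h}_N \cap \mathcal {L}(\mathbb {R}^{N \times N}).\) Furthermore, \(\mathcal {L}(\mathbb {R}^{N \times N})^{\bot }\) is a subspace of \(\mathfrak {h}_N.\)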

Proof

Assume that a given matrix \(A \in \mathbb {R}^{N \times N}\) can be written as in (2) and denote each component in the sum representation of A by \(L_i=\textrm{id}_{[n_i]} \otimes A_i,\) where \(A_i \in \mathbb {R}^{n_i \times n_i}\) for \(1 \le i \le d.\) Then \(L_i \in \textrm{span}\{\textrm{id}_{[n_i]}\} \otimes \mathbb {R}^{n_i\times n_i}\) for \(1 \le i \le d,\) and in consequence,

Thus, \(\textrm{span}\{ \textrm{id}_{N}\} \subset \mathcal {L}\left( \mathbb {R}^{N \times N}\right) ,\) and, by Lemma 3.1, we have the following decomposition

where \(\Delta =\mathfrak {h}_N \cap \mathcal {L}(\mathbb {R}^{N \times N}).\) The last statement is a consequence of Lemma 3.1. This ends the proof. \(\square \)

Now, given any square matrix in \(\mathbb {R}^{N \times N},\) we would like to project it onto \(\mathcal {L}(\mathbb {R}^{N \times N})\) to obtain its Laplacian approximation. To compute this approximation explicitly, the following result, which is a consequence of the above theorem, will be useful.

Corollary 3.1

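Assume \(\mathbb {R}^{N \times N},\) with \(N=n_1 \cdots n_d \in \mathbb {N}.\) Then

$$\begin{aligned} \mathbb {R}^{N \times N} = \textrm{span}\{\textrm{id}_{N}\} \oplus \Delta \oplus \mathcal {L}\left( \mathbb {R}^{N \times N}\right) ^{\bot }, \end{aligned}$$

that is, for all \(A \in \mathbb {R}^{N \times N}\) it holds

$$\begin{aligned} A = \frac{\textrm{tr}(A)}{N}\, \textrm{id}_{N} + P_{\Delta }(A) + P_{\mathcal {L}(\mathbb {R}^{N \times N})^{\bot }}(A). \end{aligned}$$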

Next, we need to characterize \(\Delta \) in order to explicitly construct the orthogonal projection onto \({\mathcal {L}}(\mathbb R^{N \times N})\). From the proof of the Theorem 3.1 we see that the linear subspaces given by

for \(1 \le i \le d,\) are of interest to characterize \(\Delta \) as the next result shows.

Theorem 3.2

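Let \(\mathbb {R}^{N \times N}\) with \(N=n_1 \cdots n_d \in \mathbb {N},\) and let \(\mathfrak {H}_i\) be the orthogonal complement of \(\textrm{span}\{ \textrm{id}_{N} \}\) in the linear subspace \(\mathbb {R}^{n_i \times n_i} \otimes \textrm{span}\{\textrm{id}_{[n_i]}\}\) for \(1 \le i \le d\). Then,

$$\begin{aligned} \Delta = \bigoplus _{i=1}^d \mathfrak {H}_i. \end{aligned} \tag{6}$$

Furthermore, a matrix A belongs to \(\Delta \) if and only if it has the form

$$\begin{aligned} A = \sum _{i=1}^d A_i \otimes \textrm{id}_{[n_i]}, \quad \text { with } \textrm{tr}(A_i) = 0 \text { for } 1 \le i \le d. \end{aligned}$$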

Proof

First, we take into account that \(\mathbb {R}^{n_i \times n_i} \otimes \textrm{span}\{\textrm{id}_{[n_i]}\}\) is a linear subspace of \(\mathcal {L}(\mathbb {R}^{N \times N})\) linearly isomorphic to the matrix space \(\mathbb {R}^{n_i\times n_i}.\) Thus, motivated by Lemma 3.1 applied to \(\mathbb {R}^{n_i \times n_i}\), we write

Now, we claim that \(\mathfrak {h}_{n_i} \otimes \textrm{span}\{ \textrm{id}_{[n_i]}\}\) is the orthogonal complement of the linear subspace generated by the identity matrix \(\textrm{id}_N = \textrm{id}_{n_i} \otimes \textrm{id}_{[n_i]}\) in the linear subspace \(\mathbb {R}^{n_i\times n_i} \otimes \textrm{id}_{[n_i]}.\) To prove the claim, observe that for \(A_i \otimes \textrm{id}_{[n_i]} \in \mathfrak {h}_{n_i} \otimes \textrm{span}\{ \textrm{id}_{[n_i]}\}\) \((1 \le i \le d),\) by using (3), it holds

because \(A_i \in \mathfrak {h}_{n_i}\) and hence \(\textrm{tr}(A_i)=0,\) for \(1 \le i \le d.\) Thus, the claim follows and hence

To prove (6), we first consider \(1 \le i < j \le d,\) and take \(A_k \otimes \textrm{id}_{[n_k]} \in \mathfrak {H}_k\) for \(k=i,j.\) Then the inner product satisfies

because \( \textrm{tr}(A_i) = \textrm{tr}(A_j)=0.\) The same equality holds for \(j < i.\) Thus, we conclude that \(\mathfrak {H}_i\) is orthogonal to \(\mathfrak {H}_j\) for all \(i\ne j.\) So, the subspace

is well defined and it is a subspace of \(\mathcal {L}(\mathbb {R}^{N \times N}).\)

To conclude the proof of (6), we will show that \(\Delta ' = \Delta .\) Since, for each \(1 \le i \le d,\) \(\mathfrak {H}_i\) is orthogonal to \( \textrm{span}\{\textrm{id}_N\}\) we have

To obtain the equality, take \(A \in \mathcal {L}(\mathbb {R}^{N \times N}).\) Then there exist \(A_i \in \mathbb {R}^{n_i \times n_i}\) for \(1 \le i \le d\) such that

From Lemma 3.1 we can write

for each \(1 \le i \le d.\) Then,

Observe that \(\left( \sum _{i=1}^d \frac{\textrm{tr}(A_i)}{n_i}\right) \, \textrm{id}_{N} \in \textrm{span}\{\textrm{id}_N\}\) and

Thus, \(\mathcal {L}(\mathbb {R}^{N \times N}) \subset \textrm{span}\{\textrm{id}_N\} \oplus \Delta ^{\prime }.\) In consequence \(\Delta ^{\prime } = \Delta ,\) and this proves the theorem. \(\square \)
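The orthogonality step in the proof can be written out for \(d=2\) using only properties 3, 5 and 6 of the Kronecker product. As a sketch, for \(A_1 \in \mathfrak {h}_{n_1}\) and \(A_2 \in \mathfrak {h}_{n_2}\),

$$\begin{aligned} \langle A_1 \otimes \textrm{id}_{n_2}, \textrm{id}_{n_1} \otimes A_2 \rangle _{\mathbb {R}^{N \times N}}&= \textrm{tr}\left( \left( A_1^{\top } \otimes \textrm{id}_{n_2}\right) \left( \textrm{id}_{n_1} \otimes A_2\right) \right) \\&= \textrm{tr}\left( A_1^{\top } \otimes A_2\right) = \textrm{tr}(A_1)\, \textrm{tr}(A_2) = 0, \end{aligned}$$

so the two components are orthogonal as soon as one of the factors has null trace.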

A direct consequence of the above theorem is the next corollary.

Corollary 3.2

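Assume \(\mathbb {R}^{N \times N},\) with \(N=n_1 \cdots n_d \in \mathbb {N}.\) Then

$$\begin{aligned} \mathbb {R}^{N \times N} = \textrm{span}\{\textrm{id}_{N}\} \oplus \left( \bigoplus _{i=1}^d \mathfrak {H}_i \right) \oplus \mathcal {L}\left( \mathbb {R}^{N \times N}\right) ^{\bot }, \end{aligned}$$

that is, for all \(A \in \mathbb {R}^{N \times N}\) it holds

$$\begin{aligned} A = \frac{\textrm{tr}(A)}{N}\, \textrm{id}_{N} + \sum _{i=1}^d A_i \otimes \textrm{id}_{[n_i]} + P_{\mathcal {L}(\mathbb {R}^{N \times N})^{\bot }}(A), \end{aligned}$$

where \(A_i \in \mathbb {R}^{n_i \times n_i}\) satisfies \(\textrm{tr}(A_i) =0\) for \(1 \le i \le d.\)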

4 A Numerical Strategy to Perform a Laplacian-Like Decomposition

In this section we study a numerical strategy to compute, for a given matrix \(A \in \mathbb {R}^{N \times N},\) with the help of Proposition 3.1 and Theorem 3.2, its best Laplacian-like approximation. Then, we present two numerical examples to illustrate the results obtained. In the first example, we work with the adjacency matrix of a simple graph of 6 nodes; with it, we intend to show, step by step, the procedure to follow to calculate the Laplacian decomposition of a square matrix. The second example completes the study of the discrete Poisson equation described in Sect. 1; with this example, we illustrate the effectiveness of the Laplacian decomposition for solving PDEs in conjunction with the GROU Algorithm. We start with the following Greedy Algorithm.

Theorem 4.1

Let A be a matrix in \( \mathbb {R}^{N \times N}\), with \(N=n_1\cdots n_d,\) such that \(\textrm{tr}(A) = 0.\) Consider the following iterative procedure:

1. Take \(X_k^{(0)} = 0\) for \(1\le k \le d.\)

2. For each \(\ell \ge 1\) compute for \(1 \le i \le d\) the matrix \(U_i^{(\ell )}\) as

$$\begin{aligned} U_i^{(\ell )}=\arg \min _{U_i \in \mathfrak {h}_{n_i}} \left\| A - \sum _{k=1}^{i-1} X_k^{(\ell )} \otimes \textrm{id}_{[n_k]} - \xi (U_i) \otimes \textrm{id}_{[n_i]} - \sum _{k=i+1}^{d} X_k^{(\ell -1)} \otimes \textrm{id}_{[n_k]} \right\| , \end{aligned}$$

where

$$\begin{aligned} \xi (U_i)= X_i^{(\ell -1)}+U_i, \end{aligned}$$

and put \(X_i^{(\ell )} = X_i^{(\ell -1)} + U_i^{(\ell )}.\)

Then

Proof

Recall that \(P_{\Delta }(A)\) solves the problem

To simplify notation put \(P^{(\ell )}_{\Delta }(A) = \sum _{k=1}^d X_k^{(\ell )} \otimes \textrm{id}_{[n_k]}\) for \(\ell \ge 0.\) By construction we have that

holds. Since the sequence \(\{ P_{\Delta }^{(\ell )}(A) \}_{\ell \in \mathbb {N}}\) is bounded, there is a convergent subsequence also denoted by \(\{ P_{\Delta }^{(\ell )}(A) \}_{\ell \in \mathbb {N}}\), so that

If \(L_A = P_{\Delta }(A)\), the theorem holds. Otherwise, assume that \(L_A \ne P_{\Delta }(A)\) (see Fig. 3), then it is clear that

Suppose that \( \Vert A-P_{\Delta }(A) \Vert < \Vert A - L_A \Vert \) and let \(\lambda \in (0,1).\) Now, consider the linear combination \(\lambda L_A+(1-\lambda )P_{\Delta }(A).\) Since \(L_A, P_{\Delta }(A) \in \Delta \), they can be written as

so \(\lambda L_A+(1-\lambda )P_{\Delta }(A)=\sum _{i=1}^d \textrm{id}_{[n_i]} \otimes \left( \lambda A_i + (1-\lambda )A_i^* \right) \in \Delta \). Hence,

That is, we have found d matrices \(Z_i=\lambda A_i + (1-\lambda )A_i^*\), \(i=1,\dots ,d\), such that

which is a contradiction with the definition of \(L_A.\) \(\square \)

The previous result allows us to describe the procedure to obtain the Laplacian approximation of a square matrix, in the form of an algorithm. We can visualize the complete algorithm in the form of pseudocode in Algorithm 2.

4.1 Numerical Examples

4.1.1 The Adjacency Matrix of a Simple Graph

First, let us show an example in which the projection \(P_{\Delta }(A)\) coincides with A, and how the tensor representation is provided by the proposed algorithm. Let us consider the simple graph G(V, E), with \(V=\{1,2,\ldots ,6\}\) the set of nodes and \(E=\{{(1,2)},(1,4),(2,3),(2,5),(3,6),{(4,5)},(5,6)\}\) the set of edges. Then, the adjacency matrix of G is

$$\begin{aligned} A = \begin{pmatrix} 0 & 1 & 0 & 1 & 0 & 0 \\ 1 & 0 & 1 & 0 & 1 & 0 \\ 0 & 1 & 0 & 0 & 0 & 1 \\ 1 & 0 & 0 & 0 & 1 & 0 \\ 0 & 1 & 0 & 1 & 0 & 1 \\ 0 & 0 & 1 & 0 & 1 & 0 \end{pmatrix}. \end{aligned}$$

We want to find a Laplacian decomposition of the matrix \(A \in \mathbb {R}^{6 \times 6}\). Since \(\textrm{tr}(A)=0\), we can do this by following the iterative scheme given by Theorem 4.1. So, we look for matrices \(X_1 \in \mathbb {R}^{2 \times 2}\), \(X_2 \in \mathbb {R}^{3 \times 3}\) such that

$$\begin{aligned} P_{\Delta }(A) = X_1 \otimes \textrm{id}_{n_2} + \textrm{id}_{n_1} \otimes X_2, \end{aligned}$$

where \(n_1=2, n_2=3\). We proceed according to the algorithm:

1. Computing \(X_1\) by

$$\begin{aligned} \min _{X_1} \Vert A- X_1 \otimes \textrm{id}_{n_2} \Vert , \end{aligned}$$

we obtain

$$\begin{aligned} X_1 = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}. \end{aligned}$$

2. Computing \(X_2\) by

$$\begin{aligned} \min _{X_2} \Vert A - X_1 \otimes \textrm{id}_{n_2} - \textrm{id}_{n_1} \otimes X_2\Vert , \end{aligned}$$

we obtain

$$\begin{aligned} X_2 = \begin{pmatrix} 0 & 1 & 0 \\ 1 & 0 & 1\\ 0 & 1 & 0 \end{pmatrix}. \end{aligned}$$
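The two steps above can be reproduced with a short script. The sketch below is not Algorithm 2: instead of iterating, it uses the fact (from Theorem 3.2) that the components of \(\Delta \) are mutually orthogonal, so for \(d=2\) each factor can be obtained directly by averaging the corresponding blocks of A (a partial trace) and removing its trace. The function name `project_trace_free_laplacian` is a hypothetical helper introduced here.

```python
from math import sqrt

def kron(A, B):
    """Kronecker product of two matrices stored as lists of rows."""
    return [[a * b for a in row_a for b in row_b]
            for row_a in A for row_b in B]

def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def project_trace_free_laplacian(A, n1, n2):
    """Best Frobenius fit A ~ X1 (x) id_{n2} + id_{n1} (x) X2 with tr(X1) = tr(X2) = 0."""
    # X1[a][b]: average of the diagonal of block (a, b) of A (blocks are n2 x n2).
    X1 = [[sum(A[a * n2 + k][b * n2 + k] for k in range(n2)) / n2
           for b in range(n1)] for a in range(n1)]
    # X2: average of the n1 diagonal blocks of A.
    X2 = [[sum(A[a * n2 + k][a * n2 + m] for a in range(n1)) / n1
           for m in range(n2)] for k in range(n2)]
    # Remove the identity component so that both factors have null trace.
    t1 = sum(X1[a][a] for a in range(n1)) / n1
    t2 = sum(X2[k][k] for k in range(n2)) / n2
    X1 = [[X1[a][b] - (t1 if a == b else 0.0) for b in range(n1)] for a in range(n1)]
    X2 = [[X2[k][m] - (t2 if k == m else 0.0) for m in range(n2)] for k in range(n2)]
    return X1, X2

# Adjacency matrix of the graph G(V, E) of this example.
edges = [(1, 2), (1, 4), (2, 3), (2, 5), (3, 6), (4, 5), (5, 6)]
A = [[0] * 6 for _ in range(6)]
for u, v in edges:
    A[u - 1][v - 1] = A[v - 1][u - 1] = 1

X1, X2 = project_trace_free_laplacian(A, 2, 3)
I2, I3 = identity(2), identity(3)
P = [[p + q for p, q in zip(rp, rq)]
     for rp, rq in zip(kron(X1, I3), kron(I2, X2))]
residual = sqrt(sum((A[r][c] - P[r][c]) ** 2 for r in range(6) for c in range(6)))
print(X1, X2, residual)
```

This closed-form shortcut is an assumption-based alternative for small dense matrices; for large or sparse matrices the iterative scheme of Theorem 4.1 may still be the more practical route.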

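Since the residual value is \(\Vert A-P_{\Delta }(A)\Vert =0,\) the matrix \(A \in \Delta .\) Thus, we can write it as

$$\begin{aligned} A = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix} \otimes \textrm{id}_{3} + \textrm{id}_{2} \otimes \begin{pmatrix} 0 & 1 & 0 \\ 1 & 0 & 1\\ 0 & 1 & 0 \end{pmatrix}. \end{aligned}$$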

Fig. 4 Matlab spy(A) representation of A given in (8) for \(n=4\)

4.1.2 The Poisson’s Equation

Now, let us consider the Poisson’s equation (1) with homogeneous boundary condition given in Sect. 1. For each \(u \in \{x,y,z\}\) we fix \(h=\frac{1}{n},\) where \(n \in \mathbb {N},\) and take \(u_{\ell } = (\ell -1)h\) for \(1 \le \ell \le n.\) Next, we consider a derivative approximation scheme given by

in (1) for \(u \in \{x,y,z\}.\) This yields a linear system of the form

where the indices \(1 \le i,j,k \le n\) correspond to the discretization of x, y and z respectively, and \(A \in \textrm{GL}\left( \mathbb {R}^{n^3}\right) \) is the matrix given by

where \(T \in \mathbb {R}^{n^2\times n^2}\) is the matrix

We can visualize the sparsity pattern of the matrix A with the spy command from Matlab (see Fig. 4). Since \(\textrm{tr}(A) = 6n^3 \ne 0,\) instead of looking for the Laplacian approximation of A, we will look for that of \(\hat{A}=\left( A- 6\,\textrm{id}_{n^3}\right) ,\) which has null trace. Proceeding according to Algorithm 2 for sizes \(n_1=n_2=n_3=n\), we obtain the decomposition

where

and the residual of the approximation of \(\hat{A}\) is \( \Vert \hat{A} - P_{\Delta }(\hat{A}) \Vert = 0. \) Thus, following Corollary 3.2, we can write the original matrix A as

Note that the first term is \(6 \cdot \textrm{id}_{n^3} = 6 \cdot \textrm{id}_{n} \otimes \textrm{id}_{n} \otimes \textrm{id}_{n},\) and hence A can be written as

where

Fig. 5 CPU time, in seconds, employed to solve (7) by using the Matlab command \(A\backslash b\), the GROU Algorithm 1, and the GROU Algorithm 1 with A written in Laplacian form, obtained from the Laplacian decomposition Algorithm 2. All algorithms were performed under the Matlab standard environment for basic matrix calculations

Fig. 6 CPU time, in seconds, employed to solve (7) by using the Matlab command \(A\backslash b\), the GROU Algorithm 1, and the GROU Algorithm 1 with A written in Laplacian form, obtained from the Laplacian decomposition Algorithm 2. All algorithms were performed under the Matlab environment for matrices in sparse form

Next, we study the CPU-time used to solve the linear system (7) by using (a) the Matlab command \(A\backslash b\), (b) the GROU Algorithm 1 with A in the compact form (8), and (c) the GROU Algorithm with A in Laplacian-Like form (9), previously obtained from the Laplacian Decomposition Algorithm 2.

We have used the following parameter values in the numerical experiments. For the GROU Algorithm 1: \(\texttt {tol} = 2.2204e-16\); \(\varepsilon = 2.2204e-16\); \(\texttt {rank\_max} = 15\); (an \(\texttt {iter-max}=5\) was used to perform an ALS strategy); and the number of nodes in \((0,1)^3\) (that is, the number of rows or columns of the matrix A) increases from \(10^3\) to \(35^3\). For the Laplacian Decomposition Algorithm we fixed \(\texttt {iter\_max}=4\) and a tolerance \(\texttt {tol} =10^{-5}\). The characteristics of the computer are the same as those given in Sect. 1.

In the first experiment (see Fig. 5) the algorithms were implemented in the Matlab standard environment for basic matrix calculations. We then carried out a second experiment to increase the size of the high-dimensional matrices (see Fig. 6). To this end, the algorithms were implemented in the Matlab environment for sparse matrices, which requires less memory. In this second experiment we consider matrices with a number of rows (or columns) in a range from \(10^3\) to \(100^3\).

In both figures we observe that, for high-dimensional matrices, the GROU Algorithm 1 improves on the CPU time of Matlab’s command \(A\backslash b\). Moreover, the Laplacian Decomposition Algorithm 2 combined with the GROU Algorithm 1 significantly reduces the CPU time with respect to the two previous methods.
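The trace shift used in this example can be illustrated on a small instance. The sketch below assumes the standard second-difference stencil (2 on the diagonal, -1 on the two neighbouring diagonals) in each direction, which is consistent with \(\textrm{tr}(A) = 6n^3\) but is an assumption about the scheme, and all helper names are hypothetical. It checks that subtracting \(6\,\textrm{id}_{n^3}\) leaves a null trace Laplacian-like matrix.

```python
def kron(A, B):
    """Kronecker product of two matrices stored as lists of rows."""
    return [[a * b for a in row_a for b in row_b]
            for row_a in A for row_b in B]

def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def add(*matrices):
    """Entrywise sum of any number of equally sized matrices."""
    return [[sum(vals) for vals in zip(*rows)] for rows in zip(*matrices)]

def trace(M):
    return sum(M[i][i] for i in range(len(M)))

def second_difference(n):
    """Assumed 1D stencil: 2 on the diagonal, -1 on the neighbouring diagonals."""
    return [[2 if i == j else -1 if abs(i - j) == 1 else 0 for j in range(n)]
            for i in range(n)]

def laplacian_3d(X, I):
    """X (x) I (x) I + I (x) X (x) I + I (x) I (x) X."""
    return add(kron(kron(X, I), I), kron(kron(I, X), I), kron(kron(I, I), X))

n = 3
N = n ** 3
K, I = second_difference(n), identity(n)

A = laplacian_3d(K, I)
trace_A = trace(A)

# Shift by 6 * id so that the matrix to be projected has null trace.
A_hat = [[A[r][c] - (6 if r == c else 0) for c in range(N)] for r in range(N)]

# Null trace factor: the off-diagonal part of K.
X = [[K[i][j] - (2 if i == j else 0) for j in range(n)] for i in range(n)]
A_hat_laplacian = laplacian_3d(X, I)

print(trace_A, trace(A_hat), A_hat == A_hat_laplacian)
```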

5 Conclusions

We have presented a result to approximate a generic square matrix by its Laplacian form, and thus decompose it as the sum of two linearly independent matrices. This decomposition is motivated by the fact that tensor algorithms are more efficient when working with Laplacian matrices. We have also described the procedure to perform this approximation in the form of an algorithm and illustrated how it works on some basic examples.

The proposed algorithm provides an alternative way to solve linear systems, which is especially interesting when Algorithms 1 and 2 are combined, as shown in the second example presented above. Due to its structure, this matrix decomposition can be interesting for studying various types of matrices, such as sparse matrices, matrices resulting from the discretization of a PDE, adjacency matrices of simple graphs, and others. We will explore the computational benefits of this approach in future work.

Acknowledgements

This work was supported by the Generalitat Valenciana and the European Social Fund under Grant [number ACIF/2020/269]; Ministerio de Ciencia, Innovación y Universidades under Grant [number RTI2018-093521-B-C32]; Universidad CEU Cardenal Herrera under Grant [number INDI22/15].

Funding

The research was supported by grants from the Universidad CEU Cardenal Herrera, the Ministerio de Ciencia, Innovación y Universidades and the Generalitat Valenciana.

Author information

Contributions

All authors contributed equally, and all read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare no potential conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Conejero, J.A., Falcó, A. & Mora-Jiménez, M. Structure and Approximation Properties of Laplacian-Like Matrices. Results Math 78, 184 (2023). https://doi.org/10.1007/s00025-023-01960-0

DOI: https://doi.org/10.1007/s00025-023-01960-0

Keywords

- matrix decomposition

- laplacian-like matrix

- high dimensional linear system

- matrix lie algebra

- matrix lie group