Abstract

In this note, we study discrete-time majority dynamics over an inhomogeneous random graph G obtained by including each edge e of the complete graph \(K_n\) independently with probability \(p_n(e)\). Each vertex is independently assigned an initial state \(+1\) (with probability \(p_+\)) or \(-1\) (with probability \(1-p_+\)), and at each time step it updates its state to the majority of its neighbors’ states. Under some regularity and density conditions on the edge probability sequence, if \(p_+\) is smaller than a threshold, then G will display a unanimous state \(-1\) asymptotically almost surely, meaning that the probability of reaching consensus tends to one as \(n\rightarrow \infty \). The threshold on the initial state assignment probability differs markedly between regimes: in a dense random graph \(p_+\) can be near a half, while in a sparse random graph \(p_+\) has to vanish. The size of a dynamic monopoly in G is also discussed.


1 Introduction

Majority dynamics is a discrete-time deterministic process over a graph G with n vertices \(V=\{1,2,\dots ,n\}\), where each vertex \(i\in V\) holds a state \(C_t(i)\in \{-1,+1\}\) at time step \(t\ge 0\). The state configuration of G at t can be represented as a mapping \(C_t: V\rightarrow \{-1,+1\}\). Given an initial configuration \(C_0\), the process evolves following

$$\begin{aligned} C_{t+1}(i)=\left\{ \begin{array}{ll} \mathrm {sgn}\Big (\sum _{j\in N(i)}C_t(j)\Big ), &amp; \text {if } \sum _{j\in N(i)}C_t(j)\not =0,\\ C_t(i), &amp; \text {otherwise}, \end{array}\right. \end{aligned}\tag{1.1}$$

where N(i) is the set of neighbors of i in G, and \(\mathrm {sgn}(\cdot )\) is the signum function satisfying \(\mathrm {sgn}(x)=+1\) if \(x>0\) and \(\mathrm {sgn}(x)=-1\) if \(x<0\).
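As a minimal sketch of the update rule (1.1), the following Python function performs one synchronous round on a graph stored as an adjacency dictionary. The names `majority_step`, `adj`, and `state` are illustrative choices and do not come from the note. The small path example also shows a configuration that oscillates with period 2.

```python
def majority_step(adj, state):
    """One synchronous round: adopt the neighbors' majority, keep own state on a tie."""
    new_state = {}
    for v, nbrs in adj.items():
        total = sum(state[u] for u in nbrs)
        if total > 0:
            new_state[v] = 1
        elif total < 0:
            new_state[v] = -1
        else:
            new_state[v] = state[v]  # tie (or isolated vertex): keep current state
    return new_state


# Path 0 - 1 - 2 with a single +1 at the middle vertex.
adj = {0: [1], 1: [0, 2], 2: [1]}
c0 = {0: -1, 1: 1, 2: -1}
c1 = majority_step(adj, c0)  # the endpoints copy the middle vertex and vice versa
c2 = majority_step(adj, c1)  # the configuration returns to c0
```

All vertices are updated from the same old configuration, which is why the new states are written into a separate dictionary.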

This can be viewed as a model of information spreading in social networks, where each individual defers to the majority of its neighbors and keeps its own opinion in case of a tie. Majority dynamics (1.1) is the noiseless special case of the well-known majority-vote model in statistical physics [1,2,3], where each voter adopts the minority state of its neighbors with probability q (interpreted as noise or temperature) and the majority state with probability \(1-q\). These studies focus on thresholds and phase transitions with respect to noise and other order parameters, mainly using mean field approximation. Another closely related model is majority bootstrap percolation [4, 5], where each vertex can have one of two colors, red (informed) or blue (uninformed), and blue vertices update their color according to the majority rule while red vertices invariably retain their color. Majority bootstrap percolation can be used to model monotone processes such as infection and rumor diffusion, which are essentially different from majority dynamics, where a vertex may change its state many times.

For a finite graph, beginning with any initial configuration \(C_0\), the process of majority dynamics eventually becomes periodic, and interestingly, it is shown in [6] that the period is at most 2 when t is sufficiently large. Given a random initial configuration \(C_0\) with each vertex independently taking state \(+1\) with probability \(p_+\) and \(-1\) with probability \(p_-=1-p_+\), majority dynamics has been investigated for several classes of graphs including lattices [7, 8], infinite lattices [9], infinite trees [10], random regular graphs [11], and Erdős-Rényi random graphs [2, 12,13,14,15]. For example, it is shown in [14] that majority dynamics undergoes a phase transition at the threshold of connectivity \(p_n=n^{-1}\ln n\) for the Erdős-Rényi random graph \(G(n,p_n)\), where \(p_n\) is the edge probability [16].

In the present note, we continue this line of research by considering majority dynamics in inhomogeneous random graphs, where edges are independent but may have different probabilities. For \(i\not =j\), let \(e_{ij}=e_{ji}\) be the edge connecting vertices i and j in V. Define mutually independent Bernoulli random variables \(\{X(e_{ij})\}_{1\le i<j\le n}\) by setting \(p_n(e_{ij}):=\mathbb {P}(X(e_{ij})=1)=1-\mathbb {P}(X(e_{ij})=0)\). The edge \(e_{ij}\) is present if \(X(e_{ij})=1\) and absent if \(X(e_{ij})=0\). Given the sequence \(\mathbf{p}_n:=\{p_n(e_{ij})\}_{1\le i<j\le n}\), the inhomogeneous random graph \(G(n,\mathbf{p}_n)\) is the probability space of all graphs on V in which each edge \(e_{ij}\) is present independently with probability \(p_n(e_{ij})\). Clearly, if \(p_n(e_{ij})\equiv p_n\in (0,1)\), we recover the classical Erdős-Rényi random graph model \(G(n,p_n)\).
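The construction of \(G(n,\mathbf{p}_n)\) can be sketched in a few lines of Python. This is only an illustration: the function name `sample_inhomogeneous_graph`, the matrix layout `p[i][j]` for \(i<j\) (vertices indexed from 0), and the toy probabilities are assumptions made here, not part of the note.

```python
import random


def sample_inhomogeneous_graph(p, rng):
    """Sample adjacency sets; p[i][j] (for i < j) is the probability of edge {i, j}."""
    n = len(p)
    adj = {i: set() for i in range(n)}
    for i in range(n):
        for j in range(i + 1, n):
            # One independent Bernoulli trial per unordered pair {i, j}.
            if rng.random() < p[i][j]:
                adj[i].add(j)
                adj[j].add(i)
    return adj


n = 6
# Hypothetical example: edges inside {0, 1, 2} are certain, all other edges are absent.
p = [[1.0 if (i < 3 and j < 3) else 0.0 for j in range(n)] for i in range(n)]
adj = sample_inhomogeneous_graph(p, random.Random(0))
```

Setting every entry of `p` to the same value gives a sample from the homogeneous model \(G(n,p_n)\).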

In the rest of the note, we present the main results in Sect. 2 and provide the proofs in Sect. 3. By convention, we are interested in the properties pertaining to random graphs as \(n\rightarrow \infty \), and a property is said to hold asymptotically almost surely (a.a.s.) if the probability of achieving it tends to 1 as \(n\rightarrow \infty \). Some standard asymptotic notations such as \(o,O,\Theta ,\omega ,\ll \) will be adopted; see e.g., the textbook [16]. All logarithms have base e.

2 Main Results

For a subset \(S\subseteq V\), the expected neighbor densities of a vertex \(i\in V\) in \(G(n,\mathbf{p}_n)\) and in the subgraph induced by S are defined by \(d_n(i)=(n-1)^{-1}\sum _{j\in V\backslash \{i\}}p_n(e_{ij})\) and \(d_n(i,S)=|S\backslash \{i\}|^{-1}\sum _{j\in S\backslash \{i\}}p_n(e_{ij})\) respectively, where \(|\cdot |\) represents the size of a set. Clearly, we have \(d_n(i)=d_n(i,V)\).
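To make the two densities concrete, here is a small Python sketch that evaluates \(d_n(i)\) and \(d_n(i,S)\) directly from their definitions. The helper name `density`, the 0-based vertex labels, and the toy probability matrix are assumptions for illustration only.

```python
def density(p, i, S=None):
    """Expected neighbor density d_n(i, S); S defaults to the whole vertex set V."""
    n = len(p)
    others = [j for j in (range(n) if S is None else S) if j != i]
    if not others:
        raise ValueError("S minus {i} must be nonempty")
    # Only the upper triangle p[a][b] with a < b is read.
    return sum(p[min(i, j)][max(i, j)] for j in others) / len(others)


n = 4
p = [[0.1] * n for _ in range(n)]  # hypothetical edge probabilities
p[0][1] = 0.5                      # the pair {0, 1} is more likely to be connected

d_whole = density(p, 0)            # (0.5 + 0.1 + 0.1) / 3
d_subset = density(p, 0, [1, 2])   # (0.5 + 0.1) / 2
```

In the homogeneous case all densities coincide with \(p_n\), so the regularity conditions of the theorems measure how far the edge probabilities may deviate from this situation.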

Our first result shows that if the graph is dense and the probability \(p_+\) of a vertex having state \(+1\) in \(C_0\) is only slightly smaller than a half, then the vertices in V unanimously hold the state \(-1\) after a constant number of rounds.

Theorem 1 (Dense regime)

Suppose there exist a sequence \(p_n\in (0,1)\) and positive constants \(\alpha <1\), \(\beta <1\), and \(4(1-\beta )/\beta \le \gamma \le 1\) such that for all sufficiently large n the following conditions hold:

If \(p_+\le \frac{1}{2}-\omega \left( \frac{1}{\sqrt{np_n}}\right) \), then a.a.s. the vertices in \(G(n,\mathbf{p}_n)\) unanimously have state \(-1\) after a constant number of rounds.

The regularity and density conditions are satisfied with \(\beta =4/5\), \(\gamma =1\) and \(p_n(e_{ij})\equiv p_n\). We obtain the corollary for homogeneous random graph \(G(n,p_n)\) [14, Theorem 2.3].

Corollary 1

Assume that \(p_n\ge \frac{\ln n}{\alpha n}\) for \(\alpha \in (0,1)\) and sufficiently large n. If \(p_+\le \frac{1}{2}-\omega \left( \frac{1}{\sqrt{np_n}}\right) \), then a.a.s. the vertices in \(G(n,p_n)\) unanimously have state \(-1\) after a constant number of rounds.

Remark 1

The margin \(\omega \left( \frac{1}{\sqrt{np_n}}\right) \) is tight in the sense that if it is replaced by \(\frac{c}{\sqrt{np_n}}\) for any constant \(c>0\), the result does not hold; cf. [14]. In fact, assume \(p_+=\frac{1}{2}-\frac{c}{\sqrt{n}}\) and \(p_n\equiv 1\), so that \(G(n,p_n)\) is the complete graph. Let Y be the number of vertices having state \(+1\) in \(C_0\), namely, \(Y=\sum _{i\in V}1_{\{C_0(i)=+1\}}\). Then Y is approximately a normal variable with expectation \(\mathbb {E}Y=\frac{n}{2}-c\sqrt{n}\) and variance \(\mathrm {Var}Y=\frac{n}{4}-c^2\). Therefore, \(\mathbb {P}(Y\ge \frac{n}{2})=(1+o(1))\mathbb {P}\left( Z\ge \frac{c\sqrt{n}}{\sqrt{\frac{n}{4}-c^2}}\right) >0\) by the central limit theorem, where Z is a standard normal random variable. On the event \(\{Y>\frac{n}{2}\}\), which has the same limiting probability, every vertex holding state \(-1\) in \(C_0\) sees a strict majority of \(+1\) among its \(n-1\) neighbors and changes its state to \(+1\) in \(C_1\), while every vertex holding \(+1\) sees at least as many \(+1\) as \(-1\) neighbors and keeps its state. In other words, with a probability bounded away from zero all vertices hold state \(+1\) after just one round.
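The probability in Remark 1 can be examined numerically. The sketch below compares the exact binomial tail \(\mathbb {P}(Y\ge n/2)\) with the normal approximation used in the remark; the function names and the choice \(c=0.5\) are assumptions made for this illustration.

```python
import math


def binom_tail(n, p, k):
    """Exact P(Y >= k) for Y ~ Binomial(n, p), with each term computed in log space."""
    total = 0.0
    for j in range(k, n + 1):
        log_term = (math.lgamma(n + 1) - math.lgamma(j + 1) - math.lgamma(n - j + 1)
                    + j * math.log(p) + (n - j) * math.log1p(-p))
        total += math.exp(log_term)
    return total


def normal_tail(z):
    """P(Z >= z) for a standard normal random variable Z."""
    return 0.5 * math.erfc(z / math.sqrt(2))


c = 0.5  # hypothetical constant in p_+ = 1/2 - c / sqrt(n)
for n in (100, 400, 1600):
    p_plus = 0.5 - c / math.sqrt(n)
    exact = binom_tail(n, p_plus, n // 2)
    approx = normal_tail(c * math.sqrt(n) / math.sqrt(n / 4 - c * c))
    print(n, round(exact, 4), round(approx, 4))
```

Both columns stay bounded away from zero as n grows, which is the reason a margin of order \(1/\sqrt{np_n}\) is not enough for consensus on \(-1\).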

The next result concerns the sparse graph regime. It says that if the graph is sparse, then for the state \(-1\) to take over the graph, the probability \(p_+\) of a vertex having state \(+1\) in \(C_0\) must tend to zero sufficiently fast. This is in stark contrast to dense graphs: in a sparse graph there may be small components that persistently hold state \(+1\) and thereby impede consensus if \(p_+\) is not sufficiently small.

Theorem 2 (Sparse regime)

Suppose there exist a sequence \(p_n\in (0,1)\) and positive constants \(\alpha >1\), \(\beta <1\), and \(\gamma \ge 1\) such that for all sufficiently large n the following conditions hold:

If \(p_+=\omega \left( \frac{e^{np_n\gamma }}{n}\right) \), then a.a.s. the vertices in \(G(n,\mathbf{p}_n)\) do not all have state \(-1\) at any time step \(t\ge 0\). If \(p_+=o\left( \frac{e^{np_n}}{n}\right) \), then a.a.s. the vertices in \(G(n,\mathbf{p}_n)\) unanimously have state \(-1\) after two rounds.

The regularity and density conditions above are satisfied with \(\gamma =1\), any \(\beta \in (0,1)\), and \(p_n(e_{ij})\equiv p_n\). We obtain the following result for homogeneous random graph \(G(n,p_n)\) [14, Theorem 2.4].

Corollary 2

Assume that \(p_n\le \frac{\ln n}{\alpha n}\) for some \(\alpha >1\) and sufficiently large n. If \(p_+=\omega \left( \frac{e^{np_n}}{n}\right) \), then a.a.s. the vertices in \(G(n,p_n)\) do not all have state \(-1\) at any time step \(t\ge 0\). If \(p_+=o\left( \frac{e^{np_n}}{n}\right) \), then a.a.s. the vertices in \(G(n,p_n)\) unanimously have state \(-1\) after two rounds.
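The threshold scale \(e^{np_n}/n\) in Corollary 2 can be read off from a simple expectation: in \(G(n,p_n)\) the expected number of isolated vertices that start in state \(+1\) is \(np_+(1-p_n)^{n-1}\approx np_+e^{-np_n}\), and an isolated vertex never changes its state. The Python sketch below evaluates this quantity. The function name and the parameter values (\(n=10^6\), \(\alpha =2\)) are assumptions chosen for illustration.

```python
import math


def expected_isolated_plus(n, p_n, p_plus):
    """E[number of isolated vertices with initial state +1] in G(n, p_n)."""
    return n * p_plus * (1.0 - p_n) ** (n - 1)


n = 10**6
alpha = 2.0                      # assumed constant with alpha > 1
p_n = math.log(n) / (alpha * n)  # sparse regime: p_n <= ln(n) / (alpha * n)
scale = math.exp(n * p_n) / n    # the threshold scale e^{n p_n} / n
for factor in (0.01, 1.0, 100.0):
    # p_+ = factor * scale gives an expectation close to `factor`.
    print(factor, expected_isolated_plus(n, p_n, factor * scale))
```

When \(p_+\) is far above the scale, many isolated \(+1\) vertices are expected, and when it is far below, none are expected. This is only the first-moment heuristic; the proof in Sect. 3 also controls the variance and the non-isolated vertices.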

In majority dynamics, a set \(S\subseteq V\) is said to be a dynamic monopoly [17, 18] if starting from any configuration with all vertices in S holding state \(-1\) (regardless of the states in \(V\backslash S\)), vertices in V will unanimously have state \(-1\) eventually. It is shown in [19] that for any \(n\ge 1\) there exists a graph G with n vertices, which possesses a dynamic monopoly of a constant size. In the following we show that in \(G(n,\mathbf{p}_n)\) the minimal size of a dynamic monopoly is a.a.s. at least nearly a half of the graph size.

Theorem 3 (Dynamic monopoly)

Suppose there exist a sequence \(p_n\in (0,1)\) and positive constants \(\gamma \le 1\), \(c\ge 4(\frac{1}{2}-\theta )^{-1}\sqrt{\frac{1}{\gamma }}\), and \(\frac{c}{\sqrt{np_n}}\le \theta <\frac{1}{2}\) such that for all sufficiently large n the following conditions hold:

and \(\gamma \ge f(\delta )\), where

Then the size of any dynamic monopoly in \(G(n,\mathbf{p}_n)\) is at least \(\left( \frac{1}{2}-\delta \right) n\) a.a.s.

Note that when \(\delta \in (0,1/2)\), we have \(f(\delta )\in (0,1)\). All conditions in Theorem 3 are satisfied with \(\gamma =1\), c sufficiently large, \(p_n(e_{ij})\equiv p_n\) and \(\theta =\frac{c}{\sqrt{np_n}}\). We obtain the following corollary for homogeneous random graph \(G(n,p_n)\) [14, Theorem 2.5].

Corollary 3

The size of any dynamic monopoly in \(G(n,p_n)\) is at least \(\big (\frac{1}{2}-\frac{c}{\sqrt{np_n}}\big )n\) for some large constant \(c>0\) a.a.s.
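For very small graphs, the definition of a dynamic monopoly given before Theorem 3 can be checked by brute force. The sketch below enumerates every initial configuration in which the vertices of S hold \(-1\) and runs the dynamics (1.1) until a configuration repeats. The function names are hypothetical, and the exhaustive search is exponential in \(|V\backslash S|\), so it is meant purely as an illustration.

```python
from itertools import product


def step(adj, state):
    """One synchronous majority round; a vertex keeps its state on a tie."""
    out = []
    for v, nbrs in enumerate(adj):
        total = sum(state[u] for u in nbrs)
        out.append(1 if total > 0 else -1 if total < 0 else state[v])
    return tuple(out)


def reaches_all_minus(adj, state):
    """Run the dynamics until a configuration repeats; report whether all -1 occurs."""
    seen = set()
    while state not in seen:
        if all(x == -1 for x in state):
            return True
        seen.add(state)
        state = step(adj, state)
    return False


def is_dynamic_monopoly(adj, S):
    """Check every configuration in which all vertices of S hold state -1."""
    n = len(adj)
    free = [v for v in range(n) if v not in S]
    for values in product((-1, 1), repeat=len(free)):
        state = [-1] * n
        for v, x in zip(free, values):
            state[v] = x
        if not reaches_all_minus(adj, tuple(state)):
            return False
    return True


# Complete graph K_5 as adjacency lists.
k5 = [[u for u in range(5) if u != v] for v in range(5)]
```

In \(K_5\) the set `{0, 1, 2}` is a dynamic monopoly while `{0, 1}` is not, so in this toy case a strict majority of the vertices is needed.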

3 Proofs

In this section, we present the proofs for the above Theorems 1, 2, and 3. Let N(i) be the set of neighbors of vertex \(i\in V\) in the inhomogeneous random graph \(G(n, \mathbf{p}_n)\). The following lemma will be used in the proof of Theorem 1.

Lemma 1

Suppose there exists a sequence \(p_n\in (0,1)\) and a positive constant \(\alpha <1\) such that for all sufficiently large n the following condition holds:

Then for any vertex \(i\in V\), \(\mathbb {P}(|N(i)|<np_n/c)=o(n^{-1})\) for some sufficiently large constant \(c=c(\alpha )>0\) as \(n\rightarrow \infty \).

Proof

If random variable Y is the sum of a list of independent Bernoulli random variables, we have the following tail estimate:

for any \(\varepsilon \in (0,1)\) by [16, Theorem 2.1, Remark 2.9]. For any \(c>1\), there exists a constant \(c_1>1\) satisfying \(\frac{n-1}{n}c>c_1\) for all sufficiently large n. Therefore,

It follows from (3.1) that \(\mathbb {E}|N(i)|=(n-1)d_n(i)\ge (n-1)p_n\ge \alpha ^{-1}\ln n-p_n\ge \alpha ^{-1}\ln n-1\). Hence,

Since \(\alpha <1\), we can choose a sufficiently large c such that \(c_1\) is large and \((c_1e)^{\frac{1}{\alpha c_1}}<e^{\frac{1}{\alpha }-1}\). Hence, \((c_1e)^{\frac{1}{c_1}(\frac{\ln n}{\alpha }-1)}\ll e^{(\frac{1}{\alpha }-1)(\ln n)-1}\) as \(n\rightarrow \infty \). In other words, \((c_1e)^{\frac{1}{c_1}(\frac{\ln n}{\alpha }-1)}\cdot e^{1-\frac{\ln n}{\alpha }}\ll e^{-\ln n}=1/n\) as \(n\rightarrow \infty \). By an application of (3.2), we obtain the tail estimate \(\mathbb {P}(|N(i)|<np_n/c)=o(n^{-1})\) as desired. \(\square \)

Proof of Theorem 1.

Here, we follow the idea of [14] by dividing the proof into two regimes: (i) \(\max _{i,j\in V}p_n(e_{ij})\ge n^{\theta -1}\) and (ii) \(\max _{i,j\in V}p_n(e_{ij})\le n^{\theta -1}\) for some sufficiently small constant \(\theta >0\).

Regime (i): Claim 1: For any vertex \(i\in V\), \(\mathbb {P}(C_1(i)=+1)=o(1)\) as \(n\rightarrow \infty \).

To show Claim 1, we fix \(i\in V\) and define a random variable Y(i) as the number of vertices in N(i) holding state \(-1\) in \(C_0\), which is the sum of a list of independent Bernoulli random variables. Hence, given N(i), we obtain \(\mathbb {E}(Y(i)|N(i))=\sum _{j\in N(i)}\mathbb {E}1_{\{C_0(j)=-1\}}=|N(i)|(1-p_+)\). By the Hoeffding inequality [20, Corollary 21.7] and majority dynamics (1.1),

where we take \(p_+=\frac{1}{2}-\rho \) and \(\rho =\omega (1/\sqrt{np_n})\le 1/2\). Hence, for any \(c>0\), \(\mathbb {P}(C_1(i)=+1|\ |N(i)|\ge np_n/c)\le \exp \left( -\frac{\rho ^2(\frac{1}{2}+\rho )np_n}{2c}\right) =e^{-\omega (1)}\) as \(n\rightarrow \infty \) by (2.2). Applying Lemma 1 and the total probability formula, we can choose c sufficiently large such that

which concludes the proof of Claim 1.

Given a graph G and two subsets \(S_1, S_2\subseteq V\), we say \(S_1\) controls \(S_2\) [14] if all vertices in \(S_1\) holding the state \(+1\) in \(C_0\) always leads to all vertices in \(S_2\) holding the state \(+1\) in \(C_1\) (regardless of the states of other vertices). By Claim 1, the expected number of vertices holding the state \(+1\) in \(C_1\) is o(n). Hence, the probability that the number of vertices holding \(+1\) in \(C_1\) is greater than \((1-\beta )n\) is no more than \(o(n)/((1-\beta )n)=o(1)\) by Markov’s inequality (cf. [20, Lemma 20.1]). We present the following Claim 2.

Claim 2: Any subset S with \(1\le |S|=s\le (1-\beta )n\) can control no more than \(sn^{-\frac{\theta }{2}}\) vertices a.a.s., where \(\theta >0\) as mentioned in the beginning of the proof is taken as a sufficiently small constant.

If Claim 2 is true, then starting from the (at most) \((1-\beta )n\) vertices holding \(+1\) in \(C_1\), there will be at most \(s(n^{-\frac{\theta }{2}})^{\frac{2}{\theta }}=s/n<1\) vertices holding \(+1\) after \(\frac{2}{\theta }\) further rounds a.a.s., by repeatedly applying Claim 2. This will conclude the proof of Regime (i).

What remains to show is Claim 2. To this end, let \(S'\) be a set with \(|S'|=s'=sn^{-\frac{\theta }{2}}\). By the assumption \(4(1-\beta )/\beta \le \gamma \le 1\), we know \(\beta \ge 4/5\). Noticing that \(|V\backslash S|\ge \beta n\ge (1-\beta )n\), by invoking the regularity condition (2.1) we estimate the expected number of edges between \(S'\) and \(V\backslash S\) as

where \(e(S_1,S_2)=\sum _{i\in S_1}\sum _{j\in S_2}1_{\{e_{ij}\in E(G(n,\mathbf{p} _n))\}}\) and \(E(G(n,\mathbf{p} _n))\) represents the edge set of \(G(n,\mathbf{p} _n)\). Utilizing the concentration inequality [21, Theorem 3.3] and recalling that \(\max _{i,j\in V}p_n(e_{ij})\ge n^{\theta -1}\), we have

Similarly, we calculate \(\mathbb {E}e(S',S)=\sum _{i\in S'}\sum _{j\in S}p_n(e_{ij})=\sum _{i\in S'}|S|d_n(i,S)\). By the regularity condition (2.1), \(s's\gamma \max _{i,j\in V}p_n(e_{ij})\le \mathbb {E}e(S',S)\le s's\cdot \max _{i,j\in V}p_n(e_{ij})\). Since \(\gamma \ge 4(1-\beta )/\beta \) and \(s\le (1-\beta )n\), we have \(\frac{\beta \gamma n}{2s}\ge \frac{\beta \gamma }{2(1-\beta )}\ge 2\). Another application of the concentration inequality gives

where in the last inequality above the assumption of Regime (i) is used. Note that \(\left( 1+\left( \frac{\beta \gamma n}{2s}-1\right) \right) \mathbb {E}e(S', S)\le \frac{\beta \gamma n}{2s}ss'\max _{i,j\in V}p_n(e_{ij})=\frac{\beta \gamma n}{2}s'\cdot \max _{i,j\in V}p_n(e_{ij}):=\Xi _n\) and \(\frac{1}{2}\mathbb {E}e(S',V\backslash S)\ge \Xi _n\) by the above estimates.

Using (3.3), (3.4) and the edge independence in \(G(n, \mathbf{p}_n)\), we obtain

and

If \(e(S',V\backslash S)>e(S',S)\), then there exists a vertex \(i\in S'\) satisfying \(|N(i)\cap V\backslash S|>|N(i)\cap S|\). Therefore, by majority dynamics (1.1), the total probability formula, and the above estimates,

Recall \(\beta \ge 4/5\) and we have

where in the second inequality above we used \(\left( {\begin{array}{c}n\\ sn^{-\frac{\theta }{2}}\end{array}}\right) \le \left( {\begin{array}{c}n\\ s\end{array}}\right) \le n^s\) for any \(s\le (1-\beta )n\) in view of the unimodality and monotonicity of binomial coefficients, and in the last inequality we used \(n^2<e^{\Theta (n^{\frac{\theta }{2}})}\) for large n. This concludes the proof of Claim 2 and hence the proof of Regime (i).

Regime (ii): Claim 3: There exists a sufficiently small constant \(\theta >0\) such that when \(\max _{i,j\in V}p_n(e_{ij})\le n^{\theta -1}\), a.a.s. \(G(n, \mathbf{p}_n)\) contains none of the following four types of subgraphs: two triangles sharing one vertex, two triangles sharing one edge, two 4-cycles sharing one vertex, and two 4-cycles sharing one edge.

Claim 3 can be easily seen by directly estimating the probability of containing any of such subgraphs. Since these graphs can have 4 to 7 vertices and the number of edges in these graphs exceeds the number of vertices by exactly 1, this probability is upper bounded by

for a sufficiently small \(\theta \). This proves Claim 3.
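The counting fact behind Claim 3 is easy to verify mechanically. The Python sketch below builds the four forbidden subgraphs and confirms that each has between 4 and 7 vertices and exactly one more edge than vertices. It also evaluates the exponent of a standard first-moment bound: a fixed graph with v vertices and v+1 edges has at most \(n^v\) placements, each present with probability at most \((n^{\theta -1})^{v+1}\). This bound is an assumption made here and may differ in form from the display used in the proof; all function names are hypothetical.

```python
def counts(edges):
    """Return (number of vertices, number of edges) of a graph given as vertex pairs."""
    edge_set = {frozenset(e) for e in edges}  # a shared edge is counted once
    vertices = set().union(*edge_set)
    return len(vertices), len(edge_set)


def triangle(a, b, c):
    return [(a, b), (b, c), (c, a)]


def four_cycle(a, b, c, d):
    return [(a, b), (b, c), (c, d), (d, a)]


forbidden = {
    "two triangles sharing a vertex": triangle(0, 1, 2) + triangle(0, 3, 4),
    "two triangles sharing an edge": triangle(0, 1, 2) + triangle(0, 1, 3),
    "two 4-cycles sharing a vertex": four_cycle(0, 1, 2, 3) + four_cycle(0, 4, 5, 6),
    "two 4-cycles sharing an edge": four_cycle(0, 1, 2, 3) + four_cycle(0, 1, 4, 5),
}


def first_moment_exponent(v, theta):
    """Exponent of n in n^v * (n^(theta - 1))^(v + 1) = n^(theta * (v + 1) - 1)."""
    return theta * (v + 1) - 1


for name, edges in forbidden.items():
    v, e = counts(edges)
    print(name, v, e, first_moment_exponent(v, theta=0.1))
```

With at most 7 vertices, the exponent is negative whenever \(\theta <1/8\) under this bound, which is consistent with the requirement that \(\theta \) be sufficiently small.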

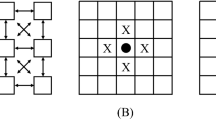

Fix a vertex \(i\in V\) and list its neighbors as \(j_1, j_2,\dots , j_{|N(i)|}\); see Fig. 1 for a schematic illustration of N(i), where i is in at most one 4-cycle with \(j_1\) and \(j_2\), and in at most one triangle with \(j_3\) and \(j_4\). We now estimate the probability \(\mathbb {P}(C_2(i)=+1)\). For any \(5\le l\le |N(i)|\), denote by \(j_l^1, j_l^2,\dots , j_l^{|N(j_l)|-1}\) all neighbors of the vertex \(j_l\) apart from i. Let \(Y_l\) be the number of vertices among \(j_l^1, j_l^2,\dots , j_l^{|N(j_l)|-1}\) holding the state \(-1\) in \(C_0\). The vertex \(j_l\) is called good in \(C_1\) if the event \(Y_l\le |N(j_l)|/2\) holds. In light of majority dynamics (1.1), it is straightforward to see that \(C_1(j_l)=+1\) implies \(j_l\) is good.

Similarly to the proof of Claim 1, given \(N(j_l)\), we have \(\mathbb {E}(Y_{l}|N(j_l))=\sum _{k=1}^{|N(j_l)|-1}\mathbb {E}1_{\{C_0(j_l^k)=-1\}}=(|N(j_l)|-1)(1-p_+)\), where \(p_+=\frac{1}{2}-\rho \) with \(\rho =\omega (1/\sqrt{np_n})\). Without loss of generality, we assume that \(\rho <1/2\). For any constant \(c>0\), using the Hoeffding inequality and the density condition (2.2), we obtain

as \(n\rightarrow \infty \). Hence, taking a sufficiently large \(c>0\) and an arbitrarily small \(\varepsilon >0\), it follows from Lemma 1 and the total probability formula that

In view of Claim 3, we know that a.a.s. no two vertices in \(S:=\{j_5,\dots ,j_{|N(i)|}\}\) can (a) be adjacent or (b) share a neighbor other than i. An example scenario of N(i) is shown in Fig. 1. Define a random variable Y to be the number of vertices in S that are good in \(C_1\). By majority dynamics (1.1) and the above comment about good vertices, we have

Given the above (a) and (b) hold, the events \(\left\{ Y_l\le \frac{|N(j_l)|}{2}\right\} _{l=5}^{|N(i)|}\) are independent. Since their probabilities are upper bounded by (3.5), we see that the right-hand side of (3.6) is no more than

Hence,

as \(n\rightarrow \infty \), since \(\varepsilon >0\) can be arbitrarily small and the density condition (2.2) holds with \(\alpha <1\). Using Lemma 1 and the total probability formula again, we have

As there are n vertices in V, the probability of having some vertex i with \(C_2(i)=+1\) is o(1). The proof of Regime (ii) is complete. \(\square \)

Proof of Theorem 2.

We first consider the case \(p_+=\omega (n^{-1}e^{np_n\gamma })\). It suffices to show there exists an isolated vertex \(k\in V\) with \(C_0(k)=+1\) a.a.s. Let Y be the number of isolated vertices holding the state \(+1\) in \(C_0\) and we will resort to the second moment method; see e.g. [20, Sect. 20.1].

To this end, we estimate

where in the first inequality we used the density condition (2.4) and \(1-x\ge e^{-x-x^2}\) for \(x\in [0,1/2]\), and in the last inequality we applied again the density condition to derive \(p_n^2(e_{ij})\le p_n(e_{ij})\gamma p_n\). Furthermore, it follows from the density condition (2.4) and \(\max _{i\in V}d_n(i)\le \max _{i,j\in V}p_n(e_{ij})\) that

since \(0\le np_n^2\le (\ln n)^2/(n\alpha ^2)\rightarrow 0\) as \(n\rightarrow \infty \).

Next, we estimate the variance \({\text {Var}}(Y)\). By direct calculation, we obtain

where in the last inequality we used the density condition (2.4). Since \(p_n\rightarrow 0\), we have \({\text {Var}}Y=o((\mathbb {E}Y)^2)\) in view of (3.7). An application of the second moment method yields \(\mathbb {P}(Y=0)\le \mathbb {P}(|Y-\mathbb {E}Y|\ge \mathbb {E}Y)\le {\text {Var}}Y/(\mathbb {E}Y)^2=o(1)\) as \(n\rightarrow \infty \). The first statement of Theorem 2 is proved.
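The second moment argument can be illustrated in the homogeneous case \(p_n(e_{ij})\equiv p_n\), where both moments of Y have closed forms: \(\mathbb {E}Y=np_+(1-p_n)^{n-1}\) and \(\mathbb {E}[Y(Y-1)]=n(n-1)p_+^2(1-p_n)^{2n-3}\), since two given vertices are both isolated exactly when \(2n-3\) potential edges are absent. The sketch below evaluates the Chebyshev bound \(\mathrm {Var}Y/(\mathbb {E}Y)^2\). The function name and the parameter values are assumptions chosen for illustration.

```python
import math


def isolated_plus_moments(n, p_n, p_plus):
    """Mean and variance of the number of isolated +1 vertices in G(n, p_n)."""
    q = 1.0 - p_n
    mean = n * p_plus * q ** (n - 1)
    # Ordered pairs of distinct vertices that are both isolated and both +1.
    pairs = n * (n - 1) * p_plus ** 2 * q ** (2 * n - 3)
    var = mean + pairs - mean ** 2
    return mean, var


n = 10**6
p_n = math.log(n) / (2 * n)  # sparse regime with the assumed value alpha = 2
p_plus = 0.1                 # about 100 times the scale e^{n p_n} / n = 10^{-3}
mean, var = isolated_plus_moments(n, p_n, p_plus)
bound = var / mean ** 2      # Chebyshev-type upper bound on P(Y = 0)
print(mean, var, bound)
```

Here the variance is close to the mean, so the bound behaves like \(1/\mathbb {E}Y\) and tends to zero once \(\mathbb {E}Y\rightarrow \infty \).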

Next, we assume \(p_+=o(n^{-1}e^{np_n})\). We will show the second statement of Theorem 2 by showing that \(C_2(i)=-1\) a.a.s. for every \(i\in V\) in three separate cases: (i) \(|N(i)|\ge \frac{4\alpha }{\alpha -1}\), (ii) \(2\le |N(i)|\le \frac{4\alpha }{\alpha -1}\) or \(N(i)=\emptyset \), and (iii) \(|N(i)|=1\).

(i): The idea in this case is to use the first moment method; cf. [20, Lemma 20.1]. Let Z be the number of vertices j with \(|N(j)|\ge \frac{4\alpha }{\alpha -1}\) and taking the state \(+1\) in \(C_1\). We will show \(Z=0\) a.a.s. Fix a vertex \(i\in V\). For the vertex i with degree \(d=|N(i)|\) to take \(+1\) in \(C_1\), there must be at least \(\lfloor \frac{d}{2}\rfloor +1\) vertices in \(\{i\}\cup N(i)\) which take \(+1\) in \(C_0\) by (1.1). Therefore,

$$\begin{aligned} \mathbb {P}(C_1(i)=+1| |N(i)|=d)&\le \sum _{k=\lfloor \frac{d}{2}\rfloor +1}^{d+1}\left( {\begin{array}{c}d+1\\ k\end{array}}\right) p_+^k(1-p_+)^{d+1-k}\\&\le p_+^{\lfloor \frac{d}{2}\rfloor +1}2^{d+1}, \end{aligned}\tag{3.8}$$

where we used \((1-p_+)^{d+1-k}\le 1\) and the binomial theorem. Since \(p_+=o(n^{-1}e^{np_n})\) and the density condition (2.4) holds, the right-hand side of (3.8) is no more than \(2^{d+1}(n^{-1}e^{np_n})^{\frac{d}{2}}\le 2^{d+1}(n^{\frac{1}{\alpha }-1})^{\frac{d}{2}}\). Thanks to the assumption in (i) that \(|N(i)|=d\ge \frac{4\alpha }{\alpha -1}\), we have

$$\begin{aligned} \ln \left( n2^{d+1}(n^{\frac{1}{\alpha }-1})^{\frac{d}{2}}\right) =\ln n+(d+1)\ln 2+\frac{d}{2}\left( \frac{1}{\alpha }-1\right) \ln n\rightarrow -\infty \end{aligned}$$

as \(n\rightarrow \infty \). This means \(2^{d+1}(n^{\frac{1}{\alpha }-1})^{\frac{d}{2}}=o(n^{-1})\). Combining this with (3.8) yields

$$\begin{aligned} \mathbb {P}\Big (\{C_1(i)=+1\}\cap \Big \{|N(i)|\ge \frac{4\alpha }{\alpha -1}\Big \}\Big )&=\mathbb {P}\Big (C_1(i)=+1\Big | |N(i)|\ge \frac{4\alpha }{\alpha -1}\Big )\\&\quad \cdot \mathbb {P}\Big (|N(i)|\ge \frac{4\alpha }{\alpha -1}\Big )\\&=o(n^{-1}). \end{aligned}$$

Hence, \(\mathbb {E}Z=\sum _{i\in V}\mathbb {P}(\{C_1(i)=+1\}\cap \{|N(i)|\ge \frac{4\alpha }{\alpha -1}\})=o(1)\) and the first moment method gives \(\mathbb {P}(Z=0)=1-o(1)\).

(ii): As in (i), we will resort to the first moment method by showing \(\mathbb {E}Z_1=o(1)\) and \(\mathbb {E}Z_2=o(1)\) as \(n\rightarrow \infty \), where \(Z_1\) is the number of vertices j with \(2\le |N(j)|\le \frac{4\alpha }{\alpha -1}\) and taking the state \(+1\) in \(C_1\), and \(Z_2\) is the number of isolated vertices taking \(+1\) in \(C_1\). Fix a vertex \(i\in V\). We first consider \(Z_1\). For any \(d\ge 2\), we have by the regularity condition (2.3) and the density condition (2.4)

$$\begin{aligned} \mathbb {P}(|N(i)|=d)&=\sum _{\begin{array}{c} S:\ |S|=d\\ S=N(i)\subseteq V\backslash \{i\} \end{array}}\prod _{j\in S}p_n(e_{ij})\prod _{j\in V\backslash (S\cup \{i\})}(1-p_n(e_{ij}))\\&\le \sum _{\begin{array}{c} S:\ |S|=d\\ S=N(i)\subseteq V\backslash \{i\} \end{array}}\gamma ^dp_n^de^{-|V\backslash (S\cup \{i\})|d_n(i, V\backslash (S\cup \{i\}))}\\&\le \gamma ^dp_n^d\left( {\begin{array}{c}n-1\\ d\end{array}}\right) e^{-p_n(n-1-d)}. \end{aligned}\tag{3.9}$$

In view of (3.8), we obtain

$$\begin{aligned} \mathbb {P}(C_1(i)=+1| |N(i)|=d)\le 2^{d+1}p_+^{\lfloor \frac{d}{2}\rfloor +1}=2^{d+1}o\left( n^{-1}e^{np_n}\right) ^{\lfloor \frac{d}{2}\rfloor +1}. \end{aligned}\tag{3.10}$$

Combining (3.9) and (3.10), and using the density assumption \(np_n\le \alpha ^{-1}\ln n\), we have

$$\begin{aligned}&\mathbb {P}(\{C_1(i)=+1\}\cap \{|N(i)|=d\})\\&\quad \le 2^{d+1}o\left( \frac{e^{np_n}}{n}\right) ^{\lfloor \frac{d}{2}\rfloor +1}\gamma ^dp_n^d\left( {\begin{array}{c}n-1\\ d\end{array}}\right) e^{-p_n(n-1-d)}\\&\quad \le 2^{d+1}\gamma ^d\left( \frac{e^{np_n}}{n}\right) ^{\lfloor \frac{d}{2}\rfloor +1}\left( \frac{\ln n}{\alpha n}\right) ^d n^d \frac{e^{p_n(1+d)}}{e^{np_n}}\\&\quad \le 2^{d+1}\gamma ^de^{1+d}\alpha ^{-d}\cdot \frac{(\ln n)^de^{np_n\lfloor \frac{d}{2}\rfloor }}{n^{\lfloor \frac{d}{2}\rfloor +1}}\\&\quad \le 2^{d+1}\gamma ^de^{1+d}\alpha ^{-d}\cdot \frac{(\ln n)^d}{n^{\lfloor \frac{d}{2}\rfloor +1-\alpha ^{-1}\lfloor \frac{d}{2}\rfloor }}=o(n^{-1}), \end{aligned}$$

where in the last estimate we used the assumption \(d\ge 2\) and \(\alpha >1\). Consequently,

$$\begin{aligned} \mathbb {E}Z_1\le \sum _{i\in V}\sum _{d=2}^{\frac{4\alpha }{\alpha -1}}\mathbb {P}(\{C_1(i)=+1\}\cap \{|N(i)|=d\})=\sum _{d=2}^{\frac{4\alpha }{\alpha -1}}o(1)=o(1), \end{aligned}$$

as \(n\rightarrow \infty \). In a similar manner, using (2.3) and (2.4) we can bound the expectation of \(Z_2\) as

$$\begin{aligned} \mathbb {E}Z_2=\sum _{i\in V}p_+\prod _{j\in V\backslash \{i\}}(1-p_n(e_{ij}))\le \sum _{i\in V}o\left( \frac{e^{np_n}}{n}\right) e^{-p_n(n-1)}=o(1), \end{aligned}$$

as \(n\rightarrow \infty \). The first moment method gives \(Z_1=Z_2=0\) a.a.s.

(iii): From (i) and (ii) we know that all non-leaf vertices take the state \(-1\) in \(C_1\) a.a.s. Hence, any vertex that is not adjacent to any leaf vertex a.a.s. takes the state \(-1\) in \(C_2\) by majority dynamics (1.1). For a vertex i which is adjacent to at least one leaf vertex, we will show that \(C_2(i)=-1\) a.a.s. The strategy here is to consider all possibilities that will lead to \(C_2(i)=+1\) and show this happens with probability o(1). Possibility (a): i is adjacent to at least two leaf vertices, say \(i_1\) and \(i_2\). In this case, \(C_0(i)=+1\). [Otherwise, those leaf neighbors take \(-1\) in \(C_1\), and all non-leaf neighbors of i (if any) also take \(-1\) in \(C_1\). This leads to \(C_2(i)=-1\), which contradicts the assumption.] Possibility (b): i is adjacent to only one leaf vertex, say \(i_3\). In this case, i has no other neighbors. [Otherwise, these non-leaf neighbors and i must take the state \(-1\) in \(C_1\) a.a.s. By (1.1), \(C_2(i)=-1\). This contradicts the assumption.] Moreover, \(C_0(i)=+1\). [Otherwise, the leaf neighbor \(i_3\) holds \(-1\) in \(C_1\), and hence \(C_2(i)=-1\), which is a contradiction.] The expected number of vertices satisfying (a) or (b) is upper bounded by

$$\begin{aligned}&n\left( {\begin{array}{c}n-1\\ 2\end{array}}\right) p_+p_n(e_{ii_1})p_n(e_{ii_2})\prod _{j\not =i,i_1}(1-p_n(e_{i_1j}))\prod _{j\not =i,i_1,i_2}(1-p_n(e_{i_2j}))\\&+n\left( {\begin{array}{c}n-1\\ 1\end{array}}\right) p_+\prod _{j\not =i,i_3}(1-p_n(e_{i_3j}))\prod _{j\not =i,i_3}(1-p_n(e_{ij}))\cdot p_n(e_{ii_3})\\&\quad \le n^3p_+\gamma ^2p_n^2e^{-\sum _{j\not =i,i_1}p_n(e_{i_1j})-\sum _{j\not =i,i_1,i_2}p_n(e_{i_2j})}\\&\qquad +n^2p_+\gamma p_ne^{-\sum _{j\not =i,i_3}p_n(e_{i_3j})-\sum _{j\not =i,i_3}p_n(e_{ij})}, \end{aligned}\tag{3.11}$$

where we used the density condition (2.4). Recall \(p_+=o(n^{-1}e^{np_n})\), \(\sum _{j\not =i,i_1}p_n(e_{i_1j})\ge p_n(n-2)\), \(\sum _{j\not =i,i_1,i_2}p_n(e_{i_2j})\ge p_n(n-3)\), \(\sum _{j\not =i,i_3}p_n(e_{i_3j})\ge p_n(n-2)\), and \(\sum _{j\not =i,i_3}p_n(e_{ij})\ge p_n(n-2)\) by the regularity condition (2.3). Therefore, the right-hand side of (3.11) is further upper bounded by

$$\begin{aligned}&n^3o\left( \frac{e^{np_n}}{n}\right) \gamma ^2p_n^2e^{-(2n-5)p_n} +n^2o\left( \frac{e^{np_n}}{n}\right) \gamma p_ne^{-(2n-4)p_n}\\&\quad =o\left( \frac{n^2p_n^2}{e^{np_n}}\right) +o\left( \frac{np_n}{e^{np_n}}\right) =o(1). \end{aligned}$$

The last step above holds since \(\ln (n^2p_n^2e^{-np_n})=2\ln (np_n)-np_n\le 1\) and \(\ln (np_ne^{-np_n})=\ln (np_n)-np_n\le 1\) for sufficiently large n. Hence, we complete the proof of the second statement of Theorem 2.

\(\square \)
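The elementary estimate (3.8), used in cases (i) and (ii) of the proof above, can be checked numerically. The sketch below compares the binomial tail with the cruder bound \(p_+^{\lfloor d/2\rfloor +1}2^{d+1}\) over a range of degrees d and a few hypothetical values of \(p_+\). The function names are illustrative only.

```python
from math import comb


def tail_at_least(d, p_plus):
    """P(at least floor(d/2) + 1 of d + 1 independent vertices start in state +1)."""
    m = d + 1
    k0 = d // 2 + 1
    return sum(comb(m, k) * p_plus ** k * (1 - p_plus) ** (m - k)
               for k in range(k0, m + 1))


def crude_bound(d, p_plus):
    """Right-hand side of (3.8): p_+^(floor(d/2) + 1) * 2^(d + 1)."""
    return p_plus ** (d // 2 + 1) * 2 ** (d + 1)


for d in (2, 5, 10, 20):
    for p_plus in (0.001, 0.01, 0.1):
        print(d, p_plus, tail_at_least(d, p_plus), crude_bound(d, p_plus))
```

The crude bound is far from sharp, but the exponent \(\lfloor \frac{d}{2}\rfloor +1\) on \(p_+\) is the part that drives the first moment computations.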

Proof of Theorem 3.

It suffices to show that any subset S with \(|S|=s=(1/2-\delta )n\) can control no more than s vertices a.a.s.

To this end, let \(S'\) be a set with \(|S'|=s\). We will estimate the probability that S can control \(S'\). Since \(\mathbb {E}(e(S',S))=\sum _{i\in S'}\sum _{j\in S}p_n(e_{ij})=\sum _{i\in S'}|S|d_n(i,S)\), we obtain the bounds

by using the regularity condition (2.5). Likewise, another application of (2.5) yields

Using the concentration inequality [21, Theorem 3.3], we obtain

where we applied the inequality \(\max _{i,j\in V}p_n(e_{ij})\ge \max _{i\in V}d_n(i)\) and the density condition (2.6). Since \(c\ge 4(\frac{1}{2}-\theta )^{-1}\sqrt{\frac{1}{\gamma }}\) and \(0\le \delta \le \theta <1/2\),

Thus, \(\mathbb {P}(e(S',V\backslash S)\le (1-\delta )\mathbb {E}(e(S',V\backslash S)))\le e^{-2n}\). Similarly, by the concentration inequality and (2.6),

Noting that

we obtain \(\mathbb {P}(e(S', S)\ge (1+\delta )\mathbb {E}(e(S', S)))\le e^{-\frac{24}{13}n}\). Since \(\gamma \ge f(\delta )\), we know

Therefore, by the total probability formula and the independence between random variables \(e(S',S)\) and \(e(S',V\backslash S)\), we have

Thanks to majority dynamics (1.1), we obtain \(\mathbb {P}(S\ \mathrm {controls}\ S')\le \mathbb {P}(e(S',S)\ge e(S',V\backslash S))\le e^{-2n}+e^{-\frac{24}{13}n}\). Considering all possibilities of the two sets S and \(S'\), we derive that the probability that some set S controls another set \(S'\) of the same size is upper bounded by

as \(n\rightarrow \infty \), where we used the estimate \(\left( {\begin{array}{c}n\\ s\end{array}}\right) \le \left( {\begin{array}{c}n\\ \frac{n}{2}\end{array}}\right) \le \left( \frac{en}{\frac{n}{2}}\right) ^{\frac{n}{2}}\). This concludes the proof of Theorem 3. \(\square \)
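The final union bound can be sanity-checked numerically. The sketch below assumes that the bound has the form \(\left( {\begin{array}{c}n\\ n/2\end{array}}\right) ^2\big (e^{-2n}+e^{-\frac{24}{13}n}\big )\), that is, the number of pairs \((S,S')\) times the probability estimate from the previous paragraph. This form is an assumption made here, and the function names are hypothetical. Everything is computed on a logarithmic scale to avoid overflow.

```python
import math


def log_central_binom(n):
    """ln C(n, n/2) for even n, via log-gamma."""
    return math.lgamma(n + 1) - 2 * math.lgamma(n // 2 + 1)


def log_union_bound(n):
    """ln of C(n, n/2)^2 * (e^{-2n} + e^{-24n/13}) for even n."""
    # e^{-2n} + e^{-24n/13} = e^{-24n/13} * (1 + e^{-2n/13})
    log_tail = -24 * n / 13 + math.log1p(math.exp(-2 * n / 13))
    return 2 * log_central_binom(n) + log_tail


for n in (100, 1000, 10000):
    crude = (n / 2) * math.log(2 * math.e)  # ln of the estimate (en / (n/2))^{n/2}
    print(n, log_central_binom(n) <= crude, log_union_bound(n))
```

Even with the cruder estimate \((2e)^{n/2}\) for the binomial coefficient, the exponent is \(n\ln (2e)-\frac{24}{13}n<0\) because \(\ln (2e)\approx 1.693<\frac{24}{13}\approx 1.846\), so the bound decays exponentially in n.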

References

1. Krapivsky, P.L., Redner, S.: Dynamics of majority rule in two-state interacting spin systems. Phys. Rev. Lett. 90, 238701 (2003)

2. Pereira, L.F.C., Moreira, F.G.B.: Majority-vote model on random graphs. Phys. Rev. E 71, 016123 (2005)

3. Shang, Y.: Consensus in averager-copier-voter networks of moving dynamical agents. Chaos 27, 023116 (2017)

4. Balogh, J., Bollobás, B., Morris, R.: Majority bootstrap percolation on the hypercube. Comb. Prob. Comput. 18, 17–51 (2009)

5. Holmgren, C., Juškevičius, T., Kettle, N.: Majority bootstrap percolation on \(G(n, p)\). Elect. J. Combin. 24, P1.1 (2017)

6. Goles, E., Olivos, J.: Periodic behaviour of generalized threshold functions. Discrete Math. 30, 187–189 (1980)

7. Peres, L.R., Fontanari, J.F.: Statistics of opinion domains of the majority-vote model on a square lattice. Phys. Rev. E 82, 046103 (2010)

8. Gärtner, B., Zehmakan, A.N.: Color war: Cellular automata with majority-rule. In: Drewes, F., Martin-Vide, C., Truthe, B. (eds.) Language and Automata Theory and Applications, Proceedings of 11th International Conference of LATA. Springer, pp. 393–404 (2017)

9. Fontes, L.R., Schonmann, R., Sidoravicius, V.: Stretched exponential fixation in stochastic Ising models at zero temperature. Commun. Math. Phys. 228, 495–518 (2002)

10. Kanoria, Y., Montanari, A.: Majority dynamics on trees and the dynamic cavity method. Ann. Appl. Probab. 21, 1694–1748 (2011)

11. Gärtner, B., Zehmakan, A.N.: Majority model on random regular graphs. In: Bender, M.A., Farach-Colton, M., Mosteiro, M.A. (eds.) LATIN 2018: Theoretical Informatics, Proc. 13th Latin American Symposium. Springer, pp. 572–583 (2018)

12. Benjamini, I., Chan, S.-O., O’Donnell, R., Tamuz, O., Tan, L.-Y.: Convergence, unanimity and disagreement in majority dynamics on unimodular graphs and random graphs. Stoch. Process. Appl. 126, 2719–2733 (2016)

13. Tran, L., Vu, V.: Reaching a consensus on random networks: the power of few. In: Byrka, J., Meka, R. (eds.) Approximation, Randomization, and Combinatorial Optimization: Algorithms and Techniques (APPROX/RANDOM 2020). Leibniz International Proceedings in Informatics vol. 176, Schloss Dagstuhl-Leibniz-Zentrum für Informatik, Germany, pp. 20:1–20:15 (2020)

14. Zehmakan, A.N.: Opinion forming in Erdős-Rényi random graph and expanders. Discrete Appl. Math. 277, 280–290 (2020)

15. Fountoulakis, N., Kang, M., Makai, T.: Resolution of a conjecture on majority dynamics: rapid stabilisation in dense random graphs. arXiv:1910.05820 (2019)

16. Janson, S., Łuczak, T., Ruciński, A.: Random Graphs. Wiley, New Jersey (2000)

17. Peleg, D.: Local majorities, coalitions and monopolies in graphs: a review. Theor. Comput. Sci. 282, 231–257 (2002)

18. Avin, C., Lotker, Z., Mizrachi, A., Peleg, D.: Majority vote and monopolies in social networks. In: Proceedings of 20th International Conference on Distributed Computing and Networking, Bangalore, India, pp. 342–351 (2019)

19. Berger, E.: Dynamic monopolies of constant size. J. Combin. Theory Ser. B 83, 191–200 (2001)

20. Frieze, A., Karoński, M.: Introduction to Random Graphs. Cambridge University Press, Cambridge (2016)

21. Chung, F., Lu, L.: Concentration inequalities and martingale inequalities: a survey. Int. Math. 3, 79–127 (2006)

Acknowledgements

The author would like to thank Ahad Zehmakan for offering a copy of thesis. The author is also grateful to the anonymous referee for detailed comments that helped to improve the quality of the paper. The work is supported by UoA Flexible Fund No. 201920A1001 from Northumbria University.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Shang, Y. A Note on the Majority Dynamics in Inhomogeneous Random Graphs. Results Math 76, 119 (2021). https://doi.org/10.1007/s00025-021-01436-z
