Abstract

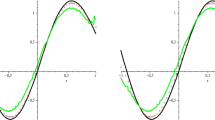

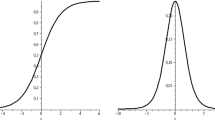

In the present paper, we obtain a saturation result for the neural network (NN) operators of the max-product type. In particular, we show that no non-constant continuous function on the interval \([0, 1]\) can be approximated by the operators \(F^{(M)}_n\), \(n \in \mathbb{N}^+\), with a rate of convergence faster than \(1/n\). Moreover, since it is known that any Lipschitz function \(f\) can be approximated by these NN operators with order of approximation \(1/n\), we also prove a local inverse result, which yields a characterization of the saturation (Favard) classes.
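
To make the statement concrete, the following minimal Python sketch evaluates a max-product NN operator numerically. It assumes the construction used in the authors' earlier work on max-product NN operators: for a nonnegative \(f\) on \([a, b]\), \(F^{(M)}_n(f, x) = \bigvee_{k} f(k/n)\, \phi_\sigma(nx - k) \big/ \bigvee_{k} \phi_\sigma(nx - k)\), where \(k\) runs from \(\lceil na \rceil\) to \(\lfloor nb \rfloor\), \(\bigvee\) denotes the maximum, and \(\phi_\sigma(x) = [\sigma(x+1) - \sigma(x-1)]/2\). The logistic choice of \(\sigma\), the helper names `max_product_nn` and `sup_error`, and the grid-based estimate of the sup norm are illustrative assumptions, not taken from this abstract. The sketch only checks numerically that, for the Lipschitz function \(f(x) = x\), the quantity \(n \cdot \|F^{(M)}_n f - f\|_\infty\) stays roughly constant; it proves nothing about saturation.

```python
import math

def sigma(x: float) -> float:
    """Logistic sigmoidal function (an assumed choice of activation)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # this branch avoids overflow for very negative x
    return z / (1.0 + z)

def phi(x: float) -> float:
    """Density generated by sigma: phi(x) = [sigma(x + 1) - sigma(x - 1)] / 2."""
    return 0.5 * (sigma(x + 1.0) - sigma(x - 1.0))

def max_product_nn(f, n: int, x: float, a: float = 0.0, b: float = 1.0) -> float:
    """Evaluate F_n^(M)(f, x) for a nonnegative f on [a, b] (hypothetical helper)."""
    ks = range(math.ceil(n * a), math.floor(n * b) + 1)
    weights = [phi(n * x - k) for k in ks]
    # Max-product: the sums of the linear NN operator are replaced by maxima.
    num = max(f(k / n) * w for k, w in zip(ks, weights))
    den = max(weights)  # strictly positive, since phi > 0 near nx
    return num / den

def sup_error(f, n: int, grid_size: int = 1001) -> float:
    """Approximate ||F_n^(M)(f) - f||_inf on [0, 1] by sampling a uniform grid."""
    xs = [i / (grid_size - 1) for i in range(grid_size)]
    return max(abs(max_product_nn(f, n, x) - f(x)) for x in xs)

if __name__ == "__main__":
    f = lambda t: t  # Lipschitz, non-constant, nonnegative on [0, 1]
    for n in (10, 20, 40, 80):
        err = sup_error(f, n)
        # If the error decays like 1/n, n * err stays roughly constant.
        print(f"n = {n:3d}   sup error = {err:.5f}   n * error = {n * err:.3f}")
```

Constant functions are reproduced exactly (the same factor appears in numerator and denominator), which is consistent with the saturation result applying only to non-constant functions. Functions taking negative values would first need to be shifted to be nonnegative under this assumed definition.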

Acknowledgements

The authors are members of the Gruppo Nazionale per l’Analisi Matematica, la Probabilità e le loro Applicazioni (GNAMPA) of the Istituto Nazionale di Alta Matematica (INdAM). The authors are partially supported by the Department of Mathematics and Computer Science of the University of Perugia (Italy). Moreover, the first author holds a postdoctoral research grant funded by INdAM and has been partially supported within the GNAMPA-INdAM project “Approssimazione con operatori discreti e problemi di minimo per funzionali del calcolo delle variazioni con applicazioni all’imaging” (Approximation by discrete operators and minimization problems for functionals of the calculus of variations, with applications to imaging).

About this article

Cite this article

Costarelli, D., Vinti, G. Saturation Classes for Max-Product Neural Network Operators Activated by Sigmoidal Functions. Results Math 72, 1555–1569 (2017). https://doi.org/10.1007/s00025-017-0692-6

DOI: https://doi.org/10.1007/s00025-017-0692-6