Abstract

We generalize Lévy’s lemma, a concentration-of-measure result for the uniform probability distribution on high-dimensional spheres, to a much more general class of measures, so-called GAP measures. For any given density matrix \(\rho \) on a separable Hilbert space \({\mathcal {H}}\), \({\textrm{GAP}}(\rho )\) is the most spread-out probability measure on the unit sphere of \({\mathcal {H}}\) that has density matrix \(\rho \) and thus forms the natural generalization of the uniform distribution. We prove concentration-of-measure whenever the largest eigenvalue \(\Vert \rho \Vert \) of \(\rho \) is small. We use this fact to generalize and improve well-known and important typicality results of quantum statistical mechanics to GAP measures, namely canonical typicality and dynamical typicality. Canonical typicality is the statement that for “most” pure states \(\psi \) of a given ensemble, the reduced density matrix of a sufficiently small subsystem is very close to a \(\psi \)-independent matrix. Dynamical typicality is the statement that for any observable and any unitary time evolution, for “most” pure states \(\psi \) from a given ensemble the (coarse-grained) Born distribution of that observable in the time-evolved state \(\psi _t\) is very close to a \(\psi \)-independent distribution. So far, canonical typicality and dynamical typicality were known for the uniform distribution on finite-dimensional spheres, corresponding to the micro-canonical ensemble, and for rather special mean-value ensembles. Our result shows that these typicality results hold also for \({\textrm{GAP}}(\rho )\), provided the density matrix \(\rho \) has small eigenvalues. Since certain GAP measures are quantum analogs of the canonical ensemble of classical mechanics, our results can also be regarded as a version of equivalence of ensembles.



1 Introduction

In the 21st century, a modern perspective on quantum statistical mechanics has been to consider an individual closed system in a pure state and to investigate the thermodynamic behavior of the system and its subsystems; see, e.g., [1, 2, 7,8,9, 11, 12, 15, 16, 20, 31, 34, 36,37,38,39, 41, 42, 45, 48] after pioneering work in [4, 40, 44, 47, 51].

Roughly speaking, “canonical typicality” is the statement that the reduced density matrix of a subsystem obtained from a pure state of the total system is nearly deterministic if the pure state is randomly drawn from a sufficiently large subspace and the subsystem is not too large. More precisely, the original statement of canonical typicality [7, 18, 26, 31] asserts that for most pure states \(\psi \) from a high-dimensional (e.g., micro-canonical) subspace \({\mathcal {H}}_R\) of the Hilbert space \({\mathcal {H}}_S\) of a macroscopic quantum system S and for a subsystem a of \(S=a\cup b\) so that \({\mathcal {H}}_S={\mathcal {H}}_a \otimes {\mathcal {H}}_b\), the reduced density matrix
\[\rho _a^\psi := {{\,\textrm{tr}\,}}_b |\psi \rangle \langle \psi | \tag{1}\]

is close to the partial trace of \(\rho _R:=P_R/d_R\) (the normalized projection to \({\mathcal {H}}_R\)) and thus deterministic, provided that \(d_R:=\dim {\mathcal {H}}_R\) is sufficiently large:
\[\rho _a^\psi \approx {{\,\textrm{tr}\,}}_b \rho _R . \tag{2}\]

Here, the words “most \(\psi \)” refer to the uniform distribution \(u_R\) (normalized surface area measure) over the unit sphere
\[{\mathbb {S}}({\mathcal {H}}_R) := \{\psi \in {\mathcal {H}}_R : \Vert \psi \Vert = 1\} \tag{3}\]

in \({\mathcal {H}}_R\). The name “canonical typicality” comes from the fact that if \({\mathcal {H}}_R={\mathcal {H}}_\textrm{mc}\) is a micro-canonical subspace and thus \(\rho _R=\rho _\textrm{mc}\) is a micro-canonical density matrix, then \({{\,\textrm{tr}\,}}_b \rho _\textrm{mc}\) is close to the canonical density matrix (with \(H_a\) denoting the Hamiltonian of a)
\[\rho _{a,\beta } := \frac{e^{-\beta H_a}}{{{\,\textrm{tr}\,}}_a\, e^{-\beta H_a}} \tag{4}\]

for a with suitable \(\beta \), provided b is large and the interaction between a and b is weak; see, e.g., [18] for a summary of the standard derivation of this fact.

In this paper, we replace the uniform distribution by other, much more general distributions, so-called GAP measures, and show that for them a generalized canonical typicality remains valid. For any density matrix \(\rho \) replacing \(\rho _R\) in \({\mathcal {H}}_S\), \({\textrm{GAP}}(\rho )\) is the most spread-out distribution over \({\mathbb {S}}({\mathcal {H}}_S)\) with density matrix \(\rho \); the acronym stands for Gaussian adjusted projected measure [19, 23]. For \(\rho =\rho _{\textrm{can}}\), it arises as the distribution of wave functions in thermal equilibrium [17, 19]. If a system is initially in thermal equilibrium for the Hamiltonian \(H_0\) but then driven out of equilibrium by means of a time-dependent \(H_t\), its wave function will still be \({\textrm{GAP}}(\rho )\)-distributed for suitable \(\rho \). For general \(\rho \), we think of \({\textrm{GAP}}(\rho )\) as the natural ensemble of wave functions with density matrix \(\rho \); for a more detailed description, see Sect. 2.2.

We prove quantitative bounds asserting that for any \(\rho \) with small eigenvalues (so \(\rho \) is far from pure) and \({\textrm{GAP}}(\rho )\)-most \(\psi \in {\mathbb {S}}({\mathcal {H}}_S)\),
\[\rho _a^\psi \approx {{\,\textrm{tr}\,}}_b \rho . \tag{5}\]

Some reasons for seeking this generalization are as follows: first, that it is mathematically natural; second, that in situations in which we can ask what the actual distribution of \(\psi \) is (more detail later), this distribution might not be uniform; third, that it shows that the sharp cut-off of energies involved in the definition of \({\mathcal {H}}_\textrm{mc}\) actually plays no role; and finally, that it informs and extends our picture of the equivalence of ensembles. A more detailed discussion of these reasons is given in Sect. 2.1.

As a direct consequence of generalized canonical typicality let us mention that, just as canonical typicality implies that for most pure states \(\psi \in {\mathbb {S}}({\mathcal {H}}_S)\) the entanglement entropy \(-{{\,\textrm{tr}\,}}(\rho _a^\psi \log \rho _a^\psi )\) has nearly the maximal value \(\log d_a\) with \(d_a=\dim {\mathcal {H}}_a\) [22] (because \(\rho _a^\psi \approx {{\,\textrm{tr}\,}}_b I_S/D = I_a/d_a\) with I the identity operator and \(D=d_ad_b=\dim {\mathcal {H}}_S\)), generalized canonical typicality implies that \({\textrm{GAP}}(\rho )\)-typical \(\psi \) have entanglement entropy \(-{{\,\textrm{tr}\,}}(\rho _a^\psi \log \rho _a^\psi )\approx -{{\,\textrm{tr}\,}}(\rho _a\log \rho _a)\) with \(\rho _a={{\,\textrm{tr}\,}}_b\rho \).

Since different probability distributions over the unit sphere in a Hilbert space \({\mathcal {H}}\) can have the same density matrix, and since the outcome statistics of any experiment depend only on the density matrix, it may at first seem irrelevant to even consider distributions over \({\mathbb {S}}({\mathcal {H}})\). However, for example, an ensemble of spins prepared so that (about) half are in state \(\bigl | \uparrow \bigr \rangle \) and the others in \(\bigl | \downarrow \bigr \rangle \) is physically different from a uniform ensemble over \({\mathbb {S}}({\mathbb {C}}^2)\), even though both ensembles have density matrix \(\tfrac{1}{2}I\). Likewise, for an ensemble of particles prepared by taking them from a system in thermal equilibrium, the wave function is GAP-distributed (see Sect. 2.2). More basically, probability distributions play a key role in any typicality statement, i.e., one saying that some condition is satisfied by most wave functions, with “most” taken relative to a certain distribution; such a statement cannot be formulated in terms of density matrices.

We note that the generalization of canonical typicality from uniform measures to GAP measures is not straightforward. First, not every measure \(\mu \) over \({\mathbb {S}}({\mathcal {H}}_S)\) with a given density matrix \(\rho \) with small eigenvalues makes it true that for \(\mu \)-most \(\psi \), \(\rho _a^\psi \approx {{\,\textrm{tr}\,}}_b \rho \). We give a counter-example in Remark 15 in Sect. 3. Second, if \(\rho \) is not close to a multiple of a projection, then GAP\((\rho )\) is far from uniform; specifically, its density will at some points be larger than at others by a factor like \(\exp (D)\) (see Remark 13). And third, even measures close to uniform (for example the von Mises-Fisher distribution, see again Remark 13) can fail to satisfy generalized canonical typicality.

In this paper, we prove generalized canonical typicality in rigorous form by providing error bounds for (5) at any desired confidence level that is implicit in the word “most,” see Theorem 1 and Theorem 3. Compared to the known error bounds based on \(u_R\), we can prove more or less the same bounds with \(d_R\) replaced by the reciprocal of the largest eigenvalue of \(\rho \),
\[\frac{1}{p_{\max }}, \qquad p_{\max } := \Vert \rho \Vert , \tag{6}\]

with \(\Vert \cdot \Vert \) the operator norm. Thus, the approximation is good as soon as no single direction contributes too much to \(\rho \). In particular, for \(\rho =\rho _R\), our results essentially reproduce the known error bounds. As one central part of our proof, we also establish a variant of Lévy’s lemma [24, 25, 27] (a statement about the concentration of measure on a high-dimensional sphere, see below) for GAP measures instead of the uniform measure (Theorem 2). In particular, our version of Lévy’s lemma holds also on infinite dimensional spheres, where the uniform measure does not exist.

Furthermore, we provide several corollaries. The first one shows that for any observable and \({\textrm{GAP}}(\rho )\)-most \(\psi \), the coarse-grained Born distribution is near a \(\psi \)-independent one (see Remark 4 in Sect. 3.1 for discussion). The second arises from evolving the observable with time and provides a form of dynamical typicality [2], which means that for typical initial wave functions, the time evolution “looks” the same; here, “typical” refers to the \({\textrm{GAP}}(\rho )\) distribution, and “look” (which in [48] meant the macroscopic appearance) refers to the Born distribution for the observable considered. In fact, Corollary 2 even shows that the relevant kind of closeness (to a t-dependent but \(\psi \)-independent distribution) holds jointly for most \(t\in [0,T]\). As a further variant (Corollary 3), dynamical typicality also holds when “look” refers to \(\rho _a^\psi \). Put differently, the statement here is that for \({\textrm{GAP}}(\rho )\)-most \(\psi \) and most \(t\in [0,T]\),
\[\rho _a^{\psi _t} \approx {{\,\textrm{tr}\,}}_b \rho _t , \tag{7}\]

where \(\psi _t=U_t \,\psi \) and \(\rho _t=U_{t} \, \rho \, U_{t}^*\) for an arbitrary unitary time evolution \(U_{t}\) (allowing for time-dependent \(H_t\)). In the original version of canonical typicality, one considers in particular for \(\rho _R\) the micro-canonical density matrix \(\rho _\textrm{mc}\) for a fixed Hamiltonian H, for which the time evolution yields nothing interesting because \(\rho _\textrm{mc}\) is invariant under it; but if we consider arbitrary \(\rho \), then \(\rho \) can evolve in a non-trivial way even for fixed H.

Another corollary (Corollary 4) concerns the conditional wave function \(\psi _a\) of a (which is the natural notion of the subsystem wave function for a, see Sect. 2.2 for the definition): It is known that if \(d_R\) is large, then for \(u_R\)-most \(\psi \) and most bases of \({\mathcal {H}}_b\), the Born distribution of \(\psi _a\) is approximately \({\textrm{GAP}}({{\,\textrm{tr}\,}}_b \rho _R)\). We generalize this statement as follows: if \(d_b\) is large and \(\rho \) has small eigenvalues, then for \({\textrm{GAP}}(\rho )\)-most \(\psi \) and most bases of \({\mathcal {H}}_b\), the Born distribution of \(\psi _a\) is approximately \({\textrm{GAP}}({{\,\textrm{tr}\,}}_b \rho )\).

The results of this paper can also be regarded as a variant of equivalence-of-ensembles in quantum statistical mechanics, i.e., as a new instance of the well-known phenomenon in statistical mechanics that it does not make a big difference whether we use the micro-canonical ensemble or the canonical one (for suitable \(\beta \)) or another equilibrium ensemble. Indeed, the uniform distribution over the unit sphere in a micro-canonical subspace can be regarded as a quantum analog of the micro-canonical distribution in classical statistical mechanics, and the GAP measure associated with a canonical density matrix as a quantum analog of the canonical distribution; see also Remark 11 in Sect. 3.2.

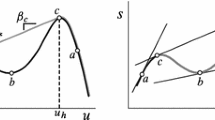

Our results on generalized canonical typicality (5) provide two kinds of error bounds based on two strategies of proof. They are roughly analogous to the following two bounds on the probability that a random variable X deviates from its expectation \({\mathbb {E}}X\) by more than n standard deviations \(\sqrt{{{\,\textrm{Var}\,}}(X)}\): First, the Chebyshev inequality yields the bound \(1/n^2\), which is valid for any distribution of X. Second, the Gaussian distribution has very light tails, so if X is Gaussian distributed, then the aforementioned probability is actually smaller than \(e^{-n}\) (a type of bound known as a Chernoff bound), so the Chebyshev bound would be very coarse. Likewise, the two kinds of bound we provide are based, respectively, on the Chebyshev inequality and the Chernoff bound (in the form of Lévy’s lemma). The former is polynomial in \(p_{\max }\), the latter exponential as in \(e^{-1/p_{\max }}\). For the original statement of canonical typicality (using \(u_R\)), the Chebyshev-type bounds were first given by Sugita [46], the Chernoff-type bounds by Popescu et al. [30]. Our proof of the Chebyshev-type bounds makes heavy use of results of Reimann [35].
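To make this comparison concrete, the following Python sketch evaluates, for a few values of n, the Chebyshev bound \(1/n^2\), the exact two-sided tail \(\textrm{erfc}(n/\sqrt{2})\) of a Gaussian X, and \(e^{-n}\). The helper names are our own and purely illustrative.

```python
import math

def chebyshev_bound(n: float) -> float:
    """Chebyshev: P(|X - EX| > n * sd) <= 1/n^2 for any X with finite variance."""
    return 1.0 / n ** 2

def gaussian_tail(n: float) -> float:
    """Exact P(|X - EX| > n * sd) when X is Gaussian."""
    return math.erfc(n / math.sqrt(2.0))

for n in (1, 2, 3, 4, 5):
    print(f"n={n}: Chebyshev {chebyshev_bound(n):.2e}, "
          f"Gaussian tail {gaussian_tail(n):.2e}, e^(-n) {math.exp(-n):.2e}")
```

Already for moderate n, the Gaussian tail lies far below the distribution-free Chebyshev bound, which is the gap between the two kinds of bounds described above.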

A version of Lévy’s lemma was also established for the mean-value ensemble on a finite-dimensional Hilbert space \({\mathcal {H}}\) [28]. This is the uniform distribution on \({\mathbb {S}}({\mathcal {H}})\) restricted to the set \(\{\psi \in {\mathbb {S}}({\mathcal {H}}):\langle \psi |A|\psi \rangle =a\}\) for a given observable A and a value a satisfying further conditions. However, as the authors of [28] also point out, the physical relevance of this ensemble remains unclear. Dynamical typicality has also been established for the mean-value ensemble; see [39] for an overview.

The remainder of this paper is organized as follows: In Sect. 2, we elucidate the motivation and background. In Sect. 3, we formulate and discuss our results. In Sect. 4, we provide the proofs. In Sect. 5, we conclude.

2 Motivation and Background

2.1 Motivation

Canonical typicality is often (rightly) used as a justification and derivation of the canonical density matrix \(\rho _\textrm{can}\) from something simpler, viz., from the uniform distribution over the unit sphere in an appropriate subspace \({\mathcal {H}}_\textrm{mc}\). So it may appear surprising that here we consider other distributions instead of the uniform one. That is why we give some elucidation in this section.

The uniform distribution for \(\psi \) can appear in either of two roles: as a measure of probability or a measure of typicality. What is the difference? The concept of probability, in the narrower sense used here, refers to a physical situation that occurs many times or can be made to occur many times, so that one can meaningfully speak of the empirical distribution of part of the physical state, such as \(\psi \), over the ensemble of trials. In contrast, the concept of typicality, in the sense used here, refers to a hypothetical ensemble and applies also in situations that do not occur repeatedly, such as the universe as a whole, or occur at most a few times; it defines what a typical solution of an equation or theory looks like, or the meaning of “most.” Typicality is used in defining what counts as thermal equilibrium (e.g., [10] and references therein), but also in certain laws of nature such as the past hypothesis (a proposed law about the initial micro-state of the universe serving as the basis of the arrow of time; see [21, Sec. 5.7] for a formulation in terms of typicality). Moreover, it plays a key role for the explanation of certain phenomena by showing that they occur in “most” cases.

The mathematical statements apply regardless of whether we think of the measure as probability or typicality. If we use \(u_\textrm{mc}\) as probability, then the question naturally arises whether the actual distribution of \(\psi \) is uniform, and generalizations to other measures are called for. The GAP measures are then particularly relevant, not just as a natural choice of measures, but also because they arise as the thermal equilibrium distribution of wave functions.

But also for \(u_\textrm{mc}\) as a measure of typicality, which is perhaps the more important or more widely used case, the generalization is relevant. The way we practically think of canonical typicality is that if \(\psi \) is just “any old” wave function of S, then \(\rho _a^\psi \) will be approximately canonical. But the theorem of original canonical typicality (using \(u_\textrm{mc}\)) would require that the coefficients of \(\psi \) relative to energy levels of S outside of the micro-canonical energy interval \([E-\Delta E, E]\) are exactly zero, which of course goes against the idea of \(\psi \) being “any old” \(\psi \). Of course, we would expect that the canonicality of \(\rho _a^\psi \) does not depend much on whether other coefficients are exactly zero or not. And the theorems in this paper show that this is correct! They show that if the \(\rho \) we start from is not \(\rho _\textrm{mc}\), then the crucial part of the reasoning (the typical-\(\psi \) part) still goes through, just with corrections reflected in the deviation of \({{\,\textrm{tr}\,}}_b \rho \) from \({{\,\textrm{tr}\,}}_b \rho _\textrm{mc}\) (which, by the way, will be minor for \(\rho =\rho _\textrm{can}\) with appropriate inverse temperature \(\beta \)). More generally, the theorems in this paper prove the robustness of canonical typicality toward changes in the underlying measure.

The results of this paper also show that when computing the typical reduced state \(\rho _a^\psi \) for “any old” \(\psi \), we can start from various choices of \(\rho \) of the whole, as long as they yield approximately the same \({{\,\textrm{tr}\,}}_b \rho \). The results thus provide researchers with a new angle of looking at canonical typicality: it is OK to imagine “any old” \(\psi \), and not crucial to start from \(u_\textrm{mc}\).

More generally, our results are a kind of equivalence-of-ensembles statement in the quantum case, and thus add to the picture consisting of various senses in which different thermal equilibrium ensembles are practically equivalent, in this case with “ensemble” meaning ensemble of wave functions (i.e., measures over the unit sphere). Again, it plays a role that the GAP measures arise as the thermal equilibrium distribution of wave functions, and thus as an analog of the canonical ensemble in classical statistical mechanics. This means also that if \(\psi \) is itself a conditional wave function, a case in which we know [17, 19] that (for high dimension and most orthonormal bases) \(\psi \) is approximately GAP distributed, then canonical typicality applies. A special application concerns the thermodynamic limit, for which it is desirable to think of the conditional wave function \(\psi _A\) of a region A in 3-space as obtained from \(\psi _{A'}\) for a larger \(A'\supset A\), which in turn is obtained from \(\psi _{A''}\) for an even larger \(A'' \supset A'\), and so on. Then for each step, \(\psi _{A'}\) (etc.) is GAP-distributed.

By the way, the results here also have the converse implication of supporting the naturalness of the GAP measures. One might even consider a version of the past hypothesis that uses, as the measure of typicality, a GAP measure instead of the uniform distribution over the unit sphere in some subspace of the Hilbert space of the universe.

2.2 Mathematical Setup and Some Background

One often considers the uniform distribution over the unit sphere in a subspace \({\mathcal {H}}'\) of a system’s Hilbert space \({\mathcal {H}}\). While this distribution is associated with a density matrix given by the normalized projection to \({\mathcal {H}}'\), the measure \({\textrm{GAP}}(\rho )\) forms an analog of it for an arbitrary density matrix. We now give its definition and that of some other mathematical concepts we use.

Throughout this paper, all Hilbert spaces \({\mathcal {H}}\) are assumed to be separable, i.e., to have either a finite or a countably infinite orthonormal basis (ONB). The unit sphere \({\mathbb {S}}({\mathcal {H}})\) is always equipped with the Borel \(\sigma \)-algebra.

Density matrix. To any probability measure \(\mu \) on \({\mathbb {S}}({\mathcal {H}})\) we can associate a density matrix \(\rho _\mu \) by
\[\rho _\mu := \int _{{\mathbb {S}}({\mathcal {H}})} \mu (d\psi )\, |\psi \rangle \langle \psi | \tag{8}\]

(which always exists [49, Lemma 1]). Note that if \(\mu \) has mean zero then \(\rho _\mu \) is the covariance matrix of \(\mu \). It will turn out for \(\mu = {\textrm{GAP}}(\rho )\) that \(\rho _{\mu } = \rho \).

GAP measure. The measure \({\textrm{GAP}}(\rho )\) was first introduced for finite-dimensional \({\mathcal {H}}\) by Jozsa, Robb, and Wootters [23], who named it Scrooge measure. Among several equivalent definitions [17], we use the following one based on Gaussian measures. Let \({\mathcal {H}}\) be separable and \(\rho \) a density matrix on \({\mathcal {H}}\) with eigenvalues \(p_n\) and eigen-ONB \((|n\rangle )_{n=1\ldots \dim {\mathcal {H}}}\), i.e.,
\[\rho = \sum _{n} p_n |n\rangle \langle n| . \tag{9}\]

A complex-valued random variable Z will be said to be Gaussian with mean \(z\in {\mathbb {C}}\) and variance \(\sigma ^2>0\) if and only if \({\textrm{Re}}\,Z\) and \(\textrm{Im}\,Z\) are independent real Gaussian random variables with means \({\textrm{Re}}\,z\) and \(\textrm{Im}\,z\), respectively, and each with variance \(\sigma ^2/2\). Let \((Z_n)_{n=1\ldots \dim {\mathcal {H}}}\) be a sequence of independent \({\mathbb {C}}\)-valued Gaussian random variables with mean 0 and variances
\[{\mathbb {E}}|Z_n|^2 = p_n . \tag{10}\]

Then, we define \({\textrm{G}}(\rho )\) to be the distribution of the random vector
\[\Psi ^{\textrm{G}} := \sum _{n} Z_n |n\rangle , \tag{11}\]

i.e., the Gaussian measure on \({\mathcal {H}}\) with mean 0 and covariance operator \(\rho \). (It is known [32] in general that for every \(\phi \in {\mathcal {H}}\) and every positive trace-class operator \(\rho \) there exists a unique Gaussian measure on \({\mathcal {H}}\) with mean \(\phi \) and covariance operator \(\rho \).) Note that
\[{\mathbb {E}}\Vert \Psi ^{\textrm{G}}\Vert ^2 = \sum _n {\mathbb {E}}|Z_n|^2 = \sum _n p_n = {{\,\textrm{tr}\,}}\rho = 1, \tag{12}\]

which also shows that \(\Vert \Psi ^{\textrm{G}}\Vert <\infty \) almost surely, but in general \(\Vert \Psi ^{{\textrm{G}}}\Vert \ne 1\), i.e., \({\textrm{G}}(\rho )\) is not a distribution on the sphere \({\mathbb {S}}({\mathcal {H}})\). Projecting the measure \({\textrm{G}}(\rho )\) to the sphere \({\mathbb {S}}({\mathcal {H}})\) would not result in a measure with density matrix \(\rho \); therefore, we first adjust the density of \({\textrm{G}}(\rho )\) and define the adjusted Gaussian measure \({\text {GA}}(\rho )\) on \({\mathcal {H}}\) as the measure that has density \(\Vert \psi \Vert ^2\) relative to \({\textrm{G}}(\rho )\), i.e.,
\[{\text {GA}}(\rho )(d\psi ) := \Vert \psi \Vert ^2\, {\textrm{G}}(\rho )(d\psi ), \tag{13}\]

which is a probability measure by virtue of (12). It will turn out below that \(\Vert \psi \Vert ^2\) is the right factor to ensure that \(\rho _{{\textrm{GAP}}(\rho )}=\rho \).
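As a quick numerical sanity check of the Gaussian construction, the following Python sketch samples \(\Psi ^{\textrm{G}}\) in the eigenbasis of a hypothetical \(\rho \) with eigenvalues \(p=(0.5, 0.3, 0.2)\) and estimates \({\mathbb {E}}\Vert \Psi ^{\textrm{G}}\Vert ^2\), which should be close to \({{\,\textrm{tr}\,}}\rho = 1\) (so that the adjusted measure \({\text {GA}}(\rho )\) has total mass 1), while a single draw is generally not a unit vector. The function names are illustrative only.

```python
import math
import random

def sample_complex_gaussian(variance, rng):
    """Complex Gaussian Z with mean 0 and E|Z|^2 = variance:
    Re Z and Im Z are independent N(0, variance / 2)."""
    s = math.sqrt(variance / 2.0)
    return complex(rng.gauss(0.0, s), rng.gauss(0.0, s))

def sample_G(p, rng):
    """One draw of Psi^G = sum_n Z_n |n>, written in the eigenbasis of rho = diag(p)."""
    return [sample_complex_gaussian(pn, rng) for pn in p]

def mean_squared_norm(p, num_samples=20000, seed=0):
    """Monte Carlo estimate of E||Psi^G||^2, which should equal tr(rho)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_samples):
        total += sum(abs(z) ** 2 for z in sample_G(p, rng))
    return total / num_samples

p = [0.5, 0.3, 0.2]  # hypothetical eigenvalues of rho, summing to 1
estimate = mean_squared_norm(p)
print(f"E||Psi^G||^2 ~ {estimate:.3f} (exact: tr rho = 1)")
one_draw = sample_G(p, random.Random(42))
print(f"norm of one draw: {math.sqrt(sum(abs(z) ** 2 for z in one_draw)):.3f}")
```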

Let \(\Psi ^{{\text {GA}}}\) be a \({\text {GA}}(\rho )\)-distributed random vector. We define \({\textrm{GAP}}(\rho )\) to be the distribution of
\[\Psi ^{{\textrm{GAP}}} := \frac{\Psi ^{{\text {GA}}}}{\Vert \Psi ^{{\text {GA}}}\Vert } . \tag{14}\]

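Note that the denominator is almost surely nonzero: the set \(\{0\}\) has \({\textrm{G}}(\rho )\)-measure 0 (indeed, every 1-element subset of \({\mathcal {H}}\) does, because every \(Z_n\) with \(p_n>0\) has a continuous distribution), and \({\text {GA}}(\rho )\) is absolutely continuous with respect to \({\textrm{G}}(\rho )\). With this, we find that indeed
\[\rho _{{\textrm{GAP}}(\rho )} = \rho . \tag{15}\]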

See [49] for a complete proof of existence and uniqueness of \({\textrm{GAP}}(\rho )\) for every density matrix \(\rho \).
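In finite dimension, \({\textrm{GAP}}(\rho )\) can also be sampled exactly. The sketch below uses a mixture representation that is our own illustration, not a construction taken from this paper: since \(\Vert \psi \Vert ^2=\sum _n |z_n|^2\), the measure \({\text {GA}}(\rho )\) equals \(\sum _n p_n G_n\), where \(G_n\) is \({\textrm{G}}(\rho )\) with the n-th coordinate size-biased, i.e., \(|Z_n|^2\) Gamma-distributed with shape 2 and scale \(p_n\) and with uniform phase. Normalizing a \({\text {GA}}(\rho )\) draw gives a \({\textrm{GAP}}(\rho )\) draw, and averaging \(|\psi \rangle \langle \psi |\) should reproduce \(\rho \). Here \(\rho =\textrm{diag}(p)\) is a hypothetical example written in its eigenbasis.

```python
import cmath
import math
import random

def sample_gap(p, rng):
    """Exact draw from GAP(rho), rho = diag(p) in its eigenbasis, via the mixture
    GA(rho) = sum_n p_n G_n (G_n: n-th coordinate size-biased), then projection."""
    n_star = rng.choices(range(len(p)), weights=p)[0]
    z = []
    for n, pn in enumerate(p):
        if n == n_star:
            # size-biased coordinate: |Z_n|^2 ~ Gamma(shape 2, scale p_n), uniform phase
            r = math.sqrt(rng.gammavariate(2.0, pn))
            z.append(cmath.rect(r, rng.uniform(0.0, 2.0 * math.pi)))
        else:
            # ordinary coordinate: complex Gaussian with E|Z_n|^2 = p_n
            s = math.sqrt(pn / 2.0)
            z.append(complex(rng.gauss(0.0, s), rng.gauss(0.0, s)))
    norm = math.sqrt(sum(abs(c) ** 2 for c in z))
    return [c / norm for c in z]  # project the GA sample to the unit sphere

def estimate_density_matrix(p, num_samples=20000, seed=1):
    """Monte Carlo estimate of rho_GAP = E |psi><psi| under GAP(diag(p))."""
    rng = random.Random(seed)
    d = len(p)
    rho_hat = [[0j] * d for _ in range(d)]
    for _ in range(num_samples):
        psi = sample_gap(p, rng)
        for i in range(d):
            for j in range(d):
                rho_hat[i][j] += psi[i] * psi[j].conjugate() / num_samples
    return rho_hat

p = [0.5, 0.3, 0.2]  # hypothetical eigenvalues
rho_hat = estimate_density_matrix(p)
print([round(rho_hat[i][i].real, 3) for i in range(len(p))])  # close to p
```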

\({\textrm{GAP}}(\rho )\) can also be characterized as the minimizer of the “accessible information” functional under the constraint that its density matrix is \(\rho \) [23]. If all eigenvalues of \(\rho \) are positive and \(D:=\dim {\mathcal {H}}<\infty \), then \({\textrm{GAP}}(\rho )\) possesses a density relative to the uniform distribution u on \({\mathbb {S}}({\mathcal {H}})\) [17, 19],
\[{\textrm{GAP}}(\rho )(d\psi ) = \frac{D}{\det \rho }\, \langle \psi |\rho ^{-1}|\psi \rangle ^{-D-1}\, u(d\psi ) . \tag{16}\]

It was argued in [19] and mathematically justified in [17] that GAP measures describe the thermal equilibrium distribution of the (conditional) wave function of the system if \(\rho \) is a canonical density matrix.
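For positive-definite \(\rho \) in finite dimension D, the density of \({\textrm{GAP}}(\rho )\) relative to u is \(D\,(\det \rho )^{-1}\langle \psi |\rho ^{-1}|\psi \rangle ^{-D-1}\); this is the form obtained by integrating out the radial part of \({\text {GA}}(\rho )\), and it is restated here so that the check is self-contained. The Python sketch below verifies it by importance sampling: drawing \(\psi \) from u and weighting by this density should give total mass close to 1 and diagonal entries of \(\rho _{{\textrm{GAP}}(\rho )}\) close to the eigenvalues \(p_n\). It assumes a diagonal \(\rho \) with strictly positive, hypothetical eigenvalues; for \(\rho =I/D\) the weight is identically 1.

```python
import math
import random

def gap_density_wrt_uniform(psi, p):
    """Density of GAP(rho) relative to u on S(C^D), for rho = diag(p) with all
    p_n > 0:  D / det(rho) * <psi|rho^{-1}|psi>^(-D-1)."""
    D = len(p)
    det_rho = math.prod(p)
    q = sum(abs(c) ** 2 / pn for c, pn in zip(psi, p))  # <psi|rho^{-1}|psi>
    return D / det_rho * q ** (-D - 1)

def sample_uniform_sphere(D, rng):
    """Normalizing a standard complex Gaussian vector gives a u-distributed point."""
    z = [complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(D)]
    norm = math.sqrt(sum(abs(c) ** 2 for c in z))
    return [c / norm for c in z]

def importance_check(p, num_samples=20000, seed=2):
    """Return (total mass, estimated diagonal of rho_GAP) from uniform samples
    reweighted by the GAP density."""
    rng = random.Random(seed)
    mass = 0.0
    diag = [0.0] * len(p)
    for _ in range(num_samples):
        psi = sample_uniform_sphere(len(p), rng)
        w = gap_density_wrt_uniform(psi, p)
        mass += w / num_samples
        for i, c in enumerate(psi):
            diag[i] += w * abs(c) ** 2 / num_samples
    return mass, diag

p = [0.4, 0.35, 0.25]  # hypothetical eigenvalues, all positive
mass, diag = importance_check(p)
print(f"total mass ~ {mass:.3f}, diagonal ~ {[round(x, 3) for x in diag]}")
```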

It was also shown in [19] that \({\textrm{GAP}}\) is equivariant under unitary transformations, i.e., for all density matrices \(\rho \), all unitary operators U on \({\mathcal {H}}\), and all measurable sets \(M\subset {\mathbb {S}}({\mathcal {H}})\) one has
\[{\textrm{GAP}}(U\rho U^*)(UM) = {\textrm{GAP}}(\rho )(M) . \tag{17}\]

In particular, \({\textrm{GAP}}\) is equivariant under unitary time evolution, and, as a consequence, \({\textrm{GAP}}(\rho _t)\) is the relevant distribution on \({\mathbb {S}}({\mathcal {H}})\) whenever the system starts in thermal equilibrium with respect to some Hamiltonian \(H_0\) and evolves according to any Hamiltonian \(H_t\) at later times. More generally, the results of [17] (and their extension in Corollary 4) show that if a system has density matrix \(\rho \) arising from entanglement, then its (conditional) wave function (relative to a typical basis, see below) is asymptotically GAP-distributed. Thus, GAP is the correct distribution in many practically relevant cases. On top of that, when we have no further restriction than that the density matrix is \(\rho \), then the natural concept of a “typical \(\psi \)” should refer to the most spread-out distribution compatible with \(\rho \), which is \({\textrm{GAP}}(\rho )\).

Finally, let us remark that \({\textrm{GAP}}(\rho )\) is also invariant under global phase changes, i.e., \({\textrm{GAP}}(\rho )(M) ={\textrm{GAP}}(\rho )(e^{i\varphi }M)\) for all measurable \(M\subset {\mathbb {S}}({\mathcal {H}})\) and \(\varphi \in {\mathbb {R}}\). Hence, \({\textrm{GAP}}(\rho )\) naturally also defines a probability distribution on the projective space of complex rays in \({\mathcal {H}}\) and all results presented in the following can be equivalently formulated for rays instead of vectors.

Remark 1

In terms of \(\rho _\mu \), we can easily formulate and prove a weaker version of our main result (5); this version is related to (5) in more or less the same way as the statement that in a certain population, the average height is 170 cm, is related to the stronger statement that in that population, most people are 170 cm tall. The weaker version asserts that the average of \(\rho ^\psi _a\) over \(\psi \) using the \({\textrm{GAP}}(\rho )\) distribution is equal to \({{\,\textrm{tr}\,}}_b \rho \), whereas the statement about (5) was that most \(\psi \) relative to \({\textrm{GAP}}(\rho )\) have \(\rho ^\psi _a\) (approximately) equal to \({{\,\textrm{tr}\,}}_b \rho \). On the other hand, the statement about the average is stronger because it asserts, not approximate equality, but exact equality. On top of that, the average statement is not limited to the GAP measure but holds for any probability measure \(\mu \). Here is the full statement: for separable \({\mathcal {H}}={\mathcal {H}}_a \otimes {\mathcal {H}}_b\), any probability measure \(\mu \) on \({\mathbb {S}}({\mathcal {H}})\), and a random vector \(\psi \) with distribution \(\mu \),
\[{\mathbb {E}}\, \rho _a^\psi = {{\,\textrm{tr}\,}}_b \rho _\mu . \tag{18}\]

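Indeed, \({{\,\textrm{tr}\,}}_b\) commutes with \(\mu \)-integration, so
\[{\mathbb {E}}\, \rho _a^\psi = {\mathbb {E}}\, {{\,\textrm{tr}\,}}_b |\psi \rangle \langle \psi | = {{\,\textrm{tr}\,}}_b\, {\mathbb {E}}\, |\psi \rangle \langle \psi | = {{\,\textrm{tr}\,}}_b \rho _\mu . \tag{19}\]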

\(\diamond \)
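Because the identity of Remark 1 holds for any probability measure, including discrete ones, it can be checked exactly. The following Python sketch, with hypothetical helper names, implements the partial trace over b for vectors and operators on \({\mathbb {C}}^{d_a}\otimes {\mathbb {C}}^{d_b}\) (flat index \(i\,d_b+k\)) and compares the \(\mu \)-average of \(\rho _a^\psi \) with \({{\,\textrm{tr}\,}}_b\rho _\mu \) for a hypothetical \(\mu \) supported on three random unit vectors.

```python
import math
import random

def reduced_density_matrix(psi, d_a, d_b):
    """rho_a^psi = tr_b |psi><psi| for psi in C^{d_a} (x) C^{d_b} (flat index i*d_b + k)."""
    return [[sum(psi[i * d_b + k] * psi[j * d_b + k].conjugate() for k in range(d_b))
             for j in range(d_a)] for i in range(d_a)]

def partial_trace_b(R, d_a, d_b):
    """tr_b R for an operator R on C^{d_a} (x) C^{d_b}, given as a nested list."""
    return [[sum(R[i * d_b + k][j * d_b + k] for k in range(d_b))
             for j in range(d_a)] for i in range(d_a)]

def random_unit_vector(dim, rng):
    z = [complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(dim)]
    norm = math.sqrt(sum(abs(c) ** 2 for c in z))
    return [c / norm for c in z]

# Hypothetical discrete measure mu: three unit vectors with weights 0.5, 0.3, 0.2.
d_a, d_b = 2, 3
rng = random.Random(3)
vectors = [random_unit_vector(d_a * d_b, rng) for _ in range(3)]
weights = [0.5, 0.3, 0.2]

# mu-average of the reduced density matrices rho_a^psi
reduced = [reduced_density_matrix(v, d_a, d_b) for v in vectors]
avg_reduced = [[sum(w * r[i][j] for w, r in zip(weights, reduced)) for j in range(d_a)]
               for i in range(d_a)]

# rho_mu = sum_w w |v><v| on the full space, followed by the partial trace over b
dim = d_a * d_b
rho_mu = [[sum(w * v[r] * v[c].conjugate() for w, v in zip(weights, vectors))
           for c in range(dim)] for r in range(dim)]
trb_rho_mu = partial_trace_b(rho_mu, d_a, d_b)
print(max(abs(avg_reduced[i][j] - trb_rho_mu[i][j])
          for i in range(d_a) for j in range(d_a)))
```

The printed difference is at the level of floating-point rounding, reflecting the exact equality of the average statement.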

Norms. The distance between two density matrices will be measured in the trace norm
\[\Vert M\Vert _{{{\,\textrm{tr}\,}}} := {{\,\textrm{tr}\,}}\sqrt{M^*M}, \tag{20}\]

where \(M^*\) denotes the adjoint operator of M. If M can be diagonalized through an orthonormal basis (ONB), then \(\Vert M\Vert _{{{\,\textrm{tr}\,}}}\) is the sum of the absolute values of its eigenvalues. We will also sometimes use the operator norm
\[\Vert M\Vert := \sup _{\Vert \psi \Vert = 1} \Vert M\psi \Vert , \tag{21}\]

which, if M can be diagonalized through an ONB, is the largest absolute value of its eigenvalues.

Purity. For a density matrix \(\rho \), its purity is defined as \({{\,\textrm{tr}\,}}\rho ^2\). In terms of the spectral decomposition \(\rho =\sum _n p_n |n\rangle \langle n|\), the purity is \({{\,\textrm{tr}\,}}\rho ^2=\sum _n p_n^2\), which can be thought of as the average size of \(p_n\). In particular, the purity is positive and \(\le 1\); it is \(=1\) if and only if \(\rho \) is pure, i.e., a 1d projection; for a normalized projection \(\rho _R=P_R/d_R\), the purity is \(1/d_R\); conversely, 1/purity can be thought of as the effective number of dimensions over which \(\rho \) is spread out. It also easily follows that
\[{{\,\textrm{tr}\,}}\rho ^2 \le \Vert \rho \Vert \le \sqrt{{{\,\textrm{tr}\,}}\rho ^2}, \tag{22}\]

because \(p_n^2 \le p_n \Vert \rho \Vert \), and if \(p_{n_0}\) is the largest eigenvalue, then \(p_{n_0}^2 \le \sum _n p_n^2\) because all other terms are \(\ge 0\). In words, the average \(p_n\) is no greater than the maximal \(p_n\), which is bounded by the square root of the average \(p_n\) (and the square root of the maximal \(p_n\)).
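For a density matrix given by its eigenvalues \(p_n\), the purity and the operator norm are simple functions of the spectrum, and the trace norm of a Hermitian 2×2 matrix has a closed form via its two eigenvalues. This small Python sketch (function names are illustrative) computes these quantities and prints the inequality chain \({{\,\textrm{tr}\,}}\rho ^2\le \Vert \rho \Vert \le \sqrt{{{\,\textrm{tr}\,}}\rho ^2}\) for a few hypothetical spectra.

```python
import math

def purity(p):
    """tr rho^2 = sum_n p_n^2 for rho with eigenvalues p."""
    return sum(x * x for x in p)

def operator_norm(p):
    """||rho|| = largest eigenvalue (a density matrix has all p_n >= 0)."""
    return max(p)

def trace_norm_hermitian_2x2(a, b, d):
    """||M||_tr for M = [[a, b], [conj(b), d]] with a, d real: the sum of the
    absolute values of the eigenvalues (a + d)/2 +- sqrt(((a - d)/2)^2 + |b|^2)."""
    mid = (a + d) / 2.0
    rad = math.sqrt(((a - d) / 2.0) ** 2 + abs(b) ** 2)
    return abs(mid + rad) + abs(mid - rad)

examples = [[0.4, 0.3, 0.2, 0.1], [0.25] * 4, [0.9, 0.05, 0.05], [1.0]]
for p in examples:
    pur, op = purity(p), operator_norm(p)
    print(f"tr rho^2 = {pur:.4f} <= ||rho|| = {op:.4f} <= sqrt(tr rho^2) = {math.sqrt(pur):.4f}")

# Trace distance between |0><0| and |+><+|: the difference is [[0.5, -0.5], [-0.5, -0.5]].
print(trace_norm_hermitian_2x2(0.5, -0.5, -0.5))  # sqrt(2) ~ 1.4142
```

The normalized projection \([0.25]\times 4\) attains \({{\,\textrm{tr}\,}}\rho ^2=\Vert \rho \Vert =1/4\), matching the remark that its purity is \(1/d_R\).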

Conditional wave function. For \({\mathcal {H}}={\mathcal {H}}_a\otimes {\mathcal {H}}_b\), an ONB \(B=(|m\rangle _b)_{m=1\ldots \dim {\mathcal {H}}_b}\) of \({\mathcal {H}}_b\), and \(\psi \in {\mathbb {S}}({\mathcal {H}})\), the conditional wave function \(\psi _a\) [5, 6, 19] of system a is a random vector in \({\mathcal {H}}_a\) that can be constructed by choosing a random one of the basis vectors \(|m\rangle _b\), let us call it \(|M\rangle _b\), with the Born distribution
\[{\mathbb {P}}(M = m) = \Vert {}_b\langle m|\psi \rangle \Vert _a^2 , \tag{23}\]

taking the partial inner product of \(|M\rangle _b\) and \(\psi \), and normalizing:
\[\psi _a := \frac{{}_b\langle M|\psi \rangle }{\Vert {}_b\langle M|\psi \rangle \Vert _a} . \tag{24}\]

(Note that the event that \(\Vert {}_b\langle M|\psi \rangle \Vert _a=0\) has probability 0 by (23). In the context of Bohmian mechanics, the expression “conditional wave function” refers to the position basis and the Bohmian configuration of b [5]; but for our purposes, we can leave it general.)

We can also think of \(\psi _a\) as arising from \(\psi \) through a quantum measurement with eigenbasis B on system b, which leads to the collapsed quantum state \(\psi _a \otimes |M\rangle _b\). Correspondingly, we call the distribution of \(\psi _a\) in \({\mathbb {S}}({\mathcal {H}}_a)\) the Born distribution of \(\psi _a\) and denote it by \({\textrm{Born}}_a^{\psi ,B}\). However, when considering \(\psi _a\), we will not assume that any observer actually, physically carries out such a quantum measurement; rather, we use \(\psi _a\) as a theoretical concept of a wave function associated with the subsystem a. It is related to the reduced density matrix \(\rho ^\psi _a\) in a way similar to how a conditional probability distribution is related to a marginal distribution: averaging over the random outcome M,
\[\rho _a^\psi = {\mathbb {E}}\, |\psi _a\rangle \langle \psi _a| . \tag{25}\]

\(\psi _a\) is also related to the GAP measure, in fact in two ways. First, when we average \({\textrm{Born}}^{\psi ,B}_a\) over all ONBs B (using the uniform distribution corresponding to the Haar measure), then we obtain \({\textrm{GAP}}(\rho _a^\psi )\) [17, Lemma 1]. Put differently, if we think of both M and B as random and \(\psi _a\) thus as doubly random, then its (marginal) distribution is \({\textrm{GAP}}(\rho _a^\psi )\); put more briefly, \({\textrm{GAP}}(\rho _a^\psi )\) is the distribution of the collapsed pure state in a after a purely random quantum measurement in b on \(\psi \). Second, if \(d_b\) is large, then even conditionally on a single given B, the distribution of \(\psi _a\) is close to a GAP measure for most B and most \(\psi \) according to a GAP measure on \({\mathcal {H}}_a\otimes {\mathcal {H}}_b\); this is the content of Corollary 4 below.
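The construction of the conditional wave function can be sketched in a few lines of Python. The sketch assumes finite dimensions, takes B to be the standard basis of \({\mathbb {C}}^{d_b}\), stores \(\psi \) as a flat list with index \(i\,d_b+m\), and checks that averaging \(|\psi _a\rangle \langle \psi _a|\) over the Born-distributed outcome M reproduces \(\rho _a^\psi \) exactly, as stated above. All names are hypothetical.

```python
import math
import random

def born_distribution_b(psi, d_a, d_b):
    """P(M = m) = ||_b<m|psi>||^2 for the standard basis |m>_b of C^{d_b}."""
    return [sum(abs(psi[i * d_b + m]) ** 2 for i in range(d_a)) for m in range(d_b)]

def conditional_wave_function(psi, d_a, d_b, m):
    """psi_a = _b<m|psi> / ||_b<m|psi>||_a for the outcome M = m."""
    v = [psi[i * d_b + m] for i in range(d_a)]
    norm = math.sqrt(sum(abs(c) ** 2 for c in v))
    return [c / norm for c in v]

def sample_conditional_wave_function(psi, d_a, d_b, rng):
    """Draw M from the Born distribution, then return the corresponding psi_a."""
    probs = born_distribution_b(psi, d_a, d_b)
    m = rng.choices(range(d_b), weights=probs)[0]
    return conditional_wave_function(psi, d_a, d_b, m)

def reduced_density_matrix(psi, d_a, d_b):
    """rho_a^psi = tr_b |psi><psi|."""
    return [[sum(psi[i * d_b + k] * psi[j * d_b + k].conjugate() for k in range(d_b))
             for j in range(d_a)] for i in range(d_a)]

# A hypothetical random unit vector psi in C^2 (x) C^4.
d_a, d_b = 2, 4
rng = random.Random(4)
z = [complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(d_a * d_b)]
norm = math.sqrt(sum(abs(c) ** 2 for c in z))
psi = [c / norm for c in z]

# Average |psi_a><psi_a| over the outcome M, weighting each outcome by its probability.
probs = born_distribution_b(psi, d_a, d_b)
lhs = [[0j] * d_a for _ in range(d_a)]
for m, pm in enumerate(probs):
    if pm == 0.0:
        continue  # an outcome of probability zero contributes nothing
    phi = conditional_wave_function(psi, d_a, d_b, m)
    for i in range(d_a):
        for j in range(d_a):
            lhs[i][j] += pm * phi[i] * phi[j].conjugate()
rho_a = reduced_density_matrix(psi, d_a, d_b)
print(max(abs(lhs[i][j] - rho_a[i][j]) for i in range(d_a) for j in range(d_a)))
```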

3 Main Results

In this section, we present and discuss our main results about generalized canonical typicality. In the following, we use the notation \(\mu (f)\) for the average of the function f under the measure \(\mu \),
\[\mu (f) := \int _{{\mathbb {S}}({\mathcal {H}})} f(\psi )\, \mu (d\psi ) . \tag{26}\]

Note that, by (18),
\[{\textrm{GAP}}(\rho )\bigl (\rho _a^\psi \bigr ) = {{\,\textrm{tr}\,}}_b \rho . \tag{27}\]

The statement of our generalized canonical typicality differs in that it concerns approximate equality and holds for the individual \(\rho _a^\psi \), not only for its average.

3.1 Statements

We first formulate our main theorem on canonical typicality for GAP measures and the underlying variant of Lévy’s lemma for GAP measures. We then give a list of further consequences of this generalized version of Lévy’s lemma, including results on dynamical typicality and the fact that the typical Born distribution of conditional wave functions is itself a GAP measure. At the end of this section we also state a slightly weaker version of our main theorem that is not based on Lévy’s lemma but instead allows for a rather elementary proof based on the Chebyshev inequality. Finally, the known bounds for uniformly distributed \(\psi \) will be stated in Remark 12 in Sect. 3.2 for comparison.

Theorem 1

(Generalized canonical typicality, exponential bounds). Let \({\mathcal {H}}_a\) and \({\mathcal {H}}_b\) be Hilbert spaces with \({\mathcal {H}}_a\) having finite dimension \(d_a\) and \({\mathcal {H}}_b\) being separable, and let \(\rho \) be a density matrix on \({\mathcal {H}}= {\mathcal {H}}_a \otimes {\mathcal {H}}_b\). Then for every \(\delta >0\),

where \(c=48\pi \).

Remark 2

The relation (28) can equivalently be formulated as a bound on the confidence level, given the allowed deviation: For every \(\varepsilon \ge 0\),

where \({\tilde{C}}=\frac{1}{2304\pi ^2}\). This form makes it visible why we call Theorem 1 an “exponential bound”: because the bound on the probability of too large a deviation is exponentially small in \(1/\Vert \rho \Vert \). In contrast, the bound (37) is polynomially small in \({{\,\textrm{tr}\,}}\rho ^2\).\(\diamond \)

A key tool for proving Theorem 1 is Theorem 2 below, a variant of Lévy’s lemma for GAP measures. Recall the notation (26).

Theorem 2

(Lévy’s lemma for GAP measures). Let \({\mathcal {H}}\) be a separable Hilbert space, let \(f:{\mathbb {S}}({\mathcal {H}})\rightarrow {\mathbb {R}}\) be a Lipschitz continuous function with Lipschitz constant \(\eta \), let \(\rho \) be a density matrix on \({\mathcal {H}}\), and let \(\varepsilon \ge 0\). Then,

where \(C=\frac{1}{288\pi ^2}\).

Remark 3

The statement remains true for complex-valued f if we replace the constant factor 6 in (30) by 12 and C by C/2, as follows from considering the real and imaginary parts of f separately.\(\diamond \)

As an immediate consequence of Theorem 2 for \(f(\psi ) = \langle \psi |B|\psi \rangle \), which has Lipschitz constant \(\eta \le 2\Vert B\Vert \) [30, Lemma 5], we obtain:

Corollary 1

Let \(\rho \) be a density matrix and B a bounded operator on the separable Hilbert space \({\mathcal {H}}\). For every \(\varepsilon \ge 0\),

with \({\tilde{C}} = \frac{1}{2304\pi ^2}\).
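The constants of Theorem 2, Remark 3 and Corollary 1 fit together as follows, under our assumption (the display (30) is not reproduced in this section) that the exponent in (30) has the Lévy form \(-C\varepsilon ^2/(\eta ^2\Vert \rho \Vert )\). Remark 3 halves C for the complex-valued \(f(\psi )=\langle \psi |B|\psi \rangle \), and \(\eta \le 2\Vert B\Vert \) divides it by a further factor of 4, giving \(C/8=1/(2304\pi ^2)={\tilde{C}}\). The sketch below checks this bookkeeping with exact rational arithmetic (the common factor \(\pi ^2\) cancels) and also notes that the constant \(c=48\pi \) of Theorem 1 satisfies \(c^2{\tilde{C}}=1\), as one would expect if (28) and (29) are inversions of each other.

```python
from fractions import Fraction

# All constants are expressed in units of 1/pi^2, so pi^2 cancels and
# exact rational arithmetic suffices.
C = Fraction(1, 288)                 # Theorem 2: C = 1/(288 pi^2)
C_TILDE_STATED = Fraction(1, 2304)   # Corollary 1 and Remark 2: C~ = 1/(2304 pi^2)

def corollary_constant(C, lipschitz_factor=2, complex_valued=True):
    """Exponent constant for f(psi) = <psi|B|psi>.

    Assumes (our reading, not a quoted formula) that the exponent in (30)
    is -C eps^2 / (eta^2 ||rho||). Remark 3 halves C for complex-valued f,
    and eta <= lipschitz_factor * ||B|| turns 1/eta^2 into
    1/(lipschitz_factor^2 ||B||^2).
    """
    if complex_valued:
        C = C / 2
    return C / lipschitz_factor**2

C_tilde = corollary_constant(C)
print(C_tilde, C_tilde == C_TILDE_STATED)   # 1/2304 True

# Theorem 1's c = 48 pi: c^2 * C~ = (48^2 pi^2) / (2304 pi^2) = 1.
print(48**2 * C_tilde)                       # 1
```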

Remark 4

Corollary 1 provides an extension to GAP measures of the known fact [33] that \(\langle \psi |B|\psi \rangle \) has nearly the same value for most \(\psi \) relative to the uniform distribution. This kind of near-constancy is different from the near-constancy property of a macroscopic observable, viz., that most of its eigenvalues (counted with multiplicity) in the micro-canonical energy shell are nearly equal. Here, in contrast, nothing (except boundedness) is assumed about the distribution of eigenvalues of B. In particular, if B is a self-adjoint observable, then a typical \(\psi \) may well define a non-trivial probability distribution over the spectrum of B, not necessarily a sharply peaked one. The near-constancy property asserted here is that the average of this probability distribution is nearly the same for most \(\psi \). In fact, it also follows that the probability distribution itself is nearly the same for most \(\psi \) (“distribution typicality”), at least on a coarse-grained level (by covering the spectrum of B with not-too-many intervals) and provided that many dimensions participate in \(\rho \). This follows by inserting the spectral projections of B in place of B in (31).\(\diamond \)
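A small Monte Carlo experiment makes the near-constancy of Corollary 1 visible. The sketch assumes the standard construction of \({\textrm{GAP}}(\rho )\) from the GAP literature (not restated in this section): draw \(\psi \) from the adjusted Gaussian measure, which has density \(\Vert \psi \Vert ^2\) relative to the Gaussian measure with covariance \(\rho \), and normalize. The function name `sample_gap`, the spectrum, the diagonal observable B (chosen diagonal in the eigenbasis of \(\rho \) only for simplicity), D = 400 and the sample count are all hypothetical illustrative choices.

```python
import cmath
import math
import random

def sample_gap(p, rng):
    """Draw psi ~ GAP(rho) for rho = diag(p), returned in the eigenbasis of rho.

    GA(rho) has density ||psi||^2 relative to the Gaussian G(rho), and
    ||psi||^2 = sum_n p_n |Z_n|^2, so GA(rho) is a mixture: choose n with
    probability p_n and size-bias |Z_n|^2 from Exp(1) to Gamma(2, 1).
    GAP(rho) is the law of psi / ||psi|| for psi ~ GA(rho).
    """
    n_star = rng.choices(range(len(p)), weights=p)[0]
    psi = []
    for n, pn in enumerate(p):
        r2 = rng.gammavariate(2.0, 1.0) if n == n_star else rng.expovariate(1.0)
        phase = cmath.exp(2j * math.pi * rng.random())   # uniform phase
        psi.append(math.sqrt(pn * r2) * phase)
    norm = math.sqrt(sum(abs(c) ** 2 for c in psi))
    return [c / norm for c in psi]

def expectation_diag(b, psi):
    """<psi|B|psi> for B = diag(b) in the eigenbasis of rho."""
    return sum(bn * abs(c) ** 2 for bn, c in zip(b, psi))

rng = random.Random(1)
D = 400
weights = [1.0 + n / D for n in range(D)]        # hypothetical, fairly flat spectrum
total = sum(weights)
p = [w / total for w in weights]
b = [math.cos(0.1 * n) for n in range(D)]        # hypothetical B with ||B|| <= 1
target = sum(pn * bn for pn, bn in zip(p, b))    # tr(rho B), the GAP(rho) average

values = [expectation_diag(b, sample_gap(p, rng)) for _ in range(500)]
mean = sum(values) / len(values)
spread = max(abs(v - target) for v in values)
print(f"||rho|| = {max(p):.4f}, tr rho^2 = {sum(x * x for x in p):.4f}")
print(f"tr(rho B) = {target:.4f}, sample mean = {mean:.4f}, max |deviation| = {spread:.4f}")
```

A priori each \(\langle \psi |B|\psi \rangle \) could lie anywhere in \([-1,1]\); with \(\Vert \rho \Vert \) of order \(10^{-3}\), all 500 samples stay within a small window around \({{\,\textrm{tr}\,}}(\rho B)\).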

In contrast to the uniform distribution on the sphere in the micro-canonical subspace, which is invariant under the unitary time evolution, \({\textrm{GAP}}(\rho _0)\) will in general evolve, in fact to \({\textrm{GAP}}(\rho _t)\) by (17). This leads to questions about what the history \(t\mapsto \psi _t\) looks like. Inserting \(U_t^*BU_t\) for B in (31) leads us to the first equation in the following variant of “dynamical typicality” for GAP measures.

Corollary 2

Let \({\mathcal {H}}\) be a separable Hilbert space, B a bounded operator and \(\rho \) a density matrix on \({\mathcal {H}}\), and \(t\mapsto U_{t}\) a measurable family of unitary operators. Then for every \(\varepsilon ,t\ge 0\),

where \(\rho _t = U_{t}\,\rho \, U_{t}^*\), \(\psi _t = U_{t}\psi \) and \({\tilde{C}} = \frac{1}{2304\pi ^2}\). Moreover, for every \(\varepsilon , T>0\),

For \(U_t\) we have in mind either a unitary group \(U_t= \exp (-iHt)\) generated by a time-independent Hamiltonian H, or a unitary evolution family \(U_{t}\) satisfying \(i\frac{d}{dt} U_{t} = H_t U_{t}\) and \(U_{0}=I\) generated by a time-dependent Hamiltonian \(H_t\). However, neither the group structure nor the cocycle structure plays any role in the proof. (In [48], a similar result for the uniform distribution over the sphere in a large subspace was formulated only for time-independent Hamiltonians, but the proof given there applies equally to time-dependent ones.)
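The step from (31) to (32) is a pure substitution, \(\langle \psi _t|B|\psi _t\rangle =\langle \psi |U_t^*BU_t|\psi \rangle \) and \({{\,\textrm{tr}\,}}(\rho _tB)={{\,\textrm{tr}\,}}(\rho \,U_t^*BU_t)\), which is why no group or cocycle structure is needed. The sketch below checks both identities numerically in dimension 5. The unitary (phases followed by a cyclic shift), B, \(\rho \) and \(\psi \) are arbitrary illustrative choices, and the matrix helpers are written in plain Python only to keep the block dependency-free.

```python
import cmath
import math
import random

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def dagger(X):
    """Conjugate transpose."""
    return [[X[j][i].conjugate() for j in range(len(X))] for i in range(len(X[0]))]

def trace(X):
    return sum(X[i][i] for i in range(len(X)))

def apply(X, v):
    return [sum(X[i][k] * v[k] for k in range(len(v))) for i in range(len(X))]

def braket(u, X, v):
    """<u|X|v>, antilinear in u."""
    return sum(a.conjugate() * b for a, b in zip(u, apply(X, v)))

rng = random.Random(0)
D = 5

def rand_c():
    return complex(rng.gauss(0, 1), rng.gauss(0, 1))

# Hypothetical U_t: diagonal phases exp(-i E_k t) followed by a cyclic shift (unitary).
t, energies = 0.7, [0.0, 0.3, 1.1, 1.9, 2.4]
phases = [cmath.exp(-1j * e * t) for e in energies]
U = [[phases[j] if i == (j + 1) % D else 0j for j in range(D)] for i in range(D)]

B = [[rand_c() for _ in range(D)] for _ in range(D)]    # arbitrary operator
M = [[rand_c() for _ in range(D)] for _ in range(D)]
MM = matmul(M, dagger(M))
tr_MM = trace(MM)
rho = [[x / tr_MM for x in row] for row in MM]          # density matrix M M* / tr(M M*)
psi = [rand_c() for _ in range(D)]
norm = math.sqrt(sum(abs(c) ** 2 for c in psi))
psi = [c / norm for c in psi]

B_heis = matmul(dagger(U), matmul(B, U))                # U_t* B U_t
rho_t = matmul(U, matmul(rho, dagger(U)))               # rho_t = U_t rho U_t*
psi_t = apply(U, psi)                                   # psi_t = U_t psi

lhs_state, rhs_state = braket(psi_t, B, psi_t), braket(psi, B_heis, psi)
lhs_rho, rhs_rho = trace(matmul(rho_t, B)), trace(matmul(rho, B_heis))
print(abs(lhs_state - rhs_state), abs(lhs_rho - rhs_rho))  # both at rounding level
```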

The last two corollaries were applications of Lévy’s lemma that did not involve reduced density matrices. We now turn to bi-partite systems again and present two further corollaries. We first ask whether, for \({\textrm{GAP}}(\rho _0)\)-typical \(\psi _0\), the reduced density matrix \(\rho _a^{\psi _t}\) remains close to \({{\,\textrm{tr}\,}}_b \rho _t\) over a whole time interval [0, T]. The following corollary answers this question affirmatively for most times in this interval.

Corollary 3

Let \({\mathcal {H}}_a\) and \({\mathcal {H}}_b\) be Hilbert spaces with \({\mathcal {H}}_a\) having finite dimension \(d_a\) and \({\mathcal {H}}_b\) being separable, \(\rho \) a density matrix on \({\mathcal {H}}={\mathcal {H}}_a\otimes {\mathcal {H}}_b\), and \(t\mapsto U_{t}\) a measurable family of unitary operators on \({\mathcal {H}}\). Then for every \(\varepsilon ,T>0\),

where \(\rho _t = U_{t}\,\rho \, U_{t}^*\), \(\psi _t = U_{t}\psi \) and \({\tilde{C}} = \frac{1}{2304\pi ^2}\).

The next corollary expresses that for \({\textrm{GAP}}(\rho )\)-typical \(\psi \), large \(d_b\), and small \({{\,\textrm{tr}\,}}\rho ^2\), the conditional wave function \(\psi _a\) (relative to a typical basis) has Born distribution close to \({\textrm{GAP}}({{\,\textrm{tr}\,}}_b\rho )\). (Note that we are considering the distribution of \(\psi _a\) conditionally on a given \(\psi \), rather than the marginal distribution of \(\psi _a\) for random \(\psi \), which would be \(\int _{{\mathbb {S}}({\mathcal {H}})} {\textrm{GAP}}(\rho )(\textrm{d}\psi ) \, {\textrm{Born}}^{\psi ,B}_a(\cdot )\).) Recall the notation (26).

Corollary 4

Let \(\varepsilon ,\delta \in (0,1)\), let \({\mathcal {H}}_a\) be a Hilbert space of dimension \(d_a\in {\mathbb {N}}\), let \(f:{\mathbb {S}}({\mathcal {H}}_a)\rightarrow {\mathbb {R}}\) be any continuous (test) function, and let \({\mathcal {H}}_b\) be a Hilbert space of finite dimension \(d_b\ge \max \{4,d_a,32\Vert f\Vert ^2_\infty /\varepsilon ^2\delta \}\). Then, there is \(p>0\) such that for every density matrix \(\rho \) on \({\mathcal {H}}={\mathcal {H}}_a \otimes {\mathcal {H}}_b\) with \(\Vert \rho \Vert \le p\),

where \({\textrm{Born}}_a^{\psi ,B}\) is the distribution of the conditional wave function, \(\textrm{ONB}({\mathcal {H}}_b)\) is the set of all orthonormal bases of \({\mathcal {H}}_b\), and \(u_{\textrm{ONB}}\) is the uniform distribution over this set.
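The dimension requirement of Corollary 4 is explicit and easy to evaluate, reading \(32\Vert f\Vert ^2_\infty /\varepsilon ^2\delta \) as \(32\Vert f\Vert ^2_\infty /(\varepsilon ^2\delta )\). The helper below (its name is ours) only computes this threshold on \(d_b\); it says nothing about the bound p on \(\Vert \rho \Vert \), whose existence is all the corollary asserts.

```python
import math

def min_db(d_a, f_sup, eps, delta):
    """Smallest integer d_b with d_b >= max{4, d_a, 32 ||f||_inf^2 / (eps^2 delta)}."""
    if not (0 < eps < 1 and 0 < delta < 1):
        raise ValueError("Corollary 4 assumes eps, delta in (0, 1)")
    return max(4, d_a, math.ceil(32 * f_sup**2 / (eps**2 * delta)))

print(min_db(d_a=2, f_sup=1.0, eps=0.5, delta=0.5))    # 32 / 0.125 = 256
print(min_db(d_a=2, f_sup=1.0, eps=0.1, delta=0.01))
```

For moderate accuracy the term \(32\Vert f\Vert ^2_\infty /(\varepsilon ^2\delta )\) dominates, so \(d_b\) grows like \(\varepsilon ^{-2}\delta ^{-1}\).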

Remark 5

We conjecture that the closeness between \({\textrm{Born}}_a^{\psi ,B}\) and \({\textrm{GAP}}({{\,\textrm{tr}\,}}_b\rho )\) is even better than stated in Corollary 4, at least when 0 is not an eigenvalue of \({{\,\textrm{tr}\,}}_b \rho \), in the sense that (35) holds not only for continuous f but even for bounded measurable f, and in fact uniformly in f with given \(\Vert f\Vert _\infty \). This conjecture is suggested by using Lemma 6 of [17] instead of Lemma 5, or rather a variant of it with more explicit bounds. \(\diamond \)

Whereas Theorem 1 is based on the rather technical concentration-of-measure result of Theorem 2, a slightly weaker statement can be obtained using only the Chebyshev inequality and a bound, given in Proposition 1 in Sect. 4.2, on the variance of random variables of the form \(\psi \mapsto \langle \psi |A|\psi \rangle \) with respect to GAP\((\rho )\). The latter bound is also of interest in its own right and was already established for self-adjoint A by Reimann [35].

Theorem 3

(Generalized canonical typicality, polynomial bounds). Let \({\mathcal {H}}_a\) and \({\mathcal {H}}_b\) be Hilbert spaces with \({\mathcal {H}}_a\) having finite dimension \(d_a\) and \({\mathcal {H}}_b\) being separable. Let \(\rho \) be a density matrix on \({\mathcal {H}}= {\mathcal {H}}_a \otimes {\mathcal {H}}_b\) with \(\Vert \rho \Vert <1/4\). Then for every \(\delta >0\),

Remark 6

Again, we can equivalently express Theorem 3 as a bound on the confidence level \(1-\delta \) for any given allowed deviation of \(\rho _a^\psi \) from \({{\,\textrm{tr}\,}}_b\rho \): For every \(\rho \) with \(\Vert \rho \Vert <1/4\) and every \(\varepsilon >0\),

\(\diamond \)

Remark 7

While our main motivation for developing Theorem 3 is the different strategy of proof, and while the exponential bound of Theorem 1 will usually be tighter than the polynomial bound of Theorem 3, this is not always the case: the bound of Theorem 3 is actually sometimes better, as the following example shows. Suppose that \(\Vert \rho \Vert =\frac{1}{\sqrt{D}} = p_1\) and that all other \(p_j\) are equal, i.e.,

for all \(j>1\). Then,

and for, e.g., \(d_a=1000\) and \(\varepsilon =0.01\) we find that

for \(4.67\cdot 10^{13}< D < 9.17\cdot 10^{31}\), i.e., in this example there is a regime in which D is already very large but still the polynomial bound is smaller than the exponential one. \(\diamond \)
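The mechanism behind this example is that \(\Vert \rho \Vert =D^{-1/2}\) is much larger than the purity, which here is about \(2/D\); a bound that is exponentially small in \(1/\Vert \rho \Vert \) is therefore competing against one that is polynomially small in a much smaller quantity. The sketch computes both quantities for the spectrum \(p_1=D^{-1/2}\), \(p_j=(1-p_1)/(D-1)\) for \(j>1\) (the bounds themselves are not recomputed, since their displays are not reproduced here).

```python
import math

def example_spectrum_stats(D):
    """Largest eigenvalue and purity for p_1 = 1/sqrt(D), p_j = (1 - p_1)/(D - 1), j > 1."""
    p1 = 1.0 / math.sqrt(D)
    pj = (1.0 - p1) / (D - 1)
    purity = p1**2 + (D - 1) * pj**2
    return p1, purity

for D in (1e14, 1e20, 1e30):
    largest, purity = example_spectrum_stats(D)
    print(f"D = {D:.0e}: ||rho|| = {largest:.2e}, tr rho^2 = {purity:.2e}, "
          f"D * tr rho^2 = {D * purity:.4f}")
```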

3.2 Discussion

Remark 8

System size. Theorem 3 shows, roughly speaking, that as soon as

\({\textrm{GAP}}(\rho )\)-most wave functions \(\psi \) have \(\rho _a^{\psi }\) close to \({{\,\textrm{tr}\,}}_b\rho \). If we think of \(1/{{\,\textrm{tr}\,}}\rho ^2\) as the effective number of dimensions participating in \(\rho \), and if this number of dimensions is comparable to the full number \(D=\dim {\mathcal {H}}=d_a d_b\) of dimensions, then (41) reduces to

Since the dimension is exponential in the number of degrees of freedom, this condition roughly means that the subsystem a comprises fewer than \(20\%\) of the degrees of freedom of the full system. (The same consideration was carried out in [13, 14] for the original statement of canonical typicality.) The stronger exponential bound yields that a can even comprise up to \(50\%\) of the degrees of freedom [13, 14].\(\diamond \)
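The 20% and 50% figures follow from a simple count if, as is our reading of these figures rather than a quoted formula, the relevant conditions take the form \(d_a^k\ll d_b\) with \(k=4\) for the polynomial bound and \(k=1\) for the exponential bound. Modelling the system as N qubits, \(d_a=2^{n_a}\) and \(d_b=2^{N-n_a}\), so \(d_a^k\ll d_b\) means \((k+1)n_a< N\) up to a safety margin. The function name and the margin of \(2^{20}\) used to encode “\(\ll \)” are hypothetical.

```python
def largest_subsystem(N, k, margin_bits=20):
    """Largest n_a with 2**(k * n_a) * 2**margin_bits <= 2**(N - n_a).

    System of N qubits, d_a = 2**n_a, d_b = 2**(N - n_a); 'd_a**k << d_b'
    is read (hypothetically) as 'd_b exceeds d_a**k by at least 2**margin_bits'.
    """
    if N < margin_bits:
        raise ValueError("N too small for the chosen margin")
    return (N - margin_bits) // (k + 1)

N = 10**6
for k in (4, 1):   # assumed exponents for the polynomial and exponential bounds
    print(k, largest_subsystem(N, k) / N)
```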

Remark 9

Canonical density matrix. A \(\rho \) of particular interest is the canonical density matrix

The relevant condition for generalized canonical typicality to apply to \(\rho =\rho _\textrm{can}\) is that it has small purity \({{\,\textrm{tr}\,}}\rho ^2\) and small largest eigenvalue \(\Vert \rho \Vert \). We argue that indeed it does.

One heuristic reason is equivalence of ensembles: since \(\rho _\textrm{mc}\) has purity \(1/d_\textrm{mc}\) and largest eigenvalue \(1/d_\textrm{mc}\), both of which are small, the corresponding values for \(\rho _\textrm{can}\) should be similarly small. Another heuristic argument is based on the idealization that the system consists of many non-interacting constituents, so that \({\mathcal {H}}={\mathcal {H}}_1^{\otimes N}\) and \(H=\sum _{j=1}^N I^{\otimes (j-1)}\otimes H_1 \otimes I^{\otimes (N-j)}\), and hence \(\rho _\textrm{can}=\rho _{1\textrm{can}}^{\otimes N}\). It is a general fact that for tensor products \(\rho _1\otimes \rho _2\) of density matrices, the purities multiply, \({{\,\textrm{tr}\,}}(\rho _1\otimes \rho _2)^2=({{\,\textrm{tr}\,}}\rho _1^2)({{\,\textrm{tr}\,}}\rho _2^2)\), and the largest eigenvalues multiply, \(\Vert \rho _1\otimes \rho _2\Vert =\Vert \rho _1\Vert \, \Vert \rho _2\Vert \). Thus, the purity of \(\rho _\textrm{can}\) is the N-th power of that of \(\rho _{1\textrm{can}}\), and likewise the largest eigenvalue. Since \(N\gg 1\) and the purity and largest eigenvalue of \(\rho _{1\textrm{can}}\) lie between 0 and 1 without being particularly close to 1, the corresponding values for \(\rho _\textrm{can}\) are close to 0, as claimed. We expect that mild interaction does not change that picture very much.\(\diamond \)
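The multiplicativity used in this heuristic can be checked directly on spectra: the eigenvalues of \(\rho _1\otimes \rho _2\) are all products of eigenvalues of \(\rho _1\) and \(\rho _2\). The sketch builds the spectrum of \(\rho _{1\textrm{can}}^{\otimes N}\) for a hypothetical three-level constituent at \(\beta =1\) and N = 8 and compares its purity and largest eigenvalue with the N-th powers of those of \(\rho _{1\textrm{can}}\).

```python
import math
from itertools import product

def canonical_spectrum(energies, beta):
    """Eigenvalues exp(-beta E) / Z of a canonical density matrix."""
    weights = [math.exp(-beta * e) for e in energies]
    Z = sum(weights)
    return [w / Z for w in weights]

def tensor_spectrum(p, q):
    """Eigenvalues of rho_1 (x) rho_2: all products p_i q_j."""
    return [a * b for a, b in product(p, q)]

def purity(spec):
    return sum(x * x for x in spec)

p1 = canonical_spectrum([0.0, 1.0, 1.5], beta=1.0)   # hypothetical single-constituent levels
N = 8
spec = [1.0]
for _ in range(N):                                   # spectrum of rho_1can^{(x) N}
    spec = tensor_spectrum(spec, p1)

print(purity(spec), purity(p1) ** N)
print(max(spec), max(p1) ** N)
```

Already for N = 8 the purity is below \(10^{-2}\), even though \(\rho _{1\textrm{can}}\) itself is far from maximally mixed.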

Remark 10

Classical vs. quantum. While classically, a typical phase point from a canonical ensemble is also a typical phase point from some micro-canonical ensemble, a typical wave function from \({\textrm{GAP}}(\rho _\beta )\) does not lie in any micro-canonical subspace \({\mathcal {H}}_{\textrm{mc}}\) (if \({\mathcal {H}}\ne {\mathcal {H}}_{\textrm{mc}}\)), and even if it does lie in some \({\mathcal {H}}_{\textrm{mc}}\), it is not typical for the uniform distribution on that subspace. This is because typical wave functions are superpositions of many energy eigenstates, and the weights of these eigenstates in \(\rho _{\textrm{mc}}\) and \(\rho _\textrm{can}\) are reflected in the weights of these eigenstates in the superposition. Therefore, already in the case that \(\rho \) is a canonical density matrix, Theorems 3 and 1 are not just simple consequences of canonical typicality but independent results.\(\diamond \)

Remark 11

Equivalence of ensembles. We can now state more precisely the sense in which our results provide a version of equivalence of ensembles. It is well known that if a and b interact weakly and b is large enough, then both \(\rho _{\textrm{mc}}\) and \(\rho _\textrm{can}\) in \({\mathcal {H}}_S={\mathcal {H}}_a\otimes {\mathcal {H}}_b\) lead to reduced density matrices close to the canonical density matrix (4) for a, \({{\,\textrm{tr}\,}}_b\rho _{\textrm{mc}}\approx \rho _{a,\textrm{can}}\approx {{\,\textrm{tr}\,}}_b \rho _\textrm{can}\), provided the parameter \(\beta \) of \(\rho _\textrm{can}\) and \(\rho _{a,\textrm{can}}\) is suitable for the energy E of \(\rho _{\textrm{mc}}\). Hence, Theorems 3 and 1 yield that we can start from either \(u_{\textrm{mc}}\) or \({\textrm{GAP}}(\rho _\textrm{can})\) and obtain for both ensembles of \(\psi \) that \(\rho _a^\psi \) is nearly constant and nearly canonical.

\(\diamond \)

Remark 12

Comparison to original theorems. The original, known theorems about canonical typicality, which refer to the uniform distribution over a suitable sphere instead of a GAP measure, are still contained in our theorems as special cases, except for worse constants and in some places additional factors of \(d_a\) (which we usually think of as constant as well). For more detail, let us begin with the known theorem analogous to Theorem 3 (formulated this way in [14, Eq. (32)], based on arguments from [46]):

Theorem 4

(Canonical typicality, polynomial bounds). Let \({\mathcal {H}}_a\) and \({\mathcal {H}}_b\) be Hilbert spaces of respective dimensions \(d_a, d_b \in {\mathbb {N}}\), \({\mathcal {H}}= {\mathcal {H}}_a \otimes {\mathcal {H}}_b\), \({\mathcal {H}}_R\) be any subspace of \({\mathcal {H}}\) of dimension \(d_R\), \(\rho _R\) be \(1/d_R\) times the projection to \({\mathcal {H}}_R\), and \(u_R\) the uniform distribution over \({\mathbb {S}}({\mathcal {H}}_R)\). Then for every \(\delta >0\),

When we apply our Theorem 3 to \(\rho =\rho _R\) (and assume \(d_R\ge 4\)), we obtain that \({\textrm{GAP}}(\rho )=u_R\), \({{\,\textrm{tr}\,}}\rho ^2=1/d_R\), and almost exactly the bound (44) except for a (rather irrelevant) factor \(\sqrt{28}\) and \(d_a^{2.5}\) instead of \(d_a^2\). Further explanation of how this different exponent comes about can be found in Sect. 4.6.

Theorem 5

(Canonical typicality, exponential bounds [30, 31]). With the notation and hypotheses as in Theorem 4, for every \(\delta >0\) such that

This theorem was stated slightly differently in [30, 31]; we give the derivation of this form in Sect. 4.6. Again, the bound agrees with the one (28) provided by Theorem 1 for \(\rho =\rho _R\) (so \(\Vert \rho \Vert =1/d_R\)) up to worse constants and additional factors of \(d_a\).

Next, here is the standard statement of Lévy’s lemma:

Theorem 6

(Lévy’s Lemma [27]). Let \({\mathcal {H}}\) be a Hilbert space of finite dimension \(D:=\dim {\mathcal {H}}\in {\mathbb {N}}\), let \(f:{\mathbb {S}}({\mathcal {H}})\rightarrow {\mathbb {R}}\) be a function with Lipschitz constant \(\eta \), let u be the uniform distribution over \({\mathbb {S}}({\mathcal {H}})\), and let \(\varepsilon >0\). Then,

where \({\hat{C}}=\frac{2}{9\pi ^3}\).

When we apply our Theorem 2 to \(\rho =I/D\), we obtain that \({\textrm{GAP}}(\rho )=u\), \(\Vert \rho \Vert =1/D\), and exactly the bound (47) except for worse constants. Note that Theorem 2 holds also for infinite-dimensional separable \({\mathcal {H}}\).
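The phrase “worse constants” can be quantified, assuming (our reading, since (30) and (47) are not reproduced here) that the exponents are \(-C\varepsilon ^2/(\eta ^2\Vert \rho \Vert )\) and \(-{\hat{C}}D\varepsilon ^2/\eta ^2\). With \(\Vert \rho \Vert =1/D\) the two exponents differ only by the ratio \({\hat{C}}/C=64/\pi \approx 20.4\), so the specialized Theorem 2 needs roughly 20 times larger \(D\varepsilon ^2/\eta ^2\) (about 4.5 times larger \(\varepsilon \)) for the same exponent.

```python
import math

C = 1 / (288 * math.pi**2)       # Theorem 2
C_hat = 2 / (9 * math.pi**3)     # Theorem 6
ratio = C_hat / C                # = 576 / (9 pi) = 64 / pi
print(ratio, math.sqrt(ratio))   # factor in the exponent, factor in eps
```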

We turn to previous results on dynamical typicality. In [48], an inequality analogous to the bound (32) of Corollary 2 was proven for the uniform distribution over the sphere in a subspace. In [28], variants of Lévy’s lemma and dynamical typicality were established for the mean-value ensemble of an observable A for a value \(a\in {\mathbb {R}}\), defined by restricting the uniform distribution on \({\mathbb {S}}({\mathbb {C}}^D)\) to the set \(\{\psi \in {\mathbb {S}}({\mathbb {C}}^D):\langle \psi | A|\psi \rangle =a\}\) and normalizing afterward. However, the physical relevance of this ensemble is unclear since, in general, the mean value of an observable is not itself an observable, and thus it is unclear how this ensemble could be prepared or occur in an experiment.\(\diamond \)

Remark 13

Lévy’s lemma for other distributions. Although Lévy’s lemma holds for the uniform distribution and for GAP measures, it does not hold for every reasonably spread-out distribution on the sphere; its validity is thus a non-trivial property of the family of GAP measures.

This can be illustrated by means of the von Mises-Fisher (VMF) distribution, a well-known and natural probability distribution on the unit sphere \({\mathbb {S}}({\mathbb {R}}^D)\) in \({\mathbb {R}}^D\) that is different from the GAP measure. It has parameters \(\kappa \in {\mathbb {R}}_+\) and \(\mu \in {\mathbb {S}}({\mathbb {R}}^D)\) and can be obtained from a Gaussian distribution in \({\mathbb {R}}^D\) with mean \(\mu \) and covariance \(\kappa ^{-1}I\) by conditioning on \({\mathbb {S}}({\mathbb {R}}^D)\). The analog of Lévy’s lemma for the von Mises-Fisher distribution is false, as can be seen as follows. Its density

with respect to the uniform distribution u on \({\mathbb {S}}({\mathbb {R}}^D)\) varies at most by a factor of \(e^{2\kappa }\) when varying x (while keeping D and \(\kappa \) fixed). For a given Lipschitz function F on the sphere, insertion of \(F(x)\,g(x)\) for f(x) in a real variant of Lévy’s lemma for the uniform distribution (Theorem 6 above) yields that \(F(x)\, g(x)\) for u-most x is close to the u-average of Fg, which equals the VMF-average of F (where the Lipschitz constant of \(f=Fg\) could be a bit worse than that of F). The set of exceptional x has small u-measure, and since \(C(D,\kappa )\in [e^{-\kappa },e^\kappa ]\) and thus \(g(x)\in [e^{-2\kappa },e^{2\kappa }]\), it also has small VMF-measure (larger at most by a factor of \(e^{2\kappa }\)). Thus, for VMF-most x, F(x) is close to VMF(F)/g(x), and thus not constant at all. The same argument shows that Lévy’s lemma is violated for any sequence of measures \((\mu _D)_{D\in {\mathbb {N}}}\) on \({\mathbb {S}}({\mathbb {R}}^D)\) whose density \(g_D\) relative to u is bounded uniformly in D, has Lipschitz constant bounded uniformly in D, but deviates significantly from 1 on a non-negligible set in \({\mathbb {S}}({\mathbb {R}}^D)\).

For GAP measures, the situation is very different. From (16) one can see, for example, that if the eigenvalue \(p_{n_2}\) of \(\rho =\sum _n p_n |n\rangle \langle n|\) is twice as large as another eigenvalue \(p_{n_1}\), then the density (16) at \(\psi =|n_2\rangle \) is \(2^{D+1}\) times as large as that at \(\psi =|n_1\rangle \). Thus, the density and its Lipschitz constant are not (for relevant choices of \(\rho \)) bounded uniformly in D; rather, non-uniform GAP measures become more and more singular with respect to the uniform distribution for large D.\(\diamond \)
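The growth of the density ratio with D can be made concrete. The sketch assumes, as our reading of (16), the standard finite-dimensional formula in which the density of \({\textrm{GAP}}(\rho )\) relative to the uniform distribution is proportional to \(\langle \psi |\rho ^{-1}|\psi \rangle ^{-(D+1)}\) for invertible \(\rho \); at an eigenvector \(|n\rangle \) this is proportional to \(p_n^{D+1}\). The spectrum (one eigenvalue twice as large as all others) is a hypothetical choice.

```python
import math

def log_density_ratio(p, psi, phi):
    """log[density(psi) / density(phi)] for GAP(rho), rho = diag(p), all p_n > 0.

    Assumes density proportional to <psi|rho^{-1}|psi>^{-(D+1)} w.r.t. the
    uniform measure; the normalizing constant cancels in the ratio.
    """
    D = len(p)
    def quad(v):                      # <v|rho^{-1}|v> in the eigenbasis of rho
        return sum(abs(c) ** 2 / pn for c, pn in zip(v, p))
    return (D + 1) * (math.log(quad(phi)) - math.log(quad(psi)))

def basis_vector(D, n):
    return [1.0 if k == n else 0.0 for k in range(D)]

for D in (10, 100, 1000):
    weights = [1.0] * D
    weights[1] = 2.0                  # p_{n_2} = 2 p_{n_1} with n_1 = 0, n_2 = 1
    total = sum(weights)
    p = [w / total for w in weights]
    r = log_density_ratio(p, basis_vector(D, 1), basis_vector(D, 0))
    print(D, r / math.log(2))         # log_2 of the ratio, approximately D + 1
```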

Remark 14

Generalized canonical typicality from conditional wave function? One might imagine a different strategy of deriving generalized canonical typicality, based on regarding \(\psi \) itself as a conditional wave function and using the known fact [17, 19] that conditional wave functions are typically \({\textrm{GAP}}\) distributed. We could introduce a further big system c, choose a high-dimensional subspace \({\mathcal {H}}_{Rabc}\) in \({\mathcal {H}}_{abc}={\mathcal {H}}_a \otimes {\mathcal {H}}_b \otimes {\mathcal {H}}_c\) so that \({{\,\textrm{tr}\,}}_c P_{Rabc}/d_{Rabc}\) coincides with the given \(\rho \) on \({\mathcal {H}}_a\otimes {\mathcal {H}}_b\), and start from a random wave function from \({\mathbb {S}}({\mathcal {H}}_{Rabc})\). However, we do not see how to make such a derivation work. \(\diamond \)

Remark 15

Not every measure does what \({\textrm{GAP}}(\rho )\) does. Generalized canonical typicality as expressed in Theorems 3 and 1 is not true in general if we replace \({\textrm{GAP}}(\rho )\) by a different measure: if \(\rho \) is a density matrix on \({\mathcal {H}}\) and \(\mu \) a probability distribution over \({\mathbb {S}}({\mathcal {H}})\) with density matrix \(\rho _\mu =\rho \), then it need not be true for \(\mu \)-most \(\psi \) that \(\rho ^\psi _a\approx {{\,\textrm{tr}\,}}_b \rho \).

Here is a counter-example. Let \(\rho =\sum _{n=1}^D p_n |n\rangle \langle n|\) have eigenvalues \(p_n\) and eigen-ONB \((|n\rangle )_{n\in \{1,\ldots ,D\}}\), and let

be the measure that is concentrated on the finite set \(\{|n\rangle :1\le n\le D\}\) and gives weight \(p_n\) to each \(|n\rangle \). This measure is the narrowest, most concentrated measure with density matrix \(\rho \), and thus a kind of opposite of \({\textrm{GAP}}(\rho )\), the most spread-out measure with density matrix \(\rho \). A random vector \(\psi \) with distribution \(\mu \) is a random eigenvector \(|n\rangle \). What the reduced density matrix \(\rho _a^{|n\rangle }\) looks like depends on the vectors \(|n\rangle \in {\mathcal {H}}={\mathcal {H}}_a\otimes {\mathcal {H}}_b\). Suppose that the eigenbasis of \(\rho \) is the product of ONBs of \({\mathcal {H}}_a\) and \({\mathcal {H}}_b\), \(|n\rangle =|\ell \rangle _a \otimes |m\rangle _b\); then \(\rho _a^{|n\rangle }={{\,\textrm{tr}\,}}_b |n\rangle \langle n| = |\ell \rangle _a\langle \ell |\) (in an obvious notation), so \(\rho _a^{|n\rangle }\) is always a pure state and thus far away from \({{\,\textrm{tr}\,}}_b \rho = \sum _{\ell ,m}p_{\ell m} |\ell \rangle _a\langle \ell |\) if that is highly mixed. Note, however, that if instead of a product basis, we had taken \((|n\rangle )_{n=1\ldots D}\) to be a purely random ONB of \({\mathcal {H}}\), then (with overwhelming probability if \(d_b\gg 1\)) \(\rho _a^{|n\rangle }\approx d_a^{-1} I_a\) and thus also \({{\,\textrm{tr}\,}}_b \rho \) (which by (18) is the \(\mu \)-average of \(\rho _a^\psi \)) is close to \(d_a^{-1} I_a\), so \(\rho _a^{\psi }\approx {{\,\textrm{tr}\,}}_b \rho \) for \(\mu \)-most \(\psi \), despite the narrowness of \(\mu \).\(\diamond \)
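Both cases of this counterexample can be reproduced with an explicit partial trace. The sketch takes \(d_a=2\), \(d_b=500\) (hypothetical) and compares a product vector \(|\ell \rangle _a|m\rangle _b\), whose reduced state is pure, with a uniformly distributed unit vector (one column of a Haar-random ONB has exactly this distribution), whose reduced state has purity close to \(1/d_a\), i.e., is close to \(d_a^{-1}I_a\).

```python
import math
import random

def reduced_density_matrix(psi, d_a, d_b):
    """rho_a^psi = tr_b |psi><psi|, with psi[l * d_b + m] the coefficient of |l>_a |m>_b."""
    return [[sum(psi[l * d_b + m] * psi[k * d_b + m].conjugate() for m in range(d_b))
             for k in range(d_a)] for l in range(d_a)]

def purity(rho):
    d = len(rho)
    return sum((rho[i][j] * rho[j][i]).real for i in range(d) for j in range(d))

d_a, d_b = 2, 500
# Product eigenvector |l>_a |m>_b with l = 1, m = 7.
product_vec = [0j] * (d_a * d_b)
product_vec[1 * d_b + 7] = 1 + 0j
# Uniformly random unit vector: normalized complex Gaussian vector.
rng = random.Random(3)
g = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(d_a * d_b)]
norm = math.sqrt(sum(abs(c) ** 2 for c in g))
random_vec = [c / norm for c in g]

print(purity(reduced_density_matrix(product_vec, d_a, d_b)))  # 1.0 (pure state)
print(purity(reduced_density_matrix(random_vec, d_a, d_b)))   # close to 1/d_a
```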

Remark 16

Canonical typicality with respect to \({\textrm{GAP}}(\rho )\) does not hold for every \(\rho \). Let us consider the special case in which \(\rho \) has one eigenvalue that is large (e.g., \(10^{-1}\)), while all others are very small (e.g., \(10^{-1000}\)). Such a situation occurs for example for N-body quantum systems with a gapped ground state \(|0\rangle \) at very low temperature, T of order \((\log N)^{-1}\). So call the large eigenvalue p and suppose for definiteness that all other eigenvalues are equal,

with O(1/D) referring to the trace norm and the limit \(D\rightarrow \infty \). In that case, \({{\,\textrm{tr}\,}}\rho ^2\approx p^2\) (e.g., \(10^{-2}\), while \(d_a\) may be \(10^{100}\)), so the smallness condition (41) for generalized canonical typicality is strongly violated. To investigate \(\rho _a^\psi \), note that any vector \(\psi \in {\mathbb {S}}({\mathcal {H}})\) can be written as \(\psi =\cos \theta e^{i\alpha } |0\rangle + \sin \theta |\phi \rangle \) with \(\theta \in [0,\pi /2]\), \(\alpha \in [0,2\pi )\), and \(|\phi \rangle \perp |0\rangle \). If \(\psi \) has distribution \({\textrm{GAP}}(\rho )\), then \(\phi \) has distribution \(u_{{\mathbb {S}}(|0\rangle ^\perp )}\) and is independent of \(\theta \) and \(\alpha \), \(\alpha \) is independent of \(\theta \) and uniformly distributed, and a lengthy computation shows that the distribution of \(\theta \) has density

as \(D\rightarrow \infty \). By an error of order \(1/\sqrt{D}\), we can replace \(\phi \) by a \(u_{{\mathbb {S}}({\mathcal {H}})}\)-distributed vector. If \(|0\rangle \) factorizes as in \(|0\rangle =|0\rangle _a |0\rangle _b\), then \({{\,\textrm{tr}\,}}_b\rho = p|0\rangle _a\langle 0| + (1-p)(I_a/d_a)+O(1/d_b)\) and \(\rho _a^\psi =\cos ^2\theta |0\rangle _a \langle 0| + \sin ^2\theta (I_a/d_a) + O(1/\sqrt{d_b})\). Since the latter depends on \(\theta \) (and thus is not deterministic but has a non-trivial distribution), it follows that \(\rho _a^\psi \not \approx {{\,\textrm{tr}\,}}_b \rho \) with high probability.\(\diamond \)
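To leading order (dropping the \(O(1/d_b)\) and \(O(1/\sqrt{d_b})\) corrections), both \(\rho _a^\psi \) and \({{\,\textrm{tr}\,}}_b\rho \) have the form \(w|0\rangle _a\langle 0|+(1-w)I_a/d_a\), with \(w=\cos ^2\theta \) and \(w=p\), respectively. Their difference is \((\cos ^2\theta -p)(|0\rangle _a\langle 0|-I_a/d_a)\), so the trace distance is \(2|\cos ^2\theta -p|(1-1/d_a)\), which is small only when \(\cos ^2\theta \approx p\). The sketch checks this formula and the purity \({{\,\textrm{tr}\,}}\rho ^2\approx p^2\) for the hypothetical values \(p=0.1\), \(d_a=10\).

```python
def leading_diag(w, d_a):
    """Diagonal of w |0><0|_a + (1 - w) I_a / d_a in an ONB of H_a starting with |0>_a."""
    return [w + (1 - w) / d_a] + [(1 - w) / d_a] * (d_a - 1)

def trace_distance_diag(x, y):
    """Trace norm of diag(x) - diag(y)."""
    return sum(abs(a - b) for a, b in zip(x, y))

def purity_example(p, D):
    """tr rho^2 for eigenvalues p (once) and (1 - p)/(D - 1) (D - 1 times)."""
    return p**2 + (1 - p) ** 2 / (D - 1)

p, d_a = 0.1, 10
print(purity_example(p, D=10**12))         # approximately p^2 = 0.01
for cos2 in (0.05, 0.1, 0.5, 0.9):         # sample values of cos^2(theta)
    dist = trace_distance_diag(leading_diag(cos2, d_a), leading_diag(p, d_a))
    print(cos2, dist, 2 * abs(cos2 - p) * (1 - 1 / d_a))
```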

Remark 17

Comparison to large deviation theory. In large deviation theory [50], one studies another version of concentration of measure: one considers a sequence of probability distributions \(({\mathbb {P}}_N)_{N\in {\mathbb {N}}}\) on (say) the real line and studies whether (and at what rate) \({\mathbb {P}}_N\bigl ([x,\infty )\bigr )\) tends to 0 exponentially fast as \(N\rightarrow \infty \) for fixed \(x\in {\mathbb {R}}\). Our situation is a bit similar, with the role of x played by \(\varepsilon \) in (29), and that of \({\mathbb {P}}_N\) by the distribution of \(\Vert \rho _a^\psi -{{\,\textrm{tr}\,}}_b \rho \Vert _{{{\,\textrm{tr}\,}}}\) in \({\mathbb {R}}\) for \({\textrm{GAP}}(\rho )\)-distributed \(\psi \). However, our situation does not quite fit the standard framework of large deviations because we do not necessarily consider a sequence \(\rho _N\) of density matrices, but rather a fixed \(\rho \) with small \(\Vert \rho \Vert \). That is why we have provided error bounds in terms of the given \(\rho \).\(\diamond \)

4 Proofs

4.1 Proof of Remark 1

What needs proof here is that also in infinite dimension, the partial trace commutes with the expectation,

(For \(\dim {\mathcal {H}}_b<\infty \), \({{\,\textrm{tr}\,}}_b\) is a finite sum and thus trivially commutes with \({\mathbb {E}}_\mu \).) So suppose that \({\mathcal {H}}_b\) has a countable ONB \((|l\rangle _b)_{ l\in {\mathbb {N}}}\), and let \(|\phi \rangle _a\in {\mathcal {H}}_a\). Then

where we used Fubini’s theorem in the third and the definition of \(\rho _\mu \) in the fourth line. Since a bounded operator A is uniquely determined by the quadratic form \(\phi \mapsto \langle \phi |A|\phi \rangle \), it follows that \({\mathbb {E}}_\mu (\rho _a^\psi )={{\,\textrm{tr}\,}}_b\rho _\mu \).

4.2 Proof of Theorem 3

We start with the proof of the polynomial version of generalized canonical typicality and thereby introduce approximation techniques for infinite-dimensional Hilbert spaces, which will also be used in the proof of the exponential bounds of Theorem 1 later on. For the proof of Theorem 3, we make use of a result from Reimann [35]. Let \((|n\rangle )_{n=1\ldots D}\) be an orthonormal basis of eigenvectors of \(\rho \) and \(p_1,\dots ,p_D\) the corresponding (positive) eigenvalues. Reimann used the density of the GAP measure \({\textrm{GAP}}(\rho )\) to compute expressions of the form

where the expectation is taken with respect to \({\textrm{GAP}}(\rho )\) and \(c_j = \langle j|\psi \rangle \) are the coordinates of \(\psi \in {\mathbb {S}}({\mathcal {H}})\) with respect to the orthonormal basis \((|j\rangle )_{j=1\ldots D}\). With the help of these expressions he derived an upper bound for the variance \({{\,\textrm{Var}\,}}\langle \psi |A|\psi \rangle \) (also taken with respect to \({\textrm{GAP}}(\rho )\)) for self-adjoint operators \(A:{\mathcal {H}}\rightarrow {\mathcal {H}}\). We show that Reimann’s upper bound for \({{\,\textrm{Var}\,}}\langle \psi |A|\psi \rangle \) remains essentially valid also for non-self-adjoint A and this bound will be a main ingredient in our proof of Theorem 3.

We start by computing the expectation \({\mathbb {E}}\langle \psi |A|\psi \rangle \) and an upper bound for the variance \({{\,\textrm{Var}\,}}\langle \psi |A|\psi \rangle \) for an arbitrary operator \(A:{\mathcal {H}}\rightarrow {\mathcal {H}}\), where the expectation and variance are with respect to the measure \({\textrm{GAP}}(\rho )\). We closely follow Reimann [35] who did these computations in the case that A is self-adjoint. We arrive at the same bound for the variance (with the distance between the largest and smallest eigenvalue of A replaced by its operator norm); however, one step in the proof needs to be modified to account for A not being necessarily self-adjoint. Moreover, we show that the expression for \({\mathbb {E}}\langle \psi |A|\psi \rangle \) and the upper bound for \({{\,\textrm{Var}\,}}\langle \psi |A|\psi \rangle \) remain valid if \({\mathcal {H}}\) has countably infinite dimension, i.e., if it is separable.

Proposition 1

Let \(\rho \) be a density matrix on a separable Hilbert space \({\mathcal {H}}\) with positive eigenvalues \(p_n\) such that \(p_{\max }=\Vert \rho \Vert <1/4\) and let \(\dim {\mathcal {H}}\ge 4\). For \({\textrm{GAP}}(\rho )\)-distributed \(\psi \) and any bounded operator \(A:{\mathcal {H}}\rightarrow {\mathcal {H}}\),

and

Proof

We first assume that \(D:=\dim {\mathcal {H}}<\infty \). The formula for the expectation follows immediately from the fact that the density matrix of \({\textrm{GAP}}(\rho )\) is \(\rho \):

For a complex-valued random variable X, the variance can be computed by

Since the variance of a random variable does not change when a constant is added, we can assume for its computation without loss of generality that \({\mathbb {E}}\langle \psi |A|\psi \rangle = 0\). Let \((|n\rangle )_{n=1,\dots ,D}\) be an orthonormal basis of \({\mathcal {H}}\) consisting of eigenvectors of \(\rho \). For \(\psi \in {\mathbb {S}}({\mathcal {H}})\), we write

with \(c_l = \langle l|\psi \rangle \) and \(A_{ml} = \langle m|A|l\rangle \). Then, for \(X=\langle \psi |A|\psi \rangle \), we find that

Reimann [35] showed that the fourth moments \({\mathbb {E}}(c_l^* c_m c_{m'}^* c_{l'})\) all vanish except for the two cases \(l=m, m'=l'\) and \(l=m', m=l'\) and that

where

This implies

Because of \(|A_{mm}| \le \Vert A\Vert \) it follows from the computation in [35] that

Moreover, as it was shown in [35], \(K_{ml} \le \frac{1}{1-p_{\max }}\) for all l and m and therefore

Since A is not necessarily self-adjoint, we have to proceed differently from Reimann [35] to bound this term. To this end, we use the Cauchy-Schwarz inequality for the trace, i.e., \(|{{\,\textrm{tr}\,}}(B^*C)| \le \sqrt{{{\,\textrm{tr}\,}}(B^*B){{\,\textrm{tr}\,}}(C^*C)}\), and the inequality \(|{{\,\textrm{tr}\,}}(BC)|\le \Vert B\Vert {{\,\textrm{tr}\,}}(|C|)\) for any operators B, C [43, Thm. 3.7.6]. With these inequalities, we have that

Combining (64), (65), (66) and (67c) proves the bound for the variance and thus finishes the proof in the finite-dimensional case.

Now suppose that \({\mathcal {H}}\) has a countably infinite ONB. The expectation can be computed as before since \({\textrm{GAP}}(\rho )(|\psi \rangle \langle \psi |)=\rho \) remains true in the infinite-dimensional setting [49]. For the variance, we approximate \(\rho \) by density matrices \(\rho _n\), \(n\in {\mathbb {N}}\), of finite rank defined by

Then, \(\Vert \rho _n-\rho \Vert _{{{\,\textrm{tr}\,}}} \rightarrow 0\) as \(n\rightarrow \infty \), and therefore Theorem 3 in [49] implies that \({\textrm{GAP}}(\rho _n) \Rightarrow {\textrm{GAP}}(\rho )\) (weak convergence). Note also that from some \(n_0\) onwards, \(\sum _{m=n}^\infty p_m \le p_1\) and thus \(\Vert \rho _n\Vert =p_1=\Vert \rho \Vert \). Let \(f(\psi ):=|\langle \psi |A|\psi \rangle -{{\,\textrm{tr}\,}}(A\rho )|^2\) and \(f_n(\psi ):= |\langle \psi |A|\psi \rangle -{{\,\textrm{tr}\,}}(A\rho _n)|^2\). Because \({{\,\textrm{tr}\,}}(A\rho _n)\rightarrow {{\,\textrm{tr}\,}}(A\rho )\) and therefore \(f_n\rightarrow f\) uniformly in \(\psi \), it follows that \({\textrm{GAP}}(\rho _n)(f_n)-{\textrm{GAP}}(\rho _n)(f) \rightarrow 0\). Since f is bounded and continuous, it follows from the weak convergence of the measures \({\textrm{GAP}}(\rho _n)\) that \({\textrm{GAP}}(\rho _n)(f) \rightarrow {\textrm{GAP}}(\rho )(f)\) and therefore altogether that \({\textrm{GAP}}(\rho _n)(f_n)\rightarrow {\textrm{GAP}}(\rho )(f)\). Since, as one easily verifies, \({{\,\textrm{tr}\,}}\rho _n^2 \rightarrow {{\,\textrm{tr}\,}}\rho ^2\), the bound for the variance in the finite-dimensional case remains valid in the infinite-dimensional setting.\(\square \)
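The approximation step can be illustrated on a concrete spectrum. The display defining \(\rho _n\) is not reproduced in this section; the sketch assumes the natural choice consistent with the remark that \(\Vert \rho _n\Vert =p_1\) once \(\sum _{m\ge n}p_m\le p_1\), namely keeping \(p_1,\ldots ,p_{n-1}\) and placing the whole tail mass \(\sum _{m\ge n}p_m\) on \(|n\rangle \). With the hypothetical spectrum \(p_m=2^{-m}\), exact rational arithmetic shows \(\Vert \rho _n-\rho \Vert _{{{\,\textrm{tr}\,}}}=2^{1-n}\rightarrow 0\), \(\Vert \rho _n\Vert =p_1\) for \(n\ge 2\), and \({{\,\textrm{tr}\,}}\rho _n^2\rightarrow {{\,\textrm{tr}\,}}\rho ^2=1/3\).

```python
from fractions import Fraction

def p(m):
    """Hypothetical spectrum p_m = 2^{-m}, m = 1, 2, ... (sums to 1)."""
    return Fraction(1, 2**m)

def tail(n):
    """sum_{m >= n} p_m, in closed form for this geometric example."""
    return Fraction(1, 2**(n - 1))

def rho_n_spectrum(n):
    """Assumed eigenvalues of rho_n: p_1, ..., p_{n-1}, then the tail mass on |n>."""
    return [p(m) for m in range(1, n)] + [tail(n)]

def trace_distance_to_rho(n):
    """||rho_n - rho||_tr: excess tail(n) - p_n on |n>, plus p_m for all m > n."""
    return (tail(n) - p(n)) + tail(n + 1)

PURITY_RHO = Fraction(1, 3)   # sum_m 4^{-m}

for n in (2, 5, 10, 20):
    spec = rho_n_spectrum(n)
    print(n, float(trace_distance_to_rho(n)), float(max(spec)),
          float(sum(x * x for x in spec)), float(PURITY_RHO))
```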

Proof of Theorem 3

Without loss of generality assume that all eigenvalues of \(\rho \) are positive. Proposition 1 together with Chebyshev’s inequality implies for any operator A and any \(\varepsilon >0\) that

Let \((|l\rangle _a)_{l=1\dots d_a}\) and \((|n\rangle _b)_{n=1\dots d_b}\), where \(d_a:= \dim {\mathcal {H}}_a \in {\mathbb {N}}\) and \(d_b:=\dim {\mathcal {H}}_b \in {\mathbb {N}}\cup \{\infty \}\), be orthonormal bases of \({\mathcal {H}}_a\) and \({\mathcal {H}}_b\), respectively. For

where \(I_b\) is the identity on \({\mathcal {H}}_b\), we find \(\Vert A^{lm}\Vert =1\),

and similarly

For any \(d_a\times d_a\) matrix \(M = (M_{ij})\), it holds that \(\Vert M\Vert _{{{\,\textrm{tr}\,}}} \le \sqrt{d_a} \Vert M\Vert _2\), where \(\Vert M\Vert _2\) denotes the Hilbert-Schmidt norm of M, which is defined by

see, e.g., Lemma 6 in [30]. Therefore, we have that

and thus

where we used (69c), (71c), (72c) and \(\Vert A^{lm}\Vert =1\) in the last step. By replacing \(\varepsilon \rightarrow d_a^{-3/2}\varepsilon \), we finally obtain

Setting

and solving for \(\varepsilon \) gives (36) and thus finishes the proof. \(\square \)
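The norm comparison \(\Vert M\Vert _{{{\,\textrm{tr}\,}}} \le \sqrt{d_a} \Vert M\Vert _2\) used above is the Cauchy-Schwarz inequality applied to the \(d_a\) singular values \(s_i\) of M: \(\sum _i s_i \le \sqrt{d_a}\,(\sum _i s_i^2)^{1/2}\). The following sketch checks it on random complex matrices and exhibits the equality case (all singular values equal). The dimensions and the random matrices are arbitrary choices for illustration.

```python
import numpy as np


def trace_norm(M):
    """||M||_tr: the sum of the singular values of M."""
    return np.linalg.svd(M, compute_uv=False).sum()


def hilbert_schmidt_norm(M):
    """||M||_2 = sqrt(sum_ij |M_ij|^2) = sqrt(tr(M^* M))."""
    return np.sqrt(np.sum(np.abs(M) ** 2))


rng = np.random.default_rng(2)
ratios = []
for d in (2, 4, 8, 16):
    for _ in range(50):
        M = rng.normal(size=(d, d)) + 1j * rng.normal(size=(d, d))
        ratios.append(trace_norm(M) / (np.sqrt(d) * hilbert_schmidt_norm(M)))

# Cauchy-Schwarz on the d singular values gives ratio <= 1 ...
bound_holds = max(ratios) <= 1 + 1e-12
# ... with equality when all singular values coincide, e.g. for the identity.
I4 = np.eye(4)
equality_case = np.isclose(trace_norm(I4), np.sqrt(4) * hilbert_schmidt_norm(I4))
print(bound_holds, equality_case)
```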

4.3 Proof of Theorem 1

The proof of Theorem 1 largely follows the proof of canonical typicality given in [30]; the crucial differences concern our generalization of Lévy’s lemma and the steps needed to cover the infinite-dimensional case.

Let \(U_a\) be a unitary operator on \({\mathcal {H}}_a\). Then, the function \(f:{\mathbb {S}}({\mathcal {H}})\rightarrow {\mathbb {C}}\), \(f(\psi ) = {{\,\textrm{tr}\,}}_a(U_a\rho _a^\psi )=\langle \psi |U_a\otimes I_b|\psi \rangle \) is Lipschitz continuous with Lipschitz constant \(\eta \le 2\Vert U_a\Vert = 2\) (see, e.g., Lemma 5 in [30]). By Theorem 2 and Remark 3,

By (27),

Let \((U_a^j)_{j=0}^{d_a^2-1}\) be unitary operators that form a basis for the space of operators on \({\mathcal {H}}_a\) such that (see Footnote 6)

Then,

As in [30], the density matrix \(\rho _a^\psi \) can be expanded as

where \(C_j(\rho _a^\psi ) = {{\,\textrm{tr}\,}}_a(U_a^{j*}\rho _a^\psi )\) and (81) becomes

If \(|C_{j}(\rho _a^\psi )-C_{j}({{\,\textrm{tr}\,}}_b\rho )|\le \varepsilon \) for all j, then

This implies that

and, after replacing \(\varepsilon \) by \(\varepsilon d_a^{-1}\),

Setting

and solving for \(\varepsilon \) finishes the proof.
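The finite-dimensional ingredients of this proof can be checked numerically: the identity \({{\,\textrm{tr}\,}}_a(U_a\rho _a^\psi )=\langle \psi |U_a\otimes I_b|\psi \rangle \), the Lipschitz bound with constant 2, and the expansion of \(\rho _a^\psi \) in a unitary operator basis. The sketch assumes the normalization \({{\,\textrm{tr}\,}}(U_a^{j*}U_a^k)=d_a\delta _{jk}\) (our assumption; the displayed condition is not reproduced here), for which the expansion reads \(\rho _a^\psi =d_a^{-1}\sum _j C_j(\rho _a^\psi )U_a^j\). As a concrete basis it uses the "clock and shift" unitaries \(X^aZ^b\), a standard choice that is not necessarily the one made in Footnote 6; all function names are hypothetical.

```python
import numpy as np


def random_unit_vector(dim, rng):
    v = rng.normal(size=dim) + 1j * rng.normal(size=dim)
    return v / np.linalg.norm(v)


def reduced_density_matrix(psi, d_a, d_b):
    """rho_a^psi = tr_b |psi><psi|, with psi indexed as psi[l * d_b + n]."""
    Psi = psi.reshape(d_a, d_b)
    return Psi @ Psi.conj().T


def clock_shift_basis(d):
    """The d^2 unitaries X^a Z^b; they satisfy tr(U_j^* U_k) = d * delta_jk."""
    X = np.roll(np.eye(d), 1, axis=0)                    # X|k> = |k+1 mod d>
    Z = np.diag(np.exp(2j * np.pi * np.arange(d) / d))   # Z|k> = w^k |k>
    return [np.linalg.matrix_power(X, a) @ np.linalg.matrix_power(Z, b)
            for a in range(d) for b in range(d)]


rng = np.random.default_rng(3)
d_a, d_b = 3, 5
psi = random_unit_vector(d_a * d_b, rng)
rho_a = reduced_density_matrix(psi, d_a, d_b)
basis = clock_shift_basis(d_a)

# Orthogonality: tr(U_j^* U_k) = d_a * delta_jk
gram = np.array([[np.trace(U.conj().T @ V) for V in basis] for U in basis])
ok_gram = np.allclose(gram, d_a * np.eye(d_a ** 2))

# f(psi) = tr_a(U_a rho_a^psi) = <psi| U_a (x) I_b |psi>
U = basis[4]
W = np.kron(U, np.eye(d_b))


def f(v):
    return v.conj() @ W @ v


ok_trace = np.isclose(np.trace(U @ rho_a), f(psi))

# Expansion rho_a = (1/d_a) sum_j C_j U_j with C_j = tr_a(U_j^* rho_a)
coeffs = [np.trace(Uj.conj().T @ rho_a) for Uj in basis]
rho_rebuilt = sum(c * Uj for c, Uj in zip(coeffs, basis)) / d_a
ok_expand = np.allclose(rho_rebuilt, rho_a)

# Lipschitz bound |f(psi) - f(phi)| <= 2 ||U_a|| ||psi - phi|| with ||U_a|| = 1
phi = random_unit_vector(d_a * d_b, rng)
ok_lip = abs(f(psi) - f(phi)) <= 2 * np.linalg.norm(psi - phi)
print(ok_gram, ok_trace, ok_expand, ok_lip)
```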

4.4 Proof of Theorem 2

The proof begins with an auxiliary theorem, formulated as Theorem 8 below. For better orientation, we also state the analogous fact about Gaussian distributions as Theorem 7 and start by quoting its real version (see Footnote 7):

Lemma 1

([27]). Let \(F:{\mathbb {R}}^D \rightarrow {\mathbb {R}}\) be a Lipschitz function with constant \(\eta \). Let \(X=(X_1,\dots ,X_D)\) be a vector of independent (real) standard Gaussian random variables. Then for every \(\varepsilon >0\),

Now let \(\rho =\sum _{n=1}^D p_n |n\rangle \langle n|\) be a density matrix on the D-dimensional Hilbert space \({\mathcal {H}}\), and let Z be a random vector in \({\mathcal {H}}\) whose distribution is \({\textrm{G}}(\rho )\), the Gaussian measure with mean 0 and covariance \(\rho \) as defined in Sect. 2.2; equivalently, \(Z=\sum _{n=1}^D Z_n |n\rangle \), where the \(Z_n\) are independent complex mean-zero Gaussian random variables with variances

Then we can write \(Z=\sqrt{\rho /2} {\tilde{Z}}\), where the components \({\tilde{Z}}_n\) of \({\tilde{Z}}=\sum _{n=1}^D {\tilde{Z}}_n |n\rangle \) are D independent complex mean-zero Gaussian random variables with variances \({\mathbb {E}}|{\tilde{Z}}_n|^2=2\); they can naturally be identified with a vector of 2D independent real standard Gaussian variables.
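The representation \(Z=\sqrt{\rho /2}\,{\tilde{Z}}\) gives a direct way to sample \({\textrm{G}}(\rho )\) in the eigenbasis of \(\rho \). The sketch below draws \({\tilde{Z}}\) from 2D real standard Gaussians, checks by Monte Carlo that \({\mathbb {E}}|Z_n|^2=p_n\) and \({\mathbb {E}}(\text {Re\,}Z_n)^2=p_n/2\), and confirms that the operator norm of \(\sqrt{\rho /2}\), which is the Lipschitz constant of this linear map, equals \(\sqrt{\Vert \rho \Vert /2}\). The eigenvalues and sample size are arbitrary illustrative values.

```python
import numpy as np

rng = np.random.default_rng(4)
p = np.array([0.5, 0.25, 0.15, 0.1])   # eigenvalues p_n of rho (example values)
D, N = p.size, 400_000

# Z~_n: complex mean-zero Gaussians with E|Z~_n|^2 = 2, i.e. real and imaginary
# parts are independent real standard Gaussians (2D of them in total).
Zt = rng.normal(size=(N, D)) + 1j * rng.normal(size=(N, D))
# Z = sqrt(rho/2) Z~ acts componentwise in the eigenbasis of rho.
Z = np.sqrt(p / 2) * Zt

second_moments = np.mean(np.abs(Z) ** 2, axis=0)   # approximates p_n
re_variances = np.var(Z.real, axis=0)              # approximates p_n / 2
print(np.round(second_moments, 3), np.round(re_variances, 3))

# The Lipschitz constant of the linear map sqrt(rho/2) is its operator norm.
S = np.diag(np.sqrt(p / 2))
lipschitz_ok = np.isclose(np.linalg.norm(S, 2), np.sqrt(p.max() / 2))
print(lipschitz_ok)
```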

If \(F:{\mathcal {H}}\rightarrow {\mathbb {R}}\) is Lipschitz with constant \(\eta \), then \(F\circ \sqrt{\rho /2}: {\mathcal {H}}\rightarrow {\mathbb {R}}\) is also Lipschitz, with constant \(\eta \sqrt{\Vert \rho \Vert /2}\), because the operator norm of \(\sqrt{\rho /2}\) equals \(\sqrt{\Vert \rho \Vert /2}\). Regarding this function as a function on \({\mathbb {R}}^{2D}\), an application of Lemma 1 immediately proves the following theorem:

Theorem 7

Let \(\dim {\mathcal {H}}<\infty \), let \(\rho \) be a density matrix on \({\mathcal {H}}\), let Z be a random vector with distribution \({\textrm{G}}(\rho )\), and let \(F:{\mathcal {H}}\rightarrow {\mathbb {R}}\) be a Lipschitz function with Lipschitz constant \(\eta \). Then for every \(\varepsilon >0\),

However, instead of Theorem 7 we will use Theorem 8 below, a similar result for the Gaussian adjusted measure \({\text {GA}}(\rho )\) defined in Sect. 2.2, which has density \(\Vert \psi \Vert ^2\) relative to \({\textrm{G}}(\rho )\). Its proof closely follows the proof of Lévy’s Lemma in [27]; for the convenience of the reader, we provide all the details.
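Since \({\text {GA}}(\rho )\) has density \(\Vert \psi \Vert ^2\) relative to \({\textrm{G}}(\rho )\), expectations under \({\text {GA}}(\rho )\) can be estimated from \({\textrm{G}}(\rho )\)-samples as \({\mathbb {E}}_{{\textrm{G}}}[\Vert Z\Vert ^2F(Z)]\). The density integrates to \({\mathbb {E}}_{{\textrm{G}}}\Vert Z\Vert ^2={{\,\textrm{tr}\,}}\rho =1\), so \({\text {GA}}(\rho )\) is a probability measure. As a test case the sketch uses \(F(\psi )=\Vert \psi \Vert ^2\), for which \({\text {GA}}(\rho )(F)={\mathbb {E}}_{{\textrm{G}}}\Vert Z\Vert ^4=1+{{\,\textrm{tr}\,}}\rho ^2\), because \({\mathbb {E}}|Z_n|^4=2p_n^2\) for complex Gaussians. The helper name and the values of \(p_n\) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(5)
p = np.array([0.4, 0.3, 0.2, 0.1])
N = 500_000
Z = np.sqrt(p / 2) * (rng.normal(size=(N, p.size)) + 1j * rng.normal(size=(N, p.size)))
norm_sq = np.sum(np.abs(Z) ** 2, axis=1)

# The density ||psi||^2 relative to G(rho) integrates to tr(rho) = 1.
total_mass = norm_sq.mean()


def ga_expectation(F_values, norm_sq):
    """Estimate GA(rho)(F) = E_G[ ||Z||^2 F(Z) ] from G(rho)-samples."""
    return np.mean(norm_sq * F_values)


# F(psi) = ||psi||^2, for which GA(rho)(F) = E_G ||Z||^4 = 1 + tr(rho^2).
estimate = ga_expectation(norm_sq, norm_sq)
exact = 1 + np.sum(p ** 2)
print(total_mass, estimate, exact)
```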

Theorem 8

Let \(\dim {\mathcal {H}}<\infty \), let \(\rho \) be a density matrix on \({\mathcal {H}}\), let Z be a random vector with distribution \({\text {GA}}(\rho )\), and let \(F:{\mathcal {H}}\rightarrow {\mathbb {R}}\) be a Lipschitz function with Lipschitz constant \(\eta \). Then for every \(\varepsilon >0\),

Proof

We identify \({\mathcal {H}}\) with \({\mathbb {C}}^D\) by means of the ONB \((|n\rangle )_{n=1\ldots D}\). Let \(\varphi : {\mathbb {R}}\rightarrow {\mathbb {R}}\) be a convex function and let \({\tilde{Z}}=({\tilde{Z}}_1,\dots ,{\tilde{Z}}_D)\) be a vector with the same distribution as Z but independent of it. With the help of Jensen’s inequality and Hölder’s inequality, we find that

where we use the notation F(Z) and \(F(\psi )\) interchangeably. We can write \(Z_n = \text {Re\,}Z_n + i \text {Im\,}Z_n\) where \(\text {Re\,}Z_n\) and \(\text {Im\,}Z_n\) are independent real-valued Gaussian random variables with mean 0 and variance \(p_n/2\). Since \({\mathbb {E}}|\text {Re\,}Z_n|^2 = p_n/2\) and \({\mathbb {E}}|\text {Re\,}Z_n|^4 = 3p_n^2/4\), we obtain

and therefore

We identify Z with the vector \(X:=(\text {Re\,}Z_1,\text {Im\,}Z_1,\text {Re\,}Z_2,\dots ,\text {Re\,}Z_D, \text {Im\,}Z_D)\) of real Gaussian random variables and similarly \({\tilde{Z}}\) with \(Y:= (\text {Re\,}{\tilde{Z}}_1,\text {Im\,}{\tilde{Z}}_1,\text {Re\,} {\tilde{Z}}_2,\dots ,\text {Re\,}{\tilde{Z}}_D, \text {Im\,}{\tilde{Z}}_D)\). For each \(0\le \theta \le \frac{\pi }{2}\) set \(X_\theta = X \sin \theta + Y \cos \theta \). The joint distribution of X and Y is the multivariate normal distribution with mean vector 0 and covariance matrix \(\textrm{diag}(p_1,p_1,\dots ,p_D,p_D,p_1,p_1,\dots ,p_D,p_D)/2\). One easily sees that it coincides with the joint distribution of \(X_\theta \) and \(\frac{\textrm{d}}{\textrm{d}\theta } X_\theta = X \cos \theta - Y \sin \theta \): linear combinations of independent Gaussian random variables are jointly Gaussian, so it suffices to compare the mean vectors and covariance matrices, which are easily computed.
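The covariance computation behind the rotation argument can be made explicit. The map \((X,Y)\mapsto (X_\theta ,\frac{\textrm{d}}{\textrm{d}\theta }X_\theta )\) is linear with block matrix \(R=\begin{pmatrix}\sin \theta \, I & \cos \theta \, I\\ \cos \theta \, I & -\sin \theta \, I\end{pmatrix}\), and since \(\sin ^2\theta +\cos ^2\theta =1\) it preserves the block-diagonal covariance. The sketch below verifies \(R\Sigma R^{T}=\Sigma \) exactly (no sampling) for several angles; together with mean zero and joint Gaussianity, this identifies the two distributions. The values of \(p_n\) are arbitrary.

```python
import numpy as np


def joint_covariance_after_rotation(p, theta):
    """Covariance of (X_theta, dX_theta/dtheta) when X, Y are independent with
    covariance diag(p_1, p_1, ..., p_D, p_D) / 2 (real and imaginary parts)."""
    sigma = np.diag(np.repeat(p, 2) / 2)            # covariance of X (and of Y)
    zero = np.zeros_like(sigma)
    big = np.block([[sigma, zero], [zero, sigma]])  # covariance of (X, Y)
    I = np.eye(sigma.shape[0])
    # Linear map (X, Y) -> (X sin t + Y cos t, X cos t - Y sin t)
    R = np.block([[np.sin(theta) * I, np.cos(theta) * I],
                  [np.cos(theta) * I, -np.sin(theta) * I]])
    return R @ big @ R.T, big


p = np.array([0.5, 0.3, 0.2])
checks = []
for theta in np.linspace(0, np.pi / 2, 7):
    rotated, original = joint_covariance_after_rotation(p, theta)
    checks.append(bool(np.allclose(rotated, original)))
print(all(checks))
```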

Since F can be approximated uniformly by continuously differentiable functions with the same Lipschitz constant (e.g., by convolution with a smooth mollifier), we can assume without loss of generality that F is continuously differentiable.

Let us now assume that \(\varphi \) is non-negative. Then \(\varphi ^2\) is also convex, and with the help of Jensen’s inequality we find that

where in the last step we used Fubini’s theorem and the fact that the joint distribution of \(X_\theta \) and \(\frac{\textrm{d}}{\textrm{d}\theta }X_\theta \) is the same as the joint distribution of X and Y.

Let \(\lambda \in {\mathbb {R}}\) and set \(\varphi (x)=\exp (\lambda x)\). Then, we get

Altogether we obtain

By Markov’s inequality, we find that

Since \(\lambda \in {\mathbb {R}}\) was arbitrary, we can minimize the right-hand side over \(\lambda \). The minimum is attained at \(\lambda _{\min } = 4\varepsilon /(\pi ^2 \Vert \rho \Vert \eta ^2)\) and inserting this value in (98c) finally yields (91). \(\square \)
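The optimization over \(\lambda \) is elementary calculus. For the following check we assume, as our reading rather than a quotation of (98c), that the \(\lambda \)-dependent exponent has the form \(-\lambda \varepsilon +\lambda ^2\pi ^2\Vert \rho \Vert \eta ^2/8\), the quadratic whose minimizer is the stated \(\lambda _{\min }\). A brute-force grid search reproduces the closed form and the resulting exponent \(-2\varepsilon ^2/(\pi ^2\Vert \rho \Vert \eta ^2)\). The function name and parameter values are hypothetical.

```python
import numpy as np


def exponent(lam, eps, rho_norm, eta):
    """Assumed exponent -lam*eps + lam^2 * pi^2 * ||rho|| * eta^2 / 8."""
    return -lam * eps + lam ** 2 * np.pi ** 2 * rho_norm * eta ** 2 / 8


eps, rho_norm, eta = 0.3, 0.01, 2.0
lam_min = 4 * eps / (np.pi ** 2 * rho_norm * eta ** 2)

# Brute-force check on a fine grid around the closed-form minimizer
grid = np.linspace(0, 3 * lam_min, 300_001)
lam_grid = grid[np.argmin(exponent(grid, eps, rho_norm, eta))]
minimizer_ok = np.isclose(lam_grid, lam_min, rtol=1e-4)

# Value at the minimizer: -2 eps^2 / (pi^2 ||rho|| eta^2)
value_ok = np.isclose(exponent(lam_min, eps, rho_norm, eta),
                      -2 * eps ** 2 / (np.pi ** 2 * rho_norm * eta ** 2))
print(minimizer_ok, value_ok)
```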

The last ingredient we need for the proof of Theorem 2 is the following lemma:

Lemma 2

For all \(r>0\) it holds that

Proof

With the help of Hölder’s inequality, we find that

Note that in the third line we used (93) and that \(\sum _n p_n =1\). We can write

where the \({\tilde{Z}}_n\) are independent complex standard Gaussian random variables. For a random variable Y, let \(M_Y(t) = {\mathbb {E}}(e^{tY})\) denote its moment generating function. The Chernoff bound states that for any \(a\in {\mathbb {R}}\),

Here, we thus obtain

We compute

Next note that \(2(\text {Re\,}{\tilde{Z}}_n)^2\) and \(2(\text {Im\,}{\tilde{Z}}_n)^2\) are chi-squared distributed random variables with one degree of freedom and that the moment generating function of a random variable Y with distribution \(\chi ^2_1\) is given by

Therefore,

and this implies

where we chose \(s=\Vert \rho \Vert ^{-1}\) in the last line. Because of

we find that

Inserting this into (100c) finishes the proof. \(\square \)
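The Chernoff step can be illustrated on the lower tail of \(\Vert Z\Vert ^2\) for \(Z\sim {\textrm{G}}(\rho )\); we do not reproduce the exact statement of Lemma 2 here. Writing \(\Vert Z\Vert ^2=\sum _n (p_n/2)(\chi ^2_{1}+\chi ^{2\prime }_{1})\) with independent \(\chi ^2_1\) variables, the moment generating function \((1-2t)^{-1/2}\) gives \({\mathbb {E}}\,e^{-s\Vert Z\Vert ^2}=\prod _n(1+sp_n)^{-1}\), and hence \({\mathbb {P}}(\Vert Z\Vert ^2\le a)\le e^{sa}\prod _n(1+sp_n)^{-1}\) for \(s>0\). The sketch checks the \(\chi ^2_1\) moment generating function by Monte Carlo and compares the bound, with \(s=\Vert \rho \Vert ^{-1}\) as in the text, to an empirical tail. The threshold a and the flat spectrum are hypothetical choices.

```python
import numpy as np


def chi2_1_mgf(t):
    """Moment generating function of a chi-squared variable with one degree of freedom (t < 1/2)."""
    return (1 - 2 * t) ** -0.5


def chernoff_lower_tail(p, a, s):
    """Bound P(||Z||^2 <= a) <= e^{s a} E[e^{-s ||Z||^2}] for Z ~ G(diag(p)), s > 0,
    using E[e^{-s ||Z||^2}] = prod_n chi2_1_mgf(-s p_n / 2)^2 = prod_n (1 + s p_n)^{-1}."""
    p = np.asarray(p, dtype=float)
    return np.exp(s * a) * np.prod(chi2_1_mgf(-s * p / 2) ** 2)


rng = np.random.default_rng(6)
# Monte Carlo check of the chi^2_1 MGF at t = 0.2
Y = rng.chisquare(1, size=1_000_000)
mgf_mc = np.mean(np.exp(0.2 * Y))

# Lower tail of ||Z||^2 for a flat spectrum with ||rho|| = 1/20
p = np.full(20, 1 / 20)
a = 0.5
Z = np.sqrt(p / 2) * (rng.normal(size=(100_000, 20)) + 1j * rng.normal(size=(100_000, 20)))
empirical = np.mean(np.sum(np.abs(Z) ** 2, axis=1) <= a)
bound = chernoff_lower_tail(p, a, s=1 / p.max())   # s = ||rho||^{-1}
print(mgf_mc, chi2_1_mgf(0.2), empirical, bound)
```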

Proof of Theorem 2

We first assume that \(D=\dim {\mathcal {H}}<\infty \). Without loss of generality we can assume that \({\textrm{GAP}}(\rho )(f)=0\). Since f is continuous, the unit sphere \({\mathbb {S}}({\mathcal {H}})\) is connected, and the \({\textrm{GAP}}(\rho )\)-average of f vanishes, f takes both non-negative and non-positive values, so there exists a \(\varphi \in {\mathbb {S}}({\mathcal {H}})\) such that \(f(\varphi )=0\). This implies for all \({\tilde{\varphi }} \in {\mathbb {S}}({\mathcal {H}})\) that

where we used in the last step that the distance (in the spherical metric) between two points on the unit sphere is bounded by \(\pi \). Thus f is bounded by \(\pi \eta \).

Let \(0<r<1\) and define \({\tilde{f}}:{\mathcal {H}}\rightarrow {\mathbb {R}}\) by

For every \(\psi ,\varphi \in {\mathcal {H}}\) such that \(\Vert \psi \Vert ,\Vert \varphi \Vert \ge r\) we find that

where the last inequality follows from

Thus, \({\tilde{f}}\) is Lipschitz continuous with constant \(\eta /r\) on \(\{\psi \in {\mathcal {H}}: \Vert \psi \Vert \ge r\}\).

Now let \(\psi ,\varphi \in {\mathcal {H}}\) such that \(\Vert \psi \Vert ,\Vert \varphi \Vert \le r\) and \(\Vert \varphi \Vert \le \Vert \psi \Vert \). Then, we obtain

where the last inequality follows from

Due to the symmetry of the argument in \(\psi \) and \(\varphi \), one finds the same estimate in the case that \(\Vert \psi \Vert \le \Vert \varphi \Vert \) and we conclude that \({\tilde{f}}\) is Lipschitz continuous with constant \(5\eta /r\) on \(\{\psi \in {\mathcal {H}}: \Vert \psi \Vert \le r\}\).

Finally, let \(\psi ,\varphi \in {\mathcal {H}}\) such that \(\Vert \psi \Vert \le r\) and \(\Vert \varphi \Vert \ge r\) and define \(\gamma : [0,1] \rightarrow {\mathcal {H}}, \gamma (t)=(1-t)\psi + t\varphi \). Then, there exists a \(t_0\in [0,1]\) such that \(\Vert \gamma (t_0)\Vert =r\) and

Therefore, we find that

We conclude that \({\tilde{f}}\) is Lipschitz continuous with Lipschitz constant \(6\eta /r\).

Using the definition of \({\tilde{f}}\), we find that

By Lemma 2, the second term can be bounded by \(\sqrt{2}\exp \bigl(-(1/2-r^2)/(2\Vert \rho \Vert )\bigr)\). In order to estimate the first term in (119e), we first derive an upper bound for \(|{\text {GA}}(\rho )({\tilde{f}})|\). We compute

and so we obtain, again by Lemma 2,

This implies with the help of Theorem 8 that

provided that \(\varepsilon > 5\eta \exp \bigl(-(1/2-r^2)/(2\Vert \rho \Vert )\bigr)\). Altogether we arrive at

Choosing \(r=1/2\) we obtain

We can assume without loss of generality that

because otherwise the left-hand side of (30) vanishes: indeed, the distance between any two points on the sphere is at most \(\pi \), so their f-values can differ by at most \(\pi \eta \), and for the same reason \(f(\psi )\) can differ from its average relative to any measure by at most \(\pi \eta \).

Likewise, we can assume without loss of generality that

because otherwise the right-hand side of (30) is greater than 1: indeed, for \(\varepsilon < 10\eta \exp \bigl(-1/(8\Vert \rho \Vert )\bigr)\),

As a consequence of (126) and (127), the first exponent in (125) is greater than the second, so

by (127). This finishes the proof in the finite-dimensional case.

Now suppose that \({\mathcal {H}}\) has a countably infinite ONB. Consider the density matrices \(\rho _n\) defined as in (68). Let \(\varepsilon '>0\). Because the set

is open in \({\mathbb {S}}({\mathcal {H}})\), it follows from the weak convergence of the measures \({\textrm{GAP}}(\rho _n)\) to \({\textrm{GAP}}(\rho )\) by the “portmanteau theorem” [3, Thm. 2.1] that

for some sufficiently large \(N\in {\mathbb {N}}\) with \(N\ge n_0\). Recall that \(n_0\in {\mathbb {N}}\) is chosen such that \(\Vert \rho _n\Vert =\Vert \rho \Vert \) for all \(n\ge n_0\). Let \({\mathcal {H}}_N:= \textrm{span}\{|n\rangle : n=1,\dots ,N\}\). Then, since \(\rho _N\) is a density matrix on \({\mathcal {H}}_N\), \({\textrm{GAP}}(\rho _N)\) is concentrated on the unit sphere of \({\mathcal {H}}_N\), and the restriction of f to this sphere is still Lipschitz with constant \(\eta \), it follows from what we have already proven in the finite-dimensional case that

where \(C=\frac{1}{288\pi ^2}\). Noting that \(\Vert \rho _N\Vert =\Vert \rho \Vert \) and that \(\varepsilon '>0\) was arbitrary, we can altogether conclude that

i.e., the bound (130) remains true in the infinite-dimensional setting. \(\square \)
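The content of Theorem 2 can be seen in a small experiment: for \(f(\psi )=\langle \psi |A|\psi \rangle \) with \(\Vert A\Vert \le 1\) (Lipschitz constant at most 2), the spread of f under \({\textrm{GAP}}(\rho )\) shrinks as \(\Vert \rho \Vert \) decreases, while its mean stays at \({{\,\textrm{tr}\,}}(A\rho )\). The sketch samples \({\textrm{GAP}}(\rho )\) by reweighting \({\textrm{G}}(\rho )\)-samples with \(\Vert Z\Vert ^2\) and normalizing, as in Sect. 2.2. The "thermal-like" spectrum \(p_n\propto e^{-n/T}\), the diagonal observable \(A=\textrm{diag}(\cos n)\), and all names are hypothetical choices; the experiment illustrates the trend only and does not test the constant C.

```python
import numpy as np


def gap_spread(p, a_diag, n_samples, rng):
    """Weighted mean and standard deviation of f(psi) = <psi|A|psi> under GAP(rho),
    for rho = diag(p) and A = diag(a_diag), using G(rho)-samples reweighted by ||Z||^2."""
    Z = np.sqrt(p / 2) * (rng.normal(size=(n_samples, p.size))
                          + 1j * rng.normal(size=(n_samples, p.size)))
    norm_sq = np.sum(np.abs(Z) ** 2, axis=1)
    f = (np.abs(Z) ** 2 @ a_diag) / norm_sq    # <psi|A|psi> with psi = Z/||Z||
    w = norm_sq / norm_sq.sum()                # GA(rho) importance weights
    mean = np.sum(w * f)
    return mean, np.sqrt(np.sum(w * (f - mean) ** 2))


def thermal_weights(D, T):
    """Hypothetical spectrum p_n proportional to exp(-n / T), n = 0..D-1."""
    p = np.exp(-np.arange(D) / T)
    return p / p.sum()


rng = np.random.default_rng(7)
D = 500
a = np.cos(np.arange(D))                       # A = diag(cos n), so ||A|| <= 1
results = {}
for T in (2.0, 200.0):
    p = thermal_weights(D, T)
    mean, std = gap_spread(p, a, 5000, rng)
    results[T] = {"rho_norm": p.max(), "mean": mean, "std": std, "tr_A_rho": p @ a}
    print(f"||rho|| = {p.max():.4f}  mean = {mean:.4f}  tr(A rho) = {p @ a:.4f}  std = {std:.4f}")
```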

4.5 Proofs of Corollaries 2, 3, 4

Proof of Corollary 2

As already noted before Corollary 2, the first inequality follows immediately from Corollary 1 by substituting \(U_t^* B U_t\) for B.
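The substitution works because \(\langle \psi _t|B|\psi _t\rangle =\langle \psi |U_t^*BU_t|\psi \rangle \) for \(\psi _t=U_t\psi \), and because \(\Vert U_t^*BU_t\Vert =\Vert B\Vert \), so any bound stated in terms of \(\Vert B\Vert \) carries over unchanged. The sketch checks both facts for \(U_t=e^{-iHt}\), built from the spectral decomposition of a randomly generated Hermitian H; the Hamiltonian, the observable, the dimension and t are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(8)
d, t = 6, 1.3


def random_hermitian(d, rng):
    M = rng.normal(size=(d, d)) + 1j * rng.normal(size=(d, d))
    return (M + M.conj().T) / 2


H = random_hermitian(d, rng)   # hypothetical Hamiltonian
B = random_hermitian(d, rng)   # observable

# U_t = exp(-i H t) via the spectral decomposition H = V diag(evals) V^*
evals, V = np.linalg.eigh(H)
U_t = V @ np.diag(np.exp(-1j * evals * t)) @ V.conj().T

psi = rng.normal(size=d) + 1j * rng.normal(size=d)
psi /= np.linalg.norm(psi)
psi_t = U_t @ psi

B_t = U_t.conj().T @ B @ U_t
unitary_ok = np.allclose(U_t.conj().T @ U_t, np.eye(d))
pictures_agree = np.isclose(psi_t.conj() @ B @ psi_t, psi.conj() @ B_t @ psi)
norm_preserved = np.isclose(np.linalg.norm(B_t, 2), np.linalg.norm(B, 2))
print(unitary_ok, pictures_agree, norm_preserved)
```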

For the proof of the second inequality, we define

Then, for every \(s> 0\) we find that

i.e., with \(\delta := e^{s\varepsilon }\),

This implies

With \(a:=\frac{{\tilde{C}}}{\Vert B\Vert ^2\Vert \rho \Vert s^2}\) and assuming that \(a\le 1\), we obtain

An application of Jensen’s inequality and Fubini’s theorem shows that

With the help of Markov’s inequality, we find that