Abstract

We introduce a dually-weighted multi-matrix model that for a suitable choice of weights reproduce two-dimensional Causal Dynamical Triangulations (CDT) coupled to the Ising model. When Ising degrees of freedom are removed, this model corresponds to the CDT-matrix model introduced by Benedetti and Henson (Phys Lett B 678:222, 2009). We present exact as well as approximate results for the Gaussian averages of characters of a Hermitian matrix A and \(A^2\) for a given representation and establish the present limitations that prevent us to solve the model analytically. This sets the stage for the formulation of more sophisticated matter models coupled to two-dimensional CDT as dually weighted multi-matrix models providing a complementary view to the standard simplicial formulation of CDT-matter models.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The search for a consistent theory of quantum gravity that is valid up to arbitrarily short length scales remains an open challenge in theoretical physics. The direct quantization of the gravitational field described by general relativity using the standard perturbative field-theoretic techniques renders a perturbatively non-renormalizable quantum field theory (QFT), see, e.g., [1,2,3]. In the history of the construction of quantum theories of the fundamental interactions, perturbative non-renormalizability of a given well-grounded theoretical model was circumvented by the replacement of such a model by a more fundamental description either by the addition of new fields or by the discovery that the degrees of freedom to be quantized were different ones. As such, successful models were constructed and the fulfillment of perturbative renormalizability became a paradigmatic prescription. Such an attitude could be taken in the case of quantum gravity. Perhaps, the Einstein-Hilbert action is not the correct starting point and just corresponds to an effective description of classical gravity at sufficiently low energies and the fundamental degrees of freedom are completely different. Alternatively, one could add more fields that restore a well-behaved ultraviolet behavior for the would-be quantum theory of gravity. In all those cases, there is an insistence in keeping the tools of perturbative renormalization as the guiding principle in the construction of the underlying QFT. However, as it is well-known nowadays, perturbative renormalizability is neither necessary nor sufficient to define a theory that is valid across arbitrary length scales. The existence of Landau poles in scalar field theories in four dimensions or in quantum electrodynamics shows that perturbatively renormalizable theories might require the introduction of a ultraviolet cutoff at some finite energy scale in order to be non-trivial, see, e.g., [4,5,6,7]. In practice, however, such Landau poles live at scales far beyond our experimental capabilities and therefore are harmless. Similarly, by introducing a cutoff for the standard QFT of general relativity, one has a perfectly well-defined framework to compute quantum corrections to gravitational processes at energy scales below the cutoff [8,9,10]. Hence, constructing a fundamental theory of quantum gravity, as opposed to such an effective field theory does not necessarily require the construction of a perturbatively renormalizable QFT. One possibility to define a QFT that is valid up to arbitrarily high energies is by demanding that the coupling constants that enter physical observables reach a renormalization group fixed point. When this happens, the theory attains a scale-invariant regime and the ultraviolet cutoff can be safely removed. A paradigmatic example is quantum chromodynamics which is asymptotically free, i.e., the theory reaches the free (or Gaussian) fixed point in the ultraviolet,Footnote 1 see [11, 12]. In this case, since the coupling becomes sufficiently small at high energies, perturbation theory is applicable and can probe the existence of such a fixed point. Alternatively, the theory could have an interacting (or non-Gaussian) fixed point in the ultraviolet. In this situation, the values of the couplings at the fixed point might not be small and perturbation theory is not sufficient to probe it. When such a non-trivial fixed point exists,Footnote 2 we say that the QFT is asymptotically safe. In [13], Weinberg put forward the conjecture that quantum gravity could be realized as an asymptotically safe QFT. Evidence for that was obtained through the so-called 2 + \(\epsilon \) expansion [14,15,16,17]. However, due to its non-perturbative nature, the search for such a fixed point needs different techniques with different systematics in order to make its finding a robust claim. For many years, this conjecture was not investigated systematically due to the lack of suitable technical tools. However, during the 1990s, this situation suffered a twist due to the development of two major frameworks: the use of functional or non-perturbative renormalization group equations to quantum gravity, see, e.g., [18] and reviews [19,20,21,22,23,24] and references therein and the proper understanding of how to put quantum gravity on a lattice in a consistent fashion with causality constraints, see [25, 26] and reviews [27, 28] and references therein. Those computational techniques can be viewed as complementary approaches to the search for a non-perturbative ultraviolet fixed point for quantum gravity and it has become usual to call the search for the fixed point using continuum techniques as the asymptotic safety approach while the search for a suitable continuum limit of discretized path integrals with causal constraints as the Causal Dynamical Triangulations (CDT) program. Both lines of research have provided a large body of evidence for a suitable continuum limit in four dimensions. We refer the reader to the references in the reviews above mentioned for a comprehensive list of the most recent results in those fields.Footnote 3

Despite the tremendous progress achieved in the physically motivated four-dimensional case, the employment of non-perturbative techniques require substantial truncations and approximations that still need a lot of effort to produce quantitatively reliable results for those universal quantities, i.e., observables that could tell the existence of the continuum limit. Yet a fruitful playground is to consider two-dimensional quantum gravity. In this case, the interplay between continuum and discrete descriptions of the path integral of quantum gravity has witnessed a significant progress over the last decades ranging from the development of novel techniques to perform explicit calculations to rigorous mathematical results. We refer to, e.g., [34, 35] for reviews on the topic. In particular, the Dynamical Triangulation program was born in two dimensions within an Euclidean setting while its continuum counterpart is encoded in Liouville quantum gravity. In particular, a successful implementation of the discretization of the path integral of quantum gravity and thus a sum over geometries and possibly topologies can be achieved by purely combinatorial means with the use of the so-called matrix models [34, 36,37,38,39,40,41,42,43]. Triangles can be taken as dual representations of cubic-matrix vertices and Feynman diagrams of such a model are simply triangulations of two-dimensional surfaces. The perturbative expansion of matrix-models partition functions can be organized in powers of 1/N with N standing for the size of the Hermitian random matrices and each power of such an expansion is associated with a specific genus g. Such a remarkable expansion allows for the investigation of continuum limits, e.g., where just spherical topologies contribute (the so-called planar limit) or where all topologies are taken into account (double-scaling limit). Thanks to the rich combinatorial framework behind the theory of random matrices, very powerful results could be established in such a discrete-to-continuum approach. The two-dimensional case is simple enough so that one can still solve the Dynamical Triangulation model with no need to make use of random matrices, but such a perspective opens up the possibility to think about higher-dimensional discrete approaches as theories of higher-order tensors. So far, the higher dimensional tensor models were not successful in producing a suitable continuum limit in higher dimensions. Primarily, the main obstacle was the lack of the analogue of the 1/N-expansion in matrix models. Such a difficulty was lifted thanks to the works of Gurau that introduced the so-called colored tensor models [44,45,46,47]. Yet the continuum limits obtained from the colored tensor models up to date do not feature an extended-geometry-like phase very much in agreement with the simulations performed for Dynamical Triangulations in dimensionality greater than two [48,49,50,51,52], see however [53,54,55,56,57,58,59,60,61]. This suggests that one could inspect more intricate continuum limits from those models, see, e.g., the realization of such a reasoning following the functional renormalization group [62,63,64,65], or that a restriction to the configuration space should be implemented. This is precisely what is achieved with CDT which displays a robust body of evidence that such a suitable continuum limit exists in higher dimensions [25, 66,67,68,69,70,71,72,73,74,75]. Nevertheless, the analogue of CDT in a tensor-model language is still unknown. In this paper, we explore a matrix model that would be such a realization in two dimensions introduced by Benedetti and Henson in [76]. In essence, this model implements the (non-local) global time foliation of CDT together with the avoidance of spatial topology change by means of local constraints. This is achieved by the so-called dually weighted matrix models introduced in [77,78,79,80]. On top of the CDT configurations generated by the Benedetti-Henson model, we will introduce degreess of freedom arising from the Ising model, i.e., we develop a (multi-)matrix model of the Ising model coupled to CDT in two dimensions. Alternatively, the combinatorial models can be enriched with more structure such as group-theoretic data giving birth to the so-called Group Field Theories which may or may not include a Lorentzian setting [81,82,83,84]. Thus, two-dimensional quantum gravity might be too simplistic for some purposes but certainly is an inspirational source for more sophisticated candidates in higher dimensions.

Moreover, a realistic description of our Universe must accommodate matter degrees of freedom whose fluctuations can affect the existence of a suitable continuum limit. Hence, it is conceivable that our comprehension of the potential mechanism that drives quantum gravity asymptotically safe (irrespective of the approach followed here) depends on a simultaneous treatment of gravitational as well as matter degrees of freedom. This ambitious goal has witnessed significant progress over the last two decades both from the point of view of the functional renormalization group as well as from lattice simulations and encouraging results were found [23, 28, 85,86,87]. In the present work we provide several results regarding a simple but still quite rich gravity-matter model: two-dimensional CDT coupled to the Ising model. There are several motivations to look at this model but we emphasize the following: Implementing the causality constraint at the level of a matrix model is a first step towards its implementation in tensor models. As it is known, such a model does not have a known exact solution and developing appropriate tools to deal with it is mandatory. The inclusion of the Ising model produces a multi-matrix model which is interesting on its own. Clearly, having the Ising model as the matter component is tremendously far from the rich structure of the Standard Model of Particle Physics coupled to quantum gravity. Yet the purpose here is to explore the impact of the dynamical (and causal) lattice to the Ising model and vice-versa. We emphasize that matrix models for Euclidean two-dimensional gravity coupled to the Ising model were investigated in the past [88,89,90,91] as well as its higher-dimensional version [92,93,94]. Moreover, the coupling between the Ising model and CDT was also investigated since the birth of two-dimensional CDT, see, e.g., [95,96,97,98,99,100,101]. We establish several properties of such a CDT-Ising multi-matrix model paving the way for a future complete solution or making its structure well-grounded for studies using non-perturbative tools such as the functional renormalization group methodsFootnote 4 or numerical simulations.

This paper is organized as follows: In Sect. 2, we will briefly review CDT-like matrix model as a dually weighted matrix model as introduced by Benedetti and Henson [76]. In Sect. 3, we develop an analysis concerning topologies which admit global foliations, specifically within the Benedetti-Henson pure CDT-like matrix model (in Sect. 2) and further on non-orientable symmetric matrix models. In Sect. 4, we discuss briefly the well-known two-matrix models which can be interpreted as Ising model on random two-surfaces. In Sect. 5, we present our CDT-matrix model coupled to the Ising model by combining the models in Sects. 2 and 4. In Sect. 6, we study the properties of the matrices \(C_m\) which, in the case of \(m=2\), is responsible for generating the global foliation structure on the dual triangulation graphs of the CDT-like matrix models. Theorem 6.1 is one of the main results of this paper. In Sect. 7, we present the character expansions for partition functions of the two models, i.e., pure CDT-like matrix model by Benedetti-Henson and CDT-like matrix model coupled to Ising model presented in Sects. 2 and 5 respectively. We base our further analyses on these character expansions. In Sect. 8, we analyze the character expansion of the partition function for the latter model (i.e., CDT-like matrix model coupled to Ising model) and present an expression in Proposition 8.1 in terms of Clebsch-Gordan coefficients using Weingarten calculus. In Sect. 9, we evaluate Gaussian averages of characters for a given representation r, \(\langle \chi _r(A) \rangle _0\) and \(\langle \chi _r(A^2) \rangle _0\) for CDT-like matrix models presented in Sect. 2, using various techniques, e.g., Schur-Weyl duality, theory of symmetric group algebra, etc. Theorem 9.6, one of our main results, is related to Brauer algebra. In Sect. 10, we conclude by revisiting and summarizing the various results reported in this work.

2 Causal Dynamical Triangulations as a Matrix Model: A Short Review

Let us describe via matrix model the Causal Dynamical Triangulation (CDT) in two dimensions. The CDT was first worked and solved in [108] as a lattice model. In [76], a definition through a matrix model was given, and we follow this definition. Let us also refer to this model as pureFootnote 5 CDT-like matrix model of Benedetti-Henson. The CDT-like matrix model graphs can be represented as ribbon graphs just like other matrix models. In fact, these CDT-like matrix models fall into a class of so called dually weighted models. In [78], Kazakov, Staudacher, and Wynter introduced a so called dually weighted graphs where different weights are assigned for vertices with different coordination numbers and for faces with different lengths. As one will see below in elaborating the features of such CDT-like matrix models, our models generate the dually weighted graphs only with vertices with coordination number 3, and with faces with arbitrary lengths however, of particular type (restricting to only having two edges of a certain type, which we will name timelike).

Definition 2.1

A prime ribbon graph is a set of topological discs and topological rectangles satisfying the following properties: no two rectangles or two discs intersect; each rectangle has exactly one pair of opposite sides that are contained in disc’s circumferences, and this is the only intersection between rectangles and discs.

Definition 2.2

A rectangle’s edge that is not contained in a disc is called a strand.

Definition 2.3

A ribbon graph is the boundary of the union of the discs and rectangles of a prime ribbon graph.

Additionally, one can represent such ribbon graphs as multigraphs. Given a ribbon graph, we assign a vertex to each disc and an edge to each rectangle. If a disc and a rectangle are connected, i.e., they intersect, then respective vertex and edge are connected. See Fig. 1 for an example. We may also call a ribbon graph’s discs and rectangles as vertices and edges, respectively. We define a face as a closed curve formed by strands, and we carry the notion of faces from the ribbon graph to the multigraph. An example of a face of a ribbon graph is shown in Fig. 1a.

The CDT-like matrix model ribbon graphs are edge-colored (not proper), partitioned into two sets: spacelike edges and timelike edges. Together with the defining properties of a ribbon graph and the edge coloring, the CDT ribbon graph also has the following defining properties (CDT conditions):

-

1.

Every vertex is three valent and has exactly one timelike edge and two spacelike edges incident to it.

-

2.

Every face has either two timelike edges or none.

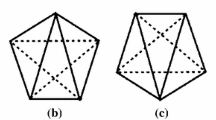

Notice that the faces can have any number of spacelike edges. Figure 2 shows a representation of the vertices and edges. A triangulation is obtained with the dual graph; the dual graph is defined by associating a vertex with each face of the graph, and if two faces share an edge, their respective vertices are connected by an edge (see Fig. 2b). This also leads to each vertex in the graph being associated with a face in the dual graph, and since all vertices are three valent, all faces in the dual graph are triangles. In Fig. 3, we show an example of a sphere triangulation that satisfies the CDT conditions given above.

An example of a CDT triangulation graph (dual of a corresponding CDT ribbon graph). spacelike edges in the ribbon graph are dual to timelike edges in the dual triangulation graph, and vice-versa. The vertices of the dual triangulation graph can have two or zero spacelike edges (in red) and any number of timelike edges (in blue) (color figure online)

A matrix model that generates ribbon graphs, satisfying these defining CDT conditions above can be constructed by associating a Hermitian matrix A with spacelike edges of a ribbon graph and a Hermitian matrix B to timelike edges of a ribbon graph. An auxiliary partition function for such a pure CDT-like matrix model is given by

The action (the negative of the exponent in (1)) of the model has the term \(-Ng A^2B\), responsible for the property that every vertex have exactly two spacelike edges and one timelike edge. A matrix \(C_2\) is introduced in the quadratic term of B, and it satisfies, for a positive integer p and in the large N limit,

This condition on \(C_2\) is what sets the property that faces have only two or zero timelike edges. In Sect. 6, we study this matrix \(C_2\) in more detail. Notice that this constraint (2) on \(C_2\) is overdetermined, once \(p > N\). Therefore, the condition (2) on \(C_2\) can only be imposed in the large N limit. The Gaussian average of a function f(A, B) is defined by

and we find the following properties:

Since the condition on \(C_2\) in (2) can only be set at large N, the partition function for CDT is only be obtained in the large N limit,

3 Properties of the Benedetti–Henson Model: Allowed Topologies and (Non-)orientability

The two defining properties, albeit being local, put a global constraint on the topologies that our ribbon graphs (and in their dual triangulation) may represent. Studying the properties of the elements of the graph enables us to determine the values of Euler characteristic which are allowed. The analysis can be done either with the ribbon graphs or with the triangulations dual to them. Here, let us work on the ribbon graphs.

Let us study some properties satisfied by the faces. A face that has no timelike edges is simply a set of connected spacelike edges. See Fig. 4b. For a face that has two timelike edges, since the vertices can only have one timelike edge, a timelike edge in a face is always a neighbor of two spacelike edges of the face. See Fig. 4a. This way, the edges of a given face of the latter type form a sequence composed by a timelike edge, a several spacelike edges, a timelike edge, a several spacelike edges, and then connecting back to the first timelike edge. Thus, this type of face has two disjoint sets of connected spacelike edges, and these two sets are separated by timelike edges. Let us call these the two sets as spacelike boundaries of a face.

Definition 3.1

A strip is defined as a set that contains faces which are sequentially connected by timelike edges, and also contains the edges and the vertices of these faces. Each face in a strip is glued by timelike edges to either two faces in the same strip (it can also glue to itself) or none, and one timelike edge is shared exactly by two faces (these two faces are possibly the same face). If a spacelike edge has multiplicity two in one face or if it belongs to two faces of the same strip, consider those two appearances as distinct elements of a strip.

Definition 3.2

A boundary of a strip is defined as a set of spacelike edges that are sequentially connected by vertices.

Definition 3.3

A interior of a strip is defined as the set of faces and timelike edges in a strip.

Definition 3.4

A regular strip is a strip that has two boundaries (see Fig. 6a).

Definition 3.5

A singular strip is defined as a strip composed by a face with no timelike edges (see Fig. 6b).

Definition 3.6

A Möbius strip is a non-singular strip that has one boundary (see Fig. 6c).

Some important properties satisfied by CDT ribbon graphs are:

-

1.

Every face belongs to a strip and is in only one strip.

-

2.

A strip has only spacelike edges in its boundary, and all spacelike edges are in boundaries of strips.

-

3.

Since there is a finite number of faces, there is a finite number of strips.

-

4.

Every non-singular strip is a periodic sequence which alternates between faces and timelike edges; and every boundary of a strip is a periodic sequence which alternates between spacelike edges and vertices.

-

5.

A strip has only one or two boundaries.

-

6.

A boundary of a strip either bounds one other strip or connects the strip to itself.

Properties 1 to 4 are easy to see, but let us take a closer look at property 5. The singular strip is just a face with only spacelike edges, thus as a strip it has only one boundary, as shown in Fig. 6b.

For a regular strip or a Möbius strip, we follow the steps below:

-

Consider one of its faces, call it \(F_1\). \(F_1\) has two spacelike boundaries, call them \(A_1\) and \(B_1\).

-

Consider also a face, call it \(F_2\), that is connected to the previously considered face \(F_1\) above by a timelike edge. \(F_2\) also has two spacelike boundaries. Call them \(A_2\) and \(B_2\). (See Fig. 5a.)

-

These two boundaries are each connected to a boundary of the previous face by the vertices the faces share. Together, the two faces create two extended spacelike boundaries, \(A_1\cup A_2\) and \(B_1\cup B_2\).

-

This process is inductively repeated to k connected faces in the strip, and the number of spacelike boundaries is kept two, \(A_1\cup A_2\cup \cdots \cup A_k\), and \(B_1\cup B_2\cup \cdots \cup B_k\).

-

Call l the number of faces in the strip. Considering property 4 of CDT ribbon graphs, when the first and the last faces are connected, there are two possibilities:

-

1.

The two extended boundaries close into \(A_1\cup A_2\cup \cdots \cup A_l\) and \(B_1\cup B_2\cup \cdots \cup B_l\), forming a regular strip, as shown in Fig. 6a.

-

2.

The extended boundaries connect to each other, \(A_1\cup A_2\cup \cdots \cup A_l\cup B_1\cup B_2\cup \cdots \cup B_l\), creating only one closed boundary, forming the Möbius strip as shown in Fig. 6c.

-

1.

We can obtain the property 6 in a similar manner as we did property 5 above:

-

Given a spacelike edge \(e_1\), consider the two faces (that might be the same) that share \(e_1\). Call these faces \(f_1\) and \(g_1\).

-

Consider the strips (that might be the same) that contain the faces \(f_1\) and \(g_1\) respectively. Call them s and t respectively.

-

Consider now a spacelike edge, call it \(e_2\) that has a common vertex with the previous spacelike edge \(e_1\). Call \(f_2\) and \(g_2\) the two faces that \(e_2\) belongs to.

-

The faces \(f_2\) and \(g_2\) either satisfy \(f_2\in s\) and \(g_2\in t\), or satisfy \(f_2\in t\) and \(g_2\in s\). Without the loss of generality, assume the first case. (See Fig. 5b.)

-

The two neighboring spacelike edges have the same two strips at its sides, s and t.

-

Inductively, when considering the entire closed cycle of spacelike edges, either \(s \ne t\) or \(s=t\). Therefore, there are either two or one strip at its sides.

Consequently, similarly to the way faces are connected by the timelike edges to form a strip, the entire graph is construted by strips connected by spacelike edges. Together with property 6, since the graph is connected, every strip is connected to each other by a sequence of strips. Therefore, one can say that a graph is a sequence of strips, and there are three types of strips defined in 3.5, 3.4, and 3.6.

Possible topologies

Let us now take a look at the Euler characteristics of the CDT ribbon graphs. The elements of these ribbon graph can be decomposed into two disjoint sets: boundaries of strips (defined in 3.2) and interiors of strips (defined in 3.3). Setting V as the number of vertices, F the number of faces, \(E_S\) the number of spacelike edges, \(E_T\) the number of timelike edges, and E the total number of edges, the Euler characteristics can be written as:

with \(\chi _B=V-E_S\) being the contribution to the Euler characteristics by the boundaries of strips, and \(\chi _I=F-E_T\) being the contribution by the interiors of strips. It is always true that \(\chi _B=0\), since every vertex has two spacelike edges, and every edge connects two vertices, thus \(2V=2E_S\). For \(\chi _I\), we need to differentiate between different types of strips. For the regular strip and the Möbius strip, since their faces have two timelike edges and every edge is shared by two faces, these strips have the property \(2F=2E_T\), thus \(\chi _I=0\). As for a singular strip, since it is composed of only one face and there are no timelike edges, \(\chi _I=1\). Therefore, the Euler characteristic equals the number of singular strips:

where \(F_s\) is the number of faces with no timelike edges, therefore it is equal to the number of singular strips.

From properties 5 and 6 we also get an important fact: The strips can be ordered by sequencing them according to having a common boundary. This sequencing induces global foliation, which is one of the fundamental properties of the definition of CDT [108]. This sequence may be periodic (and therefore called infinite), just as the strips themselves are a periodic sequence of faces. The sequence also may be finite, starting with a singular or Möbius strip, as they can only share a boundary with one other strip, and may end also with a singular or Möbius strip. The singular strip, then, can only appear at most twice. Therefore, since the Euler characteristics equals the number of singular strips, the Euler characteristics can only be 0, 1 and 2.

-

Considering only orientable surfacesFootnote 6, we know that the Euler characteristics can be expressed as \(\chi =2-2g\), where g is the genus of the surface. The possibilities follow:

-

1.

When the graph has two singular strips, we find that the genus is 0, thus the surface is a sphere. This graph has the sequence of strips starting with a singular strip, having some regular strips in the middle, and then ending in another singular strip.

-

2.

When the graph has one singular strip, there is no solution since there is no orientable surface with \(\chi =1\) (corresponding to \(g=1/2\)).

-

3.

Lastly, when the graph has no singular strips, the genus is 1, therefore the surface is a torus, composed of a periodic sequence of regular strips.

-

1.

-

When we consider nonorientable surfacesFootnote 7, the Euler characteristics now assumes the form

$$\begin{aligned} \chi =2-(2g+c), \end{aligned}$$(10)where c is the number of cross caps.

-

1.

For a graph with two singular strips, (10) can only be satisfied for \(g=0\) and \(c=0\). Then, the topology is of a sphere.

-

2.

When the graph has only one singular strip, the equation (10) can now be satisfied with \(g=0\) and \(c=1\), thus we have the topology of the projective plane.

-

3.

Lastly, when the graph has no singular strips, the equation (10) has two solutions. One solution is \(g=1\) with \(c=0\), implying it is a torus. The other solution is \(g=0\) with \(c=2\), showing the possibility of the topology of the Klein bottle.

-

1.

Existence and construction of topologies

We have shown above that these topologies are allowed, but it does not yet mean that they indeed exist. For this, let us define orientation of faces and strips:

Definition 3.7

Orientation of a face: The edges of a face form a closed curve and thus have the usual notion of orientation. Two faces that share an edge are said to have a compatible orientation if the directions of the closed curves are opposite in the common edge. We extend this notion to any two faces by transitivity.

Definition 3.8

Orientation of a strip: A regular strip admits a compatible orientation among all of its faces and we define the orientation of a strip as the orientation of one of its faces. A Möbius strip does not admit a compatible orientation among all of its faces. Two strips are said to have a compatible orientation if a face from one strip has compatible orientation to a face of the other strip.

In the following, we argue that indeed all the possible topologies discussed above can exist by a simple construction of putting strips together to form the sequence of strips.

-

1.

Sphere: Start the sequence with a singular strip, follow it by a sequence of regular strips, and end the sequence with another singular strip. See Fig. 7.

-

2.

Torus: Given a finite sequence of regular strips, glue the first strip to the last in way that the orientation is compatible. See Fig. 8.

-

3.

Projective plane: (i) Start the sequence with a singular strip, follow it by a sequence of regular strips (or none), and end it with a Möbius strip. See Fig. 9a. (ii) Alternatively, start the sequence with a singular strip, follow it by a sequence of regular strips, and glue the last boundary to itself as a cross-cap. See Fig. 9b. The last boundary must have even length for this to be possible.

-

4.

Klein bottle: (i) Start the sequence with a Möbius strip, follow it by a sequence of regular strips (or none) and end it with another Möbius strip. See Fig. 10a. (ii) Alternatively, we can change one or both of these Möbius strips for (a) cross-cap(s). See Fig. 10b, c. (iii) Furthermore, we can also have a periodic sequence of regular strips, and we glue the first strip to the last one in a way that their orientations are not compatible. See Fig. 10d.

Construction of a sphere graph. The empty circle represents a singular strip, the circle with two lines inside represents a regular strip, and the two non-intersecting lines connecting two circles represent a boundary that keeps the orientation compatible. This example shows four regular strips, but any non-negative integer is possible

Two different ways of constructing the projective plane. The empty circle represents a singular strip, the circle with two lines represents a regular strip, the two non-intersecting lines connecting two circles represent a boundary that keeps the orientation compatible, and the line going in and out of the same circle represents a cross-cap. Both examples show four regular strips, but any non-negative integer is possible

Four different ways of constructing the Klein bottle. The circle with two lines represents a regular strip, the circle with one line represents a Möbius strip, and the two non-intersecting lines connecting two circles represent a boundary that keeps the orientation compatible. These examples are constructed with four regular strips, but any number of them is possible

4 Two-Matrix Model Representing Ising Model on Random Two-Surfaces

Here, let us introduce two-matrix models which have been widely studied starting with Itzykson and Zuber [109], followed by many others [110,111,112,113].

Consider the partition function,

which is a generating function of connected ribbon graphs. Here \(M_+\) and \(M_-\) are \(N\times N\) Hermitian matrices and for a Hermitian matrix M, the measure applied is \(dM=\prod _{i<j}\textrm{Re}(M_{ij}) \textrm{Im}(M_{ij}) \prod _i M_{ii}\). For couplings \(\gamma \) and g,

is said to be the action of the system [89, 114]. One can interpret that the spins are located on the vertices (of Feynman ribbon graphs generated perturbatively by this action) represented by the terms in the action \(M_+^3\) and \(M_-^3\). Figure 11 shows how these elements appear in the ribbon graph, and Fig. 12 shows an example of a ribbon graph represented as a graph.

An example of a part of an two-matrix model graph described by the action (12). The solid lines represent the ribbon graph. The dashed lines represent the dual triangulation. The coloring of the vertices represent the spin

We can interpret g as a cosmological constant, since it is related to the volume of the universe. In the ribbon graph, the volume appears as the number of vertices, while in the dual graph, the triangulation, it is the number of faces. The coupling \(\gamma \) can be related to the Ising temperature T by \(\gamma =e^{-T^{-1}}\). For a Gaussian model with partition function

with a Hermitian matrix A, consider the average

where 0 denotes the Gaussian average. Wick’s theorem says that the computation of this function can be decomposed by taking the products of the propagators related to each edge in our Feynman graphs, where the propagators are simply the mean values taken for \(g=0\),

and

Diagonalizing the kinetic term in (12) takes us to another interesting version of the model, where now one can interpret that the spins are localized on the faces of the matrix model graphs (i.e., ribbon graphs) rather than on the vertices of the matrix model graphs. We achieve this by a few change of variables. First change to the Hermitian matrices K and L given by

so that

and with a last substitution to the couplings \(\gamma '\), \(g'\), and Hermitian matrices U and V given by

we obtain

which is the Ising model where the spins, therefore ± signs, are assigned for each face of Feynman ribbon graphs. Here, U (resp. V) can be thought of as being associated with a ribbon graph edge shared by ribbon graph faces of the same (resp. opposite) parity. For a third degree vertex, since there is a face between each neighboring edges, there are three faces (some may be the same) around it. Either the three faces have the same parity, hence the term \(U^3\), or one of the three has a parity different from the other two, hence the term \(3 \, U \, V^2\). For topological reasons, this interpretation is only valid for planar graphs. For example, consider the torus graph shown in Fig. 13. This graph can be generated by (20) if we do not restrict ourselves to planar graphs. Edges associated with U are in green, and edges associated to V are in orange. In this configuration, the placement of the green edges around almost all faces would imply that all faces have the same spin, while the existence of orange edges would imply that some faces have opposite spins, thus a contradiction.

Note however, that the action (20) after simply the change of variables should contain exactly the same information as the original action (12). The apparent restriction discussed above is only associated with the interpretation that we employed for U and V representing the same or opposite parities respectively.

5 Ising Model Coupled to CDT via Matrix Model

We present a matrix model that describes Ising model on a two-dimensional manifold which has global foliation, by combining the ideas of the two models (CDT-like matrix model and Ising model) we introduced above in Sects. 2 and 4. We would like to define an action of the matrix model which generates ribbon graphs satisfying desired properties of both CDT and Ising model. Hence, we require four types of half-edges to be considered: spacelike half-edges associated with spin-up vertices, spacelike half-edges associated with spin-down vertices, timelike half-edges associated with spin-up vertices, and timelike half-edges associated with spin-down vertices. Therefore, we introduce four matrices, \(A_+\), \(A_-\), \(B_+\), and \(B_-\) so that they induce notions of the space, time, and parity as desired. Each of these matrices is represented by a specific type of half-edges. The following conditions are sufficient in order to implement the desired properties we described above: To impose the existence of spacelike edges connecting equal parities, we set

Similarly, for timelike edges connecting equal parities, we impose

As for spacelike edges connecting unequal parities, we write

Lastly, for timelike edges connecting unequal parities, we put

In order to make sure that only the four types of edges above (21), (22), (23), and (24) exist, we set

The presence of spin-up and spin-down vertices is respectively imposed by introducing the terms \(A_+^2B_+\) and \(A_-^2B_-\) in the action. Therefore,

The partition function is then given by

We can also use similar transformations to (17) and (19), that lead to (20), to arrive at the version where the spins are on the faces. After the transformation the action is

where we may interpret that \(U_s\) generates spacelike edges between faces of the same spin, \(U_t\) timelike edges between faces of the same spin, \(V_s\) spacelike edges between faces of opposite spins, and \(V_t\) timelike edges between faces of opposite spins.

6 Properties of the Matrices \(C_m\)

Let us study the properties of \(C_m\) and find its expression explicitly.

Theorem 6.1

Let \(C_m\) be a GL(N) matrix that satisfies \(\textrm{Tr}[(C_m)^q]=N\delta _{m,q}\) for \(q=1,...,N\), with \(N>0\) and \(N=0 \;\textrm{mod}\; m\). Its eigenvalues can be approximated by

Proof

We show (29) by finding the characteristic polynomial of \(C_m\). Recall [115] that, if the characteristic polynomial of a GL(N) matrix C is given by

then the coefficients \(\pi _k\) are related to the power traces of C, \(t_k=\textrm{Tr}\,(C^k)\), by Girard-Newton formulas

where

Evaluating for \(C=C_m\), we have \(\pi _k=0\) for \(k\ne 0\) mod m, and for \(k=mr\), with r an integer satisfying \(0\le r\le k/m\), we get

Therefore, imposing that N is divisible by m,

where \(s_n\) is the nth partial exponential sum polynomial, and we only need to investigate its zeros because we are interested in eigenvalues \(\lambda _{ts}\). In the large n limit, the polynomials \(s_n(n z)\) have been shown [116, 117] to have the roots lying on the curve \(|z e^{1-z}|=1\) with \(|z|\le 1\), and \(\textrm{arg}(z e^{1-z})\) increases monotonically from each zero. The zeroes \(z_t\), \(t=1,...,n\), are approximately given by \(z_t={\tilde{z}}_t(1+{{{O}}}(N^{-1/2}))\), with

Using the principal branch of the Lambert W function, W, defined by \(W^{-1}(z)=z e^z\), we invert (35)

Now, in order to go back to eigenvalues \(\lambda _{ts}\), we use \(n=N/m\) and \(-\lambda _{ts}^{-m}={{{\tilde{z}}}}_t(1+{O}(N^{-1/2}))\) in (36), and

We note that the eigenvalues are discrete, and the set is finite, with N eigenvalues in total. \(\square \)

We let Mathematica solve numerically the zeros of the polynomial (34). We compare those with (37). We show in Fig. 14 and in Fig. 15 a comparison for different values of N for \(C_1\) and \(C_2\).

Eigenvalues of \(C_1\) in their complex plane, with vertical axis being \(\textrm{Im}(\lambda )\) and the horizontal \(\textrm{Re}(\lambda )\). In blue, we plot the numerical values of the zeros of the polynomial \(p_1(\lambda )\) in (34) using Mathematica, and in red we plot the values obtained from (37) for large N (color figure online)

Eigenvalues of \(C_2\). We obtained the approximate curve in green by letting t and s continuous parameters in (37) (color figure online)

Taking the values of the roots of (34) computed by Mathematica and evaluating the power traces \(\textrm{Tr}(C_1)\) and \(\textrm{Tr}(C_2)^2\), we indeed obtain the correct results within the machine error. Now, let us also check the validity of the expression (37), by inserting the values of \(\lambda _{ts}\) back to compute the power traces \(\textrm{Tr}(C_1)\) and \(\textrm{Tr}(C_2)^2\). We found that for \(m \ne q\), we obtain 0 up to certain power (\(q_{max}\)) of \(C_m\), but the value of the power traces quickly increases after we reach \(q_{max}\). For example, \(q_{max}\) is about 20 for \(N = 10000\). We find that the value of \(q_{max}\) also increases as N increases.

Let us consider the resolvent of the matrix \(C_m\)

The resolvent (38) evaluates to

where \(x=t/N\) and \(\rho (\lambda )=dx/d\lambda \) is called the spectral density, and the indices s and t are joined to only t. By equating (38) and (39), we can solve for \(\rho (\lambda )\).

Let us change the variables, \(\alpha =\lambda ^{-1}\);

Assuming \(\gamma '\) is a simple closed curve enclosing \(\mu \), using Cauchy’s theorem we can use that

Equating (40) and (41), we find a solution

which, in terms of \(\lambda \), translates to

At this point, let us check the validity of (37). Taking (37) to the power of m,

to the leading order in large N. Manipulating the expression (44),

where we change variables \(x = t/N\). Taking a derivative with respect to \(\lambda \),

and noting that \(\rho (\lambda ) = dx/d\lambda \), we again obtain the same solution (43). This concludes checking the validity of the solution (37).

Let us go back to (43) and continue solving for the distribution of \(\lambda \). Inserting \(\rho (\lambda ) = dx/d \lambda \), where \(x \in {{\mathbb {R}}}\) with \(0 \le x \le 1\) in (43) and integrating both sides, we obtain

where \(\kappa \) is some integration constant. In (37), \(\kappa \) was determined to be \(\kappa =-e^{-1}\). Therefore, in Figs. 14 and 15 we only had one curve \(\gamma \) each. However, (47) gives us a series of solutions because \(\kappa \) is free. A larger class of solutions means that now we should have many curves representing families of eigenvalues, with each curve corresponding to a particular value of \(\kappa \). The observation of (47) is that, given two possible values of m, \(m_1\) and \(m_2\), their solutions are related by \(\lambda _1^{m_1}=\lambda _2^{m_2}\). Therefore, we can focus on \(m=1\) without losing any essential information. For \(m=1\), we have

Then, each value of \(\kappa \) is associated with a distinct solution for \(C_m\). The eigenvalues of \(C_m\) are represented by a curve \(\gamma _\kappa \). Equation (48) can be inverted with the help of the W Lambert function,

where \(W_b\) is a branch of W and b is an integer we assign to each branch. In Fig. 16, we show a few of the infinitely many branch regions of the W function with \(z=\lambda ^{-1}\). Let us assign \(b=0\) to the branch where the point \(z=0\) belongs to. As is shown in Fig. 16b, only curves around the origin can be closed. These closed curves are the contours associated with the values of \(|\kappa |=|ze^z|\) with \(0\le |\kappa |\le e^{-1}\). The maximum value \(|\kappa | =e^{-1}\) is associated with the branch point of \(W_0\). Our previous solution (45) picked this particular value \(|\kappa | =e^{-1}\). However, our current analysis indicates that all these closed curves around the origin are valid not just \(|\kappa | =e^{-1}\). In the previous solution (45), the condition \(\kappa =-e^{-1}\) appeared because of the polynomials \(s_{N/m}\) in (34). We speculate that the reason for the existence of multiple solutions is related to the fact that the large N limit condition \(\lim _{N\rightarrow \infty }\frac{1}{N}\textrm{Tr}[(C_m)^p]=\delta _{p,m}\) can be approached through different ways. That is, \(\kappa \) may be a function of \(\textrm{Tr}[C_m]\) and \(\textrm{Tr}[(C_m)^m]\), and this function might not have trivial limits. For example, \(\textrm{Tr}[{({\tilde{C}}_m)}^p ]=N \delta _{p,m} +\delta _{p,1}\) has the same large N limit, \(\lim _{N\rightarrow \infty }\frac{1}{N}\textrm{Tr}(({\tilde{C}}_m)^p)=\delta _{p,m}\). The value of \(\kappa \) may vary when changing the condition on \(\textrm{Tr}C_m\).

7 Character Expansion of Partition Function

For a \(N \times N\) matrix M with eigenvalues \(M_k\), where \(k \in {\mathbb {Z}}\) with its range \(1 \le k \le N\) and given a set of N increasing non-negative integers \(\{h\}=\{h_1,...,h_N\}\), the character of this matrix can be given by

where

is the Vandermonde determinant. In general, the set \(\{h\}\) can be used to uniquely label a representation of \(\textrm{GL}(N)\). We have as a result of character expansion [78]

with a summation over all such sets \(\{h\}\) and where, as we use from now on, \(\sim \) indicates equality up to a constant. We separate the set \(\{h\}\) into a set of only even integers \(\{h\}^e\) and of only odd integers \(\{h\}^o\). If the numbers of even and odd \(h_l\) are equal or the numbers of even is one more than the number of odd, the coefficient \(c_{\{h\}}\) is given by

where, in a similar manner to (51), for a set of complex numbers \(\{m\}=\{m_1,...,m_N\}\), the Vandermonde is defined as

We use \(\lfloor {\cdot }\rfloor \) to represent the floor function. When the condition above is not met, we simply have

The character expansion for the more general case \(e^{\textrm{Tr}M^k}\), for integer \(k\ge 1\) is also known [78].

7.1 Character Expansion for the Pure CDT-Like Matrix Model of Benedetti–Henson

The partition function (1) for the pure CDT-like matrix model due to Benedetti-Henson studied in [76], after integrating out B is given by

By applying the character expansion given in (52) with \(M=\sqrt{\frac{N}{2}g^2}A^2C_2\), we expand the partition function (56),

where \(\#h=\sum _{l=1}^N h_l -\frac{1}{2}N (N-1)\). With the diagonalization \(A=\Omega \Lambda \Omega ^\dagger \), with \(\Omega \in \textrm{U}(N)\) and \(\Lambda \) a diagonal matrix, we preform a change of variables and rewrite

In order to factorize the dependence on \(C_2\), let us use the Schur orthogonality relation,

where \(d_{h}=\Delta (h)/\prod _{i=1}^{N-1}i!\) is the dimension of the representation given by \(\{h\}\). We can then rewrite

now with the integral independent of \(C_2\) as desired, and \(c_{\{h\}}\) is given in (53) or (55), depending on which case \(\{h\}\) satisfies. By changing the variables back to \(A=\Omega \Lambda \Omega ^\dagger \), the integral part of the expression (60) can be written, up to a constant, as

This integral had yet no known general solution in terms of the representation \(\{h\}\). A conjecture was also given in [76]. It was proposed that

where the set of integers \(\{h\}\) has been divided into four sets \(\{h^{(0)}\},...,\{h^{(3)}\}\) according to equivalence modulo 4.

The conjecture stated in (62), given in [76], can be rewritten as

where \(\{h\}^{(\epsilon )}=\{a\in {\{h\}}\;|\; a=\epsilon \;\textrm{mod}\; 4 \}\) and for a set B, we define \(2B=\{2b\,|\,b\in B\}\). \(k_N\) only depends on N, and not on \(\{h\}\). We can test this conjecture by applying it to some representations which we already know the result. Fix \(N=4\). For the trivial representation, which is given by \(\{h\}=\{3,2,1,0\}\), the conjecture (63) tells us that the proportionality factor is \(k_N=1/768\). On the other hand, for the defining representation, which is given by \(\{h\}=\{4,2,1,0\}\), the conjecture tells us that the proportionality factor should be \(k_N=1/(8\cdot 768)\). Therefore, a contradiction.

Furthermore, let us look at representations that have size proportional to N. For example, the kth power determinant representation is one. The representation \(\{h\}\) for which \(\chi _{\{h\}}(A^2)=\textrm{det}(A^{2q})\) is given by \(\{h\}=\{q+N-1,q+N-2,...,q+1,q\}\). Evaluating the average integral (14) for \(f(A)=\textrm{det}(A^{2q})\) we obtain

According to (63), we would have

We then see that \(k_N\) depends on the representation through q, which again goes against the assumption that \(k_N\) only depends on N.

7.2 Character Expansion for CDT-Like Matrix Model Coupled with Ising Model

For CDT-like matrix model coupled with Ising model, the expression is more involved. We write its partition function as

and diagonalize the kinetic part,

where \(U=(A_1+A_2)/\sqrt{2}\) and \(V=(A_1-A_2)/\sqrt{2}\). The character expansion (52) for the latter partition function (67)

where the sum is performed over representations labeled by \(\{h^1\}\) and \(\{h^2\}\), and we defined the integral

Transform \(U=\Omega _1\Lambda _1\Omega _1^\dagger \) and \(V=\Omega _2\Lambda _2\Omega _2^\dagger \), and furthermore, by substituting \(\Omega _2=\Omega _1\Omega \), we have

that allows us to integrate over \(\Omega _1\), however, the orthogonality relation (59) is not enough, but one has to be equipped with a more general expression for Schur orthogonality relation.

8 Unitary Integral of Degree 4 Monomial

A more general way to state the Schur orthogonality relation is that for a compact group G and for matrix elements \(\phi ^\alpha _{ij}(g)\) of an element \(g \in G\), where the upper index indicates the representation and the lower indices indicate the corresponding elements of a given orthogonal basis,

Here, with G as U(N) and for \(\alpha =\beta =h\) by multiplying both sides by \(\phi ^h_{jl}(\Lambda ^2)\) and \(\phi ^h_{ki}(C_2)\) and summing over the repeated lower indices, we recover (59). Let us apply (71) to the unitary integral of (70), by defining the matrices which are independent of \(\Omega _1\) as \(M_1=\Lambda _1^2+\Omega \Lambda _2^2\Omega ^{\dag }\) and \(M_2=\Lambda _1\Omega \Lambda _2\Omega ^{\dag }+\Omega \Lambda _2\Omega ^{\dag }\Lambda _1\). Then, we can factorize the expression

Then, in (70), we have an integral

where \(g =\Omega _1\). This integral has some properties similar to the previous one (71), but has the double amount of matrix elements. This integral is also similar to the type of integral studied with the Weingarten calculus, however, here, more than one representations \(\alpha \) and \(\beta \) appear.

Putting all together, (70) becomes

and with the Theorem 8.1 below, we can evaluate \(I_{ij}\).

Proposition 8.1

Given \(g\in U(N)\) and a representation \(\alpha \), set its matrix elements as \(\phi ^\alpha _{ab}(g)\), where the indices a and b are associated with some chosen basis. The following holds true for a Haar integral:

Proof

In order to apply the Weingarten calculus [118] to the left hand side of (75), we need to know a basis for the invariant space of \(\alpha \,\otimes \,\beta \,\otimes \,{\bar{\alpha }}\,\otimes \,{\bar{\beta }}\). Indeed, finding the basis for the invariant space amounts to identifying the trivial representations \(1^{\oplus l_1^{\alpha \beta {{\bar{\alpha }}} {{\bar{\beta }}}}}\), upon decomposing the tensor product representation into irreducible representations,

where \(l_r^{\alpha \beta {{\bar{\alpha }}}{{\bar{\beta }}}}\) is the number of times the representation r appears in the expansion of the tensor product, known as Littlewood-Richardson coefficients. One can decompose separately the products \(\alpha \,\otimes \,\beta \) and \({\bar{\alpha }} \,\otimes \,{\bar{\beta }}\), i.e.,

Then, using \(l_{{\bar{r}}}^{{\bar{\alpha }}{\bar{\beta }}}=l_{r}^{\alpha \beta }\), we can write

but the representation orthogonality relation tells us that for two irreducible representations r and s, \(l_1^{r{\bar{s}}}=\delta _{r s}\). Therefore, we notice that the second big sum contains no trivial representations and the first contains one trivial representation for each product. In the end, we arrive at

Therefore, we conclude

Remark that there are infinite number of representations to sum over, however, only finite number of them have nonzero \(l_r^{\alpha \beta }\). Equation (80) is important to us because it allows to verify that the set we find later in (93) for the invariant space is indeed a basis because it contains as many elements as the dimension of the invariant space.

Let us analyze a basis for the invariant space \(\bigoplus _{r} 1^{\oplus (l_r^{\alpha \beta })^2}\), since it allows us to evaluate \(I_{ij}\) in (73). First, let us define a basis for the vector space of the tensor product representation \(\alpha \,\otimes \,\beta \,\otimes \,{\bar{\alpha }}\,\otimes \,{\bar{\beta }}\),

where a, \({{\bar{a}}}\), b, \({{\bar{b}}}\), and i are integers and i is a relabelling of indices \(a b {{\bar{a}}} {{\bar{b}}}\) with \(1\le i \le (d_\alpha )^2(d_\beta )^2\), and \(|e_a\rangle \), \(|e_b\rangle \), \(\langle e_{{\bar{a}}}|\) and \(\langle e_{{\bar{b}}}|\), with \(1\le a\le d_\alpha \), \(1\le {{\bar{a}}} \le d_\alpha \), \(1\le b \le d_\beta \), \(1\le {{\bar{b}}} \le d_\beta \), are respectively a basis for representations \(\alpha \), \(\beta \), \({{\bar{\alpha }}}\) and \({{\bar{\beta }}}\). Writing \(T_\mu \) as a basis for this invariant space, where \(1 \le \mu \le l_1^{\alpha \beta {{\bar{\alpha }}} {{\bar{\beta }}}}\), let us define the A matrix

and the Weingarten matrix w through the inverse of a matrix

The Weingarten theorem states that (73) can be expressed as

which is a function of T. T being invariant under \(\alpha \,\otimes \,\beta \,\otimes \,{\bar{\alpha }}\,\otimes \,{\bar{\beta }}\) is equivalent to

for every \(M\in \alpha \,\otimes \,\beta \). Furthermore, there exists a \(V \in U(d_\alpha \, d_\beta )\) such that, for every M, V transforms M into a block diagonal matrix,

where,

with \(n=\sum _r l_r^{\alpha \beta }\). For every representation r appearing in the decomposition of \(\alpha \,\otimes \,\beta \) as in (77), \(M_r\) is M in the specific representation r and appears as a block element in the diagonal of \(M_D\), appearing as many times as it appears in the decomposition (77), i.e., \(l_r^{\alpha \beta }\) times. Now, transform T by a change of basis by V

which implies

\(T_D\) can be found by focusing on the sector of a representation r that appears in the decomposition, where a sector is the block consisting of \(l_r^{\alpha \beta }\) number of block diagonal \(M_r\) matrices. Evaluating

and using properties of block matrix multiplication, each section can act independently. As an illustration, suppose that r appears twice, i.e., \(l_r^{\alpha \beta }=2\),

Then, denoting \(\mathbb {1}_r\) as the identity in the representation r, it is easy to see that

satisfies (89) for any complex numbers \(\gamma _{11}\), \(\gamma _{12}\), \(\gamma _{21}\), and \(\gamma _{22}\). In general, (92) can be written as

where for any representation r, that appears \(l_r^{\alpha \beta }\) times, we can construct \((l_r^{\alpha \beta })^2\) independent matrices \(T_r^{mn}\) by putting an identity matrix in an element of the \(l_r^{\alpha \beta }\times l_r^{\alpha \beta }\) matrix block corresponding to the representation r. If we consider all representations, we find as many independent invariants as (80), thus we have a complete basis for the invariant space. Using \(T_r^{mn}T_s^{pq}=\delta _{rst}\delta ^{np}T^{mq}_t\) and \({T_r^{mn}}^\dag =T_r^{nm}\) (where \(\delta ^{r s t} = 1\) for \(r = s = t\) and zero otherwise), the graham matrix elements are given by

where repeated indices are summed over. Consequently, \(T_r^{mn}\) is an orthogonal basis and

where \(d_r\) is the dimension of the representation r. Recalling (83), we can readily invert (94) to obtain

We also need to calculate the A matrix defined in (82), but from (88) we see that

Therefore, the A matrix is given by

where in the last expression, we insert

Notice that we can also write, now being more explicit on the representation,

where \(c^{rk}_{abp}\) are the Clebsch-Gordan coefficients, and the representations \(\alpha \) and \(\beta \) are written explicitly on the left but not on the right. We also introduce a new index k, an integer which satisfies \(1\le k \le l_r^{\alpha \beta }\) to distinguish the same representation r which appears \(l_r^{\alpha \beta }\) times in the decomposition of \(\alpha \otimes \beta \). Then, we can compute (98)

and using the coefficients for \(T_r^{mn}\) in this basis, \(\langle e^{sk}_p|T_r^{mn}|e^{\bar{s}\bar{k}}_{\bar{p}}\rangle =\delta ^{s\bar{s} r}\delta _{p \bar{p}} \delta ^{km}\delta ^{\bar{k} n}\), we further compute

where in the last expression there is a sum only over p. However, remark that there is no known formula for these coefficients for a general representation, therefore one cannot compute explicitly \(A_{i \mu }\) with the expression above (102). Nevertheless, inserting (102) in the expression (84), we get

Performing some of the sums, we find that \(I_{ij}\) in (73) can be expressed in terms of the (unknown) Clebsch-Gordan coefficients as

\(\square \)

We comment that the result in (104) is expressed in terms of the Clebsch-Gordan coefficients which are base dependent. The possibility of choosing different bases shows that there is a degree of freedom in the summation. This freedom is manifest in the summation over the indices p and q. Further simplification could be achieved by expressing (104) in a base invariant way.

9 Evaluation of \(\langle \chi _r(A) \rangle _0\) and \(\langle \chi _r(A^2) \rangle _0\)

In this section, we study and present several computational results on the large N limit of the Hermitian Gaussian matrix model averages \(\langle \chi _r (A)\rangle _0\) and \(\langle \chi _r (A^2)\rangle _0\) as the latter appeared as a key quantity as in (61) and the former can be an useful exercise and preparation for computing the latter. The Gaussian average of a character of A for a given representation \(\{h\}\) is given by

where \(Z_0\) is an Gaussian integral of A;

Similarly to (105), the Gaussian average of a character of \(A^2\) for a given representation, given by

For \(\{h\}^e=\{a\in \{h\}|\,a\;\mathrm {is\;even}\}\) and \(\{h\}^o=\{a\in \{h\}|\,a\;\mathrm {is\;odd}\}\), (105) evaluates to [119]

where \(n=\sum _i{h_i}-N(N-1)/2\) is the size of the representation, and \(d_h\) is the dimension of the representation h, which can be evaluated as

We also used

as presented in more detail in the appendix of [78].

Lemma 9.1

If there exists a GL(N) matrix M such that Di Francesco-Itzykson integral (105) can be computed as the character of M;

then the integral of our interest (61) can be solved as:

Proof

The function \(\chi _{\{h\}}(A^2)\) is a class function of A, therefore there exists an expansion

This expansion is finite, since it is the same as the present in the identity \(\chi _{\{h\}}(A^2)=\chi _{\textrm{Sym}^2\{h\}}(A)-\chi _{\textrm{Alt}^2\{h\}}(A)\). Thus,

We note that the exchange between the summation and the integral is legal, since the summation is finite. \(\square \)

Lemma 9.1 suggests that we shall study the expression in (108) in order to see if we can find such a M as in (111). Let us present a more general normalized version of (105);

The generalized normalized Di Francesco-Itzykson integral (115) can be expressed as a product of characters

where we used (110) and \(Z(B) = \int dA\;e^{-N\textrm{Tr}\frac{1}{2} AB^{-1}AB^{-1}}\) is a normalization factor. Remark that on the right hand side of (116), the only part that depends on the choice of B is \(\chi _{\{h\}}(B)\).

If we set \(B = \mathbb {1}\) in (116), we indeed recover (108). By setting \(B=C_1\) in (116), we find that

Since \((C_2)^2=C_1\) in the large N limit, by summing over all representations as in (113) on both sides of (117) we obtain

or performing change of variables,

which can also be written as

where \(\sim \) denotes equal up to a constant in N, and \(\langle \rangle _0\) denotes the Gaussian average over A as defined in (14). This result is nearly the expression we aim at computing in (61), except for the extra \(C_1\) in the left hand side.

On this note, let us now investigate further what the condition in (108) tells us about the matrix M in (111).

Proposition 9.2

Given a representation r of \(\textrm{GL}(N)\) defined through the set of shifted weights \({h}_i\), \(i=1,...N,\), consider \(\chi _r\) the character in that representation r. If M is a GL(N) matrix that satisfies

then, for a positive integer q,

Proof

From (50) we can deduce that

by using a representation \(\{h\}\) where \(\chi _{\{h\}}(M)\ne 0\) and the modified representations \(\{h^{p,k}\}\) where the shifted weights are related to the ones of \(\{h\}\) by \(h_i^{p,k}=h_i+p\,\delta _{k,i}\), for \(i=1,...,N\).

Using (109) in (108) we can deduce that, for some constant \(c_N\),

We assume N and p even. For i even, we set \(h_i=p (N-i)/2+1\), therefore \(h_i\) is always odd for ieven. For i odd, we set \(h_i=p {(N-i-1)}/{2}+2\), therefore \(h_i\) is always even for i odd. Consequently, for i even, \(h_i^{p,k}=p {(N-i)}/{2}+1+p\,\delta _{k,i}\), making \(h_i^{p,k}\) odd and, for i odd, \(h_i^{p,k}=p (N-i-1)/2+2+p\,\delta _{k,i}\), making \(h_i^{p,k}\) even. Notice that \(\{h\}^e=\{a+1\,|\,a\in \{h\}^o\}\), thus the Vandermonde determinants \(\Delta (\{h\}^e)\) and \(\Delta (\{h\}^o)\) are

where the indices i and j are always even. Because of this, by setting \(i=2\,m\) and \(j=2n\) we find

We then get that

which will be necessary for the normalization in (123). The terms involving double factorials in (124) are:

The size of the representation is

This way the character of M in the representation \(\{h\}\) is

Now, for the representations \(\{{h^{p,k}}\}\), set \(\{{h^{p,k}}\}^e=\{a\in \{h^{p,k}\}|\,a\;\mathrm {is\;even}\}\) and \(\{{h^{p,k}}\}^o=\{a\in \{h^{p,k}\}|\,a\;\mathrm {is\;odd}\}\). For k odd, \(\{{h^{p,k}}\}^e=\{{h}\}^e\), then

For k even, \(\{{h^{p,k}}\}^o=\{{h}\}^o\), then

Another consequence for k even is

Setting \(i=2\,m\), \(j=2n\), and \(k=2r\) we find

Let us emphasize that \(n>1\) since \(n>m\ge 1\). For \(k=2\),

For \(k> 2\), k even, since the factor for \(m=r-1\) and \(n=r\) in the product is zero,

It is also true that \(\{{h^{p,k}}\}^o=\{a-1|\;a\in \{{h^{p,k+1}}\}^e\}\), then \(\Delta (\{{h^{p,1}}\}^o)=\Delta (\{{h^{p,2}}\}^e)\), therefore

For k odd with \(k>2\), since \(\Delta (\{{h^{p,k}}\}^o)=\Delta (\{{h^{p,k+1}}\}^e)\),

Using (131), (132), (135), (136), (137) and (138) we get

The consequence of (139) in the sum in (123) is that only the terms for \(k=1\) and \(k=2\) are nonzero. For \(k=1\) or \(k=2\), the double factorial terms in (124) is

The size of the representation is

This way the character of M in the representation \(\{{h^{p,k}}\}\) is, for \(k=1\) or \(k=2\),

and zero for \(k\ge 3\). Hence, inserting (130) and (142) in (123), we find that

We evaluate a large N limit by keeping only the largest order in N,

Setting the integer \(q=p/2\), we get the trace property

\(\square \)

In principle, this information should be enough to find M up to matrix conjugation, similarly to what is done in (30) for the matrix \(C_m\). We leave this possibility of computation of M to future studies.

Let us now evaluate \(\langle \chi _r(A^2)\rangle _0\) for a finite N. In particular, in Theorem 9.3, the integral over matrices has been evaluated and replaced with a summation over integers.

Theorem 9.3

Let A be a random variable for a \(N \times N\) Hermitian matrix under the Gaussian measure. Given a representation r of \(\textrm{GL}(N)\) defined through the set of shifted weights \( h_i \), \(i=1,...N,\) and considering \(\chi _r\) the character in that representation r the following holds true:

Proof

For a Hermitian matrix A of size N whose eigenvalues are denoted by x, we write X as a diagonal matrix whose diagonal elements are the eigenvalues x’s. For a given representation r, we are interested in:

where

See [120, 121], and [122] for the change of variables from A to X. We use de Bruijn’s formula [123],

so we can reduce the integration over the N variables on the left hand side to a Pfaffian of an integral over two variables on the right hand side. \(d\mu (x)\) sets the measure on x. Here, it is a Gaussian measure \(d\mu (x)=e^{-\frac{N}{2}x^2}\). Since [124]

let us first rewrite (147),

An important thing to notice is that when \(x_i+x_j=0\), the Pfaffian has a divergence that is controlled by the zero in the determinant. However, when we use the de Bruijn’s formula the determinant is removed. Therefore, it is best to deal with this divergence already here. We introduce a damping with a small constant \(\epsilon \) to prevent a divergence from appearing. We take care of this by multiplying the elements in the Pfaffian by the term \(\frac{(x_i+x_j)^2}{(x_i+x_j)^2+\epsilon ^2}\). We regularize (151) by defining, for \(\epsilon >0\),

We expand the Pfaffian in (152), a polynomial, into its monomials. We do the same for the Pfaffian in (151). By comparing the absolute values of these integrands, term by term, we see that the ones from (152) are bounded by the ones from (151). Therefore, the dominated convergence theorem [125] tells us that

Now we can use the de Bruijn’s formula in (149) and we obtain the damped average

We remark that at this point, if we take the \(\epsilon \rightarrow 0\) limit, the integral in (154) turns into a principal value integral. Let us define

Then, according to (154),

We can simplify the N dependence by changing variables \(x,y\rightarrow N^{-\frac{1}{2}}x,N^{-\frac{1}{2}}y\),

Introducing sources \(\alpha \) and \(\beta \) through the terms \(\alpha x\) and \(\beta y\) in the exponential, we can turn the factors \(x^{2h_i}\) and \(y^{2h_j}\) into derivatives:

Let us also define

thus

By changing integration variables to \(u=(x+y)/\sqrt{2}\) and \(v=(x-y)/\sqrt{2}\), and also defining \(a=(\alpha +\beta )/\sqrt{2}\) and \(b=(\alpha -\beta )/\sqrt{2}\), it becomes

Here we notice that the integration over u and over v are independent, hence we can separate them,

where we define the integrals

The first integral is easily evaluated as

The second integral we solve by introducing another integral,

At this point we can evaluate the \(\epsilon \rightarrow 0\) limit due to the dominated convergence theorem. Thus, by defining

we find that

The integral over v in (167) is a simple Gaussian, and we can evaluate it to find

This function is, up to normalization conventions, the imaginary error function. Joining (164) and (168) in (162), we deduce that

Going back through (160) and (156), we obtain

and by using that

and that \(a=(\alpha +\beta )/\sqrt{2}\) and \(b=(\alpha -\beta )/\sqrt{2}\), we can evaluate the derivatives in (170) to find that

which becomes (146) by using (148), Pfaffian properties and that \((-1)^k=-(-1)^u\) for \(k+u\) odd and \((-1)^k=(-1)^u\) for \(k+u\) even. \(\square \)

We wish to remark here about the quantity \( \langle \chi _r(A^2)\rangle _0 \) which we computed by taking the limit of \(\epsilon \rightarrow 0\) in the expression \( \langle \chi _r(A^2)\rangle _\epsilon \) given in (156). For a given (in other words, finite) N, the expression we obtained in (172) is valid and therefore Theorem 9.3. However, if we pay attention the expression (157), we notice that N comes with \(\epsilon \). Then, one notices that once we send N to infinity, this procedure becomes sensitive to the ratio in which \(N \rightarrow \infty \) and \(\epsilon \rightarrow 0\) are sent.

One naturally wonders if the expression obtained in Theorem 9.3 may become simpler in large N limit. Let us explore this possibility in Proposition 9.4.

Proposition 9.4

Let A be a random variable for a \(N \times N\) Hermitian matrix under the Gaussian measure. Given a representation r of \(\textrm{GL}(N)\) defined through the set of normalized shifted weights \({{{\tilde{h}}}}_i = h_i/N\), \(i=1,...N,\). Consider \(\chi _r\) the character in that representation r. Defining

which satisfies \( \langle \chi _r(A^2)\rangle _0=\lim _{\epsilon \rightarrow 0}\langle \chi _r(A^2)\rangle _\epsilon \), the following holds true:

where \({\tilde{c}}_N=N^{\frac{N^2}{2}}(2\pi )^{-\frac{N}{2}}(\prod _{k=0}^{N-1}k!)^{-1}\).

Proof

We apply the saddle point method to compute (154). Let us first rescale integers \(h_i\) to \({{{\tilde{h}}}}_i = h_i/N\);

and prepare in a form proper to use the saddle point approximation,

Laplace’s method of integration [126] can be expressed as

where X is a set of d real variables, f is a twice-differentiable complex valued function of X, H(f) is the Hessian matrix of f, the points \(X_0\) are local maxima of f, and g is a complex valued function of X nonzero at \(X_0\). Comparing (176) and (177) we identify

Computing the saddle point equations \(x-2 {{{\tilde{h}}}}_i x^{-1}=0\) and \(y-2 {{{\tilde{h}}}}_j y^{-1}=0\), we find four saddle points:

Therefore, we see that the term associated with f is the same for any \(X_0\) and is

and therefore, for the Hessian,

Additionally,

Joining everything, we obtain the saddle point approximate for the regularized character of \(A^2\) as

Using some Pfaffian properties we can simplify the expression (183) and get

Applying the limit \(N\rightarrow \infty \), we find

\(\square \)

One may wish to apply \(\epsilon \rightarrow 0\) limit to (185) and if the limits \(\epsilon \rightarrow 0\) and \(N \rightarrow \infty \) commute, then, (185) can be manipulated to say that in the large N limit, \({\langle \chi _r(A^2)\rangle _0}\) is equal to

We computed \(\langle \chi _r(A^2)\rangle _0\) using (146) and (186) for \(1 \le N \le 30\) (we should note here that if we use integral form for \(\langle \chi _r(A^2)\rangle _0\), then even \(N=6\) the computation becomes slow) using Mathematica for trivial, defining, and determinant representations. However, for the above values of N that we tested, the values of the expression (186) do not converge to the values computed using the expression achieved in (146).

Now, we present first a new way of computing \(\langle \chi _R(A)\rangle _0\) below in Proposition 9.5, whose similar technique is used to compute \(\langle \chi _R(A^2)\rangle _0\) in Theorem 9.6.

Proposition 9.5

Let A be an \(N \times N\) Hermitian matrix under the Gaussian measure. Given a representation R of \(\textrm{GL}(N)\) defined through the set of shifted weights \(h_i\), \(i=1,...N,\) and considering \(\chi _R\) the character in the representation R, the following holds true:

where the numbers h are separated in a set of \(\lceil N/2\rceil \) even numbers \(h^e\) and \(\lfloor N/2 \rfloor \) odd numbers \(h^o\). If such a separation is not possible, then the average is 0.

Proof

For \(A\in \textrm{GL}(N)\), using character orthogonality for the symmetric group \(S_n\), we can write the character of A as

where the bar on \({\bar{\chi }}_R(\sigma )\) denotes complex conjugate. Interchanging the sums and using Schur-Weil duality,

we obtain

Now, we take the average of the above quantity. Wick’s probability theorem tells us that

where \([2^\frac{n}{2}]\) is the conjugacy class of permutations in \(S_n\) with n/2 2-cycles. Therefore we obtain

where we used (189) in the last equality, with \(A ={\mathbb {1}}\). Again using orthogonality relations, rewrite

where we denote the dimension of the \(S_n\) representation \(s_R=\chi _R(\textrm{id})\), where \(\textrm{id}\) is the identity permutation, and

In order to perform \(\sum _{\gamma \in [2^{\frac{n}{2}}]}\), we use the following trick. Let us first return to the relation (190) for some matrix M,

We observe that (195) sums over all elements in \(S_n\), whereas (194) sums over a subset of \(S_n\). We aim to extract (194) from (195). We choose M such that the summation in \(S_n\) is restricted to elements of \([2^{\frac{n}{2}}]\), achieved by \(\textrm{Tr}(\sigma M^{\otimes n})= 0\) for \(\sigma \) not in \([2^\frac{n}{2}]\) and constant for when \(\sigma \) is in \([2^\frac{n}{2}]\). Using the notation \([\prod _k k^{c_k}]\) for the cycle \([\sigma ]\) which \(\sigma \) belongs to, we wish to find M such that

for some constant a (that might depend on N or n but not on \(\sigma \)). But also, we can write

leading us to the identification, for \(k\ne 2\),

Hence, the possibility of a nonzero \(c_k\) with a general permutation \(\sigma \) tells us that

for \(k\ne 2\). Then, recalling

we conclude \(M=C_2\) with \(a=N^\frac{n}{2}\) is a possible solution. Then, setting \(M=C_2\) in (195) with (197), we find that

Finally, using (200)

we succeeded in restricting the sum to \([2^\frac{n}{2}]\). Identifying the last summation in (194) as

we achieve an expression for the average of character,

Let us now compute \(s_R\). We perform a similar trick as above, by considering \([1^n]\) instead of \([2^\frac{n}{2}]\). This time there is no summation in \([1^n]\) because the only element in this class is the identity permutation. We can show in a similar fashion that we find the result through the matrix \(C_1\) instead of \(C_2\). Skipping to the equivalent of (201), just changing the condition from \(k=2\) to \(k=1\) in relevant places, we are able to isolate the character of the identity permutation,

Identifying \(s_R = {\bar{\chi }}_R(id) = {\chi }_R(id)\), the dimension of the \(S_n\) representation can be written in terms of \(\chi _R(C_1)\) by

Finally, using (206) in (204) also with \(d_R=\chi _R(\mathbb {1})\) we find that

Additionally, with a formula for the character of \(C_m\) [78]

where \(\{h^{(\epsilon )}\}=\{a\in {\{h\}}\;|\; a=\epsilon \;\textrm{mod}\; m \}\), we can also find a different expression for \(\langle \chi _R(A) \rangle _0\), by inserting (208) into (207)

This expression is written in terms of the highest weights \(\{h\}\). \(\square \)

Following a similar argument as Proposition 9.5, we compute \(\langle \chi _r(A^2)\rangle _0\).

Theorem 9.6

Let A be an \(N \times N\) Hermitian matrix under the Gaussian measure. Given a representation r of \(\textrm{GL}(N)\) defined through the set of shifted weights \(h_i\), \(i=1,...N,\) and considering \(\chi _r\) the character in that representation, the following holds true:

Proof

We start from the relation

We wish to express in terms of A instead of \(A^2\). We use the relation

where \(\alpha =(1,1+n)(2,2+n)\cdots (n,2n)\) and on the left the trace considers the vector space \({\mathbb {C}}_N^{\otimes n}\), while on the right the vector space considered is \({\mathbb {C}}_N^{\otimes 2n}\). This relation can be shown by using the definition of trace on both sides. We then just write (211),

and just like before in Theorem 9.5, taking the average and using Wick’s theorem we obtain

At this point we need a relation that we prove in Appendix X, which is

where on the right hand side, the traces act on \({\mathbb {C}}_N^{\otimes n}\). Using this equation we then get

The summation over \(\sigma \) can then be evaluated by using the summation over representations (189) with the identity matrix in place of A and the summation over permutation (193);

Repeating for the summation in \(\rho \), and using \(\chi _r(id)=s_R\) and (206), we find

Finally, using (109) and (208) we find that

This interesting new result is valid considering \(N\gg n\). \(\square \)

10 Conclusions

A comprehensive understanding of quantum gravity-matter systems is not only important for phenomenological applications but also to check the consistency of the the underlying quantum theory. A fundamental theory that encompasses quantum-gravitational degrees of freedom as well as matter fluctuations depends on the synergy between the collective quantum fluctuations of the full theory. A paradigmatic example is QCD that loses asymptotic freedom and, thus, UV-completeness when the number of fermions is sufficiently large. Likewise, an asymptotically safe theory of quantum gravity can lose its UV fixed point for a sufficiently intricate matter content. Therefore, understanding the impact of matter degrees of freedom to the continuum limit of a candidate theory of quantum gravity is an essential ingredient in its construction. Conversely, quantum-gravity fluctuations can affect the dynamics of the underlying matter fields and trigger non-trivial effects that can leave imprints in the infrared. Furthermore, the large body of evidence reported by the CDT community reveals that the implementation of causality constraints can be central for a suitable continuum limit of the lattice-regularized path integral of quantum gravity. Thus, in view of what was said above, it is natural to investigate the continuum limit of CDT coupled to matter degrees of freedom. Actually, it is an active field of interest to understand the relation, if any, between the Euclidean and Lorentzian formalisms, see, e.g., [127,128,129,130,131].