Abstract

Consider an odd-sized jury, which determines a majority verdict between two equiprobable states of Nature. If each juror independently receives a binary signal identifying the correct state with identical probability \(p>1/2\), then the probability of a correct verdict tends to one as the jury size tends to infinity (Marquis de Condorcet in Essai sur l’application de l’analyse à la probabilité des décisions rendues à la pluralité des voix, Imprim. Royale, Paris, 1785). Recently, Alpern and Chen (Eur J Oper Res 258:1072–1081, 2017, Theory Decis 83:259–282, 2017) developed a model where jurors sequentially receive independent signals from an interval according to a distribution which depends on the state of Nature and on the juror’s “ability”, and vote sequentially. This paper shows that, to mimic Condorcet’s binary signal, such a distribution must satisfy a functional equation related to tail-balance, that is, to the conditional probability \(\alpha (t)\) that a mean-zero random variable X satisfies \(X>t\) given that \(|X|>t\). In particular, we show that under natural symmetry assumptions the tail-balances \(\alpha (t)\) uniquely determine the signal distribution, and so the distributions assumed in Alpern and Chen (Eur J Oper Res 258:1072–1081, 2017, Theory Decis 83:259–282, 2017) are uniquely determined for \(\alpha (t)\) linear.

1 Introduction

This paper solves a functional equation arising from an extension of the celebrated Condorcet Jury Theorem. In Condorcet’s model, an odd-sized jury must decide which of two equiprobable states of Nature, A or B, holds. Each juror receives an independent binary signal (for A or for B) which is correct with the same probability \(p>1/2\). Condorcet [6] (see also [10, Ch. XVII] for a textbook discussion) showed that, when jurors vote simultaneously according to their signal, the probability of a correct majority verdict tends to 1 as the number of jurors tends to infinity. Recently, Alpern and Chen [2, 3] considered a related sequential voting model where each juror independently receives a signal S in the interval \([-1,+1] \), rather than a binary signal. Low signals indicate B and high signals indicate A. The strength of this “indication” depends on the “ability” of the juror, a number a between 0 and 1, which is a proxy for Condorcet’s p ranging from 1/2 to 1. When deciding how to vote, each juror notes the previous votes, the abilities of the previous jurors, his own signal S and his own ability. He then votes for the alternative, A or B, that is more likely, conditioned on this information. The mechanism that underlies this determination is the common knowledge of the distributions by which a signal is given as private information to each juror, depending on his ability and the state of Nature. Although it is not needed in what follows, we mention that one of the main results of Alpern and Chen [2] is that, given three jurors of fixed abilities, their majority verdict is most likely to be correct when they vote in the following order: middle-ability juror first, highest-ability juror next, and finally the lowest-ability juror.
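As a numerical illustration of Condorcet's limit statement, the following Python sketch sums the binomial probabilities of a correct simple majority. The function name `majority_correct` and the sample value \(p=0.6\) are illustrative choices and are not taken from [6].

```python
from math import comb


def majority_correct(n: int, p: float) -> float:
    """Probability that a simple majority of n independent jurors is correct,
    each juror being correct with probability p (n must be odd)."""
    if n % 2 == 0:
        raise ValueError("jury size n must be odd")
    need = n // 2 + 1  # smallest number of correct votes that forms a majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(need, n + 1))


# The probability of a correct verdict increases with the jury size when p > 1/2.
for n in (1, 3, 11, 101):
    print(n, majority_correct(n, 0.6))
```

For \(n=3\) the sum is \(3p^{2}(1-p)+p^{3}\), which gives a simple hand check of the routine.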

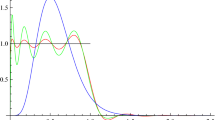

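The distribution function, d.f. for short, for the signal S on \(\left[ -1,+1\right] \) that a juror of ability a receives in the Alpern–Chen jury model is given, according as Nature is A or B, by

\[ F_{a}(t)={\mathbb {P}}_{a}[S\le t\,|\,A]=\frac{(t+1)(at-a+2)}{4}, \tag{1} \]

\[ G_{a}(t)={\mathbb {P}}_{a}[S\le t\,|\,B]=\frac{(t+1)(-at+a+2)}{4}. \tag{2} \]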

These were selected as the simplest family of distributions arising from linear densities in which steepness indicates ability: for the cumulative signal distributions following A or B, a high signal in \([-1,+1]\) was to indicate A as more likely, and a low signal to indicate B as more likely. For this reason an increasing density function was selected to follow A and a decreasing one to follow B. The simplest increasing and decreasing densities on \([-1,+1]\) are the linear functions (taken to mean “affine”) that go through (0, 1/2). This gave the signal distribution of the Alpern–Chen model. The main consequence of the results in this paper is that the signal distributions given by (1) and (2) are uniquely determined when requiring the tail-balance (definition below) to be linear (Theorem 2).

The relation between the two distributions \(F_a\) and \(G_a\) is based on the following assumption of signal symmetry:

\[ {\mathbb {P}}_{a}[S\le t\,|\,B]={\mathbb {P}}_{a}[S\ge -t\,|\,A]. \]

For a continuous distribution it follows that

\[ {\mathbb {P}}_{a}[S\ge -t\,|\,A]=1-{\mathbb {P}}_{a}[S\le -t\,|\,A]=1-F_{a}(-t), \]

and so

\[ G_{a}(t)=1-F_{a}(-t). \]

This confirms that, for a juror of any ability a, the probability of receiving a signal less than t when Nature is B is the same as that of receiving a signal larger than \(-t\) when Nature is A.
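The symmetry between the two signal distributions can be checked in exact rational arithmetic. The sketch below assumes that the linear densities are \((1+as)/2\) following A and \((1-as)/2\) following B (our reading of "linear through (0, 1/2), with steepness indicating ability") and integrates them from \(-1\) to t; the function names `F` and `G` are illustrative.

```python
from fractions import Fraction as Fr


def F(a, t):
    """d.f. given A: integral of the assumed density (1 + a*s)/2 over [-1, t]."""
    return (t + 1) * (a * t - a + 2) / 4


def G(a, t):
    """d.f. given B: integral of the assumed density (1 - a*s)/2 over [-1, t]."""
    return (t + 1) * (a - a * t + 2) / 4


a = Fr(3, 5)
grid = [Fr(k, 10) for k in range(-10, 11)]

# Both are distribution functions on [-1, +1].
assert F(a, Fr(-1)) == 0 and F(a, Fr(1)) == 1
assert G(a, Fr(-1)) == 0 and G(a, Fr(1)) == 1
# Signal symmetry: P[S <= t | B] = P[S >= -t | A].
assert all(G(a, t) == 1 - F(a, -t) for t in grid)
# Signals tend to be higher under A than under B.
assert all(F(a, t) <= G(a, t) for t in grid)
```

Using `Fraction` rather than floating point makes each identity hold exactly at every grid point, so no tolerance needs to be chosen.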

The jurors use their private information (signal) S in \([-1,1]\) to calculate the conditional probabilities of A and B by considering the relative likelihood that their signal came from the distribution F rather than the distribution G.

We now wish to relate this continuous signal model to the binary model of Condorcet. We want our notion of ability a to be a proxy for Condorcet’s probability p. First, we note that his p runs from \(p_{0}=1/2\) (a useless signal) to \(p_{1}=1\) (a certain signal) and the Alpern–Chen ability a runs from 0 (no ability, useless signals) to 1 (highest ability). For any fixed signal value \(t\in [-1,1]\), the conditional probability of A given \(S\ge t\) is to be linear in a, and so for some coefficient \(b=b(t)\) we wish to have

\[ {\mathbb {P}}_{a}[A\,|\,S\ge t]=\frac{1}{2}+b(t)\,a. \]

Given that the left-hand side of the above is Condorcet’s probability p, we want this conditional probability to be 1 when \(t=+1\) (highest signal) and \(a=1\) (highest ability), so this gives \(b(1)=1/2\). When \(t=-1\) the condition \(S\ge t\) gives no new information for any a, so the left-hand side should be the a priori probability of A, \({\mathbb {P}}_{a}[A]\), which is 1/2. Hence \(b(-1)=0\). Taking the slope b to be linear in t yields \(b(t)=(t+1)/4\), or

\[ {\mathbb {P}}_{a}[A\,|\,S\ge t]=\frac{1}{2}+\frac{a(t+1)}{4}. \]

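Putting \(H_{a}(t):={\mathbb {P}}_{a}[S\le t\,|\,A]\), so that \({\mathbb {P}} _{a}[S\le t\,|\,B]=1-H_{a}(-t)\) as above, by Bayes Law and since \({\mathbb {P}} [B]={\mathbb {P}}[A]\), we have

\[ {\mathbb {P}}_{a}[A\,|\,S\ge t]=\frac{{\mathbb {P}}_{a}[S\ge t\,|\,A]}{{\mathbb {P}}_{a}[S\ge t\,|\,A]+{\mathbb {P}}_{a}[S\ge t\,|\,B]}=\frac{1-H_{a}(t)}{1-H_{a}(t)+H_{a}(-t)}. \]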

The last term, comparing the right tail against the tail sum, is known as the tail-balance ratio [5, §8.3], the probability that \( S\ge t\) (or even \(S\ge 0\)) given that \(|S|\ge t\). Its asymptotic behaviour and the regular variation of the tail-sum are particularly relevant to the Domains of Attraction Theorem of probability theory [5, Theorem 8.3.1]. The theorem is concerned with stable laws (stable under addition: the sum of two independent random variables with that law has, to within scale and centring, the same law), and identifies those that arise as limits in distribution of appropriately scaled and centred random walks.
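The Bayes computation behind the tail-balance ratio can be verified exactly for the Alpern–Chen d.f. The sketch below assumes \(H_{a}(t)=(t+1)(at-a+2)/4\) and equal priors; the names `H` and `posterior_A` are illustrative. The point \(t=+1\) is excluded because both tails vanish there and the ratio is read as 1 by convention.

```python
from fractions import Fraction as Fr


def H(a, t):
    """Assumed Alpern-Chen d.f. of the signal given A."""
    return (t + 1) * (a * t - a + 2) / 4


def posterior_A(a, t):
    """P[A | S >= t] under equal priors: right tail over the tail sum."""
    right_tail = 1 - H(a, t)   # P[S >= t | A]
    left_tail = H(a, -t)       # P[S >= t | B] = P[S <= -t | A] by signal symmetry
    return right_tail / (right_tail + left_tail)


grid = [Fr(k, 10) for k in range(-10, 10)]  # t = +1 excluded (0/0)
for a in (Fr(0), Fr(1, 4), Fr(1, 2), Fr(1)):
    # The posterior is linear in the ability a, with slope (t + 1)/4.
    assert all(posterior_A(a, t) == Fr(1, 2) + a * (t + 1) / 4 for t in grid)
```

At \(t=-1\) the check reduces to the prior 1/2, matching the remark that the condition \(S\ge -1\) carries no information.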

In summary, we seek a family, indexed by the ability a, of signal distributions \(H_{a}\left( t\right) \) on \(\left[ -1,+1\right] \), which correspond to state of Nature A, while the distributions \(1-H_{a}\left( -t\right) \) correspond to state of Nature B, such that by Bayes Law

\[ {\mathbb {P}}_{a}[A\,|\,S\ge t]=\frac{1-H_{a}(t)}{1-H_{a}(t)+H_{a}(-t)}=\frac{1}{2}+\frac{a(t+1)}{4}. \]

2 Solution of the tail-balance equation

Our main results are concerned with a functional equation of the following more general type:

\[ \frac{1-H(t)}{1-H(t)+H(-t)}=\alpha (t)\qquad (-1\le t\le 1), \tag{3} \]

with \(\alpha (t)\) a strictly monotone function, interpreting the left-hand side to be 1 for \(t=+1\). Of interest here are non-negative increasing functions H with \(H(-1)=0\) and \(H(1)=1\) representing probability distribution functions, hence the adoption of the name “tail balance” (as above) for this functional equation. Indeed, with these boundary conditions,

\[ \alpha (-1)=\frac{1-H(-1)}{1-H(-1)+H(1)}=\frac{1}{2}, \]

implying that the left and right tails of H are exactly balanced; furthermore,

since

Note that \(H(0)=1-\alpha (0)\).

The standard text-book treatment of functional equations is [1], but it is often the case that particular functional equations arising in applications require individual treatment—recent such examples are [7, 8]; for applications in probability, see [9].

2.1 Symmetric case

Let us start with the symmetric (i.e., \({\mathbb {P}}_{a}[A]={\mathbb {P}} _{a}[B]=1/2\)) and linear case introduced in the preceding section:

\[ \alpha (t)=\frac{1}{2}+\frac{a(t+1)}{4}. \tag{4} \]

We have the following result.

Proposition 1

The unique solution for the d.f. \(H_{a}(t)\) on \([-1,+1]\) to the following functional equation, with \(0\le a\le 1\),

\[ \frac{1-H_{a}(t)}{1-H_{a}(t)+H_{a}(-t)}=\frac{1}{2}+\frac{a(t+1)}{4}, \tag{5} \]

is given by

\[ H_{a}(t)=\frac{(t+1)(at-a+2)}{4}. \tag{6} \]

Proposition 1 gives the following as an immediate corollary for the signal distribution in the jury problem.

Theorem 2

The only d.f. on the signal space \([-1,+1]\) that makes the conditional probability \({\mathbb {P}}_{a}[A\,|\,S\ge t]\) a linear function of the juror’s ability a with a slope linear in t is given by the Alpern–Chen functions \(F_{a}(t)\) and \(G_{a}(t)\) given in (1) and (2).

Proof of Proposition 1

For \(t\in [-1,+1)\), take \(\beta (t):=\alpha (t)/(1-\alpha (t))\), which transforms the tail-balance equation (3) to the equivalent form

\[ 1-H(t)=\beta (t)H(-t). \tag{7} \]

So with \(-t\) for t:

\[ 1-H(-t)=\beta (-t)H(t). \]

Eliminating \(H(-t)\), we obtain

\[ H(t)=\frac{\beta (t)-1}{\beta (t)\beta (-t)-1}. \tag{8} \]

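Substitution for \(\alpha \) from (4) yields (6), since

\[ \beta (t)=\frac{2+a+at}{2-a-at},\qquad \beta (t)-1=\frac{2a(1+t)}{2-a-at},\qquad \beta (t)\beta (-t)-1=\frac{8a}{(2-a-at)(2-a+at)}. \]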

\(\square \)

Remark

Evidently, the derivation above is valid more generally, in particular for an arbitrary increasing tail-balance function \(\alpha \), a matter pursued in the longer arXiv version of this paper [4].
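The derivation can be traced numerically for a given tail-balance function. The sketch below computes \(H(t)=(\beta (t)-1)/(\beta (t)\beta (-t)-1)\) with \(\beta =\alpha /(1-\alpha )\), recovers the Alpern–Chen d.f. for linear \(\alpha \), and then checks the functional equation for a second increasing \(\alpha \). The helper `solve_tail_balance` and the cubic example are illustrative assumptions and do not come from [4]; the check is pointwise and does not by itself show that the resulting H is a d.f.

```python
from fractions import Fraction as Fr


def solve_tail_balance(alpha, t):
    """Solve 1 - H(t) = beta(t) H(-t) together with its mirror image at -t."""
    def beta(s):
        return alpha(s) / (1 - alpha(s))
    return (beta(t) - 1) / (beta(t) * beta(-t) - 1)


def tail_balance(H, t):
    """Left-hand side of the functional equation: right tail over tail sum."""
    return (1 - H(t)) / (1 - H(t) + H(-t))


a = Fr(3, 5)
linear = lambda s: Fr(1, 2) + a * (s + 1) / 4        # linear tail-balance
cubic = lambda s: Fr(1, 2) + (s + 1) ** 3 / 32       # hypothetical increasing alpha

grid = [Fr(k, 10) for k in range(-10, 10)]           # t = +1 excluded (0/0)

# Linear alpha reproduces H_a(t) = (t + 1)(a t - a + 2)/4.
assert all(solve_tail_balance(linear, t) == (t + 1) * (a * t - a + 2) / 4
           for t in grid)

# For either alpha, the computed H satisfies the tail-balance equation.
for alpha in (linear, cubic):
    H = lambda t, alpha=alpha: solve_tail_balance(alpha, t)
    assert all(tail_balance(H, t) == alpha(t) for t in grid)
```

The value \(a=0\) is avoided in the sketch because then \(\beta \equiv 1\) and the quotient defining H reads 0/0.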

2.2 General case

It is easy to see from the last part of Sect. 1 that, with \(\alpha (t)\) monotone as before, if the a priori probability of the state A of Nature is more generally \({\mathbb {P}}_{a}[A]=\theta \) with \( 0<\theta <1\), so that \({\mathbb {P}}_{a}[B]=1-\theta \), then the application of Bayes Law described in Sect. 1 gives the following:

\[ {\mathbb {P}}_{a}[A\,|\,S\ge t]=\frac{\theta (1-H(t))}{\theta (1-H(t))+(1-\theta )H(-t)}=\alpha (t). \tag{9} \]

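Here we have \(\alpha (-1)={\mathbb {P}}_{a}[A\,|\,S\ge -1]={\mathbb {P}} _{a}[A]=\theta \). With an assumption of linearity in a of the probability \( {\mathbb {P}}_{a}[A\,|\,S\ge t]\), with slope linear in t and with value 1 at \(t=+1\), \(a=1\), we obtain

\[ \alpha (t)=\theta +\frac{a(1-\theta )(t+1)}{2}. \tag{10} \]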

We now have the following general result.

Proposition 3

The functional equation (9), with \(0\le a\le 1\) and \(\alpha \) given by (10), has the following unique solution:

\[ H_{a}(t)=\frac{\theta (t+1)(at-a+2)}{4\theta +a(1-2\theta )(1-t^{2})}. \tag{11} \]

Here, taking \(\theta =1/2\) reduces (11) to (6); note that the denominator is positive (clear, when expressed as \(2\theta (at^{2}+2-a)+a(1-t^{2})\)). The proof of Proposition 3 is almost the same as that of Proposition 1: it suffices to replace the term \(\beta (t)\) above (7) by its multiple \(\lambda \alpha (t)/(1-\alpha (t))\) with \(\lambda :=(1-\theta )/\theta \), then (9) becomes equivalent to (12) below (as in (8)):

\[ H(t)=\frac{\beta (t)-1}{\beta (t)\beta (-t)-1},\qquad \beta (t):=\frac{\lambda \alpha (t)}{1-\alpha (t)}. \tag{12} \]

From here and (10) some routine algebra (for details see [4]) yields (11).
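The routine algebra can be spot-checked in exact arithmetic. The sketch below assumes the tail-balance \(\alpha (t)=\theta +a(1-\theta )(t+1)/2\) (fixed by \(\alpha (-1)=\theta \), linearity in a and t, and value 1 at \(t=+1\), \(a=1\)) and the closed form \(H_{a}(t)=\theta (t+1)(at-a+2)/(4\theta +a(1-2\theta )(1-t^{2}))\). Both formulas and all function names are stated here as assumptions of the sketch.

```python
from fractions import Fraction as Fr


def alpha(a, theta, t):
    """Assumed linear tail-balance with prior P[A] = theta."""
    return theta + a * (1 - theta) * (t + 1) / 2


def H(a, theta, t):
    """Assumed closed-form d.f. of the signal given A."""
    return theta * (t + 1) * (a * t - a + 2) / (
        4 * theta + a * (1 - 2 * theta) * (1 - t * t))


def posterior_A(a, theta, t):
    """P[A | S >= t] by Bayes' rule with priors theta and 1 - theta."""
    up_A = theta * (1 - H(a, theta, t))
    up_B = (1 - theta) * H(a, theta, -t)
    return up_A / (up_A + up_B)


grid = [Fr(k, 10) for k in range(-10, 10)]  # t = +1 excluded (0/0)
for theta in (Fr(1, 4), Fr(1, 2), Fr(2, 3)):
    for a in (Fr(1, 5), Fr(1, 2), Fr(1)):
        assert H(a, theta, Fr(-1)) == 0 and H(a, theta, Fr(1)) == 1
        assert all(posterior_A(a, theta, t) == alpha(a, theta, t) for t in grid)

# theta = 1/2 recovers the symmetric solution (t + 1)(a t - a + 2)/4.
a = Fr(3, 5)
assert all(H(a, Fr(1, 2), t) == (t + 1) * (a * t - a + 2) / 4 for t in grid)
```

A grid check of this kind is evidence for the algebra, not a proof of uniqueness; the latter rests on the argument of Proposition 1.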

The following general result follows immediately from Proposition 3 for the signal distribution in the jury problem with general a priori probability \({\mathbb {P}}_{a}[A]=\theta \).

Theorem 4

Let \({\mathbb {P}}_{a}[A]=\theta \) and \({\mathbb {P}}_{a}[B]=1-\theta \) with \( 0<\theta <1\). The only d.f. on the signal space \([-1,+1]\) that makes the conditional probability \({\mathbb {P}}_{a}[A\,|\,S\ge t]\) a linear function of the juror’s ability a with a slope linear in t is given by the following functions: if Nature is A, then

\[ {\tilde{F}}_{a}(t)={\mathbb {P}}_{a}[S\le t\,|\,A]=\frac{\theta (t+1)(at-a+2)}{4\theta +a(1-2\theta )(1-t^{2})}; \]

if Nature is B, then \({\tilde{G}}_{a}(t)={\mathbb {P}}_{a}[S\le t\,|\,B]=1- {\tilde{F}}_{a}(-t)\).

References

[1] Aczél, J., Dhombres, J.: Functional Equations in Several Variables. Encyclopedia of Mathematics and its Applications, vol. 31. Cambridge University Press, Cambridge (1989)

[2] Alpern, S., Chen, B.: The importance of voting order for jury decisions by sequential majority voting. Eur. J. Oper. Res. 258, 1072–1081 (2017)

[3] Alpern, S., Chen, B.: Who should cast the casting vote? Using sequential voting to amalgamate information. Theory Decis. 83, 259–282 (2017)

[4] Alpern, S., Chen, B., Ostaszewski, A.J.: A Functional Equation of Tail-Balance for Continuous Signals in the Condorcet Jury Theorem. arXiv:1911.11827 [math.PR], 26 Nov 2019 (2019)

[5] Bingham, N.H., Goldie, C.M., Teugels, J.L.: Regular Variation, 2nd edn. Cambridge University Press, Cambridge (1989)

[6] Marquis de Condorcet, J.A.N.C.: Essai sur l’application de l’analyse à la probabilité des décisions rendues à la pluralité des voix, Imprim. Royale, Paris (reprinted: Chelsea, 1972) (1785)

[7] El-Hady, E., Brzdȩk, J., Förg-Rob, W., Nassar, H.: Remarks on solutions of a functional equation arising from an asymmetric switch. Contributions in Mathematics and Engineering. Springer, Cham, pp. 153–163 (2016)

[8] Kahlig, P., Matkowski, J.: On a functional equation related to competition. Aequationes Math. 87, 301–308 (2014)

[9] Ostaszewski, A.J.: Homomorphisms from functional equations in probability. Developments in Functional Equations and Related Topics. Springer Optimization and Its Applications, vol. 124. Springer, Cham, pp. 171–213 (2017)

[10] Todhunter, I.: A History of the Mathematical Theory of Probability: From the Time of Pascal to that of Laplace. Cambridge University Press, Ch. XVII, Condorcet, Trial by Jury, pp. 388–392 (2016)

Acknowledgements

The authors thank the anonymous referee for helping this paper achieve a more concise format.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Alpern, S., Chen, B. & Ostaszewski, A.J. A functional equation of tail-balance for continuous signals in the Condorcet Jury Theorem. Aequat. Math. 95, 67–74 (2021). https://doi.org/10.1007/s00010-020-00750-1

DOI: https://doi.org/10.1007/s00010-020-00750-1