Abstract

The starting point of this work is the fact that the class of evolution algebras over a fixed field is closed under tensor product. We prove that, under certain conditions, the tensor product is an evolution algebra if and only if every factor is an evolution algebra. Another question concerns the inheritance of properties from the tensor product to the factors and conversely. For instance, nondegeneracy, irreducibility, perfectness and simplicity are investigated. The four-dimensional case is illustrative and useful for testing conjectures, so we achieve a complete classification of four-dimensional perfect evolution algebras arising as tensor products of two-dimensional ones. We find that there are four-dimensional evolution algebras that are the tensor product of two nonevolution algebras.

1 Introduction

The need to address genetic inheritance in biology from a mathematical point of view has made it essential to introduce tools of abstract algebra. The first algebraic studies of genetic inheritance, due to I. M. H. Etherington, can be found in [14, 15] and [16]. Background on algebras in genetics can be found in [29] and [25]. In 2006, J. P. Tian and P. Vojtechovsky studied non-Mendelian genetic inheritance, for which it was necessary to introduce a new type of algebra called evolution algebras (see [28] and [27]). Since then, a large number of works on evolution algebras have appeared; see, for example, [9, 11, 13] and [5]. See [12] for a more detailed account of the state of the art of evolution algebras.

Matrix theory is a fundamental tool for understanding evolution algebras, and in this paper we want to exploit some of the benefits of the Kronecker product. The decomposition of a matrix as the Kronecker product of two other matrices has many connections with other fields such as physics, multivariate statistics and biochemistry.

In the setting of evolution algebras, the case in which the structure matrix of a given evolution algebra A is a Kronecker product deserves attention. This means that the evolution algebra under consideration is a tensor product of evolution algebras of lower dimensions. Thus \( A\cong {A_1}\otimes {A_2}\) and this opens the possibility of investigating the properties of A that “go down” to the factors \(A_1\) and \(A_2\) and, conversely, the properties of \(A_1\) and \(A_2\) that “go up” to A. This also poses the question of classifying those evolution algebras that are tensor products of lower-dimensional evolution algebras.

The paper is organized as follows. The section of preliminaries and first results is divided into three subsections. In Sect. 2.1, we introduce, for commutative \({\mathbb {K}}\)-algebras, the definition of being locally nondegenerate, and we prove, in Lemma 2.3, that two-dimensional perfect evolution \({\mathbb {K}}\)-algebras and two-dimensional evolution \({\mathbb {K}}\)-algebras with one-dimensional square are locally nondegenerate. In Sect. 2.3, it is shown that perfectness is transferred from the tensor product to the factors and conversely (see item (i) of Proposition 2.14). In Sect. 3, we search for conditions that ensure that the property of being an evolution algebra is inherited from the tensor product to the factors and conversely, obtaining Theorem 3.2 as a result. We give an example of anticommutative algebras (not evolution algebras) whose tensor product is an evolution algebra. However, if the ground field is algebraically closed, any four-dimensional tensorially decomposable simple evolution algebra is the tensor product of (simple) evolution algebras (Proposition 3.6). We prove this by making use of the classification done in [21]. In fact, if we add the conditions \(\textrm{char}({\mathbb {K}})\ne 2\) and both factors being commutative, the property of being simple can be discarded (Theorem 3.7). We also show, in arbitrary dimension, that nondegeneracy is inherited from the tensor product to the factors and conversely in Lemma 3.8. The annihilator of the tensor product of two algebras is related to the annihilators of the factors (Lemma 3.9). In Sect. 4, we use graph theory following [13] and we apply this to study irreducibility in the context of tensor products (Corollary 4.3). In Sect. 5, we prove that when one of the two factors of the tensor product is perfect and has an ideal of codimension 1, and the tensor product is an evolution algebra, then the other factor is an evolution algebra (Proposition 5.2).
We compute the number of zeros z (and of zeros in the diagonal \(z_d\)) of the Kronecker product in terms of the corresponding numbers z and \(z_d\) of the factors. This allows screening the \(4\times 4\) matrices that arise as the Kronecker product of \(2\times 2\) matrices. We also classify four-dimensional tensorially decomposable perfect evolution algebras into 13 classes and determine some complete sets of invariants relative to such classification, some of them based on characteristic and minimal polynomials (Theorem 5.12). Finally, we describe an example of a classification task for this class of algebras (Example 5.13). The final section gives a glimpse of how to deal with nonperfect evolution algebras.

2 Preliminaries and First Results

2.1 Generalities of the Evolution Algebras and Previous Results

We start by recalling several definitions concerning evolution algebras from [5]. An evolution algebra over a field \({\mathbb {K}}\) is a \({\mathbb {K}}\)-algebra A provided with a basis \(B = \{e_i\}_{i \in \Lambda }\) such that \(e_i e_j = 0\) whenever \(i\ne j\), where \(\Lambda \) is a set of indices. Such a basis B is called a natural basis. Let A be an evolution algebra, and fix a natural basis B in A. The scalars \(\omega _{ki} \in {\mathbb {K}}\) such that \(e_i^2:=e_i e_i=\sum _{k\in \Lambda }\omega _{ki}e_k\) are called the structure constants of A relative to B, and the matrix \(M_B := (\omega _{ki})\) is said to be the structure matrix of A relative to B. In what follows, we omit the set of indices in a sum when there is no possible ambiguity. Moreover, we recall that the relation between two structure matrices of the same evolution algebra relative to different bases B and \(B'\) is \(M_{B'}=P^{-1}M_B P^{(2)}\) where P is the change of basis matrix from B to \(B'\) and \(P^{(2)}\) is the Hadamard square of P, that is, the entrywise product of P with itself (see [5, Theorem 1.3.2(I)]). We recall that an algebra A is said to be perfect if \(A^2=A\). In the particular case of a finite-dimensional evolution algebra, perfectness is equivalent to the fact that the determinant of the structure matrix is not zero. Moreover, if \(\{e_i\}_{i \in \Lambda }\) and \(\{f_j\}_{j \in \Lambda }\) are natural bases of a perfect evolution algebra, then we know that there is a permutation \(\sigma \) and nonzero scalars \(c_j\) such that \(f_j=c_j e_{\sigma (j)}\) for any j. Assuming that \(e_i^2=\sum _{j} \omega _{ji}e_j\) and \(f_j^2=\sum _{k}\sigma _{kj}f_k\) (for \(\omega _{ji},\sigma _{kj}\in {\mathbb {K}}\)) we know that the relation between the structure constants is \(\sigma _{ij}=c_j^2c_i^{-1}\omega _{\sigma (i)\sigma (j)}.\) In particular, if the structure constants satisfy \(\omega _{ii}=\sigma _{ii}=1\) for any \(i \in \Lambda \), we have \(c_i=1\) also for any \(i \in \Lambda \).
Accordingly, one basis is a reordering of the other. Equivalently, the change of basis matrix is a permutation matrix.
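The relations above can be checked on a small example. The following is a minimal sketch, assuming a two-dimensional evolution algebra over the rationals; the structure matrix, the permutation and the scalars are arbitrary illustrative choices, and the helper names `matmul` and `det` are hypothetical.

```python
from fractions import Fraction as F

def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def det(m):
    """Determinant by Laplace expansion along the first row (small matrices only)."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det([row[:j] + row[j + 1:] for row in m[1:]])
               for j in range(len(m)))

# Structure matrix M_B = (omega_ki): column i holds the coordinates of e_i^2.
# Illustrative choice: e1^2 = e1 + 3 e2, e2^2 = 2 e1 + e2.
M = [[F(1), F(2)],
     [F(3), F(1)]]
n = len(M)

# Perfectness is equivalent to a nonzero determinant of the structure matrix.
is_perfect = det(M) != 0

# New natural basis f_j = c_j e_{sigma(j)} (0-based indices, illustrative values).
sigma = [1, 0]
c = [F(2), F(3)]

# Change of basis matrix P (column j = coordinates of f_j), its inverse,
# and its Hadamard square P^(2).
P = [[c[j] if k == sigma[j] else F(0) for j in range(n)] for k in range(n)]
P_inv = [[1 / c[i] if k == sigma[i] else F(0) for k in range(n)] for i in range(n)]
P_had = [[x * x for x in row] for row in P]

# Structure matrix in the new basis, computed in two ways.
M_new = matmul(matmul(P_inv, M), P_had)            # P^{-1} M_B P^(2)
M_formula = [[c[j] ** 2 / c[i] * M[sigma[i]][sigma[j]]
              for j in range(n)] for i in range(n)]  # c_j^2 c_i^{-1} omega_{sigma(i) sigma(j)}

print(is_perfect, M_new == M_formula)
```

Exact rational arithmetic is used so that the comparison of the two matrices is not affected by rounding.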

A reducible evolution algebra is an evolution algebra A that can be decomposed as the direct sum of two nonzero evolution algebras. An evolution algebra that is not reducible will be called irreducible. We say that an algebra A is simple if \(A^2\ne 0\) and 0 is the only proper ideal. An evolution algebra A is nondegenerate if it has a natural basis \(B = \{e_i\}_{i \in \Lambda }\) such that \(e_i^2\ne 0\) for every \(i\in \Lambda \) (as proved in [5, Corollary 1.5.4] the definition of nondegeneracy does not depend on the chosen natural basis). We recall that the annihilator of A, denoted by \(\textrm{ann}(A)\), is the ideal of A defined as \(\textrm{ann}(A):=\{x \in A \ \vert \ xa=0 \ \text {for any}\ a\in A\}.\) By [13, Lemma 2.7], we have that \(\textrm{ann}(A)=\textrm{span}(\{e_i: e_i^2=0\})\). So, A is nondegenerate if and only if \(\textrm{ann}(A)=0\). We will say that an algebra A is anticommutative if and only if \(xy=-yx\) for any \(x,y\in A\).

We will say that an algebra A is a zero-square algebra if \(x^2=0\) for any \(x\in A\). Observe that a zero-square algebra is necessarily anticommutative. If the characteristic of the ground field \({\mathbb {K}}\) is other than 2, then the \({\mathbb {K}}\)-algebra A is anticommutative if and only if A is a zero-square algebra. We denote by \({\mathbb {N}}^*\) the natural numbers except 0 and by \({\mathbb {K}}^\times \) all nonzero elements of \({\mathbb {K}}\).

For the reader’s convenience, we recall some basic concepts in order to define the Gröbner basis. A total order \(\preceq \) on \({\mathbb {Z}}_{\ge 0}^n\) is called a monomial order if the following conditions hold:

(1) we have \((0,\ldots ,0)\preceq \alpha \) for all \(\alpha \in {\mathbb {Z}}_{\ge 0}^n\).

(2) for any \(\alpha ,\beta ,\gamma \in {\mathbb {Z}}_{\ge 0}^n\) we have: \(\alpha \preceq \beta \Rightarrow \alpha +\gamma \preceq \beta +\gamma \).
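As an illustration of the two conditions, the lexicographic order on exponent vectors is a standard example of a monomial order. The sketch below checks both conditions on a finite sample of exponent vectors only (it is a sanity check, not a proof); the function name `lex_leq` and the sample range are assumptions made for this example.

```python
from itertools import product

def lex_leq(a, b):
    """Lexicographic comparison of exponent vectors (Python compares tuples this way)."""
    return a <= b

def add(a, g):
    """Componentwise sum of two exponent vectors."""
    return tuple(x + y for x, y in zip(a, g))

# Finite sample of exponent vectors in Z_{>=0}^2.
sample = list(product(range(3), repeat=2))
zero = (0, 0)

# Condition (1): the zero vector is the smallest element.
cond1 = all(lex_leq(zero, a) for a in sample)

# Condition (2): the order is compatible with addition.
cond2 = all(lex_leq(add(a, g), add(b, g))
            for a in sample for b in sample for g in sample
            if lex_leq(a, b))

print(cond1, cond2)
```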

Now let \(0\ne f=\sum _\alpha a_{\alpha }X^\alpha \in {\mathbb {K}}[x_1,\ldots ,x_n]\). The expressions \(a_\alpha X^\alpha \) where \(a_\alpha \ne 0\) are called the terms of f. Let \(\alpha _0\) be maximal (with respect to a given monomial order \(\preceq \)) such that \(a_{\alpha _0}\ne 0\). Then \({\textrm{LT}}(f):=a_{\alpha _0}X^{\alpha _0}\) is called the leading term (see [19, Definition 1.2.1]). Now we define the Gröbner basis from [19, Definition 1.2.7]. Let \(I\subset {\mathbb {K}}[x_1,\ldots ,x_n]\) be a nonzero ideal and \(\preceq \) be a monomial order on \({\mathbb {Z}}_{\ge 0}^n\). A finite subset \(G\subset I\setminus \{0\}\) is called a Gröbner basis of I if the monomials \({\textrm{LT}}(g)\) (\(g\in G\)) generate the ideal of leading terms of I,

\[ \langle {\textrm{LT}}(f) \ :\ f\in I\setminus \{0\}\rangle . \]

In general, Gröbner bases are not unique. A Gröbner basis G is called reduced if all \(g\in G\) satisfy the following conditions:

(1) the coefficient of \({\textrm{LT}}(g)\) is 1.

(2) no term of g is divisible by \({\textrm{LT}}(g')\) for any \(g'\in G\), \(g'\ne g\).

The reduced Gröbner basis is unique, so we can compare ideals by computing their reduced Gröbner bases. Observe that Gröbner bases can also be used for polynomial systems with parameters (see [24]). Indeed, we apply this technique to decide whether or not the tensor product of the evolution algebras that appear in Table 2 is an evolution algebra. In this respect, the base field plays a relevant role.

Now, we introduce a notion that appears in [6] and that will be used in the sequel. Let A be an n-dimensional commutative algebra with fixed basis \(B=\{e_i\}_{i=1}^n\). We can write the product of A in the form

\[ xy=\sum _{i=1}^n\langle x,y\rangle _i\, e_i \tag{1} \]

which provides the family of inner products \(\{\langle \ , \ \rangle _i \}_{i=1}^n\) relative to the basis B. Define \(M_B(\langle \ , \ \rangle _k)\) as the Gram matrix of the inner product \(\langle \ , \ \rangle _k \ :\ A\times A\rightarrow {\mathbb {K}}\) relative to the basis B (taking the same basis for all the inner products). Another notation that we must recall is the following: if we consider the affine space \({\mathbb {K}}^m\) and an ideal H of the polynomial \({\mathbb {K}}\)-algebra \({\mathbb {K}}[x_1,\ldots ,x_m]\), we define \(V(H):=\{x\in {\mathbb {K}}^m \ :\ q(x)=0,\ \forall q\in H\}\).

Definition 2.1

[6, Definition 1] In the above conditions, we have the polynomial \({\mathbb {K}}\)-algebra \(R:={\mathbb {K}}[\{z\}\sqcup \{x_{ij}\}_{i,j=1}^n]\) and we consider the ideal J of R generated by the polynomials \(p_0\) and \(p_{ijk}\) defined as:

\[ p_0:=1-z\det [(x_{ij})_{i,j=1}^n] \]

and

\[ p_{ijk}:=(x_{i1},\ldots ,x_{in})\,M_B(\langle \ , \ \rangle _k)\,(x_{j1},\ldots ,x_{jn})^t=\sum _{q,t=1}^n x_{iq}\,x_{jt}\,\langle e_q,e_t\rangle _k \]

where \(i,j,k=1,\ldots ,n\), and \(i< j\) since A is a commutative algebra. The ideal J will be called the evolution test ideal of A, though its form depends on the chosen basis.

Notice that, if A is an evolution algebra, we have a natural basis \(\{f_i\}_{i=1}^n\) where \(f_i=\sum _j a_{ij}e_j\) and the matrix \((a_{ij})\) is invertible whence, defining \(u=\det (a_{ij})\), the element \((u^{-1},a_{11},\ldots ,a_{nn})\) is in V(J). Conversely, any point of V(J) provides a natural basis of A. Thus, V(J) detects whether A is an evolution algebra by the following criterion: \(V(J)\ne \emptyset \) if and only if A is an evolution algebra. Consequently, if \(1\in J\), the algebra A is not an evolution algebra. The effective way to see whether \(1\in J\) or not is to compute a Gröbner basis of J.

A Gröbner basis of an ideal I in a polynomial algebra is a generating system of the ideal that enjoys useful computational properties (see [1] or [20]). It is also important to note that Gröbner bases have already been applied to evolution algebras in the papers [10, 11, 17] and [18]. Now, to detect whether 1 is an element of the above-defined ideal J, we may need to compute a Gröbner basis of J. In fact, if \(1\in J\), then its reduced Gröbner basis is \(\{1\}\). For example, before Table 2, we use the evolution test ideal to see that \(\textbf{A}_2\) is an evolution algebra. Later, we also use the evolution test ideal to show that \(\textbf{A}_4(0)\otimes \textbf{A}_4(0)\) is not an evolution algebra. For this purpose, it is necessary to use computational tools. In general, if \(\dim (A)=n\) with A a commutative algebra, then there are \(n \left( {\begin{array}{c}n\\ 2\end{array}}\right) +1 =\frac{n^3-n^2+2}{2}\) polynomials in \(n^2+1\) variables in the generating set of the ideal. It has been pointed out that, in the worst case, the complexity of the algorithms for computing Gröbner bases is doubly exponential in the number of variables (see [2]). Thus the computation of Gröbner bases may quickly become impracticable as the dimension of the algebra increases. To tackle this hurdle, we can use the following remark.

Remark 2.2

Let A be an n-dimensional commutative algebra and let \(\langle \ ,\ \rangle _i\) with \(i=1,\ldots ,n\) be the set of inner products given by (1). In this case, A is an evolution algebra if and only if the set of inner products is simultaneously orthogonalizable, i.e., there is a basis of A such that the matrix of each \(\langle \ ,\ \rangle _i\) is diagonal. This is proved in [4, Theorem 1] when the ground field is \({\mathbb {R}}\) or \({\mathbb {C}}\) and for a general field in [6]. So, we are led to the problem of the simultaneous orthogonalizability of a set of inner products. This is translated into the problem of the simultaneous diagonalization of matrices by congruence: that is, given a set of matrices \(M_1,\ldots , M_n\) with coefficients in \({\mathbb {K}}\), under which conditions is there an invertible matrix P such that all the matrices \(PM_iP^t\) are diagonal matrices (\(P^t\) is the transpose matrix of P)?

We briefly outline the steps to follow:

(1) If some of the \(M_i\)’s are nonsingular (without loss of generality, we may assume that \(M_1\) is nonsingular) the procedure is as follows: consider \(N_i:=M_iM_1^{-1}\) for \(i=2,\ldots ,n\). If \(\textrm{char}({\mathbb {K}})\ne 2\), then \(\{M_1,\ldots ,M_n\}\) is simultaneously diagonalizable by congruence if and only if these \(N_i\)’s are diagonalizable and commute. The proof of this result is in [6, Corollary 1]. For \(\textrm{char}({\mathbb {K}})=2\), we use [6, Theorem 1]. In this case, we have the following:

(a) If \(\{M_1,\ldots ,M_n\}\) is simultaneously diagonalizable by congruence, then the \(N_i\)’s commute and are diagonalizable.

(b) If the \(N_i\)’s commute and are diagonalizable with respect to a basis that is orthogonal relative to \(M_1\), then \(\{M_1,\ldots ,M_n\}\) is simultaneously diagonalizable by congruence.

(2) If all the matrices in the set \(\{M_1,\ldots , M_n\}\) are singular, then a reduction to the previous case is possible as explained in [6].
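Part of step (1) can be sketched computationally. The code below is a minimal sketch over the rationals that forms the matrices \(N_i=M_iM_1^{-1}\) and tests whether they commute pairwise; it does not test diagonalizability, so a positive answer is only a necessary condition for simultaneous diagonalization by congruence. The function names and the sample matrices are assumptions made for this illustration.

```python
from fractions import Fraction as F
from itertools import combinations

def matmul(a, b):
    """Product of two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def inverse(m):
    """Inverse by Gauss-Jordan elimination with exact rational arithmetic.
    Raises StopIteration if the matrix is singular."""
    n = len(m)
    aug = [[F(x) for x in row] + [F(int(i == j)) for j in range(n)]
           for i, row in enumerate(m)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def quotients_commute(mats):
    """Form N_i = M_i M_1^{-1} (M_1 = mats[0] must be nonsingular) and
    check that the N_i commute pairwise."""
    m1_inv = inverse(mats[0])
    ns = [matmul(m, m1_inv) for m in mats[1:]]
    return all(matmul(a, b) == matmul(b, a) for a, b in combinations(ns, 2))

# Illustrative symmetric matrices (Gram matrices of hypothetical inner products).
identity = [[1, 0], [0, 1]]
s = [[0, 1], [1, 0]]
t = [[1, 2], [2, 1]]    # t = identity + 2 s, so it commutes with s
d = [[1, 0], [0, -1]]   # d does not commute with s

print(quotients_commute([identity, s, t]))  # the N_i commute
print(quotients_commute([identity, s, d]))  # the N_i do not commute
```

When the commutation test fails, item (a) shows that the family is not simultaneously diagonalizable by congruence; when it succeeds, diagonalizability of each \(N_i\) still has to be checked separately.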

Recall that a symmetric bilinear form \(f:A\times A \rightarrow {\mathbb {K}}\) is nondegenerate if \(f(x,y)=0\) for all \(y\in A\) implies that \(x=0\); otherwise we say that f is degenerate.

Lemma 2.3

If A is a 2-dimensional evolution \({\mathbb {K}}\)-algebra in one of the following cases:

(i) A is perfect,

(ii) A is such that \(\dim (A^2)=1\) but \(\textrm{ann}(A)=0\),

then the inner products \(\langle \ ,\ \rangle _i\) of A given by (1) may be chosen so that at least one of them is nondegenerate.

Proof

In the first case, given that \(\dim (A^2)=2\), the inner products \(\langle \ ,\ \rangle _i\) such that \(xy=\langle x,y\rangle _1e_1+\langle x,y\rangle _2e_2\) for any x, y, are linearly independent. If one of them is nondegenerate there is nothing to prove, so assume that both are degenerate. Take a natural basis B of A. Then the matrices of both inner products relative to B are diagonal and of the form \(\begin{pmatrix}\lambda & 0\\ 0 & 0\end{pmatrix}, \ \begin{pmatrix}0 & 0\\ 0 & \mu \end{pmatrix}\), respectively, with \(\lambda , \mu \in {\mathbb {K}}^{\times }\). Consequently, the matrix of \(\langle \ ,\ \rangle ':=\langle \ ,\ \rangle _1+\langle \ ,\ \rangle _2\) in the basis B is diagonal with nonzero entries; hence, \(\langle \ ,\ \rangle '\) is nondegenerate. Furthermore, \(\langle \ ,\ \rangle _1=\langle \ ,\ \rangle '-\langle \ ,\ \rangle _2\) and \(xy=\langle x,y\rangle 'e_1+\langle x,y\rangle _2(e_2-e_1)\) for any \(x,y\in A\). Thus, we have found a basis \(\{e_1,e_2-e_1\}\) such that one of the inner products given by (1) relative to that basis is nondegenerate. The second item is trivial since, without loss of generality, we may assume that \(xy=\langle x,y\rangle _1e_1\) for a nonzero element \(e_1\), and \(\textrm{ann}(A)\) is precisely the radical of the inner product \(\langle \ ,\ \rangle _1\). \(\square \)

Observe that if A is a 2-dimensional evolution \({\mathbb {K}}\)-algebra with \(\textrm{ann}(A)\ne 0\), then the two inner products of A given by (1) are degenerate.

Definition 2.4

Let A be a commutative \({\mathbb {K}}\)-algebra. We say that A is locally nondegenerate if there exists a basis such that at least one of the inner products associated with the product of A, as in formula (1), is nondegenerate.

Observe that all the algebras that are under the conditions of Lemma 2.3 are locally nondegenerate.
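As a small worked illustration of the argument in the proof of Lemma 2.3 (the specific algebra is chosen here only as an example), consider the two-dimensional perfect evolution algebra with natural basis \(B=\{e_1,e_2\}\), \(e_1^2=e_1\) and \(e_2^2=e_2\). Both inner products relative to B are degenerate, but passing to the basis \(B'=\{e_1,e_2-e_1\}\) produces a nondegenerate one:

```latex
\begin{aligned}
M_B(\langle \ ,\ \rangle_1) &= \begin{pmatrix} 1 & 0\\ 0 & 0 \end{pmatrix}, \qquad
M_B(\langle \ ,\ \rangle_2) = \begin{pmatrix} 0 & 0\\ 0 & 1 \end{pmatrix}, \\
\langle \ ,\ \rangle' &:= \langle \ ,\ \rangle_1 + \langle \ ,\ \rangle_2, \qquad
xy = \langle x,y\rangle' e_1 + \langle x,y\rangle_2 (e_2 - e_1), \\
M_{B'}(\langle \ ,\ \rangle') &= \begin{pmatrix}
\langle e_1,e_1\rangle' & \langle e_1,e_2-e_1\rangle' \\
\langle e_2-e_1,e_1\rangle' & \langle e_2-e_1,e_2-e_1\rangle'
\end{pmatrix}
= \begin{pmatrix} 1 & -1\\ -1 & 2 \end{pmatrix}, \qquad
\det M_{B'}(\langle \ ,\ \rangle') = 1 \neq 0 .
\end{aligned}
```

Since the determinant equals 1, the inner product \(\langle \ ,\ \rangle '\) is nondegenerate over any field, so this algebra is locally nondegenerate.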

2.2 Generalities of Graph Theory

We recall some definitions relative to graphs that will be used throughout the article. A directed graph is a 4-tuple \(G=(E^0, E^1, s, t)\) with \(E^0\), \(E^1\) sets and \(s, t: E^1 \rightarrow E^0\) maps. Sometimes we will write \(G=(E_G^0, E_G^1, s_G, t_G)\) to indicate the graph we refer to. The vertices of G are the elements of \(E^0\) and the arrows or directed edges of G are the elements of \(E^1\). For \(f\in E^1\) the vertices t(f) and s(f) are called the target and the source of f, respectively. Every arrow f is of the form s(f)t(f). A finite sequence of arrows \(\mu =f_1\dots f_n\) in G, such that \(t(f_i)=s(f_{i+1})\) for \(i\in \{1,\dots ,(n-1)\}\) is called a path or a path from \(s(f_1)\) to \(t(f_n)\). In this case we say that n is the length of the path \(\mu \) and denote it by \(\vert \mu \vert \). We define the set \(\mu ^0:=\{s(f_1),t(f_1),\dots ,t(f_n)\}\). Let \(\mu = f_1 \dots f_n\) be a path in G. If \(v=s(f_1)=t(f_n)\), then \(\mu \) is called a closed path based at v. Given a finite directed graph G, its adjacency matrix is the matrix \(M_{G}=(a_{ij})\) where \(a_{ij}\) is the number of arrows from i to j.

We denote by \({\mathscr {M}}_{m,n}({\mathbb {K}})\) the set of \(m \times n\) matrices over the field \({\mathbb {K}}\). In particular, if \(m=n\) we write it as \({\mathscr {M}}_{n}({\mathbb {K}})\).

We associate a directed graph to an evolution algebra following [13]. Let A be an n-dimensional evolution algebra with natural basis \(B=\{e_i \}_{i=1}^{n}\) and structure matrix \(M_B=(\omega _{ij})\in {\mathscr {M}}_n({\mathbb {K}})\). We consider the matrix \(M=(a_{ij})\in {\mathscr {M}}_n({\mathbb {K}})\) such that \(a_{ij}=0\) if \(\omega _{ij}=0\) and \(a_{ij}=1\) if \(\omega _{ij}\ne 0\). The directed graph whose adjacency matrix is given by \(M=(a_{ij})\) is called the directed graph associated with the evolution algebra A (relative to the basis B). We denote it by \(G_{B}\) (or simply by G if the basis B is understood) and its adjacency matrix by \(M_{G_B}\) or \(M_G\). In this way, we consider directed graphs with at most one arrow between two vertices (not necessarily different) of \(E_G^0\). A directed graph is strongly connected if given two different vertices there exists a path that goes from the first to the second one.

We recall some results about connectivity of directed graphs. Consider \({\mathscr {B}}=(\{0,1\},\vee ,\wedge )\) the standard Boolean algebra (\(\vee \) denotes logical OR, and \(\wedge \) denotes logical AND). We denote by \({\mathscr {B}}^{(n\times n)}\) the set of all \(n\times n\) Boolean matrices. Consider \(\odot \) the Boolean matrix multiplication, that is, if \(A,B\in {\mathscr {B}}^{(n\times n)}\), then \(C=A\odot B\in {\mathscr {B}}^{(n\times n)}\) with \(c_{ij}=\bigvee _{k=1}^{n}a_{ik}\wedge b_{kj}\). Let \(A^0=I\) where I denotes the identity matrix and let \(A^i=A\odot A^{i-1}\) for any integer \(i >0\). We define \(\sigma _k(A)=A\vee A^2 \vee \ldots \vee A^k\) for any \(A\in {\mathscr {B}}^{(n\times n)}\). As the number of elements of \({\mathscr {B}}^{(n\times n)}\) is finite, we have that \(\sigma _j(A)=\sigma _i(A)\) for some \(j>i\) and \(j\le n\). This is a consequence of [22, Theorem 2, Sect. 4.8]. It is easy to check that if \(\sigma _{k-1}(A)=\sigma _k(A)\) for some \(k \in {\mathbb {N}}\), then \(\sigma _k(A)=\sigma _{k+1}(A)\).

Definition 2.5

For a matrix \(A\in {\mathscr {B}}^{(n\times n)}\) the minimum k such that \(\sigma _k(A)=\sigma _{k+1}(A)\) will be called the stabilizing index of A.
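The stabilizing index can be computed directly from the definition. The following is a minimal sketch, assuming Boolean matrices are stored as lists of rows with entries 0 or 1; the function names and the two sample graphs are hypothetical choices for illustration.

```python
def bool_mul(a, b):
    """Boolean matrix product: c_ij = OR_k (a_ik AND b_kj)."""
    n = len(a)
    return [[int(any(a[i][k] and b[k][j] for k in range(n)))
             for j in range(n)] for i in range(n)]

def bool_or(a, b):
    """Entrywise logical OR of two Boolean matrices."""
    return [[x | y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]

def stabilizing_index(a):
    """Return (k, sigma_k(A)) for the minimum k with sigma_k(A) = sigma_{k+1}(A).
    The loop terminates because sigma_k(A) can only gain entries equal to 1."""
    power, sigma, k = a, a, 1           # power = A^k, sigma = sigma_k(A)
    while True:
        power = bool_mul(a, power)      # A^{k+1}
        nxt = bool_or(sigma, power)     # sigma_{k+1}(A)
        if nxt == sigma:
            return k, sigma
        sigma, k = nxt, k + 1

# Adjacency matrix of a directed 3-cycle 1 -> 2 -> 3 -> 1.
cycle = [[0, 1, 0],
         [0, 0, 1],
         [1, 0, 0]]

# Adjacency matrix of a directed path 1 -> 2 -> 3.
path = [[0, 1, 0],
        [0, 0, 1],
        [0, 0, 0]]

k_cycle, closure_cycle = stabilizing_index(cycle)
k_path, closure_path = stabilizing_index(path)
print(k_cycle, k_path)
```

The entry (i, j) of the stabilized matrix equals 1 exactly when there is a path of positive length from vertex i to vertex j, so for the 3-cycle the stabilized matrix has all entries equal to 1, in line with the graph being strongly connected.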

Remark 2.6

By [13, Corollary 4.5], we know that if A is a finite-dimensional perfect evolution algebra and \(B_1\) and \(B_2\) are two natural bases of A, then the directed graphs \(G_{B_1}\) and \(G_{B_2}\) are isomorphic. So, their adjacency matrices \(M_{G_{B_1}}\) and \(M_{G_{B_2}}\) differ by a permutation of rows and columns. Therefore, there exists a permutation matrix Q such that \(M_{G_{B_2}}=Q M_{G_{B_1}}Q^{-1}\). That is to say, \(M_{G_{B_1}}\) and \(M_{G_{B_2}}\) are similar matrices. Consequently, \(M_{G_{B_1}}\) and \(M_{G_{B_2}}\) have the same characteristic and minimal polynomial. Note also that \(Q^{-1}=Q^{t}\).

2.3 Generalities of the Tensor Product of Algebras and Previous Results

In this section, we will consider the tensor product of algebras over fields. As it is well known, this product is commutative and associative (up to isomorphism). We will define tensorially decomposable algebras and investigate the way in which certain properties can be transferred from the factors to the tensor product and/or vice versa.

First, we recall that the tensor product of two vector spaces V and W over a field \({\mathbb {K}}\) is a vector space defined as the set

\[ V\otimes W:=\textrm{span}\{v\otimes w \ :\ v\in V,\ w\in W\} \]

where \(v\otimes w \) is the bilinear map

\[ v\otimes w:V^*\times W^*\rightarrow {\mathbb {K}},\qquad (h,g)\mapsto h(v)g(w), \]

\(\forall v\in V\), \(\forall w\in W\), with \(V^*\) and \(W^*\) denoting the dual vector spaces of V and W, respectively. Moreover, if \(B_1=\{e_{i}\}_{i\in \Lambda }\) is a basis of V and \(B_2=\{f_{j}\}_{j\in \Gamma }\) is a basis of W, then \(B_1\otimes B_2:=\{e_{i}\otimes f_{j}\}_{i\in \Lambda , j\in \Gamma }\) is a basis of the tensor product \(V \otimes W\).

Definition 2.7

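Let \(A_1\) and \(A_2\) be two \({\mathbb {K}}\)-algebras with bases \(\{e_{i}\}_{i\in \Lambda }\) and \(\{f_{j}\}_{j\in \Gamma }\), respectively. We define a product on \(A_1\otimes A_2\) as follows:

\[ (e_i\otimes f_j)(e_k\otimes f_l):=e_ie_k\otimes f_jf_l \]

for \(i,k\in \Lambda \) and \(j,l\in \Gamma \), extended by bilinearity to \(A_1\otimes A_2\).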

Remark 2.8

If \(A_1\) and \(A_2\) are both commutative algebras or anticommutative algebras, then \(A_1\otimes A_2\) is commutative. Since zero-square algebras are anticommutative we have: if both algebras \(A_1\) and \(A_2\) are commutative or both zero-square algebras, then their tensor product is a commutative algebra.

Definition 2.9

We say that a \({\mathbb {K}}\)-algebra A is tensorially decomposable if it is isomorphic to \(A_1\otimes A_2\) where \(A_1\) and \(A_2\) are \({\mathbb {K}}\)-algebras with \(\dim (A_1),\dim (A_2)> 1\). Otherwise we say that A is tensorially indecomposable.

We use the terms tensorially decomposable and tensorially indecomposable also for matrices, according to whether or not they arise as the Kronecker product of two matrices.

Lemma 2.10

Let \(A_1\) and \(A_2\) be \({\mathbb {K}}\)-algebras such that \(A_1\otimes A_2\) is commutative and \((A_1\otimes A_2)^2\ne 0\). Then either both \(A_1\) and \(A_2\) are commutative or both are zero-square algebras.

Proof

Assume that \(A_1\) or \(A_2\) is not a zero-square algebra. Without loss of generality, we may assume that \(A_1\) is not zero-square and take \(a\in A_1\) such that \(a^2\ne 0\). Then for any \(x,y\in A_2\) we have \(a^2\otimes xy=(a\otimes x)(a\otimes y)=(a\otimes y)(a\otimes x)=a^2\otimes yx\) whence \(a^2\otimes (xy-yx)=0\), which implies \(xy=yx\). So \(A_2\) is commutative. We know that \(A_2^2\ne 0\) because \((A_1\otimes A_2)^2\ne 0\). Take \(b_1,b_2\in A_2\) such that \(b_1b_2\ne 0\). Then for any \(a_1,a_2\in A_1\) we have

\[ a_1a_2\otimes b_1b_2=(a_1\otimes b_1)(a_2\otimes b_2)=(a_2\otimes b_2)(a_1\otimes b_1)=a_2a_1\otimes b_2b_1; \]

hence, by commutativity of \(A_2\), \((a_1a_2-a_2a_1)\otimes b_1b_2=0\) and therefore \(a_1a_2=a_2a_1\), so that \(A_1\) is also commutative.

\(\square \)

Remark 2.11

Recall that if we have two \({\mathbb {K}}\)-vector spaces V and W and two inner products (symmetric bilinear forms) \(\langle \ , \ \rangle _1:V\times V \rightarrow {\mathbb {K}}\) and \(\langle \ , \ \rangle _2:W\times W \rightarrow {\mathbb {K}}\), then there is an inner product \(\langle \ , \ \rangle _1 \otimes \langle \ , \ \rangle _2 :(V \otimes W) \times (V \otimes W)\rightarrow {\mathbb {K}}\) such that \((x \otimes y, x' \otimes y') \mapsto \langle x,x'\rangle _1 \langle y,y'\rangle _2\). Fixing bases \(B_1\) and \(B_2\) in V and W, respectively, the Gram matrix of the inner product \(\langle \ , \ \rangle _1 \otimes \langle \ , \ \rangle _2\) relative to the basis \(B_1\otimes B_2\) is the Kronecker product of the Gram matrices of the factors:

\[ M_{B_1\otimes B_2}(\langle \ , \ \rangle _1 \otimes \langle \ , \ \rangle _2)=M_{B_1}(\langle \ , \ \rangle _1)\otimes M_{B_2}(\langle \ , \ \rangle _2). \]

Remark 2.12

Recall that if V and W are \({\mathbb {K}}\)-vector spaces and \(\sum _{i\in I } v_i\otimes w_i=0\) with \(\{w_i\,|\,i\in I\}\) a linearly independent subset of W and \(v_i\in V\) for all \(i\in I\), then \(v_i=0\) for all \(i\in I\).

Lemma 2.13

Let \(A_1\) and \(A_2\) be two \({\mathbb {K}}\)-vector spaces of arbitrary dimension. If \(U_1, V_1\) are subspaces of \(A_1\) and \(U_2, V_2\) are subspaces of \(A_2\) with \(U_i \subset V_i\) (\(i=1,2\)), then \(U_1\otimes U_2 = V_1 \otimes V_2\) if and only if \(U_1=V_1\) and \(U_2=V_2\).

Proof

Consider \(U_1=\textrm{span}\{a_i\,|\,i\in I\}\), \(V_1=\textrm{span}(\{a_i\,|\, i\in I\} {\dot{\bigcup }}\{a_j\,|\,j\in J\})\), \(A_1=\textrm{span}(\{a_i\,|\, i\in I\}{\dot{\bigcup }} \{a_j\,|\,j\in J\}{\dot{\bigcup }}\{a_h\,|\, h\in H\})\) and \(U_2=\textrm{span}\{b_p\,|\,p\in P\}\), \(V_2=\textrm{span}(\{b_p\,|\, p\in P\} {\dot{\bigcup }}\{b_q\,|\,q\in Q\})\), \(A_2=\textrm{span}(\{b_p\,|\, p\in P\}{\dot{\bigcup }} \{b_q\,|\,q\in Q\}{\dot{\bigcup }}\{b_r\,|\, r\in R\})\). Now we take \(x\in V_1\) and \(b_k\in V_2\) with \(k\in P\) and consider \(x\otimes b_k\in V_1\otimes V_2=U_1\otimes U_2\). So \(x\otimes b_k=\displaystyle \sum _{(i,p)\in I\times P}\lambda _{ip}^{(k)}a_i\otimes b_p\). If we write \(z_{kp}=\sum _{i\in I}\lambda _{ip}^{(k)}a_i\), then \(x\otimes b_{k}=\sum _{p\in P}z_{kp}\otimes b_p\). So \((x-z_{kk})\otimes b_k-\displaystyle \sum _{p\in P, p\ne k} z_{kp}\otimes b_p=0\).

As \(\{b_p\,|\,p\in P\}\) is a basis of \(U_2\), Remark 2.12 gives \(x=z_{kk}\in U_1\), so \(V_1\subset U_1\). Analogously, it can be proved that \(V_2\subset U_2\). \(\square \)

For finite-dimensional \({\mathbb {K}}\)-vector spaces the previous lemma can be proved by using a dimensional argument.

Proposition 2.14

Let \(A_1\) and \(A_2\) be two \({\mathbb {K}}\)-algebras and consider the tensor product \(A_1 \otimes A_2\). Then

(i) \(A_1\) and \(A_2\) are perfect algebras if and only if \(A_1 \otimes A_2\) is a perfect algebra.

(ii) \(A_1\) and \(A_2\) are both simple algebras if \(A_1\otimes A_2\) is simple.

Proof

For the first item, let \(B_1=\{e_i\}_{i \in \Lambda }\) be a basis of \(A_1\) and \(B_2=\{f_j\}_{j \in \Gamma }\) a basis of \(A_2\). We consider \(B_1\otimes B_2=\{e_i\otimes f_j\}_{i\in \Lambda , j\in \Gamma }\) a basis of \(A_1\otimes A_2\). If we suppose that \(A_1\) and \(A_2\) are perfect, then \(A_1=A_1^2\) and \(A_2=A_2^2\). Moreover \(e_i=\sum _{q}\lambda _{qi}e_q^2\) and \(f_j=\sum _{t}\mu _{tj} f_t^2\). To see that \(A_1\otimes A_2\) is perfect we need to show that \(A_1\otimes A_2\subset (A_1\otimes A_2)^2\). Indeed, as \(e_i\otimes f_j=\sum _{t,q} \lambda _{qi} \, \mu _{tj}e_q^2\otimes f_t^2=\sum _{t,q} \lambda _{qi} \, \mu _{tj}(e_q\otimes f_t)^2\in (A_1\otimes A_2)^2\), then we have the statement.

For the converse, we first prove that \((A_1\otimes A_2)^2=A_1^2\otimes A_2^2\). To show the inclusion \((A_1\otimes A_2)^2\subset A_1^2\otimes A_2^2\) we take \(z\in (A_1\otimes A_2)^2\), then \(z=\sum _{i,k,j,l}\lambda _{ijkl}(e_i\otimes f_j)(e_k\otimes f_l)=\sum _{i,j,k,l}\lambda _{ijkl}e_i e_k\otimes f_j f_l\in A_1^2\otimes A_2^2\).

For the other inclusion, since \(A_1^2\subset A_1\) and \(A_2^2\subset A_2\) then \(A_1^2\otimes A_2^2\subset A_1\otimes A_2\). Consequently, as \(A_1\otimes A_2\) is perfect we have \(A_1^2\otimes A_2^2 \subset A_1\otimes A_2=(A_1\otimes A_2)^2\).

So far we know \((A_1\otimes A_2)^2=A_1^2\otimes A_2^2=A_1 \otimes A_2\). By Lemma 2.13 we get \(A_1^2=A_1\) and \(A_2^2=A_2\) as we wanted.

For the second item, if \(0\ne I\) is a proper ideal of \(A_1\), then \(0\ne I\otimes A_2\triangleleft A_1\otimes A_2\) is also proper by Lemma 2.13. \(\square \)

Recall that if \(M_1 \in {\mathscr {M}}_n({\mathbb {K}})\) and \(M_2\in {\mathscr {M}}_m({\mathbb {K}})\), then \( \vert M_1 \otimes M_2 \vert = \vert M_1 \vert ^m \vert M_2\vert ^n\) (see [26, Corollary p.6]). So, for finite-dimensional evolution algebras, item (i) of Proposition 2.14 is a corollary of the previous formula, since perfectness is equivalent to the structure matrix having nonzero determinant.
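The determinant formula can be checked on a concrete pair of matrices. The following is a minimal sketch with integer entries; the helper names `kron` and `det` and the two sample matrices are assumptions made for this example.

```python
def kron(a, b):
    """Kronecker product of two matrices given as lists of rows."""
    return [[a_ij * b_kl for a_ij in row_a for b_kl in row_b]
            for row_a in a for row_b in b]

def det(m):
    """Determinant by Laplace expansion along the first row (small matrices only)."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det([row[:j] + row[j + 1:] for row in m[1:]])
               for j in range(len(m)))

# Illustrative matrices: M1 is n x n with n = 2, M2 is m x m with m = 3.
M1 = [[1, 2],
      [3, 4]]
M2 = [[2, 0, 1],
      [1, 1, 0],
      [0, 3, 1]]
n, m = len(M1), len(M2)

lhs = det(kron(M1, M2))             # |M1 (x) M2|
rhs = det(M1) ** m * det(M2) ** n   # |M1|^m |M2|^n
print(lhs == rhs)
```

In particular, the Kronecker product has nonzero determinant exactly when both factors do, which is the matrix form of the equivalence in item (i) for evolution algebras.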

Observe that the converse of item (ii) Proposition 2.14 is not true because there are simple algebras (even evolution algebras) whose tensor product is not simple. For instance, take \({\mathbb {K}}\) to be a field of characteristic other than 2 and A to be the 2-dimensional \({\mathbb {K}}\)-algebra with a basis \(\{e_1,e_2\}\) such that \(e_2e_1=e_1e_2=0\), \(e_1^2=e_2\) and \(e_2^2=e_1\). It is easy to check that A is a simple algebra. However, if we consider the vector subspace \(I=\textrm{span}(\{e_1\otimes e_1, e_2 \otimes e_2\})\) of \(A \otimes A\), one can see that I is an ideal of \(A\otimes A\). Then \(A\otimes A\) is not a simple algebra.
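The claim that I is an ideal can be verified mechanically. The sketch below assumes the product basis ordered as \(e_1\otimes e_1, e_1\otimes e_2, e_2\otimes e_1, e_2\otimes e_2\), which is a natural basis of \(A\otimes A\) because \((e_i\otimes e_j)(e_k\otimes e_l)=e_ie_k\otimes e_je_l\); with this ordering the structure matrix of \(A\otimes A\) is the Kronecker product of the structure matrix of A with itself. The function names are hypothetical.

```python
def kron(a, b):
    """Kronecker product of two matrices given as lists of rows."""
    return [[a_ij * b_kl for a_ij in row_a for b_kl in row_b]
            for row_a in a for row_b in b]

# Structure matrix of A relative to {e1, e2}: column i holds the coordinates of e_i^2.
M = [[0, 1],
     [1, 0]]   # e1^2 = e2, e2^2 = e1

# Structure matrix of A (x) A relative to the product basis, since
# (e_i (x) e_j)^2 = e_i^2 (x) e_j^2.
K = kron(M, M)

def is_basis_ideal(structure, indices):
    """True if the span of the natural-basis vectors with the given indices is an ideal.
    In a natural basis, e_i x = x_i e_i^2, so it suffices that the square of each
    chosen basis vector stays inside the span."""
    idx = set(indices)
    n = len(structure)
    return all(structure[k][i] == 0
               for i in idx for k in range(n) if k not in idx)

# I = span{e1 (x) e1, e2 (x) e2} corresponds to positions 0 and 3.
print(is_basis_ideal(K, [0, 3]))
```

Since I has dimension 2 inside the four-dimensional algebra \(A\otimes A\), it is a nonzero proper ideal.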

Remark 2.15

Not every ideal of the tensor product of two evolution algebras is a tensor product of ideals. Consider \(A_1\) any simple evolution \({\mathbb {K}}\)-algebra with \(\dim (A_1)>1\) and \(A_2\) a nonzero evolution \({\mathbb {K}}\)-algebra with \(A_2^2=0\). The tensor product \({\mathbb {K}}\)-algebra \(A_1\otimes A_2\) satisfies \((A_1\otimes A_2)^2=0\); hence, any subspace is an ideal. Take \(x\in A_1 \setminus \{0\}\) and \(y\in A_2\setminus \{0\}\). Then \({\mathbb {K}}(x\otimes y)\) is a one-dimensional ideal of \(A_1\otimes A_2\). However, \({\mathbb {K}}(x\otimes y)\) cannot be written as \(I_1\otimes I_2\) with \(I_1\triangleleft A_1\) and \(I_2\triangleleft A_2\). Indeed, if we had \({\mathbb {K}}(x\otimes y)=I_1\otimes I_2\), then \(\dim (I_1\otimes I_2)=1\) hence \(\dim (I_1)=\dim (I_2)=1\), but \(A_1\) has no ideal of dimension 1 because it is simple and \(\dim (A_1)>1\).

3 The Tensor Product of Evolution Algebras

Now, we will study under which conditions being an evolution algebra is inherited from the tensor product to the factors. We will prove that for the particular case of commutative 4-dimensional evolution \({\mathbb {K}}\)-algebras of nonzero product with \({\mathbb {K}}\) algebraically closed and \(\textrm{char}({\mathbb {K}})\ne 2\), the previously mentioned property holds. One of the tools for proving this is the classification in [21, Table 1]. We also study the annihilator of the tensor product of evolution algebras, relating it to the annihilators of the factors.

The following technical lemma will be used later.

Lemma 3.1

Let V be a finite-dimensional \({\mathbb {K}}\)-vector space and \(f:V\rightarrow V\) linear. If the linear map \(1\otimes f:V\otimes V\rightarrow V\otimes V\) such that \(x\otimes y\mapsto x\otimes f(y)\) is diagonalizable, then f is diagonalizable.

Proof

Fix a basis \(\{e_i\}_{i=1}^n\) of V and let \(\{w_j\}_{j=1}^{n^2}\) be a basis of \(V\otimes V\) such that \((1\otimes f)(w_j)=\lambda _j w_j\) for any j with \(\lambda _j\in {\mathbb {K}}\). If we write \(w_j=\sum _{k} e_k\otimes x_{kj}\) where \(x_{kj}\in V\), then \(\sum _{k} e_k\otimes f(x_{kj})=\sum _k e_k\otimes \lambda _j x_{kj}\) or, equivalently,

\[\sum _{k} e_k\otimes \bigl (f(x_{kj})-\lambda _j x_{kj}\bigr )=0.\]

So \(f(x_{kj})=\lambda _j x_{kj}\) for any k, j. Let us prove that there are n linearly independent elements in the set \(S=\{x_{kj} \ :\ k=1,\ldots ,n,\ j=1,\ldots ,n^2\}\). Every \(w_j\) lies in \(V\otimes \textrm{span}(S)\) and \(\{w_j\}_{j=1}^{n^2}\) is a basis of \(V\otimes V\), so \(V\otimes \textrm{span}(S)=V\otimes V\) and hence \(\textrm{span}(S)=V\). Thus S contains n linearly independent elements, all of them eigenvectors of f, and so f is diagonalizable. \(\square \)

Theorem 3.2

Let \(A_1\) and \(A_2\) be \({\mathbb {K}}\)-algebras.

(i) If \(A_1\) and \(A_2\) are evolution algebras, then \(A_1\otimes A_2\) is also an evolution \({\mathbb {K}}\)-algebra. Furthermore, if \(B_1=\{e_{i}\}_{i \in \Lambda }\) and \(B_2=\{f_{j}\}_{j \in \Gamma }\) are natural bases of \(A_1\) and \(A_2\), respectively, then \(\{e_{i}\otimes f_{j}\}_{i\in \Lambda , j\in \Gamma }\) is a natural basis of \(A_1 \otimes A_2\).

(ii) Let \(A_1\) and \(A_2\) be commutative with \(\textrm{char}({\mathbb {K}})\ne 2\) and let \(A_1\otimes A_2\) be a finite-dimensional evolution algebra. If \(A_1\) and \(A_2\) are locally nondegenerate, then \(A_1\) and \(A_2\) are evolution algebras.

Proof

For item (i), let \(B_1=\{e_{i}\}_{i \in \Lambda }\) and \(B_2=\{f_{j}\}_{j \in \Gamma }\) be natural bases of \(A_1\) and \(A_2\), respectively. We are going to see that the basis \(\{e_{i}\otimes f_{j}\}_{i\in \Lambda , j\in \Gamma }\) is a natural basis of \(A_1 \otimes A_2\), i.e., \((e_i\otimes f_j)(e_k \otimes f_s)=e_i e_k \otimes f_j f_s=0\) for \(i \ne k\) or \(j\ne s\). Let \((h,g) \in A_1^*\times A_2^*\), then \((e_i\otimes f_j)(e_k \otimes f_s)(h,g)=h(e_i e_k)g(f_j f_s)=0\) if \(i \ne k\) or \(j\ne s\) since \(B_1\) and \(B_2\) are natural bases and h and g are linear maps. Therefore, as (h, g) is an arbitrary element of \(A_1^*\times A_2^*\), we get that \(e_i e_k \otimes f_j f_s =0\) for \(i \ne k\) or \(j\ne s\).

For the second item, the product in \(A_1\) is given by \(xy=\sum _i\langle x,y\rangle _{1i} a_i\) for some \(a_i\in A_1\) and a collection of inner products \(\langle \ ,\ \rangle _{1i}\) on \(A_1\). Similarly, in \(A_2\) we have \(x'y'=\sum _j\langle x',y'\rangle _{2j}b_j\). We may assume that \(\langle \ ,\ \rangle _{11}\) and \(\langle \ ,\ \rangle _{21}\) are nondegenerate. Then, in \(A_1\otimes A_2\) we have \((x\otimes x')(y\otimes y'):=xy\otimes x'y'= \sum _{ij}\langle x,y\rangle _{1i}\langle x',y'\rangle _{2j}a_i\otimes b_j\). Fixing bases in \(A_1\) and \(A_2\) we consider the Gram matrices \(N_i\) of \(\langle \ ,\ \rangle _{1i}\) and \(H_j\) of \(\langle \ ,\ \rangle _{2j}\). Thus, the Gram matrices of the inner products of \(A_1\otimes A_2\) given by (1), relative to the product basis, are \(M_{ij}:=N_i\otimes H_j\) by Remark 2.11. Moreover, \(M_{11}=N_1\otimes H_1\) is invertible. Then, the matrices \(M_{ij}M_{11}^{-1}\) are diagonalizable and commute (see Remark 2.2). In particular, the subcollection \(M_{1j}M_{11}^{-1}=(N_1\otimes H_j)(N_1^{-1}\otimes H_1^{-1})=1\otimes H_jH_1^{-1}\) (with j ranging) is also a commutative family, and each of its members is diagonalizable. This implies, by Lemma 3.1, that each \(H_jH_1^{-1}\) is diagonalizable, and the commutativity of the \(M_{1j}M_{11}^{-1}\) for different j's implies the commutativity of the different \(H_jH_1^{-1}\)'s. From this, we have that \(A_2\) is an evolution algebra. In a similar fashion, so is \(A_1\). \(\square \)

Remark 3.3

(Structure matrix of tensor product of finite-dimensional evolution algebras) Suppose that \(A_1\) and \(A_2\) are two finite dimensional evolution \({\mathbb {K}}\)-algebras with natural bases \(B_1=\{e_1,\ldots , e_n\}\) and \(B_2=\{f_1,\ldots ,f_m\}\), respectively. Let \(M_{B_1}=(\omega _{ij})\) and \(M_{B_2}=(\beta _{kl})\) be the structure matrices relative to \(B_1\) and \(B_2\), respectively. Then, the structure matrix of the evolution algebra \(A_1\otimes A_2\) relative to the basis \(B_1\otimes B_2=\{e_1\otimes f_1,\ldots ,e_1 \otimes f_m,\ldots ,e_n\otimes f_1, \ldots , e_n \otimes f_m \}\) is the Kronecker product of \(M_{B_1}\) and \(M_{B_2}\), i.e., \(M_{B_1\otimes B_2}=M_{B_1}\otimes M_{B_2}\).
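The identity in Remark 3.3 can be checked numerically on small examples. The following is a minimal sketch in Python (standard library only), not part of the original computations. It assumes the convention that column i of a structure matrix holds the coordinates of \(e_i^2\); the function names and the two sample matrices are hypothetical and chosen only for illustration.

```python
def kron(M, N):
    """Kronecker product of two matrices stored as lists of rows."""
    return [[a * b for a in row_m for b in row_n]
            for row_m in M for row_n in N]


def tensor_structure_matrix(M1, M2):
    """Structure matrix of A1 (x) A2 computed from squares of basis elements.

    Assumed convention: column i of M1 holds the coordinates of e_i^2, and
    likewise for M2. The product basis is ordered
    e_1 (x) f_1, ..., e_1 (x) f_m, ..., e_n (x) f_1, ..., e_n (x) f_m.
    """
    n, m = len(M1), len(M2)
    size = n * m
    result = [[0] * size for _ in range(size)]
    for i in range(n):
        for j in range(m):
            col = i * m + j  # position of e_i (x) f_j in the product basis
            # (e_i (x) f_j)^2 = e_i^2 (x) f_j^2, expanded by bilinearity.
            for s in range(n):
                for t in range(m):
                    result[s * m + t][col] = M1[s][i] * M2[t][j]
    return result


# Hypothetical structure matrices, chosen only to exercise the identity.
M1 = [[1, 2], [3, 4]]
M2 = [[0, 5, 0], [6, 7, 0], [1, 0, 2]]
print(tensor_structure_matrix(M1, M2) == kron(M1, M2))
```

With the row convention (row i holding the coordinates of \(e_i^2\)) the same check goes through after transposing both matrices, since transposition commutes with the Kronecker product.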

Lemma 3.4

Let \(A_1\) and \(A_2\) be evolution algebras, \(B_1\) and \(B'_1\) natural bases of \(A_1\) and \(B_2\) and \(B'_2\) natural bases of \(A_2\). We denote by P the change of basis matrix from \({B_1}\) to \({B'_1}\) and by Q the change of basis matrix from \({B_2}\) to \({B'_2}\). Then \(P \otimes Q\) is a change of basis matrix from \(B_1 \otimes B_2\) to \(B'_1 \otimes B'_2\), that is,

\[M_{B'_1\otimes B'_2}=(P\otimes Q )^{-1}M_{B_1\otimes B_2}(P\otimes Q )^{(2)},\]

where \((P\otimes Q )^{(2)}\) is the Hadamard product square of \(P \otimes Q\).

Proof

The proof is a consequence of the relation between two structure matrices of an evolution algebra and the fact that if P and Q are square matrices, then \((P\otimes Q)^{(2)}=P^{(2)}\otimes Q^{(2)} \) and \((P\otimes Q)^{-1}= P^{-1}\otimes Q^{-1}\). \(\square \)
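The two matrix identities used in this proof are easy to test with exact arithmetic. The sketch below (Python, standard library only) is an illustration under stated assumptions: it takes the transformation rule for structure matrices in the form \(M_{B'}=P^{-1}M_BP^{(2)}\), and it uses arbitrary invertible sample matrices P and Q that are not claimed to come from actual natural bases, so it only checks the matrix identities themselves. All function names are hypothetical.

```python
from fractions import Fraction


def kron(M, N):
    """Kronecker product of two matrices stored as lists of rows."""
    return [[a * b for a in row_m for b in row_n]
            for row_m in M for row_n in N]


def matmul(A, B):
    """Ordinary matrix product."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]


def hadamard_square(P):
    """Entrywise square P^{(2)}."""
    return [[x * x for x in row] for row in P]


def inverse(P):
    """Gauss-Jordan inverse over the rationals (P is assumed invertible)."""
    n = len(P)
    aug = [[Fraction(x) for x in row] + [Fraction(int(r == c)) for c in range(n)]
           for r, row in enumerate(P)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        lead = aug[col][col]
        aug[col] = [x / lead for x in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def change_structure_matrix(M, P):
    """P^{-1} M P^{(2)}, the assumed transformation rule."""
    return matmul(matmul(inverse(P), M), hadamard_square(P))


# Hypothetical sample data.
P, Q = [[1, 2], [0, 3]], [[2, 0], [1, 1]]
M1, M2 = [[0, 1], [1, 0]], [[1, 2], [3, 4]]

lhs = change_structure_matrix(kron(M1, M2), kron(P, Q))
rhs = kron(change_structure_matrix(M1, P), change_structure_matrix(M2, Q))
print(lhs == rhs)
```

Exact rational arithmetic is used instead of floating point so that the comparison of both sides is an honest equality test.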

However, the converse is not true in general: not every change of basis between natural bases of a tensor product is tensorially decomposable. For example, consider the 2-dimensional evolution algebra A with natural basis \(\{e_1,e_2\}\) and product \(e_1^2=e_2\) and \(e_2^2=e_1\), and the 2-dimensional evolution algebra \(A^{'}\) with natural basis \(\{e^{'}_1,e^{'}_2\}\) and product \((e^{'}_1)^2=e^{'}_2\) and \((e^{'}_2)^2=0\). Then the evolution algebra \(A\otimes A^{'}\) has multiplication \((e_1\otimes e^{'}_1)^2=e_2\otimes e^{'}_2\), \((e_2\otimes e^{'}_1)^2=e_1\otimes e^{'}_2\) and \((e_1\otimes e^{'}_2)^2=(e_2\otimes e^{'}_2)^2=0\). If we consider the change of basis \(f_1=e_1\otimes e^{'}_1+e_1\otimes e^{'}_2\), \(f_2=e_1\otimes e^{'}_2+e_2\otimes e^{'}_2\), \(f_3=e_1\otimes e^{'}_2+e_2\otimes e^{'}_1\) and \(f_4=e_1\otimes e^{'}_2\), it is easy to check that \(\{f_k\}_{k=1}^4\) is again a natural basis, but that the change of basis matrix from \(\{f_k\}_{k=1}^4\) to \(\{e_i\otimes e^{'}_j\}_{i,j=1}^2\) is not tensorially decomposable.
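The indecomposability claim can be tested mechanically. The sketch below (Python, standard library only) uses a rearrangement criterion that is not taken from the paper: a \(4\times 4\) matrix is a Kronecker product of two \(2\times 2\) matrices exactly when its four \(2\times 2\) blocks are pairwise proportional, that is, when the matrix whose rows are the flattened blocks has rank at most one. The function names are hypothetical. The matrix C stores the coordinates of \(f_1,\ldots ,f_4\) as columns; since the inverse of an invertible Kronecker product is again a Kronecker product, testing C or its inverse gives the same answer.

```python
from itertools import combinations


def rearrange(C):
    """Rows are the four 2x2 blocks of the 4x4 matrix C, each flattened."""
    return [[C[2 * a + r][2 * b + c] for r in range(2) for c in range(2)]
            for a in range(2) for b in range(2)]


def is_kronecker_of_2x2(C):
    """True when C = P (x) Q for some 2x2 matrices P and Q.

    The rearranged matrix has rank <= 1 iff all its 2x2 minors vanish.
    """
    R = rearrange(C)
    return all(R[i][k] * R[j][l] == R[i][l] * R[j][k]
               for i, j in combinations(range(4), 2)
               for k, l in combinations(range(4), 2))


# Columns: coordinates of f_1, ..., f_4 in the ordered basis
# e1(x)e1', e1(x)e2', e2(x)e1', e2(x)e2'.
C = [[1, 0, 0, 0],
     [1, 1, 1, 1],
     [0, 0, 1, 0],
     [0, 1, 0, 0]]

# For contrast: [[1, 2], [3, 4]] (x) [[0, 1], [1, 0]], written out by hand.
D = [[0, 1, 0, 2],
     [1, 0, 2, 0],
     [0, 3, 0, 4],
     [3, 0, 4, 0]]

print(is_kronecker_of_2x2(C), is_kronecker_of_2x2(D))
```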

There are tensorially decomposable evolution algebras that can be written as a tensor product of nonevolution algebras. Moreover, since the decomposition of an algebra into tensor product is not unique, it may happen that some evolution algebra A splits as \(A_1\otimes A_2\) with none of the \(A_i\) an evolution algebra and also \(A=A'_1\otimes A'_2\) where both \(A'_i\) are evolution algebras. This is the case of the following example.

Example 3.5

Let \({\mathbb {K}}\) be a field with characteristic different from two (we denote by \(\textrm{char}({\mathbb {K}})\) the characteristic of \({\mathbb {K}}\)) and consider the 2-dimensional \({\mathbb {K}}\)-algebra \(\textbf{B}_3\), which appears in Table 1 of [21], with basis \(\{e_1,e_2\}\) such that \(e_1^2=e_2^2=0\) and \(e_1e_2=e_2=-e_2e_1\). Using Definition 2.1, it can be checked that this is not an evolution algebra by computing the evolution test ideal I and seeing that \(1\in I\) (see [6] and [7]). Indeed, the evolution test ideal is \(I=(x_{11}x_{22}-x_{12}x_{21},z(x_{11}x_{22}-x_{12}x_{21})-1)\triangleleft {\mathbb {K}}[x_{11},x_{12},x_{21},x_{22},z]\) and clearly \(1\in I\). However, \(A:= \textbf{B}_3\otimes \textbf{B}_3\) is an evolution algebra applying again the method that involves the evolution test ideal. First, we consider \(u_1=e_1\otimes e_1\), \(u_2=e_1\otimes e_2\), \(u_3=e_2\otimes e_1\) and \(u_4=e_2\otimes e_2\). The multiplication table of A relative to the basis \(\{u_i\}_1^4\) is

| \(\cdot \) | \(u_1\) | \(u_2\) | \(u_3\) | \(u_4\) |
|---|---|---|---|---|
| \(u_1\) | 0 | 0 | 0 | \(u_4\) |
| \(u_2\) | 0 | 0 | \(-u_4\) | 0 |
| \(u_3\) | 0 | \(-u_4\) | 0 | 0 |
| \(u_4\) | \(u_4\) | 0 | 0 | 0 |

and then a natural basis for A is \(B=\{u_1+u_4,u_1-u_4,u_2+u_3,u_2-u_3\}\). Hence, there is a four-dimensional evolution algebra that is the tensor product of two nonevolution algebras of dimension two. We can see that the structure matrix \(M_B\) is tensorially decomposable into two structure matrices of evolution algebras. Under the assumption \(\textrm{char}({\mathbb {K}})\ne 2\), it seems that A is the unique 4-dimensional algebra (up to isomorphism) that splits as a tensor product of 2-dimensional anticommutative algebras (the unique 2-dimensional algebra in which any element has zero square is \(\textbf{B}_3\) using Kaygorodov and Volkov classification for algebraically closed fields in [21]). Observe that A is not simple. Hence, it is worth investigating if there exist 4-dimensional simple evolution algebras that split as the tensor product of 2-dimensional algebras that are not evolution algebras.
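The claim that B is a natural basis can be verified directly from the product of \(\textbf{B}_3\). The following Python sketch (standard library only; the helper names are hypothetical) works over the integers, so the resulting identities hold in any characteristic, while the linear independence of B still requires \(\textrm{char}({\mathbb {K}})\ne 2\).

```python
def mul_B3(x, y):
    """Product in B_3: e1*e2 = e2, e2*e1 = -e2, e1^2 = e2^2 = 0."""
    return (0, x[0] * y[1] - x[1] * y[0])


E = [(1, 0), (0, 1)]  # coordinates of e1 and e2


def mul_A(x, y):
    """Product in A = B_3 (x) B_3.

    Vectors are written in the basis u1, u2, u3, u4; the index 2*a + b
    stands for e_{a+1} (x) e_{b+1}.
    """
    out = [0, 0, 0, 0]
    for a in range(2):
        for b in range(2):
            for c in range(2):
                for d in range(2):
                    coeff = x[2 * a + b] * y[2 * c + d]
                    left = mul_B3(E[a], E[c])    # e_a * e_c
                    right = mul_B3(E[b], E[d])   # e_b * e_d
                    for s in range(2):
                        for t in range(2):
                            out[2 * s + t] += coeff * left[s] * right[t]
    return out


# B = {u1 + u4, u1 - u4, u2 + u3, u2 - u3}
B = [[1, 0, 0, 1], [1, 0, 0, -1], [0, 1, 1, 0], [0, 1, -1, 0]]

cross = [mul_A(B[i], B[j]) for i in range(4) for j in range(4) if i != j]
squares = [mul_A(v, v) for v in B]
print(all(p == [0, 0, 0, 0] for p in cross))
print(squares)
```

All products of distinct elements of B vanish, and every square is a multiple of \(u_4\), which is consistent with the observation that A is not simple.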

Now, we assume that \(A=A_1\otimes A_2\) is a simple evolution algebra. If either \(A_1\) or \(A_2\) is one-dimensional, then the decomposition is trivial (the ground field, which is an evolution algebra, tensored with an algebra isomorphic to A, hence an evolution algebra). Next, we suppose that \(\dim (A_i)=2\) for \(i=1,2\). By Lemma 2.10, we know that both \(A_1\) and \(A_2\) are commutative or both are zero-square algebras, and that they are simple because their tensor product is simple (see item (ii) Proposition 2.14). We assume, for now, that \({\mathbb {K}}\) is algebraically closed. For a more self-contained argument, we reproduce here the nonzero products of the algebras given in [21, Table 1], keeping the notation of its authors, to whom we are indebted.

Next, we single out from Table 1 those algebras that are commutative, and we record further algebraic properties for completeness: concretely, which ones are simple, perfect and evolution algebras. To illustrate the tools that we use for checking the evolution character of an algebra, we give two examples.

For \(\textbf{A}_4(0)\) with \(\textrm{char}({\mathbb {K}})=2\), it is enough to write the equations that a natural basis should verify (and realize that the system is inconsistent). Consider \(\{e_1,e_2\}\) the basis of \(\textbf{A}_4(0)\) given in Table 1. We look for a natural basis \(\{u_1,u_2\}\) with \(u_1=x_{11}e_1+x_{12}e_2\), \( u_2=x_{21}e_1+x_{22}e_2\) such that \(x_{11}x_{22}+x_{12}x_{21}\ne 0\) and \(u_1u_2=u_2u_1=0\). Hence, \(x_{11}x_{21}e_1^2+(x_{11}x_{22}+x_{12}x_{21})e_1e_2=0\), that is, \(x_{11}x_{21}e_2+(x_{11}x_{22}+x_{12}x_{21})e_1=0\). So, for \(\{u_1,u_2\}\) to be a natural basis, the variables \(x_{ij}\) (\(i,j=1,2\)) have to satisfy the conditions \(\left\{ \begin{matrix} x_{11}x_{21}=0 \\ x_{11}x_{22}+x_{12}x_{21}=0\\ x_{11}x_{22}+x_{12}x_{21}\ne 0 \end{matrix}\right. \). This system is inconsistent, so \(\textbf{A}_4(0)\) is not an evolution algebra. Analogously, for \(\textbf{D}_3(\alpha ,\alpha )\) and \(\textbf{E}_3(\frac{1}{2},\frac{1}{2},\gamma )\), we also obtain inconsistent systems.

Now, we check that \(\textbf{A}_2\) is an evolution algebra when \(\textrm{char}({\mathbb {K}})=2\). To check whether an algebra is an evolution algebra or not, we can use the evolution test ideal (see [6] and Definition 2.1), computing the reduced Gröbner basis of that ideal. Consider \(\{e_1,e_2\}\) the basis of \(\textbf{A}_2\) given in Table 1. As before, we look for a natural basis \(\{u_1,u_2\}\) with \(u_1=x_{11}e_1+x_{12}e_2\), \( u_2=x_{21}e_1+x_{22}e_2\) such that \(x_{11}x_{22}+x_{12}x_{21}\ne 0\) and \(u_1u_2=u_2u_1=0\). By imposing \(u_1 u_2=u_2 u_1=0\) we get that \(x_{11}x_{21}+x_{11}x_{22}+x_{12}x_{21}=0\). Moreover, \(z(x_{11}x_{22}+x_{12}x_{21})=1\). So, we get the ideal \(\textrm{I}=(x_{11}x_{21}+x_{11}x_{22}+x_{12}x_{21}, z(x_{11}x_{22}+x_{12}x_{21})-1)=(x_{11}x_{21}+x_{11}x_{22}+x_{12}x_{21}, z x_{11}x_{21}-1)\). We compute the reduced Gröbner basis of \(\mathrm I\) by using the software Singular/4.2.1 under the computation server picasso.scbi.uma.es. Since Singular does not work with subindices, we replaced the variables \(x_{ij}\) by xij.

Since the reduced Gröbner basis of \(\mathrm I\) obtained is not \(\{1\}\) and \({\mathbb {K}}\) is algebraically closed, the ideal \(\mathrm I\) has a zero. Then \(\textbf{A}_2\) is an evolution algebra. Indeed, one can see that a solution is \(x_{12}=0\) and the other variables \(x_{ij}=1\).
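The stated solution can be confirmed without any computer algebra system. The Python sketch below (standard library only) evaluates the two generators of \(\mathrm I\) modulo 2 and enumerates all zeros with coordinates in the prime field \({\mathbb {F}}_2\). It is only a sanity check: a zero over \({\mathbb {F}}_2\) is also a zero over any field of characteristic 2, but the enumeration says nothing about zeros with coordinates outside the prime field. The function name is hypothetical.

```python
from itertools import product


def ideal_generators(x11, x12, x21, x22, z):
    """Values modulo 2 of the two generators of the ideal I from the text."""
    g1 = (x11 * x21 + x11 * x22 + x12 * x21) % 2
    g2 = (z * (x11 * x22 + x12 * x21) - 1) % 2
    return g1, g2


# All zeros of I with coordinates (x11, x12, x21, x22, z) in F_2.
solutions = [v for v in product((0, 1), repeat=5)
             if ideal_generators(*v) == (0, 0)]
print(solutions)
```

The point \(x_{12}=0\), \(x_{11}=x_{21}=x_{22}=z=1\) appears in the list, which corresponds to the natural basis \(u_1=e_1\), \(u_2=e_1+e_2\).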

Having given these two examples, and after all the corresponding computations, we are in a position to display the following table with all the information obtained.

\(\begin{array}{l} \textbf{A}_1(\frac{1}{2}) \\ \text {if}\ \textrm{char}({\mathbb {K}})\ne 2\\ \text {Nonsimple}\\ \text {Perfect}\\ \text {Nonevolution} \end{array}\) | \(\begin{array}{l} \textbf{A}_2\\ \ \text {if}\ \textrm{char}({\mathbb {K}})=2\\ \text {Nonsimple}\\ \text { Non perfect}\\ \text {Evolution}\end{array}\) | \(\begin{array}{l} { \textbf{A}}_3\\ \text {Nonsimple}\\ \text {Non perfect}\\ \text {Evolution}\end{array}\) | \(\begin{array}{l} \textbf{A}_4(0)\ \\ \text {if}\ \textrm{char}({\mathbb {K}})=2\\ \text {Simple}\\ \text {Nonevolution}\end{array}\) |

\(\begin{array}{l} \textbf{B}_2(\frac{1}{2})\\ \text {if}\ \textrm{char}({\mathbb {K}})=2\\ \text {Nonsimple}\\ \text {Nonperfect}\\ \text {Evolution}\end{array}\) | \(\begin{array}{l} \textbf{B}_3\\ \ \text {if}\ \textrm{char}({\mathbb {K}})=2\\ \text {Non simple}\\ \text {Nonperfect}\\ \text {Evolution} \end{array}\) | \(\begin{array}{l} \textbf{C}(\frac{1}{2},0)\ \\ \text {if}\ \textrm{char}({\mathbb {K}})\ne 2 \\ \text {Simple}\\ \text {Evolution} \end{array}\) | \(\begin{array}{l} \textbf{D}_1(\frac{1}{2},0)\\ \text {if}\ \textrm{char}({\mathbb {K}})\ne 2\\ \text {Nonsimple}\\ \text {Nonperfect} \\ \text {Evolution}\end{array}\) |

\(\begin{array}{l} \textbf{D}_2(\alpha ,\alpha ), \alpha \ne \frac{1}{2}\\ \text {Nonsimple}\\ \text {Perfect} \\ \text {Non evolution if}\,\, \alpha \ne 0 \end{array}\) | \(\begin{array}{l} \textbf{D}_3(\alpha ,\alpha )\text { if}\ \textrm{char}({\mathbb {K}})=2\\ \text {Simple if}\ \alpha \ne 0 \\ \text {Nonevolution} \end{array}\) |

\(\begin{array}{l} \textbf{E}_1(\alpha ,\beta ,\alpha ,\beta ), 4\alpha \beta \ne 1, 2\beta \ne 1, 2\alpha \ne 1 \\ \text {Simple if } \alpha +\beta \ne 1; \, \, \alpha , \beta \ne 0 \\ \text {Evolution}\end{array}\) | \(\begin{array}{l} \textbf{E}_2(\frac{1}{2},\beta ,\beta ) \\ \text {if}\ \textrm{char}({\mathbb {K}})\ne 2 \\ \text {Simple if }2\beta \ne 1,\beta \ne 0\\ \text {Evolution} \end{array}\) |

Observe that \(\textbf{B}_3\) of Table 2 is the only two-dimensional nonsimple zero-square algebra. The only commutative simple nonevolution algebras of the above table are \(\textbf{A}_4(0)\), \(\textbf{D}_3(\alpha ,\alpha )\) and \(\textbf{E}_3(\frac{1}{2},\frac{1}{2},\gamma )\).

We summarize all the information about simple algebras in the following table.

| Simple algebra | Evolution | Nat. Basis \(\{e_1,e_2\}\) |
|---|---|---|
| \(\textbf{A}_4(0)\), \(\textrm{char}({\mathbb {K}})=2\) | FALSE | |
| \(\textbf{C}(\frac{1}{2},0)\), \(\textrm{char}({\mathbb {K}})\ne 2\) | TRUE | |
| \(\begin{array}{l}{} \textbf{D}_3(\alpha ,\alpha ), \textrm{char}({\mathbb {K}})=2\\ \alpha \ne 0\end{array}\) | FALSE | |
| \(\begin{array}{l}{} \textbf{E}_1(\alpha ,\beta ,\alpha ,\beta ), \\ 4\alpha \beta \ne 1, 2\beta \ne 1, 2\alpha \ne 1; \\ \alpha +\beta \ne 1; \alpha , \beta \ne 0\end{array}\) | TRUE | |
| \(\begin{array}{l}{} \textbf{E}_2(\frac{1}{2},\beta ,\beta ), \textrm{char}({\mathbb {K}})\ne 2\\ 2\beta \ne 1,\beta \ne 0\end{array}\) | TRUE | |
| \(\begin{array}{l}{} \textbf{E}_3(\frac{1}{2},\frac{1}{2},\gamma ), \textrm{char}({\mathbb {K}})\ne 2\\ \gamma \ne 0,1\end{array}\) | FALSE | |

Next, we investigate whether or not there are 4-dimensional simple evolution algebras A that split as the tensor product of two necessarily simple nonevolution algebras. For this purpose we apply the simultaneous diagonalization algorithm explained in [6]. Thus in the decomposition \(A=A_1\otimes A_2\) we have both \(A_1,A_2\) isomorphic either to \(\textbf{A}_4(0)\), \(\textbf{D}_3(\alpha ,\alpha )\) or to \(\textbf{E}_3(\frac{1}{2},\frac{1}{2},\gamma )\). One can check that none of the algebras \(\textbf{A}_4(0)\otimes \textbf{A}_4(0)\), \(\textbf{A}_4(0)\otimes \textbf{D}_3(\alpha ,\alpha )\), \(\textbf{D}_3(\alpha ,\alpha )\otimes \textbf{D}_3(\alpha ,\alpha )\) and \(\textbf{E}_3(\frac{1}{2},\frac{1}{2},\gamma )\otimes \textbf{E}_3(\frac{1}{2},\frac{1}{2},\gamma )\) is an evolution algebra. For the cases \(\textbf{A}_4(0)\otimes \textbf{A}_4(0)\), \(\textbf{A}_4(0)\otimes \textbf{D}_3(\alpha ,\alpha )\) and \(\textbf{D}_3(\alpha ,\alpha )\otimes \textbf{D}_3(\alpha ,\alpha )\) we use again the evolution test ideal. As before, we use the software Singular/4.2.1 under the computation server picasso.scbi.uma.es to get that the reduced Gröbner basis of the evolution test ideal \(\mathrm I\) of \(\textbf{A}_4(0)\otimes \textbf{A}_4(0)\) is \(\{1\}\) and so, this is not an evolution algebra. Indeed, the multiplication table of this algebra is

| \(\cdot \) | \(u_1\) | \(u_2\) | \(u_3\) | \(u_4\) |
|---|---|---|---|---|
| \(u_1\) | \(u_4\) | \(u_3\) | \(u_2\) | \(u_1\) |
| \(u_2\) | \(u_3\) | 0 | \(u_1\) | 0 |
| \(u_3\) | \(u_2\) | \(u_1\) | 0 | 0 |
| \(u_4\) | \(u_1\) | 0 | 0 | 0 |

where \(u_1=e_1\otimes e_1\), \(u_2=e_1\otimes e_2\), \(u_3=e_2\otimes e_1\) and \(u_4=e_2\otimes e_2\). Next, we define new generators \(w_i=\sum _j a_{ij} u_j\) and impose the conditions \(w_iw_j=0\) (\(i<j\)) and \(\det (a_{ij})\ne 0\). We define a ring R of characteristic 2 in the 17 variables \(a_{ij}\) (\(i,j=1,\ldots ,4\)) and z. The variable z is used in the polynomial \(1-\det (a_{ij})z\). This gives the ideal \(\mathrm I\) in the Singular code below.
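The multiplication table above can be recomputed from the product of \(\textbf{A}_4(0)\). The Python sketch below (standard library only) is not the Singular computation referred to in the text. It assumes the products \(e_1^2=e_2\), \(e_1e_2=e_2e_1=e_1\) and \(e_2^2=0\), which is how the equations displayed earlier for \(\textbf{A}_4(0)\) in characteristic 2 read; the names in the code are hypothetical.

```python
# Products of basis elements of A_4(0) in characteristic 2, as assumed above:
# e1^2 = e2, e1*e2 = e2*e1 = e1, e2^2 = 0. None encodes the zero product.
A4 = {(1, 1): 2, (1, 2): 1, (2, 1): 1, (2, 2): None}


def u_index(a, b):
    """Index k such that u_k = e_a (x) e_b."""
    return 2 * (a - 1) + b


def product_table():
    """Entry (i, j) is k when u_i * u_j = u_k, and 0 when the product vanishes."""
    pairs = [(a, b) for a in (1, 2) for b in (1, 2)]  # u_1, u_2, u_3, u_4
    table = []
    for (a, b) in pairs:
        row = []
        for (c, d) in pairs:
            # (e_a (x) e_b)(e_c (x) e_d) = (e_a e_c) (x) (e_b e_d)
            left, right = A4[(a, c)], A4[(b, d)]
            row.append(0 if left is None or right is None
                       else u_index(left, right))
        table.append(row)
    return table


for row in product_table():
    print(row)
```

Every nonzero product of basis elements is again a single basis element with coefficient 1, so no reduction modulo 2 is needed in this particular computation.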

For the case \(\textbf{E}_3(\frac{1}{2},\frac{1}{2},\gamma )\otimes \textbf{E}_3(\frac{1}{2},\frac{1}{2},\gamma )\), we use the procedure of simultaneous orthogonalization outlined in Remark 2.2 instead of the evolution test ideal. The inner products associated with its product as in (1) are given by the following matrices:

We get that \(M_1M_2^{-1}\) is not diagonalizable whence we deduce that \(\textbf{E}_3(\frac{1}{2},\frac{1}{2},\gamma )\otimes \textbf{E}_3(\frac{1}{2},\frac{1}{2},\gamma )\) is not an evolution algebra. Moreover, we have checked that \(\textbf{E}_3(\frac{1}{2},\frac{1}{2},\gamma )\otimes \textbf{C}(\frac{1}{2},0)\), \(\textbf{E}_3(\frac{1}{2},\frac{1}{2},\gamma )\otimes \textbf{E}_1(\alpha ,\beta ,\alpha ,\beta )\) and \(\textbf{E}_3(\frac{1}{2},\frac{1}{2},\gamma )\otimes \textbf{E}_2(\frac{1}{2},\beta ,\beta )\) are not evolution algebras.

Then we have the following result:

Proposition 3.6

If \({\mathbb {K}}\) is algebraically closed and \(A_1\otimes A_2\) is a four-dimensional simple evolution algebra, then both factors \(A_1\) and \(A_2\) are evolution algebras.

By adding \(\textrm{char}({\mathbb {K}})\ne 2\) in the next theorem, we can give a similar result eliminating the condition of simplicity for the tensor product.

Theorem 3.7

Let \(A_i\) (\(i=1,2\)) be \({\mathbb {K}}\)-algebras with \({\mathbb {K}}\) algebraically closed and \(\textrm{char}({\mathbb {K}})\ne 2\). Assume that \(A_1\otimes A_2\) is a nontrivial \({\mathbb {K}}\)-evolution algebra with \(\dim (A_1\otimes A_2)=4\) and both \(A_i\) are commutative algebras. Then \(A_1\) and \(A_2\) are evolution algebras.

Proof

Firstly, we may discard most of the cases in light of Theorem 3.2: if both factors \(A_i\) are locally nondegenerate, Theorem 3.2 implies that each \(A_i\) is an evolution algebra. Otherwise, some \(A_i\) (or both) is not locally nondegenerate. But, if we inspect the table of commutative algebras of dimension 2 (that is, Table 2) we see that the unique (up to isomorphism) algebra that is not locally nondegenerate is \({\textbf{A}}_3\), which is an evolution algebra. This implies that the only remaining cases to study are \({\textbf{A}}_3\otimes {A}_2\) where \({A}_2\) is not an evolution algebra. This reduces the cases to \({\textbf{A}}_3\otimes \textbf{A}_1(\frac{1}{2})\), \({\textbf{A}}_3\otimes \textbf{D}_2(\alpha ,\alpha )\) with \(\alpha \notin \{0, \frac{1}{2}\}\), \({\textbf{A}}_3\otimes \textbf{E}_3(\frac{1}{2},\frac{1}{2},\gamma )\) with \(\gamma \ne 0\) and \({\textbf{A}}_3\otimes \textbf{E}_5(\frac{1}{2})\). But applying the algorithm explained in Remark 2.2 for recognizing simultaneous diagonalizability by congruence, we obtain that none of these algebras is an evolution algebra. \(\square \)

Lemma 3.8

Let \(A_1\) and \(A_2\) be two evolution \({\mathbb {K}}\)-algebras. Then \(A_1\) and \(A_2\) are both nondegenerate if and only if \(A_1\otimes A_2\) is nondegenerate.

Proof

Suppose that \(A_1\) and \(A_2\) are both nondegenerate. Take natural bases \(B_1=\{e_i\}_{i\in \Lambda }\) of \(A_1\) and \(B_2=\{f_j\}_{j\in \Gamma }\) of \(A_2\). Assume that \(e_i^2=\sum _s\omega _{si} e_s\) and \(f_j^2=\sum _t\sigma _{tj} f_t\) with \(\omega _{si}, \sigma _{tj} \in {\mathbb {K}}\) for every \(s,i \in \Lambda \) and \(t,j \in \Gamma \). We know that \(B_1\otimes B_2=\{e_i\otimes f_j\}_{i\in \Lambda ,j\in \Gamma }\) is a natural basis of \(A_1\otimes A_2\). If there exists some \((i,j)\in \Lambda \times \Gamma \) such that \((e_i\otimes f_j)^2=0\), then \(0=\sum _{s,t}\omega _{si}\sigma _{tj} e_s\otimes f_t\) and we have \(\omega _{si}\sigma _{tj}=0\) for any s and t. As \(A_1\) is nondegenerate there is \(s\in \Lambda \) such that \(\omega _{si}\ne 0\); therefore \(\sigma _{tj}=0\) for any t, that is, \(f_j^2=0\), contradicting that \(A_2\) is nondegenerate. Hence \(A_1\otimes A_2\) is nondegenerate. Reciprocally, suppose that one of the factors is degenerate, say \(A_1\) (the argument for \(A_2\) is symmetric); thus there exists \(k \in \Lambda \) such that \(e_k^2=0\). Then \((e_k \otimes f_t)^2=0\) for every \(t \in \Gamma \), so \(A_1 \otimes A_2\) is degenerate. \(\square \)

Lemma 3.9

If \(A_i\) are evolution algebras over \({\mathbb {K}}\) (\(i=1,2\)), then

\[\textrm{ann}(A_1\otimes A_2)=\textrm{ann}(A_1)\otimes A_2+A_1\otimes \textrm{ann}(A_2).\]

Proof

The nontrivial inclusion is as follows: take a natural basis \(\{e_i\}_{i\in \Lambda }\) of \(A_1\) and \(\{f_j\}_{j\in \Gamma }\) of \(A_2\). We write \(e_i^2=\sum _n\omega _{ni} e_n\) and similarly \(f_j^2=\sum _m\sigma _{mj} f_m\) with \(\omega _{ni}\), \(\sigma _{mj} \in {\mathbb {K}}\) for all \(n,i \in \Lambda \) and \(m,j \in \Gamma \). Choose an arbitrary \(z\in \textrm{ann}(A_1\otimes A_2)\). So \(z=\sum _{i,j}\lambda _{ij}e_i\otimes f_j\) for some scalars \(\lambda _{ij}\in {\mathbb {K}}\). But \(z(e_p\otimes f_q)=0\) for any \((p,q)\in \Lambda \times \Gamma \). This implies that

\[0=z(e_p\otimes f_q)=\lambda _{pq}\,e_p^2\otimes f_q^2=\lambda _{pq}\sum _{s,t}\omega _{sp}\sigma _{tq}\,e_s\otimes f_t\]

for any \(p\in \Lambda \) and \(q\in \Gamma \). Thus \(\lambda _{pq}\omega _{sp}\sigma _{tq}=0\) for any \(p,s\in \Lambda \) and \(q,t\in \Gamma \). Next, we prove that for any \(p\in \Lambda \) and \(q\in \Gamma \) such that \(\lambda _{pq}\ne 0\) we have \(e_p\in \textrm{ann}(A_1)\) or \(f_q\in \textrm{ann}(A_2)\): indeed, if \(e_p\notin \textrm{ann}(A_1)\), then some \(\omega _{sp}\ne 0\) and so \(\lambda _{pq}\sigma _{tq}=0\) for any \(t\in \Gamma \). Since \(\lambda _{pq}\ne 0\) we conclude that \(\sigma _{tq}=0\) for any t. Thus \(f_q^2=0\) and \(f_q\in \textrm{ann}(A_2)\). In a similar way, it is proved that if \(f_q\notin \textrm{ann}(A_2)\), then \(e_p\in \textrm{ann}(A_1)\). Thus \(z\in \textrm{ann}(A_1)\otimes A_2+A_1\otimes \textrm{ann}(A_2)\).

\(\square \)

4 Directed Graph Associated with a Tensor Product of Evolution Algebras

Let \(G_1=(E_{G_1}^0,E_{G_1}^1,s_{G_1},t_{G_1})\) and \(G_2=(E_{G_2}^0,E_{G_2}^1,s_{G_2},t_{G_2})\) be two directed graphs. We recall that the categorical product of \(G_1\) and \(G_2\) is the directed graph defined by \(G_1 \times G_2 := (E_{G_1}^0 \times E_{G_2}^0, E_{G_1}^1 \times E_{G_2}^1, s, t)\) where \(s(f,g) =(s(f), s(g))\) and \(t(f,g)=(t(f), t(g))\) for any \((f,g)\in E_{G_1}^1 \times E_{G_2}^1\).

Lemma 4.1

Suppose that \(A_1\) and \(A_2\) are evolution algebras with natural bases \(B_1\) and \(B_2\) and associated directed graphs \(G_{1}\) and \(G_{2}\), respectively. Then the directed graph associated with the tensor product evolution algebra relative to \(B_1\otimes B_2\), \(G_{B_1\otimes B_2}\), coincides with the categorical product \(G_1\times G_2\). Moreover, \(M_{G_1\times G_2}=M_{G_1}\otimes M_{G_2}\).

Proof

Suppose that \(M_{B_1}=(c_{ik})\) is the structure matrix of \(A_{1}\) (\(e_i^2=\sum c_{ik}e_k\)), and \(M_{B_2}=(d_{jl})\) is the structure matrix of \(A_{2}\) (\(f_j^2=\sum d_{jl}f_l\)). By Remark 3.3, we know that \(M_{B_1\otimes B_2}=M_{B_1} \otimes M_{B_2}\). This implies that \((e_i\otimes f_j)^2=\sum (c_{ik} d_{jl})e_k\otimes f_l\) and so,

(i) \(c_{ik}\ne 0\) if and only if there exists an edge from \(e_i\) to \(e_k\) in \(G_1\);

(ii) \(d_{jl}\ne 0\) if and only if there exists an edge from \(f_j\) to \(f_l\) in \(G_2\);

(iii) \(c_{ik} d_{jl}\ne 0\) if and only if \(c_{ik}\ne 0\) and \(d_{jl}\ne 0\).

Finally, by the definition of the categorical product of directed graphs, items (i) to (iii) mean that \(G_{B_1\otimes B_2}\) has an edge from \(e_i\otimes f_j\) to \(e_k\otimes f_l\) exactly when \(G_1\times G_2\) has an edge from \((e_i,f_j)\) to \((e_k,f_l)\). These edges are the same that we obtain computing \(M_{G_1}\otimes M_{G_2}\). Hence, \(M_{G_1\times G_2}=M_{G_1}\otimes M_{G_2}\) and therefore, \(G_1\times G_2=G_{B_1\otimes B_2}\). \(\square \)
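The equality \(M_{G_1\times G_2}=M_{G_1}\otimes M_{G_2}\) can be illustrated on a small case. The Python sketch below (standard library only, hypothetical function names) builds the categorical product edge by edge and compares its adjacency matrix with the Kronecker product of the adjacency matrices. The two sample graphs are chosen here for illustration: a 2-cycle, and a single edge between two vertices.

```python
def kron(M, N):
    """Kronecker product of two matrices stored as lists of rows."""
    return [[a * b for a in row_m for b in row_n]
            for row_m in M for row_n in N]


def adjacency(n, edges):
    """0/1 adjacency matrix of a directed graph on the vertices 0, ..., n-1."""
    A = [[0] * n for _ in range(n)]
    for i, k in edges:
        A[i][k] = 1
    return A


def categorical_product(edges1, n2, edges2):
    """Edges of G1 x G2; the vertex (i, j) is stored as the integer i*n2 + j."""
    return {(i * n2 + j, k * n2 + l)
            for (i, k) in edges1 for (j, l) in edges2}


G1 = [(0, 1), (1, 0)]   # graph of e1^2 = e2, e2^2 = e1
G2 = [(0, 1)]           # graph of f1^2 = f2, f2^2 = 0

lhs = adjacency(4, categorical_product(G1, 2, G2))
rhs = kron(adjacency(2, G1), adjacency(2, G2))
print(lhs == rhs)
```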

The following result appears in [23] and concerns strongly connected directed graphs involving the categorical product of graphs.

Theorem 4.2

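([23], Theorem 1.(ii)) Let \(G_1\) and \(G_2\) be strongly connected directed graphs. Let

\[d_i=\gcd \{\text {length of } \mu \ :\ \mu \text { is a closed path in } G_i\}\quad (i=1,2)\]

and

\[d_3=\gcd (d_1,d_2).\]

Then the number of connected components of \(G_1\times G_2\) is \(d_3\).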

Corollary 4.3

Let \(A_i\) (\(i=1,2\)) be evolution algebras whose associated directed graphs are \(G_i\) (\(i=1,2\)), respectively. Then we have:

(i) If \(G_1\) and \(G_2\) are strongly connected directed graphs and have closed paths of coprime length, then \(A_1\otimes A_2\) is nondegenerate and its associated directed graph is connected, so \(A_1\otimes A_2\) is irreducible.

(ii) If \(A_1\otimes A_2\) is nondegenerate and irreducible, then each factor is nondegenerate and irreducible.

Proof

Since \(G_1\) and \(G_2\) are strongly connected directed graphs and have closed paths of coprime length, we have \(d_3=1\), where \(d_3\) is the parameter described in Theorem 4.2. By Theorem 4.2, the categorical product \(G_1\times G_2\) has only one connected component, so it is connected. It is clear that the evolution algebra associated with a strongly connected directed graph is nondegenerate. So, \(A_1\) and \(A_2\) are nondegenerate evolution algebras. By Lemma 3.8, \(A_1\otimes A_2\) is nondegenerate. Moreover, since the directed graph associated with \(A_1\otimes A_2\) is \(G_1 \times G_2\) (by Lemma 4.1) and \(G_1\times G_2\) is connected, this is equivalent to saying that \(A_1\otimes A_2\) is irreducible (see [13, Proposition 2.10]). For the second part of the corollary, we know that each factor is nondegenerate by Lemma 3.8. Moreover, since the product graph is connected, each factor graph has to be connected, whence the corresponding algebra is irreducible by [13, Proposition 2.10]. \(\square \)
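The role of the coprimality hypothesis in item (i) can be seen on directed cycles. The Python sketch below (standard library only, hypothetical function names) counts the connected components of the categorical product of two directed cycles, ignoring edge directions, by means of a union-find structure. For cycles of lengths a and b the count is expected to be \(\gcd (a,b)\), since the product consists of orbits of the map \((i,j)\mapsto (i+1,j+1)\); this is an elementary observation used here for illustration and is not a substitute for the general statement in [23].

```python
def directed_cycle(n):
    """Edges of the directed cycle 0 -> 1 -> ... -> n-1 -> 0."""
    return [(i, (i + 1) % n) for i in range(n)]


def count_components(n1, edges1, n2, edges2):
    """Number of connected components of G1 x G2, ignoring edge directions.

    The product vertex (i, j) is stored as the integer i*n2 + j and the
    components are merged with a union-find structure.
    """
    parent = list(range(n1 * n2))

    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving
            v = parent[v]
        return v

    for (i, k) in edges1:
        for (j, l) in edges2:
            a, b = find(i * n2 + j), find(k * n2 + l)
            if a != b:
                parent[a] = b
    return len({find(v) for v in range(n1 * n2)})


# Coprime lengths: the product is connected.
print(count_components(2, directed_cycle(2), 3, directed_cycle(3)))
# Equal lengths: the product of two 2-cycles splits.
print(count_components(2, directed_cycle(2), 2, directed_cycle(2)))
```

The second call shows that strong connectivity of both factors alone does not force the product to be connected.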

5 Perfect Tensor Product of Evolution Algebras

In this section, we continue investigating how some properties pass from the factors to the tensor product and, conversely, from the tensor product to the factors under suitable conditions. After that, we address another of the main goals of our study: the classification of perfect 4-dimensional evolution algebras over arbitrary fields that are tensorially decomposable into evolution algebras.

Lemma 5.1

Let \(A_1\) and \(A_2\) be two \({\mathbb {K}}\)-algebras and assume that \(A_1\otimes A_2\) is an evolution algebra and \(A_2\) has an ideal J of codimension 1. Then either \(A_1\) is an evolution algebra or \(A_2^2\subset J\).

Proof

Assume that \(A_2^2\not \subset J\), then there is an algebra epimorphism \(\phi :A_2\rightarrow {\mathbb {K}}\cong A_2/J\). Take \(b_0\in A_2\) such that \(\phi (b_0)=1\). Define the \({\mathbb {K}}\)-algebra homomorphism \(\Omega :A_1\otimes A_2\rightarrow A_1\) such that \(\Omega (a\otimes b)=\phi (b)a\). For any \(a\in A_1\) we have \(a=\Omega (a\otimes b_0)\); hence, \(\Omega \) is an epimorphism. But any epimorphic image of an evolution algebra is an evolution algebra whence \(A_1\) is an evolution algebra. Note that when \(A_2^2\subset J\), then \(\Omega \) is not an algebra homomorphism.

\(\square \)

Proposition 5.2

Let \(A_1\) and \(A_2\) be two \({\mathbb {K}}\)-algebras. If the tensor product \(A_1\otimes A_2\) is a perfect evolution algebra and \(A_2\) has an ideal of codimension 1, then \(A_1\) is an evolution algebra.

Proof

It is a direct consequence of item (i) Proposition 2.14 and Lemma 5.1.

\(\square \)

Proposition 5.3

Let \(A_1\) and \(A_2\) be two 2-dimensional evolution \({\mathbb {K}}\)-algebras. If \(A_1\otimes A_2\) is a perfect evolution algebra, then either \(A_1\) and \(A_2\) are simple or one of them is an evolution algebra.

Proof

If \(A_1\) or \(A_2\) is not a simple algebra, without loss of generality, we assume that \(A_2\) is not simple. Therefore, \(A_2\) has a one-dimensional ideal J. If \(A_2^2\subset J\), then \(A_2\) is not perfect implying that \(A_1\otimes A_2\) is not perfect by item (i) Proposition 2.14, a contradiction. Hence, \(A_2^2\not \subset J\) and this implies that \(A_1\) is an evolution algebra by Lemma 5.1. \(\square \)

Consequently, in our quest for tensorially decomposable evolution algebras of dimension 4, if we focus first on the perfect ones, we know that both factors must be simple, or one of them must be an evolution algebra. So, the above results control the number of cases to be considered.

Now, we focus on 2-dimensional evolution algebras \(A_1\) and \(A_2\). First, we prove, by giving a specific example, that not every perfect evolution algebra of dimension 4 comes from the tensor product of two evolution algebras of dimension 2. We need several formulas concerning the number of zeros in the structure matrices.

Remark 5.4

If M is an \(n\times n\) matrix with coefficients in \({\mathbb {K}}\), we will denote by z(M) the number of zeros in M, by \(z_d(M)\) the number of zeros in the diagonal of M, by \(z_c(M)\) the number of columns of M filled with zeros, by \(z_r(M)\) the number of rows of M filled with zeros and by \(\textrm{rank}(M)\) the rank of the matrix M. Then, if M is \(n\times n\) and N is \(m\times m\) (both with coefficients in \({\mathbb {K}}\)) we have

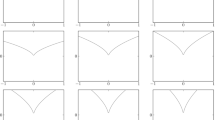

Now, if M and N are \(2 \times 2\) matrices, then \(M \otimes N\) cannot have exactly one column with zero entries because the equation \(2x + (2-x)y=1\) has no solution with \(x,y\in \{0,1,2\}\) (here x and y stand for \(z_c(M)\) and \(z_c(N)\)). Moreover, since \(\textrm{rank}(M\otimes N)=\textrm{rank}(M)\textrm{rank}(N)\), it is not possible that \(M \otimes N\) has rank 3. We have the possibilities shown in Table 3. Note that the cases \((z(M),z(N))=(1,0)\) and \((z(M),z(N))=(0,1)\) produce the same result modulo interchanging some rows and columns. We only compute one case since both involve isomorphic evolution algebras when we are working with structure matrices. So, we may assume all through Table 3 that \(z(M)\le z(N)\) without loss of generality.

In conclusion, we have the following proposition.

Proposition 5.5

Let M be a \(4 \times 4\) matrix with coefficients in \({\mathbb {K}}\). M is tensorially indecomposable if \(z(M)\in \{1,2,3,5,6,9,11\}\) or \(\textrm{rank}(M)=3\) or \(z_c(M)=1\) or \(z_r(M)=1\) or \(z_d(M)=1\).
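The zero-count conditions of Proposition 5.5 can be recovered by brute force. Over a field, an entry of \(M\otimes N\) vanishes exactly when one of its two factors does, so only the zero patterns of M and N matter and it suffices to run over 0/1 matrices. The Python sketch below (standard library only, hypothetical names) lists the values of z, \(z_d\), \(z_c\) and \(z_r\) attained by Kronecker products of \(2\times 2\) matrices. The counting identity asserted inside the loop, \(z(M\otimes N)=4z(M)+4z(N)-z(M)z(N)\), is an assumption of this sketch, obtained by inclusion and exclusion, and is checked on every pair. The rank condition of the proposition is not covered.

```python
from itertools import product


def kron(M, N):
    """Kronecker product of two matrices stored as lists of rows."""
    return [[a * b for a in row_m for b in row_n]
            for row_m in M for row_n in N]


def zeros(M):
    return sum(x == 0 for row in M for x in row)


def zero_diag(M):
    return sum(M[i][i] == 0 for i in range(len(M)))


def zero_cols(M):
    return sum(all(x == 0 for x in col) for col in zip(*M))


def zero_rows(M):
    return sum(all(x == 0 for x in row) for row in M)


# All 16 zero patterns of a 2x2 matrix (1 stands for any nonzero scalar).
patterns = [[[a, b], [c, d]] for a, b, c, d in product((0, 1), repeat=4)]

attained = {"z": set(), "z_d": set(), "z_c": set(), "z_r": set()}
for M, N in product(patterns, repeat=2):
    K = kron(M, N)
    attained["z"].add(zeros(K))
    attained["z_d"].add(zero_diag(K))
    attained["z_c"].add(zero_cols(K))
    attained["z_r"].add(zero_rows(K))
    # Assumed counting identity (inclusion and exclusion on zero entries).
    assert zeros(K) == 4 * zeros(M) + 4 * zeros(N) - zeros(M) * zeros(N)

missing_z = sorted(set(range(17)) - attained["z"])
print(missing_z)
print(sorted(attained["z_d"]), sorted(attained["z_c"]), sorted(attained["z_r"]))
```

The values of z that never occur are exactly those listed in the proposition, and the value 1 is never attained by \(z_d\), \(z_c\) or \(z_r\).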

Remark 5.6

If A is an n-dimensional perfect evolution algebra with structure matrix \(M_B\) and B a natural basis, then by [8, Proposition 2.13] we have that the unique change of basis matrices are induced by the elements of the semidirect product \(S_n \rtimes ({\mathbb {K}}^\times )^n \). This semidirect product is defined as follows. We consider the so-called torus \(({\mathbb {K}}^\times )^n\) as the group of maps \(T:\{1,\ldots ,n\}\rightarrow {\mathbb {K}}^\times \) with product \(T_1T_2(i)=T_1(i)T_2(i)\) for any \(i\in \{1,\ldots ,n\}\) and \(T_1,T_2\in ({\mathbb {K}}^\times )^n\). If \(S_n\) is the symmetric group, that is, the group of bijections of \(\{1,\ldots ,n\}\), then for any \(\sigma \in S_n\) and \(T\in ({\mathbb {K}}^\times )^n\) we have \(T\sigma \in ({\mathbb {K}}^\times )^n\). Then we can define a group structure in the set of all couples \((T,\sigma )\) where \(T\in ({\mathbb {K}}^\times )^n\) and \(\sigma \in S_n\). This structure is given by

and the group arising in this way is denoted, as above, by \(S_n \rtimes ({\mathbb {K}}^\times )^n\). It is isomorphic to the subgroup of \(\hbox {GL}_n({\mathbb {K}})\) of all matrices with exactly one nonzero entry in each row and in each column. In this way, and defining an action of this group on the set of matrices of order n (see [5, Sect. 4.1]), we can speak of the orbit of a structure matrix. In fact, the elements of the orbit are \(M_{B'}=(P_\sigma P)^{-1}M_B (P_\sigma P)^{(2)}\) where \(P_\sigma \) is the permutation matrix relative to a permutation \(\sigma \in S_n\) and P is an invertible diagonal matrix. Also by [8, Proposition 2.13], we know that \(z(M_B)\), \(z_d(M_B)\) and \(\textrm{rank}(M_B)\) are invariants for all the elements of the orbit. Moreover, \(z_c(M_B)\) (the dimension of the annihilator) and \(z_r(M_B)\) are also invariants. Furthermore, if we denote by \(z_c^i(M_B)\) the number of zero entries in the \(i^{th}\) column and, similarly, by \(z^j_r(M_B)\) the number of zero entries in the \(j^{th}\) row, then for every natural basis \(B'\) there exists a permutation \(\sigma \in S_n\) such that \(z_c^i(M_B)=z_c^{\sigma (i)}(M_{B'})\). So, we get a method to discard all the matrices of an orbit that do not come from the Kronecker product of two matrices, by counting the number of zeros or computing the rank of just one of them. Consequently, since isomorphic perfect evolution algebras have structure matrices in the same orbit, these numbers do not depend on the choice of natural basis. Therefore, they are invariants of the algebra that can be used for classification tasks.
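
The invariance claimed in this remark can be observed numerically. The sketch below assumes real entries and assumes that \(Q^{(2)}\) denotes the entrywise square of Q, which is how the formula \(M_{B'}=(P_\sigma P)^{-1}M_B (P_\sigma P)^{(2)}\) is read here; the function names and the sample matrix are hypothetical.

```python
import numpy as np

def change_natural_basis(M, sigma, t):
    """Return (P_sigma D)^{-1} M (P_sigma D)^{(2)} for a permutation sigma
    (given as a tuple of 0-based indices) and nonzero scalars t."""
    M = np.asarray(M, dtype=float)
    t = np.asarray(t, dtype=float)
    P_sigma = np.eye(len(sigma))[:, list(sigma)]  # column j is e_{sigma(j)}
    Q = P_sigma @ np.diag(t)                      # monomial matrix P_sigma D
    Q_inv = np.diag(1.0 / t) @ P_sigma.T          # exact inverse of Q
    return Q_inv @ M @ (Q * Q)                    # Q * Q is the entrywise square

def invariants(M):
    """Zero count, diagonal zero count, rank and sorted per-column zero counts."""
    M = np.asarray(M)
    return (
        int(np.sum(M == 0)),
        int(np.sum(np.diag(M) == 0)),
        int(np.linalg.matrix_rank(M)),
        sorted(int(c) for c in np.sum(M == 0, axis=0)),
    )

# Hypothetical structure matrix, used only to exercise the functions.
M = np.array([[1, 0, 2, 0], [0, 3, 0, 1], [1, 1, 0, 0], [0, 0, 1, 2]])
M_new = change_natural_basis(M, sigma=(2, 0, 3, 1), t=(2.0, -1.0, 0.5, 3.0))
print(invariants(M) == invariants(M_new))
```

Each entry of the transformed matrix is a single entry of the original one multiplied by a nonzero scalar, which is why the zero pattern is only permuted and the listed numbers do not change.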

Example 5.7

If A is the perfect evolution algebra with structure matrix  , then A is tensorially indecomposable since \(z(M)=6\) (by using the conclusion given in Remark 5.4).

Example 5.8

Now, consider the perfect evolution algebra A with structure matrix  . Following Table 3 we may be in one of the cases, since \(z(N)=7\) and \(z_d(N)=0\); nevertheless, N is tensorially indecomposable as we will see in Example 5.10.

Now, we describe another well-known tool to check if a matrix is tensorially decomposable. We define the map \(\omega : {\mathscr {M}}_m({\mathbb {K}})\rightarrow {\mathbb {K}}^{m^2}\) such that, if \(M=(a_{ij})_{i,j=1}^{m}\), then

\[ \omega (M)=(a_{11},\ldots ,a_{1m},a_{21},\ldots ,a_{2m},\ldots ,a_{m1},\ldots ,a_{mm}). \]

Consider an arbitrary matrix M that comes from the Kronecker product of two matrices of order n and k, respectively (\(n,k > 1\)). That is, \(M=(V_{ij})_{i,j=1}^{k}\) is a block matrix with \(V_{ij}\in {\mathscr {M}}_n({\mathbb {K}})\) and \(M\in {\mathscr {M}}_{nk}({\mathbb {K}})\). We now construct a matrix whose rows are the extended blocks that appear in the Kronecker product matrix. We call it the extended matrix of M and denote by \(\textrm{Ex}(M)\) the following \(k^2\times n^2\) matrix

\[ \textrm{Ex}(M)=\begin{pmatrix} \omega (V_{11})\\ \omega (V_{12})\\ \vdots \\ \omega (V_{kk}) \end{pmatrix}, \]

so we have the next lemma.

Lemma 5.9

Let \(M \in {\mathscr {M}}_{nk}({\mathbb {K}})\) and \(n, k >1\). Then, M is tensorially decomposable into two matrices of order n and k, respectively, if and only if \(\textrm{rank}(\textrm{Ex}(M))=1\).

Proof

Assume that \(M=A_1\otimes A_2\) with \(A_1\in {\mathscr {M}}_{k}({\mathbb {K}})\), \(A_2\in {\mathscr {M}}_{n}({\mathbb {K}})\). If \(A_1=(a_{ij})\), then \(V_{ij}=a_{ij}A_2\) for all i, j, and the set of all vectors \(\omega (a_{ij}A_2)\) (with \(i,j\in \{1,\ldots ,k\}\)) has rank one. Conversely, if \(\textrm{rank}(\textrm{Ex}(M))=1\), then there is a vector \(a\in {\mathbb {K}}^{n^2}\) such that for any i, j we have \(\omega (V_{ij})=\mu _{ij}a\) for some scalars \(\mu _{ij}\in {\mathbb {K}}\). Then \(V_{ij}=\mu _{ij}A_1\) for the matrix \(A_1\in {\mathscr {M}}_n({\mathbb {K}})\) such that \(a=\omega (A_1)\). This implies that \(M=(\mu _{ij})_{i,j=1}^{k}\otimes A_1\). \(\square \)
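
The criterion of Lemma 5.9 translates directly into a few lines of code. The following Python sketch assumes real entries, flattens each block row by row (any fixed ordering of the entries would give the same rank), and uses NumPy's numerical rank; the function names are hypothetical.

```python
import numpy as np

def extended_matrix(M, n, k):
    """Return a k^2 x n^2 matrix whose rows are the flattened n x n blocks
    V_ij of the (nk) x (nk) matrix M."""
    M = np.asarray(M)
    if M.shape != (n * k, n * k):
        raise ValueError("M must be a square matrix of order n*k")
    # After the reshape, index [i, a, j, b] addresses M[i*n + a, j*n + b],
    # that is, entry (a, b) of block V_ij; swapping axes groups entries by block.
    blocks = M.reshape(k, n, k, n).swapaxes(1, 2)
    return blocks.reshape(k * k, n * n)

def is_kronecker_product(M, n, k):
    """Rank-one test: True if M = A_1 (x) A_2 with A_1 of order k, A_2 of order n."""
    return int(np.linalg.matrix_rank(extended_matrix(M, n, k))) == 1
```

For instance, `is_kronecker_product(np.kron(A, B), n, k)` returns `True` for any nonzero A of order k and B of order n, while a diagonal matrix such as `np.diag([1, 2, 3, 5])` fails the test for `n = k = 2`.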

Let us consider a finite-dimensional perfect evolution algebra with structure matrix \(M_B\) relative to a natural basis B. It is easy to check that there exist examples such that \(\textrm{rank}(\textrm{Ex}(M_B))=1\) but \(\textrm{rank}(\textrm{Ex}(M_{B'})) \ne 1\) where \(B'\) is another natural basis.

Example 5.10

If we apply Lemma 5.9 to the structure matrix N that appears in Example 5.8, one can check that the rank of all the extended matrices on the orbit of N is 3. Hence, N does not come from the Kronecker product of two \(2\times 2\) matrices.

Let us give all the possible tensor products of two 2-dimensional perfect evolution algebras, \(A_1\) and \(A_2\), regarding the number of zeros in their corresponding structure matrices \(M_1\) and \(M_2\). It is known that the perfect simple evolution algebras of dimension 2 have, relative to a certain natural basis, one of the following structure matrices:  with \(1-ab \ne 0\),  with \(c \ne 0\) and  with \(d \ne 0\), where \(a, b, c, d \in {\mathbb {K}}\) (see [10, Lemma 3.1]).

Now, attending to these types of possible structure matrices for 2-dimensional perfect evolution algebras, we classify in Table 4 the tensor product \(A_1\otimes A_2\) taking into account its structure matrix M and its associated directed graph G. To this end, we look for a complete system of invariants formed by some of the following elements: z(M), \(z_d(M)\), simplicity of the tensor product evolution algebra, \(p_c(M_G)\) and \(p_m(M_G)\), where \(p_c(M_G)\) and \(p_m(M_G)\) denote the characteristic and minimal polynomials of the adjacency matrix \(M_G\), respectively. Observe that, as the directed graph of a perfect evolution algebra is uniquely determined up to isomorphism, so are the characteristic and minimal polynomials of its adjacency matrix. Finally, applying the \(\sigma _k\)-criterion, we determine that \(A_1\otimes A_2\) is simple in the family types I–V, VII and XI.

It is important to note that, in fact, we have obtained several complete systems of invariants for all family types.

Proposition 5.11

In the previous conditions, the following sets yield complete systems of invariants:

(i) The numbers z(M), \(z_d(M)\) and the simplicity of the tensor product.

(ii) The number z(M) and the polynomial \(p_c(M_G)\).

(iii) The polynomials \(p_c(M_G)\) and \(p_m(M_G)\).

In summary, we get a classification of all tensorially decomposable evolution algebras of dimension 4, according to whether the algebra is simple or perfect but not simple. The classification is described in terms of the number of zeros.

Theorem 5.12

Let \({\mathbb {K}}\) be an arbitrary field and consider \(A_1\) and \(A_2\) two-dimensional perfect evolution \({\mathbb {K}}\)-algebras with structure matrices \(M_1\) and \(M_2\), respectively.

(i) If \(A_1\otimes A_2\) is a 4-dimensional simple evolution algebra, then it belongs to one of the family types I–V, VII and XI of Table 4.

(ii) If \(A_1\otimes A_2\) is a 4-dimensional nonsimple evolution algebra, then it belongs to one of the family types VI, VIII–X, XII and XIII of Table 4.

As an example of a classification task of evolution algebras we have the following one.

Example 5.13

Let A be an evolution algebra with structure matrix  relative to the natural basis \(\{e_1,e_2,e_3,e_4\}\) and associated directed graph G. Since the determinant of M is nonzero, A is a perfect evolution algebra. If we compute the stabilizing index of \(M_G\) we see that it is 1 since \(\sigma _2(M_G)=\sigma _1(M_G)\). However, \(\sigma _2(M_G)\) is not the matrix whose entries are all 1. Consequently, A is not a simple algebra. Next, the characteristic polynomial is \(p_c(M_G)=(\lambda -1)^4\), so if A is tensorially decomposable, it must be an algebra of family type VI or X. Now, the minimal polynomial is \(p_m(M_G)=(\lambda -1)^3\), which determines that the only possible family type is VI (item (iii) of Proposition 5.11). So, in order to see whether A is tensorially decomposable, we compute the orbit of M under the action of \(S_4\) and check whether there is some element \(M'\) in that orbit such that \(\textrm{rank}(\textrm{Ex}(M'))=1\) (see Lemma 5.9). If we consider the natural basis \(B'=\{e_4, e_1,e_2,e_3\}\), we get that  satisfies \(\textrm{rank}(\textrm{Ex}(M'))=1\). In fact,  .
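
The search over reorderings of the natural basis used in this example can be automated. The sketch below assumes real entries and explores only the permutation part of the orbit, as the example does; rescalings of the basis by an invertible diagonal matrix are not searched. The function names are hypothetical, and blocks are flattened row by row.

```python
from itertools import permutations

import numpy as np

def ex_rank(M, n=2, k=2):
    """Numerical rank of the matrix whose rows are the flattened n x n blocks of M."""
    blocks = np.asarray(M).reshape(k, n, k, n).swapaxes(1, 2)
    return int(np.linalg.matrix_rank(blocks.reshape(k * k, n * n)))

def decomposable_reorderings(M, n=2, k=2):
    """List the permutations sigma for which the reordered matrix
    M'[i, j] = M[sigma(i), sigma(j)] passes the rank-one test."""
    M = np.asarray(M)
    hits = []
    for sigma in permutations(range(M.shape[0])):
        idx = list(sigma)
        M_perm = M[np.ix_(idx, idx)]  # simultaneous row and column permutation
        if ex_rank(M_perm, n, k) == 1:
            hits.append(sigma)
    return hits
```

An empty list shows only that no reordering of the given natural basis exhibits a Kronecker factorization; a nonempty list gives explicit reorderings for which the structure matrix is a Kronecker product of two \(2\times 2\) matrices.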

6 Some Proposal of Further Work: The Nonperfect Tensorially Decomposable Evolution Algebra

Concerning the nonperfect evolution algebras that are tensor products of other evolution algebras, some factor of the tensor product must be nonperfect. So, if \(A_i\) (with \(i=1,2\)) are evolution algebras and \(A_1 \otimes A_2\) is nonperfect we have two possibilities: either both \(A_i\) are nonperfect or one of them is perfect and the other nonperfect (see item (i) of Proposition 2.14). It can be proved that these two cases are mutually exclusive. Furthermore, in the first case there are nonzero elements \(a_i\in A_i\) (\(i=1,2\)) such that \(A_i^2={\mathbb {K}}a_i\). Then \((A_1\otimes A_2)^2={\mathbb {K}}(a_1\otimes a_2)\), so the tensor product is an algebra with one-dimensional square. In general, the evolution \({\mathbb {K}}\)-algebras A such that \(A^2={\mathbb {K}}a\) for some nonzero a can be described in terms of the symmetric bilinear form \(\langle \ , \ \rangle :A\times A\rightarrow {\mathbb {K}}\) (also termed inner product) such that \(xy=\langle x,y\rangle a\) for any \(x,y\in A\). The pair \((\langle \ , \ \rangle ,a)\) is uniquely determined up to nonzero scalars, and the classification of such algebras is related to that of the pairs \((\langle \ , \ \rangle ,a)\) modulo a suitable relation involving isometries between the corresponding bilinear forms (see [3]). This task will be performed in a forthcoming paper that will also include the second case, in which only one of the factors is nonperfect. In this setting, we highlight that the main difficulty when dealing with nonperfect evolution algebras is that, in general, the natural bases are not uniquely determined up to reordering and multiplication by nonzero scalars. This implies that the structure matrices are not so rigidly related as in the perfect case, and we cannot associate a graph to the algebra in a unique fashion. 
Despite this drawback, a classification of nonperfect 4-dimensional evolution algebras that are tensor product of 2-dimensional ones is feasible and will be the main goal of future work.

Data availability

The authors confirm that the data supporting the findings of this study are available within the article.

References

Adams, W.W., Loustaunau, P.: An Introduction to Gröbner Bases. Graduate Studies in Mathematics, vol. 3. American Mathematical Society (1994)

Bardet, M., Faugère, J., Salvy, B.: On the complexity of the \(F_5\) Gröbner basis algorithm. J. Symb. Comput. 70, 49–70 (2015). https://doi.org/10.1016/j.jsc.2014.09.025

Brache, C., Barquero, D.M., González, C.M., Sánchez-Ortega, J.: Evolution algebras with one-dimensional square. arXiv:2103.01625

Bustamante, M.D., Mellon, P., Velasco, M.V.: Determining when an Algebra is an evolution algebra. Mathematics. 8, 1349 (2020)

Casado, Y.C.: Evolution algebras. Doctoral dissertation. Universidad de Málaga (2016). http://hdl.handle.net/10630/14175