Abstract

In this paper, we consider the problem of approximating the integral of a function f over a d-dimensional simplex S of \(\mathbb {R}^{d}\) by quadrature formulas that use only function and derivative values of f on the boundary of S, or function values at the vertices of S, at points on its facets and at its center of gravity. The quadrature formulas are obtained by integrating over S a polynomial approximant of f which uses function and derivative values at the vertices of S.



1 Introduction

The problem of determining quadrature rules for triangles, tetrahedra and, in general, d-dimensional simplicial domains has attracted the attention of many scholars from the middle of the nineteenth century to the present day (see [13] and the references therein). Although many papers focus on quadrature rules for triangles [3, 10, 12, 16], only a limited literature is available on integration in three or more dimensions [4, 14, 17]. In this paper, we approach the problem of integration over general d-dimensional simplices by special types of integration formulas which use function and derivative values of the integrand f mainly at points on the boundary of the d-dimensional simplex S. When the nodes lie only on the boundary of S, these formulas are called boundary-type quadrature formulas. They are used when the values of f and its derivatives inside the simplex are not given or are not easily computable. These formulas find application in the numerical solution of boundary value problems for partial differential equations (see [9] and the references therein).

To reach our goal, we follow the approach proposed in [1, 2]. More precisely, we approximate the integrand f by a polynomial interpolant \(L_r^S[f]({\varvec{x}})\) which uses function and derivative values up to a fixed order \(r\in \mathbb {N}\) at the vertices of S, i.e.

$$f({\varvec{x}})=L_r^S[f]({\varvec{x}})+R_r^S[f]({\varvec{x}}),\qquad {\varvec{x}}\in S, \tag{1.1}$$

where \(R_r^S[f]\) is the remainder term. We then integrate both sides of (1.1) over the d-dimensional simplex S to get the quadrature formula

$$\int _{S}f({\varvec{x}}){\text {d}}{\varvec{x}}=Q_r^S[f]+E_r^S[f], \tag{1.2}$$

where

$$Q_r^S[f]=\int _{S}L_r^S[f]({\varvec{x}}){\text {d}}{\varvec{x}},\qquad E_r^S[f]=\int _{S}R_r^S[f]({\varvec{x}}){\text {d}}{\varvec{x}}.$$

The quadrature formula (1.2) uses function and derivative data up to order r at the vertices of S and has degree of exactness \(1+r\), i.e. \(E_{r}^S[f]=0\) whenever f is a polynomial in d variables of total degree at most \(1+r\). Because it relies on derivative data, we look for approximations of those derivatives that lead to quadrature formulas which use no derivative data at all. To this end, we restrict to the case \(r=1\) and, following the technique described in [6], we approximate the first-order derivative data by three-point finite difference approximations. Different choices of these approximations give different quadrature formulas with degree of exactness 2. To increase the degree of exactness, we combine one of them with a multivariate Simpson rule and obtain a quadrature formula with degree of exactness 3 (see Sect. 3). Finally, we restrict to the two-dimensional case (see Sect. 4), and we provide numerical results to test the approximation accuracy of the proposed formulas (see Sect. 5).

2 A Quadrature Formula on the d-Dimensional Simplex

2.1 Preliminaries and Notations

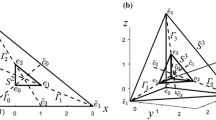

Let \(S\subset \mathbb {R}^{d}\) be a nondegenerate d-dimensional simplex with vertices \({\varvec{v}}_{0},\dots ,{\varvec{v}}_{d}\in \mathbb {R}^{d}\) and

$$A(S)=\det \begin{pmatrix} 1 & 1 & \cdots & 1\\ {\varvec{v}}_{0} & {\varvec{v}}_{1} & \cdots & {\varvec{v}}_{d} \end{pmatrix}$$

the signed volume of the parallelepiped spanned by \({\varvec{v}}_{1}-{\varvec{v}}_{0},\dots ,{\varvec{v}}_{d}-{\varvec{v}}_{0}\). For a point \({\varvec{x}}\in S\) and for each \(l=0,1,\dots ,d\), we denote by \(S_{l}({\varvec{x}})\) the d-dimensional simplex with vertices \({\varvec{v}} _{0},\dots ,{\varvec{v}}_{l-1},{\varvec{x}},{\varvec{v}}_{l+1},\dots ,{\varvec{v}}_{d}\). The barycentric coordinates of \({\varvec{x}}\) with respect to the simplex S are then defined by

$$\lambda _{l}({\varvec{x}})=\frac{A(S_{l}({\varvec{x}}))}{A(S)},\qquad l=0,1,\dots ,d.$$

For each \(\alpha =\left( \alpha _{1},\alpha _{2},\dots ,\alpha _{d}\right) \in \mathbb {N}^{d}\) and \({\varvec{x}}=(x_{1},\dots ,x_{d})\in \mathbb {R}^{d}\), as usual, we denote by

$$\left| \alpha \right| =\alpha _{1}+\dots +\alpha _{d},\qquad \alpha !=\alpha _{1}!\cdots \alpha _{d}!,\qquad {\varvec{x}}^{\alpha }=x_{1}^{\alpha _{1}}\cdots x_{d}^{\alpha _{d}}.$$

We also set \({\varvec{\lambda }}=(\lambda _{0},\lambda _{1},\lambda _{2},\dots ,\lambda _{d})\) and \({\varvec{\lambda }}_{l}=(\lambda _{0},\dots ,\lambda _{l-1},\lambda _{l+1},\dots ,\lambda _{d})\) for each \(l=0,\dots ,d\). Moreover, we denote by \(D^{\alpha }f=\frac{\partial ^{{\left| \alpha \right| }}f}{ \partial x_{1}^{\alpha _{1}}\partial x_{2}^{\alpha _{2}}\dots \partial x_{d}^{\alpha _{d}}}\) and by

the k-th order directional derivative of f along the line segment between \({\varvec{x}}\) and \({\varvec{v}}\). Finally, we use the notations

for the derivative of f along the directed line segment from \({\varvec{x}}_{j}\) to \({\varvec{x}}_{i}\) (as usual, \(\cdot \) denotes the dot product) and

for the composition of derivatives along the directed sides of the simplex. Under these assumptions, we get the following result.

Lemma 2.1

Let \(f\in C^{r}(S)\). Then

for any \(k \in \mathbb {N}, k \le r \text { and }\varvec{x}\in \mathbb {R}^d\).

Proof

Due to the properties satisfied by the barycentric coordinates, for any \(l=0,1,\dots ,d\), we have

By substituting (2.6) in (2.2) and by definition (2.4), we have

\(\square \)

Proposition 2.2

Let \(S\subset \mathbb {R}^{d}\) be a nondegenerate d-dimensional simplex with vertices \({\varvec{v}}_0,\dots ,{\varvec{v}}_d\). Then

Proof

See [15, Theorem 2.2]. \(\square \)
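The barycentric coordinates and the integral formula of Proposition 2.2 can be checked with exact rational arithmetic. The sketch below makes two assumptions. First, A(S) is the determinant of the matrix whose rows are \((1,{\varvec{v}}_l)\), so that \(\int _S {\text {d}}{\varvec{x}}=A(S)/d!\). Second, Proposition 2.2 is the classical formula \(\int _S {\varvec{\lambda }}^{\alpha }{\text {d}}{\varvec{x}}=\alpha !\,A(S)/(|\alpha |+d)!\) of [15], with \(\alpha \in \mathbb {N}^{d+1}\). All function names are hypothetical.

```python
from fractions import Fraction
from math import factorial, prod


def det(rows):
    """Exact determinant by Gaussian elimination over the rationals."""
    a = [[Fraction(x) for x in row] for row in rows]
    n, result = len(a), Fraction(1)
    for c in range(n):
        pivot = next((r for r in range(c, n) if a[r][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:  # a row swap flips the sign of the determinant
            a[c], a[pivot] = a[pivot], a[c]
            result = -result
        result *= a[c][c]
        for r in range(c + 1, n):
            factor = a[r][c] / a[c][c]
            a[r] = [a[r][k] - factor * a[c][k] for k in range(n)]
    return result


def signed_volume(vertices):
    """A(S), taken here as the determinant of the rows (1, v_l), l = 0, ..., d."""
    return det([[1, *v] for v in vertices])


def barycentric(vertices, x):
    """lambda_l(x) = A(S_l(x)) / A(S), where S_l(x) has v_l replaced by x."""
    a_s = signed_volume(vertices)
    return [signed_volume(vertices[:l] + [x] + vertices[l + 1:]) / a_s
            for l in range(len(vertices))]


def integral_of_barycentric_monomial(vertices, alpha):
    """Assumed form of Proposition 2.2: alpha! |A(S)| / (|alpha| + d)!."""
    d = len(vertices) - 1
    alpha_factorial = prod(factorial(k) for k in alpha)
    return alpha_factorial * abs(signed_volume(vertices)) / factorial(sum(alpha) + d)


triangle = [(0, 0), (1, 0), (0, 1)]
print(barycentric(triangle, (Fraction(1, 4), Fraction(1, 2))))
# [Fraction(1, 4), Fraction(1, 4), Fraction(1, 2)]
print(integral_of_barycentric_monomial(triangle, (0, 2, 0)))  # 1/12, the integral of x^2
```

The absolute value of A(S) is used so that the result does not depend on the ordering of the vertices.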

Proposition 2.3

Let \(S\subset \mathbb {R}^{d}\) be a nondegenerate d-dimensional simplex with vertices \({\varvec{v}}_{0},{\varvec{v}} _{1},\dots ,{\varvec{v}}_{d}\). For any \({\varvec{x}}\in S\) and \(r\in \mathbb {N}\), we have

Proof

By equality (2.6) and since \(0\le \lambda _{j}({\varvec{x}})\le 1\) for each \({\varvec{x}}\in S\), we easily obtain

for any \(l=0,\dots ,d\). Consequently,

and, by Proposition 2.2, we easily get the inequality (2.8). \(\square \)

2.2 Construction of the Quadrature Formula

The multivariate Lagrange interpolation polynomial on the simplex S in barycentric coordinates is

$$L^{S}[f]({\varvec{x}})=\sum _{l=0}^{d}\lambda _{l}({\varvec{x}})\,f({\varvec{v}}_{l}). \tag{2.9}$$

The operator \(L^{S}\) reproduces polynomials of degree at most 1 and interpolates f at the vertices \({\varvec{v}}_{l}\) of the simplex S. If f belongs to \(C^{r}(S)\), we can replace each value \(f({\varvec{v}}_{l})\) by the modified Taylor polynomial of degree r at \({\varvec{v}}_{l}\) proposed in [6]. The resulting polynomial operator is

$$L_{r}^{S}[f]({\varvec{x}})=\sum _{l=0}^{d}\lambda _{l}({\varvec{x}})\sum _{k=0}^{r}a_{rk}\sum _{\left| \alpha \right| =k}\frac{D^{\alpha }f({\varvec{v}}_{l})}{\alpha !}({\varvec{x}}-{\varvec{v}}_{l})^{\alpha }, \tag{2.10}$$

where \(a_{rk}=\frac{(1+r-k)!r!}{(1+r)!(r-k)!}\). As specified in [6], the operator \(L_{r}^{S}[f]({\varvec{x}})\) reproduces polynomials of degree up to \(\max \left\{ 1,1+r\right\} =1+r\). Moreover, for each \({\varvec{x}}\in S\) and \(f\in C^{r+2}\left( S\right) \), its remainder term \(R_{r}^{S}[f]({\varvec{x}})=f({\varvec{x}} )-L_{r}^{S}[f]({\varvec{x}})\) can be explicitly represented as

Remark 2.4

Since S is a compact convex domain and \(L^{S}\) is a bounded linear operator, in line with [8], \(L_{r}^{S}\) can be interpreted as

and, from [8, Proposition 3.4], it follows that \(L_{r}^{S}\) inherits the interpolation properties of the Lagrange operator (2.9).
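For r = 1 the coefficients are \(a_{10}=1\) and \(a_{11}=1/2\). The modified Taylor polynomial therefore replaces \(f({\varvec{v}}_l)\) by \(f({\varvec{v}}_l)+\frac{1}{2}({\varvec{x}}-{\varvec{v}}_l)\cdot \nabla f({\varvec{v}}_l)\): the first-order term is halved. The sketch below evaluates this r = 1 interpolant on a triangle in exact arithmetic and checks two properties stated above. The interpolant matches f at the vertices, and it reproduces polynomials of degree 1 + r = 2.

It is written with the gradient, so it assumes the form of (2.10) given in this section. The helper names and the test polynomial are hypothetical.

```python
from fractions import Fraction
from math import factorial


def a_coeff(r, k):
    """a_{rk} = (1 + r - k)! r! / ((1 + r)! (r - k)!), as defined in the text."""
    return Fraction(factorial(1 + r - k) * factorial(r),
                    factorial(1 + r) * factorial(r - k))


def barycentric_2d(v, x):
    """Barycentric coordinates of x in the triangle v = [v0, v1, v2] (Cramer's rule)."""
    (x0, y0), (x1, y1), (x2, y2) = v
    area2 = Fraction((x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0))  # A(S) for d = 2
    l1 = ((x[0] - x0) * (y2 - y0) - (x2 - x0) * (x[1] - y0)) / area2
    l2 = ((x1 - x0) * (x[1] - y0) - (x[0] - x0) * (y1 - y0)) / area2
    return [1 - l1 - l2, l1, l2]


def interpolant_r1(f, grad, v, x):
    """L_1^S[f](x) = sum_l lambda_l(x) (a_10 f(v_l) + a_11 (x - v_l) . grad f(v_l))."""
    lam = barycentric_2d(v, x)
    total = Fraction(0)
    for l, vl in enumerate(v):
        slope = sum((xi - vi) * gi for xi, vi, gi in zip(x, vl, grad(vl)))
        total += lam[l] * (a_coeff(1, 0) * f(vl) + a_coeff(1, 1) * slope)
    return total


def p(z):
    """Hypothetical quadratic test polynomial."""
    return 3 * z[0] ** 2 - 2 * z[0] * z[1] + z[1] ** 2 + z[0] - 4


def grad_p(z):
    return (6 * z[0] - 2 * z[1] + 1, -2 * z[0] + 2 * z[1])


tri = [(0, 0), (2, 1), (1, 3)]
point = (Fraction(1, 2), Fraction(3, 4))
print(interpolant_r1(p, grad_p, tri, point) == p(point))  # True: degree 2 is reproduced
```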

To obtain the desired quadrature formula, we rearrange the polynomial (2.10) using Lemma 2.1. More precisely,

and, by a change of summation index, we get

The quadrature formula is then obtained by integrating the right-hand side of (2.13) over the simplex S.

Theorem 2.5

Let \(f\in C^{r+2}(S)\). Then

where

and

Moreover, the quadrature formula \(Q_{r}^{S}[f]\) has degree of exactness \(1+r\).

Proof

By integrating the right-hand side of equality (2.13), we get

By Proposition 2.2,

and then (2.16) becomes

The expression of \(E^{S}_{r} [f] \) is obtained by integrating the remainder term \(R_{r} ^{S}[f]({\varvec{x}})\) in formula (2.11) over the simplex S. Since \(R_r^S[f]({\varvec{x}})\) vanishes whenever f is a polynomial in d variables of total degree at most \(1+r\), \(E^{S}_{r}[f]\) inherits this property and the quadrature formula has degree of exactness \(1+r\). \(\square \)
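For r = 1 the integration can be carried out by hand. The following is our own computation, to be compared with (2.14) and (3.1). Since \(\int _S\lambda _l=A(S)/(d+1)!\) and \(\int _S\lambda _l({\varvec{x}})({\varvec{x}}-{\varvec{v}}_l){\text {d}}{\varvec{x}}=\frac{A(S)}{(d+2)!}\sum _{j\ne l}({\varvec{v}}_j-{\varvec{v}}_l)\), it gives

\(Q_1^S[f]=\frac{A(S)}{(d+1)!}\sum _l f({\varvec{v}}_l)+\frac{A(S)}{2(d+2)!}\sum _l\sum _{j\ne l}({\varvec{v}}_j-{\varvec{v}}_l)\cdot \nabla f({\varvec{v}}_l)\).

The sketch below implements this rule on the standard simplex \(\Delta _d\), where A = 1. It checks exactness up to degree 2 against \(\int _{\Delta _d}{\varvec{x}}^{\alpha }{\text {d}}{\varvec{x}}=\alpha !/(|\alpha |+d)!\) [11]. The names are hypothetical.

```python
from fractions import Fraction
from itertools import product
from math import factorial, prod


def standard_simplex(d):
    """Vertices v_0 = 0 and v_l = e_l of the standard simplex (A = 1)."""
    return [tuple(int(i == l) for i in range(1, d + 1)) for l in range(d + 1)]


def monomial(e):
    """Return the monomial x -> x^e and its gradient, in exact arithmetic."""
    def power(x, exps):
        return prod(Fraction(xi) ** k for xi, k in zip(x, exps))

    def f(x):
        return power(x, e)

    def grad(x):
        return [k * power(x, [ej - (j == i) for j, ej in enumerate(e)]) if k else Fraction(0)
                for i, k in enumerate(e)]

    return f, grad


def q1(f, grad, vertices, a_s=1):
    """Reconstructed r = 1 rule: vertex values plus edge-directional derivatives."""
    d = len(vertices) - 1
    values = sum(f(v) for v in vertices)
    slopes = sum(sum((a - b) * g for a, b, g in zip(vj, vl, grad(vl)))
                 for l, vl in enumerate(vertices)
                 for j, vj in enumerate(vertices) if j != l)
    return a_s * (Fraction(values, factorial(d + 1))
                  + Fraction(slopes, 2 * factorial(d + 2)))


def exact_integral(e):
    """Integral of x^e over the standard simplex: e! / (|e| + d)!  (cf. [11])."""
    return Fraction(prod(factorial(k) for k in e), factorial(sum(e) + len(e)))


for d in (1, 2, 3):
    simplex = standard_simplex(d)
    for e in product(range(3), repeat=d):
        if sum(e) <= 2:
            assert q1(*monomial(e), simplex) == exact_integral(e)

print(q1(*monomial((3, 0)), standard_simplex(2)), exact_integral((3, 0)))  # 1/24 1/20
```

For d = 1 the rule reduces to the trapezoidal rule with the endpoint correction \((g'(0)-g'(1))/12\). This is why the derivative differences along the edges reappear in Sect. 3.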

2.3 Error Bounds

To give a bound for the remainder term \(E_{r}^{S}[f]\) of the quadrature formula in Theorem 2.5, we need some additional notation. More precisely, for a k-times continuously differentiable function \(f:S\rightarrow \mathbb {R}\), we introduce the norm

where \(\Vert \cdot \Vert \) denotes the Euclidean norm in \(\mathbb {R}^{d}\), and \({\varvec{y}}\) is assumed to be a column vector. Consequently, for any \({\varvec{x}} \in S\) and \({\varvec{y}}\in \mathbb {R}^{d}\), we have

Proposition 2.6

Let \(S\subset \mathbb {R}^{d}\) be a nondegenerate d-dimensional simplex with vertices \({\varvec{v}}_{0},\dots ,{\varvec{v}}_{d}\) and \(f\in C^{r+2}(S)\). Then

Proof

Taking the absolute value of both sides of equality (2.15), applying the triangle inequality and bounding the directional derivative of f of order \(r+2\) by (2.19), we have

Using the inequality in Proposition 2.3 and the fact that

we have

we obtain (2.20). \(\square \)

Remark 2.7

It is worth noting that Theorem 2.5 gives a quadrature formula obtained by integrating both sides of the expression in [6, Theorem 1], and the bound in Proposition 2.6 is precisely the integral of the bound given in [6, Theorem 2] with \(\Omega =S\), \(m=1\) and \(\phi _{i}\left( {\varvec{x}}\right) =\lambda _{i}({\varvec{x}})\). Consequently, the bound (2.20) is the best possible estimate for each \(r\in \mathbb {N}_0\).

3 Integration Formulas on the Simplex with Only Function Data

The main feature of the quadrature formula (2.14) is that, besides the values of f at the vertices, it uses only derivatives of f along the edges of S. This motivates us to approximate those derivatives and obtain quadrature formulas which use function data at the vertices of the simplex S, at points on its facets or at its center of gravity. To this end, we focus on the case \(r=1\) and consider different approximations of the derivatives in (2.14). For \( r=1\), the quadrature formula (2.14) in Theorem 2.5 becomes

Proposition 3.1

Let \(f:S \rightarrow \mathbb {R}\) be three times continuously differentiable on S. Then

where

Moreover, the quadrature formula (3.2) has degree of exactness 2.

Proof

By definition (2.4), the sum of first-order derivatives in the second term of \(Q^{S}_{1}[f]\) can be rewritten as

where the differences of directional derivatives along the edges of the simplex S can be replaced by a three-point finite difference approximation. To do this, recall that, for a univariate function g, one can use the differentiation formula

for some \(\xi \in [a-h,a+h]\). Using this formula with \(h=\pm 1/2\) and \(a=1/2\), we get a three-point approximation of \(g'(0)-g'(1)\) whose remainder term is expressed in terms of the modulus of continuity of \(g'''\) [6, Section 5.1]. By applying formula (3.4) along the edges of S, we get

with

where \(\omega \) denotes the modulus of continuity with respect to \(t\in [0,1]\). By substituting expression (3.5) in (3.3) and by rearranging, we get

Finally, by substituting (3.6) in (3.1), we get

Since \(\varepsilon _{l,r}[f]=0\) whenever f is a polynomial in d variables of total degree at most 2, the quadrature formula \(\tilde{Q}_1^S[f]\) has degree of exactness 2. \(\square \)
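Carrying out the substitution described in this proof gives explicit weights. We take the r = 1 rule to be \(\frac{A(S)}{(d+1)!}\sum _l f({\varvec{v}}_l)+\frac{A(S)}{2(d+2)!}\sum _l\sum _{j\ne l}({\varvec{v}}_j-{\varvec{v}}_l)\cdot \nabla f({\varvec{v}}_l)\), and we read (3.4) with a = 1/2, h = ±1/2 as \(g'(0)-g'(1)\approx 4\,(2g(1/2)-g(0)-g(1))\). With these assumptions, the edge derivatives collapse onto the edge midpoints \({\varvec{m}}_{lj}=({\varvec{v}}_l+{\varvec{v}}_j)/2\).

Our own computation, to be compared with (3.2), is

\(\tilde{Q}_1^S[f]=\frac{A(S)}{(d+2)!}\Big ((2-d)\sum _l f({\varvec{v}}_l)+4\sum _{l<j}f({\varvec{m}}_{lj})\Big )\).

For d = 1 this is Simpson's rule, and for d = 2 it is the midpoint rule of the triangle. The names below are hypothetical.

```python
from fractions import Fraction
from itertools import combinations, product
from math import factorial, prod


def det(rows):
    """Exact determinant by Gaussian elimination over the rationals."""
    a = [[Fraction(x) for x in row] for row in rows]
    n, result = len(a), Fraction(1)
    for c in range(n):
        pivot = next((r for r in range(c, n) if a[r][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            a[c], a[pivot] = a[pivot], a[c]
            result = -result
        result *= a[c][c]
        for r in range(c + 1, n):
            factor = a[r][c] / a[c][c]
            a[r] = [a[r][k] - factor * a[c][k] for k in range(n)]
    return result


def three_point_jump(g):
    """Approximates g'(0) - g'(1) by 4 (2 g(1/2) - g(0) - g(1)); exact for cubics."""
    return 4 * (2 * g(Fraction(1, 2)) - g(0) - g(1))


def edge_midpoint_rule(f, vertices):
    """|A(S)|/(d+2)! * ((2 - d) * sum_l f(v_l) + 4 * sum_{l<j} f((v_l + v_j) / 2))."""
    d = len(vertices) - 1
    a_s = abs(det([[1, *v] for v in vertices]))
    mids = [tuple(Fraction(p + q, 2) for p, q in zip(vertices[l], vertices[j]))
            for l, j in combinations(range(d + 1), 2)]
    total = (2 - d) * sum(f(v) for v in vertices) + 4 * sum(f(m) for m in mids)
    return a_s * total / factorial(d + 2)


# Degree-2 exactness on the standard triangle and tetrahedron.
for d in (2, 3):
    simplex = [tuple(int(i == l) for i in range(1, d + 1)) for l in range(d + 1)]
    for e in product(range(3), repeat=d):
        if sum(e) <= 2:
            f = lambda x, e=e: prod(Fraction(xi) ** k for xi, k in zip(x, e))
            exact = Fraction(prod(factorial(k) for k in e), factorial(sum(e) + d))
            assert edge_midpoint_rule(f, simplex) == exact

print(edge_midpoint_rule(lambda x: x[0] ** 3, [(0,), (1,)]))              # 1/4 (Simpson, d = 1)
print(edge_midpoint_rule(lambda x: x[0] ** 2, [(0, 0), (2, 0), (0, 2)]))  # 4/3
```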

Proposition 3.2

Let \(S\subset \mathbb {R}^{d}\) be a nondegenerate d-dimensional simplex with vertices \(({\varvec{v}}_{l})_{l=0,1,\dots ,d}\). Let us denote by \( s_{l}^{d-1},l=0,1,\dots ,d\) the facet opposite to the vertex \({\varvec{v}}_{l}\) and by \({\varvec{g}}_{l}\) the barycenter of \(s_{l}^{d-1}\). For all \(\alpha \in (0,1)\) we have

with \({\varvec{y}}_{l}(\alpha )={\varvec{v}}_{l}+\alpha ({\varvec{g}}_{l}-{\varvec{v}}_{l})\) and

The quadrature formula (3.7) has degree of exactness 2.

Proof

Let \({\varvec{g}}_l\) be the barycenter of \(s^{d-1}_{l}\). By Lemma 2.1, the sum of first-order derivatives along the edges of S in (3.1) can be rewritten as

and, since \(\lambda _k({\varvec{g}}_l)=\frac{1}{d}\) for each \(k\ne l\) and \(\lambda _l({\varvec{g}}_l)=0\), \(l,k=0,\dots ,d\), we get

By substituting (3.9) in (3.1), we get

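To obtain a three-point finite difference approximation of the directional derivatives in (3.10), for each \(l=0,\dots ,d\), let us introduce the univariate function

$$h_{l}(t)=f\big ({\varvec{v}}_{l}+t({\varvec{g}}_{l}-{\varvec{v}}_{l})\big ),\qquad t\in [0,1]. \tag{3.11}$$

For \(t=1\) and \(t=\alpha \in (0,1)\), the second-order Taylor expansions of \(h_{l}(1)\) and \(h_{l}(\alpha )\) centered at 0 with integral remainder are

$$h_{l}(1)=h_{l}(0)+h_{l}^{\prime }(0)+\frac{1}{2}h_{l}^{\prime \prime }(0)+\frac{1}{2}\int _{0}^{1}(1-s)^{2}h_{l}^{\prime \prime \prime }(s){\text {d}}s \tag{3.12}$$

and

$$h_{l}(\alpha )=h_{l}(0)+\alpha h_{l}^{\prime }(0)+\frac{\alpha ^{2}}{2}h_{l}^{\prime \prime }(0)+\frac{1}{2}\int _{0}^{\alpha }(\alpha -s)^{2}h_{l}^{\prime \prime \prime }(s){\text {d}}s. \tag{3.13}$$

Then, by (3.12) and (3.13), we get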

where

Therefore, by (3.14) it follows that

By rewriting equality (3.15) in terms of f we get

where

Finally, by substituting (3.16) in (3.1), we get (3.7). Since \(R(d,\alpha )[f]=0\) whenever f is a polynomial in d variables of total degree at most 2, the quadrature formula (3.7) has degree of exactness 2. \(\square \)
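A short computation makes the weights of this family explicit. The display below is our own reconstruction, not a transcription of (3.7). It assumes that the r = 1 rule (3.1) reads \(\frac{A(S)}{(d+1)!}\sum _l f({\varvec{v}}_l)+\frac{A(S)}{2(d+2)!}\sum _l\sum _{j\ne l}({\varvec{v}}_j-{\varvec{v}}_l)\cdot \nabla f({\varvec{v}}_l)\).

Since \(\sum _{j\ne l}({\varvec{v}}_j-{\varvec{v}}_l)=d({\varvec{g}}_l-{\varvec{v}}_l)\), the inner sum equals \(d\,h_l'(0)\). Replacing \(h_l'(0)\) by the three-point formula that is exact for quadratic \(h_l\) gives

$$\begin{aligned} h_l'(0)&\approx \frac{h_l(\alpha )-\alpha ^{2}h_l(1)-(1-\alpha ^{2})h_l(0)}{\alpha (1-\alpha )},\\ \int _{S}f({\varvec{x}}){\text {d}}{\varvec{x}}&\approx \frac{A(S)}{2(d+2)!}\left( \Big (2(d+2)-\frac{d(1+\alpha )}{\alpha }\Big )\sum _{l=0}^{d}f({\varvec{v}}_{l})+\frac{d}{\alpha (1-\alpha )}\sum _{l=0}^{d}f({\varvec{y}}_{l}(\alpha ))-\frac{d\,\alpha }{1-\alpha }\sum _{l=0}^{d}f({\varvec{g}}_{l})\right) . \end{aligned}$$

For \(\alpha =d/(d+4)\) the vertex weight vanishes and the other two weights become \((d+4)^2/4\) and \(d^2/4\). For \(\alpha =d/(d+1)\) the weights are 3, \((d+1)^2\) and \(d^2\). Since \({\varvec{y}}_l(d/(d+1))=({\varvec{v}}_l+d{\varvec{g}}_l)/(d+1)={\varvec{x}}^{*}\) for every l, the second sum then equals \((d+1)^3f({\varvec{x}}^{*})\).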

Remark 3.3

1. For \(\alpha =\dfrac{d}{d+4}\), the formula (3.7) becomes

$$\begin{aligned} \displaystyle \int _{S}f({\varvec{x}}){\text {d}}{\varvec{x}}&= \frac{A(S)}{2(d+2)!}\left( \frac{ (d+4)^{2}}{4}\sum _{l=0}^{d}f\left( \frac{d{\varvec{g}}_{l}+4{\varvec{v}}_{l}}{d+4} \right) -\frac{d^{2}}{4}\sum _{l=0}^{d}f({\varvec{g}}_{l})\right) \\ &\quad +R\left( d,\frac{d}{d+4}\right) [f]. \end{aligned}\tag{3.18}$$

This quadrature formula uses only the function values at the points \({\varvec{g}}_{l}\) and \(\frac{d{\varvec{g}}_{l}+4{\varvec{v}}_{l}}{d+4}\), \(l=0,\dots ,d\), and is exact for all polynomials of degree less than or equal to 2.

2. For \(\alpha =\frac{d}{d+1}\), all the points \({\varvec{y}}_{l}(\alpha )\) coincide with the center of gravity \({\varvec{x}}^{*}\) of S, and the quadrature formula (3.7) becomes

$$\begin{aligned} \displaystyle \int _{S}f({\varvec{x}}){\text {d}}{\varvec{x}}&= \frac{A(S)}{2(d+2)!}\left( 3\sum _{l=0}^{d}f\left( {\varvec{v}}_{l}\right) +(d+1)^{3}f({\varvec{x}}^{*})-d^{2}\sum _{l=0}^{d}f({\varvec{g}}_{l})\right) \\ &\quad +R\left( d,\frac{d}{d+1}\right) [f]. \end{aligned}\tag{3.19}$$
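Formulas (3.18) and (3.19) are explicit, so they can be checked directly. The sketch below implements both on the standard simplex \(\Delta _d\) (where A = 1; pass A(S) as `a_s` for other simplices). It verifies exactness for every monomial of degree at most 2 in d = 2, 3, 4 against \(\int _{\Delta _d}{\varvec{x}}^{\alpha }{\text {d}}{\varvec{x}}=\alpha !/(|\alpha |+d)!\) [11]. It also prints the values for \(x^3\) on the triangle, where neither formula is exact. The function names are hypothetical.

```python
from fractions import Fraction
from itertools import product
from math import factorial, prod


def standard_simplex(d):
    """Vertices of Delta_d as exact rationals; A(Delta_d) = 1."""
    return [tuple(Fraction(int(i == l)) for i in range(1, d + 1)) for l in range(d + 1)]


def facet_barycenters(v):
    """g_l: mean of the d vertices other than v_l."""
    d = len(v) - 1
    return [tuple(sum(w[i] for k, w in enumerate(v) if k != l) / d for i in range(d))
            for l in range(d + 1)]


def rule_318(f, v, a_s=1):
    """Formula (3.18): nodes g_l and (d g_l + 4 v_l) / (d + 4)."""
    d = len(v) - 1
    g = facet_barycenters(v)
    inner = [tuple((d * gi + 4 * vi) / (d + 4) for gi, vi in zip(g[l], v[l]))
             for l in range(d + 1)]
    s = Fraction((d + 4) ** 2, 4) * sum(map(f, inner)) - Fraction(d * d, 4) * sum(map(f, g))
    return Fraction(a_s, 2 * factorial(d + 2)) * s


def rule_319(f, v, a_s=1):
    """Formula (3.19): nodes v_l, the center of gravity x* and g_l."""
    d = len(v) - 1
    x_star = tuple(sum(w[i] for w in v) / (d + 1) for i in range(d))
    s = (3 * sum(map(f, v)) + (d + 1) ** 3 * f(x_star)
         - d * d * sum(map(f, facet_barycenters(v))))
    return Fraction(a_s, 2 * factorial(d + 2)) * s


def mono(e):
    return lambda x: prod(xi ** k for xi, k in zip(x, e))


def exact(e):
    """Integral of x^e over Delta_d: e! / (|e| + d)!."""
    return Fraction(prod(factorial(k) for k in e), factorial(sum(e) + len(e)))


for d in (2, 3, 4):
    v = standard_simplex(d)
    for e in product(range(3), repeat=d):
        if sum(e) <= 2:
            assert rule_318(mono(e), v) == exact(e) and rule_319(mono(e), v) == exact(e)

tri = standard_simplex(2)
print(rule_318(mono((3, 0)), tri), rule_319(mono((3, 0)), tri), exact((3, 0)))  # 5/96 1/16 1/20
```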

To improve the approximation accuracy of the quadrature formula (3.19), let us combine this formula, with weights summing to one, with the multivariate Simpson rule for a simplex proposed in [7, Theorem 5.1]. For a particular value of the combination parameter, we obtain a quadrature formula with a higher degree of exactness.

Corollary 3.4

Let \(f:S \rightarrow \mathbb {R}\) be three times continuously differentiable on S. Let us denote by \({\varvec{x}}^*\) the center of gravity of S, by \( s^{d-1}_{l}\), \(l=0,1,\dots ,d\), the facet of S opposite to the vertex \( {\varvec{v}}_{l}\) and by \({\varvec{g}}_{l}\) the barycenter of \(s^{d-1}_{l}\). Then,

where

is the multivariate Simpson rule for a simplex [7, Theorem 5.1],

is given by (3.7) for \(\alpha =\frac{d}{d+1}\) and

with \(R^{Si}_d[f]\) denoting the remainder term in the multivariate Simpson rule for a simplex. For all \(\tau \in \mathbb {R}\), we have \(\tilde{R} (\tau )[f]=0\) whenever f is a polynomial in d variables of total degree at most 2.

Proof

Since \(R^{Si}_d[f]=0\) and \(R\left( d,\frac{d}{d+1}\right) [f]=0\) whenever f is a polynomial in d variables of total degree less than or equal to 2, it easily follows that \(\tilde{R} (\tau )[f]\) vanishes for each polynomial of degree at most 2. \(\square \)

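For \(\tau =\frac{3(d+1)}{d+3}\), the family of quadrature formulas (3.20) yields a formula with degree of exactness 3. Note that this value of \(\tau \) exceeds 1 for every \(d\ge 1\), so the combination is affine rather than convex.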

Theorem 3.5

Let \(f:S\rightarrow \mathbb {R}\) be three times continuously differentiable on S, and let us denote by \({\varvec{x}}^{*}\) the center of gravity of S, by \(s_{l}^{d-1}\), \(l=0,1,\dots ,d\), the facet opposite to the vertex \({\varvec{v}}_{l}\) and by \({\varvec{g}}_{l}\) the barycenter of \( s_{l}^{d-1}\). The quadrature formula

with \(\tilde{R}\left( \frac{3(d+1)}{d+3}\right) [f]\) defined in (3.21), has degree of exactness 3.

Proof

For \(\tau =\frac{3(d+1)}{d+3}\), the quadrature formula (3.20) reduces to

and, by Corollary 3.4, \(F_{3}[f]\) is exact at least for all polynomials of total degree at most 2. Let \(P_{3}({\varvec{x}})\) be a polynomial in d variables of degree 3; we can write \(P_3({\varvec{x}})\) as

where \(P_{2}({\varvec{x}})\) is a polynomial of degree 2. Therefore, it is sufficient to prove that \(F_{3}[\cdot ]\) is exact for the monomials

Affine maps preserve polynomial degree and map vertices, facet barycenters and centers of gravity onto each other. By means of the affine isomorphism which maps the standard simplex \(\Delta _d\) of \({\mathbb {R}}^d\) onto a generic simplex S, we can therefore restrict, without loss of generality, to the simplex \(\Delta _d\) with vertices \({\varvec{v}}_{0}=(0,\dots ,0)\) and \({\varvec{v}}_l={\varvec{e}}_l\), the l-th unit vector of \(\mathbb {R}^d\), \(l=1,\dots ,d\). The center of gravity of \(\Delta _d\) is \({\varvec{x}}^{*}=\left( \frac{1}{d+1},\dots ,\frac{1}{d+1}\right) \) and the barycenters of the facets are

Let us now consider \(M_{1}=x_i^3\), \(i=1,\dots ,d\). The exact integral of \(M_1\) over the simplex \(\Delta _d\) is [11]

and, in addition,

By substituting equalities (3.24) in the quadrature formula (3.23) we have

Let us consider \(M_{2}=x_i^2x_j\), \(i,j=1,\dots ,d\), \(j\ne i\). The exact integral of \(M_2\) over the simplex \(\Delta _d\) is [11]

and, in addition,

By substituting equalities (3.25) in the quadrature formula (3.23) we have

Finally, let us consider \(M_{3}=x_i x_j x_k\), with \(i,j,k=1,\dots ,d\) pairwise distinct, for which the exact integral over the simplex \(\Delta _d\) is [11]

and

By substituting equalities (3.26) in the quadrature formula (3.23), we have

Then

and this shows that the degree of exactness of the quadrature formula (3.22) is 3. \(\square \)
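The monomial check in this proof can be repeated mechanically on \(\Delta _d\). The sketch makes two assumptions. First, the Simpson rule of [7, Theorem 5.1] is taken to be \(\frac{A(S)}{d!}\big (\frac{1}{(d+1)(d+2)}\sum _l f({\varvec{v}}_l)+\frac{d+1}{d+2}f({\varvec{x}}^{*})\big )\); this form is our recollection of [7], and it reduces to the classical Simpson rule for d = 1. Second, (3.20) is read as \(\tau \) times the Simpson rule plus \(1-\tau \) times (3.19).

Under these assumptions, \(\tau =3(d+1)/(d+3)\) integrates every monomial of degree at most 3 exactly, as the loop below confirms for d = 2, 3, 4. The names are hypothetical.

```python
from fractions import Fraction
from itertools import product
from math import factorial, prod


def standard_simplex(d):
    """Vertices of Delta_d as exact rationals; A(Delta_d) = 1."""
    return [tuple(Fraction(int(i == l)) for i in range(1, d + 1)) for l in range(d + 1)]


def facet_barycenters(v):
    d = len(v) - 1
    return [tuple(sum(w[i] for k, w in enumerate(v) if k != l) / d for i in range(d))
            for l in range(d + 1)]


def centroid(v):
    return tuple(sum(w[i] for w in v) / len(v) for i in range(len(v) - 1))


def rule_319(f, v, a_s=1):
    """Formula (3.19) as printed in Remark 3.3."""
    d = len(v) - 1
    s = (3 * sum(map(f, v)) + (d + 1) ** 3 * f(centroid(v))
         - d * d * sum(map(f, facet_barycenters(v))))
    return Fraction(a_s, 2 * factorial(d + 2)) * s


def simpson(f, v, a_s=1):
    """ASSUMED form of the Simpson rule of [7, Theorem 5.1]."""
    d = len(v) - 1
    return Fraction(a_s, factorial(d)) * (Fraction(1, (d + 1) * (d + 2)) * sum(map(f, v))
                                          + Fraction(d + 1, d + 2) * f(centroid(v)))


def f3(f, v, a_s=1):
    """tau * Simpson + (1 - tau) * (3.19), tau = 3(d+1)/(d+3); orientation assumed."""
    d = len(v) - 1
    tau = Fraction(3 * (d + 1), d + 3)
    return tau * simpson(f, v, a_s) + (1 - tau) * rule_319(f, v, a_s)


def mono(e):
    return lambda x: prod(xi ** k for xi, k in zip(x, e))


def exact(e):
    return Fraction(prod(factorial(k) for k in e), factorial(sum(e) + len(e)))


for d in (2, 3, 4):
    v = standard_simplex(d)
    for e in product(range(4), repeat=d):
        if sum(e) <= 3:
            assert f3(mono(e), v) == exact(e)
```

For d = 2 the combination expands to the weights A(S)/40 at the vertices, 9A(S)/40 at the center of gravity and A(S)/15 at the side midpoints.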

4 The 2-Simplex Case

In this section, we restrict ourselves to the case \(d=2\), in which S is a triangle with vertices \({\varvec{v}}_0\), \({\varvec{v}}_1\), \({\varvec{v}}_2\). In this particular case, the quadrature formula (2.14) reduces to

and, by straightforward computations, the bound for the approximation error becomes

The quadrature formula (3.19), which has degree of exactness 2 and uses only function data at the vertices of S, at the midpoints of its sides and at its center of gravity, becomes

where

and

The quadrature formula (3.22), which has degree of exactness 3 and uses only function data at the vertices of S, at the midpoints of its sides and at its center of gravity, becomes

where \({\varvec{g}}_l= \dfrac{{\varvec{v}}_{j}+{\varvec{v}}_{k}}{2}\) with \(\{j,k\}=\{0,1,2\}\setminus \{l\}\), i.e. \({\varvec{g}}_l\) is the midpoint of the side of the triangle S opposite to \({\varvec{v}} _l\). For \(d=2\) and \(\alpha =1/3\), the formula (3.7), which has degree of exactness 2 and uses function data at the midpoints of the sides of S and at the points \(\frac{{\varvec{g}}_l+2{\varvec{v}}_l}{3}\), \( l=0,1,2\), becomes

with

and \(R\left( 2,\frac{1}{3}\right) [f]\) defined in (3.8). To enhance the degree of exactness of the quadrature formula (4.6), let us consider the midpoint formula for the 2-dimensional simplex [5]

where \(E[f]=0\) whenever f is a polynomial in 2 variables of total degree at most 2. We set

and define the convex combination

where

Since, for all \(\alpha \in \mathbb {R}\), \(E_{\alpha }[f]=0\) whenever f is a polynomial in 2 variables of total degree at most 2, the quadrature formula (4.7) has degree of exactness at least 2.

Theorem 4.1

Let \(f:S\subset \mathbb {R}^{2} \rightarrow \mathbb {R}\) be three times continuously differentiable on S. Then, the quadrature formula

with

has degree of exactness 3.

Proof

Equality (4.10) follows by setting \(\alpha =\frac{4}{5}\) in (4.7) and by rearranging. To prove the degree of exactness of the formula (4.9), it is sufficient to follow the same arguments used in the proof of Theorem 3.5 for \(d=2\). \(\square \)
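The construction of (4.10) can be reproduced on the reference triangle \(\Delta _2\), where A = 1. The sketch takes (4.6) to be (3.18) with d = 2, i.e. \(\frac{A(S)}{48}\big (9\sum _l f(\frac{{\varvec{g}}_l+2{\varvec{v}}_l}{3})-\sum _l f({\varvec{g}}_l)\big )\). It also makes two assumptions. The midpoint formula of [5] is assumed to be \(\frac{A(S)}{6}\sum _l f({\varvec{g}}_l)\), i.e. one third of the area times the sum over the side midpoints. The combination (4.7) is read as \(\alpha \) times (4.6) plus \(1-\alpha \) times the midpoint formula.

With \(\alpha =4/5\), these assumptions give the weights \(A(S)\big (\frac{3}{20}\sum _l f(\frac{{\varvec{g}}_l+2{\varvec{v}}_l}{3})+\frac{1}{60}\sum _l f({\varvec{g}}_l)\big )\). These weights are our own computation, and the loop confirms exactness up to degree 3. The names are hypothetical.

```python
from fractions import Fraction
from math import factorial

# Reference triangle Delta_2, for which A(S) = 1.
V = [(Fraction(0), Fraction(0)), (Fraction(1), Fraction(0)), (Fraction(0), Fraction(1))]


def side_midpoints(v):
    """g_l: midpoint of the side opposite v_l."""
    return [tuple((a + b) / 2 for a, b in zip(*(w for k, w in enumerate(v) if k != l)))
            for l in range(3)]


def inner_points(v):
    """The points (g_l + 2 v_l) / 3 used by (4.6)."""
    g = side_midpoints(v)
    return [tuple((gi + 2 * vi) / 3 for gi, vi in zip(g[l], v[l])) for l in range(3)]


def rule_46(f, v, a_s=1):
    """(3.18) with d = 2: A(S)/48 * (9 sum f(inner points) - sum f(g_l))."""
    return Fraction(a_s, 48) * (9 * sum(map(f, inner_points(v)))
                                - sum(map(f, side_midpoints(v))))


def midpoint_rule(f, v, a_s=1):
    """ASSUMED midpoint formula of [5]: (area / 3) * sum f(g_l), area = A(S)/2."""
    return Fraction(a_s, 6) * sum(map(f, side_midpoints(v)))


def rule_47(f, v, alpha, a_s=1):
    """ASSUMED reading of (4.7): alpha * (4.6) + (1 - alpha) * midpoint rule."""
    return alpha * rule_46(f, v, a_s) + (1 - alpha) * midpoint_rule(f, v, a_s)


def rule_410(f, v, a_s=1):
    """Weights obtained by expanding rule_47 at alpha = 4/5 (our computation)."""
    return a_s * (Fraction(3, 20) * sum(map(f, inner_points(v)))
                  + Fraction(1, 60) * sum(map(f, side_midpoints(v))))


def exact(i, j):
    """Integral of x^i y^j over Delta_2: i! j! / (i + j + 2)!."""
    return Fraction(factorial(i) * factorial(j), factorial(i + j + 2))


for i in range(4):
    for j in range(4 - i):
        f = lambda x, i=i, j=j: x[0] ** i * x[1] ** j
        assert rule_47(f, V, Fraction(4, 5)) == rule_410(f, V) == exact(i, j)
```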

Finally, to obtain a quadrature formula over S with degree of exactness 4, we consider the convex combination of the quadrature formulas (4.4) and (4.10)

where

with \(\tilde{R}\left( \frac{9}{5} \right) [f]\) given by Eq. (3.21) and \(E_{\frac{4}{5} }[f]\) by Eq. (4.8). Since, for all \(\alpha \in \mathbb {R}\), \(E^{\prime }_{\alpha }[f]=0\) whenever f is a polynomial in 2 variables of total degree at most 3, the quadrature formula (4.11) has degree of exactness at least 3.

Theorem 4.2

Let \(f:S\subset \mathbb {R}^{2} \rightarrow \mathbb {R}\) be three times continuously differentiable on S and let \({\varvec{x}}^*\) be the center of gravity of S. Then, the quadrature formula

where

and

has degree of exactness 4.

Proof

Equality (4.13) follows by setting \(\alpha =\frac{1}{3}\) in (4.11). To prove the degree of exactness of the formula (4.12), we proceed by verifying the exactness of the quadrature formula for the monomials \(x^4\), \(x^3y\), \(xy^3\), \(x^2y^2\), \(y^4\), similarly to the proof of Theorem 3.5 for \(d=2\). \(\square \)
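Expanding the combination of Theorem 4.2 gives explicit weights that can be checked monomial by monomial. The sketch relies on three reconstructions of our own. Formula (4.4) is taken to have the weights \(A(S)\big (\frac{1}{40}\sum _l f({\varvec{v}}_l)+\frac{9}{40}f({\varvec{x}}^{*})+\frac{1}{15}\sum _l f({\varvec{g}}_l)\big )\). Formula (4.10) is taken to be \(A(S)\big (\frac{3}{20}\sum _l f({\varvec{y}}_l)+\frac{1}{60}\sum _l f({\varvec{g}}_l)\big )\) with \({\varvec{y}}_l=({\varvec{g}}_l+2{\varvec{v}}_l)/3\). Finally, (4.11) is read as \(\alpha \cdot (4.4)+(1-\alpha )\cdot (4.10)\).

With \(\alpha =1/3\) this yields \(A(S)\big (\frac{1}{120}\sum _l f({\varvec{v}}_l)+\frac{3}{40}f({\varvec{x}}^{*})+\frac{1}{30}\sum _l f({\varvec{g}}_l)+\frac{1}{10}\sum _l f({\varvec{y}}_l)\big )\). The loop below confirms exactness for all monomials of degree at most 4 on \(\Delta _2\). These weights are not copied from (4.12)–(4.13), and the names are hypothetical.

```python
from fractions import Fraction
from math import factorial

# Reference triangle Delta_2, for which A(S) = 1.
V = [(Fraction(0), Fraction(0)), (Fraction(1), Fraction(0)), (Fraction(0), Fraction(1))]


def nodes(v):
    """Vertices v_l, centroid x*, side midpoints g_l and points y_l = (g_l + 2 v_l)/3."""
    g = [tuple((a + b) / 2 for a, b in zip(*(w for k, w in enumerate(v) if k != l)))
         for l in range(3)]
    y = [tuple((gi + 2 * vi) / 3 for gi, vi in zip(g[l], v[l])) for l in range(3)]
    c = tuple(sum(w[i] for w in v) / 3 for i in range(2))
    return v, c, g, y


def rule_44(f, v, a_s=1):
    """Reconstructed degree-3 rule (4.4): weights 1/40, 9/40, 1/15 (times A(S))."""
    verts, c, g, _ = nodes(v)
    return a_s * (Fraction(1, 40) * sum(map(f, verts)) + Fraction(9, 40) * f(c)
                  + Fraction(1, 15) * sum(map(f, g)))


def rule_410(f, v, a_s=1):
    """Reconstructed degree-3 rule (4.10): weight 3/20 on y_l and 1/60 on g_l."""
    _, _, g, y = nodes(v)
    return a_s * (Fraction(3, 20) * sum(map(f, y)) + Fraction(1, 60) * sum(map(f, g)))


def rule_412(f, v, alpha=Fraction(1, 3), a_s=1):
    """ASSUMED reading of (4.11): alpha * (4.4) + (1 - alpha) * (4.10)."""
    return alpha * rule_44(f, v, a_s) + (1 - alpha) * rule_410(f, v, a_s)


def exact(i, j):
    """Integral of x^i y^j over Delta_2: i! j! / (i + j + 2)!."""
    return Fraction(factorial(i) * factorial(j), factorial(i + j + 2))


for i in range(5):
    for j in range(5 - i):
        f = lambda x, i=i, j=j: x[0] ** i * x[1] ** j
        assert rule_412(f, V) == exact(i, j)
```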

5 Numerical Results in \(d=2\)

To test the approximation accuracies of the proposed formulas, we consider the case \(d=2\) and the standard triangle \(S=\Delta _{2}\) of vertices \({\varvec{v}}_{0}=(0,0)\), \({\varvec{v}}_{1}=(1,0)\), \({\varvec{v}}_{2}=(0,1)\). The numerical experiments are conducted by considering the following set of test functions [1]

In all the experiments, the exact values of the integrals of \(f_{1} \) and \(f_{2}\) are taken to be the values obtained by numerical integration in Mathematica. In Table 1, we report the absolute value of the remainder terms \(E_{r}^{\Delta _{2}}[f_{i}]=\int _{\Delta _{2}}f_{i}({\varvec{x}}){\text {d}}{\varvec{x}}-Q_{r}^{\Delta _{2}}[f_{i}]\), \(i=1,\dots ,4\), \( r=1,\dots ,10,\) and, in Table 2, we display the absolute value of the remainder terms

References

[1] Costabile, F.A., Dell’Accio, F.: New embedded boundary-type quadrature formulas for the simplex. Numer. Algorithms 45(1–4), 253–267 (2007)

[2] Costabile, F.A., Dell’Accio, F., Guzzardi, L.: New bivariate polynomial expansion with boundary data on the simplex. Calcolo 45(3), 177–192 (2008)

[3] Dunavant, D.A.: High degree efficient symmetrical Gaussian quadrature rules for the triangle. Int. J. Numer. Methods Eng. 21(6), 1129–1148 (1985)

[4] Grundmann, A., Möller, H.M.: Invariant integration formulas for the n-simplex by combinatorial methods. SIAM J. Numer. Anal. 15(2), 282–290 (1978)

[5] Guenther, R.B., Roetman, E.L.: Newton–Cotes formulae in \(n\)-dimensions. Numer. Math. 14, 330–345 (1970)

[6] Guessab, A., Nouisser, O., Schmeisser, G.: Multivariate approximation by a combination of modified Taylor polynomials. J. Comput. Appl. Math. 196(1), 162–179 (2006)

[7] Guessab, A., Schmeisser, G.: Convexity results and sharp error estimates in approximate multivariate integration. Math. Comput. 73, 1365–1384 (2003)

[8] Guessab, A., Nouisser, O., Schmeisser, G.: Enhancement of the algebraic precision of a linear operator and consequences under positivity. Positivity 13(4), 693–707 (2009)

[9] He, T.X.: Dimensionality Reducing Expansion of Multivariate Integration, pp. 1–23. Birkhäuser, Boston (2001)

[10] Hesthaven, J.S.: From electrostatics to almost optimal nodal sets for polynomial interpolation in a simplex. SIAM J. Numer. Anal. 35(2), 655–676 (1998)

[11] Konerth, N.: Exact multivariate integration on simplices: an explanation of the Lasserre–Avrachenkov theorem. Technical report no. 2014-05 (AU-Cas-Mathstats) (2014)

[12] Lyness, J.N., Cools, R.: A survey of numerical cubature over triangles. In: Proceedings of Symposia in Applied Mathematics, vol. 48, pp. 127–150. American Mathematical Society (1994)

[13] Shunn, L., Ham, F.: Symmetric quadrature rules for tetrahedra based on a cubic close-packed lattice arrangement. J. Comput. Appl. Math. 236(17), 4348–4364 (2012)

[14] Silvester, P.: Symmetric quadrature formulae for simplexes. Math. Comput. 24(109), 95–100 (1970)

[15] Vermolen, F.J., Segal, A.: On an integration rule for products of barycentric coordinates over simplexes in \(\mathbb{R}^n\). J. Comput. Appl. Math. 330, 289–294 (2018)

[16] Wandzura, S., Xiao, H.: Symmetric quadrature rules on a triangle. Comput. Math. Appl. 45(12), 1829–1840 (2003)

[17] Zhang, L., Cui, T., Liu, H.: A set of symmetric quadrature rules on triangles and tetrahedra. J. Comput. Math. 27, 89–96 (2009)


Additional information


This research has been accomplished within the RITA “Research ITalian network on Approximation”. This research was supported by GNCS–INdAM 2019 project. The authors would like to thank Professor F. Dell’Accio, Professor O. Nouisser and the anonymous referee for their valuable comments.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Di Tommaso, F., Zerroudi, B. On Some Numerical Integration Formulas on the d-Dimensional Simplex. Mediterr. J. Math. 17, 142 (2020). https://doi.org/10.1007/s00009-020-01579-3


DOI: https://doi.org/10.1007/s00009-020-01579-3