Abstract

A geometric algebra provides a single environment in which geometric entities can be represented and manipulated and in which transforms can be applied to these entities. A number of versions of geometric algebra have been proposed, and the aim of this paper is to investigate one of them, which has a number of advantageous features. Points, lines and planes are represented naturally by elements of grades 1, 2 and 3 respectively. The self-reverse elements in the algebra form a field. This allows an equivalence relation between elements of grade 2 to be defined so that, although not every grade 2 element corresponds to a line, each equivalence class does, and vice versa. Examples are given to illustrate the ease with which geometric objects are represented and manipulated.


1 Introduction

Geometry is concerned with the properties of objects such as points and lines and whether these remain invariant under various transforms. In the 1800s, work by researchers such as Clifford, Grassmann and Hamilton sought means to represent transforms (particularly rigid-body transforms) such as rotations and translations. What emerged were various approaches including the quaternions and the Clifford and Grassmann algebras [1, 7,8,9, 18, 19]. However, these ideas lay dormant for the early part of the 1900s and transforms were studied using \(4 \times 4\) matrices and homogeneous coordinates [29]. The algebra-based ideas started to reappear towards the end of the 1900s as they provided a more robust means for dealing with transforms in such applications as computer games [20].

Of the algebra-based ideas, the quaternions and dual quaternions can be used as a means for handling rigid-body transforms of 3D space in a compact way [9, 17, 18, 22]. While they allow points to be defined, they are limited in their ability to represent other geometric objects (lines, planes, etc.).

The algebras of Clifford and Grassmann have evolved into the ideas of geometric algebra, which provides a single framework for dealing with both geometric objects (such as points, lines and planes) and the transformations acting upon them. A number of versions of geometric algebra have been proposed [27], which have somewhat different properties and are therefore better suited to specific applications. Perhaps the version most frequently used is the conformal geometric algebra (CGA) [2, 7, 33].

Other versions include the homogeneous model [10, 11, 30, 31] and projective geometric algebra (PGA) [12, 13], which are created by extending a real vector space of dimension 4 with a multiplication in which the square of one of the basis vectors is zero. This has the effect of associating vectors in the algebra with planes in the geometry rather than with points, which would seem more natural. An alternative version, \({\mathcal {G}}_4\), of this algebra has been proposed [3, 23, 24] in which the square of that particular basis element is made (effectively) infinite. This has the effect of associating vectors in the algebra with points. Both the homogeneous model and \({\mathcal {G}}_4\) use an underlying vector space of dimension 4 to describe 3D space, whereas the CGA uses dimension 5. This difference in dimension is considered in [21].

The purpose of this paper is to study geometric attributes of \({\mathcal {G}}_4\) in more detail. In particular, three significant properties are dealt with. The first is that points, lines and planes are represented naturally in the algebra by elements of grades 1, 2 and 3 respectively. A self-reverse element is a linear combination of elements of grade 0 and grade 4, and the second property is that the set of self-reverse elements forms a field. While lines are represented by elements of grade 2, not every such element corresponds to a line. However, the second property leads to the third: there is an equivalence relation between elements of grade 2, and every line corresponds to a unique equivalence class and vice versa.

To make this paper more self-contained, Sect. 2 provides an overview of how the geometric algebra \({\mathcal {G}}_4\) is formed, and Sect. 3 discusses self-reverse elements and their properties. Sect. 4 shows how geometric objects (points, lines and planes in three dimensions) are represented in \({\mathcal {G}}_4\). Lines are considered further in Sect. 5 where, as noted above, not all elements of grade 2 represent lines, but every equivalence class of such elements does. This section also discusses results relating geometric properties to relations between the elements representing them. Sect. 6 applies these results to two geometric applications [4]: proofs of Desargues’s theorem and of Fermat’s triangle theorem. Finally, Sect. 7 draws conclusions: the geometric algebra \({\mathcal {G}}_4\) provides a single environment in which three-dimensional geometric entities (such as points, lines and planes) can be represented in a natural way and rigid-body transforms can be applied to them. Elements representing geometric objects are shown to have a particular “grade”, and the algebra is rich enough to allow the grade to be used as a means of distinguishing object types.

2 Geometric Algebra \({\mathcal {G}}_4\)

There are various versions of geometric algebra. These include the conformal geometric algebra (CGA) [2, 7, 33], the homogeneous model [10, 11, 30, 31], and the projective geometric algebra (PGA) [12, 13].

The version used here is called \({\mathcal {G}}_4\). This is discussed in greater detail elsewhere [3, 23, 24], and what follows here is an overview of its construction. The \({\mathcal {G}}_4\) version is used here since the purpose of this paper is to investigate its properties, which differ from those of other versions of geometric algebra: in particular and importantly, as discussed in Sect. 3, the subalgebra of self-reverse elements forms a field.

The algebra \({\mathcal {G}}_4\) can be regarded as a real vector space of dimension 16 which has basis elements denoted by \(e_{\sigma }\) where \(\sigma \) is an ordered subset of the set of subscripts \(\{ 0, 1, 2, 3 \}\). The basis vectors are the elements \(e_0\), \(e_1\), \(e_2\), \(e_3\). A multiplication is created on the basis elements by defining the following products of basis vectors

\[ e_ie_j = e_{ij}, \qquad e_ie_je_k = e_{ijk}, \qquad e_0e_1e_2e_3 = e_{0123}, \]

where \(i< j < k\) (\(0 \le i,j,k \le 3\)) are distinct subscripts. Additionally the following definition is made

\[ e_je_i = -e_ie_j \qquad (i \ne j). \]

The squares of the basis vectors are defined to be

\[ e_0^2 = {{\varepsilon }^{-1}}, \qquad e_1^2 = e_2^2 = e_3^2 = 1, \]

where \(\varepsilon \) is a symbol which can be regarded as a small real quantity.

The typical element a of \({\mathcal {G}}_4\) is a linear combination of basis elements

\[ a = \sum _{\sigma } a_{\sigma }e_{\sigma }, \tag{2.3} \]

where the \(a_{\sigma }\) are real coefficients. The multiplication is extended to the product of two such general elements by multiplying out on a term-by-term basis. The basis element \(e_{\emptyset }\) corresponding to the empty set acts as unity with respect to multiplication and is identified with the real number 1. The basis element \(e_{0123}\) is denoted by \(\omega \):

\[ {\omega } = e_{0123}. \]

The quantity \(\varepsilon \) is carried through all multiplications. This means that the coefficients of the basis elements become polynomials in \(\varepsilon \) and \({\varepsilon }^{-1}\), and, as a result of other operations, they can also become power series in \(\varepsilon \). So an alternative view is that the typical element a in (2.3) is a linear combination of basis elements with coefficients \(a_{\sigma }\) lying in the field \(\mathbb {R}(({\varepsilon }))\) of formal (Laurent) power series in \(\varepsilon \). Such a coefficient is here called an \(\varepsilon \)-scalar and has the form

\[ \sum _{i=m}^{\infty } {\alpha }_i{\varepsilon }^i = {\alpha }_m{\varepsilon }^m + {\alpha }_{m+1}{\varepsilon }^{m+1} + \cdots , \]

where the \({\alpha }_i\) are real numbers and m is a finite integer (possibly negative). Assuming that \({\alpha }_m\) is non-zero, it is called the leading coefficient, and m is the leading power. An element \(a \in {\mathcal {G}}_4\) is said to be of order \({\varepsilon }^i\) if the leading power of each of its non-zero coefficients is at least i, and at least one is equal to i. This is written \(a = O({\varepsilon }^i)\). Similarly, \(a \simeq b\) denotes that \(a-b = O({\varepsilon })\).
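As a small illustration of these definitions, the following Python sketch stores an \(\varepsilon \)-scalar as a dictionary mapping powers of \(\varepsilon \) to rational coefficients and computes leading powers, the order of an element and the relation \(\simeq \). Truncating to finitely many terms is an assumption of the sketch (genuine elements of \(\mathbb {R}(({\varepsilon }))\) may be infinite series), and all function names are hypothetical.

```python
from fractions import Fraction

# Sketch assumption: an epsilon-scalar is truncated to a finite Laurent
# polynomial {power of eps: coefficient}; true elements of R((eps)) may be
# infinite series.

def clean(a):
    """Drop zero coefficients and convert the rest to Fractions."""
    return {k: Fraction(v) for k, v in a.items() if v != 0}

def leading_power(a):
    """Smallest power of eps with a non-zero coefficient (None for zero)."""
    a = clean(a)
    return min(a) if a else None

def leading_coefficient(a):
    """Coefficient of the leading power (None for zero)."""
    m = leading_power(a)
    return None if m is None else clean(a)[m]

def order(coeffs):
    """Order eps^i of an element given by its epsilon-scalar coefficients:
    the smallest leading power among the non-zero coefficients."""
    powers = [leading_power(c) for c in coeffs]
    powers = [p for p in powers if p is not None]
    return min(powers) if powers else None

def difference(a, b):
    """a - b for two epsilon-scalars."""
    return clean({k: a.get(k, 0) - b.get(k, 0) for k in set(a) | set(b)})

def approx_equal(a_coeffs, b_coeffs):
    """a ~= b: each coefficient of a - b is zero or has leading power >= 1
    (our reading of a - b = O(eps))."""
    for a, b in zip(a_coeffs, b_coeffs):
        m = leading_power(difference(a, b))
        if m is not None and m < 1:
            return False
    return True
```

For example, the \(\varepsilon \)-scalar \(2{\varepsilon }^{-1} + 3\), written `{-1: 2, 0: 3}`, has leading power \(-1\) and leading coefficient 2.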

The grade of the basis element \(e_{\sigma }\) is the size of the subset \(\sigma \). More generally, if an element is a linear combination of basis elements of a single grade, then this is also the grade of the element. Elements of grade 1 are called vectors; those of grade 2 are bivectors; and grade 3 elements are trivectors. An element of the form \({\alpha } + {\varepsilon }{\beta }{\omega }\) where \({\alpha }\) and \({\beta }\) are \(\varepsilon \)-scalars is called a self-reverse element.

The reverse of a basis element is obtained by reversing the order of its subscripts. For example

\[ e_{012}^{\dagger } = e_2e_1e_0 = -e_{012}. \]

The reverse of the general element of (2.3) is obtained by taking the reverse of each of its summands.
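The multiplication rules and the reverse can be checked mechanically. The sketch below (an illustration, not the authors' implementation; the helper names are hypothetical) multiplies two basis elements, given as sorted tuples of subscripts, by sorting the combined subscripts while flipping the sign at each swap, and then contracting repeated subscripts with \(e_0^2 = {\varepsilon }^{-1}\) and \(e_1^2 = e_2^2 = e_3^2 = 1\). It recovers \({\omega }^2 = {\varepsilon }^{-1}\), \({\omega }^{\dagger } = {\omega }\) and the anticommutation of \(\omega \) with vectors.

```python
# Squares of the basis vectors in G4, recorded as powers of eps:
# e0^2 = eps^(-1) and e1^2 = e2^2 = e3^2 = 1 = eps^0.
SQUARE_EPS_POWER = {0: -1, 1: 0, 2: 0, 3: 0}

def blade_product(a, b):
    """Product e_a e_b of basis elements (a, b sorted tuples of subscripts).

    Returns (sign, eps_power, blade): e_a e_b = sign * eps**eps_power * e_blade.
    """
    seq = list(a) + list(b)
    sign = 1
    # Bubble sort: each swap exchanges two distinct basis vectors, and
    # e_i e_j = -e_j e_i flips the sign.
    for i in range(len(seq)):
        for j in range(len(seq) - 1 - i):
            if seq[j] > seq[j + 1]:
                seq[j], seq[j + 1] = seq[j + 1], seq[j]
                sign = -sign
    # Equal subscripts are now adjacent: replace each pair e_i e_i by its square.
    out, eps_power = [], 0
    for idx in seq:
        if out and out[-1] == idx:
            out.pop()
            eps_power += SQUARE_EPS_POWER[idx]
        else:
            out.append(idx)
    return sign, eps_power, tuple(out)

def reverse_sign(blade):
    """e_sigma^dagger = (-1)^(k(k-1)/2) e_sigma for a basis element of grade k,
    since reversing k subscripts takes k(k-1)/2 transpositions."""
    k = len(blade)
    return -1 if (k * (k - 1) // 2) % 2 else 1

OMEGA = (0, 1, 2, 3)
```

For instance, `blade_product(OMEGA, OMEGA)` returns `(1, -1, ())`, that is \({\omega }^2 = {\varepsilon }^{-1}\), and `reverse_sign(OMEGA)` is 1, that is \({\omega }^{\dagger } = {\omega }\).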

An inner and outer product of any two elements \(x, y \in {\mathcal {G}}_4\) are defined by equations

Note that these definitions differ from others [7, 25], which rely upon finding the components of a particular grade within a product. The above definitions involve only addition, subtraction and multiplication of elements within the algebra.

In addition, if \(a, b, c \in {\mathcal {G}}_4\) are any three elements, their triproduct is defined to be the following.

The following result is immediate.

Lemma 2.1

If \(a, b, c \in {\mathcal {G}}_4\) are three elements, then

1. \(\left[ a , b , c \right] = \left[ b , c , a \right] = \left[ c , a , b \right] = -\left[ a , c , b \right] = -\left[ b , a , c \right] = -\left[ c , b , a \right] \);

2. if any two of a, b, c are equal, then \(\left[ a , b , c \right] = 0\).

\(\square \)

The next two results relate the triproduct to the inner and outer products when the three elements involved have the same grade.

Lemma 2.2

If \(x, y \in {\mathcal {G}}_4\) have the same grade k, then the inner product \(x \cdot y\) commutes with all elements of grade k.

Proof

Firstly note that \(x \cdot y\) has even grade and is equal to its own reverse. Hence it is a self-reverse element. Since \(\omega \) commutes with all even-grade elements of \({\mathcal {G}}_4\), this proves the result when k is 0, 2 or 4.

If k is 1 or 3, there is not enough scope for any \(\omega \) component to appear in the products forming \(x \cdot y\), and so it is a pure \(\varepsilon \)-scalar and the result is trivial. \(\square \)

Lemma 2.3

If \(a, b, c \in {\mathcal {G}}_4\) are three elements of the same grade, then

Further

Proof

Multiplying out the first three products gives

The difference of the first two gives

since \(a \cdot c\) commutes with b by Lemma 2.2. So

and, by symmetry, these are also equal to the third of the products; call this common value t. Then adding the expressions for the three products gives

so that \(t = \left[ a , b , c \right] \).

Also, from Eq. (2.4), it is seen that

By symmetry, this common value is also equal to \(abc-cba\), and the last part of the lemma follows. \(\square \)

As discussed in Sect. 4, a point in three-dimensional space is represented by the vector

\[ p = We_0 + Xe_1 + Ye_2 + Ze_3, \]

where W is the additional coordinate and the Cartesian coordinates of the point are (X/W, Y/W, Z/W). Products of vectors representing points are now considered.

Lemma 2.4

If \(p_1, p_2, p_3 \in {\mathcal {G}}_4\) are three vectors, then

Proof

The first equality is from Lemma 2.3. The second follows by multiplying out the triproduct. \(\square \)

Corollary 2.5

Three vectors \(p_1\), \(p_2\), \(p_3\) are linearly dependent if and only if \(\left[ p_1 , p_2 , p_3 \right] \) is zero. \(\square \)

Lemma 2.6

If \(p_1\), \(p_2\), \(p_3\), \(p_4\) are four vectors, then

where the \(W_i\) are the additional coordinates of the vectors \(p_i\), and V is the volume of the tetrahedron formed by the points corresponding to the four vectors.

Proof

This is derived from Lemma 2.4 by taking the outer product with \(p_4\). \(\square \)

Corollary 2.7

Four vectors \(p_1\), \(p_2\), \(p_3\), \(p_4\), with non-zero additional components, represent coplanar points if and only if

\[ \left[ p_1 , p_2 , p_3 \right] \wedge p_4 = 0. \]

\(\square \)

Corollary 2.8

If \(p_i = e_0 + q_i\) for \(i=1,2,3,4\) are four vectors with each \(q_i = x_i e_1 + y_i e_2 + z_i e_3\) independent of \(e_0\), then

where V is the volume of the tetrahedron formed by the \(p_i\).

Proof

Using Lemma 2.3

From Lemma 2.4,

with similar expressions for the other triple products.

Combining the determinants gives

and the result follows from the last lemma. \(\square \)
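Numerically, the coplanarity test and the volume appearing in Lemma 2.6 and Corollaries 2.7 and 2.8 correspond to the classical \(4 \times 4\) determinant of homogeneous coordinates, which equals \(W_1W_2W_3W_4\) times six times the signed volume of the tetrahedron. The sketch below uses only that standard identity; it does not reproduce the exact constant or sign convention of Lemma 2.6, and the function names are hypothetical.

```python
from fractions import Fraction

def det(m):
    """Determinant by cofactor expansion along the first row (adequate for 4 x 4)."""
    if len(m) == 1:
        return m[0][0]
    total = 0
    for j in range(len(m)):
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * m[0][j] * det(minor)
    return total

def signed_volume(points):
    """Signed tetrahedron volume from four homogeneous points (W, X, Y, Z), W != 0.

    Uses det[W_i, X_i, Y_i, Z_i] = W1 W2 W3 W4 * det[1, x_i, y_i, z_i]
    and det[1, x_i, y_i, z_i] = 6 * (signed volume).
    """
    w_product = 1
    for p in points:
        w_product *= p[0]
    return Fraction(det([list(p) for p in points]), 6 * w_product)

def coplanar(points):
    """Four points with non-zero W are coplanar iff the 4 x 4 determinant vanishes."""
    return det([list(p) for p in points]) == 0
```

Rescaling a point by a non-zero W multiplies the determinant by W but leaves the volume unchanged, mirroring the fact that \(\varepsilon \)-scalar multiples of a vector represent the same geometric point.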

Lemma 2.9

For any elements \(a, b, c \in {\mathcal {G}}_4\)

Proof

Expanding the right hand side gives the following

\(\square \)

3 Self-Reverse Elements

The only non-zero elements of \({\mathcal {G}}_4\) that have grade 4 are multiples of \(\omega = e_{0123}\). This element anticommutes with every element of odd grade, commutes with every element of even grade, and satisfies \({\omega }^2 = {{\varepsilon }^{-1}}\) and \({\omega }^{\dagger }={\omega }\).

More generally, an element of the form

\[ {\gamma } = {\alpha } + {\varepsilon }{\beta }{\omega }, \tag{3.1} \]

where \(\alpha \) and \(\beta \) are \(\varepsilon \)-scalars, is called a self-reverse element. Such elements are the only ones of even grade equal to their own reverse.

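The conjugate \({\gamma }^{*}\) of a self-reverse element \({\gamma } = {\alpha } + {\varepsilon }{\beta }{\omega }\) is obtained by changing the sign of \(\omega \), that is, \({\gamma }^{*} = {\alpha } - {\varepsilon }{\beta }{\omega }\).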

Theorem 3.1

The self-reverse elements form a field.

Proof

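The only property that is not immediately obvious is the existence of multiplicative inverses. If \({\gamma } = {\alpha }+{\varepsilon }{\beta }{\omega }\) is a non-zero self-reverse element then, since \({\varepsilon }^2{\omega }^2 = {\varepsilon }\), it has a multiplicative inverse in the element

\[ {\gamma }^{-1} = \frac{{\alpha } - {\varepsilon }{\beta }{\omega }}{{\alpha }^2 - {\varepsilon }{\beta }^2}. \]

The denominator cannot be zero: the leading power of \({\alpha }^2\) is even and that of \({\varepsilon }{\beta }^2\) is odd, so the two terms cannot cancel unless \(\alpha \) and \(\beta \) are both zero. \(\square \)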
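The inverse in Theorem 3.1 can be checked with exact rational arithmetic if, as a simplifying assumption, \(\varepsilon \) is given a fixed rational value, so that \(\varepsilon \)-scalars become ordinary rationals. The sketch stores \({\alpha } + {\varepsilon }{\beta }{\omega }\) as the pair \((\alpha , \beta )\) and uses \({\varepsilon }^2{\omega }^2 = {\varepsilon }\) in the product. With a numerical \(\varepsilon \) the denominator \({\alpha }^2 - {\varepsilon }{\beta }^2\) can vanish (for example \(\varepsilon = 1/4\), \(\alpha = 1\), \(\beta = 2\)), which is exactly what the argument with leading powers rules out in the formal setting. The class name `SelfReverse` is hypothetical.

```python
from fractions import Fraction

class SelfReverse:
    """The self-reverse element alpha + eps*beta*omega, with omega^2 = 1/eps.

    Sketch assumption: eps is a fixed non-zero rational number rather than a
    formal variable, so alpha and beta are plain rationals.
    """

    def __init__(self, alpha, beta, eps):
        self.alpha = Fraction(alpha)
        self.beta = Fraction(beta)
        self.eps = Fraction(eps)

    def __mul__(self, other):
        # (a1 + e b1 w)(a2 + e b2 w) = (a1 a2 + e b1 b2) + e (a1 b2 + b1 a2) w,
        # using e^2 w^2 = e.
        e = self.eps
        return SelfReverse(self.alpha * other.alpha + e * self.beta * other.beta,
                           self.alpha * other.beta + self.beta * other.alpha, e)

    def conjugate(self):
        """Change the sign of omega."""
        return SelfReverse(self.alpha, -self.beta, self.eps)

    def inverse(self):
        """gamma^(-1) = (alpha - eps*beta*omega) / (alpha^2 - eps*beta^2)."""
        d = self.alpha ** 2 - self.eps * self.beta ** 2
        if d == 0:
            raise ZeroDivisionError("alpha^2 - eps*beta^2 vanishes for this numerical eps")
        return SelfReverse(self.alpha / d, -self.beta / d, self.eps)

    def __eq__(self, other):
        return (self.alpha, self.beta, self.eps) == (other.alpha, other.beta, other.eps)
```

Multiplying an element by its conjugate gives the \(\varepsilon \)-scalar \({\alpha }^2 - {\varepsilon }{\beta }^2\), which is why the inverse is the conjugate divided by that quantity.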

The next results consider when a self-reverse element can have a square root. Such roots are used in other forms of geometric algebra including the CGA [6].

Lemma 3.2

Suppose that \(\alpha \) is an \(\varepsilon \)-scalar with a positive leading coefficient. Then

(i) if its leading power is even, \(\alpha \) has a square root which is an \(\varepsilon \)-scalar;

(ii) more generally, \(\alpha \) has a square root which is a self-reverse element.

Proof

In case (i), the element has the form

where the \({\alpha }_i\) are real numbers, \({\alpha }_0 > 0\), and \(\beta \) has non-negative leading power. Then, using the binomial expansion, \(\alpha \) has two square roots, namely

In case (ii), if the leading power of \(\alpha \) is even, case (i) applies. If it is odd, then, since \({\varepsilon }{\omega }^2=1\), \(\alpha \) can be written as \({\alpha } = {\omega }^2 ( {\varepsilon }{\alpha } )\) and its square roots are \(\pm {\omega } \surd [ {\varepsilon }{\alpha } ]\) which exist by case (i). \(\square \)

Lemma 3.3

Suppose that \({\gamma } = {\alpha } + {\varepsilon }{\beta }{\omega }\) is a self-reverse element where \({\alpha }\) has zero leading power and positive leading coefficient, and the leading power of \(\beta \) is non-negative. Then \(\gamma \) has a square root.

Proof

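Since \(\alpha \) is non-zero, the self-reverse element can be expressed as

\[ {\gamma } = {\alpha }\left( 1 + {\varepsilon }{\phi }{\omega } \right) , \]

where \({\phi } = {\beta }/{\alpha }\). Here \(\phi \) is an \(\varepsilon \)-scalar whose leading power is non-negative.

Lemma 3.2 shows that \(\alpha \) has a square root. Hence, using the binomial series and \({\varepsilon }^2{\omega }^2 = {\varepsilon }\), the following are square roots of \(\gamma \):

\[ \pm \surd {\alpha } \left( 1 + {\textstyle \frac{1}{2}}{\varepsilon }{\phi }{\omega } - {\textstyle \frac{1}{8}}{\varepsilon }{\phi }^2 + \cdots \right) . \]

\(\square \)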

Since the power series being used are treated as “formal”, the question of their convergence is not important. However, it can be noted that the series appearing in the last two lemmas are derived from standard convergent series and hence are themselves convergent for suitably small values of \(\varepsilon \).
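A numerical illustration of Lemmas 3.2 and 3.3, treating \(\varepsilon \) as a small positive floating-point number rather than a formal variable (an assumption of the sketch): writing \({\omega } = w/\surd {\varepsilon }\) with \(w^2 = 1\), the element \({\alpha } + {\varepsilon }{\beta }{\omega }\) becomes \({\alpha } + \surd {\varepsilon }\,{\beta } w\), and the algebra splits into the two real values \({\alpha } \pm \surd {\varepsilon }\,{\beta }\), whose square roots can be recombined. Under the hypotheses of Lemma 3.3 both values are positive for small \(\varepsilon \), and the computed root agrees to leading order with \(\surd {\alpha }(1 + \frac{1}{2}{\varepsilon }{\phi }{\omega } + \cdots )\). Function names are hypothetical.

```python
import math

def sqrt_self_reverse(alpha, beta, eps):
    """A square root a + eps*b*omega of alpha + eps*beta*omega (omega^2 = 1/eps).

    Sketch assumption: eps is a small positive float, not a formal variable.
    With w = sqrt(eps)*omega (so w^2 = 1) the element is x + y*w with
    x = alpha, y = sqrt(eps)*beta, and x + y*w corresponds to the real pair (x + y, x - y).
    """
    s = math.sqrt(eps)
    u, v = alpha + s * beta, alpha - s * beta
    if u <= 0 or v <= 0:
        raise ValueError("no real square root for this numerical eps")
    ru, rv = math.sqrt(u), math.sqrt(v)
    # Back from the pair (ru, rv): x = (ru + rv)/2 and y = (ru - rv)/2 = sqrt(eps)*b.
    return (ru + rv) / 2, (ru - rv) / (2 * s)

def square(alpha, beta, eps):
    """(alpha + eps*beta*omega)^2 = (alpha^2 + eps*beta^2) + eps*(2*alpha*beta)*omega."""
    return alpha * alpha + eps * beta * beta, 2 * alpha * beta
```

With \(\alpha = 2\), \(\beta = 3\) and \(\varepsilon = 10^{-4}\), the returned pair squares back to \((2, 3)\) up to rounding, and is close to \((\surd 2, 3/(2\surd 2))\), the first two terms of the binomial series.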

4 Points and Planes

The typical vector in \({\mathcal {G}}_4\) is the element

\[ p = We_0 + Xe_1 + Ye_2 + Ze_3. \]

This is used to represent the point (W, X, Y, Z) in the projective space \({\mathbb {RP}(({\varepsilon }))}^3\), and the point (X/W, Y/W, Z/W) in \({\mathbb {R}(({\varepsilon }))}^3\). By letting \(\varepsilon \) become zero, it also represents a point in Euclidean space \({\mathbb {R}}^3\), assuming that the components remain finite.

An element of \({\mathcal {G}}_4\) is said to be in standard form if the coefficients of its components have non-negative leading powers and at least one leading power is zero. This definition is only used here with respect to the particular choice of basis vectors. However, it is independent of that choice provided the new set of basis vectors satisfies (2.1) and (2.2). Clearly, any element can be put into standard form by multiplying by a power of \(\varepsilon \), and any \(\varepsilon \)-scalar multiple of a point p corresponds to the same point in Euclidean space.

Conversely, the point (x, y, z) is represented by the vector \(W(e_0 + xe_1 + ye_2 + ze_3) \in {\mathcal {G}}_4\) for any non-zero choice of the \(\varepsilon \)-scalar W.

A vector in \({\mathcal {G}}_4\) is also called a point. When required to avoid confusion, the corresponding entity in Euclidean space is called a geometric point. The component W of the vector p is the additional coordinate, and p, in standard form, is said to be finite if W has zero leading power, and normalized if \(W=1\).
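The notions of standard form, finite and normalized vectors can be sketched by representing each \(\varepsilon \)-scalar as a dictionary from powers of \(\varepsilon \) to rational coefficients, restricted to finitely many terms (an assumption). The helper names are hypothetical; `cartesian_limit` returns (X/W, Y/W, Z/W) in the limit \(\varepsilon \rightarrow 0\) for a finite vector.

```python
from fractions import Fraction

def leading_power(c):
    """Smallest power of eps with a non-zero coefficient (None for zero)."""
    powers = [k for k, v in c.items() if v != 0]
    return min(powers) if powers else None

def scale_by_eps_power(c, n):
    """Multiply an epsilon-scalar {power: coefficient} by eps**n."""
    return {k + n: Fraction(v) for k, v in c.items() if v != 0}

def to_standard_form(vector):
    """Scale (W, X, Y, Z) by a power of eps so that all leading powers are
    non-negative and at least one is zero."""
    m = min(p for p in map(leading_power, vector) if p is not None)
    return [scale_by_eps_power(c, -m) for c in vector]

def is_finite(vector):
    """Finite: in standard form, W has leading power zero."""
    return leading_power(to_standard_form(vector)[0]) == 0

def cartesian_limit(vector):
    """(X/W, Y/W, Z/W) in the limit eps -> 0, for a finite vector."""
    if not is_finite(vector):
        raise ValueError("vector is not finite")
    v = to_standard_form(vector)
    w0 = v[0][0]
    return tuple(c.get(0, Fraction(0)) / w0 for c in v[1:])
```

For example, the vector with \(W = 2{\varepsilon }^{-1}\), \(X = 4{\varepsilon }^{-1} + 1\), \(Y = 6\) and \(Z = 0\) is finite and corresponds to the geometric point (2, 0, 0), while a direction (W = 0) is not finite.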

A vector of the form

\[ Xe_1 + Ye_2 + Ze_3, \]

whose additional coordinate is zero, is regarded as a direction.

In Euclidean geometry, three geometric points which are not collinear define a plane. If \(p_1, p_2, p_3 \in {\mathcal {G}}_4\) represent three such points, then the plane is represented by the triproduct \({\Pi } = \left[ p_1 , p_2 , p_3 \right] \). It is assumed that \({\Pi }\) is non-zero, and this is the case precisely when the points are not collinear, as shown in the following lemma.

Lemma 4.1

Suppose that \({\Pi }=\left[ p_1 , p_2 , p_3 \right] \) is the triproduct of three points. Then

(i) \({\Pi }\) is non-zero if and only if the points are linearly independent (so that they do indeed form a plane);

(ii) a point q lies in the plane \({\Pi }\) if and only if \({\Pi } \wedge q = 0\);

(iii) if \({\Pi }\) is a plane and \(q_1\), \(q_2\), \(q_3\) are three points in the plane which are not collinear, then \(\left[ q_1 , q_2 , q_3 \right] \) is the product of \({\Pi }\) and a non-zero \(\varepsilon \)-scalar.

Proof

Lemma 2.4 expresses \({\Pi }\) as a determinant, and this is non-zero if and only if the points are linearly independent. This gives (i). Part (ii) follows from Corollary 2.7.

For (iii), choose two independent directions u and v in the Euclidean plane, and suppose that \(p_0\) is a point in the plane, with

Then

for \(\varepsilon \)-scalars \({\lambda }_i\) and \({\mu }_i\). Column operations on the determinant in Lemma 2.4 show that \({\Pi }\) is a non-zero \(\varepsilon \)-scalar multiple of

Similarly, \(\left[ q_1 , q_2 , q_3 \right] \) is also a (possibly different) non-zero \(\varepsilon \)-scalar multiple of this determinant as required. \(\square \)

This means that the triproduct \({\Pi }\) representing a plane is independent of the choice of points within the plane, up to multiplication by a non-zero \(\varepsilon \)-scalar.
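Assuming, as the determinant in Lemma 2.4 indicates, that for three vectors the triproduct is (up to a constant factor) the trivector whose \(e_{ijk}\) coefficients are the \(3 \times 3\) minors of the \(3 \times 4\) matrix of coordinates (W, X, Y, Z), Lemma 4.1(iii) can be checked numerically: different non-collinear triples in the same plane give proportional coefficient sets, and collinear points give zero. The function names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

def det3(m):
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def plane_coefficients(p1, p2, p3):
    """Coefficients of e_ijk (i < j < k) for three points given as (W, X, Y, Z):
    the 3 x 3 minors of the 3 x 4 coordinate matrix."""
    rows = [p1, p2, p3]
    return {cols: det3([[r[c] for c in cols] for r in rows])
            for cols in combinations(range(4), 3)}

def proportional(u, v):
    """True if two coefficient dicts (same keys) differ by a non-zero scalar factor."""
    ratio = None
    for k in sorted(u):
        if (u[k] == 0) != (v[k] == 0):
            return False
        if u[k] != 0:
            r = Fraction(v[k]) / u[k]
            if ratio is None:
                ratio = r
            elif r != ratio:
                return False
    return ratio is not None
```

For the plane z = 1, the triples (0, 0, 1), (1, 0, 1), (0, 1, 1) and (1, 1, 1), (3, -1, 1), (0, 5, 1) (the second given with W = 2 for its first point) produce coefficient sets differing by the factor 12.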

Lemma 4.2

If q is a point in a plane \({\Pi }\), or a combination (by addition and/or multiplication) of such points, then q and \({\Pi }\) commute.

Proof

Suppose that \({\Pi } = \left[ p_1 , p_2 , p_3 \right] \) where \(p_1\), \(p_2\), \(p_3\) are three points defining the plane. If q is a point in the plane, then Corollary 2.7 says that \({\Pi } \wedge q = 0\), and so \({\Pi }\) and q commute. The extension to a combination of points in the plane is immediate. \(\square \)

5 Lines

The previous section shows that points and planes correspond precisely to elements of grades 1 and 3 respectively in \({\mathcal {G}}_4\). This section considers lines, and the situation is not as simple. A line is defined as the outer product of two points and as such is an element of grade 2. However, not every element of grade 2 in \({\mathcal {G}}_4\) is a line. It is shown that an equivalence relation can be established between elements. This relies upon the result, Theorem 3.1, that the self-reverse elements form a field. It can then be shown that lines correspond precisely to the associated equivalence classes (Theorem 5.13). Additionally, this section provides some results relating geometric properties of lines to expressions involving elements of \({\mathcal {G}}_4\). Lines appear in other forms of geometric algebra (e.g. [11, 13, 14, 16]).

A line in \({\mathcal {G}}_4\) is defined to be the outer product \(p_1 \wedge p_2\) of two vectors. It is a finite line if it is non-zero and the two vectors are finite and represent different geometric points.

Lemma 5.1

Suppose that \({\ell } = p_1 \wedge p_2\) is a finite line. Then

(i) if \(q \in {\mathcal {G}}_4\) is a finite vector corresponding to a point on the line joining the geometric points \(p_1\) and \(p_2\), then \(p_1 \wedge q\) and \(q \wedge p_2\) are \(\varepsilon \)-scalar multiples of \(\ell \);

(ii) if \(q_1, q_2 \in {\mathcal {G}}_4\) are finite vectors corresponding to points on the line joining the geometric points \(p_1\) and \(p_2\), then \(q_1 \wedge q_2\) is an \(\varepsilon \)-scalar multiple of \(\ell \).

Proof

In case (i), \(q = {\lambda }_1p_1 + {\lambda }_2p_2\) where \({\lambda }_1\) and \({\lambda }_2\) are \(\varepsilon \)-scalars, so that \(p_1 \wedge q = {\lambda }_2{\ell }\) and \(q \wedge p_2 = {\lambda }_1{\ell }\).

Case (ii) is an obvious extension of (i). \(\square \)

Thus any line in Euclidean space is represented by the element \(p_1 \wedge p_2\), where \(p_1\) and \(p_2\) correspond to geometric points on the line. Except for multiplication by a non-zero \(\varepsilon \)-scalar, the element is independent of the choice of the points.

Lemma 5.2

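If \(p_1\) and \(p_2\) are vectors with \(p_i = W_ie_0 + X_ie_1 + Y_ie_2 + Z_ie_3\) for \(i=1,2\), then \(p_1 \wedge p_2\) is given as

\[ \begin{aligned} p_1 \wedge p_2 = {}& (W_1X_2 - W_2X_1)e_{01} + (W_1Y_2 - W_2Y_1)e_{02} + (W_1Z_2 - W_2Z_1)e_{03} \\ & + (X_1Y_2 - X_2Y_1)e_{12} + (X_1Z_2 - X_2Z_1)e_{13} + (Y_1Z_2 - Y_2Z_1)e_{23}. \end{aligned} \]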

Proof

The proof is by multiplying out the product. \(\square \)

Note that the coefficients in \(p_1 \wedge p_2\) are the Plücker coordinates of the line joining the points, showing that the representation of a line as a bivector is closely related to other representations and also to the associated theory of screws [26, 28, 31].
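The coefficients in Lemma 5.2 are easy to compute directly. The hypothetical helper below returns them as a dictionary keyed by subscript pairs, and the checks illustrate two facts: the classical quadratic Plücker relation \(c_{01}c_{23} - c_{02}c_{13} + c_{03}c_{12} = 0\), which holds for every outer product of two vectors, and the scaling behaviour of Lemma 5.1, where replacing a point by another point on the line multiplies the coefficients by a scalar (here an ordinary number rather than a general \(\varepsilon \)-scalar).

```python
from itertools import combinations

PAIRS = list(combinations(range(4), 2))   # (0,1), (0,2), (0,3), (1,2), (1,3), (2,3)

def outer(p, q):
    """Coefficients c_ij (i < j) of p ^ q for points given as (W, X, Y, Z),
    following the pattern of Lemma 5.2."""
    return {(i, j): p[i] * q[j] - p[j] * q[i] for i, j in PAIRS}

def plucker_relation(l):
    """c01*c23 - c02*c13 + c03*c12, which vanishes for every outer product
    of two vectors."""
    return l[0, 1] * l[2, 3] - l[0, 2] * l[1, 3] + l[0, 3] * l[1, 2]
```

For \(q = 2p_1 + 5p_2\), the sketch gives \(p_1 \wedge q = 5(p_1 \wedge p_2)\) and \(q \wedge p_2 = 2(p_1 \wedge p_2)\), as in the proof of Lemma 5.1.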

Lemma 5.3

Every line \(\ell \) is a bivector and \({\ell }^{\dagger }{\ell }\) is an \(\varepsilon \)-scalar.

Proof

Suppose that \({\ell } = p_1 \wedge p_2\) where \(p_1\) and \(p_2\) are vectors. Then Lemma 5.2 shows that \(\ell \) is a bivector, and, using the notation of that lemma,

where

so that \({\ell }^{\dagger }{\ell } = a\) is an \(\varepsilon \)-scalar. \(\square \)

Corollary 5.4

If \({\ell } = p_1 \wedge p_2\) is a line, then \({\varepsilon }{\ell }^{\dagger }{\ell } \simeq (W_1W_2)^2d^2\) where d is the distance between the points \(p_1\) and \(p_2\), and \(W_1\) and \(W_2\) are their additional coordinates.

Proof

From the proof of Lemma 5.3,

where \((x_1,y_1,z_1)\) and \((x_2,y_2,z_2)\) are the Cartesian coordinates of the points. This proves the result. \(\square \)
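Corollary 5.4 can be checked from the coefficients of Lemma 5.2. From the squares of the basis vectors, \(e_{0i}^2 = -{\varepsilon }^{-1}\) and \(e_{ij}^2 = -1\) for \(1 \le i < j\); by our own hand computation the cross terms combine into a multiple of \(\omega \) times the Plücker relation, which vanishes for a line. Hence \({\varepsilon }{\ell }^{\dagger }{\ell } = \sum c_{0i}^2 + {\varepsilon }\sum c_{ij}^2\), and the first sum equals \((W_1W_2)^2d^2\) exactly. The sketch returns the two sums as the coefficients of \({\varepsilon }^0\) and \({\varepsilon }^1\); the names are hypothetical.

```python
from itertools import combinations

def outer(p, q):
    """Coefficients c_ij (i < j) of p ^ q for points given as (W, X, Y, Z)."""
    return {(i, j): p[i] * q[j] - p[j] * q[i] for i, j in combinations(range(4), 2)}

def eps_ldag_l(l):
    """eps * l^dagger l for a line, as {power of eps: coefficient}.

    Hand computation (an assumption of this sketch): e_0i^2 = -1/eps,
    e_ij^2 = -1 for 1 <= i < j, and the omega cross terms cancel for a line,
    so eps * l^dagger l = sum c_0i^2 + eps * sum c_ij^2.
    """
    c0 = sum(l[0, i] ** 2 for i in (1, 2, 3))
    c1 = sum(l[i, j] ** 2 for i, j in ((1, 2), (1, 3), (2, 3)))
    return {0: c0, 1: c1}
```

For the points (1, 0, 0) with W = 2 and (1, 2, 2) with W = 3, which are a distance \(d = \surd 8\) apart, the \({\varepsilon }^0\) coefficient is \(6^2 \cdot 8 = 288\); the size \(+\surd [{\varepsilon }{\ell }^{\dagger }{\ell }]\) of such a line is therefore approximately \(W_1W_2d\).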

Lemma 5.5

If \({\ell } \in {\mathcal {G}}_4\) is a line, and \(q \in {\mathcal {G}}_4\) is a point, then  if and only if the geometric point represented by q lies on the geometric line represented by \(\ell \).


Proof

If \({\ell } = p_1 \wedge p_2\), then, by Lemma 2.4 and Corollary 2.5, the expression in the statement is zero if and only if the vectors \(p_1\), \(p_2\), q are linearly dependent, which in turn happens if and only if the corresponding geometric points are collinear. \(\square \)

The following question is now considered. If b is a bivector, is it necessarily a line: that is, can it be expressed as the outer product of two vectors?

Any bivector \(b \in {\mathcal {G}}_4\) can be written as

\[ b = e_0v + b_1, \tag{5.1} \]

where v and \(b_1\) are a vector and a bivector respectively which do not involve \(e_0\). A bivector is called finite if it is in standard form and v is non-zero and has leading power zero.

Lemma 5.6

A finite line is a finite bivector.

Proof

A finite line has the form \({\ell } = p_1 \wedge p_2\) where \(p_1\) and \(p_2\) are finite vectors. Suppose that

where \(q_1\), \(q_2\) are vectors independent of \(e_0\), and \((e_0+q_1)\) and \((e_0+q_2)\) represent different geometric points. Then

and it is seen that \(\ell \) is a finite bivector. \(\square \)

Lemma 5.7

If b is a finite bivector, then \({\varepsilon }{b}^{\dagger }b\) is a self-reverse element with a square root.

Proof

Firstly, \({\varepsilon }{b}^{\dagger }b\) is certainly a self-reverse element since it has even grade and is equal to its own reverse.

Writing \(b = e_0v + b_1\) as in (5.1), it follows that

The first summand here is \(v^2\) which is a non-zero \(\varepsilon \)-scalar with zero leading power (from the definition of b as a finite bivector). The other summands have a factor of \(\varepsilon \). So Lemma 3.3 applies and completes the proof. \(\square \)

If b is a finite bivector, then its size is the self-reverse element defined by

\[ +\surd \left[ {\varepsilon }{b}^{\dagger }b \right] , \]

which exists by Lemma 5.7. The square root is a self-reverse element of the form in (3.1) with coefficients \(\alpha \) and \(\beta \). The plus sign means choosing the root whose \(\alpha \) has strictly positive leading coefficient if \(\alpha \) is non-zero; otherwise choosing the root whose \(\beta \) has strictly positive leading coefficient. (If both are zero, then b is itself zero.)

A finite bivector is said to be normalized if it has unit size.

Note that in stating some of the following results the reverse of the bivector b is used. This is to show the symmetry involved. The results could be restated simply in terms of b itself since \({b}^{\dagger } = -b\).

Lemma 5.8

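Suppose that \(b \in {\mathcal {G}}_4\) is a non-zero bivector and that \(p \in {\mathcal {G}}_4\) is a point. Set

\[ q = {\textstyle \frac{1}{2}}{\varepsilon } \left[ {b}^{\dagger }bp - {b}^{\dagger }pb \right] . \]

Then q is a point if and only if \({b}^{\dagger }b\) is an \(\varepsilon \)-scalar.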

Proof

Note that \(\gamma = {b}^{\dagger }b\) is certainly a self-reverse element, and that q has odd grade. The reverse of q is given by

So \({q}^{\dagger }=q\) if and only if \({\gamma }^{*}={\gamma }\), and this is the case if and only if \(\gamma \) is an \(\varepsilon \)-scalar. \(\square \)

Lemma 5.9

If \(b \in {\mathcal {G}}_4\) is a finite bivector with \({b}^{\dagger }b\) being an \(\varepsilon \)-scalar, and \(p \in {\mathcal {G}}_4\) is a finite vector, then \(q = {\textstyle \frac{1}{2}}{\varepsilon } \left[ {b}^{\dagger }bp - {b}^{\dagger }pb \right] \) is a finite point.

Proof

The last lemma shows that q is a vector, so it remains to show that it is finite.

Suppose that

where \(p_1\), v are vectors, \(b_1\) is a bivector and all three are independent of \(e_0\).

Then

and

where x and y are independent of \(e_0\).

Hence \(q \simeq {\alpha }_0 v^2 e_0 + {\textstyle \frac{1}{2}} {\varepsilon } (x-y)\) and the coefficient of \(e_0\) has leading power zero. \(\square \)

Lemma 5.10

Suppose that b is a non-zero bivector for which \({b}^{\dagger }b\) is an \(\varepsilon \)-scalar and that p is a point. Set \(q = {\textstyle \frac{1}{2}}{\varepsilon } [ {b}^{\dagger }bp - {b}^{\dagger }pb ]\). Then  .


Proof

Note that \({b}^{\dagger }=-b\) and that \(b^2 = -{b}^{\dagger }b\) commutes with all elements of \({\mathcal {G}}_4\) since it is an \(\varepsilon \)-scalar. So

\(\square \)

Lemma 5.11

Any non-zero finite bivector \(b \in {\mathcal {G}}_4\) for which \({b}^{\dagger }b\) is an \(\varepsilon \)-scalar is a finite line, that is b is the outer product of two finite vectors.

Proof

Choose two finite points \(p_1\), \(p_2\) and set

which are again two finite points.

Note that if

then

Since \(v_i\) has odd grade and is equal to its own reverse, \(v_i\) is a vector.

Consider the outer product of \(q_2\) and \(q_1\).

Using Lemma 2.9,

Considering part of the second summand here

In the first summand, the inner product of two vectors that appears there is an \(\varepsilon \)-scalar. Thus

Since \(p_1\) and \(p_2\) are open to choice, \(p_1\) can be chosen arbitrarily, and then \(p_2\) chosen such that \(\alpha \) is non-zero. Then \({\varepsilon }b^2\) and \({\varepsilon }{\alpha }\) are non-zero \(\varepsilon \)-scalars with zero leading powers. Thus \(q_1/({\varepsilon }b^2)\) and \(q_2/({\varepsilon }{\alpha })\) are finite vectors whose outer product is b, as required. \(\square \)
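The condition that \({b}^{\dagger }b\) be an \(\varepsilon \)-scalar can be made concrete in coordinates. For a general bivector \(b = \sum c_{ij}e_{ij}\), a hand computation from the multiplication rules (ours, not taken from the paper) gives \({b}^{\dagger }b = {\varepsilon }^{-1}\sum c_{0i}^2 + \sum c_{ij}^2 - 2(c_{01}c_{23} - c_{02}c_{13} + c_{03}c_{12}){\omega }\), so the \(\omega \) component vanishes exactly when the Plücker relation holds. The sketch evaluates this; for example \(e_{01} + e_{23}\) fails the test. Finiteness, also required in Lemma 5.11, is not checked, and the names are hypothetical.

```python
def bdag_b(b):
    """b^dagger b for b = sum c_ij e_ij (keys (i, j), i < j, subscripts 0..3).

    Returns (inv_eps, const, omega) with
    b^dagger b = inv_eps / eps + const + omega * w,   w = e_0123.
    Hand computation (assumption of this sketch) from e_0i^2 = -1/eps,
    e_ij^2 = -1 for i, j >= 1, e_01 e_23 + e_23 e_01 = 2w,
    e_02 e_13 + e_13 e_02 = -2w, e_03 e_12 + e_12 e_03 = 2w; the remaining
    cross terms cancel because those pairs of basis bivectors anticommute.
    """
    def c(i, j):
        return b.get((i, j), 0)

    inv_eps = c(0, 1) ** 2 + c(0, 2) ** 2 + c(0, 3) ** 2
    const = c(1, 2) ** 2 + c(1, 3) ** 2 + c(2, 3) ** 2
    plucker = c(0, 1) * c(2, 3) - c(0, 2) * c(1, 3) + c(0, 3) * c(1, 2)
    return inv_eps, const, -2 * plucker

def bdag_b_is_eps_scalar(b):
    """The criterion of Lemmas 5.8 and 5.11: no omega component."""
    return bdag_b(b)[2] == 0
```

By Theorem 5.13, a finite bivector such as \(e_{01} + e_{23}\), which fails this test, is still equivalent to a line, but is not itself one.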

Two elements of \({\mathcal {G}}_4\) are said to be equivalent if one is the product of the other and a non-zero self-reverse element. Since the self-reverse elements form a field, this is an equivalence relation.

Lemma 5.12

Suppose that b is a finite bivector of size \(\gamma \) (cf. Lemma 5.7). Then \(B={\gamma }^{-1}b\) is a normalized bivector equivalent to b.

Proof

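It is clear that B is equivalent to b and, since \(\gamma \) is self-reverse and commutes with the even-grade element b,

\[ {\varepsilon }{B}^{\dagger }B = {\varepsilon }{b}^{\dagger }{\gamma }^{-1}{\gamma }^{-1}b = {\gamma }^{-2}{\varepsilon }{b}^{\dagger }b = 1. \]

\(\square \)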

Theorem 5.13

Every non-zero finite bivector \(b \in {\mathcal {G}}_4\) is equivalent to a finite line, and, if \({b}^{\dagger }b\) is an \(\varepsilon \)-scalar, then b is itself that line.

Proof

If \({b}^{\dagger }b\) is an \(\varepsilon \)-scalar, then Lemma 5.11 says that b is a finite line. If not, then by the last lemma, b is certainly equivalent to a bivector B such that \({B}^{\dagger }B = {{\varepsilon }^{-1}}\), and B is a finite line by Lemma 5.11. \(\square \)

Now consider four finite points in \({\mathcal {G}}_4\)

where \(\hat{a}\), \(\hat{b}\), \(\hat{c}\), \(\hat{d}\) do not involve \(e_0\). Let A, B, C, D denote the corresponding geometric points.

Then

so that

The first term in the square brackets is essentially the scalar product of two vectors. Lemma 2.6 and Corollary 2.8 can be applied to the second term. The third term can be ignored because of the \(\varepsilon \) multiplier. Hence

where \(\mathrm{{\Delta }}(p,q)\) is used to denote the Euclidean distance between the geometric points corresponding to points \(p, q \in {\mathcal {G}}_4\), \(\alpha \) is the angle between lines AB and CD (when viewed along their common normal), and V is the volume of the tetrahedron formed by the four points in Euclidean space.

Using traditional geometry, the volume of the tetrahedron is given by the following as shown in Lemma 8.1 in the Appendix.

Thus

This gives the inner product of two lines. In particular, taking \(c=a\), \(d=b\) and setting \({\ell } = a \wedge b\), the inner product of a line with itself is given by

so that, using (5.2),

Equations (5.3) and (5.4) prove the following result, which extends the idea of the scalar product of two ordinary vectors. Not only does it give the angle between two lines, it also gives the distance between them. There are related results in other forms of geometric algebra (e.g. [5, 11]).

Theorem 5.14

Suppose that \(a, b, c, d \in {\mathcal {G}}_4\) are finite points such that the bivectors representing the line through a and b and the line through c and d are finite lines. Then

where \(\alpha \) is the angle between the lines (when viewed along their common normal), and h is the distance between them.

\(\square \)
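The quantities \(\alpha \) and h in Theorem 5.14 can also be computed in ordinary Euclidean terms, which gives a simple numerical cross-check for a calculation in \({\mathcal {G}}_4\). The Python sketch below is only such a Euclidean check, not an implementation of \({\mathcal {G}}_4\): it assumes the four points are given as 3D coordinate tuples and that lines AB and CD are not parallel, and its helper names are hypothetical. It also confirms, for one configuration, the tetrahedron relation \(6V = \mathrm{{\Delta }}(a,b)\,\mathrm{{\Delta }}(c,d)\,h\sin \alpha \) of Lemma 8.1.

```python
import math

# Small 3D vector helpers on tuples (illustrative only).
def sub(u, v):
    return tuple(a - b for a, b in zip(u, v))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def norm(u):
    return math.sqrt(dot(u, u))

def angle_and_distance(a, b, c, d):
    """Angle alpha between lines AB and CD and the distance h between them.

    Assumes the lines are not parallel, so that the common normal is unique.
    """
    u, v = sub(b, a), sub(d, c)
    n = cross(u, v)                          # direction of the common normal
    alpha = math.atan2(norm(n), dot(u, v))   # |u||v| sin(alpha) and |u||v| cos(alpha)
    h = abs(dot(sub(c, a), n)) / norm(n)     # component of AC along the unit normal
    return alpha, h

def tetrahedron_volume(a, b, c, d):
    """Volume of the tetrahedron ABCD from the scalar triple product."""
    return abs(dot(sub(b, a), cross(sub(c, a), sub(d, a)))) / 6.0

a, b, c, d = (0, 0, 0), (2, 0, 0), (0, 1, 3), (0, 4, 3)
alpha, h = angle_and_distance(a, b, c, d)
six_v = 6 * tetrahedron_volume(a, b, c, d)
product = norm(sub(b, a)) * norm(sub(d, c)) * h * math.sin(alpha)
print(f"alpha={alpha:.6f}, h={h:.6f}, 6V={six_v:.6f}, d1*d2*h*sin(alpha)={product:.6f}")
```

Using `atan2` on the cross and dot products avoids the loss of accuracy that `acos` suffers near 0 and \(\pi \).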

This result gives more insight into the choice of points made in the proof of Lemma 5.11. These points are the feet of the perpendiculars dropped from the chosen points onto the line represented by the bivector in that result, as the following lemma shows.

Lemma 5.15

If \({\ell } \in {\mathcal {G}}_4\) is a finite line and \(p \in {\mathcal {G}}_4\) is a finite point, then

(i) is the point on \({\ell }\) nearest to p;

(ii) \(({\ell }p{\ell }p - p{\ell }p{\ell })\) is the line through p perpendicular to \(\ell \).

Proof

Set

which has odd grade. Since \({\ell }^2 = -{\ell }^{\dagger }{\ell }\) is an \(\varepsilon \)-scalar (Lemma 5.3), it commutes with all elements of \({\mathcal {G}}_4\), and hence \({q}^{\dagger }=q\). Thus q is indeed a point (vector).

To check that q lies on \(\ell \), note that

and Lemma 5.5 applies.

The line joining p and q is

which is the expression in (ii).

Then

so that, by Theorem 5.14, the lines are perpendicular (and zero distance apart). \(\square \)
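In ordinary vector terms, the point described in Lemma 5.15(i) is the foot of the perpendicular from p to \(\ell \). A minimal Python sketch of that Euclidean computation follows; it assumes, as a hypothetical parametrization not used in the paper, that \(\ell \) is given by two distinct points a and b as 3D tuples.

```python
def sub(u, v):
    return tuple(a - b for a, b in zip(u, v))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def foot_of_perpendicular(p, a, b):
    """Point of the line through a and b nearest to p (a != b assumed)."""
    u = sub(b, a)                        # direction of the line
    t = dot(sub(p, a), u) / dot(u, u)    # parameter of the orthogonal projection of p
    return tuple(ai + t * ui for ai, ui in zip(a, u))

p, a, b = (1.0, 2.0, 3.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
q = foot_of_perpendicular(p, a, b)
# The segment from p to q is perpendicular to the line, as in Lemma 5.15(ii).
print(q, dot(sub(p, q), sub(b, a)))
```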

Lemma 5.16

Suppose that \({\Pi }\) is a plane and \(\ell \) and m are two lines within it. Then  is their point of intersection.

Proof

First note that q is an element of odd grade and is equal to its own reverse. Hence q is a point.

Since \(\ell \) is the outer product of two points in \({\Pi }\), Lemma 4.2 says that \({\Pi }\) commutes with \(\ell \). Hence, using Lemmas 2.3 and 2.1,

So, by Lemma 5.5, point q lies on line \(\ell \). Similarly, q also lies on m, and so q is the point of intersection of the lines. \(\square \)
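For comparison with Lemma 5.16, the intersection of two coplanar lines can also be found with ordinary vectors. The sketch below assumes that each line is given by a point and a direction (hypothetical inputs, not elements of \({\mathcal {G}}_4\)) and that the lines are coplanar and not parallel; it uses the standard vector solution obtained by taking the cross product of \(a + s\,u = c + t\,v\) with v.

```python
def sub(u, v):
    return tuple(a - b for a, b in zip(u, v))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def intersect_coplanar(a, u, c, v):
    """Intersection of lines a + s*u and c + t*v, assumed coplanar and not parallel."""
    n = cross(u, v)
    # From s (u x v) = (c - a) x v, project onto u x v to recover s.
    s = dot(cross(sub(c, a), v), n) / dot(n, n)
    return tuple(ai + s * ui for ai, ui in zip(a, u))

# The two diagonals of the unit square in the xy-plane meet at its centre.
print(intersect_coplanar((0, 0, 0), (1, 1, 0), (1, 0, 0), (-1, 1, 0)))
```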

Finally in this section, suppose that \({\ell }_1\) and \({\ell }_2\) are two lines, and consider their outer product. It certainly has even grade and is equal to minus its own reverse. Hence it has grade 2, and so it is equivalent to a line. This raises the question: which line is it? The following result provides the answer.

Theorem 5.17

The outer product of two distinct lines is equivalent to the line which is their common normal.

Proof

As before, let \({\ell }_1\) and \({\ell }_2\) be the lines. Let

where \(\gamma \) is the appropriate self-reverse element, be the line equivalent to their outer product.

Since \(\gamma \) commutes with all even-grade elements, it is seen that

using Lemmas 2.3 and 2.1. Theorem 5.14 now shows that line m is normal to line \({\ell }_1\). Similarly, it is normal to line \({\ell }_2\) and hence is the required common normal. \(\square \)
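Theorem 5.17 identifies the outer product of two lines with their common normal. Its Euclidean counterpart is the segment joining the closest points of the two lines, and the Python sketch below computes its feet P and Q by minimizing the squared distance between a point of each line. It assumes that each line is given by a point and a direction and that the lines are not parallel; the names are illustrative only.

```python
import math

def sub(u, v):
    return tuple(a - b for a, b in zip(u, v))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    return math.sqrt(dot(u, u))

def common_normal(a, u, c, v):
    """Feet P, Q of the common perpendicular of lines a + s*u and c + t*v.

    Setting the derivatives of |a + s u - c - t v|^2 with respect to s and t
    to zero gives a 2x2 linear system; the lines must not be parallel.
    """
    w0 = sub(a, c)
    A, B, C = dot(u, u), dot(u, v), dot(v, v)
    D, E = dot(u, w0), dot(v, w0)
    denom = A * C - B * B                # zero exactly when u and v are parallel
    s = (B * E - C * D) / denom
    t = (A * E - B * D) / denom
    P = tuple(ai + s * ui for ai, ui in zip(a, u))
    Q = tuple(ci + t * vi for ci, vi in zip(c, v))
    return P, Q

P, Q = common_normal((1, 2, 3), (1, 1, 0), (0, -1, 4), (0, 1, 2))
print(P, Q, norm(sub(Q, P)))
```

The length \(|PQ|\) is the distance h that appears in Theorem 5.14.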

6 Geometry

This section shows how the geometric algebra \({\mathcal {G}}_4\) can be applied to geometrical problems. It makes use of the fact that \({\mathcal {G}}_4\) provides both a model of projective space (and hence of Euclidean space) and the ability to generate rigid-body transforms. These ideas are used to prove Desargues’s theorem and Fermat’s triangle theorem [4]. Different proofs using other forms of geometric algebra are given in [15, 32].

Some preliminary ideas and results about \({\mathcal {G}}_4\) and transforms of Euclidean space are required for the Fermat result. These are dealt with first and are discussed in the case of two-dimensional space. They are particular cases of more general results [24].

Any element \(S \in {\mathcal {G}}_4\) defines a map \(F_S\) of \({\mathcal {G}}_4\) to itself given by

If S has even grade and x is a vector, then \(F_S(x)\) is an element of odd grade equal to its own reverse, and so it is also a vector. Hence \(F_S\) generates a transform of Euclidean space. Further, this transform is linear [24].

There are two particular cases of importance. The even-grade elements

generate transforms which are, respectively, a rotation through angle \(\phi \) about the z-axis (or, equivalently, about the origin O when considered as a transform of the plane) and a translation in the plane through distance u in the x-direction and v in the y-direction. (It is straightforward to check the actions of these elements by multiplying out the appropriate products.) Further, these elements are normalized in the sense that

A rotation about the Euclidean point Q corresponding to the vector \(e_0 + ue_1 + ve_2\) can be formed by translating this point to the origin, performing the rotation about the origin, and then translating back again. Thus the even-grade element which generates such a rotation is \(R_Q = {T}^{\dagger }R_OT\) and multiplying out gives the following result.

Lemma 6.1

The even-grade element

lies in the subalgebra of \({\mathcal {G}}_4\) generated by \(e_0\), \(e_1\), \(e_2\). It generates a transform which is an anticlockwise rotation of two-dimensional Euclidean space through angle \(\phi \) about the point Q with coordinates (u, v). Further \({R_Q}^{\dagger }R_Q = 1 = R_Q{R_Q}^{\dagger }\).

\(\square \)
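The construction \(R_Q = {T}^{\dagger }R_OT\) (translate Q to the origin, rotate about O, translate back) can be mirrored with ordinary maps of the plane. This sketch represents points of the plane by Python complex numbers rather than by elements of \({\mathcal {G}}_4\); it illustrates only the composition of the three transforms, not the algebra elements that generate them.

```python
import cmath
import math

def translate(z, w):
    """Translate the plane point z by the vector w (both complex numbers)."""
    return z + w

def rotate_about_origin(z, phi):
    """Anticlockwise rotation of z through angle phi about the origin O."""
    return z * cmath.exp(1j * phi)

def rotate_about_point(z, q, phi):
    """Rotation about q composed as in the text: move q to O, rotate about O, move back."""
    return translate(rotate_about_origin(translate(z, -q), phi), q)

q = complex(1.0, 2.0)   # the point Q with coordinates (u, v) = (1, 2)
w = rotate_about_point(complex(3.0, 2.0), q, math.pi / 2)
print(w)
```

As expected of a rotation about Q, the composite fixes Q and preserves distances from it.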

The proofs of the two theorems are now given.

6.1 Desargues’s Theorem

Desargues’s theorem is stated below and is illustrated in Fig. 1. Note that the points are not required to be coplanar. In the version given here, it is assumed that the six points \(A_1\), \(A_2\), \(B_1\), \(B_2\), \(C_1\), \(C_2\) are distinct; this is the version usually illustrated in textbooks. The result holds in some other cases (possibly trivially), and the proof given here can be modified appropriately.

Theorem 6.2

(Desargues). Suppose that I is a point in three-dimensional space and that \(A_1\), \(A_2\), \(B_1\), \(B_2\), \(C_1\), \(C_2\) are six distinct points, such that \(IA_1A_2\), \(IB_1B_2\), \(IC_1C_2\) are three distinct straight lines. Then I, \(B_1\), \(B_2\), \(C_1\), \(C_2\) are coplanar and hence lines \(B_1C_1\) and \(B_2C_2\) intersect. Let X denote this intersection. Similarly, let Y be the intersection of lines \(C_1A_1\) and \(C_2A_2\), and let Z be the intersection of lines \(A_1B_1\) and \(A_2B_2\). Then X, Y, Z are collinear points.

Proof

Regard the points indicated in the theorem as being normalized vectors in \({\mathcal {G}}_4\). The collinearity of points I, \(A_1\), \(A_2\) means that \(A_2\) is a linear combination of I and \(A_1\). Similar considerations apply to the other two original lines and so

where the coefficients are \(\varepsilon \)-scalars. (If I coincides with one of the other six points, then assume, without loss of generality, that this point has subscript 2. The corresponding expression above then remains valid, with the coefficient of I being unity and the other coefficient zero.)

The lines \(B_1C_1\) and \(B_2C_2\) are represented by the following elements

and

The two lines \(IB_1B_2\) and \(IC_1C_2\) form a plane which can be represented by the triproduct

which is non-zero as the lines are distinct. It follows that

Since points \(B_2\) and \(C_2\) commute with \({\Pi }\) (Lemma 4.2), and \({\varepsilon }{\Pi }^2\) is an \(\varepsilon \)-scalar, Lemma 5.16 yields

By cyclic symmetry, the following also hold

Hence

and then

Thus the points X, Y, Z are linearly dependent and so lie on a line (Lemma 4.1). \(\square \)
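The statement of Theorem 6.2 can be spot-checked numerically with ordinary coordinates. The Python sketch below is a numerical illustration, not a proof, and does not use \({\mathcal {G}}_4\): it places \(A_2\), \(B_2\), \(C_2\) on the lines \(IA_1\), \(IB_1\), \(IC_1\) using hypothetical scale factors, computes X, Y, Z as intersections of coplanar lines, and measures how far X, Y, Z are from being collinear. The coordinates are arbitrary choices; distinct scale factors keep each pair of lines from being parallel.

```python
import math

def sub(u, v):
    return tuple(a - b for a, b in zip(u, v))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def norm(u):
    return math.sqrt(dot(u, u))

def point_on_line(i, a, k):
    """The point i + k (a - i) on the line through i and a."""
    return tuple(ic + k * (ac - ic) for ic, ac in zip(i, a))

def meet(p1, p2, q1, q2):
    """Intersection of the coplanar, non-parallel lines p1p2 and q1q2."""
    u, v = sub(p2, p1), sub(q2, q1)
    n = cross(u, v)
    s = dot(cross(sub(q1, p1), v), n) / dot(n, n)
    return tuple(pc + s * uc for pc, uc in zip(p1, u))

def collinearity_defect(x, y, z):
    """Sine of the angle YXZ: zero exactly when distinct points X, Y, Z are collinear."""
    xy, xz = sub(y, x), sub(z, x)
    return norm(cross(xy, xz)) / (norm(xy) * norm(xz))

def desargues(i, a1, b1, c1, ka, kb, kc):
    """Build a second triangle in perspective from I and return X, Y, Z."""
    a2, b2, c2 = point_on_line(i, a1, ka), point_on_line(i, b1, kb), point_on_line(i, c1, kc)
    x = meet(b1, c1, b2, c2)
    y = meet(c1, a1, c2, a2)
    z = meet(a1, b1, a2, b2)
    return x, y, z

X, Y, Z = desargues((0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1), 2.0, 3.0, 4.0)
print(X, Y, Z, collinearity_defect(X, Y, Z))
```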

6.2 Fermat’s Triangle Theorem

Fermat’s triangle theorem relates to the construction shown in Fig. 2. Here A, B, C are three non-collinear points in the plane. On each side of triangle ABC, an equilateral triangle is constructed lying outside triangle ABC. The additional vertices are the points X, Y, Z, with X on the side opposite A, Y on the side opposite B, and Z on the side opposite C.

Theorem 6.3

(Fermat). The lines AX, BY, CZ have the same length and they intersect in a single point, F, called the Fermat point.

Proof

Let \(A, B, C \in {\mathcal {G}}_4\) be normalized vectors representing the vertices of the original triangle. For simplicity, assume that these vertices lie in the xy-plane. This is the plane \({\Pi } = e_{012}\), and then A, B, C depend only on \(e_0\), \(e_1\), \(e_2\).

Let \(R_A, R_B, R_C \in {\mathcal {G}}_4\) be even-grade elements which generate anticlockwise rotations through angle \({\pi }/3\) about the points A, B, C respectively. As in Lemma 6.1, \(R_A\), \(R_B\), \(R_C\) lie in the subalgebra generated by \(e_0\), \(e_1\), \(e_2\),

and

The lines AX, BY, CZ are represented by the following even-grade elements.

So

and similarly

Then, from (5.2),

Hence \({\Vert }{x}{\Vert } = {\Vert }{y}{\Vert } = {\Vert }{z}{\Vert }\) and the lengths of the three lines are equal by (5.4).

Set \(s = x+y+z\). It is clear that s is an even-grade element. As s has no component of \(e_3\), \({s}^{\dagger }s\) is an \(\varepsilon \)-scalar. Hence, by Theorem 5.13, s is a line, assuming it is non-zero. The aim is now to deduce that \(s=0\) by showing that, if it is non-zero, then A, B, C all lie on it.

Consider

The repetition in the first triproduct means it is zero (Corollary 2.1). Consider the effect of rotation \(R_A\) on the third triproduct:

The triproduct \(\left[ C , Z , A \right] \) represents the plane generated by C, Z, A and this is \({\Pi }\). Hence \(\left[ C , Z , A \right] \) is an \(\varepsilon \)-scalar multiple of \({\Pi }\) (Lemma 4.1), and it commutes with \(R_A\) (Lemma 4.2) and so \(\left[ C , Z , A \right] \;=\; - \left[ B , Y , A \right] \).

So it follows that the expression considered above is zero, and hence point A lies on line s. Similarly, the corresponding expressions with B and with C in place of A are also zero, so that B and C also lie on s. Since A, B, C are not collinear, the assumption that s is a non-zero line is invalid, and so \(s = x + y + z = 0\).

It follows that

and that

Now Lemma 5.16 shows that the lines x, y, z have a common point of intersection. \(\square \)
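Theorem 6.3 can likewise be checked numerically. This sketch uses complex numbers for points of the plane and assumes the usual construction in which X is the new vertex of the equilateral triangle on BC (and similarly Y on CA and Z on AB). It returns the three lengths together with a measure of how far the point F, where AX meets BY, is from line CZ. An orientation test keeps the equilateral triangles outside ABC whichever way round the vertices are listed. It is a numerical illustration, not the paper's proof, and the defect measure assumes that F differs from C.

```python
import cmath

def cross2(u, v):
    """z-component of the cross product of plane vectors u, v given as complex numbers."""
    return (u.conjugate() * v).imag

def outer_apex(p, q, anticlockwise):
    """Apex of the equilateral triangle on edge p -> q that lies outside the triangle.

    For an anticlockwise triangle the outside of each edge is on its right, so the
    edge vector is turned clockwise through pi/3 about p; otherwise anticlockwise.
    """
    sign = -1 if anticlockwise else 1
    return p + (q - p) * cmath.exp(sign * 1j * cmath.pi / 3)

def meet2(a, x, b, y):
    """Intersection of the non-parallel plane lines AX and BY."""
    u, v = x - a, y - b
    s = cross2(b - a, v) / cross2(u, v)
    return a + s * u

def fermat_check(A, B, C):
    """Return (|AX|, |BY|, |CZ|), the point F where AX meets BY, and F's defect from CZ."""
    anticlockwise = cross2(B - A, C - A) > 0
    X = outer_apex(B, C, anticlockwise)   # new vertex on side BC, opposite A
    Y = outer_apex(C, A, anticlockwise)   # new vertex on side CA, opposite B
    Z = outer_apex(A, B, anticlockwise)   # new vertex on side AB, opposite C
    F = meet2(A, X, B, Y)
    # Sine of the angle between CF and CZ; zero when F lies on line CZ.
    defect = cross2(F - C, Z - C) / (abs(F - C) * abs(Z - C))
    return (abs(X - A), abs(Y - B), abs(Z - C)), F, defect

lengths, F, defect = fermat_check(0j, 4 + 0j, 1 + 3j)
print(lengths, F, defect)
```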

7 Conclusions

The ideas of geometric algebra grew out of a desire to be able to represent, within a single environment, geometric entities and the transforms that can act upon them. Several versions of such algebras have been proposed.

A particular formulation, \({\mathcal {G}}_4\), has been discussed here. Unlike other formulations, it represents points, lines and planes as elements of grades 1, 2 and 3 respectively. This is natural in the sense that the grade reflects the dimension of the entity concerned. To achieve this correspondence, the square of one of the basis vectors of the algebra has to be treated as infinite. It has been seen that this can be achieved by defining the square to be the reciprocal of a symbol, \(\varepsilon \), representing a small positive quantity. An alternative view is to regard the scalars of the algebra as members of the field of formal power series in \(\varepsilon \).

This has been seen to lead to a significant property of \({\mathcal {G}}_4\), namely that its self-reverse elements form a field. Again, this differs from other formulations. It means that an equivalence relation between elements of grade 2 can be defined. While every geometric line can be represented by an element of grade 2, not every grade 2 element represents a line directly. However, it has also been shown that each geometric line corresponds to a unique equivalence class of grade 2 elements, and vice versa. So the result of evaluating any expression in \({\mathcal {G}}_4\) has a geometric interpretation. For example, it is immediate that the outer product of two distinct lines, which certainly has grade 2, must correspond to another line, namely the line associated with the appropriate equivalence class. Indeed, this new line has been shown to be the common normal to the original two lines.
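The remark that the scalars may be regarded as formal power series in \(\varepsilon \) can be made concrete with a small sketch. The Python class below is an assumption of this illustration, not the paper's construction: it truncates each series to a fixed number N of terms and allows finitely many negative powers (formal Laurent series), so that \({\varepsilon }^{-1}\), the square of the infinite basis vector, is representable. Every non-zero series then has a multiplicative inverse, computed term by term from its leading coefficient.

```python
from fractions import Fraction

N = 6  # number of coefficients retained; an arbitrary truncation order for this sketch


class EpsSeries:
    """Truncated formal Laurent series c[0]*eps**v + c[1]*eps**(v+1) + ... (N terms)."""

    def __init__(self, v, coeffs):
        cs = [Fraction(x) for x in coeffs]
        while cs and cs[0] == 0:        # shift so that the leading coefficient is non-zero
            cs.pop(0)
            v += 1
        self.v = v if cs else 0         # the zero series is stored with v = 0
        self.c = (cs + [Fraction(0)] * N)[:N]

    def is_zero(self):
        return all(x == 0 for x in self.c)

    def __mul__(self, other):
        # Cauchy product of the coefficient lists, truncated to N terms.
        prod = [sum(self.c[k] * other.c[n - k] for k in range(n + 1)) for n in range(N)]
        return EpsSeries(self.v + other.v, prod)

    def inverse(self):
        """Multiplicative inverse, which exists for every non-zero series."""
        if self.is_zero():
            raise ZeroDivisionError("the zero series has no inverse")
        c0 = self.c[0]
        b = [1 / c0]
        for n in range(1, N):
            b.append(-sum(self.c[k] * b[n - k] for k in range(1, n + 1)) / c0)
        return EpsSeries(-self.v, b)

    def __eq__(self, other):
        return self.v == other.v and self.c == other.c

    def __repr__(self):
        terms = [f"({x})*eps^{self.v + k}" for k, x in enumerate(self.c) if x != 0]
        return " + ".join(terms) or "0"


eps = EpsSeries(1, [1])
print(eps.inverse())                       # eps^(-1)
print(EpsSeries(0, [1, 1]).inverse())      # 1 - eps + eps^2 - ... (truncated)
```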

The ability of \({\mathcal {G}}_4\) to handle combinations of geometric objects has been demonstrated by its use in obtaining proofs of Desargues’s theorem and Fermat’s triangle theorem.

References

Browne, J.: Grassmann Algebra-Volume 1: Foundations. Barnard Publishing, Eltham (2012)

Cibura, C., Dorst, L.: Determining conformal transformations in \({\mathbb{R}}^n\) from minimal correspondence data. Math. Methods Appl. Sci. 34(16), 2031–2046 (2011)

Cripps, R.J., Mullineux, G.: Using geometric algebra to represent and interpolate tool poses. Int. J. Comp. Int. Manuf. 29(4), 406–423 (2016)

Coxeter, H.S.M.: Introduction to Geometry, 2nd edn. Wiley, New York (1989)

Du, J., Goldman, R., Mann, S.: Modelling 3D geometry in the Clifford algebra \(R(4,4)\). Adv. Appl. Clifford Algebr. 27(5), 3039–3061 (2017)

Dorst, L., Valkenburg, R.: Square root and logarithm of rotors in 3D conformal geometric algebra using polar decomposition. In: Dorst, L., Lasenby, J. (eds.) Guide to Geometric Algebra in Practice, pp. 81–104. Springer, London (2011)

Dorst, L., Fontijne, D., Mann, S.: Geometric Algebra for Computer Science: An Object-oriented Approach to Geometry. Morgan Kaufmann, Amsterdam (2007)

Epstein, M.: Affine \(n\)-dimensional statics, affine screws and Grassmann algebra. Mech. Res. Commun. 94, 74–79 (2018)

Goldman, R.: Understanding quaternions. Graph. Models 73(1), 21–49 (2011)

Gunn, C.: On the homogeneous model of Euclidean geometry. In: Dorst, L., Lasenby, J. (eds.) Guide to Geometric Algebra in Practice, pp. 297–327. Springer, London (2011)

Gunn, C.G., De Keninck, S.: Geometric algebra and computer graphics. In: Proceedings of ACM SIGGRAPH 2019 Courses, Los Angeles (2019)

Gunn, C.G.: Doing Euclidean plane geometry using projective geometric algebra. Adv. Appl. Clifford Algebr. 27, 1203–1232 (2019)

Gunn, C.G.: Projective geometric algebra: a new framework for doing Euclidean geometry. arXiv:1901.05873v1 (2019)

González Calvet, R.: Treatise of Plane Geometry through Geometric Algebra. TIMSAC, Cerdanyola del Vallès (2007)

González Calvet, R.: Application of geometric algebra to plane and space geometry. In: Early Proceedings of the Alterman Conference 2016, pp. 15–42. https://re.public.polimi.it/retrieve/handle/11311/1003587/153364/161008early_proceedings_alterman_conference.pdf (2016). Accessed Jun 2018

Hadfield, H., Lasenby, A.: Direct linear interpolation of geometric objects in conformal geometric algebra. Adv. Appl. Clifford Algebr. 29(4), 1–25 (2019)

Hou, X., Chuan, M., Wang, Z., Yuan, J.: Adaptive pose and inertial parameters estimation of free-floating tumbling space objects using dual vector quaternions. Adv. Mech. Eng. 9(10), 1–17 (2017)

Leclercq, G., Lefèvre, P., Blohm, G.: 3D kinematics using dual quaternions: theory and applications in neuroscience. Front. Behav. Neurosci. 7(7), 1–25 (2013)

Lengyel, E.: Fundamentals of Grassmann algebra. In: Presentation to Games Developers Conference, San Francisco (2012). http://www.terathon.com/gdc12 lengyel.pdf. Accessed March 2018

Lengyel, E.: Foundations of Game Engine Development. Terathon Software LLC, Lincoln (2016)

Lasenby, A.: Rigid body dynamics in a constant curvature space and the ‘1D-up’ approach to conformal geometric algebra. In: Dorst, L., Lasenby, J. (eds.) Guide to Geometric Algebra in Practice, pp. 371–389. Springer, London (2011)

Lang, M., Kleinsteuber, M., Hirche, S.: Gaussian process for 6-DoF rigid motions. Autonom. Robots 42(6), 1151–1167 (2018)

Mullineux, G.: Modeling spatial displacements using Clifford algebra. Trans. ASME J. Mech. Des. 126, 420–424 (2004)

Mullineux, G., Simpson, L.C.: Rigid-body transforms using symbolic infinitesimals. In: Dorst, L., Lasenby, J. (eds.) Guide to Geometric Algebra in Practice, pp. 353–368. Springer, London (2011)

Macdonald, A.: Linear and Geometric Algebra. Luther College, Decorah (2010)

McCarthy, J.M., Soh, G.S.: Geometric Design of Linkages. Springer, New York (2011)

Macdonald, A.: A survey of geometric algebra and geometric calculus. Adv. Appl. Clifford Algebr. 27(1), 853–891 (2017)

Pottmann, H., Wallner, J.: Computational Line Geometry. Springer, Berlin (2001)

Röschel, O.: Rational motion design—a survey. Comput. Aid. Design 30(3), 169–178 (1998)

Selig, J.M.: Clifford algebra of points, lines and planes. Robotica 18(5), 545–556 (2000)

Selig, J.M.: Geometric Fundamentals of Robotics, 2nd edn. Springer, New York (2005)

Sobczyk, G.: Universal geometric algebra. In: Bayro Corrochano, E., Sobczyk, G. (eds.) Geometric Algebra with Applications in Science and Engineering, pp. 18–41. Birkhäuser, Boston (2001)

Vince, J.: Geometric Algebra for Computer Graphics. Springer, London (2008)

Acknowledgements

The authors are very grateful to the reviewers for their comments and suggestions.

Ethics declarations

Conflict of interest

On behalf of all the authors, the corresponding author states that there is no conflict of interest.

Additional information

Communicated by Dietmar Hildenbrand

Appendix: Volume of a Tetrahedron

This appendix provides a formula for the volume of a tetrahedron. It works in conventional Euclidean space and uses ordinary position vectors, denoted by bold symbols.

Lemma 8.1

Suppose there are two line segments in 3D space of lengths \(d_1\) and \(d_2\). Let h be the length of their common normal and \(\alpha \) the angle between the segments (when viewed along the line of the common normal). The four end points of the segments form a tetrahedron. The volume of the tetrahedron is \(V = \tfrac{1}{6}\, d_1 d_2 h \sin \alpha \).

Proof

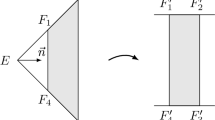

The situation is shown in Fig. 3. On the left, the figure shows a general view; on the right is a view along the common normal to the two segments.

Let the end points of one segment be \(\mathbf{p}_1\) and \(\mathbf{p}_2\), and those of the other \(\mathbf{q}_1\) and \(\mathbf{q}_2\). Suppose that t is a parameter which goes from 0 to 1 along the common normal. Then a planar slice perpendicular to the common normal cuts the four sides of the tetrahedron that are not the original segments in the points \((1-t)\mathbf{p}_i + t\mathbf{q}_j\), for \(i, j = 1, 2\).

Taking differences, it is seen that the slice is a parallelogram and the following are vectors along its sides: \(t(\mathbf{q}_2-\mathbf{q}_1)\) and \((1-t)(\mathbf{p}_2-\mathbf{p}_1)\).

The vector product of these gives the following vector along the common normal, whose magnitude is the area of the parallelogram: \(t(1-t)\,(\mathbf{q}_2-\mathbf{q}_1)\times (\mathbf{p}_2-\mathbf{p}_1) = t(1-t)\,d_1 d_2 \sin \alpha \,\mathbf{n}\),

where \(\mathbf{n}\) is a unit vector along the common normal. If the slice is given a thickness \((h \, dt)\), then the element of volume is \(t(1-t)\,d_1 d_2 h \sin \alpha \, dt\).

Integrating this between 0 and 1 gives the required result. \(\square \)
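The slicing argument in the proof of Lemma 8.1 can be reproduced numerically. The Python sketch below computes the parallelogram area at parameter t from the interpolated points, multiplies by the slice thickness \(h\,dt\), and integrates over [0, 1] with Simpson's rule (a choice made here; it is exact, up to rounding, for the quadratic slice area). The result is compared with the volume from the scalar triple product. The coordinates are arbitrary, and the segments are assumed not to be parallel.

```python
import math

def sub(u, v):
    return tuple(a - b for a, b in zip(u, v))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def norm(u):
    return math.sqrt(dot(u, u))

def slice_area(p1, p2, q1, q2, t):
    """Area of the parallelogram cut from the tetrahedron at parameter t."""
    side1 = tuple(t * x for x in sub(q2, q1))          # t (q2 - q1)
    side2 = tuple((1 - t) * x for x in sub(p2, p1))    # (1 - t)(p2 - p1)
    return norm(cross(side1, side2))

def volume_by_slicing(p1, p2, q1, q2, steps=10):
    """Integrate slice area * h dt over [0, 1] with Simpson's rule (steps must be even)."""
    n = cross(sub(p2, p1), sub(q2, q1))
    h = abs(dot(sub(q1, p1), n)) / norm(n)             # length of the common normal
    dt = 1.0 / steps
    total = 0.0
    for k in range(steps + 1):
        weight = 1 if k in (0, steps) else (4 if k % 2 else 2)
        total += weight * slice_area(p1, p2, q1, q2, k * dt)
    return total * dt / 3 * h

def volume_by_triple_product(p1, p2, q1, q2):
    return abs(dot(sub(p2, p1), cross(sub(q1, p1), sub(q2, p1)))) / 6.0

seg = ((0, 0, 0), (2, 0, 0), (0, 1, 3), (0, 4, 3))
print(volume_by_slicing(*seg), volume_by_triple_product(*seg))
```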

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cripps, R.J., Cross, B. & Mullineux, G. Self-Reverse Elements and Lines in an Algebra for 3D Space. Adv. Appl. Clifford Algebras 30, 50 (2020). https://doi.org/10.1007/s00006-020-01075-7

DOI: https://doi.org/10.1007/s00006-020-01075-7