Abstract

Some architects use design processes that conceive each architecture as a model to be optimized with tools borrowed from various disciplines. Therefore, modelling in architecture may assume the role of an interpretative tool for complex phenomena, capable of guiding a design towards its best possible configuration. Today, we can work with discrete data with extreme efficiency. Consequently, we can also create models in architectural design with a new approach that aims to contain and generate large amounts of information. It is precisely by analyzing that information, with various tools of applied mathematics and through the strength of correlation, that modelling may interpret complex phenomena. In both approaches, the search for optimization may shape the purpose of modelling in architectural design. This contribution addresses the evolution and role of optimization in architectural design with an interdisciplinary approach.

Premises

According to its Latin origin, optimus, as well as its first uses in biology in the nineteenth century, the optimum of a system is its most desirable configuration, while the term optimization includes the processes and techniques used to search for it. A broad and clear definition of optimization in architectural design comes from its mathematical aspects: in extreme synthesis, optimization can be traced to the search for the maximum (or minimum) points of certain functions associated with a design’s performance. When applied in architectural design, this process may focus on the measurable properties of architecture and on what we decide to optimize, as will be specified in this paper. These mechanisms, with different levels of pervasiveness, are present in several approaches to architectural design that strive to obtain the most out of available resources. Optimization in architectural design must thus be addressed in an interdisciplinary way, including mathematics, computer science, structural design, simulation, calculus and more. For these reasons, the use of optimizations in architectural design should be supported by a deep knowledge of their processes. This paper proposes to analyse the cultural implications in parallel with different technical aspects.

In architecture, it is rarely possible to explicitly identify a design’s fitness function, and therefore it is not possible to study its gradient analytically in a search for its maximum points, as would be done in a strictly mathematical context. The act of modelling helps overcome this difficulty in different ways.

This paper, in giving a systemic view of the evolution of optimizations in architecture, proposes to divide them into two approaches, one with the aspiration of models able to unfold complex problems and a second one which, instead, arises from the intuition to model a system by using its descriptive properties and its results. Both approaches actually coexist today.

The first approach is captured by George Box’s quote, “all models are wrong, but some are useful” (Box 1979), and derives from the search for methods consistently able to interpret the world around us. The second approach is recognized by Chris Anderson (2008) in an article that has been particularly successful even among non-experts, in which, albeit with an exaggerated and provocative attitude (Norvig 2009), he acknowledges how in different fields, from predictive advertising to biology, models may be built from data instead of being derived. It follows from this latter approach that the key to success in optimization may lie in having large amounts of data available to describe a phenomenon and using them to model it, as done in many contemporary approaches to optimization in architectural design recalled in this paper.

While the first approach aims to understand the hidden mechanisms that bind the descriptive elements of a phenomenon through processes based on the scientific method, the second approach is satisfied with just grasping the apparently superficial correlation of the descriptive data. In fact, with the second approach, we could analyze large amounts of data without formulating any hypothesis to verify. In particular, these methods perform evaluations that are more probabilistic than deterministic (Gambetta 2018). As will be shown, these latter methods are based on the generation, through automated processes, of large quantities of information to be analyzed with innovative tools of applied mathematics where “correlation supersedes causation” (Anderson 2008).

This paper only apparently focuses on a narrow niche of architectural design interested in the search for scientific and epistemological validation of its actions through tools borrowed from other disciplines, in which the control of the design process and its direction towards the best use of its resources is fundamental. In fact, if we consider an architectural project as the product of a political and economic system, it is almost impossible to untie it from some sort of quantitative validation procedure. This paper is not intended to be a literature review of the optimization methods used or usable in architecture because an excessively technical approach would not grasp the changes this particular culture imposes on the design process. Similarly, a study based on just the formal results of optimizations could certainly help to trace and connect the tools designers used to shape their intuitions, but it would not go deep enough in the cases where optimization went from being a technical tool to becoming the content of architecture itself. Today the relevance of optimization processes and their different levels of pervasiveness arises from a new technical drift of architecture as a discipline (Purini 2018; Canestrino 2021).

This research is founded on various existing sources, such as monographs and scientific articles, which describe different aspects of optimization processes used or usable in architectural design, based on direct observation, archival research, patents and direct testimonies of architects and engineers. This article adds elements of innovation by associating epistemological and cultural considerations derived from the study of those sources interested in the more technical aspects of optimization processes. The majority of case studies mentioned in this contribution have been identified by looking at the results of the research focused on the origin of parametric architecture, or more generally of Computer Aided Architectural Design (CAAD), in which for some designers, due to its intrinsic logic, optimization is an indispensable tool to explore the vast sea of possible outputs of architectural design. Existing literature interested in the implications of these design methods in the architectural design processes is limited but essential to support the cultural framework proposed by this paper, which is its main element of novelty.

All Models are Wrong, but Some are Useful

Several authors recognize in the design of shape-resistant structures the seed of optimization in architectural design (Tedeschi 2014; Burry and Burry 2010; Addis 2014). This position is widely accepted today and offers an interesting view on what Sigfried Giedion (1888–1968) called “the schism between architecture and technology” (Giedion 1982: 146). The design processes of these shape-resistant forms contain elements of optimization when a particular knowledge, process or tool permits the identification of the most desirable shape for each initial geometric and static condition, where desirable often means capable of resisting a particular stress condition. These processes, as will be shown, consisted of graphical statical methods, physical modelling and mathematical descriptions of architectural forms. However, it remains evident that this particular type of structure has also always been designed with a more intuitive and instinctive process guided by the fascination of form and beauty, as in the case of Oscar Niemeyer’s concrete surfaces (Lucente 2017).

Identifying the modelling processes capable not only of verifying a shape but also of designing it is fundamental. The origin of modern form-finding is certainly traceable to Robert Hooke (1635–1703), who, thanks to the tools of graphical statics, found design processes wherein optimization consisted of identifying a two-dimensional form for an arch in a state of prevalent compression. This approach is substantially different from the use of models to validate a design, which is traced to the writings of Andrea Palladio (1508–1580) and Claude Perrault (1613–1688), who spoke of models of timber bridges subjected to load tests, and to the research of Benjamin Baker (1840–1907) concerning measurements on scaled models of dams to validate analytical calculations (Addis 2013).

With form-finding, the meaning of optimization expanded in terms of both tools and purposes. The contribution of designers such as Antoni Gaudí (1852–1926), Frei Paul Otto (1925–2015), Eduardo Torroja (1899–1961), Pier Luigi Nervi (1891–1978), Heinz Isler (1926–2009) and others to optimization in architecture lies in the new types of optimum that, through various processes, can be searched for and given to architectural morphology. In addition to the natural expansion of the two-dimensional catenary toward three-dimensionality through famous hanging models, physical models can pursue processes wherein optimization means obtaining a shape in a state of prevalent tension (useful for the design of tensile structures), as well as surfaces with constant tension through soap films or minimal surfaces (Emmer 2014). These are fundamental to make and then optimize different structural concepts in terms of used material, construction processes, prefabrication, maximum span and architectural space (although the latter on a qualitative basis, as it is founded on many unmeasurable aspects). In these experiences, the design act could be compared to that of a craftsman with the appropriate technical skills who follows an iterative path until he is satisfied with his intuition. This vision is confirmed by a series of well-documented design processes, such as Otto’s Bundesgartenschau Multihalle (Addis 2013) (Fig. 1) or Cullinan Studio’s Downland Gridshell (Harris et al. 2003).

These design processes also tend to see architecture as a problem of shape and morphology, in which design, in particular the structural one, cannot be solved through notions that have developed slowly over time. The extraordinariness and innovations of these architectures led to their being designed as prototypes of themselves, in which optimization acts as a guide toward the right decision through an almost scientific validation.

These projects aim to achieve the absolute optimum in terms of shape and structural behaviour. These optimums are granted for an initial system of parameters. However, a form-finding optimization based on physical models does not guarantee that a particular state of an initial system of parameters is the best possible. Some designers, in fact, have felt the necessity of introducing methods capable of investigating multiple conformations of initial parameters through a single model; see, for example, Otto’s models with variable supports or Gaudí’s models with variable weights.

This limit is overcome by optimizations based not on physical but on mathematical models, which allow a greater level of indeterminacy and therefore of variability of the initial parameters. If an architectural form can be defined by a purely mathematical equation, it may be possible to implement an optimization process that simultaneously guarantees the optimum of both the final form and the initial parameters. In this case, optimization also consists of improvements derived from the intrinsic logic of the mathematical modelling, such as a greater level of precision in the description of surfaces, greater accuracy in the analysis that measures the performance of a design, or constructive intuition strictly related to the type of modelled surface. An example is the rationalization of formwork into linear elements in hyperbolic surfaces, as shown several times by Félix Candela (1910–1997) (Fig. 2). The main objective of optimization remained in most cases linked to structural aspects, such as the reduction of material usage, although the research of Luigi Moretti (1906–1973) significantly expanded what we can measure in architecture, and therefore optimize in practical case studies.

In Candela’s works (Savorra and Fabbrocino 2013), we can see an adherence to the suggestions of Gilles Deleuze (1993), or rather in the interpretations of Deleuze’s thoughts on the problem of the form as a continuum of possibilities. The dichotomy of continuity versus discretization remains an open question because we will always be able to pursue both (Consiglieri and Consiglieri 2009).

This approach surely leads toward optimizations based on digital methods, as they show the possibility of automating mathematical optimization processes, and toward the modern computational approach. However, a profound difference must be noted, even on the epistemological level: on one hand we face a continuous problem, and on the other a discrete problem.

Moretti’s contribution to architectural design’s optimizations is fundamental, both in terms of tools and purposes. Moretti raised the search for the optimum in architectural form to a designer’s ethical necessity; he also recognized a sort of liberation for decision-makers who can finally operate on a truly quantitative basis. As described in the pages of Moebius, Moretti’s (1971) parametric architecture is apparently a scientific design process characterized by extreme rigour, but Moretti himself leaves to the architect the freedom of decision and expression within the design. To Moretti, optimization is a way to reduce purely empirical decisions and the naive iteration of traditional design strategies. Another important contribution from Moretti concerns what can be optimized (user safety, visual quality, cost control, etc.) as well as a way to design enriched by knowledge, tools and processes transferred from disciplines such as game theory and operational research. As he said in an article published in Spazio (Moretti 1952), when the possible variations an architectural form can assume are minimal and the parameters to be optimized are limited, we enter the field of technique and not of architecture because the best possible architectural design can be obtained with a purely scientific approach that leads to an optimum. This concept will be used in this paper to frame various and recent design experiences.

Models may be Built from Data Instead of Being Derived

A general expansion of what can be optimized in architecture, tied with a demand for more complex multi-objective optimization, made seeking a (mathematically) closed-form that binds a design to its fitness function progressively inefficient in terms of design effort, especially with free-form surfaces that aroused a general interest after the diffusion of digital tools in architectural design.

This pushes us to search for optimal design through numerical or iterative methods based on simulation processes. Among the designers who were instead able to grasp architectural design problems in a closed-form, we can certainly identify the previously mentioned Félix Candela and Luigi Moretti. However, today we can still see research that updates the methods of Candela and Moretti by increasing their possibilities and efficiency with digital models and tools (Tošić et al. 2019; Bianconi et al. 2020).

The processes described in the first part of this article can be framed as trial-and-error workflows. In an article that appeared in LOG, François Jouve (2012) acknowledges that since the 1980s computers have begun to solve various architectural problems numerically. In particular, it has become possible to predict the characteristics of a system starting from initial geometric information, to which known boundary conditions are associated (in the structural field, these are loads, constraints, materials etc.). Initially, these tools were used to replicate the trial-and-error workflow: the designer starts from a limited series of intuitions of various types (formal, structural, functional etc.), verifies their feasibility under different disciplines (architecture, structures, plant engineering etc.) and then manually iterates and tweaks the initial system until he is satisfied with the result, or in any case, as long as it is reasonably convenient to continue seeking a better solution. In the same article, Jouve also acknowledges a contraction of the temporal dimension between intuition and its validation thanks to digital tools; however, this process still requires significant effort and is unable to produce unexpected insights, as this particular optimization remains man-led. This approach differs, for example, from that described by Greg Lynn, which is based on exploring software possibilities on a non-performative basis, leading to what he defines as “happy accidents” (Rocker 2004).

Mario Carpo (2017: 175) agrees with the ideas of Jouve and underlines how practically every optimization process, except in the simplest cases, involves such a large number of variables that the solutions can be neither deduced nor calculated. Reconnecting with Moretti’s thoughts already briefly mentioned, we can underscore that when the variables involved are limited, the optimization process could lead to an absolute optimum, and once again, we are facing technique, not architecture.

However, the contraction of the temporal dimension of validation is not enough to abandon the convenience of building theoretical and interpretative models: the possibility of simulating and comparing the performance of a theoretically infinite number of design solutions with an automated process is missing. In fact, subsequent research automates the trial-and-error process, “arriving at better solutions algorithmically, or recursively, rather than intuitively” as said by Carpo (2017: 175). Thinking that this automation frees architects from a time-consuming activity so they can devote themselves to something else is a naive view. In fact, it must be emphasized that to even access the possibility of an automated optimization process, we must necessarily design in a certain way, opening up many opportunities given by digital tools but also losing many others. By this, we mean that implementing an optimization process profoundly influences design action.

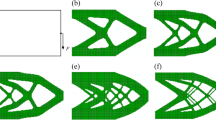

Searching for what Marshall McLuhan calls “boundary break” (McLuhan 1964: 58) or Charles Jencks’s “jumps” (1995), or more generally the factors that make possible sudden changes in a particular system in a compressed transition period, we must look for the moment when digital tools made it possible to simulate, analyze and order in incredibly short periods of time, let us say a few hours, more design alternatives than an architect could evaluate in a lifetime (Fig. 3), or, to use Carpo’s (2017: 40) words, more “full-size trials than a traditional craftsman would have made and broken in a lifetime”.

Fig. 3 Automated generation of layout alternatives with an evolutionary approach. This particular application shows the possibility of generating thousands of design alternatives in a matter of minutes with digital tools (Canestrino et al. 2020)

It is quite easy to identify attempts, even before the 1970s, in which the power of automated trial-and-error began to make building theoretical models impractical. An example can be found in the first event of the Computer Art Society, where GRASP (Generation of Random Access Site Plans) was presented, defined as

a program which generates computer produced site plans using predefined modular architectural units. The computer produces as many “random” solutions to a plan as desired, evaluates each solution according to specified criteria, and draws the appropriate “good” solution (Computer Art Society 1969).
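The generate-evaluate-select loop described in this definition can be illustrated with a minimal sketch. It is not a reconstruction of GRASP: the grid site, the number of units and the compactness criterion in `score` are hypothetical choices made only to show the logic of producing many random plans and keeping the “good” one.

```python
import random

def random_plan(n_units, grid, rng):
    """Place n_units modular units on distinct cells of a grid x grid site."""
    cells = [(x, y) for x in range(grid) for y in range(grid)]
    return rng.sample(cells, n_units)

def score(plan):
    """Hypothetical criterion: sum of Manhattan distances between all
    pairs of units (a lower score means a more compact plan)."""
    total = 0
    for i, (x1, y1) in enumerate(plan):
        for x2, y2 in plan[i + 1:]:
            total += abs(x1 - x2) + abs(y1 - y2)
    return total

def generate_and_test(n_trials, n_units=5, grid=8, seed=0):
    """Generate n_trials random plans and return the best-scoring one."""
    rng = random.Random(seed)
    plans = [random_plan(n_units, grid, rng) for _ in range(n_trials)]
    return min(plans, key=score)

best = generate_and_test(1000)
print(best, score(best))
```

Nothing in this loop learns from one trial to the next; the quality of the result depends only on how many alternatives are generated and on the criterion used to rank them.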

In this early research, much of which was interested in architectural space planning problems, a separation from the tectonic dimension of architecture, and therefore its optimization, is recorded, and this led to the measurement and optimization of aspects of architectural quality such as shortest paths, adherence to programmatic requirements in terms of area or in satisfying relational constraints between the parts of the project.

The impact of this research remained confined to academia, but as recognized by Nicholas Negroponte (1972: 39), these automations were viewed as immoral because they could lead to the unemployment of some architects, despite the fact that they consisted of very crude randomized trial-and-error processes which, through ex-post analysis, identified the best proposed options. Negroponte points out that if we face architectural design as a problem to be optimized, we can view it as an “overconstrained problem”, where sometimes no solution can completely satisfy our requests, and that optimization processes actually seek those solutions that offer “a point of greatest happiness” and the least amount of “friction”. What Negroponte instead called “underconstrained problems”, rarer in architecture according to him, have a wide range of possible solutions where all the criteria are satisfied by multiple alternatives. It is possible to see another parallel with what Moretti defined as technical problems and not architecture.

Another boundary break of digital optimization processes can be identified in the shift from technical and research problems to true architecture. This means having tools that can optimize architectural problems with a large number of variables and objectives. Jagielski and Gero (1997), in research dedicated to the kind of space layout planning problems that have always been a testing ground for optimization and automation in architecture, as evidenced by the research listed by Negroponte in Architecture Machine and the first catalogue of the Computer Art Society, recognize that this particular architectural problem belongs to a certain class of mathematical problems, called NP-hard (non-deterministic polynomial-time hard), for which no efficient exact solution method is known. Wanting to continue the parallel with Moretti, that which does not fall into technique is an NP-hard problem, that is, architecture. Jagielski and Gero identified the evolutionary approach as a method able to solve “not-trivial” problems, and as a literature review testifies (Calixtor and Celani 2015), we may say that for many years the evolutionary approach seemed particularly suitable for complex optimization problems.
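A minimal sketch can make the evolutionary approach to a layout problem more concrete. It is not the method of Jagielski and Gero: the six rooms, the adjacency weights in `LINKS`, the arrangement of rooms in a single row and the mutation-only scheme with elitist selection are all assumptions chosen to keep the example short.

```python
import random

# Hypothetical adjacency demands between six rooms: (room_a, room_b, weight).
LINKS = [(0, 1, 3), (1, 2, 2), (2, 3, 3), (3, 4, 1), (4, 5, 2), (0, 5, 1)]

def cost(order):
    """Weighted distance between linked rooms placed in a row in 'order'."""
    pos = {room: i for i, room in enumerate(order)}
    return sum(w * abs(pos[a] - pos[b]) for a, b, w in LINKS)

def mutate(order, rng):
    """Return a copy of the layout with two rooms swapped."""
    child = list(order)
    i, j = rng.sample(range(len(child)), 2)
    child[i], child[j] = child[j], child[i]
    return child

def evolve(generations=200, pop_size=20, seed=1):
    """Mutation-only evolutionary loop: parents and offspring compete,
    and only the pop_size cheapest layouts survive each generation."""
    rng = random.Random(seed)
    rooms = list(range(6))
    population = [rng.sample(rooms, len(rooms)) for _ in range(pop_size)]
    for _ in range(generations):
        offspring = [mutate(parent, rng) for parent in population]
        population = sorted(population + offspring, key=cost)[:pop_size]
    return min(population, key=cost)

best_layout = evolve()
print(best_layout, cost(best_layout))
```

Because the best layouts always survive, the result can never be worse than the best member of the initial random population, but nothing guarantees that it is the cheapest layout among all possible permutations.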

In parallel with the development of digital optimization tools, we see a novel cultural interest. In the wake of Gordon Pask (1928–1996), it is John Frazer (1995: 18) who recognizes that optimizations can become tools of inspiration, “an electronic muse,” thanks to their ability to compress time and space (and therefore overcome the aforementioned metaphor of the craftsman), so that architecture can develop itself. Furthermore, Frazer proposes that these tools do not eliminate human creativity but rather move its field of applications towards the creation of rules that optimization tools must follow. Finally, Frazer (1995: 24–60) proposes a generative toolbox in An Evolutionary Architecture that today, twenty-five years later, remains almost unchanged in its principles, although it has acquired an unimaginable additional computational power. A partial list includes neural network, datastructures, logical datastructures, shape processing, cellular automata, the life game, artificial life, adaptive models, genetic algorithms, biomorph and classifier systems. Today we can say that most of the contemporary optimization processes are in fact recombinations of the toolbox proposed by Frazer.

The shift from seeking optimizations with scientific/intuitive tools and processes to algorithmic/recursive automated procedures can be framed, mathematically, as a shift from calculus’s continuum to computation’s discretization, or from derivative methods to derivative-free methods. Derivative-free methods often refer to the black-box model, the use of which is appropriate when we do not have clear interpretative models but do have large amounts of information that cannot be processed by a human brain alone. A black-box can be described as a process in which initial inputs are transformed into something else through a process that is hidden, unknown or, in any case, not easy to understand.
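The idea of optimizing a black-box without derivatives can be sketched with a simple compass search, one possible example of a direct search method. The function `simulated_performance` is a hypothetical stand-in for an opaque simulation; the optimizer only ever sees the numbers it returns, never its formula or its gradient.

```python
def compass_search(black_box, start, step=1.0, tol=1e-3, max_evals=10_000):
    """Minimize black_box using only the values it returns (no gradient)."""
    x = list(start)
    fx = black_box(x)
    evals = 1
    while step > tol and evals < max_evals:
        improved = False
        for i in range(len(x)):
            for delta in (step, -step):
                trial = list(x)
                trial[i] += delta
                f_trial = black_box(trial)
                evals += 1
                if f_trial < fx:
                    # Accept any improving move immediately.
                    x, fx, improved = trial, f_trial, True
        if not improved:
            # No direction improved: refine the search with a smaller step.
            step /= 2
    return x, fx, evals

def simulated_performance(params):
    """Hypothetical 'simulation' with its best value at width=3, depth=-2."""
    width, depth = params
    return (width - 3.0) ** 2 + 2.0 * (depth + 2.0) ** 2

best, value, n_evals = compass_search(simulated_performance, [0.0, 0.0])
print(best, value, n_evals)
```

The cost of such a method is counted in evaluations of the black-box, which is why the time needed for each simulation largely decides whether it is practical.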

A possible classification of black-box methods used as optimization tools in architecture is offered by Wortmann and Nannicini (2016): metaheuristics, direct search methods and model-based methods. Metaheuristic black-box methods have aroused the most research interest as well as professional use due to the widespread class of evolutionary algorithms. Metaheuristic methods are mainly based on weighted randomization processes and biological analogies to search for the design that performs best. The reason for their success in architectural design lies not in their precision or efficiency but rather in their flexibility, ease of implementation and ability to optimize even poorly posed problems. In fact, from a technical point of view, these methods are generally less efficient in comparative benchmarks and remain professionally valid alternatives when the time to calculate the efficiency of a solution is reasonable, as a convergence of the results may require several thousand analyses. This conception, therefore, makes it possible to optimize any aspect of a project to which it is possible to associate a measurable performance function. Digital methods also open up complex optimizations of functions relating to different disciplines at the same time. Analog design methods are unable to simultaneously optimize aspects related to energy efficiency, material usage, structural behaviour, view quality and more, even if this complexity leads to design processes with high entropy and close to the black-box methods mentioned above.

Conclusions

If men were able to be convinced that art is precise advance knowledge of how to cope with the psychic and social consequences of the next technology, would they all become artists? Or would they begin a careful translation of new art forms into social navigation charts? (McLuhan 1964: 97).

With these words, Marshall McLuhan recognizes that the artist (and therefore, why not the architect too?) has a particular intuitive ability to understand the implications that technologies, even if not yet fully developed, have on society. In fact, talking about optimization in architecture means retracing different attitudes with which we have tried over time to tackle complex problems. In recent approaches, dominated by metaheuristic methods, architectural design has anticipated by a few years a concept that today is clear: we no longer try to tackle complex problems only through the construction of theoretical models, but we understand that it is possible to address them by deriving knowledge, perhaps partial but certainly workable, from the analysis of large amounts of data, which we can generate ourselves, as in the case of evolutionary optimization processes.

It must be recognized that a large number of optimization processes usable in architecture, due to their operating modes already described, do not guarantee that the final chosen solution is the most efficient. Even in the “trivial” case of a single-objective optimization, derivative-free methods do not guarantee that an absolute optimum will be found.
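A small numerical sketch shows why this guarantee is missing. The list `performance` is a hypothetical set of scores for ten design variants along a single parameter, and `hill_climb` is a deliberately simple local search: depending on where it starts, it stops at a different peak.

```python
def hill_climb(values, start):
    """Move to the better neighbouring index until no neighbour improves
    (maximization); return the index where the search stops."""
    i = start
    while True:
        neighbours = [j for j in (i - 1, i + 1) if 0 <= j < len(values)]
        best = max(neighbours, key=lambda j: values[j])
        if values[best] <= values[i]:
            return i
        i = best

# Hypothetical performance of ten design variants along one parameter.
performance = [1, 3, 5, 4, 2, 3, 6, 9, 7, 2]

stuck = hill_climb(performance, start=0)    # stops at index 2 (value 5)
lucky = hill_climb(performance, start=5)    # reaches index 7 (value 9)
print(stuck, performance[stuck], lucky, performance[lucky])
```

Both runs end at a solution that no small change can improve, yet only one of them is the absolute optimum, and the search itself has no way of telling the two cases apart.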

Today, society is pushing for a more ethical use of the resources consumed in architecture, and therefore, the use of optimizations, especially if applied to increase sustainability, could soon be increasingly required due to their ability to validate the choices of a designer. But architectural design may be overwhelmed by the pressure of an increasingly demanding society: optimizations could go from being useful tools to becoming the ultimate goal of architectural design. We are facing the risk that an optimized and flawless design will automatically be considered a quality design, ignoring all non-measurable aspects in architecture by focusing only on what we can scientifically measure.

Architectural design needs to be in balance between the technical hunt for an optimum, which requires quantitative descriptors so that it can be sought, and the need for architecture to respond to a deeper sense of human needs that we cannot associate with quantitative descriptors. If we consider architectural design as the organization of the materials that participate in it, understood not as concrete, steel or wood but rather as the “contesto fisico e culturale, la storia della disciplina, delle sue forme, dei suoi principi” (Gregotti 2018: 36, “physical and cultural contexts, the history of the discipline, of its form, of its principles”), and certainly even the optimization processes which we can pour into a design process, we can grasp the current heteronomy in architecture as already indicated several times by Vittorio Gregotti.

We could say that the desire to make the most of the resources that flow into a design process has always been a force that implicitly shapes the architectural form, but we can also recognize that in a certain niche of architecture, as discussed in this paper, the technical tools of optimization may have a predominant role: in the first described approach as a means to make bold formal intuitions possible, and in the second approach as a means to direct an increasingly complex discipline, in its tools as in its forms. The different organization of architecture’s materials, if extreme, may shift the emphasis from the designed object to the design process and its instruments, with particular reference to optimization tools. An example can be identified in Patrik Schumacher’s (2017, 2018) Tectonism (Fig. 4), conceived as the most mature and promising subsidiary style of parametricism, based on the “stylistic heightening of engineering and fabrication-based form-finding and optimization processes.”

The search for this optimum, with the progressive expansion of what can be optimized, pushes us to look less at the results of optimizations and to focus on their processes. These optimization processes have instilled a new lexicon in the discipline of architectural design that must be recognized. Without deeper knowledge, their use can hardly lead consistently to quality results. Pareto surface, non-dominated solution, design space and fitness function are concepts without which a certain kind of architecture could not exist.
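Two terms of this lexicon, non-dominated solution and Pareto front, can be illustrated with a short sketch. The design space below is hypothetical: each design is described by two objectives to be minimized, material use and energy demand, and the values are invented for the example.

```python
def dominates(a, b):
    """True if design a is at least as good as b in every objective and
    strictly better in at least one (all objectives are minimized)."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))

def non_dominated(designs):
    """Keep only the designs that no other design dominates."""
    return [d for d in designs
            if not any(dominates(other, d) for other in designs)]

# Hypothetical design space: (material use, energy demand) per design.
designs = [(10, 50), (12, 40), (15, 45), (20, 30), (25, 35), (11, 60)]

front = non_dominated(designs)
print(front)  # [(10, 50), (12, 40), (20, 30)]
```

None of the three surviving designs is better than the others in both objectives at once, so the choice among them remains a decision for the designer and is not an output of the optimization.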

References

Addis, Bill. 2013. ‘Toys that save millions’: A history of using physical models in structural design. Structural Engineering 91(4): 12-28.

Addis, Bill. 2014. Physical modelling and form finding. In: Shell Structures for Architecture. Form Finding and Optimization, eds. Sigrid Adriaenssens, Philippe Block, Diederik Veenendaal and Chris Williams, 33-44. New York: Routledge.

Anderson, Chris. 2008. The end of theory: the data deluge makes the scientific method obsolete. Wired, https://www.wired.com/2008/06/pb-theory/. Accessed 13 March 2021.

Bianconi, Fabio, Marco Filippucci, Alessandro Buffi, and Luisa Vitali. 2020. Morphological and visual optimization in stadium design: a digital reinterpretation of Luigi Moretti’s stadiums. Architectural Science Review 63(29): 194-209.

Box, George E. P. 1979. Robustness in the strategy of scientific model building. In Robustness in Statistics, 201-236. New York: Academic Press.

Burry, Jane, and Mark Burry. 2010. Optimization. In: The New Mathematics of Architecture, eds. Jane Burry and Mark Burry, 116-154. London: Thames & Hudson.

Calixto, Victor and Gabriela Celani. 2015. A literature review for space planning optimization using an evolutionary algorithm approach: 1992-2014. In Proceedings of SiGraDi 2015, 662-671. São Paulo: Blucher.

Canestrino, Giuseppe, Laura Greco, Francesco Spada and Roberta Lucente. 2020. Generating architectural plan with evolutionary multiobjective optimization algorithms: a benchmark case with an existent construction system. In Proceedings of SiGraDi 2020, eds. Natalia Builes Escobar and David A. Torreblanca-Díaz, 149-156. São Paulo: Blucher.

Canestrino, Giuseppe. 2021. On the use of BIM and parametric modelling for building design: implications and risks on the quality of architecture. In AMPS Proceedings Series 20.2. Connections: exploring heritage, architecture, cities, art, media, ed. Howard Griffin: 152-160. Liverpool: AMPS.

Carpo, Mario. 2017. The Second Digital Turn: Design Beyond Intelligence. Cambridge, MA: MIT Press.

Computer Arts Society. 1969. Event One Catalogue. https://computer-arts-society.com/uploads/event-one-1969.pdf. Accessed 8 Mar 2021.

Consiglieri, Luisa and Consiglieri, Victor. 2009. Continuity versus Discretization. Nexus Network Journal 11: 151-162.

Deleuze, Gilles. 1993. The Fold. Leibniz and the Baroque. Trans. Tom Conley. London: The Athlone Press Ltd.

Emmer, Michele. 2014. Minimal surfaces and architecture: new forms. Nexus Network Journal 15: 227-239.

Frazer, John. 1995. An Evolutionary Architecture. London: Architectural Association.

Gambetta, Daniele. 2018. Divenire cyborg nella complessità. In Datacrazia. Politica, cultura algoritmica e conflitti al tempo dei big data, ed. Daniele Gambetta: 14-42. Ladispoli: D Editore.

Giedion, Sigfried. 1982. Space, Time and Architecture. Fifth Revised and Enlarged Edition. Cambridge, MA: Harvard University Press.

Gregotti, Vittorio. 2018. I racconti del progetto. Milan: Skira.

Harris, Richard, John Romer, Oliver Kelly and Stephen Johnson. 2003. Design and construction of the Downland Gridshell. Building Research & Information 31(6): 427-454.

Jagielski, Romuald and Gero, John. 1997. A genetic programming approach to the space layout planning problem. In Proceedings of CAAD Futures, ed. Richard Junge. Dordrecht: Springer.

Jencks, Charles. 1995. The Architecture of the Jumping Universe. London: Academy Editions.

Jouve, François. 2012. Structural Optimization. LOG 25: 41-44.

Lucente, Roberta. 2017. Oscar Niemeyer: invenzione, forma, struttura. Metamorfosi quaderni di architettura 3: 98-103.

McLuhan, Marshall. 1964. Understanding Media: The Extensions of Man. New York: McGraw-Hill.

Moretti, Luigi. 1952. Struttura come forma. Spazio 6: 21-30; 110.

Moretti, Luigi. 1971. Architettura Parametrica. Moebius IV(1): 30-53.

Negroponte, Nicholas. 1972. The Architecture Machine. Cambridge, MA: MIT Press.

Norvig, Peter. 2009. All we want are the facts, ma’am. Norvig, https://norvig.com/fact-check.html. Accessed 2 June 2021.

Purini, Franco. 2018. Il BIM, un parere in evoluzione. Op. cit. Selezione della critica dell’arte contemporanea 162: 5-16.

Rocker, Ingeborg. 2004. Calculus-Based Form. An interview with Greg Lynn. Architectural Design 76(4): 88-96.

Savorra, Massimiliano and Giovanni Fabbrocino. 2013. Félix Candela between philosophy and engineering: the meaning of shape. In: Structures and Architectures: New Concepts, Applications and Challenges, ed. Paulo J. Cruz, 253-260. London: Taylor & Francis.

Schumacher, Patrik. 2017. Tectonism in Architecture, Design and Fashion - Innovations in Digital Fabrication as Stylistic Drivers. In: 3D-Printed Body Architecture, guest-edited by Neil Leach & Behnaz Farahi, Architectural Design, Profile no. 250 (November/December 2017): 106-113.

Schumacher, Patrik. 2018. The progress of geometry as design resource. LOG 43: 105-118.

Tedeschi, Arturo. 2014. From traditional drawings to the parametric diagram. In: AAD_Algorithms-Aided Design, Parametric Strategies using Grasshopper, ed. Arturo Tedeschi, 14-32. Potenza: Le Penseur.

Tošić, Zlata, Sonja Krasić, Naomi Ando and Milos Milić. 2019. Geometry and Construction Optimization: An example using Felix Candela’s Church of St. Joseph the Craftsman in Mexico. Nexus Network Journal 21(1): 91-107.

Wortmann, Thomas and Giacomo Nannicini. 2016. Black-box optimization methods for architectural design. In Proceedings of CAADRIA, 177-186. Hong Kong: CAADRIA.

Funding

Open access funding provided by Università della Calabria within the CRUI-CARE Agreement.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Canestrino, G. Considerations on Optimization as an Architectural Design Tool. Nexus Netw J 23, 919–931 (2021). https://doi.org/10.1007/s00004-021-00563-y

DOI: https://doi.org/10.1007/s00004-021-00563-y