Abstract

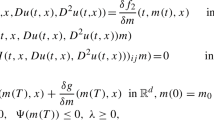

The stochastic maximum principle gives a necessary condition for the optimal control problem for diffusions. If the controlled diffusion is approximated by a controlled Markov chain, and if approximating controls are chosen to maximize a Hamiltonian for the chain, then it is shown using weak convergence that the chains converge to a diffusion with a control satisfying the necessary condition of the maximum principle, and the corresponding costs also converge.
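
To make the phrase "approximated by a controlled Markov chain" concrete, the following is a minimal sketch of a one-dimensional finite-difference chain of the upwind kind commonly used for such approximations. It is not taken from the paper: the function names `chain_step`, `drift`, and `sigma`, the grid spacing `h`, and the example coefficients are all assumptions chosen for illustration, and the paper's own chain and Hamiltonian may differ. The sketch only checks local consistency, that is, that the chain's one-step mean and second moment match those of the diffusion dx = b(x, u) dt + sigma(x) dw up to higher-order terms in `h`.

```python
def chain_step(x, u, h, drift, sigma):
    """Return (p_up, p_down, dt) for a nearest-neighbour chain on a grid of spacing h.

    Hypothetical helper: from state x under control u, the chain moves to x + h
    with probability p_up and to x - h with probability p_down, and dt is the
    interpolation time step assigned to that move.
    """
    b = drift(x, u)                # controlled drift b(x, u)
    s2 = sigma(x) ** 2             # squared diffusion coefficient
    q = s2 + h * abs(b)            # normalizing factor, assumed positive
    p_up = (s2 / 2 + h * max(b, 0.0)) / q
    p_down = (s2 / 2 + h * max(-b, 0.0)) / q
    dt = h * h / q
    return p_up, p_down, dt


# Illustrative coefficients (assumptions, not from the paper).
def drift(x, u):
    return u - x


def sigma(x):
    return 0.5


h = 0.01
x, u = 0.2, 1.0
p_up, p_down, dt = chain_step(x, u, h, drift, sigma)

mean_increment = h * (p_up - p_down)        # equals b(x, u) * dt exactly
second_moment = h * h * (p_up + p_down)     # equals sigma^2 * dt + h * |b| * dt
```

In this scheme the mean increment equals b(x, u) dt exactly, while the second moment exceeds sigma(x)^2 dt by h |b(x, u)| dt, which is of smaller order than dt as h tends to zero. A control rule for the chain, such as one maximizing a discrete Hamiltonian, would enter only through the argument `u`.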

Additional information

Communicated by R. Rishel

This research was supported by NSERC under Grant No. A8051.

Cite this article

Haussmann, U.G. On the approximation of optimal stochastic controls. J Optim Theory Appl 40, 433–450 (1983). https://doi.org/10.1007/BF00933509

DOI: https://doi.org/10.1007/BF00933509