Abstract

Low power fault tolerance design techniques trade reliability to reduce the area cost and the power overhead of integrated circuits by protecting only a subset of their workload or their most vulnerable parts. However, in the presence of faults, not all workloads are equally susceptible to errors. In this paper, we present a low power fault tolerance design technique that selects and protects the most susceptible workload. We propose to rank the workload susceptibility as the likelihood of any error to bypass the logic masking of the circuit and propagate to its outputs. The susceptible workload is protected by a partial Triple Modular Redundancy (TMR) scheme. We evaluate the proposed technique on timing-independent and timing-dependent errors induced by permanent and transient faults. In comparison with an unranked selective fault tolerance approach, we demonstrate a) a similar error coverage with a 39.7% average reduction of the area overhead or b) an 86.9% average error coverage improvement for a similar area overhead. For the same area overhead case, we observe an error coverage improvement of 53.1% and 53.5% against permanent stuck-at and transition faults, respectively, and an average error coverage improvement of 151.8% and 89.0% against timing-dependent and timing-independent transient faults, respectively. Compared to TMR, the proposed technique reduces the area and power overhead by 145.8% to 182.0% of the original circuit.


1 Introduction

Technology scaling, despite its advantages, has affected the reliability of devices. Devices manufactured using 32 nm technologies and below are more prone to errors produced by all sources of instability and noise [19, 20] due to the elevated cost of mitigating process variability [6] and the escalation of aging mechanisms [16]. As a result, transient and permanent faults can appear in general logic and generate errors in the field. Therefore, techniques that make Integrated Circuits (ICs) fault tolerant are required. Fault tolerant IC design techniques enhance circuit reliability and often rely on redundancy of information, time or hardware [7]. In particular, hardware redundancy consists of the complete or partial replication of a circuit in order to ensure correct functionality. Replicating the circuit increases reliability, as it is highly unlikely that an error occurs on every replica at the same time. Triple Modular Redundancy (TMR) adds two replicas of the original circuit, and the outputs of all three copies are passed on to a majority voter [7]. TMR has been widely used for safety-critical applications where robustness and data integrity are the top priority. Although TMR achieves a high level of reliability, it imposes a high area and power overhead, 200% of the original circuit plus the voter circuits, and thus it is not viable for low power applications. Selective Fault Tolerance (SFT) and selective hardening have been proposed to reduce the area overhead and power consumption by protecting only a subset of the workload of a circuit or its most vulnerable parts [3, 27].

Selective hardening aims to protect the most vulnerable parts of a circuit against soft errors [27]. In microprocessors [13, 14], this has been achieved through the identification of the architectural vulnerability factor of state elements, which often requires stuck-at fault injection campaigns to calculate. Selective hardening has also been used in combinational logic to identify and protect vulnerable gates or nodes. This is achieved by propagating signal probabilities at the RT-Level to estimate the likelihood of an erroneous output caused by soft errors [8, 15, 17, 27]. A recent selective hardening technique uses a lightweight algorithm to rank the soft error susceptibility of logic cones in combinational logic according to their size, which is given by the sum of the fan-in and fan-out of their cells [23]. The highest ranked cones are duplicated and compared to detect whether an error has occurred, in which case shadow latches at the input enable a roll-back mechanism to recover from the error.

On the other hand, SFT, as introduced in [3, 4], ensures functional protection of a pre-defined set of input patterns, referred to as the workload, by using a partial TMR scheme. However, in the presence of a fault, not all input patterns are equally susceptible to it. Some patterns are less protected by the inherent logic masking of a circuit: when such patterns are executed in the presence of faults, the probability that the logic masking is bypassed and an erroneous response is generated is higher. Such patterns are defined as the susceptible workload. Previous works on SFT rely on randomly selecting the workload to protect without examining the susceptibility of that workload to faults.

Probabilistic fault models were developed for ranking test patterns according to their ability to sensitize the logic cones of a circuit that are more likely to propagate an erroneous response. Probabilistic fault models are known to improve both the modeled and the unmodeled defect coverage of tests, while not being biased towards any particular type of fault. Output deviations (OD) were introduced in [26] as an RT-Level fault model calibrated with technology failure information obtained from technology reliability characterization, such as inductive fault analysis [9]. This model is used to select the input patterns that maximize the probability of propagating an erroneous response to the primary outputs. The input patterns with the highest output deviations have a greater ability to bypass the inherent logic masking of the circuit. In [25], it is shown that selecting input patterns with high output deviations tends to provide more effective error detection than traditional fault models. In [24], a test set enrichment technique for the selection of test patterns is proposed. Output deviations have also been used to enrich the unmodeled defect coverage of tests during x-filling [5] and during linear [11, 12, 21] and statistical [22] compression. The output deviation-based metric proposed in [12] was shown to increase the unmodeled defect coverage of test vectors by considering both timing-independent defects, such as stuck-at faults, and timing-dependent defects, such as transition faults, which require two patterns.

In this paper, we present a novel low power fault tolerance design technique, applicable at the register-transfer level, that selects and protects the most susceptible workload on the most susceptible logic cones by targeting both timing-independent and timing-dependent errors. Preliminary results of this technique were presented in [10], where only the timing-independent errors induced by stuck-at faults and input bit-flips were considered. The workload susceptibility is ranked as the likelihood of any error to bypass the inherent logic masking of the circuit and propagate an erroneous response to its outputs when that workload is executed. The susceptible workload is protected by a partial Triple Modular Redundancy (TMR) scheme. To evaluate the fault tolerance ability of the proposed technique, we consider as surrogate error models the timing-independent errors induced by stuck-at faults and transient input bit-flips. We also consider the timing-dependent errors induced by transition faults and temporary erroneous output transitions. We demonstrate that the proposed technique can achieve a similar error coverage with an average 39.7% area/power cost reduction. Furthermore, it can improve the achieved error coverage by 86.9% on average with a similar area/power cost. In particular, when protecting only the 32 most susceptible patterns, average error coverage improvements of 53.1% and 53.5% against errors induced by stuck-at and transition faults, respectively, are achieved compared to the case where the same number of patterns is protected without any ranking. Additionally, we observe average error coverage improvements of 151.8% and 89.0% against temporary erroneous output transitions and errors induced by bit-flips, respectively. These error coverage improvements incur an area/power cost in the range of 18.0-54.2%, which corresponds to a 145.8-182.0% reduction compared to TMR.

This paper is organized as follows. Section 2 presents an overview of previous works on Selective Fault Tolerance, reviews the probabilistic fault model of output deviations, introduces the concepts of uncorrelated and application-specific workloads and presents a motivational example. Section 3 describes the proposed probabilistic selective fault tolerance design technique and output deviation-based ranking metrics used for pattern ranking. Simulation results from the application of the proposed technique to a set of the LGSynth’91 and ISCAS’85 benchmarks are discussed in Section 4. Finally, the concluding remarks are given in Section 5.

2 Motivation

In this section, the concept of Selective Fault Tolerance and the probabilistic fault model of output deviations (OD) are reviewed, and the different types of workloads considered in this paper are briefly introduced. A motivational example then compares the ability of the OD model to detect errors induced by multiple types of faults against a random selection of input patterns.

2.1 Selective Fault Tolerance

Selective Fault Tolerance (SFT) was proposed as a modification of TMR. SFT reduces the cost of TMR by protecting the functionality of the circuit for only a subset of input patterns [3]. This input pattern subset \(X_1\) is selected randomly by the designer. The input patterns within the subset are guaranteed the same level of reliability as TMR, while the rest are not guaranteed any protection.

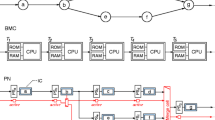

Figure 1 depicts the existing SFT design technique. For a circuit \(S_1\) to be protected using SFT, two smaller circuits \(s_2\) and \(s_3\) are generated. The behaviour of circuits \(s_2\) and \(s_3\) with a protected set \(X_1\) is described as follows:

According to the heuristic presented in [4], a characteristic function \(\chi(x)\) must be specified in order to determine whether an input \(x\) falls within the protected set \(X_1\). The output of this function is passed on to a modified majority voter, as shown in Fig. 1: the outputs of \(s_2\) and \(s_3\) are considered if and only if \(\chi(x) = 1\); otherwise, the output of the protected design is the output of circuit \(S_1\).

A simplified method for SFT was proposed in [2], where the circuits \(s_2\) and \(s_3\) are replaced by identical circuits. These circuits are the minimal combinational circuits that, for an input pattern within the protected set \(X_1\), exhibit the same output as the original circuit \(S_1\). The protected design can be described in the following form (don't care indicates undefined values):

\[s_2(x) = s_3(x) = \begin{cases} S_1(x), & x \in X_1 \\ \text{don't care}, & x \notin X_1 \end{cases}\]

2.2 Probabilistic Fault Model: Output Deviations

The selection of input patterns with high output deviations tends to provide higher error detection capabilities than traditional fault models [25]. Output deviations are used to rank patterns according to their likelihood of propagating a logic error. Computing the output deviations of an input pattern has a few requirements. First, a confidence level vector is assigned to each gate in the circuit. The confidence level \(R_k\) of a gate \(G_k\) with \(N\) inputs and one output is a vector with \(2^N\) elements, one per input combination:

\[R_k = \left(r_k^{00\dots00},\ r_k^{00\dots01},\ \dots,\ r_k^{11\dots11}\right)\]

where each \(r_{k}^{xx {\dots } xx}\) denotes the probability that the output of \(G_k\) is correct for the corresponding input pattern. The actual probability values of the confidence level vectors can be generated from various sources, e.g., inductive fault analysis, layout information or transistor-level failure probabilities. In this paper, the probability values obtained by inductive fault analysis shown in [9] are used. However, as discussed in [26], deviation-based test patterns may be generated using various sets of confidence level vectors.

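The propagation of signal probabilities in the circuit follows the principle shown in [18], without considering signal correlation in order to reduce the computational complexity. The signal probabilities \(p_{k,0}\) and \(p_{k,1}\) are associated with each net \(k\) in the circuit. In the case of the NOR gate \(G_2\) with inputs \(c, d\) and output \(f\), the propagation of signal probabilities with the confidence level vector \(r = (r^{00}, r^{01}, r^{10}, r^{11})\) is as follows:

\[p_{f,0} = p_{c,0}\,p_{d,0}\,(1-r^{00}) + p_{c,0}\,p_{d,1}\,r^{01} + p_{c,1}\,p_{d,0}\,r^{10} + p_{c,1}\,p_{d,1}\,r^{11}\]
\[p_{f,1} = p_{c,0}\,p_{d,0}\,r^{00} + p_{c,0}\,p_{d,1}\,(1-r^{01}) + p_{c,1}\,p_{d,0}\,(1-r^{10}) + p_{c,1}\,p_{d,1}\,(1-r^{11})\]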

Example: considering \(r = (0.8, 0.9, 0.9, 0.9)\), if \(c = 0\) and \(d = 0\) (\(p_{c,0}\,p_{d,0} = 1\)), then \(p_{f,0} = 0.2\) and \(p_{f,1} = 0.8\). Similarly, for \(c = 1\) and \(d = 1\) (\(p_{c,1}\,p_{d,1} = 1\)), the propagated signal probabilities are \(p_{f,0} = 0.9\) and \(p_{f,1} = 0.1\).

The same principle is applied to compute the signal probabilities for all gate types. For a gate \(G\), let its fault-free output value for the input pattern \(t_j\) be \(d\), with \(d \in \{0, 1\}\). The output deviation \(\Delta_{G,j}\) of \(G\) for input pattern \(t_j\) is defined as \(p_{G,\bar{d}}\), where \(\bar{d}\) is the complement of \(d\) \((\bar{d} = 1 - d)\). In other words, the output deviation of an input pattern is a measure, unbiased towards any fault model, of the likelihood that the output is incorrect due to a fault in the circuit [26].
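The propagation rule and the deviation definition above can be illustrated with a minimal Python sketch. It assumes each gate is given as a Boolean function together with a confidence vector indexed in binary input order (00…0 to 11…1), ignores signal correlation as in [18], and uses the hypothetical helper names `propagate_gate`, `nor` and `output_deviation`; it is not the implementation used in [26]. The usage lines reproduce the NOR example.

```python
from itertools import product

def propagate_gate(func, inputs, r):
    """Propagate (p0, p1) signal probabilities through one gate.

    func:   fault-free Boolean function of the gate, tuple of bits -> 0 or 1
    inputs: list of (p0, p1) pairs, one per gate input (assumed independent)
    r:      confidence level vector, one entry per input combination in
            binary order 00..0, 00..1, ..., 11..1
    """
    p_out = [0.0, 0.0]
    for index, combo in enumerate(product((0, 1), repeat=len(inputs))):
        # Probability of this input combination, ignoring signal correlation
        weight = 1.0
        for (p0, p1), bit in zip(inputs, combo):
            weight *= p1 if bit else p0
        good = func(combo)
        p_out[good] += weight * r[index]            # gate output is correct
        p_out[1 - good] += weight * (1 - r[index])  # gate output is flipped
    return tuple(p_out)

def nor(bits):
    return int(not any(bits))

def output_deviation(p_out, fault_free_value):
    """Deviation = probability of observing the complement of the fault-free value."""
    return p_out[1 - fault_free_value]

# Usage: the NOR gate G2 with r = (0.8, 0.9, 0.9, 0.9)
ZERO, ONE = (1.0, 0.0), (0.0, 1.0)
r_nor = (0.8, 0.9, 0.9, 0.9)
p_f = propagate_gate(nor, [ONE, ONE], r_nor)    # ≈ (0.9, 0.1)
print(p_f, output_deviation(p_f, nor((1, 1))))  # deviation ≈ 0.1
```

Because the output pair of one gate can be fed as an input pair of the next, chaining such calls in topological order yields the deviation at a primary output, as in the example of Fig. 2.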

Example

Figure 2 shows a circuit with a confidence level vector associated with each gate. The table presents three input patterns and their output deviations. The first column shows the input pattern \((a, b, c, d)\), along with the expected fault-free output value \(z\). The next columns show the signal probabilities for both logic ’0’ and ’1’ of the two internal nets and the primary output \((e, f, z)\). The output deviation of a pattern is the likelihood that an incorrect value is observed at the output \(z\). Therefore, the output deviations (the erroneous behaviour in \(G_3\)) for the presented input patterns are \(\Delta_{3,0000} = p_{z,1}\), \(\Delta_{3,0101} = p_{z,1}\) and \(\Delta_{3,1111} = p_{z,0}\). In this example, the input pattern 1111 has the greatest output deviation, with a probability of observing a 0 (the erroneous value) at \(z\) of \(p_{z,0} = 0.396\), thus offering the highest likelihood of detecting an error. ∙

Output deviations example [26]: (a) simple circuit with confidence level vectors and (b) propagated output deviations

2.3 Workload Types

In the context of this paper, we consider two workload types based on whether the application is known during design:

- Uncorrelated workload: We consider that the application of the IC is not known during the design time, such as general purpose processors, and, hence, the in-the-field workload can only be considered uncorrelated. Only protection against the timing-independent errors, such as those induced by stuck-at faults or input bit-flips, can be targeted.
- Application-specific workload: The application of the IC is known during the design time, hence some information related to the in-the-field workload might also be available. Therefore, the workload can be protected against both timing-independent and timing-dependent errors, because the input patterns of the IC might be correlated, allowing the identification of consecutive susceptible patterns within the workload.

2.4 Motivational Example

Table 1 presents the results of a motivational example that shows how different patterns in a workload may exhibit different susceptibility to errors. We select two sets of patterns for the combinational circuit pdc from the LGSynth’91 benchmarks: the first set consists of random patterns (rp), and the second set is selected based on the probabilistic fault model of output deviations (pp) [26]. For this experiment, we generate the rp and pp sets by gradually increasing their size from 8 to 1024 patterns. The values presented for the random patterns (rp columns) are the average results of 30 different sets. The first column shows the size of the rp and pp sets. The next columns show the fault coverage of the rp and pp sets, respectively, obtained by fault simulating the circuit against the permanent fault models of stuck-at (SA) and transition faults. These results represent the ratio of faults that affect the operation of the circuit for the examined input patterns. The pp set used to compute the stuck-at fault coverage was obtained by considering an uncorrelated workload, while the pp set used to compute the transition fault coverage was obtained by considering an application-specific workload consisting of 20000 patterns. The ‘Impr.’ columns show the improvement of the pp set over the rp set. The pp set presents a higher susceptibility than the rp set, with improvements in the range [51.9%, 311.1%] for stuck-at faults and [69.1%, 300%] for transition faults. This is because the pp set contains the patterns with the highest output deviations, which, by definition, have the highest likelihood of propagating an error to the output [26]. The next columns present the results of the susceptibility evaluation of the rp and pp sets against errors induced by input bit-flips and temporary erroneous output transitions.
The pp set used to evaluate the coverage of errors induced by bit-flips was obtained by considering an uncorrelated workload, while the pp set used to compute the coverage of temporary erroneous output transitions was obtained by considering an application-specific workload. Input bit-flips are injected by flipping a single bit in every input pattern of an uncorrelated workload. The values shown are the percentage of bit-flips at the primary inputs that bypassed the logic masking and propagated through the circuit to reach the output. For such errors, the pp set exhibits a higher susceptibility than the rp set, with improvements in the range [12.0%, 216.0%]. In the case of temporary erroneous output transitions, which are errors that manifest as sporadic missing transitions at the outputs when applying consecutive pattern pairs within an application-specific workload, the pp set exhibits an error coverage improvement in the range [55.2%, 293.8%] compared to the rp set.
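The input bit-flip measurement described above can be sketched in Python as follows. The sketch assumes the circuit is available as a function from an input bit tuple to an output tuple and flips one randomly chosen input bit per workload pattern; the function name, the seed handling and the toy circuits are hypothetical and do not reproduce the simulation flow used for Table 1.

```python
import random

def bitflip_propagation_rate(circuit, patterns, seed=0):
    """Fraction of single input bit-flips that reach the circuit outputs.

    circuit:  fault-free function mapping a tuple of input bits to an output tuple
    patterns: workload patterns, each a tuple of 0/1 values
    One randomly chosen input bit is flipped in every pattern.
    """
    rng = random.Random(seed)
    propagated = 0
    for pattern in patterns:
        flipped = list(pattern)
        flipped[rng.randrange(len(pattern))] ^= 1
        # The flip bypassed logic masking if any output differs
        if circuit(tuple(flipped)) != circuit(pattern):
            propagated += 1
    return propagated / len(patterns)

# Hypothetical toy circuits with three inputs
def parity(bits):
    return (sum(bits) % 2,)

def and3(bits):
    return (int(all(bits)),)

workload = [(0, 0, 0), (0, 1, 1), (1, 1, 1)]
print(bitflip_propagation_rate(parity, workload))  # 1.0: parity never masks a flip
print(bitflip_propagation_rate(and3, workload))
```

For the AND gate, a flip on the all-zero pattern is always masked, which is the kind of low-susceptibility pattern that a ranking by output deviation tends to push down the list.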

3 Proposed Probabilistic Selective Fault Tolerance (PSFT) Design Technique

This section describes the proposed Probabilistic Selective Fault Tolerance (PSFT) design technique.

3.1 PSFT Design

The Probabilistic Selective Fault Tolerance (PSFT) design is presented in Fig. 3. The PSFT design consists of a partial TMR scheme made of the original circuit \(S\), two smaller redundant circuits \(S_P\), and a characteristic function \(Z_P\). The latter validates whether the inputs of the \(S_P\) units belong to the protected input pattern set. Unlike the previous SFT design shown in Fig. 1, the original circuit \(S\) is connected to all the input nodes, while the \(S_P\) and \(Z_P\) units are only connected to the input nodes of the logic cones selected for protection (\(I_p \subset I\), \(O_p \subset O\)). A majority voter \(V_P\) is used at the outputs of the circuit; it operates only when the output of \(Z_P\) is asserted (\(Z = 1\)). If \(Z = 0\), the voter propagates the outputs of the original circuit \(S\). This functionality is described in Eqs. 9 and 10.
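The voter behaviour described above can be illustrated with a short Python sketch, under one reading of the text and assuming bit-level outputs: outputs in \(O_p\) are majority-voted across \(S\) and the two \(S_P\) copies only when \(Z = 1\), and every other output is taken from \(S\). The function and argument names are hypothetical.

```python
def majority(a, b, c):
    """Bitwise two-out-of-three majority vote."""
    return (a & b) | (a & c) | (b & c)

def psft_outputs(s_out, sp_a, sp_b, z, protected):
    """Combine the outputs of the PSFT scheme (illustrative sketch).

    s_out:      list of output bits of the original circuit S (all outputs O)
    sp_a, sp_b: dicts {output index: bit} from the two S_P copies (outputs O_p)
    z:          output of the characteristic function Z_P (1 = protected pattern)
    protected:  indices of the outputs in the selected cones (O_p)
    """
    result = list(s_out)
    if z == 1:
        for i in protected:
            result[i] = majority(s_out[i], sp_a[i], sp_b[i])
    return result

# A fault flips output 0 of S; the correct outputs are [0, 0, 1]
faulty_s = [1, 0, 1]
copies = {0: 0, 1: 0}
print(psft_outputs(faulty_s, copies, copies, 1, [0, 1]))  # [0, 0, 1]: masked
print(psft_outputs(faulty_s, copies, copies, 0, [0, 1]))  # [1, 0, 1]: not protected
```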

3.2 Proposed PSFT Design Flow

Figure 4 presents the flow diagram of the proposed PSFT design technique. The number of patterns to protect (\(N\)) and the percentage of cones to protect (\(C_p\)) are the parameters of the proposed technique, which consists of two processes. First, the logic cone selection determines which logic cones of the circuit to protect given the \(C_p\) parameter, and produces the selected cones list \(S_c\). The second process is the pattern ranking and selection, which consists of two different sub-processes depending on the workload type (uncorrelated or application-specific). For an uncorrelated workload, a timing-independent ranking is performed on a large number of patterns, and the \(N\) patterns that exhibit the highest output deviation metric are selected. For an application-specific workload, a timing-dependent ranking of consecutive pattern pairs is deployed to select the \(N/2\) consecutive pairs that maximize the output deviation metric. Finally, the list of protected patterns is ready to be synthesized.

3.3 Process 1 : Logic Cone Selection

The \(C_p\) (cone percentage) parameter defines the percentage of cones to be protected. This parameter ensures that the largest cones, in which errors are most likely to occur, are prioritized for protection, similarly to the cone selection technique presented in [23]. Initially, the cones are weighted according to their exclusive size \(|C_{es}|\), which is the number of cells included in a cone that are not contained in any previously selected cone. The process begins by setting the percentage of selected cones \(P_s\) to 1/(# cones). The cone with the highest exclusive size \(|C_{es}|\) is picked for protection by including it in the selected cones list \(S_c\). The percentage of selected cones \(P_s\) is increased by 1/(# cones), and the exclusive sizes of the remaining cones are updated. The process repeats until \(P_s\) is greater than or equal to the target \(C_p\) value. Finally, the selected cones list \(S_c\) is passed on to the pattern ranking and selection process.
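The cone selection loop can be sketched in Python as follows. Cones are assumed to be given as sets of cell names, the helper name `select_cones` and the cell names are hypothetical, and the stopping rule is interpreted so that it reproduces the Fig. 5 example: cones are added one at a time until the fraction of selected cones reaches \(C_p\).

```python
def select_cones(cones, cp):
    """Greedy logic cone selection by exclusive size (sketch).

    cones: dict mapping cone name -> set of cell names in that cone
    cp:    target fraction C_p of cones to protect, in (0, 1]
    Returns the selected cones list S_c in selection order.
    """
    n = len(cones)
    remaining = dict(cones)
    selected, covered = [], set()
    while remaining:
        # Exclusive size |C_es|: cells not contained in any already selected cone
        name = max(remaining, key=lambda c: len(remaining[c] - covered))
        selected.append(name)
        covered |= remaining.pop(name)
        if len(selected) / n >= cp:  # P_s = (#selected cones) / (#cones)
            break
    return selected

# Cell sets mimicking the Fig. 5 cone sizes (cell names are hypothetical)
cones = {
    "Z1": {f"g{i}" for i in range(1, 9)},                 # 8 cells
    "Z2": {"g6", "g7", "g8", "g9", "g10", "g11", "g12"},  # 7 cells, 3 shared with Z1
    "Z3": {"g13"},                                        # 1 cell
}
for cp in (0.3, 0.6, 1.0):
    print(cp, select_cones(cones, cp))
# prints 0.3 ['Z1'], then 0.6 ['Z1', 'Z2'], then 1.0 ['Z1', 'Z2', 'Z3']
```

Recomputing the exclusive size against the union of already selected cells is what lowers \(Z_2\) from 7 to 4 cells after \(Z_1\) is picked.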

Example

The logic cone selection process for a small circuit is shown in Fig. 5. The three logic cones of the circuit have been marked and ranked according to their exclusive size \(|C_{es}|\). Cone \(Z_1\) has a \(|C_{es}|\) of 8 cells, cone \(Z_2\) of 4 cells and cone \(Z_3\) of 1 cell. Note that cone \(Z_2\) has an actual size of 7 cells but an exclusive size of 4, because it shares 3 cells with cone \(Z_1\), which are discarded when calculating its exclusive size. The \(C_p\) parameter allows a trade-off between area overhead and error coverage. For this example, when \(C_p = 0.3\), cone \(Z_1\) is selected; when \(C_p = 0.6\), both \(Z_1\) and \(Z_2\) are selected; and with \(C_p = 1.0\), all three cones are selected. This trade-off is explored in Section 4. ∙

3.4 Process 2 : Pattern Ranking and Pattern Selection

First, all cones in the selected cones list \(S_c\) are assigned an initial weight \(W(c) = 1\). The weights are used by the pattern ranking process to ensure that the selected patterns are not all biased towards a particular cone in \(S_c\). Next, depending on the workload type, either uncorrelated or application-specific, a different pattern ranking process is performed.

3.4.1 Timing-independent pattern ranking for uncorrelated workload

For an uncorrelated workload, where all input patterns are considered equally likely to occur without a known sequence, a ranking of a large number of patterns for protection of timing-independent faults is performed. We define Timing-Independent Deviation TID(p,c) as the output deviation-based metric for ranking input patterns where no sequence of patterns is known.

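Let \(TID(p,c)\) be the deviation of pattern \(p\) computed for cone \(c \in S_c\). Then the selected probabilistic pattern \(P_p\) is given by:

\[P_p = \mathop{\arg\max}_{p} \; \max_{c \in S_c} \big( W(c)\cdot TID(p,c) \big) \tag{11}\]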

The selected probabilistic pattern \(P_p\) in Eq. 11 is the one for which the product of \(TID(p,c)\) and the cone weight \(W(c)\) is maximal, after \(TID(p,c)\) has been computed for a large number of patterns. The weight of the selected cone \(c_s\), in which the maximum \(TID(p,c)\) was observed, is updated according to:

\[W(c_s) \leftarrow P \cdot W(c_s) \tag{12}\]

To avoid selecting patterns that always target the same largest cones, the weights of the cones already targeted by the selected probabilistic patterns are updated using Eq. 12 after each selection. The real-valued parameter \(P\) may be set to any value within [0, 1]; however, small values would significantly reduce the weight of the selected cone, preventing it from being selected in the next iteration even if the TID of a pattern for that cone is high. To prevent this scenario, we used \(P = 0.9\) in our experiments, which allows cones whose TID is consistently high to be selected in adjacent iterations. The whole ranking process is repeated until all \(N\) required probabilistic patterns have been selected.
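A Python sketch of the weighted ranking of Eqs. 11 and 12 is given below. It assumes the TID values have already been computed and are passed in as a dictionary keyed by (pattern, cone); the function name and the TID values in the usage lines are hypothetical, chosen only so that \(W(z_2)\cdot TID(p_b, z_2) = 0.63\) as in the example of Table 2. A selected pattern is removed from the candidate pool so that \(N\) distinct patterns are returned.

```python
def rank_tid(tid, weights, n_patterns, p=0.9):
    """Weighted timing-independent pattern ranking (sketch of Eqs. 11 and 12).

    tid:        dict mapping (pattern, cone) -> TID(pattern, cone)
    weights:    dict mapping cone -> current weight W(c)
    n_patterns: number N of patterns to select
    p:          weight decay parameter P in [0, 1]
    Returns the selected patterns and the updated weights.
    """
    weights = dict(weights)  # keep the caller's weights unchanged
    candidates = dict(tid)
    selected = []
    while candidates and len(selected) < n_patterns:
        # Eq. 11: the (pattern, cone) pair maximising W(c) * TID(p, c)
        pattern, cone = max(candidates, key=lambda pc: weights[pc[1]] * candidates[pc])
        selected.append(pattern)
        weights[cone] *= p  # Eq. 12: decay the weight of the targeted cone c_s
        # Drop the selected pattern for every cone
        candidates = {pc: v for pc, v in candidates.items() if pc[0] != pattern}
    return selected, weights

# Hypothetical TID values for two patterns and the cones of Fig. 5
tid = {("pa", "z1"): 0.5, ("pa", "z2"): 0.6, ("pa", "z3"): 0.4,
       ("pb", "z1"): 0.3, ("pb", "z2"): 0.7, ("pb", "z3"): 0.55}
weights = {"z1": 0.81, "z2": 0.9, "z3": 1.0}
print(rank_tid(tid, weights, 1))  # selects 'pb' through cone z2 (0.9 * 0.7 = 0.63)
```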

Example

Table 2 presents an example of pattern selection using the TID metric. Consider the logic cones of Fig. 5 with weights \(W(z_3) = 1\), \(W(z_2) = 0.9\) and \(W(z_1) = 0.81\), and two input patterns \(p_a\) and \(p_b\). Ranking based on the TID metric selects pattern \(p_b\), because \(W(z_2) \cdot TID(p_b, z_2)\) yields the highest value (0.63). After this selection, the weight of cone \(z_2\) is reduced according to Eq. 12.

3.4.2 Timing-dependent Pattern Ranking for Application-specific Workload

In the case of an application-specific workload, where a sequence of patterns of size \(r\) is expected, a pattern ranking targeting timing-dependent faults is deployed. The Timing-Dependent Deviation \(TDD([p_k, p_{k+1}], c)\) is the output deviation-based metric for ranking consecutive input pattern pairs in a known pattern sequence.

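Let \(TDD([p_k, p_{k+1}], c)\) be the deviation of the consecutive pattern pair \([p_k, p_{k+1}]\) computed for cone \(c \in S_c\), where \(k = 0, 1, \dots, r-2\) and \(r\) is the size of the application-specific workload \(p_0, \dots, p_{r-1}\). The selected probabilistic pattern pair \([P_p, P_{p+1}]\) is calculated as follows:

\[[P_p, P_{p+1}] = \mathop{\arg\max}_{[p_k, p_{k+1}]} \; \max_{c \in S_c} \big( W(c)\cdot TDD([p_k, p_{k+1}], c) \big) \tag{13}\]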

The consecutive pattern pair \([P_p, P_{p+1}]\) in Eq. 13 is the one for which the product of \(TDD([p_k, p_{k+1}], c)\) and the cone weight \(W(c)\) is maximal among all consecutive pattern pairs in the application-specific workload. As in the timing-independent ranking, the weight of the cone \(c_s\) in which the maximum \(TDD([p_k, p_{k+1}], c)\) was observed is reduced according to Eq. 12. This process is repeated until \(N/2\) consecutive pattern pairs have been selected.
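The pair ranking of Eq. 13 can be sketched in the same way. Here the TDD metric is assumed to be available as a callable (its computation is not shown), consecutive pairs are read directly from the workload sequence with duplicates collapsed, and all names and TDD values in the usage lines are hypothetical.

```python
def rank_tdd(workload, tdd, weights, n_patterns, p=0.9):
    """Weighted ranking of consecutive pattern pairs (sketch of Eq. 13).

    workload:   application-specific pattern sequence p_0, ..., p_{r-1}
    tdd:        callable tdd(pk, pk1, cone) -> TDD([p_k, p_{k+1}], c)
    weights:    dict mapping cone -> initial weight W(c)
    n_patterns: number N of protected patterns, i.e. N/2 pairs are selected
    p:          weight decay parameter P of Eq. 12
    """
    weights = dict(weights)
    # Distinct consecutive pairs [p_k, p_{k+1}], in order of first occurrence
    pairs = list(dict.fromkeys(zip(workload, workload[1:])))
    selected = []
    while pairs and len(selected) < n_patterns // 2:
        pair, cone = max(((pr, c) for pr in pairs for c in weights),
                         key=lambda pc: weights[pc[1]] * tdd(*pc[0], pc[1]))
        selected.append(pair)
        pairs.remove(pair)
        weights[cone] *= p  # Eq. 12 applied to the targeted cone c_s
    return selected

# Hypothetical TDD values for a five-pattern workload and two cones
table = {(("a", "b"), "z1"): 0.2, (("a", "b"), "z2"): 0.9,
         (("b", "c"), "z1"): 0.8, (("b", "c"), "z2"): 0.85,
         (("c", "a"), "z1"): 0.1, (("c", "a"), "z2"): 0.3}

def tdd(pk, pk1, cone):
    return table[((pk, pk1), cone)]

print(rank_tdd(["a", "b", "c", "a", "b"], tdd, {"z1": 1.0, "z2": 1.0}, 4))
# [('a', 'b'), ('b', 'c')]
```

After the first pair is taken through cone z2, its weight drops to 0.9, so the second pick moves to cone z1 even though (b, c) scores slightly higher on z2.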

4 Experimental Validation

This section evaluates the proposed selective fault tolerance design technique by applying it to a subset of combinational circuits from the LGSynth’91 and ISCAS’85 benchmark suites (Table 3). First, the simulation setup is detailed. Then, comparison results against randomly protecting the workload are presented, together with a discussion on the area cost of the proposed technique and a trade-off analysis for various numbers of patterns (\(N\)) and cone percentages (\(C_p\)). Finally, the computational effort of the proposed technique is compared with that of statistical fault injection.

4.1 Evaluation Setup

First, the most susceptible patterns and pattern pairs are synthesized using the ABC synthesis tool [1] into the partial TMR scheme (Fig. 3) used by the PSFT technique. Figure 6 depicts the simulation setup used to evaluate the error coverage (EC) achieved by the proposed technique against errors induced by permanent and transient faults. For the permanent fault evaluation, single stuck-at (SSA) faults and transition faults are injected and fault simulated using commercial tools in order to obtain the EC of errors induced by SSA faults (ibSSA EC) and by transition faults (ibTran EC). For the transient fault evaluation, bit-flips are injected at the inputs of the circuit to obtain the EC of errors induced by bit-flips (ibBF EC). The ibBF EC is computed by injecting such random upsets until 50K events are found in which a bit-flip on an input pattern propagates an error through the whole circuit and reaches the output of the unprotected circuit \(S\). The ibBF EC is the percentage of these upsets that are masked by the redundant circuits (\(S_P\)) of the PSFT design and therefore do not affect the protected circuit. Furthermore, the EC of errors induced by temporary erroneous output transitions (ibEOT EC) is calculated for an application-specific workload of size \(r\) = 20K. The ibEOT EC is the percentage of the output transitions occurring while executing this workload that are protected by the selected set of consecutive pattern pairs. The experiments were performed on a Linux x64 desktop machine with an Intel Core i7-3770 CPU and 16 GB of available RAM.

4.2 Simulation Results

Table 4 compares the ibSSA EC in circuit \(S\) of a random pattern set (\(S_{rp}\)) and of the ranked probabilistic pattern set (\(S_{pp}\)) for a large uncorrelated workload. As shown in Table 7, statistical fault injection (SFI) execution times are prohibitive for large workloads; therefore, random patterns were used for comparison, as they emulate an arbitrary selection of the workload with no susceptibility information. The values in the \(S_{rp}\) column are the average of 30 different random pattern selections. The \(S_{pp}\) column shows the ibSSA EC of the ranked probabilistic patterns. The Impr (%) column shows the improvement of \(S_{pp}\) over \(S_{rp}\), calculated as Impr% = \((S_{pp} - S_{rp})/S_{rp} \cdot 100\). Note that \(S_{pp}\) consistently exhibits a higher ibSSA EC than \(S_{rp}\). This improvement saturates as the number of protected patterns \(N\) increases, which is attributed to the increased probability that the random patterns \(S_{rp}\) contain highly susceptible patterns.

Figure 7 presents the resulting ibSSA EC and area cost of the PSFT design for circuit c880. The results for various numbers of protected patterns (\(N\)) are shown for a selected cone percentage \(C_p = 0.1\). The left axis corresponds to the ibSSA EC and the right axis to the area cost of the proposed PSFT design. The area cost of the proposed PSFT design is the area of the added blocks (the two \(S_P\) copies and \(Z_P\), Fig. 3) divided by the size of the original circuit: PSFT Area Cost = \((2 \cdot S_P + Z_P)/S\). For the scope of this paper, the cost of the voters is ignored. The average area cost obtained by synthesizing many \(S_{rp}\) sets is similar to that of synthesizing the \(S_{pp}\) set when protecting the same number of patterns. Power consumption can be considered proportional to the area cost. Similar to the results shown in Table 4, the ibSSA EC of the pp set is consistently higher than that of the rp set for all examined \(N\) values.
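The area cost definition reduces to a one-line computation. In the Python sketch below, block sizes are assumed to be in a common unit (e.g. cell count or synthesized area), the voters are ignored as in the text, and the function names and block sizes are hypothetical. Under the same definition, TMR adds two full copies of \(S\), giving 200%.

```python
def psft_area_cost(size_s, size_sp, size_zp):
    """PSFT area cost in % of S: (2 * S_P + Z_P) / S, voters ignored."""
    return 100.0 * (2 * size_sp + size_zp) / size_s

def tmr_area_cost(size_s):
    """TMR adds two full replicas of S: 2 * S / S = 200 %."""
    return 100.0 * (2 * size_s) / size_s

# Hypothetical block sizes: S = 500, each S_P = 40, Z_P = 20 (same unit)
print(psft_area_cost(500, 40, 20))  # 20.0
print(tmr_area_cost(500))           # 200.0
```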

The ibSSA EC of the PSFT design is calculated by adding the coverage of each block of the design. The EC in the original circuit \(S\) is the one obtained with the protected patterns (\(S_{pp}\)). The coverage of the \(S_P\) and \(Z_P\) blocks is 100%, as the protected patterns sensitize them fully. The ibSSA EC of the PSFT design is computed as:

where \(|S|\), \(|S_P|\) and \(|Z_P|\) are the sizes of the blocks depicted in Fig. 3 and \(S_{pp}\) is the ibSSA EC of the ranked probabilistic patterns.

Table 5 shows the results obtained for the transition fault evaluation, comparing the ibTran EC of the random patterns \(S_{rp}\) and of the ranked probabilistic patterns \(S_{pp}\). The results are shown in the same format as Table 4. Note that \(S_{pp}\) also exhibits a higher ibTran EC than \(S_{rp}\), even though transition faults are not specifically targeted by the pattern ranking based on the output deviation metric.

The resulting ibTran EC and area cost of the PSFT design for circuit c880 are presented in Fig. 8. As in Fig. 7, these results were obtained for a selected cone percentage \(C_p = 0.1\), thus protecting only the 10% largest cones in the circuit. In both Figs. 7 and 8, the EC provided by \(S_{pp}\) is consistently higher than that provided by \(S_{rp}\), even though neither stuck-at faults nor transition faults were targeted when selecting the protected patterns.

Table 6 shows the ibBF EC and ibEOT EC improvements of \(S_{pp}\) over \(S_{rp}\), the ibSSA EC, the ibTran EC, the area cost and the improvement over the TMR area when only the top 10% largest logic cones are selected (\(C_p = 0.1\)) for 8 and 32 protected patterns. The second column shows the ibBF EC improvement obtained by the input bit-flip simulation applied to an uncorrelated workload, while the third column shows the ibEOT EC improvement of the ranked consecutive pattern pairs of an application-specific workload of size \(r\) = 20K. The ibSSA and ibTran EC of the \(S_{pp}\) and \(S_{rp}\) sets and the improvement of \(S_{pp}\) over \(S_{rp}\) are presented in the next columns, followed by the total ibSSA and ibTran EC of the whole PSFT design, as calculated by Eq. 14. The area costs of the \(S_P\) and \(Z_P\) blocks (Fig. 3), as well as the overall area cost of the proposed PSFT design, are presented in the next three columns, respectively. The improvement in area cost over TMR is presented in the last column and is calculated as TMR impr = 200 - area cost of PSFT. Error coverage on the LGSynth’91 benchmarks is lower than on the ISCAS’85 benchmarks. This is due to the nature of the circuits: the ISCAS’85 circuits were created as a basis for comparing results of test generation, while the combinational LGSynth’91 benchmarks are mainly used in the logic synthesis and optimization field.

When 32 patterns are protected, Table 6 shows average improvements of S_pp over S_rp of 89.0% in ibBF EC and 151.8% in ibEOT EC, an average ibSSA EC of 70.5% with an average improvement of 53.1%, and an average ibTran EC of 56.2% with an average improvement of 53.5%. This results in an overall average improvement of 86.9%. We observe an area cost in the range of 18.0–54.2% for all circuits, which corresponds to a 145.8–182.0% reduction compared to TMR. Note that for circuit c880, protecting only 32 susceptible patterns selected with the output deviation-based metric provides an ibSSA EC of 88.5% and an ibTran EC of 78.2% with an area cost of only 20.2%. For circuit c880, the average ibBF EC is 4.47% for S_rp and 7.49% for S_pp, an improvement of 67.6%. The logic cones selected with C_p = 0.1 have an input space of 2^10 (10 inputs); therefore, with just 32 out of 2^10 patterns (32/2^10 = 3.13%), the proposed technique covers 7.49% of the bit-flips at the inputs. Circuit ex5 exhibits a larger ibBF EC improvement than the other benchmarks due to its small input space (2^8), which allows for a simpler identification of the susceptible patterns in a workload.
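
The summary figures above can be re-derived with a few lines of arithmetic. In this sketch we assume that the overall average improvement is the arithmetic mean of the four average improvements (which gives 86.85%, i.e. 86.9% after rounding) and that an "improvement" is the relative increase of the S_pp EC over the S_rp EC; both are our reading of the text rather than definitions stated in it, and the helper name is hypothetical.

```python
from statistics import mean

# Average EC improvements of S_pp over S_rp for 32 protected patterns (Table 6, %).
improvements = {"ibBF": 89.0, "ibEOT": 151.8, "ibSSA": 53.1, "ibTran": 53.5}
overall = mean(improvements.values())
print(f"overall average improvement: {overall:.2f}%")  # 86.85


def relative_improvement(ec_new: float, ec_base: float) -> float:
    """Relative EC improvement of ec_new over ec_base, in percent."""
    return (ec_new / ec_base - 1.0) * 100.0


# c880: ibBF EC of 4.47% for S_rp versus 7.49% for S_pp.
print(f"c880 ibBF improvement: {relative_improvement(7.49, 4.47):.1f}%")  # 67.6%
```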

When a specific error coverage constraint is set, the ranked probabilistic pattern set S_pp is consistently smaller than the S_rp set. For instance, when an ibSSA EC of 80% is required, the S_pp set is 12% to 63% smaller than the S_rp set. Similarly, for an ibTran EC of 70%, the S_pp set is 13% to 61% smaller than the S_rp set. Since equal numbers of ranked and random patterns incur a similar area cost, these smaller S_pp sets achieve an average area reduction of 39.7% compared with the S_rp set size required to obtain the same error coverage.
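
Comparing set sizes under an EC constraint amounts to finding, for each pattern set, the smallest N whose coverage meets the target. The sketch below assumes that EC has already been measured for a few increasing set sizes; the EC curves in it are invented for illustration and are not results from Tables 4 to 6, and the function names are hypothetical.

```python
from typing import Optional, Sequence


def smallest_set_size(sizes: Sequence[int], ec: Sequence[float],
                      target: float) -> Optional[int]:
    """Smallest protected-set size N whose error coverage (%) meets target.

    sizes must be increasing; ec[i] is the EC measured with sizes[i] patterns.
    """
    for n, coverage in zip(sizes, ec):
        if coverage >= target:
            return n
    return None  # target not reachable with the evaluated sizes


def size_reduction(n_pp: int, n_rp: int) -> float:
    """Percentage by which the S_pp set is smaller than the S_rp set."""
    return (1.0 - n_pp / n_rp) * 100.0


# Hypothetical EC curves (NOT values from the paper).
sizes = [8, 16, 32, 64, 128]
ec_rp = [41.0, 55.0, 68.0, 80.5, 86.0]
ec_pp = [52.0, 67.0, 81.0, 88.0, 92.0]

n_rp = smallest_set_size(sizes, ec_rp, 80.0)
n_pp = smallest_set_size(sizes, ec_pp, 80.0)
print(f"S_rp needs {n_rp}, S_pp needs {n_pp} ({size_reduction(n_pp, n_rp):.0f}% smaller)")
```

With these made-up curves, an 80% target needs 64 random but only 32 ranked patterns, a 50% smaller set.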

The selective fault tolerance techniques presented in [2] and [4] rely on an arbitrary selection of the workload to protect, without examining its susceptibility to either faults or errors. They protect a subset of all possible input patterns of a combinational circuit. These works present results for a group of small circuits of the LGSynth'91 benchmark suite, which includes the single-cone circuits t481 and Z9sym. For circuit t481, the results in Tables 4, 5 and 6 show that the proposed PSFT technique (S_pp) improves error coverage over an unranked selection of patterns (S_rp) for both timing-independent and timing-dependent errors. For circuit Z9sym, Table 4 shows that the S_pp set consistently exhibits a higher error coverage than the S_rp set. In particular, with N = 128, which covers 25% of all possible inputs, the error coverage improvement of S_pp over S_rp is 6.38%, and with N = 256, which covers 50% of the input patterns, it is 5.27%. The resulting area cost of the PSFT technique for circuit Z9sym with N = 128 and N = 256 is 99% (TMR_impr = 101%) and 139% (TMR_impr = 61%), respectively, which is similar to the area cost reported in [2, 4]; the area cost of the two techniques is similar in all cases.
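
The share of the input space that a protected set represents depends only on N and the number of inputs n, through N/2^n. The helper below (a hypothetical name) reproduces the fractions quoted above for the 10-input c880 cones and for Z9sym, assuming Z9sym has 9 inputs, which is consistent with N = 128 covering 25% of its inputs.

```python
def input_space_fraction(n_patterns: int, n_inputs: int) -> float:
    """Percentage of the 2**n_inputs possible input patterns that are protected."""
    space = 2 ** n_inputs
    if not 0 <= n_patterns <= space:
        raise ValueError("cannot protect more patterns than the input space holds")
    return 100.0 * n_patterns / space


print(input_space_fraction(32, 10))   # c880 cones with C_p = 0.1: 3.125
print(input_space_fraction(128, 9))   # Z9sym: 25.0
print(input_space_fraction(256, 9))   # Z9sym: 50.0
```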

Figure 9 presents the trade-off between the area cost of the PSFT design and the cone percentage C_p for circuit pdc. The area cost for N = 32, 64, 128 and 256 protected patterns is shown for all C_p values. With C_p = 1, all the logic cones are selected, whereas with C_p = 0.1 only the largest 10% of the logic cones in the circuit are chosen. When C_p = 1, the PSFT design is synthesized for all cones, which yields a high area cost. This is due to the intrinsic logic sharing present in most circuits, which the synthesis tool is unable to simplify. As expected, the area cost decreases as C_p is reduced. Note that the area cost of the PSFT design for C_p = 1 ranges from 176% for N = 256 to 72% for N = 32, and decreases to 57% and 18%, respectively, with C_p = 0.1.
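
The cone selection controlled by C_p can be sketched as keeping the largest fraction C_p of the output logic cones. In this Python sketch the cone sizes are hypothetical, the size metric (for example gate count or number of cone inputs) is left to the caller, and rounding C_p times the number of cones to the nearest integer, with at least one cone kept, is our assumption; the paper's exact selection rule may differ.

```python
from typing import Dict, List


def select_largest_cones(cone_sizes: Dict[str, int], c_p: float) -> List[str]:
    """Return the outputs whose logic cones fall in the largest C_p fraction.

    cone_sizes maps each primary output to the size of its fan-in cone.
    The number of kept cones is C_p * (number of cones), rounded half up,
    with at least one cone kept (an assumption, not the paper's rule).
    """
    if not 0.0 < c_p <= 1.0:
        raise ValueError("c_p must be in (0, 1]")
    k = max(1, int(c_p * len(cone_sizes) + 0.5))
    ranked = sorted(cone_sizes, key=lambda out: cone_sizes[out], reverse=True)
    return ranked[:k]


# Hypothetical cone sizes (e.g. gate counts) for a 10-output circuit.
cones = {"o1": 120, "o2": 15, "o3": 340, "o4": 60, "o5": 8,
         "o6": 95, "o7": 22, "o8": 210, "o9": 41, "o10": 5}
print(select_largest_cones(cones, 0.1))  # ['o3']
print(select_largest_cones(cones, 0.3))  # ['o3', 'o8', 'o1']
```

Because Python's sort is stable, cones of equal size keep their dictionary insertion order, so the selection is deterministic.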

4.3 Computational Effort Comparison

We compare the CPU time required by the proposed technique (the pattern ranking presented in Section 3.4) to compute the most susceptible patterns in an application-specific workload of 2000 patterns (Section 2.3) with that required by a statistical fault injection (SFI) simulation. The SFI simulation consists of five different stuck-at fault injection campaigns on 50% of all possible fault sites while executing the same uncorrelated workload. The circuit outputs are compared against the error-free case on each cycle to determine whether an error has occurred. The N patterns that exhibit the highest number of errors across all fault injection campaigns are deemed the most susceptible by the SFI simulation.
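
For reference, the SFI baseline can be sketched as follows. This is a simplified illustration, not the authors' simulation setup: it uses the small ISCAS'85 circuit c17 with an exhaustive 32-pattern workload, injects one single stuck-at fault at a time on net stems (fanout branches are not modeled), and assumes that each campaign samples a random 50% of the fault sites with a random stuck-at value per site. Each pattern is scored by the number of injected faults that produce an output mismatch, and the N highest-scoring patterns are returned. All function names are hypothetical.

```python
import random
from typing import Dict, List, Optional, Sequence, Tuple

Gate = Tuple[str, str, Tuple[str, ...]]  # (output net, gate type, input nets)
Fault = Tuple[str, int]                   # (net, stuck-at value)

OPS = {
    "AND": lambda v: int(all(v)),
    "OR": lambda v: int(any(v)),
    "NAND": lambda v: int(not all(v)),
    "NOR": lambda v: int(not any(v)),
    "NOT": lambda v: 1 - v[0],
}


def simulate(inputs: Sequence[str], gates: Sequence[Gate], outputs: Sequence[str],
             pattern: Sequence[int], fault: Optional[Fault] = None) -> Tuple[int, ...]:
    """Evaluate a topologically ordered netlist, optionally with one stuck-at fault."""
    val: Dict[str, int] = dict(zip(inputs, pattern))
    if fault is not None and fault[0] in val:
        val[fault[0]] = fault[1]          # stuck-at on a primary input
    for out, kind, ins in gates:
        val[out] = OPS[kind]([val[i] for i in ins])
        if fault is not None and fault[0] == out:
            val[out] = fault[1]           # stuck-at on a gate output
    return tuple(val[o] for o in outputs)


def sfi_rank(inputs, gates, outputs, workload, n, campaigns=5,
             site_fraction=0.5, seed=0):
    """Rank patterns by the number of injected faults that cause an output error."""
    rng = random.Random(seed)
    sites = list(inputs) + [g[0] for g in gates]
    golden = [simulate(inputs, gates, outputs, p) for p in workload]
    errors = [0] * len(workload)
    for _ in range(campaigns):
        chosen = rng.sample(sites, max(1, int(site_fraction * len(sites))))
        faults = [(s, rng.randint(0, 1)) for s in chosen]
        for k, p in enumerate(workload):
            errors[k] += sum(simulate(inputs, gates, outputs, p, f) != golden[k]
                             for f in faults)
    order = sorted(range(len(workload)), key=lambda k: errors[k], reverse=True)
    return order[:n], errors


# ISCAS'85 c17: six NAND gates, five inputs, two outputs.
C17_INPUTS = ["N1", "N2", "N3", "N6", "N7"]
C17_GATES: List[Gate] = [
    ("N10", "NAND", ("N1", "N3")),
    ("N11", "NAND", ("N3", "N6")),
    ("N16", "NAND", ("N2", "N11")),
    ("N19", "NAND", ("N11", "N7")),
    ("N22", "NAND", ("N10", "N16")),
    ("N23", "NAND", ("N16", "N19")),
]
C17_OUTPUTS = ["N22", "N23"]
workload = [[(k >> b) & 1 for b in range(5)] for k in range(32)]  # illustrative only
top, errors = sfi_rank(C17_INPUTS, C17_GATES, C17_OUTPUTS, workload, n=4)
print("most susceptible patterns:", [workload[k] for k in top])
```

Note that the cost grows with the number of patterns times the number of injected faults per campaign, since every fault requires a full re-simulation of every pattern.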

Table 7 shows the CPU runtime of both the SFI simulation and the pattern ranking of the proposed PSFT technique. The second column shows the number of selected patterns N. The third column presents the CPU runtime in seconds required by the SFI to run the five fault injection campaigns. Note that this execution time is the same for all N, as the simulation must run all 2000 patterns of the workload before selecting the N patterns that propagate the most errors. The fourth column shows the time required to find the N most susceptible patterns using the proposed technique, and the fifth column presents the speed-up achieved by the proposed technique over the SFI simulation. The time required by the proposed technique increases with the number of patterns, but even for 1024 patterns the proposed technique is several orders of magnitude faster at selecting the most susceptible patterns in a workload. Additionally, the patterns selected by the proposed technique are not biased towards any particular fault model, whereas those selected by the SFI simulation are biased towards the stuck-at fault model.
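
One plausible reason for the speed-up is that fault injection re-simulates every pattern once per injected fault, whereas a probabilistic ranking needs a single pass per pattern. The sketch below illustrates output deviations in the spirit of [26]: every gate is assigned a confidence level R (the probability that it produces its correct logic value), signal probabilities are propagated assuming independent gate inputs as in [18], and each pattern is scored by the sum of its output deviations. The uniform confidence of 0.95, the scoring by sum, and all function names are our assumptions; Section 3.4 defines the actual ranking, and the independence assumption is only approximate under reconvergent fanout (which c17 has).

```python
from typing import Dict, List, Sequence, Tuple

Gate = Tuple[str, str, Tuple[str, ...]]  # (output net, gate type, input nets)


def logic_p1(kind: str, p1: Sequence[float]) -> float:
    """P(output = 1) of a fault-free gate whose inputs are independent."""
    if kind == "NOT":
        return 1.0 - p1[0]
    all_one, all_zero = 1.0, 1.0
    for p in p1:
        all_one *= p
        all_zero *= 1.0 - p
    table = {"AND": all_one, "NAND": 1.0 - all_one,
             "OR": 1.0 - all_zero, "NOR": all_zero}
    return table[kind]


def output_deviations(inputs: Sequence[str], gates: Sequence[Gate],
                      outputs: Sequence[str], pattern: Sequence[int],
                      confidence: Dict[str, float]) -> List[float]:
    """Probability that each primary output differs from its fault-free value."""
    p1: Dict[str, float] = {i: float(b) for i, b in zip(inputs, pattern)}
    ideal: Dict[str, float] = dict(p1)  # fault-free 0/1 values
    for out, kind, ins in gates:
        q = logic_p1(kind, [p1[i] for i in ins])
        r = confidence[out]
        # With probability 1 - r the gate outputs the complement of its logic value.
        p1[out] = r * q + (1.0 - r) * (1.0 - q)
        ideal[out] = logic_p1(kind, [ideal[i] for i in ins])
    return [1.0 - p1[o] if ideal[o] == 1.0 else p1[o] for o in outputs]


def rank_by_deviation(inputs, gates, outputs, workload, confidence, n):
    """Indices of the n patterns with the largest summed output deviation."""
    scores = [sum(output_deviations(inputs, gates, outputs, p, confidence))
              for p in workload]
    order = sorted(range(len(workload)), key=lambda k: scores[k], reverse=True)
    return order[:n], scores


# ISCAS'85 c17 with a hypothetical uniform confidence level of 0.95 per gate.
C17_INPUTS = ["N1", "N2", "N3", "N6", "N7"]
C17_GATES: List[Gate] = [
    ("N10", "NAND", ("N1", "N3")),
    ("N11", "NAND", ("N3", "N6")),
    ("N16", "NAND", ("N2", "N11")),
    ("N19", "NAND", ("N11", "N7")),
    ("N22", "NAND", ("N10", "N16")),
    ("N23", "NAND", ("N16", "N19")),
]
C17_OUTPUTS = ["N22", "N23"]
R = {g[0]: 0.95 for g in C17_GATES}
workload = [[(k >> b) & 1 for b in range(5)] for k in range(32)]
top, scores = rank_by_deviation(C17_INPUTS, C17_GATES, C17_OUTPUTS, workload, R, 4)
print("most susceptible patterns:", [workload[k] for k in top])
```

Each pattern costs one pass over the gates, independent of how many fault sites exist, and no fault model is assumed, which matches the paper's point that the ranking is not biased towards stuck-at faults.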

5 Conclusion

We showed that not every workload is equally susceptible to errors induced by permanent or transient faults, which results in some input patterns being less protected by the inherent logic masking of the circuit (Table 1). We proposed to rank this susceptibility to errors in order to protect the patterns with the highest likelihood of propagating errors to the outputs. By combining Selective Fault Tolerance (Fig. 1) with a probabilistic fault model based on the theory of output deviations (Fig. 2), we proposed a low power selective fault tolerance design technique (Figs. 3 and 4). The proposed technique protects the most susceptible workload of the most susceptible logic cones using a partial TMR scheme. We showed that the proposed technique is up to almost five orders of magnitude faster at finding the most susceptible workload than a fault-injection-based methodology. We evaluated the proposed technique on a set of benchmarks (Table 3), considering as surrogate error models the timing-independent errors induced by permanent stuck-at faults and transient bit-flips, and the timing-dependent errors induced by permanent transition faults and temporary erroneous output transitions. We also presented the trade-offs between the achieved tolerance against permanent (Tables 4 and 5) and transient (Table 6) errors and the area cost (Fig. 9). We conclude that protecting the most susceptible workload, identified through a probabilistic fault model that is unbiased towards any type of fault, improves the fault tolerance against any type of error. Therefore, using output deviations to determine the most susceptible workload of an application may help enhance circuit reliability beyond the scope of a partial TMR scheme.

References

[1] ABC: A system for sequential synthesis and verification. http://www.eecs.berkeley.edu/~alanmi/abc/

[2] Agnola L, Vladutiu M, Udrescu M, Prodan L (2012) Simplified selective fault tolerance technique for protection of selected inputs via triple modular redundancy systems. In: 2012 7th IEEE international symposium on applied computational intelligence and informatics (SACI)

[3] Augustin M, Goessel M, Kraemer R (2010) Reducing the area overhead of TMR-systems by protecting specific signals. In: 2010 IEEE 16th international on-line testing symposium, pp 268–273

[4] Augustin M, Goessel M, Kraemer R (2011) Implementation of selective fault tolerance with conventional synthesis tools. In: 2011 IEEE 14th international symposium on design and diagnostics of electronic circuits & systems (DDECS), pp 213–218

[5] Balatsouka S, Tenentes V, Kavousianos X, Chakrabarty K (2010) Defect aware X-filling for low-power scan testing. In: Design, automation & test in Europe conference & exhibition (DATE 2010)

[6] Bhunia S, Mukhopadhyay S, Roy K (2007) Process variations and process-tolerant design. In: 20th international conference on VLSI design held jointly with 6th international conference on embedded systems (VLSID'07), pp 699–704

[7] Cazeaux JM, Rossi D, Metra C (2004) New high speed CMOS self-checking voter. In: 10th IEEE international symposium on on-line testing 2004 (IOLTS), pp 58–63

[8] Fazeli M, Ahmadian S, Miremadi S, Asadi H, Tahoori M (2011) Soft error rate estimation of digital circuits in the presence of Multiple Event Transients (METs). In: 2011 design, automation & test in Europe, pp 1–6

[9] Ferguson FJ, Shen JP (1988) A CMOS fault extractor for inductive fault analysis. IEEE Trans Comput-Aided Des Integr Circ Syst 7(11):1181–1194

[10] Gutierrez M, Tenentes V, Kazmierski T (2016) Susceptible workload driven selective fault tolerance using a probabilistic fault model. In: 22nd IEEE international symposium on on-line testing and robust system design 2016 (IOLTS)

[11] Kavousianos X, Chakrabarty K, Kalligeros E, Tenentes V (2010) Defect coverage-driven window-based test compression. In: 2010 19th IEEE Asian test symposium, pp 141–146

[12] Kavousianos X, Tenentes V, Chakrabarty K, Kalligeros E (2011) Defect-oriented LFSR reseeding to target unmodeled defects using stuck-at test sets. IEEE Trans Very Large Scale Integr (VLSI) Syst 19(12):2330–2335

[13] Maniatakos M, Makris Y (2010) Workload-driven selective hardening of control state elements in modern microprocessors. In: 2010 28th VLSI test symposium (VTS), pp 159–164

[14] Maniatakos M, Michael M, Tirumurti C, Makris Y (2015) Revisiting vulnerability analysis in modern microprocessors. IEEE Trans Comput 64(9):2664–2674

[15] Mohanram K, Touba N (2003) Cost-effective approach for reducing soft error failure rate in logic circuits. In: Proceedings of the international test conference (ITC 2003)

[16] Omana M, Rossi D, Bosio N, Metra C (2013) Low cost NBTI degradation detection and masking approaches. IEEE Trans Comput 62(3):496–509

[17] Pagliarini S, Naviner L, Naviner JF (2012) Selective hardening methodology for combinational logic. In: 2012 13th Latin American test workshop (LATW), pp 1–6

[18] Parker KP, McCluskey EJ (1975) Probabilistic treatment of general combinational networks. IEEE Trans Comput C-24(6):668–670

[19] Semiconductor Industry Association (2013) International technology roadmap for semiconductors (ITRS) 2013. http://www.itrs2.net/2013-itrs.html

[20] Srinivasan J, Adve S, Bose P, Rivers J (2005) Lifetime reliability: toward an architectural solution. IEEE Micro 25(3):70–80

[21] Tenentes V, Kavousianos X (2011) Low power test-compression for high test-quality and low test-data volume. In: 2011 20th Asian test symposium (ATS), pp 46–53

[22] Tenentes V, Kavousianos X (2013) High-quality statistical test compression with narrow ATE interface. IEEE Trans Comput-Aided Des Integr Circ Syst 32(9):1369–1382

[23] Wali I, Virazel A, Deveautour B, Bosio A, Girard P, Sonza Reorda M (2017) A low-cost reliability cost trade-off methodology to selectively harden logic circuits. Journal of Electronic Testing: Theory and Applications (JETTA)

[24] Wang Z, Chakrabarty K (2006) An efficient test pattern selection method for improving defect coverage with reduced test data volume and test application time. In: 2006 15th Asian test symposium

[25] Wang Z, Chakrabarty K (2008) Test-quality/cost optimization using output-deviation-based reordering of test patterns. IEEE Trans Comput-Aided Des Integr Circ Syst 27(2):352–365

[26] Wang Z, Chakrabarty K, Goessel M (2006) Test set enrichment using a probabilistic fault model and the theory of output deviations. In: Design, automation & test in Europe conference, vol 1, pp 1–6

[27] Zoellin CG, Wunderlich HJ, Polian I, Becker B (2008) Selective hardening in early design steps. In: 2008 13th European test symposium, pp 185–190

Acknowledgments

This work has been supported by the Mexican CONACYT and by EPSRC Grant EP/K034448/1 (the PRiME Programme www.primeproject.org).

Additional information

Responsible Editor: M. Goessel

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Gutierrez, M.D., Tenentes, V., Rossi, D. et al. Susceptible Workload Evaluation and Protection using Selective Fault Tolerance. J Electron Test 33, 463–477 (2017). https://doi.org/10.1007/s10836-017-5668-7

DOI: https://doi.org/10.1007/s10836-017-5668-7