Abstract

We measured the pupil response to a light stimulus subject to a size illusion and found that stimuli perceived as larger evoke a stronger pupillary response. The size illusion depends on combining retinal signals with contextual 3D information; contextual processing is thought to vary across individuals, being weaker in individuals with stronger autistic traits. Consistent with this theory, autistic traits correlated negatively with the magnitude of pupil modulations in our sample of neurotypical adults; however, psychophysical measurements of the illusion did not correlate with autistic traits, or with the pupil modulations. This shows that pupillometry provides an accurate objective index of complex perceptual processes, particularly useful for quantifying interindividual differences, and potentially more informative than standard psychophysical measures.

Introduction

Although atypical perception is not a diagnostic criterion for Autism Spectrum Disorders (ASD), growing evidence shows that autistic individuals have perceptual styles that differ from those of neurotypicals. Perhaps the best-known difference is a preference for local details in children and adults with ASD, who often outperform controls in tasks requiring the discrimination of fine-grained visual features abstracted from their global context, such as the embedded figures task or visual search tasks (Chouinard et al. 2016; Jolliffe and Baron-Cohen 1997; Shah and Frith 1983). Several theories have been developed to account for perceptual idiosyncrasies in ASD. These include the “Weak Central Coherence Theory” (Happe and Frith 2006), according to which the preference for focusing on details brings about a failure to extract (or, in more recent formulations, a preference for disregarding) global “gestalt” cues (Chouinard et al. 2016; Happe and Frith 2006). The “Enhanced Perceptual Functioning theory” posits that the local preference results from overtraining of sensory function, which interferes with the higher-order operations necessary to capture the “gestalt” of sensory experience (Mottron et al. 2006). A more recent account links atypical autistic perception with Bayesian models of sensory integration. The key concept is that perception is a form of implicit inference, in which sensory information is used to test hypotheses about the state of the world around us, hypotheses that we implicitly and automatically make based on a priori knowledge (Gregory 1980; Helmholtz and Southall 1962). Pellicano and Burr (2012) proposed that perception in ASD is less influenced by prior experience, i.e. that it is more “data-driven”. A similar concept underlies several other recent proposals (Friston et al. 2013; Lawson et al. 2014; Rosenberg et al. 2015; Sinha et al. 2014; van Boxtel and Lu 2013; Van de Cruys et al. 2014).

All these models would predict that autistic perception should be less susceptible to illusions. Illusions typically occur when an element is integrated into its global context, which is informative and efficient in most real-world circumstances but can be misleading in the peculiar settings that prompt illusions. If global information is underweighted in autism, an element may be seen “as is”, irrespective of its context, and hence less illusorily (e.g. Happe 1996). Illusions can also result from a priori assumptions; if prior knowledge is underweighted in autism (as suggested by Pellicano and Burr 2012), perception may be generally less efficient but, paradoxically, more veridical in the limit cases that generate illusions.

In the face of this theoretical consensus, experimental approaches testing susceptibility to illusions in ASD have produced mixed results. Several studies compared behaviour in individuals with ASD and controls, and found evidence for reduced susceptibility to illusions in individuals with ASD (Bolte et al. 2007; Happe 1996; Mitchell et al. 2010). However, other studies have failed to detect significant differences in the strength of illusory effects between ASD and controls (Hoy et al. 2004; Manning et al. 2017; Milne and Scope 2008; Ropar and Mitchell 1999, 2001).

A variety of factors could account for the divergent findings. First, there are factors related to the selection criteria of participants, a common concern in clinical studies, particularly relevant for the highly heterogeneous class of ASDs. For example, different studies may have considered clinical samples with varying degrees of severity (Baron-Cohen and Wheelwright 2004; Belmonte et al. 2004; Happe and Frith 2006; Ring et al. 2008); comorbidities may have acted as confounding factors (Gillberg and Billstedt 2000), and the decision to match cases and controls based on chronological or mental age might also have mattered (Gori et al. 2016; Walter et al. 2009). Second, there are factors related to the psychophysical task. Any procedure for measuring illusions is sensitive to the instructions given to the participants and to the details of the procedure, which may well have differed in subtle ways across studies (Gori et al. 2016; Happe and Frith 2006). Moreover, compliance with the task is likely to be reduced in the ASD groups, given the high prevalence of cognitive disability, anxiety disorders and perseverative behaviours (Chouinard et al. 2013, 2016).

To mitigate these concerns, two strategies have recently been proposed. The first addresses the difficulty of studying clinical populations and proposes to shift attention towards typically developed individuals who share features, or traits, with the clinical cases. There is a vast literature supporting a dimensional concept of autistic traits, distributed along a continuum across the whole population, of which the clinical sample forms an extreme (Bailey et al. 1995; Baron-Cohen et al. 2001; Chouinard et al. 2013, 2016; Constantino and Todd 2003; Piven 2001; Ruzich et al. 2015; Skuse et al. 2009; Wheelwright et al. 2010). A validated tool for quantifying these autistic traits is the Autism-Spectrum Quotient (AQ) questionnaire, available in most languages for both adults (Baron-Cohen et al. 2001) and children (Auyeung et al. 2008). Using this tool, recent studies have reported a link between susceptibility to visual illusions and autistic traits in typical adults (Chouinard et al. 2013, 2016; Walter et al. 2009), suggesting that some, but not all, types of visual illusion (which involve different kinds of perceptual integration) may be affected by the level of autistic traits.

The second strategy addresses the difficulties inherent in psychophysical techniques, aiming to develop objective measures that complement the necessarily subjective psychophysical measures. Our group and others have proposed pupillometry for this purpose. The diameter of the pupil is mainly affected by light and sympathetic tone, but it also shows subtler variations that reflect attentional and perceptual events. For example, a white disk elicits a stronger pupillary constriction when it is interpreted as a picture of the sun vs. the moon (Binda et al. 2013b; Naber and Nakayama 2013). Brightness illusions are also accompanied by enhanced constriction (Laeng and Endestad 2012; Zavagno et al. 2017). Even simply shifting covert attention to locations or surfaces/features with higher luminance is sufficient to induce a relative constriction (Binda and Murray 2015b; Binda et al. 2013a, 2014; Mathot et al. 2016, 2013; Turi et al. 2018), suggesting that tiny pupil size changes can track the focus of attention and the content of perception (Binda and Gamlin 2017; Binda and Murray 2015a; Mathot and Van der Stigchel 2015).

Recently, both strategies have been combined, using pupillometry to index the preference for local elements vs. global configuration in association with autistic traits measured as AQ scores (Turi et al. 2018). This provided clear evidence that pupillometry reliably tracks inter-individual differences in perceptual styles quickly and objectively, without interfering with spontaneous perceptual strategies.

In the present study we applied a similar logic, using pupillometry to index susceptibility to illusions and relating it to autistic traits. Specifically, we used a version of the Ponzo size illusion (Ponzo 1910), in which the apparent size of an object changes illusorily with its apparent 3D depth. This illusion is a clear example of Emmert’s law (Boring 1940), according to which images of the same retinal size look larger or smaller depending on their apparent distance: nearer images appear smaller and more distant images appear larger, consistent with the physical object that would have cast that image. As the object (a small figurine) was brighter than the background, it was expected to evoke a pupillary constriction that scales with the actual size of the object. Combining pupillometry with psychophysics in a group of neurotypical adults, we asked: (1) whether pupil-constriction strength also scales with apparent object size when this is varied independently of actual size by the 3D context; (2) whether the objective measure of illusion strength provided by pupillometry is tightly correlated with subjective measures obtained by psychophysical testing; and (3) whether between-participant variance in pupillometric and/or psychophysical estimates is associated with variance in autistic traits, as estimated by AQ scores.
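Emmert’s law is often summarized as a size-distance relation. The block below is a sketch in our own notation, which does not appear in the source: θ is the angular (retinal) size of the figurine, D its perceived distance and S its perceived linear size. It shows why two figurines with identical θ are expected to look different in size when the corridor places them at different perceived distances.

```latex
\begin{aligned}
S &= 2D\tan\!\left(\tfrac{\theta}{2}\right) \approx \theta D
   && (\theta \text{ in radians; small-angle approximation})\\
\theta_{\mathrm{far}} = \theta_{\mathrm{near}},\; D_{\mathrm{far}} > D_{\mathrm{near}}
   \;&\Rightarrow\; \frac{S_{\mathrm{far}}}{S_{\mathrm{near}}} = \frac{D_{\mathrm{far}}}{D_{\mathrm{near}}} > 1
\end{aligned}
```

Because the tangent term is the same for both figurines, it cancels exactly in the ratio, so the predicted illusory enlargement depends only on the ratio of perceived distances.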

Based on previous studies showing that pupillary responses are modulated by contextual information, we hypothesize that the pupillary response evoked by a light stimulus should be modulated by its perceived size, which in turn depends on its 3D context (Ponzo Illusion). We expect that the contextual effect should vary across individuals, being reduced in individuals with stronger autistic traits (higher AQ scores).

Methods

Compliance with Ethical Standards

None of the authors have conflicts of interest to declare. The research reported here involved human participants, who gave their written informed consent to participate in this study. Experimental procedures were approved by the regional ethics committee [Comitato Etico Pediatrico Regionale, Azienda Ospedaliero-Universitaria Meyer, Firenze (FI), under the protocol “Fusione di Informazioni Multisensoriali” v4/04.10.2018] and were in accordance with the Declaration of Helsinki.

Participants

Fifty neurotypical adults (33 females; mean age ± standard error: 25.7 ± 4.0 years) were recruited for the study. All participants were university students and reported normal or corrected-to-normal vision and no known neurological or medical condition.

The Autism-Spectrum Quotient Questionnaire (AQ)

The AQ is a 50-item self-report questionnaire measuring the tendency towards autistic traits (Baron-Cohen et al. 2001). Participants completed an Italian version of the test (Ruta et al. 2012) online at the end of the experimental session, before leaving the lab. Responses are made on a 4-point scale: “strongly agree”, “slightly agree”, “slightly disagree” and “strongly disagree” (in Italian). Items were scored as described in the original paper: 1 when the participant’s response was characteristic of ASD (whether slightly or strongly), 0 otherwise. The score can vary between 0 and 50, with higher scores indicating a greater inclination towards autistic traits. All participants in our sample scored below 32, the cut-off above which a clinical assessment is recommended (Baron-Cohen et al. 2001). In our sample, AQ scores ranged between 2 and 31, with a median of 16.
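The binary scoring rule described above can be sketched as follows. This is an illustration, not the authors’ scoring script: the set of items for which agreement is the ASD-characteristic answer (`agree_keyed`) is a hypothetical input here and should be taken from the published key (Baron-Cohen et al. 2001); the English response labels are also an assumption.

```python
AGREE = {"strongly agree", "slightly agree"}
DISAGREE = {"slightly disagree", "strongly disagree"}
VALID = AGREE | DISAGREE


def score_aq(responses, agree_keyed):
    """Binary AQ scoring (sketch).

    responses:   dict mapping item number (1-50) to a response label.
    agree_keyed: HYPOTHETICAL input, the set of item numbers for which
                 agreement is the ASD-characteristic answer; the remaining
                 items are scored on disagreement.
    """
    total = 0
    for item, answer in responses.items():
        if answer not in VALID:
            raise ValueError(f"item {item}: unexpected response {answer!r}")
        endorsed = answer in AGREE
        # 1 point when the answer, slight or strong, falls on the
        # ASD-characteristic side of the scale; 0 otherwise.
        total += int(endorsed == (item in agree_keyed))
    return total
```

Collapsing the 4-point scale to agree/disagree before comparing with the key is what makes “slightly” and “strongly” count equally, as the scoring rule requires.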

Apparatus and Stimuli

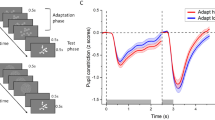

The experiment was performed in a quiet, dark room (no lighting; windows obscured with shutters). Participants sat in an experimental booth enclosed by thick black curtains that further shut out any ambient light. Thus, the only illumination was provided by the stimulus display, which was identical for all participants. This was a CRT monitor (40 × 30°, Barco Calibrator, resolution 1024 × 768 pixels, refresh rate 120 Hz) placed 57 cm from the participant’s head, which was stabilized by a chin rest. Viewing was binocular. Stimuli were created by modifying a well-known example of the Ponzo illusion. White figurines (luminance 55 cd/m²) depicting a monster were shown against a steady background that created a 3D context (a corridor) with equiluminant red/green elements (green: 1.7 cd/m²; red: 2.0 cd/m²). Stimuli were generated with the Psychophysics Toolbox routines (Brainard 1997; Pelli 1997) for MATLAB (MATLAB R2010a, The MathWorks) running on a Mac Pro 4.1. Five figurines were used, with heights varying between 6.2 and 9.3° in steps of 0.8° (width varied proportionally). On each trial, a single figurine was presented, positioned so that it appeared to stand at the near end or the far end of the corridor (see Fig. 1).
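The five figurine heights follow from the 7.8° standard and the ±10% and ±20% changes given in the Fig. 1 caption, which reproduces the stated 6.2–9.3° range and ~0.8° steps to within rounding. The sketch below computes them and converts visual angle to on-screen extent at the 57-cm viewing distance. The conversion assumes a flat screen with the extent centred on the line of sight; the function names are ours.

```python
import math

STANDARD_HEIGHT_DEG = 7.8                       # original figurine height (Fig. 1)
SIZE_CHANGES = (-0.20, -0.10, 0.0, 0.10, 0.20)  # relative size changes (Fig. 1)
VIEWING_DISTANCE_CM = 57.0                      # eye-to-screen distance


def figurine_heights_deg(standard=STANDARD_HEIGHT_DEG, changes=SIZE_CHANGES):
    """Heights of the five figurines in degrees of visual angle."""
    return [standard * (1.0 + c) for c in changes]


def deg_to_cm(theta_deg, distance_cm=VIEWING_DISTANCE_CM):
    """Extent on a flat screen subtending theta_deg, assuming the extent
    is centred on the line of sight."""
    return 2.0 * distance_cm * math.tan(math.radians(theta_deg) / 2.0)


if __name__ == "__main__":
    for h in figurine_heights_deg():
        print(f"{h:5.2f} deg -> {deg_to_cm(h):5.2f} cm on screen")
```

At 57 cm, 1° of visual angle corresponds to almost exactly 1 cm on the screen, which is why this viewing distance is a common choice.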

Fig. 1 Stimuli and procedure. a Ponzo illusion task: timeline of stimulus presentation. The stimulus was a white figurine displayed for 4 s within an illusory 3D corridor. Participants verbally estimated the size of the stimulus in millimeters. b The stimulus was presented in two experimental conditions, at the near and far positions of the illusory corridor, with 5 different physical sizes (±20%, ±10% or 0% relative to the height of the original figurine, 7.8° of visual angle)

Two-dimensional eye position and pupil diameter were monitored at 500 Hz with an EyeLink 1000 system (SR Research), with the infrared camera mounted below the screen, recording from the left eye. Pupil measures were calibrated with an artificial 4-mm pupil. Eye-position recordings were linearized by a standard 9-point calibration routine performed at the beginning of each session (two sessions per participant). Synchronization between eye recordings and visual presentations was ensured by the EyeLink Toolbox for MATLAB (Cornelissen et al. 2002).

Procedure

Participants started the experiment with a brief training session on the size-estimation task. They were shown the five figurines 20 times, always against a uniform black screen, and asked to voice their size estimate in millimeters. All participants understood the task and proceeded to the experimental session. This comprised 100 trials (5 figurine sizes × 2 figurine positions × 10 repetitions of each combination), administered in two blocks of 50 trials. During a block, the background corridor remained constantly visible. At the beginning of each trial, a fixation point (0.25° diameter) was presented at either the near or the far end of the corridor for 1 s, allowing participants to move their gaze to the location of the upcoming figurine. The fixation point was then extinguished and the figurine appeared at the same location, remaining on screen for 4 s. During this time, fixation was not enforced (no fixation point was visible); participants were encouraged to attend to the entire figurine in order to estimate its size as precisely as possible, and to voice their estimate when the figurine disappeared. The experimenter entered the response via the keyboard, which automatically started the following trial. Participants were asked to minimize blinking during the trial, postponing blinks to the inter-trial interval during which they voiced their responses.
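The trial structure (5 sizes × 2 positions × 10 repetitions, split into two blocks of 50) can be sketched as a schedule generator. The source does not say how trial order was randomized; the sketch assumes, as a hypothetical choice, that each block holds an equal share of every size × position combination, shuffled within the block. The names `make_blocks`, `SIZES` and `POSITIONS` are ours.

```python
import random
from itertools import product

SIZES = (-20, -10, 0, 10, 20)   # % change from the standard figurine
POSITIONS = ("near", "far")
REPS = 10                        # repetitions of each size x position combination


def make_blocks(seed=None, n_blocks=2):
    """HYPOTHETICAL schedule: every block contains REPS // n_blocks copies of
    each size x position combination, in random order within the block."""
    rng = random.Random(seed)
    per_block = REPS // n_blocks
    blocks = []
    for _ in range(n_blocks):
        trials = [combo for combo in product(SIZES, POSITIONS) for _ in range(per_block)]
        rng.shuffle(trials)
        blocks.append(trials)
    return blocks


if __name__ == "__main__":
    for b, trials in enumerate(make_blocks(seed=1), start=1):
        print(f"block {b}: {len(trials)} trials, first three: {trials[:3]}")
```

Note that the first trial of each block is later discarded from the pupil analysis, so in practice one might prepend a dummy trial to each block.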

Analysis of Pupillometry and Eye-Tracking Data

Eye-tracking data were preprocessed using custom MATLAB scripts that implemented the following steps:

1. Exclusion of the first trial in each block (the sudden appearance of the corridor against a completely dark background induced an additional pupil constriction that contaminated the pupillary light response to the figurine).
2. Identification and removal of gross artifacts: removal of time-points with unrealistically small or large pupil size (more than 1 mm from the median of the trial, or < 0.1 mm, corresponding to blinks or other signal losses).
3. Identification and removal of finer artifacts: identification of samples where pupil size varied at unrealistically high speed (> 2.5 mm per second, beyond the physiological range) and removal of the 20-ms epoch surrounding each such disturbance.
4. Down-sampling of the data to 10 Hz, by averaging the retained time-points in non-overlapping 100-ms windows. If no retained sample was present in a window, that window was set to “NaN” (MATLAB code for “not a number”).
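Steps 2–4 can be sketched in NumPy for a single trial recorded at 500 Hz (step 1 is simply dropping the first trial of each block). This is not the authors’ MATLAB code. Two interpretations are assumptions: the trial median is computed on the raw trace, and the “20-ms epoch surrounding” a fast change is taken as 10 samples centred on the offending sample-to-sample step. The function name is ours.

```python
import numpy as np


def preprocess_trial(pupil_mm, fs=500, max_dev_mm=1.0, min_mm=0.1,
                     max_speed_mm_s=2.5, half_window_ms=10, bin_ms=100):
    """Return a 10-Hz pupil trace with artifacts set to NaN (sketch)."""
    x = np.asarray(pupil_mm, dtype=float).copy()

    # Step 2: gross artifacts (blinks, signal loss): samples too far from
    # the trial median, or implausibly small.
    med = np.nanmedian(x)
    x[(np.abs(x - med) > max_dev_mm) | (x < min_mm)] = np.nan

    # Step 3: finer artifacts: sample-to-sample speed above threshold.
    speed = np.abs(np.diff(x)) * fs                # mm/s; NaN next to gaps
    fast = np.flatnonzero(np.nan_to_num(speed) > max_speed_mm_s)
    half = int(round(half_window_ms * fs / 1000))  # 5 samples at 500 Hz
    for i in fast:
        # speed[i] spans samples i and i+1; blank ~20 ms centred on that step
        x[max(0, i - half + 1): i + half + 1] = np.nan

    # Step 4: average retained samples in non-overlapping 100-ms bins;
    # bins without any retained sample stay NaN.
    n = int(round(bin_ms * fs / 1000))             # 50 samples per bin
    n_bins = x.size // n
    bins = x[: n_bins * n].reshape(n_bins, n)
    out = np.full(n_bins, np.nan)
    has_data = ~np.all(np.isnan(bins), axis=1)
    out[has_data] = np.nanmean(bins[has_data], axis=1)
    return out
```

Averaging only the retained samples within each bin, rather than interpolating across gaps, keeps missing data explicit as NaN, which the later exclusion criterion relies on.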

Horizontal and vertical gaze-position traces were converted to degrees of visual angle and expressed as deviations from the screen center. Pupil traces were transformed into changes from baseline by subtracting the average pupil diameter in the first 200 ms after stimulus onset (i.e. during the latency of the pupillary light response).

For statistical comparisons, we summarized the pupil-change and gaze-position traces by averaging over the stimulus presentation window (excluding the initial 200 ms used for baseline estimation). Owing to the preprocessing described above, traces of trials with blinks or artifacts contained several missing values; we excluded from our analyses all traces for which 40% or more of the 10-Hz samples were missing (mean ± s.e.m. across participants: 20.4 ± 3.6% of trials, for a total of 921 trials across all participants). We verified that varying the values of any of these preprocessing parameters does not critically change the results (specifically, we verified that setting the maximum pupil deviation to 2 mm does not introduce the saturation effect seen in Fig. 2).
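The baseline correction, exclusion criterion and per-trial summary can be sketched as follows, operating on a 10-Hz trace that starts at stimulus onset. Assumptions not stated in the source: the trace covers exactly the 4-s presentation (40 samples), the 40% missing-data criterion is computed over that whole window, and a trial with no valid baseline sample is also discarded. The function name is ours.

```python
import numpy as np


def summarize_trial(trace_10hz, baseline_bins=2, max_missing=0.4):
    """Mean pupil change from baseline over the stimulus window (sketch).

    trace_10hz: pupil diameter (mm) at 10 Hz from stimulus onset, NaN where
    no valid sample was retained. The first `baseline_bins` samples (200 ms)
    define the baseline and are excluded from the average.
    Returns NaN for excluded trials.
    """
    x = np.asarray(trace_10hz, dtype=float)
    if np.mean(np.isnan(x)) >= max_missing:
        return np.nan                      # too many missing samples
    base = x[:baseline_bins]
    if np.all(np.isnan(base)):
        return np.nan                      # no baseline available
    change = x - np.nanmean(base)          # pupil change from baseline (mm)
    return float(np.nanmean(change[baseline_bins:]))
```

A negative value indicates constriction relative to the baseline, so a stronger pupillary light response corresponds to a more negative summary.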

Fig. 2 Ponzo illusion measured with perceptual reports and pupillary responses. a Time courses of pupil change relative to baseline (first 200 ms from stimulus onset), plotted separately for the near (black) and far (red) conditions and for the five stimulus sizes (from −20% to +20% of the standard size); dashed lines indicate the window over which pupil changes are averaged to compute the pupil response (the whole trial duration except the initial 200 ms used to define the pupil baseline). Thick lines show averages across participants, thin lines ±1 s.e.m. b Mean magnitude estimates of figurine size. Separate lines are for the near (black) and far (red) conditions; estimates are plotted against stimulus size, with error bars giving standard errors across participants. c Mean pupil responses (pupil change averaged in the window marked by the dashed vertical lines in a, i.e. the whole stimulus duration except the initial 200 ms used to define the pupil baseline). Same format as in b

Statistical Analysis

We used a linear mixed-model approach to analyze data from individual trials. We modelled fixed effects of figurine location (nominal variable with two levels: near/far) and figurine size (nominal variable with five levels), and a random effect allowing the intercept of the linear model to vary across participants. In addition, we separately modelled the interaction between figurine location (near/far) and AQ score (a numeric variable taking the values observed in our sample), again letting the intercept of the model vary across participants. Thus defined, the model allows different participants to have idiosyncratic response sizes (e.g. overall pupillary response amplitude), while fixing the relationship between AQ and the response difference between near and far figurine locations, which the model quantifies as the “AQ” × “near/far” interaction. The same models, with the same trial selection (determined by the validity of pupil measurements), were applied to behavioral performance (size estimates) and to eye-tracking measures (pupil diameter and gaze deviations).
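The logic of the “AQ” × “near/far” model can be illustrated with a simpler stand-in. The sketch below is not a linear mixed model and not the authors’ analysis. It removes each participant’s mean (participant fixed intercepts, in place of a random intercept) and then estimates the near/far effect and its interaction with AQ by least squares. In balanced data the two approaches give similar estimates of these within-participant effects, but this sketch yields no F-tests. A true random-intercept fit would need a mixed-model routine such as `mixedlm` in statsmodels. The function name is ours.

```python
import numpy as np


def within_participant_fit(y, far, aq, participant):
    """Estimate the far-vs-near effect and its interaction with AQ after
    removing each participant's mean (stand-in for a random intercept)."""
    y = np.asarray(y, dtype=float)
    far = np.asarray(far, dtype=float)          # 1 = far, 0 = near
    aq = np.asarray(aq, dtype=float)            # participant's AQ on each trial
    participant = np.asarray(participant)

    X = np.column_stack([far, far * aq])        # near/far and AQ x near/far
    yd, Xd = y.copy(), X.copy()
    for p in np.unique(participant):
        m = participant == p
        yd[m] -= yd[m].mean()                   # absorbs the participant intercept
        Xd[m] -= Xd[m].mean(axis=0)             # (and the AQ main effect)
    coef, *_ = np.linalg.lstsq(Xd, yd, rcond=None)
    return {"far": float(coef[0]), "far_x_aq": float(coef[1])}
```

Because AQ is constant within each participant, its main effect is absorbed by the demeaning; only its interaction with the within-participant near/far factor remains estimable. This is also why the source’s model leaves overall response amplitude free per participant while fixing the AQ-dependent near/far difference.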

We complemented this analysis with a repeated-measures approach (mainly for visualization purposes), computing average per-participant responses, analyzing these for figurine location and size, and correlating the results with the participants’ AQ scores using Pearson’s correlation coefficient. The significance of these statistics was evaluated using both p-values and log-transformed Bayes Factors (Wetzels and Wagenmakers 2012). The Bayes Factor is the ratio of the likelihoods of two models, H1/H0, where H1 assumes a correlation between the two variables and H0 assumes no correlation. By convention, a base-10 logarithm of the Bayes Factor (lgBF) > 0.5 is considered substantial evidence in favor of H1, and lgBF < −0.5 substantial evidence in favor of H0.
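The correlation statistics can be sketched as follows: Pearson’s r, and the log10 of the default (Jeffreys–Zellner–Siow) Bayes Factor for a correlation described by Wetzels and Wagenmakers (2012). The integral is evaluated numerically after the substitution u = 1/g, which gives a finite integrand. This is our own implementation, not the authors’ code, and the grid size is an arbitrary choice adequate for moderate |r|. For r = 0.04–0.05 and n = 50 it gives values close to the lgBF ≈ −0.93 reported in the Results.

```python
import math
import numpy as np


def pearson_r(x, y):
    """Pearson correlation coefficient."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()
    return float(xc @ yc / math.sqrt((xc @ xc) * (yc @ yc)))


def log10_bf_correlation(r, n, n_grid=200_001):
    """log10 BF10 for a correlation r from n pairs (JZS default prior),
    integrating over u = 1/g:
      BF10 = sqrt(n/2)/Gamma(1/2) * int_0^inf (1+u)^((n-2)/2)
             * (u + 1 - r^2)^(-(n-1)/2) * exp(-n u / 2) du
    """
    c = 1.0 - r * r
    u = np.linspace(0.0, 80.0 / n, n_grid)     # exp(-n u / 2) ~ e^-40 at the end
    log_f = ((n - 2) / 2 * np.log1p(u)
             - (n - 1) / 2 * np.log(u + c)
             - n * u / 2)
    m = log_f.max()                             # work in log space for stability
    f = np.exp(log_f - m)
    integral = np.sum((f[1:] + f[:-1]) / 2 * np.diff(u))   # trapezoid rule
    log_bf = (0.5 * math.log(n / 2) - 0.5 * math.log(math.pi)
              + m + math.log(integral))
    return log_bf / math.log(10)
```

Usage: `log10_bf_correlation(pearson_r(aq, illusion), len(aq))`, where `aq` and `illusion` are per-participant arrays (hypothetical names). Values below −0.5 or above 0.5 then map onto the convention described above.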

Data Availability Statement

The data reported in this manuscript may be found at the following link: https://doi.org/10.5281/zenodo.3940543.

Results

We tracked the pupillary response evoked by the presentation of a light stimulus (monster-like figurine) within an illusory 3D context: a corridor extending in depth. As expected from Emmert’s law, perceived size of the figurine depended on its apparent 3D location, with a relative size overestimation for the figurines at the far-end of the corridor (Fig. 2b). Importantly, pupillary light responses also varied with apparent 3D location (Fig. 2a, c): stronger pupillary responses were evoked by figurines at the far-end, which were matched in physical size but perceived as larger than figurines at the near-end.

We used a linear mixed model to evaluate the two effects statistically: we modelled the actual figurine size (five sizes) and its apparent 3D location (near/far) as fixed effects, and added a random intercept to account for inter-individual variability in average responses (e.g. larger size estimates or pupil responses across all conditions). As expected, both the physical size of the figurines and their apparent 3D location independently affected behavioral size estimates (significant main effect of size: F(4,3921) = 592.26, p < 0.00001; significant difference between figurines at the far/near end of the corridor: F(1,3921) = 115.37, p < 0.00001; no significant interaction between figurine size and location: F(4,3921) = 1.55, p = 0.18). Pupillary responses were also significantly affected by 3D location, but the effect depended on figurine size (significant interaction between figurine size and location: F(4,3921) = 2.80, p = 0.024), possibly due to a saturation effect.

Thus, behavioral and pupillary responses were similarly affected by the 3D context, with figurines perceived as larger evoking greater pupil constrictions. Figure 3a plots the average response to each individual figurine (each of the 5 physical sizes) at each apparent location (near and far), correlating pupillary responses with psychophysical reports. The variation in physical size is expected to introduce a negative correlation: larger figurines are both estimated as larger and induce stronger pupillary constrictions (i.e. more negative pupil changes). Indeed, the observed correlation is weakly negative. Importantly, this correlation was entirely abolished after the effect of the figurines’ physical size was partialled out. This implies that the illusory effects measured by perceptual reports and by pupillary responses are independent.
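Partialling out physical size can be sketched by regressing both measures on stimulus-size dummies (equivalently, subtracting the mean of each size level) and correlating the residuals. This is a generic partial-correlation sketch; the source does not specify the exact procedure, and the function names are ours. The simulated data in the usage note are hypothetical and only illustrate how a shared dependence on size can produce a strong raw correlation that vanishes after partialling.

```python
import numpy as np


def residualize_on_levels(values, levels):
    """Subtract each stimulus-size level's mean (regression on size dummies)."""
    values = np.asarray(values, dtype=float)
    levels = np.asarray(levels)
    res = values.copy()
    for lv in np.unique(levels):
        m = levels == lv
        res[m] -= values[m].mean()
    return res


def partial_corr(x, y, levels):
    """Correlation between x and y after removing the effect of `levels`."""
    rx = residualize_on_levels(x, levels)
    ry = residualize_on_levels(y, levels)
    return float(np.corrcoef(rx, ry)[0, 1])


if __name__ == "__main__":
    # Hypothetical data: both measures depend on size, with independent noise.
    rng = np.random.default_rng(0)
    size = np.repeat(np.arange(5), 100)
    estimate = size + rng.normal(0, 0.3, size.size)
    pupil = -size + rng.normal(0, 0.3, size.size)
    print("raw r:", np.corrcoef(estimate, pupil)[0, 1])
    print("partial r:", partial_corr(estimate, pupil, size))
```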

Fig. 3 Association between illusion magnitude and Autism-Spectrum Quotient scores. Correlation analyses: legends report the number of points entered into the correlation analysis, the Pearson correlation coefficient (rho) and its confidence interval, the associated p-value and lgBF, the base-10 logarithm of the Bayes Factor (see “Methods”). a Correlation between pupil responses and size estimates. Each participant is represented by up to 10 points, one for each stimulus size and condition (missing points correspond to invalid trials/failed pupil measurements). Thick lines show the best-fit linear regression across the data points of the corresponding color. After partialling out the effect of stimulus size, the correlation between pupil responses and size estimates becomes non-significant (text inset). b Psychophysical estimate of illusory size (pooled across stimulus sizes) plotted against AQ scores. Each participant is represented by 1 point, given by the mean response to figurines at the far end minus that to figurines at the near end of the corridor. The two measures were not correlated (see legend). The thick blue line shows the best-fit linear regression across the data points, and the black horizontal lines (thick and thin) show the illusion size (mean and 95% confidence intervals) in participants with lower and higher AQ scores, defined by a median split. c Pupillary response to illusory size (pooled across stimulus sizes) plotted against AQ scores. The correlation remains significant at p < 0.05 after removing the participant with the most extreme value (marked by the blue asterisk). The thick blue line shows the best-fit linear regression and the black horizontal lines show the median-split analyses: the pupil size modulation is not significantly different from 0 for individuals with higher AQ scores (> 16, the median of our sample); for individuals with lower AQ scores, it is significantly below 0 (implying enhanced pupil constriction in response to figurines at the far end of the corridor)

Given that the two measures are statistically independent, we tested whether either correlated with autistic traits (AQ). Specifically, we tested the hypothesis that individuals with higher autistic traits would be less susceptible to context, so that their responses to figurines should be less affected by apparent 3D location. The distribution of AQ scores was consistent with that expected from a sample of neurotypical individuals (range 2–31; median 16). We quantified the effect of 3D context as the difference between the average responses to figurines at the far vs. near end of the corridor (pooling across figurine sizes). For behavioral responses, this value was almost always positive (all but one individual were susceptible to the illusion) and was uncorrelated with AQ scores (r = 0.04, lgBF = −0.94). This provides substantial evidence against a correlation between the behavioral size illusion and autistic traits (Fig. 3b). No further trends emerged when analyzing each figurine size individually rather than pooling across sizes.
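The per-participant index of the 3D-context effect (mean far response minus mean near response, pooled across sizes) can be sketched from trial-level arrays. The source does not state whether pooling averages all valid trials or first averages within each size; this sketch assumes the former, and it treats NaN responses as excluded trials. The function name is ours.

```python
import numpy as np


def illusion_index(participant, is_far, response):
    """Per-participant far-minus-near difference of mean responses, pooling
    all valid trials across figurine sizes (NaN response = excluded trial)."""
    participant = np.asarray(participant)
    is_far = np.asarray(is_far, dtype=bool)
    response = np.asarray(response, dtype=float)
    ids = np.unique(participant)
    index = np.empty(ids.size)
    for k, p in enumerate(ids):
        valid = (participant == p) & ~np.isnan(response)
        index[k] = response[valid & is_far].mean() - response[valid & ~is_far].mean()
    return ids, index
```

For size estimates a positive index means the far figurine looked larger; for pupil changes a negative index means stronger constriction to the far figurine. The resulting per-participant values are what enter the correlation with AQ.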

However, pupillary responses did correlate significantly with AQ scores (r = 0.38, lgBF = 0.62): pupil responses were modulated by the 3D context (stronger for figurines at the far end of the corridor) more in participants with low than with high AQ scores (Fig. 3c). Given this correlation, we divided the participant sample into two groups with high and low AQ (relative to the median of 16) and analyzed each separately. Pupil modulations differed significantly from zero (in the direction of stronger constriction for figurines at the far end) only in the subsample with low AQ scores (95% confidence intervals do not include 0), and not in the subsample with high AQ scores (95% confidence intervals include 0). When analyzing each figurine size individually, we found the strongest correlation for the smallest stimulus size, which also gives the strongest effect (Fig. 2c).
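The median-split comparison can be sketched as follows: split participants at the median AQ, then compute each group’s mean illusion index with an approximate 95% confidence interval. Assumptions not stated in the source: participants exactly at the median are assigned to the low group (consistent with “higher AQ” meaning > 16 in the Fig. 3 caption), and the interval uses the normal critical value 1.96. A t quantile would give slightly wider intervals for groups of about 25 participants. The function name is ours.

```python
import numpy as np


def median_split_ci(aq, index, crit=1.96):
    """Mean and approximate 95% CI of the illusion index for participants at
    or below (low) versus above (high) the median AQ."""
    aq = np.asarray(aq, dtype=float)
    index = np.asarray(index, dtype=float)
    med = float(np.median(aq))
    groups = {"low": index[aq <= med], "high": index[aq > med]}
    out = {}
    for name, g in groups.items():
        mean = float(g.mean())
        sem = float(g.std(ddof=1) / np.sqrt(g.size))
        out[name] = (mean, mean - crit * sem, mean + crit * sem)
    return med, out
```

A group’s modulation is then called significantly different from zero when its interval excludes 0, mirroring the criterion used in the text.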

We complemented this correlational analysis with a linear mixed model of individual-trial data with two fixed effects, the apparent 3D location of the figurine (near/far) and AQ scores (see “Methods”). For behavioral responses, this analysis showed only a main effect of “near/far” location (F(1,3927) = 22, p < 0.00001; note that the degrees of freedom differ from the previous analysis because of the different number of levels in the modelled effects). There was no effect of AQ and no “AQ” × “near/far” interaction. Conversely, for pupillary responses we found a significant “AQ” × “near/far” interaction (F(1,3927) = 12, p = 0.00057), consistent with a larger pupil-response difference between the far and near conditions in individuals with weaker autistic traits.

The same analysis approach was applied to the other eye-tracking measurements. Baseline pupil diameter measured at stimulus onset (mean ± s.e.m. across participants: 4.26 ± 0.11 mm) was not affected by any of the experimental factors (all F < 2.3, all p > 0.05) and did not correlate with AQ (r = 0.05 [−0.23, 0.33], p = 0.712, lgBF = −0.93). Gaze position, on the other hand, faithfully reflected the different figurine locations. Figurines at the far end of the corridor were higher and further to the right, and so was gaze position (main effect of figurine location on horizontal gaze: F(1,3921) = 1320, p < 0.00001; on vertical gaze: F(1,3921) = 2658, p < 0.00001). In addition, because the figurines’ “feet” were always on the “ground” irrespective of size, their center of mass moved higher for larger figurines, and so did vertical gaze position (F(4,3921) = 74, p < 0.00001). It may be argued that these small gaze deviations (about 4° in any direction) could account for the pupil size differences. This is unlikely, given that the experimental factors affect pupil and gaze in qualitatively different ways: figurine size and location interact to affect the pupil, whereas they affect gaze independently. In addition, the effect of figurine location (far–near difference) on pupil responses did not correlate with its effect on horizontal gaze (r = −0.02 [−0.30, 0.26], p = 0.890, lgBF = −0.95) or on vertical gaze (r = 0.09 [−0.20, 0.36], p = 0.545, lgBF = −0.88): both correlation coefficients are associated with lgBF < −0.5, implying substantial evidence against an association between gaze and pupil modulations. We also examined the relationship between gaze position and AQ and found no reliable associations (far–near difference in horizontal gaze: r = 0.05 [−0.23, 0.32], p = 0.731, lgBF = −0.93; vertical gaze: r = 0.06 [−0.22, 0.34], p = 0.658, lgBF = −0.91), confirming that pupil size modulation is selectively correlated with autistic traits.

Finally, we explored the effect of gender. We compared the effect of 3D context in females (the majority of our sample, N = 33) and males (N = 17) and found no significant gender effect on either behavioural size estimation [t(48) = 0.18, p = 0.86, lgBF = −0.52] or pupil response [t(48) = 0.75, p = 0.46, lgBF = −0.43].

Discussion

Emmert’s law states that objects with the same retinal image will look larger if they appear to be located further away (Boring 1940). A clear example of this is the Ponzo illusion (Ponzo 1910). Our first objective was to show that Emmert’s law is reflected in the magnitude of pupillary light responses evoked by objects that change perceived size with perceived distance. We showed that bright stimuli cause significantly stronger pupillary constriction when they appear to be at the far end rather than the near end of an illusory corridor. The effect of the illusion is not merely to change pupil size, but to increase or reduce the pupillary response to the light stimuli in the same direction as an actual increase or reduction of their physical size would. This modulation cannot be explained by factors that are well known to affect pupil size, such as changes in focus and arousal. Optical focus was constant throughout the experiment, implying no expected “near response”, the pupillary constriction usually coupled with accommodation and convergence (Bharadwaj et al. 2011; Marg and Morgan 1949; Zhang et al. 1996). A residual illusory near response could in principle be generated by the apparent 3D context, predicting a steady pupil constriction in trials where the stimulus, and hence fixation and focus, was at the near end of the corridor. However, we see no such modulation of baseline pupil size; what we find is a stimulus-locked modulation of the pupillary light response (and a larger pupil constriction in trials where the stimulus was at the far end of the corridor). Finally, a variety of studies have demonstrated that arousal and cognitive/emotional load are accompanied by pupil dilation (Hess and Polt 1960; Kahneman and Beatty 1966), but none of these effects can explain pupil differences that emerge with identical settings and task requirements, simply by changing the apparent 3D context of the stimulus.


Our findings indicate that the pupillary light response is not a simple subcortical reflex. It is modulated by complex visual perceptual processes that take 3D context and size constancy into account. This is in line with much recent evidence showing that pupil diameter depends on signals other than retinal illumination, tracking attentional focus and perceptual content (Binda and Gamlin 2017; Binda and Murray 2015a; Mathot and Van der Stigchel 2015). These effects suggest a ‘top-down’ modulation of the subcortical system controlling the pupillary light response. Such modulation likely involves multiple neural substrates. These may include direct prefrontal input to the pupillomotor circuit. They may also include input from the visual cortex, itself modulated by prefrontal and parietal signals, which would project a cortical representation of visual stimuli to the pupillary circuit (Binda and Gamlin 2017; Ebitz and Moore 2017).

Neuroimaging work in humans (Chen et al. 2019; Fang et al. 2008; He et al. 2015; Murray et al. 2006; Schwarzkopf et al. 2011; Sperandio et al. 2012) and neurophysiological studies in non-human primates (Ni et al. 2014; Tanaka and Fujita 2015) have consistently shown that Emmert’s law affects representations in the visual cortex. There, the retinotopic projection of the stimulus is magnified or contracted depending on its apparent 3D location, and hence on its illusory size. It is possible that the enlarged representation of the bright figurine in occipital cortex is fed into the circuit controlling pupil size, so that a larger stimulus area generates a stronger pupil-constrictor signal. At the same time, the pupillomotor circuit holds a representation of the actual amount of light flux generated by the stimulus. This suggests that the final pupil constriction results from the integration of two sources: direct retinal projection and cortical feedback. These converge onto a one-dimensional output variable, pupil size. This combination might plausibly become progressively dominated by retinal signals as these grow stronger with a larger or brighter light stimulus. That would explain our finding of a weaker 3D context effect on pupillary responses to larger stimuli.
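The two-source account can be illustrated with a deliberately simple toy model, and the sketch below is hypothetical throughout. It assumes that the pupillomotor drive is the sum of two terms. The first is a retinal term proportional to physical stimulus area. The second is a cortical term proportional to perceived area, weighted by an assumed gain `w_cortex`. The drive is then passed through an assumed saturating Naka-Rushton-style function with parameters `c50` and `n`. None of these parameters come from the paper. The point is only that, under saturation, the relative effect of a fixed illusory size change (`illusion_gain`) shrinks as the physical stimulus grows.

```python
def constriction(drive, c50=1.0, n=1.0):
    """Hypothetical saturating transfer from total drive to constriction (0 to 1)."""
    return drive ** n / (drive ** n + c50 ** n)


def context_effect(area, illusion_gain=0.1, w_cortex=0.5):
    """Relative far-minus-near constriction difference for a given physical area.

    The retinal term depends only on physical area. The cortical term uses the
    perceived area, scaled up (far) or down (near) by illusion_gain.
    All parameter values are illustrative assumptions.
    """
    def total_drive(perceived_scale):
        return area + w_cortex * area * perceived_scale

    far = constriction(total_drive(1 + illusion_gain))
    near = constriction(total_drive(1 - illusion_gain))
    return (far - near) / ((far + near) / 2)


sizes = [0.25, 0.5, 1.0, 2.0, 4.0]
for a in sizes:
    print(f"area={a:4.2f}  relative context effect={context_effect(a):.4f}")
```

With `n = 1` the relative effect reduces to 0.2 / (4.495 · area + 3) for the default parameters. It therefore decreases monotonically with area, which is the qualitative pattern the paragraph describes.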

Having established the sensitivity of pupillary light responses to illusory size changes, we asked whether estimates of illusion strength obtained through pupillometry are consistent with estimates obtained through psychophysical magnitude estimation. The perceptual reports reliably tracked actual size and, like pupillometry, showed a systematic effect of 3D context, as in previous reports of the Ponzo illusion. However, inter-individual variability in pupillometric and psychophysical estimates of illusion susceptibility was statistically dissociable. The correlation between the two was not significant with frequentist statistics, and Bayesian statistics provided evidence for its absence.
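The dissociation analysis rests on correlating two per-participant indices of illusion strength across participants, and on evaluating that correlation with both frequentist and Bayesian statistics. A minimal sketch follows. It assumes that each index is a normalized far-minus-near difference, which is a hypothetical definition; the paper's exact index may differ. It uses the default Bayes factor for a Pearson correlation given by Wetzels and Wagenmakers (2012). The helper names and the integration grid are illustrative. The t statistic can be converted to a p-value with any t-distribution CDF (n − 2 degrees of freedom); that step is omitted here to keep the sketch dependency-free.

```python
import math


def illusion_index(far, near):
    """Hypothetical normalized context effect: (far - near) / mean(far, near)."""
    return (far - near) / ((far + near) / 2)


def pearson_r(x, y):
    """Sample Pearson correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (df = n - 2) for testing rho = 0; requires |r| < 1."""
    return r * math.sqrt((n - 2) / (1 - r * r))


def log10_bf10_correlation(r, n, lo=-10.0, hi=40.0, steps=5000):
    """log10 Bayes factor for rho != 0 versus rho = 0 (Wetzels & Wagenmakers 2012 form).

    BF10 = sqrt(n/2) / Gamma(1/2) * integral over g of
           (1+g)^((n-2)/2) * (1+(1-r^2) g)^(-(n-1)/2) * g^(-3/2) * exp(-n/(2g)).
    """
    def log_integrand(u):
        g = math.exp(u)  # substitution g = e^u, dg = g du
        return (0.5 * math.log(n / 2) - 0.5 * math.log(math.pi)
                + (n - 2) / 2 * math.log1p(g)
                - (n - 1) / 2 * math.log1p((1 - r * r) * g)
                - 1.5 * u - n / (2 * g) + u)  # "+ u" is the Jacobian log(g)

    # Composite Simpson's rule over u (steps must be even).
    h = (hi - lo) / steps
    total = sum((1 if i in (0, steps) else 4 if i % 2 else 2)
                * math.exp(log_integrand(lo + i * h)) for i in range(steps + 1))
    return math.log10(total * h / 3)
```

In use, one would compute one pupillometric and one psychophysical index per participant and pass both lists to `pearson_r`. A negative `log10_bf10_correlation` value then indicates evidence for the absence of a correlation. The same functions apply to correlations between either index and AQ scores.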

Given the independence between pupillometry and psychophysics, we asked whether inter-individual variability in illusion susceptibility, estimated by either measure, was associated with variability in autistic traits across our sample of neurotypical participants. Psychophysical estimates of illusion strength were uncorrelated with scores on the Autism-Spectrum Quotient (AQ) questionnaire. This agrees with Chouinard et al. (2016), who also found no relationship between AQ scores and susceptibility to size illusions. It is also broadly consistent with the numerous studies finding no differences in illusion susceptibility between individuals with ASD and controls (Hoy et al. 2004; Manning et al. 2017; Milne and Scope 2008; Ropar and Mitchell 1999, 2001). However, illusion susceptibility estimated indirectly through the modulation of pupillary responses was significantly associated with AQ scores. Pupillary modulations with 3D context were stronger in individuals with lower AQ scores and nearly absent in individuals with higher AQ scores, consistent with the concept of reduced illusion susceptibility in autism (Happe 1996).

There is growing interest in using objective indices, such as pupillometry, to quantify the peculiarities of autistic perception. Several studies have attempted to use the dynamics of the simple pupillary light response, evoked by an isolated light flash, to distinguish individuals with and without ASD (Daluwatte et al. 2013; Fan et al. 2009; Lynch et al. 2018; Nyström et al. 2015). Of the many parameters that can describe these dynamics, several have been found to differ, but with little consensus across studies (Lynch 2018). Other studies have used pupillometry to index cognitive or emotional load, reporting differences between ASD and controls (Anderson and Colombo 2009; Blaser et al. 2014; Nuske et al. 2014a, b; Wagner et al. 2016). Notably, none of these studies focused on perceptual idiosyncrasies or on modulations of the pupillary light response. A recent exception is a study by Laeng et al. (2018), which used an approach very similar to ours, measuring how illusory glare modulates pupillary light responses. That study found no differences between adults with ASD and controls. However, different types of illusions rely on disparate mechanisms at different levels of visual processing. Numerous studies suggest that autistic traits primarily affect higher-level mechanisms, whereas lower-level processes are similar in ASD and controls (Maule et al. 2018; Pellicano et al. 2007; Turi et al. 2015). It is therefore possible that illusory glare arises at an earlier level than the Ponzo illusion and size constancy mechanisms. The former requires only the integration of the local surround within the retinal image, whereas the latter implies the construction of a whole 3D representation. If so, complex phenomena like the Ponzo illusion may prove more successful than illusory glare in revealing differences between ASD and controls. Our present data allow only for speculation on this point, given that we did not test autistic individuals but rather studied autistic traits in neurotypical individuals.

Our findings are consistent with two other recent reports (Pomè et al. 2020; Turi et al. 2018) in which autistic traits were associated with pupillometric indices of perceptual processing but not with psychophysical estimates. Turi et al. (2018) showed that, in neurotypicals, pupillary modulations driven by shifts of attention during exposure to an illusory bistable stimulus are highly predictive of AQ scores. By contrast, no behavioural measure of bistability or attention distribution had any predictive power or correlated with the pupillometric indices. Pomè et al. (2020) showed that repetition of the priming colour led to faster behavioural responses and to weaker pupil-dilation responses. However, reaction times and pupil dilations were uncorrelated across participants, and only pupil dilations correlated with AQ scores.

This adds to other examples of a lack of correlation between pupillary light responses and perceptual responses. For example, Benedetto and Binda (2016) showed that both light sensitivity and pupillary light responses are reduced during saccadic eye movements, but the two suppression effects are not correlated across individuals or trials. Binda et al. also showed that pictures of the sun are rated brighter than control images of equal luminance and generate stronger pupillary responses, yet pupillary response strength is uncorrelated with brightness ratings (Binda et al. 2013b). This systematic lack of association between pupillary and perceptual responses may be explained by assuming that the two responses rely on separate visual representations with independent noise sources. This is analogous to the separation between visual representations for “perception” and “action” originally introduced by Goodale and Milner (1992). Their hypothesis was inspired by clinical observations of rare patients with localized cortical lesions who showed impaired size constancy either for perception or for action (grasping). More recently, it was confirmed in neurotypical individuals under specific conditions in which size constancy could fail in perception but hold for grasping (Chen et al. 2018). This clearly suggests that size constancy is under the control of different mechanisms for perception and action (Sperandio and Chouinard 2015). Similarly, the dissociation that we find between the behavioural and pupillary responses may reflect the existence of independent pathways. These would process visual information and integrate it with 3D context either for perception or for action, whether motor or perhaps pupillomotor. At this stage, this hypothesis is entirely speculative. It makes the interesting prediction that autistic features may be more readily assessed by testing the pathway connected with action than the pathway for perception. Relevant action measures include pupillomotor responses and other motor responses such as grasping and reaching. Future studies may be able to address this possibility empirically.

In conclusion, our findings show that pupil responses provide an accurate objective index of complex perceptual processes. In our sample they proved more informative than perceptual estimates, and they are particularly useful for quantifying interindividual differences. The approach could also be extended to clinical populations to measure individual perceptual processes with an objective, non-invasive technique.

References

Anderson, C. J., & Colombo, J. (2009). Larger tonic pupil size in young children with autism spectrum disorder. Developmental Psychobiology, 51, 207–211. https://doi.org/10.1002/dev.20352

Auyeung, B., Baron-Cohen, S., Wheelwright, S., & Allison, C. (2008). The autism spectrum quotient: Children’s version (AQ-child). Journal of Autism and Developmental Disorders, 38, 1230–1240. https://doi.org/10.1007/s10803-007-0504-z

Bailey, A., et al. (1995). Autism as a strongly genetic disorder: Evidence from a British twin study. Psychological Medicine, 25, 63–77. https://doi.org/10.1017/s0033291700028099

Baron-Cohen, S., & Wheelwright, S. (2004). The empathy quotient: An investigation of adults with Asperger syndrome or high functioning autism, and normal sex differences. Journal of Autism and Developmental Disorders, 34, 163–175. https://doi.org/10.1023/b:jadd.0000022607.19833.00

Baron-Cohen, S., Wheelwright, S., Skinner, R., Martin, J., & Clubley, E. (2001). The autism-spectrum quotient (AQ): Evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. Journal of Autism and Developmental Disorders, 31, 5–17. https://doi.org/10.1023/a:1005653411471

Belmonte, M. K., et al. (2004). Autism as a disorder of neural information processing: Directions for research and targets for therapy. Molecular Psychiatry, 9, 646–663. https://doi.org/10.1038/sj.mp.4001499

Benedetto, A., & Binda, P. (2016). Dissociable saccadic suppression of pupillary and perceptual responses to light. Journal of Neurophysiology, 115, 1243–1251. https://doi.org/10.1152/jn.00964.2015

Bharadwaj, S. R., Wang, J., & Candy, T. R. (2011). Pupil responses to near visual demand during human visual development. Journal of Vision, 11, 6. https://doi.org/10.1167/11.4.6

Binda, P., & Gamlin, P. D. (2017). Renewed attention on the pupil light reflex. Trends in Neurosciences, 40, 455–457. https://doi.org/10.1016/j.tins.2017.06.007

Binda, P., & Murray, S. O. (2015a). Keeping a large-pupilled eye on high-level visual processing. Trends in Cognitive Sciences, 19, 1–3. https://doi.org/10.1016/j.tics.2014.11.002

Binda, P., & Murray, S. O. (2015b). Spatial attention increases the pupillary response to light changes. Journal of Vision, 15, 1. https://doi.org/10.1167/15.2.1

Binda, P., Pereverzeva, M., & Murray, S. O. (2013a). Attention to bright surfaces enhances the pupillary light reflex. Journal of Neuroscience, 33, 2199–2204. https://doi.org/10.1523/JNEUROSCI.3440-12.2013

Binda, P., Pereverzeva, M., & Murray, S. O. (2013b). Pupil constrictions to photographs of the sun. Journal of Vision, 13, 8. https://doi.org/10.1167/13.6.8

Binda, P., Pereverzeva, M., & Murray, S. O. (2014). Pupil size reflects the focus of feature-based attention. Journal of Neurophysiology, 112, 3046–3052. https://doi.org/10.1152/jn.00502.2014

Blaser, E., Eglington, L., Carter, A. S., & Kaldy, Z. (2014). Pupillometry reveals a mechanism for the autism spectrum disorder (ASD) advantage in visual tasks. Scientific Reports, 4, 4301. https://doi.org/10.1038/srep04301

Bolte, S., Holtmann, M., Poustka, F., Scheurich, A., & Schmidt, L. (2007). Gestalt perception and local-global processing in high-functioning autism. Journal of Autism and Developmental Disorders, 37, 1493–1504. https://doi.org/10.1007/s10803-006-0231-x

Boring, E. G. (1940). Size constancy and Emmert’s Law. The American Journal of Psychology, 53, 293–295. https://doi.org/10.2307/1417427

Brainard, D. H. (1997). The psychophysics toolbox. Spatial Vision, 10, 433–436.

Chen, J., Sperandio, I., & Goodale, M. A. (2018). Proprioceptive distance cues restore perfect size constancy in grasping, but not perception, when vision is limited. Current Biology, 28, 927–932.e4. https://doi.org/10.1016/j.cub.2018.01.076

Chen, J., Sperandio, I., Henry, M. J., & Goodale, M. A. (2019). Changing the real viewing distance reveals the temporal evolution of size constancy in visual cortex. Current Biology, 29, 2237–2243.e4. https://doi.org/10.1016/j.cub.2019.05.069

Chouinard, P. A., Noulty, W. A., Sperandio, I., & Landry, O. (2013). Global processing during the Muller-Lyer illusion is distinctively affected by the degree of autistic traits in the typical population. Experimental Brain Research, 230, 219–231. https://doi.org/10.1007/s00221-013-3646-6

Chouinard, P. A., Unwin, K. L., Landry, O., & Sperandio, I. (2016). Susceptibility to optical illusions varies as a function of the autism-spectrum quotient but not in ways predicted by local-global biases. Journal of Autism and Developmental Disorders, 46, 2224–2239. https://doi.org/10.1007/s10803-016-2753-1

Constantino, J. N., & Todd, R. D. (2003). Autistic traits in the general population: A twin study. Archives of General Psychiatry, 60, 524–530. https://doi.org/10.1001/archpsyc.60.5.524

Cornelissen, F. W., Peters, E. M., & Palmer, J. (2002). The Eyelink Toolbox: eye tracking with MATLAB and the Psychophysics Toolbox. Behavior Research Methods, Instruments, & Computers, 34, 613–617. https://doi.org/10.3758/bf03195489

Daluwatte, C., Miles, J. H., Christ, S. E., Beversdorf, D. Q., Takahashi, T. N., & Yao, G. (2013). Atypical pupillary light reflex and heart rate variability in children with autism spectrum disorder. Journal of Autism and Developmental Disorders, 43, 1910–1925. https://doi.org/10.1007/s10803-012-1741-3

Ebitz, R. B., & Moore, T. (2017). Selective modulation of the pupil light reflex by microstimulation of prefrontal cortex. Journal of Neuroscience, 37, 5008–5018. https://doi.org/10.1523/JNEUROSCI.2433-16.2017

Fan, X., Miles, J. H., Takahashi, N., & Yao, G. (2009). Abnormal transient pupillary light reflex in individuals with autism spectrum disorders. Journal of Autism and Developmental Disorders, 39, 1499–1508. https://doi.org/10.1007/s10803-009-0767-7

Fang, F., Boyaci, H., Kersten, D., & Murray, S. O. (2008). Attention-dependent representation of a size illusion in human V1. Current Biology, 18, 1707–1712. https://doi.org/10.1016/j.cub.2008.09.025

Friston, K. J., Lawson, R., & Frith, C. D. (2013). On hyperpriors and hypopriors: Comment on Pellicano and Burr. Trends in Cognitive Sciences, 17, 1. https://doi.org/10.1016/j.tics.2012.11.003

Gillberg, C., & Billstedt, E. (2000). Autism and Asperger syndrome: Coexistence with other clinical disorders. Acta Psychiatrica Scandinavica, 102, 321–330. https://doi.org/10.1034/j.1600-0447.2000.102005321.x

Goodale, M. A., & Milner, A. D. (1992). Separate visual pathways for perception and action. Trends in Neurosciences, 15, 20–25.

Gori, S., Molteni, M., & Facoetti, A. (2016). Visual illusions: An interesting tool to investigate developmental dyslexia and autism spectrum disorder. Frontiers in Human Neuroscience, 10, 175. https://doi.org/10.3389/fnhum.2016.00175

Gregory, R. L. (1980). Perceptions as hypotheses. Philosophical Transactions of the Royal Society B, 290, 181–197. https://doi.org/10.1098/rstb.1980.0090

Happe, F. G. (1996). Studying weak central coherence at low levels: children with autism do not succumb to visual illusions. A research note. Journal of Child Psychology and Psychiatry, 37, 873–877. https://doi.org/10.1111/j.1469-7610.1996.tb01483.x

Happe, F. G., & Frith, U. (2006). The weak coherence account: Detail-focused cognitive style in autism spectrum disorders. Journal of Autism and Developmental Disorders, 36, 5–25. https://doi.org/10.1007/s10803-005-0039-0

He, D., Mo, C., Wang, Y., & Fang, F. (2015). Position shifts of fMRI-based population receptive fields in human visual cortex induced by Ponzo illusion. Experimental Brain Research, 233, 3535–3541. https://doi.org/10.1007/s00221-015-4425-3

Helmholtz, H. von, & Southall, J. P. C. (1962). Helmholtz's treatise on physiological optics (translated from the 3rd German ed.; J. P. C. Southall, Ed.). New York: Dover Publications.

Hess, E. H., & Polt, J. M. (1960). Pupil size as related to interest value of visual stimuli. Science, 132, 349–350. https://doi.org/10.1126/science.132.3423.349

Hoy, J. A., Hatton, C., & Hare, D. (2004). Weak central coherence: A cross-domain phenomenon specific to autism? Autism, 8, 267–281. https://doi.org/10.1177/1362361304045218

Jolliffe, T., & Baron-Cohen, S. (1997). Are people with autism and Asperger syndrome faster than normal on the Embedded Figures Test? Journal of Child Psychology and Psychiatry, 38, 527–534. https://doi.org/10.1111/j.1469-7610.1997.tb01539.x

Kahneman, D., & Beatty, J. (1966). Pupil diameter and load on memory. Science, 154, 1583–1585. https://doi.org/10.1126/science.154.3756.1583

Laeng, B., & Endestad, T. (2012). Bright illusions reduce the eye’s pupil. Proceedings of the National Academy of Sciences of the United States of America, 109, 2162–2167. https://doi.org/10.1073/pnas.1118298109

Laeng, B., Faerevaag, F. S., Tanggaard, S., & von Tetzchner, S. (2018). Pupillary responses to illusions of brightness in autism spectrum disorder. i-Perception, 9, 2041669518771716. https://doi.org/10.1177/2041669518771716

Lawson, R. P., Rees, G., & Friston, K. J. (2014). An aberrant precision account of autism. Frontiers in Human Neuroscience, 8, 302. https://doi.org/10.3389/fnhum.2014.00302

Lynch, G. (2018). Using pupillometry to assess the atypical pupillary light reflex and LC-NE system in ASD. Behavioral Sciences (Basel), 8, 108. https://doi.org/10.3390/bs8110108

Lynch, G. T. F., James, S. M., & VanDam, M. (2018). Pupillary response and phenotype in ASD: Latency to constriction discriminates ASD from typically developing adolescents. Autism Research, 11, 364–375. https://doi.org/10.1002/aur.1888

Manning, C., Morgan, M. J., Allen, C. T. W., & Pellicano, E. (2017). Susceptibility to Ebbinghaus and Muller–Lyer illusions in autistic children: A comparison of three different methods. Molecular Autism, 8, 16. https://doi.org/10.1186/s13229-017-0127-y

Marg, E., & Morgan, M. W., Jr. (1949). The pupillary near reflex; the relation of pupillary diameter to accommodation and the various components of convergence. American Journal of Optometry and Archives of American Academy of Optometry, 26, 183–198.

Mathot, S., Melmi, J. B., van der Linden, L., & Van der Stigchel, S. (2016). The mind-writing pupil: A human–computer interface based on decoding of covert attention through pupillometry. PLoS ONE, 11, e0148805. https://doi.org/10.1371/journal.pone.0148805

Mathot, S., van der Linden, L., Grainger, J., & Vitu, F. (2013). The pupillary light response reveals the focus of covert visual attention. PLoS ONE, 8, e78168. https://doi.org/10.1371/journal.pone.0078168

Mathot, S., & Van der Stigchel, S. (2015). New light on the mind’s eye: The pupillary light response as active vision. Current Directions in Psychological Science, 24, 374–378. https://doi.org/10.1177/0963721415593725

Maule, J., Stanworth, K., Pellicano, E., & Franklin, A. (2018). Color afterimages in autistic adults. Journal of Autism and Developmental Disorders, 48, 1409–1421. https://doi.org/10.1007/s10803-016-2786-5

Milne, E., & Scope, A. (2008). Are children with autistic spectrum disorders susceptible to contour illusions? British Journal of Developmental Psychology, 26, 91–102. https://doi.org/10.1348/026151007x202509

Mitchell, P., Mottron, L., Soulieres, I., & Ropar, D. (2010). Susceptibility to the Shepard illusion in participants with autism: Reduced top-down influences within perception? Autism Research, 3, 113–119. https://doi.org/10.1002/aur.130

Mottron, L., Dawson, M., Soulieres, I., Hubert, B., & Burack, J. (2006). Enhanced perceptual functioning in autism: An update, and eight principles of autistic perception. Journal of Autism and Developmental Disorders, 36, 27–43. https://doi.org/10.1007/s10803-005-0040-7

Murray, S. O., Boyaci, H., & Kersten, D. (2006). The representation of perceived angular size in human primary visual cortex. Nature Neuroscience, 9, 429–434. https://doi.org/10.1038/nn1641

Naber, M., & Nakayama, K. (2013). Pupil responses to high-level image content. Journal of Vision, 13, 7. https://doi.org/10.1167/13.6.7

Ni, A. M., Murray, S. O., & Horwitz, G. D. (2014). Object-centered shifts of receptive field positions in monkey primary visual cortex. Current Biology, 24, 1653–1658. https://doi.org/10.1016/j.cub.2014.06.003

Nuske, H. J., Vivanti, G., & Dissanayake, C. (2014). Reactivity to fearful expressions of familiar and unfamiliar people in children with autism: An eye-tracking pupillometry study. Journal of Neurodevelopmental Disorders, 6, 14. https://doi.org/10.1186/1866-1955-6-14

Nuske, H. J., Vivanti, G., Hudry, K., & Dissanayake, C. (2014). Pupillometry reveals reduced unconscious emotional reactivity in autism. Biological Psychology, 101, 24–35. https://doi.org/10.1016/j.biopsycho.2014.07.003

Nyström, P., Gredebäck, G., Bölte, S., & Falck-Ytter, T. (2015). Hypersensitive pupillary light reflex in infants at risk for autism. Molecular Autism, 6, 10. https://doi.org/10.1186/s13229-015-0011-6

Pelli, D. G. (1997). The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision, 10, 437–442.

Pellicano, E., & Burr, D. (2012). When the world becomes “too real”: A Bayesian explanation of autistic perception. Trends in Cognitive Sciences, 16, 504–510. https://doi.org/10.1016/j.tics.2012.08.009

Pellicano, E., Jeffery, L., Burr, D., & Rhodes, G. (2007). Abnormal adaptive face-Coding mechanisms in children with autism spectrum disorder. Current Biology, 17, 1508–1512. https://doi.org/10.1016/j.cub.2007.07.065

Piven, J. (2001). The broad autism phenotype: A complementary strategy for molecular genetic studies of autism. American Journal of Medical Genetics, 105, 34–35.

Pomè, A., Binda, P., Cicchini, G. M., & Burr, D. C. (2020). Pupillometry correlates of visual priming, and their dependency on autistic traits. Journal of Vision, 20, 3. https://doi.org/10.1167/jovi.20.3.3

Ponzo, M. (1910). Intorno ad alcune illusioni nel campo delle sensazioni tattili, sull'illusione di Aristotele e fenomeni analoghi. Wilhelm Engelmann.

Ring, H., Woodbury-Smith, M., Watson, P., Wheelwright, S., & Baron-Cohen, S. (2008). Clinical heterogeneity among people with high functioning autism spectrum conditions: evidence favouring a continuous severity gradient. Behavioral and Brain Functions, 4, 11. https://doi.org/10.1186/1744-9081-4-11

Ropar, D., & Mitchell, P. (1999). Are individuals with autism and Asperger’s syndrome susceptible to visual illusions? Journal of Child Psychology and Psychiatry, 40, 1283–1293.

Ropar, D., & Mitchell, P. (2001). Susceptibility to illusions and performance on visuospatial tasks in individuals with autism. Journal of Child Psychology and Psychiatry, 42, 539–549.

Rosenberg, A., Patterson, J. S., & Angelaki, D. E. (2015). A computational perspective on autism. Proceedings of the National Academy of Sciences of the United States of America, 112, 9158–9165. https://doi.org/10.1073/pnas.1510583112

Ruta, L., Mazzone, D., Mazzone, L., Wheelwright, S., & Baron-Cohen, S. (2012). The Autism-Spectrum Quotient-Italian version: A cross-cultural confirmation of the broader autism phenotype. Journal of Autism and Developmental Disorders, 42, 625–633. https://doi.org/10.1007/s10803-011-1290-1

Ruzich, E., et al. (2015). Measuring autistic traits in the general population: A systematic review of the Autism-Spectrum Quotient (AQ) in a nonclinical population sample of 6,900 typical adult males and females. Molecular Autism, 6, 2. https://doi.org/10.1186/2040-2392-6-2

Schwarzkopf, D. S., Song, C., & Rees, G. (2011). The surface area of human V1 predicts the subjective experience of object size. Nature Neuroscience, 14, 28–30. https://doi.org/10.1038/nn.2706

Shah, A., & Frith, U. (1983). An islet of ability in autistic children: A research note. Journal of Child Psychology and Psychiatry, 24, 613–620. https://doi.org/10.1111/j.1469-7610.1983.tb00137.x

Sinha, P., et al. (2014). Autism as a disorder of prediction. Proceedings of the National Academy of Sciences of the United States of America, 111, 15220–15225. https://doi.org/10.1073/pnas.1416797111

Skuse, D. H., et al. (2009). Social communication competence and functional adaptation in a general population of children: Preliminary evidence for sex-by-verbal IQ differential risk. Journal of the American Academy of Child and Adolescent Psychiatry, 48, 128–137. https://doi.org/10.1097/CHI.0b013e31819176b8

Sperandio, I., & Chouinard, P. A. (2015). The mechanisms of size constancy. Multisensory Research, 28, 253–283. https://doi.org/10.1163/22134808-00002483

Sperandio, I., Chouinard, P. A., & Goodale, M. A. (2012). Retinotopic activity in V1 reflects the perceived and not the retinal size of an afterimage. Nature Neuroscience, 15, 540–542. https://doi.org/10.1038/nn.3069

Tanaka, S., & Fujita, I. (2015). Computation of object size in visual cortical area V4 as a neural basis for size constancy. Journal of Neuroscience, 35, 12033–12046. https://doi.org/10.1523/JNEUROSCI.2665-14.2015

Turi, M., Burr, D. C., & Binda, P. (2018). Pupillometry reveals perceptual differences that are tightly linked to autistic traits in typical adults. eLife, 7, e32399. https://doi.org/10.7554/eLife.32399

Turi, M., Burr, D. C., Igliozzi, R., Aagten-Murphy, D., Muratori, F., & Pellicano, E. (2015). Children with autism spectrum disorder show reduced adaptation to number. Proceedings of the National Academy of Sciences of the United States of America, 112, 7868–7872. https://doi.org/10.1073/pnas.1504099112

van Boxtel, J. J., & Lu, H. (2013). A predictive coding perspective on autism spectrum disorders. Frontiers in Psychology, 4, 19. https://doi.org/10.3389/fpsyg.2013.00019

Van de Cruys, S., et al. (2014). Precise minds in uncertain worlds: Predictive coding in autism. Psychological Review, 121, 649–675. https://doi.org/10.1037/a0037665

Wagner, J. B., Luyster, R. J., Tager-Flusberg, H., & Nelson, C. A. (2016). Greater pupil size in response to emotional faces as an early marker of social-communicative difficulties in infants at high risk for autism. Infancy, 21, 560–581. https://doi.org/10.1111/infa.12128

Walter, E., Dassonville, P., & Bochsler, T. M. (2009). A specific autistic trait that modulates visuospatial illusion susceptibility. Journal of Autism and Developmental Disorders, 39, 339–349. https://doi.org/10.1007/s10803-008-0630-2

Wetzels, R., & Wagenmakers, E. J. (2012). A default Bayesian hypothesis test for correlations and partial correlations. Psychonomic Bulletin & Review, 19, 1057–1064. https://doi.org/10.3758/s13423-012-0295-x

Wheelwright, S., Auyeung, B., Allison, C., & Baron-Cohen, S. (2010). Defining the broader, medium and narrow autism phenotype among parents using the Autism Spectrum Quotient (AQ). Molecular Autism, 1, 10. https://doi.org/10.1186/2040-2392-1-10

Zavagno, D., Tommasi, L., & Laeng, B. (2017). The eye pupil’s response to static and dynamic illusions of luminosity and darkness. i-Perception, 8, 2041669517717754. https://doi.org/10.1177/2041669517717754

Zhang, H., Clarke, R. J., & Gamlin, P. D. (1996). Behavior of luminance neurons in the pretectal olivary nucleus during the pupillary near response. Experimental Brain Research, 112, 158–162. https://doi.org/10.1007/bf00227189

Acknowledgements

This project has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program, Grant No 801715 (PUPILTRAITS) and Grant No 832813 (GenPercept) and from the Italian Ministry of University Research under the PRIN2017 programme (grant MISMATCH).

Funding

Open access funding provided by Università di Pisa within the CRUI-CARE Agreement.


Ethics declarations

Ethical Approval

Experimental procedures were approved by the regional ethics committee Comitato Etico Pediatrico Regionale, Azienda Ospedaliero-Universitaria Meyer, Firenze (FI), under the protocol “Fusione di Informazioni Multisensoriali” v4/04.10.2018.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tortelli, C., Turi, M., Burr, D.C. et al. Pupillary Responses Obey Emmert’s Law and Co-vary with Autistic Traits. J Autism Dev Disord 51, 2908–2919 (2021). https://doi.org/10.1007/s10803-020-04718-7


DOI: https://doi.org/10.1007/s10803-020-04718-7