Abstract

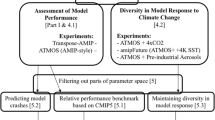

The main aim of this two-part study is to use a perturbed parameter ensemble (PPE) to select plausible and diverse variants of a relatively expensive climate model for use in climate projections. In this first part, the extent to which climate biases develop at weather forecast timescales is assessed with two PPEs, based respectively on 5-day forecasts and 10-year simulations with a relatively coarse resolution (N96) atmosphere-only model. Both ensembles share common parameter combinations, and strong emergent relationships between the errors at the two timescales are found for a wide range of variables. These relationships are demonstrated at several spatial scales: globally (using mean square errors), regionally (using pattern correlations), and at individual grid boxes, a large fraction of which show positive correlations. The study confirms, more robustly than previous studies, that investigating errors at weather timescales provides an affordable way to identify and filter out model variants that perform poorly at short timescales and are likely to perform poorly at longer timescales too. The use of PPEs also provides additional information for model development, by identifying the parameters and processes responsible for model errors at the two timescales, and the systematic errors that cannot be removed by any combination of parameter values.
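The comparison of errors across the two timescales can be illustrated with a short sketch. It assumes that each PPE member's time-mean error field (model minus observations) is available as a NumPy array for both the 5-day forecasts and the 10-year simulations, weights grid boxes equally rather than by area, and uses a centred pattern correlation; the function name, array layout, region mask and synthetic data are all hypothetical and not taken from the paper.

```python
import numpy as np


def cross_timescale_relationships(err_short, err_long, region_mask):
    """Relate the errors of the same PPE members at two timescales.

    err_short, err_long: arrays of shape (n_members, n_lat, n_lon) holding each
    member's time-mean error, e.g. from 5-day forecasts and 10-year simulations.
    region_mask: boolean array of shape (n_lat, n_lon) selecting one region.
    Grid boxes are weighted equally (a simplification of area weighting).
    """
    n = err_short.shape[0]

    # Global scale: one MSE per member, then correlate the MSEs across members.
    mse_short = np.mean(err_short.reshape(n, -1) ** 2, axis=1)
    mse_long = np.mean(err_long.reshape(n, -1) ** 2, axis=1)
    r_global = np.corrcoef(mse_short, mse_long)[0, 1]

    # Regional scale: centred pattern correlation between each member's two
    # error maps over the masked grid boxes.
    p = err_short[:, region_mask]
    q = err_long[:, region_mask]
    p = p - p.mean(axis=1, keepdims=True)
    q = q - q.mean(axis=1, keepdims=True)
    r_pattern = (p * q).sum(axis=1) / np.sqrt((p ** 2).sum(axis=1) * (q ** 2).sum(axis=1))

    # Grid-box scale: correlation across members at every grid box.
    a = err_short - err_short.mean(axis=0)
    b = err_long - err_long.mean(axis=0)
    r_box = (a * b).sum(axis=0) / np.sqrt((a ** 2).sum(axis=0) * (b ** 2).sum(axis=0))

    return {
        "r_global": r_global,
        "r_pattern": r_pattern,
        "r_box": r_box,
        "frac_positive": float(np.mean(r_box > 0)),
    }


# Synthetic example: 40 hypothetical members whose errors at both timescales
# share a parameter-driven component plus independent noise.
rng = np.random.default_rng(0)
shared = rng.normal(size=(40, 10, 20))
err_5day = shared + 0.3 * rng.normal(size=shared.shape)
err_10yr = 2.0 * shared + 0.5 * rng.normal(size=shared.shape)
mask = np.zeros((10, 20), dtype=bool)
mask[2:6, 5:15] = True
res = cross_timescale_relationships(err_5day, err_10yr, mask)
```

In this synthetic case the shared component produces positive relationships at all three scales by construction; with real PPE output, the strength of each relationship is what the analysis sets out to measure.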

Acknowledgements

David Sexton, Ambarish Karmalkar and James Murphy were supported by the Joint UK BEIS/Defra Met Office Hadley Centre Climate Programme (GA01101). The remaining co-authors were supported by the Public Weather Service (PWS) funded by the UK Government. We would like to thank Rachel Stratton, Adrian Lock, Adrian Hill, Steve Derbyshire, Martin Willett, Stuart Webster, James Manners, Andrew Bushell, Paul Field, Jonathan Wilkinson, Kalli Furtado, William Ingram, Ben Shipway and Glenn Shutts for help with the elicitation of the parameters to perturb and comments on the paper.

Appendix

For this study, the global MSE of the forecast error averaged over the 16 start dates is used to measure the performance of the TAMIP experiments. An alternative measure of forecast performance would have been to calculate the MSE for each start date and then average those MSEs. We refer to this alternative metric as the mean-individual-forecast-error MSE. We show below how it relates to the “mean-error MSE” used in the paper.
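The two scores differ only in the order of averaging and squaring. A minimal sketch, assuming the 5-day forecasts and verifying observations are held in NumPy arrays indexed by start date and grid point, with all grid points weighted equally (a simplification of an area-weighted global mean); the function name `forecast_error_scores` and the synthetic data are hypothetical.

```python
import numpy as np


def forecast_error_scores(m, o):
    """Return (mean-error MSE, mean-individual-forecast-error MSE, difference).

    m, o: arrays of shape (n_start_dates, n_points) holding the 5-day forecast
    m[i, j] and the verifying observation o[i, j] for start date i at grid
    point j. Grid points are weighted equally in this sketch.
    """
    err = m - o
    # Mean-error MSE: average the error over start dates first, then square
    # and average over grid points.
    mean_error_mse = float(np.mean(err.mean(axis=0) ** 2))
    # Mean-individual-forecast-error MSE: MSE of each start date's forecast,
    # then averaged over start dates.
    mean_individual_mse = float(np.mean(np.mean(err ** 2, axis=1)))
    # The remainder depends on the individual initial states.
    return mean_error_mse, mean_individual_mse, mean_individual_mse - mean_error_mse


rng = np.random.default_rng(1)
o = rng.normal(size=(16, 500))                  # 16 start dates, 500 grid points
m = o + 0.5 + 0.2 * rng.normal(size=o.shape)    # hypothetical forecasts with a 0.5 bias
mean_error_mse, mean_individual_mse, ic_mse = forecast_error_scores(m, o)
```

Because the second score squares each start date's error before averaging over start dates, it can never be smaller than the mean-error MSE; their difference is the part of the score that depends on the individual initial states.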

Let \(m_{ij}\) be the 5-day forecast value for the \(i\)th start date at the \(j\)th grid point, \(o_{ij}\) the verifying observation, and let the overbar denote an average over the index marked by the dot. Then the “mean-error MSE” used in the paper is

The alternative mean-individual-forecast-error MSE measure is the left-hand side of Eq. (2), which can be expanded:

Defining the deviation of the 5-day forecast value for the \(i\)th start date at the \(j\)th grid point from the mean 5-day forecast over the 16 start dates as \(\Delta m_{ij} = m_{ij} - \overline{m_{\cdot j}}\), and the deviation of the verifying observation for the \(i\)th start date at the \(j\)th grid point from its average across the 16 start dates as \(\Delta o_{ij} = o_{ij} - \overline{o_{\cdot j}}\), we then get

This shows that the mean-individual-forecast-error MSE has three components: the mean-error MSE from Eq. (1), and two extra terms that measure forecasting performance dependent on the given initial state of the weather. We refer to the sum of these two extra terms as the initial-condition-dependent MSE. Both extra terms depend on the 16 differences between the modelled and observed deviations from their respective means: one is the average of the MSEs of these 16 differences, and the other is based on the correlation of the 16 differences with the difference averaged across the 16 start dates. Across the PPE, the mean-error MSE and the mean-individual-forecast-error MSE are highly correlated (see Fig. 14), suggesting that for climate models the mean forecast error dominates. This supports our choice to focus on the mean-error MSE in the paper. In Fig. 15 we plot the differences between the two scores against the mean-error MSE, showing that for many variables (other than surface air temperature and precipitation) these two quantities are uncorrelated. This suggests that the dominance of the mean-error MSE obscures potentially useful information in the initial-condition-dependent part of the mean-individual-forecast-error MSE.
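As a sketch of this decomposition, assume that all \(I = 16\) start dates and all \(J\) grid points carry equal weight (the paper's global mean may instead be area-weighted, and its own equations may be arranged differently). Since \(m_{ij} - o_{ij} = \left(\overline{m_{\cdot j}} - \overline{o_{\cdot j}}\right) + \left(\Delta m_{ij} - \Delta o_{ij}\right)\), squaring and averaging gives

\[
\begin{aligned}
\frac{1}{IJ}\sum_{i=1}^{I}\sum_{j=1}^{J}\left(m_{ij}-o_{ij}\right)^2
&= \frac{1}{J}\sum_{j=1}^{J}\left(\overline{m_{\cdot j}}-\overline{o_{\cdot j}}\right)^2
+ \frac{1}{IJ}\sum_{i=1}^{I}\sum_{j=1}^{J}\left(\Delta m_{ij}-\Delta o_{ij}\right)^2 \\
&\quad + \frac{2}{IJ}\sum_{j=1}^{J}\left(\overline{m_{\cdot j}}-\overline{o_{\cdot j}}\right)\sum_{i=1}^{I}\left(\Delta m_{ij}-\Delta o_{ij}\right).
\end{aligned}
\]

The first term on the right is the mean-error MSE and the second is the average over start dates of the MSE of the differences between modelled and observed deviations. Under the equal-weight assumption, \(\sum_{i}\left(\Delta m_{ij}-\Delta o_{ij}\right)=0\) at every grid point, so the cross term vanishes in this simplified form; it can contribute only when start dates are weighted unequally or some values are missing.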

A sensitivity analysis (Fig. 16) of the initial-condition-dependent MSE shows that parameters in the convection scheme dominate, even for variables such as zonally averaged relative humidity on pressure levels and cloud area fraction, whose mean-error MSE tends to be dominated by the cloud radiation and microphysics parameters. This suggests that using the mean-individual-forecast-error MSE to filter parameter space would mainly add value by constraining the convection parameters.
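The variance fractions in Fig. 16 come from the paper's emulators, whose details are not given in this appendix. As a hedged illustration of one standard variance-based approach, the sketch below estimates first-order (main-effect) variance fractions with a pick-and-freeze Monte Carlo estimator. The function `toy_emulator` is a hypothetical linear stand-in for a trained emulator of three parameters scaled to [0, 1]; it is not one of the paper's emulators.

```python
import numpy as np


def first_order_indices(f, n_params, n_samples=100_000, seed=0):
    """Estimate first-order variance fractions (first-order Sobol' indices) of f.

    f maps an array of shape (n, n_params), with inputs scaled to [0, 1], to an
    array of n outputs. Pick-and-freeze estimator:
    V_i ~ mean(f(B) * (f(A_B^i) - f(A))), where A_B^i is A with column i from B.
    """
    rng = np.random.default_rng(seed)
    A = rng.random((n_samples, n_params))
    B = rng.random((n_samples, n_params))
    fA, fB = f(A), f(B)
    total_var = np.var(np.concatenate([fA, fB]))
    indices = np.empty(n_params)
    for i in range(n_params):
        ABi = A.copy()
        ABi[:, i] = B[:, i]  # A with column i taken from B
        indices[i] = np.mean(fB * (f(ABi) - fA)) / total_var
    return indices


def toy_emulator(x):
    # Hypothetical stand-in for an emulated score of three scaled parameters.
    return 3.0 * x[:, 0] + 1.0 * x[:, 1] + 0.0 * x[:, 2]


S = first_order_indices(toy_emulator, n_params=3)
print(np.round(S, 2))
```

For this toy function the exact first-order fractions are 0.9, 0.1 and 0, so the estimates can be checked directly; when parameters interact, the first-order fractions sum to less than one.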

Fig. 16 Sensitivity analysis showing the fraction of the variance of the response surface explained by each parameter, as estimated by the emulators of the “mean-error MSE” (lower triangle) and of the difference between the “mean-individual-forecast-error MSE” and the “mean-error MSE” (upper triangle), for several variables (y-axis). A key is shown in the far-right column.

Cite this article

Sexton, D.M.H., Karmalkar, A.V., Murphy, J.M. et al. Finding plausible and diverse variants of a climate model. Part 1: establishing the relationship between errors at weather and climate time scales. Clim Dyn 53, 989–1022 (2019). https://doi.org/10.1007/s00382-019-04625-3
