Abstract

Symmetric quasiconvexity plays a key role in energy minimization in geometrically linear elasticity theory. Due to the complexity of this notion, a common approach is to retreat to necessary and sufficient conditions that are easier to handle. This article focuses on symmetric polyconvexity, which is a sufficient condition. We prove a new characterization of symmetric polyconvex functions in the two- and three-dimensional setting, and use it to investigate relevant subclasses like symmetric polyaffine functions and symmetric polyconvex quadratic forms. In particular, we provide an example of a symmetric rank-one convex quadratic form in 3d that is not symmetric polyconvex. The construction takes the famous work by Serre from 1983 on the classical situation without symmetry as inspiration. Beyond their theoretical interest, these findings may turn out to be useful for computational relaxation and homogenization.


1 Introduction

Variational models based on energy minimization principles are known to yield good descriptions of elastic materials in nonlinear elasticity (so-called hyperelastic materials), and have inspired new mathematical developments in the calculus of variations over the last decades. Typically, one encounters elastic energies of the form

$$\begin{aligned} E(v) = \int _{\Omega } W(\nabla v)\;\mathrm {d}{x}, \end{aligned}$$(1.1)

where \(\Omega \subset {\mathbb {R}}^d\), \(d=2,3\), is a bounded domain representing the reference configuration of the elastic body, \(v:\Omega \rightarrow {\mathbb {R}}^d\) is the deformation of the body, and \(W:{\mathbb {R}}^{d\times d}\rightarrow {\mathbb {R}}\) is an elastic energy density. Depending on the specific model, (1.1) can be complemented with external force terms and/or the class of admissible deformations can be restricted by boundary conditions. As is well-known in continuum mechanics, mechanically relevant energy densities cannot be convex due to incompatibility with the concept of frame-indifference, see e.g. [12]. The weaker notion of polyconvexity, which was introduced by Ball in 1977 [2], however, has turned out to be particularly suitable here, since (i) the existence of weak solutions can be shown (using the direct method in the calculus of variations) and (ii) there exist realistic models (involving orientation preservation and avoiding infinite compression) that have polyconvex energies. Explicit polyconvex energies for certain isotropic and anisotropic materials were derived in e.g. [28, 38, 51]. We recall that a function \({\mathbb {R}}^{d\times d}\rightarrow {\mathbb {R}}\) is called polyconvex if it depends in a convex way on its minors; in 3d, second and third order minors correspond to the matrix of cofactors and the determinant, respectively, while the second order minor in 2d is just the determinant.

In this article, we investigate functions that are polyconvex when composed with the linear projection of \({\mathbb {R}}^{d\times d}\) onto the subspace of symmetric matrices \({\mathcal {S}}^{d\times d}\), that is, \(f:{\mathcal {S}}^{d\times d}\rightarrow {\mathbb {R}}\) such that \({\mathbb {R}}^{d\times d}\ni F\mapsto f(F^s)\) is polyconvex. We call such functions symmetric polyconvex, see Definition 2.1 for more details. This notion has applications in the geometrically linear theory of elasticity, which results from nonlinear elasticity theory by replacing the requirement of frame-indifference with the assumption that the elastic energy density is invariant under infinitesimal rotations, see e.g., [7, 8, 12]. In this case, the energy densities depend only on the symmetric part of the deformation gradient, or on linear strains \(e(u)= \frac{1}{2}(\nabla u + (\nabla u)^T)\), where \(u:\Omega \rightarrow {\mathbb {R}}^d\), \(u(x) = v(x) - x\), is the elastic displacement field. That is,

$$\begin{aligned} {\mathcal {E}}(u) = \int _{\Omega } f(e(u))\;\mathrm {d}{x}, \end{aligned}$$(1.2)

with a density \(f:{\mathcal {S}}^{d\times d}\rightarrow {\mathbb {R}}\). Here, we are particularly interested in the case when f is symmetric polyconvex. The overall aim is to identify necessary and sufficient conditions for symmetric polyconvexity in 2d and 3d. As we will discuss further below, these conditions will facilitate finding explicit symmetric polyconvex functions that can serve as interesting energy densities in continuum mechanics or that provide lower bounds in the relaxation of models for materials with microstructures. Moreover, a deeper understanding of symmetric polyconvexity may be useful in further applications of the translation method (see Section 1.4 below for more details).

1.1 Summary of Results

Next, let us explain in more detail our new mathematical results, which were inspired by the following examples of functions defined on \({\mathcal {S}}^{d\times d}\):

- (i) \(\varepsilon \mapsto \det \varepsilon \) is not symmetric polyconvex in \(d=2,3\);

- (ii) \(\varepsilon \mapsto - \det \varepsilon \) is symmetric polyconvex in \(d=2\), but not in \(d=3\);

- (iii) \(\varepsilon \mapsto -(\mathrm{cof\,}\varepsilon )_{ii}\), \(i=1,2,3\), is symmetric polyconvex in \(d=3\), while \(\varepsilon \mapsto (\mathrm{cof\,}\varepsilon )_{ii}\) is not.

Note that this is different from the classical setting, where \({\mathbb {R}}^{d\times d}\ni F\mapsto \pm \det F\) is polyconvex in any dimension, as are all minors. We discuss these functions in Example 2.4, and give further examples in Remarks 4.2 b) and 5.2.

We show that symmetric polyconvexity in 2d and 3d can be characterized as follows: any symmetric polyconvex function in 2d corresponds exactly to a convex function of all minors that is non-increasing with respect to the determinant (see Theorem 4.1). In particular, this includes (ii) above and elucidates why (i) is not symmetric polyconvex. For 3d, we prove in Theorem 5.1 that any symmetric polyconvex function can be represented as a convex function of first and second order minors (hence, no dependence on the determinant) whose subdifferential with respect to its cofactor variable is negative semi-definite. Notice that this result is in correspondence with example (iii) above. The difficulty here lies in identifying these characterizations. The proofs build on monotonicity properties of convex functions expressed in terms of their (partial) subdifferentials (cf. Lemmas 3.3 and 3.4), as well as on some standard tools from convex analysis, properties of semi-convex functions in the classical setting, and on basic algebraic relations for minors.

Moreover, these findings can be used to investigate the two subclasses of symmetric polyconvex quadratic forms and symmetric polyaffine functions. Indeed, it is not hard to see that the latter, i.e., functions \(f:{\mathcal {S}}^{d\times d}\rightarrow {\mathbb {R}}\) such that f and \(-f\) are symmetric polyconvex, are always affine, as we outline in Section 4.3 and Proposition 5.10. Note that this stands in contrast with the classical case, where for instance the function \({\mathbb {R}}^{d\times d}\ni F\mapsto \det F\) is polyaffine, but not affine. Hence, in the symmetric setting, there are no non-trivial Null-Lagrangians. Regarding the class of symmetric polyconvex quadratic forms, we give explicit characterizations, both for \(d=2\) (Proposition 4.5) and \(d=3\) (Proposition 5.6). More precisely, if \(f:{\mathcal {S}}^{d\times d}\rightarrow {\mathbb {R}}\) is a symmetric polyconvex quadratic form, then

$$\begin{aligned} f(\varepsilon ) = h(\varepsilon ) - \alpha \det \varepsilon \qquad \text {and}\qquad f(\varepsilon ) = h(\varepsilon ) - A:\mathrm{cof\,}\varepsilon \end{aligned}$$(1.3)

for 2d and 3d, respectively, where \(h:{\mathcal {S}}^{d\times d}\rightarrow {\mathbb {R}}\) is convex, \(\alpha >0\), and \(A\in {\mathcal {S}}^{3\times 3}\) is positive semi-definite. The case \(d=2\) can be viewed as a refinement of the result by Marcellini in [36] from the classical to the symmetric framework. For a related statement in the three-dimensional classical setting we refer to [16, p. 192].

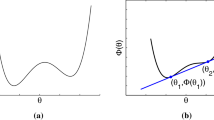

While in two dimensions, symmetric rank-one convexity and symmetric polyconvexity are equivalent for quadratic forms as a consequence of the corresponding well-known result for classical quadratic forms (see e.g. [16, Theorem 5.25]), we prove that this is not true for \(d=3\). For quadratic forms on \({\mathbb {R}}^{3\times 3}\), this has been known since early work by Terpstra [55], which Serre in [50] underpinned with an explicit example. Precisely, he showed that there exists \(\eta >0\) such that

is rank-one convex, but not polyconvex, cf. also [16, p. 194]. More recently, Harutyunyan and Milton [29] gave another example, namely

There, the proof of non-polyconvexity exploits critically that \(F_{13}, F_{21}\) and \(F_{32}\) do not appear in the formula, see [29, Proof of Theorem 1.5 ii)]. How to adapt the examples in (1.4) and (1.5) to the symmetric setting is not immediately obvious due to the kind of dependence on the off-diagonal entries. As one of the main results of this article, we provide a symmetric variant of Serre’s example; Theorem 5.7 states the existence of \(\eta >0\) such that the quadratic form \(f:{\mathcal {S}}^{3\times 3}\rightarrow {\mathbb {R}}\) given by

is symmetric rank-one convex, but not symmetric polyconvex. The proof that this function is not symmetric polyconvex is based on the 3d-characterization in (1.3) and on a careful study of the minimizers of \(f + \eta |\varepsilon |^2\) in the set of compatible matrices with unit norm (see Lemma 5.8 for the details).

1.2 Organization of the Article

The paper is structured as follows: in Section 2, we recall the common generalized notions of convexity in the symmetric setting and review selected results from the literature regarding their characterization, properties and relations. The section is concluded with the above-mentioned simple motivating examples. After some notational remarks and preliminaries in Section 3, we address characterizations of symmetric polyconvexity in 2d in Section 4 and in 3d in Section 5. Moreover, we consider symmetric polyconvex quadratic forms in Sections 4.2 and 5.2, where we also present the proof of Theorem 5.7. Finally, symmetric polyaffine functions are the topic of Sections 4.3 and 5.3. In the remainder of the introduction, we comment further on the relevance of the notion of (symmetric) polyconvexity in the calculus of variations and elasticity theory.

1.3 Connections with (Symmetric) Quasiconvexity and Relaxation

Polyconvexity is a sufficient condition for quasiconvexity, which has been the subject of intensive investigation since its introduction by Morrey [39] in 1952. It constitutes the central concept for the existence theory of vectorial integral problems in the calculus of variations, generalizing the notion of convexity to multi-dimensional variational problems. If we consider the integral functional E in (1.1) with suitable assumptions on the integrand W and the space of deformations, quasiconvexity of W is necessary and sufficient for weak lower semicontinuity of E. Along with coercivity, quasiconvexity is thus a key ingredient for proving existence of minimizers of E (e.g. among all deformations with given boundary values) via the direct method in the calculus of variations, see e.g. [2, 5, 12, 16]. As for weak lower semicontinuity of the functional \({\mathcal {E}}\) in the geometrically linear theory of elasticity, cf. (1.2), symmetric quasiconvexity of the elastic energy density f takes over the role of quasiconvexity of W [4, 22, 26]. This means that f satisfies Jensen’s inequality for all symmetrized gradient test fields, or equivalently that the function is quasiconvex when composed with the linear projection of \({\mathbb {R}}^{d\times d}\) to symmetric matrices, cf. Proposition 2.2. For a further discussion on the connections between linearized (small-strain) and nonlinear (finite-strain) elasticity theory we refer the reader e.g. to [3, 7, 20, 31].

Despite its importance, the notion of quasiconvexity is still not fully understood in all its facets due to its complexity. A common approach is therefore to retreat to weaker and stronger conditions such as rank-one convexity and polyconvexity, as they are easier to deal with and yet allow one to draw useful conclusions about quasiconvex functions [16, 41]. The study of generalized notions of convexity for functions with specific properties has helped to gain valuable new insight and to advance the field. Relevant classes include one-homogeneous functions [17, 40], functions obtained by compositions with transposition [34, 42], or functions with different types of invariances, such as isotropic functions [16, 18, 37, 38, 49, 58, 59], quadratic forms with linearly elastic cubic, cyclic and axis-reflection symmetry [29], and functions of linear strains [9, 10, 32, 44, 60, 61]. In this spirit, the characterizations of symmetric polyconvexity proved in this article contribute to a deeper understanding of quasiconvex functions that are invariant under symmetrization.

Even though minimization problems with integral functionals whose density is not (symmetric) quasiconvex may in general not admit solutions, they are highly relevant in many applications as they allow one to model oscillation effects, like microstructure formation in materials, see e.g. [41]. The mathematical task is then to describe the effective behavior of the model by analyzing minimizing sequences or low energy states. This comes down to relaxation, i.e. to finding the lower semi-continuous envelope of the given functional. In analogy to the relaxation of integral functionals in the nonlinear setting (see e.g. [16, 41]), this is achieved in the symmetric setting by the symmetric quasiconvexification of the integrand. The symmetric quasiconvex envelope of a function f, i.e. the largest symmetric quasiconvex function not larger than f, can be represented as

$$\begin{aligned} f^{\mathrm{sqc}}(\varepsilon ) = \inf _{\varphi \in C^{\infty }_c((0,1)^d;{\mathbb {R}}^d)}\int _{(0,1)^d} f(\varepsilon +(\nabla \varphi )^s)\;\mathrm {d}{x}, \qquad \varepsilon \in {\mathcal {S}}^{d\times d}, \end{aligned}$$(1.7)

see e.g. [62, Proposition 2.1]. We point out that explicit calculations of \(f^{\mathrm{sqc}}\) tend to be rather challenging, since they require solving the infinite-dimensional minimization problem in (1.7) for every symmetric matrix \(\varepsilon \). A common strategy is to approach this problem by searching for suitable upper and lower bounds, typically in the form of (symmetric) polyconvex and (symmetric) rank-one convex envelopes, cf. e.g. [10, 13, 14, 15, 24, 27, 35]. In the case of matching bounds and non-extended valued densities, one even obtains an exact relaxation formula. Aside from applications in linearized elasticity, the characterizations of symmetric polyconvexity provided in this paper are potentially useful for relaxation problems arising from elasto-plastic models or in the theory of liquid crystals.

1.4 Applications to the Translation Method

Another technique from homogenization and optimization theory, called the translation method, has also proven itself very successful in deriving good lower estimates on quasiconvex envelopes. For an introduction and a historical overview we refer to [11, Chapter 8], for publications related to elasticity theory see e.g. [9, 25, 32, 44] and the references therein. In our symmetric setting, the translation method can be briefly summarized as follows. We observe that \(f = f- q + q \ge (f-q)^c + q\) for any \(f,q:{\mathcal {S}}^{d\times d}\rightarrow {\mathbb {R}}\), where the superscript \(\mathrm{c}\) stands for the convex envelope. If the so-called translator q is symmetric quasiconvex, \((f-q)^c + q\) is symmetric quasiconvex. Hence,

$$\begin{aligned} f^{\mathrm{sqc}} \ge (f-q)^{\mathrm{c}} + q. \end{aligned}$$(1.8)

Optimizing the right-hand side of (1.8) over a subclass of symmetric quasiconvex functions provides a lower bound on \(f^{\mathrm{sqc}}\). We remark that the method would be exact if one took the supremum over all symmetric quasiconvex functions q; explicit representations of these are, however, not available. Hence, a good choice of the class of translators, in the sense of balancing generality and explicitness, determines the effectiveness of the method. There are two natural options to be used here: (i) specific symmetric polyconvex functions, which, thanks to our characterizations in Theorems 4.1 and 5.1, can be easily constructed, and (ii) symmetric rank-one convex quadratic forms, cf. also [61].

Our characterization of symmetric polyconvex (and thus rank-one convex) quadratic forms in 2d (cf. (1.3) or Proposition 4.5) yields an explanation of why the translator \(\varepsilon \mapsto -\det \varepsilon \) is often a good choice. It was used for example in the 2d setting of [9] in the derivation of a relaxation formula for two-well energies with possibly unequal moduli. Indeed, if we rewrite the right hand side of (1.8) as \((f-q)^{\mathrm{c}} - h^{\mathrm{c}} + h + q \ge (f- h - q)^{\mathrm{c}} + h + q\) with a convex quadratic function \(h:{\mathcal {S}}^{2\times 2}\rightarrow {\mathbb {R}}\), we see in view of the first equation in (1.3) that working with just \(\varepsilon \mapsto -\alpha \det \varepsilon \) for \(\alpha >0\) as a translator is equivalent to using all symmetric rank-one convex quadratic forms. The second equation in (1.3) indicates that the analogous observation is true in three dimensions for \(\varepsilon \mapsto -A:\mathrm{cof\,}\varepsilon \) with \(A\in {\mathcal {S}}^{3\times 3}\) positive semi-definite. Translators of this type play a key role in the derivation of the bounds in [9, 44]. Our characterization result hence provides structural insight into the choice of translators in the above-mentioned literature.

In the classical setting, Firoozye [25] showed that a translation bound optimized over rank-one convex quadratic forms and Null-Lagrangians is at least equally good as polyconvexification, and even strictly better for some three-dimensional functions. His proof of this latter statement is based on Serre’s example in (1.4). Our example (1.6) in the 3d symmetric setting clearly implies that, in contrast to 2d, considering symmetric rank-one convex quadratic forms as translators will in general give better bounds than using just symmetric polyconvex ones. Whether there are situations when combining symmetric rank-one convex quadratic forms with other symmetric polyconvex functions leads to improved results remains an open question for future research; notice that we do not have any non-trivial Null-Lagrangians at hand in the symmetric setting, cf. Proposition 5.10.

2 Different Notions of Symmetric Semi-Convexity

When speaking of semi-convexity, we will always refer to one of the following notions of generalized convexity: quasiconvexity, polyconvexity and rank-one convexity. Let us briefly recall that a function \(f:{\mathbb {R}}^{d\times d}\rightarrow {\mathbb {R}}\) is called quasiconvex if it satisfies Jensen’s inequality for all gradient test fields, to be precise,

assuming the integrals on the right-hand side exist. A function \(f:{\mathbb {R}}^{d\times d}\rightarrow {\mathbb {R}}\) is polyconvex if it can be written in terms of a convex function of its minors. Finally, \(f:{\mathbb {R}}^{d\times d}\rightarrow {\mathbb {R}}\) is a rank-one convex function if it is convex along all rank-one lines \(t\mapsto F+t a\otimes b\) for \(F\in {\mathbb {R}}^{d\times d}\) and \(a, b\in {\mathbb {R}}^d\), where \((a\otimes b)_{ij} = a_ib_j\) for \(i,j=1, \ldots , d\). For more details, see e.g. the standard work by Dacorogna [16].

Here, we are interested in functions that are independent of the skew-symmetric part of their variable \(F\in {\mathbb {R}}^{d\times d}\), that is, functions that depend only on the symmetric part of F, as motivated by geometrically linear elasticity theory, cf. Section 1. As discussed there, the semi-convexity notions are of interest in this setting as well, i.e., symmetric quasi-, poly- and rank-one convex functions. The special class of semi-convex functions that are independent of skew-symmetric parts motivates the concept of symmetric semi-convexity. According to the following definition, we call a function defined on \({\mathcal {S}}^{d\times d}\) symmetric semi-convex if its natural extension to all matrices in \({\mathbb {R}}^{d\times d}\) is semi-convex in the conventional sense, cf. the work by Zhang [60, 62], where symmetric semi-convexity is called semi-convexity on linear strains:

Definition 2.1

(Symmetric semi-convex functions). A function \(f:{\mathcal {S}}^{d\times d}\rightarrow {\mathbb {R}}\) is symmetric semi-convex, if the function

$$\begin{aligned} {{\tilde{f}}}:{\mathbb {R}}^{d\times d}\rightarrow {\mathbb {R}}, \quad F\mapsto f(F^s) = (f\circ \pi _d)(F) \end{aligned}$$

is semi-convex. Here, \(\pi _d( F) = \tfrac{1}{2} (F + F^T) = F^s\), \(F \in {\mathbb {R}}^{d\times d}\), is the orthogonal projection onto the subspace of symmetric matrices.

Note that in particular, \({{\tilde{f}}}(F) = f(F^s) = {{\tilde{f}}}(F^s) = {{\tilde{f}}}(F^T)\) for any \(F\in {\mathbb {R}}^{d\times d}\), i.e., \({{\tilde{f}}}\) is invariant under symmetrization. As an aside, we mention that a corresponding definition of symmetric convex functions is possible. By linearity of \(\pi _d\), a function is symmetric convex if and only if it is convex.

Next we will collect and review some classical, as well as more recent, results in the context of symmetric semi-convex functions. The following characterizations of symmetric quasi- and rank-one convexity for general dimensions d are straightforward to show and appear to be well-known, see e.g. [22, 60].

Proposition 2.2

Let \(f:{\mathcal {S}}^{d\times d}\rightarrow {\mathbb {R}}\). Then

- (i) f is symmetric quasiconvex if and only if for every \(\varepsilon \in {\mathcal {S}}^{d\times d}\),

$$\begin{aligned} f(\varepsilon )\le \inf _{\varphi \in C^{\infty }_c((0,1)^d;{\mathbb {R}}^d)}\int _{(0,1)^d} f(\varepsilon +(\nabla \varphi )^s)\;\mathrm {d}{x}; \end{aligned}$$(2.1)

- (ii) f is symmetric rank-one convex if and only if

$$\begin{aligned} f(\lambda \varepsilon +(1-\lambda )\eta )\le \lambda f(\varepsilon )+(1-\lambda )f(\eta ) \end{aligned}$$(2.2)

for all \(\lambda \in (0,1)\) and \(\varepsilon , \eta \in {\mathcal {S}}^{d\times d}\) compatible, i.e. \(\varepsilon -\eta = a\odot b := \tfrac{1}{2}(a\otimes b + b\otimes a)\) for some \(a, b \in {\mathbb {R}}^{d}\).

Equivalently, \(t\mapsto f(\varepsilon + t a\odot b)\) is convex for any \(\varepsilon \in {\mathcal {S}}^{d\times d}\) and any \(a,b \in {\mathbb {R}}^d\).
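
The line-wise criterion in Proposition 2.2 (ii) lends itself to a quick numerical sanity check. The following is a minimal sketch, assuming NumPy; the helper names `sym_outer` and `min_second_difference` are hypothetical and not taken from the article. It samples random symmetric matrices and compatible directions \(a\odot b\) and records the smallest central second difference of \(t\mapsto f(\varepsilon + t\, a\odot b)\). A clearly negative value disproves symmetric rank-one convexity, whereas nonnegative values are only evidence, not a proof.

```python
import numpy as np

def sym_outer(a, b):
    """Symmetrized tensor product a ⊙ b = (a ⊗ b + b ⊗ a) / 2."""
    return 0.5 * (np.outer(a, b) + np.outer(b, a))

def min_second_difference(f, d, trials=2000, h=1e-2, seed=0):
    """Smallest central second difference of t -> f(eps + t a⊙b) at t = 0
    over randomly sampled symmetric eps and vectors a, b (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    worst = np.inf
    for _ in range(trials):
        g = rng.standard_normal((d, d))
        eps = 0.5 * (g + g.T)  # random symmetric matrix
        direction = sym_outer(rng.standard_normal(d), rng.standard_normal(d))
        second = (f(eps + h * direction) - 2.0 * f(eps) + f(eps - h * direction)) / h**2
        worst = min(worst, second)
    return worst

# 2d: -det passes the sampled test, det fails it.
neg_det_2d = min_second_difference(lambda e: -np.linalg.det(e), d=2)
det_2d = min_second_difference(lambda e: np.linalg.det(e), d=2)
print(neg_det_2d, det_2d)
```

For quadratic forms the central second difference is exact up to rounding, so in 2d the test reduces to the sign of \(f(a\odot b)\).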

Remark 2.3

- (a) Notice that many works involving semi-convex functions defined on linear strains, such as [10, 21, 22, 46], take the characterizations of Proposition 2.2 as a starting point and definition.

- (b) In [52], a function \(f:{\mathcal {S}}^{d\times d}\rightarrow {\mathbb {R}}\) is called quasiconvex, if for every \(\varepsilon \in {\mathcal {S}}^{d\times d}\),

$$\begin{aligned} f(\varepsilon )\le \inf _{\psi \in C_c^{\infty }((0,1)^d)} \int _{(0,1)^d} f(\varepsilon + D^2\psi )\;\mathrm {d}{x}, \end{aligned}$$

where \(D^2\psi \) denotes the Hessian matrix of \(\psi \), cf. also [23]. This notion is strictly weaker than the symmetric quasiconvexity in the sense of Definition 2.1. Since for every \(\psi \in C_c^{\infty }((0,1)^d)\) the gradient \(\nabla \psi \) is an admissible test field in (2.1), the asserted implication is immediate. To see that it is strict, we consider in 2d the function

$$\begin{aligned} f_0(\varepsilon ) = {\left\{ \begin{array}{ll} \det \varepsilon &{} \text {if }\varepsilon \text { is positive definite},\\ 0 &{} \text {otherwise,} \end{array}\right. } \qquad \varepsilon \in {\mathcal {S}}^{2\times 2}, \end{aligned}$$

which Šverák in [52] proved to be quasiconvex. However, \(f_0\) is not symmetric rank-one convex, and therefore not symmetric quasiconvex (see (2.4) below), since the following map, which is the composition of a compatible line with \(f_0\), is not convex:

$$\begin{aligned}&{\mathbb {R}}\rightarrow {\mathbb {R}}, \quad t\mapsto f_0({\mathbb {I}}+ 2te_1\odot e_2)\\&\quad = {\left\{ \begin{array}{ll} \det ({\mathbb {I}}+ 2te_1\odot e_2) = 1-t^2 &{} \text {for }t\in (-1,1)\\ 0 &{}\text {otherwise,} \end{array}\right. } \end{aligned}$$

where \({\mathbb {I}}\) is the identity matrix, and \(e_1, e_2\) are the standard unit vectors in \({\mathbb {R}}^2\).

Similarly, we show in the 3d setting that the following quasiconvex functions from [52]

$$\begin{aligned} f_l(\varepsilon ) = {\left\{ \begin{array}{ll} |\det \varepsilon | &{} \text {if }\varepsilon \text { has exactly }l\text { negative eigenvalues},\\ 0 &{} \text {otherwise,} \end{array}\right. } \qquad \varepsilon \in {\mathcal {S}}^{3\times 3}, \end{aligned}$$

with \(l=1,2\), are not symmetric rank-one convex. For \(l=1\) and \(l=2\), we use the compatible lines \(t\mapsto \mathrm{diag}(1,1,-1) + 2te_1\odot e_2\) and \(t\mapsto \mathrm{diag}(-1,-1, 1) + 2 t e_1\odot e_2\), respectively.

- (c) Contrary to expectations that may arise in the light of Proposition 2.2, symmetric polyconvexity of a function \(f:{\mathcal {S}}^{d\times d}\rightarrow {\mathbb {R}}\) according to Definition 2.1 is not the same as f being a convex function of symmetric quasiaffine maps (or Null-Lagrangians). Indeed, since there are no non-trivial Null-Lagrangians in the symmetrized context (cf. Section 4.3 for \(d=2\) and Proposition 5.10 for \(d=3\)), the latter property equals convexity of f, and is strictly stronger than symmetric polyconvexity, cf. Example 2.4.

- (d) Linearized elasticity can be viewed within the general \({\mathcal {A}}\)-free framework [26, 43, 54]. With the second-order constant-rank operator \({\mathcal {A}}\) defined for \(V\in C^\infty ((0,1)^d;{\mathbb {R}}^{d\times d})\) by

$$\begin{aligned} ({\mathcal {A}}V)_{jk} = \sum _{i=1}^d \partial _{ik}^2 V_{ji} + \partial _{ij}^2 V_{ki} - \partial ^2_{jk}V_{ii} - \partial _{ii}^2 V_{jk}, \quad j,k=1, \ldots , d, \end{aligned}$$(2.3)

one has that \({\mathcal {A}}V=0\) in \((0,1)^d\) if and only if \(V=(\nabla u)^s\) for some \(u\in C^\infty ((0,1)^d; {\mathbb {R}}^d)\). Consequently, (2.1) corresponds to \({\mathcal {A}}\)-quasiconvexity [26], while (2.2) is equivalent to convexity along directions in the characteristic cone

Here \({\mathbb {A}}(\xi )V = V\xi \otimes \xi + \xi \otimes V\xi - ({{\,\mathrm{tr}\,}}V)\xi \otimes \xi -|\xi |^2 V\) for \(\xi \in {\mathbb {R}}^d\), \(V\in {\mathbb {R}}^{d\times d}\) is the symbol of \({\mathcal {A}}\), and \(\ker {\mathbb {A}}(\xi ) = \{a\odot \xi : a\in {\mathbb {R}}^d\}\). For more details, see [26, Example 3.10].

We point out that, even though the notions of symmetric quasiconvexity and \({\mathcal {A}}\)-quasiconvexity with \({\mathcal {A}}\) as in (2.3) fall together, there is a conceptual difference between symmetric polyconvexity and \({\mathcal {A}}\)-polyconvexity due to the lack of non-trivial Null-Lagrangians in the symmetric setting (see c) above). Recall that a function is called \({\mathcal {A}}\)-polyconvex if it can be represented as the composition of a convex function with an \({\mathcal {A}}\)-quasiaffine one, cf. [45, Definition 2.5]. Whereas any \({\mathcal {A}}\)-polyconvex function has to be convex in the symmetric setting, there are symmetric polyconvex functions that are non-convex (cf. Theorems 4.1 and 5.1).
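
The 2d counterexample in Remark 2.3 (b) can be reproduced numerically. The sketch below, assuming NumPy and using the hypothetical helper names `f0` and `line`, evaluates \(f_0\) along the compatible line \(t\mapsto {\mathbb {I}}+ 2te_1\odot e_2\) and compares the value at \(t=0\) with the average of the values at \(t=\pm 2\), where the matrix is indefinite and \(f_0\) vanishes. A positive gap violates the midpoint convexity inequality along this line.

```python
import numpy as np

def f0(eps):
    """Function f_0 from Remark 2.3 (b): det on positive definite matrices, 0 elsewhere."""
    if np.all(np.linalg.eigvalsh(eps) > 0):
        return float(np.linalg.det(eps))
    return 0.0

def line(t):
    """Compatible line I + 2 t e1 ⊙ e2 in the symmetric 2x2 matrices."""
    return np.array([[1.0, t], [t, 1.0]])

values = {t: f0(line(t)) for t in (-2.0, -0.5, 0.0, 0.5, 2.0)}

# Convexity along the line would require f0(line(0)) <= (f0(line(-2)) + f0(line(2))) / 2.
midpoint_gap = values[0.0] - 0.5 * (values[-2.0] + values[2.0])
print(values, midpoint_gap)
```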

The relation between the different notions of symmetric semi-convexity is an immediate consequence of the implications for their classical versions without symmetry, see [16]. Hence, it holds for \(f:{\mathcal {S}}^{d\times d}\rightarrow {\mathbb {R}}\) that

$$\begin{aligned} f \text { convex} \;\Rightarrow \; f \text { symmetric polyconvex} \;\Rightarrow \; f \text { symmetric quasiconvex} \;\Rightarrow \; f \text { symmetric rank-one convex}. \end{aligned}$$(2.4)

Equivalence in (2.4) is in general not true. Counterexamples that are commonly cited in the classical setting for finite-valued functions on \({\mathbb {R}}^{d\times d}\) are for instance \(F\mapsto \det F\), the parameter-dependent example of [1, 19] for \(d=2\), the famous counterexample by Šverák [53], which shows that quasiconvexity is strictly stronger than rank-one convexity if \(d\ge 3\), and the example of 3d rank-one convex, but not polyconvex quadratic forms in [29, 50]. All these counterexamples depend in a non-trivial way on the skew-symmetric parts, and are hence not suitable in the context of functions on symmetric matrices. In [22, 32], one finds examples of symmetric quasiconvex functions that are not convex, which have resulted from relaxation of double-well functions. We will come back to the discussion of why the reverse implications do not hold, see in particular Example 2.4 and the proof of Theorem 5.7.

An important class of semi-convex functions with a very long history of intensive study are quadratic ones, for more recent work we refer e.g. to [29, 30, 61]. It was shown by van Hove in [56, 57] that for general quadratic forms, the notions of quasiconvexity and rank-one convexity coincide. For \(d=2\), the latter are even equivalent to polyconvexity, see [16, Theorem 5.25] and the references therein. In view of Definition 2.1, these results carry over immediately to the symmetric setting, where a quadratic form is any expression

$$\begin{aligned} f(\varepsilon ) = \sum _{i,j,k,l=1}^d M_{ijkl}\,\varepsilon _{ij}\varepsilon _{kl}, \qquad \varepsilon \in {\mathcal {S}}^{d\times d}, \end{aligned}$$

where M is a fourth order tensor with the symmetries \(M_{ijkl} = M_{lkij}=M_{ijlk}\). Note that a quadratic function f on symmetric matrices is convex if and only if \(f(\varepsilon ) \ge 0\) for any \(\varepsilon \in {\mathcal {S}}^{d\times d}\). A useful characterization for a quadratic form f to be symmetric rank-one convex is that

$$\begin{aligned} f(a\odot b)\ge 0 \quad \text {for all } a, b\in {\mathbb {R}}^d, \end{aligned}$$(2.5)

as follows directly from Proposition 2.2 ii). Based on this characterization, Zhang [61] classified the symmetric rank-one convex quadratic forms for \(d=2,3\) via their nullity and Morse index. In parallel to the classical setting, symmetric rank-one convexity is strictly weaker than symmetric polyconvexity for \(d=3\), as our example in Theorem 5.7 shows.

The following basic example served us as a motivation for the characterization results of symmetric polyconvex functions in Theorems 4.1 and 5.1:

Example 2.4

Let \(d=2,3\). The determinant map \({\mathcal {S}}^{d\times d}\rightarrow {\mathbb {R}}\), \(\varepsilon \mapsto \det \varepsilon \) is not symmetric rank-one convex, and therefore neither symmetric quasi- nor polyconvex.

In 2d, \(\varepsilon \mapsto \det \varepsilon \) is a quadratic form and one finds that for \(a, b\in {\mathbb {R}}^2\),

$$\begin{aligned} \det (a\odot b) = a_1b_1a_2b_2 - \tfrac{1}{4}(a_1b_2+a_2b_1)^2 = -\tfrac{1}{4}(a_1b_2-a_2b_1)^2 \le 0. \end{aligned}$$

Hence, in view of (2.5), the determinant map is indeed not symmetric rank-one convex, but we find that \(\varepsilon \mapsto -\det \varepsilon \) is. Since symmetric rank-one convexity is equivalent to symmetric polyconvexity for quadratic forms on \({\mathcal {S}}^{2\times 2}\), \(\varepsilon \mapsto -\det \varepsilon \) is symmetric polyconvex, and thus symmetric quasiconvex. A direct argument for the symmetric quasiconvexity of \(\varepsilon \mapsto -\det \varepsilon \) uses (3.1) below, the fact that \(\det F^a \ge 0\) for any antisymmetric matrix \(F^a\) in 2d, and the Null-Lagrangian property of the determinant to conclude that

$$\begin{aligned} \int _{(0,1)^2} -\det (\varepsilon + (\nabla \varphi )^s)\;\mathrm {d}{x} = \int _{(0,1)^2} -\det (\varepsilon + \nabla \varphi ) + \det ((\nabla \varphi )^a)\;\mathrm {d}{x} \ge -\int _{(0,1)^2} \det (\varepsilon + \nabla \varphi )\;\mathrm {d}{x} = -\det \varepsilon \end{aligned}$$

for all \(\varphi \in C^{\infty }_c((0,1)^2;{\mathbb {R}}^2)\). Interestingly, the previous calculation remains valid if, instead of the function \(-\det \varepsilon \), we consider \(f(\varepsilon )=g(\varepsilon ,\det \varepsilon )\) with \(g:{\mathbb {R}}^5\rightarrow {\mathbb {R}}\) being a convex function that is non-increasing with respect to the last variable. Hence, every such function f is symmetric quasiconvex. In Theorem 4.1, we show that these functions in fact serve as characterization of symmetric polyconvexity in 2d.
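
The two algebraic facts behind this direct argument can be checked on random samples. The sketch below assumes NumPy and assumes that the 2d relation between the determinants of a matrix and its symmetric and antisymmetric parts takes the form \(\det F^s = \det F - \det F^a\); this form is our reading and follows from a direct computation, but it is not quoted from the article. The variable names are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(1)
max_identity_error = 0.0
min_det_antisym = np.inf

for _ in range(1000):
    F = rng.standard_normal((2, 2))
    Fs = 0.5 * (F + F.T)   # symmetric part
    Fa = 0.5 * (F - F.T)   # antisymmetric part
    # Assumed 2d identity: det F^s = det F - det F^a.
    err = abs(np.linalg.det(Fs) - (np.linalg.det(F) - np.linalg.det(Fa)))
    max_identity_error = max(max_identity_error, err)
    # det F^a = c^2 >= 0 for F^a = [[0, c], [-c, 0]].
    min_det_antisym = min(min_det_antisym, np.linalg.det(Fa))

print(max_identity_error, min_det_antisym)
```

Together with \(\int \det (\varepsilon +\nabla \varphi )\,\mathrm {d}x = \det \varepsilon \) for compactly supported \(\varphi \), these two facts give the inequality for \(-\det \) pointwise under the integral.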

In the 3d case, both \(\varepsilon \mapsto \det \varepsilon \) and \(\varepsilon \mapsto - \det \varepsilon \) fail to be symmetric rank-one convex. However, by taking the diagonal \(2\times 2\) minors, simple examples of symmetric rank-one convex functions can be constructed. A direct adaptation of the 2d argument above shows that the maps \(\varepsilon \mapsto -(\mathrm{cof\,}\varepsilon )_{ii}\) for \(\varepsilon \in {\mathcal {S}}^{3\times 3}\) with \(i=1,2,3\) are symmetric polyconvex, while \(\varepsilon \mapsto (\mathrm{cof\,}\varepsilon )_{ii}\) are not.
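
The 3d statements can be illustrated in the same spirit. The following sketch, assuming NumPy and the hypothetical helpers `cof_diag` and `second_difference`, evaluates second differences along the compatible direction \(2e_1\odot e_2\): at \(\varepsilon ={\mathbb {I}}\) the determinant is strictly concave along this line, and at \(\varepsilon =-{\mathbb {I}}\) the same holds for \(-\det \). A random sample then gives numerical evidence, not a proof, that \(-(\mathrm{cof\,}\varepsilon )_{ii}\) is convex along compatible lines.

```python
import numpy as np

def sym_outer(a, b):
    """Symmetrized tensor product a ⊙ b."""
    return 0.5 * (np.outer(a, b) + np.outer(b, a))

def cof_diag(eps, i):
    """Diagonal cofactor (cof eps)_ii: determinant of eps with row i and column i deleted."""
    keep = [k for k in range(3) if k != i]
    return np.linalg.det(eps[np.ix_(keep, keep)])

def second_difference(f, eps, direction, h=1e-2):
    """Central second difference of t -> f(eps + t * direction) at t = 0."""
    return (f(eps + h * direction) - 2.0 * f(eps) + f(eps - h * direction)) / h**2

e1, e2 = np.eye(3)[0], np.eye(3)[1]
direction = 2.0 * sym_outer(e1, e2)

# det(I + t D) = 1 - t^2 and -det(-I + t D) = 1 - t^2: both are concave in t.
det_at_identity = second_difference(np.linalg.det, np.eye(3), direction)
neg_det_at_minus_identity = second_difference(lambda e: -np.linalg.det(e), -np.eye(3), direction)

# Sampled check for the negative diagonal cofactors.
rng = np.random.default_rng(0)
worst_cof = np.inf
for _ in range(1000):
    g = rng.standard_normal((3, 3))
    eps = 0.5 * (g + g.T)
    d = sym_outer(rng.standard_normal(3), rng.standard_normal(3))
    for i in range(3):
        worst_cof = min(worst_cof, second_difference(lambda e: -cof_diag(e, i), eps, d))

print(det_at_identity, neg_det_at_minus_identity, worst_cof)
```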

3 Preliminaries

Before proving the results announced in the introduction, we use this section to collect further relevant notation and auxiliary results.

3.1 Notation

This work focuses on the space dimensions \(d=2,3\). We write \(a\cdot b\) with \(a, b\in {\mathbb {R}}^d\) for the standard inner product on \({\mathbb {R}}^d\), and use the scalar product \(A:B=\sum _{i, j=1}^d A_{ij} B_{ij}\) for \(d\times d\) matrices A and B. The latter induces the Frobenius norm \(|A|^2:=A:A\) on \({\mathbb {R}}^{d\times d}\). Moreover, \(e_i\) with \(i=1, \ldots , d\) are the standard unit vectors in \({\mathbb {R}}^d\), \((a\otimes b)_{ij} = a_ib_j\) with \(i, j=1, \ldots , d\) for \(a, b\in {\mathbb {R}}^d\) is the tensor product of a and b, and \(a\odot b=\frac{1}{2}(a\otimes b + b\otimes a)\) with \(a, b\in {\mathbb {R}}^d\). Further, \(\mathrm{diag}(\lambda _1, \ldots , \lambda _d)\) with \(\lambda _i\in {\mathbb {R}}\) is our notation for diagonal \(d\times d\) matrices.

Let us denote by \({\mathcal {S}}^{d\times d}\) the set of symmetric matrices in \({\mathbb {R}}^{d\times d}\). Any \(F\in {\mathbb {R}}^{d\times d}\) can be decomposed into its symmetric and antisymmetric part, i.e. \(F=F^s+F^a\) with \(F^s=\frac{1}{2}(F+F^T)\in {\mathcal {S}}^{d\times d}\) and \(F^a=\frac{1}{2}(F-F^T)\). For the subsets of positive and negative semi-definite matrices in \({\mathcal {S}}^{d\times d}\) we use the notations \({\mathcal {S}}^{d\times d}_+\) and \({\mathcal {S}}^{d\times d}_-\), respectively.

In the 3d case, the \(2\times 2\) minors of \(F\in {\mathbb {R}}^{3\times 3}\) are \(M_{ij}(F) = \det {\widehat{F}}_{ij}\) for \(i, j\in \{1,2,3\}\), where \({\widehat{F}}_{ij}\) stands for the matrix obtained from F by deleting the ith row and the jth column. The cofactor matrix of F is then given by

One can split \(\mathrm{cof\,}F\in {\mathbb {R}}^{3\times 3}\) into its diagonal and non-diagonal entries, denoted by \(\mathrm{cof\,}_{\mathrm{diag}} F \in {\mathbb {R}}^3\) and \(\mathrm{cof\,}_{\mathrm{off}} F\in {\mathbb {R}}^6\), respectively. More precisely,

and

For symmetric \(\varepsilon \in {\mathcal {S}}^{3\times 3}\) we know that \(\mathrm{cof\,}\varepsilon \) is symmetric, so that we may take \(\mathrm{cof\,}_{\mathrm{off}} \varepsilon \in {\mathbb {R}}^3\), i.e.,

3.2 Properties of Symmetric Matrices and Their Minors

For \(d=2\), we observe that

and for \(d=3\), straightforward calculations yield that

and

Notice that \(\mathrm{cof\,}F^a, \mathrm{cof\,}F^s\in {\mathcal {S}}^{3\times 3}\) for \(F \in {\mathbb {R}}^{3\times 3}\). In general, \(\mathrm{cof\,}F^T =(\mathrm{cof\,}F)^T\) for all \(F\in {\mathbb {R}}^{3\times 3}\).

Another useful formula is Cramer’s rule, which states that for all \(F\in {\mathbb {R}}^{3\times 3}\),

Here \({\mathbb {I}}\) is the identity matrix in \({\mathbb {R}}^{3\times 3}\).
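As a sanity check of the identities in this subsection, the following sketch verifies two of them in exact rational arithmetic: Cramer's rule in the form \(F(\mathrm{cof\,}F)^T=(\det F)\,{\mathbb {I}}\), and the splitting \((\mathrm{cof\,}F)^s=\mathrm{cof\,}F^s+\mathrm{cof\,}F^a\) that is used later in the proof of Theorem 5.1. It assumes the sign convention \((\mathrm{cof\,}F)_{ij}=(-1)^{i+j}M_{ij}(F)\) without transposition; all function names are illustrative and not taken from the paper.

```python
from fractions import Fraction
import random


def transpose(F):
    return [[F[j][i] for j in range(3)] for i in range(3)]


def combine(A, B, alpha, beta):
    """Entrywise alpha*A + beta*B for 3x3 matrices."""
    return [[alpha * A[i][j] + beta * B[i][j] for j in range(3)] for i in range(3)]


def sym(F):
    """Symmetric part F^s = (F + F^T)/2."""
    return combine(F, transpose(F), Fraction(1, 2), Fraction(1, 2))


def asym(F):
    """Antisymmetric part F^a = (F - F^T)/2."""
    return combine(F, transpose(F), Fraction(1, 2), Fraction(-1, 2))


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def cof(F):
    """Assumed convention: (cof F)_ij = (-1)^(i+j) * M_ij(F)."""
    C = [[Fraction(0)] * 3 for _ in range(3)]
    for i in range(3):
        rows = [k for k in range(3) if k != i]
        for j in range(3):
            cols = [k for k in range(3) if k != j]
            minor = (F[rows[0]][cols[0]] * F[rows[1]][cols[1]]
                     - F[rows[0]][cols[1]] * F[rows[1]][cols[0]])
            C[i][j] = (-1) ** (i + j) * minor
    return C


def det3(F):
    """Laplace expansion along the first row."""
    C = cof(F)
    return sum(F[0][j] * C[0][j] for j in range(3))


def check_identities(F):
    """Return (Cramer's rule holds, cofactor splitting holds) for F."""
    d = det3(F)
    scaled_identity = [[d if i == j else Fraction(0) for j in range(3)]
                       for i in range(3)]
    cramer = matmul(F, transpose(cof(F))) == scaled_identity
    split = sym(cof(F)) == combine(cof(sym(F)), cof(asym(F)), 1, 1)
    return cramer, split


def random_matrix(rng):
    return [[Fraction(rng.randint(-9, 9)) for _ in range(3)] for _ in range(3)]


rng = random.Random(0)
print(all(check_identities(random_matrix(rng)) == (True, True)
          for _ in range(100)))
```

Exact fractions are used instead of floating point so that the comparisons are genuine equalities rather than tolerance checks.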

If F is antisymmetric, i.e. \(F=F^a\), there exists \(x\in {\mathbb {R}}^3\) such that

and consequently,

Hence, we obtain for \(a, b\in {\mathbb {R}}^3\) that

where \(a\times b\) stands for the cross product of the vectors a and b. In component notation, \((a\times b)_i = a_{j}b_{k}-a_{k}b_{j}\) for every circular permutation (ijk) of (123). In view of (3.2) and the elementary calculation that \(\mathrm{cof\,}(a\otimes b)=0\), we also find that

These elementary observations allow us to prove the following useful lemma:

Lemma 3.1

For \(A \in {\mathcal {S}}^{3\times 3}\) let \(q_A:{\mathbb {R}}^{3\times 3}\rightarrow {\mathbb {R}}\) be the quadratic form given by

Then the following three conditions are equivalent:

-

(i)

\(q_A\) is convex;

-

(ii)

\(q_A\) is rank-one convex;

-

(iii)

A is positive semi-definite.

Proof

Clearly, (i) implies (ii). For the implication “(ii) \(\Rightarrow \) (iii)”, take any \(x\in {\mathbb {R}}^3\) and select vectors \(a, b\in {\mathbb {R}}^3\) such that \(a\times b=x\). Then, by (3.5),

for all \(t\in {\mathbb {R}}\). Since \(q_A\) is convex along the rank-one line \(t\mapsto t(a\otimes b)\), it follows that \(Ax\cdot x\ge 0\). Hence, \(A\in {\mathcal {S}}^{3\times 3}_+\).

To prove “(iii) \(\Rightarrow \) (i)”, we show that \(q_A(F)\ge 0\) for all \(F\in {\mathbb {R}}^{3\times 3}\), which for a quadratic form is equivalent to convexity. By choosing \(x\in {\mathbb {R}}^3\) such that

one obtains due to (3.4) and the positive semi-definiteness of A that

\(\square \)
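For orientation, the two computations in this proof can be sketched as follows. The sketch assumes that (3.7) reads \(q_A(F)=A:\mathrm{cof\,}F^a\), which is the form in which \(q_{-B}\) enters the proof of Theorem 5.1. It uses \(\mathrm{cof\,}F^a=x\otimes x\) from (3.4) and the elementary fact that \((a\otimes b)^a\) acts as \(v\mapsto -\frac{1}{2}(a\times b)\times v\), so that its cofactor matrix is \(\frac{1}{4}(a\times b)\otimes (a\times b)\). With \(x=a\times b\) in the first line and x the vector associated with \(F^a\) in the second line, one would then have

$$\begin{aligned} q_A(t\, a\otimes b)&= \tfrac{t^2}{4}\, A:(x\otimes x) = \tfrac{t^2}{4}\, Ax\cdot x \quad \text {for all } t\in {\mathbb {R}},\\ q_A(F)&= A:(x\otimes x) = Ax\cdot x \quad \text {for all } F\in {\mathbb {R}}^{3\times 3}. \end{aligned}$$

The first expression is convex in t exactly when \(Ax\cdot x\ge 0\), and the second one is non-negative for every F exactly when A is positive semi-definite.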

We denote the convex hull of any set \(S\subset {\mathbb {R}}^{d\times d}\) by \(S^c\). Later on, we will utilize the characterizations of two specific convex hulls, namely

and

This follows from the observation that the convex hull of a set \(S\subset {\mathbb {R}}^{d\times d}\) with the property that \(S=\alpha S\) for all \(\alpha \in {\mathbb {R}}\setminus \{0\}\) coincides with its linear span. For \(S=\{\varepsilon \in {\mathcal {S}}^{d\times d}: \det \varepsilon =0\}\) the latter coincides with \({\mathcal {S}}^{d\times d}\).
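A concrete way to see that every symmetric matrix is such a convex combination is sketched below via the spectral theorem; this is an illustration and not necessarily the argument the authors have in mind. Writing \(\varepsilon \in {\mathcal {S}}^{d\times d}\) with eigenvalues \(\lambda _i\) and orthonormal eigenvectors \(v_i\), one has

$$\begin{aligned} \varepsilon = \sum _{i=1}^d \lambda _i\, v_i\otimes v_i = \sum _{i=1}^d \frac{1}{d}\, \bigl (d\lambda _i\, v_i\otimes v_i\bigr ). \end{aligned}$$

Each matrix \(d\lambda _i\, v_i\otimes v_i\) is symmetric with rank at most one. Hence, its determinant vanishes for \(d=2\), and all of its \(2\times 2\) minors, and therefore its cofactor matrix, vanish for \(d=3\).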

3.3 Convex Functions, Subdifferentials and Monotonicity

For vectors \(y, {\bar{y}}\in {\mathbb {R}}^n\) the order relation \(y \le {\bar{y}}\) is to be understood componentwise, that is as \(y_i \le {\bar{y}}_i\) for \(i=1, \ldots , n\), and analogously for \(y\ge {\bar{y}}\). Monotonicity of a function \(h:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) is also defined componentwise, that is, h is called non-increasing (non-decreasing) if \(h(y)\ge h({\bar{y}})\) for all \(y, {\bar{y}}\in {\mathbb {R}}^n\) with \(y \le {\bar{y}}\) (\(y\ge {\bar{y}}\)).

For a convex function \(h:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) and \(y\in {\mathbb {R}}^n\), the non-empty, compact and convex set

is the subdifferential of h in y. Next, we summarize some further well-known facts for subdifferentials. An element \(b\in \partial h(y)\) is commonly referred to as subgradient of h in y, see e.g. [16, 48]. The subdifferential was introduced to generalize the classical notion of differentiability to general convex functions. If h is differentiable in y, then \(\partial h(y)\) contains exactly one element, namely the gradient \(\nabla h(y)\). In generalization of the smooth case, the function h is non-increasing (non-decreasing) if and only if \(\partial h(y)\subset (-\infty , 0]^n\) (\(\partial h(y)\subset [0, \infty )^n\)) for all \(y\in {\mathbb {R}}^n\). The multivalued map \(\partial h: {\mathbb {R}}^n\rightarrow {\mathcal {P}}({\mathbb {R}}^n)\), where \({\mathcal {P}}({\mathbb {R}}^n)\) is the power set of \({\mathbb {R}}^n\), is a monotone operator, meaning that

cf. [47]. Moreover, \(\partial h\) is continuous in the sense that if \((y^{(j)})_j\subset {\mathbb {R}}^n\) is a sequence that converges to some \(y\in {\mathbb {R}}^n\) and \(\delta >0\), there exists an index \(J\in {\mathbb {N}}\) such that

where \(B_1(0)\) is the unit ball in \({\mathbb {R}}^n\) with center in the origin, see [48, Theorem 24.5].

Next, we will collect and prove a few auxiliary results on (partial) subdifferentials and monotonicity properties of convex functions defined on the product space \({\mathbb {R}}^m\times {\mathbb {R}}^n\). If \(g: {\mathbb {R}}^m\times {\mathbb {R}}^n \rightarrow {\mathbb {R}}\) is convex, one can define the partial subdifferentials \(\partial _i g(x, y)\) for \(i=1,2\), via (3.10) by freezing the dependence of one of the (vector) variables. Regarding the relation between the full and the partial subdifferentials, it is immediately seen that

For the reverse inclusion, which is more subtle, we state the following version of [6, Theorem 3.3] adapted to the present situation. Let us remark that in the case of convex functions, the notion of Dini subgradient or Fréchet subderivate used in [6] coincides with the classical subgradients of convex analysis. A summary of results on Fréchet subdifferentials can be found in the survey article [33].

Lemma 3.2

Let \(g:{\mathbb {R}}^m\times {\mathbb {R}}^n\rightarrow {\mathbb {R}}\) be convex. Then there exists a dense Borel set \(S\subset {\mathbb {R}}^m\times {\mathbb {R}}^n\) whose complement is a Lebesgue null-set such that

Moreover, for any \(x\in {\mathbb {R}}^m\) the set \(S_x=\{y\in {\mathbb {R}}^n: (x, y)\in S\}\) is dense in \({\mathbb {R}}^n\) and its complement has zero Lebesgue measure.

The next lemma generalizes the elementary observation that every bounded and convex function \({\mathbb {R}}^n\rightarrow {\mathbb {R}}\) is constant to the situation where one has only partial bounds on the growth behavior of the function in selected variables.

Lemma 3.3

Let \(g:{\mathbb {R}}^m\times {\mathbb {R}}^n \rightarrow {\mathbb {R}}\) be convex. Then

-

(i)

The function g is non-increasing (non-decreasing) in the second variable if and only if \(\partial _2 g(x, y)\subset (-\infty , 0]^n\) (\(\partial _2 g(x,y)\subset [0, \infty )^n\)) for every \((x,y) \in {\mathbb {R}}^m\times {\mathbb {R}}^n\);

-

(ii)

If \(\partial g(x, y)\subset {\mathbb {R}}^m\times (-\infty , 0]^n\) (\(\partial g(x, y)\subset {\mathbb {R}}^m\times [0, \infty )^n\)) for all \((x, y) \in {\mathbb {R}}^m\times {\mathbb {R}}^n\), then \(\partial _2 g(x, y)\subset (-\infty , 0]^n\) (\(\partial _2 g(x, y)\subset [0, \infty )^n\)) for every \((x, y)\in {\mathbb {R}}^m\times {\mathbb {R}}^n\);

-

(iii)

If there exists \({\hat{x}}\in {\mathbb {R}}^m\) such that \(\partial _2 g({\hat{x}}, y)\subset (-\infty , 0]^n\) (\(\partial _2 g({\hat{x}}, y)\subset [0, \infty )^n\)) for all \(y\in {\mathbb {R}}^n\), then \(\partial _2 g(x, y)\subset (-\infty , 0]^n\) (\(\partial _2 g(x, y)\subset [0, \infty )^n\)) for all \((x, y)\in {\mathbb {R}}^m\times {\mathbb {R}}^n\);

-

(iv)

If there exists \(({\hat{x}}, {\hat{y}})\in {\mathbb {R}}^{m}\times {\mathbb {R}}^n\) and a constant \(C>0\) such that

$$\begin{aligned} g({\hat{x}}, y)\le C \qquad \text {for all }y\ge {\hat{y}}\ (y\le {\hat{y}}), \end{aligned}$$(3.14)

then \(\partial _2 g(x, y)\subset (-\infty , 0]^n\) (\(\partial _2 g(x, y)\subset [0, \infty )^n\)) for all \((x, y)\in {\mathbb {R}}^m\times {\mathbb {R}}^n\).

Proof

We will only prove the primary statements, since those in brackets follow analogously. Part (i) results directly from the single-variable case mentioned above.

Regarding (ii), we let S be the set resulting from Lemma 3.2 and fix \((x, y)\in {\mathbb {R}}^{m}\times {\mathbb {R}}^n\). If \((x, y)\in S\), the inclusion \(\partial _2 g(x, y)\subset (-\infty , 0]^n\) is an immediate consequence of Lemma 3.2. If \((x,y)\notin S\), the density of \(S_x\) in \({\mathbb {R}}^n\) yields for any \(i\in \{1, \ldots , n\}\) a sequence \((y^{(i, j)})_j\subset S_x\) such that

Recall that \(e_i\) denotes the ith standard unit vector in \({\mathbb {R}}^n\). Together with the monotonicity of the subdifferential operator (see (3.11)) it follows then that for every \(b^{(i, j)}\in \partial _2 g(x, y^{(i, j)})\subset (-\infty , 0]^n\) and \(b\in \partial _2 g(x, y)\),

where \(\delta ^{(i,j)} = y^{(i, j)}-y-e_i\). Letting \(j\rightarrow \infty \), the convergence statement (3.15) along with the uniform boundedness of \(b^{(i,j)}\) with regard to j, which is a consequence of the continuity of the subdifferential (cf. (3.12)), implies that \(b_i = b\cdot e_i\le 0\) for every \(i\in \{1, \ldots , n\}\), meaning \(b\le 0\). Hence, all subgradients in \(\partial _2 g(x, y)\) are not greater than the zero vector, which is (ii).

For (iii), we observe that \(g({{\hat{x}}}, \cdot ):{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) is non-increasing according to (i). If \(x\in {\mathbb {R}}^m\), \(y\in {\mathbb {R}}^n\) and \(({\tilde{b}}, b)\in \partial g(x, y)\), then it holds for all \({\tilde{y}}\in {\mathbb {R}}^n\) with \({\tilde{y}}\ge 0\) that

Since the left-hand side is independent of \({{\tilde{y}}}\), one has that \(b\le 0\). Hence, \(\partial g(x, y)\subset {\mathbb {R}}^m\times (-\infty , 0]^n\), and therefore \(\partial _2 g(x, y)\subset (-\infty , 0]^n\) for all \((x, y)\in {\mathbb {R}}^m\times {\mathbb {R}}^n\) by (ii).

To see iv), let \(y\in {\mathbb {R}}^n\) and consider the subdifferential inequality with respect to the second variable. If \({{\hat{b}}} \in \partial _2 g({{\hat{x}}}, y)\), then

By letting \({{\tilde{y}}}\rightarrow +\infty \) componentwise, we deduce in view of the upper bound in (3.14) that \({{\hat{b}}}\le 0\). This proves that \(\partial _2 g({\hat{x}}, y) \subset (-\infty , 0]^n\) for all \(y\in {\mathbb {R}}^n\), and (iv) results then directly from (iii). \(\quad \square \)
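A simple example, chosen here purely for illustration, shows how part (iv) operates in the case \(m=n=1\). The function \(g(x, y)=e^{x-y}\) is convex on \({\mathbb {R}}\times {\mathbb {R}}\) as the composition of the exponential function with a linear map, and with \({\hat{x}}=0\), \({\hat{y}}=0\) and \(C=1\) it satisfies the one-sided bound of (iv):

$$\begin{aligned} g(0, y) = e^{-y}\le 1 \quad \text {for all } y\ge 0, \qquad \partial _2 g(x, y) = \{-e^{x-y}\}\subset (-\infty , 0] \quad \text {for all } (x, y)\in {\mathbb {R}}\times {\mathbb {R}}. \end{aligned}$$

The bound is only required along the single slice \(x={\hat{x}}\), while the conclusion holds for every x. In contrast, \(g(x,y)=x^2+y^2\) is unbounded from above on every ray \(y\ge {\hat{y}}\) and is indeed not monotone in y.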

After suitable identifications, the above results also apply to functions defined on Cartesian products between \({\mathbb {R}}^{d\times d}\), \({\mathcal {S}}^{d \times d}\), and \({\mathbb {R}}^n\). Moreover, we have the following lemma, which we will use in the proof of Theorem 5.1:

Lemma 3.4

Let \(g:{\mathcal {S}}^{d\times d}\times {\mathcal {S}}^{d\times d}\rightarrow {\mathbb {R}}\) be convex. Then,

-

(i)

If \(\partial g(\varepsilon , \eta )\subset {\mathcal {S}}^{d\times d}\times {\mathcal {S}}^{d\times d}_-\) for all \(\varepsilon , \eta \in {\mathcal {S}}^{d\times d}\), then \(\partial _2 g(\varepsilon , \eta )\subset {\mathcal {S}}^{d\times d}_-\) for every \(\varepsilon , \eta \in {\mathcal {S}}^{d\times d}\);

-

(ii)

If there exists \({\hat{\varepsilon }}\in {\mathcal {S}}^{d\times d}\) and a constant \(C>0\) such that

$$\begin{aligned} g({\hat{\varepsilon }}, x\otimes x)\le C \qquad \text {for all }x\in {\mathbb {R}}^d, \end{aligned}$$

then \(\partial _2 g(\varepsilon , \eta )\subset {\mathcal {S}}^{d\times d}_-\) for all \(\varepsilon , \eta \in {\mathcal {S}}^{d\times d}\).

Proof

The proof is similar to that of the previous lemma. Let \(\varepsilon , \eta \in {\mathcal {S}}^{d\times d}\) and \(B\in \partial _2g(\varepsilon , \eta )\), as well as \(x\in {\mathbb {R}}^d\) be fixed. To prove the statement i), it suffices to verify that

After identifying \({\mathcal {S}}^{d\times d}\) with \({\mathbb {R}}^{\frac{1}{2}d(d+1)}\), Lemma 3.2 implies the existence of a dense set \(S_\varepsilon \subset {\mathcal {S}}^{d\times d}\) whose complement is a null-set such that \(\partial _2 g(\varepsilon , {{\tilde{\eta }}})\subset {\mathcal {S}}^{d\times d}_-\) for any \({{\tilde{\eta }}}\in S_\varepsilon \). Hence, we can find a sequence \((\eta ^{(x, j)})_j \subset S_\varepsilon \) such that

Let \(B^{(x, j)} \in \partial _2 g(\varepsilon , \eta ^{(x, j)})\subset {\mathcal {S}}^{d\times d}_-\). It follows then along with the monotonicity of the subdifferential that

where \(\delta ^{(x, j)} = \eta ^{(x,j)} - \eta - x\otimes x\). Finally, we pass to the limit \(j\rightarrow \infty \) in (3.18). As \(\delta ^{(x, j)}\rightarrow 0\) for \(j\rightarrow \infty \) by (3.17), and \(B^{(x, j)}\) is uniformly bounded with respect to j due to the continuity of the subdifferential in the sense of (3.12), one obtains (3.16) as desired.

Next, we turn to ii). Let \(\eta \in {\mathcal {S}}^{d\times d}\) and observe that for any \({\hat{B}}\in \partial _2 g({{\hat{\varepsilon }}}, \eta )\), \(x\in {\mathbb {R}}^d\) and \(t\in {\mathbb {R}}\),

Letting \(t\rightarrow \infty \) implies \({\hat{B}} : (x\otimes x) = {{\hat{B}}} x\cdot x\le 0\). This shows that \({\hat{B}}\) is negative semi-definite and

Then for \(\varepsilon , \eta \in {\mathcal {S}}^{d\times d}\) and \(({\tilde{B}}, B) \in \partial g(\varepsilon , \eta )\) and \(x\in {\mathbb {R}}^d\),

Since the left-hand side is independent of x, the symmetric matrix B has to be negative semi-definite. Thus, \(\partial g(\varepsilon , \eta )\subset {\mathcal {S}}^{d\times d}\times {\mathcal {S}}^{d\times d}_-\) for all \(\varepsilon , \eta \in {\mathcal {S}}^{d\times d}\), and ii) is an immediate consequence of i). \(\quad \square \)

4 Symmetric Polyconvexity in 2d

In this section, we provide a characterization of symmetric polyconvex functions and study symmetric polyaffine functions as well as symmetric polyconvex quadratic forms in 2d.

4.1 Characterization of Symmetric Polyconvexity in 2d

The next theorem gives a necessary and sufficient condition for a real function on \({\mathcal {S}}^{2\times 2}\) to be symmetric polyconvex. While a classical polyconvex function can be expressed as a convex function of minors, symmetric polyconvex functions in the 2d case can be represented as convex functions of minors satisfying an additional monotonicity condition in the variable of the determinant.

Theorem 4.1

A function \(f:{\mathcal {S}}^{2\times 2}\rightarrow {\mathbb {R}}\) is symmetric polyconvex if and only if there exists a convex function \(g:{\mathcal {S}}^{2\times 2}\times {\mathbb {R}}\rightarrow {\mathbb {R}}\) that is non-increasing with respect to the second variable such that

Proof

For the proof of sufficiency, let \(f(\varepsilon )=g(\varepsilon , \det \varepsilon )\) with g as in the statement. Then, along with (3.1), we obtain for \(F\in {\mathbb {R}}^{2\times 2}\) that

where \({\tilde{g}}: {\mathbb {R}}^{2\times 2} \times {\mathbb {R}}\rightarrow {\mathbb {R}}\) is given as

By definition, the function \({\tilde{g}}\) is the composition of g with a map that is linear in the first and concave in the second component. The fact that g is convex and non-increasing in its second argument shows that \({\tilde{g}}\) is convex. Hence, \({\tilde{f}}\) is polyconvex, which yields the symmetric polyconvexity of f.

To prove the reverse implication let \(f:{\mathcal {S}}^{2\times 2}\rightarrow {\mathbb {R}}\) be symmetric polyconvex. Then, \({\tilde{f}}\) is polyconvex and there exists \({\tilde{g}}:{\mathbb {R}}^{2\times 2}\times {\mathbb {R}}\rightarrow {\mathbb {R}}\) convex such that

Defining \(g:{\mathcal {S}}^{2\times 2}\times {\mathbb {R}}\rightarrow {\mathbb {R}}\) as the restriction of \({\tilde{g}}\) to \({\mathcal {S}}^{2\times 2}\) in the first variable, i.e. \(g={\tilde{g}}|_{{\mathcal {S}}^{2\times 2}\times {\mathbb {R}}}\), g is convex and \(f(\varepsilon )=g(\varepsilon , \det \varepsilon )\) for all \(\varepsilon \in {\mathcal {S}}^{2\times 2}\). It remains to show that g is non-increasing in the second argument.

For \(t\ge 0\), let

then \(F_t^s=0\) and \(\det F_t=t\), and we conclude from the convexity of \({\tilde{g}}\) that

For the second-to-last equality, we have used that \({\tilde{f}}(F) = f(F^s) = {\tilde{f}}(F^T)\) for all \(F\in {\mathbb {R}}^{2\times 2}\). Hence, the uniform bound in (4.1) for \(t\ge 0\) together with Lemma 3.3 iv) yields that g is non-increasing in the second variable. \(\quad \square \)
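The two elementary facts behind this proof can be checked numerically. The sketch below tests the splitting \(\det F=\det F^s+\det F^a\) for \(2\times 2\) matrices, which makes \(t-\det F^a\) concave in F, and the properties \(F_t^s=0\), \(\det F_t=t\). The concrete choice \(F_t=\sqrt{t}\,(e_1\otimes e_2-e_2\otimes e_1)\) is an assumption made for this illustration, parametrized by \(s=\sqrt{t}\) to keep the arithmetic exact; all names are hypothetical.

```python
from fractions import Fraction


def det2(F):
    return F[0][0] * F[1][1] - F[0][1] * F[1][0]


def sym2(F):
    """Symmetric part of a 2x2 matrix with integer entries."""
    off = Fraction(F[0][1] + F[1][0], 2)
    return [[F[0][0], off], [off, F[1][1]]]


def asym2(F):
    """Antisymmetric part of a 2x2 matrix with integer entries."""
    off = Fraction(F[0][1] - F[1][0], 2)
    return [[0, off], [-off, 0]]


def split_holds(F):
    """Check det F = det F^s + det F^a."""
    return det2(F) == det2(sym2(F)) + det2(asym2(F))


def F_t(s):
    # Hypothetical choice of F_t with t = s*s: s times the rotation by 90 degrees.
    return [[0, s], [-s, 0]]


grid = range(-3, 4)
split_ok = all(split_holds([[a, b], [c, d]])
               for a in grid for b in grid for c in grid for d in grid)
ft_ok = all(sym2(F_t(s)) == [[0, 0], [0, 0]] and det2(F_t(s)) == s * s
            for s in range(6))
print(split_ok, ft_ok)
```

Note that \(\det F^a\) is a square and hence non-negative, which is the reason why only monotonicity in one direction can be expected.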

Remark 4.2

-

a)

Due to Lemma 3.3 i), an equivalent way of phrasing the necessary and sufficient condition is that the convex function g satisfies \(\partial _2 g(\varepsilon , t)\subset (-\infty , 0]\) for all \(\varepsilon \in {\mathcal {S}}^{2\times 2}\) and \(t\in {\mathbb {R}}\).

-

b)

We point out that the representation of a function \(f:{\mathcal {S}}^{2\times 2}\rightarrow {\mathbb {R}}\) in terms of a convex function of \(\varepsilon \) and \(\det \varepsilon \) is in general not unique. For instance, let

$$\begin{aligned} g(\varepsilon , t) = (\mathrm{tr\,}\varepsilon )^2 - t\quad \text { and }\quad h(\varepsilon , t) = |\varepsilon |^2 + t \end{aligned}$$for \(\varepsilon \in {\mathcal {S}}^{2\times 2}\) and \(t\in {\mathbb {R}}\), and consider

$$\begin{aligned} f(\varepsilon )=g(\varepsilon , \det \varepsilon ) = (\mathrm{tr\,} \varepsilon )^2-\det \varepsilon , \quad \varepsilon \in {\mathcal {S}}^{2\times 2}. \end{aligned}$$(4.2)

Because of the identity

it follows that \(g(\varepsilon , \det \varepsilon )=h(\varepsilon , \det \varepsilon )\) for all \(\varepsilon \in {\mathcal {S}}^{2\times 2}\). Hence, we also have that

even though \(h\ne g\); in particular, g is decreasing in t, while h is increasing in t. In view of (4.2), Theorem 4.1 clearly shows that the function f is symmetric polyconvex, whereas Theorem 4.1 does not allow for an immediate conclusion when looking at (4.3).

Generally speaking, it depends on the way a function \(f:{\mathcal {S}}^{2\times 2}\rightarrow {\mathbb {R}}\) is given, whether deciding about symmetric polyconvexity of f is immediate or not directly obvious. If, however, f can be expressed as a convex function depending on \(\det \varepsilon \) only, then Corollary 4.3 below gives a simple criterion.
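The non-uniqueness described in part b) rests on the elementary identity \((\mathrm{tr\,}\varepsilon )^2=|\varepsilon |^2+2\det \varepsilon \) for \(\varepsilon \in {\mathcal {S}}^{2\times 2}\). The following sketch checks on an integer grid that g and h from the remark agree along \(t=\det \varepsilon \) while differing elsewhere. A symmetric matrix is encoded as a triple (a, b, c) standing for the entries \(\varepsilon _{11}, \varepsilon _{12}, \varepsilon _{22}\); this encoding and the function names are illustrative choices.

```python
def det_sym(eps):
    a, b, c = eps          # eps = [[a, b], [b, c]]
    return a * c - b * b


def g(eps, t):
    a, b, c = eps
    return (a + c) ** 2 - t            # (tr eps)^2 - t, decreasing in t


def h(eps, t):
    a, b, c = eps
    return a * a + 2 * b * b + c * c + t   # |eps|^2 + t, increasing in t


grid = range(-4, 5)
agree_on_graph = all(
    g((a, b, c), det_sym((a, b, c))) == h((a, b, c), det_sym((a, b, c)))
    for a in grid for b in grid for c in grid
)
differ_elsewhere = g((0, 0, 0), 1) != h((0, 0, 0), 1)
print(agree_on_graph, differ_elsewhere)
```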

In Example 2.4, we convinced ourselves that \(\varepsilon \mapsto - \det \varepsilon \) is symmetric polyconvex, whereas \(\varepsilon \mapsto \det \varepsilon \) is not. As a consequence of Theorem 4.1, the following more general result can be obtained:

Corollary 4.3

Let \(h:{\mathbb {R}}\rightarrow {\mathbb {R}}\) and let \(f:{\mathcal {S}}^{2\times 2}\rightarrow {\mathbb {R}}\) be given by

Then f is symmetric polyconvex if and only if h is convex and non-increasing.

Indeed, in light of the next lemma the proof follows immediately.

Lemma 4.4

Let \(g:{\mathcal {S}}^{2\times 2}\times {\mathbb {R}}\rightarrow {\mathbb {R}}\) be convex and \(h:{\mathbb {R}}\rightarrow {\mathbb {R}}\). If

then g is constant in its first variable and \(h(t)=g(0, t)\) for every \(t\in {\mathbb {R}}\).

Proof

In order to show that \(g=g(\varepsilon , t)\) is constant in \(\varepsilon \) for any \(t\in {\mathbb {R}}\), we use (3.8) to express \(\varepsilon \in {\mathcal {S}}^{2\times 2}\) as the convex combination of two symmetric matrices \(\varepsilon _1, \varepsilon _2\) with vanishing determinant, i.e. \(\varepsilon =\lambda \varepsilon _1 + (1-\lambda ) \varepsilon _2\) with \(\lambda \in [0,1]\). Then, by the convexity of g,

The independence of g of the first argument follows from Lemma 3.3 iv).

To prove the second part of the statement, observe that for every \(t\in {\mathbb {R}}\) one can find a symmetric matrix whose determinant equals t. \(\quad \square \)

At the end of the section, we turn in more detail to two classes of polyconvex functions.

4.2 Symmetric Polyconvex Quadratic Forms

In two dimensions, the three classes of symmetric polyconvex, quasiconvex, and rank-one convex quadratic forms are identical. In view of Definition 2.1, this is a consequence of the corresponding well-known property of classical semi-convex quadratic forms [16, Theorem 5.25]. The following characterization constitutes a refinement for the symmetric case of a result by Marcellini [36, Equation (11)], see also [16, Lemma 5.27].

Proposition 4.5

(Characterization of symmetric polyconvex quadratic forms in 2d). Let \(f:{\mathcal {S}}^{2\times 2}\rightarrow {\mathbb {R}}\) be a quadratic form. Then the three following statements are equivalent:

-

(i)

f is symmetric polyconvex;

-

(ii)

there exist \(\alpha \ge 0\) and a convex quadratic form \(h:{\mathcal {S}}^{2\times 2}\rightarrow {\mathbb {R}}\) such that

$$\begin{aligned} f(\varepsilon )=h(\varepsilon )-\alpha \det \varepsilon \quad \text {for all }\varepsilon \in {\mathcal {S}}^{2\times 2}; \end{aligned}$$

-

(iii)

there exists \(\alpha \ge 0\) such that

$$\begin{aligned} f(\varepsilon ) +\alpha \det \varepsilon \ge 0 \quad \text {for all }\varepsilon \in {\mathcal {S}}^{2\times 2}. \end{aligned}$$

Proof

Since a quadratic form is convex if and only if it is non-negative, (ii) and (iii) are equivalent. The implication “(ii) \(\Rightarrow \) (i)” is an immediate consequence of Theorem 4.1. To see that (i) implies (iii), we use that by [16, Lemma 5.27, p.192] there exists \(\alpha \in {\mathbb {R}}\) such that \({{\tilde{f}}}(F) + \alpha \det F\ge 0\), \(F\in {\mathbb {R}}^{2\times 2}\). Hence, \({\tilde{h}}:{\mathbb {R}}^{2\times 2}\rightarrow {\mathbb {R}}\) defined by \({{\tilde{h}}}(F) = \tilde{f}(F) + \alpha \det F\) is a non-negative quadratic form and thus convex. Note that in particular, \(f(\varepsilon ) + \alpha \det \varepsilon \ge 0\) for all \(\varepsilon \in {\mathcal {S}}^{2\times 2}\).

Next, we will show that in the symmetric setting we indeed obtain \(\alpha \ge 0\). The argument is based on the observation that \(\alpha \ge 0\) if and only if the quadratic form \(q_\alpha :{\mathbb {R}}^{2\times 2}\rightarrow {\mathbb {R}}\) defined for \(\alpha \in {\mathbb {R}}\) by

is convex. To show that \(q_\alpha \) is convex, we infer in view of (3.1) that

for all \(F\in {\mathbb {R}}^{2\times 2}\). Exploiting that \(q_\alpha (F)=q_\alpha (F^a)\) then leads to

Since the composition of a convex with a linear function is always convex, \(q_\alpha \) is convex, and hence, \(\alpha \ge 0\) as asserted in iii). \(\quad \square \)

4.3 Symmetric Polyaffine Functions

If \(f:{\mathcal {S}}^{2\times 2}\rightarrow {\mathbb {R}}\) is symmetric polyaffine, that is, both f and \(-f\) are symmetric polyconvex, then according to Theorem 4.1, there exist two convex functions \(g_+, g_-\) that are non-increasing in their second argument such that

We apply Lemma 4.4 with \(g=g_++g_-\) and h the zero function to find that \(g_-=-g_+\). Hence, \(g_-\) is both non-increasing and non-decreasing, and therefore constant, with respect to the last variable. Due to the convexity of \(g_+(\varepsilon , 0)\) and \(g_-(\varepsilon , 0)\), f has to be affine.

Summing up, we observe that f is symmetric polyaffine if and only if it is affine.

5 Symmetric Polyconvexity in 3d

This section is devoted to a discussion of symmetric polyconvex functions in 3d. After providing a characterization of symmetric polyconvexity, we discuss a few examples and two important subclasses of symmetric polyconvex functions in three dimensions, namely symmetric polyconvex quadratic forms and symmetric polyaffine functions.

5.1 Characterization of Symmetric Polyconvexity in 3d

The following theorem constitutes the main result of this paper:

Theorem 5.1

(Characterization of symmetric polyconvexity in 3d). A function \(f:{\mathcal {S}}^{3\times 3}\rightarrow {\mathbb {R}}\) is symmetric polyconvex if and only if there exists a convex function \(g:{\mathcal {S}}^{3\times 3}\times {\mathcal {S}}^{3\times 3} \rightarrow {\mathbb {R}}\) such that \(\partial _2 g (\varepsilon , \eta )\subset {\mathcal {S}}^{3\times 3}_-\) for every \(\varepsilon , \eta \in {\mathcal {S}}^{3\times 3}\) and

Proof

We subdivide the proof into the natural two steps, proving first that (5.1) is necessary for symmetric polyconvexity, and then that it is also sufficient.

Step 1: Necessity. Since f is symmetric polyconvex, \(\tilde{f}\) defined as in Definition 2.1 is polyconvex. Hence, there is a convex function \({{\tilde{g}}}: {\mathbb {R}}^{3\times 3}\times {\mathbb {R}}^{3\times 3}\times {\mathbb {R}}\rightarrow {\mathbb {R}}\) such that

Restricting \({{\tilde{g}}}\) in the first two variables to \({\mathcal {S}}^{3\times 3}\) gives a convex function g defined on \({\mathcal {S}}^{3\times 3}\times {\mathcal {S}}^{3\times 3} \times {\mathbb {R}}\) that satisfies

in view of (3.2).

Next, in Step 1a, we show that g is constant in its third variable, which corresponds to the determinant. Then, in Step 1b, the asserted negative semi-definiteness of matrices in the subdifferential with respect to the second variable of g is established.

Step 1a: g is constant in the third variable. We will apply Lemma 3.3 iv) to g with \({{\hat{x}}} = (0,0) \in {\mathcal {S}}^{3\times 3} \times {\mathcal {S}}^{3\times 3}\) and \({\hat{y}} = 0\in {\mathbb {R}}\). To prove the uniform upper bound needed there, the estimate

where \(\varepsilon _\delta =\delta e_3\otimes e_3\), will turn out useful. Indeed, with

one has that \(F_{t, \delta }^s=\varepsilon _\delta \), \((\mathrm{cof\,}F_{t, \delta })^s = \frac{1}{2}(\mathrm{cof\,}F_{t, \delta }+ \mathrm{cof\,}F_{t, \delta }^T)= t e_3\otimes e_3\), and \(\det F_{t, \delta }=\delta t\). Exploiting the symmetry and the convexity of \({\tilde{g}}\) then leads to

which shows (5.2).

Now we use the subdifferentiability of the convex function g. For \(r\in {\mathbb {R}}\), let \(({\tilde{B}}_r, B_r, b_r) \in \partial g(0, 0, r) \subset {\mathcal {S}}^{3\times 3} \times {\mathcal {S}}^{3\times 3} \times {\mathbb {R}}\). Then in conjunction with (5.2) one has that

for all \(t\ge 0\) and \(\delta \in {\mathbb {R}}\). By letting t tend to infinity, we conclude that \(B_r : (e_3 \otimes e_3) + b_r\delta \le 0\) for all \(\delta \). Varying \(\delta \) in \({\mathbb {R}}\) implies that \(b_r=0\). Finally, we set \(t=0\) and \(\delta =0\) in (5.3) to obtain

According to Lemma 3.3 i) and iv), g is then non-increasing and non-decreasing, and thus constant, in the last variable. For simplicity of notation, this third variable is from now on omitted in the functions \({\tilde{g}}\) and g.

Step 1b: Partial subdifferential condition for g. We will show that

Then all partial subgradients with respect to the second variable of g are negative semi-definite as a consequence of Lemma 3.4 ii).

To prove (5.4), let \(F_{x}=X\) with the anti-symmetric matrix

Then, \(F_{x}^s=0\) and \((\mathrm{cof\,}F_{x})^s = \frac{1}{2}(\mathrm{cof\,}F_{x}+\mathrm{cof\,}F_{x}^T) = x\otimes x = \mathrm{cof\,}X\) by (3.4) and (3.2). Since the function \({{\tilde{g}}}\) is convex, we infer that

which is (5.4).
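The properties of the antisymmetric matrix X used in Step 1b, namely \(X^s=0\) and \(\mathrm{cof\,}X=x\otimes x\), can be confirmed with a short computation. The sketch below assumes the representation \(Xv=x\times v\) for X and the cofactor convention \((\mathrm{cof\,}F)_{ij}=(-1)^{i+j}M_{ij}(F)\); since \(x\otimes x\) is even in x, the opposite sign convention for X gives the same result. Function names are hypothetical.

```python
def skew(x):
    # Assumed convention: skew(x) applied to v equals the cross product x × v.
    x1, x2, x3 = x
    return [[0, -x3, x2],
            [x3, 0, -x1],
            [-x2, x1, 0]]


def cof(F):
    """(cof F)_ij = (-1)^(i+j) * M_ij(F) for a 3x3 matrix F."""
    C = [[0] * 3 for _ in range(3)]
    for i in range(3):
        rows = [k for k in range(3) if k != i]
        for j in range(3):
            cols = [k for k in range(3) if k != j]
            minor = (F[rows[0]][cols[0]] * F[rows[1]][cols[1]]
                     - F[rows[0]][cols[1]] * F[rows[1]][cols[0]])
            C[i][j] = (-1) ** (i + j) * minor
    return C


def outer(x, y):
    return [[xi * yj for yj in y] for xi in x]


def twice_symmetric_part(F):
    """F + F^T, which vanishes exactly when F is antisymmetric."""
    return [[F[i][j] + F[j][i] for j in range(3)] for i in range(3)]


grid = range(-3, 4)
zero = [[0] * 3 for _ in range(3)]
ok = all(
    twice_symmetric_part(skew((a, b, c))) == zero
    and cof(skew((a, b, c))) == outer((a, b, c), (a, b, c))
    for a in grid for b in grid for c in grid
)
print(ok)
```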

Step 2: Sufficiency. Suppose that f is of the form (5.1) with g as in the statement. To prove that \({{\tilde{f}}}\) is polyconvex, it suffices, according to [16, Theorem 5.6], to show the following two conditions:

-

(i)

There exists a convex function \(k:{\mathbb {R}}^{3\times 3}\times {\mathbb {R}}^{3\times 3}\times {\mathbb {R}}\rightarrow {\mathbb {R}}\) such that \({\tilde{f}}(F)\ge k(F, \mathrm{cof\,}F, \det F)\) for all \(F\in {\mathbb {R}}^{3\times 3}\);

-

(ii)

Let \(F,F_i \in {\mathbb {R}}^{3\times 3}\) and \(\lambda _i\in [0,1]\) for \(i=1,2,\dots , n\) with \(n=20\) be such that

$$\begin{aligned} \sum _{i=1}^{n} \lambda _i=1,\quad F=\sum _{i=1}^{n}\lambda _iF_i,\quad \mathrm{cof\,}F=\sum _{i=1}^{n}\lambda _i\mathrm{cof\,}F_i, \quad \text {and} \quad \det F=\sum _{i=1}^{n}\lambda _i\det F_i. \end{aligned}$$(5.5)

Then, \({{\tilde{f}}}(F)\le \sum _{i=1}^n \lambda _i {{\tilde{f}}}(F_i)\).

Let \(F\in {\mathbb {R}}^{3\times 3}\). With \(({\tilde{B}}, B) \in \partial g(0, 0) \subset \partial _1 g(0,0) \times \partial _2 g(0,0)\subset {\mathcal {S}}^{3\times 3} \times {\mathcal {S}}^{3\times 3}_-\), condition (i) follows from

where \(q_{-B}\) is as in (3.7) and \(k(F, G ,t) = g(0, 0) + {{\tilde{B}}}: F^s + B:G^s + q_{-B}(F)\) for \(F, G\in {\mathbb {R}}^{3\times 3}\), \(t\in {\mathbb {R}}\). Since \(-B\) is positive semi-definite, \(q_{-B}\) is convex by Lemma 3.1, and so is k.

To prove (ii), let F satisfy (5.5) and let \(B\in \partial _2 g(F^s, \mathrm{cof\,}F^s)\subset {\mathcal {S}}^{3\times 3}_-\). Owing to the concavity of \(q_B\) (again by Lemma 3.1) we then find that

Along with the subgradient property of B, this implies

Then, since \(\mathrm{cof\,}F^s+\mathrm{cof\,}F^a=(\mathrm{cof\,}F)^s = \sum _{i=1}^n \lambda _i (\mathrm{cof\,}F_i)^s\) by (5.5) and g is convex, we infer that

which finishes the proof. \(\quad \square \)

Remark 5.2

-

(a)

As a consequence of Theorem 5.1, any symmetric polyconvex function f satisfies a monotonicity condition with regard to the diagonal minors. Precisely, there exists a convex function \(g:{\mathcal {S}}^{3\times 3}\times {\mathbb {R}}^3\times {\mathbb {R}}^3 \rightarrow {\mathbb {R}}\) that is non-increasing in its second argument such that

$$\begin{aligned} f(\varepsilon ) = g(\varepsilon , \mathrm{cof\,}_{\mathrm{diag}}\, \varepsilon , \mathrm{cof\,}_{\mathrm{off}}\,\varepsilon ), \quad \varepsilon \in {\mathcal {S}}^{3\times 3}, \end{aligned}$$

cf. Section 3.1 for the notation. In view of Lemma 3.3 i) and ii), this follows from the fact that diagonal entries of negative semi-definite matrices are always non-positive.

-

(b)

Note that a function \(f:{\mathcal {S}}^{3\times 3}\rightarrow {\mathbb {R}}\) given as in (5.1) with a convex function g whose partial subdifferential regarding the second variable is not negative semi-definite may still be symmetric polyconvex. Indeed,

$$\begin{aligned} f(\varepsilon ) = \varepsilon _{11}^2+ \varepsilon _{22}^2 + 2\varepsilon _{12}^2 + (\mathrm{cof\,}\varepsilon )_{33}, \quad \varepsilon \in {\mathcal {S}}^{3\times 3}, \end{aligned}$$

which depends increasingly on the diagonal cofactors, admits an alternative representation that is in accordance with Theorem 5.1, namely

$$\begin{aligned} f(\varepsilon ) = (\varepsilon _{11} +\varepsilon _{22})^2 - (\mathrm{cof\,}\varepsilon )_{33}, \quad \varepsilon \in {\mathcal {S}}^{3\times 3}. \end{aligned}$$(5.6)

Thus, f is in fact symmetric polyconvex.

-

(c)

We emphasize that the representation of a symmetric polyconvex function according to Theorem 5.1 is not unique. To see this, take for example f as in (5.6) and observe that it can equivalently be expressed as

$$\begin{aligned} f(\varepsilon ) = \tfrac{1}{2}(\varepsilon _{11} + \varepsilon _{22})^2 + \tfrac{1}{2} \varepsilon _{11}^2 + \tfrac{1}{2}\varepsilon _{22}^2 +\varepsilon _{12}^2,\quad \varepsilon \in {\mathcal {S}}^{3\times 3}. \end{aligned}$$

In particular, f is even convex.
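That the three expressions for f in parts (b) and (c) coincide follows from \((\mathrm{cof\,}\varepsilon )_{33}=\varepsilon _{11}\varepsilon _{22}-\varepsilon _{12}^2\). The following sketch confirms this on an integer grid in exact arithmetic. Since f depends only on \(\varepsilon _{11}, \varepsilon _{12}, \varepsilon _{22}\), only these three entries are passed; the function names are illustrative.

```python
from fractions import Fraction


def cof33(e11, e12, e22):
    """The (3,3) cofactor of a symmetric 3x3 matrix."""
    return e11 * e22 - e12 * e12


def f_increasing(e11, e12, e22):
    # Representation that is increasing in the cofactor entry.
    return e11 ** 2 + e22 ** 2 + 2 * e12 ** 2 + cof33(e11, e12, e22)


def f_theorem(e11, e12, e22):
    # Representation (5.6), non-increasing in the cofactor entry.
    return (e11 + e22) ** 2 - cof33(e11, e12, e22)


def f_convex(e11, e12, e22):
    # Representation without cofactors, a sum of squares.
    half = Fraction(1, 2)
    return half * (e11 + e22) ** 2 + half * e11 ** 2 + half * e22 ** 2 + e12 ** 2


grid = range(-4, 5)
all_equal = all(
    f_increasing(a, b, c) == f_theorem(a, b, c) == f_convex(a, b, c)
    for a in grid for b in grid for c in grid
)
print(all_equal)
```

The last representation is a sum of squares of linear expressions in \(\varepsilon \), which makes the convexity of f evident.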

The 3d analogue of Corollary 4.3 is based on an auxiliary result extending Lemma 4.4.

Lemma 5.3

Let \(g:{\mathcal {S}}^{3\times 3}\times {\mathcal {S}}^{3\times 3}\rightarrow {\mathbb {R}}\) be convex. Then,

-

(i)

If there exists a function \(h: {\mathbb {R}}\rightarrow {\mathbb {R}}\) such that

$$\begin{aligned} g(\varepsilon , \mathrm{cof\,}\varepsilon ) = h(\det \varepsilon )\quad \text {for all }\varepsilon \in {\mathcal {S}}^{3\times 3}, \end{aligned}$$

then h is constant;

-

(ii)

If there exists a continuous function \(h:{\mathcal {S}}^{3\times 3}\rightarrow {\mathbb {R}}\) satisfying

$$\begin{aligned} g(\varepsilon , \mathrm{cof\,}\varepsilon ) = h(\mathrm{cof\,}\varepsilon )\quad \text {for all }\varepsilon \in {\mathcal {S}}^{3\times 3}, \end{aligned}$$

then \(h=g(0, \cdot )\).

Proof

First, we argue that, both in i) and ii), the function g is independent of the first variable. This follows as in the proof of Lemma 4.4, just replacing (3.8) with (3.9). Notice that every matrix with vanishing cofactor has in particular zero determinant.

In the following, let \(g_0 = g(0, \cdot ):{\mathcal {S}}^{3\times 3}\rightarrow {\mathbb {R}}\). As a consequence of the separate convexity of g, the auxiliary function \(g_0\) is convex, and we have that \(g_0(\mathrm{cof\,}\varepsilon ) = g(0, \mathrm{cof\,}\varepsilon ) = g(\varepsilon , \mathrm{cof\,}\varepsilon )\) for any \(\varepsilon \in {\mathcal {S}}^{3\times 3}\).

As for i), we use Lemma 3.3 iii) to infer from the observation that \(g_0(te_i\otimes e_i) = g_0(\mathrm{cof\,}(te_j\otimes e_j + e_k\otimes e_k)) = h(0)\) for all \(t\in {\mathbb {R}}\) and any cyclic permutation (ijk) of (123) that \(g_0\) is constant in the variables corresponding to the diagonal entries. Thus, plugging diagonal matrices \(\varepsilon \in {\mathcal {S}}^{3\times 3}\) into the identity \(g_0(\mathrm{cof\,}\varepsilon ) = h(\det \varepsilon )\) shows that h is constant.

Regarding ii), we observe that the image of the map \(\mathrm{cof}:{\mathcal {S}}^{3\times 3}\rightarrow {\mathcal {S}}^{3\times 3}\) contains the set of symmetric invertible matrices. Indeed, for any \(\eta \in {\mathcal {S}}^{3\times 3}\) with \(\det \eta \ne 0\), one finds by (3.3) that

and consequently,

Finally, since the set of symmetric invertible matrices lies dense in \({\mathcal {S}}^{3\times 3}\), we use the continuity of h and \(g_0\) to conclude that \(h(\eta )=g_0(\eta )\) for all \(\eta \in {\mathcal {S}}^{3\times 3}\), which yields ii). \(\quad \square \)

Remark 5.4

-

a)

The statement in Lemma 5.3ii) fails if we do not require h to be continuous. To see this, we first recall that, in the 3d case, the rank of any cofactor matrix is not equal to 2. Indeed, if \({{\,\mathrm{rank}\,}}\varepsilon =0\) or \({{\,\mathrm{rank}\,}}\varepsilon =1\), then \(\mathrm{cof\,}\varepsilon =0\), so its rank is 0. If \({{\,\mathrm{rank}\,}}\varepsilon =2\), we have by Cramer’s rule (3.3) and Sylvester’s rank formula that \({{\,\mathrm{rank}\,}}\mathrm{cof\,}\varepsilon + {{\,\mathrm{rank}\,}}\varepsilon \le 3\) and hence, \({{\,\mathrm{rank}\,}}\mathrm{cof\,}\varepsilon \le 1\). Finally, if \(\varepsilon \) is invertible, so is \(\mathrm{cof\,}\varepsilon \) and \({{\,\mathrm{rank}\,}}\mathrm{cof\,}\varepsilon =3\). Now, let \(g:{\mathcal {S}}^{3\times 3}\times {\mathcal {S}}^{3\times 3}\rightarrow {\mathbb {R}}\) be the zero function, and choose \(h:{\mathcal {S}}^{3\times 3}\rightarrow {\mathbb {R}}\) such that \(h(\varepsilon ) = 1\) for all \(\varepsilon \in {\mathcal {S}}^{3\times 3}\) with \({{\,\mathrm{rank}\,}}\varepsilon = 2\), and \(h(\varepsilon )=0\) otherwise. Then, since \({{\,\mathrm{rank}\,}}\mathrm{cof\,}\varepsilon \ne 2\), it holds that \(g(\varepsilon , \mathrm{cof\,}\varepsilon ) = h(\mathrm{cof\,}\varepsilon )\) for any \(\varepsilon \in {\mathcal {S}}^{3\times 3}\), but \(h\ne 0 = g(0,\cdot )\).
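The rank statement used in this remark can be illustrated by brute force. The sketch below, in Python with exact integer arithmetic, enumerates all symmetric \(3\times 3\) matrices with entries in \(\{-1,0,1\}\) and records the pairs (rank of the matrix, rank of its cofactor matrix). The helper names (`det3`, `cof`, `rank3`, `observed_rank_pairs`) are hypothetical, and the restriction to this small grid is an assumption made purely to keep the enumeration finite.

```python
from itertools import product

def det3(m):
    # determinant of a 3x3 matrix by expansion along the first row
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def cof(m):
    # cofactor matrix: entry (i, j) is (-1)^(i+j) times the minor
    # obtained by deleting row i and column j
    c = [[0] * 3 for _ in range(3)]
    for i in range(3):
        for j in range(3):
            r = [k for k in range(3) if k != i]
            s = [k for k in range(3) if k != j]
            minor = m[r[0]][s[0]] * m[r[1]][s[1]] - m[r[0]][s[1]] * m[r[1]][s[0]]
            c[i][j] = (-1) ** (i + j) * minor
    return c

def rank3(m):
    # rank of a 3x3 matrix via its determinant and its 2x2 minors
    if det3(m) != 0:
        return 3
    if any(x != 0 for row in cof(m) for x in row):
        return 2
    if any(x != 0 for row in m for x in row):
        return 1
    return 0

def symmetric(v):
    # symmetric matrix built from the six entries (e11, e22, e33, e12, e13, e23)
    e11, e22, e33, e12, e13, e23 = v
    return [[e11, e12, e13], [e12, e22, e23], [e13, e23, e33]]

def observed_rank_pairs(values=(-1, 0, 1)):
    # set of all pairs (rank e, rank cof e) seen on the grid
    pairs = set()
    for v in product(values, repeat=6):
        e = symmetric(v)
        pairs.add((rank3(e), rank3(cof(e))))
    return pairs
```

On this grid one expects exactly the pairs (0, 0), (1, 0), (2, 1) and (3, 3), in line with the argument above; in particular, no cofactor matrix of rank 2 appears.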

-

b)

Let \(g:{\mathcal {S}}^{3\times 3}\times {\mathcal {S}}^{3\times 3}\rightarrow {\mathbb {R}}\) be convex. If there is a continuous function \(h:{\mathcal {S}}^{3\times 3}\times {\mathbb {R}}\rightarrow {\mathbb {R}}\) such that

$$\begin{aligned} g(\varepsilon , \mathrm{cof\,}\varepsilon ) = h(\mathrm{cof\,}\varepsilon ,\det \varepsilon )\quad \text {for all } \varepsilon \in {\mathcal {S}}^{3\times 3}, \end{aligned}$$

then results analogous to Lemma 5.3i) and ii) cannot be expected. That is, h may be non-constant in the last variable and \(h(\cdot , 0) \ne g(0,\cdot )\). Since \(\det (\mathrm{cof\,}\varepsilon ) = (\det \varepsilon )^2\) for all \(\varepsilon \in {\mathcal {S}}^{3\times 3}\), an example is given by the functions

$$\begin{aligned} g(\varepsilon , \eta )= 0\quad \text { and }\quad h(\eta , t) = t^2-\det \eta , \quad \varepsilon , \eta \in {\mathcal {S}}^{3\times 3}, t\in {\mathbb {R}}. \end{aligned}$$
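The identity \(\det (\mathrm{cof\,}\varepsilon ) = (\det \varepsilon )^2\) behind this example, and the resulting relation \(h(\mathrm{cof\,}\varepsilon , \det \varepsilon ) = 0 = g(\varepsilon , \mathrm{cof\,}\varepsilon )\), can be tested on a finite family of matrices. The following Python sketch does so with exact integer arithmetic; all helper names are hypothetical, and the grid of entries in \(\{-2,\ldots ,2\}\) is an assumption made only for illustration.

```python
from itertools import product

def det3(m):
    # determinant of a 3x3 matrix by expansion along the first row
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def cof(m):
    # cofactor matrix of a 3x3 matrix
    c = [[0] * 3 for _ in range(3)]
    for i in range(3):
        for j in range(3):
            r = [k for k in range(3) if k != i]
            s = [k for k in range(3) if k != j]
            minor = m[r[0]][s[0]] * m[r[1]][s[1]] - m[r[0]][s[1]] * m[r[1]][s[0]]
            c[i][j] = (-1) ** (i + j) * minor
    return c

def h(eta, t):
    # the function h(eta, t) = t^2 - det(eta) from the remark
    return t * t - det3(eta)

def symmetric(v):
    # symmetric matrix built from the six entries (e11, e22, e33, e12, e13, e23)
    e11, e22, e33, e12, e13, e23 = v
    return [[e11, e12, e13], [e12, e22, e23], [e13, e23, e33]]

def h_vanishes_on_grid(bound=2):
    # True if h(cof e, det e) = 0 for every symmetric e on the integer grid
    grid = range(-bound, bound + 1)
    return all(h(cof(symmetric(v)), det3(symmetric(v))) == 0
               for v in product(grid, repeat=6))

identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
```

At the same time, `h(identity, 0)` and `h(identity, 2)` differ, so h is non-constant in its last variable, and \(h(\cdot , 0) = -\det \) is not the zero function \(g(0,\cdot )\).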

The following statement is an immediate consequence of Theorem 5.1 and Lemma 5.3. It allows us to decide in special situations whether a function is symmetric polyconvex, without having to find a function g as required in Theorem 5.1:

Corollary 5.5

i) Let \(f:{\mathcal {S}}^{3\times 3}\rightarrow {\mathbb {R}}\) be given by

$$\begin{aligned} f(\varepsilon ) = h(\det \varepsilon ), \quad \varepsilon \in {\mathcal {S}}^{3\times 3}, \end{aligned}$$

with \(h:{\mathbb {R}}\rightarrow {\mathbb {R}}\). Then f is symmetric polyconvex if and only if h is constant.

ii) Let \(f:{\mathcal {S}}^{3\times 3}\rightarrow {\mathbb {R}}\) be given by

$$\begin{aligned} f(\varepsilon ) = h(\mathrm{cof\,}\varepsilon ), \quad \varepsilon \in {\mathcal {S}}^{3\times 3}, \end{aligned}$$

with \(h:{\mathcal {S}}^{3\times 3} \rightarrow {\mathbb {R}}\) continuous. Then f is symmetric polyconvex if and only if h is convex with \(\partial h(\eta )\subset {\mathcal {S}}_-^{3\times 3}\) for all \(\eta \in {\mathcal {S}}^{3\times 3}\).

In contrast to the 2d setting, Corollary 5.5i) shows that there exists no symmetric polyconvex function in 3d that is given as a non-constant function of the determinant only.
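For the simplest non-constant choice \(h(t)=t\), the failure of symmetric polyconvexity can be seen directly, since symmetric polyconvexity implies symmetric rank-one convexity and the determinant is strictly concave along a suitable line \(t\mapsto \varepsilon + t\, a\odot b\). The Python sketch below evaluates this for \(\varepsilon = e_3\otimes e_3\), \(a=e_1\), \(b=e_2\); this particular choice and the helper names are ours and serve only as an illustration.

```python
from fractions import Fraction

def det3(m):
    # determinant of a 3x3 matrix by expansion along the first row
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def sym_tensor(a, b):
    # symmetrized tensor product a (.) b = (a x b + b x a) / 2
    return [[Fraction(a[i] * b[j] + a[j] * b[i], 2) for j in range(3)]
            for i in range(3)]

def along_line(f, eps, direction, t):
    # value of f at eps + t * direction
    return f([[eps[i][j] + t * direction[i][j] for j in range(3)]
              for i in range(3)])

def second_difference(f, eps, direction):
    # f(eps - d) - 2 f(eps) + f(eps + d); negative values rule out convexity
    return (along_line(f, eps, direction, -1)
            - 2 * along_line(f, eps, direction, 0)
            + along_line(f, eps, direction, 1))

e3e3 = [[0, 0, 0], [0, 0, 0], [0, 0, 1]]
direction = sym_tensor((1, 0, 0), (0, 1, 0))
```

Here \(\det (e_3\otimes e_3 + t\, e_1\odot e_2) = -t^2/4\), so `second_difference(det3, e3e3, direction)` is negative, and \(\varepsilon \mapsto \det \varepsilon \) is not symmetric rank-one convex on \({\mathcal {S}}^{3\times 3}\).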

5.2 Symmetric Polyconvex Quadratic Forms

Finally, we turn our attention to quadratic forms in 3d. As a consequence of Theorem 5.1, we obtain a characterization of symmetric polyconvexity for this class of functions, which is reminiscent of the characterization of polyconvex quadratic forms in [16, p. 192].

Proposition 5.6

(Characterization of symmetric polyconvex quadratic forms in 3d). Let \(f:{\mathcal {S}}^{3\times 3}\rightarrow {\mathbb {R}}\) be a quadratic form. Then the following statements are equivalent:

-

(i)

f is symmetric polyconvex;

-

(ii)

there exist a convex quadratic form \(h:{\mathcal {S}}^{3\times 3}\rightarrow {\mathbb {R}}\) and a matrix \(A\in {\mathcal {S}}^{3\times 3}_+\) such that

$$\begin{aligned} f(\varepsilon ) = h(\varepsilon )-A:\mathrm{cof\,}\varepsilon \quad \text {for all }\varepsilon \in {\mathcal {S}}^{3\times 3}; \end{aligned}$$ (5.7)

-

(iii)

there is \(A\in {\mathcal {S}}^{3\times 3}_+\) such that

$$\begin{aligned} f(\varepsilon ) + A:\mathrm{cof\,}\varepsilon \ge 0\quad \text {for all }\varepsilon \in {\mathcal {S}}^{3\times 3}. \end{aligned}$$

Proof

Note that ii) and iii) are equivalent since quadratic forms are convex if and only if they are non-negative. To prove “\(ii)\Rightarrow i)\)”, suppose that f is of the form (5.7). Defining \(g:{\mathcal {S}}^{3\times 3}\times {\mathcal {S}}^{3\times 3}\rightarrow {\mathbb {R}}\) by \(g(\varepsilon ,\eta )=h(\varepsilon )-A:\eta \) for \(\varepsilon , \eta \in {\mathcal {S}}^{3\times 3}\), the representation (5.1) is immediate. Moreover, g is convex and smooth with

$$\begin{aligned} \partial _2 g(\varepsilon , \eta ) = \{-A\}\quad \text {for all }\varepsilon , \eta \in {\mathcal {S}}^{3\times 3}. \end{aligned}$$

Since \(-A\) is negative semi-definite by assumption, f is symmetric polyconvex according to Theorem 5.1.

To show that i) implies iii), let f be a symmetric polyconvex quadratic form on \({\mathcal {S}}^{3\times 3}\), and let g be an associated convex function resulting from Theorem 5.1. As g is convex, there exist \(B, {\tilde{B}}\in {\mathcal {S}}^{3\times 3}\) such that \(({\tilde{B}}, B)\in \partial g(0,0)\). We note that owing to \(\partial _2 g(0,0)\subset {\mathcal {S}}^{3\times 3}_-\), the matrix B is negative semi-definite, cf. (3.13). Then, using \(\mathrm{cof\,}(-\varepsilon )=\mathrm{cof\,}\varepsilon \), it follows for every \(\varepsilon \in {\mathcal {S}}^{3\times 3}\) that

$$\begin{aligned} f(\varepsilon ) = g(\varepsilon , \mathrm{cof\,}\varepsilon ) \ge g(0,0) + {\tilde{B}}:\varepsilon + B:\mathrm{cof\,}\varepsilon \quad \text {and}\quad f(-\varepsilon ) = g(-\varepsilon , \mathrm{cof\,}\varepsilon ) \ge g(0,0) - {\tilde{B}}:\varepsilon + B:\mathrm{cof\,}\varepsilon . \end{aligned}$$

Since f is a quadratic form, \(f(\varepsilon )=f(-\varepsilon )\) for all \(\varepsilon \in {\mathcal {S}}^{3\times 3}\) and \(g(0,0)=f(0)=0.\) By summing up the two inequalities and setting \(A=-B\), we conclude that \(f(\varepsilon )+A:\mathrm{cof\,}\varepsilon \ge 0\) for all \(\varepsilon \in {\mathcal {S}}^{3\times 3}\), as asserted. \(\quad \square \)
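To illustrate criterion iii) in a simple case, consider the quadratic form f from (5.6) together with the matrix \(A=e_3\otimes e_3\in {\mathcal {S}}^{3\times 3}_+\); this choice of A is ours and only one of many admissible ones. Since \(A:\mathrm{cof\,}\varepsilon = (\mathrm{cof\,}\varepsilon )_{33}\), one obtains

$$\begin{aligned} f(\varepsilon ) + A:\mathrm{cof\,}\varepsilon = (\varepsilon _{11}+\varepsilon _{22})^2 - (\mathrm{cof\,}\varepsilon )_{33} + (\mathrm{cof\,}\varepsilon )_{33} = (\varepsilon _{11}+\varepsilon _{22})^2 \ge 0 \quad \text {for all }\varepsilon \in {\mathcal {S}}^{3\times 3}, \end{aligned}$$

which recovers the symmetric polyconvexity of this f via Proposition 5.6.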

Next we present an example of a quadratic form which is symmetric rank-one convex but not symmetric polyconvex. As already mentioned in the introduction, this is motivated by a corresponding result in the classical setting by Serre [50].

Theorem 5.7

There exists \(\eta >0\) such that the quadratic form \(f:{\mathcal {S}}^{3\times 3}\rightarrow {\mathbb {R}}\) given by

is symmetric rank-one convex, but not symmetric polyconvex.

Proof

Consider the quadratic form

and let

with

Note that the minimum in (5.9) indeed exists, and that \(\eta >0\). To see the latter, assume to the contrary that \(\eta =0\). Then, if \({\bar{\varepsilon }}\) is a minimizer of \(f_0\) in \({\mathcal {M}}\), it holds that \(f_0({{\bar{\varepsilon }}}) =0\). Along with \(|{\bar{\varepsilon }}|=1\) we conclude that, necessarily,

However, since this \({{\bar{\varepsilon }}}\) has full rank, \({{\bar{\varepsilon }}}\notin {\mathcal {M}}\), which is the desired contradiction. Furthermore, observe that \(\eta < 1\) as shown in (5.12) below.

The remainder of the proof is divided into two natural steps.

Step 1: f is symmetric rank-one convex. Due to (5.9), one has that \(f(a\odot b) = f_0(a\odot b) - \eta |a\odot b|^2 \ge 0\) for all \(a, b\in {\mathbb {R}}^3\). Hence, f is symmetric rank-one convex by (2.5).

Step 2: f is not symmetric polyconvex. Let \(A=(A_{ij})_{ij}\in {\mathcal {S}}^{3\times 3}_+\) be fixed but arbitrary. In view of Proposition 5.6iii), it is enough to find one \(\varepsilon _A\in {\mathcal {S}}^{3\times 3}\) such that \(f(\varepsilon _A)+A:\mathrm{cof\,}{\varepsilon _A}<0\).

Let \({{\bar{\varepsilon }}}=a\odot b\) with \(a, b\in {\mathbb {R}}^3\) such that \(|{{\bar{\varepsilon }}}|=1\) be a minimizer of \(f_0\) in \({\mathcal {M}}\). In view of \(f({{\bar{\varepsilon }}})=0\), (3.6), and the positive semi-definiteness of A, we infer that

Hence, if \(A:\mathrm{cof\,}{{\bar{\varepsilon }}}<0\), setting \(\varepsilon _A={{\bar{\varepsilon }}}\) finishes the proof.

If \(A:\mathrm{cof\,}{{\bar{\varepsilon }}}=0\), we consider a perturbation of \({{\bar{\varepsilon }}}\) of the form

for which we have that

One can choose \(\delta \in {\mathbb {R}}\) such that \(f({{\bar{\varepsilon }}}_{\delta }^{11})+A:\mathrm{cof\,}{{\bar{\varepsilon }}}_{\delta }^{11}<0\) and thus, conclude the proof, unless \({{\bar{\varepsilon }}}_{33}A_{22}+{{\bar{\varepsilon }}}_{22}A_{33}-2{{\bar{\varepsilon }}}_{23}A_{23}+2(1-\eta ){{\bar{\varepsilon }}}_{11}=0\). If the latter equation is satisfied, we perturb another entry of \({{\bar{\varepsilon }}}\), say \({{\bar{\varepsilon }}}_{12}\), and consider

It follows then that

Hence, a choice of \(\delta \) such that \(f({{\bar{\varepsilon }}}_{\delta }^{12})+A:\mathrm{cof\,}{{\bar{\varepsilon }}}_{\delta }^{12}<0\) is possible, except when the factor in front of \(\delta \) vanishes. Performing this perturbation procedure for all relevant components yields a proof of Step 2, provided the entries \(A_{ij}\) of A do not satisfy the following six equations:

Indeed, taking into account the special structure of \({{\bar{\varepsilon }}}\) as a linearized strain \(a\odot b\), we will rule out the latter case by proving that the linear system

with unknown \(x\in {\mathbb {R}}^6\),

and

does not admit a solution. To show that (5.11) is unsolvable, we split the argument into two cases, based on [32, Lemma 4.1].

The first case assumes that the matrix \(a\odot b\) is of rank one, which means that the vectors a and b are parallel. According to Lemma 5.8i) below, we then know that all components of a and b are different from 0. We now apply Gaussian elimination to show that solving (5.11) is equivalent to solving the reduced system

which is clearly not solvable.

In the second case, \(a\odot b\) has rank two. Thus \(\mathrm{cof\,}(a\odot b) \ne 0\). Hence, by (3.6), we can assume without loss of generality that \(a_2b_1-a_1b_2\ne 0\). If \(a_3\ne 0\) and \(b_3\ne 0\), then \(a_3 (a_2 b_1-a_1 b_2) b_3\ne 0\). If \(a_3=0\), then, by Lemma 5.8, the coefficients \(a_1,a_2\) and \(b_3\) cannot vanish. If in addition \(b_1\ne 0\), then \(a_1(a_2b_3-a_3b_2)b_1 =a_1a_2b_1b_3\ne 0\). If \(b_1=0\), then \(b_2\ne 0\) by Lemma 5.8, and we conclude that \(a_2(a_1b_3-a_3b_1)b_2= a_1a_2b_2b_3\ne 0\). The remaining case \(b_3=0\) follows in the same way after interchanging the roles of a and b. Hence, at least one of the expressions \(a_3 (a_2 b_1-a_1 b_2) b_3\), \(a_1(a_2 b_3-a_3 b_2)b_1\) and \(a_2(a_1b_3-a_3b_1)b_2\) is non-zero.
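The case distinction above can be cross-checked by enumeration. The following Python sketch runs over all integer vectors \(a, b\) with entries in \(\{-2,\ldots ,2\}\) that satisfy the two conditions of Lemma 5.8 and are not parallel, and looks for pairs at which all three expressions vanish simultaneously. The function names and the finite grid are assumptions made for this illustration; such a search supports the argument but does not replace it.

```python
from itertools import product

def satisfies_lemma_5_8(a, b):
    # (i) a and b have no common zero component;
    # (ii) each of a and b has at most one zero entry
    no_common_zero = all((a[i], b[i]) != (0, 0) for i in range(3))
    at_most_one_zero = all(sum(1 for x in v if x == 0) <= 1 for v in (a, b))
    return no_common_zero and at_most_one_zero

def cross(a, b):
    # cross product; it vanishes exactly when a and b are parallel
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def three_expressions(a, b):
    # the three products considered in the rank-two case of the proof
    a1, a2, a3 = a
    b1, b2, b3 = b
    return (a3 * (a2 * b1 - a1 * b2) * b3,
            a1 * (a2 * b3 - a3 * b2) * b1,
            a2 * (a1 * b3 - a3 * b1) * b2)

def counterexamples(bound=2):
    # admissible non-parallel pairs (a, b) at which all three expressions vanish
    grid = range(-bound, bound + 1)
    bad = []
    for a in product(grid, repeat=3):
        for b in product(grid, repeat=3):
            if not satisfies_lemma_5_8(a, b):
                continue
            if cross(a, b) == (0, 0, 0):
                continue  # parallel vectors belong to the rank-one case
            if all(x == 0 for x in three_expressions(a, b)):
                bad.append((a, b))
    return bad
```

On this grid, `counterexamples()` is expected to return an empty list, in agreement with the conclusion that at least one of the three expressions is non-zero.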

Assuming that \(a_3(a_2b_1-a_1b_2)b_3\ne 0\), the Gaussian reduced form of (5.11) reads

which has no solution. The other situations can be treated in the same way after a suitable permutation of rows in (5.11). This shows that (5.11) does not admit a solution, and the proof is completed. \(\quad \square \)

The proof of Theorem 5.7 makes use of the following auxiliary result on the structure of minimizers of \(f_0\) in \({\mathcal {M}}\), cf. (5.9):

Lemma 5.8

Suppose that \({{\bar{\varepsilon }}}\) is a minimizer of \(f_0\) in \({\mathcal {M}}\), where \(f_0\) is defined in (5.8) and \({\mathcal {M}}\) in (5.10). Then \({{\bar{\varepsilon }}}=a\odot b\), where \(a, b\in {\mathbb {R}}^3\) each have at most one zero entry, but not in the same component. In formulas, this means that

-

(i)

\((a_i,b_i)\ne (0,0)\) for all \(i\in \{1,2,3\}\), and

-

(ii)

\((a_i,a_j)\ne (0,0)\) and \((b_i,b_j)\ne (0,0)\) for all \( i, j\in \{1,2,3\}\), \(i\ne j\).