Abstract

In this work we present LSEGO, an approach to drive efficient global optimization (EGO) based on a least squares (LS) ensemble of metamodels. By means of an LS ensemble of metamodels it is possible to estimate the uncertainty of the prediction with any kind of model (not only kriging) and to provide an estimate of the expected improvement function. For the problems studied, the proposed LSEGO algorithm was able to find the global optimum in fewer optimization cycles than required by the classical EGO approach. The more infill points are added per cycle, the faster the convergence to the global optimum (exploitation) and the faster the improvement of metamodel quality over the design space (exploration), especially as the number of variables increases, when the standard single-point EGO can be quite slow to reach the optimum. LSEGO has proven to be a feasible option to drive EGO with an ensemble of metamodels, including for constrained problems, and it is restricted neither to kriging nor to a single infill point per optimization cycle.

Notes

For instance, even with the high-end computer clusters used nowadays in the automotive industry, a single full-vehicle analysis of a high-fidelity crash safety FEM model takes up to 15 processing hours with 48 CPUs in parallel. For CFD analysis, a single complete car aerodynamics model for drag calculation can take up to 30 hours on 96 CPUs. An interesting essay on this “never-ending” need for computer resources in structural optimization can be found in Venkataraman and Haftka (2004).

Only for notational convenience, without loss of generality, we will assume that all equality constraints h(x) can be properly transformed into inequality constraints g(x).

Matlab is a well-known and widely used numerical programming platform, developed and distributed by The Mathworks Inc.; see www.mathworks.com.

A boxplot is a common statistical graph used for visual comparison of the distributions of different variables in the same plane. The box is defined by lines at the lower quartile (25%), median (50%) and upper quartile (75%) of the data. The lines extending above and below each box (called whiskers) indicate the spread of the rest of the data outside the quartiles. Outliers, if any, are represented by plus signs “+” above or below the whiskers. We used the Matlab function boxplot (with default parameters) to create the plots.
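The quantities drawn in a boxplot can be sketched in a few lines. The example below is only an illustration: the function name `boxplot_stats` and the sample data are hypothetical, the whisker rule of 1.5 times the interquartile range is assumed to correspond to the default of Matlab's boxplot, and the quartile interpolation used by Python's `statistics.quantiles` may differ slightly from Matlab's.

```python
import statistics


def boxplot_stats(data, whisker=1.5):
    """Return the quartiles, whisker ends and outliers of a sample.

    Assumption: points farther than `whisker` * IQR from the box are
    treated as outliers (the usual boxplot convention).
    """
    values = sorted(data)
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence = q1 - whisker * iqr
    high_fence = q3 + whisker * iqr
    # Whiskers end at the most extreme data points that are not outliers.
    inside = [v for v in values if low_fence <= v <= high_fence]
    outliers = [v for v in values if v < low_fence or v > high_fence]
    return {
        "q1": q1,
        "median": median,
        "q3": q3,
        "whisker_low": inside[0],
        "whisker_high": inside[-1],
        "outliers": outliers,
    }


# Hypothetical sample with one clearly separated value.
stats = boxplot_stats([1, 2, 3, 4, 5, 6, 7, 8, 9, 30])
```

For this sample the box spans the two quartiles, the upper whisker stops at the largest non-outlying value, and the isolated value is reported as an outlier.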

For further details and recent updates on SURROGATES Toolbox refer to the website: https://sites.google.com/site/srgtstoolbox/.

As a common practice in metamodel-based optimization, the number of points in the initial DOE is often in the range \(5n_{v}\) to \(10n_{v}\).

References

Chaudhuri A, Haftka RT (2012) Efficient global optimization with adaptive target setting. AIAA J 52(7):1573–1578

Desautels T, Krause A, Burdick J (2012) Parallelizing exploration-exploitation tradeoffs in Gaussian process bandit optimization. J Mach Learn Res 15(1):3873–3923

Morales-Enciso S, Branke J (2015) Tracking global optima in dynamic environments with efficient global optimization. Eur J Oper Res 242:744–755

Fang KT, Li R, Sudjianto A (2006) Design and modeling for computer experiments. Computer science and data analysis series. Chapman & Hall/CRC, Boca Raton

Ferreira WG (2016) Efficient global optimization driven by ensemble of metamodels: new directions opened by least squares approximation. PhD thesis, Faculty of Mechanical Engineering, University of Campinas (UNICAMP), Campinas, Brazil

Ferreira WG, Serpa AL (2016) Ensemble of metamodels: the augmented least squares approach. Struct Multidiscip Optim 53(5):1019–1046

Forrester A, Keane A (2009) Recent advances in surrogate-based optimization. Prog Aerosp Sci 45:50–79

Forrester A, Sóbester A, Keane A (2008) Engineering design via surrogate modelling: a practical guide. Wiley, United Kingdom

Ginsbourger D, Riche RL, Carraro L (2010) Kriging is well-suited to parallelize optimization. In: Computational intelligence in expensive optimization problems - adaptation learning and optimization, Springer, vol 2, pp 131–162

Giunta AA, Watson LT (1998) Comparison of approximation modeling techniques: polynomial versus interpolating models. In: 7th AIAA/USAF/NASA/ISSMO symposium on multidisciplinary analysis and optimization, AIAA-98-4758, pp 392–404

Gunn SR (1997) Support vector machines for classification and regression. Technical Report. Image, Speech and Intelligent Systems Research Group, University of Southampton, UK

Haftka RT, Villanueva D, Chaudhuri A (2016) Parallel surrogate-assisted global optimization with expensive functions - a survey. Struct Multidiscip Optim 54(1):3–13

Han ZH, Zhang KS (2012) Surrogate-based optimization - real-world application of genetic algorithms, ISBN 978-953-51-0146-8 edn. InTech, Dr. Olympia Roeva - Editor, Shanghai, China

Henkenjohann N, Kunert J (2007) An efficient sequential optimization approach based on the multivariate expected improvement criterion. Qual Eng 19(4):267–280

Janusevskis J, Riche RL, Ginsbourger D, Girdziusas R (2012) Expected improvements for the asynchronous parallel global optimization of expensive functions: potentials and challenges. Learning and Intelligent Optimization 7219:413–418

Jekabsons G (2009) RBF: radial basis function interpolation for Matlab/Octave, version 1.1 edn. Riga Technical University, Latvia

Jin R, Chen W, Sudjianto A (2002) On sequential sampling for global metamodeling in engineering design. In: Engineering technical conferences and computers and information in engineering conference, DETC2002/DAC-34092, ASME 2002 Design, Montreal-Canada

Jones DR (2001) A taxonomy of global optimization methods based on response surfaces. J Glob Optim 21:345–383

Jones DR, Schonlau M, Welch WJ (1998) Efficient global optimization of expensive black-box functions. J Glob Optim 13:455–492

Jurecka F (2007) Optimization based on metamodeling techniques. PhD thesis, Technische Universität München, München-Germany

Koziel S, Leifsson L (2013) Surrogate-based modeling and optimization - applications in engineering. Springer, New York, USA

Krige DG (1951) A statistical approach to some mine valuations and allied problems at the Witwatersrand. Master’s thesis, University of Witwatersrand, Witwatersrand

Lophaven SN, Nielsen HB, Sondergaard J (2002) DACE - a Matlab kriging toolbox. Tech. Rep. IMM-TR-2002-12, Technical University of Denmark

Mehari MT, Poorter E, Couckuyt I, Deschrijver D, Gerwen JV, Pareit D, Dhaene T, Moerman I (2015) Efficient global optimization of multi-parameter network problems on wireless testbeds. Ad Hoc Netw 29:15–31

Mockus J (1994) Application of Bayesian approach to numerical methods of global and stochastic optimization. J Glob Optim 4:347–365

Ponweiser W, Wagner T, Vincze M (2008) Clustered multiple generalized expected improvement: a novel infill sampling criterion for surrogate models. In: Wang J (ed) 2008 IEEE World congress on computational intelligence, IEEE computational intelligence society. IEEE Press, Hong Kong, pp 3514–3521

Queipo NV et al (2005) Surrogate-based analysis and optimization. Prog Aerosp Sci 41:1–28

Rasmussen CE, Williams CK (2006) Gaussian processes for machine learning. The MIT Press

Rehman SU, Langelaar M, Keulen FV (2014) Efficient kriging-based robust optimization of unconstrained problems. Journal of Computational Science 5:872–881

Schonlau M (1997) Computer experiments and global optimization. PhD thesis, University of Waterloo, Waterloo, Ontario, Canada

Simpson TW, Toropov V, Balabanov V, Viana FAC (2008) Design and analysis of computer experiments in multidisciplinary design optimization: a review of how far we have come - or not. In: 12th AIAA/ISSMO multidisciplinary analysis and optimization conference, Victoria, British Columbia

Sóbester A, Leary SJ, Keane A (2004) A parallel updating scheme for approximating and optimizing high fidelity computer simulations. Struct Multidiscip Optim 27:371–383

Thacker WI, Zhang J, Watson LT, Birch JB, Iyer MA, Berry MW (2010) Algorithm 905: SHEPPACK: modified Shepard algorithm for interpolation of scattered multivariate data. ACM Trans Math Softw 37(3):1–20

Venkataraman S, Haftka RT (2004) Structural optimization complexity: what has Moore’s law done for us? Struct Multidiscip Optim 28:375–387

Viana FAC (2009) SURROGATES Toolbox user’s guide version 2.0 (release 3). Available at website: http://fchegury.googlepages.com

Viana FAC (2011) Multiple surrogates for prediction and optimization. PhD thesis, University of Florida, Gainesville, FL, USA

Viana FAC, Haftka RT (2010) Surrogate-based optimization with parallel simulations using probability of improvement. In: Proceedings of the 13th AIAA/ISSMO multidisciplinary analysis optimization conference, Fort Worth, Texas, USA

Viana FAC, Haftka RT, Steffen V (2009) Multiple surrogates: how cross-validation error can help us to obtain the best predictor. Struct Multidiscip Optim 39(4):439–457

Viana FAC, Gogu C, Haftka RT (2010) Making the most out of surrogate models: tricks of the trade. In: Proceedings of the ASME 2010 international design engineering technical conferences & computers and information in engineering conference IDETC/CIE 2010, Montreal, Quebec, Canada

Viana FAC, Haftka RT, Watson LT (2013) Efficient global optimization algorithm assisted by multiple surrogates techniques. J Glob Optim 56:669–689

Acknowledgements

The authors would like to thank Dr. F.A.C. Viana for the prompt help with the SURROGATES Toolbox and also for the useful comments and discussions about the preliminary results of this work.

W.G. Ferreira would like to thank Ford Motor Company and also the support of his colleagues at the MDO group and Product Development department that helped in the development of this work, which is part of his doctoral research concluded at UNICAMP by the end of 2016.

Finally, the authors are grateful for the questions and comments from the journal editors and reviewers. Undoubtedly their valuable suggestions helped to improve the clarity and consistency of the present text.

Appendices

Appendix A: The kriging metamodel

The kriging model, originally proposed by Krige (1951), is an interpolating metamodel in which the basis functions, as stated in (1), are of the form

with tuning parameters \(\theta_{j}\) and \(p_{j}\) normally determined by maximum likelihood estimation.

With the parameters estimated, the final kriging predictor is of the form

where \(\mathbf {y} = \left [y^{(1)}{\ldots } y^{(N)}\right ]^{T}\), \(\mathbf{1}\) is a vector of ones, \(\boldsymbol{\Psi} = \left[\psi^{(r)(s)}\right]\) is the so-called \(N \times N\) matrix of correlations between the sample data, calculated by means of (12) as

and \(\hat {\mu }\) is given by

One of the key benefits of kriging models is that they provide an uncertainty estimate for the prediction (mean squared error, MSE) at each point \(\mathbf{x}\), given by

with variance estimated by

Refer to Forrester et al. (2008) or Fang et al. (2006) for further details on metamodel formulation.
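As an illustration of the quantities named in this appendix, the following minimal sketch assumes the standard kriging expressions found in Forrester et al. (2008): correlation \(\exp\left(-\sum_{j} \theta_{j} |x_{j}^{(r)} - x_{j}^{(s)}|^{p_{j}}\right)\), predictor \(\hat{y}(\mathbf{x}) = \hat{\mu} + \boldsymbol{\psi}^{T}\boldsymbol{\Psi}^{-1}(\mathbf{y} - \mathbf{1}\hat{\mu})\), mean \(\hat{\mu} = \mathbf{1}^{T}\boldsymbol{\Psi}^{-1}\mathbf{y} / \mathbf{1}^{T}\boldsymbol{\Psi}^{-1}\mathbf{1}\), variance \(\hat{\sigma}^{2} = (\mathbf{y} - \mathbf{1}\hat{\mu})^{T}\boldsymbol{\Psi}^{-1}(\mathbf{y} - \mathbf{1}\hat{\mu})/N\), and the MSE form that includes the correction term for the estimated mean. The function names, the fixed values of \(\theta_{j}\) and \(p_{j}\) (no maximum likelihood search is performed) and the sample data are illustrative assumptions, not the implementation used in this work.

```python
import math


def corr(xa, xb, theta, p):
    """Assumed kriging correlation between two points."""
    return math.exp(-sum(t * abs(a - b) ** q
                         for a, b, t, q in zip(xa, xb, theta, p)))


def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for k in range(n):
        piv = max(range(k, n), key=lambda r: abs(M[r][k]))
        M[k], M[piv] = M[piv], M[k]
        for r in range(k + 1, n):
            f = M[r][k] / M[k][k]
            for c in range(k, n + 1):
                M[r][c] -= f * M[k][c]
    z = [0.0] * n
    for k in reversed(range(n)):
        s = sum(M[k][c] * z[c] for c in range(k + 1, n))
        z[k] = (M[k][n] - s) / M[k][k]
    return z


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def kriging_predict(X, y, x, theta, p):
    """Return (prediction, MSE estimate) at x for fixed theta and p."""
    n = len(X)
    Psi = [[corr(X[r], X[s], theta, p) for s in range(n)] for r in range(n)]
    ones = [1.0] * n
    Pinv_1 = solve(Psi, ones)                  # Psi^{-1} 1
    mu = dot(ones, solve(Psi, y)) / dot(ones, Pinv_1)
    resid = [yi - mu for yi in y]
    Pinv_r = solve(Psi, resid)                 # Psi^{-1} (y - 1 mu)
    sigma2 = dot(resid, Pinv_r) / n
    psi = [corr(X[r], x, theta, p) for r in range(n)]
    Pinv_psi = solve(Psi, psi)                 # Psi^{-1} psi
    y_hat = mu + dot(psi, Pinv_r)
    mse = sigma2 * (1.0 - dot(psi, Pinv_psi)
                    + (1.0 - dot(ones, Pinv_psi)) ** 2 / dot(ones, Pinv_1))
    return y_hat, max(mse, 0.0)                # clip round-off below zero


# Hypothetical one-dimensional sample data.
X = [[0.0], [0.3], [0.6], [1.0]]
y = [math.sin(6.0 * xi[0]) for xi in X]
theta, p = [10.0], [2.0]
y_at_sample, mse_at_sample = kriging_predict(X, y, X[1], theta, p)
y_between, mse_between = kriging_predict(X, y, [0.45], theta, p)
```

Under these assumptions the predictor interpolates the data, so the estimated MSE vanishes (up to round-off) at a sampled point and is positive between samples, which is the property that EGO-type infill criteria rely on.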

Appendix B: Analytical benchmark functions

These functions were chosen because they are widely used to validate both metamodeling and optimization methods, as, for example, in Jones et al. (1998) and Viana et al. (2013).

Branin-Hoo

for the region \(-5 \le x_{1} \le 10\) and \(0 \le x_{2} \le 15\). There are three minima in this region, i.e., \(\mathbf {x}^{\ast } \approx \left (-\pi , 12.275 \right ), \left (\pi , 2.275 \right ), \left (3\pi , 2.475 \right )\) with \(f\left (\mathbf {x}^{\ast }\right ) = \frac {5}{4\pi }\).
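The minima quoted above can be checked numerically. The sketch below assumes the form of the Branin-Hoo function commonly given in the optimization literature, \(f(\mathbf{x}) = \left(x_{2} - \frac{5.1}{4\pi^{2}}x_{1}^{2} + \frac{5}{\pi}x_{1} - 6\right)^{2} + 10\left(1 - \frac{1}{8\pi}\right)\cos(x_{1}) + 10\); the function name `branin_hoo` is illustrative.

```python
import math


def branin_hoo(x1, x2):
    """Branin-Hoo function in its commonly quoted form (an assumption)."""
    b = 5.1 / (4.0 * math.pi ** 2)
    c = 5.0 / math.pi
    t = 1.0 / (8.0 * math.pi)
    return (x2 - b * x1 ** 2 + c * x1 - 6.0) ** 2 \
        + 10.0 * (1.0 - t) * math.cos(x1) + 10.0


# The three minima listed in the text and the stated minimum value.
minima = [(-math.pi, 12.275), (math.pi, 2.275), (3.0 * math.pi, 2.475)]
f_star = 5.0 / (4.0 * math.pi)
values = [branin_hoo(x1, x2) for x1, x2 in minima]
```

At each of the three points the squared term vanishes and the cosine equals \(-1\), so the function value reduces to \(10/(8\pi) = 5/(4\pi)\), in agreement with the value stated in the text.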

Hartman

where \(x_{i} \in \left [0, 1\right ]^{n_{v}}\), with constants \(c_{i}\), \(a_{ij}\) and \(p_{ij}\) given in Table 3 for the case \(n_{v} = 3\) (Hartman-3), and in Tables 4 and 5 for the case \(n_{v} = 6\) (Hartman-6).

In case of Hartman-3, there are four local minima,

with \(f_{local} \approx -c_{i}\) and the global minimum is located at

with \(f\left (\mathbf {x}^{\ast } \right ) \approx -3.862782\).

In case of Hartman-6, there are four local minima,

with \(f_{local} \approx -c_{i}\) and the global minimum is located at

with \(f\left (\mathbf {x}^{\ast } \right ) \approx -3.322368\).
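For the Hartman-3 case, the stated global minimum value can be reproduced with a short sketch. It assumes the usual form \(f(\mathbf{x}) = -\sum_{i=1}^{4} c_{i} \exp\left(-\sum_{j=1}^{n_{v}} a_{ij}(x_{j} - p_{ij})^{2}\right)\). The constants and the minimizer below are the values commonly quoted in the optimization literature and are assumed, not confirmed, to match Table 3 of this work.

```python
import math

# Hartman-3 constants as commonly quoted in the literature (assumption:
# they correspond to the table referenced in the text).
C = [1.0, 1.2, 3.0, 3.2]
A = [[3.0, 10.0, 30.0],
     [0.1, 10.0, 35.0],
     [3.0, 10.0, 30.0],
     [0.1, 10.0, 35.0]]
P = [[0.3689, 0.1170, 0.2673],
     [0.4699, 0.4387, 0.7470],
     [0.1091, 0.8732, 0.5547],
     [0.03815, 0.5743, 0.8828]]


def hartman(x, c=C, a=A, p=P):
    """Negative weighted sum of Gaussian-like bumps centred at rows of p."""
    total = 0.0
    for ci, ai, pi in zip(c, a, p):
        exponent = sum(aij * (xj - pij) ** 2
                       for aij, xj, pij in zip(ai, x, pi))
        total += ci * math.exp(-exponent)
    return -total


# Global minimizer usually reported for Hartman-3 (literature value).
x_star = [0.114614, 0.555649, 0.852547]
f_at_x_star = hartman(x_star)
```

Each term is a bump of depth \(c_{i}\) centred near the corresponding row of \(p_{ij}\), which is why the local minima have values close to \(-c_{i}\).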

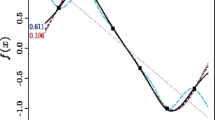

Giunta-Watson

This is the “noise-free” version of the function used by Giunta and Watson (1998)

where \(\mathbf {x} \in \left [-2, 4\right ]^{n_{v}}\).
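A minimal sketch of this function follows. It assumes the smooth form usually attributed to Giunta and Watson (1998), \(f(\mathbf{x}) = \sum_{i=1}^{n_{v}} \left[\frac{3}{10} + \sin\left(\frac{16}{15}x_{i} - 1\right) + \sin^{2}\left(\frac{16}{15}x_{i} - 1\right)\right]\); the function name is illustrative.

```python
import math


def giunta_watson(x):
    """Noise-free Giunta-Watson function (assumed form), any dimension."""
    total = 0.0
    for xi in x:
        u = 16.0 / 15.0 * xi - 1.0
        total += 0.3 + math.sin(u) + math.sin(u) ** 2
    return total


# Where the sine argument is zero, each variable contributes exactly 3/10.
value_2d = giunta_watson([15.0 / 16.0, 15.0 / 16.0])
```

Because the function is a sum of identical one-dimensional terms, it extends to any number of variables \(n_{v}\) without changes to the code.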

Appendix C: SURROGATES Toolbox

The SURROGATES Toolbox (Viana 2009) is a Matlab-based toolbox that aggregates and extends several open-source tools previously developed in the literature for the design and analysis of computer experiments, i.e., metamodeling and optimization. We used version v2.0, but v3.0 already includes EGO variants (see the toolbox website listed under Notes).

The SURROGATES Toolbox builds on the following published third-party software: SVM by Gunn (1997), DACE by Lophaven et al. (2002), GPML by Rasmussen and Williams (2006), RBF by Jekabsons (2009), and SHEPPACK by Thacker et al. (2010). The compilation into a single framework has been implemented and applied in previous research by Viana and co-workers, for example in Viana et al. (2009) and Viana (2011).

Appendix D: A note on sequential sampling vs. one-stage approach

In Ferreira (2016) we investigated some examples with analytical engineering functions. We repeated the one-stage optimization ten times, each with a different initial DOE, using a very high ratio of sampling points to number of variables (see the note on initial DOE size under Notes), i.e., for \(f_{1}(\mathbf{x})\) of Three-Bar Truss \(N = 120\) (\(60n_{v}\)); for Cantilever Beam \(N = 120\) (\(60n_{v}\)); for Helical Spring \(N = 360\) (\(120n_{v}\)) and for Pressure Vessel \(N = 460\) (\(120n_{v}\)).

The results of this experiment are presented in Fig. 18. For the cases investigated, the results showed that there is no guarantee of reaching the exact optimum with a one-stage approach, even when the optimization starts with a high density of sampling points in the design space. Most of these points probably serve only to improve the overall quality of the metamodels (exploration) and do little to help find the exact minimum (exploitation), which is clearly a waste of resources when optimization is the objective.

Fig. 18 Boxplots of the converged results for the analytical engineering functions with one-stage optimization. Each problem was repeated 10 times with a different initial DOE, i.e., for \(f_{1}(\mathbf{x})\) of Three-Bar Truss \(N = 120\) (\(60n_{v}\)); for Cantilever Beam \(N = 120\) (\(60n_{v}\)); for Helical Spring \(N = 360\) (\(120n_{v}\)) and for Pressure Vessel \(N = 460\) (\(120n_{v}\)). Even with a very dense set of initial sampling points, there is no guarantee of reaching the exact optimum. For details refer to Ferreira (2016)

These results confirm our belief that it is worthwhile to apply sequential sampling approaches such as EGO-type algorithms, or some hybrid approach (combined with clustering, for instance), in order to increase the number of points slowly and “surgically” in regions of the design space with a real chance or expectation of improvement in the objective and constraint responses.

In this sense, we reinforce the comment of Forrester and Keane (2009) that metamodel-based optimization must always include some form of iterative search and repetitive infill process to ensure accuracy in the areas of interest in the design space. Accordingly, we agree with the recommendation that a reasonable number of points for starting sequential-sampling metamodel-based optimization is about one third (33%) of the available budget, in terms of true function/model evaluations (or processing time), to be spent in the whole optimization cycle.
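The budget split recommended above amounts to simple arithmetic, sketched below together with a comparison against the \(5n_{v}\) to \(10n_{v}\) rule of thumb mentioned in the Notes. The function name `initial_doe_size`, the returned fields and the example budget are hypothetical and serve only as an illustration.

```python
def initial_doe_size(total_budget, n_vars, fraction=1.0 / 3.0):
    """Split an evaluation budget into initial DOE and infill points.

    `fraction` is the share of the budget spent on the initial DOE
    (about one third, following the recommendation in the text).
    """
    n_initial = max(1, round(total_budget * fraction))
    rule_low, rule_high = 5 * n_vars, 10 * n_vars
    return {
        "n_initial": n_initial,
        "n_infill": total_budget - n_initial,
        "rule_of_thumb": (rule_low, rule_high),
        "within_rule": rule_low <= n_initial <= rule_high,
    }


# Hypothetical case: 60 true-model evaluations, 3 design variables.
plan = initial_doe_size(total_budget=60, n_vars=3)
```

In this hypothetical case one third of the budget also falls inside the rule-of-thumb range, leaving the remaining two thirds for sequential infill points.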

Cite this article

Ferreira, W.G., Serpa, A.L. Ensemble of metamodels: extensions of the least squares approach to efficient global optimization. Struct Multidisc Optim 57, 131–159 (2018). https://doi.org/10.1007/s00158-017-1745-x

DOI: https://doi.org/10.1007/s00158-017-1745-x