Abstract

This work introduces the English Sublexical Toolkit, a suite of tools that utilizes an experience-dependent learning framework of sublexical knowledge to extract regularities from the English lexicon. The Toolkit quantifies the empirical regularity of sublexical units in both the reading and spelling directions (i.e., grapheme-to-phoneme and phoneme-to-grapheme) and at multiple grain sizes (i.e., phoneme/grapheme and onset/rime unit size). It can extract multiple experience-dependent regularity indices for words or pseudowords, including both frequency indices (e.g., grapheme frequency) and conditional probability indices (e.g., grapheme-to-phoneme probability). These tools provide (1) superior estimates of the regularities that better reflect the complexity of the sublexical system relative to previously published indices and (2) completely novel indices of sublexical units such as phonographeme frequency (i.e., combined units of individual phonemes and graphemes that are independent of processing direction). We demonstrate that measures from the toolkit explain significant amounts of variance in empirical data (naming of real words and lexical decision), and either outperform or are comparable to the best available consistency measures. The flexibility of the toolkit is further demonstrated by its ability to readily index the probability of different pseudowords pronunciations, and we report that the measures account for the majority of variance in these empirically observed probabilities. Overall, this work provides a framework and resources that can be flexibly used to identify optimal corpus-based consistency measures that help explain reading/spelling behaviors for real and pseudowords.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

A fundamental component of the cognitive processes of reading and spelling relates to how the mental representation of phonological word forms are associated with their orthographic counterparts. This work introduces the English Sublexical Toolkit, a suite of tools designed to support empirical investigations of the multimodal (e.g., orthographic and phonological) sublexical structures of the English language. As with previous researchers cited in this work, we simply use a set of corpus statistics reflecting the empirical reality of different sublexical units in the English lexicon. While we are not the first to highlight the importance of experience for developing sublexical knowledge, what is novel about the approach used to develop the toolkit is that it considers the implications of experience-dependence to a broader and deeper extent compared to previous work. In essence, the toolkit does not just provide consistency measures for a finite list of real words; rather, it is a whole framework for deriving measures of sublexical consistency (and frequency) according to different assumptions about the underlying nature of sublexical representations, which can then be validated against empirical data by determining which assumptions most improve explanatory power. For example, considering the implications of experience-dependence more deeply lead us to introduce here the completely novel measure of “phonographeme frequency” (e.g., that 38 words in the corpus have the grapheme [CC] pronounced /k/ and only two have it pronounced /tʃ/), which we report explains significant amounts of variance in reading behaviors, for both real words and pseudowords. Altogether, we provide a novel operationalization of experience-dependence in the study of the statistical regularities of the sublexical system, and doing so allows us to consider novel implications of this old idea.

Questions about sublexical processes have most often been addressed in the context of reading, where a number of theoretical models have been proposed in the past decades (for a review, see Rayner & Reichle, 2010) – most prominent among them are two classes of models: localist “dual-route” models and connectionist “triangle” models. In dual-route approaches, such as the influential dual-route cascaded (DRC) model (Coltheart et al., 2001), access to phonological forms from orthographic input can proceed through either of two processes, known as the lexical and sublexical routes. Through the lexical route, also known as the “addressed” route (e.g., Patterson, 1986; Coltheart et al., 1991), whole-word phonological representations are accessed via recognition of whole-word orthographic representations, such as [CAT] to /kæt/ (specific models differ on whether or not they posit mandatory intermediate access through lexical semantics; see, e.g., Rapp et al., 2001). In contrast to the lexical route, the sublexical or “assembled” route allows for a process known as “spelling-to-sound” or “grapheme-phoneme” conversion, wherein access to phonology is achieved not through associating unitary, holistic representations of the whole word but rather through orthographic and phonological units of some grain size smaller than the whole word, such as [C] to /k/ followed by [AT] to /æt/.

Juxtaposed to dual-route models, triangle models eschew any distinction between lexical and sublexical processes, instead positing a network of interconnected orthographic, phonological, and semantic units (the three vertices of the triangle). As a consequence, whereas dual-route models represent lexical knowledge (as opposed to sublexical) in discrete units, triangle models represent it as distributed across the connections between the processing units. While these two approaches to understanding reading exhibit fundamental differences, one thing that they have in common is the concept of sublexical representations – orthographic and phonological units smaller than the whole-word – that are used to generate plausible pronunciations for previously unencountered words. Moreover, while these theories were developed in the context of reading, models of spelling function largely by analogy (on the relationship between reading and spelling, see for example Holmes & Carruthers, 1998; Rapp & Lipka, 2011; Shanahan, 2016), and the ability to generate plausible spellings for novel words is also thought to be supported via these sublexical units. However, the exact nature of these sublexical representations is not well understood, even though they have been considered critical for learning to read and spell (e.g., Apel et al., 2019; Gough & Tunmer, 1986), are implicated in deficits such as dyslexia and dysgraphia (e.g., Beeson et al., 2000; Monsell et al., 1992; Rapp et al., 2002), and are key to understanding cross-linguistic differences (e.g., the relative contributions of sublexical and lexical processing vary markedly across languages, e.g., Frost et al., 1987; Ziegler & Goswami, 2005).

Recently, there have been a number of efforts to more thoroughly characterize the inner workings of sublexical processes and their relationship to lexical processes (e.g., Chee et al., 2020; Siegelman et al., 2020; Siew & Vitevitch, 2019). For example, the size of the representational units at work in the sublexical route has been examined from relatively fine-grained, individual grapheme-phoneme mappings (e.g., [C] ➔ /k/) to relatively coarse-grained mappings, such as the rime (e.g., [AT] ➔ /æt/) or oncleus (e.g., [CA] ➔ /kæ/). In English, for example, a wealth of previous research has indicated that the rime contains the most valuable information from an information theory perspective (e.g., Treiman et al, 1995; Siegelman et al., 2020), and consequently consistency at the rime level has been a focus when accounting for behavior in both reading and spelling tasks (e.g., Dich, 2014; Weekes et al., 2006; Burt & Blackwell, 2008). However, it is apparent both theoretically and empirically that the rime is not sufficient to account for the entirety of the sublexical process. For example, Burt & Blackwell (2008) reported instances of participants spelling pseudowords with novel orthographic rimes, despite those pseudowords having extant phonological rhymes (e.g., /soʊb/ spelled SOAB, despite all rhyming words in English being spelled -OBE). Moreover, the mere fact that one can generate a spelling for a previously unattested rhyme in English (such as -/aɪtʃ/ perhaps spelled -ICHE or -YTCH) demonstrates that sublexical processes must operate, at least to some extent, at the lower level of individual phoneme-grapheme mappings.

The directionality of mappings has also been investigated because spelling consistency, p(G|P) (the probability of the graphemes given the phonemes), and reading consistency, p(P|G) (the probability of the phonemes given the graphemes) dissociate in languages like English. For example, given the letter X, the probability that it is pronounced /ks/ is very high (i.e., reading consistency p(P|G) is high). However, the reverse is not true: the spelling consistency p(G|P) for /ks/ spelled X is relatively lower because of the large number of alternative spellings – /ks/ may be spelled KS, or CKS, or CS, etc. (as in TREKS, PACKS, or EPICS). This phenomenon has been most extensively studied in the context of reading, where it is described as “feedforward” consistency from graphemes to phonemes; however, “feedback” spelling consistency (from phonemes to graphemes) has repeatedly been shown to affect behaviors such as naming latency and lexical decision (see e.g., Ziegler et al., 2008).

In order to better understand these various phenomena in written language processing (consistency effects, grain-size, feedback, etc.), researchers need detailed measures of the units of sublexical representation – in particular, their consistency and frequency. While a number of options currently exist for researchers to collect such measures, they are limited in many ways. For example, the available databases are either in the form of lists of real words with consistency measures (computed in different ways, depending on the database) or tables of correspondences (e.g., the consistency of the rhyme /oʊp/ spelled [OPE] versus [OAP]). Here, we present the English Sublexical Toolkit, a set of tools designed to quantify sublexical units in multiple ways, serving both practical and theoretical purposes. The central premise of the Sublexical Toolkit is that sublexical representations are primarily acquired through learning the associations between orthographic and phonological segments in the context of real words, not through an explicit system of “rules” for converting between letters and sounds or a consciously prescribed set of correspondences. Consequently, a full understanding of the mental representations subserving written language processing requires detailed measures that empirically examine the regularities within the lexicon. The current version of these tools provides both consistency and frequency measures for two levels of granularity: low-level mappings between individual phonemes and individual graphemes, henceforth phonographemes, and higher-level mappings between onsets (syllable-initial consonants) and rimes (vowels plus syllable-final consonants). The consistency measures are available in both the spelling p(G|P) and reading p(P|G) directions in the form of continuous measures, reflecting the probability that a given phoneme will be spelled with a certain grapheme (and vice versa), ranging from near 100% (e.g., /b/ is nearly always spelled [B]) to near 0% (/ɹ/ is rarely spelled [RH]).

There are three particularly novel aspects to this work: First, these measures are accessible in the form of toolkits that allow the user to input grapheme-phoneme/phoneme-grapheme mappings to extract measures for any word, including pseudowords and misspellings. To the best of our knowledge, this is the first tool that provides a method for readily computing consistency measures for any string of letters and phonemes, not restricted to a finite list of real words. This capability in particular enables new opportunities for assessing behaviors with pseudoword tasks, scoring errors (misspellings/mispronunciations), and generating novel stimuli with desired properties.

Second, some of the measures themselves are entirely novel, a consequence of the experience-dependent, corpus-based framework adopted in the current work. In particular, we present original measures of the frequencies of sublexical units, including multi-letter graphemes (e.g., [OUGH]) that are distinct from available unigram, bigram, or trigram frequency measures, as well as “phonographeme” units – the co-occurrences of individual phonemes and individual graphemes (e.g., [CH] pronounced /tʃ/ versus [CH] pronounced /k/). The term “phonographeme” is used throughout this work in a non-directional sense (e.g., it is not juxtaposed with a “graphophoneme”). The concept of a sublexical unit common to both reading and spelling is made clear by examining how consistency and frequency are computed (Eqs. 1–3):

The first two equations are specific to the reading and spelling processes and yet they share their numerator; it is only the denominator that distinguishes between reading and spelling consistency. This follows from the fact that when one experiences a word with a particular grapheme-phoneme mapping, one is simultaneously experiencing a word with the equivalent phoneme-grapheme mapping: for example, an instance of reading aloud the word “cat” entails both experiencing the grapheme-phoneme mapping [C] ➔ /k/ and the phoneme-grapheme mapping /k/ ➔ [C] – in other words, feedback processing ensues feedforward processing, and so the phonographeme frequency of the [C] ➔ /k/ mapping is the same as that of the /k/ ➔ [C] mapping: simply the number of occurrences of [C] ➔ /k/ (which are simultaneously occurrences of /k/ ➔ [C]).

Finally, the tools were made with specific theories of sublexical representations in mind, and as such, we present in detail how they were constructed in terms of those underlying theories. Doing so will better enable us to interpret the empirical data and draw inferences from the relative success or failure of the measures (relative to each other and relative to alternative measures developed by other researchers). Moreover, we are making the tools and codes openly available to researchers so that they can be adapted to test new hypotheses (e.g., alternative rules for syllabic parsing or theories of position coding), and/or extend their capabilities (e.g., to other grain sizes or to other languages).

The remainder of this paper is divided into three sections. The next section (Methods) details the methods used to construct the English Sublexical Toolkit, describing all of the procedures taken and decisions made when computing the various measures of consistency and frequency. The third section (Empirical validations) presents three sets of analyses to validate the toolkit measures (construct validity). It does so by: (1) exploring the similarity of our measures to some of the most commonly used consistency measures previously published in the literature; (2) assessing how well our measures explain naming and lexical decision data from the English Lexicon Project (ELP; Balota et al., 2007); and (3) presenting a novel analysis that accounts for the distribution of different pronunciations in response to a pseudoword reading task. The paper concludes with a discussion (General discussion) highlighting the current capabilities of the toolkit, its limitations, and future directions. A number of appendices are also provided, including vignettes that serve as practical guides for how to use the toolkit.

Methods

Determining the empirical regularities of the English written lexicon requires a large number of decisions about how to operationalize the measures. One contribution of the current effort is to make those decisions explicit and to ground them in cognitive theory. It is challenging to identify all the decisions that must be made, given that they may be hidden by virtue of being based on implicit assumptions. Nonetheless, this section seeks to provide transparency into how the Toolkit was designed to develop a rigorous, coherent system for quantifying English sublexical regularities. The goal is to articulate the theoretical underpinnings of the toolkit such that they generate specific, testable claims that can provide new insights into the underlying nature of sublexical representations in written language. Both the successes and the failures of the toolkit in explaining empirical phenomena allow for testing the theories that informed how the measures were developed. Moreover, future versions of the toolkit can be created by making alternative decisions based on competing hypotheses, thereby allowing for empirically derived support for one hypothesis relative to another. For example, the consistency measures could be recomputed after parsing words according to an alternative to the maximum onset principle (MOP; Kahn, 2015), and meaningful inferences could be drawn if it were found that the alternative resulted in the toolkit better fitting some type of reading or spelling behavior. This type of future work includes many possibilities that will be returned to in the General discussion, including specific examples of how alternative or extended toolkits might be used. The remainder of this section describes each of the major decisions that were made in developing the toolkit, with specific attention paid to the theoretical implications.

Overview of the English Sublexical Toolkit

An overview of the sublexical processes as conceptualized in the Sublexical Toolkit at the phonographeme level is presented in Fig. 1, with an example of reading aloud the visually presented pseudoword BLEASE. The toolkit is available freely from the Open Science Foundation (OSF) at https://osf.io/e95qw/?view_only=167fb28c4842491a885b91435c57b2f0. An overview of the onset/rime level is presented in Fig. 2. The distinction between the two regards the grain size of the representations under examination: at the lowest level, phonographemes (individual phoneme-grapheme mappings) are measured, and at the higher level the units are onsets (syllable-initial consonants, including clusters like CHR or PHL) and rimes (vowels plus following consonants, i.e., the syllabic nucleus and coda). Throughout the manuscript we focus on the reading process, but it should be noted that the toolkit provides both reading consistency p(P|G) and spelling consistency p(G|P) measures. It will be explicitly noted whenever the two directions of processing are importantly distinguished in terms of how the toolkit functions.

The sublexical system as conceptualized in the English Sublexical Toolkit at the phonographeme level, here visualized during the process of reading aloud the visually presented pseudoword BLEASE. A: given a monosyllabic parse with all of the orthographic vowels as a single grapheme (EA_E). B: given a disyllabic parse with EA in the first syllable and a non-silent E in the second syllable (BLEA-SE). The numeric values reflect the reading consistency for that segmental mapping p(P|G). For example, 82% of words with EA_E in the middle of a syllable are pronounced /i/. The mean consistency is higher for the monosyllabic reading (81%) compared to the disyllabic (71%), averaging over all segments; this is largely due to the much lower minimum consistency (the single least-consistent segment) of the disyllabic reading (just 15% for word-final [E] pronounced /eɪ/). Graphemes are represented in [brackets], phonemes in /slashes/. p(P|G) = reading consistency. Gray fill indicates alternative parsings/mappings (e.g., EA_E versus EA and non-silent E)

The sublexical system as conceptualized in the English Sublexical Toolkit at the onset/rime level, here visualized during the process of reading aloud the visually presented pseudoword BLEASE, for two alternative phonemic assignments given the same orthographic parsing (/blis/ versus /bliz/). Gray fill indicates alternative parsings/mappings (e.g., the rime -EASE read /iz/ versus /is/). Graphemes are represented in [brackets], phonemes in /slashes/. p(P|G) = reading consistency

In general, computational models of English reading/spelling must address both parsing of inputs and mapping to outputs (e.g., Gubian et al., 2022; Mousikou et al., 2017; Perry et al., 2007; Pritchard et al., 2012). Models such as the connectionist dual -route model of Perry and colleagues (CDP++, Perry et al., 2010) and the DRC model of Rastle and Coltheart (Rastle & Coltheart, 2000) can generate pronunciations for pseudowords given letters as input by using heuristics to determine how the letters should be grouped into graphemes and what phonemes they should be paired with. The core work of the toolkit presented here is focused on the mappings of phonemes and graphemes but does not have a deterministic heuristic or algorithm that dictates how letters “should” be combined into graphemes or which phonemes should be used. Rather, given a predefined spelling-sound mapping, the toolkit extracts how consistent that choice is with the English lexicon (currently based on a sample of ≈14,000 words). This means that the toolkit’s user must provide both the graphemes and the phonemes, whereas the computational models of reading require only input graphemes to then generate a phonological output.

For example, presented with the pseudoword ADANE, most English speakers will pronounce it /æ 'deɪn/ (Mousikou et al, 2017), indicating that the word was parsed as two syllables, A-DANE, with the final -E in the second syllable grouped with the preceding A as an A-silent-E; we refer to such mappings with an underscore, as in A_E. Given the input ADANE, the DRC model reads the string as /ə 'deɪn/ (Mousikou et al., 2017), differing in the pronunciation of the first letter but otherwise agreeing with the most common human response and the mapping of A_E ➔ /eɪ/. The toolkit does not strictly generate a single pronunciation, but rather can be used to measure the consistency of any given response. For the pseudoword ADANE, for example, at the onset/rime level the toolkit indicates that the most popular human pronunciation is indeed more consistent than is the DRC pronunciation: the mean p(P|G) at the onset/rime level of granularity is 77% for the human response, versus 73% for the DRC response. That is, given the orthographic parse A-DANE, the pronunciation /æ 'deɪn/ is more plausible than /ə 'deɪn/. The current version of the toolkit cannot be used to determine the probability of the parse itself, such as whether a disyllabic parsing A-DANE is more or less likely than a trisyllabic parsing A-DA-NE. However, it can say how consistent A-DA-NE pronounced /'æ də ˌneɪ/ is compared to another pronunciation with the same parsing such as /ə 'dɑ ni/.

The toolkit operates under the self-evident assertion that the knowledge of sound–spelling mappings is grounded in experiential learning. This implies that the relative strength of these connections depends on the amount of experience individuals have with these representations, and this strength in turn can be estimated from the regularity with which sublexical mappings of various grain sizes occur in the English lexicon. This assertion is supported by the preponderance of evidence that pseudoword responses vary greatly both across and within individuals (e.g., Coltheart & Ulicheva, 2018; Ulicheva et al., 2021). Importantly, this variability is much greater than would be expected if the sublexical system operated in a rule-based way that only considers mappings with a high probability, or that requires adherence to larger units such as rimes that are extant in the lexicon. There is clear evidence that individuals generate pseudoword spellings that result in previously unattested rimes – for example, the pseudoword /snoʊb/ has been spelled SNOAB (Burt & Blackwell, 2008), despite the fact that all rhyming words in English are spelled -OBE (thus one expects the spelling SNOBE). We take such evidence as indication that phonographemes are productive units of representation, although their relative importance compared to higher level units like rimes remain an open question.Footnote 1

Altogether, the toolkit quantifies the probability of spelling-sound mappings, both as consistency (the probability of the phoneme given the grapheme, or vice versa) and as frequency (the frequency with which that phonographeme is encountered in the lexicon). For example, in Fig. 1, the p(P|G) reading consistency value 0.82 refers to the proportion of words with EA_E in the syllable medial position that are pronounced /i/. This was computed by dividing the number of words with that pronunciation (e.g., PLEASE, RELEASE) by the total number of words with that or any other pronunciation (e.g., HEARSE, MILEAGE). The toolkit also offers frequency measures that are equivalent simply to the numerator of the consistency measure (i.e., the number of words with that mapping, without dividing by the total number of words with that or any alternative pronunciation). Figure 1 shows that the measures are position-specific: for the pronunciation /blis/ the word-final S ➔ /s/ consistency is 0.43, whereas for the pronunciation /bli `seɪ/ the syllable-initial S ➔ /s/ consistency is 0.76, reflecting the fact that words ending in S are somewhat more often pronounced /z/, whereas syllables beginning S are most often pronounced /s/.

Just as the consistency of a mapping varies depending on its position in the word, consistency at the level of onsets and rimes can differ from the phonographeme level due to the idiosyncrasies of English spelling. One consequence is that the most probable reading at one level may differ from that at another level. For example, the pseudoword BLEASE (Fig. 1) is most probably pronounced /bliz/ when averaging over all phonographeme units, which is more probable than the pronunciation /blis/ because word-final S is more often pronounced /z/ than /s/ (57% versus 43%). However, the situation reverses at the level of the onset and rime, as the rime -EASE is more often pronounced -/is/ as in LEASE than -/iz/ as in PLEASE (55 vs. 45%). In this example, empirical behavioral data (Pritchard et al., 2012) indicates that English speakers have nearly a 2:1 preference for /bliz/ over /blis/, a result more in agreement with the phonographeme than the rime consistency. However, it is generally an open question as to whether one or both of these levels determines behavior, and the extent to which it depends on individual differences or other properties of the stimuli (e.g., orthographic neighbors). Regardless, this is one example of how the toolkit can be used to probe such questions.

The remainder of this section describes how orthographic and phonological representations are conceptualized in the toolkit, and the details of a number of specific decisions and assumptions necessary to operationalize this schema into a working tool.

Corpus

The most basic assumption of the work here is that sublexical knowledge (of sound–spelling mappings) derives from experience with lexical items – consequently, measures of consistency and frequency fundamentally depend upon which words are included in the corpus.

The initial version of the toolkit (version 1.0) is based on approximately 10,000 words, which has been expanded to over 13,000 in the latest update (version 1.1). The initial corpus was formed from three components: all of the monosyllabic words previously coded for Friends/Enemies measures (F/E; Plaut et al., 1996), in order to compare the English Sublexical Toolkit’s measures to the F/E measures; a list of several hundred words that were administered by the authors in other studies of spelling, selected for reasons unrelated to the Sublexical Toolkit; and the rest were the most frequent English words according to the SUBTLEX-US database (Brysbaert & New, 2009). The version 1.1 corpus adds an additional 2688 (the next most-frequent words per the SUBTLEX-US database). The automated R-code based on the version 1.0 corpus successfully parses 99.0% of the words added in version 1.1, which is an indication that even the smaller corpus generalizes to most unseen words. Further details regarding diagnostics of the sufficiency of the corpus are presented in Appendix 3.

Parsing

Perhaps the most fundamental issue to resolve when measuring sublexical properties is how to segment the string into constituents (i.e., how does the lexical item decompose into sublexical units?). There are three aspects to this issue: parsing the phonological word form into sublexical units, parsing the orthographic word form into sublexical units, and encoding the position of these units within their respective strings. For phonological parsing, we adopted the Maximum Onset Principle (MOP, Kahn, 2015; see also Chee et al., 2020), which has the advantage of being well grounded in theories of phonology as well as being readily operationalized for the purposes of sublexical spelling-sound mappings. This principle determines the location of syllabic boundaries by placing consonants in the onset position (i.e., as the start of a new syllable) unless doing so would lead to a phonotactically illegal utterance in English. That phonotactic legality is determined by the sonority hierarchy, which allows consonant clusters so long as they are patterned as follows: the initial phoneme is /s/, which may be followed by a stop such as /p/ or /n/, which may be followed by a liquid /l/ or /ɹ/, or by a glide /j/ or /w/. For example, LOBSTER is parsed as LOB-STER and not LO-BSTER, because the cluster BST would violate the sonority hierarchy (/b/ cannot precede /s/), nor LOBS-TER, because the S should begin the second syllable (/s/ can precede /t/). Application of the MOP results in an internally consistent framework for parsing a string of phonemes (a lexical item) into syllables, and those syllables are defined by consonants (optionally) in the onset, a vowel (mandatorily) in the nucleus, and consonants (optionally) in the coda. This also then determines the onsets and rimes, the latter being simply the concatenation of vowel in the nucleus and any following consonants in the coda (see Fig. 2). It is also worth highlighting that the earlier work of Hanna et al. (1966) and the updates to that work by Fry (2004) and Berndt et al. (1987), suffer from inconsistencies in how word forms were parsed into syllables – and these inconsistencies necessarily had an impact on the measures of spelling-sound consistency. For example, SATYR was parsed as SAT-YR, which not only fails to follow the MOP but also contradicts the parsing of MARTYR (parsed as MAR-TYR).

Position coding

While the MOP provides a clear guide to parsing the words’ phonology, it does not address how to determine equivalency of sublexical positions. The question of letter position encoding has been highly researched yet remains unresolved (see, e.g., Baciero et al., 2022; Gomez et al., 2008; Grainger, 2018; Grainger & Van Heuven, 2004). To the best of our knowledge, no parallel research has investigated the encoding of position in the context of cross-modal mappings, i.e., between the position of graphemes and the position of phonemes (or higher-level units). In the seminal work of Hanna and colleagues (1966), sublexical mappings were considered in three positions: syllable-initial, medial, or final. In the recent work of Chee and colleagues (2020), positions were measured in serial order from the first syllable (e.g., onset of the first syllable, onset of the second syllable, the third, etc.). These approaches have serious consequences when computing spelling-sound consistency. For example, the serial order position schema implies that the phonographeme /tʃ/ ➔ CH in the word CHAIR is independent of the one in MA-CHETE, and both of those are independent of the one in O-VER-CHARGE (as they are the onset of the first, second, and third syllables, respectively). In the syllable-initial/medial/final scheme, on the other hand, all of those /tʃ/ ➔ CH mappings are treated as being in the same position (syllable-initial).

When mapping phonographemes, the English Sublexical Toolkit does not use the serial position schema, as there is currently no particular evidence to support that sound–spelling mappings are represented in this way. Indeed, there are reasons to question the plausibility of a serial position schema, which would imply (among other things) that learning the /tʃ/ in WHICH is spelled CH does nothing to inform one about the potential spelling of the /tʃ/ in OSTRICH (simply because the former is the coda of the first syllable whereas the latter is the coda of the second). Instead, the Sublexical Toolkit adopts and extends the scheme of Hanna and colleagues (1966) from three to five categories: word-initial, syllable-initial, syllable-medial, syllable-final, and word-final. The additional distinction is whether the syllable is the first or last in the word; this was done based on the empirical observation that certain mappings never occur word-initial/final but do occur syllable-initial/final if the syllable is internal. This both-ends scheme has found support both in studies of letter position in reading (e.g., Fischer-Baum et al., 2011) and spelling (e.g., Fischer-Baum et al., 2010) and verbal working memory (e.g., Henson, 1999). For example, parsing the word HAPPY by the MOP results in syllabification as HA-PPY, and as such the /p/ maps to PP. Per the three-position syllabic schema of Hanna and colleagues, /p/ ➔ PP is legal for initial positions – however, it is immediately apparent this is not true for the start of a word. Therefore, in the Sublexical Toolkit the mapping /p/ ➔ PP is possible for syllable-initial, but not word-initial, mappings.

An analogous distinction is made at the onset/rime level: onsets are either word-initial or syllable-initial (onset of a second or later syllable), and rimes are either word-final or syllable-final (rime of a penultimate or earlier syllable). This effectively addresses the issue of how to handle rhymes in multisyllabic words – monosyllabic words are all treated as having only a word-final rime, whereas multisyllabic ones are composed of one or more syllable-final rimes and a single word-final rime.

Pronunciation

While a word’s correct spelling is not subject to debate (alternative spellings or American/British differences notwithstanding), the same is not true of pronunciation. There are multiple sources of variability in speakers’ pronunciation, including regional differences, social class, gender, age, and education (Rickford, 1996). Any measure of English spelling-sound consistency must grapple with the challenge presented by the fact that there is no monolithic, universal English language. For example, the well-documented cot-caught merger (e.g., Labov et al., 2006) entails that speakers who pronounce such words identically must have less consistent sound–spelling mappings compared to those who do not have the merger, as the merger of the two vowels /ɑ/ and /ɔ/ is not reflected in orthographyFootnote 2. Indeed, an intriguing direction for future research is to determine the extent to which individual differences in reading/spelling are attributable to idiosyncratic pronunciation (i.e., person-specific “accents”), which may affect the degree to which English sound–spelling mappings are perceived as (in)consistent. Individuals whose internal phonological representations are more consistently reflected in standardized spelling may be at an advantage for learning to read and spell, relative to those whose dialects are more opaquely related to spelling.

In any case, across-speaker variability in pronunciation limits the ability of any consistency measure to account for behavioral data to the extent that the population sampled will vary in how well it matches with the “canonical” pronunciations used to inform the consistency measure. Indeed, this is a considerable limitation of the database of Hanna and colleagues (1966), as it is apparent that not all of the phonological representations in their corpus reflect contemporary standard American English. For example, the AY in yesterday was mapped to the same vowel as the Y in baby and the UI in guitar – but contemporary standard American pronunciation maps the AY ➔ /eɪ/, the Y ➔ /i/, and the UI ➔ /ɪ/. To address this issue, the work at hand adopted two primary sources for determining the underlying phonology in the corpus data used to construct the consistency measures. Specifically, the Carnegie Mellon Pronouncing Dictionary (Weider, 2005) was used as the primary determinant of lexical phonology, and in instances where there were multiple pronunciations, all were included in the corpus, regardless of whether the alternatives were homographs (as in BASS /beɪs/ versus /bæs/) or regionalisms (as in PECAN /pi kɑn/ versus /pɪ kæn/). In instances where there was an apparent error in the dictionary or a missing entry, the Cambridge English pronouncing dictionary (American accent) was consulted (Jones, 2011).

Morphology

While we acknowledge the evidence that sublexical and lexical processes are interactive in nature, the measures presented here are designed to reflect sublexical processes independent of lexical influences. In particular, the consistency measures do not consider morphological representations of any form, inflectional or otherwise. This is necessary when applying the MOP to parse the phonology, because the resulting syllabic boundaries will routinely be at odds with morphologically defined boundaries (e.g., the MOP parses EATER as EA-TER, whereas morphologically the boundary is EAT-ER).

It is certainly true that lexical knowledge, such as knowing a word’s part of speech, will influence performance on tasks that require sound–spelling mappings, but by definition it will not inform the sublexical processes that the toolkits are intended to reflect. For example, the heard-pseudoword /klaɪd/ presented as a past-tense verb (“She /klaɪd/ the toy on the ground.”) is more likely to be spelled ending in -ED, such as CLIED, than when presented as a noun (“She bought two pounds of /klaɪd/), such as CLIDE. We do not consider it a limitation of the toolkit that it does not integrate such information, because it is designed to reflect stages of processing that occur prior to, or perhaps are encapsulated from, lexical influences. Future work will determine how morphological, semantic, and syntactic representations impinge upon reading and spelling processes even during pseudoword tasks, but this is beyond the scope of the current toolkit.

Graphemes

One fundamental assumption we make is that the sublexical system requires a one-to-one mapping of graphemes to phonemes. It is obvious that English does not have a one-to-one mapping of letters to phonemes (e.g., consider the homophones NIGHT and KNIGHT), which may be why there is the common conception of “silent letters” and many studies on how silent letters are “read” (e.g., Ehri & Wilce, 1982; Gingras & Sénéchal, 2019; Perry et al., 2014). However, we conceptualize the sublexical system as requiring that every grapheme be mapped to a pronunciation, and thus in this sense there are no “silent letters”, only graphemes whose pronunciations differ from those of their constituent letters. For example, in the word WEIGH there are just two phonemes, /w/ and /eɪ/ – in the Sublexical Toolkit, the mappings are /w/ ➔ W and /eɪ/ ➔ EIGH. One alternative framework might map the GH as “silent”, but such a framework would face the serious challenge of how to determine which letters are pronounced and which are “silent”.

In the recent work of Chee et al. (2020), a relatively small inventory of consonant graphemes was used, with all other letters assigned to the vowel graphemes. For example, the only grapheme corresponding to /m/ is listed as M (Chee et al., 2020, Table 11) – it is unclear how this allows for accounting for words like COMMA, LAMB, or DAMN. In the earlier works of Fry (2004), Berndt et al. (1987), and Hanna et al. (1966), a larger inventory of graphemes was used, presumably on the original basis of the procedure of Hanna and colleagues that focused on spelling (i.e., given their corpus of ≈17,000 words, they listed all graphemes needed to spell the phonemes in that corpus). For example, /m/ corresponds to potentially M, MM, MN, and LM (Fry, 2004). The Sublexical Toolkit began with the graphemic inventory originated by Hanna and colleagues (1966) but made parsimonious adjustments over the course of incorporating words into the corpus. Specifically, letters were assigned to graphemes such that the final inventory had as few unique graphemes as possible. For example, ROGUE could potentially be parsed in three ways:

-

(1)

With /g/ ➔ GUE

-

(2)

With /g/ ➔ G, and so consequently the vowel /oʊ/ ➔ O_UE

-

(3)

With /g/ ➔ GU and /oʊ/ ➔ O_E

The first option was employed by Hanna and colleagues and was restricted to syllable-final positions as in ROGUE and MORGUE. The second option is appealing because it allows the /g/ to have the most common spelling, G, but it is unappealing because it requires positing a grapheme made of noncontiguous letters (/oʊ/ ➔ O_UE). That problem would be exacerbated by encountering other vowel mappings in a similar context; for example, LEAGUE would require an EA_UE grapheme. Instead, the third option was adopted for the Sublexical Toolkit based on the principle of parsimony (Epstein, 1984), because it minimizes the total number of graphemes required. It does so because both the GU and O_E graphemes are necessary for other words – the GU as in GUESS (not included in the original Hanna et al., 1966 nor the Berndt et al., 1987, but adopted by Fry, 2004) and the O_E as in GONE.

In other words, the third option allows for the words ROGUE, GUESS, and GONE to be represented with six graphemes total (R, O_E, GU, SS, G, and N), whereas the first option would require eight (R, O, GUE, G, UE, SS, O_E, and N) and the second option would require at least seven (R, O_UE, G, UE, SS, O_E, and N). This parsimonious approach was taken throughout the process of building the toolkit, keeping the number of graphemes to a minimum while also providing a principled heuristic for whether or not to adopt new graphemes. It is also worth noting that this principle did not necessarily result in grouping orthographic consonants and vowels together to form graphemes as with GU. For example, while QU is sometimes mapped to /k/, as in QUICHE, in other words the Q is mapped separately from the U, as in QUICK, where the U maps to /w/. That did not require positing an additional phonographeme, as the mapping of U to /w/ is not limited to the QU bigram, but also occurs in words like CUISINE, DISTINGUISH, and PERSUADE. All of the graphemes identified for the Sublexical Toolkit that occur at least 1% of the time and in at least two different word forms are presented in Appendix 1 (for vowels) and Appendix 2 (for consonants), as a series of phoneme-grapheme correspondences (e.g., /f/ spelled F, FF, PH, or GH); all graphemes including those occurring very infrequently are accessible through the English Sublexical Toolkit itself available on OSF (https://osf.io/e95qw/?view_only=167fb28c4842491a885b91435c57b2f0).

Final E’s

One hallmark of English spelling is the prevalence of the “silent E”, referring to when the letter E appears after a consonant but does not receive its own pronunciation. It has long been noted that this E tends to have an effect on the quality of the vowel, typically “lengthening” (see, e.g., Joshi et al., 2008) – hence the distinction between BID /bɪd/ and BIDE /baɪd/. We prefer the term “final E” instead, to highlight the fact that these letter E’s do in fact convey information about pronunciation, and moreover, some are not silent in any sense but instead reflect a non-linear mapping between letters and sounds. As such, there are two categories of final E: those traditionally called “silent E” and those we call “non-linear E”. Instances of the first category are always referred to using underscores, as in the A_E of BAKE or O_E of POSE – these silent E’s modify the quality of a preceding vowel. Unlike the silent E’s, non-linear E’s do not modify a preceding vowel, but rather represent a vowel themselves, specifically either schwa /ə/ or /ʌ/. This second category of final E is very clearly seen when comparing words such as MUSCLE and MUSSEL. While they are pronounced identically, the /əl/ at the end of the words maps directly (linearly) onto the EL in MUSSEL, whereas the schwa /ə/ in MUSCLE maps onto the final, non-linear E as though the order of the phonemes were reversed (the phonological order is vowel + consonant, but the orthographic order is consonant + vowel). In some works, these are treated as syllabic consonants (including that of Hanna et al., 1966). Within the corpus used to build the toolkit, in addition to the common final -LE ending (TABLE, MAPLE, etc.) we also encountered less frequent but analogous instances of -RE (e.g., THEATRE). We further added to this category the idiosyncratic ONE and ONCE, including words in which they are affixed (e.g., SOMEONE). Consistent with the idea that no letter is truly silent, these E’s were treated as non-linear mappings of /ʌ/. For example, the phonology of ONE, /wʌn/, is mapped as /w/ ➔ O, /ʌ/ ➔ (non-linear) E, /n/ ➔ N.

The letter X

The letter X is unique in that it is the only instance (in English) of a single grapheme used to represent a consonant cluster, typically /ks/ or /gz/. A consequence of employing the MOP is that X is “divided” across syllables when appearing in multisyllabic words. For example, TAXI ➔ /'tæk si/ implies the X is represented both as the coda of the first syllable (/k/) and the onset of the second syllable (/s/). We adopt the same accommodation to the MOP as Chee et al. (2020) by including the letter X with the earlier syllable, effectively parsing the word as TAX-I. An alternative would be TA-XI, which in fact may be predicted by alternative, orthographic-based, parsing rules – but it would suggest a phonotactically illegal English syllable, /ksi/.

We note that others, including the seminal work of Hanna and colleagues (1966), have at times mapped other graphemes onto phonological units consisting of more than one phoneme, most conspicuously /kw/ ➔ QU (as in QUICK or QUIET). This was never done in the English Sublexical Toolkit, as the phonographeme level of representation is defined to be the smallest plausible mapping, i.e., individual phonemes to individual graphemes – as such, the letter X is the only exception. For example, the /kw/ ➔ QU of Hanna et al. (1966) was decomposed into /k/ ➔ Q and /w/ ➔ U. This decision was also compatible with the criteria for deciding on the graphemic inventory (see “Grapheme inventory and grapheme parsing”), as some words with QU cannot be mapped to /kw/ (as in QUICHE), and some words require /w/ ➔ U even in the absence of Q (as in CUISINE).

Measures available in the English Sublexical Toolkit

The methods described above were used to construct a system for extracting a number of sublexical regularities from the lexicon along phonological and orthographic dimensions. These methods were applied to a corpus of over 13,000 English words to compute their sublexical sound–spelling consistency and frequency measures. For an analysis of the sufficiency of the corpus size, see Appendix 3. Critically, these words also formed the basis for measuring the consistency/frequency of any hypothetical string of letters mapped to any potential string of phonemes (or vice versa). For example, the rime -ASTE was found to be pronounced as -/eɪst/ in 88% of words in the corpus (e.g., WASTE, TASTE) and as -/æst/ in 12% (e.g., CASTE). The Sublexical Toolkit can be used not only to look up the values for words included in the corpus, such as WASTE and CASTE, but also to compute the values for words not included in the corpus (regardless of their lexical status). This includes pseudowords such as DASTE, which would be most consistent if pronounced as /deɪst/ rather than as /dæst/ (but either pronunciation would be more consistent than, e.g., /dɪst/ or /dust/).

In total, there are ten measures offered by the toolkit: p(P|G) reading consistency, p(G|P) spelling consistency, phoneme frequency, grapheme frequency, and phonographeme frequency, each at the phonographeme and the onset/rime level. They are available both segmentally (e.g., the consistency of the word-final CH ➔ /k/ mapping in STOMACH) and as summary statistics over the whole word (e.g., the mean consistency of STOMACH averaging across S ➔ /s/, T➔ /t/, O ➔ /ʌ/, etc., or the minimally or maximally consistent segment across the word). Currently, all of the measures are weighted by type, not token (e.g., there are two electronic formats of the toolkit, Excel worksheets and R code, which provide the same information but differ somewhat in their ease of use. In general, the R code Sublexical Toolkit is very fast both at processing words and searching for words or segments with desired properties, whereas the Excel worksheets better support detailed inspection of single words.

There are two particularly novel contributions of these tools. First, they can be used to compute the measures for any desired string, because there is no limitation to the corpus of ≈ 14,000 word; rather, those words form the basis on which any strings can be judged as consistent. This is similar to the correspondence tables of Hanna et al. (1966) and the updated versions from Fry (2014) and Berndt et al. (1987), except those have not been converted into digital tools. Moreover, those resources suffer from a number of errors and internal inconsistencies originating in the 1966 work of Hanna and colleagues, as described throughout this Methods section. Second, the methods used to develop the measures are themselves novel – in particular, the frequency measures are unlike any previously published in the literature. While orthotactic unigram, bigram, and trigram frequency measures are available elsewhere (as are analogous phonotactic measures), no database has quantified the frequency of graphemes, which are conceptually distinct from those orthotactic units. The phonographeme frequency is also an entirely novel measure, as is the concept of non-directional sublexical units. Finally, it is also worth noting that the phoneme frequency measure is novel relative to uniphone frequency measures available from phonotactic databases (see Vitevitch & Luce, 2004), because here the phonemes are coded according to the five-position schema (word-initial/final, syllable-initial/medial/final), which has not previously been used to investigate the effects of phoneme frequency.

We have included vignettes and video guides with further details and practical explanations of how to use the various components of the toolkit (both Excel-based and R-based) on OSF at: https://osf.io/e95qw/?view_only=167fb28c4842491a885b91435c57b2f0.

Empirical validations

The following sections present a series of empirical validations of the toolkit measures: (1) a comparison of the toolkit’s measures with two other sets of measures available in the literature; (2) a series of stepwise regression analyses of English Lexicon Project data (ELP; Balota et al., 2007) to explore the contribution of the toolkit measures to explaining variance in reading behaviors after controlling for surface and lexical variables; and (3) a novel analysis of the pseudoword reading data of Pritchard et al. (2012) to assess the toolkit measures’ ability to account for the within-item variability in pseudoword pronunciations. An internal validation of the Sublexical ToolkitFootnote 3, specifically the sufficiency of the corpus size to establish reliable measures, is also presented in Appendix 3.

Comparisons with other consistency measures

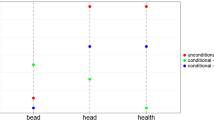

Previous efforts to develop English sound–spelling consistency measures have varied considerably in their methods. Nonetheless, given that they have had some success in accounting for behavioral data with reaction time and accuracy in lexical decision and oral reading of real words, significant positive correlations are expected between the various measures. Here, we examine the correlations between the set of toolkit measures with two other sets: the Onset and Rime consistency norms from Chee et al. (2020) and the Friends/Enemies (F/E) consistency measures from Plaut et al. (1996). These correlations are useful for understanding the extent to which different methodological choices impact the resulting consistency measures (e.g., how to account for syllabic position, how to parse the graphemes, etc.). In addition, they give indications as to how much consistency measures vary as a consequence of different grain sizes and different directions, p(P|G) and p(G|P).

Materials

We first identified words that are coded in our database as well as those available in Chee et al. (2020) and F/E measures of Plaut et al. (1996). Of the words in the toolkit database, 9164 were also coded for rime consistency by Chee et al. (2020), and 9016 for onset consistency.Footnote 4 We specifically used the “composite” measures from Chee et al., which are the mean values across all syllables in the word, and so the toolkit measures used for this purpose were also the mean values. For the F/E measure, which by definition corresponds just to the rime, 2861 words were coded in our database – this number is smaller because the F/E measure is computed only for monosyllabic words, whereas the other measures included in this analysis are for both monosyllabic and multisyllabic words.

Analyses

The Pearson correlation between each set of measures was computed, without correction for multiple comparisons. As reported in Table 1, the vast majority of the correlations that are significant have p values < 0.001, and so would survive even stringent corrections such as Bonferroni.

Results

As shown in Table 1, most pairwise correlations between measures are significant and positive. Only five correlations are non-significant, three of those being with the Sublexical Toolkit phoneme and grapheme frequency measures – this is not surprising, as there is no particular reason that phoneme or grapheme frequency should be correlated with consistency measures at the level of onsets or rimes. The phonographeme frequency measure is more highly correlated with the consistency measures than the phoneme or grapheme frequency measures, however. The implication is that more consistent spellings are associated with more frequent phonographeme units, which is nearly a tautology (although frequency and consistency are not the same, it stands to reason that more consistent mappings must generally be more frequent in the lexicon).

The cells highlighted in yellow in Table 1 draw attention to the correlations that logically should be the highest, as they represent a conceptual match between the measures: they are the correlations between measures matching in both direction and grain size. Indeed, these six correlations are the highest overall, ranging from 0.594 (for p(P|G) rime consistency with the F/E measure) to 0.867 (for p(G|P) rime consistency with the F/E measure). The other pattern of note is that the toolkit p(G|P) measures are generally more similar to the F/E p(G|P) measures, whereas the toolkit p(P|G) measures are generally more similar to the Chee et al. p(P|G) measures.

Summary

Generally, the correlations between conceptually similar methods are high, although in some specific instances perhaps not as high as one might expect. It is worthwhile reviewing the primary differences between the approaches that might account for the discrepancies. The F/E measure is based only on monosyllabic words, which necessarily limits how much it might correspond to any approach that also accounts for multisyllabic words. Moreover, the way in which multisyllabic words influence the consistency measures is different between the approach taken here and that of Chee et al. (2020), due to the way in which position is coded. The approach of Chee and colleagues uses a serial position coding scheme and consequently, although both monosyllabic and multisyllabic words contribute jointly to computing consistency, it is only the first syllable of multisyllabic words that have an impact on the consistency values for monosyllabic words. This is unlike the toolkit approach, which uses a both-ends position coding scheme, and consequently it is both the first and the last syllable of multisyllabic words that have an impact on the consistency values for monosyllabic words. As a concrete example, this means that the rhyme -/ɪn/ as in KIN is treated as the same as that in KIN-DRED per the Chee et al. approach (both are first syllable rimes), whereas for the toolkits’ approach it is treated the same as that in NAP-KIN (both are word-final rimes). Which of these approaches is closest to psychological reality remains an open question.

Regression of ELP data

As a further validation of the toolkits, we conducted a series of stepwise multiple regression analyses using data from the English Lexicon Project (ELP; Balota et al., 2007). The goal of these analyses is both to confirm that the toolkit measures contribute significant unique variance in explaining behavioral data from real word reading tasks (naming and lexical decision), and to demonstrate a theoretical contribution of the various measures. Specifically, the stepwise procedure was used to ascertain the extent to which the theoretical order of precedence of the measures is reflected empirically in their relative importance. In addition, these analyses first control for a number of surface and lexical variables, which is a conservative approach to assessing the importance of the consistency measures.

To complement the stepwise regression, we also present the results of elastic net regression to uncover the relative importance of all the variables: surface, lexical, and sublexical, both the toolkit measures and those from Chee et al., 2020 (the F/E measures were not included as they are available only for monosyllabic words). Elastic net regression can be used to determine an optimized subset of predictors from a larger pool, without any a priori decisions about the order in which variables should be tested, unlike stepwise regression. It also has advantages over other forms of regression in terms of dealing with collinearity, which is important here due to correlations between the various predictors (Tomaschek et al., 2018). Here we specifically use elastic net regression with repeated cross-validation, splitting the data into training and testing sets in order to report a measure of the relative importance when maximally accounting for the behavioral data from the ELP.

Materials

In total, 9164 words were both available in the ELP data set (Balota et al., 2007) and the toolkit corpus. In the instance of homographs, the pronunciation with the higher consistency was selected for the toolkit measures. Both the Naming and Lexical Decision data were extracted from the ELP, and both reaction time (RT) and accuracy were modeled, resulting in a total of four separate stepwise regressions. In addition to the toolkit measures, the following surface and lexical variables were also retrieved from the ELP database (or elsewhere, as noted in the following): Length (in letters), Ortho_N (number of orthographic neighbors), Phono_N (number of phonological neighbors), Freq_N (mean frequency of orthographic neighbors), Freq_Phono_N (mean frequency of phonological neighbors), OLD (mean Levenshtein distance to the 20 closest orthographic neighbors), OLDF (sum frequency of those neighbors), PLD (mean Levenshtein distance to the 20 closest phonological neighbors), PLDF (sum frequency of those neighbors), NSyll (number of syllables), NMorph (number of morphemes), and LgSUBTLWF (log word frequency, SubtlexUS database; Brysbaert & New, 2009). For the elastic net regression only, all of the consistency measures from Chee et al. (2020) were also included (consistency of the Onset, Nucleus, Coda, Oncleus [onset+nucleus], and Rime, in both the reading and spelling directions). For all of the sublexical measures the mean value, across all segments in the word, was entered, rather than the minimum (or the sum, which is confounded with word length).

Stepwise regression analyses

Multiple linear regression models were computed in a stepwise fashion, similar to the approach of Chee et al. (2020), in six steps. At each step past the first, sublexical variables were entered both as main effects and as interactions with word frequency (LgSUBTLWF), in consideration of the well-established interaction between lexical frequency and sublexical measures like consistency (e.g., Andrews, 1982; Cortese & Simpson, 2000). In addition, multicollinearity was assessed at each step by the use of variance inflation factors (VIF), computed with the R package car (version 3.1-0; Fox and Weisberg, 2019). All of the sublexical variables had VIF scores < 10 when entered at their respective steps in the modelFootnote 5.

-

Step one: The base model included all surface and lexical variables listed above in Materials plus two toolkit measures, Phoneme_LgFreq and Grapheme_LgFreq. This order of entry is arguably a conservative test of the value of the consistency measures, as it is not clear that lexical variables impinge upon outcome outcomes such as naming latency prior to sublexical variables. Nonetheless, these variables were entered first, as the focus is on determining the unique contribution of the various toolkit measures to explaining variance in the ELP behavioral data.

-

Step two: The Phonographeme_LgFreq measure was entered second, on the basis that graphemic parsing and phonemic assignment occur early in the reading process (see Fig. 1). This measure does not reflect consistency and as such is not specific to either the reading or spelling direction.

-

Step three: Phonographeme p(P|G) reading consistency was entered third, given the hypothesis that consistency at lower-level units takes precedence over high levels, although a reasonable case could be made for the reverse.

-

Step four: Onset/Rime p(P|G) reading consistency was entered fourth, reflecting the higher-level nature of those units.

-

Step five: Phonographeme p(G|P) spelling consistency was entered fifth, considering that feedback from phonological processing should arise only after feedforward processing begins.

-

Step six: Onset/Rime p(G|P) spelling consistency was entered last, again considering that feedback and higher-level units might be expected to affect behavior last.

Table 2 reports the beta coefficients, associated p values, and the change in adjusted R2 (∆R2) for each variable when first entered in its respective stepwise model (e.g., the reported beta coefficient for Phonographeme p(P|G) reflects the magnitude and direction of its effect on the outcome measure when entered in step 3, not its value in the final model of step 6). We also report, in Table 3, the Bayesian information criterion (BIC) for each stepwise model; the BIC reflects a more conservative approach to identifying the best model, as it applies a greater penalty for model complexity and thus will tend to select a model with fewer variables. We adopt the common interpretation that a change in BIC (∆BIC) < 2 provides essentially no support, ∆BIC of 4–7 provides considerable support, and ∆BIC > 10 provides substantial support for the more complex model relative to the less complex one (Burnham & Anderson, 2004). The regression models, R2, and BIC values were all computed in R with the base stats package (version 4.2.1; R Core Team, 2022). Significance of the ∆R2 was assessed with F-statistics provided by the anova function in the stats package.

Stepwise regression results

-

Step one: As shown in Table 2, the surface and lexical variables entered together in step one explained significant variance: total R2 = 0.41 for Naming RT, 0.18 for Naming Accuracy, 0.51 for Lexical Decision RT, and 0.33 for Lexical Decision Accuracy. With respect to the toolkit measures in this base model, first of all Phoneme_LgFreq was a significant predictor of both Naming RT and Accuracy such that words with more frequent phonemes were read more slowly (p < 0.001) and less accurately (p < 0.05) relative to words with less frequent phonemes. Because the direction of these effects is opposite what one might expect, we examined the first-order correlations between Phoneme_LgFreq and Naming RT and Accuracy to determine whether there might be a suppression effect in the multiple regression. This revealed that the association with RT was positive even without controlling for the other variables in step one, whereas the association with Accuracy was indeed reversedFootnote 6. There was no significant relationship between Phoneme_LgFreq and Lexical Decision RT or Accuracy.

-

Second, Grapheme_LgFreq was a significant predictor of all four outcomes (p’s < 0.05), such that responses were both faster and more accurate for words with relatively more frequent graphemes.

-

Step two: The effect of Phonographeme_LgFreq was significant for all four outcome measures (p’s < 0.001), as were the interactions with word frequency. For both Naming and Lexical Decision, words with frequent phonographemes were responded to more quickly and more accurately. This effect was attenuated for high- compared to low-frequency words for Naming RT, Naming accuracy, and Lexical Decision accuracy. The reverse was true of Lexical Decision RT (the effect was attenuated for low- compared to high-frequency words), although this p value was less robust (p ≈ 03, compared to p ≈ 0.009 for Lexical Decision accuracy and < 0.001 for Naming RT and accuracy). The ∆R2 was significant for all four outcome measures, explaining an additional 1.14% for Naming RT, 2.49% for Naming Accuracy, 0.21% for Lexical Decision RT, and 0.28% for Lexical Decision Accuracy.

-

Step three: The effect of Phonographeme p(P|G) consistency was significant for Naming accuracy (p < 0.01) and for Lexical Decision RT (p < 0.05). While it was not significant for Naming RT or Lexical Decision accuracy, the interactions with lexical frequency were significant for all but Lexical Decision RT (p’s < 0.05). Specifically, For Naming, words with higher reading consistency were read more quickly and more accurately, and these effects were significantly greater for low-frequency compared to high-frequency words. For Lexical Decision, words with higher reading consistency were responded to more quickly and more accurately, but in the case of accuracy the effect was greater for high- compared to low-frequency words. The ∆R2 was significant for all four outcome measures (p’s < 0.001), explaining an additional 0.61% for Naming RT, 0.59% for Naming Accuracy, 0.03% for Lexical Decision RT, and 0.04% for Lexical Decision Accuracy.

-

Step four: The effect of Onset/Rime p(P|G) consistency was significant for all four outcome measures (p’s < 0.001), with responses being both faster and more accurate for words with greater reading consistency. The interaction with word frequency was significant for both Naming outcomes (p’s < 0.001) but neither of the Lexical Decision outcomes (p’s > 0.05). For Naming, the effects of onset/rime reading consistency were greater for low- compared to high-frequency words. The ∆R2 was significant for all four outcome measures (p’s < 0.001), explaining an additional 0.82% for Naming RT, 0.57% for Naming Accuracy, 0.07% for Lexical Decision RT, and 0.09% for Lexical Decision Accuracy.

-

Step five: The effect of Phonographeme p(G|P) spelling consistency was significant for all four outcome measures (p’s < 0.001 except for Lexical Decision Accuracy, p < 0.01). The interaction with word frequency was significant for both Naming outcomes (p’s < 0.001) and for Lexical Decision RT (p < 0.05) but not Lexical Decision Accuracy (p < 0.10). Interestingly, for Naming, words with higher spelling consistency were read more slowly and less accurately, and these effects were significantly greater for high-frequency compared to low-frequency words. For Lexical Decision, as with Naming words with higher spelling consistency were responded to more slowly and less accurately, and this effect on RT was again greater for high- compared to low-frequency words. These findings may indicate interference/competition due to feedback. The ∆R2 was significant for all four outcome measures (p’s < 0.001 except for Lexical Decision Accuracy, p < 0.05), explaining an additional 0.59% for Naming RT, 0.66% for Naming Accuracy, 0.23% for Lexical Decision RT, and 0.05% for Lexical Decision Accuracy.

-

Step six: The effect of feedback Onset/Rime p(G|P) spelling consistency was significant only for Naming RT (p < 0.05), and the interaction with word frequency was significant for both Naming RT (p < 0.01) and Naming Accuracy (p < 0.01); no significant effects were found for Lexical Decision RT (p’s > 0.10). For Naming RT, words with consistent mappings were read more quickly, and this effect was significantly greater for low-frequency compared to high-frequency words. For Naming Accuracy, the significant interaction of consistency by word frequency indicated that low-frequency words relative to high-frequency words were read more accurately if the mapping was consistent. The ∆R2 was significant only for Naming RT (p < 0.01) and marginally so for accuracy (p < 0.10), explaining an additional 0.07% for Naming RT and 0.03% for accuracy.

The BIC values are reported in Table 3 for each stepwise regression, reporting main effects prior to interactions. Negative ∆BIC indicates support in favor of the more complex model and positive ∆BIC indicates support against the more complex model. Naming RT: all but two measures have substantial support for improving model fit. The first exception is Phonographeme p(P|G), which has considerable support against being included, however there is substantial support in favor of its interaction with word frequency. This suggests that reading consistency’s effects on Naming are particularly tied to lexical frequency (e.g., only low-frequency words benefit from having consistent mappings). Second, there is moderate evidence against including Onset/Rime p(P|G), and no evidence in support of it interacting with word frequency. Naming Accuracy: the pattern of results mirrors that for Naming RT. Lexical Decision RT: only Phonographeme_LgFreq, Onset/Rime p(P|G) reading consistency, and Phonographeme p(G|P) spelling consistency receive considerable or substantial support. There is considerable support against including any of the interactions with word frequency as well as against Onset/Rime p(G|P) spelling consistency. Lexical Decision Accuracy: there is substantial support only for Phonographeme_LgFreq, and considerable support against most of the other measures (no evidence either way for Phonographeme_LgFreq interacting with word frequency, Onset/Rime p(P|G), or Phonographeme p(G|P)) .

Stepwise regression summary

The results of the stepwise regression analyses in Tables 2 and 3 confirm that the toolkit measures explain significant amounts of unique variance after controlling for surface and lexical variables. The directions of the effects are generally as expected, with greater consistency/frequency associated with faster and more accurate responses. Similarly, interactions with word frequency typically indicate greater effects for low-frequency relative to high-frequency words. However, in some instances the effects of p(G|P) spelling consistency, which can be considered as feedback in the context of reading tasks, were opposite those of p(P)G) reading consistency. The pattern of reverse effects for feedback relative to feedforward consistency was also observed in some of the analyses of ELP data conducted by Chee et al., (2020), and previously observed in Yap and Balota (2009). In those studies, the apparently inhibitory effects arising from feedback were linked specifically to onset consistency; here, we did not separately model onset and rime consistency but rather only the composite of the two. Instead, here the inhibitory effects were found for Phonographeme p(G|P) spelling consistency, which may or may not be driven by the onsets in particular.

The BIC analyses (Table 3) present a more parsimonious account, in particular suggesting a much smaller role for consistency in Lexical Decision compared to Naming, and then only for consistency at the phonographeme level, not onset/rime. The BIC approach indicates that the difficult-to-interpret interaction between phonographeme reading consistency and word frequency in the context of Lexical Decision RT (Table 2, p ≈ 0.03) is quite possibly spurious, as the evidence goes against it (ΔBIC 7). It also presents substantial evidence against the possibility that feedback from the level of the onset/rime p(G|P) plays a role in either Naming or Lexical Decision, although these results might differ if the stepwise procedure entered variables in a different order.

Elastic net regression analyses

To address the possibility that the selected order of variables biases the results presented here, we also conducted an elastic net regression. This approach provides a method of determining the relative importance of the variables in an unconstrained fashion (i.e., without specifying an order of entry as in stepwise regression). Elastic net regression (Zou & Hastie, 2005) combines the benefits of the least absolute shrinkage and selection operator (LASSO) method, which supports variable selection by setting the coefficients of less important ones to zero (Tibshirani, 2011), and ridge regression, which outperforms LASSO regression in the case of highly correlated variables (Hastie, Tibshirani, & Friedman, 2009). Importantly, this has an effect of protecting “the estimates for the coefficients against collinearity-induced enhancement” (page 263; Tomaschek et al., 2018), essentially taking into account correlations between the predictors.

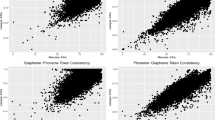

All of the surface, lexical, and toolkit measures included in the stepwise regression models were included in the elastic net regression, as well as the ten consistency measures from Chee et al. (2020): Onset, Nucleus, Coda, Oncleus, and Rime consistency in both the reading and spelling directions. The R package glmnet (version 4.1-4; Friedman et al., 2010) was used to fit the elastic net regression, and variable importance was obtained from the package caret (version 6.0-93; Kuhn, 2022), function varImp. Repeated tenfold cross-validation was used with 20 repetitions, separately for each of the four outcome measures: Naming RT, Naming Accuracy, Lexical Decision RT, and Lexical Decision Accuracy. The primary results of interest are the variable importance metrics, reflecting the absolute value of the scaled coefficients. Specifically, all variables were Z-scored prior to entry in the elastic net regression, and Fig. 3 depicts the variable importance as relative to the predictor with the largest coefficient (which was word frequency, for all outcome measures).

Variable importance from elastic net regressions. Toolkit measures in bold, consistency measures from Chee et al. (2020) in italics. RT = reaction time

Elastic net regression results

Figure 3 reports variable importance relative to the predictor with the largest coefficient, which was word frequency for all outcome measures. Naming RT (Fig. 3, top left): Of the 14 consistency measures (ten from Chee et al., 2020, and four from the toolkits), the most important was Chee and colleagues’ Onset reading consistency (25% as important as word frequency, ranked 8th), followed by the Sublexical Toolkit’s Phonographeme spelling consistency (20%, ranked 11th) and Onset/Rime reading consistency (16%, ranked 12th). However, the toolkit’s Phonographeme_LgFreq measure was more important than any of the consistency measures (52%, ranked 4th), as was its measure of phoneme frequency (Phoneme_LgFreq, 56%, ranked 3rd). The only consistency measure with importance less than 1% of that of word frequency was Chee and colleagues’ Oncleus reading consistency (1%, ranked 31st).

Naming Accuracy (Fig. 3, top right)

The most important consistency measure was the toolkit’s Phonographeme spelling consistency (28%, ranked 5th), followed by Chee and colleagues’ Rime reading consistency (15%, 10th) and Oncleus reading consistency (13%, ranked 13th). However, as with Naming RT, both the toolkit’s Phonographeme_LgFreq (63%, ranked 2nd) and Phoneme_LgFreq (45%, ranked 3rd) measures from the were more important. Two consistency measures had less than 1% of the importance of word frequency: Chee and colleagues’ Rime spelling consistency (0.3%, ranked 32nd) and the toolkit’s Onset/Rime spelling consistency (1%, ranked 30th).

Lexical Decision RT (Fig. 3, bottom left)

The most important consistency measure was the toolkit’s Phonographeme spelling consistency (13%, ranked 11th), followed by its Phonographeme reading consistency (7%, 16th) and Chee and colleagues’ Oncleus reading consistency (7%, ranked 19th). Both the toolkit’s Grapheme_LgFreq (16%, ranked 6th) and Phoneme_LgFreq (16%, ranked 7th) measures were more important. The only consistency measure with importance less than 1% of that of word frequency was Chee and colleagues’ Onset spelling consistency (0%, ranked 33rd).

Lexical Decision Accuracy (Fig. 3, bottom right)

The most important consistency measure was Chee and colleagues’ Oncleus reading consistency (6%, ranked 14th), followed by their Nucleus spelling consistency (5%, 15th) and the Sublexical Toolkit’s Phonographeme spelling consistency measure (5%, ranked 17th). However, the three frequency measures from the Sublexical Toolkit all ranked as more important: Phonographeme_LgFreq (9%, ranked 9th), Grapheme_LgFreq (9%, ranked 11th), and Phoneme_LgFreq (6%, ranked 13th). Two consistency measures had less than 1% of the importance of word frequency: Chee and colleagues’ Onset spelling consistency (0%, ranked 33rd) and the toolkit’s Onset/Rime spelling consistency (1%, ranked 32nd).

Elastic net regression summary