Abstract

Pupil size change is a widely adopted, sensitive indicator for sensory and cognitive processes. However, the interpretation of these changes is complicated by the influence of multiple low-level effects, such as brightness or contrast changes, posing challenges to applying pupillometry outside of extremely controlled settings. Building on and extending previous models, we here introduce Open Dynamic Pupil Size Modeling (Open-DPSM), an open-source toolkit to model pupil size changes to dynamically changing visual inputs using a convolution approach. Open-DPSM incorporates three key steps: (1) Modeling pupillary responses to both luminance and contrast changes; (2) Weighing of the distinct contributions of visual events across the visual field on pupil size change; and (3) Incorporating gaze-contingent visual event extraction and modeling. These steps improve the prediction of pupil size changes beyond the here-evaluated benchmarks. Open-DPSM provides Python functions, as well as a graphical user interface (GUI), enabling the extension of its applications to versatile scenarios and adaptations to individualized needs. By obtaining a predicted pupil trace using video and eye-tracking data, users can mitigate the effects of low-level features by subtracting the predicted trace or assess the efficacy of the low-level feature manipulations a priori by comparing estimated traces across conditions.

Introduction

Pupillary responses are sensitive and versatile indicators of physiological changes accompanying or underlying human cognition (see Binda & Gamlin, 2017; Mathôt, 2018; Strauch et al., 2022a; Wilhelm, 2008 for reviews). When measuring pupil size in daily life, a complex pattern of constrictions and dilations emerges. What makes this signal so complex? Besides steady-state factors affecting baseline pupil size, such as age and overall luminance (Watson & Yellott, 2012), pupil size also responds to all kinds of sensory and cognitive events (Strauch et al., 2022a). This multitude of parallel factors affecting pupil size makes it challenging to dissociate the different components. For instance, pupillary light responses assessed by a neurologist (Wilhelm, 2008) might be confounded by attention or arousal modulations. Psychologists or cognitive neuroscientists, in turn, are usually interested in such attentional effects exclusively, and thus aim to eliminate possible confounds due to light levels. To study one isolated aspect, such as cognitive modulations, highly controlled stimulus materials and laboratory settings are usually adopted. Here, we introduce “Open dynamic pupil size modeling” (Open-DPSM), a convolutional model capable of modeling the effects of visual low-level features on pupil size changes using dynamic and complex stimuli such as videos with unconstrained eye movement, which in turn allows estimation of attentional effects. We make Open-DPSM fully available with open-source Python scripts and an accessible graphical user interface to the community.

Modeling pupil size

As pupil size changes reflect an intertwined combination of low-level visual events and higher cognitive events, it is necessary to mitigate the influence of unwanted confounders. Brightness changes, eliciting the pupil light response (PLR), are the most prominent low-level features. Besides stringently controlling luminance, modeling is used to estimate the PLR to complex and dynamic stimuli from luminance changes. The subtraction of this modeled trace from measured pupil size then yields a more “purified” trace of cognitively driven pupil size changes (David-John et al., 2018; Fanourakis & Chanel, 2022; Napieralski & Rynkiewicz, 2019; Raiturkar et al., 2016; Wong et al., 2020). Such models can be roughly divided into two categories: steady-state models to estimate PLR under equilibrium state (Moon & Spencer, 1944; Raiturkar et al., 2016; Watson & Yellott, 2012) and dynamic models incorporating the PLR under transient influence of the light (Fan & Yao, 2011; Longtin & Milton, 1989; Pamplona et al., 2009; Usui & Hirata, 1995). As expected, for dynamic and constantly changing stimuli, dynamic models are found to be more effective for the accurate estimation of pupil size change, even though they aren't widely adopted yet (Fanourakis & Chanel, 2022). The adaptation of many of these models is challenging, as they incorporate the biomechanical functions of muscles and the feedback loop from the brain that are thought to control pupillary responses, which require complicated computations or additional experimental conditions/calibrations to estimate free parameters and individual differences (Soleymani et al., 2012; Zandi & Khanh, 2021).

As an alternative to such biophysiological models, studies have adopted convolutional approaches to model pupil dynamics in response to both higher-level cognitive events and lower-level visual events. As pupil size exhibits a relatively slow response to the perceptual and cognitive events that drive it, pupil size at any given time reflects the consequence of multiple, overlapping, distinct perceptual and cognitive events (Denison et al., 2020; Wierda et al., 2012). Assuming that a linear time-invariant system underlies pupillary changes, two consecutive events elicit responses that overlap with each other in time, resulting in an overall pupil response reflecting the sum of both responses. A gamma-shaped response function (RF) (Fig. 1A) is usually adopted to approximate each of those delayed responses to any event (e.g., changes in stimulus material as shown in Fig. 1B, C). More specifically, as schematically outlined in Fig. 1, each event is convolved with a response function. The “stronger” the event (Fig. 1C), the higher the amplitude of the pupillary response (Fig. 1D). Visual events, such as brightness changes, influence pupil size not only transiently but also lead to a sustained new size (e.g., a constricted pupil if brightness is increased). To model this sustained response, the pupillary response is further cumulated (Fig. 1D blue line). A number of studies have exploited this convolutional nature to either model the effects of cognitive events on pupil responses or to retrieve the timing and strength of cognitive events using deconvolution techniques (de Gee et al., 2014; Denison et al., 2020; Hoeks & Levelt, 1993; Kang & Wheatley, 2015; Knapen et al., 2016; Wierda et al., 2012). On the other hand, Korn and Bach (2016) also used the convolutional approach to model luminance changes. To this end, two response functions were fitted to (a) model sustained changes to luminance in general and (b) model temporary 'overshoots' to increases in luminance. These overshoots consist of short-lasting pupil constrictions after luminance increments that are stronger than expected if only sustained luminance changes are taken into account. It is possible that such constrictions are the result of a low-level feature other than a sustained luminance change. The effect of luminance can be modeled out as a nuisance covariate to allow for the study of cognition-induced pupil size changes (for existing software, see Psycho Physiological Modelling (PsPM); Korn et al., 2017). Other than luminance changes, convolution should, in principle, also allow modeling the effects of other low-level feature changes, such as contrast, color, or spatial frequency (see Strauch et al., 2022a for a review).
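The convolution logic described above can be illustrated with a minimal sketch. It assumes an Erlang-shaped RF with placeholder parameter values, a 25-Hz event series, and a negative sign for brightening events; the function and variable names are hypothetical and are not taken from the Open-DPSM code.

```python
import numpy as np

def erlang_rf(t, n=10.1, t_max=0.93):
    """Erlang-shaped response function t**n * exp(-n*t/t_max), peak-normalized.
    The parameter values are placeholders for illustration only."""
    h = t**n * np.exp(-n * t / t_max)
    return h / h.max()

fs = 25.0                          # samples per second (assumed)
t = np.arange(0, 3, 1 / fs)        # 3-s response window
rf = erlang_rf(t)

events = np.zeros(250)             # 10 s of event strengths
events[25] = 1.0                   # brightening at 1.0 s
events[40] = 0.5                   # weaker brightening at 1.6 s, overlapping in time

# Brighter input constricts the pupil, hence the negative sign (assumed convention).
transient = -np.convolve(events, rf)[: events.size]
# Cumulating the transient response yields the sustained change in pupil size.
sustained = np.cumsum(transient) / fs
```

Because the system is treated as linear and time-invariant, the response to the two overlapping events equals the sum of the responses to each event alone.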

Fig. 1 Convolution of response function and visual events. A Pupil response function (RF) for convolution, based on Hoeks and Levelt (1993). B Examples of several movie frames. C Exemplary data of luminance changes over time, which can be either positive (change to brighter) or negative (change to darker). Changes are extracted as the difference in luminance between two consecutive images in B and then aggregated to time-series data. D Results of the response function in A convolved with the time-series luminance changes in C, resulting in transient (temporary) changes (black line) and accumulated (sustained) changes (blue line). Note that positive values in C indicate positive luminance change (brighter); the predicted pupil size in D therefore changes in the opposite direction of A

Current model

Although Korn and Bach (2016) successfully captured the characteristics of pupillary responses to simple and temporally separated luminance changes, it is unknown whether this approach can predict pupil size changes when observers watch more dynamic, complex, and temporally overlapping visual changes, such as in a movie. Building on existing modeling and theoretical advancements, Open-DPSM directly applies the convolution approach to the extracted visual events of movies over time. We will additionally demonstrate that Open-DPSM succeeds in flexibly predicting pupil size for highly dynamic visual input without fixation restrictions by incorporating: (i) a transient response to contrast changes; (ii) weighted contributions of different visual field regions; and (iii) gaze-contingent visual event extraction. Why and how well these features improve the modeling results is elaborated on incrementally throughout this manuscript.

Visual event extraction

To model pupil size dynamics, visual events (i.e., luminance changes) are extracted from the stimulus material first (here: movie frames). For each movie frame, a three-color channel (red, green, blue; RGB) image (Fig. 1B) is loaded in an 8-bit matrix. Then, luminance changes are computed as described in the following.

Convert RGB to CIELAB

To better match how the human visual system “perceives” computer images, each RGB image frame is converted to CIELAB space (International Commission on Illumination, 2004) with the function “cvtColor” in the Python package OpenCV. The first channel L* of the CIELAB space is used as the input value to calculate luminance on the screen.

Gamma correction to convert L* to physical luminance

The L* extracted from the image represents the relative luminance on a scale from black (0 as minimum value) to white (255 as maximum value). Note that these values do not represent the absolute amount of physical light emitted by the monitor, as the monitor scales the input nonlinearly to luminance using a gamma function. Typically, OLED screens apply a gamma value of 2.2, which prevents luminance saturation and thus enhances the aesthetic appearance of the images (Cooper et al., 2013). The higher this gamma value, the stronger the nonlinearity and the darkening of lower input values. Physical luminance in candela per square meter (cd/m2) is hence computed by applying a gamma correction to the L* input. This physical luminance (referred to as luminance henceforth) is used to model pupil size change because a roughly linear relationship between physical luminance change and the amplitude of the pupillary response to luminance change was found within the current limited range of luminance changes in movies (see Supplementary Fig. 1). Note, however, that more extreme luminance ranges result in non-linear links due to flooring/ceiling effects (see Watson & Yellott, 2012).
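As a rough illustration of this step, the sketch below maps 8-bit L* values to luminance with a simple power law. The exact form of the correction used in Open-DPSM is not given here, so the formula, the function name, and the default values (gamma of 2.2 and a maximum of 212 cd/m2, as reported for the display in the Apparatus section) should be read as assumptions.

```python
import numpy as np

def lstar_to_luminance(l_star, gamma=2.2, max_luminance=212.0):
    """Map 8-bit L* values (0-255) to approximate luminance in cd/m2.

    Assumes a pure power-law display response:
    luminance = max_luminance * (L* / 255) ** gamma.
    """
    relative = np.asarray(l_star, dtype=float) / 255.0
    return max_luminance * relative**gamma

# A mid-grey input maps to well below half of the maximum luminance,
# reflecting the darkening of lower input values.
mid_grey = lstar_to_luminance(128)
```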

Luminance change

The change in overall luminance per frame is calculated by taking the mean difference in luminance across all pixel values between two frames. More specifically, for our movies with a 25-Hz frame rate, we calculate the difference between each frame and the frame two positions earlier (i.e., two frames separated by 80 ms) to mimic the sampling rate of the human visual system (Intraub, 1981; Potter, 1975; Potter et al., 2002). The changes in luminance at the first two frames (at times 0 s and 0.04 s) were calculated by subtracting the luminance of a homogeneously black image from these initial frames.
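A minimal sketch of this computation follows, assuming the frames are already converted to luminance and stacked in one array. The function name and the `lag` parameter are hypothetical. Because averaging is linear, the mean of the pixel-wise differences equals the difference of the frame means, which the code uses.

```python
import numpy as np

def luminance_changes(frames, lag=2):
    """Mean luminance difference between each frame and the frame `lag` earlier.

    frames: array of shape (n_frames, height, width) in cd/m2.
    The first `lag` frames are compared against a homogeneously black image.
    """
    frames = np.asarray(frames, dtype=float)
    mean_lum = frames.reshape(frames.shape[0], -1).mean(axis=1)
    padded = np.concatenate([np.zeros(lag), mean_lum])   # black frames before onset
    return padded[lag:] - padded[:-lag]

# Four tiny 2 x 2 "frames" with uniform luminance 10, 20, 50, and 50 cd/m2.
frames = np.stack([np.full((2, 2), v) for v in (10, 20, 50, 50)])
changes = luminance_changes(frames)
```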

Dataset

Participants & stimulus material

To train and test our models, eye-tracking data were collected from n = 15 participants (MAge = 24.43; SDAge = 2.43 years; seven males) who watched twenty movie clips of 60 s each in random order. Movie clips were selected from a larger set published earlier (Gestefeld et al., 2020). The subset of movies, including 18 cartoon/animated movies and two movies with real actors, was selected because these contained relatively many luminance changes.

Apparatus

A large OLED65B8PLA LG 65” TV (145 by 80 cm; 88.1° by 56.1° visual angle) displayed the movies at a resolution of 1920 by 1080 pixels and a refresh rate of 100 Hz. The maximum (100%) brightness of the TV was 212 cd/m2 and the gamma value was 2.2. Participants sat 75 cm away from the screen while they kept their head fixed in a chin- and forehead rest. The built-in speaker of the TV played the audio of the movie at an average loudness of around 50 dB (range 40–70 dB).

Eye movement and pupil size data of both eyes were recorded with a tower-mounted EyeLink 1000 (SR Research) at 500 Hz. The eye tracker was set to circular fitting of the pupil and its diameter served as the pupil size measure. The TV was connected to a separate desktop computer with an installed Python-based PsychoPy package that allowed controlled movie presentations and EyeLink communication (version 2022.2.4, Peirce et al., 2019).

During the experiment, the only source of light apart from the stimulus-displaying TV was the EyeLink communication monitor, resulting in less than 1 Lux (lx) at eye position.

Procedure

The participants watched the movies without further task instructions other than keeping their heads still. An eye-tracker calibration (five-point calibration and validation) was performed before the start of the first trial and every five trials (i.e., movie clips) during the experiment. Participants started trials/movies self-paced. If a participant moved her/his head, a recalibration was performed, and participants watched the movie again.

Modeling pupil size

Benchmark models

Before describing the more advanced steps in building Open-DPSM, we introduce two benchmark models for pupil size predictions, adjusted for continuous video input and modeling: (1) a prior-less polynomial model, and (2) an adaptation of the model by Korn and Bach (2016). To optimize the model, the root mean squared error (RMSE) was minimized by fitting parameters using ordinary least squares minimization with a Nelder–Mead simplex search algorithm (“minimize” function in Python’s scipy package). We used explained variance (R2) between actual and fitted pupil size change to evaluate model performance.
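The fitting procedure can be sketched on toy data as follows. The example fits the peak time and amplitude of a synthetic Erlang-shaped response by minimizing RMSE with scipy's Nelder–Mead search. The toy model, the starting values, and the definition of R2 as 1 − SSres/SStot are assumptions for illustration; the article does not specify which R2 definition was used.

```python
import numpy as np
from scipy.optimize import minimize

def erlang_rf(t, t_max, amplitude, n=10):
    """Erlang-shaped response with peak `amplitude` at time `t_max` (n fixed)."""
    s = t / t_max
    return amplitude * s**n * np.exp(n * (1.0 - s))

def rmse(params, t, observed):
    t_max, amplitude = params
    if t_max <= 0:                       # keep the simplex in the valid range
        return np.inf
    return np.sqrt(np.mean((observed - erlang_rf(t, t_max, amplitude)) ** 2))

def r_squared(observed, predicted):
    ss_res = np.sum((observed - predicted) ** 2)
    ss_tot = np.sum((observed - observed.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

# Synthetic "pupil response": known parameters plus a little noise.
rng = np.random.default_rng(0)
t = np.arange(0, 3, 0.04)
observed = erlang_rf(t, 0.5, 1.0) + rng.normal(0, 0.01, t.size)

fit = minimize(rmse, x0=[0.8, 0.5], args=(t, observed), method="Nelder-Mead")
predicted = erlang_rf(t, *fit.x)
r2 = r_squared(observed, predicted)
```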

Polynomial model

The polynomial model served as a test of whether a model with the same number of free parameters as the later introduced models can account for the changes in pupil size across time. We fitted a quintic (fifth-degree) polynomial, corresponding to the number of parameters in the later described ‘Contrast response model’, to all the pupil data traces of all the movies for each participant with one set of best-fitting parameters.

Unsurprisingly, the performance of the polynomial model was poor (R2 < 0.001).

Extending Korn and Bach (2016)

Korn and Bach (2016) suggested that pupil size changes in response to luminance changes can best be explained by two distinct linear time-invariant (LTI) systems, each employing a unique RF. The RF in the LTI1 modeled the sustained pupillary dilation and constriction to luminance change (Fig. 2B) and the RF in the LTI2 captured the extra ‘overshoot’ in constriction responding to luminance increases (i.e., the residual difference between the red lines in Fig. 2A, B) that was not captured by LTI1. Korn and Bach (2016) tested the model using static and discrete stimuli with alternating luminance levels every 5 s. The two RFs were first fitted to event-related pupil responses and then pupil prediction was evaluated with time-series luminance events. To predict pupil size with continuous and overlapping visual events, the model by Korn and Bach (2016) was extended to apply to movie-watching data.

Fig. 2 Adapting Korn and Bach (2016) to model event-related pupil responses with RFs and applying them to continuous time series. A Grand mean of event-related pupil responses to luminance increases (constriction, red) and decreases (dilation, blue); B Fitted cumulative event-related pupil responses (see subplot for original derivative) for LTI1 dark (blue) and bright (red) events. Note that Korn and Bach (2016) only used dilation data to model the LTI, so the pupil constriction here (red) was created by inverting the modeled pupil dilation pattern. C Predicted difference between dilation and constriction of the LTI2 to capture the overshoot in response to luminance increases. D Actual (solid) and predicted (dashed) event-related pupil responses for constrictions (red) and dilations (blue), with the prediction of constriction based on the combination of the two RFs in panels B and C. E Exemplary convolution of the two RFs of both LTI systems to continuous luminance changes (dashed blue and green), in comparison to actual pupil data (solid grey). F A weighted combination of the two LTI systems (dashed brown) and the comparison to actual pupil data (solid grey)

Modeling methods and results

Event-related pupil response extraction. To extract event-related pupil responses, events of luminance changes in the movies had to be detected first. As we intended to mimic the predefined and rather large discrete luminance changes in Korn and Bach (2016), the luminance changes with varying intensities throughout the movie were determined post hoc with a threshold (all the luminance changes below ±3 cd/m2 were removed; final luminance change magnitudes ranging from – 45.8 to 46.3 cd/m2). Similar to Korn & Bach’s stimulus design, events in too short succession (below 1 s) were removed, which reduced crosstalk between consecutive events. Event-related pupil responses were extracted by segmenting the pupil time-series data into 3-s segments using each event as the onset. Each event-related pupil response was baseline corrected by subtracting the average pupil size of the first 250 ms after event onset, as the pupil takes approximately 250 ms to start responding to a luminance change. Event-related pupil responses were further z-standardized by dividing them by the overall standard deviation across all event-related pupil responses of all participants. After extracting all luminance-related pupil responses, the grand mean of dilation (Fig. 2A blue trace) and constriction (Fig. 2A red trace), and the difference between them were calculated.
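The extraction steps above can be sketched as below. The sketch assumes that the luminance-change series and the pupil trace share one sampling grid, and it reads "events in too short succession" as dropping every event that has a neighbouring event within 1 s; both points, as well as the function names, are assumptions rather than details confirmed by the text.

```python
import numpy as np

def select_events(change, fs, threshold=3.0, min_gap=1.0):
    """Indices of supra-threshold changes with no other event within `min_gap` s."""
    idx = np.flatnonzero(np.abs(change) >= threshold)
    times = idx / fs
    keep = []
    for i, t in enumerate(times):
        before = i == 0 or t - times[i - 1] >= min_gap
        after = i == len(times) - 1 or times[i + 1] - t >= min_gap
        if before and after:
            keep.append(idx[i])
    return np.array(keep, dtype=int)

def epoch(pupil, onsets, fs, window=3.0, baseline=0.25):
    """Cut `window`-s segments at each onset and subtract the mean of the
    first `baseline` s after onset. Segments running past the end are skipped."""
    n_win, n_base = int(window * fs), int(baseline * fs)
    segments = [pupil[i : i + n_win] for i in onsets if i + n_win <= len(pupil)]
    segments = np.array(segments, dtype=float).reshape(-1, n_win)
    return segments - segments[:, :n_base].mean(axis=1, keepdims=True)

fs = 100.0
change = np.zeros(1000)
change[[100, 150, 400, 990]] = [5.0, -4.0, -10.0, 8.0]   # cd/m2
pupil = np.arange(1000.0)                                 # placeholder pupil trace

onsets = select_events(change, fs)
responses = epoch(pupil, onsets, fs)
```

Dividing `responses` by the standard deviation pooled over all participants would complete the standardization step described in the text.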

Modeling the pupillary responses with two LTI systems. Following Korn and Bach (2016), the two RFs were then fitted to the grand mean of dilation and to the difference between dilation and constriction, respectively, using the gamma probability density function. This function has three free parameters (see formula (1); c stands for the peak amplitude, and k and Θ collectively determine the shape and time of peak amplitude; see also Fig. 3A for examples of this function across different parameter combinations). The starting point of the response function was set to 200 ms past the event.

Fig. 3 Exemplary pupil response functions. A Illustrations of gamma probability density functions (Korn & Bach, 2016) and B Erlang gamma functions (Hoeks & Levelt, 1993) with different combinations of values for the free parameters. For both functions, an unchanged pupil size period of 200 ms is set and all RFs are normalized to a maximum value of 1 for illustration purposes. Θ and k in the gamma probability density function interactively control the timing and shape of the RF. In the Erlang gamma function, tmax exclusively controls the timing of the peak, and tmax and n control the shape/width

As the LTI1 reflected the sustained response to luminance change, the grand mean of pupil dilation was modeled by the cumulative sum of the first RF (c = 0.04, k = 14.1, Θ = 0.05; Fig. 2B). The second RF was approximated by another gamma probability density function to model the difference between constriction and dilation (Fig. 2C; c = 1.23, k = 4.7, Θ = 0.14). A weight to the LTI2 was fitted to determine the relative amplitude (weight = 1.003) of the second RF as compared with the first RF. The combination of the two RFs explained almost all variance in both the dilation (R2 = 0.97) and constriction (R2 = 0.94) patterns of the event-related pupil responses (Fig. 2D).

Evaluating pupil prediction with time series data. The two RFs were convolved with time-series data of all luminance changes and luminance increases per movie, respectively (for an example, see Fig. 2E), and were then combined to a weighted sum (Fig. 2F), which explained the variance in time-series of pupil size above chance, albeit much less so (mean of all participants: R2 = 0.197, SD = 0.059) than for the event-related responses. Note that the LTI1 contributed much more strongly to explaining variance (R2 = 0.191, SD = 0.0512) than the LTI2 (R2 = 0.046, SD = 0.022).

Alternative response function

Besides the gamma probability density function used in Korn and Bach (2016), the Erlang gamma function was previously proposed to fit event-related pupil responses well (Hoeks & Levelt, 1993). This function can be considered more intuitive because the timing of the peak amplitude is determined by a single parameter (formula (2)) rather than being the result of a complex interaction between two parameters as in the gamma probability density function.

The Erlang gamma function relies on two parameters: tmax, determining the timing of peak amplitude, and n, determining the shape/width of the pupil response function (Fig. 3B). A third multiplication parameter is added to this function to control the relative amplitude of the peak. After applying the Erlang gamma function to our data, the model performs comparably to the gamma probability density function (R2 = 0.197, SD = 0.058). As the Erlang gamma function is easier to interpret, we only adopted this function in the following models (results of models using the gamma probability density function are reported in Supplementary Table 1 as a complement; both response functions can be called in Open-DPSM).
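For orientation, the two response functions can be written out in their standard textbook forms, as sketched below. The formulas referenced in the text are not reproduced in this section, so these definitions (a c-scaled gamma density with shape k and scale Θ, and a peak-normalized Erlang function t^n · exp(−n·t/tmax)), the 200-ms shift, and the function names are assumptions and may differ in detail from the Open-DPSM implementation.

```python
import numpy as np
from math import gamma as gamma_fn

def gamma_pdf_rf(t, c, k, theta, delay=0.2):
    """c-scaled gamma probability density (shape k, scale theta),
    shifted so that it starts `delay` seconds after the event."""
    s = np.clip(np.asarray(t, dtype=float) - delay, 0.0, None)
    return c * s ** (k - 1) * np.exp(-s / theta) / (gamma_fn(k) * theta**k)

def erlang_rf(t, n, t_max, amplitude=1.0, delay=0.2):
    """Erlang gamma function, normalized to peak `amplitude` at delay + t_max."""
    s = np.clip(np.asarray(t, dtype=float) - delay, 0.0, None) / t_max
    return amplitude * s**n * np.exp(n * (1.0 - s))

t = np.arange(0, 3, 0.001)
g = gamma_pdf_rf(t, c=1.23, k=4.7, theta=0.14)   # peak time depends on k and theta
e = erlang_rf(t, n=10, t_max=0.5)                # peak time set by t_max alone
```

In the gamma density the peak falls at (k − 1)·Θ after the delay, so both parameters move it, whereas in the Erlang form the peak time is tmax regardless of n.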

Open-Dynamic Pupil Size Modeling (Open-DPSM)

We next introduce Open-DPSM, a convolutional model for pupil size changes to dynamic visual stimulation. Open-DPSM incorporates three major features discussed in the following sections in more detail: (1) Integration of a transient contrast-based pupil response in addition to a sustained response to luminance change; (2) Separate weights of different image regions in their contribution to pupil size changes; (3) Gaze-contingent feature extraction and modeling. For each step, a brief motivation is outlined, steps are described and model improvements are reported.

Integration of a contrast response function

The phenomenological “overshoot” in response to increases in luminance, which results in “undershoots” by modeled sustained luminance responses (Korn & Bach, 2016), can alternatively be interpreted as the orienting response of the pupil (Barbur et al., 1992; Gamlin et al., 1998; Hu et al., 2019; Kanari & Kaneko, 2021; Naber et al., 2011; Nakano et al., 2021; Slooter & van Norren, 1980; Young et al., 1995; see Strauch et al., 2022a for a review). Visual events, including changes in luminance, contrast, color, or any other visual feature, evoke a temporary constriction that scales with the salience of the event as shifts of attention (Wang et al., 2014; sometimes together with a small, faster dilation preceding the constriction). Such short-lasting constrictions can overlap with sustained responses to luminance changes. As such, we took luminance contrast (absolute value of luminance change) as a measure of visual change independent of the direction of luminance change (see Discussion for the potential impact of features beyond contrast). We hypothesized that both luminance increases and decreases would elicit a separate transient pupil component which would scale with the degree of luminance contrast change. It is important to note that pupil dilations to luminance decreases are also likely to contain such a contrast-dependent component (Barbur et al., 1992). However, its effect may not always be directly visible, as the overlapping, sustained dilation may conceal this transient constriction, although the latter may result in an apparently delayed pupil response. When the transient constriction surpasses the sustained dilation, a counterintuitive constriction in response to darkness can also emerge, which is consistent with such observations in rapid presentations of flashes with different illuminance levels (see Figure 8 in Korn & Bach, 2016, and Supplementary Fig. 2). We next extend the model based on this idea.

Modeling methods and results

This “contrast response model” differed from the modified version of Korn and Bach (2016) described above in two aspects. First, the second transient response function was modeled based on contrast and not used to model the “overshoot” in responses to luminance increases exclusively. Second, the amplitude of this response scaled with the degree of contrast change, as more salient events should evoke stronger pupil constrictions. As before, we first calculated event-related pupil responses to different magnitudes of sustained luminance changes. We binned the entire range of possible magnitudes of luminance changes into five separate bins using percentiles and calculated the average event-related pupil response per bin (Fig. 4A; 0–20: much darker; 21–40: slightly darker; 41–60: very small change or no change; 61–80: slightly brighter; 81–100: much brighter; see Supplementary Table 2 for the average and range of luminance change amount per bin).

Fig. 4 Contrast response model. A Event-related pupil responses binned to five levels of luminance changes. B Predicted event-related pupil responses with the RF (subplot) for sustained responses to luminance changes. C Predicted event-related pupil responses for transient responses to contrast changes. D Actual (solid) and predicted (dashed) event-related pupil responses, combining the two RFs in B and C with a weight. E Exemplary combined weighted prediction (dashed brown) and actual pupil size change (solid grey)

The five sustained luminance responses were then modeled with the cumulative RF (Fig. 4B). The contrast responses were modeled with a transient RF (Fig. 4C). Similar to the previous models, optimization was used to find the best-fitting parameters (RF for luminance change: n = 13.7, tmax = 0.28 s; RF for contrast change: n = 3.0, tmax = 0.53 s; relative weight of contrast change as compared with luminance change: 0.42; R2 = 0.893, Fig. 4D).

The two RFs were then convolved with luminance and contrast changes, respectively, and combined into a single weighted prediction (Fig. 4E). The model performance was evaluated in the same way with time-series data of all luminance changes, and the result outperformed the previous model (R2 = 0.242, SD = 0.069; t(14) = – 12.901, p < 0.001).
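The combination of the two components can be sketched as follows, using the fitted values reported above as defaults. The sign convention (constriction is negative), the omission of the 200-ms onset delay, the arbitrary output units, and the function names are simplifying assumptions made for this illustration only.

```python
import numpy as np

def erlang_rf(t, n, t_max):
    """Peak-normalized Erlang gamma function (onset delay omitted for brevity)."""
    s = t / t_max
    return s**n * np.exp(n * (1.0 - s))

def predict_pupil_change(lum_change, fs, w_contrast=0.42,
                         lum_rf=(13.7, 0.28), con_rf=(3.0, 0.53)):
    """Weighted sum of a sustained luminance response and a transient
    contrast response, in arbitrary units."""
    t = np.arange(0, 3, 1 / fs)
    n_samples = lum_change.size
    contrast = np.abs(lum_change)            # direction-independent visual change
    sustained = np.cumsum(np.convolve(lum_change, erlang_rf(t, *lum_rf))[:n_samples])
    transient = np.convolve(contrast, erlang_rf(t, *con_rf))[:n_samples]
    # Brightening and contrast both constrict the pupil, hence the minus sign.
    return -(sustained + w_contrast * transient)

lum = np.zeros(200)
lum[10] = 1.0                                # one luminance step at 0.4 s
bright = predict_pupil_change(lum, fs=25.0)  # step to brighter
dark = predict_pupil_change(-lum, fs=25.0)   # step to darker
```

In this sketch the contrast term pulls both traces toward constriction, so the response to darkening is never a mirror image of the response to brightening.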

Convolving RFs directly with luminance and contrast changes

The aforementioned models mandated a two-step procedure: (1) fit two RFs to the event-related pupil responses and (2) convolve the RFs with time series of visual events to predict continuous pupil size changes. Although the contrast response model described above already outperformed the polynomial model and the extended Korn and Bach (2016) model, several constraints remained. Firstly, the detection of events required arbitrary luminance thresholds and an arbitrary number of bins that segregates the range of luminance levels. Secondly, this method cannot account for covariations and interactions between effects of parallel visual events (i.e., luminance and contrast). This would also impede later model extensions for other types of visual events (see Discussion; Gamlin et al., 1998; Kimura & Young, 1995; Oster et al., 2022; Portengen et al., 2023a; Young et al., 1993). Subsequently, we modeled continuous pupil size data using the raw rather than threshold-dependent time-series data of luminance and contrast changes and fitted the RFs directly to time-series data instead of fitting RFs a priori to event-related pupil responses (Fig. 5A, B). This direct convolution of visual events with RFs would also allow for more flexibility in other manipulations, such as assigning weights to denote relative contributions of visual field regions (see next model). We hereby refer to this modeling procedure as “temporally continuous” and the previous step-wise modeling as “temporally discrete”.

Fig. 5 Temporally continuous modeling method. A Two exemplary RFs to model luminance and contrast change each with two free parameters convolved with B the luminance (purple) and contrast changes (green) over time. Note that the convolutional result for luminance changes was cumulated, as in Fig. 1D (blue line). C Prediction (dashed brown) and actual pupil data (solid grey)

The two separate response functions were directly convolved with each trace of luminance and contrast changes, respectively, and parameters were optimized as before. Apart from the direct convolution of time-series data, this method differed from the previous fitting procedure in two ways. Firstly, since no thresholding or cutoff was required, a larger amount of data was available, and hence, RFs were fitted per participant and results could be compared across participants (see Supplementary Fig. 3A, B for selected parameters in RFs across all participants). Secondly, as the parameters of the RFs were determined by fitting the time-series data directly and the fitted result cannot be visually examined, we used a repeated cross-validation procedure to avoid overfitting and ensure the robustness of the model. The twenty trials of each participant were divided randomly into training (70%) and testing sets (30%) and this procedure was repeated over five iterations. All following models maintain those adaptations, and the average results of only the testing sets across the five folds are reported.
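The repeated random split can be sketched with the standard library alone. The function name, the fixed seed, and the use of independent random splits per repetition are assumptions; the text does not state how the splits were drawn beyond the 70/30 proportion and the five repetitions.

```python
import random

def repeated_splits(n_trials=20, train_fraction=0.7, n_repeats=5, seed=0):
    """Return `n_repeats` random (train, test) partitions of the trial indices."""
    rng = random.Random(seed)
    n_train = round(n_trials * train_fraction)
    splits = []
    for _ in range(n_repeats):
        order = list(range(n_trials))
        rng.shuffle(order)
        splits.append((sorted(order[:n_train]), sorted(order[n_train:])))
    return splits

splits = repeated_splits()
# For each split: fit the RF parameters on the training trials, then score
# the prediction on the held-out trials and average the test scores.
```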

Model performance again improved significantly (R2 = 0.261, SD = 0.069; t(14) = 3.432, p = 0.004).

Regionally weighted

The influence of visual events on pupil size changes depends on the location of their appearance in the visual field, a phenomenon termed a (pupillary) visual field anisotropy (e.g., Ferree et al., 1933; Kardon et al., 1991; Naber & Nakayama, 2013; Portengen et al., 2021). More pronounced pupil responses to visual events falling on the fovea compared to the periphery and the upper visual field compared to the lower visual field have been found in many studies (Strauch et al., 2022b). However, the exact relationship between the locations of visual events in the visual field and the amplitude of pupillary responses is more complicated. For instance, Thurman et al. (2021) found a context-dependent anisotropy (i.e. “blue sky effect”), showing an enhanced pupillary response to blue light in the upper regions of the visual field.

To account for potential anisotropies, we split the visual field into 8 × 6 rectangular and equally sized regions (see Fig. 6A for visual degrees of each region). The number of regions was chosen to balance the trade-off between spatial resolution and computational power. Visual events were extracted for each region separately. Five free parameters were added as regional weights, accounting for relative contributions of the top-middle, top-peripheral, bottom-central, bottom-middle, and bottom-peripheral parts (see W1–W6 in Fig. 6A; the top-central weight, W1, was set to 1). Luminance and contrast changes per region were convolved with the RFs and then multiplied by the respective regional weights.
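The regional split can be sketched as a block average over a grid, assuming 8 columns by 6 rows and a single-channel luminance frame. How the six weights W1–W6 map onto the 48 cells is defined in Fig. 6A and is not reproduced here, so the uniform `weight_map` below is only a placeholder, and all names are hypothetical.

```python
import numpy as np

def regional_means(frame, n_rows=6, n_cols=8):
    """Mean value of each cell in an n_rows x n_cols grid over a 2-D frame."""
    h, w = frame.shape
    frame = frame[: h - h % n_rows, : w - w % n_cols]   # crop to a divisible size
    cells = frame.reshape(n_rows, frame.shape[0] // n_rows,
                          n_cols, frame.shape[1] // n_cols)
    return cells.mean(axis=(1, 3))

# Toy frame in which every grid cell has its own constant value.
cell_values = np.arange(48.0).reshape(6, 8)
frame = np.kron(cell_values, np.ones((3, 5)))           # shape (18, 40)
means = regional_means(frame)

# Placeholder weights: one value per cell; the actual layout follows Fig. 6A.
weight_map = np.ones((6, 8))
weighted_total = float((means * weight_map).sum())
```

Applying `regional_means` to consecutive frames gives one luminance-change series per region, each of which can then be convolved with the RFs before the regional weights are applied.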

Fig. 6 Regional weights of visual events across the visual field. A Regional weights and visual angles on the 48 regions. B Weights as fitted in the model, consistent with expected vertical and eccentric anisotropies. Each point represents a participant. C Illustration of the mapped regional weights averaged across participants. Darker means stronger weights. D Same illustration as in A but for the regional weights and visual angles of the gaze-contingent coordinate system. The red point represents the gaze position at this particular frame

Incorporating regional weights significantly improved model performance (R2 = 0.302, SD = 0.085; t(14) = -5.425, p < 0.001) compared with the contrast response model. Weights selected by the model were consistent with expected visual field anisotropies: central image regions contributed more to the pupil dynamics than peripheral regions, and top regions contributed more than bottom regions (see Fig. 6B, C). A two-way repeated-measures ANOVA showed significant main effects for the presence of vertical (top versus bottom; F(1,84) = 5.288, p = 0.024) and eccentric (central/middle/peripheral; F(2,84) = 73.268, p < 0.001) asymmetries, but no interaction (F(2,84) = 0.210, p = 0.811). Post hoc Tukey HSD tests showed significant differences in weights between peripheral and middle, and between middle and central regions (all p < 0.001).

Gaze contingency

Unrestricted eye movements would inherently result in a mismatch of the relative positions of the video image and the retina at each time point. For example, when the participant looks towards the top left (see Fig. 6D), the center of the video image no longer falls on the center of the retina. Furthermore, as most screens cannot completely cover the whole visual field of the participant, fixations adjacent to the screen's edges lead to more parafoveal stimulation by the background surrounding the monitor (see black bars surrounding movie images in Fig. 6D). We therefore incorporated gaze position in visual event extraction with a gaze-contingent (retinal) coordinate system representing the actual image falling upon the retina. To create this gaze-contingent coordinate system, a black rectangle 1.5 times larger than the size of the screen and with the same aspect ratio was created, representing the background surrounding the screen. The relative size of the background to the screen was chosen to ensure that the image of the screen stayed inside the borders of the background most of the time for relative peripheral fixations. Each image was then relocated in the gaze-contingent coordinate system by aligning the gaze position at the time of the frame to the center of the new coordinate system.
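The relocation step can be sketched as follows. This is a minimal illustration under stated assumptions, not the toolkit's code: frames are represented as nested lists of pixel values, gaze coordinates are assumed to be in frame pixels with the origin at the top-left corner, and the function name gaze_contingent_frame is hypothetical.

```python
def gaze_contingent_frame(frame, gaze_x, gaze_y, scale=1.5, background=0):
    """Place a frame on a larger black canvas so that gaze lands at its centre.

    frame: list of rows, each a list of pixel values.
    gaze_x, gaze_y: gaze position in frame pixels (assumed top-left origin).
    scale: canvas size relative to the frame (1.5, as described in the text).
    """
    height, width = len(frame), len(frame[0])
    canvas_h = int(round(height * scale))
    canvas_w = int(round(width * scale))
    canvas = [[background] * canvas_w for _ in range(canvas_h)]

    # Offset that moves the gazed-at pixel to the canvas centre.
    off_x = canvas_w // 2 - int(round(gaze_x))
    off_y = canvas_h // 2 - int(round(gaze_y))

    for y in range(height):
        cy = y + off_y
        if not 0 <= cy < canvas_h:
            continue  # this row falls outside the canvas
        for x in range(width):
            cx = x + off_x
            if 0 <= cx < canvas_w:
                canvas[cy][cx] = frame[y][x]
    return canvas
```

With very peripheral fixations, part of the frame can fall outside the canvas and is clipped in this sketch, which corresponds to the remark that the screen image stays inside the background borders only most of the time.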

This resulted in a descriptive but non-significant improvement (R2 = 0.327, SD = 0.087; t(14) = 1.92, p = 0.08) compared with the regionally weighted model. Again, the selected regional weights supported the presence of anisotropies (foveal (central) > parafoveal (middle) > peripheral; top > bottom; see Supplementary Fig. 4C, D).

Model evaluation and comparison

To evaluate model performance, we calculated R2 for each step in model development (see a summary of R2 for all steps in Fig. 7; see also Supplementary Table 1 for correlation coefficients and RMSE). The gaze-contingent model incorporating all described steps outperformed all other models. To further compare models, we used the Bayesian information criterion (BIC), which accounts for parsimony (i.e., explained variance relative to model complexity set by the number of free parameters). Note that BIC could only be meaningfully calculated for the last three models, as the split of training and testing data (i.e., cross-validation) resulted in a different sample size relative to the discrete event-related fitting approach. The decreasing BIC values over the last three models (see Supplementary Table 1) suggested meaningfully improved model performance despite higher complexity. In summary, we developed Open-DPSM with a “temporally continuous modeling” approach for better prediction of pupil size change to our dynamic and complex stimuli, and we improved model predictions by incorporating (i) a scaled contrast response function instead of an undershoot-correction function, (ii) regional weights, and (iii) a gaze-contingent coordinate system.
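For readers unfamiliar with BIC, the trade-off between fit and complexity can be sketched with one common formulation for least-squares fits with Gaussian residuals. The text does not state which exact form was used here, so the formula and the function name bic_gaussian below are assumptions for illustration only.

```python
import math

def bic_gaussian(observed, predicted, n_params):
    """BIC for a least-squares fit, assuming Gaussian residuals.

    Uses BIC = n * ln(RSS / n) + k * ln(n); lower values indicate a better
    balance between goodness of fit and the number of free parameters k.
    """
    n = len(observed)
    rss = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    return n * math.log(rss / n) + n_params * math.log(n)
```

Under this form, adding free parameters lowers BIC only if the reduction in residual error outweighs the penalty of ln(n) per added parameter.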

Explained variance per model. Boxplots show incremental improvements per modeling step. Single points, connected by dashed lines across models, illustrate model performance per participant. Shaded distributions on the right show smoothed density functions (histograms) of explained variance per model with corresponding colors (*** p < 0.001; • p = 0.08)

To replicate our findings, we further evaluated model performance on a different dataset (Gestefeld et al., 2020). Here, the movies used in our experiment were tested together with many other clips of similar length with a different group of participants and a different lab with a smaller monitor. The pattern of results fell in line with the findings reported above (see Supplementary Material “Replication of findings with different data (Gestefeld et al., 2020)” for details).

Python package (Open-DPSM.py)

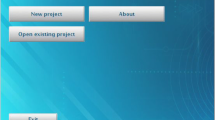

Open-DPSM can be downloaded via https://github.com/caiyuqing/Open-DPSM. It has been tested on Microsoft Windows 2012 R2 with Spyder (version 5), Jupyter Notebook (6.4.5)/JupyterLab (3.2.1), and PyCharm (2013.1.4), using Python version 3.9.7. The main Open-DPSM script (main.py) contains two classes of functions to perform visual event extraction (event_extraction.py) and pupil prediction (pupil_prediction.py) as well as one class for interactive plotting of results (interactive_plot.py). Another script (settings.py) allows users to set default parameters (e.g., number of image regions, size of the gaze-contingent coordinate system, gamma value of the monitor, etc.), which can be adjusted by experienced users. For users who prefer a graphical user interface (GUI), the script main_app.py activates the Open-DPSM GUI, which can conduct all modeling steps in a user-friendly manner (Fig. 8).

Screenshots of the Open-DPSM GUI. A Screenshot of the GUI page of Visual event extraction & pupil prediction. B Screenshot of the interactive plotting GUI. To start the GUI, run main_app.py. Video (and optional eye-tracking data) are loaded on the welcome page (not depicted), and relevant context information should be entered by the users in the GUI shown in panel A. The main functions, event_extraction() and pupil_prediction(), can be called via the buttons shown in panel A. Model performance is output on the left side of the screen. A click on “interactive plot” opens the screen shown in panel B with the data traces of gaze positions, extracted visual events, and both predicted and observed pupil size over time. Dragging the slider, shown at the bottom of panel B, allows users to jump to a specific time point and video frame

classes.event_extraction

This class of functions serves to extract the timing and strength of visual events (luminance and contrast changes) from an input video file. The resulting event trace is needed later for convolution to make a pupil prediction. The function event_extraction() extracts visual events (e.g., luminance changes) frame by frame from video files (.mp4, .avi, .mkv, .wmv, .mov, .flv, .webm) per image region. When an eye-tracking data file (.csv) with timestamps and gaze coordinates is provided, gaze-contingent visual events are extracted. Gaze data is automatically downsampled to the video sampling rate. If no eye-tracking data file is provided, screen-based visual events are extracted instead. The function outputs video information (resolution, frame rate, etc.), timestamps, and event traces per image region, which are saved in a pickle file named “[movieName]_[subjectName]_VF_LAB_6X8.pickle” in a “Visual events” folder.
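To illustrate what downsampling gaze data to the video sampling rate can look like, the following sketch averages all gaze samples that fall within each frame's time window. The toolkit's actual downsampling method is not described in the text, so the averaging strategy, the assumption that timestamps are in seconds relative to video onset, and the function name downsample_gaze are illustrative only.

```python
import math

def downsample_gaze(timestamps, xs, ys, frame_rate, n_frames):
    """Average gaze samples per video frame.

    timestamps: sample times in seconds relative to video onset (assumed).
    Returns one (x, y) tuple per frame, or None for frames without samples.
    """
    sums = [[0.0, 0.0, 0] for _ in range(n_frames)]
    for t, x, y in zip(timestamps, xs, ys):
        idx = math.floor(t * frame_rate)  # frame during which the sample occurred
        if 0 <= idx < n_frames:
            sums[idx][0] += x
            sums[idx][1] += y
            sums[idx][2] += 1
    return [(sx / n, sy / n) if n else None for sx, sy, n in sums]
```

Frames that return None (e.g., during blinks or tracking loss) would need separate handling, such as interpolation or exclusion, before gaze-contingent event extraction.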

classes.pupil_prediction

This class of functions serves to model pupil size from the extracted visual events by fitting two response functions, which are convolved with the visual events extracted by event_extraction(). Without eye-tracking data, a predicted pupil trace is generated based on the average pupil response functions calculated from the data described in this manuscript (see “Integration of a contrast response function”). When eye-tracking data is available, model optimization is performed, and the best-fitting parameters for the response functions and regional weights are saved, once fitting has finished, in a “csv results” folder as a .csv file named “[movieName]_[subjectName]_parameters.csv”. Model performance (correlation and RMSE) is saved in the same file. The file contains two columns, with the names of the parameters and their values, respectively. Actual and predicted pupil size are saved in another .csv file in the same folder, named “[movieName]_[subjectName]_modelPrediction.csv”. Predicted pupil size (z-standardized) is provided in three columns: one for the combined prediction with both luminance and contrast change, one for the prediction with luminance change only, and one for the prediction with contrast change only.
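Once actual and predicted traces are available, the reported performance measures and a residual trace (actual minus predicted) can be computed along the following lines. This is a hedged sketch rather than the toolkit's code: the function names are hypothetical, and z-standardizing both traces before subtraction is an assumption based on the predicted trace being saved in z-standardized form.

```python
import math

def zscore(values):
    """z-standardise a trace using the population standard deviation."""
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    return [(v - mean) / sd for v in values]

def pearson_r(a, b):
    """Pearson correlation between two traces of equal length."""
    za, zb = zscore(a), zscore(b)
    return sum(x * y for x, y in zip(za, zb)) / len(a)

def rmse(a, b):
    """Root-mean-square error between two traces of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))

def residual_trace(actual, predicted):
    """Pupil trace after subtracting the predicted low-level response."""
    return [x - y for x, y in zip(zscore(actual), zscore(predicted))]
```

The residual trace is the part of the measured signal that the low-level visual model does not account for, which is where cognitive modulations would be expected to show up.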

classes.interactive_plot

This class of functions can be used to interactively plot results of event_extraction() and pupil_prediction(). interactive_plot() produces three subplots as in Fig. 8B, one each for gaze position, visual events extracted via event_extraction(), and the trace of predicted and actual pupil size changes. Subplots can be saved as individual files using the interface. Specific frames can be selected using a slider.

Discussion

Pupil size changes are the integrated outcome of several underlying factors, encompassing effects of changes in a range of low-level sensory features, but also higher-order cognition. To isolate the cognitive effects from the sensory effects, many studies have opted for quantifying the expected influence of the pupil light response (PLR), a primary contributor to pupil size change. Thus far, only a few studies have adopted dynamic models of the PLR to estimate pupil size change during the presentation of complex dynamic stimuli (Fanourakis & Chanel, 2022; Napieralski & Rynkiewicz, 2019). However, those studies did not consider low-level features other than luminance, and either disregarded visual field anisotropies or coarsely controlled for them by focusing exclusively on visual events falling on foveal regions.

We here introduce Open-DPSM, an open-source toolkit that enables not only the prediction of pupil size with dynamically changing stimulus materials but also the extraction of visual events from videos. What are Open-DPSM’s key features? (i) Open-DPSM uses a parsimonious convolutional method with simple gamma functions to model pupil responses; (ii) Open-DPSM models pupillary dynamics more accurately by incorporating a separate transient for orienting responses that scale with contrast changes; (iii) Open-DPSM builds on a realistic representation of visual input to pupil size changes by weighing the relative contribution of visual events across the visual field contingent to gaze positions – and incorporates the cortical magnification factor of foveal input. (iv) Open-DPSM is a publicly available tool that provides accessible and open-source Python functions for modeling pupil size while offering high flexibility for future adaptations.

Theoretical implications

The contrast response model demonstrates that pupil responses to changes in luminance reflect more than just a simple change to light. Korn and Bach (2016) highlighted the importance of modeling pupil size changes with a dual-component model, and we here demonstrate that a pupil response is a combination of the responses to light (dilations to dark events, constrictions to bright events) and contrast (constrictions that scale with contrast). This second component is therefore beyond a phenomenological ‘overshoot’ of a pupil light response, but part of an orienting response to salient (high contrast) events (Barbur et al., 1992; Gamlin et al., 1998; Hu et al., 2019; Kanari & Kaneko, 2021; Naber et al., 2011; Nakano et al., 2021; Slooter & van Norren, 1980; Young et al., 1995; for a review, see Strauch et al., 2022a), which is likely modulated by attention (Koevoet et al., 2023; Naber et al., 2013; Strauch et al., 2022b).

Future work and model extensions

Open-DPSM is designed for seamless integration of additional event streams to achieve higher modeling performance. Whilst the current model only took contrast into account, further low-level visual features, such as changes in color (Barbur et al., 1992), spatial frequency (Barbur & Thomson, 1987; Young et al., 1995), and orientation (Hu et al., 2019) can be incorporated easily. This opens up new avenues for studying low-level visual features with dynamic stimuli – allowing for the decomposition of contributing factors. Further improvements and insights are plausible here, as such features are characterized by distinct response properties (Young et al., 1993; Young & Kennish, 1993). Furthermore, different transformations of low-level features, such as Michelson Contrast (Michelson, 1927), may result in an improved model of pupillary changes (Sandoval Salinas et al., 2020; Wang et al., 2014). As illustrated in previous work (e.g. de Gee et al., 2014; Denison et al., 2020; Korn & Bach, 2016; Lempert et al., 2015; Willems et al., 2015), the same convolutional approach can also be used to model cognitive events. Open-DPSM could, in principle, be applied to model cognitive events given an appropriate event trace, but further evaluation is required.

Our data support the finding that pupil responses to luminance changes are stronger in the central and top than in the peripheral and bottom parts of the visual field (Istiqomah et al., 2022; Strauch et al., 2022b). It is worth mentioning that the current rectangular grid, which evenly divides the visual field into subregions, is a first, but coarse, representation of the visual field. Future versions of Open-DPSM should, however, model the visual field as an elliptical (or distorted elliptical) shape (Anderson et al., 2014; Baldwin et al., 2012; Engel, 1977) and take into account the cortical magnification factor. Moreover, the current model also simplified anisotropies by applying the same set of regional weights to both luminance and contrast responses. Different asymmetries across the visual field have been described for a range of visual features (Thurman et al., 2021). Such models could also be used to study relative regional contributions of low-level features to the pupil light response, but also attention and vision more broadly.

In addition, many factors that contribute to (estimated) pupil size changes have not yet fully been explored. For instance, distortions of estimated pupil size resulting from changing angles between camera and eye as the eyes move should be accounted for using foreshortening error corrections (Gagl et al., 2011; Hayes & Petrov, 2016; see Korn et al., 2017 for an implementation). Other factors such as pupil size change before and during saccades (Koevoet et al., 2023; Wang et al., 2015), pupil responses to different durations or temporal frequencies of changes in visual features, pupil responses to depth (pupil near response; Pielage et al., 2022), and pupil responses to changes in other sensory domains (e.g., body movements and audition; Van der Stoep et al., 2021) are also expected to influence pupil size changes, which will need to be incorporated in future versions of the current model to explain even more variance in pupil dynamics.

Use cases of Open-DPSM

Pupillometry has widespread applications in research and practice (see Binda & Gamlin, 2017; Einhäuser, 2017; Mathôt, 2018; Strauch et al., 2022a for reviews). How can Open-DPSM help researchers and practitioners alike? In neurology/ophthalmology, pupil light responses serve as an objective indicator for the dysfunction of vision. Open-DPSM is especially promising for the diagnosis of spatial-attentional disorders such as visual field defects and hemispatial neglect (Lasaponara et al., 2021; Naber et al., 2018; Portengen et al., 2023b; Ten Brink et al., 2023), as it can map the responsivity of the pupil across space, which is, in turn, indicative of visuoattentional deficits. This would be particularly beneficial for perimetric testing of special populations such as children and brain-injured patients, as testing could be done highly automatized and with engaging/naturalistic stimuli, and substantial data can be collected within a short period (Gestefeld et al., 2020, 2021). The application may also extend to the measurement of the sensitivity of other low-level features, such as contrast sensitivity (Hernández et al., 1996; Slooter, 1981; Slooter & van Norren, 1980). In psychology/affective and cognitive neuroscience (but also beyond), pupil dilation is used to study cognitive processes and respective neural underpinnings, such as the effects of mental effort (McLaughlin & Van Engen, 2020; Van Der Meer et al., 2010; Zekveld & Kramer, 2014), emotional processing or regulation (Bradley et al., 2008; Henderson et al., 2014; Kinner et al., 2017; Koevoet et al., 2023; Võ et al., 2008; Wetzel et al., 2020; Zimmermann & Bach, 2020) and attention (Binda et al., 2014; Denison et al., 2020; Naber et al., 2013). Thus far, stimuli highly controlled for low-level features are usually adopted to prevent confounding effects of those features. Open-DPSM can provide an estimate of expected pupil size changes to stimulus material. 
Previous studies have shown that despite maintaining constant global luminance, variations in local regions of the stimuli can still exert an influence on pupil size, such as luminance differences in the face areas (Laeng et al., 2018) or relative size of bright versus dark regions in the eye (Derksen et al., 2018). Open-DPSM can generate predictions of how pupil size would change with given stimuli and thereby enable researchers to estimate the variance that can be attributed to the stimuli themselves. This would facilitate the examination of potential confounds of low-level design issues in pupillometric studies. Furthermore, Open-DPSM accommodates complex and dynamically changing stimuli as input material to leverage the high sensitivity of pupillometry to cognitive processes – in lab settings or in real-world applications. Previous studies using dynamic stimuli, such as movie-watching (Raiturkar et al., 2016; Soleymani et al., 2012), video-gaming (Fanourakis & Chanel, 2022; Mitre-Hernandez et al., 2021), or driving (Kerautret et al., 2021; Pedrotti et al., 2014; Vintila et al., 2017), adopted different methods to compensate for the effects of luminance. It has been found that modeling the PLR is more advantageous in the sense that it quantifies the effectiveness of separating effects reflected in the pupil (Fanourakis & Chanel, 2022; Wong et al., 2020). In addition, Open-DPSM provides estimations of temporal properties for pupil responses, such as latency and timing of peak amplitude (Denison et al., 2020), which are especially important for the investigation of cognitive events.

Conclusion

We introduce Open-DPSM, an openly available toolkit that offers a robust and flexible approach to model pupil size changes to dynamic visual stimuli. Its incorporation of multiple visual events, regional weights, and gaze-contingent visual event extraction provides new insights into the effects of visual input on pupil size and visual processing and can pave the way for assessing and using attentionally modulated pupil size changes outside highly constrained lab settings. The availability of Open-DPSM as an open-source package enhances its accessibility and potential for further advancements in many fields of research.

Data availability

All data are available via https://osf.io/qvn64/. As our stimuli are a subset of stimuli from Gestefeld et al. (2020), experimental materials can be downloaded directly from their open dataset: https://dataverse.nl/dataset.xhtml;jsessionid=f84f78bc461ba0a539430ae788cd?persistentId=doi%3A10.34894%2FLEYVL8&version=&q=&fileTypeGroupFacet=%22Document%22&fileAccess=&fileSortField=type

Notes

To avoid local minima in fitting, we also tested the “basinhopping” function from the same package with 20 iterations. Model performance was similar, but the fitting time increased significantly. Hence, all the reported results used “minimizing” with a fixed starting point. Both options are provided in the toolbox.

References

Anderson, J. E., Leslie Cameron, E., & Levine, M. W. (2014). A method for quantifying visual field inhomogeneities. Vision Research, 105, 112–120. https://doi.org/10.1016/j.visres.2014.09.010

Baldwin, A. S., Meese, T. S., & Baker, D. H. (2012). The attenuation surface for contrast sensitivity has the form of a witch’s hat within the central visual field. Journal of Vision, 12(11), 23. https://doi.org/10.1167/12.11.23

Barbur, J. L., Harlow, A. J., & Sahraie, A. (1992). Pupillary responses to stimulus structure, colour and movement. Ophthalmic and Physiological Optics, 12(2), 137–141. https://doi.org/10.1111/j.1475-1313.1992.tb00276.x

Barbur, J. L., & Thomson, W. D. (1987). Pupil response as an objective measure of visual acuity. Ophthalmic and Physiological Optics, 7(4), 425–429. https://doi.org/10.1111/j.1475-1313.1987.tb00773.x

Binda, P., & Gamlin, P. D. (2017). Renewed attention on the pupil light reflex. Trends in Neurosciences, 40(8), 455–457. https://doi.org/10.1016/j.tins.2017.06.007

Binda, P., Pereverzeva, M., & Murray, S. O. (2014). Pupil size reflects the focus of feature-based attention. Journal of Neurophysiology, 112(12), 3046–3052. https://doi.org/10.1152/jn.00502.2014

Bradley, M. M., Miccoli, L., Escrig, M. A., & Lang, P. J. (2008). The pupil as a measure of emotional arousal and autonomic activation. Psychophysiology, 45(4), 602–607. https://doi.org/10.1111/j.1469-8986.2008.00654.x

Cooper, E. A., Jiang, H., Vildavski, V., Farrell, J. E., & Norcia, A. M. (2013). Assessment of OLED displays for vision research. Journal of Vision, 13(12), 16. https://doi.org/10.1167/13.12.16

David-John, B., Raiturkar, P., Banerjee, A., & Jain, E. (2018). An evaluation of pupillary light response models for 2D screens and VR HMDs (p. 11). https://doi.org/10.1145/3281505.3281538

de Gee, J. W., Knapen, T., & Donner, T. H. (2014). Decision-related pupil dilation reflects upcoming choice and individual bias. Proceedings of the National Academy of Sciences, 111(5), E618–E625. https://doi.org/10.1073/pnas.1317557111

Denison, R. N., Parker, J. A., & Carrasco, M. (2020). Modeling pupil responses to rapid sequential events. Behavior Research Methods, 52(5), 1991–2007. https://doi.org/10.3758/s13428-020-01368-6

Derksen, M., van Alphen, J., Schaap, S., Mathot, S., & Naber, M. (2018). Pupil mimicry is the result of brightness perception of the iris and pupil. Journal of Cognition, 1(1), 32. https://doi.org/10.5334/joc.34

Einhäuser, W. (2017). The Pupil as Marker of Cognitive Processes. In Q. Zhao (Ed.), Computational and Cognitive Neuroscience of Vision (pp. 141–169). Springer. https://doi.org/10.1007/978-981-10-0213-7_7

Engel, F. L. (1977). Visual conspicuity, visual search and fixation tendencies of the eye. Vision Research, 17(1), 95–108. https://doi.org/10.1016/0042-6989(77)90207-3

Fan, X., & Yao, G. (2011). Modeling Transient Pupillary Light Reflex Induced by a Short Light Flash. IEEE Transactions on Bio-Medical Engineering, 58(1), 36–42. https://doi.org/10.1109/TBME.2010.2080678

Fanourakis, M., & Chanel, G. (2022). Attenuation of the dynamic pupil light response during screen viewing for arousal assessment. Frontiers in Virtual Reality, 3. https://www.frontiersin.org/articles/10.3389/frvir.2022.971613

Ferree, C. E., Rand, G., & Harris, E. T. (1933). Intensity of light and area of illuminated field as interacting factors in size of pupil. Journal of Experimental Psychology, 16(3), 408–422.

Gagl, B., Hawelka, S., & Hutzler, F. (2011). Systematic influence of gaze position on pupil size measurement: Analysis and correction. Behavior Research Methods, 43(4), 1171–1181. https://doi.org/10.3758/s13428-011-0109-5

Gamlin, P. D. R., Zhang, H., Harlow, A., & Barbur, J. L. (1998). Pupil responses to stimulus color, structure and light flux increments in the rhesus monkey. Vision Research, 38(21), 3353–3358. https://doi.org/10.1016/S0042-6989(98)00096-0

Gestefeld, B., Grillini, A., Marsman, J.-B. C., & Cornelissen, F. W. (2020). Using natural viewing behavior to screen for and reconstruct visual field defects. Journal of Vision, 20(9), 11. https://doi.org/10.1167/jov.20.9.11

Gestefeld, B., Marsman, J.-B., & Cornelissen, F. (2021). How free-viewing eye movements can be used to detect the presence of visual field defects in glaucoma patients. Frontiers in Medicine, 8, 689910. https://doi.org/10.3389/fmed.2021.689910

Hayes, T. R., & Petrov, A. A. (2016). Mapping and correcting the influence of gaze position on pupil size measurements. Behavior Research Methods, 48(2), 510–527. https://doi.org/10.3758/s13428-015-0588-x

Henderson, R. R., Bradley, M. M., & Lang, P. J. (2014). Modulation of the initial light reflex during affective picture viewing. Psychophysiology, 51(9), 815–818. https://doi.org/10.1111/psyp.12236

Hernández, C., Doménech, B., Seguí, M. M., & Illueca, C. (1996). The effect of pupil and observation distance on the contrast sensitivity function. Ophthalmic and Physiological Optics, 16(4), 336–341. https://doi.org/10.1016/0275-5408(95)00116-6

Hoeks, B., & Levelt, W. J. M. (1993). Pupillary dilation as a measure of attention: A quantitative system analysis. Behavior Research Methods, Instruments, & Computers, 25(1), 16–26. https://doi.org/10.3758/BF03204445

Hu, X., Hisakata, R., & Kaneko, H. (2019). Effects of spatial frequency and attention on pupillary response. JOSA A, 36(10), 1699–1708. https://doi.org/10.1364/JOSAA.36.001699

International Commission on Illumination. (2004). CIE 15: Technical Report: Colorimetry, 3rd edition. http://archive.org/details/gov.law.cie.15.2004

Intraub, H. (1981). Rapid conceptual identification of sequentially presented pictures. Journal of Experimental Psychology: Human Perception and Performance, 7(3), 604–610. https://doi.org/10.1037/0096-1523.7.3.604

Istiqomah, N., Suzuki, Y., Kinzuka, Y., Minami, T., & Nakauchi, S. (2022). Anisotropy in the peripheral visual field based on pupil response to the glare illusion. Heliyon, 8(6), e09772. https://doi.org/10.1016/j.heliyon.2022.e09772

Kanari, K., & Kaneko, H. (2021). Pupil response is modulated with optokinetic nystagmus in transparent motion. JOSA A, 38(2), 149–156. https://doi.org/10.1364/JOSAA.409940

Kang, O., & Wheatley, T. (2015). Pupil dilation patterns reflect the contents of consciousness. Consciousness and Cognition, 35, 128–135. https://doi.org/10.1016/j.concog.2015.05.001

Kardon, R. H., Kirkali, P. A., & Thompson, H. S. (1991). Automated pupil perimetry pupil field mapping in patients and normal subjects. Ophthalmology, 98(4), 485–496. https://doi.org/10.1016/S0161-6420(91)32267-X

Kerautret, L., Navarro, J., Dabic, S., & Navarro, J. (2021). Sensitivity of physiological measures of acute driver stress: A meta-analytic review. Frontiers in Neuroergonomics, 2. https://doi.org/10.3389/fnrgo.2021.756473

Kimura, E., & Young, R. S. L. (1995). Nature of the pupillary responses evoked by chromatic flashes on a white background. Vision Research, 35(7), 897–906. https://doi.org/10.1016/0042-6989(94)00188-R

Kinner, V. L., Kuchinke, L., Dierolf, A. M., Merz, C. J., Otto, T., & Wolf, O. T. (2017). What our eyes tell us about feelings: Tracking pupillary responses during emotion regulation processes: Pupillary responses during emotion regulation. Psychophysiology, 54(4), 508–518. https://doi.org/10.1111/psyp.12816

Knapen, T., de Gee, J. W., Brascamp, J., Nuiten, S., Hoppenbrouwers, S., & Theeuwes, J. (2016). Cognitive and ocular factors jointly determine pupil responses under equiluminance. PLOS ONE, 11(5), e0155574. https://doi.org/10.1371/journal.pone.0155574

Koevoet, D., Naber, M., Strauch, C., Somai, R. S., & Van der Stigchel, S. (2023). Differential aspects of attention predict the depth of visual working memory encoding: Evidence from pupillometry. Journal of Vision, 23(6), 9. https://doi.org/10.1167/jov.23.6.9

Korn, C. W., & Bach, D. R. (2016). A solid frame for the window on cognition: Modeling event-related pupil responses. Journal of Vision, 16(3), 28. https://doi.org/10.1167/16.3.28

Korn, C. W., Staib, M., Tzovara, A., Castegnetti, G., & Bach, D. R. (2017). A pupil size response model to assess fear learning: Pupil responses and fear conditioning. Psychophysiology, 54(3), 330–343. https://doi.org/10.1111/psyp.12801

Laeng, B., Kiambarua, K. G., Hagen, T., Bochynska, A., Lubell, J., Suzuki, H., & Okubo, M. (2018). The “face race lightness illusion”: An effect of the eyes and pupils? PLoS ONE, 13(8), e0201603. https://doi.org/10.1371/journal.pone.0201603

Lasaponara, S., Fortunato, G., Conversi, D., Pellegrino, M., Pinto, M., Collins, D. L., … Doricchi, F. (2021). Pupil dilation during orienting of attention and conscious detection of visual targets in patients with left spatial neglect. Cortex, 134, 265–277. https://doi.org/10.1016/j.cortex.2020.10.021

Lempert, K. M., Chen, Y. L., & Fleming, S. M. (2015). Relating pupil dilation and metacognitive confidence during auditory decision-making. PLOS ONE, 10(5), e0126588. https://doi.org/10.1371/journal.pone.0126588

Longtin, A., & Milton, J. G. (1989). Modelling autonomous oscillations in the human pupil light reflex using non-linear delay-differential equations. Bulletin of Mathematical Biology, 51(5), 605–624. https://doi.org/10.1016/S0092-8240(89)80103-X

Mathôt, S. (2018). Pupillometry: Psychology, physiology, and function. Journal of Cognition, 1(1), 16. https://doi.org/10.5334/joc.18

McLaughlin, D. J., & Van Engen, K. J. (2020). Task-evoked pupil response for accurately recognized accented speech. The Journal of the Acoustical Society of America, 147(2), EL151–EL156. https://doi.org/10.1121/10.0000718

Michelson, A. A. (1927). Studies In Optics. http://archive.org/details/in.ernet.dli.2015.86849

Mitre-Hernandez, H., Carrillo, R. C., & Lara-Alvarez, C. (2021). Pupillary responses for cognitive load measurement to classify difficulty levels in an educational video game: Empirical study. JMIR Serious Games, 9(1), e21620. https://doi.org/10.2196/21620

Moon, P., & Spencer, D. E. (1944). On the Stiles–Crawford effect. JOSA, 34(6), 319–329. https://doi.org/10.1364/JOSA.34.000319

Naber, M., Alvarez, G. A., & Nakayama, K. (2013). Tracking the allocation of attention using human pupillary oscillations. Frontiers in Psychology, 4, 919. https://doi.org/10.3389/fpsyg.2013.00919

Naber, M., Frässle, S., & Einhäuser, W. (2011). Perceptual rivalry: Reflexes reveal the gradual nature of visual awareness. PLOS ONE, 6(6), e20910. https://doi.org/10.1371/journal.pone.0020910

Naber, M., & Nakayama, K. (2013). Pupil responses to high-level image content. Journal of Vision, 13(6), 7. https://doi.org/10.1167/13.6.7

Naber, M., Roelofzen, C., Fracasso, A., Bergsma, D. P., van Genderen, M., Porro, G. L., & Dumoulin, S. O. (2018). Gaze-contingent flicker pupil perimetry detects scotomas in patients with cerebral visual impairments or glaucoma. Frontiers in Neurology, 9, 558. https://doi.org/10.3389/fneur.2018.00558

Nakano, T., Ichiki, A., & Fujikado, T. (2021). Pupil constriction via the parasympathetic pathway precedes perceptual switch of ambiguous stimuli. International Journal of Psychophysiology, 167, 15–21. https://doi.org/10.1016/j.ijpsycho.2021.06.006

Napieralski, P., & Rynkiewicz, F. (2019). Modeling Human pupil dilation to decouple the pupillary light reflex. Open Physics, 17(1), 458–467. https://doi.org/10.1515/phys-2019-0047

Oster, J., Huang, J., White, B. J., Radach, R., Itti, L., Munoz, D. P., & Wang, C.-A. (2022). Pupillary responses to differences in luminance, color and set size. Experimental Brain Research, 240(6), 1873–1885. https://doi.org/10.1007/s00221-022-06367-x

Pamplona, V. F., Oliveira, M. M., & Baranoski, G. V. G. (2009). Photorealistic models for pupil light reflex and iridal pattern deformation. ACM Transactions on Graphics, 28(4), 106:1-106:12. https://doi.org/10.1145/1559755.1559763

Pedrotti, M., Mirzaei, M. A., Tedesco, A., Chardonnet, J.-R., Mérienne, F., Benedetto, S., & Baccino, T. (2014). Automatic stress classification with pupil diameter analysis. International Journal of Human–Computer Interaction, 30(3), 220–236. https://doi.org/10.1080/10447318.2013.848320

Peirce, J., Gray, J. R., Simpson, S., MacAskill, M., Höchenberger, R., Sogo, H., … Lindeløv, J. K. (2019). PsychoPy2: Experiments in behavior made easy. Behavior Research Methods, 51(1), 195–203. https://doi.org/10.3758/s13428-018-01193-y

Pielage, H., Zekveld, A. A., van de Ven, S., Kramer, S. E., & Naber, M. (2022). The pupil near response is short lasting and intact in virtual reality head mounted displays. Journal of Eye Movement Research, 15(3). https://doi.org/10.16910/jemr.15.3.6

Portengen, B. L., Porro, G. L., Bergsma, D., Veldman, E. J., Imhof, S. M., & Naber, M. (2023b). Effects of stimulus luminance, stimulus color and intra-stimulus color contrast on visual field mapping in neurologically impaired adults using flicker pupil perimetry. Eye and Brain, 15, 77–89. https://doi.org/10.2147/EB.S409905

Portengen, B. L., Porro, G. L., Imhof, S. M., & Naber, M. (2023a). The trade-off between luminance and color contrast assessed with pupil responses. Translational Vision Science & Technology, 12(1), 15. https://doi.org/10.1167/tvst.12.1.15

Portengen, B. L., Roelofzen, C., Porro, G. L., Imhof, S. M., Fracasso, A., & Naber, M. (2021). Blind spot and visual field anisotropy detection with flicker pupil perimetry across brightness and task variations. Vision Research, 178, 79–85. https://doi.org/10.1016/j.visres.2020.10.005

Potter, M. C. (1975). Meaning in visual search. Science, 187(4180), 965–966. https://doi.org/10.1126/science.1145183

Potter, M. C., Staub, A., Rado, J., & O’Connor, D. H. (2002). Recognition memory for briefly presented pictures: The time course of rapid forgetting. Journal of Experimental Psychology: Human Perception and Performance, 28(5), 1163–1175. https://doi.org/10.1037//0096-1523.28.5.1163

Raiturkar, P., Kleinsmith, A., Keil, A., Banerjee, A., & Jain, E. (2016). Decoupling light reflex from pupillary dilation to measure emotional arousal in videos. Proceedings of the ACM Symposium on Applied Perception, 89–96. https://doi.org/10.1145/2931002.2931009

Sandoval Salinas, C., Hermans, S., Sandoval, J., Smet, K. A. G., Hanselaer, P., & Colombo, E. (2020). Relationship between pupillary size, brightness, and photoreceptor responses for unrelated self-luminous stimuli at low photopic light levels. Color Research & Application, 45(6), 977–991. https://doi.org/10.1002/col.22546

Slooter, J. (1981). Clinical use of visual acuity measured with pupil responses. Documenta Ophthalmologica, 50(2), 389–399. https://doi.org/10.1007/BF00158023

Slooter, J., & van Norren, D. (1980). Visual acuity measured with pupil responses to checkerboard stimuli. Investigative Ophthalmology & Visual Science, 19(1), 105–108.

Soleymani, M., Pantic, M., & Pun, T. (2012). Multimodal emotion recognition in response to videos. IEEE Transactions on Affective Computing, 3(2). https://doi.org/10.1109/t-affc.2011.37

Strauch, C., Romein, C., Naber, M., Van der Stigchel, S., & Ten Brink, A. F. (2022b). The orienting response drives pseudoneglect—Evidence from an objective pupillometric method. Cortex, 151, 259–271. https://doi.org/10.1016/j.cortex.2022.03.006

Strauch, C., Wang, C.-A., Einhäuser, W., Van der Stigchel, S., & Naber, M. (2022a). Pupillometry as an integrated readout of distinct attentional networks. Trends in Neurosciences, 45(8), 635–647. https://doi.org/10.1016/j.tins.2022.05.003

Ten Brink, A. F., van Heijst, M., Portengen, B. L., Naber, M., & Strauch, C. (2023). Uncovering the (un)attended: Pupil light responses index persistent biases of spatial attention in neglect. Cortex. https://doi.org/10.1016/j.cortex.2023.06.008

Thurman, S. M., Cohen Hoffing, R. A., Madison, A., Ries, A. J., Gordon, S. M., & Touryan, J. (2021). “Blue Sky Effect”: Contextual influences on pupil size during naturalistic visual search. Frontiers in Psychology, 12. https://doi.org/10.3389/fpsyg.2021.748539

Usui, S., & Hirata, Y. (1995). Estimation of autonomic nervous activity using the inverse dynamic model of the pupil muscle plant. Annals of Biomedical Engineering, 23(4), 375–387. https://doi.org/10.1007/BF02584438

Van Der Meer, E., Beyer, R., Horn, J., Foth, M., Bornemann, B., Ries, J., … Wartenburger, I. (2010). Resource allocation and fluid intelligence: Insights from pupillometry. Psychophysiology, 47(1), 158–169. https://doi.org/10.1111/j.1469-8986.2009.00884.x

Van der Stoep, N., Van der Smagt, M. J., Notaro, C., Spock, Z., & Naber, M. (2021). The additive nature of the human multisensory evoked pupil response. Scientific Reports, 11(1), Article 1. https://doi.org/10.1038/s41598-020-80286-1

Vintila, F., Kübler, T. C., & Kasneci, E. (2017). Pupil response as an indicator of hazard perception during simulator driving. Journal of Eye Movement Research, 10(4). https://doi.org/10.16910/jemr.10.4.3

Võ, M. L.-H., Jacobs, A. M., Kuchinke, L., Hofmann, M., Conrad, M., Schacht, A., & Hutzler, F. (2008). The coupling of emotion and cognition in the eye: Introducing the pupil old/new effect. Psychophysiology, 45(1), 130–140. https://doi.org/10.1111/j.1469-8986.2007.00606.x

Wang, C.-A., Boehnke, S. E., Itti, L., & Munoz, D. P. (2014). Transient pupil response is modulated by contrast-based saliency. Journal of Neuroscience, 34(2), 408–417. https://doi.org/10.1523/JNEUROSCI.3550-13.2014

Wang, C.-A., Brien, D. C., & Munoz, D. P. (2015). Pupil size reveals preparatory processes in the generation of pro-saccades and anti-saccades. The European Journal of Neuroscience, 41(8), 1102–1110. https://doi.org/10.1111/ejn.12883

Watson, A. B., & Yellott, J. I. (2012). A unified formula for light-adapted pupil size. Journal of Vision, 12(10), 12. https://doi.org/10.1167/12.10.12

Wetzel, N., Einhäuser, W., & Widmann, A. (2020). Picture-evoked changes in pupil size predict learning success in children. Journal of Experimental Child Psychology, 192, 104787. https://doi.org/10.1016/j.jecp.2019.104787

Wierda, S. M., van Rijn, H., Taatgen, N. A., & Martens, S. (2012). Pupil dilation deconvolution reveals the dynamics of attention at high temporal resolution. Proceedings of the National Academy of Sciences, 109(22), 8456–8460. https://doi.org/10.1073/pnas.1201858109

Wilhelm, H. (2008). The pupil. Current Opinion in Neurology, 21(1), 36–42. https://doi.org/10.1097/WCO.0b013e3282f39173

Willems, C., Damsma, A., Wierda, S. M., Taatgen, N., & Martens, S. (2015). Training-induced changes in the dynamics of attention as reflected in pupil dilation. Journal of Cognitive Neuroscience, 27(6), 1161–1171. https://doi.org/10.1162/jocn_a_00767

Wong, H. K., Epps, J., & Chen, S. (2020). A comparison of methods for mitigating within-task luminance change for eyewear-based cognitive load measurement. IEEE Transactions on Cognitive and Developmental Systems, 12(4). https://doi.org/10.1109/tcds.2018.2876348

Young, R. S. L., Han, B.-C., & Wu, P.-Y. (1993). Transient and sustained components of the pupillary responses evoked by luminance and color. Vision Research, 33(4), 437–446. https://doi.org/10.1016/0042-6989(93)90251-Q

Young, R. S. L., & Kennish, J. (1993). Transient and sustained components of the pupil response evoked by achromatic spatial patterns. Vision Research, 33(16), 2239–2252. https://doi.org/10.1016/0042-6989(93)90103-4

Young, R. S. L., Kimura, E., & Delucia, P. R. (1995). A pupillometric correlate of scotopic visual acuity. Vision Research, 35(15), 2235–2241. https://doi.org/10.1016/0042-6989(94)00303-3

Zandi, B., & Khanh, T. Q. (2021). Deep learning-based pupil model predicts time and spectral dependent light responses. Scientific Reports, 11(1), Article 1. https://doi.org/10.1038/s41598-020-79908-5

Zekveld, A. A., & Kramer, S. E. (2014). Cognitive processing load across a wide range of listening conditions: Insights from pupillometry. Psychophysiology, 51(3), 277–284. https://doi.org/10.1111/psyp.12151

Zimmermann, J., & Bach, D. R. (2020). Impact of a reminder/extinction procedure on threat-conditioned pupil size and skin conductance responses. Learning & Memory, 27(4), 164–172. https://doi.org/10.1101/lm.050211.119

Acknowledgements

The authors would like to thank Arslaan Amjad (Utrecht University) for help with data collection and Birte Gestefeld and her colleagues for making their data openly available.

Code availability

The code is available at https://github.com/caiyuqing/Open-DPSM

Funding

This work was supported by a China Scholarship Council (CSC) scholarship.

Author information

Contributions

Y. Cai, C. Strauch, and M. Naber conceptualized the studies. Y. Cai performed the data collection, data analysis, and visualization of the results under the supervision of M. Naber and C. Strauch. Y. Cai wrote the draft of the manuscript, and C. Strauch, M. Naber, and S. Van der Stigchel provided critical review and editing.

Ethics declarations

Conflicts of interest/Competing interests

None.

Ethics approval

The data collection was approved in advance by the local ethics board of the Faculty of Social Sciences of Utrecht University (#22-530).

Consent to participate and publish

All participants gave informed consent before the start of the experiment.

Preregistration

The experiment was not preregistered.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary material

ESM 1

Find the supplementary material via https://osf.io/qvn64/. (DOCX 1860 kb)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cai, Y., Strauch, C., Van der Stigchel, S. et al. Open-DPSM: An open-source toolkit for modeling pupil size changes to dynamic visual inputs. Behav Res (2023). https://doi.org/10.3758/s13428-023-02292-1

DOI: https://doi.org/10.3758/s13428-023-02292-1