Abstract

Using the megastudy approach, we report a new database (MEGALEX) of visual and auditory lexical decision times and accuracy rates for tens of thousands of words. We collected visual lexical decision data for 28,466 French words and the same number of pseudowords, and auditory lexical decision data for 17,876 French words and the same number of pseudowords (synthesized tokens were used for the auditory modality). This constitutes the first large-scale database for auditory lexical decision, and the first database to enable a direct comparison of word recognition in different modalities. Different regression analyses were conducted to illustrate potential ways to exploit this megastudy database. First, we compared the proportions of variance accounted for by five word frequency measures. Second, we conducted item-level regression analyses to examine the relative importance of the lexical variables influencing performance in the different modalities (visual and auditory). Finally, we compared the similarities and differences between the two modalities. All data are freely available on our website (https://sedufau.shinyapps.io/megalex/) and are searchable at www.lexique.org, inside the Open Lexique search engine.

Similar content being viewed by others

Understanding the cognitive processes involved in visual and auditory word recognition remains a major challenge in cognitive psychology and psycholinguistics. In recent years, researchers have focused on lexical variables affecting the speed and accuracy with which words are processed (e.g., Baayen, Feldman, & Schreuder, 2006; Balota, Cortese, Sergent-Marshall, Spieler, & Yap, 2004; Brysbaert, Warriner, & Kuperman, 2014; Ernestus & Cutler, 2015; Kuperman, Stadthagen-Gonzalez, & Brysbaert, 2012; New, Ferrand, Pallier, & Brysbaert, 2006; Yap & Balota, 2009). In the present study, we focus on ways in which word processing times converge or diverge in the lexical decision task across two modalities: visual and auditory. Visual and auditory word recognition have rarely been studied together. Indeed, to our knowledge, research on spoken-word recognition and reading has developed independently, with little attempt to draw parallels between the two.Footnote 1 The main goal of the present study was to apply the megastudy approach to compare processing times for a very large number of words presented in visual and auditory lexical decision. In this study, we focus (1) on lexical decision (rather than naming), and (2) on very large databases (with more than 10,000 words tested) (see Table 1).

The megastudy approach

Megastudies are studies in which word processing times are gathered for a large number of words (typically between 10,000 and 40,000 words; see Balota, Yap, Hutchison, & Cortese, 2012; Keuleers & Balota, 2015, for reviews). The findings are typically explored with multiple regression analyses. In the visual modality using the lexical decision task (see Table 1 for a review), the first megastudy was published by Balota et al. (2007).Footnote 2 In the so-called English Lexicon Project (ELP), lexical decision and naming data were collected for 40,481 words from several hundreds of participants (each responding to 3,400 stimuli in lexical decision). Ferrand et al. (2010) published a similarly collected set of lexical decision times for 38,840 French words (the French Lexicon Project; FLP) from 975 participants (each responding to 2,000 stimuli). Yap, Rickard Liow, Jalil, and Faizal (2010) collected lexical decision and naming data for 9,592 Malay words (the Malay Lexicon Project; MLP) from 44 participants (each responding to 1,020 stimuli in lexical decision). Keuleers, Diependaele, and Brysbaert (2010) published a set of lexical decision times for 14,000 Dutch words (the Dutch Lexicon Project; DLP) with a new approach, since 39 participants responded to all stimuli (see also Brysbaert, Stevens, Mandera, & Keuleers, 2016, for the Dutch Lexicon Project 2; DLP2). A similar approach was adopted for the British Lexicon Project (Keuleers, Lacey, Rastle, & Brysbaert, 2012), in which data on 28,000 English words were collected by testing 78 participants, each of them responding to one of the two lists of 14,000 words in lexical decision. Dufau et al. (2011) collected lexical decision times for thousands of words in seven languages (English, Basque, Catalan, Dutch, French, Malay, and Spanish) by using smartphone technology (each participant responding to 50, 100, or 140 stimuli). Finally, Tse et al. (2016) collected lexical decision data for 25,286 Chinese words (the Chinese Lexicon Project; CLP) from 594 participants (each responding to 2,810 stimuli).

In the auditory modality, megastudies are scarce, possibly because generating auditory stimuli entails a great deal more effort than generating visual stimuli. To our knowledge, only one published megastudy has used the auditory lexical decision task, BALDEY (“biggest auditory lexical decision experiment yet”). In this megastudy, Ernestus and Cutler (2015) collected lexical decision times on 2,780 Dutch words from 20 participants (see Table 1).

As was pointed out by Balota et al. (2007; Balota et al., 2012) and Brysbaert et al. (2016; see their Table 1, p. 442), megastudies have a number of important advantages over the more commonly used factorial designs. First, megastudies use multiple regression techniques applied to the data obtained with very large numbers of words, and one is no longer limited to analyzing a small number of words since all words (polysyllabic, polymorphemic, inflected forms such as feminine, plural, and verbal forms) are included in the analysis. This avoids the potential biases that may occur when one selects specific items (see, e.g., Forster, 2000). Second, variables (such as word frequency for instance) can be tested in a continuous way along their entire range. Third, megastudies identify the unique predictive power of a large set of targeted variables. Fourth, megastudies have proven valuable for comparing competing metrics of word frequency (e.g., Brysbaert & New, 2009; Ferrand et al., 2010), evaluating the impact of novel psycholinguistic variables (e.g., Bonin, Méot, Ferrand, & Bugaïska, 2015; Brysbaert et al., 2016; Juhasz & Yap, 2013; Yarkoni, Balota, & Yap, 2008), and exploring potential nonlinear functional relationships between lexical variables and word recognition performance (e.g., Baayen et al., 2006; New et al., 2006).

Because of these advantages, an increasing number of large-scale psycholinguistic studies have recently endorsed the megastudy approach using regression designs (see Table 1; see also Keuleers & Balota, 2015, and Brysbaert et al., 2016, for reviews).

Factors influencing visual and auditory lexical decision performance

As Brysbaert et al. (2016, p. 442) put it, “One of the main goals of megastudies is to examine which variables affect word recognition and what their relative importance is.” A survey of the megastudy literature reveals that word frequency is the most important variable in visual lexical decision (accounting for at least 40% unique variance in ELP: Balota et al., 2007; Brysbaert et al., 2016; 34% in DLP1: Keuleers et al., 2010; 33% in DLP2: Brysbaert et al., 2016; and 38% in FLP: Ferrand et al., 2010).Footnote 3 A second important variable accounting for a decent part of variance in lexical decision times is word length in letters (accounting for 16% of variance in ELP; and 7% in the DLP1 and the FLP).Footnote 4 Lexical decision latencies are generally longer for long words; but New, Ferrand, Pallier, and Brysbaert (2006) reexamined the length effect within the ELP and they found a quadratic effect: the effect of number of letters was facilitatory for words of three to five letters, null for words of five to eight letters, and inhibitory for words of eight to 13 letters. This quadratic effect was replicated in French (Ferrand et al., 2010). A third important variable that matters is the orthographic similarity to other words (OLD 20; Yarkoni et al., 2008). Lexical decisions are faster to words that are more orthographically similar to other words (accounting for 20% of variance in ELP, 5% in DLP and 6.5% in FLP; again, see note 4).

Other lexical variables have also been identified even if they only account for a small (but often significant) percentage of variance (around 1%–2% of additional variance). This is the case of (1) semantic variables (such as imageability, sensory experience ratings, concreteness, number of semantic features, and number of associates, to name a few; e.g., Balota et al., 2004; Bonin et al., 2015; Ferrand et al., 2011; Juhasz & Yap, 2013; Juhasz, Yap, Dicke, Taylor, & Gullick, 2011); (2) morphological variables (such as morphological family size, plural word forms, etc.; e.g., Baayen et al., 2006; Gimenes, Brysbaert, & New, 2016); and (3) affective variables (such as valence and arousal; e.g., Kuperman, Estes, Brysbaert, & Warriner, 2014).

In auditory lexical decision, the only megastudy available (Ernestus & Cutler, 2015) reported that word duration was an important variable and that it was a better predictor of response time than different measures of the uniqueness point (i.e., the first moment at which a word differs from all other words).Footnote 5 This result suggests that listeners tended to wait until they had heard the last phoneme in the word before making their decision. Word frequency was also an important variable, with the Dutch subtitle-based word frequency (Keuleers, Brysbaert, & New, 2010) better accounting for the auditory lexical decision data than other frequency measures (such as CELEX, for instance; Baayen, Piepenbrock, & Gulikers, 1995).

Standard research using the auditory lexical decision task in factorial experiments has mainly focused on the effects of lexical variables such as word frequency and word-form similarity. In a review of the auditory lexical decision task published twenty years ago, Goldinger (1996) mentioned one variable that surely influenced lexical decision performance (word frequency), one that probably affected it (neighborhood density) and one that should be controlled (total stimulus duration). So far, researchers have reported clear frequency effects (e.g., Cleland, Gaskell, Quinlan, & Tamminen, 2006; Connine, Mullennix, Shernoff, & Yelen, 1990; Luce & Pisoni, 1998; Petrova, Gaskell, & Ferrand, 2011; Taft & Hambly, 1986), as well as effects of phonological neighbors that are inhibitory in nature (e.g., Goh, Suárez, Yap, & Tan, 2009; Goldinger, Luce, & Pisoni, 1989; Luce & Pisoni, 1998; Vitevitch & Luce, 1999; Ziegler, Muneaux, & Grainger, 2003), and effects of cohorts (e.g., Marslen-Wilson, 1990; Taft & Hambly, 1986) or uniqueness point (e.g., Goodman & Huttenlocher, 1988).

More recently, Goh, Yap, Lau, Ng, and Tan (2016) collected auditory lexical decision (and semantic categorization) data for 514 nouns from 80 participants. The goal of their study was to determine the unique contribution of semantic richness variables (such as concreteness, number of semantic features, and emotional valence), above and beyond the contribution of lexical variables (such as word duration, frequency, structural properties, and number of morphemes) to auditory word recognition. Their results showed that the lexical variables collectively accounted for 44.5% of the variance in lexical decision RT. There were significant positive relationships between RT, on the one hand, and word duration and number of morphemes, on the other hand, such that words that had longer duration and more morphemes were associated with slower RTs. Semantic richness variables (concreteness, valence, and number of semantic features) collectively accounted for an additional 3% of unique variance in lexical decision RT, with faster responses for spoken words that were concrete, emotionally valenced, and with a high number of semantic features, suggesting that words with richer semantic representations are recognized faster.

The present study

The main goal of the present study was to investigate and compare visual and auditory lexical decision times using the megastudy approach. The data collected in the MEGALEX project can be used to perform different types of analyses. As an example, here we outline the different central questions that we had specifically in mind when we designed MEGALEX: Which word frequency measure best predicts response times in the visual and auditory lexical decision tasks? What is the relative importance of the different lexical variables in both modalities? A specific question is when, during stimulus presentation, participants in the auditory lexical decision task decide whether a stimulus is a word? A more general question concerns the similarities and differences of the different variables involved in the visual and auditory lexical decision task. Finally, one methodological goal was to compare the power of the design used in MEGALEX (in which each participants responded to half of the full set of stimuli) relative to the design used in the French Lexicon Project (in which participants responded to only 2,000 of about 40,000 stimuli).

Experiment

Method

Participants

A total of 197 right-handed participants finished the experiment (96 in the visual lexical decision task [LDT] and 101 in the auditory LDT). Five other participants started the visual or auditory experiment but did not finish it. Participants were students or employees coming from three different universities: University Blaise Pascal (Clermont-Ferrand), University Pierre-Mendès France (Grenoble) and Aix-Marseille University (Marseille). Their age ranged between 17 and 52 years (mean = 25.76, SD = 5.71). All were native speakers of French, and had normal or corrected-to-normal vision. None of them had hearing problems. Each participant participated in either the visual LDT or the auditory LDT. Participants were informed that successful completion of the (visual or auditory) experiment would take about 20 h, for which they would receive a payment of €300. They were also informed that they would be excluded if their accuracy dropped below 80% in three successive blocks (as in Keuleers et al., 2012). They were also informed that if they dropped out the experiment or if their accuracy fell consistently below the 80% benchmark, they would be paid €5 per hour completed.

Ethical statement

This study was approved by both the Clermont-Ferrand Sud-Est VI Statutory Ethics Committee (Comité de Protection des Personnes (CPP) Sud-Est 6, France; Authorization #AU 1058) and the French National Agency for Medicines and Health Products Safety (Agence Nationale de Sécurité du Médicament et des Produits de Santé (ANSM), France; Authorization #2013-A00591-44 and #B-130783-81) according to the articles of law L. 1121-1-2 and R 1121-3.

Apparatus

The experimental software (OpenSesame; Mathôt, Schreij, & Theeuwes, 2012) and testing apparatus were identical at each of the three testing sites (Clermont-Ferrand, Grenoble, and Marseille). All the participants were tested on the same platform.

For the visual LDT, the stimuli were presented on a 22-in. Dell LCD monitor (P2212H) with a refresh rate of 60 MHz and a resolution of 1,920 × 1,080 pixels, placed at a distance of about 60 cm from the participants. The monitor was controlled by a PC (Dell Precision T3600). The stimuli were presented in lowercase in Inconsolata (12-point font size), and they appeared on the screen as dark characters on a grey background. The participants responded on a Logitech Gamepad F310, which is used for superfast computer games and does not have time delays with keyboards (see, e.g., Shimizu, 2002).

For the auditory LDT, the apparatus was identical to the one used in the visual LDT, except that the stimuli were played binaurally over headphones (Sennheiser HD 25-1 II) through a high-quality audio soundcard (Asus Xonar DX PCIe). Timing calibration was performed using the Blackbox Toolkit, version 2 (www.blackboxtoolkit.com/).

Word and pseudoword stimuli

The stimuli consisted of a list of 28,466 words. The words were taken from the French Lexicon Project (Ferrand et al., 2010; see Table 2 for descriptive statistics on the words and their behavioral measures). For each word, a matched pseudoword was generated using the Lexique toolbox (available from www.lexique.org/toolbox/toolbox.pub/index.php?page=trigramme). In particular, we used the tool that creates pseudowords based on randomization of bigrams or trigrams coming from real words. The advantage of this generator is that it creates pseudowords having characteristics very close to the category of words chosen. For instance when one enters all the words having six letters, two syllables, being a noun, the toolbox will generate pseudowords having six letters, two syllables and looking like possible nouns. One can therefore generate words resembling plausible French nouns or plausible French inflected verbs, and so forth. For instance, for three letter words, we entered all our words having three letters and we generated many three letter pseudowords. Then we screened the pseudowords in order to exclude real words and in order to end up with as many three letter pseudowords as we had three letter words.

Visual stimuli

In each session, the participants received 260 words and 260 pseudowords. Each session contained two blocks of 130 words and 130 pseudowords, with a short break between the two blocks. Each session lasted about 20 min. Overall, each participant received 55 sessions (or 109 blocks), corresponding to about 20 h of testing.

Auditory stimuli

They were identical to those used in the visual lexical decision task, except that homophonous forms were suppressed, giving a total amount of 17,876 words and 17,876 pseudowords. The auditory stimuli were synthetized using Apple’s Text-to-Speech (TTS) accessibility feature (TTS’s “say” command; Apple, Cupertino, CA, USA).Footnote 6 For instance, shell scripts containing “say” commands synthetized all the words and pseudowords of the visual experiment at regular speed in a female French voice (called “Audrey”) and produced 22100-Hz stereo wav files (each text stimulus had its own associated wav file). Pairwise auditory signal comparison was then carried on to detect identical wav files (hence homophonous forms). Such forms were then removed from stimulus lists. Finally, leading and trailing noninformative zero values in wav files were removed. In each session, the participants received 178 words and 178 pseudowords. Each session contained two blocks of 89 words and 89 pseudowords, with a short break between the two blocks. Each session lasted about 20 min. Overall, each participant received 50 sessions (or 100 blocks), corresponding to about 20 h of testing.

Creation of lists for participants

Both in the visual and in the auditory experiments, each participant was presented with exactly half of the experimental items. This was accomplished in the following way. For each successive pair of participants, we shuffled the words and pseudowords lists separately, then split each of them in half. The items in the first halves were attributed to the first participant and the items in the second half were attributed to the second participant. The words and pseudowords were then mixed and their order shuffled again for each participant. Finally, the obtained lists were split in blocs of 260 stimuli in the visual modality or 178 stimuli in the auditory modality to create individual experimental runs.

Procedure

Each participant started with a one-hour startup session at one of the three universities and was assigned to the visual or the auditory LDT. During that session, participants received information about the experiment and signed the approval forms. Participants then completed practice session of 40 trials (20 words and 20 pseudowords), allowing us to demonstrate the main features of the experiment. This was immediately followed by a real session of 20 min (Session 1, consisting of two blocks). After this first session, participants were free to enter the different labs during office hours and to go through the experiment at their own pace, using a booking system to reserve time slots. After reservation, participants could sit at any of the seven computers specifically devoted to the study. After entering their registration code in the experiment system, they were presented with their next block of trials. After each completed block, participants could choose whether to continue or to stop the session. The only advice given to the participants was to limit their participation to 2 h per half day. The fastest participant finished the experiment in 15 days’ time, the slowest took 58 days (on average, participants took 32 days, SD = 15.40).

Visual LDT

There were ten practice trials before each experimental block. The participants saw words and pseudowords presented on the center of a screen and they had to indicate as rapidly and as accurately as possible whether the presented letter string was a French word or a pseudoword. The participants responded using response buttons on a Logitech Gamepad F310. They answered “yes” by pressing the button corresponding to the index finger of their right hand and “no” by pressing the button corresponding to the index finger of their left hand.

The sequence of events was as follows (for one trial): (1) a fixation point (“.”) appeared in the center of the screen for 250 ms; (2) a blank screen appeared for 100 ms; (3) a stimulus (word or pseudoword) was presented in the center of the screen; (4) the participant made the response as fast and as accurately as possible; and (5) the stimulus was erased from the screen. The stimulus remained on the screen until a manual response was detected or for 2 s maximum if no response was made. At the end of each trial, there was a 1,000-ms intertrial interval with a blank, gray screen. A feedback was provided during each session, one at the end of each block (with mean correct reaction times and percentage of errors for words).

Auditory LDT

The procedure was similar to the one used in the visual modality. Ten practice trials were presented before each experimental block. The sequence of events was as follows (for one trial): (1) a fixation point (“.”) appeared in the center of the screen for 250 ms, announcing the auditory stimulus; (2) a stimulus (word or pseudoword) was then played binaurally over headphones; and (3) the participant made the response as fast and as accurately as possible. The response was triggered as soon as possible, even if the auditory stimulus was still playing. There was a 2-s timeout if the participant had not given any response. At the end of each trial, a blank, gray screen was shown for a 1,000-ms intertrial interval. Feedback—mean correct reaction times and percentage of errors—was provided at the end of each block.

Results

Trimming procedures

Visual lexical decision

First, 3,939 trials with technical problems were eliminated, which left us with 2,596,095 trials (1, 311,832 words).

As in Ferrand et al. (2010), all response latencies shorter than 300 ms or longer than 2,000 ms were then identified as outliers (resulting in the elimination of 8,658 trials [4,383 featuring words]—i.e., 0.33% of the data). Nearly all participants had a mean accuracy higher than 80%. The data for three participants who did not fulfill these criteria were dropped (73,272 trials, 37,071 words). Eight blocks with accuracies lower than 75% were also eliminated (1,981 trials, 1,009 words). The resulting 2,512,184 trials (1,269,369 words) are freely available on our website (https://sedufau.shinyapps.io/megalex/) and are searchable on www.lexique.org inside the Open Lexique search engine.

For the regression analyses on reaction times (RTs) and accuracy presented below, 2,690 words (119,684 trials) with accuracies below 67% were removed (as in Balota et al., 2004; Ferrand et al., 2010; Ferrand et al., 2011). Moreover, for the remaining correct RTs, the means and SDs were computed within Blocks × Participants, and all RTs more than three SDs above or below this mean were considered as outliers as well. This resulted in the rejection of 1.7% (19,229) of the RTs on correct trials. The mean RTs for the trimmed correct word trials, as well as the mean accuracy rates, are presented in Table 2.

Auditory lexical decision

We used the same procedure for the RTs for correct responses as applied in the visual modality. The elimination of 16,878 trials with technical problems led first to a set of 1,669,585 trials (845,078 words). Response latencies shorter than 600 ms or longer than 2,500 ms were then identified as outliers (resulting in the elimination of 1,084 trials [557 words]—i.e., 0.06% of the data). These threshold values are slightly different from the ones used for the visual modality. These were chosen after inspection of the cumulative frequency of the distribution of RTs for correct responses: 600 ms corresponds to the value for which the slope began to change significantly and for which eliminations seemed not to concern particular items. Because the decrease of the slope was very monotonic at the right of the distribution, the upper bound was fixed at 2,500 ms. One participant (16,116 trials, 8,168 words) with a global accuracy below 80% and 17 blocks (2, 738 trials, 1,396 words) with accuracies lower than 75% were then dropped. The resulting 1,649,647 trials (834,957 words) are freely available on our website.

For the regression analyses, 2,034 words (94,197 trials) with accuracies below 67% were then removed (as in Balota et al., 2004; Ferrand et al., 2010; Ferrand et al., 2011). Moreover, for the remaining correct RTs, all RTs more than three SDs above or below the within Blocks × Participants mean were considered outliers as well. This resulted in the rejection of 1.3% (9, 580) of the RTs on the correct trials. The mean RTs for the trimmed correct word trials, as well as the mean accuracy rates, are presented in Table 2.

The trimming procedure left us with 25,776 words (out of 28,466) in the visual modality and 15,842 words (out of 17,876) in the auditory modality. In both modalities, raw RTs were transformed into standardized z scores per participant and per block to remove effects due to block differences and variability/speed between participants (as recommended by Faust, Balota, Spieler, & Ferraro, 1999; see also Ferrand et al., 2010, for such a procedure). By-items RT and zRT means and accuracies for the independent variables used in the regression analyses are freely available on our website (https://sedufau.shinyapps.io/megalex/) and are searchable at www.lexique.org, inside the Open Lexique search engine. A general description of the online materials can be found in the Appendix.

Reaction times and accuracy in MEGALEX

Figure 1 displays the effects of practice on RTs and accuracies over the total duration of the experiment in both modalities (i.e., 109 blocks in the visual modality and 100 blocks in the auditory modality).

In the visual modality, the practice effect is about 100 ms different in RTs for words and 120 ms for pseudowords. This is similar to the effect observed in the BLP (Keuleers et al., 2012), which was also 100 ms. In the auditory modality, the practice effect is also around 100-ms difference in RT for words and 140 ms for pseudowords (see Fig. 1).

Reliability of the MEGALEX measures

As in previous megastudies (Ferrand et al., 2010; Keuleers, Diependaele, & Brysbaert, 2010; Tse et al., 2016), we determined the reliability of accuracy and RTs in order to evaluate how useful they might be. For each word, participants were split in two groups each containing half of the participants having seen that word. RT means, zRT means, and accuracies were calculated for each half. Correlations were then calculated between item scores obtained in the first and second halves. Spearman–Brown corrections are also reported as rcorr [2r/(1 + r)]. In this way, the correlation between (1) accuracy calculated on the first half of the participants who saw a given word and (2) accuracy calculated on the remaining participants who saw the same word was .66 (rcorr = .8) in the visual modality and .68 (rcorr = .81) in the auditory modality. For RTs in the visual modality, r = .70 (rcorr = .82) and for zRT, r = .82 (rcorr = .9). For the RTs in the auditory modality, r = .82 (rcorr = .9) and for zRT, r = .89 (rcorr = .94). The fact that the reliability of zRT is higher than the reliability of the raw RTs confirms that taking away differences in overall RT and variability between participants and blocks removes noise from the data and does not artificially reduce the variability of the items.

Predictors of the MEGALEX lexical decision times

We considered the following predictors for the by-items analyses:

Word frequency

We used the following corpora to measure word frequency: books (New, Pallier, Brysbaert, & Ferrand, 2004; New, Pallier, Ferrand, & Matos, 2001); subtitles (New, Brysbaert, Véronis, & Pallier, 2007); and blogs, Twitter and newspapers (Gimenes & New, 2016).

Word length (in letters)

The number of letters in the word.

Word length (in phonemes)

The number of phonemes in the word.

OLD20

The orthographic similarity to other words (Yarkoni et al., 2008), calculated on the basis of the Lexique vocabulary (Ferrand et al., 2010).

PLD20

The phonological similarity to other words (Yarkoni et al., 2008), calculated on the basis of the Lexique vocabulary (Ferrand et al., 2010).

Orthographic uniqueness point

The point in the letter string, proceeding from the first letter to the last, at which only one orthographic candidate remains in the cohort of possible words (calculated on the basis of Lexique vocabulary: New et al., 2004).

Phonological uniqueness point

The point in the phoneme string at which only one phonological candidate remains in the cohort of possible words (calculated on the basis of Lexique vocabulary: New et al., 2004).

Stimulus duration

The total duration of the spoken item, from the onset of the word to the end of the word, which corresponded to the duration of the edited sound files.

Which word frequency measure best predicts response times in the visual and auditory lexical decision task?

As Tse et al. (2016, p. 7) put it, “Word frequency has consistently been shown to be one of the strongest predictors of visual word recognition in various languages. Given that this is a central lexical variable to manipulate and control, it is important to determine which word frequency count accounts for the largest proportion of variance in lexical decision performance.”

To find out which word frequency measure best predicts response times in the visual and auditory lexical decision task, we have considered five frequency measures that are publicly accessible for the present megastudy.

First, we used two measures of word frequencies from Lexique (New et al., 2004; New et al., 2001): (1) the book-based frequency (Frantext), and (2) the subtitle-based frequency (SUBTLEX-FR). The subtitle-based frequencies were first computed in French by New, Brysbaert, Véronis, and Pallier (2007). They found that the subtitle-based frequencies were a better predictor of lexical decision times than the book-based frequencies (see also Brysbaert & New, 2009, for a similar result in English). Ferrand et al. (2010) replicated this finding in the French Lexicon Project, the subtitle-based frequencies explaining 5%–6% more of the variance in the percent errors and reaction times than did the book-based frequencies. Furthermore, Ferrand et al. (2010; see also Brysbaert & New, 2009) showed that the predictive power of the frequencies further increased when the averages of the subtitle and book frequencies were used. We investigated whether this is also true for auditory lexical decision.

Second, we used three new measures of word frequencies based on (1) Twitter, (2) blog posts, and (3) newspapers (taken from Worldlex by Gimenes & New, 2016). On the basis of lexical decision times for 35,658 words taken from the French Lexicon Project (Ferrand et al., 2010), Gimenes and New (2016) showed that the three new frequencies averaged (Twitter + blogs + newspapers) explained slightly more variance (48.46%) than the two classic frequencies—book-based and subtitle-based averaged—(47.56%). The same result was obtained for the English Lexicon Project (Balota et al., 2007) and the Dutch Lexicon Project (Keuleers, Diependaele, & Brysbaert, 2010).

The frequency measures were log-transformed (see Baayen et al., 2006). Also, because Balota et al. (2004; see also Ferrand et al., 2011) reported a nonlinear relationship between log frequency and lexical decision times, we report regression analyses both for log(frequency) and log(frequency) + log2(frequency).

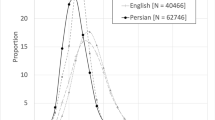

Table 3 shows the percentages of variance in reaction times and accuracy explained by the different frequency measures for all words in each modality (visual and auditory), and also for common words in both the modalities. Several of the results are interesting. First, in line with previous findings (Ferrand et al., 2010; New et al., 2007), the subtitle frequency measure outperforms the book frequency measure in the visual modality. Note, however, that this was not the case in the auditory modality, for which both frequencies were roughly similar (with a slight advantage for the book frequency); this goes against Ernestus and Cutler’s (2015) finding that the subtitle frequency measure outperformed the book frequency measure, as in the visual modality.Footnote 7 Second, the proportion of variance explained by frequency is relatively low in the auditory as compared to the visual modality (for common words, 11.3% vs. 45.1%); this is much lower than the estimates previously reported for visual lexical decision performance.Footnote 8 Third, as in the French Lexicon Project (Ferrand et al., 2010), more variance is explained when the averages of subtitle and book frequencies are used than when these two frequencies are taken separately; this is the case in both modalities, suggesting that a combination of spoken and written frequencies may be the way forward. Fourth, this frequency measure combining subtitle and book frequencies shows results roughly similar to those from the three new frequency measures combining Twitter, blogs, and newspapers when the square of the log frequency was not added (similar to what Gimenes & New, 2016, found; see Table 3). However, when the square of the log frequency was added, the frequency measure combining subtitle and book frequencies outperformed the three new frequency measures combining Twitter, blogs, and newspapers; this was the case in both modalities. Fifth, these analyses confirm that less noise is present in the zRT variable than in the raw RT variable.

Given that the combination of subtitle and book frequencies is unequivocally the best measure, for both visual and auditory word processing (see Table 3), we will use this measure in the remainder of this article.

Comparison of MEGALEX with the French Lexicon Project

A comparison of the visual lexical decision times of MEGALEX and the FLP (25,122 words in common) reveals a clear superiority of MEGALEX over the FLP (in terms of the percentage of variance accounted for). For word frequency (based on a combination of the book and the subtitle frequencies), 8.6% more variance is explained by MEGALEX (40% in MEGALEX vs. 31.4% in FLP; see Table 4). Note, however, that the shapes of the frequency effects are very similar in both megastudies (see Fig. 2).Footnote 9 There is also a slight advantage of MEGALEX over FLP for word length (in number of letters) and orthographic similarity (see Table 5).

Shapes of the word frequency effect in MEGALEX (solid line) and the French Lexicon Project (dotted line) for the 25,122 words in common. Lowess smoothing was obtained using the lowess function in R, which computes locally weighted polynomial regression between dependent variables and independent variables (see Cleveland, 1981)

For word length (in letters), Ferrand et al. (2010; see also New et al., 2006, for English) found that this variable had a quadratic effect on visual lexical decision times: The effect of number of letters was facilitatory for words of three to five letters, null for words of five to eight letters, and inhibitory for words of eight to 13 letters. We replicated this quadratic length effect in MEGALEX (see Fig. 3), even if the range was more limited in the present study (three to nine letters vs. three to 13 letters in FLP).

Shapes of the word length effect in MEGALEX (black dots) and the French Lexicon Project (gray dots) for the 25,122 words in common. As in Ferrand et al. (2010), error bars are given for residuals of the quadratic regression, with RT as the dependent variable and the sum of book and subtitle frequencies (in logarithms) as an independent variable

For orthographic similarity, Ferrand et al. (2010) found that similar words (low OLD 20) were responded to more quickly than dissimilar words (high OLD 20). We replicated this orthographic similarity effect in MEGALEX (see Fig. 4).

A comparison of MEGALEX and FLP further confirms the superiority of a design in which the participants process half of the stimuli (MEGALEX) relative to a design in which participants only see a subset of the stimuli (FLP; see also Ferrand et al., 2015, for the same results based on preliminary data collected in MEGALEX). Indeed, more variance was accounted for (nearly 10% more) in the lexical decision task of MEGALEX than in FLP (see Table 5). This is in line with findings from the Dutch and the British Lexicon Projects, indicating that there is less noise in megastudies with a complete crossover of participants and stimuli (Keuleers, Diependaele, & Brysbaert, 2010; Keuleers et al., 2012).

Correlations between the variables

Tables 6 and 7 show the intercorrelations between the various predictor variables and RTs from MEGALEX (for both the visual and auditory modalities). The analysis was limited to the words that were recognized by at least 67% of the participants (N = 25,776 in the visual modality and N = 15,842 in the auditory modality).

In the visual modality (see Table 6), word frequency had the highest correlations with RT. In the auditory modality (see Table 7), stimulus duration had the highest correlation with RT.

Regression analyses in the visual and auditory modalities

To further examine the impact of the predictor variables for the two modalities, we conducted multiple regression analyses at the item level for each modality separately (25,776 words in the visual modality and 15,842 words in the auditory modality). Regressions have the advantage that nonlinear relations can be examined (by making use of cubic splines). A forward approach was used in order to choose the nonlinear terms: (1) at each step, such terms were included for the predictor variable for which the inclusion of splines lead to the greatest variance increase in comparison with a model in which no such terms were included, and (2) the stopping rule used to choose the number of knots was the value for which adding more knots did not lead to an increase of R 2 above .001. The maximum numbers of knots were limited to 4 for length (N-letters and N-phonemes), OLD20 (PLD20), and acoustic duration, and 7 for frequency.Footnote 10 Table 8 shows the results.

For both modalities and both dependent variables (see Tables 8 and 9), all included variables were significant at p < .001. For RTs, the effects of frequency and word similarity (OLD20 and PLD20) were nonlinear in both modalities, with relatively comparable patterns (see Fig. 5).

Shapes of the frequency effects, word length effects (number of letters and number of phonemes), and word similarity effects (orthographic and phonological) in each modality (visual and auditory) for zRTs. x-axis: standardized variable; y-axis: predicted within-task standardized zRT. 95% confidence intervals are indicated by gray shading

As is shown in Fig. 5, for both modalities, word frequency effects (frequent words were responded to faster) were manifested in the significant negative relationship between RTs and frequency. Figure 6 also shows the shapes of the word frequency effects for both modalities in MEGALEX and for the visual modality in the French Lexicon Project (Ferrand et al., 2010) for the 14,868 words in common. A weak negative effect of word length appeared in the visual modality (number of letters), whereas this effect (number of phonemes) was more pronounced in the auditory modality. For both modalities, the word similarity effect had a negative effect at the left of the scale and then inverted near the mean: similar words (low OLD20 or PLD20) were responded to more quickly than dissimilar words (high OLD20 or PLD20). Finally, acoustic duration showed the most important effect, linear and positive, in the auditory modality: words whose tokens had longer durations took longer to recognize.

Shapes of word frequency effects in the visual (black line) and auditory (dashed line) modalities in MEGALEX, as well as in the French Lexicon Project (dotted line), for the 14,868 words in common. The RT scales being different in MEGALEX (both modalities) and the French Lexicon Project, we centered RTs (RTs – Mean RTs)

Table 9 shows the results of regression analyses including all words in each modality.

For the visual modality, frequency, orthographic similarity, and word length (in letters) explained 43.51% of the variance in RTs and 24.32% in accuracy. For the auditory modality, frequency, phonological similarity, word length (in phonemes), and acoustic duration explained 52.12% of the variance in RTs and 14.26% in accuracy.

Comparing effects across modalities and their interactions

Table 10 shows the intercorrelations between the various predictor variables and RTs from MEGALEX for both the visual and auditory modalities. The analysis was limited to the 14,868 words common in both modalities. Table 10 shows in particular that the correlation between visual RTs and auditory RTs is limited (r = .37), suggesting differences between visual and auditory lexical decision times. These differences between modalities might suggest that people always wait until a perceptual decision can be reached, but that they are constrained differently across modalities.Footnote 11

A comparison of the visual and auditory lexical decision times of MEGALEX (14,868 words in common) reveals that whereas the effect of word frequency is the most important factor in the visual modality (accounting for at least 45% of unique variance; see Table 11), stimulus duration is the main variable in the auditory modality (accounting for at least 46% of the variance; see Table 12).

To further examine the impact of the predictor variables across the two modalities, we conducted multiple regression analyses at the item-level for the 14,868 words common in both modalities. In a first step, using the same forward procedure as above, two preliminary analyses were done within tasks in order to choose the numbers of splines to use in each. Both analyses included all variables under study, that is to say frequency subtitles, the numbers of letters and phonemes, the orthographic and phonologic Levenshtein distances and acoustic duration. In a second step, a global analysis was conducted on scores from both tasks and the inclusion of nonlinear terms retained in the first step (when the numbers of knots differed between the tasks in the first step, the highest one was chosen). In addition, interactions between the two modalities and the predictor variables were entered in the regression model in order to compare the effects of the predictor variables between the two modalities. Tables 13 and 14 show the results in terms of interaction effects and simple effects for both zRTs and accuracy.

For RTs, only the interaction between modalities and number of letters was not significant (all the other interactions between modalities and predictor variables were significant; see Table 13 and Fig. 7). For the visual modality, frequency, orthographic similarity, phonological similarity, word length (in letters and in phonemes), and acoustic duration explained 47.80% of variance in RTs and 26.52% in accuracy. For the auditory modality, frequency, orthographic similarity, phonological similarity, word length (in letters and in phonemes), and acoustic duration explained 52% of variance in RTs and 12.30% in accuracy.

Shapes of the word frequency effects, word length effects (number of letters and number of phonemes), word similarity effects (orthographic and phonological), and acoustic duration, for both modalities (V: visual and A: auditory) for zRTs. x-axis: standardized variable; y-axis: predicted within-task standardized zRT

Figure 7 shows the interaction effects for RTs between modalities and predictor variables. The effect of number of letters did not interact with modalities: It was significant and positive in both modalities. Given the high number of observations, however, it appeared quite weak. All the other effects interacted with modalities. The effect of number of phonemes was also significant in both modalities, with a negative sign in the left part of the scale, becoming then slightly positive. This pattern was more pronounced in the auditory modality. Phonological similarity and acoustic duration effects were only observed in the auditory modality. The orthographic similarity effect had a pattern comparable to the phonological similarity effect, but it was more pronounced in the visual modality; a weak negative effect—nearly linear—was, however, also observed in the auditory modality. Finally, classical frequency effects were observed in both modalities, with more important decreases at the left of the scale. The effect was more pronounced, however, in the visual modality.

These results were generally in agreement with those obtained previously using all the words available in both modalities. The only exception concerned the effect of number of letters in the visual modality, which was negative in the preceding analysis and positive in this one. This effect was, however, rather weak in both analyses.

A similar analysis was conducted with the inclusion of orthographic uniqueness point (OUP) and phonological uniqueness point (PUP) values. For the visual modality, frequency, orthographic similarity, phonological similarity, word length (in letters and in phonemes), and uniqueness point (orthographic and phonological) explained 47.95% of the variance in RTs and 26.84% in accuracy. For the auditory modality, frequency, orthographic similarity, phonological similarity, word length (in letters and in phonemes), acoustic duration and orthographic and phonological uniqueness point explained 52.50% of the variance in RTs and 13.26% in accuracy

Some studies have reported that words with an early OUP were processed more quickly than words with a late OUP in the visual modality (Kwantes & Mewhort, 1999; Lindell, Nicholls, & Castles, 2003), an effect similar to the effect obtained in auditory tasks (e.g., Radeau & Morais, 1990; Radeau, Mousty, & Bertelson, 1989). This has been taken to suggest that readers process the letters of words sequentially in a left-to-right order (but see Lamberts, 2005, for another interpretation). However, other studies found the opposite pattern, words with an early OUP were processed more slowly than words with a late OUP (Izura, Wright, & Fouquet, 2014; Miller, Juhasz, & Rayner, 2006; Radeau, Morais, Mousty, Saerens, & Bertelson, 1992), suggesting that word processing is likely to occur in a parallel manner. Here, we found that words with an early OUP were processed more slowly than words with a late OUP in the visual modality (see Fig. 8). These results are consistent with those of Izura et al. (2014), Miller et al. (2006), and Radeau et al. (1992). The same OUP effect was obtained in the auditory modality. To our knowledge, this is the first time that an OUP effect has been reported in this modality.

In the auditory modality, it has also been reported that words with an early PUP were processed more quickly than words with a late PUP (Radeau & Morais, 1990; Radeau, Morais, Mousty, & Bertelson, 2000; Radeau, Mousty, & Bertelson, 1989). In particular, Radeau et al. (2000) found a PUP effect only in the slow rate condition, not in the fast one (this last condition being closer to natural speech). They also reported that the PUP effects were smaller with synthetic than with natural speech. Here we found that words with an early PUP were processed more quickly than words with a late PUP (see Fig. 8), replicating Radeau et al. (2000). However, concerning the visual modality, words with an early PUP were processed more slowly than words with a late PUP, an effect similar to that for the OUP.

General discussion

Summary of findings

In general, the findings of the visual lexical decision times of MEGALEX are consistent with the results of previous megastudies conducted in English (Balota et al., 2007; Brysbaert et al., 2016; Cortese & Khanna, 2007; Keuleers et al., 2012; Yap & Balota, 2009), Dutch (Brysbaert et al., 2016; Keuleers, Diependaele, & Brysbaert, 2010), and French (Ferrand et al., 2010). Without question, word frequency is the most important predictor in visual lexical decision (accounting for at least 45% unique variance in our study; 40% in ELP: Balota et al., 2007; Brysbaert et al., 2016; 34% in DLP1: Keuleers et al., 2010; 33% in DLP2: Brysbaert et al., 2016; and 38% in FLP: Ferrand et al., 2010). In line with what Ferrand et al. (2010) reported for French, the extra contribution of word length was significant but rather limited (0.8%). The extra contribution of orthographic similarity was larger (around 1.5%). Furthermore, the present megastudy confirms the superiority of a design in which the participants process half of the stimuli (MEGALEX) relative to a design in which participants only see a subset of the stimuli (FLP). This is in line with findings from the Dutch and the British Lexicon Projects, indicating that there is less noise in megastudies with a complete crossover of participants and stimuli (Keuleers, Diependaele, & Brysbaert, 2010; Keuleers et al., 2012).

Concerning the auditory modality, stimulus duration is the most important variable (accounting for 46% of the variance in our study), which is consistent with the results of a previous relatively large-scale study conducted in Dutch (Ernestus & Cutler, 2015; see also Goh et al., 2016). The extra contribution of frequency is around 4% and the extra contribution of number of phonemes is 1.5%. Phonological similarity and phonological uniqueness point also influenced auditory lexical decision times (less than 1%). However, we showed that words that are phonologically similar to many other words (i.e., with low PLD20 values) were responded to faster than phonologically dissimilar words (i.e., with high PLD20 values). This latter result departs from the general finding that, in the auditory modality, words with more similar sounding (or closer phonological neighbors) are usually recognized more slowly than more distinct word-forms (e.g., Goh et al., 2009; Goh et al., 2016; Suárez, Tan, Yap, & Goh, 2011; Ziegler et al., 2003), but it converges with the general finding that, in the visual modality, words that are orthographically similar to many other words are responded to faster than orthographically dissimilar words (e.g., Brysbaert et al., 2016; Ferrand et al., 2010; Keuleers, Diependaele, & Brysbaert, 2010; Keuleers et al., 2012). Concerning phonological uniqueness point, our results are consistent with the general finding that words with an early phonological uniqueness point are responded to faster than words with a late phonological uniqueness point (e.g., Radeau & Morais, 1990; Radeau et al., 2000; Radeau et al., 1989).

Similarities and differences across modalities

A comparison of the effects of lexical variables across modalities revealed interesting differences between auditory and visual lexical decision times, as was predicted by Goldinger (1996; see also Segui, 1994, for a discussion). A first difference across modalities is that word frequency is the most important factor in the visual modality, whereas it is word duration in the auditory modality. A second difference is that the proportion of variance explained by frequency is relatively low in the auditory (11.3%) as compared to the visual (45.1%) modality. A third difference is that listeners tended to wait until they had heard the last phoneme in the word before making their decision (as shown by a strong effect of stimulus duration), whereas readers can make their decision before the word has been identified (as shown by a strong effect of OLD20). Indeed, in the visual modality, as suggested by Ernestus and Cutler (2015, p. 1471), “Participants can see at a glance whether a presented form is long or short, but in the auditory modality, listeners must wait for silence to tell them that the presented form has ended.” In the visual modality, as suggested by Brysbaert et al. (2016, p. 443), “Lexical decisions are partly based on the overall activation in the orthographic lexicon, such that when this overall activation exceeds a certain level, a word response is initiated before the target word itself has been identified. This overall activation is higher when a target word activates many similar word forms than when it has a unique letter sequence” (see Grainger & Jacobs, 1996; see also Dufau, Grainger, & Ziegler, 2012; Grainger, 2017). A fourth difference is that readers process words with an early orthographic uniqueness point more slowly than words with a late orthographic uniqueness point (see also Izura et al., 2014; Miller et al., 2006; Radeau et al., 1992), whereas listeners process words with an early phonological uniqueness point more quickly than words with a late phonological uniqueness point (see also Radeau et al., 2000; Radeau et al., 1989). These results suggest that processing of visually presented words is likely to occur in a parallel manner, whereas processing of auditory presented words is likely to occur in a sequential manner. A fifth difference is that more variance is explained in the auditory modality (max = 53%) than in the visual modality (max = 48%).

Turning to those effects that revealed a similar pattern in the two modalities, a first converging pattern concerns word similarity: Words orthographically similar to many other words (i.e., with low OLD20 values) were responded to more quickly than more orthographically distinct words (i.e., with high OLD20 values) in the visual modality, and words phonologically similar to many other words (i.e., with low PLD20 values) were responded to faster than more phonologically distinct words (i.e., with high PLD20 values) in the auditory modality (see Figs. 5 and 7). A second converging pattern concerns the shape of the word frequency effects in both modalities. Even if a lower proportion of variance is explained by frequency for the auditory than for the visual modality, the shapes of the word frequency effects are very similar (see Fig. 6).

Future directions

Although this study has examined the influence of a certain number of measures on visual and auditory word recognition performance, a number of questions remain open. For instance, it will be useful to test other potentially interesting variables (when these variables will be available for French), such as age of acquisition (AoA; see, e.g., Bonin, Barry, Méot, & Chalard, 2004; Cortese & Khanna, 2007; Ferrand et al., 2008), word prevalence (Wp; see, e.g., Keuleers, Stevens, Mandera, & Brysbaert, 2015), sensory experience rating (SER; see, e.g., Bonin et al., 2015), semantic variables (such as imageability, concreteness, or number of semantic features, to name a few; see, e.g., Goh et al., 2016; Yap, Pexman, Wellsby, Hargreaves, & Huff, 2012), morphological variables (see, e.g., Baayen et al., 2006; Gimenes et al., 2016), and so forth. Indeed, the maximal percentages of variance explained (48% in the visual modality and 53% in the auditory modality) suggest that other variables are still to be discovered.

Conclusion

In this article, we have described the results of a project in which lexical decision data were collected for tens of thousand French words presented in the visual and auditory modalities.

The results of the present megastudy provide an empirical “goldmine” for researchers interested in understanding the core mechanisms of word recognition, independent of modality, as well as for providing a better specification of modality-specific processing. This is clearly a crucial step toward developing a general psycholinguistic theory of word comprehension.

Notes

Note that we are talking about reading and not the use of visual cues in speech perception (e.g., lip reading). There is indeed a large literature on the integration of audiovisual information during speech perception.

Before that, other large-scale studies had been conducted, testing a smaller number of (monosyllabic) words, typically between 1,000 and 3,000. For instance, Balota, Cortese, Sergent-Marshall, Spieler, and Yap (2004) collected naming times and lexical-decision times for 2,902 monosyllabic English words. Before them, Seidenberg and Waters (1989) had collected naming times for 2,897 monosyllabic English words. Treiman, Mullennix, Bijeljac-Babic, and Richmond-Welty (1995) collected naming times for 1,327 monosyllabic English words. Spieler and Balota (1997) collected naming data for 2,820 monosyllabic English words. Later on, Ferrand et al. (2011) collected naming, lexical decision and progressive demasking data for 1,482 monosyllabic French words. More recently, Adelman, Marquis, Sabatos-DeVito, and Estes (2013) collected naming times from four people who read each 2,820 English words 50 times each, and even more recently, Dufau, Grainger, Midgley, and Holcomb (2015) measured event-related potentials for 1,000 English words in a go/no lexical decision task. Very recently, Schröter and Schroeder (2017) collected naming and lexical decision data for 1,152 German words in seven different age groups (Grades 1 to 6, as well as two groups of younger and older adults).

One likely reason for this difference is that in ELP, nonwords were generated by changing one letter in a corresponding target word. This way of constructing nonwords confounded nonword length and word likeness, whereas in DLP and FLP, nonwords were generated in such a way that their orthographic features mimicked the orthographic features of the words.

However, due to the analysis method used by Ernestus and Cutler (2015, pp. 1477–1478), they could not provide the percentage of unique variance explained by this variable, but only revealed which variable was the best predictor.

Initially, we compared the auditory outputs of two different text-to-speech software programs, Acapela (www.acapela-group.com/) and TTS (from Apple), on a few hundred words and nonwords selected randomly. We chose the software that according to five judges (L.F., B.N., S.D., E.S., and C.P.) produced the best quality.

Ernestus and Cutler (2015) also tested a spoken frequency measure (CGN, containing recordings of completely spontaneous casual speech), but the subtitle frequency also outperformed this spoken frequency measure. Note, however, that when lemma frequencies (instead of form frequencies) were considered, the book frequency measure outperformed the subtitle frequency measure, suggesting that this subtitle frequency measure (based on CELEX) better reflects participants’ knowledge of word lemmas. Interestingly, Ernestus and Cutler (2015, p. 1483) reported that “the subtitle and the spoken word form frequencies were better predictors than the corresponding lemma frequencies, suggesting that participants’ recognition of morphologically complex words was based on the word form themselves rather than their stems.”

Due to the analysis method they used, Ernestus and Cutler (2015) could not provide the percentage of unique variance explained by this variable, but could only reveal which variable is the best predictor. However, in an unpublished study available at the following web address (http://crr.ugent.be/members/marc-brysbaert#pu6), Yap and Brysbaert (2009) also reported low proportions of variance explained by frequency around 10%. These estimates were obtained by regression analyses based on the large-scale study of Luce and Pisoni (1998) involving auditory lexical decision times for 918 monosyllabic English words.

Because uniqueness point values were both highly correlated with lengths, we postpone the analysis including this variable to the following section, comparing effects across modalities and their interactions.

We thank an anonymous reviewer for this interesting suggestion.

References

Adelman, J. S., Marquis, S. J., Sabatos-DeVito, M. G., & Estes, Z. (2013). The unexplained nature of reading. Journal of Experimental Psychology: Learning, Memory, and Cognition, 39, 1037–1053. doi:10.1037/a0031829

Baayen, R. H., Feldman, L. F., & Schreuder, R. (2006). Morphological influences on the recognition of monosyllabic monomorphemic words. Journal of Memory and Language, 55, 290–313. doi:10.1016/j.jml.2006.03.008

Baayen, R. H., Piepenbrock, R., & Gulikers, L. (1995). The CELEX lexical database (Release 2, CD-ROM). Philadelphia: Linguistic Data Consortium, University of Pennsylvania.

Balota, D. A., Cortese, M. J., Sergent-Marshall, S. D., Spieler, D. H., & Yap, M. J. (2004). Visual word recognition for single syllable words. Journal of Experimental Psychology: General, 133, 283–316. doi:10.1037/0096-3445.133.2.283

Balota, D. A., Yap, M. J., Cortese, M. J., Hutchison, K. I., Kessler, B., Loftis, B., … & Treiman, R. (2007). The English Lexicon Project. Behavior Research Methods, 39, 445–459. doi:10.3758/BF03193014

Balota, D. A., Yap, M. J., Hutchison, K. A., & Cortese, M. J. (2012). Megastudies: What do millions (or so) of trials tell us about lexical processing? In J. S. Adelman (Ed.), Visual word recognition (Models and methods, orthography and phonology, Vol. 1, pp. 90–115). Hove: Psychology Press.

Bonin, P., Barry, C., Méot, A., & Chalard, M. (2004). The influence of age of acquisition in word reading and other tasks: A never ending story? Journal of Memory and Language, 50, 456–476. doi:10.1016/j.jml.2004.02.001

Bonin, P., Méot, A., Ferrand, L., & Bugaïska, A. (2015). Sensory experience ratings (SERs) for 1,659 French words: Relationships with other psycholinguistic variables and visual word recognition. Behavior Research Methods, 47, 813–825.

Brysbaert, M., & New, B. (2009). Moving beyond Kucera and Francis: A critical evaluation of present word frequency norms and the introduction of a new and improved word frequency measure for American English. Behavior Research Methods, 41, 977–990. doi:10.3758/BRM.41.4.977

Brysbaert, M., Stevens, M., Mandera, P., & Keuleers, E. (2016). The impact of word prevalence on lexical decision times: Evidence from the Dutch Lexicon Project 2. Journal of Experimental Psychology: Human Perception and Performance, 42, 441–458. doi:10.1037/xhp0000159

Brysbaert, M., Warriner, A. B., & Kuperman, V. (2014). Concreteness ratings for 40 thousand generally known English word lemmas. Behavior Research Methods, 46, 904–911. doi:10.3758/s13428-013-0403-5

Cleland, A. A., Gaskell, M. G., Quinlan, P. T., & Tamminen, J. (2006). Frequency effects in spoken and visual word recognition: Evidence from dual-task methodologies. Journal of Experimental Psychology: Human Perception and Performance, 32, 104–119.

Cleveland, W. S. (1981). LOWESS: A program for smoothing scatterplots by robust locally weighted regression. American Statistician, 35, 54.

Connine, C. M., Mullennix, J., Shernoff, E., & Yellen, J. (1990). Word familiarity and frequency in visual and auditory word recognition. Journal of Experimental Psychology: Learning, Memory, and Cognition, 16, 1084–1096. doi:10.1037/0278-7393.16.6.1084

Cortese, M. J., & Khanna, M. M. (2007). Age of acquisition predicts naming and lexical-decision performance above and beyond 22 other predictor variables: An analysis of 2,342 words. Quarterly Journal of Experimental Psychology, 60, 1072–1082. doi:10.1080/17470210701315467

Dufau, S., Duñabeita, J. A., Moret-Tatay, C., McGonigal, A., Peeters, D., Alario, F. X., … & Grainger, J. (2011). Smart phone, smart science: How the use of smartphones can revolutionize research in cognitive science. PLoS ONE, 6, e24974. doi:10.1371/journal.pone.0024974

Dufau, S., Grainger, J., Midgley, K. J., & Holcomb, P. J. (2015). A thousand words are worth a picture: Snapshots of printed-word processing in an event-related potential megastudy. Psychological Science, 26, 1887–1897.

Dufau, S., Grainger, J., & Ziegler, J. C. (2012). How to say “no” to a nonword: A leaky competing accumulator model of lexical decision. Journal of Experimental Psychology: Learning, Memory, and Cognition, 38, 1117–1128.

Ernestus, M., & Cutler, A. (2015). BALDEY: A database of auditory lexical decisions. Quarterly Journal of Experimental Psychology, 68, 1469–1488. doi:10.1080/17470218.2014.984730

Faust, M. E., Balota, D. A., Spieler, D. H., & Ferraro, F. R. (1999). Individual differences in information-processing rate and amount: Implications for group differences in response latency. Psychological Bulletin, 125, 777–799. doi:10.1037/0033-2909.125.6.777

Ferrand, L., Bonin, P., Méot, A., Augustinova, M., New, B., Pallier, C., & Brysbaert, M. (2008). Age-of-acquisition and subjective frequency estimates for all generally known monosyllabic French words and their relation with other psycholinguistic variables. Behavior Research Methods, 40, 1049–1054. doi:10.3758/BRM.40.4.1049

Ferrand, L., Brysbaert, M., Keuleers, E., New, B., Bonin, P., Méot, A., … & Pallier, C. (2011). Comparing word processing times in naming, lexical decision, and progressive demasking: Evidence from Chronolex. Frontiers in Psychology, 2, 306. doi:10.3389/fpsyg.2011.00306

Ferrand, L., Méot, A., Spinelli, E., New, B., Pallier, C., Bonin, P., … & Grainger, J. (2015, September). MEGALEX: A new mega-study of visual word recognition. Some preliminary data. Article presented at the 19th Meeting of the European Society for Cognitive Psychology, Paphos, Cyprus.

Ferrand, L., New, B., Brysbaert, M., Keuleers, E., Bonin, P., Méot, A., … & Pallier, C. (2010). The French Lexicon Project: Lexical decision data for 38,840 French words and 38,840 pseudowords. Behavior Research Methods, 42, 488–496. doi:10.3758/BRM.42.2.488

Forster, K. I. (2000). The potential for experimenter bias effects in word recognition experiments. Memory & Cognition, 28, 1109–1115. doi:10.3758/BF03211812

Gimenes, M., Brysbaert, M., & New, B. (2016). The processing of singular and plural nouns in English, French, and Dutch: New insights from megastudies. Canadian Journal of Experimental Psychology, 70, 316–324.

Gimenes, M., & New, B. (2016). Worldlex: Twitter and blog word frequencies for 66 languages. Behavior Research Methods, 48, 963–972.

Goh, W. D., Suárez, L., Yap, M. J., & Tan, S. H. (2009). Distributional analyses in auditory lexical decision: Neighborhood density and word-frequency effects. Psychonomic Bulletin & Review, 16, 882–887. doi:10.3758/PBR.16.5.882

Goh, W. D., Yap, M. J., Lau, M. C., Ng, M. M. R., & Tan, L.-C. (2016). Semantic richness effects in spoken word recognition: A lexical decision and semantic categorization megastudy. Frontiers in Psychology, 7, 976. doi:10.3389/fpsyg.2016.00976

Goldinger, S. D. (1996). Auditory lexical decision. Language and Cognitive Processes, 11, 559–567.

Goldinger, S. D., Luce, P. A., & Pisoni, D. B. (1989). Priming lexical neighbors of spoken words: Effects of competition and inhibition. Journal of Memory and Language, 28, 501–518.

Goodman, J. C., & Huttenlocher, J. (1988). Do we know how people identify spoken words? Journal of Memory and Language, 27, 684–698.

Grainger, J. (2017). Orthographic processing: A “mid-level” vision of reading. Quarterly Journal of Experimental Psychology. doi:10.1080/17470218.2017.1314515

Grainger, J., & Jacobs, A. M. (1996). Orthographic processing in visual word recognition: A multiple read-out model. Psychological Review, 103, 518–565. doi:10.1037/0033-295X.103.3.518

Izura, C., Wright, V. C., & Fouquet, N. (2014). Hemispheric asymmetries in word recognition as revealed by the orthographic uniqueness point effect. Frontiers in Psychology, 5, 244. doi:10.3389/fpsyg.2014.00244

Juhasz, B. J., & Yap, M. J. (2013). Sensory experience ratings for over 5,000 mono- and disyllabic words. Behavior Research Methods, 45, 160–168. doi:10.3758/s13428-012-0242-9

Juhasz, B., Yap, M. J., Dicke, J., Taylor, S. C., & Gullick, M. M. (2011). Tangible words are recognized faster: The grounding of meaning in sensory and perceptual systems. Quarterly Journal of Experimental Psychology, 64, 1683–1691. doi:10.1080/17470218.2011.605150

Keuleers, E., & Balota, D. (2015). Megastudies, crowdsourcing, and large datasets in psycholinguistics: An overview of recent developments. Quarterly Journal of Experimental Psychology, 68, 1457–1468.

Keuleers, E., Brysbaert, M., & New, B. (2010). SUBTLEX-NL: A new frequency measure for Dutch words based on film subtitles. Behavior Research Methods, 42, 643–650. doi:10.3758/BRM.42.3.643

Keuleers, E., Diependaele, K., & Brysbaert, M. (2010). Practice effects in large-scale visual word recognition studies: A lexical decision study on 14,000 Dutch mono- and disyllabic words and nonwords. Frontiers in Language Sciences, 1, 174. doi:10.3389/fpsyg.2010.00174

Keuleers, E., Lacey, P., Rastle, K., & Brysbaert, M. (2012). The British Lexicon Project: Lexical decision data for 28,730 monosyllabic and disyllabic English words. Behavior Research Methods, 44, 287–304. doi:10.3758/s13428-011-0118-4

Keuleers, E., Stevens, M., Mandera, P., & Brysbaert, M. (2015). Word knowledge in the crowd: Measuring vocabulary size and word prevalence in a massive online experiment. Quarterly Journal of Experimental Psychology, 68, 1665–1692.

Kuperman, V., Estes, Z., Brysbaert, M., & Warriner, A. B. (2014). Emotion and language: Valence and arousal affect word recognition. Journal of Experimental Psychology: General, 143, 1065–1081. doi:10.1037/a0035669

Kuperman, V., Stadthagen-Gonzalez, H., & Brysbaert, M. (2012). Age-of-acquisition ratings for 30 thousand English words. Behavior Research Methods, 44, 978–990. doi:10.3758/s13428-012-0210-4

Kuznetsova, A., Brockhoff, P. B., & Christensen, R. H. B. (2013). lmerTest: Tests in linear mixed effect models. Retrieved from https:/cran.r-project.org/web/packages/lmerTest/

Kwantes, P. J., & Mewhort, D. J. K. (1999). Evidence for sequential processing in visual word recognition. Journal of Experimental Psychology: Human Perception and Performance, 25, 376–381. doi:10.1037/0096-1523.25.2.376

Lamberts, K. (2005). Interpretation of orthographic uniqueness point effects in visual word recognition. Journal of Experimental Psychology: Human Perception and Performance, 31, 14–19.

Lindell, A. K., Nicholls, M. E. R., & Castles, A. E. (2003). The effect of orthographic uniqueness and deviation points on lexical decisions: Evidence from unilateral and bilateral-redundant presentations. Quarterly Journal of Experimental Psychology, 56, 287–307.

Luce, P. A., & Pisoni, D. B. (1998). Recognizing spoken words: The neighborhood activation model. Ear and Hearing, 19, 1–36.

Marlsen-Wilson, W. D. (1990). Activation, competition, and frequency in lexical access. In G. T. M. Altmann (Ed.), Cognitive models of speech processing (pp. 148–172). Cambridge: MIT Press.

Mathôt, S., Schreij, D., & Theeuwes, J. (2012). OpenSesame: An open-source, graphical experiment builder for the social sciences. Behavior Research Methods, 44, 314–324. doi:10.3758/s13428-011-0168-7

Miller, B., Juhasz, B. J., & Rayner, K. (2006). The orthographic uniqueness point and eye movements during reading. British Journal of Psychology, 97, 191–216.

New, B., Brysbaert, M., Véronis, J., & Pallier, C. (2007). The use of film subtitles to estimate word frequencies. Applied Psycholinguistics, 28, 661–677. doi:10.1017/S014271640707035X

New, B., Ferrand, L., Pallier, C., & Brysbaert, M. (2006). Reexamining the word length effect in visual word recognition: New evidence from the English Lexicon Project. Psychonomic Bulletin & Review, 13, 45–52. doi:10.3758/BF03193811

New, B., Pallier, C., Brysbaert, M., & Ferrand, L. (2004). Lexique 2: A new French lexical database. Behavior Research Methods, Instruments, & Computers, 36, 516–524. doi:10.3758/BF03195598

New, B., Pallier, C., Ferrand, L., & Matos, R. (2001). Une base de données lexicales du français contemporain sur internet: LEXIQUE. L’Année Psychologique, 101, 447–462. doi:10.3406/psy.2001.1341

Petrova, A., Gaskell, G., & Ferrand, L. (2011). Orthographic consistency and word-frequency effects in auditory word recognition: New evidence from lexical decision and rime detection. Frontiers in Psychology, 2, 263. doi:10.3389/fpsyg.2011.00263

R Development Core Team. (2016). R: A language and environment for statistical computing. Vienna. Retrieved from www.r-project.org

Radeau, M., & Morais, J. (1990). The uniqueness point effect in the shadowing of spoken words. Speech Communication, 9, 155–164. doi:10.1016/0167-6393(90)90068-K

Radeau, M., Morais, J., Mousty, P., Saerens, M., & Bertelson, P. (1992). A listener’s investigation of printed word processing. Journal of Experimental Psychology: Human Perception and Performance, 18, 861–871. doi:10.1037/0096-1523.18.3.861

Radeau, M., Morais, J., Mousty, P., & Bertelson, P. (2000). The effect of speaking rate on the role of the uniqueness point in spoken word recognition. Journal of Memory and Language, 42, 406–422.

Radeau, M., Mousty, P., & Bertelson, P. (1989). The effect of the uniqueness point in spoken-word recognition. Psychological Research, 51, 123–128.

Schröter, P., & Schroeder, S. (2017). The Developmental Lexicon Project: A behavioral database to investigate visual word recognition across the lifespan. Behavior Research Methods. doi:10.3758/s13428-016-0851-9

Segui, J. (1994). Language perception in visual and auditory modalities: Similarities and differences. In P. Eelen, G. d’Ydewalle, & P. Bertelson (Eds.), International perspectives on psychological science: II. The state of the art (pp. 119–134). Hove: Psychology Press.

Seidenberg, M. S., & Waters, G. S. (1989). Word recognition and naming: A mega study. Bulletin of the Psychonomic Society, 27, 489.

Shimizu, H. (2002). Measuring keyboard response delays by comparing keyboard and joystick inputs. Behavior Research Methods, Instruments, & Computers, 34, 250–256.

Spieler, D. H., & Balota, D. A. (1997). Bringing computational models of word naming down to the item level. Psychological Science, 8, 411–416. doi:10.1111/j.1467-9280.1997.tb00453.x

Suárez, L., Tan, S. H., Yap, M. J., & Goh, W. D. (2011). Observing neighborhood effects without neighbors. Psychonomic Bulletin & Review, 18, 605–611. doi:10.3758/s13423-011-0078-9

Taft, M., & Hambly, G. (1986). Exploring the cohort model of spoken word recognition. Cognition, 22, 259–282.

Treiman, R., Mullennix, J., Bijeljac-Babic, R., & Richmond-Welty, E. D. (1995). The special role of rimes in the description, use, and acquisition of English orthography. Journal of Experimental Psychology: General, 124, 107–136.

Tse, C.-S., Yap, M. J., Chan, Y.-L., Sze, W. P., Shaoul, C., & Lin, D. (2016). The Chinese Lexicon Project: A megastudy of lexical decision performance for 25,000+ traditional Chinese two-character compound words. Behavior Research Methods. doi:10.3758/s13428-016-0810-5

Vitevitch, M. S., & Luce, P. A. (1999). Probablistic phonotactics and neighborhood activation in spoken word recognition. Journal of Memory and Language, 40, 374–408.

Yap, M. J., & Balota, D. A. (2009). Visual word recognition of multisyllabic words. Journal of Memory and Language, 60, 502–529. doi:10.1016/j.jml.2009.02.001

Yap, M. J., & Brysbaert, M. (2009). Auditory lexical decision: On the relative weights of word frequency, neighborhood density, word length, and onset duration. Unpublished manuscript retrieved at crr.ugent.be/members/marc-brysbaert#pu6

Yap, M. J., Pexman, P. M., Wellsby, M., Hargreaves, J. S., & Huff, M. (2012). An abundance of riches: Cross-task comparisons of semantic richness effects in visual word recognition. Frontiers in Human Neuroscience, 6, 72. doi:10.3389/fnhum.2012.00072

Yap, M. J., Rickard Liow, S. J., Jalil, S. B., & Faizal, S. S. B. (2010). The Malay Lexicon Project: A database of lexical statistics for 9,592 words. Behavior Research Methods, 42, 992–1003. doi:10.3758/BRM.42.4.992

Yarkoni, T., Balota, D. A., & Yap, M. (2008). Moving beyond Coltheart’s N: A new measure of orthographic similarity. Psychonomic Bulletin & Review, 15, 971–979. doi:10.3758/PBR.15.5.971

Ziegler, J. C., Muneaux, M., & Grainger, J. (2003). Neighborhood effects in auditory word recognition: Phonological competition and orthographic facilitation. Journal of Memory and Language, 48, 779–793.

Author note

This work was supported by an ANR Grant no 12-CORP-0001 (Agence Nationale de la Recherche) to L.F. We thank Mickaël Berthon, Pierre Chausse, and Julien Guégan from the LAPSCO and CATECH (Université Clermont Auvergne, France) for their invaluable help at different stages of the project.

Author information

Authors and Affiliations

Corresponding author

Electronic supplementary material

Below is the link to the electronic supplementary material.

ESM 1

(DOC 27 kb)

Rights and permissions

About this article

Cite this article

Ferrand, L., Méot, A., Spinelli, E. et al. MEGALEX: A megastudy of visual and auditory word recognition. Behav Res 50, 1285–1307 (2018). https://doi.org/10.3758/s13428-017-0943-1

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13428-017-0943-1