Abstract

Our actions affect the behavior of other people in predictable ways. In the present article, we describe a theoretical framework for action control in social contexts that we call sociomotor action control. This framework addresses how human agents plan and initiate movements that trigger responses from other people, and we propose that humans represent and control such actions literally in terms of the body movements they consistently evoke from observers. We review evidence for this approach and discuss commonalities and differences to related fields such as joint action, intention understanding, imitation, and interpersonal power. The sociomotor framework highlights a range of open questions pertaining to how representations of other persons’ actions are linked to one’s own motor activity, how specifically they contribute to action initiation, and how they affect the way we perceive the actions of others.

Similar content being viewed by others

How can humans affect their environment? The short answer is: By their actions. Be it that we pick an apple from a branch, move furniture from one place to another, start the engine of a car, or unlock our smartphone—all these changes are brought about by coordinated movements of our body. We further do not affect our environment randomly most of the time, but rather we perceive ourselves and other people as acting in a goal-oriented manner. Hence, we select actions that change the environment in a way we had in mind prior to action execution (Prinz, 1997).

When thinking about how goal-oriented action comes into being, it seems likely that it is a matter of learning. Of course, in theory, humans may be born with a set of actions that perfectly serve their potential goals. But given the flexibility of human behavior, and the huge variety of possible goals, this is obviously unrealistic. Rather, humans have to learn from birth on which motor actions change their environment in which way (Watson, 1997). Only after such action–consequence knowledge has been acquired will it be possible to select those actions that produce intended consequences reliably (Kunde, Elsner, & Kiesel, 2007).

How goal-oriented action emerges from sensorimotor experience, the experience that motor actions change the environment in predictable ways, has been studied extensively in recent years. However, as we explain below, this research has barely considered that our actions change not only the inanimate environment but also the behavior of other people. For example, people tend to gaze to where we point, and they tend to smile back when we smile at them. The fact that people affect each other’s behavior predictably is pivotal for human cooperation and communication. It would thus be important to understand how such predictable responses of other people govern one’s own action control.

To understand this, we propose to extend a framework for goal-oriented action, the so-called ideomotor framework, to the social domain. Consequently, we start by a brief primer on ideomotor theory. Then we will discuss how this theory might be extended to social actions, and which peculiarities of social actions would have to be taken into account. The resulting framework describes an important future direction for psychological research, and we outline several specific questions that await rigorous empirical study.

Ideomotor action control

The roots of ideomotor theory can be traced back to philosophical accounts of voluntary actions that emerged during the 19th century (Harleß, 1861; Herbart, 1825; James, 1890; cf. Stock & Stock, 2004; Pfister & Janczyk, 2012). Their basic assumption is that organisms first acquire associations between certain motor actions and the sensory consequences that reliably follow these actions. After such action–effect associations have been acquired, they can be activated in a bidirectional manner. Hence, certain motor patterns activate images of subsequent effects (i.e., effect prediction), and more importantly, images of intended effects (hence goals) activate the motor patterns that, according to experience, produce the intended outcome (i.e., action selection). In fact, the radical assumption of ideomotor theory is that motor actions are mentally represented in terms of their sensory effects only, so that there is no other way to generate a certain motor pattern deliberately than by recollecting memories of its sensory consequences (cf. Shin, Proctor, & Capaldi, 2010, for a recent review).

The ideomotor approach has received considerable empirical support, both regarding the acquisition of bidirectional action-effect associations and the recollection of an action’s sensory consequences during action planning. The acquisition of bidirectional action-effect associations has been probed in experimental designs in which participants were confronted with novel action-effect mappings in a learning phase. For instance, a key-press action could trigger a specific effect tone, whereas another key press triggered a different effect tone. In a later test phase, these tones occurred as stimuli that required a response. Action-effect learning is assumed to have taken place, when participants respond more likely and more quickly with the response that had produced the now presented imperative stimulus during the learning phase (Elsner & Hommel, 2001; Hoffmann, Lenhard, Sebald, & Pfister, 2009; Hoffmann et al., 2007; Hommel, Alonso, & Fuentes, 2003; Pfister, Kiesel, & Hoffmann, 2011; Wolfensteller & Ruge, 2011, 2014).

By contrast, direct evidence for the recollection of an action’s sensory consequences during action planning stems from so-called anticipation effects. Observations of that kind show that features of predictable action consequences, though not yet perceptually available, do already affect the motor actions that will bring these consequences about. For example, it is easier to refrain from an initially prepared action and switch to a different action when both actions produce the same rather than different sensory consequences. Conceivably this is so because some of the effect anticipation needed to prepare the initial action can be used to generate the eventually required action (Kunde, Hoffmann, & Zellmann, 2002; see also Janczyk & Kunde, 2014).

A second example is the impact of action–effect compatibility (also called response–effect compatibility) on action planning: It is easier to generate motor actions that produce consequences—action effects—that are foreseeably similar rather than dissimilar to the action itself or, respectively, to the proprioceptive consequences of the movement. For example, it is easier, in terms of response time and accuracy, to press a key with the right hand if this keypress predictably changes a stimulus on the right rather than on the left side of the actor/observer (Ansorge, 2002; Kunde, 2001; Pfister & Kunde, 2013; Pfister, Janczyk, Gressmann, Fournier, & Kunde, 2014). Likewise, it is easier to push a button forcefully when this action results in a loud rather than in a soft tone (Paelecke & Kunde, 2007). Related phenomena have been shown in several different domains of motor control such as musical performance (Keller, Dalla Bella, & Koch, 2010; Keller & Koch, 2008), typing (Rieger, 2007), human–machine interaction (Chen & Proctor, 2013), bimanual coordination (Janczyk, Skirde, Weigelt, & Kunde, 2009), tool use (Kunde, Pfister, & Janczyk, 2012; Müsseler, Kunde, Gausepohl, & Heuer, 2008) or dual tasking (Janczyk, Pfister, Hommel, & Kunde, 2014). In all these studies, action effects occurred only after the action had been carried out. Hence, the action effects occurred too late to affect the response time or accuracy as an actual physical event. They could do so only because they were anticipated prior to action execution.

Altogether, there is considerable support for the basic assumptions of effect-based motor control as described by ideomotor theory. Despite this support, it seems fair to say that research on effect-based motor control has so far largely neglected the fact that humans are social beings: It has primarily dealt with the impact of consequences in the inanimate environment such as certain tones, lights, or tactile reafferences on the motor system of a socially isolated individual. But the perhaps most important component of a human’s environment are other people. Very often our actions have consequences for other humans, and sometimes they aim at influencing these others. We will discuss in the next section whether and how action control can incorporate such social action effects.

Sociomotor action control

To affect other people means that our muscle contractions affect another person’s muscle contractions (Wolpert, Doya, & Kawato, 2003). There are situations in which this idea immediately gains credibility, such as when humans interact bodily with each other, as in dancing, shaking hands, kissing, or lifting a heavy object. But humans change other persons’ behavior also in a more distal manner. A crying baby, for example, changes the mother’s behavior quite consistently. The mother will usually interrupt current activities to pick up her child. Somewhat later in life, the child’s opening of the mouth might often be followed by the delivery of food. Likewise the child’s nonverbal request for a certain object by opening its hand might cause the passing of the desired object by another caretaking person. Even considerable parts of verbal communication can be construed as the attempt to influence other person’s behavior and the change of one’s own behavior as a function of such attempts from others. Coordinating the muscles of the vocal tract in order to utter the words “Would you like to go to the cinema with me tonight?” is mostly done to provoke the auditory feedback “yes” from a partner and to sit side by side in the cinema, eventually. It may be correct that part of “true” communication is not (only) to provoke a certain behavior but to induce a certain mental state in another person (Bühler, 1934; Stolk, Verhagen, & Toni, 2016). Still, all we can observe, and all we have at our disposal to infer such states, is the behavior of that person. So we think it is fair to start with the problem of controlling the behavior of other persons and to set aside the question whether or not this behavior correlates with certain mental states.

In many situations, humans try to affect each other’s behavior intentionally—for example, when a person tries to direct another person’s head or body orientation toward an interesting object by either pointing (Herbort & Kunde, 2016a, b) or gazing toward that object (Bayliss et al., 2013; Edwards, Stephenson, Dalmaso, & Bayliss, 2015). But even without such intentions, other people respond consistently to our actions. For example, suddenly looking at an object almost automatically prompts other people to stare at that object as well (Frischen, Bayliss, & Tipper, 2007). So, whether we want it or not, we do affect other persons behavior quite consistently, and there is a chance that human learning mechanisms can pick up such regularities.

In the present manuscript, we describe a theoretical framework and review corresponding evidence for what we call sociomotor action control. This framework is concerned with the cognitive processes that enable agents to incorporate the changes they evoke in others into their own motor control. In extending the traditional ideomotor framework, it assumes that agents can represent their body movements directly by the observable responses they consistently evoke from other humans. These intersubjective action–response links are the basis for, and become apparent through, the generation of own body movements, thus the term sociomotor.

The sociomotor approach is distinct to most research in social cognition and social neuroscience with its focus on the actor in a social context and the mechanisms that bring his or her actions about (for an exception, see Wolpert et al., 2003). There is, of course, a large body of evidence showing that human actions exert specific influences on human observers. We know, for example, that human motion is interpreted in specific ways (Hudson, Nicholson, Ellis, & Bach 2016; Shiffrar & Freyd, 1990). Also, humans attribute mental states and intentions to other people by means of subtle movement cues (Becchio, Manera, Sartori, Cavallo, & Castiello, 2012; Blakemore, Winston, & Frith, 2004; Herbort, Koning, van Uem, & Meulenbroek, 2012; Scholl & Tremoulet, 2000), and tend to imitate these actions spontaneously (Heyes, 2011). However, most of this research considers actions of other people mostly as stimuli and construes humans as passive observers of, or at best responders to, that stimulation. In a standard social situation, as depicted in Fig. 1, previous research thus focused on the responder. In contrast, the sociomotor framework intends to bring to the forefront the actor in such situations.

Social action effects

What exactly does it mean to produce a “social” action effect? As noted before, everything we evoke in other people by our actions comes across as perceptual events, be it that we see, hear, or feel what another person does. Hence, there is no a priori difference between action effects of an animate or an inanimate origin in basic perception. What differs, though, is their interpretation: A social action effect is a reliably produced perceptual change that is assumed to originate from another intentional agent. Certain perceptual changes are readily interpreted as produced by an intentional agent (simply) because their physical appearance is highly correlated with an origin from a human agent. This is true for movements that comply with the principles of biological motion (Johansson, 1973; Lacquaniti, Terzuolo, & Viviani, 1983; Pavlova, 2012). However, even the same physical event can be either construed as a social action effect or not depending on top-down factors. For example, an observed button press might be perceived as being performed by an intentional agent or by a nonintentional machine depending on instructions, experience, and expectations (Cross, Ramsey, Liepelt, Prinz, & Hamilton, 2015; Stenzel et al. 2012). Such an interpretation does not necessarily pop up in an all or none manner but occurs gradually (Pfeiffer, Timmermans, Bente, Vogeley, & Schilbach, 2011). Hence, whether or not a certain action consequence is construed as a social effect is determined jointly by bottom-up and top-down factors.

A useful test bed to study the interaction of bottom-up and top-down factors are robots, who can look and move in a more-or-less humanoid manner, while prior knowledge can suggest, more or less, that such robots behave in an either intentional or preprogrammed manner (Sciutti, Ansuini, Becchio, & Sandini, 2015). While there is some research regarding the impact of these factors to imitate and corepresent robot actions (e.g., Klapper, Ramsey, Wigboldus, & Cross, 2014), little is known about how these factors shape the way people manipulate robots’ behavior, which is the focus of the sociomotor approach. To study this is not just academic practice. Manipulation of human-like robots by, for example, gestures will be of increasing relevance in the technical and health-care sector (Breuer et al., 2012).

Our first assumption is that similar ideomotor processes that are involved to reach certain effects in the inanimate environment are employed as well when it comes to reach social effects. This is the null hypotheses dictated by the principle of parsimony and would be expected on the basis of recent reformulations of ideomotor theory (Hommel, 2009; Hommel, Müsseler, Aschersleben, & Prinz, 2001). More precisely, we assume that motor actions in social contexts become linked to the social consequences that these motor actions consistently produce. This motor-perceptual learning, in turn, enables the intentional production of social effects. To do so, codes of currently intended social effects (i.e., goals) have to be activated that then reactivate the motor patterns to which these codes were linked before. Please also note that actors have considerable degrees of freedom which of the (usually) multiple consequences of motor output they use to retrieve their actions (Memelink & Hommel, 2013). For example, one and the same motor output might produce various tactile, visual, and auditory reafferences at the same time, and current intentions determine which of these multiple reafferences eventually govern action production (e.g., Kunde & Weigelt, 2005). However, we propose that social effects in principle can acquire this role as well.

The assumption that social consequences are by and large presented just like nonsocial consequences is not only suggested by the principle of parsimony, it also likely describes the starting situation of a newborn. Toddlers might be rather sensitive to biological motion and faces, but otherwise it seems plausible that they do not make fundamental distinctions between social and nonsocial aspects of their environment. However, the concept of something being “different” from the inanimate environment might turn out as necessary to predict that certain “objects” (i.e., social partners) in the environment respond less consistently to the toddlers actions. Hence, the concept of other human’s agency might develop from attempts to jointly act with others (cf. Wolpert et al., 2003).

Once such a distinction has been established, it is likely that the processes used to reach goals in the social environment are tuned to specific conditions, as described below (section Peculiarities of Social Action Effects). In fact, this second assumption raises the key question of the sociomotor framework: What are the peculiarities of action control aiming at affecting other people? In the following, we present available evidence for the first assumption—that is, effect-based action control can exploit social action effects in principle—and continue with discussing the peculiarities of effect-based action control in social contexts that await empirical testing.

Available evidence

First studies are beginning to address effect-based action control in a social context. These studies targeted the acquisition of bidirectional action-effect associations for social action effects, the anticipation of such action effects during action planning and initiation as well as the perception of anticipated action effects.

Acquisition of social action effects

The first problem for a sociomotor control mechanism to solve is to associate own body movements with consistently evoked responses of social agents. Such learning has been demonstrated in a number of studies, by using the learning-then-test protocol described in the Ideomotor Action Control section. That is, subjects first experienced that their own actions produce certain responses of (virtual) others. In the test phase, it is then checked whether exposition to these other responses results in faster or more likely execution of those movements that had produced these responses in the preceding learning phase. For example, in the study by Herwig and Horstmann (2011, see Fig. 2), participants first learned that their own eye movements to the left or right caused a face at the target location to either smile or frown. In a later test phase, participants were to perform left or right eye movements in response to a smiling or frowning face, and they were split into a compatible group and an incompatible group. In the compatible group, the effect-response mapping of the test phase was the same as the response-effect mapping in the preceding acquisition phase, whereas it was reversed for the incompatible group. Different saccade latencies between both groups would suggest that the responses of the virtual face did indeed become associated to the producing eye movements, and this is indeed what Herwig and Horstmann observed (for further qualifications, see Fig. 2). The acquisition of similar action–action links, such as links between key presses and facial gestures (Sato & Itakura, 2013) or hand movements and movements of different fingers have been observed as well (Bunlon, Marshall, Quandt, & Bouquet, 2015). These studies provide a proof of principle that social action effects can indeed be associated to motor actions as predicted by the sociomotor approach.

Experimental design to investigate sociomotor learning between own actions (saccadic eye movements) and social action effects (facial expression). In an acquisition phase, participants performed left or right eye movements, and they either decided freely between both options (a) or were prompted which action to perform (b). Each movement consistently made a virtual face smile or frown. In the test phase, left or right movements were prompted by smiling or frowning faces, and the effect-action mapping was either the same as in the acquisition phase (compatible group) or inverted (incompatible group). When participants freely decided between both actions in the acquisition phase, saccadic latencies were lower in the compatible group than in the incompatible group, indicating that social action effects such as facial expressions can become linked bidirectionally to own actions. Reproduced, with permission, from “Action–Effect Associations Revealed by Eye Movements,” by A. Herwig and G. Horstmann, 2011, Psychonomic Bulletin & Review, 18, p. 533.

The traditional ideomotor approach assumes that actions and their sensory effects are bound together by experience of repeated co-occurrence of one’s own action and effect. However, the presence of other people enables novel forms of action-effect learning on top of one’s own experience. One form is observational learning (Paulus, 2014; Paulus et al., 2011). The observed movements of another person match the body-related effects of our own body movements. For example, when we see another person moving her hand, this corresponds quite closely to what we see when we move our own hand. So perceiving another person moving activates codes of body-related effects of own movements, which in turn prime these movements. Perceiving another person producing additional effects in the environment by performing such movements, such as a rattle sound by shaking the rattle, creates concurrent activation of two effect codes: body-related codes of the observed limb movement, which are already linked to one’s own motor codes, and codes of perceived effects on the environment, such as the sound of the rattle. Environment-related codes become linked to body-related codes and their associated motor codes, which is indicated by higher activity of motor brain areas when perceiving those sensory events that were previously produced by another person. For example, a baby who had observed that a certain rattle sound is produced by certain hand movements of a caregiver shows more motor activation when hearing that rattle sound than a sound that was not an effect of a previously observed action (Paulus et al., 2013). Hence, regarding action-effect learning observing a person who produces certain effects can to some extent replace own production of such effects.

Another peculiar form of action-effect learning in a social context is learning by perspective taking. While observational action-effect learning replaces one’s own motor actions by those of another person, learning by perspective taking replaces one’s own perception by the imagination of another person’s perception (cf. Pfister, Pfeuffer, & Kunde, 2014). The procedure works as follows: A person is familiarized with certain perceptual events such as tones. Then the actor has explained that he or she will produce these events for another observer and is asked to take over the observer’s perspective, without actually perceiving the effects herself anymore (such as playing a musical instrument for an audience while wearing sound blockers). These effects, produced for another person, impact action production much like effects produced for oneself. Besides learning of action-effect associations, perspective taking also influences action execution. For instance, when cooperating with a partner, actors adjust their actions in order to make them more predicable (Vesper, Schmitz, Safra, Sebanz, & Knoblich, 2016). This topic, however, exceeds the scope of this review.

Learning by observation and perspective taking are two forms of “social” action-effect learning in the sense that they are driven by the believed or actual presence of another agent. However, it is not clear, though perfectly possible, that they mediate the acquisition of social effects as well. Thus, humans might learn how to affect other persons by observing other people doing so, or by imagining to affect other persons.

To sum up, there is evidence that intersubjective action-effect learning occurs. It is possible, though, that such learning is tuned towards conditions that are specific to social effects (see section Peculiarities of Social Action Effects).

Anticipation of social action effects

Several recent studies have provided evidence for a potential role of anticipated social action effects during action planning and initiation. For instance, participants were trained that a handshake-like action (moving a joystick with the inner side of the left or right hand) produced either social effects (pictures of handshaking hands) or nonsocial effects (arrow stimuli; Flach, Press, Badets, & Heyes 2010). The joystick actions produced these stimuli foreseeably either on the same side or on the opposite side of the action (positional compatibility). Moreover the stimuli pointed either toward or away from the response hand (directional compatibility). While it took generally longer to produce stimuli at positions incompatible to the joystick action, irrespective of whether this was a hand or an arrow, the directional compatibility affected only the production of hands. These results reveal that codes of social action effects (pictures of hands) play a role in the generation of manual actions. However, additional features of hand effects become anticipated compared to conceptually similar nonsocial effects. It remains to be tested whether this coding of specific features of social effects was due to the effect stimuli as such, or to the high sociomotor compatibility, that resulted from the use of gestures as both, motor actions and visual action effects (see section Sociomotor, Imitative, and Interpersonal Similarity for a discussion of compatibility and interpersonal similarity).

A related study extended this research to facial expressions as actions and action effects (Kunde, Lozo, & Neumann, 2011). Participants had a harder time producing a smiling face on a screen by contraction of their corrugator supercilii (a muscle predominantly involved in frowning) than by contraction of the zygomaticus major (involved in smiling), whereas it was harder to produce a frowning face by contraction of the zygomaticus rather than by contraction of the corrugator. When upside-down faces were produced (which are not processed by special face perception mechanisms), no differences were found. The participants produced visual feedback from faces of other persons, not their own faces, indicating that the control of facial muscles is sensitive to the feedback which these muscle contractions produce at others. It might be possible, though, that humans also anticipate the visual feedback of their own facial expressions. However, humans have less experience with visual feedback of their own facial expressions as this is limited to evolutionarily rather recent innovations of mankind, such as mirrors or video feedback.

Anticipation of social action effects was also demonstrated with eye movements. Herwig and Horstmann (2011) had participants gaze toward face stimuli presented either to their left or right. After completion of the saccade, the initially neutral faces began to smile or frown, depending on the direction of the eye movement. Remarkably, the eyes landed already where the most likely change of the facial expression would occur, namely, at the eyebrows with an expected frowning, and at the mouth region with an expected smile. Hence, eye movements preferentially targeted the location of the anticipated change of facial expression, which actually occurred only after the eye movement was completed.

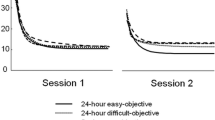

In a similar vein, anticipated responses of real interaction partners (as opposed to virtual avatars) have been shown to bias action planning and initiation (Pfister, Dignath, Hommel, & Kunde 2013). Participants in this study performed in an imitation setup with one participant taking the role of an action model and one participant taking the role of an imitator (see Fig. 3). The imitator either consistently imitated or counterimitated the model or responded randomly. Not surprisingly, the imitator was faster to imitate rather than to counterimitate the model, which is a well-documented finding in imitation research (for a review, see Heyes, 2011). More importantly, consistently being imitated also facilitated the action production of the model as compared to both, being counterimitated or being responded to randomly.

Experimental design to investigate the anticipation of social action effects during action planning and initiation. a An action model performed a short or long key press to which the imitator responded. In different blocks, the imitator either consistently imitated the model action, consistently counterimitated, or performed a random action. b Mean reaction times (RTs) and corresponding standard errors of paired differences for model and imitator actions. Following common findings on imitation research (Brass, Bekkering, & Prinz, 2001), the imitator was faster in the imitation condition than in the counterimitation condition. Importantly, this also held true for the model, even though the imitator response only occurred after the model had carried out his or her action. Error bars show standard errors of paired differences. Reproduced, with permission, from “It Takes Two to Imitate Anticipation and Imitation in Social Interaction,” by R. Pfister, D. Dignath, B. Hommel, and W. Kunde, 2013, Psychological Science, 24, p. 2118.

Follow-up research has revealed that there are actually two components to the “being imitated” advantage for the model. One component is that the identity of the imitator’s response is the same as the model’s response (Pfister, Weller, Dignath, & Kunde, 2017). The second component is the time point of the imitator’s response—hence, the fact that responses follow quickly because the imitator generates the imitative response more quickly than the counterimitative response (Lelonkiewicz & Gambi, 2016). Altogether, imitation does not happen just at the side of the responder; foreseeably being imitated helps the model to produce his or her actions as well (for a similar finding, see Müller, 2016).

This observation might have quite practical implications. Imitation of caregivers is a very beneficial means in child learning of motor skills (more beneficial than “a thousand words” so to say). The results of Pfister et al. (2013) suggest that acting toward an imitating child also helps the caregiver to generate the motor pattern he or she intends to demonstrate in the first place. Conversely, consistently imitating a child not only provides an early means of communication (Nagy, 2006; Nagy & Molnar, 2004) but it also helps the child to plan and execute these very movements. Hence, imitation is a win-win situation as it were, both for the imitator and the person being imitated.

Altogether, there is thus considerable evidence that social effects play a role in the generation of motor actions responded by real and virtual others. So far there are but few hints for peculiarities of social as compared to nonsocial action effects for effect-based action control, such as reliance on eye contact with the carrier of social effects (Sato & Itakura, 2013) and a more complex form of effect-based motor priming (Flach et al, 2010). Yet a systematic comparison still needs to be conducted.

Perception of social action effects

Ideomotor theory is primarily concerned with the mental processes that are involved in generating motor actions. Therefore, it traditionally focusses on the processes that precede overt movement execution and does not directly speak to processes that follow movement initiation. However, the notion of bidirectional action-effect associations suggests not only that action control should be influenced by effect representations (as indicated by the phenomena described above) but also allows for further interactions of actions and effects. For example, once effect codes have been recollected for the purpose of action generation, it seems likely that they remain in an active state beyond action initiation, and thereby influence the perception of events that match these codes. A commonly observed phenomenon suggested by such perpetuated effect codes is that self-produced events are perceived in an attenuated manner, such as the well-documented inability to tickle oneself (Weiskrantz, Elliott, & Darlington, 1971; see also Blakemore, Wolpert, & Frith, 2000). Similar findings of sensory attenuation have been reported for other types of action effects in the agent’s environment, such as self-produced sounds or changes of visual input (Baess, Widmann, Roye, Schröger, & Jacobsen, 2009; Weiss, Herwig, & Schütz-Bosbach, 2011). The same holds true when effects are presented already during action planning (i.e., while the agent is likely to maintain a representation of the upcoming effects). Typically, stimuli that match the subsequent action in certain respects such as spatial orientation are harder to perceive than stimuli that do not (so called action-induced blindness; Kunde & Wühr, 2004; Müsseler & Hommel, 1997; Müsseler, Wühr, Danielmeier, & Zysset, 2005; Pfister, Heinemann, Kiesel, Thomaschke, & Janczyk, 2012).

A related sensory distortion of self-produced action effects is intentional binding (Haggard, Clark, & Kalogeras, 2002; Moore & Obhi, 2012). Intentional binding describes the observation that self-produced events are perceived to be in closer temporal proximity to the action that caused this event than events that are not self-produced. A similar bias occurs in the spatial domain such that self-produced events are perceived in closer spatial proximity to the causing action than physically similar events that are not self-produced (Buehner & Humphreys, 2009; Kirsch, Pfister, & Kunde, 2016).

First, evidence suggests that predicted social action effects might also be prone to distortions of time perception (Pfister, Obhi, Rieger, & Wenke, 2014). In this study, two participants acted in a simple leader–follower sequence (Fig. 4). The participants operated one key each, and the leader started each trial by pressing his or her key. The leader’s key press triggered an effect tone after a variable delay and this tone served as go signal for the follower. In each trial, either the leader or the follower estimated either the time from leader onset to the corresponding tone, or the time from tone onset to the follower’s response. A comparison of the interval estimates from both roles revealed a subjective compression of both intervals in the leader role relative to the follower role. These results are a first indication that intentional binding might also bias the temporal perception of social consequences of one’s own actions. Similar biases in the spatial domain might exist as well, such that we tend to perceive the movements of other people shifted toward an object we wanted that person to pick up (Hudson, Nicholson, Simpson, Ellis, & Bach, 2016).

Experimental setup to investigate intentional binding for social action effects. A leader and a follower sat next to each other and operated one key each. The leader initiated each trial by pressing his or her key. Two effects followed after a variable duration: First, a tone was played back and, second, the follower performed his or her key press in response to the tone (i.e., ultimately contingent on the leader’s key press). After each trial, we obtained interval estimates from either the leader or the follower and found the leader to consistently underestimate the duration of both intervals relative to the follower (L-ATI = leader’s action-tone interval; F-TAI = follower’s tone-action interval). Error bars indicate standard errors of paired differences. Reproduced from “Action and Perception in Social Contexts: Intentional Binding for Social Action Effects,” by R. Pfister, S. Obhi, M. Rieger, and D. Wenke, 2014, Frontiers in Human Neuroscience, 8, 667, doi:10.3389/fnhum.2014.00667

To sum up, the present evidence suggests that predictable responses of other people to our actions are subject to similar perceptual distortions as predictable consequences of motor actions in general. Future research should explore possible consequences that such distortions of predicted responses of others might have. Consider the case of perceptual attenuation as a potential cause of force escalation. Self-generated, and thus predicted, forces exerted at another person are perceived as weaker than externally generated forces of the same magnitude. This causes force escalation when two persons are asked to touch each other as forcefully as the other person did (Shergill, Bays, Frith, & Wolpert, 2003). Possibly the same mechanism applies to more distal than tactile consequences, such as emotional reactions of a social partner that an agent intends to bring about. Such emotional reactions might be conveyed by facial expressions that the agent, in turn, might perceive in an attenuated way.

Peculiarities of social action effects

The previous sections discussed evidence for the idea that the traditional ideomotor approach can basically be expanded to encompass social action effects as well. We now turn to potential peculiarities of such social effects. It is almost trivial to say that conspecifics are a special part of the environment of every social creature, let alone humans. But it is less trivial to say in which specific ways this is true when we describe how human motor actions affect other humans. The list of peculiarities we discuss here is probably not complete, but it appears to us as a reasonable starting point for future research. Each of these peculiarities may or may not show up in each of the just described processes, hence the acquisition, anticipation, and perception of social action effects.

Input–output modalities

It has long been known that specialized routines and neural substrates come into play when we encounter other humans such as when perceiving their faces (Farah, Wilson, Drain, & Tanaka, 1998), motions (Johansson, 1973; Thompson, Clarke, Stewart, & Puce, 2005), or speech (Poeppel & Monahan, 2008). When we learn to change other persons’ behavior, our own motor actions have to be linked to action consequences that are processed by exactly these routines. At present, it is not clear whether own motor patterns are equally well, or perhaps even better, linked to and retrieved by consequences processed by such distinct routines as compared to consequences processed by routines for nonsocial stimuli.

What applies to the input (i.e., reafferent) side of action control might apply to the output (i.e., efferent) side as well, hence the motor repertoire used to affect other people. It is true that many of our 600 muscles or so serve to affect the inanimate as well as the social environment, such as the muscles of the arms, which can be used for both picking up objects and gesturing. Yet some effector systems seem to be more or less reserved to affect other peoples’ behavior. For example, our facial muscles are of no practical use when it comes to manipulating the inanimate environment, but they are quite useful to affect social partners, be it to make another person smile by smiling toward that person, or to avoid a person approaching us by looking grimly. Also, our eyes are not very helpful to manipulate the physical world, but we can use them to guide another persons’ attention toward relevant objects (Frischen et al., 2007; Herwig & Horstmann, 2011) or even to mislead opponents, such as in fake actions in sports (Kunde, Skirde, & Weigelt, 2011). Again, the question arises whether these specialized effector systems are perhaps more readily available when it comes to control social rather than nonsocial action consequences. This is not self-evident. In fact, one could argue that eye and face muscles are less available for effect-oriented actions since they normally serve other purposes, such as visual input selection or spontaneous display of affect, which happens even when no other people can be affected (Ekman & Friesen, 1971; Neumann, Lozo, & Kunde, 2014). A decision between these theoretical positions requires further empirical data.

Contiguity and contingency

When viewed from the perspective of associative learning, social action effects carry two problematic features that an action-effect learning mechanism has to cope with. First, social effects do not result mechanically from one’s own actions but are brought about by another agent. This circumstance renders social action effects likely to occur with reduced temporal contiguity compared to many changes in the inanimate environment. For example, when we knock on a table, the auditory effect from doing so occurs instantaneously. But when we smile at another person, it takes some time for him or her to process our smile and react accordingly. High temporal contiguity is favorable for associative learning in general and to the acquisition of links between motor patterns and nonsocial effects as well (Elsner & Hommel, 2004; but see Dignath, Pfister, Eder, Kiesel, & Kunde, 2014). It is an open question whether longer action-effect intervals are more effective to link motor patterns to social effects as compared to nonsocial effects. To study this question it would be ideal to use the same action effects that are, or are not, interpreted as originating from a social agent. As a working hypothesis, the ideal interval for such learning lies in the range of the average time it takes for human observers to respond to actions of another agent.

Social effects also tend to be less contingent on our own actions compared to most sensory action effects in the inanimate world. When knocking on a table, the table almost always “responds” by a typical knocking sound. Smiling at someone else, however, might produce some kind of positive feedback, but this can come as an expression of friendliness other than smiling, and it happens that the person does not respond at all. Hence, stronger generalization across different types of individual effect exemplars might occur when these effects are interpreted as social rather than nonsocial effects (for a related discussion, see Schilbach, 2014).

Not only might the level of contingency affect the acquisition of links between actions and social consequences, but there is evidence that the level of contingency of social action effects is represented itself as a relevant source of information. Children at the age of 5 months are more responsive to adults who respond to the baby’s actions by the same (familiar) level of contingency as their mothers. Thus, they are less responsive to strangers who respond more contingently to them than their mothers do (Bigelow, 1998). This sensitivity for different levels of contingency of social feedback develops between one and three month (Striano, Henning, & Stahl, 2005). It is an open question for future research whether different contingency levels of nonsocial action effects are represented in a similar fashion, and whether this representation has a different developmental trajectory than contingency representations of social effects.

In addition to reduced contiguity and contingency, social events further have been suggested to affect perception and judgments of causality (Kanizsa & Vicario, 1968, as cited in Schlottmann, Ray, Mitchell, & Demetriou, 2006). Such differences between social and nonsocial events may not only affect action-effect learning and anticipation but might also affect subjective feelings of agency over social action effects, even when compared to physical effects of similar contiguity and contingency (Nowak & Biocca, 2003).

Sociomotor, imitative, and interpersonal similarity

Another specialty in sociomotor action control derives from the fact that both, the producer of, and responder to, certain motor actions are humans and thus potentially possess high degrees of similarity. Similarity can relate to the bodily actions directly involved in an interaction, or to properties of the producers of these actions.

With respect to the bodily actions involved two levels of similarity can reasonably be identified, namely sociomotor similarity and imitative similarity. Sociomotor similarity denotes the overlap of the set of actions and social action effects (akin to set-level compatibility in S-R compatibility research; Kornblum, Hasbroucq, & Osman, 1990). For example, when contractions of facial muscle cause facial feedback of a partner, sociomotor similarity is high, whereas it is low(er) when own contractions of facial muscles trigger manual gestures of a partner. Interestingly most studies on social action effect learning employed conditions with relatively low sociomotor similarity, such as combinations of manual-facial (Sato & Itakura, 2013), manual-pedal (Wiggett, Hudson, Tipper, & Downing, 2011), and ocular-facial actions (Herwig & Horstmann, 2011). It is important to show that one’s own actions can be linked to quite different motor responses of another person, since this is possibly the rule rather than the exception in many joint action settings. For example, joint action scenarios often require complementary actions, such as opening a bag that a partner fills with the shopping or reaching a cup that a partner fills with tea. A systematic comparison of action-response learning with low versus high levels of sociomotor similarity is currently lacking.

Given a sufficient degree of sociomotor similarity, different degrees of imitative similarity come into play, which relates to the mapping of individual actions to individual effects (akin to element-level compatibility à la Kornblum et al., 1990). If, for example, one’s own smiling consistently produces a smile of a partner and frowning produces frowning, imitative similarity is high, whereas it is low(er) when smiling produces frowning and frowning produces smiling. Again, existing research is inconclusive regarding the impact of this factor in action-response learning. Consider the observation that repeated experience of social action effects with low rather than high imitative compatibility reduces later tendencies to imitate actions (Bunlon et al., 2015). This observation might reflect the strengthening of natural (i.e., preexperimentally established) action-response links due to exposure of action-resembling effects, or the acquisition of new links between mutually dissimilar actions due to exposure of action-dissimilar effects, or both. Hence, new designs are needed to reveal whether imitative responses are more easily acquired than nonimitative responses.

Similarity of two people is of course not only determined by the specific actions used to mutually affect each other. It can apply to essentially every feature that describes a human being. We know already that interpersonal factors in general determine the degree to which other agents’ actions are represented in one’s own cognitive system. For example, we tend to represent a person’s task when the relationship to the person is good but not when it is bad (Hommel, Colzato, & van den Wildenberg, 2009). We assume that also linking one’s own actions to responses of another person is constrained by factors that are specific to the persons conveying these social effects. Evidence for a rather superficial but important aspect of interpersonal similarity has been reported by Sato and Itakura (2013). While they observed that humans link simple manual actions to arbitrary facial gestures of a virtual partner, this acquisition did not occur when the partner did not look back (i.e., if there was no rapport between both agents). Though preliminary, this observation suggests that the acquisition of social effects is tuned toward social agents who are in contact with the observer or, conversely, blocked for agents who are not in touch with the actor (Csibra & Gergely, 2009; Sato & Itakura, 2013).

Related fields

We have argued that the problem of action control with social effects can be construed as an extension of action control with inanimate effects. We have identified potential constraints that come with social effects and reviewed initial evidence for the validity of such constraints, with the bulk of research waiting to be done. The sociomotor framework seems to us as being unique with respect to its scope and its experimental approach. Still, it has relationships to other fields in psychology and social neuroscience, most notably to joint action, intention understanding, imitation, and interpersonal power.

Relations to joint action

Joint action is a summary term for research that aims to understand how actions of two or more actors are coordinated to achieve a common goal (Sebanz, Bekkering, & Knoblich, 2006; Sebanz, Knoblich, & Prinz, 2003). So whereas the sociomotor approach focusses on situations where another person’s behavior is the goal, joint action is more concerned with situations in which two or more actors are supposed to have a common goal in mind.

Of course, we may affect another person’s behavior in service of a common goal, when, for example, one partner of a music duet wants the other one to slow down a bit, to maintain a common rhythm. It is thus fair to say that are several more or less closely related research topics under the heading of joint action, such as temporal coordination of two person’s actions, prediction of other persons’ actions, and the mental corepresentation of another person’s task (cf. Knoblich, Butterfill, & Sebanz, 2011). Consider the case of prediction of other person’s actions, which is considered to be a key ingredient to joint action (Vesper, Butterfill, Knoblich, & Sebanz, 2010). Such prediction is inevitable to affect another persons’ action as well. In fact, we not only predict what other people will do, rather we select our actions according to the intended, and thus predicted, responses of other people.

Sociomotor action control can therefore be construed as part of joint action, to the extent that it implies situations in which the action of one partner systematically affects the actions of another partner. Surprisingly this specific aspect is missing in many experimental paradigms used to study joint action, because many paradigms addressed the mental corepresentation of another agent’s task without direct interaction between the two agents. For example, in the joint Simon task, two participants sit next to each other and operate a response key each (Sebanz et al. 2003). They both attend the same monitor and react to imperative stimuli according to a prespecified rule (e.g., if a red stimulus appears, the left person presses his or her key, and when a green stimulus appears, then the right person is to press his or her key). The stimuli can appear either on the left or the right side of the screen (i.e., either congruently or incongruently to the correct response). In this setting, a reliable congruency effect emerges only if two actors are present (joint go/no-go task) but not if only one actor works on his part of the task (individual go/no-go task). This setting is a prime scenario for research on joint action because it allows addressing how one agent’s task set becomes corepresented by the other agent (cf. Dolk et al., 2014; Wenke et al., 2011). What such paradigms do not address, however, is whether and how action generation of one partner is affected by predictable actions of the other agent, which is the focus of the sociomotor approach put forward here. Neither joint action nor the sociomotor approach are confined to cases in which the action of two or more people are similar to each other, thus sociomotor compatible cases. In fact joint action in everyday live probably contains more cases of low that high sociomotor compatibility.

Relations to intention understanding

Human observers are tuned to encode the observed actions of other people, specifically if these people communicate (Manera, Becchio, Schoutne, Bara, & Verfaille, 2011; Neri, Luu, & Levi, 2006). We can, for example, infer the intention of social partners already from subtle movement cues. Most studies in this field have focused on the observers’ capability to use specific differences in perceived kinematics to guess the actors intentions (Abernethy & Zawi, 2007; Sartori, Becchio, & Castiello, 2011; Sebanz & Shiffrar, 2009).

Recent research, however, has begun to focus on the actors and the way they move depending on specific intentions in social contexts (for reviews, see Ansuini, Cavallo, Bertone, & Becchio, 2015; Becchio, Sartori, & Castiello, 2010). For example, seemingly equivalent object-oriented actions are carried out differently depending on whether they do or do not aim at a social partner, such as passing an object to another person or just moving the object (Becchio, Sartori, Bulgheroni, & Castiello, 2008). Whereas this research focusses primarily on action execution, the sociomotor approach is primarily concerned with the mental processes that precede motor execution, hence, action planning. Moreover, the sociomotor approach demonstrates that predictable responses of other people not only shape action planning but tries to explain why they do so. They do so as a consequence of previously acquired links between own and other actions, and by essentially the same mechanisms by which other action effects shape action planning (Pfister, Janczyk, Wirth, Dignath, & Kunde, 2014). Despite these differences in emphasis, these lines of research have revealed important commonalities as well. For example, social partners are not automatically taken into account, neither in movement planning (Pfister, Pfeuffer, et al., 2014) nor in movement execution (Becchio et al., 2008). In any case, a full appraisal of action control in social context has to take both lines of research on board.

Relations to imitation

Imitation entails an automatic tendency to copy observed actions (Heyes, 2011), possibly mediated by a mirror-neuron system (Rizzolatti & Craighero, 2004). The term imitation is used to describe various forms of such tendencies that range from reaching the outcomes of another person’s actions (sometimes also denoted as emulation; Tomasello, 1998) to more direct reproduction of another person’s specific muscle movements (sometimes also called mimicry; Want & Harris, 2002).

Imitation is assumed to occur because of links between observed and produced motor patterns. While some of these links might be innate, others are likely established by learning (e.g., the associative sequence learning model; Heyes, 2005). The evidence for innate links is sparse and based primarily on reports of tendencies of newborns to copy tongue protrusion (Meltzoff & Moore, 1977), while the tendency to mimic other behaviors, such as clapping hands or waving hands, develops much later (Jones, 2007). The idea is that we observe ourselves moving and thereby associate motor patterns to observed movements of our body. The observed movements of other bodies resemble our own, and thus prime corresponding motor patterns through the acquired associations.

If learning of motor-effect association is the basis of imitation, then it should be possible to modify imitation tendencies by establishing novel motor-effect links. In fact, repeated experience that one’s own hand movements produce visual feedback of a moving foot has been shown to yield bidirectional action-effect associations: After training, observed foot movements prime hand movements at the observer (Wiggett et al., 2011). However, these are links between movements of one effector (e.g., hand) and visual feedback of another effector (e.g., foot) of the same person, whereas the sociomotor approach is concerned with links between one’s own movements and those of another person. It might be interesting, though, to explore to which extent participants experience visual feedback from a limb different from the actually moved one as belonging to their own body or as belonging to the body of another person. Surprisingly, all other studies that aimed at altering sensorimotor links used S-R training procedures in which subjects learned to counterimitate observed movements (Catmur, Walsh, & Heyes, 2007). While it is interesting that such procedures shape imitative tendencies, the theoretically more interesting learning protocol would entail altered action-effect links. Such training studies appear to be a promising way to bridge research on sociomotor action control with research on imitation that might be further enriched by addressing additional consequences of social action effects, such as emotional or affective changes for the actor (Dignath, Lotze-Hermes, Farmer, & Pfister, 2017).

Of further relevance to sociomotor action control is that imitative tendencies set the stage for observational learning (cf. section Acquisition of Social Action Effects). Consider a child observing her mother who shakes a rattle whereby she produces a certain sound (Paulus, Hunnius, & Bekkering, 2013). This observation activates both similar movement tendencies in the child as well as codes of the observed sound. Movements and effect codes become linked to each by this concurrent activation in a similar way as they become linked when the child produced the sound by his or her own actual movement.

In any case, while most imitation accounts and the sociomotor approach share the assumption of associative links between observed and produced actions, there are different ideas on what such links are good for. While such links are assumed to mediate automatic stimulus-driven processes in the case of imitation, the sociomotor approach focuses on how they mediate goal-oriented actions.

Interpersonal power

Research on interpersonal power suggests that agents differ in their tendency to ‘objectify’ others as tools to reach one’s own goals (Gruenfeld, Inesi, Magee, & Galinsky, 2008). Even though objectification of social partners can of course occur without a direct function of anticipated partner responses for one’s own motor control, a tendency for objectification might affect sociomotor mechanisms, or vice versa. This possible link could, for instance, be addressed by varying power situationally to address different aspect of sociomotor action control. In addition to objectification, however, power has been shown to increase social distance to others (Lammers, Galinsky, Gordijn, & Otten, 2012) and to reduce perspective taking (Galinsky, Magee, Inesi, & Gruenfeld, 2006). As discussed in the preceding sections, perspective taking can be seen as a prime mechanism for sociomotor learning (Pfister, Pfeuffer, et al., 2014), which might be countered by experiencing interpersonal power over another agent. In other words, these findings pose interesting questions for a sociomotor approach because they suggest that a strong dependence of a responder on an agent might at times hinder rather than promote sociomotor action control, even though the boundary conditions for such an interplay are yet to be demonstrated.

Concluding remark

The sociomotor framework aims to understand the cognitive processes that mediate the change of other human’s behavior by one’s own motor actions. It thus highlights the actor rather than the observer or responder in social interactions, and it aims to uncover the impact of the actor’s social context on basic mechanisms of human action control. The theoretical model underlying this framework is ideomotor theory, which has been a rather fruitful approach for research on the mechanisms underlying goal-directed actions. The sociomotor framework advances classical ideomotor theory by suggesting a range of peculiarities that predominantly apply to the social context: special input/output modalities, special roles for contingency and contiguity of social action effects as well as sociomotor, imitative, and interpersonal similarity. Whether or not the hypotheses put forward by the sociomotor framework do indeed align with empirical reality of course remains to be seen. In any case, pushing the boundaries of ideomotor theory to the social domain will help to resocialize research on motor control, so as it might help to root research on social interaction in general theories of human motor control.

References

Abernethy, B., & Zawi, K. (2007). Pickup of essential kinematics underpins expert perception of movement patterns. Journal of Motor Behavior, 39(5), 353–367.

Ansorge, U. (2002). Spatial intention–response compatibility. Acta Psychologica, 109(3), 285–299.

Ansuini, C., Cavallo, A., Bertone, C., & Becchio, C. (2015). Intentions in the brain: The unveiling of Mister Hyde. The Neuroscientist, 21(2), 126–135.

Baess, P., Widmann, A., Roye, A., Schröger, E., & Jacobsen, T. (2009). Attenuated human auditory middle latency response and evoked 40‐Hz response to self‐initiated sounds. European Journal of Neuroscience, 29(7), 1514–1521.

Bayliss, A. P., Murphy, E., Naughtin, C. K., Kritikos, A., Schilbach, L., & Becker, S. I. (2013). ‘Gaze leading’: Initiating simulated joint attention influences eye movements and choice behavior. Journal of Experimental Psychology: General, 142(1), 76–92.

Brass, M., Bekkering, H., & Prinz, W. (2001). Movement observation affects movement execution in a simple response task. Acta Psychologica, 106(1), 3–22.

Becchio, C., Manera, V., Sartori, L., Cavallo, A., & Castiello, U. (2012). Grasping intentions: From thought experiments to empirical evidence. Frontiers in Human Neuroscience, 6, 117.

Becchio, C., Sartori, L., Bulgheroni, M., & Castiello, U. (2008). Both your intention and mine are reflected in the kinematics of my reach to grasp movement. Cognition, 106(2), 894–912.

Becchio, C., Sartori, L., & Castiello, U. (2010). Toward you: The social side of actions. Current Directions in Psychological Science, 19(3), 183–188.

Bigelow, A. E. (1998). Infants’ sensitivity to familiar imperfect contingencies in social interaction. Infant Behavior & Development, 21(1), 149–162.

Blakemore, S. J., Winston, J., & Frith, U. (2004). Social cognitive neuroscience: Where are we heading? Trends in Cognitive Sciences, 8(5), 216–222.

Blakemore, S. J., Wolpert, D., & Frith, C. (2000). Why can’t you tickle yourself? Neuroreport, 11(11), R11–R16.

Breuer, T., Giorgana Macedo, G. R., Hartanto, R., Hochgeschwender, N., Holz, D., Hegger, F., & Kraetzschmar, G. K. (2012). Johnny: An autonomous service robot for domestic environments. Journal of Intelligent and Robotic Systems, 66, 245–272.

Buehner, M. J., & Humphreys, G. R. (2009). Causal binding of actions to their effects. Psychological Science, 20(10), 1221–1228.

Bühler, K. (1934). Sprachtheorie. Jena, Germany: Gustav Fischer.

Bunlon, F., Marshall, P. J., Quandt, L. C., & Bouquet, C. A. (2015). Influence of action-effect associations acquired by ideomotor learning on imitation. PLoS ONE, 10(3), e0121617.

Catmur, C., Walsh, V., & Heyes, C. (2007). Sensorimotor learning configures the human mirror system. Current Biology, 17(17), 1527–1531.

Chen, J., & Proctor, R. W. (2013). Response–effect compatibility defines the natural scrolling direction. Human Factors, 55(6), 1112–1129.

Cross, E. S., Ramsey, R., Liepelt, R., Prinz, W., & Hamilton, A. F. (2015). The shaping of social perception by stimulus and knowledge cues to human animacy. Philosophical Transactions of the Royal Society B. doi:10.1098/rstb.2015.0075

Csibra, G., & Gergely, G. (2009). Natural pedagogy. Trends in Cognitive Sciences, 13(4), 148–153.

Dignath, D., Lotze-Hermes, P., Farmer, H., & Pfister, R. (2017). Contingency and contiguity of imitative behaviour affect social affiliation. Psychological Research. doi:10.1007/s00426-017-0854-x

Dignath, D., Pfister, R., Eder, A. B., Kiesel, A., & Kunde, W. (2014). Representing the hyphen in action–effect associations: Automatic acquisition and bidirectional retrieval of action–effect intervals. Journal of Experimental Psychology: Learning, Memory, and Cognition, 40(6), 1701–1712.

Dolk, T., Hommel, B., Colzato, L. S., Schütz-Bosbach, S., Prinz, W., & Liepelt, R. (2014). The joint Simon effect: A review and theoretical integration. Frontiers in Psychology, 5, 974.

Edwards, S. G., Stephenson, L. J., Dalmaso, M., & Bayliss, A. P. (2015). Social orienting in gaze leading: A mechanism for shared attention. Proceedings of the Royal Society B, 282(1812), 20151141. doi:10.1098/rspb.2015.1141

Ekman, P., & Friesen, W. V. (1971). Constants across cultures in the face and emotion. Journal of Personality and Social Psychology, 17(2), 124–129.

Elsner, B., & Hommel, B. (2001). Effect anticipation and action control. Journal of Experimental Psychology: Human Perception and Performance, 27(1), 229–240.

Elsner, B., & Hommel, B. (2004). Contiguity and contingency in action-effect learning. Psychological Research, 68(2/3), 138–154.

Farah, M. J., Wilson, K. D., Drain, M., & Tanaka, J. N. (1998). What is” special” about face perception? Psychological Review, 105(3), 482–498.

Flach, R., Press, C., Badets, A., & Heyes, C. (2010). Shaking hands: Priming by social action effects. British Journal of Psychology, 101(4), 739–749.

Frischen, A., Bayliss, A. P., & Tipper, S. P. (2007). Gaze cueing of attention: Visual attention, social cognition, and individual differences. Psychological Bulletin, 133(4), 694–724.

Galinsky, A. D., Magee, J. C., Inesi, M. E., & Gruenfeld, D. H. (2006). Power and perspectives not taken. Psychological Science, 17(12), 1068–1074.

Gruenfeld, D. H., Inesi, M. E., Magee, J. C., & Galinsky, A. D. (2008). Power and the objectification of social targets. Journal of Personality and Social Psychology, 95(1), 111–127.

Haggard, P., Clark, S., & Kalogeras, J. (2002). Voluntary action and conscious awareness. Nature Neuroscience, 5(4), 382–385.

Harleß, E. (1861). Der Apparat des Willens [The apparatus of the will]. Zeitschrift für Philosophie und philosophische Kritik, 38, 50–73.

Herbart, J. F. (1825). Psychologie als Wissenschaft, Zweiter analytischer 111Q712 Theil [Psychology as a Science. Second analytical part]. Werke, 6, 1–339.

Herbort, O., Koning, A., van Uem, J., & Meulenbroek, R. G. (2012). The end-state comfort effect facilitates joint action. Acta Psychologica, 139(3), 404–416.

Herbort, O., & Kunde, W. (2016a). How to point and how to interpret pointing gestures? Instructions can reduce pointer-observer misunderstandings. Psychological Research. doi:10.1007/s00426-016-0824-8

Herbort, O., & Kunde, W. (2016b). Spatial (mis-)interpretation of pointing gestures to distal referents. Journal of Experimental Psychology: Human Perception and Performance, 42(1), 78–89.

Herwig, A., & Horstmann, G. (2011). Action–effect associations revealed by eye movements. Psychonomic Bulletin & Review, 18(3), 531–537.

Heyes, C. (2005). Imitation by association. In S. Hurley & N. Chater (Eds.), Perspectives on imitation: From neuroscience to social science (pp. 157–176). Cambridge, MA: MIT Press.

Heyes, C. (2011). Automatic imitation. Psychological Bulletin, 137(3), 463–483.

Hoffmann, J., Berner, M., Butz, M. V., Herbort, O., Kiesel, A., Kunde, W., & Lenhard, A. (2007). Explorations of anticipatory behavioral control (ABC): A report from the cognitive psychology unit of the University of Würzburg. Cognitive Processing, 8(2), 133–142.

Hoffmann, J., Lenhard, A., Sebald, A., & Pfister, R. (2009). Movements or targets: What makes an action in action–effect learning? The Quarterly Journal of Experimental Psychology, 62(12), 2433–2449.

Hommel, B. (2009). Action control according to TEC (theory of event coding). Psychological Research, 73(4), 512–526.

Hommel, B., Alonso, D., & Fuentes, L. (2003). Acquisition and generalization of action effects. Visual Cognition, 10(8), 965–986.

Hommel, B., Colzato, L. S., & Van Den Wildenberg, W. P. (2009). How social are task representations? Psychological Science, 20(7), 794–798.

Hommel, B., Müsseler, J., Aschersleben, G., & Prinz, W. (2001). The theory of event coding (TEC): A framework for perception and action planning. Behavioral and Brain Sciences, 24(5), 849–878.

Hudson, M., Nicholson, T., Ellis, R., & Bach, P. (2016). I see what you say: Prior knowledge of other’s goals automatically biases the perception of their actions. Cognition, 146, 245–250.

Hudson, M., Nicholson, T., Simpson, W. A., Ellis, R., & Bach, P. (2016). One step ahead: The perceived kinematics of others’ actions are biased toward expected goals. Journal of Experimental Psychology: General, 145(1), 1–7.

James, W. (1890). The principles of psychology (Vol. 1). New York, NY: Holt.

Janczyk, M., & Kunde, W. (2014). The role of effect grouping in free-choice response selection. Acta Psychologica, 150, 49–54.

Janczyk, M., Pfister, R., Hommel, B., & Kunde, W. (2014). Who is talking in backward crosstalk? Disentangling response-from goal-conflict in dual-task performance. Cognition, 132(1), 30–43.

Janczyk, M., Skirde, S., Weigelt, M., & Kunde, W. (2009). Visual and tactile action effects determine bimanual coordination performance. Human Movement Science, 28(4), 437–449.

Johansson, G. (1973). Visual perception of biological motion and a model for its analysis. Perception & Psychophysics, 14(2), 201–211.

Jones, S. S. (2007). Imitation in infancy the development of mimicry. Psychological Science, 18(7), 593–599.

Kanizsa, G. & Vicario, G. (1968). The perception of intentional reaction. In G. Kanizsa amp; G. Vicario (Eds.), Experimental research on perception (pp. 71–126). Trieste: University of Trieste.

Keller, P. E., Dalla Bella, S., & Koch, I. (2010). Auditory imagery shapes movement timing and kinematics: Evidence from a musical task. Journal of Experimental Psychology: Human Perception and Performance, 36(2), 508.

Keller, P. E., & Koch, I. (2008). Action planning in sequential skills: Relations to music performance. The Quarterly Journal of Experimental Psychology, 61(2), 275–291.

Kirsch, W., Pfister, R., & Kunde, W. (2016). Spatial action-effect binding. Attention, Perception, & Psychophysics, 78(1), 133–142.

Klapper, A., Ramsey, R., Wigboldus, D., ∓ Cross, E. S. (2014). The control of automatic imitation based on Bottom–Up and Top–Down cues to animacy: Insights from brain and behavior. Journal of Cognitive Neuroscience, 26(11), 2503–2513.

Knoblich, G. Butterfill, S. & Sebanz, N. Psychological research on joint action: Theory and data. In B. Ross (Ed.), The Psychology of learning and motivation (vol. 54, pp. 59–101). Burlington: Academic Press.

Kornblum, S., Hasbroucq, T., & Osman, A. (1990). Dimensional overlap: Cognitive basis for stimulus-response compatibility–A model and taxonomy. Psychological Review, 97(2), 253.

Kunde, W. (2001). Response-effect compatibility in manual choice reaction tasks. Journal of Experimental Psychology: Human Perception and Performance, 27(2), 387.

Kunde, W., Elsner, K., & Kiesel, A. (2007). No anticipation–no action: The role of anticipation in action and perception. Cognitive Processing, 8(2), 71–78.

Kunde, W., Hoffmann, J., & Zellmann, P. (2002). The impact of anticipated action effects on action planning. Acta Psychologica, 109(2), 137–155.

Kunde, W., Lozo, L., & Neumann, R. (2011). Effect-based control of facial expressions: Evidence from action–effect compatibility. Psychonomic Bulletin & Review, 18(4), 820–826.

Kunde, W., Pfister, R., & Janczyk, M. (2012). The locus of tool-transformation costs. Journal of Experimental Psychology: Human Perception and Performance, 38(3), 703–714.

Kunde, W., Skirde, S., & Weigelt, M. (2011). Trust my face: Cognitive factors of head fakes in sports. Journal of Experimental Psychology: Applied, 17(2), 110–127.

Kunde, W., & Weigelt, M. (2005). Goal congruency in bimanual object manipulation. Journal of Experimental Psychology: Human Perception and Performance, 31(1), 145–156.

Kunde, W., & Wühr, P. (2004). Actions blind to conceptually overlapping stimuli. Psychological Research, 68(4), 199–207.

Lacquaniti, F., Terzuolo, C., & Viviani, P. (1983). The law relating the kinematic and figural aspects of drawing movements. Acta Psychologica, 54, 115–130.

Lammers, J., Galinsky, A. D., Gordijn, E. H., & Otten, S. (2012). Power increases social distance. Social Psychological and Personality Science, 3(3), 282–290.

Lelonkiewicz, J. R., & Gambi, C. (2016). Spontaneous adaptation explains why people act faster when being imitated. Psychonomic Bulletin & Review. doi:10.3758/s13423-016-1141-3

Manera, V., Becchio, C., Schouten, B., Bara, B. G., & Verfaillie, K. (2011). Communicative interactions improve visual detection of biological motion. PLoS One, 6(1), e14594.

Meltzoff, A. N., & Moore, M. K. (1977). Imitation of facial and manual gestures by human neonates. Science, 198(4312), 75–78.

Memelink, J., & Hommel, B. (2013). Intentional weighting: A basic principle in cognitive control. Psychological Research, 77(3), 249–259.

Moore, J. W., & Obhi, S. S. (2012). Intentional binding and the sense of agency: A review. Consciousness and Cognition, 21(1), 546–561.

Müller, R. (2016). Does the anticipation of compatible partner reactions facilitate action planning in joint tasks? Psychological Research, 80(4), 464–486.

Müsseler, J., & Hommel, B. (1997). Blindness to response-compatible stimuli. Journal of Experimental Psychology: Human Perception and Performance, 23(3), 861–872.

Müsseler, J., Kunde, W., Gausepohl, D., & Heuer, H. (2008). Does a tool eliminate spatial compatibility effects? European Journal of Cognitive Psychology, 20(2), 211–231.

Müsseler, J., Wühr, P., Danielmeier, C., & Zysset, S. (2005). Action-induced blindness with lateralized stimuli and responses. Experimental Brain Research, 160(2), 214–222.

Nagy, E. (2006). From imitation to conversation: The first dialogues with human neonates. Infant and Child Development, 15(3), 223–232.

Nagy, E., & Molnar, P. (2004). Homo imitans or homo provocans? Human imprinting model of neonatal imitation. Infant Behavior & Development, 27(1), 54–63.

Neumann, R., Lozo, L., & Kunde, W. (2014). Not all behaviors are controlled in the same way: Different mechanisms underlie manual and facial approach and avoidance responses. Journal of Experimental Psychology: General, 143(1), 1–8.

Nowak, K. L., & Biocca, F. (2003). The effect of the agency and anthropomorphism on users’ sense of telepresence, copresence, and social presence in virtual environments. Presence, 12(5), 481–494.

Paelecke, M., & Kunde, W. (2007). Action-effect codes in and before the central bottleneck: Evidence from the PRP paradigm. Journal of Experimental Psychology: Human Perception and Performance, 33, 627–644.

Paulus, M. (2014). How and why do infants imitate? An ideomotor approach to social and imitative learning in infancy (and beyond). Psychonomic Bulletin & Review, 21(5), 1139–1156.

Paulus, M., Hunnius, S., Vissers, M., & Bekkering, H. (2011). Bridging the gap between the other and me: The functional role of motor resonance and action effects in infants’ imitation. Developmental Science, 14(4), 901–910.

Paulus, M., Hunnius, S., & Bekkering, H. (2013). Neurocognitive mechanisms underlying social learning in infancy: Infants’ neural processing of the effects of others’ actions. Social Cognitive and Affective Neuroscience, 8(7), 774–779.

Pavlova, M. A. (2012). Biological motion processing as a hallmark of social cognition. Cerebral Cortex, 22(5), 981–995.

Pfeiffer, U. J., Timmermans, B., Bente, G., Vogeley, K., & Schilbach, L. (2011). A non-verbal turing test: differentiating mind from machine in gaze-based social interaction. PloS one, 6(11), e27591.

Pfister, R., Dignath, D., Hommel, B., & Kunde, W. (2013). It takes two to imitate anticipation and imitation in social interaction. Psychological Science, 24(10), 2117–2121.

Pfister, R., Heinemann, A., Kiesel, A., Thomaschke, R., & Janczyk, M. (2012). Do endogenous and exogenous action control compete for perception? Journal of Experimental Psychology: Human Perception and Performance, 38(2), 279–284.

Pfister, R., & Janczyk, M. (2012). Harleß’ apparatus of will: 150 years later. Psychological Research, 76(5), 561–565.

Pfister, R., Janczyk, M., Gressmann, M., Fournier, L. R., & Kunde, W. (2014). Good vibrations? Vibrotactile self-stimulation reveals anticipation of body-related action effects in motor control. Experimental Brain Research, 232(3), 847–854.

Pfister, R., Janczyk, M., Wirth, R., Dignath, D., & Kunde, W. (2014). Thinking with portals: Revisiting kinematic cues to intention. Cognition, 133(2), 464–473.

Pfister, R., Kiesel, A., & Hoffmann, J. (2011). Learning at any rate: Action–effect learning for stimulus-based actions. Psychological Research, 75(1), 61–65.

Pfister, R., & Kunde, W. (2013). Dissecting the response in response–effect compatibility. Experimental Brain Research, 224(4), 647–655.

Pfister, R., Obhi, S., Rieger, M., & Wenke, D. (2014). Action and perception in social contexts: Intentional binding for social action effects. Frontiers in Human Neuroscience, 8, 667.

Pfister, R., Pfeuffer, C. U., & Kunde, W. (2014). Perceiving by proxy: Effect-based action control with unperceivable effects. Cognition, 132(3), 251–261.

Pfister, R., Weller, L., Dignath, D., & Kunde, W. (2017). What or when? The impact of anticipated social action effects is driven by action-effect compatibility, not delay. Manuscript submitted for publication.

Poeppel, D., & Monahan, P. J. (2008). Speech perception. Cognitive foundations and cortical implementation. Current Directions in Psychological Science, 17(2), 80–85.

Prinz, W. (1997). Perception and action planning. European Journal of Cognitive Psychology, 9(2), 129–154.

Rieger, M. (2007). Letters as visual action-effects in skilled typing. Acta Psychologica, 126(2), 138–153.

Rizzolatti, G., & Craighero, L. (2004). The mirror-neuron system. Annual Review of Neuroscience, 27, 169–192.

Sartori, L., Becchio, C., & Castiello, U. (2011). Cues to intention: The role of movement information. Cognition, 119(2), 242–252.