Abstract

Prior commitment has been found to facilitate choice of a larger later reward (e.g., healthy living) and avoid the impulsive choice of the smaller immediate reward (e.g., smoking, drug taking). In this research with pigeons, we investigated the ephemeral choice task, in which pigeons are given a choice between two alternatives, A and B, with similar reinforcement provided for each; however, if they choose A, they can also choose B, whereas if they choose B, A is removed. Thus, choosing A gives them two rewards, whereas choosing B gives them only one. Paradoxically, pigeons actually show a preference for B, the suboptimal alternative. We tested the hypothesis that pigeons made suboptimal choices because they were impulsive. To reduce impulsivity, we required the pigeons to make their initial choice 20 s before receiving the first reward. We found that requiring the pigeons to make a prior commitment encouraged them to choose optimally. The control group, for which the reward was provided immediately following initial choice, continued to choose suboptimally. The results confirm that requiring animals to make a prior commitment can facilitate the development of optimal choice. Furthermore, they may help explain why, without prior commitment, impulsive species, such as primates and pigeons, have difficulty with this task, whereas presumably less impulsive species, such as wrasse fish and, under some conditions, parrots, are able to choose optimally even without prior commitment.


Some behaviors are paradoxical in the sense that one would like to stop engaging in them, but the behavior often persists (e.g., smoking, drinking alcohol, eating excessively). The mechanism responsible for the continuation of the maladaptive behavior can be described in terms of delay discounting (Madden & Bickel, 2010). That is, at a time when the need to engage in the maladaptive behavior is not strong, there is often an expressed value in avoiding the behavior. But when the desire to engage in the behavior is strong, there is less value in avoiding it, because the earlier expressed goal is distant and thus is discounted. One way to facilitate termination of the maladaptive behavior is to make an external commitment to cease the behavior at a time prior to the impulse to initiate it (Giné, Karlan, & Zinman, 2010; Smit, Hoving, Schelleman-Offermans, West, & de Vries, 2014). In the case of smoking, for example, one might remove all cigarettes from one’s surroundings or even spend a week camping without cigarettes. Research with animals suggests that if a hungry animal is made to choose between a small immediate reward (2-s access to food, analogous to the immediacy of having a cigarette) and a larger delayed reward (4-s access to food delayed by 4 s, analogous to better health), it will tend to make the suboptimal choice of the smaller immediate reinforcement (Ainslie, 1974). Similar effects have been found with humans (e.g., Rachlin, Raineri, & Cross, 1991). The degree to which the immediate small reward is preferred over the delayed larger reward as the delay to the larger reward increases has been taken as a measure of the impulsivity of the organism (Odum, 2011).

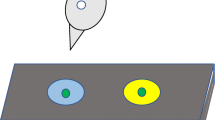

There is evidence, however, that the strong tendency to choose the small, immediate reward can be reduced by forcing the organism to make an earlier commitment, by choosing as little as 8 s prior to obtaining the smaller reward (Rachlin & Green, 1972). The explanation for this reversal in choice is that the function relating reward value to delay is hyperbolic. As can be seen in Fig. 1, at choice time CT1 the immediacy of the smaller, sooner reward gives it more value than the delayed, larger reward, whereas at CT2 the reverse is true (see Green & Myerson, 1996).

Delay discounting functions. CT1 = Choice Time 1; CT2 = Choice Time 2; SS = smaller sooner reinforcer; LL = larger later reinforcer. Because the delay discounting functions are hyperbolic, at CT1 the value of the SS is greater than the value of the LL, and thus the SS is preferred, whereas at CT2 the value of the LL is greater than the value of the SS, and thus the LL is preferred.
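The preference reversal in Fig. 1 can be illustrated numerically with the standard hyperbolic form V = A/(1 + kD), where A is reward amount, D is delay, and k is a discounting rate. The sketch below is illustrative only: the amounts (2 vs. 4 units), the 4-s extra delay to LL, the rate k = 1, and the mapping of CT1 and CT2 to 0 s and 8 s before SS are hypothetical values, not parameters from this study. An exponential discounting function is included purely for contrast.

```python
import math
from typing import Callable

# A value function maps (amount, delay in s, discount rate k) to present value.
ValueFn = Callable[[float, float, float], float]

# Hypothetical rewards: SS = 2 units; LL = 4 units arriving 4 s after SS.
SS_AMOUNT, LL_AMOUNT, EXTRA_DELAY = 2.0, 4.0, 4.0


def hyperbolic_value(amount: float, delay: float, k: float) -> float:
    """Hyperbolic discounting: V = A / (1 + k * D)."""
    return amount / (1.0 + k * delay)


def exponential_value(amount: float, delay: float, k: float) -> float:
    """Exponential discounting: V = A * exp(-k * D), shown for contrast."""
    return amount * math.exp(-k * delay)


def preferred(t_choice: float, value_fn: ValueFn, k: float = 1.0) -> str:
    """Reward preferred when choosing t_choice s before SS is available.

    Exact ties are reported as "LL".
    """
    ss = value_fn(SS_AMOUNT, t_choice, k)
    ll = value_fn(LL_AMOUNT, t_choice + EXTRA_DELAY, k)
    return "SS" if ss > ll else "LL"


if __name__ == "__main__":
    for t in (0.0, 8.0):  # hypothetical stand-ins for CT1 and CT2
        print(f"choice {t:g} s before SS: "
              f"hyperbolic -> {preferred(t, hyperbolic_value)}, "
              f"exponential -> {preferred(t, exponential_value)}")
```

With these numbers the hyperbolic function favors SS when the choice is made at 0 s and LL when it is made 8 s earlier (the two values are equal at 3 s), whereas the exponential function favors SS at every choice time, because its SS:LL value ratio, 0.5·e^(4k), does not depend on when the choice is made. The reversal therefore depends on the hyperbolic shape rather than on discounting as such.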

We attempted to apply the notion of prior commitment to the ephemeral choice task, first reported by Bshary and Grutter (2002), which has proven to be deceptively difficult for primates (Salwiczek et al., 2012) and pigeons (Zentall, Case, & Luong, 2016). The task consists of giving animals a choice between two distinctive stimuli (e.g., black and white), each with an identical reinforcer placed on it. If, for example, the animal chooses the reinforcer on the black background, then it can have that one and the trial is over. However, if the animal chooses the reinforcer on the white background, then it can have that one, and it is allowed to have the reinforcer on the black background as well. In this case, it would be optimal for the animal always to select the reinforcer on the white background, because it would receive two reinforcers per trial, whereas if it chooses the reinforcer on the black background, it gets only one. Surprisingly, Salwiczek et al. (2012) found that several primate species, including capuchin monkeys (Cebus apella) and orangutans (Pongo pygmaeus), did not readily acquire the optimal response, and only two of four chimpanzees (Pan troglodytes) learned to choose optimally within 100 trials. Even more surprising, adult wrasse (cleaner) fish (Labroides dimidiatus), but not juveniles, learned to choose the optimal alternative within 100 trials.

The authors attributed the species difference in acquisition of this task to differences in the natural foraging strategy of the fish. Cleaner fish forage for food by cleaning the mouths of large predator fish, some of which live on the reef where the cleaner fish live, whereas other “clients” of the wrasse visit the reef only on occasion. When a visitor approaches the reef (the ephemeral choice), the cleaner fish must go to the visitor first or the visitor will leave, whereas the reef residents will still be there when the cleaner fish returns. Presumably, primates have no similar experience.

This hypothesis for why the laboratory ephemeral choice task is easier for the fish than for the primates is appealing because it resolves the otherwise paradoxical conclusion that fish might be more intelligent than primates. However, this ecological explanation for the species difference was questioned by Pepperberg and Hartsfield (2014), who showed that grey parrots (Psittacus erithacus), whose foraging behavior should be more like that of the primates than of the wrasse fish, can easily acquire the optimal response.

Although the optimal response would appear to be obvious because it provides twice as much food, there is reason to believe that the ephemeral choice task involves aspects that may actually encourage suboptimal choice. To appreciate the difficulty of the task, one must imagine how an animal first encounters it. Prior to learning, one would expect an animal to choose randomly between the two alternatives. Assuming the white alternative is the optimal choice, on a trial on which the animal chooses the white alternative, it also obtains the reinforcer on the black alternative, whereas if the animal chooses the black alternative, that is the only reinforcer that it receives. Thus, in those early choices the animal would receive the reinforcer on the black alternative whichever alternative it chose, but it would receive the reinforcer on the white alternative only if it chose white first. That means that early in training, the animal would receive twice as many reinforcers associated with the black alternative as with the white alternative. Furthermore, the last alternative the animal would experience on any trial would always be black. Thus, to choose optimally, the animal must overcome the early tendency to choose the alternative that has been most frequently and most recently reinforced.
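The bookkeeping behind this argument can be checked with a short simulation. It assumes, as the paragraph does, that a naive animal picks each color with probability .5 and that white is the optimal (ephemeral) alternative; the function name, trial count, and random seed are arbitrary choices for illustration.

```python
import random
from collections import Counter


def simulate_naive_choices(n_trials: int, seed: int = 0) -> tuple[Counter, Counter]:
    """Simulate random first choices in the ephemeral choice task.

    Assumes white is the optimal (ephemeral) alternative: choosing white
    yields the white reinforcer and then the black one; choosing black
    yields only the black reinforcer because white is removed.
    Returns (reinforcers obtained per color, color of each trial's last reinforcer).
    """
    rng = random.Random(seed)
    reinforced: Counter = Counter()
    last_reinforced: Counter = Counter()
    for _ in range(n_trials):
        first = rng.choice(["white", "black"])  # naive animal: 50/50 choice
        outcomes = ["white", "black"] if first == "white" else ["black"]
        reinforced.update(outcomes)
        last_reinforced[outcomes[-1]] += 1
    return reinforced, last_reinforced


if __name__ == "__main__":
    counts, last = simulate_naive_choices(1000)
    print("reinforcers per color:", dict(counts))
    print("last reinforced color:", dict(last))
```

Black is reinforced on every trial and is always the last reinforced stimulus, whereas white is reinforced on only about half of the trials, which is the roughly 2:1 asymmetry in favor of the suboptimal alternative described above.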

To test the hypothesis that early experience with this task provided conflicting feedback that made the ephemeral choice task difficult, Zentall et al. (2016, Experiment 1) replicated the manual procedure used in earlier research. Two different-colored disks were placed in front of the pigeon, with a dried pea on each. If the pigeon chose the pea on the yellow disk, then it could also have the pea on the blue disk, but if it chose the pea on the blue disk, the yellow disk was removed (the contingencies associated with the two colors were counterbalanced over subjects). Under these conditions, pigeons actually chose the suboptimal alternative (the one that provided only one reinforcement) at a level significantly greater than chance. To test the generality of this finding, they automated the task in an operant chamber using two colored pecking keys and a mixed grain feeder and found very similar results (Zentall et al., 2016, Experiment 2). To further test the hypothesis that below-chance optimal choice resulted from the frequency of reinforcement associated with each alternative, in a third experiment, Zentall et al. avoided having the pigeons associate their second reinforcement with the suboptimal alternative by changing the color of the suboptimal alternative immediately after the optimal alternative was selected. Thus, for example, if the optimal alternative was yellow and the suboptimal alternative was blue, upon choice of the yellow alternative the blue alternative changed to white. The result of this manipulation was to produce a significant improvement in choice of the optimal alternative. However, although these pigeons no longer showed a preference for the suboptimal alternative, they still chose suboptimally by showing indifference between the two alternatives.

Zentall et al. (2016) concluded that although they had eliminated the bias associated with the suboptimal alternative, the pigeons still were not associating choice of the optimal alternative with obtaining two reinforcements. That is, the pigeons did not appear to be integrating the reinforcement associated with choice of the optimal alternative with the second reinforcement. This difficulty in integrating choice of the first alternative with the slightly delayed second reinforcement is reminiscent of the delay discounting effect: the preference for a small, immediate reinforcement over a larger, delayed reinforcement.

This brings us to the question of prior commitment. As Rachlin and Green (1972) found, pigeons that were offered a choice between a small, immediate reward and a larger, delayed reward chose the larger, delayed reward if the choice preceded the smaller reward by several seconds. In this research, we asked whether pigeons trained on the ephemeral choice task would learn to choose optimally (the two-reward option over the one-reward option) if they were forced to make their choice a few seconds before reinforcement. That is, if we assume that in the ephemeral choice task the second reinforcement is sufficiently delayed to be analogous to the larger, later reinforcement in the delay discounting task, then perhaps requiring the pigeons to make a prior commitment would encourage them to choose optimally. To test this hypothesis, we compared pigeons’ acquisition of this task with a prior commitment (pigeons were required to make their choice 20 s prior to obtaining the first reward) with pigeons that received the first reward without making a prior commitment (they received the first reward immediately after making their initial choice). The hypothesis was that making a prior commitment would help the pigeons integrate the two rewards (or reduce the relative difference in delay between the two rewards) and thereby increase the value of choice of the optimal alternative.

Method

Subjects

The subjects were eight unsexed homing pigeons, 5 to 8 years old, that had had prior experience with a simultaneous red–green color discrimination with multiple reversals. During this experiment, the pigeons were maintained at 85% of their free-feeding weight. They were housed individually in wire cages, with free access to water and grit. The colony room was maintained on a 12:12-h light–dark cycle. All pigeons were cared for in accordance with University of Kentucky Animal Care Guidelines.

Apparatus

The experiment was conducted in a BRS/LVE (Laurel, MD) sound-attenuating standard automated test chamber with inside measurements 35 cm high, 30 cm long, and 35 cm across the response panel. The response panel in each chamber had a horizontal row of three response keys 25 cm above the floor. The round keys (2.5 cm diameter) were separated from each other by 6.0 cm, and behind each key was a 12-stimulus inline projector (Industrial Electronics Engineering, Van Nuys, CA). The left and right projectors projected blue and yellow hues (Kodak Wratten Filter Nos. 38 and 9, respectively). The center projector projected a red hue (Kodak Wratten Filter No. 26). The bottom of the center-mounted feeder was 9.5 cm from the floor. When the feeder was raised, it was illuminated by a 28-V, 0.04-A lamp. Reinforcement consisted of 2.0 s of Purina Pro Grains. A microcomputer in an adjacent room controlled the experiment.

Procedure

Subjects were randomly assigned to one of two groups, a prior commitment (experimental) group and a no prior commitment (control) group. The pigeons in each group were further randomly assigned to one of two subgroups based on which color, yellow or blue, represented the optimal alternative. All pigeons were pretrained to peck on a fixed-interval 20-s (FI20s) schedule (the first response after 20 s was reinforced). For pigeons in the prior commitment group, the key was sometimes yellow, sometimes blue. For pigeons in the no prior commitment group, the key was white.

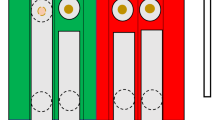

Each trial began with the center key illuminated white. For pigeons in the prior commitment group, a single peck to the white key turned off the white key and turned on the yellow and blue keys on the right and left. Choice of one of the two keys started a 20-s timer. The first response after 20 s (FI20s) turned off both alternatives and provided reinforcement (2-s access to mixed grain). If the optimal alternative had been chosen, the other alternative was turned back on and a single peck to that key provided a second reinforcement, after which the trial was over. If the suboptimal alternative had been chosen, reinforcement was provided, but the other alternative was not turned back on and the trial ended. A 90-s intertrial interval separated the trials. The design of the experiment is presented on the left side of Fig. 2.

Design of experiment: events that occurred following an optimal choice (top) and following a suboptimal choice (bottom). Prior commitment group on the left, no prior commitment group on the right. Optimal and suboptimal colors (yellow and blue) were counterbalanced over pigeons, and sides (left and right) were counterbalanced over trials. FR1 = a single peck required; FI20s = the first response after 20 s; ITI = intertrial interval.

For pigeons in the no prior commitment group, completion of an FI20s schedule on the white center key turned it off and turned on the yellow and blue keys on the right and left. The rationale for the FI20s schedule on the white center key was to give both groups experience with the FI20s schedule and to approximately equate the trial duration for the two groups. A single peck to one of the two keys turned off both keys and provided reinforcement. If the optimal alternative had been chosen, the other alternative was turned back on, and a single peck to that key provided a second reinforcement, after which the trial was over. If the suboptimal alternative had been chosen, reinforcement was provided, but the other alternative was not turned back on, and the trial ended with a 90-s intertrial interval. Each of 40 training sessions consisted of 10 trials.
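The contingencies for the two groups can be summarized as an ordered list of programmed events per trial. The sketch below is a hypothetical, simplified rendering of Fig. 2, not the laboratory control program: the function names are invented for illustration, it ignores the left/right counterbalancing and any switching between side keys during the FI, and it treats "the first response after 20 s" as the first peck at or after 20 s.

```python
FI_S = 20.0          # fixed-interval value (FI20s)
REINFORCER_S = 2.0   # 2-s access to mixed grain
ITI_S = 90.0         # intertrial interval


def fi_completion_time(peck_times: list[float], interval: float = FI_S) -> float:
    """Return the time of the first peck at or after `interval` seconds.

    Pecks before the interval elapses have no programmed consequence;
    the first peck after it completes the schedule.
    """
    for t in sorted(peck_times):
        if t >= interval:
            return t
    raise ValueError("no peck completed the fixed interval")


def trial_events(prior_commitment: bool, chose_optimal: bool) -> list[str]:
    """Ordered list of programmed events on one trial (simplified)."""
    if prior_commitment:
        # Choice is made first; the FI20s then delays reinforcer 1.
        events = ["center key (white): FR1",
                  "side keys (yellow, blue) on: choice starts 20-s timer",
                  f"FI{FI_S:g}s completed",
                  f"reinforcer 1 ({REINFORCER_S:g} s)"]
    else:
        # The FI20s is served on the center key; the choice is reinforced at once.
        events = [f"center key (white): FI{FI_S:g}s",
                  "side keys (yellow, blue) on: choice (FR1)",
                  f"reinforcer 1 ({REINFORCER_S:g} s)"]
    if chose_optimal:
        # Only an optimal first choice relights the other alternative.
        events += ["other side key relit: FR1",
                   f"reinforcer 2 ({REINFORCER_S:g} s)"]
    events.append(f"ITI ({ITI_S:g} s)")
    return events


if __name__ == "__main__":
    for group in (True, False):
        for optimal in (True, False):
            label = "prior commitment" if group else "no prior commitment"
            print(label, "| optimal" if optimal else "| suboptimal")
            for event in trial_events(group, optimal):
                print("   ", event)
```

Written this way, the only difference between the groups is where the FI20s sits: before the choice (no prior commitment) or between the choice and the first reinforcer (prior commitment), which is how the design approximately equates trial duration.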

Results

On the first session of training, pigeons in both groups chose the optimal alternative at a level slightly below chance. By Session 7, choice by the two groups began to diverge, with the prior commitment group beginning to choose the optimal alternative slightly above chance and the no prior commitment group choosing the optimal alternative well below chance (see Fig. 3). By the end of training, pigeons in the prior commitment group were responding optimally in the ephemeral choice task, whereas the control pigeons were not. When the data were pooled over the last five sessions of training, the difference between the two groups was statistically significant, t(6) = 4.47, p = .004, Cohen’s d = 3.65, r(effect size) = .88. Furthermore, choice of the optimal alternative was statistically above chance for pigeons in the prior commitment group (mean proportion optimal = .86, SEM = .03), t(3) = 12.71, p = .001, Cohen’s d = 14.68, r(effect size) = .99. Although the pigeons in the no prior commitment group chose the optimal alternative below chance (mean proportion optimal = .40), the difference from chance was not statistically significant, p > .05. One of the pigeons in the no prior commitment group chose the optimal alternative well below chance (.10), but the other three pigeons did not. The pigeons in the prior commitment group began choosing optimally at different rates and, as can be seen from the group variability in Fig. 3, their accuracy did not stabilize until the end of training. Finally, once pigeons in the prior commitment group had chosen one of the side keys, they rarely switched to the other alternative during the FI20s schedule. When pooled over the last five sessions of training, pigeons switched from their original choice an average of 2.9 times per session, and most of those switches (2.5 times per session) corrected an initial choice of the suboptimal alternative.
Observation of the pigeons suggested that during the FI20s schedule the pigeons pecked the response key almost continuously.
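The reported effect sizes are consistent with two common conversions from a t statistic and its degrees of freedom, r = √(t²/(t² + df)) and d = 2t/√df. The article does not state which formulas were used, so this sketch only shows that these conversions reproduce the reported values; the d formula is usually derived for independent groups, and its use for the one-sample test against chance is an assumption.

```python
import math


def r_from_t(t: float, df: int) -> float:
    """Effect-size correlation r = sqrt(t^2 / (t^2 + df))."""
    return math.sqrt(t * t / (t * t + df))


def d_from_t(t: float, df: int) -> float:
    """Cohen's d approximation d = 2t / sqrt(df) (independent-groups form)."""
    return 2.0 * t / math.sqrt(df)


if __name__ == "__main__":
    for label, t, df in [("group difference", 4.47, 6),
                         ("prior commitment vs. chance", 12.71, 3)]:
        print(f"{label}: d = {d_from_t(t, df):.2f}, r = {r_from_t(t, df):.2f}")
```

Rounded to two decimals, this gives d = 3.65 and r = .88 for the group comparison and d = 14.68 and r = .99 for the comparison with chance, matching the values reported above.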

Discussion

The purpose of this experiment was to ask if pigeons’ tendency to choose suboptimally on a task in which choice of one alternative provides them with only one reward whereas choice of the other alternative provides them with two rewards could be made optimal by delaying the presentation of the first reward. This was accomplished by forcing pigeons to make a commitment 20 s prior to obtaining the first reward. Pigeons that were required to make a prior commitment showed significantly more optimal choice than those that were not required to do so.

The idea of a prior commitment can be traced to the phenomenon of delay discounting, in which humans and other animals often prefer a smaller reinforcement that occurs sooner over a larger reinforcement that occurs later (Rachlin & Green, 1972). In our experiment, the difference in outcome between the two alternatives is the slightly delayed second reinforcement that follows choice of one of the alternatives. The relatively short delay between the two reinforcements appears to make optimal choice difficult to acquire. For some reason, that delay results in a failure to integrate the two reinforcements that follow choice of the optimal alternative. That failure appears to stem from the immediacy of the initial reinforcement, which is the same for both alternatives. Delaying the reinforcement associated with the initial choice may allow the two reinforcers to be better integrated. If, in the original task, a single response is required for the first reinforcement and the second reinforcement follows after a short 3–4 s delay (the time to eat and then peck the second alternative), the ratio of the two delays would be relatively large (perhaps 4:1). If instead the delay between the initial choice and the first reinforcement was 20 s, the ratio of the two delays would be quite small (24:21, or about 1.14, much closer to 1.0), and the two reinforcers would likely be better integrated.
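The ratio argument can be made concrete. Using the paragraph's rough timings (first reinforcer about 1 s and second about 4 s after the choice without commitment; about 21 s and 24 s with the 20-s commitment interval), the sketch computes the delay ratio and, under the standard hyperbolic form V = A/(1 + kD) with an assumed rate k = 1 per second (a hypothetical value, not one estimated in this study), the value of the second reinforcer as a fraction of the first.

```python
def hyperbolic_value(amount: float, delay: float, k: float) -> float:
    """Hyperbolically discounted value V = A / (1 + k * D)."""
    return amount / (1.0 + k * delay)


def compare(first_delay: float, second_delay: float, k: float = 1.0) -> tuple[float, float]:
    """Return (delay ratio, value of reinforcer 2 as a fraction of reinforcer 1).

    Both reinforcers are assumed equal in amount (one unit each).
    """
    ratio = second_delay / first_delay
    relative_value = (hyperbolic_value(1.0, second_delay, k)
                      / hyperbolic_value(1.0, first_delay, k))
    return ratio, relative_value


if __name__ == "__main__":
    K = 1.0  # hypothetical discounting rate (per second)
    for label, d1, d2 in [("no prior commitment", 1.0, 4.0),
                          ("prior commitment", 21.0, 24.0)]:
        ratio, rel = compare(d1, d2, K)
        print(f"{label}: delay ratio = {ratio:.2f}, relative value = {rel:.2f}")
```

With k = 1, the second reinforcer retains about 40% of the first reinforcer's value without commitment but about 88% with it. The exact numbers depend on the assumed k, but for any positive k the relative value is higher with the 20-s commitment interval, because (1 + 21k)(1 + 4k) exceeds (1 + k)(1 + 24k) whenever k > 0.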

The results of this experiment may suggest why some animals have failed to acquire this task. Salwiczek et al. (2012) found that capuchin monkeys and orangutans did not acquire this task in 100 trials, and only 2 of 4 chimpanzees learned to choose optimally with that training. The results of this experiment suggest that primate impulsivity may be responsible for the degree of difficulty with this task (Kim, Hwang, Seo, & Lee, 2009). Furthermore, there is some evidence that chimpanzees are less impulsive when the immediacy of the reinforcement is reduced. For example, Boysen, Berntson, Hannan, and Cacioppo (1997) found that when chimpanzees were given a problem with a reverse reinforcement contingency (choice of the larger amount of observable rewards resulted in obtaining the smaller amount), they responded optimally (to obtain the larger number of rewards) only about 30% of the time. However, when chimpanzees that had been trained to identify the relation between Arabic numerals and number of objects were given the same task, with the Arabic numerals representing the number of rewards, they chose optimally 66% of the time. The function of the Arabic numerals may have been to make the choice less impulsive by removing the choice from the immediacy of the outcome.

Species differences in impulsivity may account for both the successful acquisition of this ephemeral choice task by wrasse fish and parrots and the relative failure to acquire this task by primates and pigeons. Pigeons have been shown to be relatively impulsive (as assessed by their steep delay discounting slopes; e.g., Laude, Beckmann, Daniels, & Zentall, 2014) compared with other species (Stevens & Stephens, 2008), but if one makes the outcome of the choice appear less rewarding, pigeons may choose more carefully. This hypothesis may account for a curious phenomenon reported by Clement, Feltus, Kaiser, and Zentall (2000). After training pigeons on one simultaneous discrimination to peck Stimulus A rather than Stimulus B following a relatively aversive (large) peck requirement, and on a different simultaneous discrimination to peck Stimulus C rather than Stimulus D following a less aversive (single) peck requirement, the pigeons were tested for their preference between A and C, and between B and D. The results indicated that the pigeons showed a significant preference for A over C. We attributed this effect to a form of contrast between the aversive event (the large peck requirement) and the reinforcement that followed. Surprisingly, however, the pigeons also showed an even stronger preference for B (the nonreinforced stimulus that accompanied A) over D (the nonreinforced stimulus that accompanied C). The preference for B over D was attributed to the generalization of greater response strength from A to B than from C to D. But the greater preference for B over D than for A over C was a mystery. In light of the results of this experiment, one can now posit an explanation. To account for the greater difference in preference between the two nonreinforced stimuli, one can propose that the choice between the two nonreinforced stimuli involved less of an impulsive choice than the choice between the two reinforced stimuli.
During training, both positive stimuli were followed immediately by reinforcement, so when they were presented together on test trials they would be likely to engender impulsivity (as the two alternatives were hypothesized to do in the control procedure of this experiment). On the other hand, the negative stimuli were never followed by reinforcement during training, so they would not be assumed to engender impulsivity, and thus they could provide a more robust measure of the contrast effect.

What could account for the ability of wrasse fish to acquire the ephemeral choice task? Any species that feeds by swimming into the mouths of larger fish must be relatively cautious so as not to be eaten. More specifically, wrasse fish prefer to feed on the mucus of client fish, but doing so requires that they bite the client, and clients typically retaliate by leaving the cleaning station or by attacking the wrasse (Bshary & Grutter, 2002; Bshary & Schäffer, 2002). That this behavior may provide them with impulse control is suggested by the finding that these fish can acquire a reverse contingency task in which, given a choice between a larger and a smaller amount of food, they must learn to approach the smaller amount to obtain the larger amount (Danisman, Bshary, & Bergmüller, 2010). Recall that chimpanzees have great difficulty acquiring this task unless the choice is separated from the immediate outcome (Boysen et al., 1997).

As for the parrots, although they, too, may be a relatively nonimpulsive species, the three parrots used in the Pepperberg and Hartsfield (2014) experiment had had extensive prior training. One parrot had been exposed to “continuing studies on comparative cognition and interspecies communication,” whereas the other two had received considerable referential communication training (p. 299). It is possible that this training reduced their natural impulsivity. In support of the hypothesis that these parrots had developed considerable impulse control, one of them was found to show a relatively flat delay discounting function when given a choice between an immediate desirable reward and a more desirable reward delayed by as much as 15 min (Koepke, Gray, & Pepperberg, 2015). Thus, Salwiczek et al. (2012) may have been correct in proposing that the natural ecology of the wrasse fish can account for how quickly they acquire the ephemeral choice task, but the results of this experiment suggest that the mechanism responsible for acquisition of this task is likely to be self-control.

The results of recent research by Prétôt, Bshary, and Brosnan (2016a, b) are generally consistent with the impulsivity account of the failure to learn the optimal choice in the ephemeral reward task. In Prétôt et al. (2016a, Experiment 1), monkeys that did not acquire the optimal response in the original version of the task were exposed to a computer version of the task in which they had to move a cursor to the chosen stimulus. This modification appeared to facilitate choice of the optimal alternative. The use of a computerized version of the task changes it in many ways, but perhaps the most important difference is the reduced contiguity between choice and reward in the computerized task. Subjects reached for the reward directly in the original version of the task. Thus, according to the impulsivity hypothesis, one would expect reduced impulsivity when the reward was not observable and was slightly delayed. In a follow-up experiment (Prétôt et al., 2016a, Experiment 2), the researchers added movement to the ephemeral (optimal) alternative on the computer screen and found that this, too, facilitated choice of the optimal alternative. In this case, in addition to the separation of choice and reward inherent in the computerized version of the task, the movement of the optimal alternative would have drawn particular attention to that stimulus, thus increasing the chances that it would be selected.

In Prétôt et al. (2016b, Experiment 3), the researchers used the original (manual) version of the task but hid each food reinforcer under a distinctive cup that the monkeys had to lift or point to. This modification led to choice of the optimal alternative as well. But as with the computerized version of the task, this procedure separated the choice response from the reward itself, thus likely reducing impulsivity. Another procedure used with both the monkeys and the fish (Prétôt et al., 2016b, Experiment 2) was to color the ephemeral and permanent food distinctively (pink or black). This, too, facilitated acquisition of the optimal alternative. Why distinctive coloring would facilitate acquisition is not obvious, but the unusual color of the food may have reduced the monkeys’ tendency to choose impulsively. Overall, the results of recent research with primates that did not readily acquire the optimal choice response in the original task are quite consistent with the hypothesis that any change in procedure that reduces the tendency of the subjects to respond impulsively may facilitate acquisition of the optimal response.

The results of this experiment may have implications for several kinds of maladaptive human behavior that appear to result from impulsive decision making due to temptation, such as addictive commercial gambling and excess caloric intake (obesity). If humans can be taught to make a commitment at a time well before the to-be-avoided reward becomes available (the time of temptation), it may be possible to avoid making suboptimal impulsive choices (Ainslie & Haendel, 1983; Ainslie & Monterosso, 2003). In each case, as in this experiment, arranging for the appropriate prior commitment may provide the means to avoid making some impulsive suboptimal choices.

References

Ainslie, G. W. (1974). Impulse control in pigeons. Journal of the Experimental Analysis of Behavior, 21, 485–489.

Ainslie, G., & Haendel, V. (1983). The motives of the will. In E. Gottheil, K. Druley, T. Skoloda, & H. Waxman (Eds.), Etiologic aspects of alcohol and drug abuse (pp. 119–140). Springfield, IL: Charles C. Thomas.

Ainslie, G. W., & Monterosso, J. (2003). Hyperbolic discounting as a factor in addiction: A critical analysis. In R. E. Vuchinich & N. Heather (Eds.), Choice, behavioral economics, and addiction (pp. 35–61). Oxford, UK: Elsevier.

Boysen, S. T., Berntson, G. G., Hannan, M. B., & Cacioppo, J. T. (1997). Quantity-based interference and symbolic representation in chimpanzees (Pan troglodytes). Journal of Experimental Psychology: Animal Behavior Processes, 22, 76–86.

Bshary, R., & Grutter, A. S. (2002). Experimental evidence that partner choice is a driving force in the payoff distribution among cooperators or mutualists: The cleaner fish case. Ecology Letters, 5, 130–136.

Bshary, R., & Schäffer, D. (2002). Choosy reef fish select cleaner fish that provide high-quality service. Animal Behaviour, 63, 557–564.

Clement, T. S., Feltus, J., Kaiser, D. H., & Zentall, T. R. (2000). ‘Work ethic’ in pigeons: Reward value is directly related to the effort or time required to obtain the reward. Psychonomic Bulletin & Review, 7, 100–106.

Danisman, E., Bshary, R., & Bergmüller, R. (2010). Do cleaner fish learn to feed against their preference in a reverse reward contingency task? Animal Cognition, 13, 41–49.

Giné, X., Karlan, D., & Zinman, J. (2010). Put your money where your butt is: A commitment contract for smoking cessation. American Economic Journal: Applied Economics, 2, 213–235.

Green, L., & Myerson, J. (1996). Exponential versus hyperbolic discounting of delayed outcomes: Risk and waiting time. American Zoologist, 36, 496–505.

Kim, S., Hwang, J., Seo, H., & Lee, D. (2009). Valuation of uncertain and delayed rewards in primate prefrontal cortex. Neural Networks, 22, 294–304.

Koepke, A. E., Gray, S. L., & Pepperberg, I. M. (2015). Delayed gratification: A grey parrot (Psittacus erithacus) will wait for a better reward. Journal of Comparative Psychology, 129, 339–346.

Laude, J. R., Beckmann, J. S., Daniels, C. W., & Zentall, T. R. (2014). Impulsivity affects gambling-like choice by pigeons. Journal of Experimental Psychology: Animal Behavior Processes, 40, 2–11.

Madden, G. J., & Bickel, W. K. (Eds.). (2010). Impulsivity: The behavioral and neurological science of discounting. Washington, DC: American Psychological Association.

Odum, A. L. (2011). Delay discounting: I’m a k, you’re a k. Journal of the Experimental Analysis of Behavior, 96, 427–439.

Pepperberg, I. M., & Hartsfield, L. A. (2014). Can grey parrots (Psittacus erithacus) succeed on a “complex” foraging task failed by nonhuman primates (Pan troglodytes, Pongo abelii, Sapajus apella) but solved by wrasse fish (Labroides dimidiatus)? Journal of Comparative Psychology, 128, 298–306.

Prétôt, L., Bshary, R., & Brosnan, S. F. (2016a). Comparing species decisions in a dichotomous choice task: Adjusting task parameters improves performance in monkeys. Animal Cognition, 19, 819–834.

Prétôt, L., Bshary, R., & Brosnan, S. F. (2016b). Factors influencing the different performance of fish and primates on a dichotomous choice task. Animal Behaviour, 119, 189–199.

Rachlin, H., & Green, L. (1972). Commitment, choice and self-control. Journal of the Experimental Analysis of Behavior, 17, 15–22.

Rachlin, H., Raineri, A., & Cross, D. (1991). Subjective probability and delay. Journal of the Experimental Analysis of Behavior, 55, 223–244.

Salwiczek, L. H., Prétôt, L., Demarta, L., Proctor, D., Essler, J., Pinto, A. I., … Bshary, R. (2012). Adult cleaner wrasse outperform capuchin monkeys, chimpanzees, and orangutans in a complex foraging task derived from cleaner-client reef fish cooperation. PLOS ONE, 7, e49068. doi:10.1371/journal.pone.0049068

Smit, E. S., Hoving, C., Schelleman-Offermans, K., West, R., & de Vries, H. (2014). Predictors of successful and unsuccessful quit attempts among smokers motivated to quit. Addictive Behaviors, 39, 1318–1324.

Stevens, J. R., & Stephens, D. W. (2008). Patience. Current Biology, 18, R11–R12.

Zentall, T. R., Case, J. P., & Luong, J. (2016). Pigeon’s paradoxical preference for the suboptimal alternative in a complex foraging task. Journal of Comparative Psychology, 130, 138–144.


About this article

Cite this article

Zentall, T.R., Case, J.P. & Berry, J.R. Early commitment facilitates optimal choice by pigeons. Psychon Bull Rev 24, 957–963 (2017). https://doi.org/10.3758/s13423-016-1173-8


DOI: https://doi.org/10.3758/s13423-016-1173-8