Abstract

The judgement of relative order (JOR) procedure is used to investigate serial-order memory. Measuring response times, the wording of the instructions (whether the earlier or the later item was designated as the target) reversed the direction of search in subspan lists (Chan, Ross, Earle, & Caplan Psychonomic Bulletin & Review, 16(5), 945–951, 2009). If a similar congruity effect applied to above-span lists and, furthermore, with error rate as the measure, this could suggest how to model order memory across scales. Participants performed JORs on lists of nouns (Experiment 1: list lengths = 4, 6, 8, 10) or consonants (Experiment 2: list lengths = 4, 8). In addition to the usual distance, primacy, and recency effects, instructions interacted with serial position of the later probe in both experiments, not only in response time, but also in error rate, suggesting that availability, not just accessibility, is affected by instructions. The congruity effect challenges current memory models. We fitted Hacker’s (Journal of Experimental Psychology: Human Learning and Memory, 6(6), 651–675, 1980) self-terminating search model to our data and found that a switch in search direction could explain the congruity effect for short lists, but not longer lists. This suggests that JORs may need to be understood via direct-access models, adapted to produce a congruity effect, or a mix of mechanisms.

Similar content being viewed by others

Introduction

In remembering everyday information, such as a telephone number, a route, or a sequence of events, order is central (Lashley, 1951). A relatively simple test of memory for order is the judgement of relative order (JOR) procedure (Butters, Kaszniak, Glisky, Eslinger, & Shacter, 1994; Chan, Ross, Earle, & Caplan, 2009; Fozard, 1970; Hacker, 1980; Hockley, 1984; Hurst & Volpe, 1982; Klein, Shiffrin, & Criss, 2007; McElree & Dosher, 1993; Milner, 1971; Muter, 1979; Naveh-Benjamin, 1990; Wolff, 1966; Yntema & Trask, 1963). Illustrated in Fig. 1, the JOR procedure tests memory for relative order without requiring participants to produce the items from memory. The wording of a JOR question typically takes a form like, “Which of two people left the party more recently?” A logically equivalent form of this question could be “Which of two people left the party earlier?” Because, formally, all that has changed is that the target became the nontarget and vice versa, one might presume that these “earlier” and “later” instructions test the same information in memory. Perhaps this is why few studies have compared these instructions. The vast majority have used a recency instruction—hence, the term, judgement of relative recency (the origin of the acronym, JOR). However, instructions do influence JOR performance on both supra- and subspan lists. Flexser and Bower (1974) found that their distant instruction had worse overall accuracy than their recency instruction. More specifically, Chan et al. (2009) found that participants’ behavior on subspan lists resembled backward, self-terminating search for a later instruction, consistent with previous findings (Hacker, 1980; Muter, 1979), but forward, self-terminating search for an earlier instruction. Here, we ask whether this congruity effect is confined to subspan lists or generalizes to longer, supraspan lists.

Time course of one example experimental trial in Experiment 1 (list length = four nouns) with both instructions. At test, two nouns from the list are presented in random order, and the participant is asked to respond to the probe stimulus that occurred earlier (“earlier” instruction) or later (“later” instruction) in the just-presented list. The correct response item is depicted on a dark background in this figure only, not in the experiment itself. The keyboard key that the participant would press to select each probe item is depicted underneath the probe items

Figure 2c illustrates how hypothetical response time data would look for a forward, self-terminating search strategy. The vertical axis plots the behavioral measure; for illustration purposes, we label it “error rate” or “response time,” because speed–accuracy trade-offs notwithstanding (and we found none in our data), one would expect response time and error rates to vary in the same direction as one another. The left horizontal axis plots the serial position of the earlier probe item, and the right horizontal axis plots the serial position of the later probe item. Note that the later-item serial position is plotted in descending order to minimize the bars occluding one another. In forward, self-terminating search, response time/error rate increases as a function of the earlier probe serial position, whereas the later probe serial position has no influence on response time/error rate. The opposite pattern is expected for backward, self-terminating search, where response time/error rate increases when the later probe serial position decreases (Fig. 2d). The effect of instruction can be most clearly visualized if we plot the difference between the earlier and later instruction data (Fig. 2e).

Schematic depictions of hypothesized serial position effects. The dependent measure (error rate or response time) is plotted as a function of both the earlier probe item’s serial position (“Earlier Item”) and the later probe-item’s serial position (“Later Item”). a Serial position effects expected due to the distance effect. b Serial position effects expected due to the primacy and recency effects. c Serial position effects for forward, self-terminating search, as was found in subspan lists using the “earlier” instruction (Chan, Ross, Earle, & Caplan, 2009). d Serial position effects for backward, self-terminating search, as was found in subspan lists using the “later” instruction (Chan et al., 2009). e The difference between (a) and (b), which we use to isolate the congruity effect. f Our hypothesized serial position effects for the “earlier” instruction for supraspan lists: an average of recency, distance, and instruction-based bias across the list. g Our hypothesized serial position effects for the “later” instruction, as an average of recency, distance, and instruction-based bias across the list. Note that the hypothesis for the difference between instructions for supraspan lists remains as in (e), except that edge effects are expected to produce bow-shaped, rather than linear, congruity effects

We already know that JORs for supraspan lists are qualitatively quite different, and two important findings may suggest that we would not find a congruity effect at longer list lengths: (1) a distance effect (Fig. 2a), whereby judgements are better (faster and more accurate) as the difference in serial positions (distance) of the two probe items increases (e.g., Bower, 1971; Yntema & Trask, 1963), similar to the symbolic distance effect (e.g., Banks, 1977; Holyoak, 1977; Moyer & Landauer, 1967), and (2) an inverted U-shaped serial position effect, made up of a primacy and recency effect (Fig. 2b) (e.g., Hacker, 1980; Jou, 2003; Muter, 1979; Yntema & Trask, 1963). Chan et al.’s (2009) congruity effect was found for response times, suggesting that instruction influenced access speed as a function of serial position. For supraspan JORs, error rate is also a useful dependent measure. As list length increases above span, error rate increases; in an extreme case, with a list length of 90 words, accuracy approached chance levels, rising to 60 % accuracy only for very large lags (distance of 36 words; Klein et al., 2007). Primacy and recency effects may seem at odds with self-terminating search models that are reasonable accounts of subspan data (Chan et al., 2009). However, Hacker (1980) suggested that, in the case of imperfect item memory, U-shaped serial position effects due to item memory might distort self-terminating search patterns in JORs, an idea he incorporated into his self-terminating search model. The distance effect is also incompatible with self-terminating search, because the position of the unreached probe item should not affect the outcome of the JOR decision. These arguments might lead one to expect no congruity effect in long lists.

On the other hand, there are reasons to expect there should be a congruity effect at long list lengths. Evidence suggests there is no clear distinction between short- and long-term order memory (McElree, 2006). Moreover, Muter (1979) found a backward self-terminating search pattern extending to lists of 10 items (supraspan). Hacker’s (1980) data did not show obvious break points of his “availability” parameter (representing item memory) that could have distinguished a working memory from a long-term memory. This is consistent with extensive evidence suggesting that memory is scale invariant (Brown, Neath, & Chater, 2007; Crowder, 1982; Howard & Kahana, 1999; Nairne, 2002). We suggest that it is possible that both long and short list lengths are governed by the same memory mechanisms and that the congruity effect will generalize from short to longer list lengths.

In addition, the self-terminating search model has been fitted to long-list JOR data with success (Hacker, 1980; McElree & Dosher, 1993). It is possible that a self-terminating search model operating in the forward, rather than the backward, direction could explain the earlier instruction data and, thus, account for the congruity effect. Thus, the earlier instruction might induce a dominant primacy effect even for longer lists. In serial-recall procedures, forward recall shows a dominant primacy effect, whereas backward recall shows a dominant recency effect (Beaman, 2002; Hulme et al., 1997; Li et al., 2010; Li & Lewandowsky, 1993, 1995; Madigan, 1971; Richardson, 2007; Rosen & Engle, 1997; Thomas, Milner, & Haberlandt, 2003), suggesting that if forward search is based on serial recall, this kind of mechanism might be applicable even for longer lists. At present, published studies of supraspan JORs have mainly used a recency instruction to look at serial-position effects, similar to our later instruction (Butters et al., 1994; Chan et al., 2009; Fozard, 1970; Hacker, 1980; Hockley, 1984; Hurst & Volpe, 1982; Klein et al., 2007; McElree & Dosher, 1993; Milner, 1971 Muter, 1979; Naveh-Benjamin, 1990; Wolff, 1966; Yntema & Trask, 1963). Wyer, Shoben, Fuhrman, and Bodenhausen (1985) used both sooner and later instructions with probes derived from a social-action script (e.g., going to a restaurant) and found a response time congruity effect, but not for events that were specific to the example story. A similar response time congruity effect was found for personal life events in a subset of experimental conditions (Fuhrman & Wyer, 1988). These congruity effects for action scripts and personal life events may reflect supraspan phenomena, but both types of material are arguably tapping into semantic, not episodic, temporal order. We wondered if the JOR congruity effect would generalize above span, with response time as the measure.

Since we expected error rate to be an informative dependent measure for these lists, we wondered whether instruction would affect the quality of information in memory (availability), measured by error rate, or just accessibility, measured by response time. An error rate congruity effect has been found in autobiographical order tasks with yes/no judgements (Skowronski et al., 2007; Skowronski, Walker, & Betz, 2003); however, participants’ confirmation bias (toward selecting “yes” rather than “no”) might underlie that result. We found no clear published error rate congruity effect for temporal-order memory, although error rate congruity effects have occasionally been found for perceptual comparative judgements (Petrusic, 1992). We therefore hypothesized that a similar congruity effect would be observed in supraspan JOR data, but with the addition of recency, primacy, and distance effects, with both response time and error rate as measures. If we assume that the primacy, recency, and distance effects are approximately constant between instructions, we can isolate the congruity effect by analyzing the difference between instructions (Fig. 2e), which would then look similar to that observed in subspan response time data (Chan et al., 2009). We test these hypotheses in two experiments, always manipulating instruction between subjects. Experiment 1 used lists of nouns and manipulated list length (4, 6, 8, and 10) within subjects. Experiment 2 used consonant lists and manipulated list length (4 and 8) between subjects. The experiments produced similar results, suggesting broad boundary conditions for the congruity effect. Experiment 2 used the same materials and presentation rate as Chan et al.’s experiment.

To broaden the theoretical implications of our results, we evaluated our findings with respect to Hacker’s (1980) self-terminating search model. Hacker developed this model specifically to explain JORs, but it has not been tested on the congruity effect. We hypothesize that the congruity effect can be explained by a difference in the direction of search associated with each instruction. Participants may perform forward, self-terminating search with the earlier instruction, and backward, self-terminating search with the later instruction, and we test this with fits of models based on Hacker’s model after presenting the results of both experiments. We also discuss whether other existing memory models for the JOR paradigm could account for the congruity effect in their current form or could be easily adapted to do so.

Experiment 1

Method

Participants

Fourteen participants were recruited from the University of Alberta community. Participants gave informed consent and were paid at a rate of $12 for each of five 1-h sessions, conducted on 5 consecutive days. All had normal or corrected-to-normal vision and had learned English before the age of 6. Participants were randomly assigned to the earlier or later group in alternating testing order. One participant in the later instruction did not attend the last session, so for that participant, only the first four sessions were included in the analyses.

Materials

The stimuli were 1,316 nouns generated from the MRC Psycholinguistic Database (Wilson, 1988) with word length restricted to three to eight letters, two syllables, and Kučera–Francis written frequency above 6 per million, displayed in all capital letters. Nouns that we subjectively determined might be confused with verbs were manually removed from the list. Each trial was randomly drawn from list length 4, 6, 8, and 10, counterbalanced within session. There was no within-session repetition of words, but words were reused across sessions. All participants were tested using an A1207 iMac computer with an Apple Macintosh A1048 Pro keyboard.

Procedure

The experiment was implemented with the Python Experiment-Programming Library (PyEPL; Geller, Schleifer, Sederberg, Jacobs, & Kahana, 2007) and modified from Chan et al.’s (2009) experiment (Fig. 1). Probes were pairs of items drawn from the just-presented list, and all possible combinations were equally probable and counterbalanced within subjects and within list length. Participants in the two groups received slightly different instructions: (1) excerpt from the earlier instruction: “. . . judge which of the two nouns came earlier on the list you just studied. Press the ‘/’ key if the earlier item is presented on the right side of the screen and the ‘.’ key if the earlier item is on the left side of the screen. . . ” (2) Excerpt from the later instruction: “. . . judge which of the two nouns came later on the list you just studied. Press the ‘/’ key if the later item is presented on the right side of the screen and the ‘.’ key if the later item is on the left side of the screen. . . .” Participants were instructed to respond as quickly as they could without compromising accuracy. A session consisted of nine blocks with 20 trials in each block. The first block of each session was a practice block, excluded from analyses, composed of 8 trials, to familiarize (or refamiliarize) participants with the task. The computer provided immediate accuracy feedback after each trial in practice block (“correct” or “incorrect”), and average response time (in milliseconds) and accuracy (percentage correct) at the end of each experimental block. Each trial began with a fixation asterisk, “*,” in the center of the screen, followed by a word list presented sequentially in the center of the screen. Items were presented for 1,500 ms each with an interstimulus interval (ISI) of 175 ms. This is slower than the rate Chan et al. used (575-ms presentation time and 175-ms ISI), due to the greater stimulus complexity of nouns, than consonants (e.g., Sternberg, 1975). After a 2,500-ms delay, participants were presented with a single probe consisting of two words from the just-presented list and were asked which item was presented earlier or later, depending on group, by pressing the “.” key (for the left-hand probe item) or the “/” key (for the right-hand probe item). After a 500-ms delay, participants could press a key to start the next trial.

Data analysis

Trials with response times less than 200 ms and above three standard deviations from a participant’s mean response time were removed from the data (1.3 % of responses). A linear mixed effects (LME) model (Baayen, Davidson, & Bates, 2008; Bates, 2005) was used to analyze our data. We adopted LME analysis because, as compared with ANOVA, LME handles unbalanced designs, can fit individual responses without the need for averaging of the data, and protects against type II error due to increased power (Baayen et al., 2008; Baayen & Milin, 2010). LME analyses were conducted in R (Bates, 2005), using the LME4 (Bates & Sarkar, 2007), LanguageR (Baayen, 2007), and LMERConvenienceFunctions (Tremblay, 2013) libraries. The “lmer” function was used to fit the LME model. The “pamer.fnc” function was used to calculate the p values of model parameters. Eight fixed factors were used as predictors, including instruction (earlier, later), linear and quadratic component of later probe serial position (serial position of the probe item that appeared later from the presented list), distance (absolute value of the difference between two probe’s serial positions), intact/reverse (whether probe order was consistent or inconsistent with presentation order, respectively), trial number, session number, and list length. The linear and quadratic components of the later probe serial position are orthogonal to each other, generated with the “poly” function in R. We included the quadratic term to account for expected primacy and recency effects. Participant was included as a random effect on intercept. Instruction and intact/reverse were treated as categorical factors. All other factors were scaled and centered before being entered in the model. Response time was analyzed for correct trials only and was log-transformed to reduce skewness. The error rate data were fitted with logistic regression since it is a binary variable (“correct” vs. “incorrect”). LME estimates random effects first, followed by fixed effects. In the results tables, the “Estimate” column reports the corresponding regression coefficients, along with their standard errors. For the purposes of reporting the LME results, the intact condition and the earlier instruction were set as the reference levels for the intact/reverse and instruction factors, respectively. The best fits of LME models were obtained by conducting a series of iterative tests comparing progressively simpler models with more complex models using the Bayesian information criterion (BIC). We used BIC because it penalizes free parameters more than the Akaike information criterion (AIC), making it conservative and resistant to overfitting (Motulsky & Christopoulos, 2004; Zuur, Leno, Walker, Saveliev, & Smith, 2009). This approach is adopted to remove interactions and variables that do not explain a significant amount of variance (Baayen et al., 2008). We used the LMERConvenienceFunctions (Tremblay, 2013) library to conduct fitting of fixed effects systematically. In this approach, for each condition, we started with a model that included all factor combinations and interactions, with two exceptions. (1) The quadratic component of later probe serial position was not allowed to interact with the linear component of later probe serial position because both were derived from the later probe serial position. (2) Any interaction term for which one or more levels had no data was not included. Starting with the complete model, the highest-order terms are considered first, progressing to the lowest-order terms. At each stage, considering a given order of interaction, the term with the lowest p value is identified, and a model without this term is compared with the original model using BIC. The term is kept if it improves BIC based on a threshold of 2 or if the term is also contained within a higher-order interaction. When all terms are tested for the highest-order interaction, the comparison process continues to the term with lowest p value in the next highest-order interaction, and so on. The process iterates until all interaction terms have been tested, ending with main effects (Tremblay, 2013).

Results and discussion

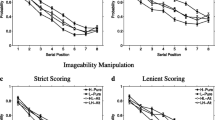

Error rate and response time, averaged across participants, are plotted as functions of serial position of the earlier and later probe items in Figs. 3 and 4. We isolated the congruity effect by plotting the difference between the earlier and later instructions after first removing the overall mean for each participant (right-hand columns). The best-fitting LME model is reported in Tables 1 and 2. To better visualize the pattern of serial-position effects, the overall mean was removed to correct for the mean difference between the earlier and later instructions.

Error rate (Experiment 1) as a function of both probe items’ serial position (earlier item and later item, respectively) broken down by list length in rows, and instruction (earlier, later and the difference, earlier − later, corrected for mean error rate) in columns

Response time (Experiment 1) as a function of both probe items’ serial position (earlier item and later item, respectively) broken down by list length in rows, and instruction (earlier, later, and the difference, earlier − later, corrected for mean response time) in columns

Error rates

First, we replicated the known effects of bow-shaped serial-position effects and distance effects. At all list lengths and for both instructions, the error rate data (Fig. 3) showed a distance effect (Fig. 2a), supported by a significant main effect of distance, and a bow-shaped serial-position effect involving both primacy and recency (Fig. 2b), supported by significant quadratic component of the later probe serial position in the best-fitting LME model (Table 1). The later instruction (Fig. 3, middle column) broadly resembled the earlier instruction (Fig. 3, left-hand column), except that the recency effect was more pronounced for the later instruction.

We next asked whether, despite the presence of distance and serial-position effects, there might also be a congruity effect. The difference bar graph (Fig. 3, right-hand column) shows that instruction indeed interacted with probe serial positions, supported in the LME analysis by interactions between instruction and the linear component of later probe serial position (Table 1). This interaction was due to the earlier instruction producing better performance at earlier serial positions and the later instruction producing better performance at later serial positions, in line with our predicted congruity effect (Fig. 2e).

Additional findings of interest that emerged from the best-fitting LME model were main effects of list length, intact/reverse, trial, and session. More error was associated with greater list length, reverse probe presentation order, lower trial number, and lower session number.

Importantly, list length did not interact with the congruity effect, suggesting that the congruity effect on error rate is replicated at all list lengths and does not change substantially across our four list lengths. We found a significant trial × session interaction. The interaction is consistent with learning-to-learn effects; larger trial numbers have fewer errors, and this effect is reduced in later sessions. Importantly, trial and session both did not interact with the congruity effect, showing that the congruity effect generalizes across these factors.

Finally, a significant interaction was found for instruction × intact/reverse. This is a second kind of congruity effect between instruction and reading order: Intact probes were judged better for the earlier instruction and worse for the later instruction. Reverse probes had the opposite relationship to instruction. If participants read from left to right, this would indicate better performance when the target was read first.

Response times

First, as with error rate, for all list lengths and both instructions, the response time data (Fig. 4) had significant distance and bow-shaped serial-position effects (Fig. 2a), supported by a significant main effect of distance and the quadratic component of later probe serial position, respectively, in the best-fitting LME model (Table 2).

Turning to the congruity effect, as with error rate, the difference bar graph (Fig. 4, right-hand columns) shows the predicted congruity effect, supported in the LME analysis by significant interactions between instruction and the linear component of later probe serial position (Table 2). Again, in line with our predicted congruity effect (Fig. 2e), the earlier instruction produced better performance at earlier serial positions, and vice versa for the later instruction.

We further checked whether the congruity effect was qualified by significant three-way interactions in the best-fitting LME model. The three-way instruction × linear component of later probe serial position × distance interaction showed that increasing distance was associated with a decrease in the slope of the linear component of later probe serial position for both instructions (see Fig. 1 in the supplementary materials). However, the rate of the linear component of later probe serial position function’s slope decrease was steeper for the earlier instruction than for the later instruction. The differential rate of slope decrease, thus, does not contradict the congruity effect. The instruction × quadratic component of later probe serial position × list length interaction showed a pattern of decreasing quadratic component of later probe serial-position slope for the later instructions and increasing quadratic component of later probe serial-position slope for the earlier instruction, as list length increases (see Fig. 2 in the supplementary materials). This interaction suggests that the difference in the primacy and recency effects between instructions decreases as list length increases.

Similar to the error rate results, we found trial × session and instruction × intact/reverse interactions. Instruction also interacted with trial, session, and distance. Response time in the later instruction improved more with practice than that in the earlier instruction. The later instruction also had a smaller distance effect than the earlier instruction. List length interacted with instruction, session, and later probe serial position. To summarize this effect, increasing list length was associated with longer response times for the later instruction, higher session number, and larger later probe serial position.

In sum, Experiment 1 replicated the typical primacy, recency, and distance effects (Hacker, 1980; Jou, 2003; Muter, 1979; Yntema & Trask, 1963) and extended Chan et al.’s (2009) congruity effect finding from subspan (e.g., list length 4) to supraspan (up to list length 10) data. The congruity effect appeared in both error rate and response time measures.

Experiment 2

One potential confound in Experiment 1 is that participants were given four list lengths, intermixed. It is possible that that the congruity effect is, in fact, a subspan—not supraspan—phenomenon but that the inclusion of some subspan lists (list length 4) influenced participants to apply a subspan strategy to supraspan lists. Thus, perhaps our congruity effect in supraspan lists is a special case. To address this, list length was a between-subjects factor in Experiment 2. In addition, to test for boundary conditions of the congruity effect, we switched from nouns to consonants and to a faster presentation rate (similar to the one used by Chan et al., 2009). If the congruity effect were found regardless of practice effects, stimulus type, and presentation rate, the generality of the congruity effect would be further supported.

Method

Participants

A total of 385 undergraduate students from introductory psychology courses at the University of Alberta participated in exchange for partial course credit. Participants gave informed consent, had normal or corrected-to-normal vision, and had learned English before age 6. We included two between-subjects factors: list length (4, 8) × instruction (earlier, later). Participants were run in groups of about 10–15, with all participants within a testing group being assigned to a single experimental group; experimental group cycled across testing groups. Forty-four participants were excluded because their error rate was close to chance (≥40 %). The number of excluded versus included participants in each condition is summarized in Table 5.

Materials

The materials were the same as those used by Chan et al. (2009). The stimuli were 16 consonants (excluding S, W, X, and Z) from the English alphabet displayed in capital letters. Each list comprised 4 or 8 (depending on group) consonants drawn at random without replacement from the stimulus pool, with the restriction that they did not appear in the two preceding lists. Probability was equal for each consonant/serial-position combination. All participants were tested using a group of 15 computers (custom-built PCs) with identical hardware, identical Samsung SyncMaster B2440 monitors, and Logitech K200 keyboards. Therefore, both instruction groups were exposed to the same hardware precision variabilities (Plant & Turner, 2009); thus, we do not expect any bias in our between-subjects design.

Procedure

The experiment was again created and run using the Python Experiment-Programming Library (Geller et al., 2007). A single session lasted approximately 1 h. The session started with a practice block of 8 trials, followed by nine blocks of 20 trials each for list length 4, and six blocks of 20 trials each for list length 8. The different number of blocks ensured that all participants could finish within 1 h. The computer provided online correctness feedback after each trial in practice block (“correct” or “incorrect”) and average response time (in milliseconds) and accuracy (percentage correct) at the end of each block. The instructions were the same as in Experiment 1, except that the word “nouns” was replaced with “consonants.” For each trial, participants were first presented with a fixation asterisk, “*,” in the center of the screen, then followed by a consonant list that was presented sequentially on the center of the screen, with list items presented for 575 ms each with an ISI of 175 ms. After a 2,500-ms delay, participants were presented with a two-item probe that consisted of two consonants from the just-presented list and were asked which item was presented earlier/later in the list by pressing the “.” (for the left-hand item) or “/” key (for the right-hand item). Each response was followed by a 500-ms delay before participants could press any key to start the next trial.

Data analysis

Trials with response times less than 200 ms and above three standard deviations from a participant’s mean response time were removed from the data (1.35 % of all trials). We adopt the same data representation as in Experiment 1. Error rate and response time (correct trials) data were analyzed at each list length separately.

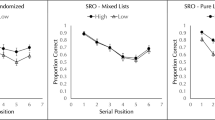

Results and discussion

Error rates

First, because performance was near ceiling, we could not analyze error rates at list length 4 (Fig. 5, top row) in any meaningful way. Out of 171 participants for both list length 4 earlier and later instructions, 89 participants had overall accuracy greater than 95 %, and only 18 participants scored below 90 %. We restrict our error rate analyses to list length 8 only.

Error rate (Experiment 2) as a function of both probe items’ serial position (earlier item and later item, respectively) broken down by list length in rows and instruction (earlier, later, and the difference, earlier − later, corrected for mean error rate) in columns

The list length 8 data (Fig. 5, bottom row) showed a congruity effect consistent with the pattern observed in Experiment 1 (Fig. 2e), with the earlier instruction resulting in more errors than the later instruction as later probe serial position increased, supported by a significant instruction × later probe serial position (linear component) interaction in the best-fitting LME model (Table 4). For this LME model-selection, based on the BIC values, we cannot differentiate the lowest BIC model that included instruction × later probe serial position (the congruity effect) and the same model without the congruity effect term, because Δ BIC < 2. However, because the model that included the congruity effect was nominally better by the BIC, we further compared the two models using other fitness criteria. The model that included the congruity effect was reliably selected on the basis of both AIC and log-likelihood (Table 3). For this reason, we report the model including the congruity effect. Importantly, the congruity effect did not interact significantly with trial, distance, or intact/reverse, suggesting that it generalizes across these factors (Tables 4 and 5).

One can observe an overall recency effect at both list lengths (Fig. 5), supported by significant later probe serial position main effect in the LME model, showing that error rate decreased as later probe serial position increased. The distance effect (Fig. 2a) was also found, supported by a significant main effect of distance in the best-fitting LME model. There was also a significant main effect of intact/reverse and of instruction; intact probes were better judged than the reverse probes, again suggesting a reading-order effect. Probes in the earlier instruction were better judged than in the later instruction. This is despite more poor performers having been excluded for the later instruction (Table 5); thus, this indicates an overall advantage of the earlier instruction over the later instruction. Replicating Experiment 1, the intact/reverse × instruction congruity effect was also significant; intact probes were judged better for the earlier instruction and worse for the later instruction. Reverse probes had the opposite relationship to instruction.

Response time

First, as with Experiment 1 error rates and response time results, visual inspection of list length 4 earlier instruction found a pattern consistent with forward self-terminating search (Fig. 2c) and list length 4 later instruction found a pattern consistent with backward self-terminating search, in line with Chan et al.’s (2009) results. For list length 8, the earlier instruction pattern resembled a distance effect with an overall primacy and recency effect (Fig. 2f). The later instruction resembled a backward self-terminating pattern combined with distance, primacy, and recency effects (Fig. 2g). The distance, primacy, and recency effects for both list lengths are supported by a significant main effect of distance and quadratic component of later probe serial position, respectively, in the best-fitting LME models.

Again, replicating the Experiment 1 results, the response time data for both list length 4 and list length 8 (Fig. 6) showed a congruity effect (Fig. 2e). The congruity effect is supported in the best-fitting model by a significant instruction × later probe serial position (linear component) interaction (Table 6). The two-way interaction is qualified by a significant four-way list length × instruction × later probe serial position × intact/reverse interaction. We conducted additional analyses on four subgroups of the data: list length 4 intact, list length 4 reverse, list length 8 intact, and list length 8 reverse (see Tables 2, 3, 4, and 5 in supplementary materials). The two-way instruction × later probe serial position (linear component) interaction was significant for all four groups, and the effects were consistent in direction. In addition to the four-way interaction, the congruity effect also interacted with distance and trials. The three-way interactions can be understood as increasing trial number, distance all selectively facilitating the later instruction response times at later probe serial positions and having the opposite effect on the earlier instruction response time at later probe serial positions. In other words, the linear later probe serial position curve associated with the earlier instruction is less affected by reverse presentation order, practice effect, and increasing distance.

Response time (Experiment 2) as a function of both probe items’ serial position (earlier item and later item, respectively) broken down by list length in rows and instruction (earlier, later, and the difference, earlier − later, corrected for mean response time) in columns

Replicating the Experiment 1 response time results, the best-fitting LME model also revealed other effects not observable on the data plots, including main effects of list length, instruction, trial, and intact/reverse. Longer list length, later instruction, reverse presentation order, and larger trial number corresponded with longer response time. The two-way instruction × intact/reverse interaction was also significant, suggesting a reading-order effect.

In sum, we found a congruity effect on error rate in list length 8 and a response time congruity effect at both list lengths. This challenges the argument that the findings in Experiment 1 were a consequence of mixing subspan lists in with supraspan lists within subjects. Thus, the congruity effect in JORs persists in supraspan lists, despite differences between Experiments 1 and 2, including presentation rate, stimulus materials, and varied versus fixed list lengths.

Hacker’s backward self-terminating search model

The congruity effect may present a new challenge to mathematical models of serial-order memory. Only a few models have been fit to JOR data (e.g., Brown, Preece, & Hulme, 2000; Hacker, 1980; Lockhart, 1969; McElree & Dosher, 1993). Hacker’s model was designed to explain JOR data with a recency instruction and makes predictions about both response time and error rate. We ask whether Hacker’s model can already explain the congruity effect in its currently published form. If not, we ask whether the model can be modestly modified to explain the congruity effect.

Hacker (1980) proposed that JOR performance is driven by the loss of some items from memory and backward, self-terminating search of the remaining, available items. The serial comparison process was assumed to start at the end of the list, progressing toward the beginning (hence, backward), ending when a match to a probe items was found (hence, self-terminating). If an item were “unavailable” due to item loss, the item would not be encountered during search. Probability of a correct JOR (1 − error rate), P ij , can be computed:

where i and j are the study–test lags of the more recent and less recent probe items, respectively. α i is the probability that item i is available in memory, and Hacker treated α i as free parameters. The first term reflects the case in which the later item is available (a correct response), and the second term represents the case in which both probe items are unavailable, and the response is made by guessing (probability correct = .5). Hacker went on to model response times on correct trials as follows, assuming that if an item is unavailable, it does not add to the response timeFootnote 1:

where b is a base-level response time for “overhead” processes unrelated to memory and s is the rate to search and compare each available item. The term in the leftmost square bracket represents the expected response time when search ends in a correct match, equal to the summed availability of items less than i that must be compared at rate s ms/item. The sum is incremented by 1 because i must be available to make a correct response (if not a guess). The other term is for the condition when both probes are unavailable, in which case search is exhaustive, summing the availability of all serial positions, excluding the probe serial positions i and j (because they are unavailable), at a rate of s ms/item. The matches and guesses are normalized by the P ij for that comparison.

Note that the same α i values are used to calculate error rate and response time. For the parameter search, we wanted to avoid finding a model that fit the earlier and later instructions individually while failing to capture the difference due to instruction. We therefore opted for a fitness measure that weighted the earlier data, the later data, and the difference pattern equally. Thus, we fitted Hacker’s (1980) model by minimizing the summed BIC of the earlier instruction, the later instruction, and the difference between earlier and later instructionFootnote 2 (both error rate and response time). To compare models from different parameter searches, we recalculated BIC without the redundant earlier − later terms. We follow the rule of thumb that a change in BIC (ΔBIC) of less than 2 is considered a nonsignificant difference between models. For error rate, we used the variant of BIC that applies to the special case of least-squares estimation with normally distributed errors on mean performance (Anderson & Burnham, 2004; Burnham & Anderson, 2002).

Fitting was done in MATLAB (The Mathworks, Inc. Natick, MA) with the SIMPLEX algorithm (Nelder & Mead, 1965). With all model fits presented here, the initial parameters were randomly chosen from a range of 0–1 for α and 0–2,000 for b and s, and the best-fitting model was the best of 500 executions of the SIMPLEX with different random starting values.

Both list lengths were fit separately. Visual inspection of the simulated data produced by the best-fitting models (Fig. 7; cf. Figs. 5 and 6) suggests that although the model can reproduce some important features of the data, it does not capture list length 4 error rate pattern well, producing a ceiling error rate for the later instruction. The model also cannot account for the earlier instruction response time pattern at both list lengths; in particular, it had trouble producing the primacy-dominant pattern in the response time measure. However, the model produced differences between instructions that resemble the empirical congruity effect qualitatively, and with approximately the same magnitude (cf. Figs. 3 and 5).

Hacker’s (1980) model error rate (top half) and response time (bottom half), fit to Experiment 2, as a function of both probe items’ serial position (earlier item and later item, respectively) broken down by list length in rows and instruction (earlier, later, and the difference, earlier − later, corrected for mean response time) in columns. *Note: The list length 4 error rate later instruction is plotted on a different scale than the earlier instruction because this model produced very high values; it could not simultaneously account for both instructions’ empirical pattern and their difference pattern

In summary, Hacker’s (1980) backward self-terminating search model ran into problems fitting serial-position effects that have been suggested to reflect forward search, particularly for the list length 4, earlier data. Therefore, we next considered whether a forward self-terminating search model would address this limitation.

A forward-directed variant of Hacker’s self-terminating search model

To implement forward, self-terminating search, for error rate (Equation 1), we changed the first α i to α j :

Similarly, for response time (Equation 2), we changed the first α i term to α j and changed the limits of summation over k. We first asked whether this forward search model would account better for the earlier instruction data than would the backward search model. The best-fitting model parameters from the best-fitting models are summarized in Table 7, along with ΔBIC values comparing the forward model and backward models.

The forward model fit the earlier data better than did the backward model for list length 4, but for list length 8, the backward model fit better (lower ΔBIC) and did so by capturing the early-serial-position advantage that presented a problem for the backward model (Fig. 8). Fitting the earlier data with the forward model and the later data with the backward model also improved fit of the congruity effect qualitatively (cf. Figs. 5 and 6).

The best-fitting Hacker’s model generated plot using forward direction search for earlier instruction (a, d) and backward direction search for earlier instruction (b, e). The right-hand columns (c, f) represent the Hacker’s model generated earlier − later difference pattern when fitting the earlier instruction with forward directed search and later instruction with the backward directional search

For more insight, note that for the forward model, the earlier instruction fit by decreasing α i over serial position (Fig. 9a), whereas the later instruction fit by increasing α i over serial position (Fig. 9b). When both earlier and later instruction fit by the backward model, the α i values were less steeply sloped for the earlier than for the later instruction. It may seem surprising that certain values of α i were near-zero. We understand this as follows. In the earlier instruction, the last item of the list can never be a target. Since participants have very good memory of this last item (McElree, 2006), they may easily rule it out as the target and respond correctly. Because Hacker’s (1980) model selects the item it terminates on as its target, if the α ListLength item were “available,” then paradoxically, the response would be incorrect. Thus, it appears that in fitting the model, α ListLength took on a near-zero level as a means of producing very high accuracy for this kind of probe (and likewise for the backward model).

In summary, Hacker’s (1980) model can fit shorter lists using forward self-terminating search for the earlier instruction and backward search for the later instruction. This reversal of search direction does not appear to extend to longer list lengths. For the longer list lengths, direction of search had to be backward for both instructions, but the degrees of freedom contained within the backward, self-terminating search model were sufficient to produce a qualitatively and quantitatively reasonable congruity effect. We discuss alternative model accounts in the General discussion section.

General discussion

In Experiment 1, we found that the congruity effect in the JOR task generalizes to supraspan noun lists, along with the usual distance, primacy, and recency effects and an intact/reverse congruity effect. The presence of a congruity effect in error rate suggests that instruction affects not only order memory retrieval speed, but also the quality of order information that can be retrieved from memory. Experiment 2 replicated the Experiment 1 findings, but with consonants and a between-subjects manipulation of list length, suggesting that presentation of varied list lengths within subjects does not explain the congruity effect. The fits of Hacker’s (1980) model and the forward-directed variant suggested that the congruity effect may arise for different reasons at different list lengths; at short list lengths, the earlier instruction might, in fact, reverse the direction of self-terminating search, but at longer list lengths, if search is in any sense directional, our model fits suggest that search is backward for both instructions.

Congruity effect across list length

Our results differ from the list length 4 data reported by Chan et al. (2009) in several ways. Chan et al. did not find distance effect or an intact/reverse effect, all of which we found in Experiment 2, presumably due to higher power and the LME analyses. The finding of long-list-like features like a distance effect may not be surprising, since McElree and Dosher (1993) also found signs of a distance effect in relative short lists using a similar JOR response-signal speed–accuracy trade-off (SAT) procedure. Thus, our findings replicate and extend the congruity effect in subspan lists reported by Chan et al. (2009).

Extrapolating, one might expect that a congruity effect will always be present, even at extremely long list lengths. Alternatively, the congruity effect might become vanishingly small as list length increases. Visual inspection of the data suggests that the overall difference in response time remained relatively constant across list lengths. Confirming the visual inspection, LME analysis found that the congruity effect did not interact with list length in both the response time and error rate data in Experiment 1. This suggests that the congruity effect is a general phenomenon that may apply to arbitrarily long lists.

JORs as comparative judgements

Congruity effects similar to ours have been found in closely related paradigms, known as comparative judgements (for reviews, see Birnbaum & Jou, 1990; Petrusic, 1992; Petrusic, Shaki, & Leth-Steensen, 2008), in which a pairwise comparison is made on any of a broad range of stimulus dimensions, including perceptual judgements (e.g., brightness, loudness) and symbolic judgements (e.g., comparing animal size on the basis of animal name). Distance effects, bowed serial-position effects, and congruity effects were found in our temporal-order judgement data and have been commonly found in comparative judgement studies (Banks, 1977). This suggests that JORs may be viewed as a specific instance of comparative judgements, supporting Brown et al.’s (2007) suggestion that temporal order information is processed like magnitude-order information in humans. Thus, congruity effects in JORs may occur for the same reason as they do in other comparative tasks.

Despite the similarities, evidence suggests that episodic (temporal order) and semantic judgements of order are not identical. In one study (Jou, 2003), the first nine letters of the English alphabet were the list, and participants were asked to choose either the letter that appears “earlier” or “later” in the alphabet. The nine-item alphabet condition is very similar to our list length 8 JOR task in Experiment 2, both with short lists of letters and with the earlier versus later instruction. Jou found a main effect of instruction, with earlier response times shorter than later response times but no congruity effect. These results, inconsistent with our findings, could be attributed to the overlearning of the alphabet or to the fact that the forward recall direction is hard to overcome due to the alphabet being highly practiced in that direction.

One further reason for caution in relating the memory JOR congruity effect to congruity effects in comparative judgements is that our subspan results are consistent with sequential, self-terminating search, but to our knowledge, sequential self-terminating search accounts have not been considered for comparative judgements.

Comparison with forward and backward serial recall

The most common procedure used to investigate memory for order is serial recall, where both item and order memory are tested (Kahana, 2012; Murdock, 1974). Could serial recall be the basis of the self-terminating search strategy thought to support JORs? In forward serial recall, participants recall from the beginning toward the end of a list, whereas backward recall starts from the end of the list. At first blush, backward serial recall seems approximately like a mirror-image of forward serial recall, with forward serial recall being dominated by a primacy effect and backward serial recall being dominated by a recency effect (Madigan, 1971; Manning & Pacifici, 1983). Our JOR congruity effect suggests a similar mirroring of serial-position effects as forward versus backward serial recall: The earlier instruction produced better judgements at earlier serial positions (primacy effect), whereas the later instruction produced better judgements at later serial positions (recency effect). However, there are several empirical dissociations that suggest that forward and backward serial recall may rely on different cognitive mechanisms (see Richardson, 2007, for a review). Backward serial recall may rely on more visuospatial processing than forward serial recall (Li & Lewandowsky, 1993, 1995; Reynolds, 1997). Thomas et al. (2003) found a response time pattern that suggested simple sequential search of the items in forward recall, but for backward recall, a U-shaped response time curve suggested that participants may have used multiple forward recalls when recalling backward.

Another interesting set of findings that may inform our results comes from a comparison of free recall with forward serial recall (Ward, Tan, & Grenfell-Essam, 2010). Because free recall does not dictate order of report, participants are free to initiate recall at any serial position. Ward et al. found that for shorter list lengths, the free-recall order resembled their forward serial-recall results; thus, participants prefer to recall short lists in the forward direction. In contrast, at long list lengths, participants chose to initiate recall with one of the last four items, which, although not identical, is more like backward than forward serial recall. This may indicate that a forward search strategy is available and convenient for JORs, but more so for short than for long lists, which is consistent with our model fits. Thus, JORs might be carried out using a covert serial-recall-like strategy, especially at shorter list lengths. This hypothesis leads to interesting, testable predictions. If JORs rely on serial recall, the manipulations that previously dissociated forward from backward serial recall (Beaman, 2002; Li & Lewandowsky, 1993, 1995; Madigan, 1971; Manning & Pacifici, 1983; Reynolds, 1997; Thomas et al., 2003) should produce analogous dissociative effects on JOR behavior comparing the earlier versus later instructions.

Models of order memory and the congruity effect

Although a full consideration of the implications of our findings for models of order memory is beyond the scope of this article, there are some points we can make clearly that speak to the inadequacies of current models and possible future directions for model development in light of our findings.

We first consider Hacker’s (1980) model, an implementation of sequential, self-terminating search. We considered this model in depth because it has been successfully applied, several times, to JOR data. We asked whether this preexisting model could already produce a congruity effect. Although it could not, an adaption of Hacker’s model could capture the congruity effect in subspan lists—namely, assuming forward directional search for the earlier instruction and backward directional search for the later instruction. For short lists, then, there may be no effect of instruction on the underlying processes generating the behavior, apart from a reversal of search direction. However, the forward directional search model was not compatible with earlier instruction data of the supraspan lists, even despite this model’s large number of degrees of freedom, which becomes larger as list length increases. This may indicate that a single explanation of the congruity effect is not possible for both short and long lists. Rather, it may be that the mechanism shifts at some critical list length; but if so, it remains to be determined what principle governs that switch in search direction. Finally, it is important to note that, because we only fit a single model to our data, that does not mean that the model is confirmed. It is quite plausible that a different model (possibly variants of the models we review in this section) would produce a better fit, both quantitatively and qualitatively. The level of success of this model, therefore, should not be taken as support for this particular model over other models.

At first glance, a self-terminating search mechanism presented in Hacker’s (1980) model could be compatible with other models of order memory applied to serial recall—for example, associative chaining models, where each item is associated with the previous item in the list to form a chain (e.g. Kleinfeld, 1986; Lewandowsky & Murdock, 1989; Riedel, Kühn, & van Hemmen, 1988; Sompolinsky & Kanter, 1986; Wicklgren, 1966), and positional coding models, where item position is used to probe each item (e.g., Burgess & Hitch, 1999; Henson, 1998). Both chaining and positional coding mechanisms could be used to model self-terminating search. However, a key assumption of Hacker’s model differs from chaining and positional coding models: that an item can be skipped without any impact on response time, which is how Hacker’s model produces a distance effect. To our knowledge, both chaining and positional coding models have not been implemented in such a way that they save processing time for a missed item. Chaining models may handle a missed item by probing with the previously retrieved vector even if the correct response could not be made (e.g., Lewandowsky & Murdock, 1989). Positional coding models continue to probe with the subsequent position, regardless of accuracy of the previous recall (e.g., Burgess & Hitch, 1999; Henson, 1998). Thus, current models of serial-order memory would need to be modified to incorporate Hacker’s mechanism.

Even if an account based on Hacker’s (1980) model is correct, this model was only developed to explain the JOR task; in its current formulation, it does not do other order memory tasks, like serial recall. Rather than start with a model of JORs and figure out how to develop it into a full-fledged memory model, one could consider models that were designed to explain serial-recall data and ask how such models might handle the JOR task. OSCillator-based Associative Recall (OSCAR; Brown, Preece, & Hulme, 2000) is a model of serial recall that has actually been fit to JOR data with some success. In this model, items are assumed to be associated with the state of an internal context signal (activation values of a bank of sine-wave oscillators), and retrieval of items requires reinstatement of the context. The authors applied OSCAR to the JOR task (Hacker’s 1980 data) by probing with the end-of-list context vector. More recent items tend to be more similar to the end-of-list context. The strongest activated list item was compared with the probe items; if a match was found, the search terminated; if no match was found, the next-highest activated item was considered next, and so on. It is not obvious to us how the congruity effect could be explained with this approach. At the very least, to explain the subspan earlier data, the model might need to be able to substitute the start-of-list context, and the congruity effect in supraspan lists, dominated by an overall recency effect, would still remain to be explained.

TODAM is another model that has been fit to JOR data (Murdock, Smith, & Bai, 2001). In this version of the model (TODAM2), recency was judged on the basis of the strength of the item-memory terms (not the association terms that are used in serial recall), and more recent items had greater strength. This could explain serial-position effects that are dominated by recency, such as we found in supraspan lists, but it is not obvious how this mechanism could be adapted to produce the primacy-dominant pattern found for list length 4. Furthermore, the congruity effect in supraspan lists would still need to be explained. Finally, TODAM was implemented only for error rates and not response times, so additional modifications would be necessary to explain the response time data.

SIMPLE, a scale-invariant model that assumes that memory is driven by discriminability of presentation times of items (Brown et al., 2007), produces bow-shaped serial-position effects and a distance effect, but it remains unclear how the model might account for the congruity effect. One might assume that different instructions can systematically distort the representation of time either directly or by influencing judgements on a separate, serial-position dimension. An interesting possibility is that the congruity effect might be produced by participants encoding list position differently, depending on instruction (Neath & Crowder, 1996)—for example, with the first item first for the earlier instruction and the last item first for the later instruction. Although promising, the current version of SIMPLE does not model response time data, which means more work is required to adapt SIMPLE to explain the full pattern of JOR data reported here.

In short, to our knowledge, no model of serial recall in its current form is sufficient to explain the JOR congruity effect across list lengths.

Conclusion

In sum, the pattern of both speed and errors depends on how the order judgement question is asked. If the target is the earlier item, judgements are better at earlier serial positions, whereas if the target is the later item, judgements are better at later serial positions, reminiscent of congruity effects found in comparative judgements. A self-terminating search model could account for subspan data by a reversal of search direction between instructions, but longer-list data demanded a different account (both backward-search). Direct-access accounts hold promise, but it is unclear how they could capture the full pattern of serial-position effects in both error rate and response time measures, across list lengths. Thus, although instruction has a similar effect across list length, either the underlying mechanisms driving the congruity effect change with list length, or a unified account may need to combine elements of both types of model.

Notes

Hacker (1980) applied his model only to JORs of the last seven list items. He needed an additional parameter, g, to account for additional searching time toward the beginning of the list after the 7th-back item was reached. Because we applied the model to search through the whole list, we no longer need the “shortcut” parameter g, so we set g = 0 to obtain Equation 2.

Note that BIC is a penalized log-likelihood criterion, expressed as − 2(log − likelihood) + k ∗ log(n), where k represents the number of parameters and n represents the number of observations. Because k and n are constant in our parameter search, the parameter search results should be equivalent to log-likelihood optimization.

References

Anderson, D. R., & Burnham, K. P. (2004). Multimodel inference: Understanding AIC and BIC in model selection. Sociological Methods and Research, 33, 261–304.

Baayen, R. H. (2007). LanguageR (R package on CRAN version 1.1) [Computer software and manual]. http://cran.r-project.org/web/packages/languageR/index.html

Baayen, R. H., Davidson, D. J., & Bates, D. M. (2008). Mixed-effects modeling with crossed random effects for subjects and items. Journal of Memory and Language, 59, 390–412.

Baayen, R. H., & Milin, P. (2010). Analyzing reaction times. International Journal of Psychological Research, 3, 12–28.

Banks, W. P. (1977). Encoding and processing of symbolic information in comparative judgments. In G. H. Bower (Ed.), Psychology of learning and motivation (Vol. 11, p. 101–159). Academic Press.

Bates, D. M. (2005). Fitting linear mixed models in R. R News, 5, 27–30.

Bates, D. M., & Sarkar, D. (2007). lme 4: Linear mixed-effects models using s4 classes (version 0.999375-39) [Computer software and manual]. http://cran.r-project.org/web/packages/lme4/

Beaman, C. P. (2002). Inverting the modality effect in serial recall. The Quarterly Journal of Experimental Psychology, 55A(2), 371–389.

Birnbaum, M. H., & Jou, J. (1990). A theory of comparative response times and “difference” judgments. Cognitive Psychology, 184–210.

Bower, G. H. (1971). Adaptation-level coding of stimuli and serial position effects. In M. H. Appley (Ed.), (p. 175–201). New York: Academic Press.

Brown, G. D. A., Preece, T, & Hulme, C. (2000). Oscillator-based memory for serial order. Psychological Review, 107, 127–181.

Brown, G. D. A., Neath, I., & Chater, N. (2007). A temporal ratio model of memory. Psychological Review, 114(3), 539–576.

Burgess, N., & Hitch, G. J. (1999). Memory for serial order: A network model of the phonological loop and its timing. Psychological Review, 106(3), 551–581.

Burnham, K. P., & Anderson, D. R. (2002). Model selection and multimodel interference (2nd ed.). New York: Springer-Verlag.

Butters, M. A., Kaszniak, A. W., Glisky, E. L., Eslinger, P. J., & Schacter, D. L. (1994). Recency discrimination deficits in frontal lobe patients. Neuropsychology, 8(3), 343–353.

Chan, M., Ross, B., Earle, G., & Caplan, J. B. (2009). Precise instructions determine participants’ memory search strategy in judgments of relative order in short lists. Psychonomic Bulletin & Review, 16(5), 945–951.

Crowder, R. G. (1982). The demise of short-term memory. Acta Psychologica, 50, 291–323.

Flexser, J., & Bower, G. H. (1974). How frequency affects recency judgments: A model for recency discrimination. Journal of Experimental Psychology, 103(4), 706–716.

Fozard, J. L. (1970). Apparent recency of unrelated pictures and nouns presented in the same sequence. Journal of Experimental Psychology: Human Learning and Memory, 86(2), 137–143.

Fuhrman, R. W., & Wyer, J. R. S. (1988). Event memory: Temporal-order judgments of personal life experiences. Journal of Personality and Social Psychology, 54(3), 365–384.

Geller, A. S., Schleifer, I. K., Sederberg, P. B., Jacobs, J., & Kahana, M. J. (2007). PyEPL: A cross-platform experiment-programming library. Behavior Research Methods, 39(4), 950–958.

Hacker, M. J. (1980). Speed and accuracy of recency judgements for events in sort-term memory. Journal of Experimental Psychology: Human Learning and Memory, 6(6), 651–675.

Henson, R. N. A. (1998). Short-term memory for serial order: The start-end model. Cognitive Psychology, 36(2), 73–137.

Hockley, W. (1984). Analysis of response time distribution in the study of cognitive processes. Journal of Experimental Psychology: Learning, Memory, and Cognition, 10, 598–615.

Holyoak, K. J. (1977). The form of analog size information in memory. Cognitive Psychology, 9, 31–51.

Howard, M. W., & Kahana, M. J. (1999). Contextual variability and serial position effects in free recall. Journal of Experimental Psychology, 25(4), 923–941.

Hulme, C., Roodenrys, S., Schweickert, R., Brown, G. D. A., Martin, S., & Stuart, G. (1997). Word-frequency effects on short-term memory tasks: Evidence for a redintegration process in immediate serial recall. Journal of Experimental Psychology: Learning, Memory, and Cognition, 23(5), 1217–1232.

Hurst, W., & Volpe, B. T. (1982). Temporal order judgements with amnesia. Brain and Cognition, 1, 294–306.

Jou, J. (2003). Multiple number and letter comparison: Directionality and accessibility in numeric and alphabetic memories. The American Journal of Psychology, 116, 543–579.

Kahana, M. J. (2012). Foundations of human memory. New York, NY: Oxford University Press.

Klein, K. A., Shiffrin, R. M., & Criss, A. H. (2007). Putting context in context. In J. S. Nairne (Ed.), The foundations of remembering: Essays in honor of Henry L. Roediger III: Psychology Press.

Kleinfeld, D. (1986). Sequential state generation by model neural networks. Proceedings of the National Academy of Sciences of the United States of America, 83, 9469–9473.

Lashley, K. S. (1951). The problem of serial order in behavior. In L. A. Jeffress (Ed.), (p. 112–131). New York: Wiley.

Lewandowsky, S., & Murdock, B. B. (1989). Memory for serial order. Psychological Review, 96, 25–57.

Li, S. C., Chicherio, C., Nyberg, L., von Oertzen, T., Nagel, I. E., Papenberg, G., & Bäckman, L. (2010). Ebbinghaus revisited: Influences of the BDNFVal66Met polymorphism on backward serial recall are modulated by human aging. Journal of Cognitive Neuroscience, 22(10), 2164–2173.

Li, S. C., & Lewandowsky, S. (1993). Intralist distractors and recall direction: Constraints on models of memory for serial recall. Journal of Experimental Psychology: Learning, Memory, and Cognition, 19(4), 895–908.

Li, S. C., & Lewandowsky, S. (1995). Forward and backward recall: Different retrieval processes. Journal of Experimental Psychology: Learning, Memory, and Cognition, 21(4), 837–847.

Lockhart, R. S. (1969). Recency discrimination predicted from absolute lag judgements. Journal of Experimental Psychology: Human Learning and Memory, 6, 42–44.

Madigan, S. A. (1971). Modality and recall order interactions in short-term memory for serial recall. Journal of Experimental Psychology, 87(2), 294–296.

Manning, S. K., & Pacifici, C. (1983). The effects of a suffix-prefix on forward and backward serial recall. The American Journal of Psychology, 96(1), 127–134.

McElree, B. (2006). Accessing recent events. In B. H. Ross (Ed.), (Vol. 46, p. 155–200). Academic Press.

McElree, B., & Dosher, B. A. (1993). Serial retrieval processes in the recovery of order information. Journal of Experimental Psychology: General, 122(3), 291–315.

Milner, B. (1971). Interhemispheric differences in the localization of psychological processes in man. British Medical Bulletin, 27, 272–277.

Motulsky, H., & Christopoulos, A. (2004). Fitting models to biological data using linear and non-linear regression. A practical guide to curve fitting. Oxford, UK: Academic Press.

Moyer, R. S., & Landauer, T. K. (1967). Time required for judgements of numerical inequality. Nature, 215, 1519–1520.

Murdock, B. B. (1974). Human memory: Theory and data. Potomac, MD: Lawrence Erlbaum.

Murdock, B., Smith, D., & Bai, J. (2001). Judgments of frequency and recency in a distributed memory model. Journal of Mathematical Psychology, 45(4), 564–602.

Muter, P. (1979). Response latencies in discriminations of recency. Journal of Experimental Psychology: Human Learning and Memory, 5(2), 160–169.

Nairne, J. S. (2002). Remembering over the short-term: The case against the standard model. Annual Review of Psychology, 53, 53–81.

Naveh-Benjamin, M. (1990). Coding of temporal order information: An automatic process? Journal of Experimental Psychology: Learning, Memory, and Cognition, 16(1), 117–126.

Neath, I., & Crowder, R. G. (1996). Distinctiveness and very short-term serial position effects. Memory, 4(3), 225–242.

Nelder, J. A., & Mead, R. (1965). A simplex method for function minimization. The Computer Journal, 7(4), 308–313.

Petrusic, W. M. (1992). Semantic congruity effects and theories of the comparison process. Journal of Experimental Psychology: Human Perception and Performance, 18, 962–986.

Petrusic, W. M., Shaki, S., & Leth-Steensen, G. (2008). Remembered instructions with symbolic and perceptual comparisons. Perception & Psychophysics, 70, 179–189.

Plant, R. R., & Turner, G. (2009). Millisecond precision psychological research in a world of commodity computers: New hardware, new problems? Behavior Research Methods, 41(3), 598–614.

Reynolds, C. R. (1997). Forward and backward memory span should not be combined for clinical analysis. Archives of Clinical Neuropsychology, 12, 29–40.

Richardson, J. T. (2007). Measures of short-term memory: A historical review. Cortex, 43, 635–650.

Riedel, U., Kühn, R., & van Hemmen, J. L. (1988). Temporal sequences and chaos in neural nets. Physical Review A, 38, 1105–1108.

Rosen, V. M., & Engle, R. W. (1997). Forward and backward serial recall. Intelligence, 25, 37–47.

Skowronski, J. J., Ritchie, D. T., Walker, W. R., Sedikides, C., Bethencourt, L. A., & Martin, A. L. (2007). Ordering our world: The quest for traces of temporal organization in autobiographical memory. Journal of Experimental Social Psychology, 43, 850–856.

Skowronski, J. J., Walker, W. R., & Betz, A. L. (2003). Ordering our world: An examination of time in autobiographical memory. Memory, 11(3), 247–260.

Sompolinsky, H., & Kanter, I. (1986). Temporal association in asymmetric neural networks. Physical Review Letters, 57, 2861–2864.

Sternberg, S. (1975). Memory scanning: New findings and current controversies. Quarterly Journal of Experimental Psychology, 27, 1–32.

Thomas, J. G., Milner, H. R., & Haberlandt, K. F. (2003). Forward and backward recall: Different response time patterns, same retrieval order. Psychological Science, 14(2), 169–174.

Tremblay, A. (2013). LMERConvenienceFunctions: a suite of functions to back-fit fixed effects and forward-fit random effects, as well as other miscellaneous functions (version 2.5) [Computer software and manual]. http://cran.r-project.org/web/packages/LMERConvenienceFunctions/index.html

Ward, G., Tan, L., & Grenfell-Essam, R. (2010). Examining the relationship between free recall and immediate serial recall: The effects of list length and output order. Journal of Experimental Psychology: Learning, Memory, and Cognition, 36(5), 1207–1241.

Wicklgren, W. A. (1966). Associative instructions in short-term recall. Journal of Experimental Psychology, 72, 853–858.

Wilson, M. D. (1988). The MRC psycholinguistic database: Machine readable dictionary, version 2. Behavior Research Methods, 20, 6–11.

Wolff, P. (1966). Trace quality in the temporal ordering of events. Perceptual and Motor Skills, 22(1), 283–286.

Wyer, R. S., Jr., Shoben, E. J., Fuhrman, R. W., & Bodenhausen, G. V. (1985). Event memory: The temporal organization of social action sequences. Journal of Personality and Social Psychology, 49(4), 857–877.

Yntema, D. B., & Trask, F. P. (1963). Recall as a search process. Journal of Verbal Learning and Verbal Behavior, 2(1), 65–74.

Zuur, A. F., Leno, E. N., Walker, N. J., Saveliev, A. A., & Smith, G. M. (2009). Mixed effects models and extensions in ecology with R. New York: Springer.

Acknowledgments

We would like to thank Dr. Harald Baayen for statistics consultation and Christopher Madan for feedback on an earlier draft of the manuscript. We would also like to thank the Natural Sciences and Engineering Research Council of Canada (NSERC) and the Alberta Ingenuity Fund for funding support.

Author information

Authors and Affiliations

Corresponding author

Electronic supplementary material

Below is the link to the electronic supplementary material.

ESM 1

(DOCX 328 kb)

Rights and permissions

About this article

Cite this article

Liu, Y.S., Chan, M. & Caplan, J.B. Generality of a congruity effect in judgements of relative order. Mem Cogn 42, 1086–1105 (2014). https://doi.org/10.3758/s13421-014-0426-x

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13421-014-0426-x